Submitted: 17 April 2025

Posted: 21 April 2025


Abstract

Keywords:

1. Introduction

2. Methodology

2.1. Tsallis Entropy
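For reference, the standard form of Tsallis entropy (Tsallis, 1988) for a discrete distribution $\{p_i\}_{i=1}^{W}$ is given below, with the constant set to $k = 1$. It is assumed here that Equation 1 follows this standard form. The entropic index $q$ controls how strongly rare versus common outcomes are weighted, and the Shannon entropy is recovered in the limit $q \to 1$.

$$
S_q = \frac{1 - \sum_{i=1}^{W} p_i^{\,q}}{q - 1}, \quad q \neq 1,
\qquad
\lim_{q \to 1} S_q = -\sum_{i=1}^{W} p_i \ln p_i .
$$

With the three domains (SI, CD, RB), $W = 3$. For example, with the illustrative choice $q = 2$, equal scores give $p_i = 1/3$ and $S_2 = 1 - 3 \cdot (1/3)^2 = 2/3$, the largest value attainable for $W = 3$ at $q = 2$.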

2.2. Simulation Design

- Mild ASD: Scores follow .

- Moderate ASD: Scores follow .

- Severe ASD: Scores follow .
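The parameters of the severity-specific distributions are not stated here, so the following is only a sketch of the score-generation step under explicitly hypothetical assumptions: each domain score is drawn independently from a normal distribution whose mean and standard deviation (`PLACEHOLDER_PARAMS`) are invented for illustration, and values are clipped at a small positive floor so that later normalization to probabilities is well defined. The names `simulate_scores` and `PLACEHOLDER_PARAMS` are illustrative and are not taken from Appendix A.

```python
import random

# Domain labels as used in Section 2.3.
DOMAINS = ("SI", "CD", "RB")

# HYPOTHETICAL (mean, standard deviation) per severity level.
# These are NOT the study's values; they only make the sketch runnable.
PLACEHOLDER_PARAMS = {
    "Mild": (2.0, 0.5),
    "Moderate": (5.0, 1.0),
    "Severe": (8.0, 1.5),
}


def simulate_scores(n, mean, sd, rng, floor=0.01):
    """Draw n synthetic individuals, each with one score per domain.

    Scores are sampled from a normal distribution (an assumption) and
    clipped at a small positive floor so they can later be normalized.
    """
    return [
        {d: max(floor, rng.gauss(mean, sd)) for d in DOMAINS}
        for _ in range(n)
    ]


rng = random.Random(42)  # fixed seed for reproducibility
cohorts = {
    level: simulate_scores(100, mean, sd, rng)
    for level, (mean, sd) in PLACEHOLDER_PARAMS.items()
}
```

Replacing `rng.gauss` with a different sampler changes only the generating assumption; the downstream steps require nothing more than strictly positive scores for each domain.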

2.3. Implementation

- Generating synthetic scores for SI, CD, and RB per individual.

- Normalizing scores to probabilities: $p_i = s_i / \sum_{j} s_j$, where $s_i$ is the score for domain $i$.

- Computing Tsallis entropy using Equation 1 for .

- Visualizing entropy distributions across severity levels.
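The normalization and entropy steps listed above can be sketched as follows, assuming sum-normalization of the three domain scores and the standard Tsallis form with $k = 1$. The value `q=2.0` and the toy score vectors are hypothetical choices for illustration, since the study's own $q$ and data are not given here; the function names are likewise illustrative rather than taken from Appendix A. The visualization step is omitted to keep the sketch free of plotting dependencies.

```python
import math


def normalize_scores(scores):
    """Convert non-negative domain scores to probabilities p_i = s_i / sum_j s_j."""
    total = sum(scores)
    if total <= 0:
        raise ValueError("scores must have a positive sum")
    return [s / total for s in scores]


def tsallis_entropy(p, q):
    """Tsallis entropy S_q = (1 - sum_i p_i**q) / (q - 1), with k = 1.

    For q == 1 the Shannon limit -sum_i p_i * ln(p_i) is returned.
    Zero probabilities are skipped.
    """
    if math.isclose(q, 1.0):
        return -sum(pi * math.log(pi) for pi in p if pi > 0)
    return (1.0 - sum(pi ** q for pi in p if pi > 0)) / (q - 1.0)


def mean_entropy_by_group(groups, q):
    """Average Tsallis entropy per group.

    `groups` maps a label to a list of per-individual score lists,
    e.g. [SI, CD, RB].
    """
    return {
        label: sum(tsallis_entropy(normalize_scores(s), q) for s in rows) / len(rows)
        for label, rows in groups.items()
    }


# Hypothetical toy scores (SI, CD, RB); not data from the study.
toy = {
    "balanced": [[4.0, 4.0, 4.0]],
    "skewed": [[8.0, 1.0, 1.0]],
}
print(mean_entropy_by_group(toy, q=2.0))  # balanced ≈ 0.667, skewed ≈ 0.34
```

A more even profile across domains yields higher entropy: at $q = 2$ the equal-score individual attains the three-outcome maximum of $2/3$, while the skewed profile scores lower.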

3. Results

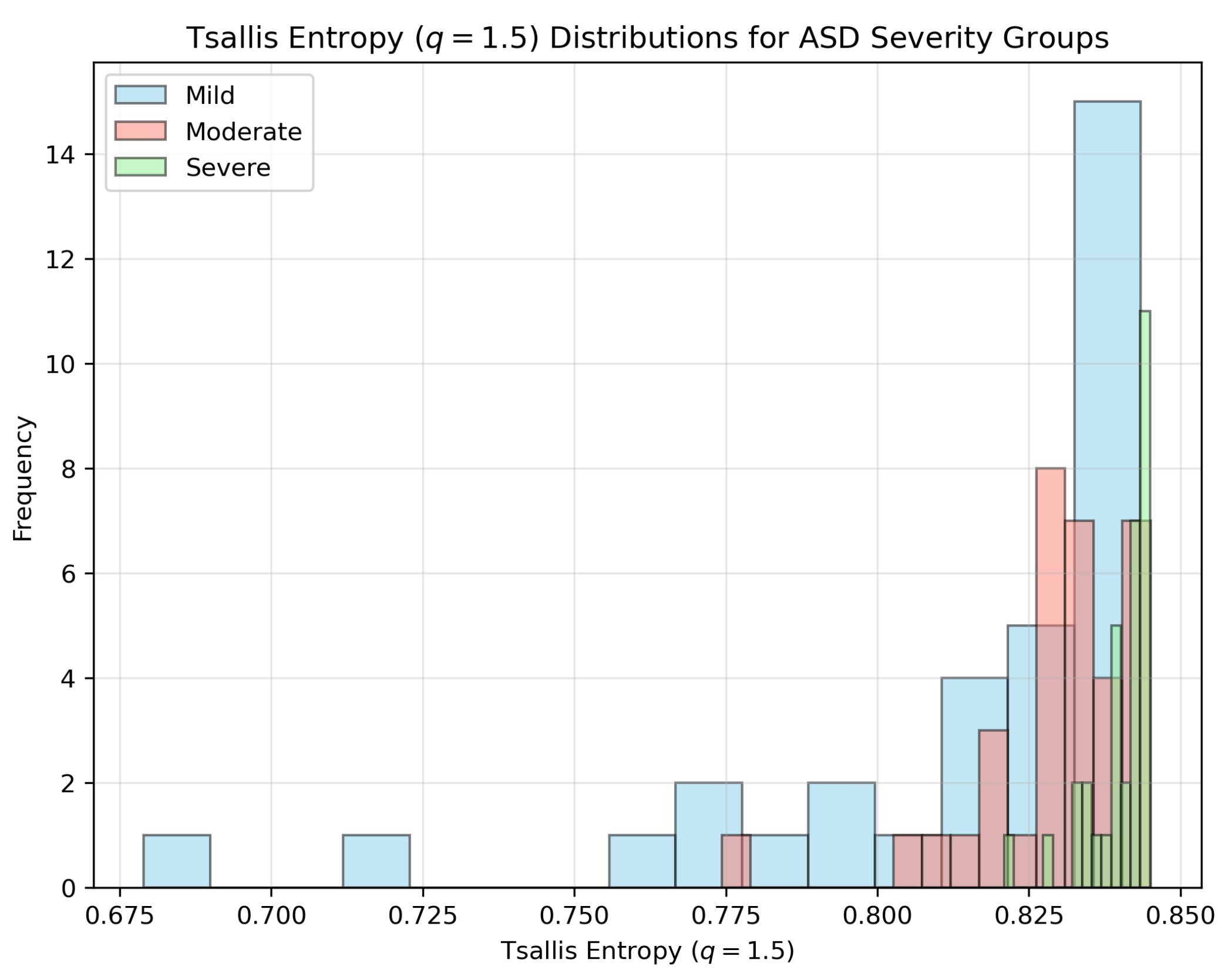

- Mild:

- Moderate:

- Severe:

4. Discussion

5. Conclusion

6. Data Availability

Appendix A. Python Code for Simulation

References

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (DSM-5), 5th ed.; American Psychiatric Publishing: Arlington, VA, 2013.

- Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. Journal of Statistical Physics 1988, 52, 479–487.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).