1. Introduction

Assembly Theory (AT) [1, 2, 3, 4, 5, 6, 7] provides a distinctive complexity measure, superior to established complexity measures used in information theory, such as Shannon entropy or Kolmogorov complexity [1, 5]. AT does not alter the fundamental laws of physics [6]. Instead, it redefines the objects on which these laws operate. In AT, objects are not considered sets of point particles (as in most physics), but instead are defined by the histories of their formation (assembly pathways) as an intrinsic property, where, in general, there are multiple assembly pathways to create a given object.

AT explains and quantifies selection and evolution, capturing the amount of memory necessary to produce a given object [6] (this memory is the object [8]). This is because the more complex a given object is, the less likely it is that an identical copy can be observed without selection by some information-driven mechanism that generated that object. Formalizing assembly pathways as sequences of joining operations, AT begins with basic units (such as chemical bonds) and ends with a final object. This conceptual shift captures evidence of selection in objects [1, 2, 6].

The assembly index of an object corresponds to the smallest number of steps required to assemble this object and, in general, increases with the object's size but decreases with symmetry, so large objects with repeating substructures may have a smaller assembly index than smaller objects with greater heterogeneity [1]. The copy number specifies the observed number of copies of an object. Only these two quantities describe the evolutionary concept of selection by showing how many alternatives were excluded to assemble a given object [6, 8].

AT has been experimentally confirmed in the case of molecules and has been probed directly with high accuracy using spectroscopic techniques, including mass spectrometry, IR, and NMR spectroscopy [6, 7]. It is a versatile concept with applications in various domains. Beyond its application in the field of biology and chemistry [7], its adaptability to different data structures, such as text, graphs, groups, music notations, image files, compression algorithms, human languages, memes, etc., showcases its potential in diverse fields [2].

In this study, we investigate the assembly pathways of binary strings (bitstrings) by joining individual bits present in the assembly pool and bitstrings that entered the pool as a result of previous joining operations.

A bit is the smallest amount and the quantum of information. Perceivable information about any object can be encoded by a bitstring [9, 10], but this does not imply that a bitstring defines an object. Information that defines a chemical compound, a virus, a computer program, etc. can be encoded by a bitstring. However, a dissipative structure [11] such as a living biological cell (or a conglomerate of cells, such as a human) cannot be represented by a bitstring (even if its genome can). Information about such a structure can only be perceived (so it is not object-defining information). Therefore, we emphasize the term object in this paper: understood as a collection of matter, it is a misnomer, as it neglects (quantum) nonlocality [12]. Nonlocality is independent of the entanglement among particles [13], as well as of quantum contextuality [14], and increases as the number of particles [15] grows [16, 17]. Furthermore, the ugly duckling theorem [9, 10] asserts that every two objects we perceive are equally similar (or equally dissimilar).

Furthermore, a bitstring as such is neither dissipative nor creative. It is its assembly process that can be dissipative or creative. The perceivable universe is not big enough to contain the future; it is deterministic going back in time and non-deterministic going forward in time [18]. But we know [2, 11, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29] that it has evolved to the present since the Big Bang. Evolution is about assembling a novel structure of information and optimizing its assembly process until the number of steps reaches the assembly index. Once the new information is assembled (by a dissipative structure operating far from thermodynamic equilibrium, including humans), it enters the realm of the 2nd law of thermodynamics, and nature seeks to optimize its assembly pathway.

At first, the newly assembled structure of information is discovered by groping [19], and its assembly pathway does not attain its most economical or efficient form at once. For a certain period of time, its evolution gropes about within itself. Try-out follows try-out without being finally adopted. Then finally perfection comes within sight, and from that moment the rhythm of change slows down [19]. The new information, having reached the limit of its potentialities, enters the phase of conquest. Stronger now than its less perfected neighbours, the new information multiplies and consolidates. When the assembly index is reached, new information attains equilibrium, and its evolution terminates. It becomes stable.

"Thanks to its characteristic additive power, living matter (unlike the matter of the physicists) finds itself ’ballasted’ with complications and instability. It falls, or rather rises, towards forms that are more and more improbable. Without orthogenesis life would only have spread; with it there is an ascent of life that is invincible." [

19]

The paper is structured as follows.

Section 2 introduces basic concepts and definitions used in the paper.

Section 3 shows that the bitstring assembly index is bounded from below and provides the form of this bound.

Section 4 defines the degree of causation for the smallest assembly index bitstrings.

Section 5 shows that the bitstring assembly index is bounded from above and conjectures about the exact form of this bound.

Section 6 introduces the concept of a binary assembling program and shows that, in general, the trivial assembling program assembles the smallest assembly index bitstrings.

Section 7 discusses and concludes the findings of this study.

2. Preliminaries

For $K$ subunits of an object $O$, the assembly index $a_O$ of this object is bounded [1] from below by

$$\log_2(K) \le a_O, \tag{1}$$

and from above by

$$a_O \le K - 1. \tag{2}$$

The lower bound (1) represents the fact that the simplest way to increase the size of a subunit in a pathway is to take the largest subunit assembled so far and join it to itself [1]. The upper bound (2) is attained when all subunits are distinct, so that none of them can be reused from the pool to decrease the index.

Here, we consider bitstrings containing bits $\{0, 1\}$, with $N_0$ zeros and $N_1$ ones, having length $N = N_0 + N_1$. $N_1$ is called the binary Hamming weight or bit summation of a bitstring. Bitstrings are our basic AT objects [2], and we consider the process of their formation within the AT framework. Where the bit value can be either 1 or 0, we write

with * being the same within the bitstring

. If we allow for the 2

nd possibility that can be the same as or different from *, we write

. Thus,

, for example, is a placeholder for all four 2-bit strings.

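We consider bitstrings to be messages transmitted through a communication channel between a source and a receiver, similarly to Claude Shannon's approach [30] used in the derivation of the binary information entropy

$$H = -p_0 \log_2(p_0) - p_1 \log_2(p_1), \tag{3}$$

where

$$p_0 = \frac{N_0}{N}, \qquad p_1 = \frac{N_1}{N} \tag{4}$$

are the ratios of occurrences of zeros and ones within a bitstring that has $N_0$ zeros, $N_1$ ones, and length $N$, and the unit of entropy (3) is the bit.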
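As a minimal sketch of the binary entropy (3) with the ratios (4), the following Python function computes the entropy of a bitstring given as a text string of `0` and `1` characters. The function name `binary_entropy` and the string representation are illustrative assumptions, not part of the original formalism.

```python
from math import log2


def binary_entropy(bitstring: str) -> float:
    """Binary Shannon entropy (in bits) of a string made of '0' and '1'."""
    length = len(bitstring)
    ones = bitstring.count("1")   # Hamming weight
    zeros = length - ones
    entropy = 0.0
    for count in (zeros, ones):
        if count:                 # the term p * log2(p) vanishes for p = 0
            p = count / length
            entropy -= p * log2(p)
    return entropy


if __name__ == "__main__":
    for s in ("01010101", "00010111", "0001", "0000"):
        print(s, binary_entropy(s))
```

Any two balanced even-length strings give the same value of 1 bit here, which is why the entropy alone cannot distinguish strings that differ in how they are assembled.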

Definition 1. A bitstring assembly index is the smallest number of steps s required to assemble a bitstring of length N by joining two distinct bits contained in the initial assembly pool and bitstrings assembled in previous steps that were added to the assembly pool. Therefore, the assembly index is a function of the bitstring.

For example, the 8-bit string

can be assembled in at most seven steps:

join 0 with 1 to form , adding to ,

join with 0 to form , adding to ,

...

join with 1 to form

(i.e. not using the assembly pool P), six, five, or four steps:

join 0 with 1 to form , adding to P,

join with taken from P to form , adding to P,

join with taken from P to form , adding to P,

join with taken from P to form ,

or at least three steps:

join 0 with 1 to form , adding to P,

join with taken from P to form , adding to P,

join with taken from P to form ,

while the 8-bit string

can be assembled in at least six steps:

join 0 with 1 to form , adding to P,

join with taken from P to form , adding to P,

join 0 with 0 adding to P,

join with taken from P to form , adding to P,

join with 1 to form , adding to P,

join with 1 to form ,

as only the doublet

can be reused from the pool. Therefore, bitstrings (

5) and (

6), despite having the same length

, Hamming weight

, and Shannon entropy (

3), have respective assembly indices

and

that represent the lengths of their shortest assembly pathways, which in turn ensures that their assembly pools

P are distinct sets for a given assembly pathway.
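Definition 1 can be illustrated with a small exhaustive search. The sketch below is our own brute-force illustration, not the authors' procedure: it starts from the pool {0, 1}, joins any two pool elements (possibly the same one twice) in each step, and uses iterative deepening to find the smallest number of steps. It relies on the observation that only contiguous substrings of the target can be useful intermediates. The two test strings `01010101` and `00010111` are chosen here as assumed examples of 8-bit strings with equal length, Hamming weight, and entropy; the function name `assembly_index` is hypothetical.

```python
def assembly_index(target: str) -> int:
    """Smallest number of joining steps that assembles `target` from {'0', '1'}."""
    if len(target) <= 1:
        return 0  # basic bits are not assembled
    n = len(target)
    # An intermediate that is not a substring of the target can never be used.
    substrings = {target[i:j] for i in range(n) for j in range(i + 1, n + 1)}

    def reachable(pool: frozenset, steps_left: int) -> bool:
        if target in pool:
            return True
        longest = max(len(s) for s in pool)
        # The longest pool element can at most double in each step.
        if steps_left == 0 or (longest << steps_left) < n:
            return False
        for left in pool:
            for right in pool:
                joined = left + right
                if joined in substrings and joined not in pool:
                    if reachable(pool | {joined}, steps_left - 1):
                        return True
        return False

    steps = 1
    while not reachable(frozenset({"0", "1"}), steps):
        steps += 1
    return steps


if __name__ == "__main__":
    print(assembly_index("01010101"))  # repeated doubling of 01
    print(assembly_index("00010111"))  # only the doublet 01 can be reused
```

The search is exponential in the worst case, so it is only practical for short strings such as the 8-bit examples discussed in the text.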

Table 1 and

Table A5–

Table A12 (

Appendix C) show the distributions of the assembly indices among

bitstrings for

taking into account the number of ones

. The sums of each column form Pascal’s triangle read by rows (OEIS

A007318).

The following definition is commonly known, but we provide it here for clarity.

Definition 2. A bitstring is a balanced string if its Hamming weight or .

Without loss of generality, we shall assume that if

N is odd,

(e.g., for

,

, and

). However, our results are equivalently applicable if we assume the opposite (i.e. a larger number of ones for an odd

N). The number of balanced bitstrings among all $2^N$ bitstrings is

$$\binom{N}{\lfloor N/2 \rfloor}.$$

This is the OEIS A001405 sequence, the maximal number of subsets of an N-set such that no one contains another, as asserted by Sperner's theorem; for large N it can be approximated using Stirling's approximation. Balanced and even-length bitstrings

have natural binary entropies (

3)

. Conversely, non-balanced and/or odd-length bitstrings

have binary entropies

.
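The count of balanced bitstrings can be checked numerically. The sketch below assumes Definition 2 with the stated convention that an odd-length balanced string has ⌊N/2⌋ ones, and compares the closed form (the central binomial coefficient of OEIS A001405) with a brute-force enumeration. The function names are illustrative.

```python
from itertools import product
from math import comb


def count_balanced(n: int) -> int:
    """Closed form: number of N-bit strings with floor(N/2) ones."""
    return comb(n, n // 2)


def count_balanced_brute_force(n: int) -> int:
    """Enumerate all 2^N bitstrings and count those with floor(N/2) ones."""
    return sum(1 for bits in product("01", repeat=n) if bits.count("1") == n // 2)


if __name__ == "__main__":
    for n in range(1, 11):
        print(n, count_balanced(n), count_balanced_brute_force(n))
```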

Theorem 1. An $N = 4$-bit string is the shortest string having more than one bitstring assembly index.

Proof. The proof is trivial. For

the assembly index

, as all basis

objects have a pathway assembly index of 0 [

2] (they are not

assembled).

provides four available bitstrings with

.

provides eight available bitstrings with

. Only

provides 16 bitstrings that include four strings with

and twelve bitstrings with

including

balanced bitstrings, as shown in

Table 1 and

Table 2. For example, to assemble the bitstring

, we need to assemble the bitstring

and reuse it. Therefore,

for

,

and

for

, where

denotes a set of assembly indices of all

bitstrings. □

Interestingly, Theorem 1 strengthens the meaning of

as the minimum information capacity that provides a minimum thermodynamic black hole entropy [

31,

32,

33]. There is no

disorder or

uncertainty in an

object that can be assembled in the same number of steps

.

The following definition, taking into account the cyclic order of bitstrings, is also provided for the sake of clarity.

Definition 3. A bitstring is a ringed bitstring if a ring formed with this string by joining its beginning with its end is unique among the rings formed from the other ringed strings .

There are at least two and at most

N forms of a ringed bitstring

that differ in the position of the starting bit. For example for

balanced bitstrings, shown in

Table 2, two augmented strings with

correspond to each other if we change the starting bit

Similarly, four augmented bitstrings with

correspond to each other

after a change in the position of the starting bit. Thus, there are only two balanced ringed bitstrings

.

The number of ringed bitstrings

among all

bitstrings is given by the OEIS sequence

A000031. In general (for

), the number

of ringed bitstrings is much lower than the number

of balanced bitstrings.
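Ringed bitstrings can be enumerated by reducing every bitstring to a canonical rotation. The sketch below is an illustration under the assumption that Definition 3 identifies strings differing only in the position of the starting bit (binary necklaces, OEIS A000031); it uses the lexicographically smallest rotation as the representative. The helper names are hypothetical.

```python
from itertools import product


def canonical_rotation(bitstring: str) -> str:
    """Lexicographically smallest rotation, used as the ring representative."""
    return min(bitstring[i:] + bitstring[:i] for i in range(len(bitstring)))


def ringed_bitstrings(n: int, balanced_only: bool = False) -> list:
    """All distinct rings of length n, optionally restricted to balanced strings."""
    rings = set()
    for bits in product("01", repeat=n):
        s = "".join(bits)
        if balanced_only and s.count("1") != n // 2:
            continue
        rings.add(canonical_rotation(s))
    return sorted(rings)


if __name__ == "__main__":
    print([len(ringed_bitstrings(n)) for n in range(1, 9)])
    print(ringed_bitstrings(4, balanced_only=True))
```

For N = 4 the balanced case yields two rings, in agreement with the example given above for the balanced 4-bit strings.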

By neglecting the notion of the beginning and end of a string, we focus on its length and content. In Yoda’s language,

"complete, no matter where it begins. A message is".

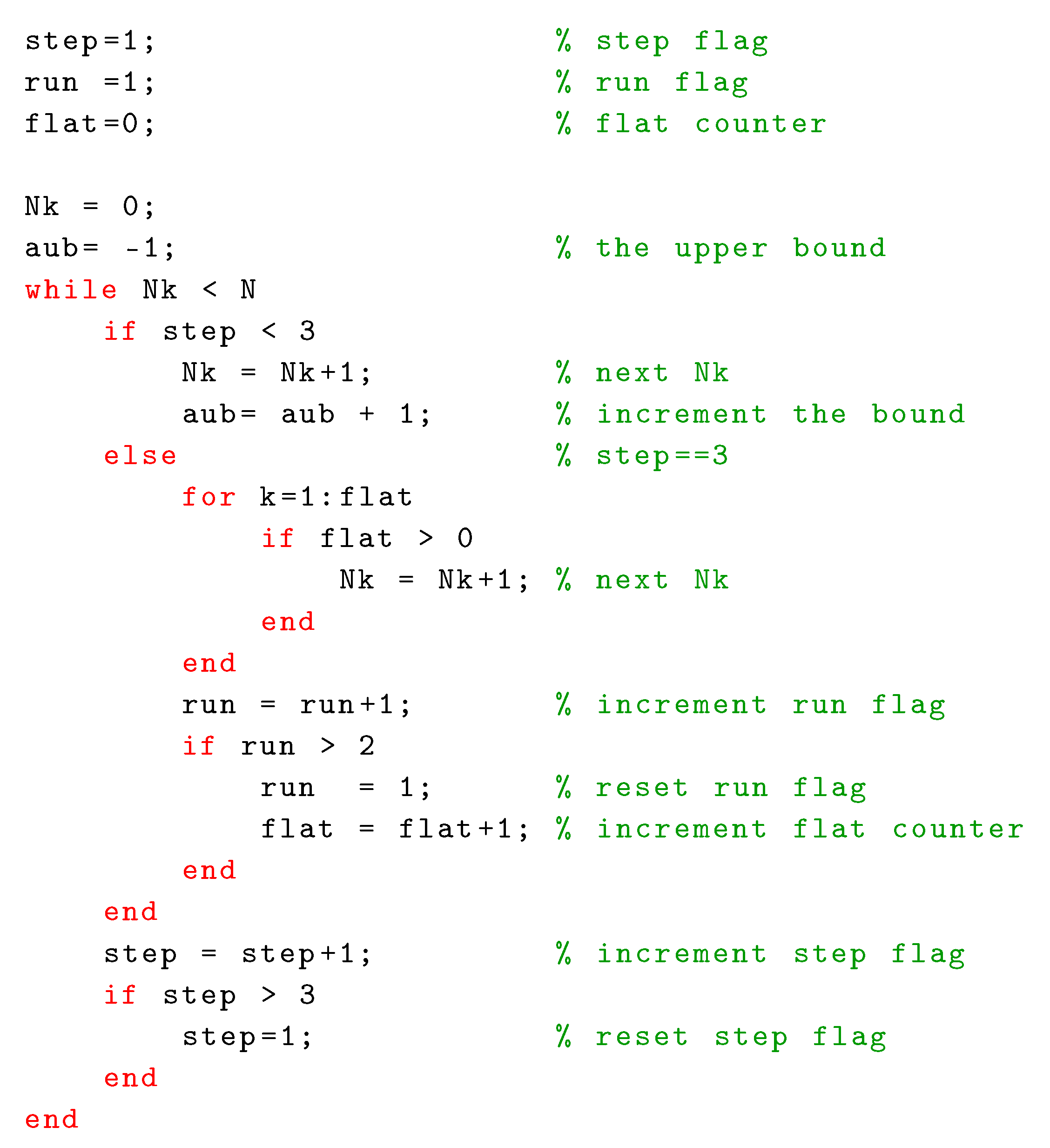

The numbers of the balanced

, ringed

, and balanced ringed

2 bitstrings are shown in

Table 3 and

Figure 1. The formula for

remains to be researched.

We note that, in general, the starting bit is relevant for the assembly index. Thus, different forms of a ringed bitstring may have different assembly indices. For example, for

balanced bitstrings

and

, shown in

Table A15 have

. However, these bitstrings are not ringed, since they correspond to each other and to the balanced bitstrings

,

,

,

, and

with

. They all have the same triplet of adjoining ones.

Definition 4. The assembly index of a ringed bitstring is the smallest assembly index among all forms of this string.

Thus, if different forms of a ringed bitstring have different assembly indices, we assign the smallest assembly index to this string. In other words, we assume that the smallest number of steps

where

denotes a particular

form of a ringed bitstring

, is the bitstring assembly index of this ringed string. We assume that if an

object that can be represented by a ringed bitstring can be assembled in fewer steps, this procedure will be preferred by nature.

The distribution of the assembly indices of the balanced ringed bitstrings

is shown in

Table 4.

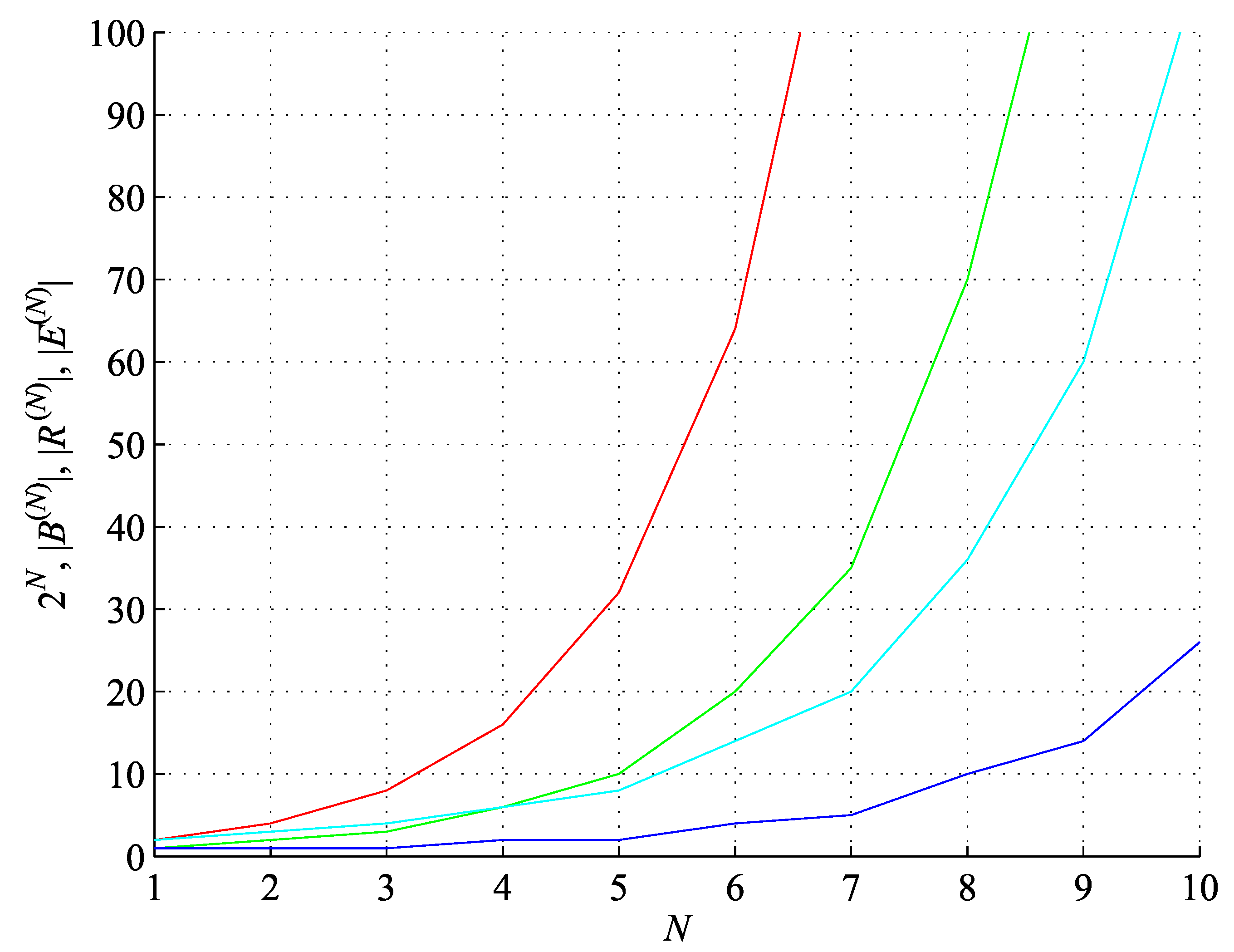

4. Degree of Causation for Minimum Assembly Index Bitstrings

Using the difference between the general AT lower bound (

1) and the smallest bitstring assembly index (OEIS

A003313) we can define the quantity

capturing a degree of causation [

6] of assembling the bitstrings of length

N with the smallest assembly index, as shown in

Figure 2. For

, the degree of causation

, as all bitstrings (

11) can be assembled along a single pathway only; their assembly is entirely causal. However, for

,

, since some bitstrings

can be assembled along different pathways. For example, there are two pathways for the bitstring

: (a)

and (b)

leaving different subunits (respectively

and

) in their assembly pools and resulting in lower values of

.

Equation (

12) naturally divides the set of natural numbers into sections

and shows regularities that for certain values of

N can be used to determine the smallest assembly index (i.e. the shortest addition chain for

N) as

. For each

and for each

being the sum of two powers of 2 (OEIS

A048645)

while for the remaining

not being the sum of two powers of 2 (OEIS

A072823)

where

for

, while some

’s generate exceptions to this general rule (cf. OEIS

A230528). For example,

for

,

for

, etc. The first exception,

is for

. The first double exception,

is for

. However, in particular, for

so the number of

Ns within each section, not included in the set of general rules

, (

13), (

15), and (

16), is

. Furthermore,

The shortest addition chain sequence generating factors for

are listed in

Table 6, where the subsequent odd numbers of the form

generate sequences

, where

, while the

numbers in red indicate that certain

s within the sequences they generate are exceptions to the general

rule. For example, if

then

and

where the last two values

are higher than those given by the general rule. Based on the OEIS

A003313 sequence for

, we have determined the number of exceptions, that is

such that

for

as shown in

Table 7, where

is the minimal generating factor

shown in

Table 6 that generates the exceptional

. For all

,

. The fact that

hints at the existence of general rules other than

, (

13), (

15), and (

16).

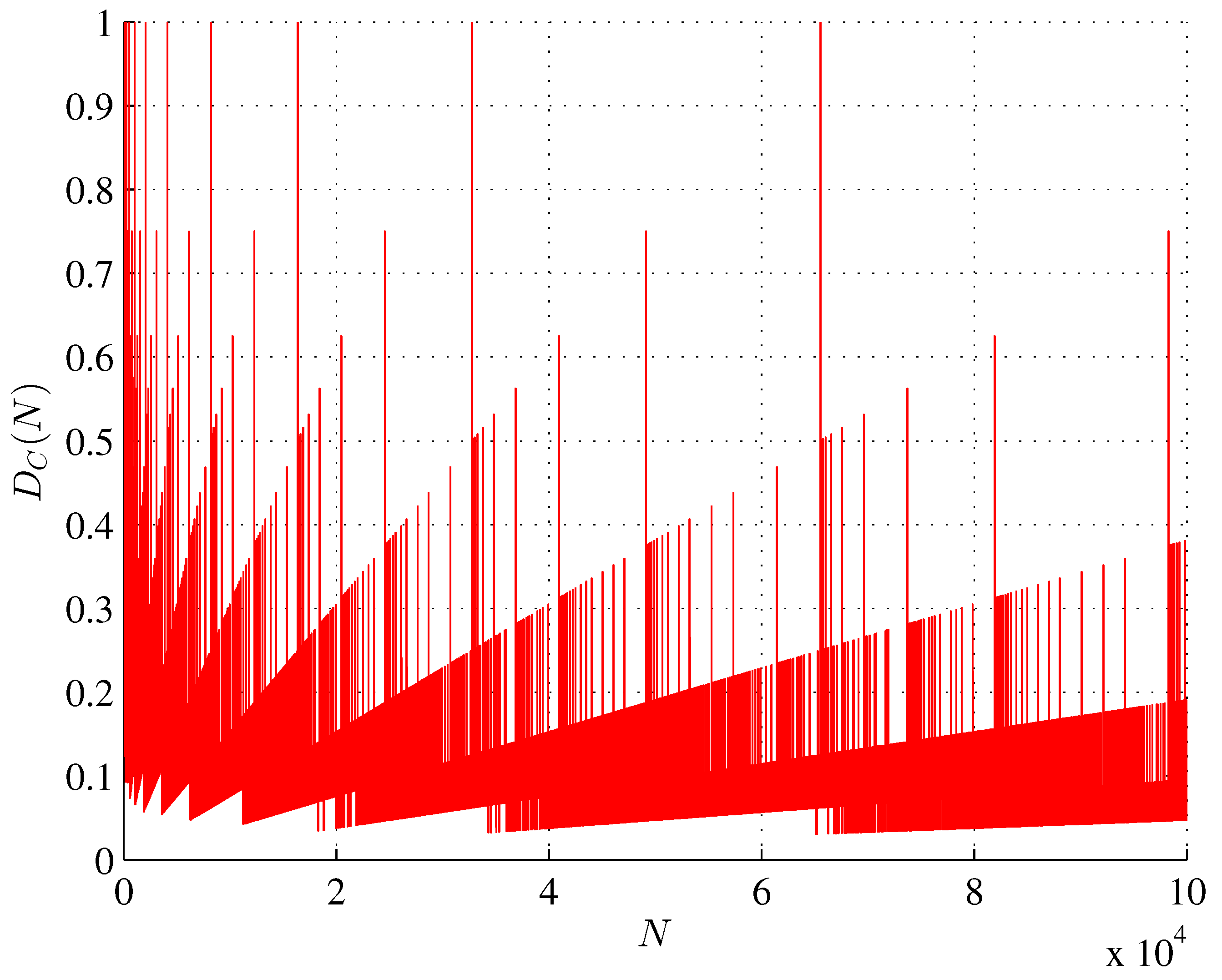

Furthermore, for

,

and for

,

(OEIS

A024012) [

35]. As shown in

Figure 3(a), for all

s,

asymptotically approaches

available in a given section as

, as shown in

Figure 3(b). For

this ratio has a deflection point

.

Only living systems have been found to be capable of producing abundant molecules with an assembly index greater than an experimentally determined value of 15 steps [3, 8]. The cut-off between 13 and 15 is sharp, which means that molecules made by random processes cannot have assembly indices exceeding 13 steps [3, 8]. In particular,

is the length of the shortest addition chain for

N which is smaller than the number of multiplications to compute

power by the Chandah-sutra method (OEIS

A014701, OEIS

A371894). Furthermore, the values of the sequence A014701 are larger than the shortest addition chain for

. These values (OEIS

A371894) are not given by equation (

15) but equation (

16) provides their subset. Their Hamming weight is at least 4 in binary representation [

36]. Furthermore, the exceptional

values bear similarity to the atomic numbers

Z of chemical elements that violate the Aufbau rule [

15] that correctly predicts the electron configurations of most elements. Only about twenty elements within

(with only two non-doubleton sets of consecutive ones) violate the Aufbau rule.
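The relation between the smallest bitstring assembly index and the shortest addition chain (OEIS A003313), and its comparison with the Chandah-sutra (binary) method (OEIS A014701), can be explored with a short brute-force sketch. The iterative-deepening search below is our own illustration and is only practical for small N; the function names are assumptions.

```python
def shortest_addition_chain_length(n: int) -> int:
    """Length of the shortest addition chain for n (OEIS A003313), by search."""
    def search(chain: list, steps_left: int) -> bool:
        last = chain[-1]
        if last == n:
            return True
        # The largest element can at most double in each step.
        if steps_left == 0 or (last << steps_left) < n:
            return False
        # Chains can be taken strictly increasing without loss of generality.
        candidates = {a + b for a in chain for b in chain if last < a + b <= n}
        for value in sorted(candidates, reverse=True):
            if search(chain + [value], steps_left - 1):
                return True
        return False

    steps = 0
    while not search([1], steps):
        steps += 1
    return steps


def binary_method_length(n: int) -> int:
    """Multiplications used by the binary (Chandah-sutra) method (OEIS A014701)."""
    return (n.bit_length() - 1) + (bin(n).count("1") - 1)


if __name__ == "__main__":
    for n in range(1, 24):
        chain, binary = shortest_addition_chain_length(n), binary_method_length(n)
        marker = "  <- binary method is not optimal" if binary > chain else ""
        print(n, chain, binary, marker)
```

In this range the binary method is longer than the shortest addition chain only for N = 15 and N = 23, both of which have a Hamming weight of 4 in binary representation.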

5. Maximum Bitstring Assembly Index

In the following, we conjecture the form of the upper bound of the set of different bitstring assembly indices. In general, of all bitstrings

having a given assembly index, shown in

Table 1 and

Table A5–

Table A12 (

Appendix C), most have

, though we have found a few exceptions, mostly for non-maximal assembly indices, namely for

(

) and for

(

), for

(

) and for

(

), and for

(

). These observations allow us to restrict the search space of possible bitstrings with the largest assembly indices to balanced bitstrings only: with the exception of

, of all bitstrings

having a largest assembly index, most are balanced. We can further restrict the search space to ringed bitstrings (Definition 3). If a bitstring

for which

is constructed from repeating patterns, then a bitstring

for which

must be the most patternless. The bitstring assembly index must be bounded from above and

must be a monotonically nondecreasing function of

N that can increase at most by one between

N and

. Certain heuristic rules apply in our binary case. For example,

for we cannot avoid two doublets (e.g. ) within a ringed bitstring and thus ,

for we cannot avoid two pairs of doublets (e.g. and ) within a ringed bitstring and thus ,

for we cannot avoid three pairs of doublets (e.g. , , and ) within a ringed bitstring and thus ,

for we cannot avoid two pairs of doublets and one doublet three times (e.g. , , and ), and thus ,

etc.

Table 8 shows the exemplary balanced bitstrings

having the largest assembly indices that we assembled (cf. also

Appendix A). To determine the assembly index

of the bitstring

for example, we look for the longest patterns that appear at least twice within the string, and we look for the largest number of these patterns. Here, we find that each of the two triplets

and

appear twice in

and are based on the doublets

and

also appearing in

. Thus, we start with the assembly pool

made in four steps and join the elements of the pool in the following seven steps to arrive at

. On the other hand, another form of this balanced ringed string

has

.

These results allow us to formulate the following conjecture.

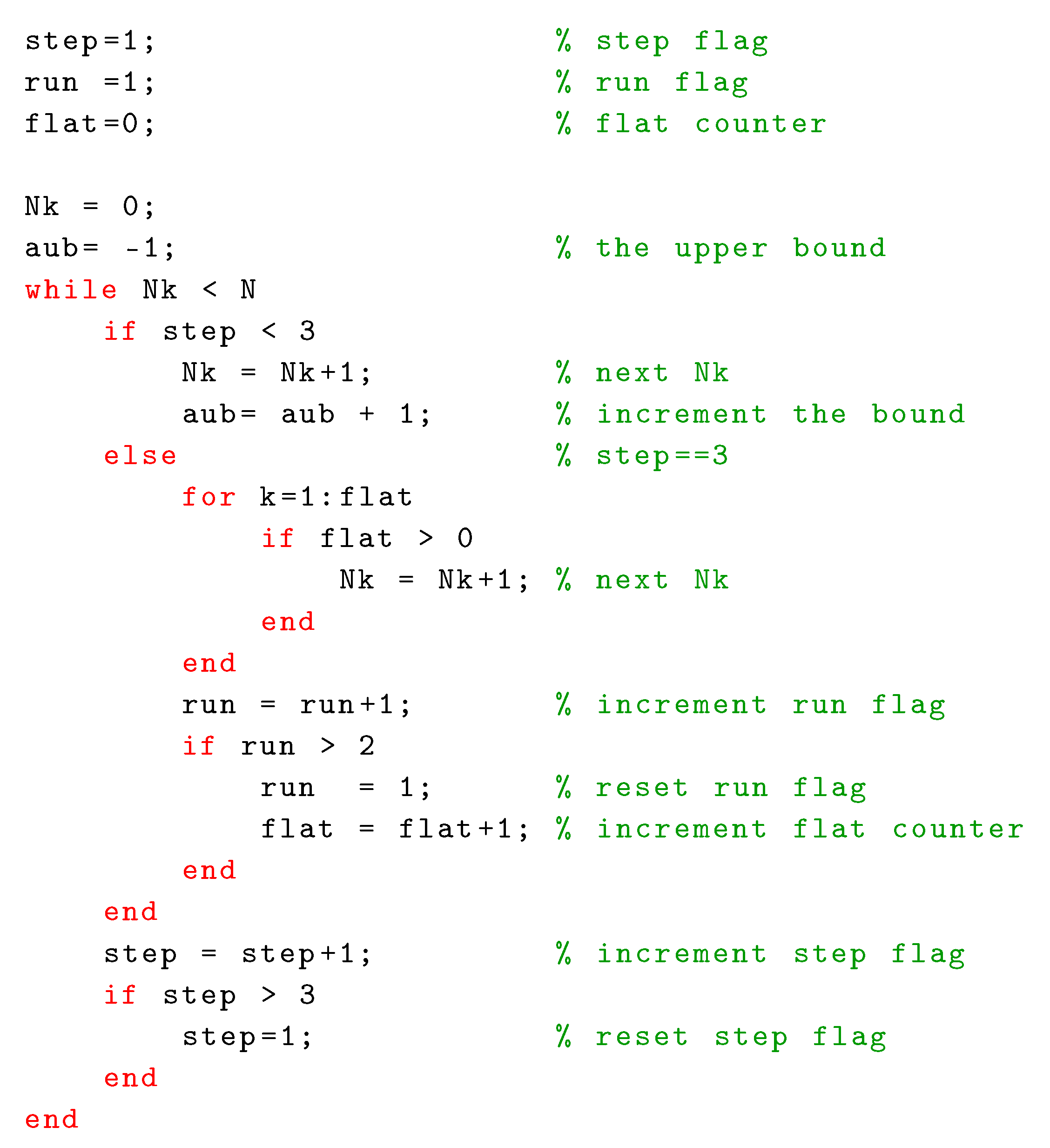

Conjecture 1 (Tight upper bound on a bitstring assembly index). With exceptions for small N the largest bitstring assembly index is given by a sequence formed by for , where denotes increasing by one, and 0 denotes maintaining it at the same level, and .

However, at this moment, we cannot state whether this conjecture applies to ringed or non-ringed bitstrings. The assembly indices for

are the same for a given

N, whereas the assembly indices for

were discussed above and are calculated in

Appendix C for balanced and balanced ringed bitstrings.

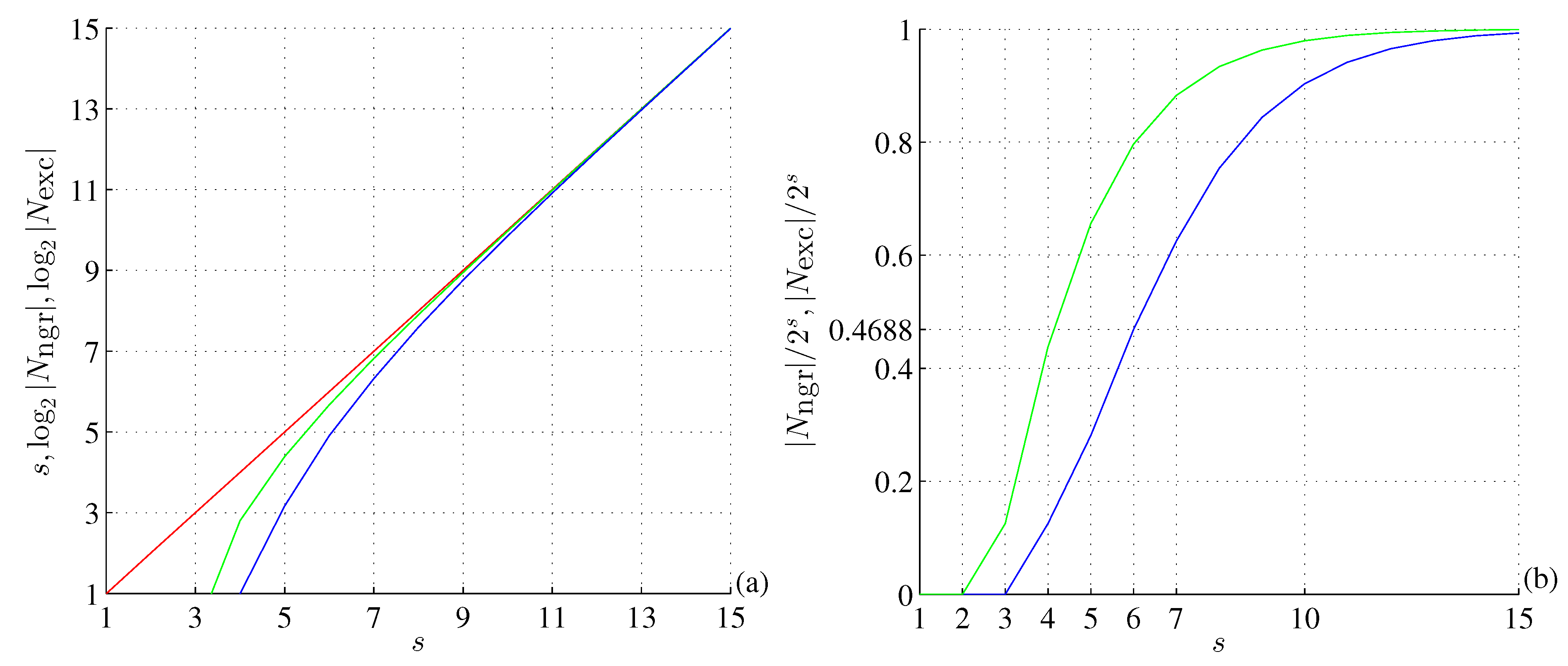

The conjectured sequence is shown in

Figure 4 and

Figure 5 starting with

(we note in passing that

is a dimension of the void, the empty set

∅, or (-1)-simplex). Subsequent terms are given by

, which is periodic for

and defines

plateaus of a constant bitstring assembly index at

, and

,

,

.

This sequence can be generated using the following procedure

We note the similarity of this bound to the monotonically nondecreasing Shannon entropy of chemical elements, including observable ones [

15]. Perhaps the exceptions in the sequence of Conjecture 1 vanish as

N increases.

6. Binputation

So far we have assembled bitstrings "manually". Now we shall automate this process using other bitstrings as assembling programs.

Definition 5. The binary assembling program is a bitstring of length that acts on the assembly pool P and outputs the assembled bitstrings, adding them to the pool.

Definition 6. The trivial assembling program Q is a binary assembling program with consecutive bits denoting the following commands:

- 0 ⇔ take the last element from P, join it with itself, and output,
- 1 ⇔ take the last two elements from P, join them with each other, and output.

As the assembly pool P is a distinct set to which bitstrings are added in subsequent assembly steps, only these two commands apply to the initial assembly pool containing only two bits, regardless of the starting command.
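A minimal interpreter for the trivial assembling program of Definition 6 might look as follows. Two details are assumptions made only for this sketch: the pool is kept as an ordered list starting with the bits 0 and 1 (or reversed, if requested), and the command 1 joins the second-to-last element with the last one in that order. The function name `run_trivial_program` is hypothetical.

```python
def run_trivial_program(program: str, pool=("0", "1")) -> list:
    """Run a trivial assembling program and return the strings it outputs.

    '0' joins the last pool element with itself;
    '1' joins the last two pool elements (second-to-last first, an assumption).
    """
    pool = list(pool)
    outputs = []
    for command in program:
        if command == "0":
            assembled = pool[-1] + pool[-1]
        elif command == "1":
            assembled = pool[-2] + pool[-1]
        else:
            raise ValueError(f"unknown command: {command!r}")
        pool.append(assembled)      # the output enters the assembly pool
        outputs.append(assembled)
    return outputs


if __name__ == "__main__":
    print(run_trivial_program("000"))                    # repeated doubling
    print(run_trivial_program("000", pool=("1", "0")))   # reversed initial pool
    print([len(s) for s in run_trivial_program("1111")]) # Fibonacci lengths
```

Because every command performs exactly one joining step, the length of a program in this sketch is an upper bound on the assembly index of the string it assembles.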

Theorem 3. If a bitstring can be assembled by an elegant trivial program of length then N is expressible as a product of Fibonacci numbers (OEIS A065108), and the length of any trivial program Q is not shorter than the assembly index of the string that this trivial assembling program assembles.

Proof. An elegant program is the shortest program that produces a given output [37, 38]. Furthermore, no program P shorter than an elegant program Q can find this elegant program Q [37]. If it could, it could also generate Q's output. But if P is shorter than Q, then Q would not be elegant, which leads to a contradiction.

The bit of the trivial assembling program Q is irrelevant as assembles and assembles , so assembles . Then the programs assemble the -bit strings having the assembly index , while bitstrings with the smallest assembly index can be assembled with the same two programs starting with the reversed assembly pool .

The remaining

programs will assemble some of the shorter bitstrings with the assembly index

. In general, all programs

Q assemble bitstrings having lengths expressible as a product of Fibonacci numbers (OEIS

A065108) as shown in

Table A1 (

Appendix B), wherein out of

programs (cf.

Table A4 and

Table A1):

programs

assemble even length balanced bitstrings

having natural binary entropies (

3)

, including bitstrings

(

11),

programs assemble bitstrings having lengths divisible by three and entropies ,

programs assemble bitstrings having lengths divisible by five and entropies ,

programs assemble bitstrings having lengths divisible by eight, entropies , and assembly indices if ,

⋯,

the program

joins two shortest bitstrings assembled in a previous step into a bitstring of length being twice the Fibonacci sequence (OEIS

A055389), and finally

the program assembles the shortest bitstring that has length belonging to the set of Fibonacci numbers.

Thus, for

, binary assembling programs

Q assemble subsequent

Fibonacci words and their concatenations having entropies (

3) with ratios (

4)

where

, and

F is the Fibonacci sequence starting from 1. Ratios (

21) rapidly converge to

where

is the golden ratio. Therefore,

is the binary entropy of the Fibonacci word limit. The Fibonacci sequence can be expressed through the golden ratio, which corresponds to the smallest Pythagorean triple

[

39,

40].
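The convergence of the entropy of Fibonacci words can be checked numerically. The sketch below assumes that the words are built by repeatedly joining the last two pool elements, starting from the bits 0 and 1, so that the share of ones tends to 1/φ and the share of zeros to 1/φ², with φ the golden ratio. The function names and the constant `LIMIT_ENTROPY` are illustrative.

```python
from math import log2, sqrt


def binary_entropy(p: float) -> float:
    """Binary entropy (in bits) of a source emitting ones with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * log2(p) - (1.0 - p) * log2(1.0 - p)


def fibonacci_word_entropies(count: int) -> list:
    """Entropies of the first `count` words obtained by joining the last two."""
    older, newer = "0", "1"
    entropies = []
    for _ in range(count):
        older, newer = newer, older + newer
        entropies.append(binary_entropy(newer.count("1") / len(newer)))
    return entropies


GOLDEN_RATIO = (1.0 + sqrt(5.0)) / 2.0
LIMIT_ENTROPY = binary_entropy(1.0 / GOLDEN_RATIO)

if __name__ == "__main__":
    print(fibonacci_word_entropies(10))
    print(LIMIT_ENTROPY)
```

The limiting value is approximately 0.9594 bits, slightly below the 1 bit of a balanced even-length string.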

However, for , some of the programs are no longer elegant if and some of the assembled bitstrings are not if .

For

,

assembles a bitstring

with an assembly index

which is not the minimum for this length of the bitstring. For example, the 4-bit program

assembles the bitstring

, but if

this string can be assembled by a shorter 3-bit program

, and if

this string does not have the smallest assembly index

but

.

For and and for the shortest bitstring assembled by the program Q the program Q is not elegant for and the shortest bitstring assembled by the program is not for .

However, the length of any program Q is not shorter than the assembly index of the bitstring that this program assembles. □

The trivial assembly programs

Q and the bitstrings they assemble are listed in

Table 9 and

Table A2–

Table A4 (

Appendix B) for one version of the assembly pool and for

.

We note in passing that there are other mathematical results on bitstrings and the Fibonacci sequence. For example, it was shown [

41] that having two concentric circles with radii

and drawing two pairs of parallel lines orthogonal to each other and tangent to the inner circle, one obtains an octagon defined by the points of intersection of those lines with the outer circle, which comes very close to the regular octagon with

. Furthermore, each of these octagons defines a Sturmian binary word (a cutting sequence for lines of irrational slope) except in the case of

[

41].

Perhaps the smallest assembly index given by Theorem 2 and the bitstrings of Theorem 3 are related to the Collatz conjecture, as the lengths of the strings (

11) for

correspond to the numbers to which the Collatz conjecture converges, from

,

(OEIS

A002450).

Theorem 3 is also related to Gödel's incompleteness theorems and the halting problem. N cases of the halting problem correspond only to $\log_2 N$, not to N bits of information [42], and therefore complexity is more fundamental to incompleteness than the self-reference of Gödel's sentence [43]. Any formal axiomatic system only enables provable theorems to be proved. If a theorem can be proved by an automatic theorem prover, the prover will halt after proving this theorem. Thus, proving a theorem equals halting. If we assume that the axioms of the trivial program given by Definition 6 define the formal axiomatic system, then the bitstrings having lengths expressible as a product of Fibonacci numbers assembled by this program would represent provable theorems.

If we wanted to define a binary assembling program that would use specific bitstrings other than the last one or two bitstrings in the assembly pool, we would have to index the bitstrings in the pool. However, at the beginning of the assembly process, we cannot predict in advance how many bitstrings will enter the assembly pool. Thus, we do not know how many bits will be needed to encode the indices of the strings in the pool. Therefore, we state the following conjecture.

Conjecture 2. There is no binary assembling program (Definition 5) that has a length shorter than the length of the bitstring having the largest assembly index that could assemble this string.

Theorem 3 would be violated if in Definition 6 we specified the command "0" e.g. as "take the last element from the assembly pool, join it with itself, join with what you have already assembled (say at "the right"), and output". Then, the 2-bit program "00" would produce the 6-bit string

with the assembly index

. However, such a one-step command would violate the axioms of assembly theory, since it would perform two assembly steps in one program step. An elegant program to output a gigabyte bitstring of all zeros would take a few bits of code and would have a low Kolmogorov complexity [44]. However, such a bitstring would be outputted, not assembled. Furthermore, the length of such a program that outputs the bitstring

would be shorter than the length of the program that outputs the string

, while in AT, the lengths of these programs must be the same if the strings have the same assembly indices. Definitions 5 and 6 and Theorem 3 are about binputation, that is, about bitstrings assembling other bitstrings.

In particular, Theorem 3 confirms that the assembly index is related to the amount of physical memory required to store the information to direct the assembly of an object (a bitstring in our case) and set a directionality in time from the simple to the complex [8]:

-bit long trivial assembling programs (i.e., with

-bits of memory) can assemble

-bit strings with minimal assembly indices

and, for

, some shorter but more complex bitstrings with non-minimal assembly indices

. The memory defines the

object [

8].

7. Discussion and Conclusions

Consider the SARS-CoV-2 genome sequence defined by 29903 nucleobases

, its initial version MN908947

3 collected in December 2019 in Wuhan and its sample OL351370

4 collected in Egypt nearly two years after the Wuhan outbreak, on October 23, 2021. In the MN version, the nucleobases are distributed as

,

,

, and

and in the OL version as

,

,

, and

, following Chargaff’s parity rules with the same count of adenines. We can convert these sequences into bitstrings by assigning two bits per nucleobase. For such

, not being the sum of two powers of 2, with the degree of causation [

6] given by equation (

14), the assembly index is bounded by

Interestingly, if a bitstring

were to encode four DNA/RNA nucleobases, then the smallest assembly index bitstrings (as well as the strings generated by trivial assembly programs

Q according to Definition 6) would not encode all nucleobases. For example, the bitstring

with

and encoding A=00, C=01, G=10, and T=11, cannot encode T=11. Therefore, we increased the lower bound (

24), given by Theorem 2, by one. The upper bound (

24) was estimated by finding the smallest

k that satisfies

and using the relation

of Conjecture 1. We do not know the actual assembly indices of the MN and OL sequences. Their determination is an NP-complete problem, as we conjecture. There are twelve possible assignments of two bits per one nucleobase with twelve different Hamming weights and six different Shannon entropies (

3)

All sequences (

25) are almost balanced (

). However, the later OL versions are less balanced, producing lower Shannon entropies and showcasing the existence of an entropic force that governs genetic mutations [25]. We conjecture that the assembly index of the OL sequence is higher than that of the MN one: the evolution of information tends to increase the assembly index.
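The conversion of a nucleobase sequence into a bitstring can be sketched as follows. The default mapping A=00, C=01, G=10, T=11 is the one quoted in the text; the function names and the short demonstration sequence are assumptions for illustration only, and no real genome data is used here.

```python
DEFAULT_ENCODING = {"A": "00", "C": "01", "G": "10", "T": "11"}


def encode_nucleobases(sequence: str, encoding=DEFAULT_ENCODING) -> str:
    """Encode a nucleobase sequence as a bitstring, two bits per nucleobase."""
    return "".join(encoding[base] for base in sequence.upper())


def hamming_weight(bitstring: str) -> int:
    """Number of ones in the bitstring."""
    return bitstring.count("1")


if __name__ == "__main__":
    demo = "ACGTTGCA"  # hypothetical toy sequence, not the viral genome
    bits = encode_nucleobases(demo)
    print(bits, len(bits), hamming_weight(bits))
```

With two bits per nucleobase, a sequence of 29903 nucleobases becomes a bitstring of 59806 bits, and a different assignment of bit pairs to nucleobases generally changes its Hamming weight.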

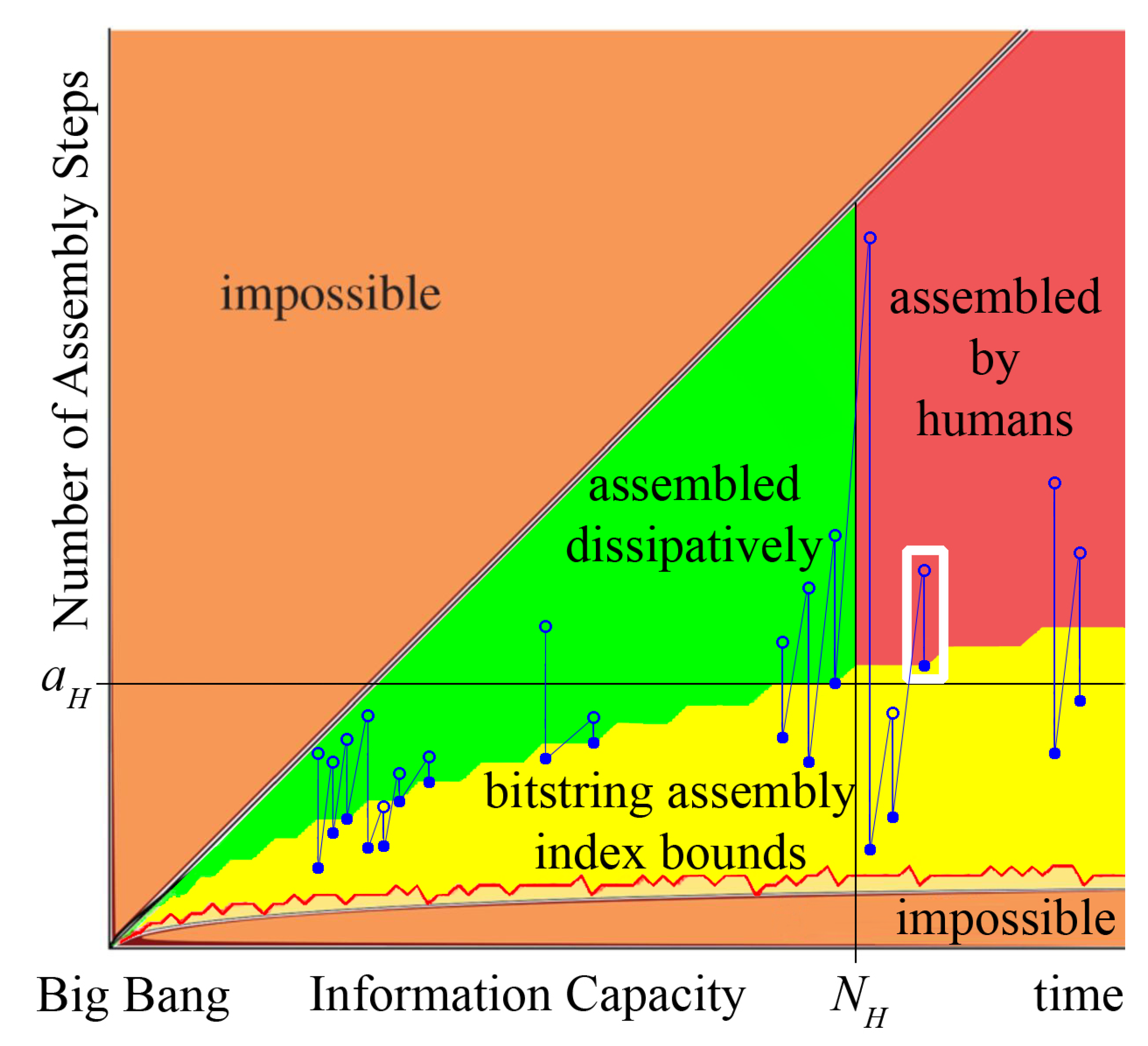

The bounds of Theorem 2 and Conjecture 1 are shown in

Table 5 and

Table 8 and are illustrated in

Figure 4 and

Figure 5. No bitstring can be assembled in a smaller number of steps than is given by a lower bound of Theorem 2. However, some bitstrings cannot be assembled in a smaller number of steps than given by an upper bound.

We found it much easier to determine the assembly index of a given bitstring

than to assemble a bitstring so that it would have the largest assembly index. Similarly, a trivial bitstring with the smallest assembly index for

N can have the form

(

11) or the form of a Fibonacci word generated by the trivial assembling program (Definition 6). Therefore, we state the following conjecture.

Conjecture 3. The problem of determining the assembly index of any bitstring is NP-complete. The problem of assembling the bitstring so that it would have the largest assembly index for large N is NP-hard. This corresponds to determining the largest assembly index value for large N.

A proof of Conjecture 3 would also be the proof of the following known conjecture.

Every computable problem and every computable solution can be encoded as a finite bitstring. Here, determining whether the assembly index of a given bitstring has its known maximal value corresponds to checking the solution to a problem for correctness, whereas assembling such a bitstring corresponds to solving the problem. Thus, AT would solve the P versus NP problem in theoretical computer science. There is ample pragmatic justification for adding $P \neq NP$ as a new axiom [42]; rather than attempting to prove this conjecture, mathematicians should accept that it may not be provable and simply accept it as an axiom [45].

The bounds on the bitstring assembly index given by Theorem 2 and Conjecture 1, and the general bounds (1) and (2) on the assembly index [1], are illustrated in Figure 6 (adapted from [1] and modified; not to scale). The lower bound on the bitstring assembly index implies two paths of evolution: the creative path (slanting lines in Figure 6) and the optimization path (vertical lines in Figure 6), as for some bitstrings of length it admits the possible region of their assembly steps . For only the creative path is available as there is nothing to optimize: . The 2nd path becomes available already at , where the suboptimal number of 3 steps used to assemble a bitstring can be optimized to . The evolution becomes interesting for ( for ringed strings; cf. Table 8) due to an upper bound on the bitstring assembly index. For each ( )-bit string suboptimally assembled in steps, the search space is recursively explored to optimize the number of steps until the assembly index of this bitstring is reached, where .
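The difference between a suboptimal pathway and its optimized counterpart can be made concrete with a small check. The sketch below assumes the joining model used throughout the text (start from the basic symbols 0 and 1; each step concatenates two strings that are already available). The function name `count_steps` and the example bitstring `0101` are illustrative choices, not taken from the source.

```python
def count_steps(pathway, target):
    """Validate an assembly pathway and return its number of steps.

    `pathway` is a list of (left, right) pairs. Each step concatenates two
    strings already in the pool and adds the result to the pool.
    """
    pool = {"0", "1"}  # basic symbols are available at no cost (assumption)
    for left, right in pathway:
        if left not in pool or right not in pool:
            raise ValueError(f"step {left!r}+{right!r} uses an unavailable part")
        pool.add(left + right)
    if target not in pool:
        raise ValueError("pathway does not assemble the target")
    return len(pathway)


# Hypothetical example: the same 4-bit string built bit by bit (creative path)
# and then rebuilt by reusing the block "01" (optimization path).
suboptimal = [("0", "1"), ("01", "0"), ("010", "1")]
optimized = [("0", "1"), ("01", "01")]

print(count_steps(suboptimal, "0101"))  # 3
print(count_steps(optimized, "0101"))   # 2
```

Both pathways end in the same bitstring; only the number of steps differs, which is the quantity the optimization path reduces.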

We conjecture that, in general, the assembly of a novel, nontrivial bitstring , for , with a longer length using the 1st path of evolution is NP-hard, requires access to noncomputability, and, thus, is available only to dissipative structures, including life and, in particular, humans. This path represents "true" creativity. However, once this new bitstring is assembled, it is unlikely to have been assembled optimally, that is, in the number of steps s equal to its assembly index. This implies the 2nd path: minimizing the number of steps s required to assemble this newly found nontrivial bitstring towards its assembly index, which is only NP-complete. The bitstring is reassembled in a simpler way, but such a reassembly is no longer creative. The 2nd path represents "generative creativity", available both to dissipative structures and to artificial intelligence.

To illustrate this process, consider two examples: one from biological evolution (the emergence of amphibians from fish) and another from technological evolution (the invention of the airplane). Fish began to evolve around 541 million years ago, forming a plethora of species and exploring the available search space, optimizing the fish assembly index and increasing the information capacity within the range delimited by the same upper bound, the fish plateau (cf. Conjecture 1). Around 400 million years ago, some species of fish began using areas with fluctuating water levels, where water was occasionally scarce. The next plateau, the amphibian plateau of a larger assembly index, was within sight. By groping [19], protolungs developed, allowing fish to obtain oxygen from air instead of water. The breakthrough was made and amphibians were formed, exploring the subsequent amphibian plateau and optimizing this evolutionary gain. Many inventions led to the first airplane: the invention of the airfoil (George Cayley), its use in gliders (Otto Lilienthal), the propeller, and others. Again, the search space was well explored, and the airplane plateau of a larger assembly index was close. Finally, it was the Wright brothers, bicycle retailers, who realized the importance of combining roll and yaw control in their first suboptimal Wright Flyer foreplane configuration. Once it was shown that it could be done, other people began to optimize this invention, minimizing the number of steps required to recreate it.

AT captures the notion of intelligence, understood as a degree of ability to reach the same goal through different means (assembly pathways) [46], where a fundamental aspect of intelligence is collective behavior [47]. Once the search space is saturated, the fish collectively explore it to develop lungs, just as humans, starting at least in the nineteenth century, began to think collectively about heavier-than-air flying machines. We assume that only dissipative structures can assemble novel structures of information and define life as a dissipative structure provided with choice (ability to select [6]) and human as a living dissipative structure provided with abstract, modality-independent language. As shown in Figure 6, we predict a limit on complexity or maximum assembly index achievable by non-human dissipative structures. These structures do not use an abstract, modality-independent language required for advanced human creativity. A human creative work also needs a certain minimum amount of information . We take it for granted that presently only Homo sapiens has a gift of creativity that exceeds . Any creation is required to be shaped by the unique personality of its human creator(s) to such an extent that it is statistically one-time in nature [48]; it is an imprint of the author’s personality. Subsequent plateaus of can also be thought of as scientific paradigms [49] defining coherent traditions of investigation.

Any structure of information assembled by a dissipative structure in s steps can belong to one of the four regions shown in Figure 6:

| 1. | and , | suboptimally assembled by dissipative structures (green region), |
| 2. | , | optimally assembled by dissipative structures, |
| 3. | and , | optimally assembled by humans, and |
| 4. | and , | suboptimally assembled by humans (red region). |

We do not exclude that non-human dissipative structures are capable of suboptimally assembling structures C above , provided that their assembly indices satisfy . Thus, the optimization path shown in the white rectangle in Figure 6 is available only to humans.

The results reported here can be applied in the fields of cryptography, data compression methods, stream ciphers, approximation algorithms [50], reinforcement learning algorithms [51], information-theoretically secure algorithms, etc. Another possible application of the results of this study could be molecular physics and crystallography. Overall, the results reported here support AT, emergent dimensionality [12, 15, 22, 23, 24, 26, 27, 28, 40], and the second law of infodynamics [25, 29], and invite further research.

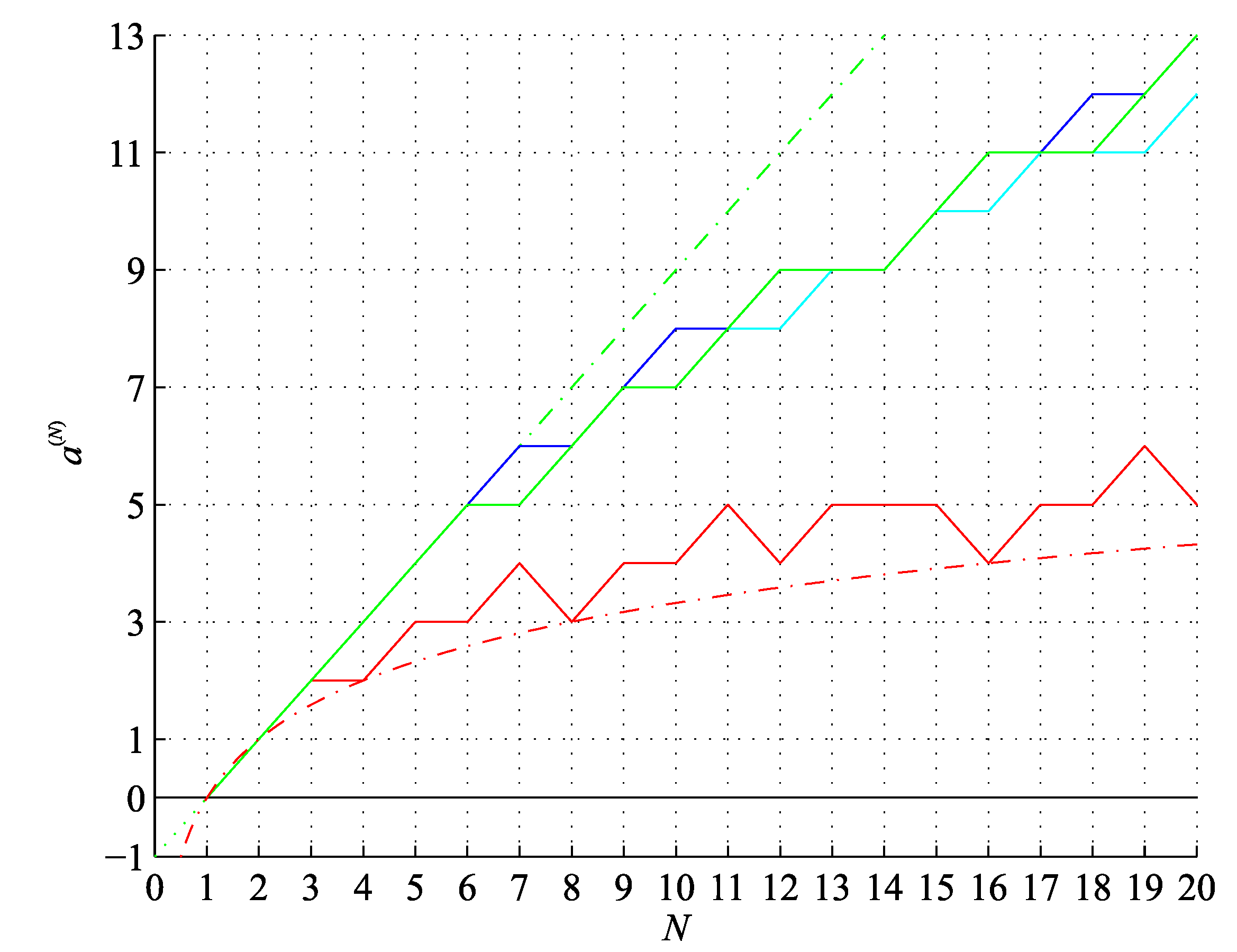

Figure 1.

Numbers of all bitstrings (red), balanced bitstrings (green), ringed bitstrings (cyan), and balanced ringed bitstrings (blue) as a function of the bitstring length N.

Figure 2.

Degree of causation as a function of .

Figure 3.

(a) Semi-log plot of (red), (green), and (blue). (b) Fractions of (green) and (blue) to , showing the deflection point for (see text for details).

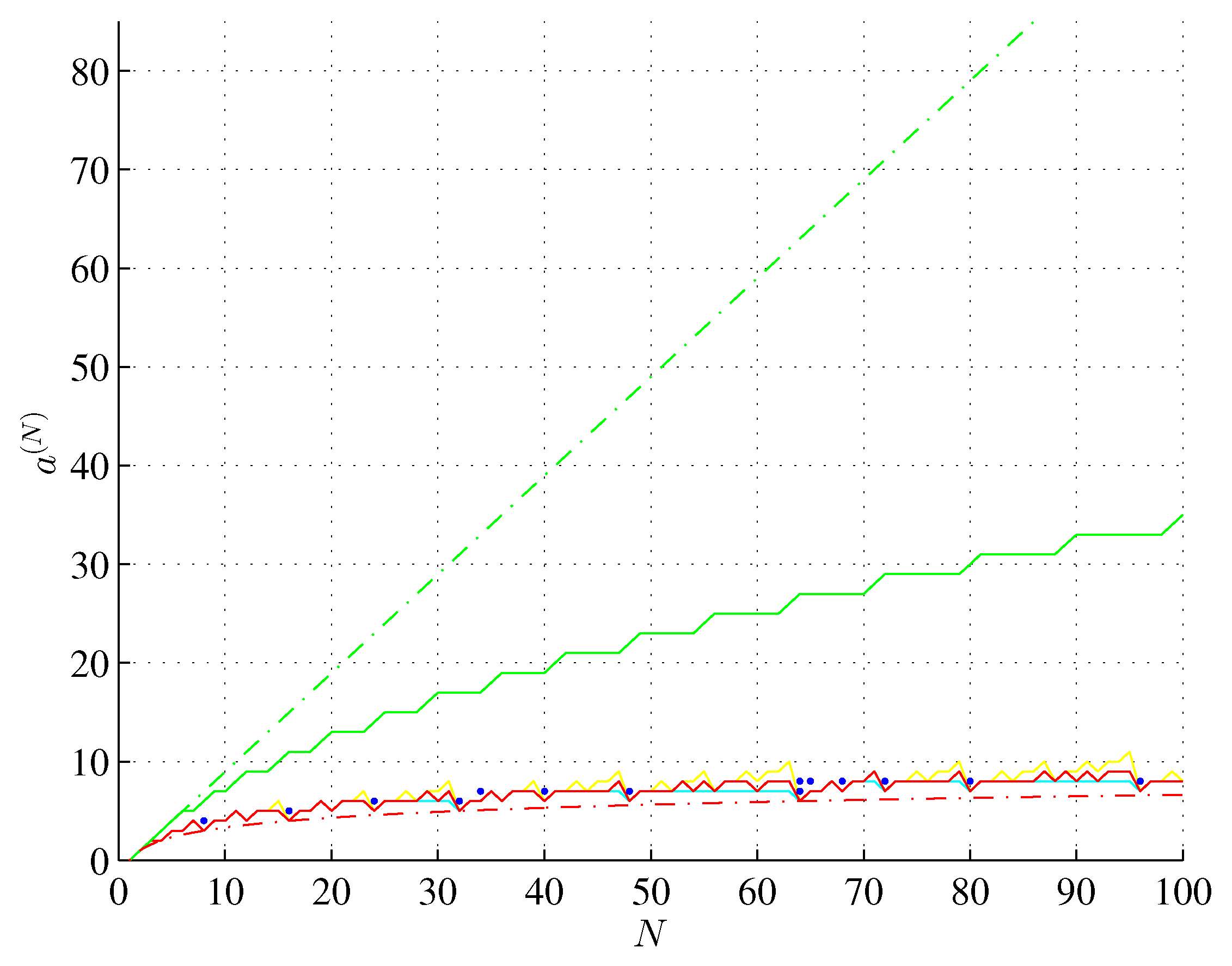

Figure 4.

Lower bound on the bitstring assembly index 2 (red) and (red, dash-dot), conjectured upper bound on the bitstring assembly index 1 (green), factual values of the bitstring assembly index (blue) and the ringed bitstring assembly index (cyan) and (green, dash-dot), for the bitstring length .

Figure 5.

Lower bound on the bitstring assembly index (red), (red, dash-dot), general rule (cyan), and OEIS A014701 (yellow); conjectured upper bound on the bitstring assembly index (green) and (green, dash-dot); and assembly indices of bitstrings assembled by trivial assembling programs (blue); for the bitstring length (see text for details).

Figure 6.

An illustrative graph of complexity against information capacity: orange regions are impossible, as they are above or below the assembly index general bounds, yellow region indicates the bitstring assembly index bounds, green region contains structures that can be assembled by dissipative structures of nature, red region contains structures that can only be assembled by humans, blue circles and dots denote, respectively, the number of steps of suboptimally assembled bitstrings and their assembly indices, blue slanting and vertical lines denote, respectively, creative and optimization paths of evolution of information (figure not to scale; see text for details).

Table 1.

Distribution of the assembly indices for .

| | | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|---|
| 2 | 4 | 1 | | 2 | | 1 |
| 3 | 12 | | 4 | 4 | 4 | |
| | 16 | 1 | 4 | | 4 | 1 |
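The row totals of Table 1 (4 and 12, summing to 16) can be cross-checked by exhaustive search. The sketch below is a brute-force illustration, not the authors' algorithm: it assumes that an assembly step concatenates any two strings already in the pool, that the pool starts with the symbols 0 and 1, and that the table refers to bitstrings of length 4 (inferred from the total of 16). The name `assembly_index` is hypothetical.

```python
from collections import Counter
from itertools import product


def assembly_index(target):
    """Smallest number of joining steps that builds `target` from '0' and '1'."""
    if len(target) <= 1:
        return 0
    # Only contiguous substrings of the target can occur in a useful pathway.
    substrings = {target[i:j]
                  for i in range(len(target))
                  for j in range(i + 1, len(target) + 1)}

    def reachable(pool, steps_left):
        if target in pool:
            return True
        if steps_left == 0:
            return False
        candidates = {a + b for a in pool for b in pool} & substrings
        return any(reachable(pool | {c}, steps_left - 1)
                   for c in candidates - pool)

    basics = frozenset({"0", "1"})
    # Iterative deepening: N - 1 steps always suffice (append one bit at a time).
    for limit in range(1, len(target)):
        if reachable(basics, limit):
            return limit


distribution = Counter(assembly_index("".join(bits))
                       for bits in product("01", repeat=4))
print(dict(distribution))  # {2: 4, 3: 12}
```

The search is exponential in the bitstring length, so it is only practical for short strings.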

Table 2.

balanced bitstrings .

| k | | |
|---|---|---|
| 1 | (01)(01) | 2 |
| 2 | (10)(10) | 2 |
| 3 | 0110 | 3 |
| 4 | 1100 | 3 |
| 5 | 1001 | 3 |
| 6 | 0011 | 3 |

Table 3.

Bitstring length N, number of all bitstrings , number of balanced bitstrings , number of ringed bitstrings , and number of balanced ringed bitstrings .

| N | All bitstrings | Balanced bitstrings | Ringed bitstrings | Balanced ringed bitstrings | |
|---|---|---|---|---|---|
| 1 | 2 | 1 | 2 | 1 | 1 |
| 2 | 4 | 2 | 3 | 1 | 2 |
| 3 | 8 | 3 | 4 | 1 | 3 |
| 4 | 16 | 6 | 6 | 2 | 3 |
| 5 | 32 | 10 | 8 | 2 | 5 |
| 6 | 64 | 20 | 14 | 4 | 5 |
| 7 | 128 | 35 | 20 | 5 | 7 |
| 8 | 256 | 70 | 36 | 10 | 7 |
| 9 | 512 | 126 | 60 | 14 | 9 |
| 10 | 1024 | 252 | 108 | 26 | |
| 11 | 2048 | 462 | 188 | 42 | 11 |
| 12 | 4096 | 924 | 352 | 80 | 11.55 |
| 13 | 8192 | 1716 | 632 | 132 | 13 |
| 14 | 16384 | 3432 | 1182 | 246 | |
| 15 | 32768 | 6435 | 2192 | 429 | 15 |
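The first four count columns of Table 3 can be reproduced by direct enumeration for small N. The sketch below rests on two assumptions that are consistent with the tabulated numbers but are not stated in this section: a balanced bitstring of length N contains ⌊N/2⌋ ones, and two ringed bitstrings are the same if one is a rotation of the other (reflections are not identified). The helper names are illustrative.

```python
from itertools import product


def canonical_rotation(bits):
    """Lexicographically smallest rotation, used as the representative of a ring."""
    return min(bits[i:] + bits[:i] for i in range(len(bits)))


def counts(n):
    """Return (all, balanced, ringed, balanced ringed) counts for length n."""
    strings = ["".join(b) for b in product("01", repeat=n)]
    balanced = [s for s in strings if s.count("1") == n // 2]  # assumption
    ringed = {canonical_rotation(s) for s in strings}
    balanced_ringed = {canonical_rotation(s) for s in balanced}
    return len(strings), len(balanced), len(ringed), len(balanced_ringed)


for n in range(1, 11):
    print(n, *counts(n))
```

Enumeration grows as 2^N, so a closed-form or Burnside-type count would be preferable for larger lengths.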

Table 4.

Distribution of assembly indices among balanced ringed bitstrings for .

| N | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 4 | 2 | 1 | 1 | | | | | |
| 5 | 2 | | 1 | 1 | | | | |
| 6 | 4 | | 1 | 2 | 1 | | | |
| 7 | 5 | | | 2 | 3 | | | |
| 8 | 10 | | 1 | 1 | 6 | 2 | | |
| 9 | 14 | | | 1 | 4 | 7 | 2 | |
| 10 | 26 | | | 1 | 6 | 9 | 10 | |
| 11 | 42 | | | | 2 | 14 | 20 | 6 |

Table 5.

The lower bound on the bitstring assembly index (OEIS A003313).

| N | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 0 | 1 | 2 | 2 | 3 | 3 | 4 | 3 | 4 | 4 | 5 | 4 | 5 | 5 | 5 | 4 | 5 | 5 | 6 | 5 | 6 |
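Table 5 identifies the lower bound with OEIS A003313, the length of a shortest addition chain for N. The values can be recomputed with a small iterative-deepening search, sketched below; this is a generic textbook-style search, not necessarily the procedure used by the authors, and the function name is illustrative.

```python
def shortest_addition_chain_length(n):
    """Length of a shortest addition chain for n (OEIS A003313)."""
    if n == 1:
        return 0

    def extend(chain, steps_left):
        last = chain[-1]
        if last == n:
            return True
        # Each step can at most double the largest element of the chain.
        if steps_left == 0 or last << steps_left < n:
            return False
        for i in range(len(chain) - 1, -1, -1):
            for j in range(i, -1, -1):
                nxt = chain[i] + chain[j]
                # Shortest chains can be taken strictly increasing.
                if last < nxt <= n and extend(chain + [nxt], steps_left - 1):
                    return True
        return False

    limit = 1
    while not extend([1], limit):
        limit += 1
    return limit


print([shortest_addition_chain_length(n) for n in range(1, 22)])
# [0, 1, 2, 2, 3, 3, 4, 3, 4, 4, 5, 4, 5, 5, 5, 4, 5, 5, 6, 5, 6]
```

The connection to bitstrings is that every join adds the lengths of two available parts, so the lengths appearing along any assembly pathway form an addition chain ending in N.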

Table 6.

List of the shortest addition chain sequence generating factors for .

| s | | The shortest addition chain sequence generating factors |
|---|---|---|
| 1 | 2 | |
| 2 | 4 | |
| 3 | 8 | |
| 4 | 16 | |
| 5 | 32 | |

Table 7.

Number of exceptional values , and the number of not generated by general rules for .

| s | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 0 | 0 | 0 | 0 | 2 | 9 | 30 | 80 | 193 | 432 | 925 | 1928 | 3953 | 8024 | 16189 | 32544 |
| | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 | 16384 | 32768 |
| | 0 | 0 | 0 | 1 | 7 | 21 | 51 | 113 | 239 | 493 | 1003 | 2025 | 4071 | 8165 | 16355 | 32737 |
| | | | | | 13 | 13 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 |

Table 8.

Exemplary balanced bitstrings that have a largest assembly index. Conjectured () form of the largest assembly index and its factual values for ringed () and non-ringed () bitstrings (red if below the conjectured value, green if above).

| N | Bitstring | Conjectured | Ringed | Non-ringed |
|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 |
| 2 | 10 | 1 | 1 | 1 |
| 3 | 001 | 2 | 2 | 2 |
| 4 | 0011 | 3 | 3 | 3 |
| 5 | 00011 | 4 | 4 | 4 |
| 6 | 000111 | 5 | 5 | 5 |
| 7 | 0011100 | 5 | 5 | 6 |
| 8 | 00010111 | 6 | 6 | 6 |
| 9 | 000011101 | 7 | 7 | 7 |
| 10 | 0000111101 | 7 | 7 | 8 |
| 11 | 00000101111 | 8 | 8 | 8 |
| 12 | 111000101100 | 9 | 8 | 8 |
| 13 | 0000001011111 | 9 | 9 | 9 |
| 14 | 00000101011111 | 9 | 9 | 9 |
| 15 | 000001010111110 | 10 | 10 | 10 |
| 16 | 1000000101011111 | 11 | 10 | 10 |
| 17 | 00000010101111110 | 11 | 11 | 11 |
| 18 | 100111110110000010 | 11 | 11 | 12 |
| 19 | 1000010101001111101 | 12 | 11 | 12 |
| 20 | 10100111110110000010 | 13 | 12 | 13 |

Table 9.

3-bit elegant programs assembling bitstrings with .

| Q | | | | N |
|---|---|---|---|---|
| | | | | 5 |
| | | | | 6 |
| | | | | 6 |
| | | | | 8 |