1. Introduction

Pork occupies a significant place in the global diet, and large numbers of swine are bred each year according to the United States Department of Agriculture (USDA). Pig welfare is a persistent research topic in the meat industry, but monitoring welfare conditions is exhaustive work that demands a considerable human workforce [1]. At the same time, the demand for higher pork productivity and quality underscores the need to move beyond traditional practices [2] [3].

Our primary goal is to improve the accuracy of emotion recognition in pigs, which has significant implications for animal welfare through early stress detection and preventive measures. In response to this need, the Pig Emotion Recognition (PER) system applies deep learning algorithms and neural networks to animal husbandry. The PER system is a viable and scalable alternative for the farming industry, capable of detecting pig emotions without human observers [4]. Its integration into livestock management could significantly reduce workforce expenses and time while potentially exceeding human observers in accuracy.

Breakthroughs in deep neural networks, such as Visual Geometry Group (VGG) [5], Inception [6], ResNet [7], MobileNet [8], and Xception [9], have spurred dramatic advances in sectors like healthcare and traffic infrastructure [10] [11]. These convolutional neural networks (CNNs) are now poised to transform automated agricultural machinery by overcoming the pitfalls of traditional methods, such as laborious tasks and subjective judgments [12]. Prior research on facial emotion recognition highlights that substantial, well-preprocessed datasets are essential for good performance, even with the most advanced architectures [13] [15] [16].

However, building a robust PER system requires a substantial investment of time and effort to collect high-quality data samples. Collaboration between deep learning scientists and agricultural research institutes often lies at the core of this data collection process. A reliable and efficient PER system needs both an exhaustive dataset and a strong deep neural network. Acquiring a PER dataset is the indispensable first step, followed by careful curation of that dataset for training state-of-the-art neural networks, which in turn improves the PER system’s performance and accuracy. Furthermore, most datasets are extremely unbalanced. A balanced PER dataset is rare, and removing samples from the majority class to restore balance would waste potentially useful training data.

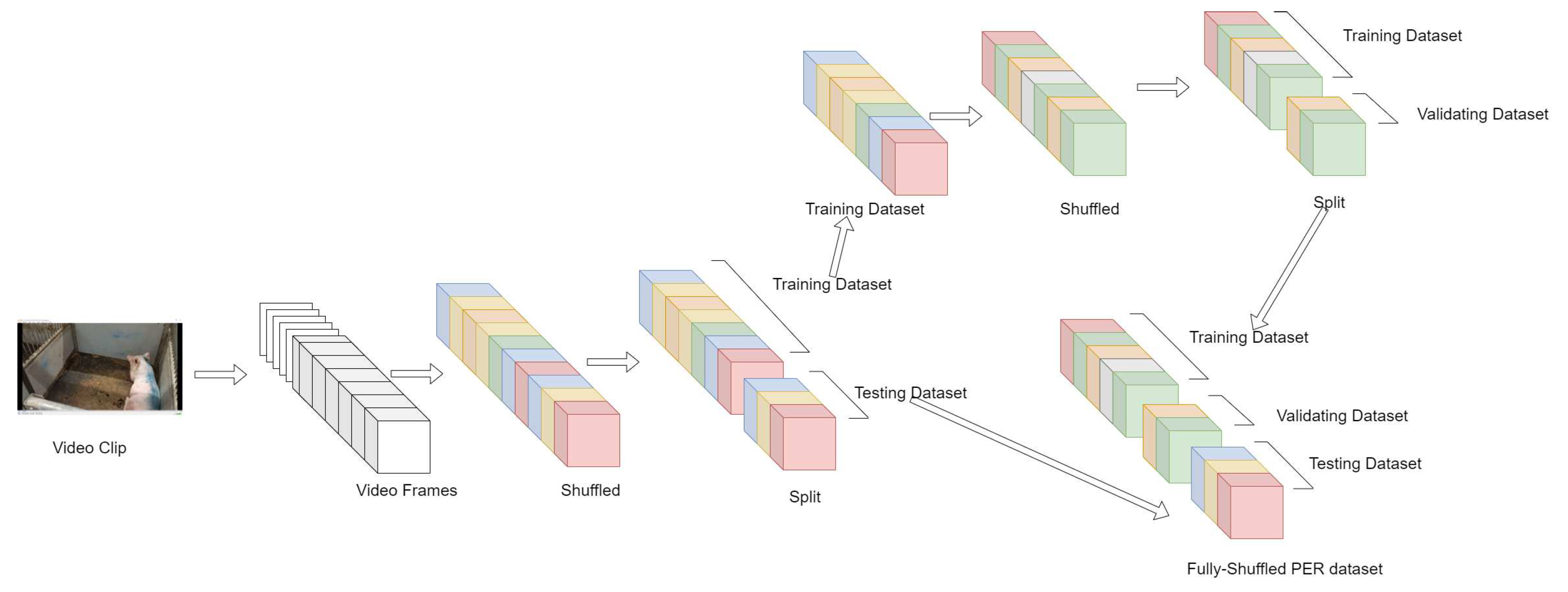

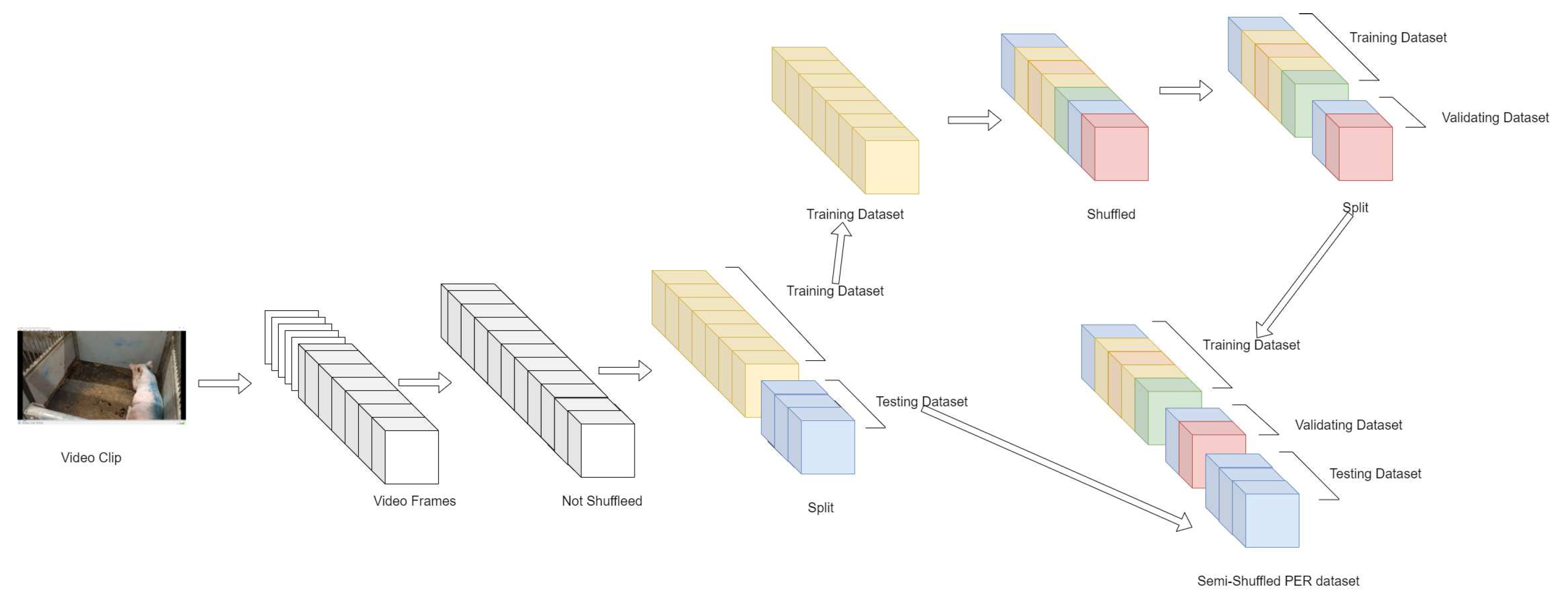

A thorough dataset analysis requires eliminating superfluous video frames, background pixels, and unrelated subjects to maximize neural network performance. Sequential images pose a particular challenge, because consecutive frames may look nearly identical when animals have limited mobility in confined spaces. Randomly shuffling such a dataset into training and testing groups is a pitfall: it leads to skewed experimental results and unreliable classification in real-world scenarios. Addressing these issues clarifies the potential impact of deep neural networks on large-scale livestock management and marks a shift towards more efficient and reliable agricultural solutions [13].

Pig datasets obtained from animal experimental facilities, industrial pig farming facilities, and academic research institutions often comprise unprocessed video clips with features irrelevant to pig emotions. Moreover, because the pigs have limited mobility, these samples typically do not show the pig in an open, uncluttered space. Our contribution lies in applying partitioning methods to a PER dataset made of images generated sequentially from video clips. Partitioning is especially challenging for sequential data, and our semi-shuffle method addresses this challenge. Shuffling sequential images and then dividing them into training and testing groups can appear to train and test the PER model adequately, but the trained PER model might falter in real-life scenarios because near-identical samples are shared between the training and testing datasets. This paper underscores the need for a test partition that the model has not yet encountered, which better simulates real-world deployment. Cross-validation is a method for resampling data into training and testing sets [14], and it is a useful tool for checking whether the reported accuracy misrepresents practical performance. However, randomly shuffling time-sequential images can still yield almost perfect performance, because near-duplicate frames end up in both the training and testing groups and bias the interpretation.
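The leakage described above can be illustrated with a small sketch. It assumes frames are identified only by their position in a clip and that neighbouring frames are near-duplicates. The helper names (`random_split`, `contiguous_split`, `leaked_fraction`) are hypothetical and are not taken from the paper's implementation.

```python
import random


def random_split(n_frames, test_ratio, seed=0):
    """Shuffle all frame indices, then cut off a test set (the leaky baseline)."""
    indices = list(range(n_frames))
    random.Random(seed).shuffle(indices)
    n_test = int(n_frames * test_ratio)
    return sorted(indices[n_test:]), sorted(indices[:n_test])


def contiguous_split(n_frames, test_ratio):
    """Hold out the last contiguous stretch of frames as the test set."""
    n_test = int(n_frames * test_ratio)
    cut = n_frames - n_test
    return list(range(cut)), list(range(cut, n_frames))


def leaked_fraction(train, test, gap=1):
    """Fraction of test frames that have a training frame within `gap` positions."""
    train_set = set(train)
    leaked = 0
    for t in test:
        # Assumption: a frame within `gap` positions is a near-duplicate.
        if any((t + d) in train_set for d in range(-gap, gap + 1) if d != 0):
            leaked += 1
    return leaked / len(test)


if __name__ == "__main__":
    for name, (train, test) in {
        "random": random_split(1000, 0.2),
        "contiguous": contiguous_split(1000, 0.2),
    }.items():
        print(name, leaked_fraction(train, test))
```

With a fully random split, most test frames sit next to a training frame. With a contiguous hold-out, only the frame at the boundary does, which is closer to evaluating on footage the model has not seen.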

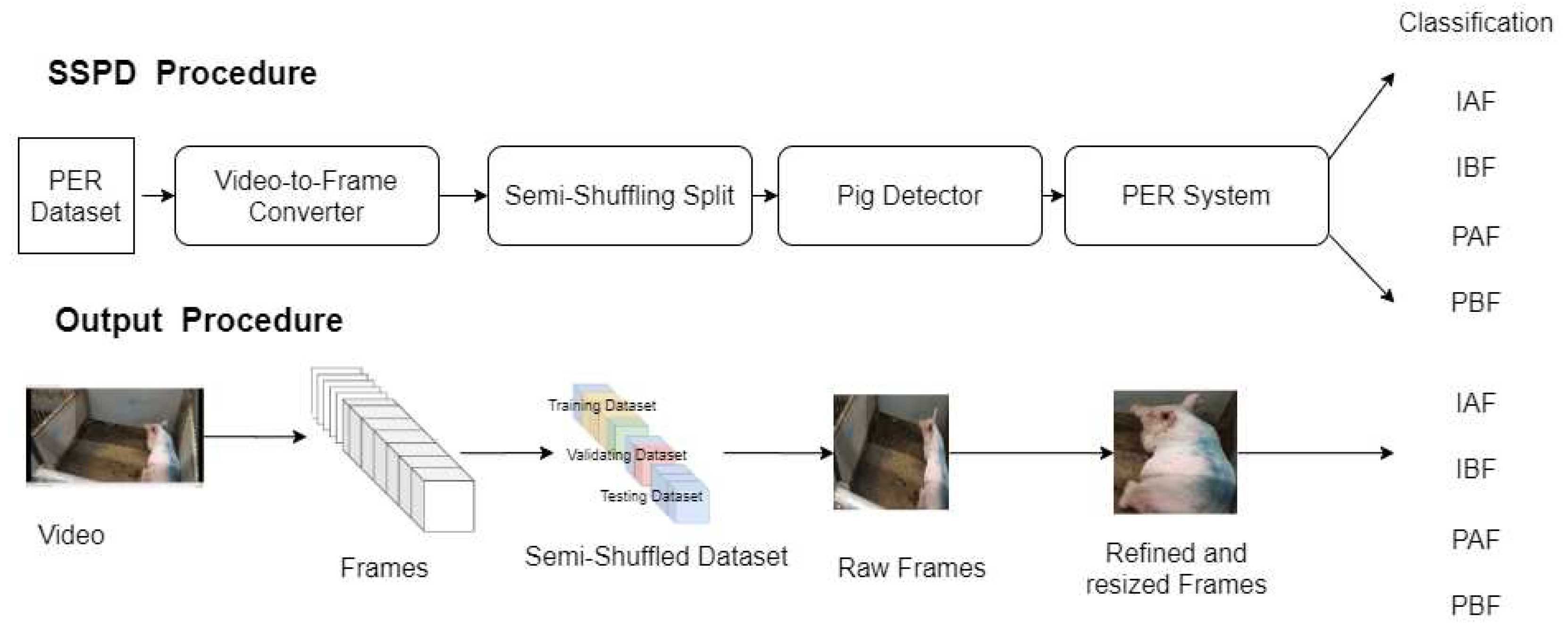

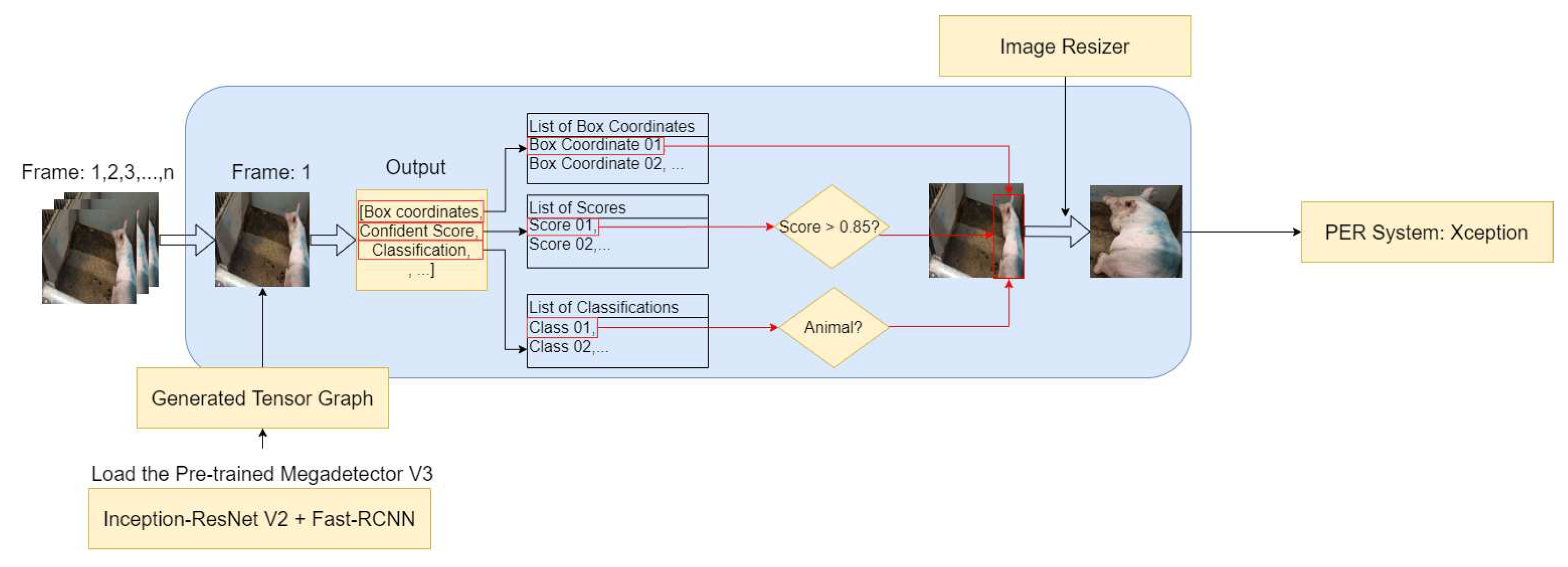

To mitigate this issue, we propose the Semi-Shuffling and Pig Detector (SSPD)-PER dataset, intended to produce less biased experimental results. Our contributions are as follows:

The SSPD-PER addresses sequentially generated images from video clips and prevents test samples from being shared with the PER model during training, which makes the evaluation more objective.

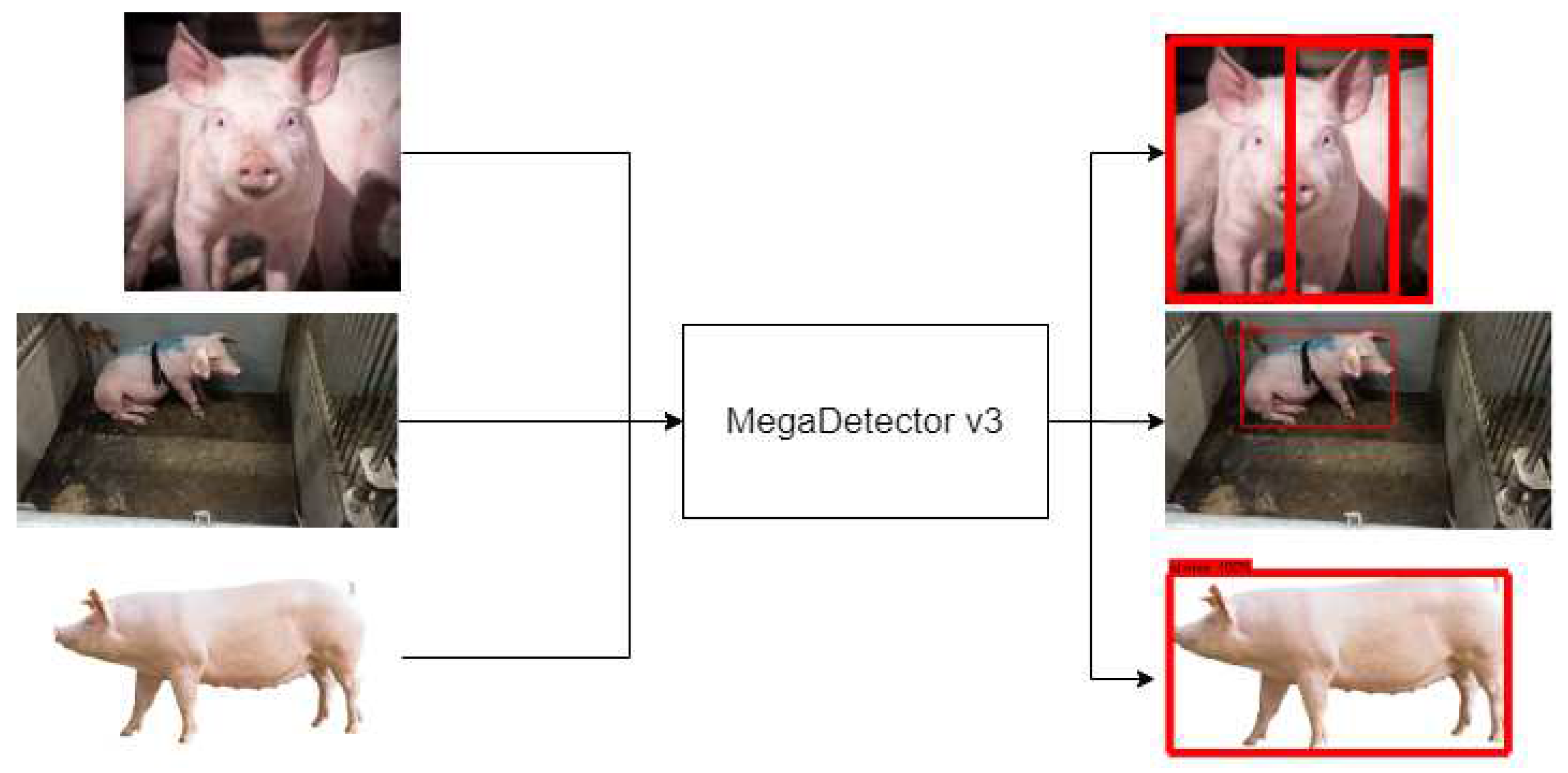

The SSPD-PER discards a significant number of irrelevant pixels before training the Xception model, allowing focus on the pig region in an image and training the PER model with only pertinent information.

The Xception architecture, known for its lightweight design and competitive results, can be trained with the SSPD-PER dataset, potentially outperforming existing simple-CNN architectures [13].

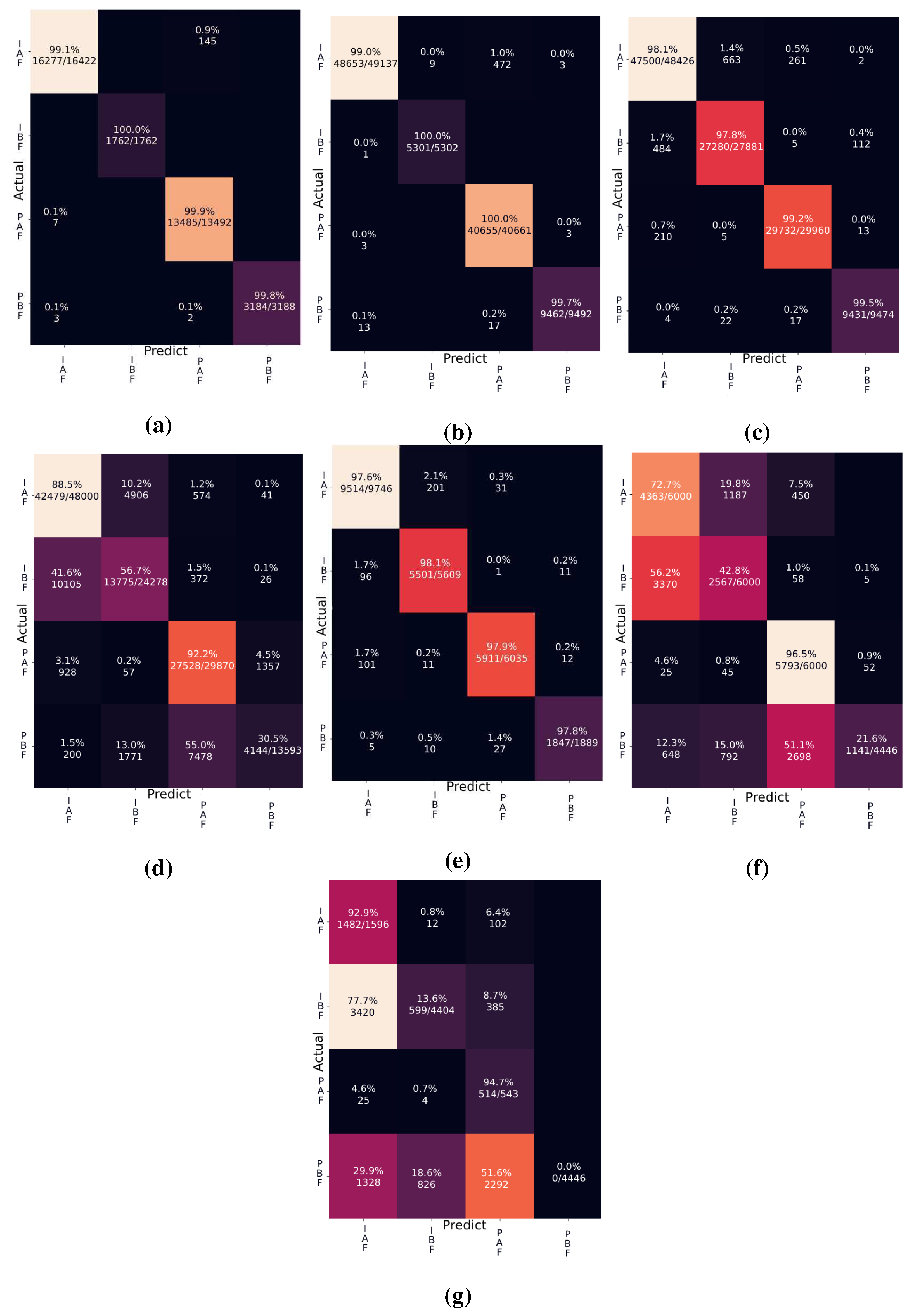

Implementing the SSPD-PER improved accuracy in the isolation-after-feeding class and revealed the true F1-score, indicating the need for further management of the dataset’s classification scheme. Collectively, our proposed preprocessing techniques, coupled with the Xception architecture, pave the way for a more accurate and reliable PER model in real-world applications.

The rest of the paper is organized as follows. Section 2 compares our work with other pig-related approaches. Section 3 explains our proposed preprocessing techniques, semi-shuffling and the pig detector, which are applied before training the Xception architecture. Section 4 presents the experimental results. Section 5 discusses the findings. Finally, Section 6 summarizes our proposed methods.

2. Related Work

A notable body of research within the realm of pig welfare underscores the confluence of various aspects such as pig weight, pig tag number identification, symptoms of stress, and social status. Such contributions offer a vantage point into the wellbeing of pigs, serving as a cornerstone in improving their living conditions. Critical engagement with these research findings propels the discourse on pig welfare, enabling a better understanding of the emotional states of these animals, an underappreciated yet pivotal area of study.

Recent work examines the interplay of social dynamics among pigs and highlights how important understanding these interactions is for gauging overall pig welfare [17]. Discomfort and stress are immediate by-products of surgical procedures, notably the castration of male pigs, and demand immediate attention for welfare improvement [18] [19]. On the technology side, implantable telemetric devices have emerged as tools to monitor pig emotions by tracking heart rate, blood pressure, and receptor variation [20]. Animal and agricultural scientists are devoting increasing attention to understanding animal emotional expressions. The research conducted by Lezama Garcia et al. [21] underscores the relationship between facial expressions, combined poses, and the welfare of domestic animals such as pigs. Close inspection of animal facial expressions and body poses can furnish valuable insights into their emotional states, thereby enhancing post-surgical treatment and food safety standards. Building on this premise, convolutional neural networks (CNNs) and machine learning algorithms have been employed to detect pig curvature and estimate pig weight and emotional valence [22]. Additionally, architectures such as the ResNet 50 neural network and CNN-LSTM have been applied to detect and track pigs, measure the valence of emotional situations in pigs through vocal frequency, and sequentially monitor and detect aggressive behaviors in pigs [23]. Neethirajan [24] highlighted that the postures of the eyes, ears, and snout could help determine negative emotions in pigs using YOLO architectures combined with RCNN. Briefer et al. [25] used high and low frequencies to identify negative and positive valence in pigs with a ResNet 50 neural network, a CNN-based architecture. Hansen et al. [26] showed that frontal images capture the eyelid region and that heat maps help a deep convolutional neural network determine whether pig stress levels are increasing and thereby degrading overall pig welfare.

Hakansson et al. [27] utilized CNN-LSTM to sequentially monitor and detect aggressive pig behaviors, such as biting, allowing farmers to intervene before the situation worsens. Imfeld-Mueller et al. [28] used high vocal frequency to measure the valence of emotional situations for pigs. Capuani et al. [29] applied a CNN with an extremely randomized trees (ERT) classifier to discern positive and negative emotions in swine vocalization through machine learning. Wang et al. [30] employed a lightweight CNN-based model for early warning in sow oestrus sound monitoring. Ocepek et al. [31] used the YOLOv4 model, while Xu et al. [32] applied ResNet 50 for pig face recognition. Ocepek et al. also applied Mask R-CNN to remove irrelevant segments from pig images. However, the pre-trained Mask R-CNN does not include a pig class, and the authors trained with only 533 "pig-like" images and tested with 50 "pig-like" samples. Their approach is therefore less reliable for extracting pig regions than Megadetector v3, which is trained with millions of samples. Removing irrelevant pixels is the key to standardizing our PER dataset, so that the trained model focuses primarily on relevant information. Removing all extraneous information completely might improve the accuracy further; our methods, however, already remove a large portion of the extraneous pixels before the model’s training procedure. The extraneous pixels account for more than 80% of an image. Without the Megadetector, this large portion of extraneous pixels would cause the model to focus mainly on extraneous information and ultimately misrepresent its performance. The small portion of extraneous pixels that remains will not affect the model performance significantly.
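The pixel-removal step can be sketched as a crop to a detector's bounding box. The sketch below assumes the detector returns one box per frame as normalized `(x_min, y_min, width, height)` values. The function names are hypothetical, the image is modelled as a plain list of pixel rows, and no detector is actually called.

```python
def to_pixel_box(box, width, height):
    """Convert a normalized (x_min, y_min, box_w, box_h) box to pixel corners."""
    x_min, y_min, box_w, box_h = box
    # Clamp to the frame so a slightly oversized box cannot index out of range.
    x0 = max(0, round(x_min * width))
    y0 = max(0, round(y_min * height))
    x1 = min(width, round((x_min + box_w) * width))
    y1 = min(height, round((y_min + box_h) * height))
    return x0, y0, x1, y1


def crop(image, pixel_box):
    """Crop an image stored as a list of rows (each row a list of pixels)."""
    x0, y0, x1, y1 = pixel_box
    return [row[x0:x1] for row in image[y0:y1]]


def extraneous_fraction(pixel_box, width, height):
    """Share of the frame's pixels that fall outside the box."""
    x0, y0, x1, y1 = pixel_box
    return 1.0 - ((x1 - x0) * (y1 - y0)) / (width * height)


if __name__ == "__main__":
    width, height = 200, 100
    frame = [[0] * width for _ in range(height)]
    box = (0.25, 0.5, 0.5, 0.4)  # hypothetical detector output
    pixel_box = to_pixel_box(box, width, height)
    pig_region = crop(frame, pixel_box)
    print(len(pig_region), len(pig_region[0]))
    print(extraneous_fraction(pixel_box, width, height))
```

In this toy frame the box covers one fifth of the image, so four fifths of the pixels would be discarded before training, which is the order of magnitude the text reports.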

Concurrent advances, such as the application of the YOLOv4 model and Mask R-CNN, have improved pig face recognition and image processing. Nevertheless, the pre-trained Mask R-CNN does not include a pig class, which makes the approach unreliable for extracting pig segmentation. As noted above, removing the remaining extraneous information completely might improve accuracy further, but our methods already remove a large portion of extraneous pixels before the model’s training process. Ahn et al. [33] used the tiny YOLOv4 model to detect pigs from a top-view camera. Low et al. [34] tested CNN-LSTM with a ResNet50 backbone for full-body pig detection from a top-view camera. Colaco et al. [35] [36] used a PER dataset containing thermal images, instead of color or grayscale images, and trained their proposed depth-wise separable architecture. These studies demonstrate the wide array of techniques employed in pig emotion recognition and emphasize the importance of understanding and monitoring pig welfare.

In recent years, researchers have made impressive progress in improving pig welfare conditions. Central to this progress are in-depth studies of pig facial expressions, body poses, social relationships, and feeding intervals. Our proposed recognition system seeks to continue this trend, aiming to improve pig welfare through accurate recognition and monitoring of pig emotions, and it draws on the diverse methodologies and architectures in the existing literature. Key among these considerations is the impact of feeding intervals and the manipulation behavior of pen mates on pig behavior [17, 21, 22, 23, 24, 25, 27, 31, 35, 36]. Restrictive feeding can lead to increased aggression in pigs, resulting in antagonistic social behavior when interacting with other pigs. To develop solutions and animal welfare monitoring platforms that address aggression and tail biting, it is essential to understand these effects. Abnormal behavior in pigs may be attributed to the redirection of the pig’s exploratory behavior, which depends on factors such as the ability to interact with pen mates when grouped or kept in isolation.

Based on the effects of feeding intervals and access to socializing conditions on pig behavior, we propose four specific treatments: isolation after feeding (IAF), isolation before feeding (IBF), paired after feeding (PAF), and paired before feeding (PBF). These treatments are designed to show how different feeding and socializing conditions shape pigs’ emotions and behavior. By studying pig behavior under varied conditions, we aim to enable researchers and farmers to create environments that uphold pig welfare and mitigate negative emotional experiences. This pursuit underscores the importance of continued research and engagement with innovative technologies in the sphere of pig welfare.

5. Discussion

In our study, we found that shuffling sequential training and testing data derived from video clips may obstruct an accurate evaluation. Our experimental results clarify the link between emotion classification performance and the refinement process within our Semi-Shuffling and Pig Detector - Pig Emotion Recognition (SSPD-PER) system. While the distinction between isolated and paired instances becomes more discernible, the distinction between ’after’ and ’before’ feeding remains unclear throughout the SSPD-PER process. We recognize that the classification of Isolation After Feeding (IAF), Isolation Before Feeding (IBF), Paired After Feeding (PAF), and Paired Before Feeding (PBF) does not provide a direct measure of pig emotions. However, among the existing classes, PAF represents the most positive emotion, while IBF indicates the most negative. To advance real-time pig welfare monitoring, further research into the reliability of pig feeding conditions as emotion labels is indispensable.

Concerning the practical applicability of our classifier, our primary goal is to enhance the precision of emotion recognition in pigs. This advance holds substantial implications for animal welfare, because it facilitates the identification and mitigation of stress and distress at any time of day. Moreover, the pig husbandry industry requires production efficiency around the clock. As global pork demand increases, the conventional approach of hiring additional farmworkers may not suffice to meet production goals. Hence, our research underscores the need for automated solutions of this kind to support sustainable and humane growth.

5.1. Enhancing Objectivity: The Role of Semi-Shuffling in Reducing Bias in Experimental Outcomes

The semi-shuffling technique is a method used in machine learning and statistics to reduce bias in the results. The technique is particularly useful in situations where the data may have some inherent order or structure that could potentially introduce bias into the analysis.

Data Shuffling: In a typical machine learning process, data is often shuffled before it’s split into training and test sets. This is done to ensure that the model is not influenced by any potential order in the data. For example, if you’re working with a time series dataset and you don’t shuffle the data, your model might simply learn to predict the future based on the past, which is not what you want if you’re trying to identify underlying patterns or relationships in the data.

Semi-Shuffling: However, in some cases, completely shuffling the data might not be ideal. For example, if you’re working with time series data, completely shuffling the data would destroy the temporal relationships in the data, which could be important for your analysis. This is where the semi-shuffling technique comes in. Instead of completely shuffling the data, you only shuffle it within certain windows or blocks. This allows you to maintain the overall structure of the data while still introducing some randomness to reduce bias.

The semi-shuffling technique contributes to less biased results by ensuring that the model is not overly influenced by any potential order or structure in the data. By introducing some randomness into the data, the technique helps to ensure that the model is learning to identify true underlying patterns or relationships, rather than simply memorizing the order of the data.
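The block-wise shuffling described in this subsection can be sketched as follows. This is one possible reading of "shuffle within windows or blocks", not necessarily the exact procedure used for the SSPD-PER dataset. The function name `semi_shuffle` and the `block_size` parameter are assumptions made for illustration.

```python
import random


def semi_shuffle(items, block_size, seed=0):
    """Shuffle items only within consecutive blocks of `block_size`.

    The order of the blocks is preserved, so the coarse temporal
    structure survives while the order inside each block is randomized.
    """
    rng = random.Random(seed)
    shuffled = []
    for start in range(0, len(items), block_size):
        block = list(items[start:start + block_size])
        rng.shuffle(block)
        shuffled.extend(block)
    return shuffled


if __name__ == "__main__":
    frames = list(range(10))
    print(semi_shuffle(frames, block_size=4))
```

Because every frame stays inside its original block, whole blocks can later be assigned to either the training or the testing group without neighbouring frames crossing that boundary.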

It’s important to note that while the semi-shuffling technique can help to reduce bias, it’s not a silver bullet. It’s just one tool in a larger toolbox of techniques for reducing bias in machine learning and statistical analysis. Other techniques might include things like cross-validation, regularization, and feature selection, among others.

5.2. SSPD-PER Method’s Promising Impact on Pig Well-being and the Challenges of its Real-world Implementation

The SSPD-PER method is poised to significantly enhance pig welfare in real-world settings by providing a more nuanced understanding of pig emotions. In leveraging deep learning technology, the SSPD-PER can reliably detect and interpret pig emotions, thereby allowing for timely identification of distress or discomfort in pigs. This ability not only aids in improving the animals’ well-being but also aids farmers in optimizing their livestock management practices, leading to increased productivity and improved animal welfare standards.

Implementing the SSPD-PER method could serve as a revolutionary tool in early disease detection and stress management, improving the pigs’ quality of life. An accurate understanding of animal emotions could inform and influence farmers’ decisions regarding feeding times, living conditions, and social interactions among the animals, thereby enabling more humane and considerate treatment.

However, several challenges may arise during the implementation of the SSPD-PER method in field settings. Firstly, the quality of data inputs is paramount for the successful application of this method. The video footage must be clear and free of environmental noise to ensure accurate recognition of pig emotions. Achieving this quality consistently in diverse farm settings might prove challenging due to varying lighting conditions, possible obstruction of camera view, or pig movements.

Secondly, the system’s effective integration into current farm management practices may be another hurdle. Farmers and livestock managers would need training to understand the data generated by the SSPD-PER method and apply it to their day-to-day decision-making. They may need to adapt to new technology, which can take time and resources.

Lastly, there are technical considerations tied to the processing power required to run sophisticated algorithms, the requirement of stable internet connectivity for cloud-based analysis, and the potential for high initial setup costs. Addressing these challenges would be critical to making this advanced technology widely accessible and effective in enhancing pig welfare across diverse farming operations. Future research could focus on optimizing the SSPD-PER system for more seamless integration into existing farming operations and making it more accessible for different scales of farming enterprises.

5.3. Deciphering Relevance: Criteria for Eliminating Extraneous Elements in Video Data Analysis

The criteria used for determining irrelevant video frames, extraneous background pixels, and unrelated subjects in our study are based on multiple factors, primarily focused on the relevancy to pig emotion recognition.

Irrelevant Video Frames: Frames were deemed irrelevant if they did not contain any information useful for understanding pig emotions. For instance, frames in which pigs are not visible, or in which their emotional indicators, such as facial expressions or body postures, are obstructed or not discernible, were classified as irrelevant. Similarly, frames in which pigs are asleep or inactive might not contribute much towards emotion recognition and thus could be categorized as irrelevant.

Extraneous Background Pixels: Pixels were deemed extraneous if they belonged to the background or objects within the environment that do not contribute to pig emotion recognition. This includes elements such as the pig pen structure, feeding apparatus, or any other non-pig related objects in the frame. The goal here is to focus the machine learning algorithm’s attention on the pigs and their emotions, minimizing noise or distraction from irrelevant elements in the environment.

Unrelated Subjects: Any object or entity within the frame that is not the pig whose emotions are being monitored is considered an unrelated subject. This could include other animals, farm personnel, or any moving or stationary object in the background that could potentially distract from or interfere with the accurate recognition of pig emotions.

The criteria for determining irrelevance in each of these cases are defined based on the specific task at hand, i.e., emotion recognition in pigs, and the unique attributes of the data, including the specific conditions of the farm environment, the pigs’ behavior, and the quality and angle of the video footage. These parameters can be fine-tuned and adapted as needed to suit different situations or requirements.

5.4. Outshining Competition: Benchmarking the Xception Architecture’s Competitive Edge in Lightweight Design

The Xception architecture, renowned for its lightweight design, has proven to be highly competitive in various applications. By leveraging depth-wise separable convolutions, Xception promotes efficiency and reduces computational complexity, enabling faster, more efficient training and deployment even on less powerful hardware.

When compared to other architectures, Xception’s advantages come to the fore. For example, traditional architectures like VGG and AlexNet, while powerful, are comparatively heavier, requiring more computational resources and often leading to longer training times. Newer architectures like ResNet and Inception address some of these challenges, but they may not match the efficiency and compactness offered by Xception.

Furthermore, Xception’s design fundamentally differs from other models, allowing it to capture more complex patterns. While most architectures use standard convolutions, Xception replaces them with depth-wise separable convolutions, which allows it to capture spatial and channel-wise information separately. This distinction enables Xception to model more complex interactions with fewer parameters, enhancing its performance on a wide range of tasks.
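The parameter saving from depth-wise separable convolutions can be checked with a short calculation. The sketch below counts weights only (biases are ignored) for a single layer. The layer sizes are illustrative and are not taken from the Xception configuration used in this paper.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard k x k convolution: every output channel
    has its own k x k filter over every input channel."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depth-wise separable convolution: one k x k filter
    per input channel, followed by a 1 x 1 convolution that mixes channels."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 128, 256  # illustrative layer sizes
    std = standard_conv_params(k, c_in, c_out)
    sep = separable_conv_params(k, c_in, c_out)
    print(std, sep, round(std / sep, 2))
```

For this example layer, the separable form needs roughly one ninth of the weights of the standard convolution, which is the source of the "lightweight" label.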

However, it’s also important to mention that the choice of architecture depends on the specific task and the available data. For tasks involving more complex data or demanding higher accuracy, more powerful, albeit resource-intensive models like EfficientNet or Vision Transformer may be more appropriate. Conversely, for tasks requiring real-time performance or deployment on edge devices, lightweight models like MobileNet or Xception would be more suitable.

Looking ahead, future research could explore hybrid models that combine the strengths of various architectures or investigate more efficient architectures using techniques like neural architecture search. In the realm of animal emotion recognition, specifically, there is much potential for experimenting with novel architectures that can capture temporal patterns in behavior, which is a crucial aspect of emotion.

5.5. Translating Theory into Practice: Unveiling the Real-World Impact and Challenges of Novel Methods in Pig Welfare Enhancement

This research is poised to fundamentally reshape the landscape of livestock welfare, specifically regarding pigs, by employing the innovative Semi-Shuffling and Pig Detector (SSPD) method within the Pig Emotion Recognition (PER) system. The practical implications are far-reaching and multidimensional.

Foremost, the enhanced accuracy in recognizing and interpreting pig emotions, enabled by the SSPD-PER method, lays a solid foundation for improved pig welfare. By more accurately interpreting pig emotions, farmers and animal welfare specialists will be able to better identify signs of distress, pain, or discomfort in pigs. This early detection and intervention can prevent chronic stress and its related health issues, leading to better physical health and quality of life for the animals.

In addition, by understanding the emotional states of pigs, we can create more harmonious living environments that cater to their emotional and social needs, thus reducing the likelihood of aggressive behavior and fostering healthier social dynamics. This not only improves the animals’ overall welfare but also potentially increases productivity within the industry, as stress and poor health can negatively impact growth and reproduction.

Implementing the SSPD-PER method does, however, present potential challenges. One such challenge is the need for substantial investment in technology, infrastructure, and training. As the SSPD-PER method employs advanced machine learning algorithms and neural networks, it requires both the hardware capable of running these systems and personnel with the necessary technical knowledge to operate and maintain them.

Furthermore, as with any AI-based system, there may be ethical considerations and regulatory requirements to be met. Transparency in how data is collected, stored, and utilized will be essential, and safeguards should be in place to ensure privacy and ethical use of the technology.

Lastly, while the SSPD-PER system has shown promise in research settings, real-world applications often present unforeseen challenges, such as environmental variations, diverse pig behaviors, and operational difficulties in larger scale farms. Continuous refinement and optimization of the SSPD-PER method will be crucial in navigating these potential issues and ensuring its successful implementation in the field. While challenges are inherent in any new technology deployment, the potential benefits to pig welfare, agricultural efficiency, and productivity offered by the SSPD-PER method merit careful consideration and exploration.