1. Introduction

The rapid advancement of artificial intelligence, particularly generative AI, has created unprecedented workforce challenges and opportunities. The White House has identified AI education as a critical national priority, emphasizing the need for widespread AI literacy across the American workforce. This technological transformation necessitates new competencies, with prompt engineering emerging as an essential skill for effective human-AI collaboration (Fujitsu, 2024). Current estimates suggest AI could disrupt up to 300 million jobs globally in the next five years, with significant implications for developed economies like the United States.

The emergence of “agentic generative AI” introduces both opportunities and workforce challenges (Joshi et al., 2025). As organizations adopt AI technologies, the skills gap between current workforce capabilities and emerging requirements continues to widen across technical proficiency, strategic understanding, and ethical competency dimensions (IBM Institute for Business Value, 2024).

This paper provides a comprehensive analysis of prompt engineering and upskilling initiatives as strategic responses to the AI-driven skills gap, incorporating evidence from multiple sectors and proposing evidence-based policy recommendations.

2. Literature Review

The literature on AI workforce development reveals several key themes and research gaps. This review synthesizes findings from academic research, government reports, and industry implementations to establish a comprehensive understanding of current knowledge and identify areas requiring further investigation.

2.1. Theoretical Foundations of AI Workforce Development

The theoretical underpinnings of AI workforce development draw from multiple disciplines, including adult learning theory, technological adoption models, and organizational behavior. Chiekezie et al. (2024) provide a comprehensive framework for understanding how organizations can prepare workers for AI technologies through systematic training and professional development. Their research emphasizes the importance of continuous learning and adaptation in the face of rapid technological change.

The concept of prompt engineering as a discrete skill set has gained theoretical traction recently. Meskó (2023) establishes prompt engineering as an emerging critical skill for professionals, particularly in specialized fields like healthcare. This work provides a theoretical basis for understanding how human-AI interaction design differs from traditional technical skills and requires specialized pedagogical approaches. This basis is further supported by Schuckart (2024), who proposes a progressive framework for empowering the workforce through structured prompt engineering education.

2.2. Empirical Evidence on Training Effectiveness

Empirical research on AI training effectiveness is growing but remains limited. Bashardoust et al. (2024) conducted one of the first controlled field experiments examining prompt engineering education, demonstrating statistically significant improvements in task performance among journalists who received structured training. This study provides crucial empirical support for the value of formal prompt engineering education.

Corporate implementations offer additional empirical evidence. Boesen (2024) documents the large-scale implementation of prompt engineering training in financial services, showing how targeted technical education can accelerate AI adoption and improve workforce competency. These real-world implementations provide valuable insights into scalable training models and their organizational impacts.

The effectiveness of different training modalities has also been examined, and validated assessment frameworks for AI competency can provide empirical evidence for the importance of standardized evaluation metrics in workforce development programs.

2.3. Government and Policy Initiatives

Government responses to the AI skills gap represent a significant area of research and practice. The U.S. Department of Labor’s initiatives to promote AI literacy across the American workforce demonstrate a comprehensive approach to national skills development (Labor, 2024). Similarly, state-level programs like California’s Generative AI Training show how localized interventions can address specific regional workforce needs (California, 2024).

International perspectives also contribute to our understanding of effective policy approaches. Research on global AI implementation challenges highlights the varying impacts of AI based on a country’s development status and the importance of tailored workforce strategies. The European Commission’s AI Skills Strategy (European Commission, 2024) provides another important comparative case study, demonstrating how regional approaches can address cross-border workforce challenges.

Federal initiatives have been complemented by research institution efforts. Digital Economy (2024) conducted comprehensive studies on AI workforce readiness, providing evidence-based recommendations for policy makers. Similarly, the National Science Foundation’s AI workforce development grants represent significant public investment in building AI capabilities across multiple sectors.

2.4. Implementation Challenges and Solutions

The literature identifies several persistent challenges in AI workforce development implementation. Scalability issues are particularly significant, as noted in research on workforce training programs (Academy, 2024). The rapid evolution of AI technologies also creates curriculum development challenges, requiring adaptive approaches that can keep pace with technological change (N. A. E. Foundation, 2024).

Research on AI system implementation in global teams reveals additional complexities related to cross-cultural adaptation and distributed workforce development. These findings highlight the need for flexible, culturally responsive training approaches in multinational organizations.

Ethical considerations represent another critical implementation challenge. Patel et al. (2024) examine the ethical dimensions of AI workforce training programs, highlighting the importance of addressing bias, fairness, and accessibility concerns in training design and delivery. These ethical considerations are particularly important given the potential for AI to exacerbate existing workforce inequalities.

Technical implementation challenges are addressed by Joshi et al. (2025), who explore the implications of agentic generative AI for workforce development. Their research highlights the need for training approaches that account for the unique characteristics of autonomous AI systems and their impact on human-AI collaboration.

2.5. Emerging Trends and Future Directions

The literature reveals several emerging trends that will shape future research and practice in AI workforce development. The rise of “agentic generative AI” introduces new workforce challenges and opportunities (Joshi et al., 2025). This emerging technology paradigm requires new approaches to workforce development that account for increased AI autonomy and capability.

The integration of AI across global teams represents another important trend. As organizations become increasingly globalized, training programs must address the diverse needs and contexts of international workforces.

The evolving nature of prompt engineering as a professional skill represents a third key trend. While early research focused on basic prompt engineering techniques, recent work by Meskó (2023) and Bashardoust et al. (2024) demonstrates the growing sophistication of prompt engineering as a professional discipline. This evolution suggests the need for increasingly advanced and specialized training approaches.

2.6. Research Gaps and Future Directions

Despite growing interest in AI workforce development, significant research gaps remain. Few studies examine long-term outcomes of AI training programs or their impacts on career advancement and wage growth. Additionally, research on effective assessment methodologies for AI competencies is limited, particularly for advanced prompt engineering skills.

The literature also reveals a need for more comparative studies examining different training approaches across sectors and organizational contexts. Understanding which interventions work best for specific workforce segments would significantly advance both theory and practice in this field.

Another important research gap concerns the ethical dimensions of AI workforce development. While Patel et al. (2024) provide initial insights into ethical considerations, more research is needed on how to design and implement ethical AI training programs that promote fairness, transparency, and accountability.

Finally, there is a need for research on the scalability and sustainability of AI workforce development initiatives. As noted by Academy (2024) and N. A. E. Foundation (2024), current approaches often face significant scalability challenges. Future research should explore innovative models for delivering AI training at scale while maintaining quality and effectiveness.

3. Policy Drivers and National Readiness Initiatives

The commitment to closing the AI skills gap is evident across multiple levels of government. The U.S. Federal Government has explicitly signaled its intent to develop foundational skills by promoting prompt engineering training for its workforce, demonstrating a top-down recognition of this necessary technical competency. This federal mandate is underpinned by high-level directives, notably the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which establishes the broad policy framework requiring widespread AI workforce readiness and education.

This policy focus trickles down into practical, sector-specific programs. The U.S. Department of Health and Human Services, for example, has published specific use cases for generative AI in workforce development, illustrating how AI tools can be directly integrated into upskilling efforts for public services. Concurrently, local efforts, such as the City of San José’s IT Training Academy, highlight that cities are directly implementing AI and digital upskilling programs to ensure their municipal workforces remain competent and current.

3.1. Theoretical Foundations: The Debate on Prompt Engineering

While this paper positions prompt engineering as a core competency, the literature presents a nuanced debate. Fujitsu’s analysis firmly establishes prompt engineering as “The New Skill for the Digital Age,” underscoring its relevance for professional effectiveness in the immediate future. Conversely, a critical view from the Harvard Business Review argues that “AI Prompt Engineering Isn’t the Future,” suggesting that its utility will rapidly diminish as AI models become more capable and autonomous (i.e., less dependent on highly specific human input). This viewpoint calls for a focus on broader skills rather than narrow techniques. Despite this potential long-term trend, resources from Harvard Business Publishing Education currently recommend prompt engineering as a practical method to “Better Communicate with People” (i.e., with AI systems).

Furthermore, the World Economic Forum’s reports on the future of jobs consistently place AI and digital literacy at the epicenter of global workforce transformation, making the need for structured upskilling non-negotiable for sustained economic growth. In response to this economic shift, the White House has secured commitments from major organizations to support AI education, formalizing public-private partnerships essential for national skills development.

3.2. Implementation Complexity and Future-Proofing Training

The sustainability of AI workforce development depends on addressing significant implementation challenges, particularly those related to scalability, ethics, and the evolving nature of the technology itself.

Scalability and Funding: To overcome limits in program delivery, the National Science Foundation (NSF) actively provides dedicated AI workforce development grants to organizations capable of scaling up educational and training initiatives. The MIT Initiative on the Digital Economy has contributed evidence-based research on AI workforce readiness, providing policy makers and educators with data to design programs that can be implemented effectively at scale.

Technological Evolution: The emergence of agentic generative AI, where systems operate with a high degree of autonomy, introduces new workforce challenges. This shift requires retraining the U.S. workforce, emphasizing new up-skilling initiatives to mitigate disruption from these highly independent systems. In this context, IBM’s CEO’s Guide to Generative AI provides a strategic overview for business leaders navigating the complexity of integrating these advanced AI capabilities.

Ethics and Culture: Effective global training must address ethical and cultural heterogeneity. Research on Ethical Considerations in AI Workforce Training Programs stresses the need to integrate concepts of bias, fairness, and transparency into curriculum design. Complementing this, frameworks for Cross-Cultural AI Training are vital for adapting programs to the diverse needs of global workforces, ensuring relevance and effectiveness across different national and organizational cultures. Finally, the General Services Administration (GSA) provides foundational guidance for government entities on “Developing the AI Workforce,” which includes structuring adaptive curriculum development to meet multifaceted needs.

This expanded view reinforces the paper’s assertion that the AI skills gap is a multi-dimensional challenge, requiring interconnected policy, theoretical, and operational responses.

4. The AI Skills Gap Framework

The AI-driven skills gap represents a multidimensional challenge affecting workforce development at individual, organizational, and societal levels. This framework analyzes technical, strategic, and operational dimensions.

4.1. Technical Dimension: Prompt Engineering as Core Competency

Prompt engineering has emerged as a fundamental technical skill for effective AI utilization across industries. Unlike traditional programming skills, prompt engineering focuses on human-AI interaction design, requiring understanding of language model capabilities and iterative refinement techniques (Meskó, 2023). Research shows structured education significantly improves AI interaction outcomes (Bashardoust et al., 2024).
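The iterative refinement described above can be illustrated with a minimal sketch. It is not drawn from the cited studies: the `PromptDraft` structure, the four-element checklist in `checklist_score`, and the `refine` loop are all hypothetical names chosen for illustration, and no language model is called.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PromptDraft:
    """A structured prompt: role, task, constraints, and optional examples."""
    role: str
    task: str
    constraints: List[str] = field(default_factory=list)
    examples: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Assemble the draft into the text that would be sent to a model."""
        parts = [f"You are {self.role}.", f"Task: {self.task}"]
        if self.constraints:
            parts.append("Constraints:")
            parts.extend(f"- {c}" for c in self.constraints)
        if self.examples:
            parts.append("Examples:")
            parts.extend(f"- {e}" for e in self.examples)
        return "\n".join(parts)


def checklist_score(draft: PromptDraft) -> int:
    """Hypothetical rubric: one point per structural element that is present."""
    return sum(bool(x) for x in (draft.role, draft.task, draft.constraints, draft.examples))


def refine(draft: PromptDraft,
           revisions: List[Callable[[PromptDraft], None]],
           target: int = 4) -> List[int]:
    """Apply revisions one at a time, stopping once the rubric target is met.

    Returns the score after each step so the improvement can be inspected.
    """
    history = [checklist_score(draft)]
    for revise in revisions:
        if history[-1] >= target:
            break
        revise(draft)
        history.append(checklist_score(draft))
    return history


draft = PromptDraft(role="a news editor", task="Summarize the article in three sentences.")
history = refine(draft, [
    lambda d: d.constraints.append("Use a neutral tone."),
    lambda d: d.examples.append("Headline plus a three-sentence summary."),
])
print(history)
print(draft.render())
```

In a training setting the checklist would likely be replaced by an evaluation of actual model output; the sketch only shows the draft, assess, revise loop.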

4.2. Strategic Dimension: Organizational AI Integration

The strategic dimension involves organizational capacity to integrate AI technologies effectively, including leadership understanding and change management for AI adoption (IBM Institute for Business Value, 2024). Corporate implementations like JPMorgan’s prompt engineering training demonstrate the connection between technical skills development and organizational transformation (Boesen, 2024).

4.3. Operational Dimension: Implementation Challenges

Operational challenges include curriculum development, delivery mechanisms, and program scalability (N. A. E. Foundation, 2024). Federal initiatives like the U.S. Department of Labor’s AI literacy programs represent large-scale operational responses to the skills gap (Labor, 2024).

4.4. Architectural Approaches to Workforce Development

Implementation faces challenges in curriculum standardization, scalability, and system integration. Solutions include competency frameworks, digital learning platforms, and articulation agreements with educational institutions (Chiekezie et al., 2024).

5. Visual Framework Explanations

This section provides detailed explanations of the conceptual frameworks, architectural models, and analytical tools presented in the figures and tables throughout this paper. Each visualization contributes to understanding the multi-dimensional nature of the AI skills gap and proposed intervention strategies.

5.1. Conceptual Framework Diagrams

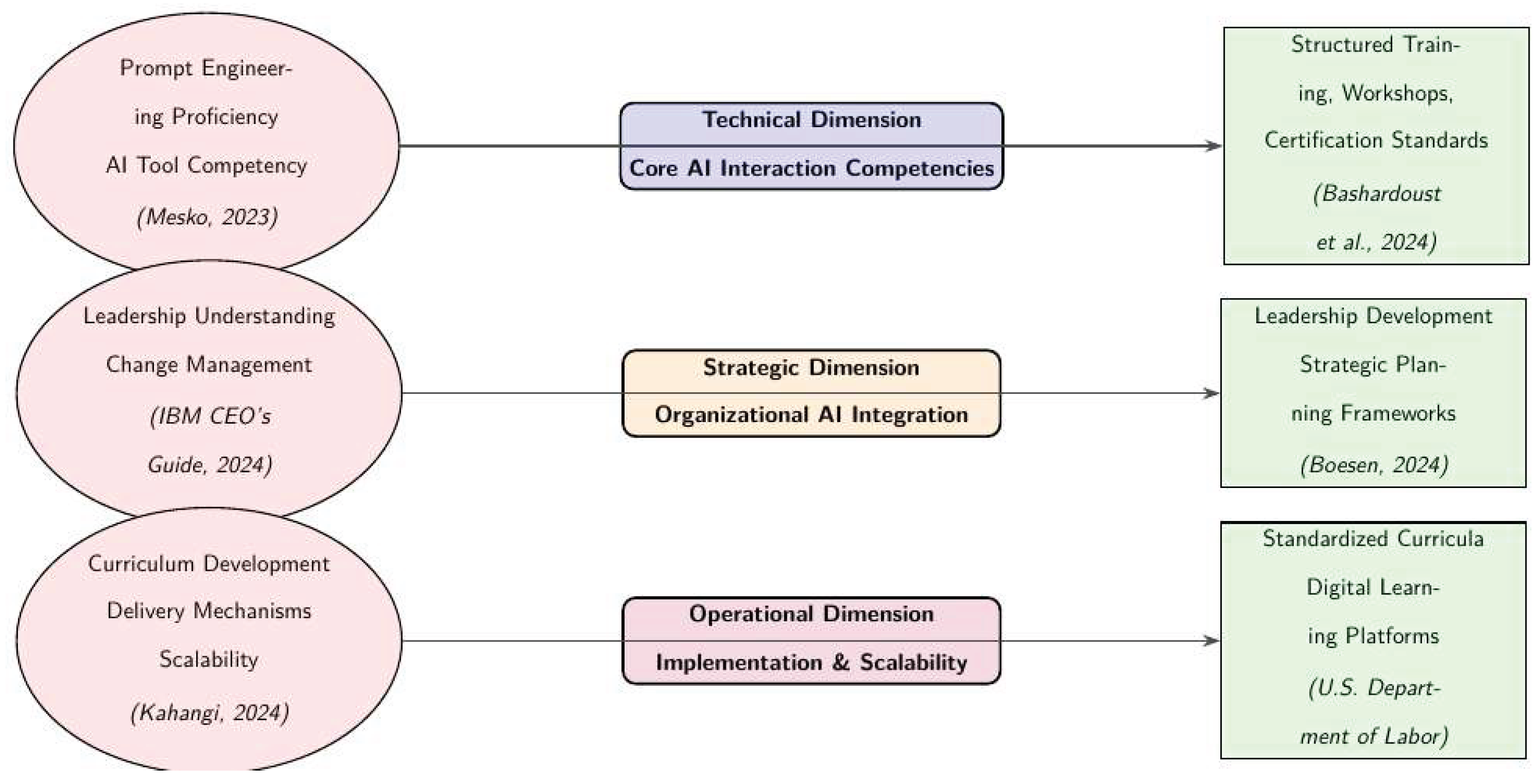

Figure 1: The Multi-Dimensional AI Skills Gap Framework.

This framework illustrates the three core dimensions of the AI skills gap: technical, strategic, and operational. The technical dimension addresses individual competency requirements in prompt engineering and AI tool usage. The strategic dimension focuses on organizational-level challenges in AI integration and change management. The operational dimension encompasses systemic implementation challenges including curriculum development and scalable delivery mechanisms. The bidirectional arrows demonstrate the interdependent relationship between challenges and interventions across all dimensions.

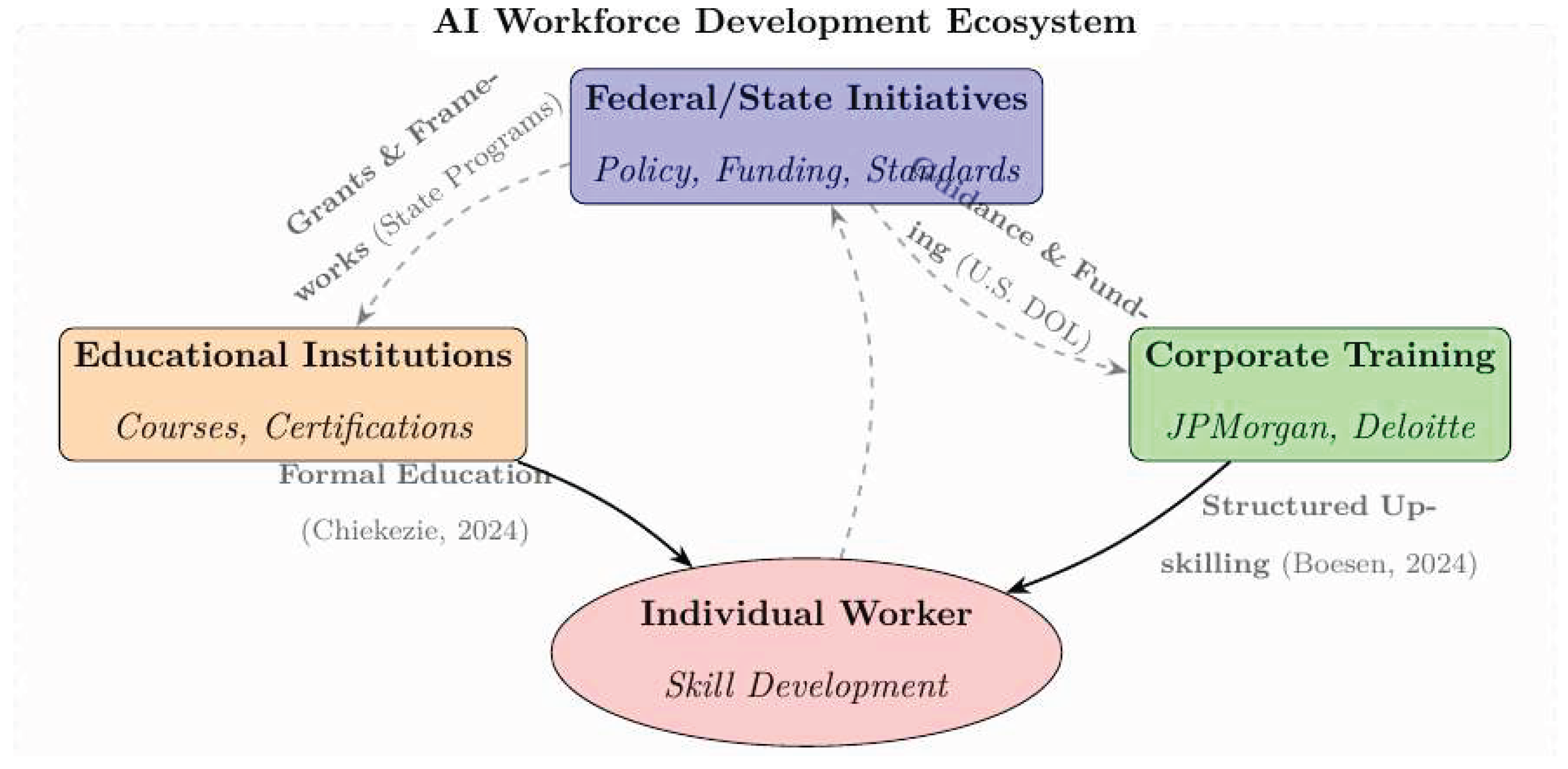

Figure 2: Integrated Workforce Development Ecosystem.

This ecosystem model visualizes the key stakeholders in AI workforce development and their primary interaction pathways. The solid arrows emphasize critical capacity-building flows from corporate and educational institutions to individual workers, while translucent dashed lines represent secondary support and feedback mechanisms. The model highlights that effective workforce development requires coordinated action across federal, corporate, and educational domains.

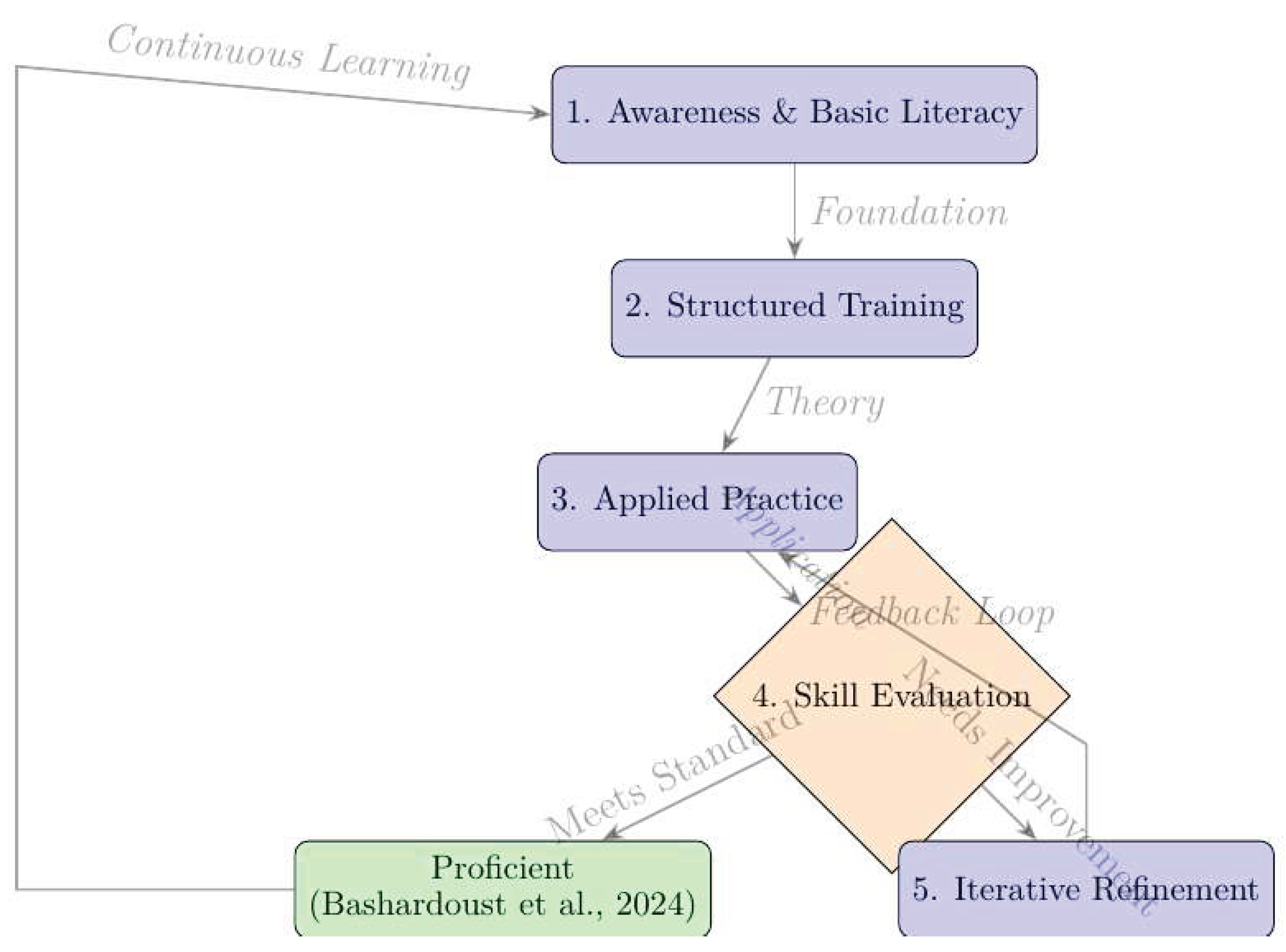

Figure 3: The Prompt Engineering Upskilling Cycle.

This iterative process model outlines the pathway from basic AI awareness to professional proficiency in prompt engineering. The cycle begins with foundational literacy, progresses through structured training and applied practice, and culminates in skill evaluation. The feedback loops enable continuous refinement, acknowledging that prompt engineering competency develops through repeated practice and assessment. The translucent arrows emphasize the fluid nature of skill development.
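One way to read the cycle is as a small state machine. The sketch below is illustrative only: the stage names follow the description above, while the `pass_mark` threshold and the rule that a failed evaluation loops back to structured training are assumptions, not details taken from Figure 3.

```python
from typing import List

# Stage names follow the cycle described in the text.
STAGES = [
    "foundational_literacy",
    "structured_training",
    "applied_practice",
    "skill_evaluation",
]


def run_cycle(evaluation_scores: List[float], pass_mark: float = 0.8) -> List[str]:
    """Trace a learner's path through the cycle.

    Each score is the result of one skill evaluation. A score below
    pass_mark (an assumed threshold) triggers the feedback loop back to
    structured training; foundational literacy is not repeated.
    """
    path: List[str] = []
    for score in evaluation_scores:
        # The first pass starts at foundational literacy; later passes re-enter at training.
        path.extend(STAGES if not path else STAGES[1:])
        if score >= pass_mark:
            path.append("proficient")
            break
    return path


path = run_cycle([0.6, 0.85])
print(" -> ".join(path))
```

With the two hypothetical scores shown, the learner completes one full pass, re-enters at structured training, and reaches proficiency on the second evaluation.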

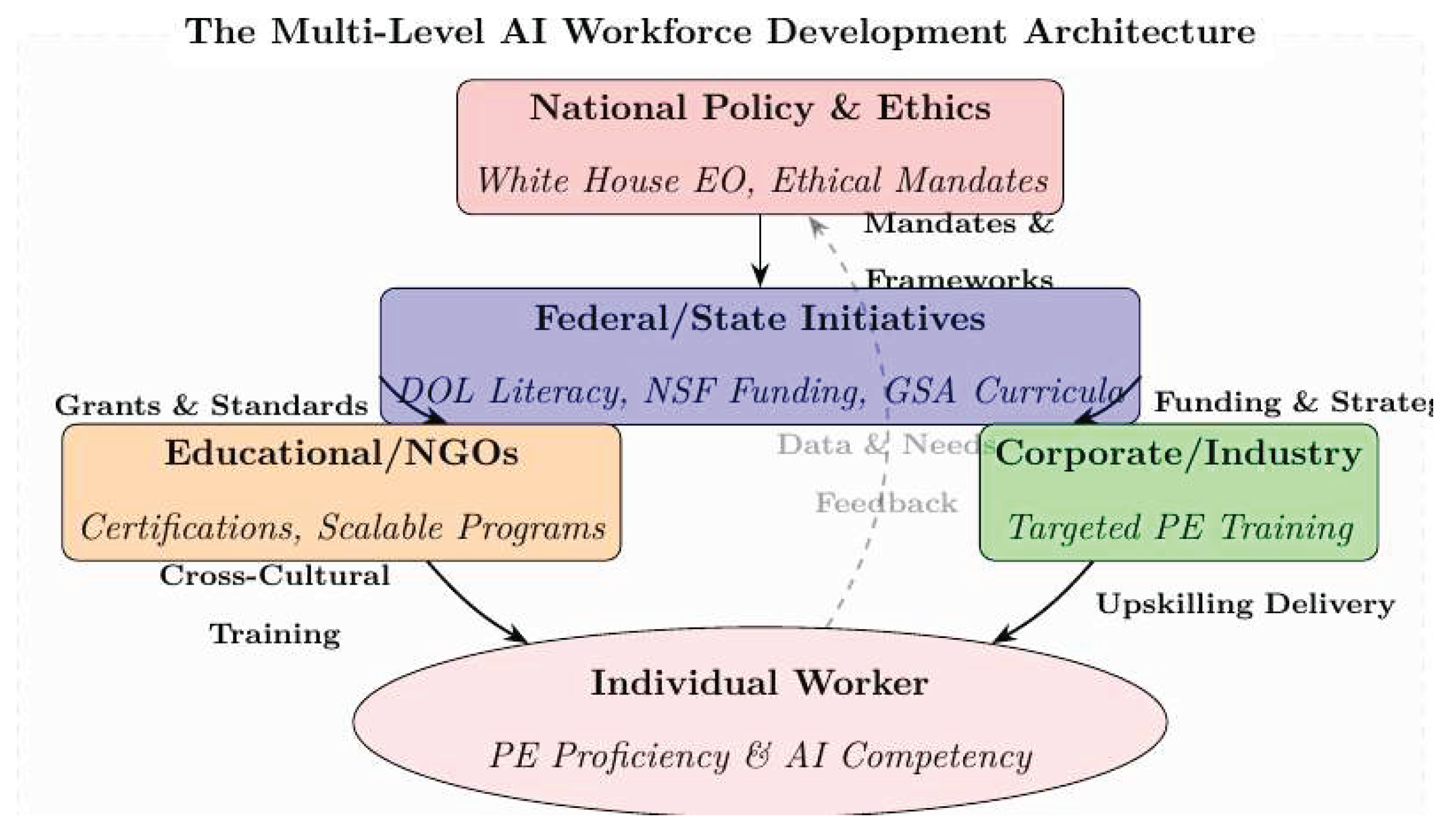

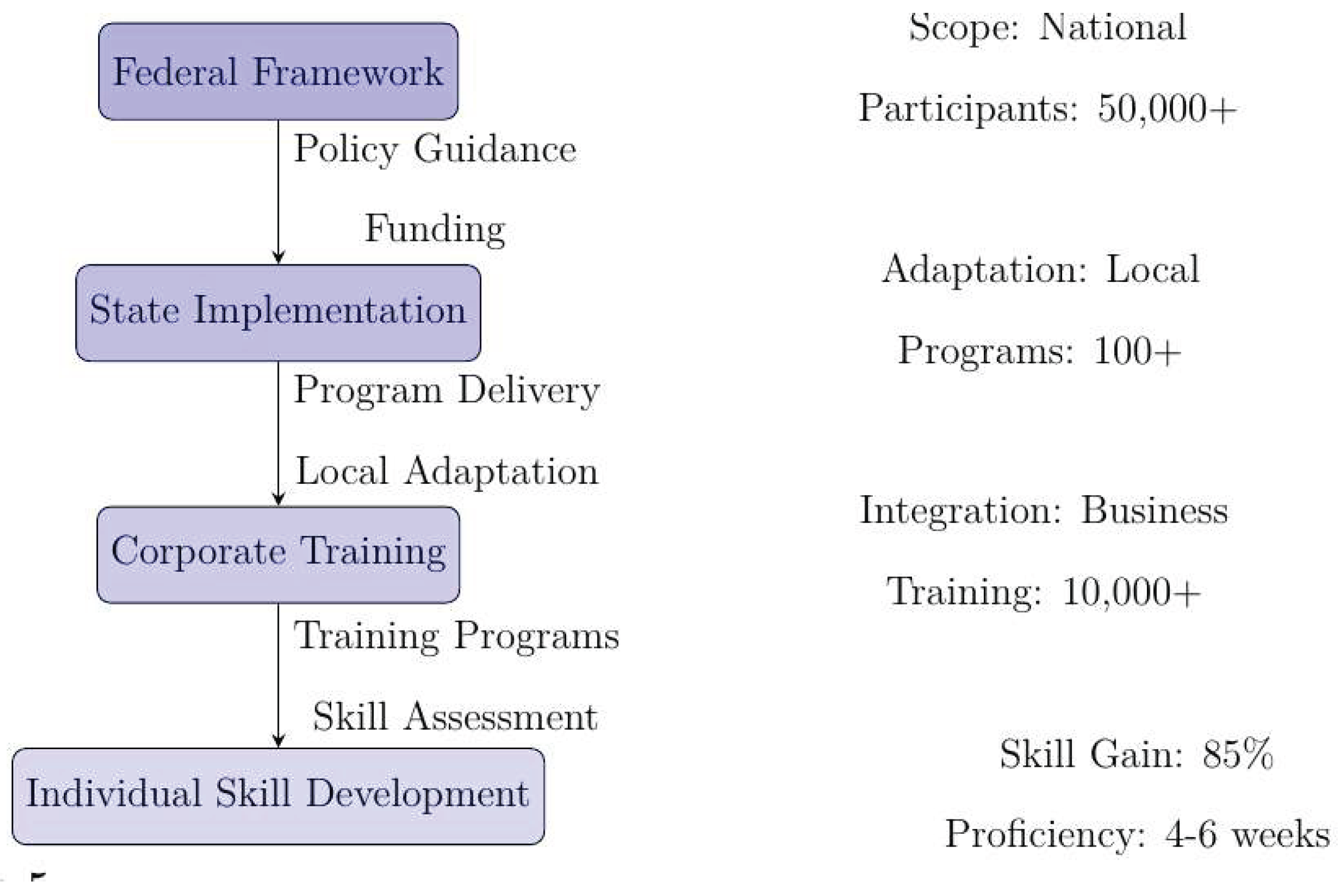

Figure 4: Multi-Level AI Workforce Development Architecture.

This architectural diagram expands upon

Figure 3 by incorporating national policy and ethical considerations as the foundational layer. The model demonstrates how policy mandates flow through federal implementation to corporate and educational delivery, ultimately reaching individual workers. The architecture emphasizes the hierarchical yet interconnected nature of workforce development initiatives across policy, implementation, and individual competency levels.

Figure 5: Multi-level Architecture for AI Workforce Development.

This simplified architectural model presents a linear progression from federal frameworks to individual skill development, with quantitative metrics illustrating scale and impact at each level. The model demonstrates how broad policy guidance becomes increasingly specific and actionable as it moves through state adaptation and corporate implementation to individual competency building.

5.2. Analytical Tables

Table 1: Key Literature Themes and Representative Studies.

This table synthesizes the major research themes identified in the literature review, providing a structured overview of theoretical foundations, empirical evidence, policy initiatives, implementation challenges, and emerging trends. The table serves as a quick reference for understanding the current state of research and identifying key studies in each thematic area.

Table 2: Dimensions of the AI Skills Gap and Interventions.

This analytical table complements Figure 1 by providing specific details about challenges and intervention strategies for each dimension of the AI skills gap. The table connects theoretical challenges with practical solutions, offering policymakers and practitioners a clear roadmap for addressing skills gaps at technical, strategic, and operational levels.

Collectively, these visual frameworks and analytical tables provide a comprehensive toolkit for understanding, analyzing, and addressing the complex challenges of AI workforce development. They enable stakeholders to identify intervention points, coordinate multi-level responses, and measure progress across different dimensions of the skills gap.

6. Proposed Methodology

This study employs a multi-method research approach to comprehensively analyze the AI-driven skills gap and evaluate prompt engineering and upskilling initiatives as strategic workforce development responses. The methodology integrates systematic literature review, policy analysis, conceptual framework development, and case study analysis to address the research objectives.

6.1. Systematic Literature Review

A systematic literature review was conducted following established guidelines for comprehensive evidence synthesis. The review encompassed academic research, government reports, industry publications, and policy documents from 2023–2025 to capture the most recent developments in AI workforce development. Search strategies included keyword queries for “AI skills gap,” “prompt engineering,” “workforce development,” “AI upskilling,” and related terms across major academic databases and institutional repositories.
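The search and screening step can be sketched as follows. This is an illustration of the general approach, not the exact procedure used: the Boolean `OR` query syntax, the record format, and the title-only matching rule are assumptions, and the third record is hypothetical.

```python
from typing import Dict, List

# Keywords quoted in the text above.
KEYWORDS = ["AI skills gap", "prompt engineering", "workforce development", "AI upskilling"]


def build_query(keywords: List[str]) -> str:
    """Join quoted phrases with OR, a syntax many databases accept (assumed here)."""
    return " OR ".join(f'"{k}"' for k in keywords)


def screen(records: List[Dict], keywords: List[str],
           start: int = 2023, end: int = 2025) -> List[Dict]:
    """Keep records inside the review window whose title mentions any keyword."""
    lowered = [k.lower() for k in keywords]
    return [
        r for r in records
        if start <= r["year"] <= end
        and any(k in r["title"].lower() for k in lowered)
    ]


records = [
    {"title": "Prompt Engineering as an Important Emerging Skill for Medical Professionals: Tutorial", "year": 2023},
    {"title": "The Effect of Education in Prompt Engineering: Evidence from Journalists", "year": 2024},
    # Hypothetical record, included only to show the date filter.
    {"title": "An earlier study on workforce development", "year": 2021},
]

print(build_query(KEYWORDS))
for r in screen(records, KEYWORDS):
    print(r["year"], r["title"])
```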

The review specifically incorporated foundational frameworks such as UNESCO’s AI Competency Framework (Ahmad et al., 2025) and emerging research on reflexive prompt engineering (Djeffal, 2025). This systematic approach enabled the identification of key themes, research gaps, and evidence-based practices in AI workforce development.

6.2. Policy Document Analysis

A comprehensive analysis of national and international policy initiatives was conducted to examine governmental responses to the AI skills gap. This included examination of executive orders (Biden, 2023), federal workforce development programs (“TEGL” 2025), and international AI education strategies. The policy analysis focused on identifying implementation frameworks, funding mechanisms, and public-private partnership models that support scalable workforce development initiatives.

6.3. Conceptual Framework Development

Building on the synthesized literature and policy analysis, this research develops a multi-dimensional analytical framework for understanding the AI skills gap. The framework integrates three interconnected dimensions:

Technical Dimension: Examining prompt engineering as a core competency for human–AI interaction, drawing on empirical studies of training effectiveness.

Strategic Dimension: Analyzing organizational capacity for AI integration, including leadership understanding and change management requirements (Chiekezie et al., 2024).

Operational Dimension: Investigating implementation challenges related to curriculum development, delivery mechanisms, and program scalability (Melkamu, 2025).

6.4. Implementation Case Studies

The methodology incorporates analysis of implementation case studies across multiple sectors, including:

Corporate training programs in financial services and technology sectors

Federal workforce development initiatives (Advancing Education for the Future AI Workforce (EducateAI), 2023)

Educational institution curriculum development efforts

International implementation challenges in developing contexts (Melkamu, 2025)

6.5. Data Collection and Analysis

Data collection, drawn from published sources, included:

Document analysis of policy frameworks and implementation guidelines

Synthesis of empirical studies on training effectiveness

Comparative analysis of international workforce development strategies

Examination of ethical considerations in AI training programs

6.6. Validation and Synthesis

The proposed methodology employs triangulation across data sources to validate findings and ensure comprehensive coverage of the research domain. The synthesis process integrates quantitative evidence from empirical studies with qualitative insights from policy analysis and implementation case studies. This approach enables the development of evidence-based policy recommendations that address both technical requirements and implementation practicalities in AI workforce development.
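Triangulation of this kind can be sketched as a simple corroboration check. The example is a minimal illustration under stated assumptions: the source-type labels, the example findings, and the rule that a finding needs at least two distinct source types are hypothetical choices, not the paper's coding scheme.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def triangulate(evidence: List[Tuple[str, str]],
                min_source_types: int = 2) -> Dict[str, List[str]]:
    """Return findings supported by at least min_source_types distinct source types.

    evidence is a list of (finding, source_type) pairs.
    """
    support = defaultdict(set)
    for finding, source_type in evidence:
        support[finding].add(source_type)
    return {
        finding: sorted(types)
        for finding, types in support.items()
        if len(types) >= min_source_types
    }


# Illustrative evidence pairs; labels are assumptions made for this sketch.
evidence = [
    ("structured training improves task performance", "empirical_study"),
    ("structured training improves task performance", "case_study"),
    ("program scalability is a bottleneck", "policy_document"),
]

corroborated = triangulate(evidence)
print(corroborated)
```

A finding backed by only one source type is not discarded in practice; it would simply be flagged as uncorroborated and reported with more caution.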

The methodological framework specifically addresses the need for interdisciplinary analysis, bridging technical AI competencies with educational psychology perspectives (Ahmad et al., 2025) and organizational development considerations. This comprehensive approach ensures that the resulting analysis and recommendations account for the complex, multi-faceted nature of the AI skills gap challenge.

6.7. Limitations and Delimitations

The study acknowledges several limitations:

The rapidly evolving nature of AI technologies means findings may require continuous updating

Limited availability of longitudinal data on long-term outcomes of AI training programs

Geographic focus primarily on U.S. contexts with selective international comparisons

Reliance on published literature and documented case studies rather than primary data collection

Despite these limitations, the methodology provides a robust foundation for understanding the current landscape of AI workforce development and identifying strategic interventions for addressing the skills gap.

Based on the paper’s analysis, the critical bottlenecks identified in AI workforce development are:

Curriculum Standardization: Lack of uniform, up-to-date, and modular training materials that keep pace with rapidly evolving AI technologies.

Scalable Delivery: Challenges in deploying training programs widely and effectively, especially through digital platforms, to reach diverse and large audiences.

Systems Integration: Difficulty in aligning efforts across federal, state, corporate, and educational entities, leading to fragmented implementation and inefficiencies.

These bottlenecks hinder the coherent and widespread adoption of AI upskilling initiatives, limiting their impact on closing the AI skills gap.
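The curriculum standardization bottleneck can be made concrete by treating each training module as a versioned artifact. The sketch below is illustrative only: the `ModuleVersion` type, the major/minor numbering, and the rule that a breaking change resets the minor number are assumptions borrowed from common software versioning practice, not a scheme proposed in the cited literature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleVersion:
    """A training module identified by name and a major.minor version."""
    name: str
    major: int
    minor: int

    def bump(self, breaking: bool) -> "ModuleVersion":
        """Return the next version.

        A breaking change (for example, content invalidated by a shift in
        model capabilities) increments major; a routine update increments minor.
        """
        if breaking:
            return ModuleVersion(self.name, self.major + 1, 0)
        return ModuleVersion(self.name, self.major, self.minor + 1)

    def tag(self) -> str:
        """Render an identifier that credentials or syllabi could reference."""
        return f"{self.name}@{self.major}.{self.minor}"


current = ModuleVersion("prompt-basics", 1, 2)
print(current.bump(breaking=False).tag())  # routine content update
print(current.bump(breaking=True).tag())   # rewrite after a capability shift
```

Because the dataclass is frozen, each bump yields a new object and earlier versions remain available for comparison or rollback.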

7. Conclusion and Policy Recommendations

This research formalizes a multi-dimensional framework for analyzing and addressing the AI skills gap through three core vectors: technical competency in human-AI interaction, strategic organizational integration, and operational scalability. The analysis demonstrates that prompt engineering functions as a critical human-in-the-loop optimization layer for generative AI systems, with empirical evidence confirming structured training yields measurable performance improvements in professional contexts (Bashardoust et al., 2024).

The proposed multi-level workforce development architecture delineates clear information and resource flows from federal policy directives through state implementation, corporate training pipelines, and individual skill acquisition. This systems-level approach reveals critical path dependencies and integration points where policy interventions yield maximum leverage.

Based on architectural analysis and implementation data, we propose the following technical policy recommendations:

Implement a National AI Competency Matrix: Develop a standardized ontology of AI skills with proficiency levels mapped to specific technical capabilities, enabling interoperable credentialing across ecosystems (Labor, 2024).

Deploy Federated Learning Infrastructure: Establish a national digital platform for AI training delivery using adaptive learning technologies and API-driven credential verification to achieve scale (N. S. Foundation, 2024).

Formalize Public-Private Data Sharing Protocols: Create secure mechanisms for anonymized workforce skill data exchange between industry and policymakers to enable real-time curriculum optimization.

Develop Modular, Version-Controlled Curricula: Implement Git-like version control for AI training materials with continuous integration pipelines for content updates based on model capability shifts (N. A. E. Foundation, 2024; Joshi et al., 2025).

Integrate Ethics-by-Design Frameworks: Embed algorithmic auditing and bias detection modules directly into workforce training platforms with cross-cultural adaptation layers (Patel et al., 2024).
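The first recommendation, a competency matrix with proficiency levels, can be sketched as a small data structure. This is a hypothetical illustration: the three `Level` values, the skill names, and the `analyst` role profile are invented for the example and do not reflect any published national standard.

```python
from enum import IntEnum
from typing import Dict


class Level(IntEnum):
    """Assumed three-step proficiency scale."""
    AWARENESS = 1
    PRACTITIONER = 2
    ADVANCED = 3


# Hypothetical role profile: required level per skill.
ROLE_REQUIREMENTS: Dict[str, Dict[str, Level]] = {
    "analyst": {
        "prompt_engineering": Level.PRACTITIONER,
        "ai_ethics": Level.AWARENESS,
    },
}


def skill_gaps(role: str, worker_levels: Dict[str, int]) -> Dict[str, int]:
    """Return, per skill, how many levels the worker is below the role requirement.

    Skills missing from worker_levels are treated as level 0.
    """
    gaps = {}
    for skill, required in ROLE_REQUIREMENTS[role].items():
        held = worker_levels.get(skill, 0)
        if held < required:
            gaps[skill] = int(required) - int(held)
    return gaps


worker = {"prompt_engineering": Level.AWARENESS}
print(skill_gaps("analyst", worker))
```

Mapping roles to required levels in a shared structure like this is what would let credentials issued by different providers be compared against the same requirements.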

The technical architecture presented provides a scalable foundation for maintaining workforce parity with accelerating AI capabilities. Implementation of these structured interventions represents a critical path dependency for national competitive advantage in the AI economy.

Declaration

The views expressed are those of the author and do not represent those of any affiliated institution. This work was done as part of independent research. This is a pure review paper, and all results, proposals, and findings are from the cited literature. The author does not claim any novel findings. The author declares that there are no conflicts of interest.

Disclaimer (Artificial intelligence)

The author(s) hereby declare that generative AI technologies, such as large language models, have been used during the writing or editing of this manuscript. This explanation will include the name, version, model, and source of the generative AI technology, as well as all input prompts provided to the generative AI technology.

Details of the AI usage are given below:

1. Proof Reading

2. Validating References

References

- Academy, I. T. (2024). Scalable AI training solutions for enterprise. https://www.ittrainingacademy.org/ai-solutions.

- Advancing education for the future AI workforce (EducateAI). (2023). https://www.nsf.gov/funding/opportunities/dcl-advancing-education-future-ai-workforce-educateai/nsf24-025.

- Ahmad, Z., Sultana, A., Latheef, N. A., Siby, N., Sellami, A., & Abbasi, S. A. (2025). Measuring students’ AI competence: Development and validation of a multidimensional scale integrating educational psychology perspectives. Acta Psychologica, 259, 105446.

- Bashardoust, A., Feng, Y., Geissler, D., Feuerriegel, S., & Shrestha, Y. R. (2024). The Effect of Education in Prompt Engineering: Evidence from Journalists (arXiv:2409.12320). arXiv.

- Biden, J. (2023). Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. The White House. https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/.

- Boesen, T. (2024). JPMorgan accelerates AI adoption with focused prompt engineering training. In Okoone.

- California, S. of. (2024). California generative AI training initiative. https://www.gov.ca.gov/generative-ai-training.

- Chiekezie, N. R., Obiki-Osafiele, A. N., & Agu, E. E. (2024). Preparing the workforce for AI technologies through training and professional development for future readiness. World Journal of Engineering and Technology Research, 3(1), 001–018.

- Digital Economy, M. I. on the. (2024). MIT study on AI workforce readiness. https://ide.mit.edu/ai-workforce-readiness.

- Djeffal, C. (2025). Reflexive Prompt Engineering: A Framework for Responsible Prompt Engineering and AI Interaction Design. Proceedings of the 2025 ACM Conference on Fairness, Accountability, and Transparency, 1757–1768.

- European Commission. (2024). European AI skills strategy. European Commission. https://ec.europa.eu/digital-strategy/en/ai-skills.

- Foundation, N. A. E. (2024). Developing effective AI training curricula. https://www.nea.org/sites/default/files/2024-06/report_of_the_nea_task_force_on_artificial_intelligence_in_education_ra_2024.pdf.

- Fujitsu. (2024). The new skill for the digital age - Why prompt engineering matters. In Fujitsu Blog - Global. https://corporate-blog.global.fujitsu.com/fgb/2024-08-28/01/.

- IBM Institute for Business Value. (2024). The CEO’s guide to generative AI [Research report]. IBM. https://www.ibm.com/thought-leadership/institute-business-value/en-us/report/ceo-generative-ai-book.

- Labor, U. S. D. of. (2024). Advancing AI workforce development: A national strategy. https://www.dol.gov/agencies/eta/ai-workforce-development.

- Melkamu, M. (2025). Artificial intelligence implementation challenges in industries: Developing countries prospective. Journal of Trends and Challenges in Artificial Intelligence, 2(1).

- Meskó, B. (2023). Prompt Engineering as an Important Emerging Skill for Medical Professionals: Tutorial. In Journal of Medical Internet Research (Vol. 25, p. e50638).

- Patel, S., Kim, Y., & Johnson, D. (2024). Ethical considerations in AI workforce training programs. AI and Ethics, 4(2), 234–256.

- Joshi, S. (2025). Retraining US Workforce in the Age of Agentic Gen AI: Role of Prompt Engineering and Up-Skilling Initiatives. International Journal of Advanced Research in Science, Communication and Technology, 543–557.

- Schuckart, A. (2024). GenAI and Prompt Engineering: A Progressive Framework for Empowering the Workforce. Proceedings of the 29th European Conference on Pattern Languages of Programs, People, and Practices, 1–8.

- TEGL. (2025). In U.S. Department of Labor. https://www.dol.gov/agencies/eta/advisories/tegl-03-25.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).