Submitted: 10 October 2025

Posted: 14 October 2025


Abstract

Keywords:

1. Introduction

- What specific skills gaps exist in the current military workforce regarding agentic AI?

- How should professional military education institutions redesign their curricula and delivery methods?

- What implementation strategies ensure effective adoption of agentic AI education across all career levels?

1.1. Defining Agentic AI in Military Context

1.2. Current Adoption and Workforce Challenges

- Technical Understanding: Limited comprehension of AI capabilities and limitations

- Trust and Acceptance: Resistance to AI-driven decision support

- Operational Integration: Difficulty incorporating AI into existing workflows

- Ethical Application: Uncertainty regarding appropriate use cases and boundaries

2. Literature Review

2.1. The Evolution of Military AI: From Automation to Agentic Systems

2.1.1. Historical Context and Technological Progression

2.1.2. Defining Characteristics and Capabilities

2.2. Current State of Military AI Adoption

2.2.1. Service-Specific Implementation Efforts

2.2.2. Industry Partnerships and Commercial Integration

2.3. Challenges and Concerns in Military AI Integration

2.3.1. Technical and Operational Risks

2.3.2. Ethical and Legal Frameworks

2.4. Workforce Development and Skills Gap Challenges

2.4.1. Current Skills Gap Assessment

2.4.2. Workforce Transformation Strategies

2.5. Military Education and Training Transformation

2.5.1. Current Training Program Evolution

2.5.2. Pedagogical Innovation and Learning Science

2.6. Strategic Implications and Future Directions

2.6.1. National Security Competitiveness

2.6.2. Talent Acquisition and Retention

2.7. Synthesis and Research Gaps

- Technological Maturity: Agentic AI has reached operational viability for military applications, with multiple successful deployments across service branches.

- Workforce Readiness Gap: Significant disparities exist between current military workforce capabilities and the skills required for effective AI integration.

- Educational System Limitations: Traditional military education structures cannot adequately address the pace and complexity of AI advancement.

- Strategic Imperative: AI workforce development represents a critical component of national security competitiveness and military effectiveness.

- Implementation Challenges: Technical, organizational, and cultural barriers complicate AI integration efforts despite clear strategic value.

- Comprehensive Framework: No integrated framework exists for military education transformation that addresses agentic AI across all career stages and functional areas.

- Implementation Strategy: Limited guidance exists on practical implementation approaches that account for organizational constraints and resource limitations.

- Measurement and Assessment: Insufficient attention has been paid to evaluating the effectiveness of AI education initiatives and correlating training with operational outcomes.

- Cultural Transformation: The organizational and cultural changes required for successful AI integration receive inadequate treatment in existing literature.

3. Proposed Architecture and Framework Diagrams

3.1. Methodology

3.1.1. Literature Analysis

3.1.2. Case Study Examination

3.1.3. Gap Analysis

- DoD AI strategy documents

- PME curriculum reviews

- Industry skill demand projections
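A gap analysis of this kind comes down to comparing required and current proficiency for each competency, then ranking the shortfalls. The sketch below is a minimal illustration under stated assumptions. The 0 to 4 proficiency scale, the example levels, and the `Competency` and `skills_gaps` names are all hypothetical. The competency areas are taken from the challenges listed in Section 1.2, not from any DoD or PME dataset.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Competency:
    """One competency area with hypothetical proficiency levels (0 to 4 scale)."""
    name: str
    required: int  # level the target role demands (assumed)
    current: int   # level assessed in the current workforce (assumed)


def skills_gaps(competencies: list[Competency]) -> list[tuple[str, int]]:
    """Return (name, gap) for every shortfall, largest gap first, ties alphabetical."""
    gaps = [(c.name, c.required - c.current) for c in competencies if c.current < c.required]
    return sorted(gaps, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Placeholder levels for illustration only; these are not measured data.
    profile = [
        Competency("Technical Understanding", required=3, current=1),
        Competency("Trust and Acceptance", required=3, current=2),
        Competency("Ethical Application", required=2, current=2),
        Competency("Operational Integration", required=3, current=1),
    ]
    for name, gap in skills_gaps(profile):
        print(f"{name}: short by {gap} level(s)")
```

Ranking by absolute gap is the simplest choice. Weighting each gap by mission criticality or by the number of personnel affected would be a natural refinement.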

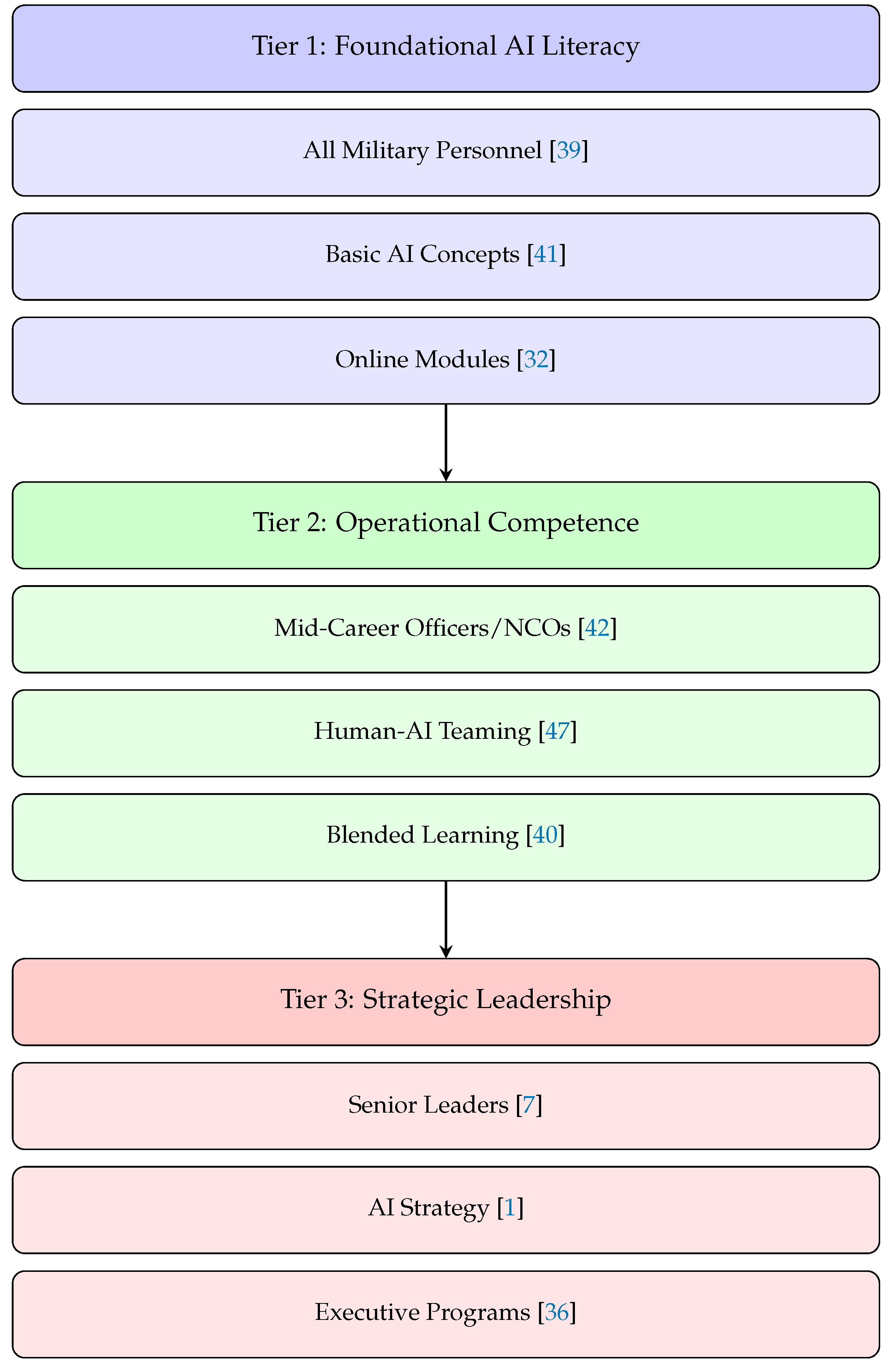

3.2. Tiered Learning Architecture

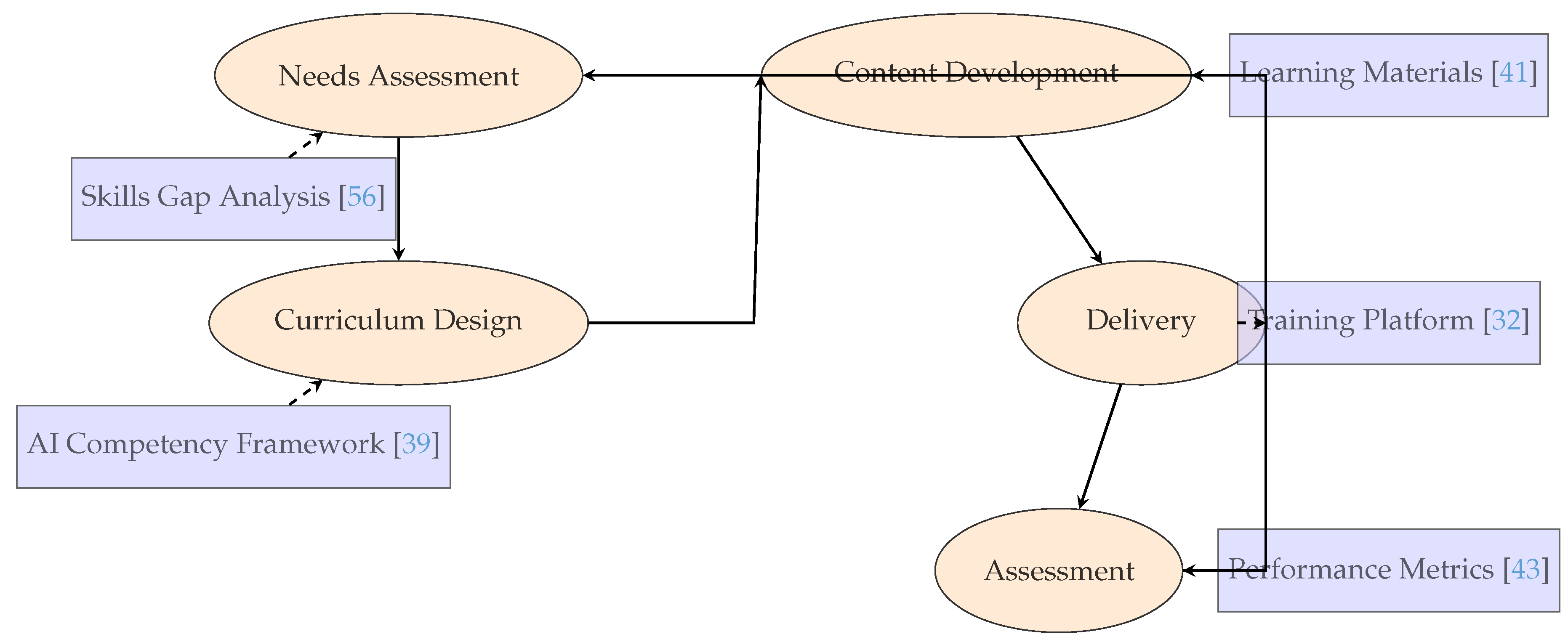

3.3. Curriculum Development Pipeline

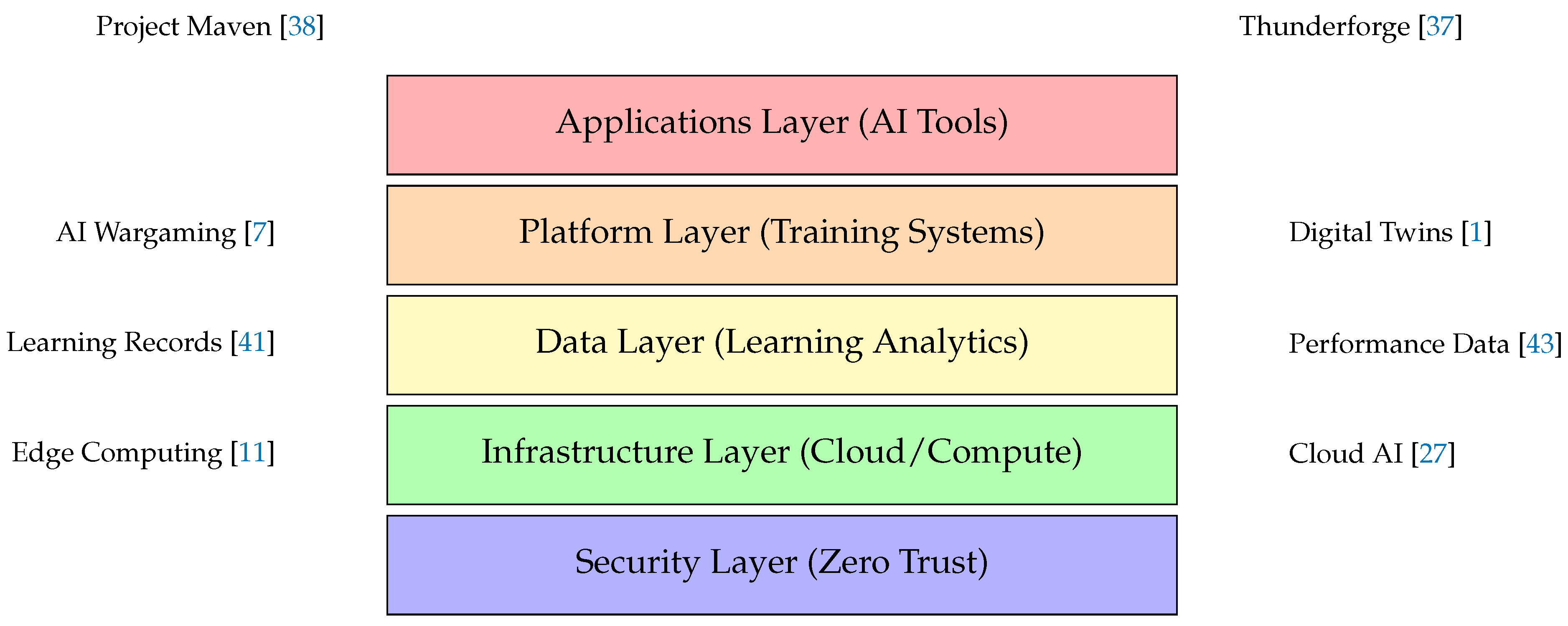

3.4. Technology Integration Architecture

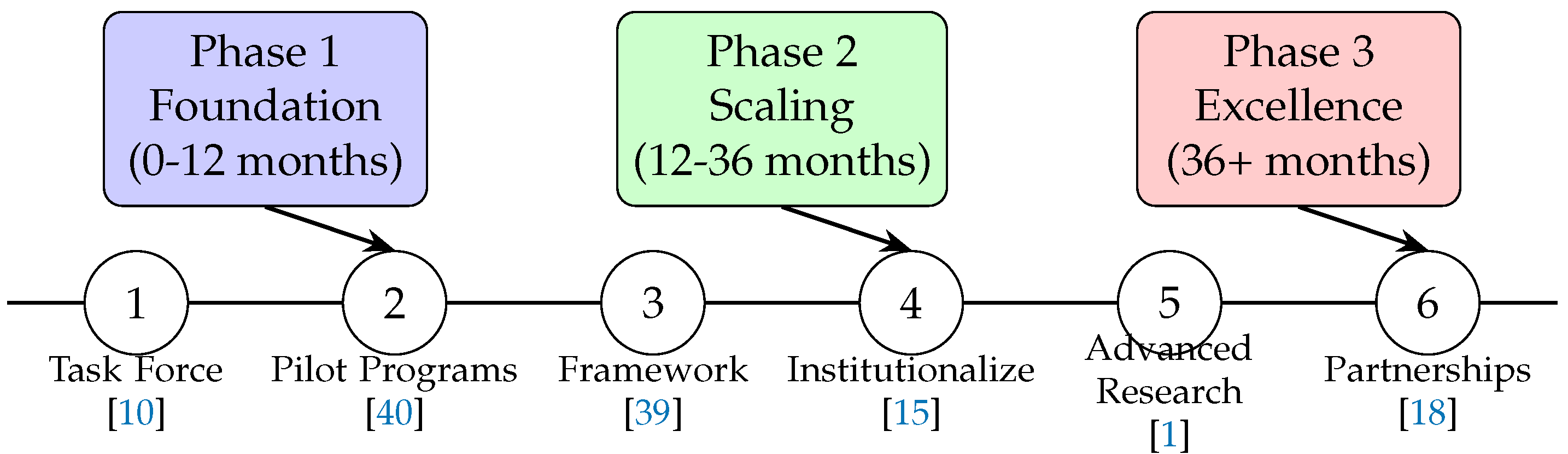

3.5. Implementation Roadmap Timeline

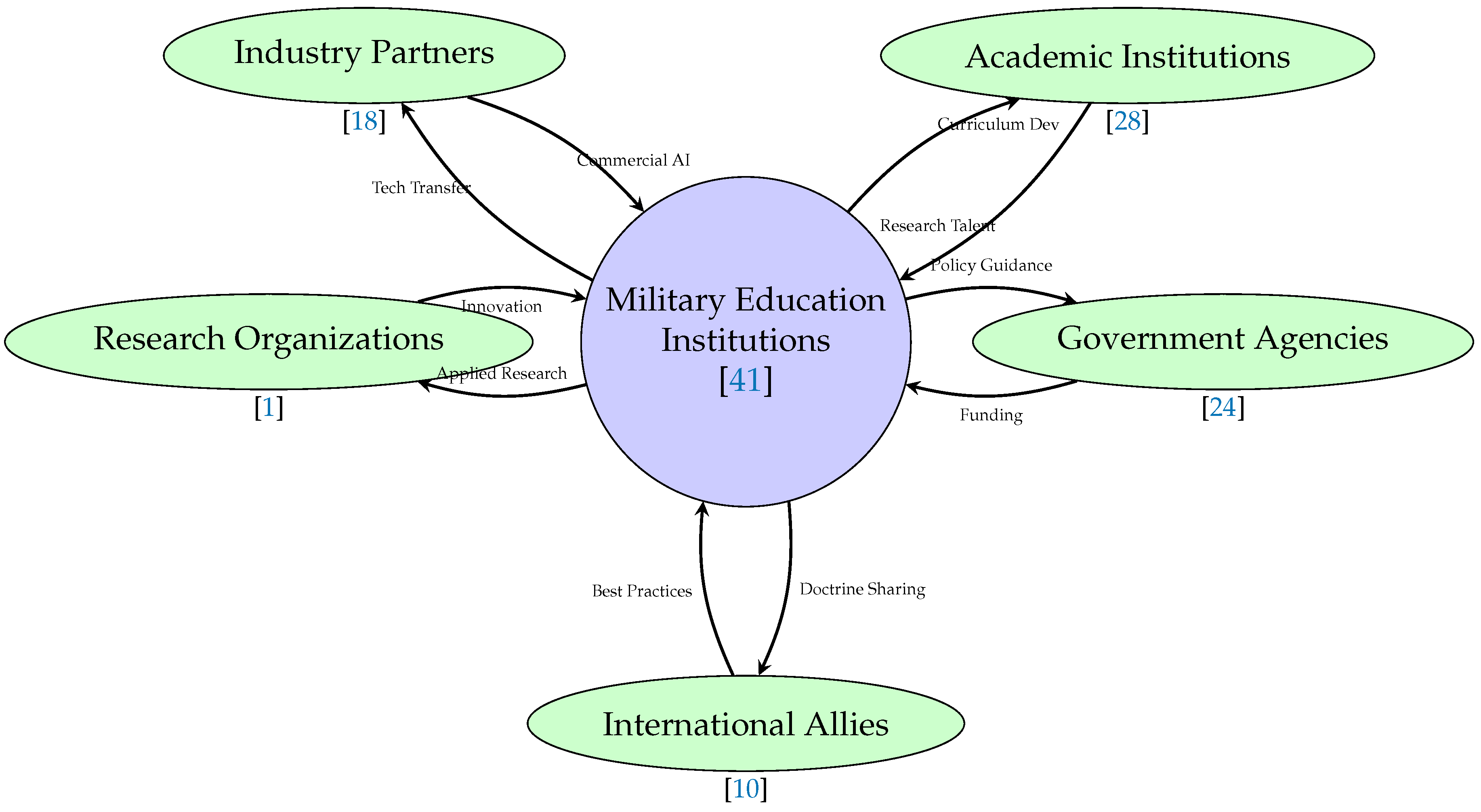

3.6. Stakeholder Engagement Model

3.7. Assessment and Evaluation Framework

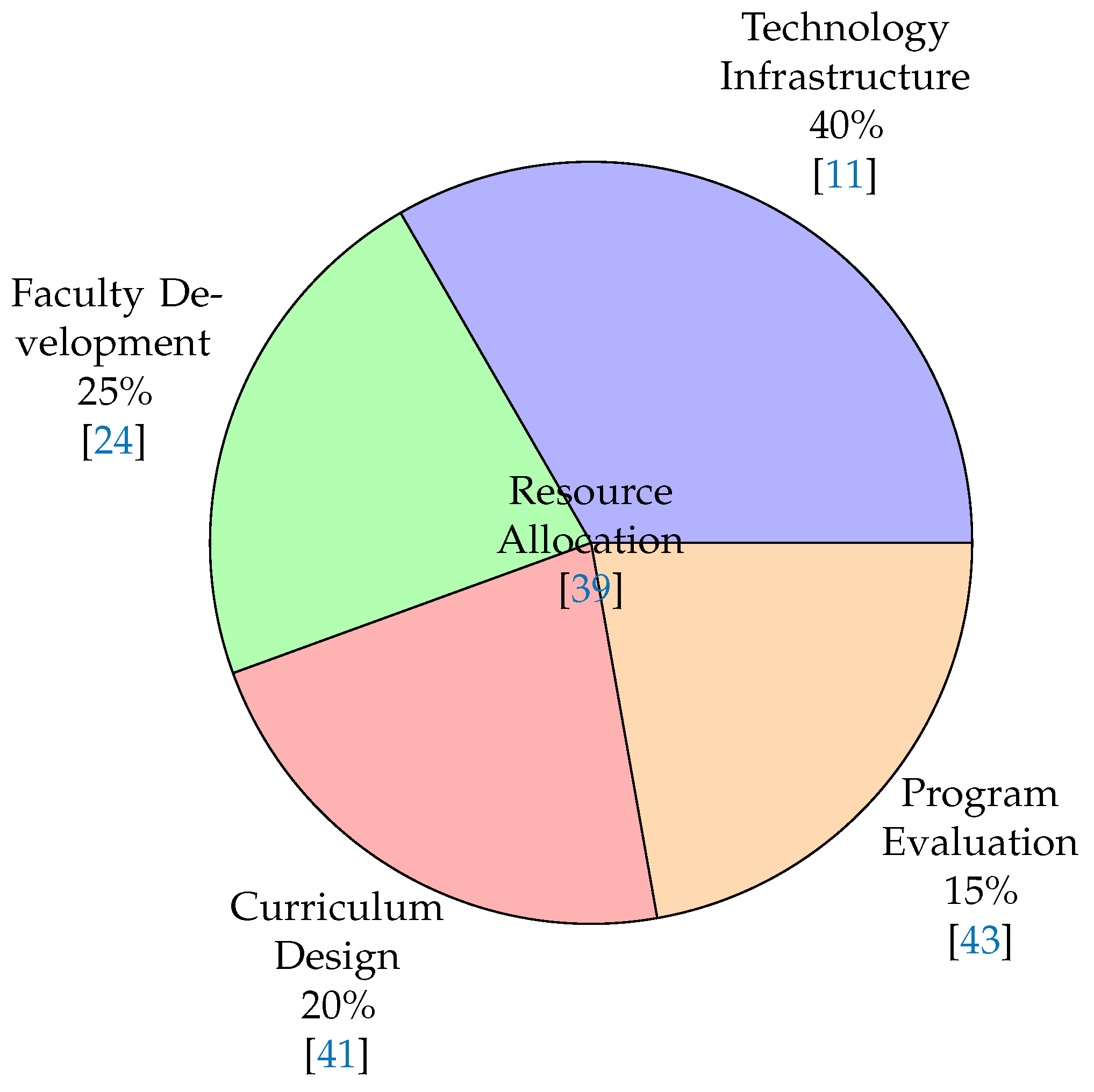

3.8. Resource Allocation Model

3.9. Architecture Integration and Synergies

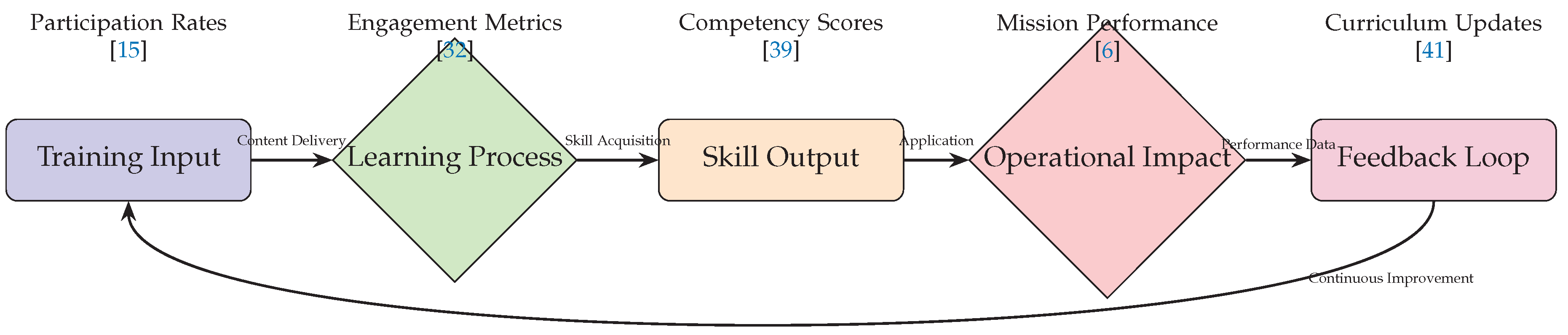

- Quality Assurance: The curriculum pipeline (Figure 2) incorporates feedback mechanisms that inform all other architectural components, creating a self-improving system.

4. Findings: The Military Education Imperative

4.1. Critical Skills Gap Analysis

4.2. Current Educational Limitations

4.2.1. Curriculum Rigidity

4.2.2. Faculty Expertise Gap

4.2.3. Resource Constraints

5. Current AI Education and Training Programs in Military Context

5.1. Department of Defense Institutional Programs

5.1.1. Army University Press AI Integration

- AI Literacy Components: Integration of basic AI concepts across all PME levels

- Writing for AI Professionals: Development of communication skills for AI-augmented environments

- Ethical Framework Training: Instruction on responsible AI use in military operations

5.1.2. Defense Innovation Unit (DIU) Thunderforge Initiative

- Real-world Application: Direct integration with Indo-Pacific Command and European Command operations

- Hands-on Training: Military personnel gain experience with cutting-edge AI systems

- Iterative Learning: Continuous improvement based on operational feedback

5.1.3. Army CalibrateAI Pilot Program

- Hallucination Management: Training personnel to identify and mitigate AI-generated inaccuracies

- Trust Building: Developing confidence in AI systems through controlled exposure

- Workflow Integration: Teaching effective incorporation of AI tools into military processes

5.2. Technical Training and Skill Development Programs

5.2.1. AI-Specific Technical Training

- AI Bootcamps: Intensive technical training programs; [43] reports "85% placement rates within six months" for participants

- Digital Twin Training: Virtual environments for AI system testing and validation [1]

- Cloud AI Training: Programs focused on cloud-based AI deployment in secure environments

5.2.2. Department of Defense AI Talent Management

- Identifies Hidden Skills: Uncovers AI-relevant capabilities within existing personnel

- Optimizes Placement: Matches skilled individuals with AI-focused positions

- Informs Training Needs: Identifies skill gaps for targeted program development
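Identifying hidden skills and optimizing placement can be framed as scoring the overlap between a person's skills and a position's requirements. The sketch below uses Jaccard similarity with a greedy one-to-one assignment. This is a deliberately simple technique chosen for illustration, not the method used by the DoD system described above. The skill tags, personnel IDs, and the `assign_positions` function are hypothetical.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Skill overlap: size of intersection divided by size of union (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def assign_positions(people: dict[str, set[str]], positions: dict[str, set[str]]) -> dict[str, str]:
    """Greedily pair positions with people by descending skill-overlap score.

    All (person, position) pairs are ranked globally; a pair is accepted only if
    neither side is already used and the overlap is non-zero. Greedy assignment
    is easy to audit but is not guaranteed to be globally optimal.
    """
    ranked = sorted(
        ((jaccard(skills, needs), person, position)
         for person, skills in people.items()
         for position, needs in positions.items()),
        key=lambda t: (-t[0], t[1], t[2]),
    )
    assigned: dict[str, str] = {}
    used_people: set[str] = set()
    for score, person, position in ranked:
        if score > 0 and person not in used_people and position not in assigned:
            assigned[position] = person
            used_people.add(person)
    return assigned


if __name__ == "__main__":
    people = {  # hypothetical personnel records
        "P-01": {"python", "machine learning", "data analysis"},
        "P-02": {"logistics", "data analysis"},
        "P-03": {"ethics", "policy"},
    }
    positions = {  # hypothetical AI-focused billets
        "ML engineer": {"python", "machine learning"},
        "Data analyst": {"data analysis", "logistics"},
    }
    print(assign_positions(people, positions))
```

For real use, an optimal matcher such as the Hungarian algorithm could replace the greedy loop. The scoring function would probably also need to weight skills by proficiency rather than treating them as present or absent.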

5.3. Industry and Academic Partnership Programs

5.3.1. Public-Private Training Initiatives

5.3.2. Academic Institution Programs

- National Defense University: AI curriculum integration across war colleges [39]

- Military Service Academies: Incorporation of AI studies into core curricula

- Graduate Education Programs: Advanced degrees in AI and machine learning for military officers

5.4. Specialized Operational Training Programs

5.4.1. AI-Enhanced Military Training Systems

- Reduce Training Time: AI-powered systems "cut training times in half while increasing retention" [32]

- Personalize Learning: Adaptive algorithms tailor training to individual needs

- Simulate Complex Scenarios: AI-generated training environments prepare personnel for diverse operational contexts
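One common way to personalize training is to adjust difficulty from the trainee's recent responses. The sketch below implements a simple staircase rule: difficulty rises one level after two consecutive correct responses and drops one level after any error. This is a swapped-in illustration, not the algorithm of any system cited in this section. The 1 to 10 difficulty scale and the `Staircase` class are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Staircase:
    """Adaptive difficulty on a hypothetical 1-10 scale, updated after each response."""
    level: int = 3
    min_level: int = 1
    max_level: int = 10
    streak_to_advance: int = 2  # consecutive correct answers needed to step up
    _streak: int = field(default=0, init=False, repr=False)

    def record(self, correct: bool) -> int:
        """Update difficulty after one response and return the new level."""
        if correct:
            self._streak += 1
            if self._streak >= self.streak_to_advance:
                self.level = min(self.level + 1, self.max_level)
                self._streak = 0
        else:
            # Any error lowers difficulty immediately and resets the streak.
            self.level = max(self.level - 1, self.min_level)
            self._streak = 0
        return self.level


if __name__ == "__main__":
    trainee = Staircase()
    print([trainee.record(c) for c in [True, True, True, False, True, True]])
```

Requiring two successes to step up but only one error to step down keeps trainees near the edge of their competence without letting a lucky guess push difficulty too far.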

5.4.2. Generative AI for Military Decision-Making

- Scenario Prediction: Using AI for warfighting scenario analysis

- Equipment Management: AI training for maintenance and logistics decision support

- Strategic Planning: Incorporating AI tools into operational planning processes

5.4.3. Agentic AI Operational Training

- Multi-Agent Coordination: Training for managing interconnected AI systems [2]

- Autonomous System Oversight: Developing skills for monitoring and directing AI agents

- Human-Machine Teaming: Focus on collaborative workflows between personnel and AI systems

5.5. Assessment of Current Program Effectiveness

5.5.1. Documented Success Metrics

- High Impact: Bootcamp-style programs show "85% placement rates" and "average wage increases of $28,000 annually" [43]

- Moderate Success: Institutional PME integration still faces challenges: only 15% of military personnel "feel adequately trained to work alongside agentic AI systems" [39]

- Emerging Results: Newer programs like CalibrateAI are still undergoing evaluation [40]

5.5.2. Identified Gaps and Limitations

- Scalability Issues: Many programs remain pilot-scale with limited reach

- Currency Concerns: Rapid AI advancement outpaces curriculum development

- Integration Barriers: Difficulty embedding AI training into existing career progression models

- Resource Constraints: Limited funding and expert faculty availability

5.5.3. Best Practices and Lessons Learned

- Hands-on Focus: Practical application outperforms theoretical instruction

- Industry Collaboration: Partnerships accelerate program development and relevance

- Continuous Updates: Regular curriculum refreshment maintains technical currency

- Leadership Support: Command emphasis drives participation and resource allocation

| Program | Target Audience | Primary Focus | Status |
|---|---|---|---|
| Army University PME | All officers | AI literacy integration | Ongoing implementation |
| DIU Thunderforge | Combatant commands | Operational AI tools | Active deployment |
| CalibrateAI Pilot | Army personnel | Generative AI adoption | Initial pilot phase |
| AI Bootcamps | Technical specialists | Intensive skill development | Documented success |
| DoD Talent AI | HR personnel | Skill identification | Operational |
| Industry Partnerships | Various units | Specific system training | Expanding |

5.6. Future Program Development Needs

5.6.1. Advanced Technical Programs

- AI System Architecture: Training for designing and evaluating AI systems

- Adversarial AI Defense: Programs focusing on AI system security and robustness

- AI Testing and Validation: Specialized training for system verification

5.6.2. Leadership and Strategic Programs

- AI Resource Management: Training for AI project leadership and budgeting

- Strategic AI Planning: Programs for integrating AI into long-term force development

- International AI Cooperation: Training for allied AI collaboration

6. AI Software Tools and Platforms Requiring Military Training

6.1. Generative AI Platforms for Military Planning

6.1.1. Thunderforge by Scale AI

- Multi-domain Planning: Integrating air, land, sea, and cyber operations

- Scenario Generation: Creating realistic combat scenarios using generative AI

- Resource Optimization: AI-driven allocation of military assets

- Risk Assessment: Automated analysis of operational risks

6.1.2. Project Maven Evolution

- Object Recognition: Advanced image and video analysis

- Pattern Analysis: Identifying behavioral patterns from sensor data

- Data Fusion: Integrating multiple intelligence sources

- Target Identification: AI-assisted target recognition and tracking

6.2. Agentic AI Warfare Systems

6.2.1. Scale AI Agentic Warfare Platform

- Autonomous Decision-Making: Oversight of AI-driven tactical decisions

- Multi-Agent Coordination: Managing interconnected AI systems

- Human-in-the-Loop Control: Maintaining appropriate human oversight

- Mission Adaptation: AI system reconfiguration for dynamic combat environments
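Human-in-the-loop control is often implemented as an approval gate. An agent may execute low-risk actions on its own, anything at or above a risk threshold is held for a human decision, and every outcome is logged for later review. The sketch below assumes each proposed action already carries a numeric risk score and that the caller supplies the human approval step as a callback. The names, the 0.3 threshold, and the `run_with_oversight` function are hypothetical and do not describe the Scale AI platform.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProposedAction:
    description: str
    risk: float  # hypothetical 0.0-1.0 risk score assigned upstream


def run_with_oversight(
    actions: list[ProposedAction],
    approve: Callable[[ProposedAction], bool],
    risk_threshold: float = 0.3,
) -> tuple[list[str], list[str]]:
    """Execute low-risk actions autonomously and route the rest to a human.

    Returns (executed, audit_log). Actions at or above the threshold run only
    if `approve` returns True; rejected actions are logged and never executed.
    """
    executed: list[str] = []
    audit_log: list[str] = []
    for action in actions:
        if action.risk < risk_threshold:
            executed.append(action.description)
            audit_log.append(f"AUTO {action.description}")
        elif approve(action):  # the human decision point
            executed.append(action.description)
            audit_log.append(f"APPROVED {action.description}")
        else:
            audit_log.append(f"REJECTED {action.description}")
    return executed, audit_log
```

In practice, `approve` would block on an operator interface. Training would focus on what operators need to see in order to make that call well, and on auditing the log afterwards.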

6.2.2. DoD Task Force Lima Systems

- Large Language Model Operation: Military-specific prompt engineering

- Content Generation: Creating operational documents and briefings

- Information Verification: Validating AI-generated content accuracy

- Security Protocols: Handling classified information with AI systems
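Information verification can be practiced as a structured drill. Personnel extract the factual claims from an AI-generated draft and check each one against an authoritative reference before release. The sketch below assumes claims have already been extracted into key-value pairs. The field names and the `verify_claims` and `release_ready` helpers are hypothetical and do not describe any Task Force Lima tool.

```python
from enum import Enum


class Verdict(Enum):
    CONFIRMED = "confirmed"
    CONTRADICTED = "contradicted"
    UNVERIFIED = "unverified"  # no authoritative value available to check against


def verify_claims(generated: dict[str, str], reference: dict[str, str]) -> dict[str, Verdict]:
    """Label each AI-generated claim by comparing it with an authoritative reference."""
    verdicts: dict[str, Verdict] = {}
    for key, value in generated.items():
        if key not in reference:
            verdicts[key] = Verdict.UNVERIFIED
        elif reference[key].strip().lower() == value.strip().lower():
            verdicts[key] = Verdict.CONFIRMED
        else:
            verdicts[key] = Verdict.CONTRADICTED
    return verdicts


def release_ready(verdicts: dict[str, Verdict]) -> bool:
    """A draft is releasable only when every claim is confirmed."""
    return all(v is Verdict.CONFIRMED for v in verdicts.values())


if __name__ == "__main__":
    draft = {"document_date": "2025-03-05", "issuing_office": "J4", "page_count": "12"}
    source = {"document_date": "2025-03-05", "issuing_office": "J3"}
    results = verify_claims(draft, source)
    print({k: v.value for k, v in results.items()}, release_ready(results))
```

Treating unverified claims as blocking is a deliberate design choice. A plausible but unsupported claim is exactly the kind of hallucination this training aims to catch.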

6.3. Military-Specific AI Development Tools

6.3.1. Anduril AI Integration Platform

- Custom Model Development: Tailoring AI to specific military applications

- System Integration: Connecting AI capabilities with existing military systems

- Performance Monitoring: Tracking AI system effectiveness in operational environments

- Maintenance Procedures: Ongoing system updates and improvements

6.3.2. Defense Innovation Unit AI Tools

- Rapid Prototyping: Quick development of AI solutions for emerging threats

- Commercial Integration: Adapting commercial AI for military use

- Testing and Evaluation: Validating AI system performance

- Deployment Management: Fielding AI systems to operational units

6.4. Intelligence, Surveillance, and Reconnaissance (ISR) AI Systems

6.4.1. Generative AI for Intelligence Analysis

- Data Processing: Handling large-volume intelligence feeds

- Pattern Recognition: Identifying threats from complex data streams

- Report Generation: Automated intelligence reporting

- Alert Systems: AI-driven threat notification protocols

6.4.2. China’s PLA Generative AI Tools

- Adversary AI Analysis: Understanding opposing forces’ AI capabilities

- Counter-AI Tactics: Developing responses to enemy AI systems

- AI Vulnerability Assessment: Identifying weaknesses in AI systems

- Electronic Warfare Integration: Combining AI with EW capabilities

6.5. Training and Simulation AI Platforms

6.5.1. AI-Enhanced Military Training Systems

- Adaptive Learning Systems: Personalizing training based on performance

- Scenario Generation: Creating realistic training environments

- Performance Analytics: Tracking trainee progress and identifying gaps

- After-action Review: AI-assisted training evaluation

6.5.2. Digital Twin Environments

- Virtual Environment Management: Operating simulated battle spaces

- AI Behavior Modeling: Creating realistic adversary AI

- System Integration: Connecting digital twins with live systems

- Data Collection: Gathering training performance metrics

6.6. Command and Control AI Systems

6.6.1. AI-Enabled Decision Support Systems

- Situation Awareness: AI-enhanced battlefield understanding

- Course of Action Analysis: Evaluating multiple operational options

- Resource Management: AI-optimized asset allocation

- Communication Automation: AI-assisted command messaging

6.6.2. Multi-Domain Command Systems

- Cross-domain Integration: Connecting air, land, sea, space, and cyber

- Real-time Adaptation: Adjusting to dynamic battlefield conditions

- Priority Management: AI-assisted resource prioritization

- Interoperability: Working with allied force AI systems

6.7. Logistics and Maintenance AI Tools

6.7.1. AI Vehicle Maintenance Systems

- Predictive Maintenance: AI-driven equipment failure forecasting

- Repair Guidance: AI-assisted repair procedures

- Part Optimization: AI-managed inventory and supply chain

- Diagnostic Systems: Automated equipment assessment
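Predictive maintenance tools typically watch sensor trends and flag equipment before it fails. The sketch below assumes a single sensor series and hypothetical smoothing and threshold values. An exponentially weighted moving average (EWMA) smooths noisy readings, and an alert fires when the smoothed value crosses a limit. Fielded systems may use far richer models; the `ewma` and `first_alert` functions here are illustrative only.

```python
from typing import Optional


def ewma(readings: list[float], alpha: float = 0.3) -> list[float]:
    """Exponentially weighted moving average: s_t = alpha * x_t + (1 - alpha) * s_(t-1)."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: list[float] = []
    for x in readings:
        # The first reading seeds the average; later readings are blended in.
        smoothed.append(x if not smoothed else alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


def first_alert(readings: list[float], limit: float, alpha: float = 0.3) -> Optional[int]:
    """Index of the first reading whose smoothed value exceeds `limit`, else None."""
    for i, value in enumerate(ewma(readings, alpha)):
        if value > limit:
            return i
    return None
```

Smoothing means a single transient spike does not trigger an alert, while a sustained rise does. Choosing `alpha` trades responsiveness against false alarms, and this is one of the judgment calls maintainers would need to understand.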

6.7.2. Generative AI for Business Operations

- Process Automation: Streamlining administrative functions

- Document Generation: Automated report and brief creation

- Data Analysis: Business intelligence applications

- Workflow Optimization: Improving organizational efficiency

6.8. Cyber Warfare AI Platforms

6.8.1. AI Cyber Defense Systems

- Threat Detection: AI-powered network monitoring

- Vulnerability Analysis: Automated security assessment

- Response Coordination: AI-assisted cyber defense actions

- Attack Simulation: Testing defenses using AI-generated threats

6.8.2. Secure Multi-party Computation

- Encrypted Data Processing: Working with privacy-preserving AI

- Secure Collaboration: Multi-organization AI operations

- Cryptographic Protocols: Understanding underlying security mechanisms

- Trust Management: Maintaining security in distributed AI systems
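The core idea behind secure multi-party computation can be shown with additive secret sharing. Each organization splits its private value into random shares that reveal nothing on their own, and the parties jointly compute a sum without any of them seeing another's input. This is a textbook illustration only. It assumes honest-but-curious parties and secure channels, simulates all parties in one process, and is not the protocol used by any system cited in this section.

```python
import secrets

MODULUS = 2**61 - 1  # arithmetic modulo a large prime; the specific prime is an illustrative choice


def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Sum private inputs so that no party sees another party's raw value."""
    n = len(private_values)
    # dealt[i][j] is the share that party i sends to party j.
    dealt = [share(v, n) for v in private_values]
    # Each party j adds only the shares it received and publishes that partial sum.
    partials = [sum(dealt[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Combining the published partial sums reveals the total and nothing else.
    return sum(partials) % MODULUS


if __name__ == "__main__":
    print(secure_sum([120, 45, 300]))  # e.g. three units' private counts
```

Any single share is uniformly random, so it carries no information about the input. Only the combination of all partial sums reveals the aggregate.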

| Software Platform | Primary Function | Key Training Requirements | Citation |
|---|---|---|---|
| Thunderforge (Scale AI) | Military planning | Multi-domain integration, scenario generation | [37] |
| Project Maven | ISR analysis | Object recognition, pattern analysis | [38] |
| Scale Agentic AI | Autonomous warfare | Multi-agent coordination, oversight | [45] |
| Anduril-OpenAI | System integration | Custom model development | [46] |
| PLA Generative AI | Intelligence analysis | Adversary AI understanding | [50] |
| AI Training Systems | Personnel training | Adaptive learning, scenario generation | [32] |
| Digital Twin | Simulation | Virtual environment management | [1] |
| Project Athena | Business operations | Process automation, workflow optimization | [53] |
| Vehicle AI | Maintenance | Predictive maintenance, diagnostics | [52] |
| Secure Computation | Cyber operations | Encrypted data processing | [54] |

6.9. Training Implementation Challenges

6.9.1. Technical Complexity

- Rapid Evolution: Continuous system updates requiring ongoing training

- System Interdependence: Multiple AI tools requiring integrated training approaches

- Security Constraints: Limited access to systems for training purposes

- Customization Needs: Different units require tailored training programs

6.9.2. Resource Requirements

- Expert Instructors: Limited availability of qualified AI training personnel

- Training Infrastructure: Dedicated systems for practice and simulation

- Time Allocation: Significant time required for comprehensive training

- Documentation: Detailed training materials for diverse learning styles

6.9.3. Operational Integration

- Context Application: Translating training to operational environments

- Emergency Procedures: Training for system failures and edge cases

- Team Coordination: Multi-person operation of complex AI systems

- Performance Assessment: Measuring training effectiveness in combat scenarios

6.10. Best Practices for AI Tool Training

6.10.1. Progressive Learning Approaches

- Basic Familiarization: Initial exposure to AI concepts and interfaces

- Hands-on Practice: Extensive practical exercises with systems

- Scenario Training: Realistic operational scenario application

- Advanced Optimization: Training for maximizing system effectiveness
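The four stages above imply a gating rule: a trainee advances only after demonstrating proficiency at the current stage. The sketch below assumes one scored assessment per stage and a hypothetical 80% pass mark. The stage names come from the list above; the threshold and the `next_stage` function are illustrative.

```python
STAGES = [
    "Basic Familiarization",
    "Hands-on Practice",
    "Scenario Training",
    "Advanced Optimization",
]


def next_stage(current: str, score: float, pass_mark: float = 0.8) -> str:
    """Return the stage a trainee should work on after an assessment.

    Trainees who meet `pass_mark` advance one stage and remain at the final
    stage once it is reached; everyone else repeats the current stage.
    """
    index = STAGES.index(current)  # raises ValueError for an unknown stage name
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be a fraction between 0 and 1")
    if score >= pass_mark and index < len(STAGES) - 1:
        return STAGES[index + 1]
    return current
```

A fuller version might also send trainees back a stage after repeated failures. The simple form is enough to show that progression is earned through demonstrated proficiency rather than time served.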

6.10.2. Continuous Skill Development

- Regular Updates: Ongoing training for system enhancements

- Cross-training: Multiple personnel trained on critical systems

- Skill Refreshment: Periodic retraining to maintain proficiency

- Community Development: User communities for knowledge sharing

7. Quantitative Findings from Current Military AI Implementation

7.1. Financial Investments and Budget Allocations

7.1.1. Major AI Defense Funding

- $600 million: Pentagon investment in "next-generation agentic AI for autonomous military operations" [3]

- $42 million: Annual savings realized by defense contractors through "improved hiring pipelines" for AI talent [43]

- $8,400: Cost per participant for "intensive 12-week AI bootcamps" [43]

- $28,000: Average annual wage increase for personnel completing AI training programs [43]
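The bootcamp figures from [43] support a rough payback estimate. The calculation below takes the $8,400 per-participant cost and the $28,000 average annual wage increase at face value. It also assumes, as a simplification not made in the source, that the wage gain accrues evenly from the first month. It compares an individual's cost with that individual's gain and does not model who bears the cost or how placement rates affect the average.

```python
def payback_months(cost: float, annual_gain: float) -> float:
    """Months until cumulative gain equals cost, assuming the gain accrues evenly."""
    if annual_gain <= 0:
        raise ValueError("annual_gain must be positive")
    return cost * 12 / annual_gain


BOOTCAMP_COST = 8_400      # USD per participant [43]
ANNUAL_WAGE_GAIN = 28_000  # USD per year [43]

if __name__ == "__main__":
    print(f"Payback: {payback_months(BOOTCAMP_COST, ANNUAL_WAGE_GAIN):.1f} months")  # 3.6
    print(f"First-year gain/cost: {ANNUAL_WAGE_GAIN / BOOTCAMP_COST:.2f}")           # 3.33
```

On these assumptions, the training cost is recovered in under four months, and the first-year gain is roughly 3.3 times the cost.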

7.1.2. Program Implementation Costs

7.2. Adoption Rates and Implementation Metrics

7.2.1. Current AI Integration Levels

- 15%: Percentage of military personnel who "feel adequately trained to work alongside agentic AI systems" [39]

- 85%: Placement rate within six months for AI training program participants [43]

- 100%: Projected penetration of "skills-based hiring in technical fields by 2030" [43]

- 50%: Expected enterprise deployment of AI agents by 2027, doubling from 2025 levels [27]
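The two adoption projections from [27] (25% of enterprises deploying AI agents in 2025, and 50% in 2027) imply an average compound growth rate for the adoption share over that two-year interval. This is simple arithmetic on the cited figures, not an additional forecast. A share capped at 100% cannot keep compounding at this rate.

$$
r = \left(\frac{50\%}{25\%}\right)^{1/(2027-2025)} - 1 = 2^{1/2} - 1 \approx 0.414
$$

That is roughly 41% growth per year in the adoption share. A linear reading of the same two figures gives 12.5 percentage points per year.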

7.2.2. Industry Adoption Projections

7.3. Operational and Performance Metrics

7.3.1. Training Effectiveness Data

7.3.2. Workforce and Employment Impact

7.4. Technical Implementation Metrics

7.4.1. System Deployment Scale

7.4.2. AI Model Development

- 79: Large language models (LLMs) launched by Chinese research institutes [57]

| Metric Category | Value | Unit | Source Context |
|---|---|---|---|
| Investment | 600 | million USD | Pentagon agentic AI development [3] |
| Annual Savings | 42 | million USD | Defense contractor hiring pipelines [43] |
| Training Cost | 8,400 | USD/participant | AI bootcamp programs [43] |
| Wage Increase | 28,000 | USD/year | Post-AI training compensation [43] |
| Personnel Feeling Adequately Trained | 15 | % | Self-assessed readiness for agentic AI [39] |
| Placement Rate | 85 | % | Within 6 months of training [43] |
| Skills-based Hiring | 100 | % | Projected 2030 technical fields [43] |
| AI Agent Deployment | 25 | % | Enterprises by 2025 [27] |
| AI Agent Deployment | 50 | % | Enterprises by 2027 [27] |
| Training Time Reduction | 50 | % | With increased retention [32] |
| Unit Size | 2,500 | personnel | AI system testing unit [49] |
| LLM Development | 79 | models | Chinese research institutes [57] |
| Cloud Professionals | 2,000,000 | personnel | Demand in India by FY25 [27] |
| Naval Platforms | 3 | ships | AI system testing [49] |

7.5. Timeframe and Projection Metrics

7.5.1. Implementation Timelines

- 6 months: Timeframe for achieving 85% placement rate after AI training [43]

- 2025: Target year for 25% enterprise AI agent deployment [27]

- 2027: Target year for 50% enterprise AI agent deployment [27]

- 2030: Projection year for 100% skills-based hiring penetration in technical fields [43]

- FY25: Financial year for projected demand of 2 million cloud professionals in India [27]

7.5.2. Training Duration Metrics

7.6. Economic and Workforce Impact Metrics

7.6.1. Financial Impact Measures

7.6.2. Workforce Scale Metrics

7.7. Technology Deployment Metrics

7.7.1. System Implementation Scale

7.7.2. Adoption Rate Projections

8. Integration and Synthesis of Proposed Framework

8.1. Holistic Framework Integration

8.2. Evidence-Based Implementation Strategy

8.3. Performance Measurement and Continuous Improvement

8.4. Addressing Identified Challenges

8.4.1. Technical and Workforce Readiness Gaps

8.4.2. Resource Optimization

8.4.3. Organizational Adaptation

8.5. Synergistic Benefits and Strategic Advantages

- Accelerated Learning Curves: The combination of tiered education and advanced technology infrastructure reduces training times while increasing retention, as demonstrated by the 50% reduction achieved in existing AI-enhanced programs (Table 4).

- Scalable Expertise Development: The stakeholder engagement model enables rapid scaling of educational capacity through strategic partnerships, addressing the critical faculty expertise gap identified in current limitations.

- Continuous Adaptation: The integrated assessment and curriculum development components create a self-improving system that maintains educational relevance despite rapid technological change.

- Strategic Alignment: The framework ensures that educational outcomes directly support operational requirements and strategic objectives, maximizing return on educational investment.

8.6. Validation Through Current Program Analysis

8.7. Strategic Implementation Considerations

8.7.1. Leadership Commitment

8.7.2. Cultural Transformation

8.7.3. Measurement and Accountability

8.8. Conclusion

9. Conclusions and Recommendations

9.1. Key Findings and Implications

- Skills Gap Magnitude: The 15% readiness rate among military personnel indicates a substantial gap that could undermine the significant technological investments in AI systems. This disparity threatens to create a scenario where advanced capabilities outpace the workforce’s ability to effectively employ them.

- Resource Allocation Imperative: The proposed distribution of resources (40% to technology infrastructure, 25% to faculty development, 20% to curriculum design, and 15% to program evaluation) reflects the balanced approach needed to address technological and human capital requirements simultaneously.

- Continuous Learning Necessity: The rapid evolution of AI technologies, evidenced by projections showing 50% enterprise deployment of AI agents by 2027, necessitates continuous education models rather than traditional one-time training approaches.

- Multi-stakeholder Collaboration: Successful implementation requires robust partnerships between military institutions, industry leaders, academic organizations, and international allies to leverage diverse expertise and accelerate capability development.
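The proposed 40/25/20/15 split can be applied to any program budget. The sketch below assumes a hypothetical total expressed in whole dollars. It uses the largest-remainder method for rounding, so the category amounts always add up exactly to the total. The `allocate` function and the example budget are illustrative, not figures from the source.

```python
SHARES = {
    "Technology infrastructure": 40,
    "Faculty development": 25,
    "Curriculum design": 20,
    "Program evaluation": 15,
}


def allocate(total: int, shares: dict[str, int] = SHARES) -> dict[str, int]:
    """Split an integer budget by percentage shares using largest-remainder rounding."""
    if sum(shares.values()) != 100:
        raise ValueError("shares must sum to 100")
    # Floor of each exact share, plus the remainder that floor division discarded.
    base = {k: total * p // 100 for k, p in shares.items()}
    remainders = {k: (total * p) % 100 for k, p in shares.items()}
    leftover = total - sum(base.values())
    # Give the leftover whole units to the categories with the largest remainders.
    for k in sorted(remainders, key=lambda k: remainders[k], reverse=True)[:leftover]:
        base[k] += 1
    return base


if __name__ == "__main__":
    print(allocate(10_000_000))  # hypothetical USD 10 million program budget
```

Largest-remainder rounding matters mostly for small or odd totals. For round budgets the split is exact.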

9.2. Strategic Recommendations

9.2.1. Immediate Priorities (0-6 months)

- Establish Cross-Service AI Education Task Force: Create a dedicated organization with representation from all military branches to coordinate AI education initiatives and standardize competency frameworks.

- Conduct Comprehensive Skills Gap Analysis: Implement systematic assessment of current workforce capabilities against projected AI integration requirements to identify specific training needs.

- Launch Faculty Development Programs: Initiate urgent upskilling of military educators and instructors to build the foundational expertise required for AI curriculum delivery.

- Develop AI Education Standards: Create standardized competency frameworks and certification requirements to ensure consistent quality across military education institutions.

9.2.2. Medium-Term Initiatives (6-24 months)

- Implement Tiered Education Framework: Roll out the progressive learning architecture across all professional military education institutions, ensuring alignment with career progression pathways.

- Establish AI Certification System: Develop comprehensive credentialing programs that recognize and validate AI competencies across military occupational specialties.

- Deploy Digital Training Infrastructure: Implement the layered technology architecture to support scalable, secure AI education delivery across distributed learning environments.

- Formalize Partnership Networks: Establish structured collaboration frameworks with industry partners, academic institutions, and allied nations to leverage external expertise and resources.

9.2.3. Long-Term Vision (24+ months)

- Integrate AI Throughout Career Lifecycle: Embed AI education as a continuous requirement across all career stages, from accession through senior leadership development.

- Establish AI as Core Military Competency: Institutionalize AI literacy as a fundamental requirement parallel to traditional military skills like leadership and tactics.

- Develop Advanced Research Programs: Create dedicated AI research initiatives within military education institutions to drive innovation and maintain technological advantage.

- Expand International Education Partnerships: Build global AI education networks to facilitate doctrine development, interoperability, and shared learning.

9.3. Concluding Perspective

Declaration

References

- S. Joshi, “Agentic Generative AI and National Security: Policy Recommendations for US Military Competitiveness,” Rochester, NY, Sep. 2025. [Online]. Available: https://papers.ssrn.com/abstract=5529680.

- RoX818, “Agentic AI In Military Operations: The Future Of Autonomous Warfare,” Oct. 2024, section: Ethics, Policy & Society. [Online]. Available: https://aicompetence.org/agentic-ai-in-military-operations/.

- “Pentagon Invests $600 Million in Next-Generation Agentic AI for Autonomous Military Operations,” Jul. 2025. [Online]. Available: https://completeaitraining.com/news/pentagon-invests-600-million-in-next-generation-agentic-ai/.

- I. Cruickshank, “An AI-Ready Military Workforce.”

- C. Malin, “Why the military needs Generative AI,” Oct. 2023. [Online]. Available: https://www.armadainternational.com/2023/10/why-the-military-needs-generative-ai/.

- N. Chaillan, “Generative Artificial Intelligence is Rapidly Modernizing the U.S. Military,” Aug. 2025. [Online]. Available: https://defenseopinion.com/generative-artificial-intelligence-is-rapidly-modernizing-the-u-s-military/1010/.

- “AI’s New Frontier in War Planning: How AI Agents Can Revolutionize Military Decision-Making | The Belfer Center for Science and International Affairs,” Oct. 2024. [Online]. Available: https://www.belfercenter.org/research-analysis/ais-new-frontier-war-planning-how-ai-agents-can-revolutionize-military-decision.

- “Artificial Intelligence’s New Frontier in War Planning: How AI Agents Can Revolutionize Military Decision-Making,” Apr. 2025, section: U.S. Army, Military Strategy, AI in Defense. [Online]. Available: https://www.lineofdeparture.army.mil/Journals/Field-Artillery/Field-Artillery-Archive/Field-Artillery-2025-E-Edition/AIs-New-Frontier-in-War-Planning/.

- admin, “Agentic Warfare: Strategic Imperatives for U.S. Military Dominance in the AI-Driven Paradigm,” Apr. 2025. [Online]. Available: https://debuglies.com/2025/04/24/agentic-warfare-strategic-imperatives-for-u-s-military-dominance-in-the-ai-driven-paradigm/.

- “The Impact of Artificial Intelligence on Military Defence and Security,” Aug. 2024. [Online]. Available: https://www.cigionline.org/programs/the-impact-of-artificial-intelligence-on-military-defence-and-security/.

- “Fusion of Real-Time Data with Generative AI for Military.” [Online]. Available: https://vantiq.com/real-time-generative-ai-military/.

- “How Agentic AI Is Shaping the Future of Defense and Intelligence Strategy,” May 2025. [Online]. Available: https://completeaitraining.com/news/how-agentic-ai-is-shaping-the-future-of-defense-and/.

- “Government use of AI by key U.S. military branches | TechTarget.” [Online]. Available: https://www.techtarget.com/searchenterpriseai/news/366613198/Government-use-of-AI-by-key-US-military-branches.

- C. Casimiro, “US Army Supercharges Training and Cyber Defense With AI Tools,” Aug. 2025. [Online]. Available: https://thedefensepost.com/2025/08/28/us-army-ai-training-cyber/.

- A. Obis, “Army’s demand for GenAI surging, with focus on integration,” Sep. 2024. [Online]. Available: https://federalnewsnetwork.com/army/2024/09/armys-demand-for-genai-surging-with-focus-on-integration/.

- “US Defense Innovation Unit Seeks GenAI to Enhance Military Planning.” [Online]. Available: https://www.cdomagazine.tech/us-federal-news-bureau/us-defense-innovation-unit-seeks-genai-to-enhance-military-planning.

- S. D. Inc, “Military Applications of AI in 2024,” Mar. 2024. [Online]. Available: https://sdi.ai/blog/the-most-useful-military-applications-of-ai/.

- U. Banerjee, “Here Are The Top AI Startups Shaping U.S. Defense,” Apr. 2025. [Online]. Available: https://aimmediahouse.com/ai-startups/here-are-the-top-ai-startups-shaping-u-s-defense.

- W. Marcellino, J. Welch, B. Clayton, S. Webber, and T. Goode, “Acquiring Generative Artificial Intelligence to Improve U.S. Department of Defense Influence Activities.”

- R. Heath, “Some military experts are wary of generative AI,” May 2024. [Online]. Available: https://www.axios.com/2024/05/01/pentagon-military-ai-trust-issues.

- “The Risks of Artificial Intelligence in Weapons Design | Harvard Medical School,” Aug. 2024. [Online]. Available: https://hms.harvard.edu/news/risks-artificial-intelligence-weapons-design.

- “ChatGPT Sparks U.S. Debate Over Military Use of AI | Arms Control Association.” [Online]. Available: https://www.armscontrol.org/act/2023-06/news/chatgpt-sparks-us-debate-over-military-use-ai.

- A. Tiwari, “Reskilling in the Era of AI,” Mar. 2025. [Online]. Available: https://aruntiwari.com/reskilling-in-the-era-of-ai/.

- “Bridging the AI Gap: Feds Focus on Workforce Training for GenAI Tools.” [Online]. Available: https://www.meritalk.com/articles/bridging-the-ai-gap-feds-focus-on-workforce-training-for-genai-tools/.

- B. Geiselman, “Generative AI can help fill skills gap, attract younger workers,” Apr. 2025. [Online]. Available: https://www.plasticsmachinerymanufacturing.com/manufacturing/article/55277973/generative-ai-can-help-fill-skills-gap-attract-younger-workers.

- “Upskilling the Workforce: Strategies for 2025 Success.” [Online]. Available: https://blog.getaura.ai/upskilling-the-workforce-guide.

- “The Necessity For Upskilling And Reskilling In An Agentic AI Era.” [Online]. Available: https://www.bwpeople.in/article/the-necessity-for-upskilling-and-reskilling-in-an-agentic-ai-era-557843.

- “General Assembly Launches AI Academy to Close AI Skills Gap Across All Roles.” [Online]. Available: https://www.businesswire.com/news/home/20250415925998/en/General-Assembly-Launches-AI-Academy-to-Close-AI-Skills-Gap-Across-All-Roles.

- “AI innovation set to revolutionise military training landscape | Shephard.” [Online]. Available: https://www.shephardmedia.com/news/training-simulation/sponsored-ai-innovation-set-to-revolutionise-military-training-landscape/.

- “Transforming Military Training with AI.” [Online]. Available: https://www.4cstrategies.com/news/transforming-military-training-with-ai/.

- “How generative AI can improve military training | Shephard.” [Online]. Available: https://www.shephardmedia.com/news/training-simulation/how-generative-ai-can-improve-military-training/.

- B. Stilwell, “How the Army and Air Force Integrate AI Learning Into Combat Training,” Feb. 2020. [Online]. Available: https://www.military.com/military-life/how-army-and-air-force-integrate-ai-learning-combat-training.html.

- “Generative Artificial Intelligence and the Future of Military Strategy,” Jul. 2025. [Online]. Available: https://completeaitraining.com/news/generative-artificial-intelligence-and-the-future-of/.

- “Why Staffing Partners Are Critical in Acqui-Hiring & M&A,” Aug. 2025. [Online]. Available: https://www.vbeyond.com/blog/ai-in-defense-rethinking-talent-and-readiness/.

- G. Drenik, “Workforce Reskilling Is The Competitive Edge In The Agentic AI Era,” Jun. 2025. [Online]. Available: https://www.forbes.com/sites/garydrenik/2025/06/10/workforce-reskilling-is-the-competitive-edge-in-the-agentic-ai-era/.

- “Agentic Warfare Is Here. Will America Be the First Mover?” Apr. 2025. [Online]. Available: https://warontherocks.com/2025/04/agentic-warfare-is-here-will-america-be-the-first-mover/.

- J. Harper, “Combatant commands to get new generative AI tech for operational planning, wargaming,” Mar. 2025. [Online]. Available: https://defensescoop.com/2025/03/05/diu-thunderforge-scale-ai-combatant-commands-indopacom-eucom/.

- K. Requiroso, “Pentagon Advances Generative AI in Military Operations Amid US-China Tech Race,” Apr. 2025. [Online]. Available: https://www.eweek.com/news/pentagon-generative-ai-military-operations/.

- “An AI-Ready Military Workforce.” [Online]. Available: https://ndupress.ndu.edu/Media/News/News-Article-View/Article/3449468/an-ai-ready-military-workforce/.

- J. Harper, “Army kicks off generative AI pilot to tackle drudge work, hallucinations,” Oct. 2024. [Online]. Available: https://defensescoop.com/2024/10/18/army-generative-ai-pilot-calibrateai-tackle-drudge-work-hallucinations/.

- “Enhancing Professional Military Education with AI.” [Online]. Available: https://www.armyupress.army.mil/Journals/Journal-of-Military-Learning/Journal-of-Military-Learning-Archives/JML-April-2025/Enhancing-pme-with-ai/.

- A. Collazzo, “Warfare at the Speed of Thought: Balancing AI and Critical Thinking for the Military Leaders of Tomorrow - Modern War Institute,” Feb. 2025. [Online]. Available: https://mwi.westpoint.edu/warfare-at-the-speed-of-thought-balancing-ai-and-critical-thinking-for-the-military-leaders-of-tomorrow/.

- S. Joshi, “The Impact of AI on Veteran Employment and the Future Workforce Development: Opportunities, Barriers, and Systemic Solutions,” World Journal of Advanced Research and Reviews, vol. 27, no. 2, pp. 328–341, Sep. 2025. [Online]. Available: https://journalwjarr.com/node/2727.

- Eightfold AI, “How the Department of Defense is using AI to unearth talent in the military reserves,” Jul. 2022. [Online]. Available: https://eightfold.ai/blog/dod-ai-military-reserves/.

- “Scale AI | Accelerating agentic warfare capabilities.” [Online]. Available: https://scale.com/agentic-warfare.

- W. Knight, “OpenAI Is Working With Anduril to Supply the US Military With AI,” Wired. [Online]. Available: https://www.wired.com/story/openai-anduril-defense/.

- “How Generative AI Improves Military Decision-Making | AFCEA International,” Apr. 2025. [Online]. Available: https://www.afcea.org/signal-media/how-generative-ai-improves-military-decision-making.

- V. Tangermann, “Pentagon Signs Deal to ‘Deploy AI Agents for Military Use’,” Mar. 2025. [Online]. Available: https://futurism.com/pentagon-signs-deal-deploy-ai-agents-military-use.

- N. E. Souki, “Pentagon’s New Gen-AI Can Analyze War but Doesn’t Understand War,” Apr. 2025. [Online]. Available: https://insidetelecom.com/us-uses-generative-ai-military-applications/.

- Insikt Group®, “China’s PLA Leverages Generative AI for Military Intelligence: Insikt Group Report.” [Online]. Available: https://www.recordedfuture.com/research/artificial-eyes-generative-ai-chinas-military-intelligence.

- “Artificial Eyes: Generative AI in China’s Military Intelligence.”

- L. C. Williams, “The Army wants AI to aid in vehicle repairs,” Aug. 2025. [Online]. Available: https://www.defenseone.com/defense-systems/2025/08/army-wants-ai-aid-vehicle-repairs/407645/.

- M. Easley, “Army evaluating generative AI tools to support business ops,” Jan. 2025. [Online]. Available: https://defensescoop.com/2025/01/14/army-project-athena-generative-ai-streamline-business-operations/.

- R. Pathak and S. Joshi, “Secure Multi-party Computation Protocol for Defense Applications in Military Operations Using Virtual Cryptography,” in Contemporary Computing, S. Ranka, S. Aluru, R. Buyya, Y.-C. Chung, S. Dua, A. Grama, S. K. S. Gupta, R. Kumar, and V. V. Phoha, Eds. Berlin, Heidelberg: Springer, 2009, pp. 389–399.

- “US government is giving leading AI companies a bunch of cash for military applications,” Jul. 2025. [Online]. Available: https://www.engadget.com/ai/us-government-is-giving-leading-ai-companies-a-bunch-of-cash-for-military-applications-185347762.html.

- “SHRM Report Warns of Widening Skills Gap as AI Adoption Reaches Nearly Half of U.S. Workforce.” [Online]. Available: https://www.shrm.org/about/press-room/shrm-report-warns-of-widening-skills-gap-as-ai-adoption-reaches-.

- H. Zarrar and S. A. Kakar, “Generative Artificial Intelligence & its Military Applications by the US and China – Lessons for South Asia,” Journal of Computing & Biomedical Informatics, Apr. 2024. [Online]. Available: https://jcbi.org/index.php/Main/article/view/494.

| Skill Category | Current Coverage | Required Enhancement |
|---|---|---|
| Technical AI Literacy | Basic digital literacy | Advanced AI concepts, system architecture |
| Ethical Decision-Making | Traditional ethics training | AI-specific ethical frameworks |
| Human-Machine Teaming | Limited exposure | Collaborative workflow design |
| System Oversight | Basic supervision skills | AI monitoring and intervention protocols |
| Adaptive Learning | Standardized training | Continuous skill refreshment |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).