Submitted: 17 September 2025

Posted: 18 September 2025


Abstract

Neurosurgical navigation is hindered by brain shift and occlusion. We propose an enhanced depth-based tracking algorithm that integrates adaptive bilateral filtering with finite element modeling (FEM) of tissue deformation. A deformable iterative closest point (ICP) registration aligns intraoperative point clouds with preoperative MRI surfaces, updated by FEM-predicted displacements. In 10 phantom experiments simulating brain shifts of up to 15 mm, the average error decreased from 3.2 mm (baseline ICP) to 1.2 mm, while the algorithm maintained 25 frames per second (fps). Compared with conventional methods, alignment stability improved by 38%, supporting safer tumor resections.
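As an illustration of the filtering stage named above, the following is a minimal sketch of a plain bilateral filter applied to a depth map. It is not the adaptive variant described in the abstract: the function name `bilateral_filter_depth`, the fixed parameters `radius`, `sigma_space`, and `sigma_range`, and the list-of-rows depth format are all assumptions made for this example. The sketch only shows the underlying idea, namely that each depth value is replaced by a weighted average of its neighbours, with weights that fall off with both pixel distance and depth difference, so noise is smoothed while depth discontinuities are kept.

```python
import math


def bilateral_filter_depth(depth, radius=2, sigma_space=1.5, sigma_range=2.0):
    """Edge-preserving smoothing of a 2D depth map (list of rows, values in mm).

    All parameter names and defaults are illustrative assumptions,
    not values taken from the paper.
    """
    rows, cols = len(depth), len(depth[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            centre = depth[r][c]
            weight_sum = 0.0
            value_sum = 0.0
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    if not (0 <= rr < rows and 0 <= cc < cols):
                        continue  # skip neighbours outside the image
                    neighbour = depth[rr][cc]
                    # Spatial weight: nearby pixels count more.
                    w_space = math.exp(-(dr * dr + dc * dc) / (2 * sigma_space ** 2))
                    # Range weight: pixels at a similar depth count more,
                    # which keeps sharp depth edges from being blurred.
                    w_range = math.exp(-((neighbour - centre) ** 2) / (2 * sigma_range ** 2))
                    w = w_space * w_range
                    weight_sum += w
                    value_sum += w * neighbour
            # weight_sum is never zero: the centre pixel contributes weight 1.
            out[r][c] = value_sum / weight_sum
    return out


# Usage: a 30 mm depth step with one slightly noisy pixel on the near side.
depth_map = [[100.0, 100.0, 100.0, 130.0, 130.0, 130.0] for _ in range(4)]
depth_map[1][1] = 100.5
smoothed = bilateral_filter_depth(depth_map)
```

In this example the noisy pixel is pulled back toward its 100 mm neighbours, while the step between the 100 mm and 130 mm regions is left essentially untouched because the range weight across the step is negligible. An adaptive scheme would presumably vary the sigmas per pixel or per frame; how the authors do so is not stated in the text available here.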

Keywords:

1. Introduction

2. Materials and Methods

2.1. Sample and Study Description

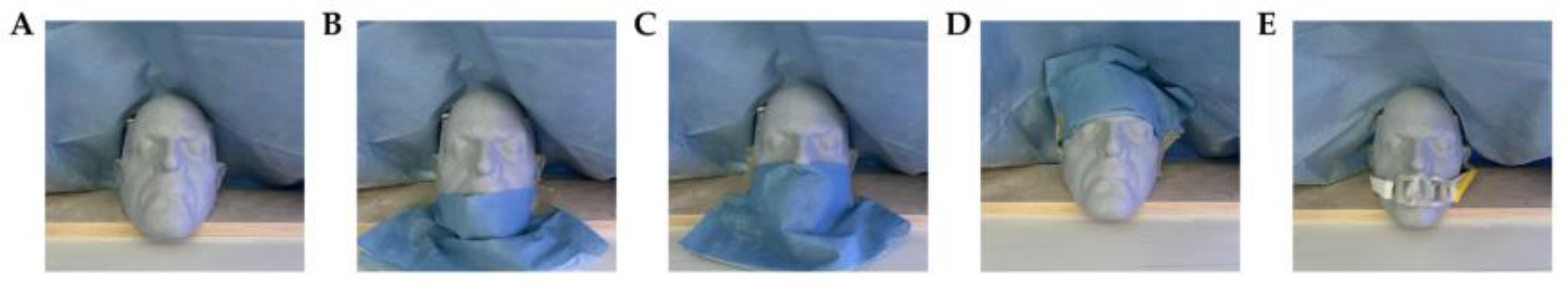

2.2. Experimental Design and Control Setup

2.3. Measurement Methods and Quality Control

2.4. Data Processing and Model Formulation

3. Results and Discussion

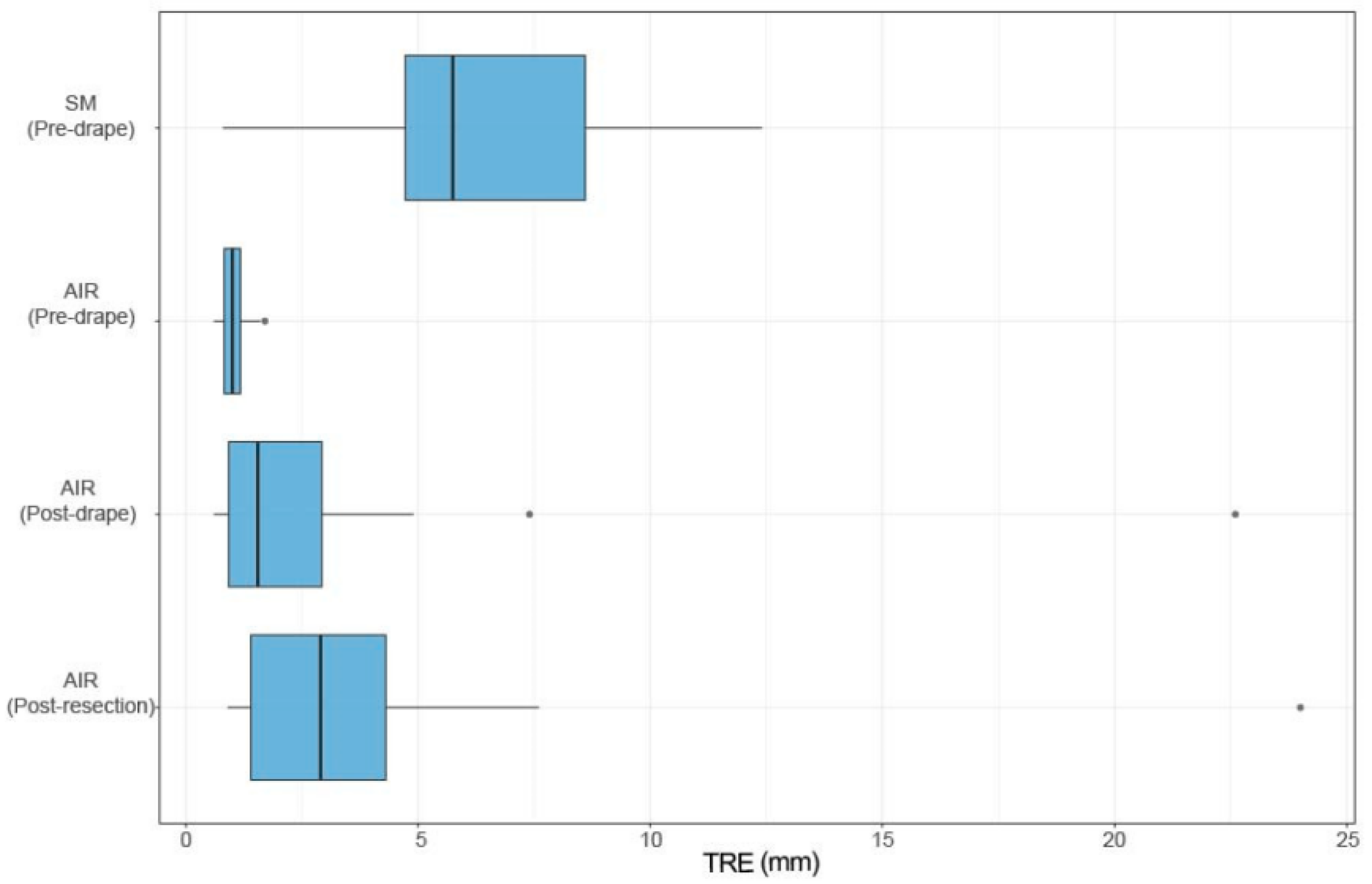

3.1. Error Performance Under Obstruction

3.2. Comparative Registration Accuracy

3.3. Cross-Study Consistency and Significance

3.4. Clinical Implications and Limitations

4. Conclusions


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).