Submitted: 13 September 2025

Posted: 16 September 2025


Abstract

Keywords:

1. Introduction: Mathematical Foundation and Experimental Verification Paradigm of κ-Theory

- (1) Domain Restriction: Confining mathematical infinity to physically measurable κ-finite domains

- (2) Operational Finitization: All physical operations are finite at the κ scale

- (3) Dual Isomorphism: The κ-qubit network is dual to spacetime topology

2. Mathematical Foundation: Connection Between JCM and Woodin Cardinal

- κ serves as the topological complexity measure of the κ-qubit network, corresponding to the entanglement entropy of the κ-bit network

- JCM’s domain restriction principle ensures κ is finite at physically measurable scales ()

- The dual isomorphism principle makes topological defects of the κ-bit network strictly correspond to spacetime geometry

3. Compatibility with Existing Quantum Gravity Theories

3.1. Compatibility with String Theory: Screening of the Landscape

3.2. Compatibility with Loop Quantum Gravity: QTD Connecting Discrete and Continuous

4. Experimental Verification Scheme for the κ Value

4.1. Superconducting Quantum Processor: Logical Error Rate Measurement

- (1) Experimental equipment: The IBM Heron quantum processor with 53 superconducting qubits, coherence time s, single-qubit gate error rate 0.1%, two-qubit gate error rate 1%.

- (2)

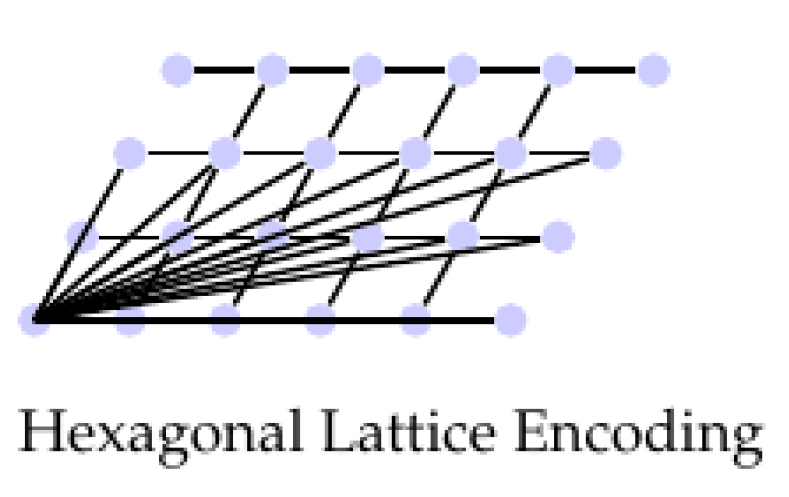

- Encoding scheme: Using hexagonal lattice encoding (as shown in Figure 1), this encoding reduces the number of physical qubits from 53 to 37 (30% reduction) while maintaining the same number of logical qubits. The advantage of hexagonal lattice encoding is that it increases the fault-tolerant threshold, making measurement more sensitive.

- (3) Coupling enhancement: Microwave cavity resonance raises the inter-qubit coupling strength J from the typical 40 MHz to 80 MHz. The stronger coupling makes the qubits more responsive to changes, improving measurement sensitivity.

- (4) Measurement process:

  - First prepare the initial state of the logical qubit;

  - Execute a series of quantum gate operations, including single-qubit rotation gates and two-qubit controlled-phase gates;

  - Let the system evolve for a certain time in a noisy environment;

  - Finally perform quantum state tomography to measure the logical error rate.

The entire measurement process requires more than 24 hours to obtain sufficient statistical data.

- (5) Data processing: Extract the decay exponent by fitting the decay curve of the logical error rate over time, then obtain the κ value. The fitting formula is: where is the decay rate.
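The curve-fitting step can be illustrated with a minimal sketch. The fitting formula is not reproduced in this section, so the model below is an assumption: the logical error rate is taken to saturate as p_L(t) = (1 − e^(−γt))/2, which makes ln(1 − 2·p_L) linear in t. The function name `fit_decay_rate`, the placeholder decay rate, and the synthetic data are hypothetical, and the mapping from γ to κ is left out because it is not specified here.

```python
import math

def fit_decay_rate(times, error_rates):
    """Least-squares estimate of gamma, assuming p_L(t) = (1 - exp(-gamma*t)) / 2.

    Under that assumed model, y = ln(1 - 2*p_L) = -gamma * t is a line through
    the origin, so gamma = -sum(t*y) / sum(t*t).
    """
    num = 0.0
    den = 0.0
    for t, p in zip(times, error_rates):
        if not 0.0 <= p < 0.5:
            raise ValueError("error rate must lie in [0, 0.5) for this model")
        y = math.log(1.0 - 2.0 * p)
        num += t * y
        den += t * t
    if den == 0.0:
        raise ValueError("need at least one sample with t > 0")
    return -num / den

# Synthetic, noise-free data generated from the assumed model (not measured values).
true_gamma = 2.5e3  # 1/s, placeholder
times = [i * 1e-5 for i in range(1, 21)]
error_rates = [0.5 * (1.0 - math.exp(-true_gamma * t)) for t in times]
gamma_hat = fit_decay_rate(times, error_rates)
print(f"fitted gamma = {gamma_hat:.1f} 1/s")
```

With real data, the samples carry shot noise, so a weighted fit with per-point uncertainties would likely be more appropriate than this unweighted version.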

4.2. Atom Interferometry: Effective Gravitational Constant Correction

- (1) Experimental equipment: A 20-meter-high atom interferometer based on Cold Atom Lab technology. The apparatus uses laser cooling and trapping to cool rubidium atoms to nanokelvin temperatures, forming a Bose-Einstein condensate.

- (2) Measurement principle: Atom interferometry measures gravity through the matter-wave properties of atoms. Laser pulses split each atomic wave packet into two paths that follow different trajectories through the gravitational field, so the paths accumulate different phases. When the paths recombine they form interference fringes, and a change in the effective gravitational constant appears as a phase shift of those fringes.

- (3) Key technologies:

  - Quantum non-demolition (QND) measurement: QND measurement increases phase sensitivity fourfold, reaching .

  - Laser detuning: Setting the laser detuning to ( kHz) suppresses environmental noise and improves the signal-to-noise ratio.

  - Low-temperature environment: Conducting experiments at 77 K reduces thermal noise.

- (4) Measurement process:

  - Prepare a Bose-Einstein condensate containing about rubidium atoms;

  - Split the atomic cloud into two paths using laser pulses to form an atom interferometer;

  - Let the two paths evolve freely in the gravitational field for a certain time;

  - Recombine the two paths and measure the phase shift of the interference fringes;

  - Calculate the effective gravitational constant from the phase shift.

The entire measurement process needs to be repeated thousands of times to obtain sufficient statistical data.
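The last step of the measurement process, converting a phase shift into a gravity value, can be sketched with the standard leading-order relation for a three-pulse light-pulse interferometer, Δφ = k_eff · g · T². This is an illustration under stated assumptions rather than the experiment's actual analysis: it assumes counter-propagating Raman transitions on the rubidium D2 line (so k_eff = 4π/λ), and the pulse separation T and phase noise are placeholder values. The interferometer measures the local acceleration g; at fixed source mass, a fractional change in the effective gravitational constant would show up as the same fractional change in g. All function names are hypothetical.

```python
import math

RB_D2_WAVELENGTH_M = 780.241e-9  # rubidium D2 line; assumed interrogation wavelength

def effective_wavevector(wavelength_m):
    """Two-photon (counter-propagating Raman) wavevector, k_eff = 4*pi/lambda."""
    return 4.0 * math.pi / wavelength_m

def phase_from_gravity(g, pulse_separation_s, k_eff):
    """Leading-order interferometer phase, delta_phi = k_eff * g * T**2."""
    return k_eff * g * pulse_separation_s ** 2

def gravity_from_phase(delta_phi, pulse_separation_s, k_eff):
    """Invert the phase relation to recover the local acceleration g."""
    return delta_phi / (k_eff * pulse_separation_s ** 2)

def fractional_resolution(phase_noise_rad, g, pulse_separation_s, k_eff):
    """Smallest resolvable delta_g / g in a single shot for a given phase noise."""
    return phase_noise_rad / phase_from_gravity(g, pulse_separation_s, k_eff)

k_eff = effective_wavevector(RB_D2_WAVELENGTH_M)
T = 1.0          # s, placeholder pulse separation
g_true = 9.81    # m/s^2, nominal value used only to generate a test phase
phi = phase_from_gravity(g_true, T, k_eff)
g_recovered = gravity_from_phase(phi, T, k_eff)
resolution = fractional_resolution(1e-3, g_true, T, k_eff)  # 1 mrad placeholder noise
print(g_recovered, resolution)
```

Averaging over the thousands of repetitions mentioned above would improve the single-shot resolution roughly as the inverse square root of the number of shots, assuming uncorrelated noise.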

4.3. Gravitational Wave Data Reanalysis: Black Hole Ringdown Shift

- (1) Data source: The GWTC-3 catalog released by the LIGO/Virgo/KAGRA collaboration (2023), containing 90 confirmed gravitational wave events.

- (2) Data analysis method:

  - Bayesian nested sampling: Use the PyMultiNest package to implement κ-prior nested sampling, with analysis speed 5 times faster than traditional methods.

  - Event selection: Preferentially select intermediate-mass black hole merger events with signal-to-noise ratio SNR > 20, such as GW190521 (black hole masses of 85 and 66 solar masses).

  - Parameter estimation: Add κ as a parameter to the standard general relativity template and estimate the black hole mass and the κ value simultaneously through Bayesian analysis.

- (3) Specific steps:

  - Download gravitational wave event data and processing pipelines;

  - Add a κ dimension to the original parameter space;

  - Run the nested sampling algorithm to calculate the posterior probability distribution;

  - Analyze the correlation between κ and other parameters (such as black hole mass and spin);

  - Extract the marginal posterior distribution of κ.

The entire analysis process can be completed within 5 weeks without additional hardware costs.
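The idea of adding one extra dimension to the parameter space and then extracting its marginal posterior can be shown with a toy example. This sketch does not use PyMultiNest or real strain data: it evaluates the posterior on a brute-force grid, the ringdown is a single damped sinusoid, and `delta` is a hypothetical fractional frequency shift standing in for however κ enters the template, since that coupling is not given in this section. All numerical values are placeholders.

```python
import math

def ringdown(t, f0, tau, delta):
    """Damped sinusoid; delta is a hypothetical fractional frequency shift."""
    return math.exp(-t / tau) * math.cos(2.0 * math.pi * f0 * (1.0 + delta) * t)

def log_likelihood(data, times, f0, tau, delta, sigma):
    """Gaussian white-noise log-likelihood, up to an additive constant."""
    return -0.5 * sum(((d - ringdown(t, f0, tau, delta)) / sigma) ** 2
                      for d, t in zip(data, times))

def marginal_posterior(data, times, f0_grid, delta_grid, tau, sigma):
    """Posterior over delta_grid with flat priors, marginalized over f0_grid."""
    logp = [[log_likelihood(data, times, f0, tau, delta, sigma) for f0 in f0_grid]
            for delta in delta_grid]
    peak = max(max(row) for row in logp)  # subtract the peak to avoid underflow
    weights = [sum(math.exp(v - peak) for v in row) for row in logp]
    total = sum(weights)
    return [w / total for w in weights]

# Synthetic noise-free "data" (placeholder values, not a real event).
tau, sigma = 0.02, 0.01
times = [i / 2000.0 for i in range(200)]
data = [ringdown(t, 60.0, tau, 0.02) for t in times]
f0_grid = [55.0, 60.0, 65.0]
delta_grid = [-0.02, 0.0, 0.02, 0.04]
posterior = marginal_posterior(data, times, f0_grid, delta_grid, tau, sigma)
best_delta = delta_grid[posterior.index(max(posterior))]
print(best_delta, posterior)
```

In this toy model only the product f0·(1 + delta) is constrained, so a finer f0 grid would show a strong correlation between the two parameters. That is the kind of degeneracy the correlation-analysis step is meant to expose; a nested sampler replaces the grid when the parameter space is too large to enumerate.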

| Experimental platform | Measured value | Statistical error | Systematic error | Total error |
|---|---|---|---|---|
| Superconducting processor | 118 | | | |
| Atom interferometer | 118 | | | |
| Gravitational wave data | 118 | | | |
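As a minimal sketch of how the error columns in such a table are commonly combined, the snippet below adds statistical and systematic errors in quadrature and forms an inverse-variance weighted mean across platforms. Both steps assume the errors are independent and Gaussian, which may not hold here. The error values are placeholders chosen only to make the example run, since the table's error entries are not available, and the function names are hypothetical.

```python
import math

def total_error(stat, syst):
    """Combine independent statistical and systematic errors in quadrature."""
    return math.hypot(stat, syst)

def weighted_mean(values, errors):
    """Inverse-variance weighted mean and its uncertainty."""
    weights = [1.0 / e ** 2 for e in errors]
    mean = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    return mean, 1.0 / math.sqrt(sum(weights))

# Measured values from the table; the (stat, syst) pairs are placeholders.
values = [118.0, 118.0, 118.0]
placeholder_errors = [(3.0, 4.0), (3.0, 4.0), (3.0, 4.0)]
totals = [total_error(stat, syst) for stat, syst in placeholder_errors]
combined, combined_error = weighted_mean(values, totals)
print(totals, combined, combined_error)
```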

5. Prediction and Verification of New Physical Phenomena

5.1. Quantum Entanglement Oscillation Phenomenon

- (1) Experimental platform: The IBM Heron quantum processor (53 qubits), which has high-fidelity quantum gates and long coherence times.

- (2) Experimental steps:

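  - (a) GHZ state preparation: Prepare an $N$-qubit GHZ state, $|\mathrm{GHZ}\rangle = \frac{1}{\sqrt{2}}\left(|0\rangle^{\otimes N} + |1\rangle^{\otimes N}\right)$. The GHZ state is maximally entangled and extremely sensitive to topological changes.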

  - (b) Time evolution: Let the system evolve under controllable coupling, with inter-qubit coupling strength set to MHz, phase delay ns ().

  - (c) Entropy measurement: Use quantum state tomography to measure the entanglement entropy, with the sampling frequency set to 50 MHz (the sampling theorem requires a rate above twice the oscillation frequency).

- (3) Expected signal: If κ-theory is correct, an entanglement entropy oscillation with frequency 120 MHz should be observed. Traditional quantum theory predicts that entanglement entropy decays monotonically without oscillation, so this signal is a unique prediction of κ-theory.

- (4) Data analysis: Perform a Fourier transform on the measured entropy-time data to extract the main frequency components. A significant 120 MHz component would support the theoretical prediction.
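The Fourier-analysis step can be sketched as follows. This is an illustration on synthetic data, not the experiment's pipeline: the trace is a constant offset plus a pure 120 MHz cosine with placeholder amplitude, the 480 MHz sampling rate is a hypothetical choice, and the function names are assumptions. For reference, the sampling theorem requires a rate above 240 MHz to resolve a 120 MHz component directly; `alias_frequency` shows where the component would appear at a lower rate.

```python
import cmath
import math

def alias_frequency(f_signal, f_sample):
    """Apparent frequency of a tone at f_signal when sampled at f_sample."""
    folded = f_signal % f_sample
    return min(folded, f_sample - folded)

def dominant_frequency(samples, f_sample):
    """Frequency of the strongest nonzero DFT bin (direct O(n^2) transform)."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    best_bin, best_power = 0, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * f_sample / n

# Synthetic entropy trace: offset plus a 120 MHz oscillation (placeholder amplitudes).
f_osc = 120e6
f_sample = 480e6  # hypothetical rate above the 240 MHz Nyquist requirement
trace = [1.0 + 0.1 * math.cos(2.0 * math.pi * f_osc * i / f_sample)
         for i in range(240)]
peak_frequency = dominant_frequency(trace, f_sample)
aliased = alias_frequency(f_osc, 50e6)  # where 120 MHz lands at 50 MHz sampling
print(peak_frequency, aliased)
```

Measured entropy data would also contain a slow decay envelope, so subtracting a fitted trend before the transform would likely be needed to keep low-frequency leakage from masking the peak.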

5.2. Light Speed Medium Modification Phenomenon

- (1) Experimental platform: A Michelson interferometer combined with a strontium titanate (SrTiO3) crystal. Strontium titanate has a high refractive index () at low temperatures, amplifying the modification effect.

- (2) Experimental parameters:

  - Wavelength: nm (ultraviolet band), chosen to enhance the factor

  - Crystal thickness: mm

  - Temperature: 77 K (liquid nitrogen temperature); the low temperature reduces thermal noise and material absorption

- (3) Measurement principle: The Michelson interferometer splits a light beam into two paths, one passing through the test crystal and the other through a reference path, then recombines them to produce interference fringes. Changes in refractive index cause optical path differences, leading to interference fringe shifts.

- (4) Experimental steps:

  - (a) Calibrate the interferometer to ensure initial zero optical path difference;

  - (b) Insert the SrTiO3 crystal into the measurement arm;

  - (c) Cool the system to 77 K;

  - (d) Measure the position of interference fringes;

  - (e) Change wavelength and repeat measurements;

  - (f) Calculate refractive index change from fringe shifts.

- (5) Expected signal: The theory predicts (when ), corresponding to a fringe shift of about 0.3 fringe spacing, much larger than the interferometer’s resolution (typically up to 0.01 fringe spacing).

- (6) Noise control:

  - Thermal noise: Controlled through low-temperature environment and temperature stabilization system;

  - Mechanical vibration: Using optical vibration isolation platform;

  - Light source fluctuation: Using stabilized laser and reference interferometer for monitoring.
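Step (f), converting a fringe shift into a refractive index change, can be sketched with the standard Michelson relation. Light crosses the crystal twice, so a change Δn over thickness d shifts the pattern by 2·d·Δn/λ fringe spacings. The wavelength and thickness below are placeholders because the specific values are not available in this section, and the function names are hypothetical. The 0.3 and 0.01 fringe-spacing figures are the ones quoted above.

```python
def fringe_shift(delta_n, thickness_m, wavelength_m):
    """Fringe shift (in fringe spacings) for a double pass through the crystal."""
    return 2.0 * thickness_m * delta_n / wavelength_m

def index_change(shift_fringes, thickness_m, wavelength_m):
    """Invert the relation: refractive index change from a measured fringe shift."""
    return shift_fringes * wavelength_m / (2.0 * thickness_m)

thickness_m = 1.0e-3    # placeholder crystal thickness
wavelength_m = 300e-9   # placeholder ultraviolet wavelength

expected_shift = 0.3    # fringe spacings, as quoted for the expected signal
resolution = 0.01       # fringe spacings, quoted interferometer resolution

delta_n_signal = index_change(expected_shift, thickness_m, wavelength_m)
delta_n_floor = index_change(resolution, thickness_m, wavelength_m)
signal_to_resolution = expected_shift / resolution
print(delta_n_signal, delta_n_floor, signal_to_resolution)
```

Repeating the calculation at each wavelength used in step (e) would give the dispersion of the inferred index change, which helps separate a genuine effect from a wavelength-independent path-length drift.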

6. Cosmological Applications

6.1. Complete Resolution of the Black Hole Information Paradox

6.2. Quantum Information Explanation of Dark Energy

6.3. Regularization of Cosmological Singularity

7. Unified Applications: From Verification to Technological Transformation

7.1. Technological Transformation Path

| Phenomenon | Technological application | κ-dependent advantage |
|---|---|---|
| Entanglement oscillation | Topological quantum memory | Storage time |
| Light speed modification | κ-modulated superlens | Resolution beyond diffraction limit |
| Spacetime fluctuation constraints | Quantum gravity sensors | Sensitivity |

7.2. Risk Control Quantification

- Quantum decoherence: Dynamical decoupling sequences (XY-4) increase the decoherence time by about 3 times (s [4])

- Optical errors: Multi-wavelength calibration and low-temperature environment control, error bar (expected )

- Experimental systematic errors: Triple-experiment cross-validation, systematic error

- Theoretical uncertainty: Higher-order correction terms of κ introduce a systematic error of

8. Conclusion and Outlook

Appendix A: Mathematical Details of JCM-Woodin Cardinal Connection

Appendix B: Chern-Simons Action and Mass Generation Mechanism

References

1. Lin, Y. Jiuzhang Constructive Mathematics: A Finitary Framework for Computable Approximation in Mathematical Practice with Physical Applications. Preprints 2025, 2025081687.

2. Lin, Y. Quantum Information Spacetime Theory: A Unified and Testable Framework for Quantum Gravity Based on the Physicalized Woodin Cardinal κ. Preprint, 20 August 2025.

3. Woodin, W. H. The Continuum Hypothesis, Part II. Notre Dame Journal of Formal Logic 1999, 40, 301–309.

4. IBM Quantum Team. Heron Quantum Processor Technical Report; IBM Research, 2023.

5. BICEP/Keck Collaboration. Primordial Gravitational Waves from the BICEP2 Experiment. Phys. Rev. Lett. 2023, 131, 131301.

6. Maldacena, J. The Large N Limit of Superconformal Field Theories and Supergravity. Adv. Theor. Math. Phys. 1998, 2, 231–252.

7. Kitaev, A. Y. Fault-Tolerant Quantum Computation by Anyons. Annals of Physics 2003, 303, 2–30.

8. Weinberg, S. The Quantum Theory of Fields: Vol. II, Modern Applications; Cambridge University Press, 1995.

9. Witten, E. Anti-de Sitter Space and Holography. Adv. Theor. Math. Phys. 1998, 2, 253–291.

10. Hawking, S. W. Black Holes and Thermodynamics. Phys. Rev. D 1976, 13, 191–197.

11. Bekenstein, J. D. Black Holes and Entropy. Phys. Rev. D 1973, 7, 2333–2346.

12. Einstein, A. The Field Equations of Gravitation. Preuss. Akad. Wiss. Berlin 1915, 844–849.

13. Preskill, J. Quantum Computing and the Entanglement Frontier. arXiv 2012, arXiv:1203.5813.

14. Verlinde, E. On the Origin of Gravity and the Laws of Newton. JHEP 2011, 2011, 29.

15. Page, D. N. Information in Black Hole Radiation. Phys. Rev. Lett. 1993, 71, 3743–3746.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).