Submitted:

20 October 2025

Posted:

21 October 2025

Abstract

Keywords:

Introduction

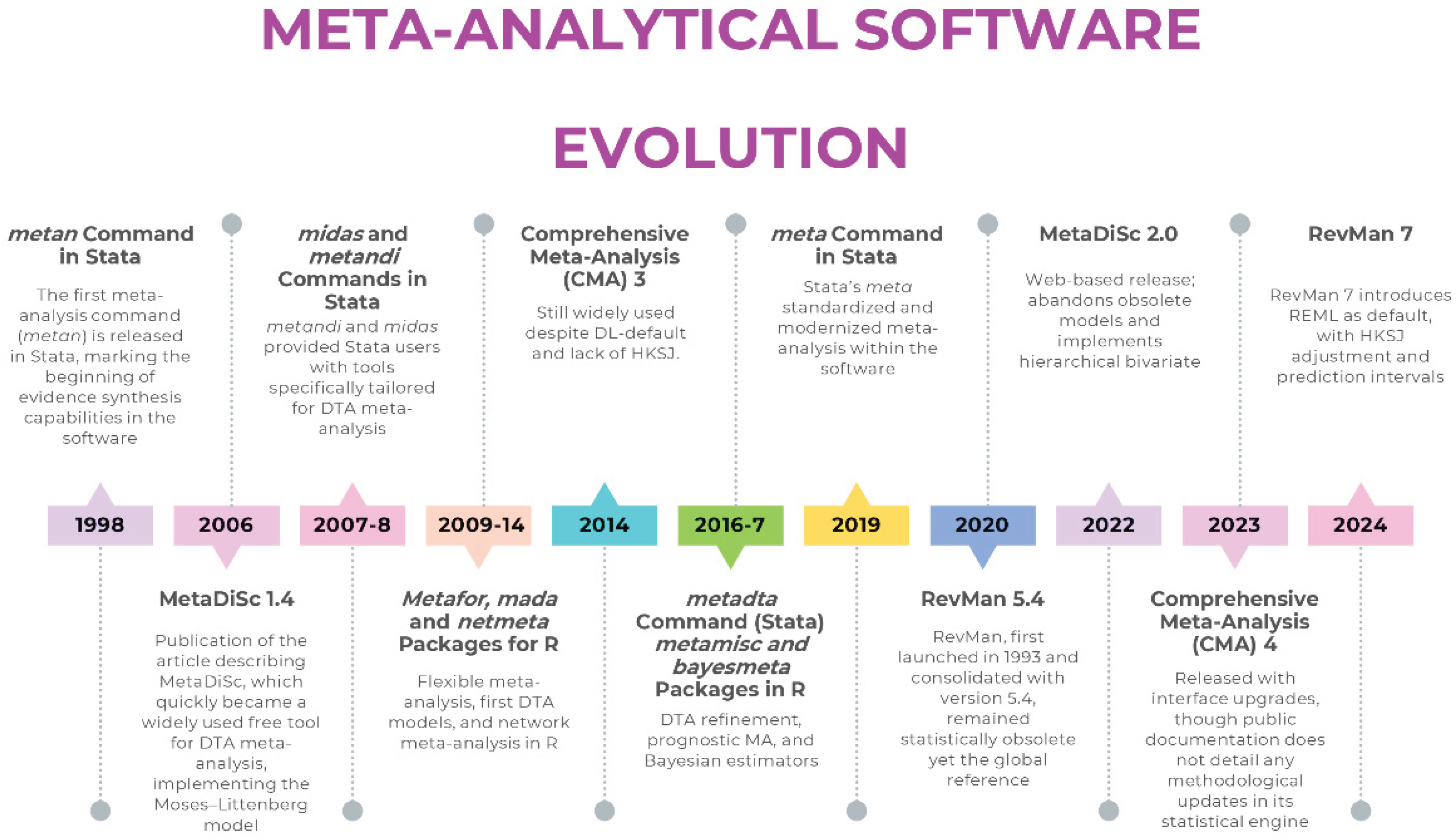

How Software Determines the Model: From Legacy Defaults to Modern Standards

Review Manager (RevMan): A Tale of Two Versions

- For intervention reviews, RevMan 5.4 [20] fits random-effects models with the DL estimator only. This is a built-in constraint of the software rather than a setting the user can change. The dominance of the DL estimator did not arise arbitrarily: its computational simplicity and early endorsement facilitated widespread adoption, and with many studies and low heterogeneity its performance is often comparable to that of more advanced estimators. However, simulation studies show that when the number of studies is small or heterogeneity is substantial, DL tends to underestimate τ², which yields confidence intervals narrower than intended. This behaviour is not an implementation flaw but a statistical property of the estimator, and it is the reason likelihood-based alternatives such as REML are preferred when modelling heterogeneity is clinically relevant. RevMan 5.4 offers neither REML nor PM, nor the HKSJ adjustment that corrects the well-documented deficiencies of Wald-type intervals. Prediction intervals, now considered essential for interpreting clinical heterogeneity, are also not provided. Even the graphical output is problematic: forest plots often carry the label “M-H, Random,” which reflects the use of Mantel–Haenszel weights in the heterogeneity statistic prior to DL estimation but is frequently misread as a literal hybrid model. Because the Mantel–Haenszel (MH) method is by definition a fixed-effect approach, while the pooled estimate comes from the DL random-effects estimator, the label is a significant source of confusion for users.

- In diagnostic test accuracy (DTA) reviews, RevMan does not internally estimate bivariate or HSROC models; instead, it treats sensitivity and specificity as separate outcomes and fits a Moses–Littenberg SROC by default. The interface can display parameters from a hierarchical model if they are supplied externally, but the software itself does not perform the hierarchical estimation. In RevMan, therefore, the modelling framework is determined by what the software can do rather than by an explicit choice of the user. The Moses–Littenberg approach systematically overstates accuracy compared with hierarchical models [18], because it assumes a symmetric SROC and treats the regression slope as a threshold effect.

- Robust Default Estimator: The default estimator for τ² is now REML, with DL remaining as a user-selectable option.

- HKSJ Confidence Intervals: The HKSJ adjustment is now available for calculating CIs for the summary effect, providing better coverage properties than traditional Wald-type intervals.

- Prediction Intervals: The software now calculates and displays prediction intervals on forest plots, enhancing the interpretation of heterogeneity by showing the expected range of effects in future studies.
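The difference between a DL analysis with Wald-type intervals and one that adds the HKSJ adjustment and a prediction interval can be illustrated with a minimal, dependency-free sketch. It is not the code of RevMan or of any other package: the function name, the small lookup table of t critical values, and the data are hypothetical, and the prediction interval uses the commonly cited form based on a t distribution with k − 2 degrees of freedom, which is an assumption here. REML and PM are not implemented in this sketch.

```python
import math
from statistics import NormalDist

# Two-sided 95% critical values of Student's t for small degrees of freedom
# (standard table values; a lookup keeps this sketch dependency-free).
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def dl_random_effects(y, v):
    """DL random-effects summary with Wald, HKSJ and prediction intervals.

    y: study effect estimates (e.g. log odds ratios)
    v: their within-study variances
    """
    k = len(y)
    if not 3 <= k <= 11:
        raise ValueError("this sketch supports 3 to 11 studies")

    # Fixed-effect (inverse-variance) weights and Cochran's Q
    w = [1.0 / vi for vi in v]
    sw = sum(w)
    mu_fe = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - mu_fe) ** 2 for wi, yi in zip(w, y))

    # DL moment estimator of tau^2, truncated at zero
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)

    # Random-effects weights, pooled estimate, and Wald standard error
    ws = [1.0 / (vi + tau2) for vi in v]
    sws = sum(ws)
    mu = sum(wi * yi for wi, yi in zip(ws, y)) / sws
    se_wald = math.sqrt(1.0 / sws)

    # HKSJ: scale the variance by the weighted residual mean square
    # and use a t distribution with k - 1 degrees of freedom
    scale = sum(wi * (yi - mu) ** 2 for wi, yi in zip(ws, y)) / (k - 1)
    se_hksj = math.sqrt(scale / sws)

    # Prediction interval: t with k - 2 df on sqrt(tau^2 + SE^2)
    se_pred = math.sqrt(tau2 + se_wald ** 2)

    z = NormalDist().inv_cdf(0.975)
    t_ci, t_pi = T_975[k - 1], T_975[k - 2]
    return {
        "tau2": tau2,
        "mu": mu,
        "wald_ci": (mu - z * se_wald, mu + z * se_wald),
        "hksj_ci": (mu - t_ci * se_hksj, mu + t_ci * se_hksj),
        "pred_int": (mu - t_pi * se_pred, mu + t_pi * se_pred),
    }


# Hypothetical log odds ratios and variances from five small trials
effects = [-0.60, -0.10, 0.25, -0.85, 0.05]
variances = [0.09, 0.04, 0.12, 0.16, 0.06]
for name, value in dl_random_effects(effects, variances).items():
    print(name, value)
```

With few studies, the t-based critical value alone makes the HKSJ interval noticeably wider than the Wald interval in many data sets, and the prediction interval is wider still because it adds τ² to the variance of the pooled estimate.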

MetaDiSc 1.4 vs 2.0: Modelling Frameworks Compared

- Bivariate Hierarchical Model: MetaDiSc 2.0 uses a hierarchical random-effects model as its core engine, modelling sensitivity and specificity as a correlated pair, which more accurately reflects how test performance varies across study populations and settings.

- Confidence and Prediction Regions: The software generates both a 95% confidence region for the summary point (quantifying uncertainty in the mean estimate) and a 95% prediction region (illustrating the expected range of true accuracy in a future study).
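For orientation, the bivariate hierarchical model referred to above is usually written in the following general form. This is a sketch of the standard binomial-likelihood formulation, not the exact parameterization used internally by MetaDiSc 2.0, which the text does not specify. The notation is an assumption: $y^{+}_{i}$ is the number of true positives among $n^{+}_{i}$ diseased participants in study $i$, and $y^{-}_{i}$ is the number of true negatives among $n^{-}_{i}$ non-diseased participants.

```latex
\begin{aligned}
y^{+}_{i} &\sim \mathrm{Binomial}\!\left(n^{+}_{i},\, Se_i\right), \qquad
y^{-}_{i} \sim \mathrm{Binomial}\!\left(n^{-}_{i},\, Sp_i\right), \\[4pt]
\begin{pmatrix} \operatorname{logit}(Se_i) \\ \operatorname{logit}(Sp_i) \end{pmatrix}
&\sim \mathcal{N}\!\left(
\begin{pmatrix} \mu_{Se} \\ \mu_{Sp} \end{pmatrix},\;
\begin{pmatrix}
\tau^{2}_{Se} & \rho\,\tau_{Se}\tau_{Sp} \\
\rho\,\tau_{Se}\tau_{Sp} & \tau^{2}_{Sp}
\end{pmatrix}
\right)
\end{aligned}
```

The summary point is the back-transformed pair $(\mu_{Se}, \mu_{Sp})$. The confidence region reflects the uncertainty of these two means, whereas the prediction region additionally incorporates $\tau^{2}_{Se}$, $\tau^{2}_{Sp}$ and $\rho$.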

Comprehensive Meta-Analysis: Closed-Code Estimation and Default Constraints

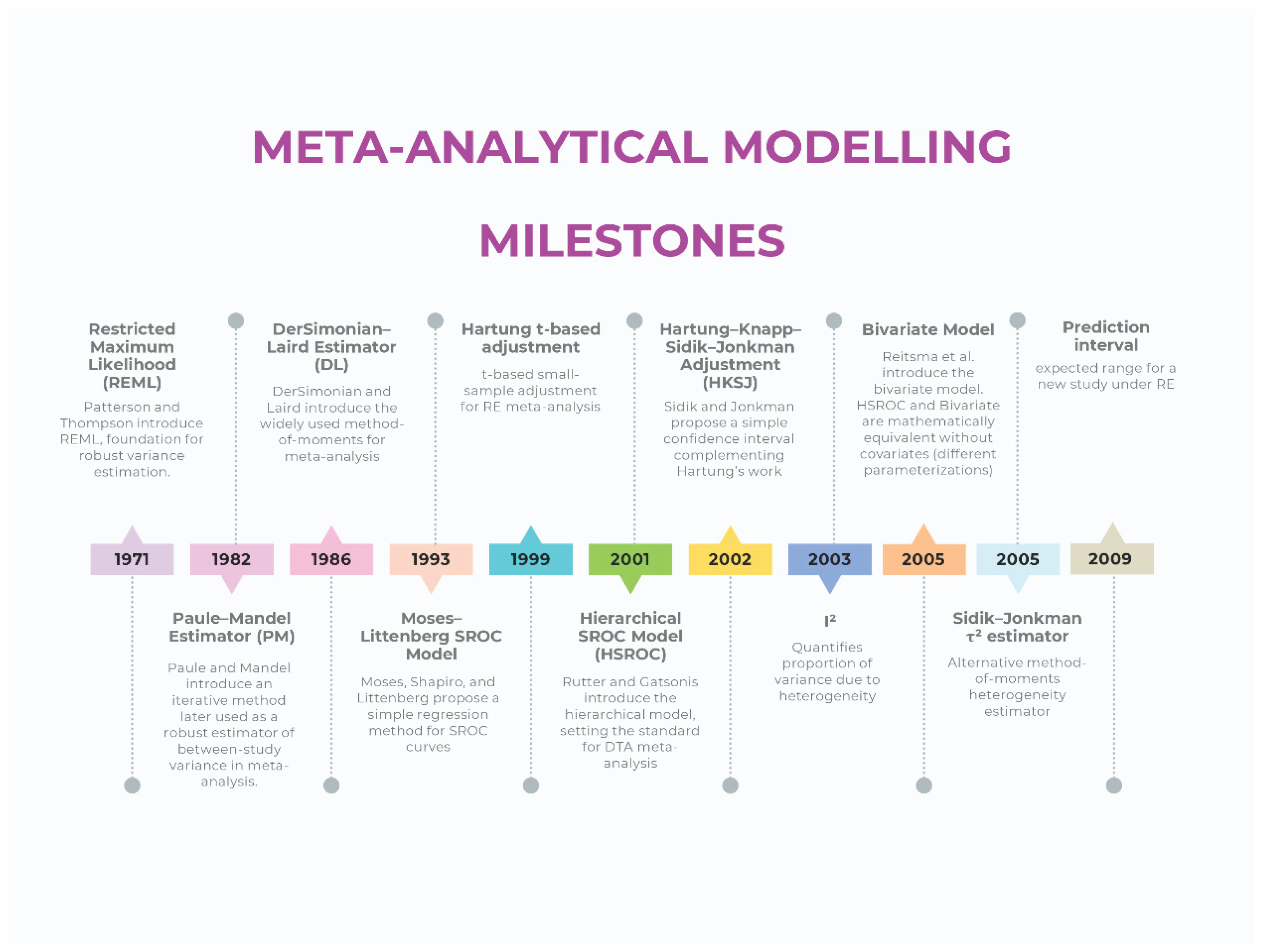

Current Methodological Standards For Meta-Analytic Modelling (Intervention Reviews)

Modeling (Estimator)

Confidence Intervals and Prediction Intervals

Heterogeneity

Current Methodological Standards For Meta-Analytic Modelling (Diagnostic Test Accuracy Reviews)

Modeling (Estimator)

Confidence Regions and Prediction Regions

Heterogeneity

How Software Choice Shapes Inference in Practice

Hidden Estimation Rules: Continuity Corrections and Automatic Exclusions

Implementation Guidance for Authors, Reviewers, and Editors

Conclusions

Original work

Informed consent

AI Use Disclosure

Ethical Statement

Data Availability Statement

Conflict of interest

References

- Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.5 (updated August 2024). Cochrane, 2024. Available from www.cochrane.org/handbook.

- Deeks JJ, Bossuyt PM, Leeflang MM, Takwoingi Y (editors). Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy. Version 2.0 (updated July 2023). Cochrane, 2023. Available from https://training.cochrane.org/handbook-diagnostic-test-accuracy/current.

- DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986 Sep;7(3):177-88.

- Viechtbauer W. Bias and efficiency of meta-analytic variance estimators in the random-effects model. J Educ Behav Stat. 2005;30(3):261-293.

- Veroniki AA, Jackson D, Viechtbauer W, Bender R, Bowden J, Knapp G, et al. Methods to estimate the between-study variance and its uncertainty in meta-analysis. Res Synth Methods. 2016;7(1):55-79.

- Paule RC, Mandel J. Consensus values and weighting factors. J Res Nat Bur Stand. 1982;87(5):377-385.

- van Aert RCM, Jackson D. Multistep estimators of the between-study variance: The relationship with the Paule-Mandel estimator. Stat Med. 2018 Jul 30;37(17):2616-2629.

- Hartung J. An alternative method for meta-analysis. Biom J. 1999;41(8):901-916.

- Hartung J, Knapp G. On tests of the overall treatment effect in meta-analysis with normally distributed responses. Stat Med. 2001;20(12):1771-1782.

- Hartung J, Knapp G. A refined method for the meta-analysis of controlled clinical trials with binary outcome. Stat Med. 2001;20(24):3875-3889.

- Sidik K, Jonkman JN. A simple confidence interval for meta-analysis. Stat Med. 2002;21(21):3153-3159.

- IntHout J, Ioannidis JP, Borm GF. The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Med Res Methodol. 2014;14:25.

- Röver C, Knapp G, Friede T. Hartung-Knapp-Sidik-Jonkman approach and its modification for random-effects meta-analysis with few studies. BMC Med Res Methodol. 2015;15:99.

- Langan D, Higgins JPT, Jackson D, Bowden J, Veroniki AA, Kontopantelis E, Viechtbauer W, Simmonds M. A comparison of heterogeneity variance estimators in simulated random-effects meta-analyses. Res Synth Methods. 2019 Mar;10(1):83-98.

- Moses LE, Shapiro D, Littenberg B. Combining independent studies of a diagnostic test into a summary ROC curve: data-analytic approaches and some additional considerations. Stat Med. 1993;12:1293-316.

- Reitsma JB, Glas AS, Rutjes AWS, Scholten RJPM, Bossuyt PM, Zwinderman AH. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J Clin Epidemiol. 2005;58(10):982-90.

- Rutter CM, Gatsonis CA. A hierarchical regression approach to meta-analysis of diagnostic test accuracy evaluations. Stat Med. 2001 Oct 15;20(19):2865-84.

- The Moses-Littenberg meta-analytical method generates systematic differences in test accuracy compared to hierarchical meta-analytical models. J Clin Epidemiol. 2016 Dec;80:77-87.

- Wang J, Leeflang M. Recommended software/packages for meta-analysis of diagnostic accuracy. J Lab Precis Med. 2019;4:22.

- Review Manager 5 (RevMan 5) [Computer program]. Version 5.4. Copenhagen: The Cochrane Collaboration, 2020.

- Review Manager (RevMan) [Computer program]. Version 7.2.0. The Cochrane Collaboration, 2024. Available at revman.cochrane.org.

- Zamora J, Abraira V, Muriel A, Khan K, Coomarasamy A. Meta-DiSc: a software for meta-analysis of test accuracy data. BMC Med Res Methodol. 2006 Jul 12;6:31.

- Plana MN, Arevalo-Rodriguez I, Fernández-García S, et al. Meta-DiSc 2.0: a web application for meta-analysis of diagnostic test accuracy data. BMC Med Res Methodol. 2022;22:306.

- Brüggemann P, Rajguru K. Comprehensive Meta-Analysis (CMA) 3.0: a software review. J Market Anal. 2022;10:425-429.

- Borenstein M. Chapter 27. Comprehensive meta-analysis software. In: Egger M, Higgins JPT, Davey Smith G, editors. Systematic Reviews in Health Research: Meta-analysis in Context. Hoboken, NJ: Wiley; 2022. pp. 535-548.

- Mheissen S, Khan H, Normando D, Vaiid N, Flores-Mir C. Do statistical heterogeneity methods impact the results of meta-analyses? A meta epidemiological study. PLoS One. 2024;19(3):e0298526.

- Comprehensive Meta-Analysis Version 4. Borenstein M, Hedges L, Higgins J, Rothstein H. Biostat, Inc.

- Mheissen S, Khan H, Normando D, Vaiid N, Flores-Mir C. Do statistical heterogeneity methods impact the results of meta-analyses? A meta epidemiological study. PLoS One. 2024 Mar 19;19(3):e0298526.

- Nyaga VN, Arbyn M. Metadta: a Stata command for meta-analysis and meta-regression of diagnostic test accuracy data – a tutorial. Arch Public Health. 2022;80:95.

- Harbord RM, Whiting P. metandi: Meta-analysis of diagnostic accuracy using hierarchical logistic regression. Stata J. 2009;9(2):211-229.

- Dwamena, BA. MIDAS: Stata module for meta-analytical integration of diagnostic test accuracy studies. Statistical Software Components S456880, Boston College Department of Economics, revised 13 Dec 2009.

- Doebler P, Holling H. Meta-analysis of diagnostic accuracy with mada. Available online: https://cran.r-project.org/web/packages/mada/vignettes/mada.

- Zhou Y, Dendukuri N. Statistics for quantifying heterogeneity in univariate and bivariate meta-analyses of binary data: the case of meta-analyses of diagnostic accuracy. Stat Med. 2014 Jul 20;33(16):2701-17.

- Hernán MA, Robins JM. Causal Inference: What If. Boca Raton: Chapman & Hall/CRC; 2020.

- Weber F, Knapp G, Ickstadt K, Kundt G, Glass Ä. Zero-cell corrections in random-effects meta-analyses. Res Synth Methods. 2020 Nov;11(6):913-919.

- Wei JJ, Lin EX, Shi JD, et al. Meta-analysis with zero-event studies: a comparative study with application to COVID-19 data. Military Med Res. 2021;8:41.

- Veroniki AA, McKenzie JE. Introduction to new random-effects methods in RevMan. Cochrane Methods Group; 2024. Available at: https://training.cochrane.

| Software | Domain focus | Main strengths (historical) | Major limitations | Status / maintenance | Adequacy |
|---|---|---|---|---|---|
| RevMan 5.4 | Interventions, DTA | Free, official Cochrane tool, intuitive interface | DL only, WT CIs, no PIs, no internally estimated hierarchical DTA models, no advanced regression or Bayesian/network options | Replaced by RevMan 7 |  |
| RevMan 7 (Web) | Interventions, DTA | Successor to RevMan 5.4, updated interface, integration with Cochrane systems | DTA functionality limited to display of external parameters; still limited compared to R/Stata in advanced modeling (e.g., lack of user-adjustable meta-regression or network meta-analysis) | Actively maintained |  |
| Meta-DiSc 1.4 | DTA | First free tool for diagnostic meta-analysis, simple interface | Separate Se/Sp, Moses–Littenberg only, no hierarchical modeling, no CIs/PIs | Still widely used because of historical citation patterns |  |
| Meta-DiSc 2 | DTA | Modernized interface, implementation of hierarchical models | Lacks the advanced flexibility of script-based platforms (e.g., handling multiple covariates, non-linear models, or advanced influence diagnostics); limited reproducibility compared to code-based solutions | Released but limited adoption; partially solves 1.4 problems |  |
| CMA 3 | Interventions | Affordable, easy GUI, widely adopted in early 2000s | Closed-code environment; estimation methods not directly inspectable, default DL, no HKSJ, limited estimators, no transparency or reproducibility | Commercial; not updated to current methods |  |
| CMA 4 | Interventions | Affordable, easy GUI, implementation of PIs | Core model settings (e.g., estimator, CI method) remain undisclosed and presumably unchanged; problems of transparency and reproducibility persist | Commercial; not clarified if updated to current methods |  |
| R (metafor, meta, mada) | Interventions, DTA, advanced | Full implementation of robust estimators, transparency, reproducibility, continuous updates | Statistical literacy and coding skills needed | Actively maintained |  |
| Stata (meta, metadta, midas, metandi) | Interventions, DTA | Robust, validated commands, widely used in applied research | Commercial license required, statistical literacy needed | Actively maintained |  |

Adequacy categories: Robust and up to date (implements current recommended models and estimators); Restricted capabilities despite modern modelling (offers partial or limited implementation of current methods); Outdated/problematic (relies on obsolete estimators or defaults). DTA: Diagnostic Test Accuracy; GUI: Graphical User Interface; CMA: Comprehensive Meta-Analysis; HKSJ: Hartung–Knapp–Sidik–Jonkman; CI: Confidence Intervals; PI: Prediction Intervals; WT: Wald-Type; DL: DerSimonian–Laird; Se: Sensitivity; Sp: Specificity.

| Suboptimal modeling practice | Software | Why it is problematic | Solution / Recommended alternative |
|---|---|---|---|
| Random-effects with DL | RevMan 5.4, CMA | Underestimates τ² and produces narrower CIs when heterogeneity is present | Use REML or PM estimators (RevMan 7, R or Stata) |
| WT CIs with k > 2 and τ² > 0 | RevMan 5.4, CMA | Lower coverage when between-study variance is non-zero | Apply HKSJ or present both WT and HKSJ (RevMan 7, R or Stata) |
| No PI | RevMan 5.4, CMA | Does not describe dispersion of effects in new settings | Implement prediction intervals (RevMan 7, R or Stata) |
| Separate modeling of Se & Sp | MetaDiSc 1.4 | Does not account for correlation between Se & Sp | Use hierarchical models (Rutter & Gatsonis, Reitsma) (R or Stata) |
| Moses–Littenberg SROC | MetaDiSc 1.4 | Does not incorporate between-study variability | Use hierarchical models (Rutter & Gatsonis, Reitsma) (R or Stata) |
| No meta-regression with robust estimators | RevMan 5.4, MetaDiSc 1.4, CMA | Restricts ability to explore sources of heterogeneity | Use meta-regression (R or Stata) |
| Closed-code implementation | CMA | Internal algorithms not inspectable | Use script-based software (R or Stata) |
| Lack of advanced models (Bayesian, network, hierarchical) | All three | Cannot handle complexity of modern evidence synthesis | Use R (netmeta, bayesmeta) or Bayesian frameworks (JAGS, Stan) |
| Undeclared continuity correction (mHA) | RevMan 5.4, CMA (NS) | Imposes automatic corrections without disclosure | Use models handling zero cells directly (e.g., beta-binomial or Peto for rare events; in DTA, use bivariate/HSROC); alternatively, declare correction explicitly |
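To make the Moses–Littenberg row of the table concrete, the following minimal sketch fits the SROC regression D = a + b·S by unweighted least squares, where D is the log diagnostic odds ratio and S is the sum of the logits of the true and false positive rates. It is an illustration under stated assumptions, not the code of Meta-DiSc or RevMan: the function names and counts are hypothetical, and adding 0.5 to every cell is one common convention rather than a documented default of either program.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def moses_littenberg(tp, fn, fp, tn, cc=0.5):
    """Unweighted least-squares fit of D = a + b*S across studies.

    cc is added to every cell of every study (0.5 is a common convention
    and an assumption of this sketch); pass cc=0 only if no cell is zero.
    """
    d_vals, s_vals = [], []
    for a_, b_, c_, d_ in zip(tp, fn, fp, tn):
        tpr = (a_ + cc) / (a_ + b_ + 2 * cc)    # sensitivity
        fpr = (c_ + cc) / (c_ + d_ + 2 * cc)    # 1 - specificity
        d_vals.append(logit(tpr) - logit(fpr))  # D: log diagnostic odds ratio
        s_vals.append(logit(tpr) + logit(fpr))  # S: proxy for the threshold
    n = len(d_vals)
    s_bar = sum(s_vals) / n
    d_bar = sum(d_vals) / n
    sxx = sum((s - s_bar) ** 2 for s in s_vals)
    sxy = sum((s - s_bar) * (d - d_bar) for s, d in zip(s_vals, d_vals))
    slope = sxy / sxx
    intercept = d_bar - slope * s_bar
    return intercept, slope


def sroc_sensitivity(intercept, slope, fpr):
    """Sensitivity on the fitted SROC curve at a given false positive rate."""
    lt = (intercept + (1.0 + slope) * logit(fpr)) / (1.0 - slope)
    return 1.0 / (1.0 + math.exp(-lt))


# Hypothetical 2x2 counts from four diagnostic accuracy studies
tp = [45, 30, 88, 60]
fn = [5, 10, 12, 20]
fp = [8, 5, 20, 6]
tn = [92, 95, 80, 94]
a, b = moses_littenberg(tp, fn, fp, tn)
print("intercept:", a, "slope:", b)
print("sensitivity at FPR = 0.10:", sroc_sensitivity(a, b, 0.10))
```

Every study enters the regression with equal weight, regardless of sample size, and the model contains no random effect. This is the sense in which the approach does not incorporate between-study variability, and why it yields no valid confidence or prediction region for a summary point.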

- For new meta-analyses, use platforms that allow explicit control of the estimator and uncertainty method rather than legacy versions such as RevMan 5.4, MetaDiSc 1.4, or CMA.

- For intervention reviews: REML or PM estimators with HKSJ-adjusted CIs can improve uncertainty quantification; mHK may be useful when τ² is close to zero.

- For DTA reviews: hierarchical bivariate (Reitsma) or HSROC (Rutter & Gatsonis) models provide joint estimation of Se and Sp.

- Always report PIs in random-effects models; they enhance the interpretability of random-effects estimates.

- Favor transparent and reproducible solutions (R: metafor, meta, mada; Stata: meta, metadta, midas).

- Report heterogeneity using the appropriate metrics (intervention: Q, I², τ²; DTA: τ²Se, τ²Sp, ρ), and avoid univariate I² in DTA.

- Explore heterogeneity properly through meta-regression and subgroup analyses.

- When using methods that require continuity corrections for zero-cell studies (e.g., inverse-variance with ratio measures), report the specific correction applied (e.g., mHA, Carter) so that the modelling step is transparent. Statistical models that handle zero cells directly, such as those based on the binomial likelihood (e.g., bivariate/HSROC models in DTA), provide an alternative that does not require a continuity adjustment.
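Why the continuity correction should be declared can be seen from a minimal sketch of a single zero-cell study. The function name and the counts are hypothetical, and the constant 0.5 is the conventional choice, used here as an assumption; it is not necessarily the mHA or Carter variant named above.

```python
import math


def log_odds_ratio(a, b, c, d, cc=0.5):
    """Log odds ratio and its variance for a 2x2 table.

    a, b: events / non-events in the experimental arm
    c, d: events / non-events in the control arm
    cc is added to all four cells only when at least one cell is zero.
    """
    if 0 in (a, b, c, d):
        a, b, c, d = (x + cc for x in (a, b, c, d))
    estimate = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return estimate, variance


# Hypothetical trial with no events in the experimental arm
for cc in (0.5, 0.1, 0.01):
    est, var = log_odds_ratio(0, 50, 5, 45, cc=cc)
    print(f"cc={cc}: log OR = {est:.3f}, variance = {var:.3f}")
```

Both the estimate and its variance, and therefore the study's weight in the pooled analysis, change with the constant. A reader cannot reproduce the result unless the correction is reported.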

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).