Submitted: 31 July 2025

Posted: 31 July 2025


Abstract

Keywords:

1. Introduction

2. Literature Review

2.1. Overview of Academic Publishing’s Role in Scholarly Communication

2.2. Editorial Gatekeeping and Bias

2.3. Prestige-Driven Metrics and Research Assessment

2.4. Barrier and Equity Issues in Research Accessibility

3. Present Study

4. Method

4.1. Document Search and Collection

4.2. Search Strategy and Dataset Construction

4.3. Bibliometric Analysis

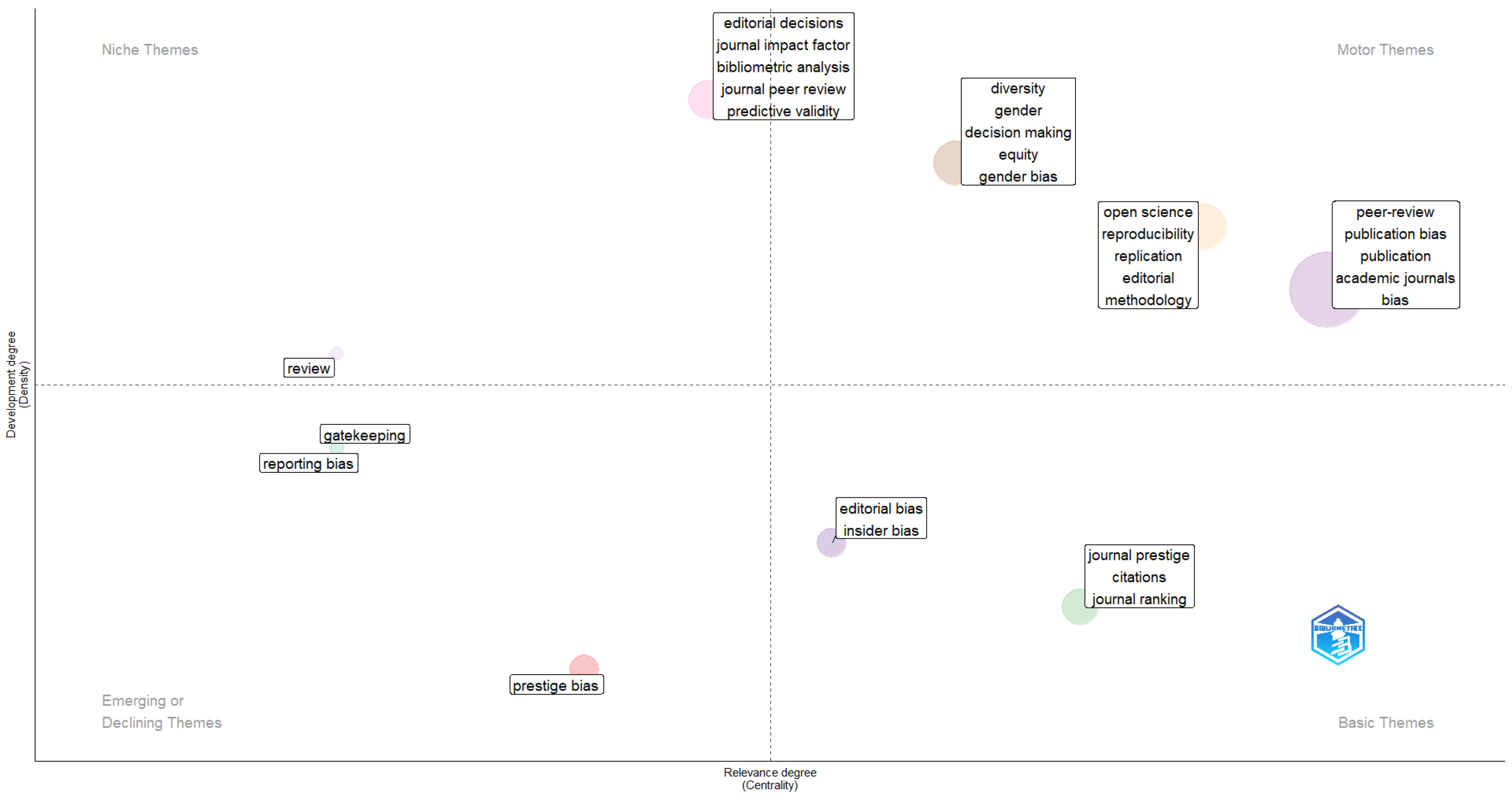

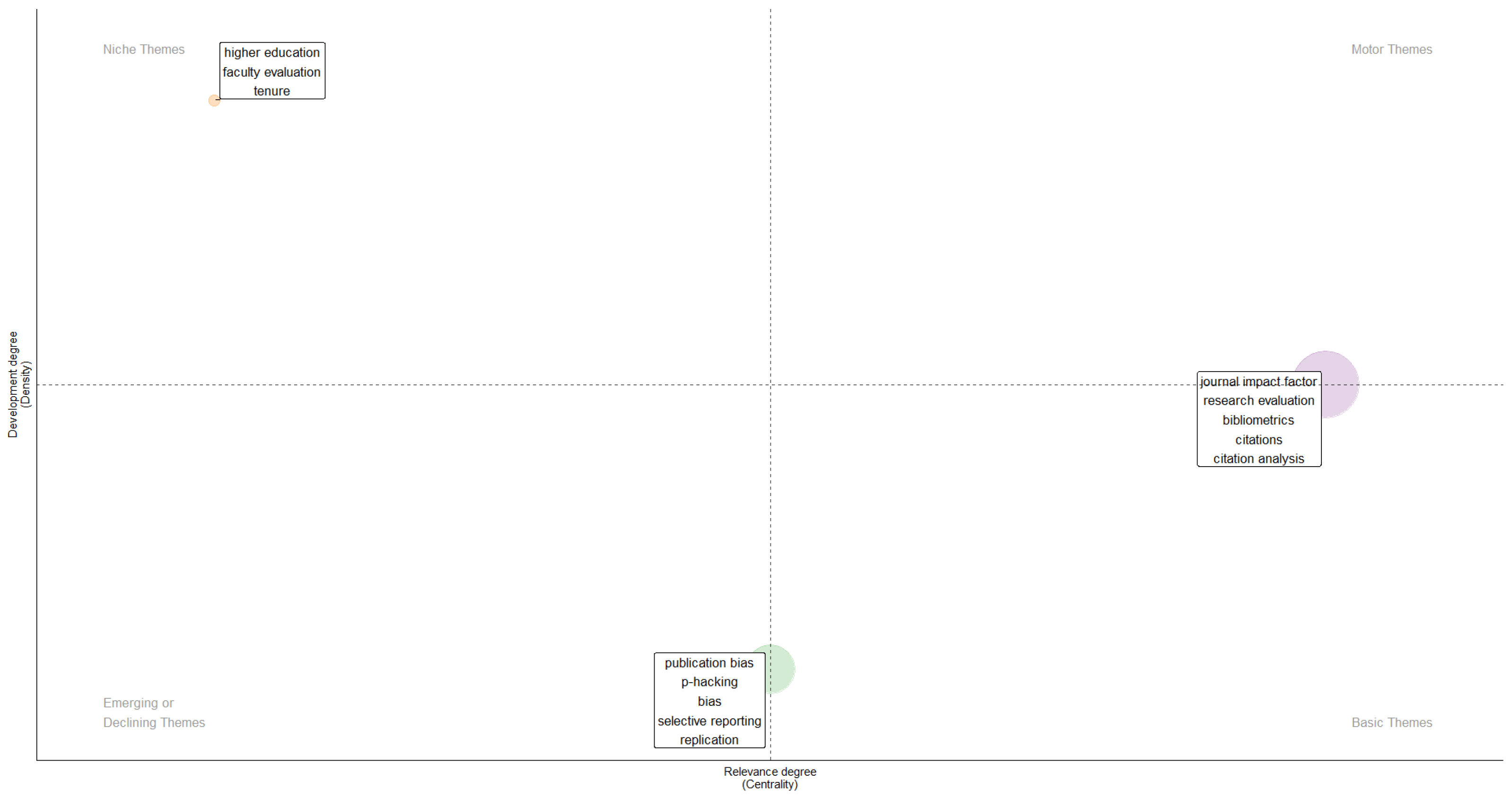

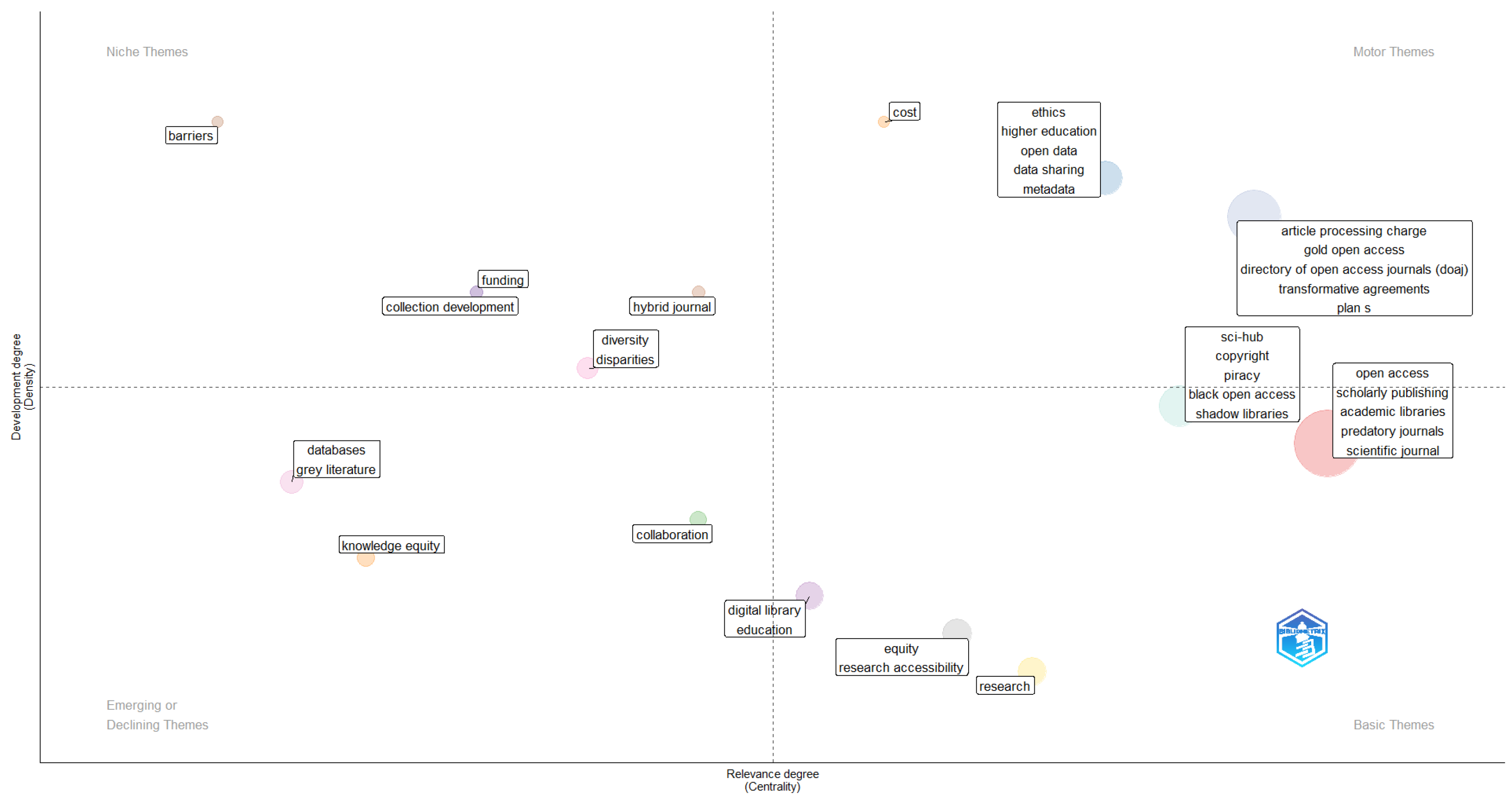

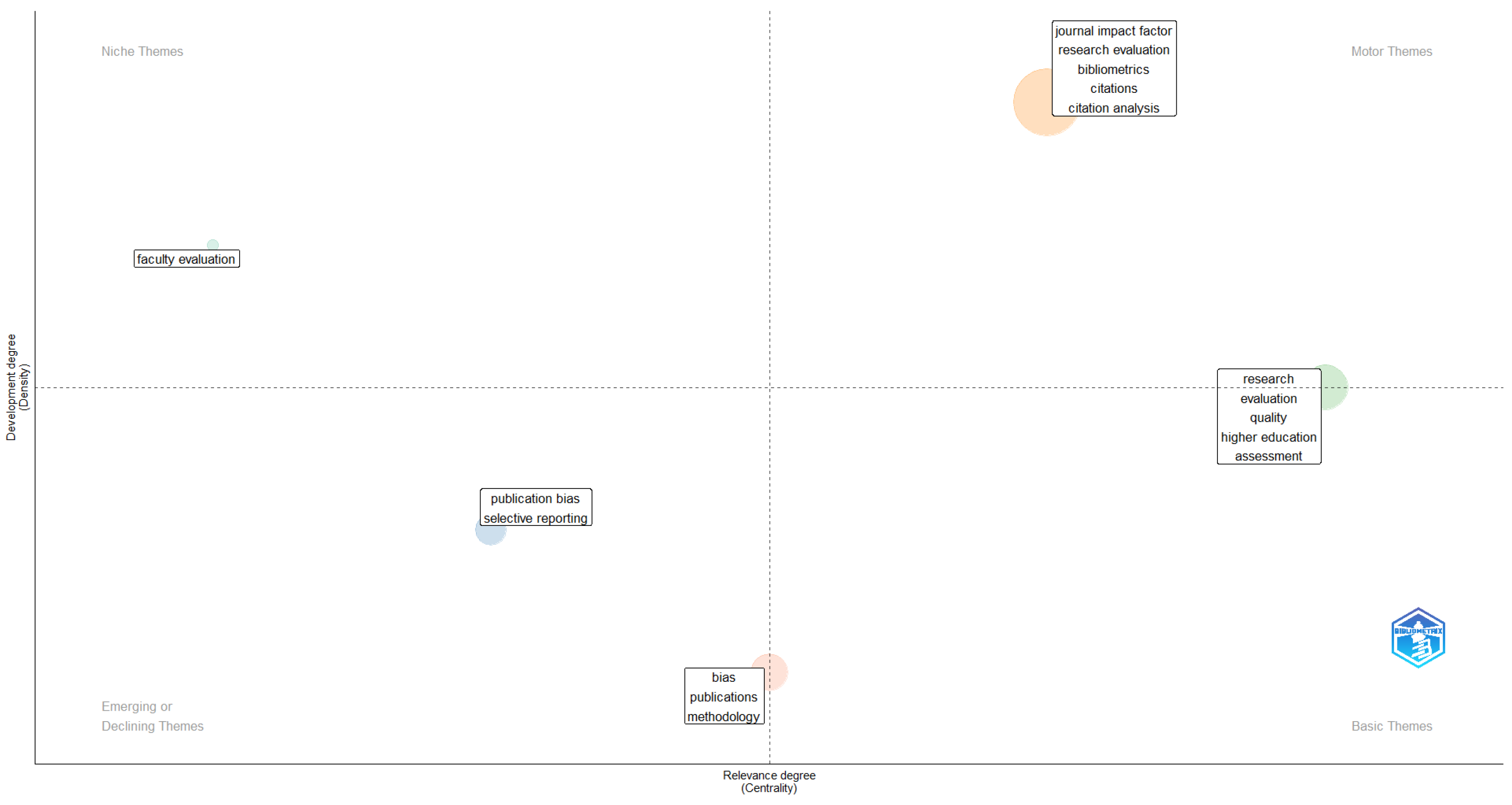

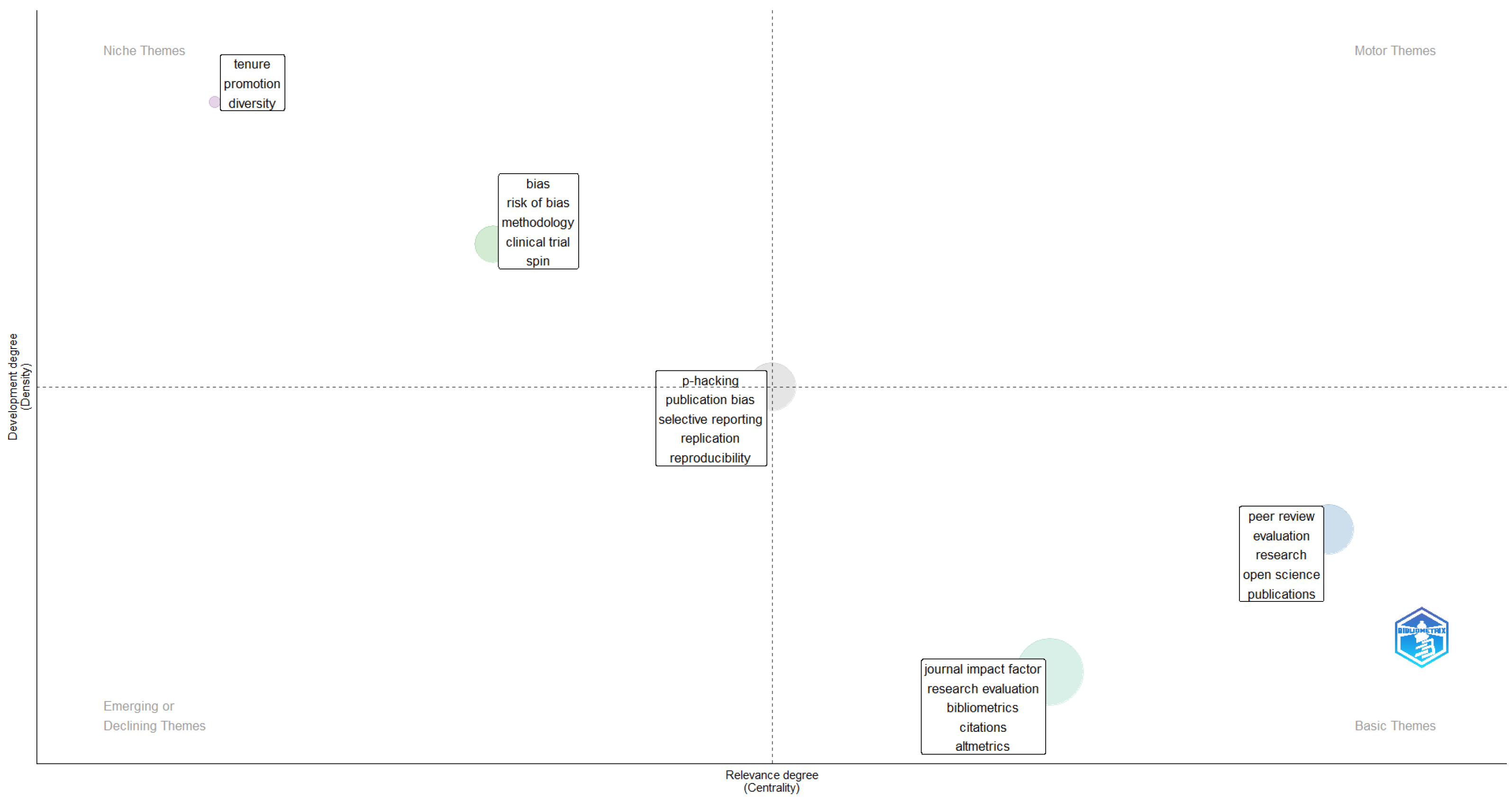

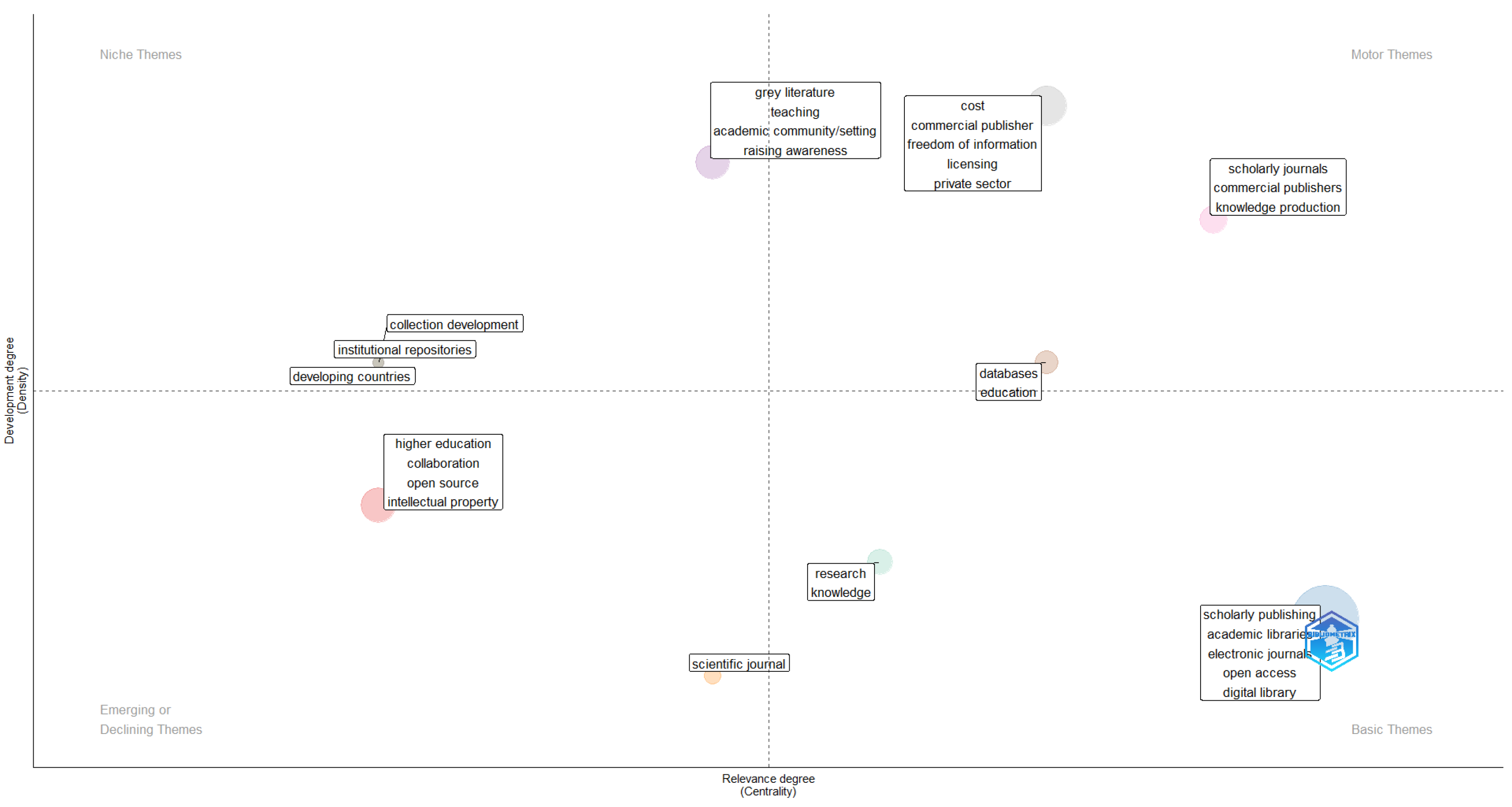

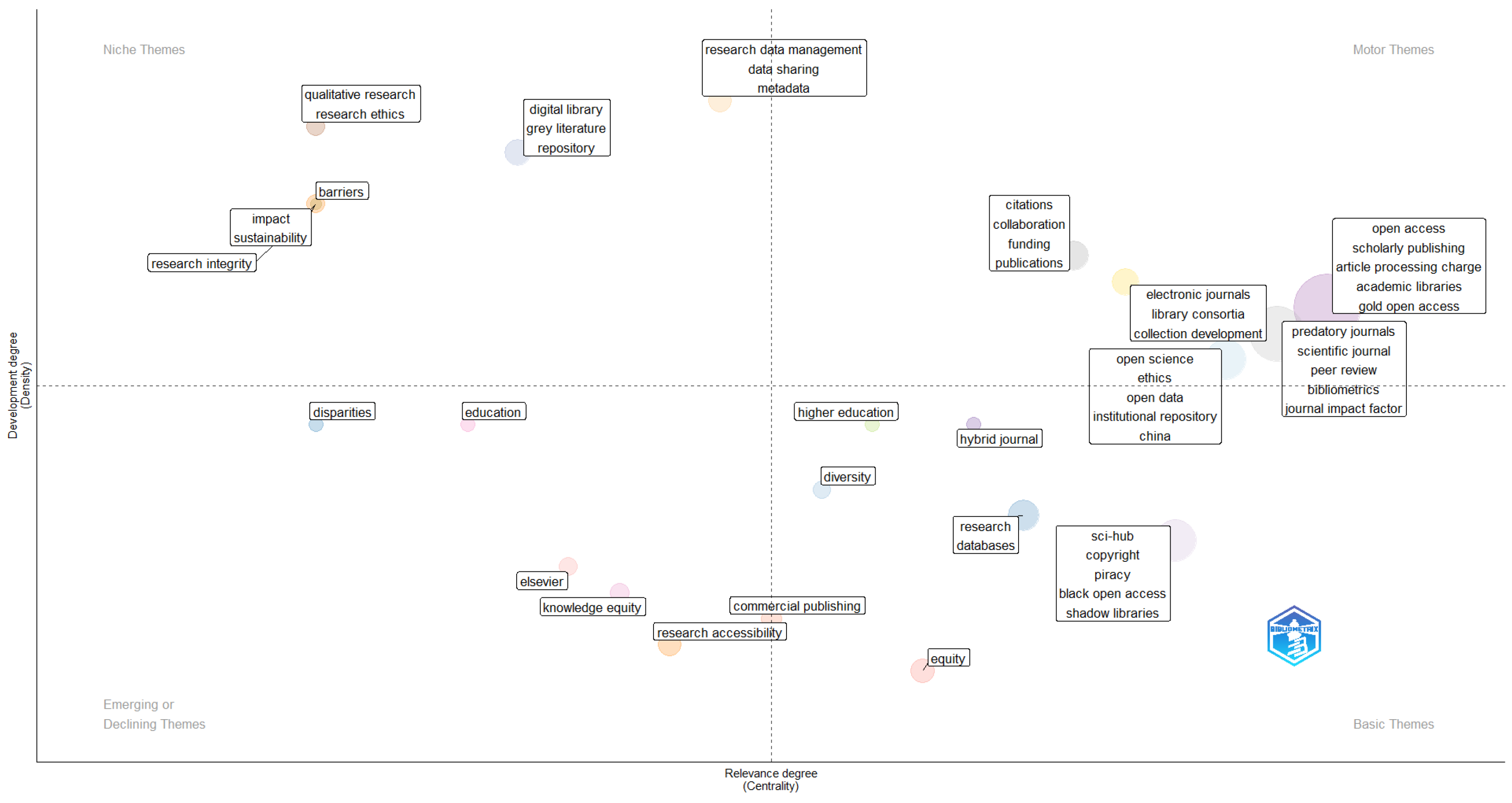

- Emerging or Declining Themes (low centrality, low density): This quadrant represents topics that are either underdeveloped or losing relevance, often characterized by weak integration with other themes and limited research activity.

- Niche Themes (low centrality, high density): This quadrant represents well-developed but isolated topics with strong internal coherence and limited external linkage.

- Basic Themes (high centrality, low density): This quadrant represents foundational topics with wide relevance but lower development, often serving as conceptual anchors for the field.

- Motor Themes (high centrality, high density): This quadrant represents topics that are both well developed and highly connected, indicating the forefront of the research domain.
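The four quadrants above amount to a two-way split on centrality and density. The following is a minimal sketch of that classification, not the procedure used in the study: the `Theme` class, the theme names, the scores, and the choice of the median as the cut-off are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Theme:
    name: str
    centrality: float  # strength of links to other themes
    density: float     # strength of links inside the theme


def quadrant(theme: Theme, centrality_cut: float, density_cut: float) -> str:
    """Return the thematic-map quadrant for one theme."""
    high_centrality = theme.centrality >= centrality_cut
    high_density = theme.density >= density_cut
    if high_centrality and high_density:
        return "Motor"
    if high_centrality:
        return "Basic"
    if high_density:
        return "Niche"
    return "Emerging or Declining"


# Hypothetical themes with made-up scores, for illustration only.
themes = [
    Theme("theme_a", centrality=0.9, density=0.8),
    Theme("theme_b", centrality=0.9, density=0.2),
    Theme("theme_c", centrality=0.1, density=0.8),
    Theme("theme_d", centrality=0.1, density=0.2),
]

# Assumption: the quadrant boundaries sit at the median of each axis.
centrality_cut = median([t.centrality for t in themes])
density_cut = median([t.density for t in themes])

labels = {t.name: quadrant(t, centrality_cut, density_cut) for t in themes}
for name, label in labels.items():
    print(f"{name}: {label}")
```

With these illustrative scores, each theme falls into a different quadrant, which makes the mapping from the two measures to the four labels easy to check by eye.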

5. Results

5.1. Performance Analysis

5.1.1. Gatekeeping and Editorial Bias

5.1.2. Prestige-Driven Metrics and Research Evaluation

5.1.3. Barrier and Equity Issues in Research Accessibility

5.1.4. Time-Sliced Analysis: Prestige-Driven Metrics and Research Evaluation Before and After DORA

5.1.5. Time-Sliced Analysis: Barrier and Equity Issues in Research Accessibility Before and After Sci-Hub

5.2. Conceptual Analysis

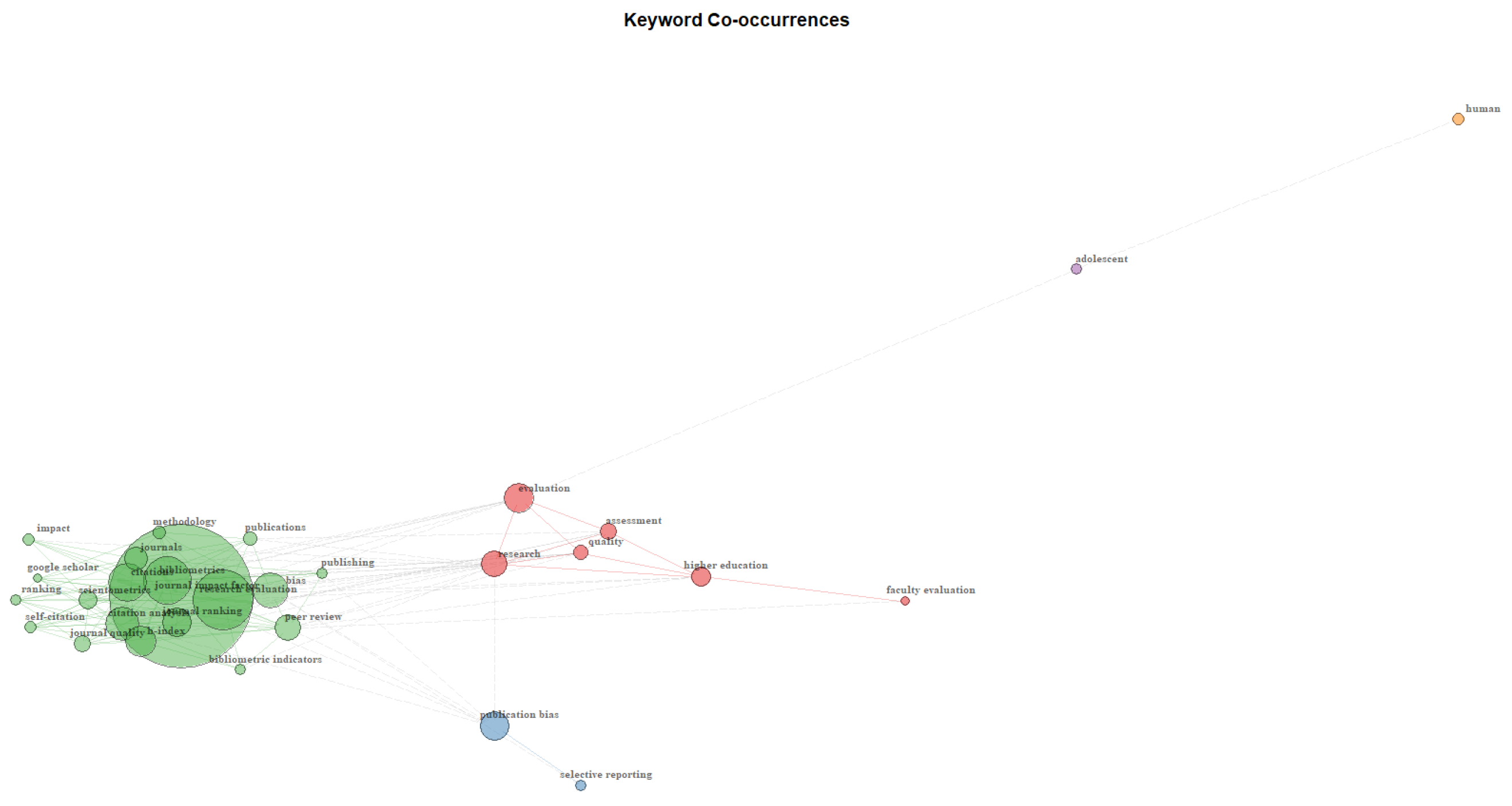

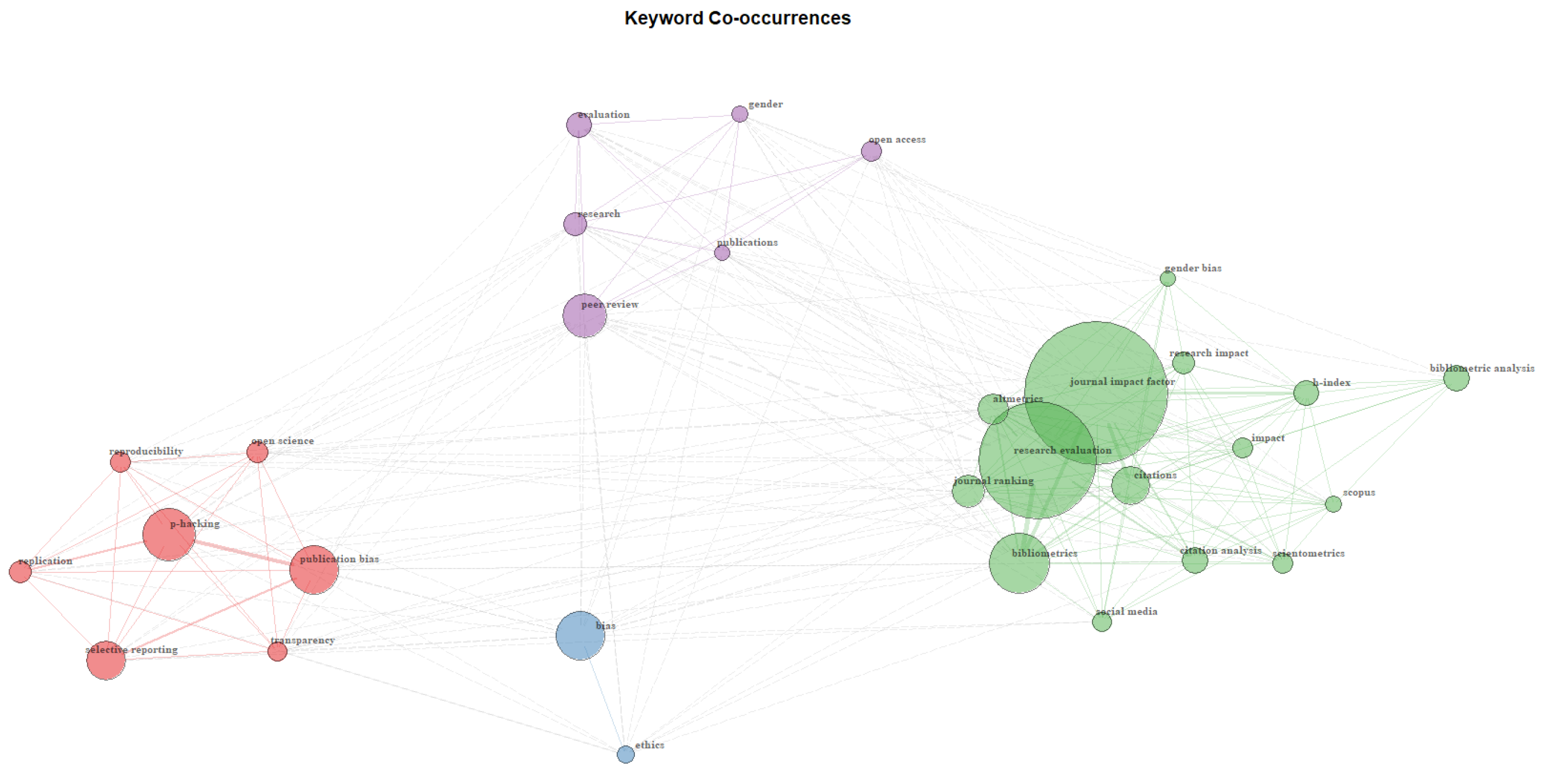

5.2.1. Keyword Co-Occurrence Network

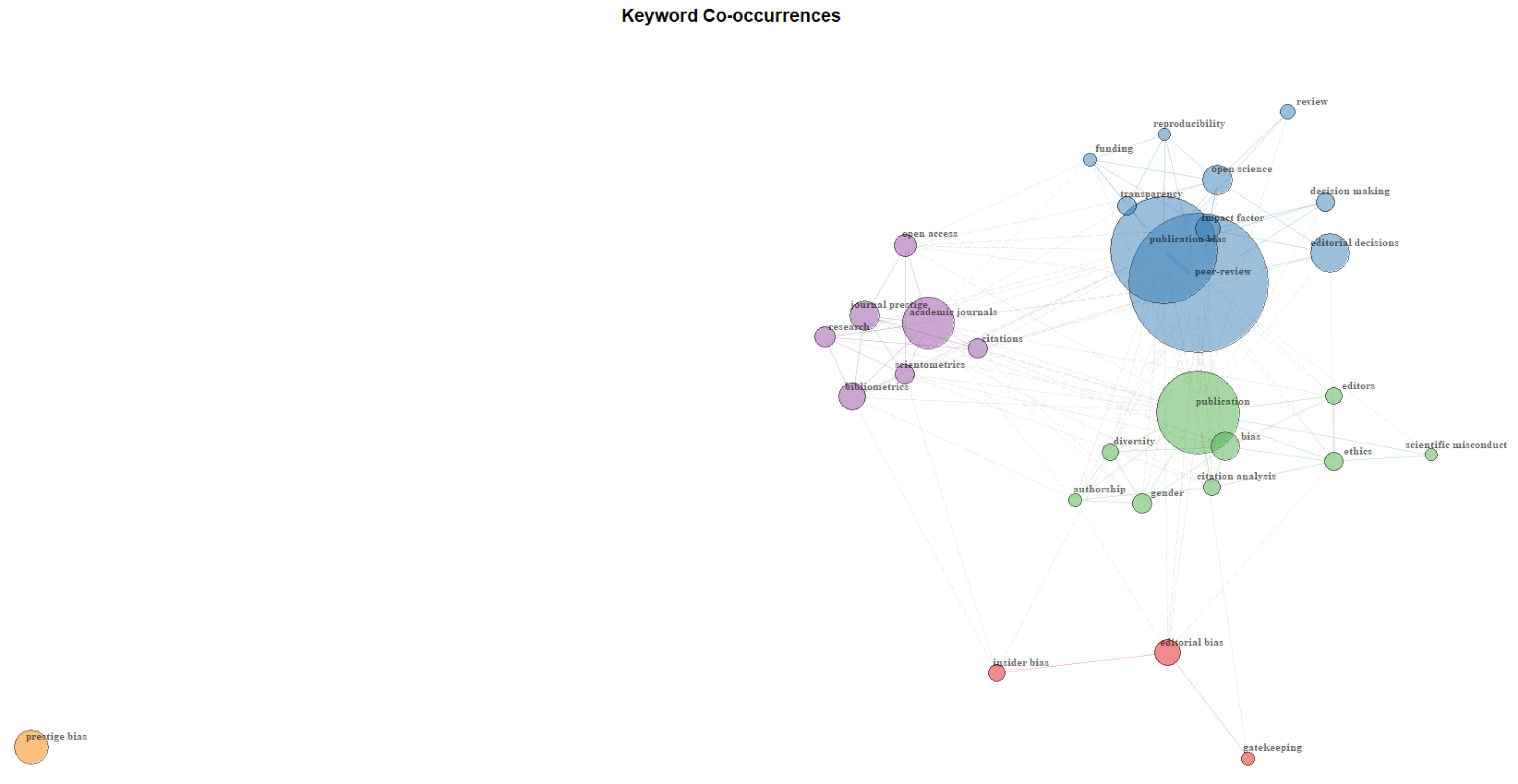

Gatekeeping and Editorial Bias

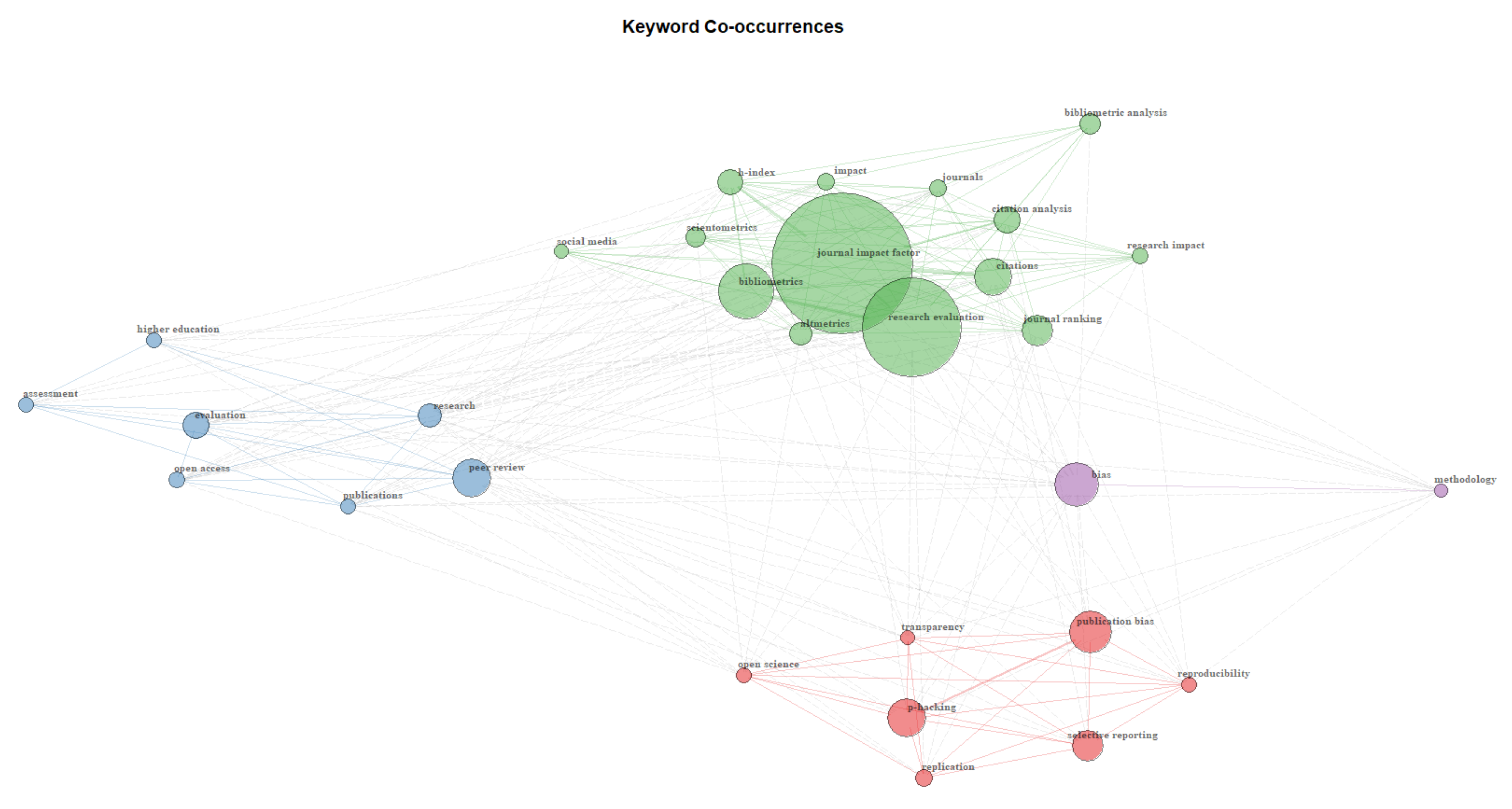

Prestige-Driven Metrics and Research Evaluation

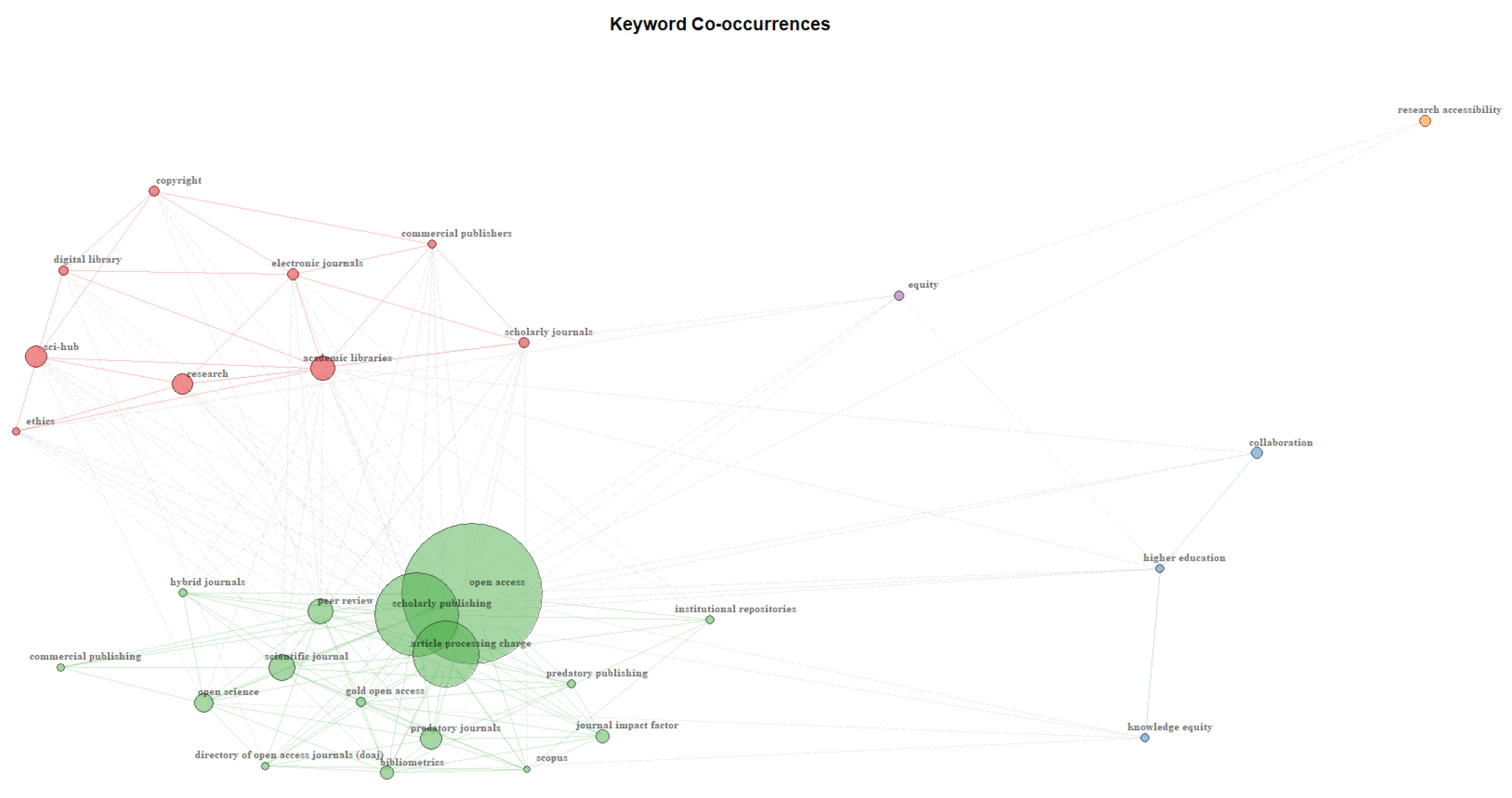

Barrier and Equity Issues in Research Accessibility

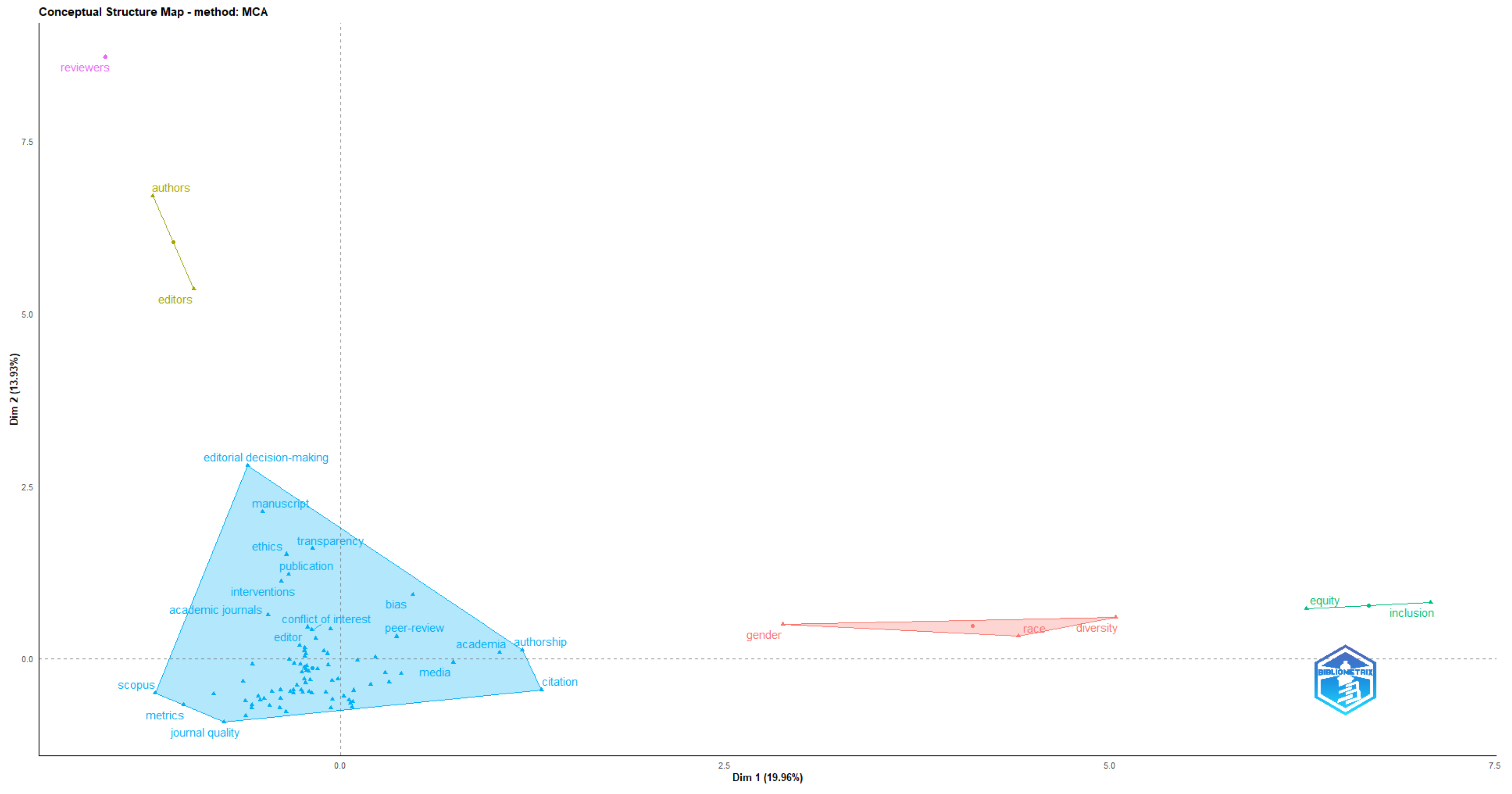

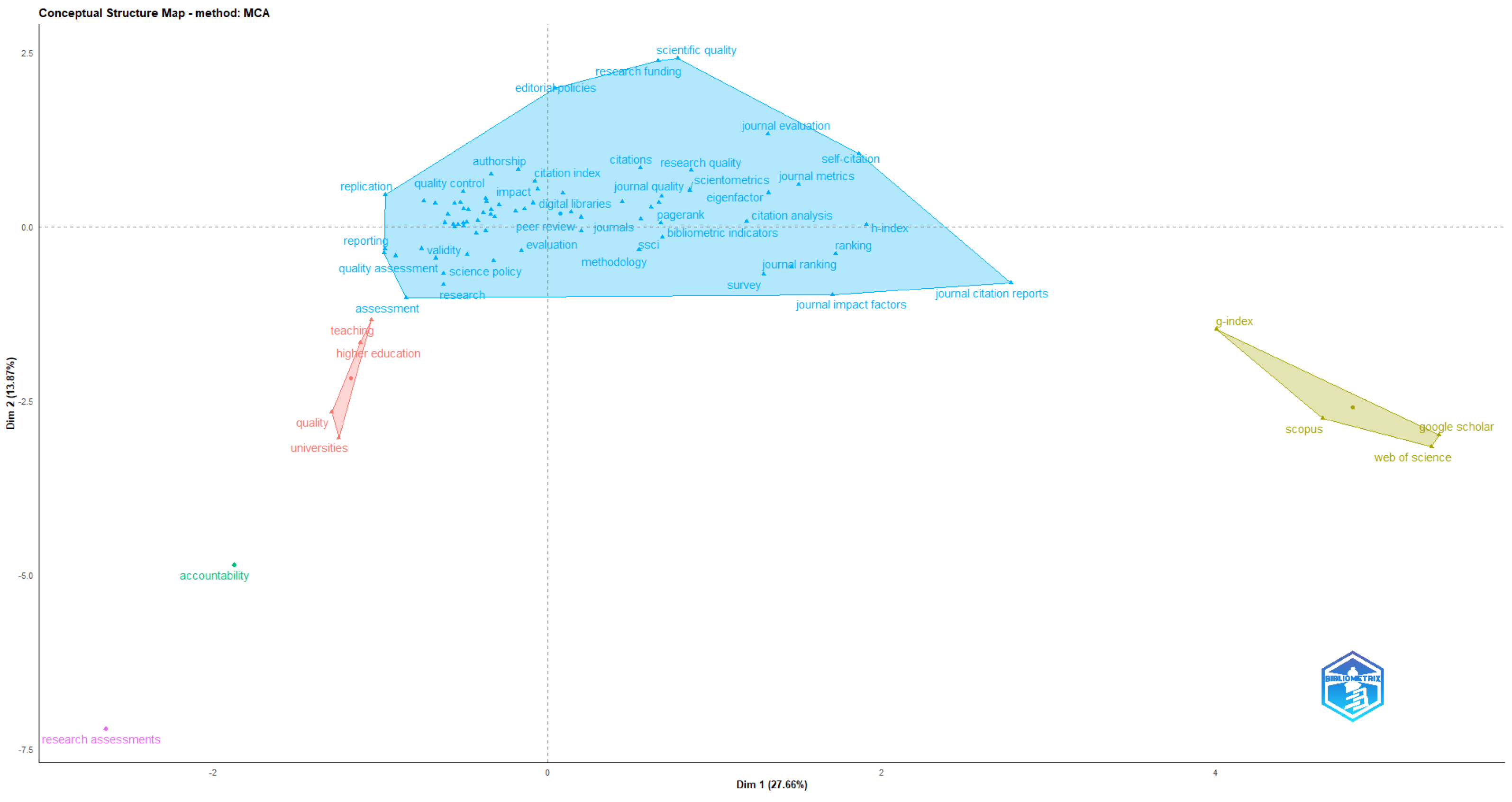

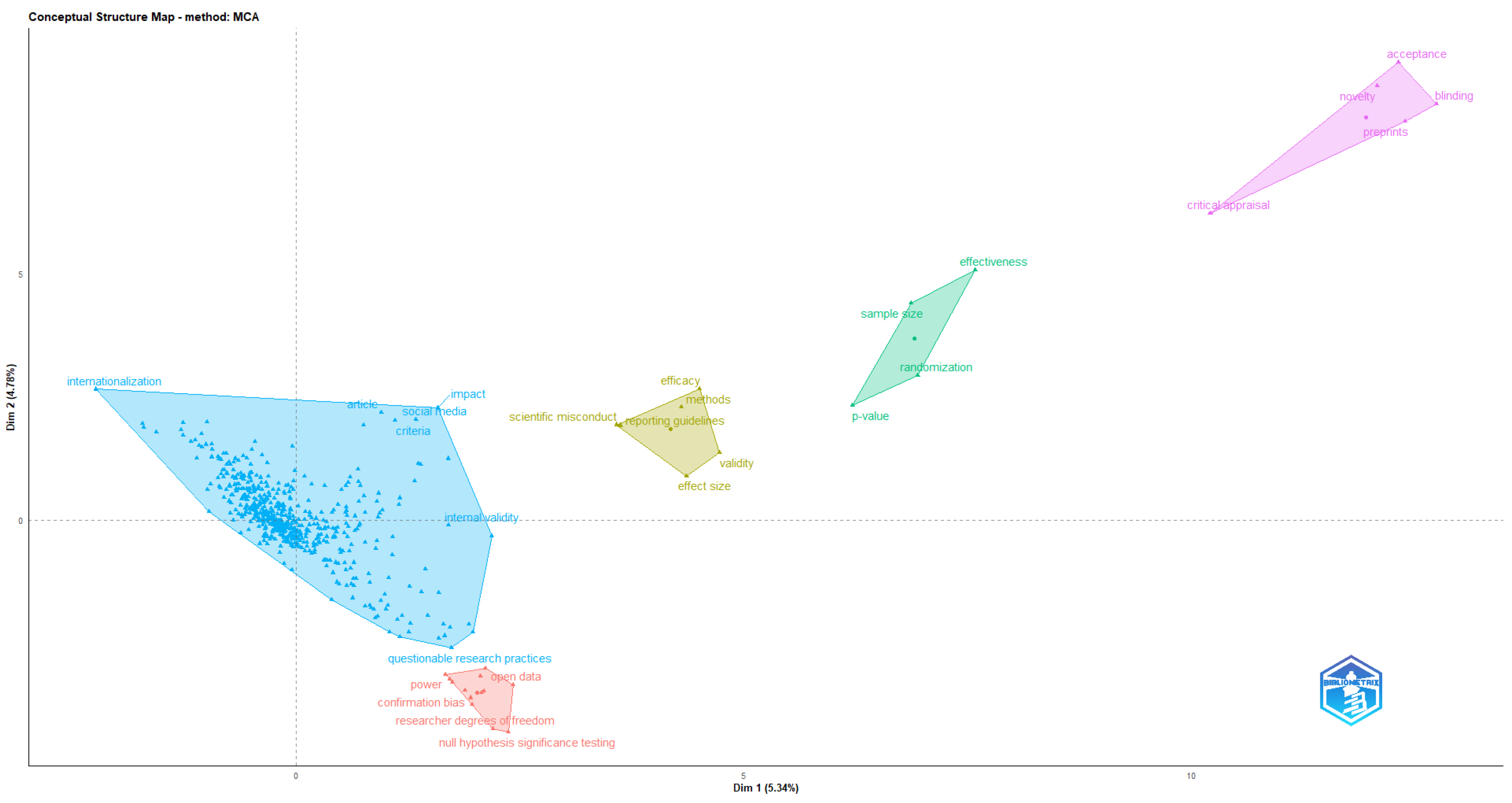

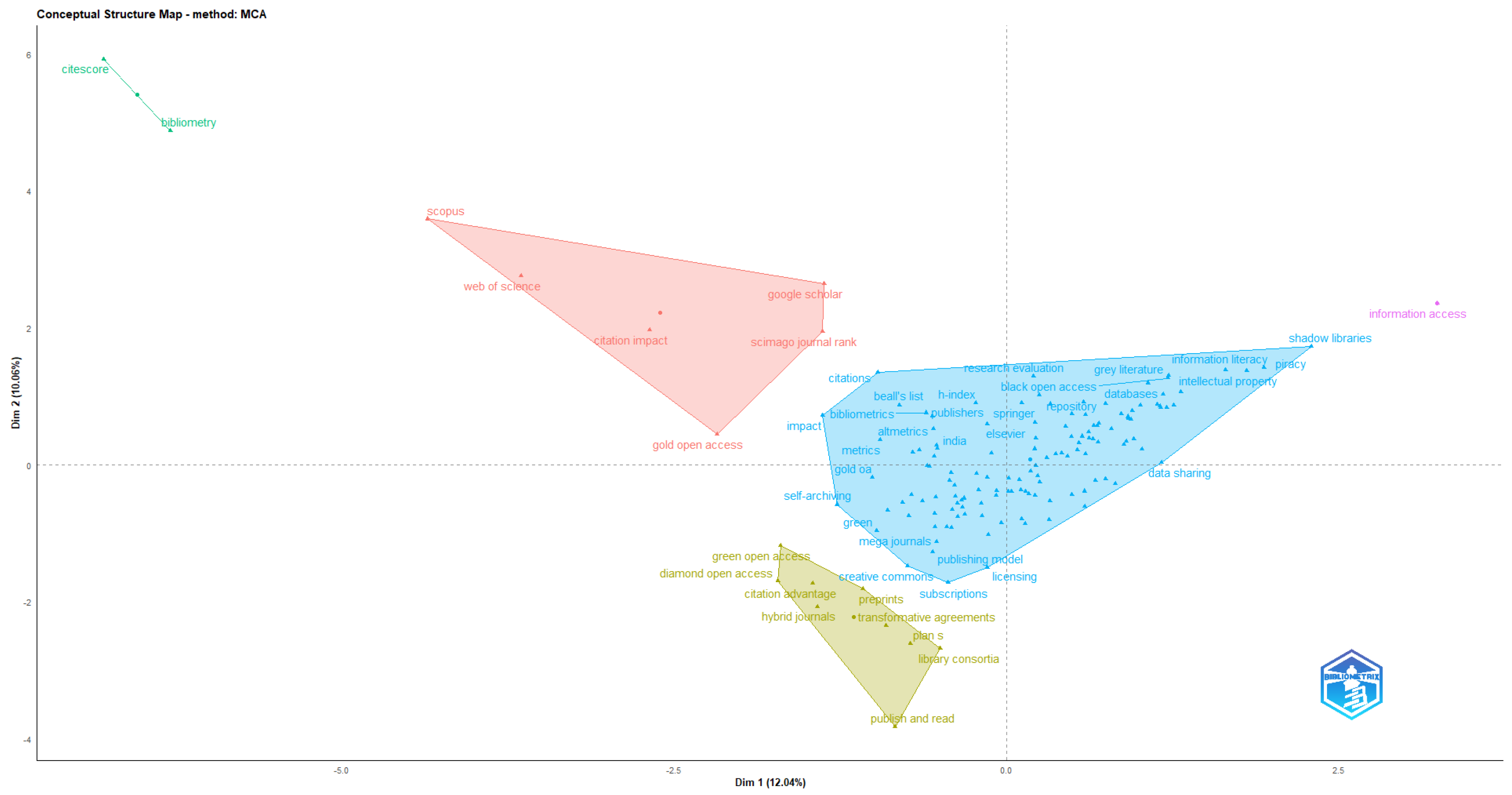

5.2.2. MCA-Based Conceptual Clustering

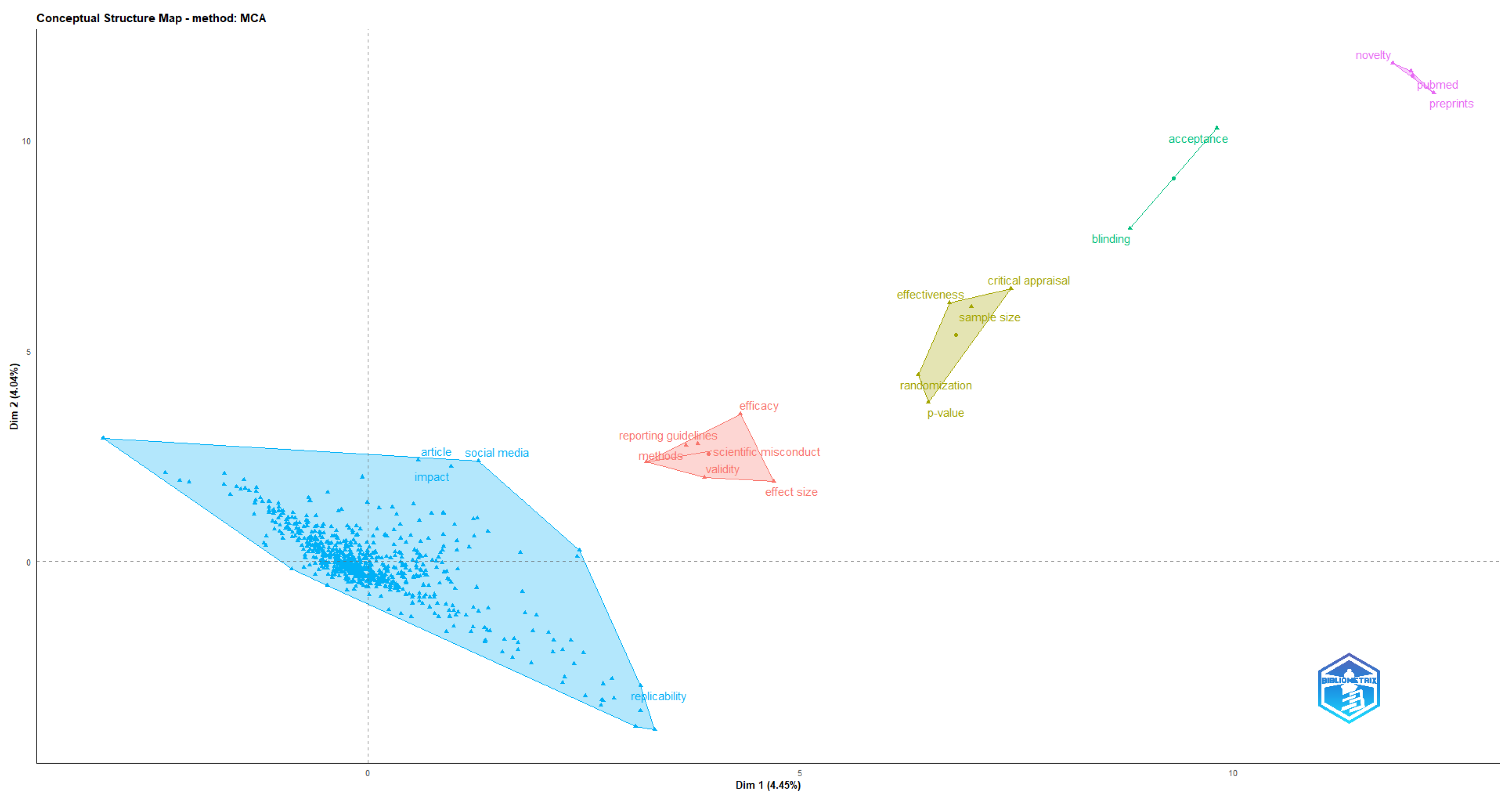

Gatekeeping and Editorial Bias

Prestige-Driven Metrics and Research Evaluation

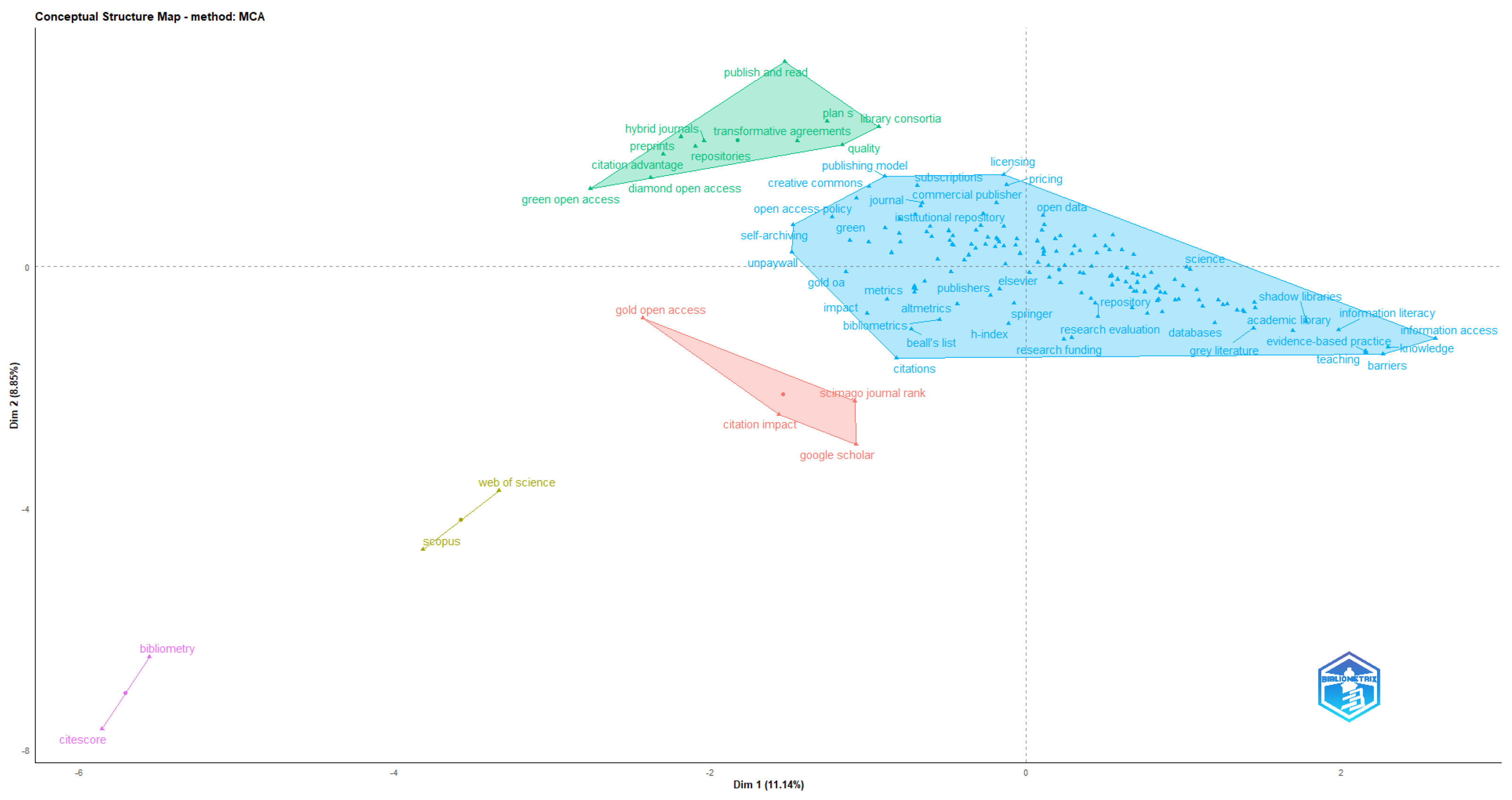

Barrier and Equity Issues in Research Accessibility

5.2.3. Thematic Map

Gatekeeping and Editorial Bias

Prestige-Driven Metrics and Research Evaluation

Barrier and Equity Issues in Research Accessibility

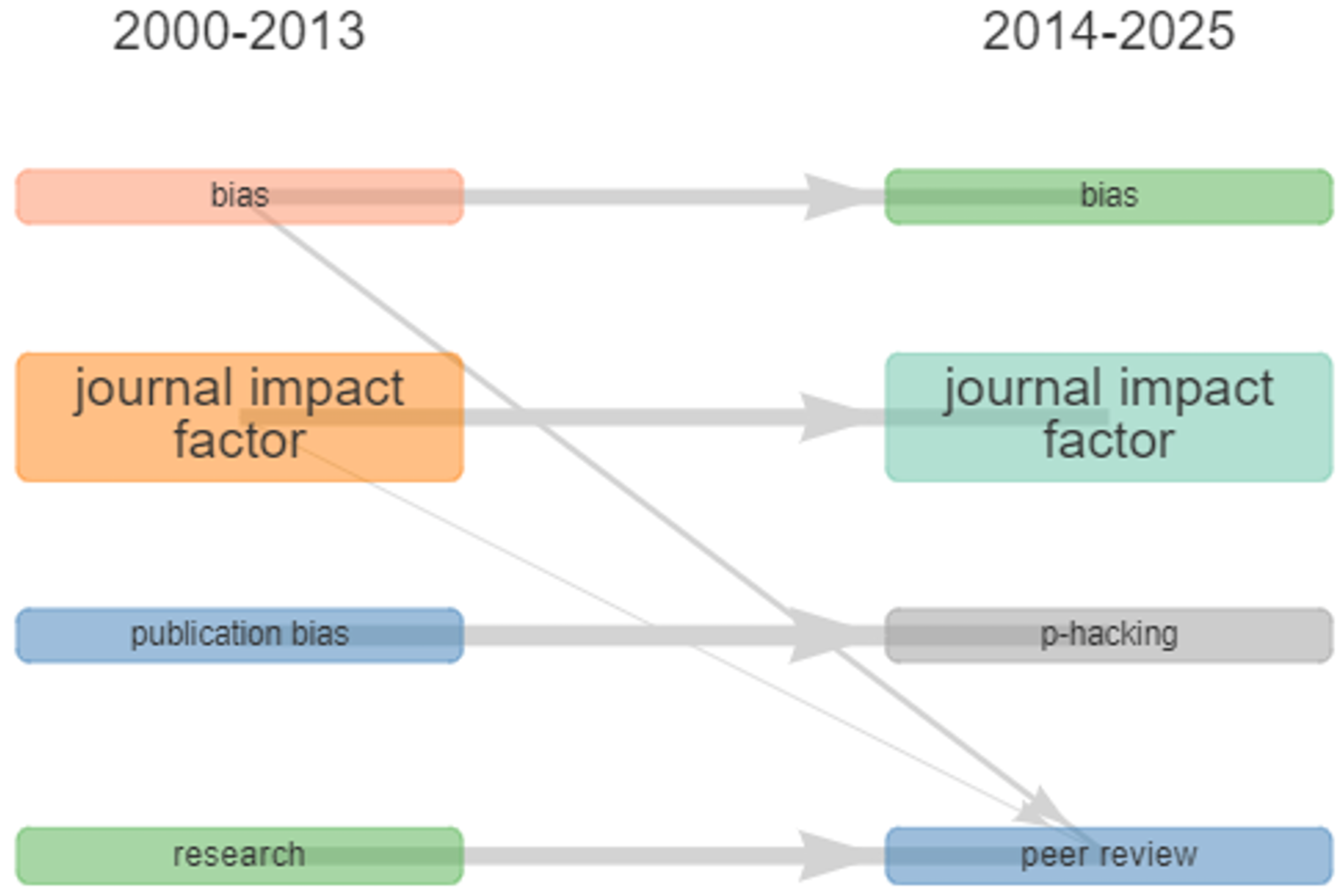

5.2.4. Time-Sliced Analysis: Prestige-Driven Metrics and Research Evaluation Before and After DORA

Keyword Co-Occurrence Network Before DORA

MCA-Based Conceptual Clustering Before DORA

Thematic Map Before DORA

Keyword Co-Occurrence Network After DORA

MCA-Based Conceptual Clustering After DORA

Thematic Map After DORA

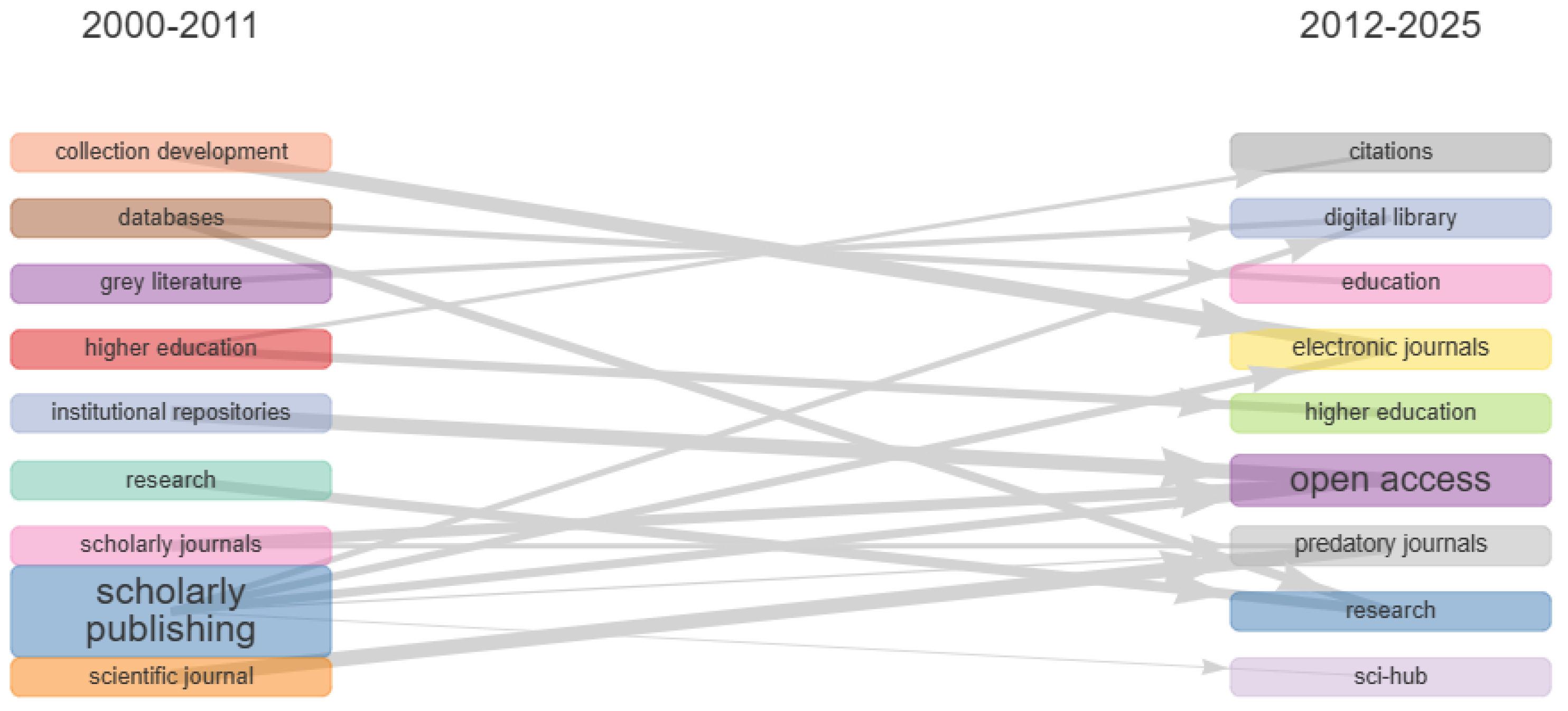

Thematic Evolution After DORA

5.2.5. Time-Sliced Analysis: Barrier and Equity Issues in Research Accessibility Before and After Sci-Hub

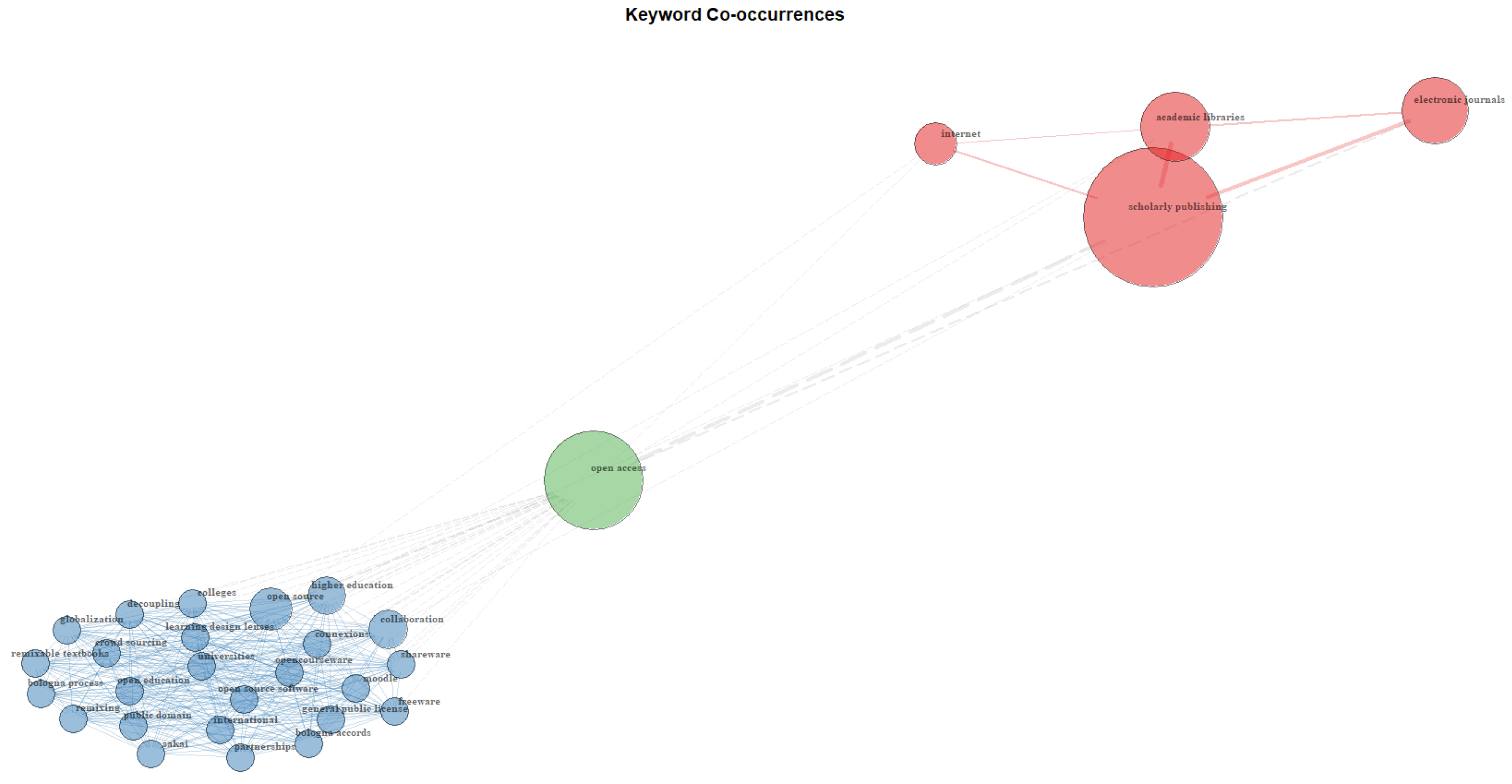

Keyword Co-Occurrence Network Before Sci-Hub

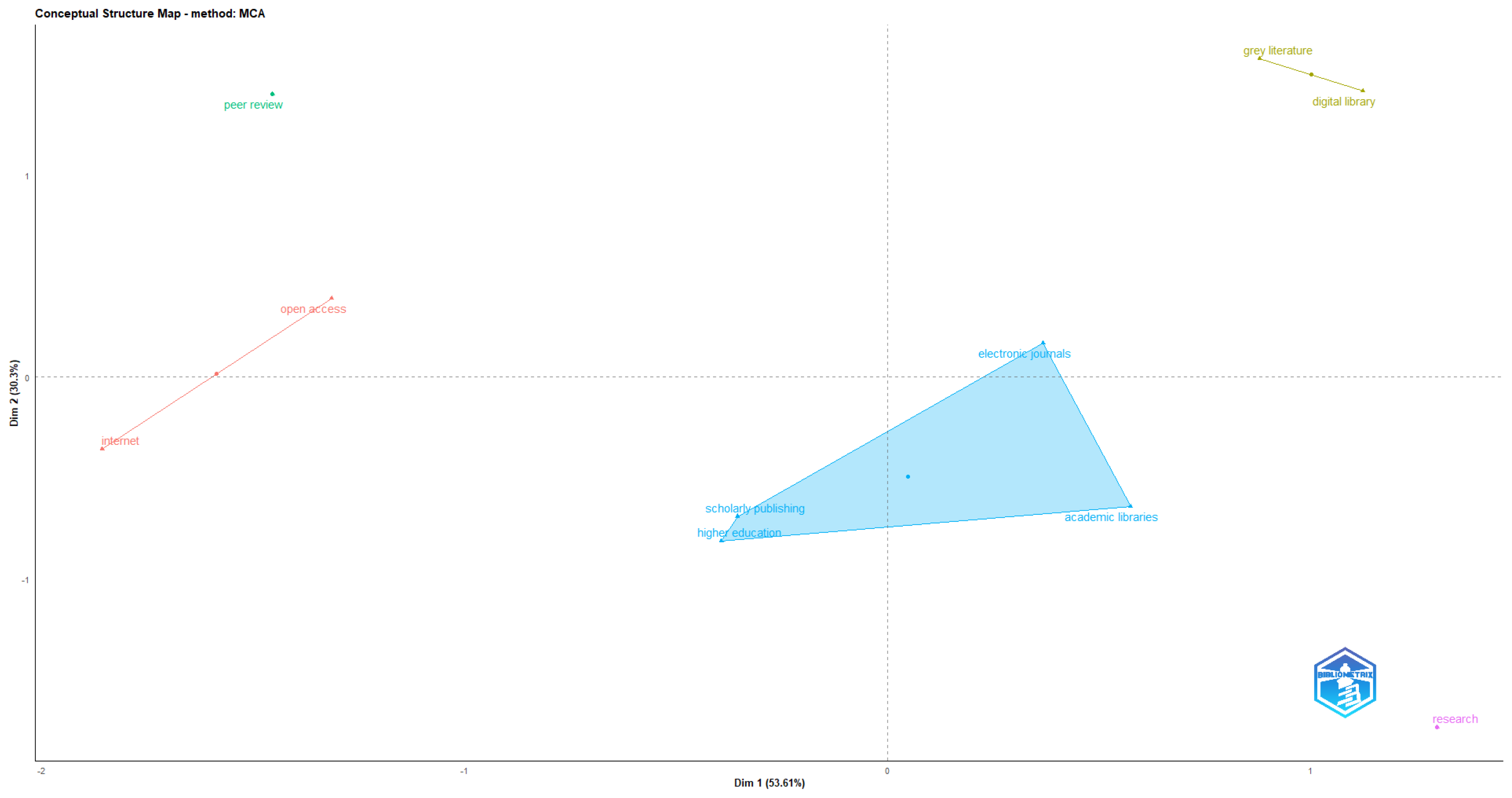

MCA-Based Conceptual Clustering Before Sci-Hub

Thematic Map Before Sci-Hub

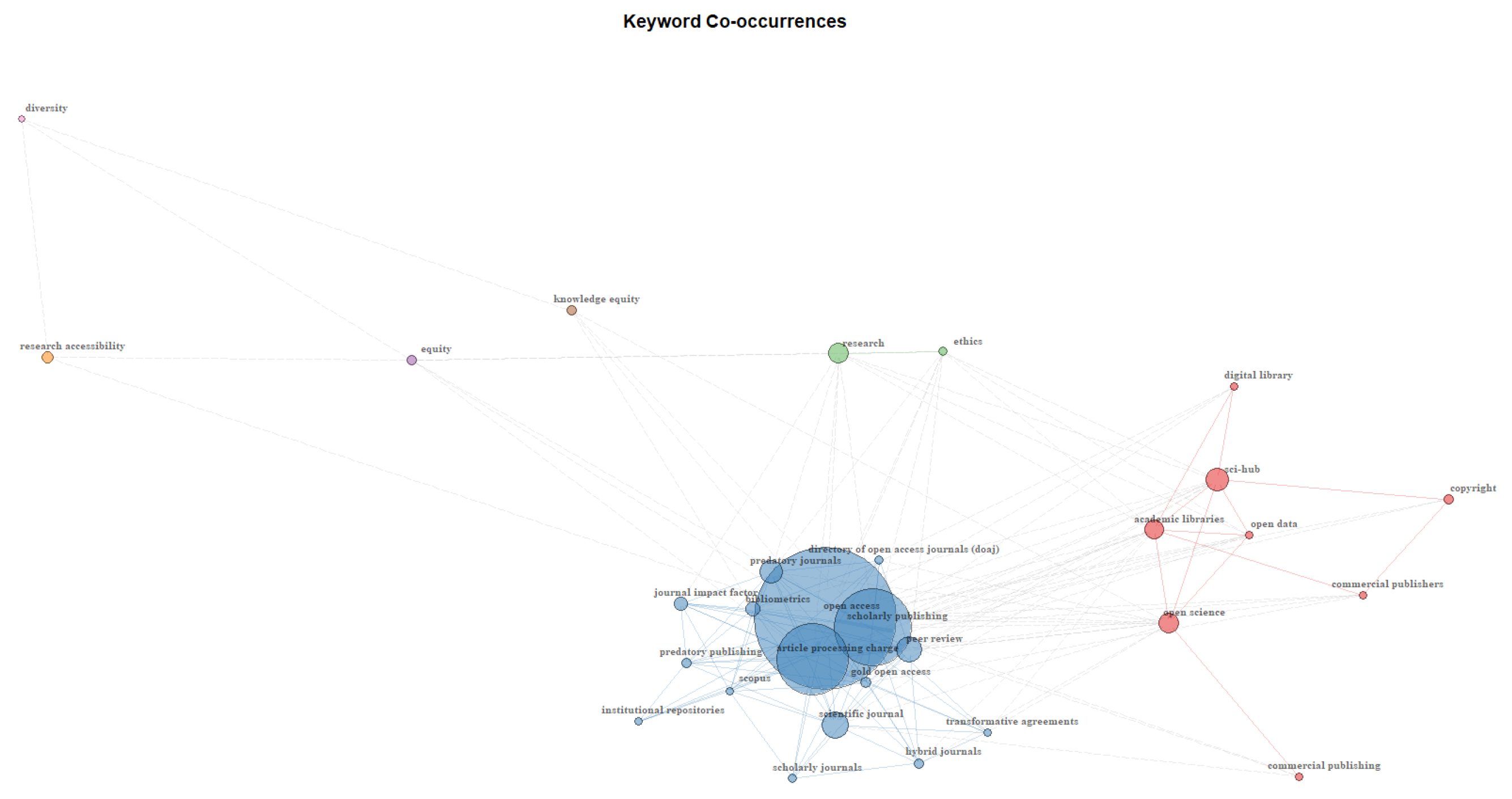

Keyword Co-Occurrence Network After Sci-Hub

MCA-Based Conceptual Clustering After Sci-Hub

Thematic Map After Sci-Hub

Thematic Evolution After Sci-Hub

5.3. Literature Landscape Through Result Integration

5.3.1. Gatekeeping and Editorial Bias

5.3.2. Prestige-Driven Metrics and Research Evaluation

5.3.3. Barrier and Equity Issues in Research Accessibility

5.4. Key Literature Changes Through Result Integration

5.4.1. Prestige-Driven Metrics and Research Evaluation Before and After DORA

5.4.2. Barrier and Equity Issues in Research Accessibility Before and After Sci-Hub

6. Discussion

6.1. Who Decides What Knowledge Is? Editorial Elitism in Scholarly Publishing

6.2. Reassessing Metrics and Meaning in Research Evaluation

6.3. Rewriting the Terms of Access: Political Economy and Equity in Scholarly Publishing

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| JIF | Journal Impact Factor |
| DORA | Declaration on Research Assessment |
| Altmetrics | Alternative Metrics |
| APC | Article Processing Charge |
| WoS | Web of Science |
| MCA | Multiple Correspondence Analysis |
| DOAJ | Directory of Open Access Journals |
| LLM | Large Language Model |

Appendix A. Removed and Consolidated Keywords


| # | Topic | Key Concept | Search String | Time Slicing |
|---|---|---|---|---|
| 1 | Gatekeeping and editorial bias | Editorial gatekeeping; editorial subjectivity/bias; biased desk rejection; biased peer review process; confirmation bias in peer review; positive publication bias; inclusion/exclusion of replication or negative results; hierarchical publishing/prestige bottlenecks | Block A: Gatekeeping & Editorial Control. Scopus: ( "editorial gatekeeping" OR "editorial bias" OR "editorial subjectivity" OR "editorial decision*" OR "insider bias" OR ( "desk reject*" AND ( bias OR subjectivity OR favoritism OR transparency ) ) ) (731 documents found on Scopus) | No time slicing |
| | | | Block B: Bias in peer review / inclusion. ( "peer review bias" OR "publication bias" OR "positive results bias" OR "positive publication bias" OR "null results exclusion" OR "negative results exclusion" OR "negative findings exclusion" OR "null findings exclusion" OR "file drawer problem" ) AND ( "peer review" OR "academic publishing" OR "scientific communication" OR "research dissemination" ) (516 documents found on Scopus) | |
| | | | Block C: Journal prestige barriers. "prestige journals" OR "hierarchical publishing" OR "publishing hierarchy" OR "publication hierarchy" OR "journal prestige" OR "publication bottleneck" (191 documents found on Scopus) | |
| 2 | Prestige-driven metrics and research evaluation | Overemphasis on publication metrics (e.g., impact factor, citation counts, journal quartiles). Distorted research evaluation. Hypercompetition in publishing, "natural selection of bad science", distorted publication practices (e.g., p-hacking, selective reporting). | Block A: Metrics overreliance. ( "impact factor" OR "journal impact factor" OR "journal quartile" OR "journal rankings" OR "citation-based metric*" OR "research metric*" ) AND ( "overreliance" OR "misuse" OR "abuse" OR "limitations" OR "criticism" OR "flaws" OR "problem*" OR "bias*" OR "gaming" OR "inappropriate" ) (3571 documents found on Scopus) | 2000–2013 = pre-DORA/Leiden; 2014–2025 = post-DORA/Leiden adoption |
| | | | Block B: Research assessment & evaluation. "research assessment fairness" OR "research evaluation" OR "faculty evaluation fairness" OR "academic evaluation fairness" OR "promotion and tenure" OR "RPT" OR "review promotion tenure" (1704 documents found on Scopus) | |
| | | | Block C: Problems / distortions caused by metrics. "natural selection of bad science" OR "p-hacking" OR "selective reporting" OR "salami slicing" OR "research malpractice" OR "reductionist evaluation" OR "metrics distortion" OR "prestige-driven publishing" (1812 documents found on Scopus) | |
| | | | Block D: Broader discourse documents. "DORA declaration" OR "San Francisco Declaration on Research Assessment" OR "Leiden Manifesto" OR "responsible metrics" OR "responsible research assessment" OR "responsible evaluation" (130 documents found on Scopus) | |
| 3 | Barrier and equity issues in research accessibility | Commercialization of publishing and academic publication oligopoly. Paywalls from subscription-based models. Accessibility problems from article processing charges (APCs). Open access as an inequitable partial solution. Regional and institutional inequities in access. Sci-Hub as a symptom of access issues. Global disparities in scholarly publishing participation. | Block A: Publishing commercialization & oligopoly. "commercial publishing" OR "academic publishing oligopoly" OR "publishing oligopoly" OR "commercial publishers" OR "academic publishing industry" OR "publishing market concentration" (536 documents found on Scopus) | 2000–2011 = pre-Sci-Hub; 2012–2025 = post-Sci-Hub |
| | | | Block B: Barriers to access. "academic journals paywall*" OR "subscription-based journal*" OR "subscription cost*" OR "restricted research access" OR "barriers to research access" OR "access to research" OR "research access" AND ( "inequit*" OR "disparity" OR "librar*" ) (318 documents found on Scopus) | |
| | | | Block C: Open access challenges & APCs. "article processing charges" OR "cost of open access" OR "open access inequity" OR "APC waiver" OR "affordability of open access" OR "predatory open access" (553 documents found on Scopus) | |
| | | | Block D: Global equity & participation. "equity in scholarly publishing" OR "global inequities in publishing" OR "research accessibility" OR "knowledge equity" OR "research participation barriers" OR "low-income country publishing" OR "underfunded institutions" OR "global South research access" (143 documents found on Scopus) | |
| | | | Block E: Sci-Hub and shadow libraries. "Sci-Hub" OR "shadow library" OR "pirate library" OR "illegal access to research" OR "circumventing paywalls" OR "Sci-Hub citation impact" OR "Sci-Hub and research access" OR "black open access" OR "shadow libraries" (155 documents found on Scopus) | |

| | Topic 1: Gatekeeping and editorial bias | Topic 2: Prestige-driven metrics and research evaluation | Topic 3: Barrier and equity issues in research accessibility |
|---|---|---|---|
| N of analyzed documents (total documents - duplicates) | 1420 (1438 - 18) | 7044 (7217 - 173) | 1637 (1705 - 68) |
| Annual growth rate % | 10.7 | 9.08 | 8.09 |
| Average citations per year per document | 2.955 | 3.588 | 1.583 |
| Document types (N) | article (880), book (16), book chapter (61), conference paper (47), editorial (157), review (259) | article (4558), book (24), book chapter (197), conference paper (848), editorial (165), review (1252) | article (1138), book (17), book chapter (102), conference paper (129), editorial (49), review (202) |
| Maximally and minimally productive year (N of publications) | Max: 2019 (158); min: 2000 (5) | Max: 2022 (600); min: 2000 (32) | Max: 2024 (171); min: 2003 (10) |
| Top corresponding author’s country (N of documents) | USA (365) | USA (1096) | USA (399) |
| Top 5 sources | 1. Review of Financial Studies; 2. PLOS ONE; 3. Scientometrics; 4. BMJ Open; 5. Review of Corporate Finance Studies | 1. Cochrane Database of Systematic Reviews; 2. PLOS ONE; 3. Scientometrics; 4. Journal of Clinical Epidemiology; 5. Research Evaluation | 1. Learned Publishing; 2. Insights: the UKSG Journal; 3. Scientometrics; 4. Publications; 5. The Serials Librarian |
| Top 5 keywords | 1. Peer review; 2. Publication bias; 3. Bias; 4. Prestige bias; 5. Publication | 1. Impact factor; 2. Research evaluation; 3. Bibliometrics; 4. Publication bias; 5. P-hacking | 1. Article processing charge; 2. Scholarly publishing; 3. Open access; 4. Publishing; 5. Sci-Hub |
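The document counts in the search-strategy table and the analyzed-document counts in the performance table can be cross-checked with simple arithmetic. The sketch below uses only figures reported in those two tables; the variable and function names are illustrative. It also assumes that the second figure in each parenthesis of the "N of analyzed documents" row is the number of duplicates removed.

```python
# Per-block Scopus hit counts, copied from the search-strategy table.
block_hits = {
    "Topic 1": [731, 516, 191],
    "Topic 2": [3571, 1704, 1812, 130],
    "Topic 3": [536, 318, 553, 143, 155],
}

# Duplicates removed per topic, copied from the performance table.
duplicates = {"Topic 1": 18, "Topic 2": 173, "Topic 3": 68}


def analyzed_count(hits: list[int], removed: int) -> int:
    """Total hits across search blocks minus the duplicates removed."""
    return sum(hits) - removed


analyzed = {
    topic: analyzed_count(hits, duplicates[topic])
    for topic, hits in block_hits.items()
}

for topic, n in analyzed.items():
    print(f"{topic}: {sum(block_hits[topic])} hits, {n} analyzed")
```

The block totals (1438, 7217, and 1705) and the resulting analyzed counts (1420, 7044, and 1637) agree with the values reported in the table.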

| | 2000–2013: pre-DORA and Leiden Manifesto | 2014–2025: post-DORA and Leiden Manifesto |
|---|---|---|
| N of analyzed documents | 1664 | 5380 |
| Annual growth rate % | 18.35 | -1.26 |
| Average citations per year per document | 3.572 | 3.593 |
| Document types (N) | article (1004), book (1), book chapter (30), conference paper (300), editorial (52), review (277) | article (3554), book (23), book chapter (167), conference paper (548), editorial (113), review (975) |
| Maximally and minimally productive year (N of publications) | Max: 2013 (286); min: 2000 (32) | Max: 2022 (600); min: 2025 (281) |
| Top corresponding author’s country (N of documents) | USA (296) | China (825) |
| Top 5 sources | 1. PLOS ONE; 2. Scientometrics; 3. Cochrane Database of Systematic Reviews; 4. Journal of Clinical Epidemiology; 5. Lecture Notes in Computer Science | 1. Cochrane Database of Systematic Reviews; 2. PLOS ONE; 3. Scientometrics; 4. Journal of Clinical Epidemiology; 5. BMJ Open |
| Top 5 keywords | 1. Impact factor; 2. Bibliometrics; 3. Research evaluation; 4. Bias; 5. Citation analysis | 1. Research evaluation; 2. Impact factor; 3. Bibliometrics; 4. P-hacking; 5. Publication bias |
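The table does not state how the annual growth rate is computed. The following is a minimal sketch, assuming it is a compound annual growth rate between the first and last year of a time slice; that formula is an assumption, not something the text confirms, and `annual_growth_rate` is an illustrative name. The inputs are the pre-DORA publication counts reported in the table: 32 documents in 2000 and 286 in 2013.

```python
def annual_growth_rate(first_count: int, last_count: int, n_years: int) -> float:
    """Compound annual growth rate in percent.

    n_years is the number of calendar years in the slice, so the rate
    compounds over n_years - 1 year-to-year steps.
    """
    if first_count <= 0 or n_years < 2:
        raise ValueError("need a positive first count and at least two years")
    return ((last_count / first_count) ** (1 / (n_years - 1)) - 1) * 100


# Pre-DORA slice: 2000 (32 documents) to 2013 (286 documents), i.e. 14 years.
pre_dora_rate = annual_growth_rate(first_count=32, last_count=286, n_years=2013 - 2000 + 1)
print(round(pre_dora_rate, 2))
```

Under this assumption the computed rate rounds to 18.35, which agrees with the value reported for the pre-DORA slice. The post-DORA rate cannot be checked the same way from the table alone, because the 2014 publication count is not reported.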

| | 2000–2011: pre-Sci-Hub | 2012–2025: post-Sci-Hub |
|---|---|---|
| N of analyzed documents | 276 | 1361 |
| Annual growth rate % | 10.19 | 5.8 |
| Average citations per year per document | 0.8838 | 1.725 |
| Document types (N) | article (160), book (3), book chapter (16), conference paper (38), editorial (16), review (43) | article (978), book (14), book chapter (86), conference paper (91), editorial (33), review (159) |
| Maximally and minimally productive year (N of publications) | Max: 2011 (32); min: 2003 (10) | Max: 2024 (171); min: 2012 (37) |
| Top corresponding author’s country (N of documents) | USA (81) | USA (258) |
| Top 5 sources | 1. The Serials Librarian; 2. Information Services and Use; 3. D-Lib Magazine; 4. College and Research Libraries; 5. First Monday | 1. Learned Publishing; 2. Insights: the UKSG Journal; 3. Scientometrics; 4. Publications; 5. Publishing Research Quarterly |
| Top 5 keywords | 1. Publishing; 2. Open access; 3. Electronic publishing; 4. Libraries; 5. Internet | 1. Open access; 2. Article processing charge; 3. Publishing; 4. Sci-Hub; 5. Predatory journals |

| | Insights from performance analysis | Insights from conceptual analysis | Key characteristic of the discourse from integration |
|---|---|---|---|
| Topic 1 | Highest growth across topics, indicating steadily increasing scholarly interest. The discourse is not only empirical but also reflective and opinion-driven. The USA is the dominant country. | Focus on peer review systems and editorial practices. Clusters reveal discourses on editorial bias, inclusion, and power asymmetries in the scholarly communication process. Recurring keywords are “peer review,” “publication bias,” and “prestige bias.” | Discourse is critical and reform-oriented. The main themes are transparency, bias, and power in editorial systems. Links to epistemic justice and legitimacy in academia. |
| Topic 2 | Largest body of literature among all topics. Active discussion, as seen from the volume of conference proceedings and the citation rate. The USA is the dominant country. Sources represent a mix of methodological and policy-focused journals. | Focus on impact factors, rankings, and citation metrics. Clusters reveal the discourse on scholarly metrics, their limitations, and the resulting problems. Recurring keywords are “impact factor,” “research evaluation,” and “metrics.” | Critique of metric-based academic culture. The main themes are performance pressures, questionable research practices, and scholarly metricization. Mix of critical reassessment and institutional entrenchment. |
| Topic 3 | Lowest relative scholarly interest, as seen from the annual growth rate and citation rate. The USA is the dominant country. Sources focus on the library and information science area. | Focus on access inequities, APCs, copyright, and predatory publishing. Clusters reveal concerns about economic and legal barriers to research accessibility, as well as grassroots responses. Recurring keywords are “open access,” “APC,” and “predatory publishing.” | Equity and ethics at the center of the discourse. The main themes are institutional reform, funding, and informal infrastructures. Tensions between open science ideals and exclusionary research access. |

| | Insights from performance analysis | Insights from conceptual analysis |
|---|---|---|
| Pre-DORA | Rapid publication growth. High number of conference proceedings, implying topic engagement. Dominated by the USA. | Evaluation centered on quantification and hierarchy. Journal impact factor viewed as a legitimate proxy for value. Early concerns (e.g., selective reporting) were marginal. Critiques were emergent and peripheral. |
| Post-DORA | Relatively lower engagement, as seen from the growth rate and the number of conference proceedings. More diversified discussion, as seen from the distribution of document types. Dominated by China. | Reflexive and multidimensional discourse. Focus on methodological integrity, ethics, and equity. Research integrity and peer review now central. Emphasis on rethinking research evaluation. |
| Key change from Integrated insight | Shift from a metrics-dominated, performance-driven model to a more reflexive, ethically grounded, and pluralistic evaluation paradigm. Metrics are now contextualized within broader concerns of transparency, fairness, and methodological rigor. Keywords shift toward examining the processes and incentives that shape scientific outputs. | |

| | Insights from performance analysis | Insights from conceptual analysis |
|---|---|---|
| Pre-Sci-hub | Relatively small initial interest, as seen from high growth but a low citation rate. Dominated by the USA. Sources are from library-oriented fields. | Framed as an infrastructure and digitization issue. Themes focused on institutional roles (e.g., libraries). Open access was fragmented and peripheral. Debate largely within traditional publishing structures. Keywords imply discussion around the infrastructure of research access. |
| Post-Sci-hub | Relatively higher engagement with the topic, as seen from a nearly doubled citation rate despite a lower growth rate. Dominated by the USA. Sources include science evaluation and communication venues. | Shift to systemic, ethical, and legal critique. Central themes are APCs, piracy, predatory journals, and open access. Motor themes now include reform and inclusivity. Expanded discourse on grassroots dissemination and data ethics. Keywords imply an economically and politically oriented discourse, with Sci-hub as a key object. |
| Key change + Integrated insight | Shift from technical/institutional access issues to a politicized, systemic critique of publishing norms. The field now engages with ethical, economic, and global dimensions of access, driven by Sci-Hub’s role in exposing structural inequities and legitimizing informal knowledge sharing. | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).