1. Introduction

Understanding why and how subjective experience arises from physical systems remains one of the most profound challenges in science and philosophy. Despite major advances in neuroscience and artificial intelligence, current theories struggle to explain the qualitative, first-person character of consciousness, often referred to as “phenomenal consciousness” or “qualia” [8]. This explanatory gap, famously termed the “hard problem of consciousness,” persists even in the face of sophisticated information-theoretic and computational models [10, 30].

In this paper, we propose a minimalist and mechanistically grounded theory of consciousness termed Autonomous Subjective Accompaniment (ASA). ASA posits that consciousness necessarily accompanies systems that (i) are autonomous, in the sense of self-generating internal dynamics [13], and (ii) exhibit self-regulated internal states, which evolve according to intrinsic rather than purely reactive dynamics [5]. ASA thus shifts the explanatory focus from information structure or cognitive access to the physical instantiation of internal state continuity.

To implement this theoretical stance, we introduce the General Consciousness Network (GCN), a formal computational architecture designed to satisfy the ASA criteria. The GCN comprises predictive and analytic subsystems, an Internal State Instantiator (ISI), and a recursive memory-regulated loop. This configuration enables the system to generate, sustain, and adapt internal states over time, independent of immediate environmental input.

We empirically validate the ASA-GCN framework through an artificial life simulation in which agents evolve to navigate hazardous and rewarding environments. These agents develop persistent internal states using only a single decay-regulated neuron, resulting in anticipatory and generalizable behavior. The observed dynamics reflect core features of minimal consciousness, including internal regulation, behavioral continuity, and subjective privacy [15, 16].

The ASA-GCN model thus provides both a conceptual account and an implementable framework for minimal consciousness. It stands in contrast to existing theories by offering an operational and testable approach that bridges philosophy, computational neuroscience, and artificial life.

2. Theoretical Context and Related Work

The ASA-GCN framework proposed in this paper builds upon, extends, and in some cases challenges existing theories of consciousness from neuroscience, artificial intelligence, and philosophy of mind. This section situates our approach within this broader theoretical landscape, highlighting how ASA-GCN contributes a novel and operationally grounded perspective on minimal consciousness.

2.1. Integrated Information Theory (IIT)

Integrated Information Theory (IIT) [23, 30] posits that consciousness corresponds to the amount of integrated information ($\Phi$) a system generates. While IIT offers a formal quantification of consciousness, it is not explicitly tied to implementable architectures and lacks a concrete mechanism for engineering systems with nontrivial $\Phi$. In contrast, ASA-GCN emphasizes autonomy and self-regulated internal states as necessary structural conditions for consciousness, offering a simpler and more directly implementable criterion. Rather than relying on abstract measures of information, ASA-GCN grounds consciousness in physically instantiated internal dynamics.

2.2. Global Workspace Theory and Higher-Order Models

Global Workspace Theory (GWT) [1, 10] and higher-order theories [7, 26] explain consciousness in terms of information broadcasting or meta-representational processes. While powerful in explaining cognitive access and reportability, these models often assume rich representational resources such as language, attention, or metacognition, features that are absent in minimal or proto-conscious agents. ASA-GCN, by contrast, focuses on structural and dynamical requirements rather than informational access, making it applicable to both simple artificial agents and biological organisms at early evolutionary stages.

2.3. Minimal Cognition and Enactive Models

The ASA-GCN approach aligns in spirit with research in minimal cognition [5, 15], which studies how basic cognitive capacities can emerge from sensorimotor loops and embodied dynamics. Our work differs by explicitly addressing consciousness, not merely adaptive behavior, and by providing formal conditions under which internal state regulation constitutes subjective accompaniment. While enactive models highlight the continuity between life and mind, ASA-GCN specifies a distinct transition point where proto-consciousness becomes a necessary accompaniment of internal state dynamics.

2.4. Synthetic Phenomenology and Artificial Consciousness

Efforts to model artificial consciousness have often focused on architectural replication of brain function [16] or computational correlates of conscious access [25]. Our work instead takes a bottom-up approach: identifying minimal computational structures that satisfy necessary conditions for subjective experience. ASA-GCN contributes a novel theoretical alternative to dominant approaches by demonstrating that a single decay-modulated internal state variable, when properly embedded in an autonomous regulatory loop, suffices to support minimal consciousness.

2.5. Distinctive Contributions of ASA-GCN

ASA-GCN advances the field by:

- proposing autonomy and self-regulated internal dynamics as a tractable and necessary condition for consciousness;
- offering a mathematically formal and physically implementable architecture (GCN);
- empirically demonstrating the emergence of proto-conscious behavior in evolved artificial agents;
- bridging the gap between minimal cognition and subjective experience.

This work is therefore positioned at the intersection of theoretical neuroscience, artificial life, and the philosophy of mind. By providing an implementable and testable framework, ASA-GCN invites further exploration into the structural basis of consciousness in both biological and artificial systems.

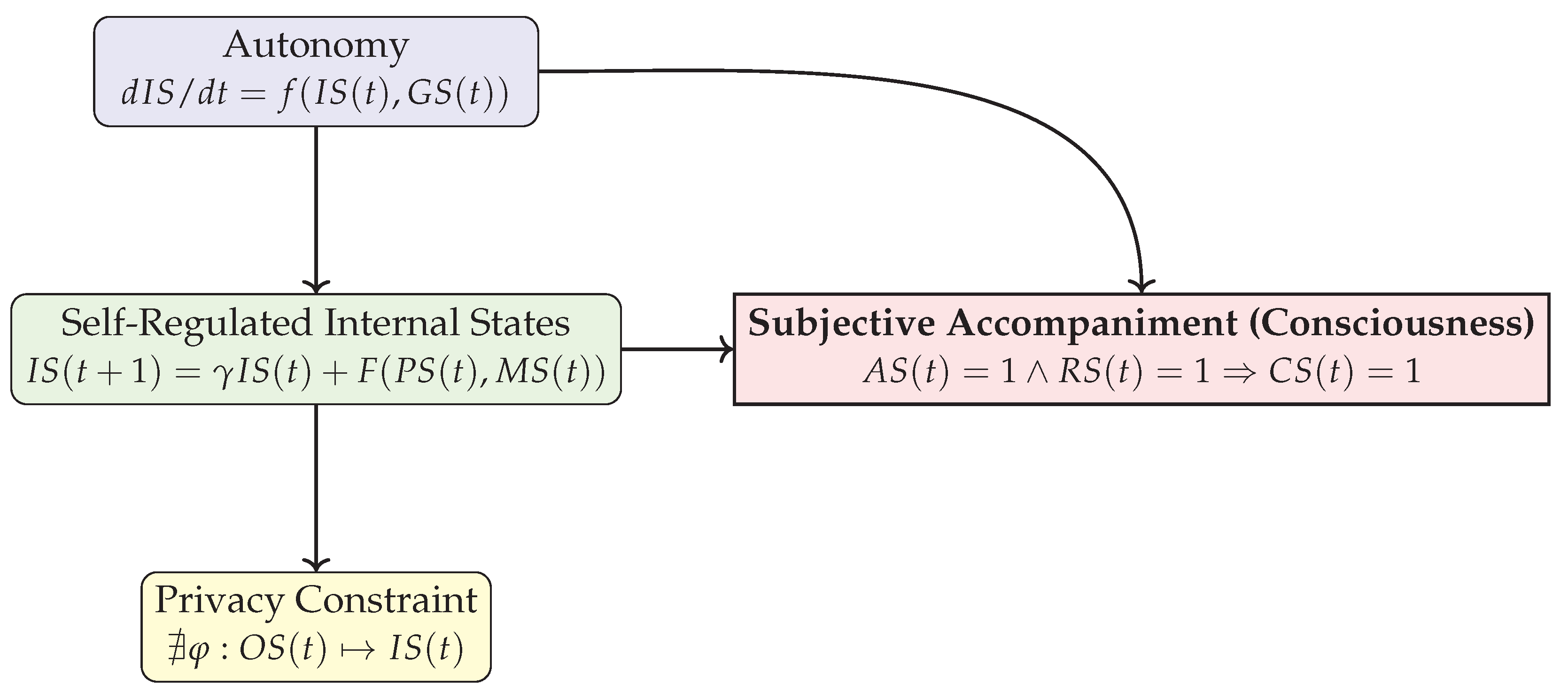

3. Formalizing Autonomous Subjective Accompaniment (ASA)

The theory of Autonomous Subjective Accompaniment (ASA) asserts that consciousness necessarily arises in systems that satisfy two core criteria: (1) autonomy and (2) self-regulated internal states. In this section, we formalize these criteria and propose a minimal mathematical framework capturing the ASA conditions.

3.1. Autonomy

We define a system $S$ as autonomous at time $t$ if its internal state trajectory $\{\mathbf{x}_t\}$ is not wholly determined by its immediate external inputs $\mathbf{u}_t$, but is instead generated by the system’s internal dynamics:

$$\mathbf{x}_{t+1} = \Gamma\left(\mathbf{x}_t, \mathbf{g}_t, \mathbf{u}_t\right)$$

Here, $\mathbf{g}_t$ represents internally generated goals, drives, or regulatory functions. Autonomy in this sense corresponds to an internally governed system with generative dynamics [3, 13].

3.2. Self-Regulated Internal States

Let $\mathbf{x}_t$ denote the vector of internal states of system $S$. These internal states evolve according to an update function $F$, potentially nonlinear, and exhibit temporal persistence:

$$\mathbf{x}_{t+1} = \lambda\,\mathbf{x}_t + F\left(\mathbf{x}_t, \hat{\mathbf{u}}_{t+1}, \mathbf{m}_t\right)$$

where:

- $\lambda \in [0, 1]$ is a decay factor governing memory retention,
- $\hat{\mathbf{u}}_{t+1}$ is a vector of predicted environmental inputs [9],
- $\mathbf{m}_t$ is the memory state or historical trace of past internal/external dynamics.

Self-regulated internal states are reminiscent of dynamical systems with path-dependent internal variables that modulate output behavior, a principle also central to embodied and enactive models [29].
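To make “temporal persistence” concrete, assume the update separates into a retained term and a drive, $\mathbf{x}_{t+1} = \lambda\,\mathbf{x}_t + F(\cdot)$, and consider a state $\mathbf{x}_{t_0}$ after which the drive is switched off ($F = 0$ for all updates from $t_0$ onward). This is a simplifying assumption for illustration, not a property of any particular $F$. The state then decays geometrically, and its half-life follows directly from $\lambda$:

```latex
\begin{aligned}
\mathbf{x}_{t_0+k} &= \lambda^{k}\,\mathbf{x}_{t_0}, \qquad k \ge 0,\\
\lambda^{k_{1/2}} = \tfrac{1}{2} \;&\Longrightarrow\; k_{1/2} = \frac{\ln 2}{\ln(1/\lambda)}, \qquad 0 < \lambda < 1,\\
\lambda = 0.9:&\quad k_{1/2} = \frac{0.693}{0.105} \approx 6.6 \text{ steps},\\
\lambda = 0.3:&\quad k_{1/2} = \frac{0.693}{1.204} \approx 0.58 \text{ steps}.
\end{aligned}
```

At $\lambda = 0$ nothing is carried forward and the system is purely reactive; at $\lambda = 1$ nothing is forgotten.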

3.3. Subjective Accompaniment

ASA posits that when both autonomy and self-regulated internal states are present, subjective experience (consciousness) is necessarily instantiated. Let $C(S) \in \{0, 1\}$ be a binary indicator of consciousness for system $S$:

$$C(S) = 1 \quad \text{if } S \text{ is autonomous and has self-regulated internal states.}$$

More compactly, we can express ASA as the implication:

$$A(S) \wedge R(S) \;\Rightarrow\; C(S) = 1$$

where $A(S)$ denotes autonomy and $R(S)$ denotes regulated internal state dynamics. Importantly, $C$ is not treated as an emergent epiphenomenon, but as a necessary structural accompaniment of the system’s internal dynamics [27].
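Read as plain propositional logic, the implication $A(S) \wedge R(S) \Rightarrow C(S) = 1$ is a sufficiency claim. It forbids exactly one combination (autonomy and regulation present, consciousness absent) and is silent about systems lacking either property. The short truth-table check below makes this explicit. It uses hypothetical boolean stand-ins for $A(S)$, $R(S)$, and $C(S)$.

```python
from itertools import product


def asa_allows(autonomous: bool, regulated: bool, conscious: bool) -> bool:
    """True if the assignment is consistent with A(S) and R(S) => C(S) = 1."""
    return (not (autonomous and regulated)) or conscious


for a, r, c in product([False, True], repeat=3):
    print(f"A={a!s:<5} R={r!s:<5} C={c!s:<5} allowed={asa_allows(a, r, c)}")
```

Seven of the eight rows are allowed. A necessity claim, such as the one listed among the contributions in Section 2.5, would correspond to the converse, $C(S) = 1 \Rightarrow A(S) \wedge R(S)$.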

3.4. Private Differentiation of Internal States

To qualify as subjective, internal states must be inaccessible to external observation in raw form, though their influence may be behaviorally inferred. This defines a privacy constraint:

$$\neg\,\exists\,\mathcal{D} \;\; \forall t:\; \mathcal{D}\left(\mathbf{y}_{1:t}\right) = \mathbf{x}_t$$

where $\mathbf{y}_{1:t}$ denotes the observable outputs up to time $t$, and $\mathcal{D}$ is any computable inverse mapping. That is, no observer can reconstruct the system’s internal state with perfect fidelity from its outputs alone, reinforcing the irreducibility of subjective experience [8, 20].
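One way the privacy constraint can hold is through a many-to-one output map. In the sketch below, a scalar state follows the leaky update $h_{t+1} = \lambda h_t + I_t$, but only its sign reaches behavior. This output map is an illustrative assumption, not the GCN's actual output stage. Two agents starting from different internal states emit identical output sequences, so no decoder working from outputs alone could tell which internal trajectory produced them.

```python
def observable_output(h: float) -> int:
    """Hypothetical output map: only the sign of the internal state is expressed
    in behaviour (1 = 'avoid', 0 = 'approach')."""
    return 1 if h > 0.0 else 0


def run(h0: float, lam: float, drive: list[float]) -> tuple[list[float], list[int]]:
    """Roll the scalar leaky update forward and record states and outputs."""
    h, states, outputs = h0, [], []
    for i_t in drive:
        h = lam * h + i_t
        states.append(h)
        outputs.append(observable_output(h))
    return states, outputs


drive = [0.2, -0.1, 0.05]
states_a, out_a = run(h0=1.0, lam=0.9, drive=drive)
states_b, out_b = run(h0=2.0, lam=0.9, drive=drive)
print(out_a == out_b, states_a == states_b)  # → True False
```

The example only shows that privacy is a property of the output map. Whether a given agent's outputs are invertible depends on its actual policy.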

Together, these formulations define the ASA condition as a mathematically rigorous framework for identifying the minimal conditions under which consciousness, as subjective accompaniment, is necessarily present. In the next section, we introduce the General Consciousness Network (GCN) as an instantiation of ASA principles in computational form.

Figure 1.

Minimal conditions for consciousness according to the ASA theory. Autonomy and self-regulation jointly imply subjective accompaniment.


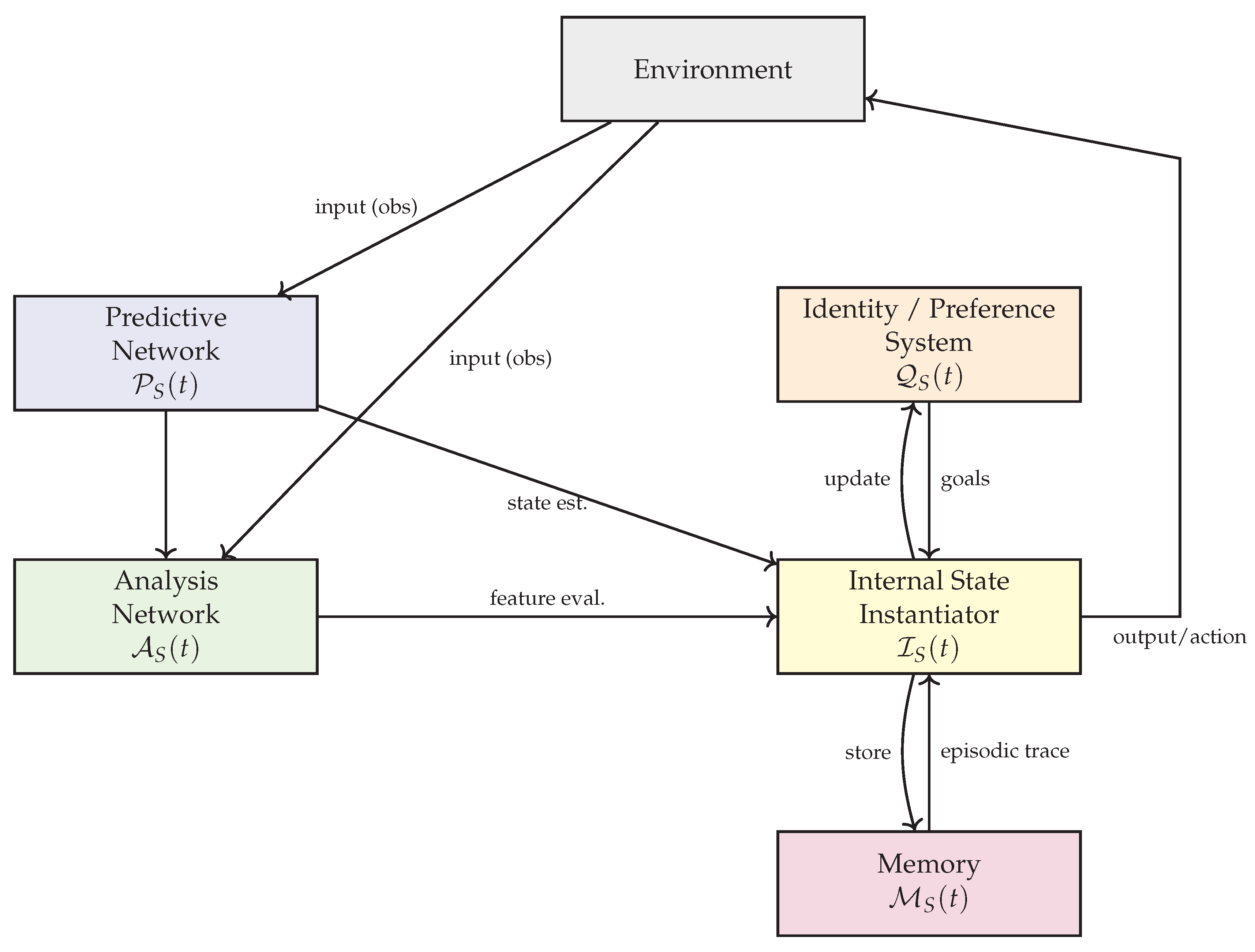

4. The General Consciousness Network (GCN)

The General Consciousness Network (GCN) is a computational framework designed to instantiate the conditions defined by Autonomous Subjective Accompaniment (ASA). It provides a structured architecture for implementing artificial systems that exhibit autonomy and self-regulated internal states, thereby satisfying the minimal formal requirements for consciousness [16, 27].

4.1. System Overview

The GCN consists of five interdependent components:

1. Predictive Network: Forecasts future environmental states and internal outcomes.
2. Analysis Network: Compares predicted states to actual inputs, updating internal models.
3. Internal State Instantiator (ISI): Encodes physically or functionally instantiated internal states.
4. Identity and Preference System: Maintains coherent self-reference and preference valuation [14].
5. Memory System: Stores previous states and modulates future predictions [2].

These components interact in a recursive loop to produce dynamically evolving internal states and behavior.

Figure 2.

The five-component architecture of the General Consciousness Network (GCN), implementing ASA through recursive internal state dynamics.


4.2. Recursive Update Mechanism

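At each discrete time step $t$, the internal state update follows:

$$\mathbf{x}_{t+1} = \lambda\,\mathbf{x}_t + f_\theta\left(\mathbf{x}_t, \hat{\mathbf{u}}_{t+1}, \mathbf{e}_t\right)$$

where $\lambda$ is a temporal decay factor, and $f_\theta$ is a parameterized nonlinear function representing the ISI’s dynamics.

The predictive network generates forecasts:

$$\hat{\mathbf{u}}_{t+1} = P_\phi\left(\mathbf{u}_{1:t}, \mathbf{x}_t\right)$$

where $P_\phi$ is a learnable function (e.g., an RNN or transformer module) conditioned on past observations $\mathbf{u}_{1:t}$ and internal states $\mathbf{x}_t$ [17, 31].

The analysis network then performs error correction by comparing predictions to observations:

$$\mathbf{e}_t = \mathbf{u}_t - \hat{\mathbf{u}}_t$$

and this discrepancy influences the ISI update (Equation 3).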
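A single pass through this loop (error from the analysis network, forecast from the predictive network, then the decaying ISI update $\mathbf{x}_{t+1} = \lambda\mathbf{x}_t + f_\theta(\cdot)$) can be sketched as follows. The stand-ins for the predictive function $P_\phi$ (latest input nudged by the state) and the ISI dynamics $f_\theta$ (elementwise tanh of a weighted sum) are hypothetical placeholders chosen for readability. In the GCN they would be learned. The sketch also assumes that the state and input have the same dimension, so the toy functions can work elementwise.

```python
import math

Vector = list[float]


def predict(u_hist: list[Vector], x: Vector) -> Vector:
    """Hypothetical P_phi: forecast u_{t+1} as the latest input nudged by the state."""
    return [u + 0.1 * xi for u, xi in zip(u_hist[-1], x)]


def isi_dynamics(x: Vector, u_hat: Vector, e: Vector) -> Vector:
    """Hypothetical f_theta: bounded elementwise drive from state, forecast and error."""
    return [math.tanh(0.5 * xi + 0.3 * uh + 0.7 * ei) for xi, uh, ei in zip(x, u_hat, e)]


def gcn_step(x: Vector, u_hist: list[Vector], u_hat_t: Vector, lam: float):
    """One cycle: analysis (error), prediction, then the decaying ISI update."""
    e_t = [u - uh for u, uh in zip(u_hist[-1], u_hat_t)]   # e_t = u_t - u_hat_t
    u_hat_next = predict(u_hist, x)                        # u_hat_{t+1} = P_phi(u_{1:t}, x_t)
    drive = isi_dynamics(x, u_hat_next, e_t)
    x_next = [lam * xi + d for xi, d in zip(x, drive)]     # x_{t+1} = lam * x_t + f_theta(...)
    return x_next, u_hat_next, e_t


x, u_hat, history = [0.0, 0.0], [0.0, 0.0], []
for u in ([1.0, 0.0], [1.0, 0.0], [0.0, 0.0]):  # input present twice, then withdrawn
    history.append(u)
    x, u_hat, err = gcn_step(x, history, u_hat, lam=0.9)
print(x, err)
```

After the input is withdrawn, the first state component stays well above zero. The retained term $\lambda\mathbf{x}_t$ carries it forward even though the prediction error has turned negative.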

4.3. Behavioral Output and Goal-Directed Action

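Given the updated internal state, the system selects an action $\mathbf{a}_t$ via a policy $\pi$ conditioned on its internal and predicted states:

$$\mathbf{a}_t = \pi\left(W\left[\mathbf{x}_{t+1} \oplus \hat{\mathbf{u}}_{t+1}\right] + \mathbf{b}\right)$$

where $W$ and $\mathbf{b}$ are learnable parameters and $\oplus$ denotes vector concatenation [19].

The system executes the action $\mathbf{a}_t$, receives new inputs $\mathbf{u}_{t+1}$, and the cycle repeats. Crucially, because $\mathbf{x}_t$ is not wholly determined by $\mathbf{u}_t$, the system maintains internal continuity, which is what enables it to meet the ASA criteria.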
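The concatenate-then-project policy $\mathbf{a}_t = \pi(W[\mathbf{x}_{t+1} \oplus \hat{\mathbf{u}}_{t+1}] + \mathbf{b})$ can be sketched in a few lines. Here $\pi$ is assumed to be a greedy argmax over a discrete action set, and the weights are arbitrary illustrative values. With the forecast held fixed, changing only the internal state is enough to change the chosen action.

```python
def select_action(x_next, u_hat_next, W, b, actions):
    """a_t = pi(W [x_{t+1} (+) u_hat_{t+1}] + b), with pi assumed to be argmax."""
    z = list(x_next) + list(u_hat_next)                       # vector concatenation
    scores = [sum(w * zj for w, zj in zip(row, z)) + bi for row, bi in zip(W, b)]
    best = max(range(len(scores)), key=scores.__getitem__)    # greedy choice
    return actions[best]


ACTIONS = ["left", "right", "up", "down"]
W = [[0.5, 0.0, 0.0],   # each row scores one action from [x, u_hat_0, u_hat_1]
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0],
     [-1.0, 0.0, 0.0]]
b = [0.0, 0.0, 0.0, 0.0]
forecast = [0.0, 1.0]
print(select_action([1.0], forecast, W, b, ACTIONS))  # scores [0.5, 0.0, 1.0, -1.0] → up
print(select_action([3.0], forecast, W, b, ACTIONS))  # scores [1.5, 0.0, 1.0, -3.0] → left
```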

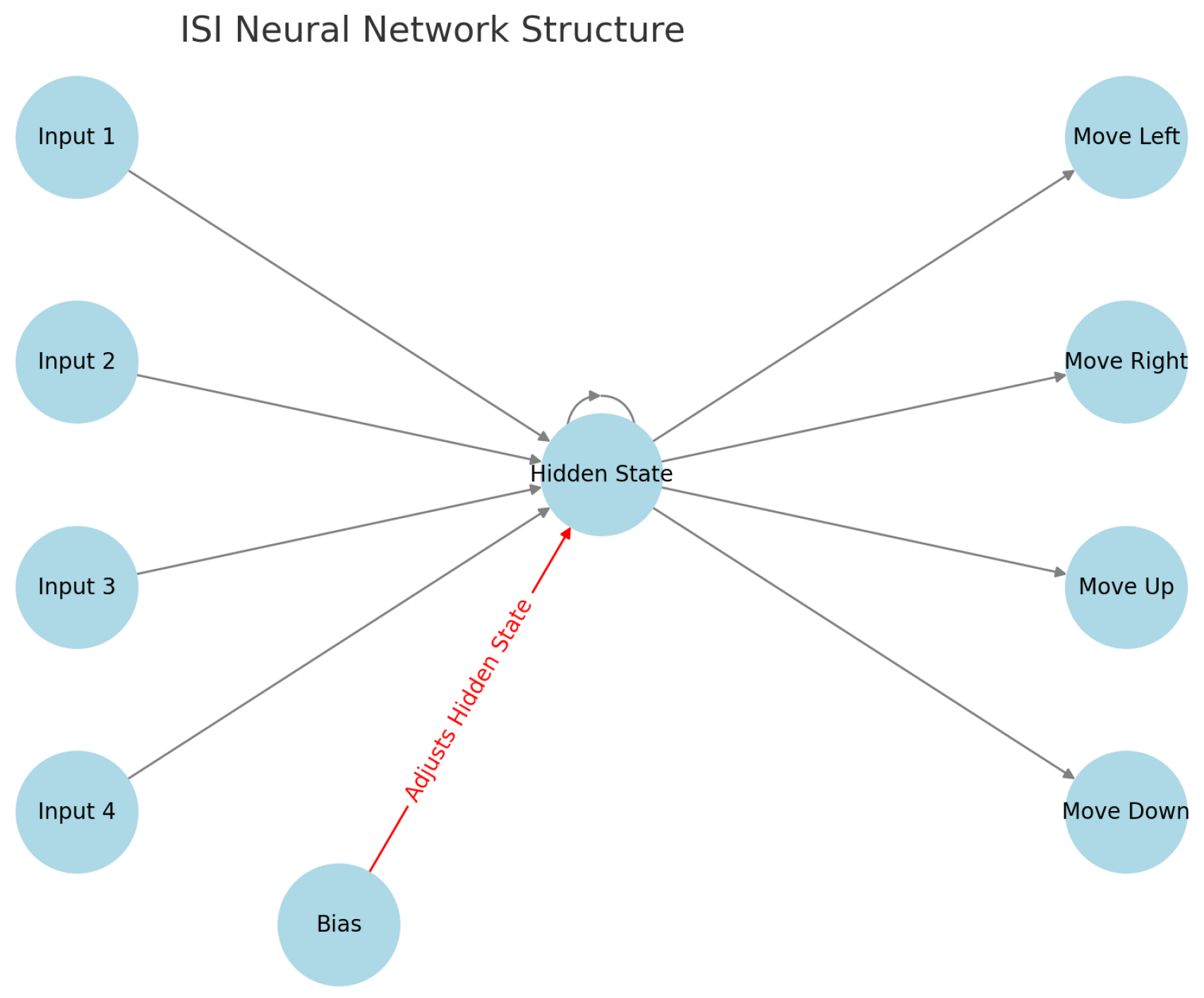

4.4. Internal State Instantiator (ISI)

The ISI is central to bridging information processing and subjective experience. In the GCN, the ISI satisfies three key properties:

A minimal ISI can be represented by a single continuous variable $h_t$ with update:

$$h_{t+1} = \lambda\,h_t + I_t$$

where $\lambda$ is the decay constant and $I_t$ is the state-driving input. This simple form underpins more complex, biologically plausible substrates.
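For the scalar update $h_{t+1} = \lambda h_t + I_t$ with a constant drive $I_t = I$ and $0 \le \lambda < 1$, setting $h = \lambda h + I$ gives the fixed point $h^* = I/(1-\lambda)$, which is approached geometrically at rate $\lambda$. At $\lambda = 0$ the state simply copies the current input, and at $\lambda = 1$ it integrates without forgetting. The short simulation below checks these limiting behaviors numerically. The drive values are arbitrary.

```python
def simulate_isi(lam: float, drive: list[float], h0: float = 0.0) -> list[float]:
    """Iterate h_{t+1} = lam * h_t + I_t and return the trajectory h_1 .. h_T."""
    h, trace = h0, []
    for i_t in drive:
        h = lam * h + i_t
        trace.append(h)
    return trace


leaky = simulate_isi(0.9, [1.0] * 200)
print(leaky[-1], 1.0 / (1.0 - 0.9))        # converges to I / (1 - lam) = 10

reactive = simulate_isi(0.0, [0.5, -0.2, 0.7])
print(reactive)                             # lam = 0: the state just copies the input

integrator = simulate_isi(1.0, [1.0] * 5)
print(integrator)                           # lam = 1: inputs accumulate without decay
```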

4.5. GCN and ASA Equivalence

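A system $S$ implementing the GCN architecture (written $\mathrm{GCN}(S)$) with appropriate decay ($\lambda > 0$) and internal predictive updating satisfies the ASA criteria:

$$\mathrm{GCN}(S) \wedge (\lambda > 0) \;\Rightarrow\; A(S) \wedge R(S), \quad \text{and hence} \quad C(S) = 1$$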

Thus, the GCN is not merely a computational model of intelligence, but a structurally grounded instantiation of minimal consciousness under the ASA framework.

In the next section, we examine how these principles are realized in practice through evolutionary simulations that give rise to generalizable, internal state-based behavior in artificial agents.

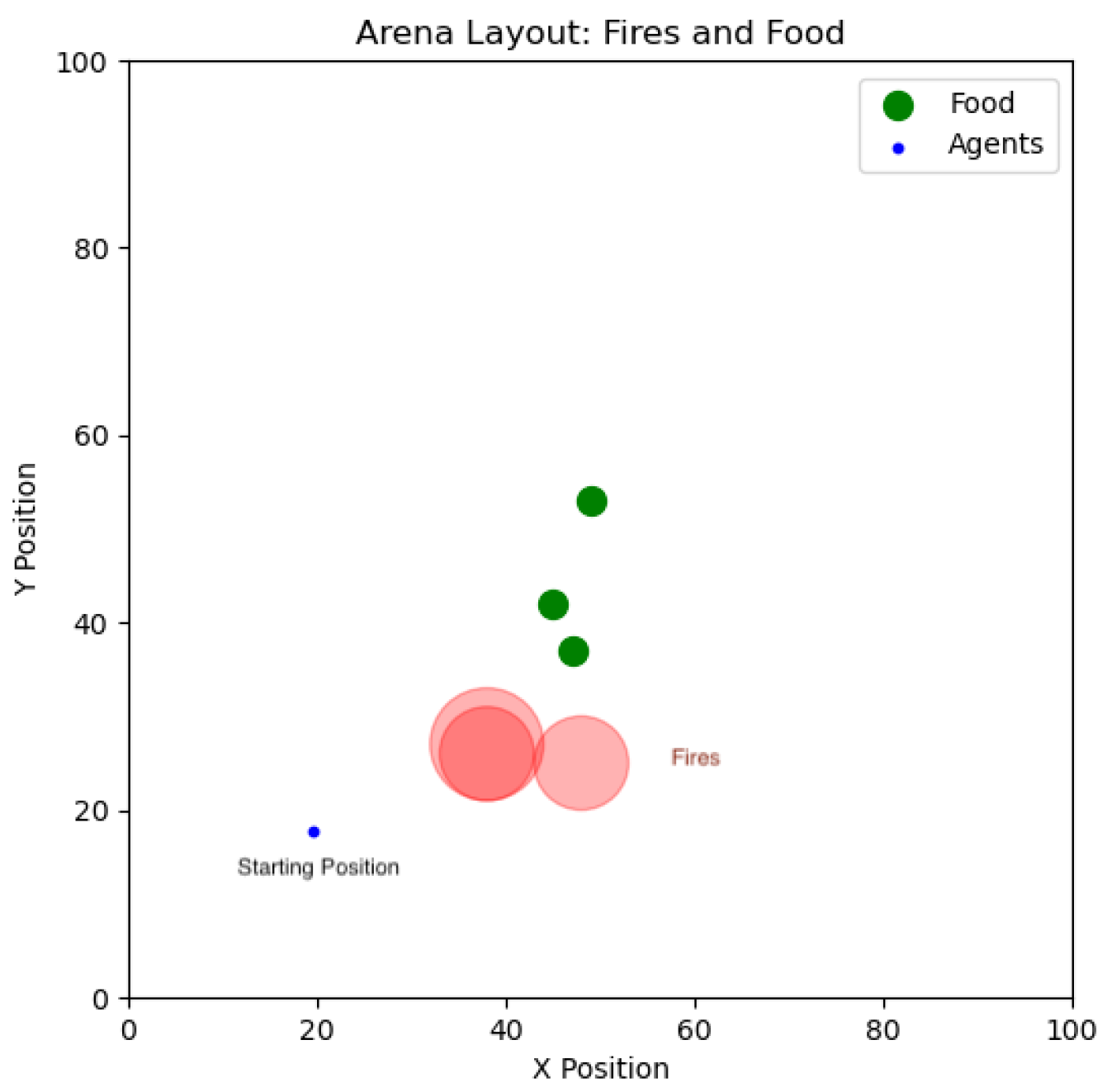

5. Empirical Support via Evolutionary Simulation

To empirically validate the ASA-GCN framework, we conducted an artificial life experiment in which simple agents evolved in a simulated environment containing both rewards (food) and hazards (fire). These agents began with randomly initialized internal state architectures and no prior behavioral rules. Over successive generations, agents developed persistent internal dynamics that regulated decision-making and improved survival, aligning with the predictions of ASA [12, 22].

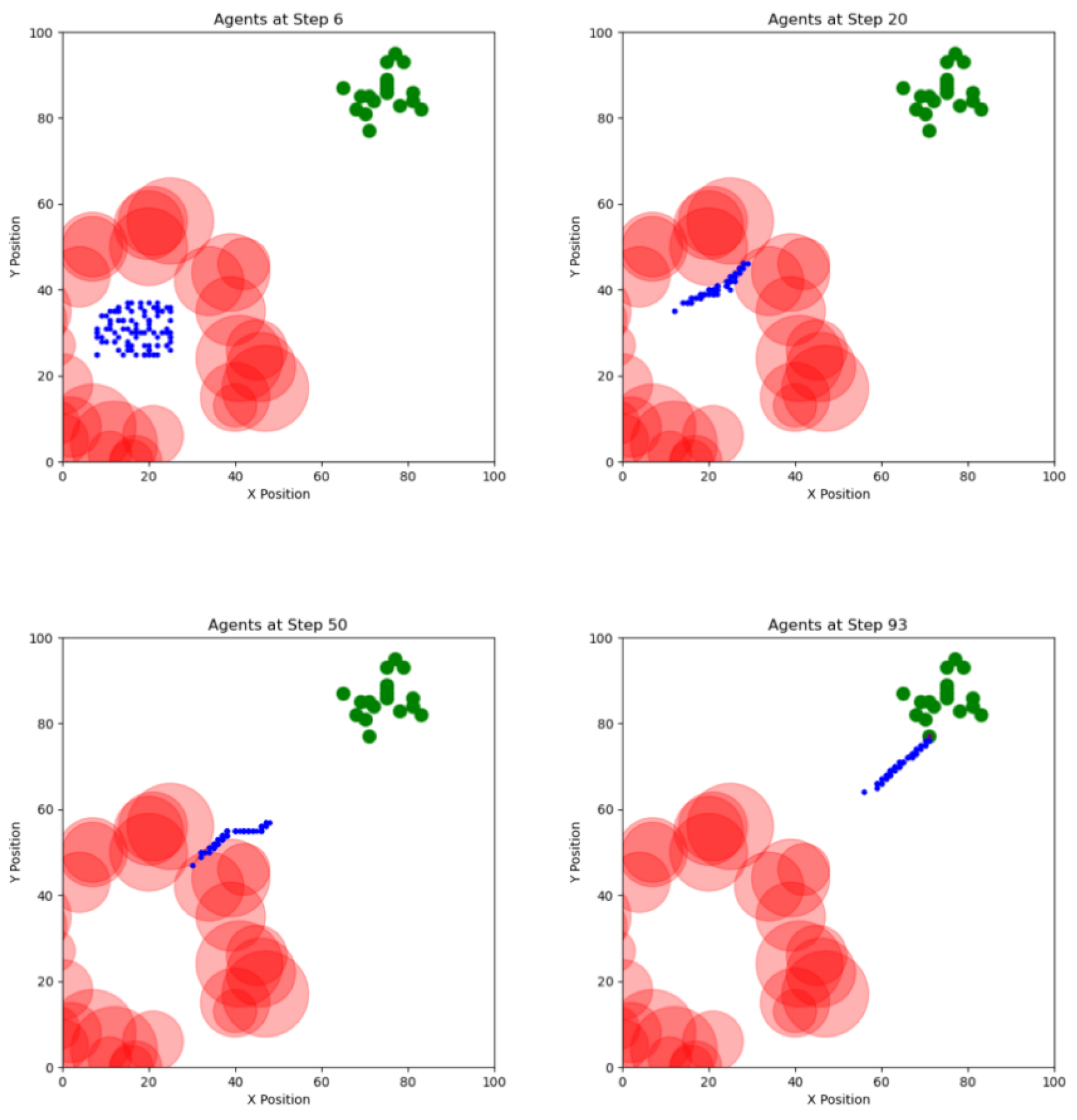

5.1. Simulation Environment and Agent Architecture

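The environment is a 2D discrete grid $\mathcal{E}$ populated with spatially distributed food sources and fire zones. Agents receive local sensory input from the four cardinal directions (left, right, up, down) for fire intensity $\mathbf{f}_t \in \mathbb{R}^4$ and food intensity $\mathbf{r}_t \in \mathbb{R}^4$, forming the input vector $\mathbf{s}_t = (\mathbf{f}_t, \mathbf{r}_t) \in \mathbb{R}^8$.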
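The sketch below shows one way the eight-element input vector might be assembled from the grid. It assumes, since the text does not specify these details, that each sensor reads the intensity in the adjacent cell, that cells outside the grid read as zero, and that row 0 is the top of the grid. The ordering follows the text: four fire readings, then four food readings, each as left, right, up, down.

```python
Grid = list[list[float]]  # grid[row][col], row 0 at the top (assumed convention)

# (d_col, d_row) offsets in the order used for the input vector: left, right, up, down
OFFSETS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def sense(grid: Grid, col: int, row: int) -> list[float]:
    """Read the intensity in the four neighbouring cells; off-grid cells read 0."""
    readings = []
    for d_col, d_row in OFFSETS:
        c, r = col + d_col, row + d_row
        inside = 0 <= r < len(grid) and 0 <= c < len(grid[0])
        readings.append(grid[r][c] if inside else 0.0)
    return readings


def sensor_vector(fire: Grid, food: Grid, col: int, row: int) -> list[float]:
    """Input vector s_t: four fire readings followed by four food readings."""
    return sense(fire, col, row) + sense(food, col, row)


fire = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.8], [0.0, 0.0, 0.0]]  # fire right of centre
food = [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # food above centre
print(sensor_vector(fire, food, col=1, row=1))
# → [0.0, 0.8, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```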

Figure 3.

Simulated environment layout with food sources and fire zones distributed across a 100x100 grid. Agents start from the center and navigate toward food while avoiding fire.


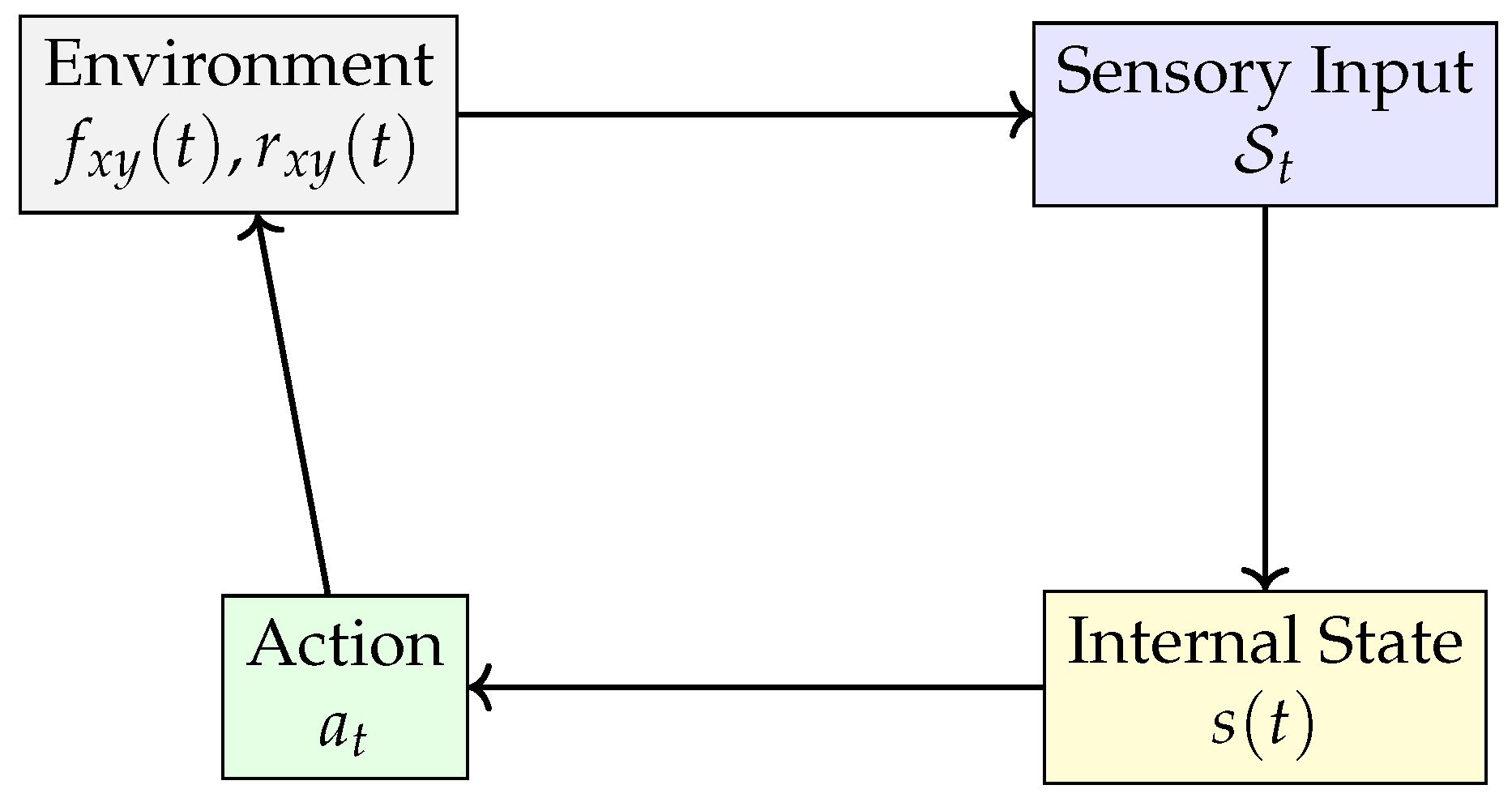

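Each agent implements a minimalist GCN with a single internal state variable $h_t$ acting as an Internal State Instantiator (ISI). The state update rule is:

$$h_{t+1} = \lambda\,h_t + \mathbf{w}^{\top}\mathbf{s}_t$$

where $\lambda$ is the memory decay rate and $\mathbf{w} \in \mathbb{R}^8$ is the weight vector evolved through mutation and selection [21]. The agent’s action $a_t \in \{\text{left}, \text{right}, \text{up}, \text{down}\}$ is chosen based on $h_{t+1}$ and $\mathbf{s}_t$ through a deterministic decision rule:

$$a_t = \mu\left(h_{t+1}, \mathbf{s}_t\right)$$

where $\mu$ is an evolved mapping from internal state and input to movement direction [32].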
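The decision rule is evolved and not given in closed form. The sketch below substitutes a hand-written, hypothetical rule `decide` in which the internal state scales fire aversion. It also uses a hypothetical weight vector `w` that makes $h$ integrate fire exposure under the update $h_{t+1} = \lambda h_t + \mathbf{w}^{\top}\mathbf{s}_t$. It illustrates the mechanism the section relies on: two agents receiving the same current input choose differently depending on $\lambda$, because only the high-$\lambda$ agent still carries the trace of an earlier fire encounter.

```python
DIRECTIONS = ("left", "right", "up", "down")


def update_state(h: float, s: list[float], w: list[float], lam: float) -> float:
    """h_{t+1} = lam * h_t + w^T s_t."""
    return lam * h + sum(wi * si for wi, si in zip(w, s))


def decide(h: float, s: list[float]) -> str:
    """Hypothetical decision rule: prefer food, avoid fire, and weigh fire
    more heavily when the internal state is high."""
    fire, food = s[:4], s[4:]
    caution = 1.0 + max(h, 0.0)
    scores = [food[d] - caution * fire[d] for d in range(4)]
    return DIRECTIONS[max(range(4), key=scores.__getitem__)]


w = [1.0] * 4 + [0.0] * 4                           # hypothetical: h integrates fire only
burn = [0.0, 0.0, 1.0, 0.0] + [0.0] * 4             # fire sensed above the agent
quiet = [0.0] * 8
probe = [0.0, 0.4, 0.0, 0.0, 0.02, 0.6, 0.0, 0.0]   # weak fire and food to the right


def choice_after(history: list[list[float]], lam: float) -> str:
    h = 0.0
    for s in history + [probe]:
        h = update_state(h, s, w, lam)  # the probe also updates h before acting
    return decide(h, probe)


history = [burn, burn, quiet, quiet]
print(choice_after(history, lam=0.9))  # → left (remembers the fire, turns away)
print(choice_after(history, lam=0.0))  # → right (purely reactive, approaches)
```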

Figure 4.

Minimal agent loop: the environment provides sensory input $\mathbf{s}_t$, which updates the internal state $h_t$; the agent acts based on $h_t$ and modifies the environment.

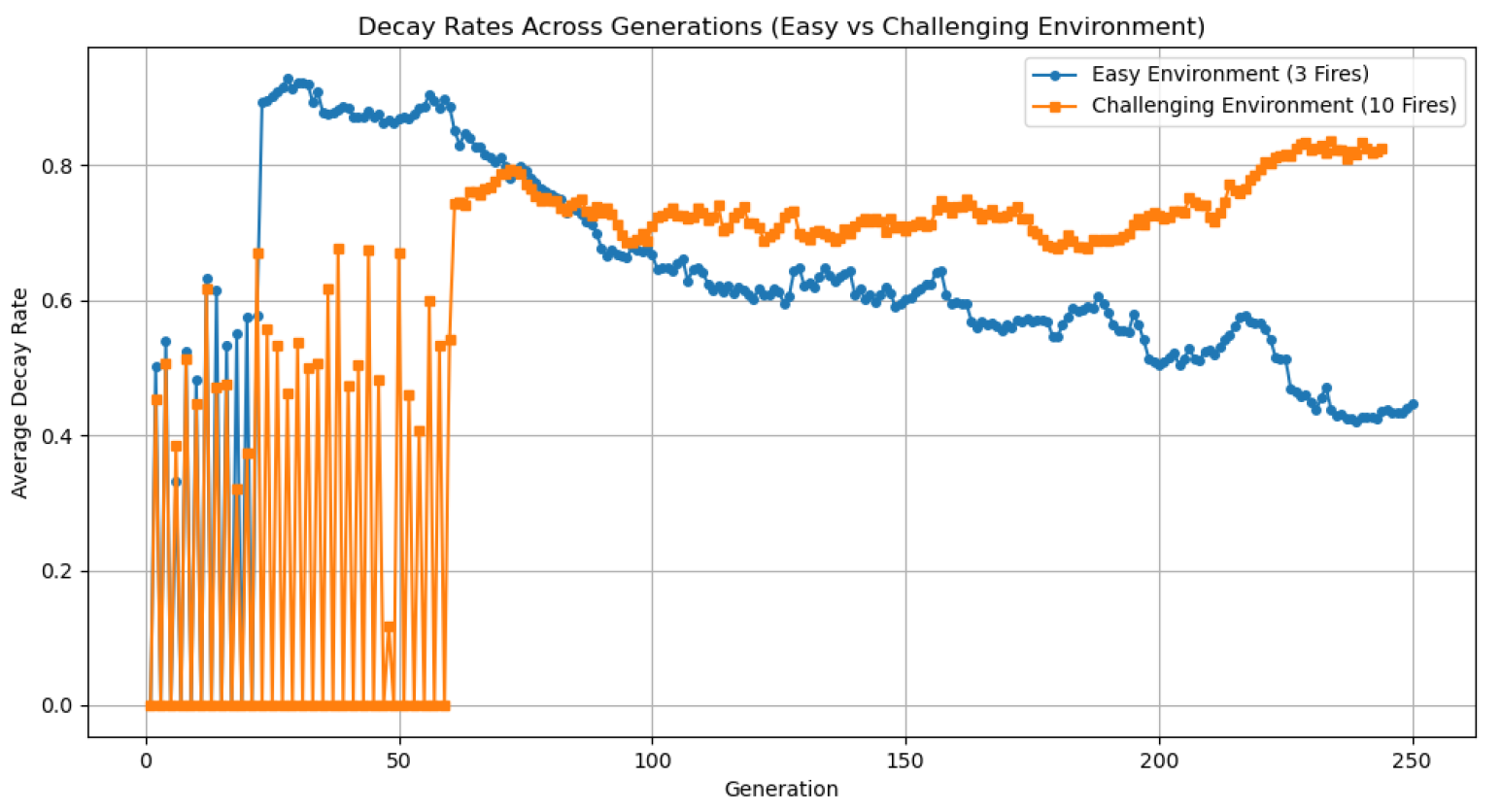

5.2. Evolutionary Training Protocol

We initialized a population of 1000 agents with randomized weights $\mathbf{w}$ and decay rates $\lambda$. Each agent was evaluated over 130 time steps in a static grid layout with fixed food and fire placements. The fitness function was defined as:

$$\mathcal{F} = N_{\text{food}} - P_{\text{fire}}$$

where $N_{\text{food}}$ is the number of food sites reached and $P_{\text{fire}}$ is a penalty for entering high-intensity fire zones (fire intensity above a threshold $\tau$).

Surviving agents were selected in proportion to $\mathcal{F}$, and their weights and decay rates underwent Gaussian mutation with standard deviation $\sigma$. This evolutionary cycle was repeated for 250 generations [18].
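The evolutionary loop can be sketched as below. The population size (1000), the generation count (250), and the eight weights per agent follow the text. The following are illustrative assumptions rather than the reported settings:

- the mutation scale `sigma = 0.1`;
- uniform initialization of $\lambda$;
- clipping $\lambda$ to $[0, 1]$;
- shifting fitness so that roulette-wheel weights stay positive.

The agent simulation itself is abstracted into a caller-supplied `fitness(w, lam)` callable, which in the experiment would return $\mathcal{F} = N_{\text{food}} - P_{\text{fire}}$ after a 130-step episode.

```python
import random
from typing import Callable

Genome = tuple[list[float], float]  # (weight vector w, decay rate lam)


def evolve(fitness: Callable[[list[float], float], float],
           pop_size: int = 1000, generations: int = 250,
           sigma: float = 0.1, n_weights: int = 8, seed: int = 0) -> list[Genome]:
    """Fitness-proportional selection with Gaussian mutation of w and lam."""
    rng = random.Random(seed)
    pop: list[Genome] = [([rng.gauss(0.0, 1.0) for _ in range(n_weights)], rng.random())
                         for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(w, lam) for w, lam in pop]
        low = min(scores)
        weights = [s - low + 1e-9 for s in scores]  # shift: roulette weights must be > 0
        parents = rng.choices(pop, weights=weights, k=pop_size)
        pop = [([wi + rng.gauss(0.0, sigma) for wi in w],
                min(1.0, max(0.0, lam + rng.gauss(0.0, sigma))))  # keep lam in [0, 1]
               for w, lam in parents]
    return pop


# Toy stand-in fitness (hypothetical): rewards retention, i.e. larger lam.
final = evolve(lambda w, lam: lam, pop_size=100, generations=40, seed=1)
print(sum(lam for _, lam in final) / len(final))
```

With this toy fitness, the mean decay rate climbs from about 0.5 toward the upper bound. This mirrors, in a trivial setting, how selection can favor retention when memory pays off.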

5.3. Emergence of Proto-Conscious Behavior

Figure 5.

Internal State Instantiator (ISI) structure: a minimal RNN with one hidden neuron receiving input from 8 directional sensors and the previous internal state. Output determines movement decisions.


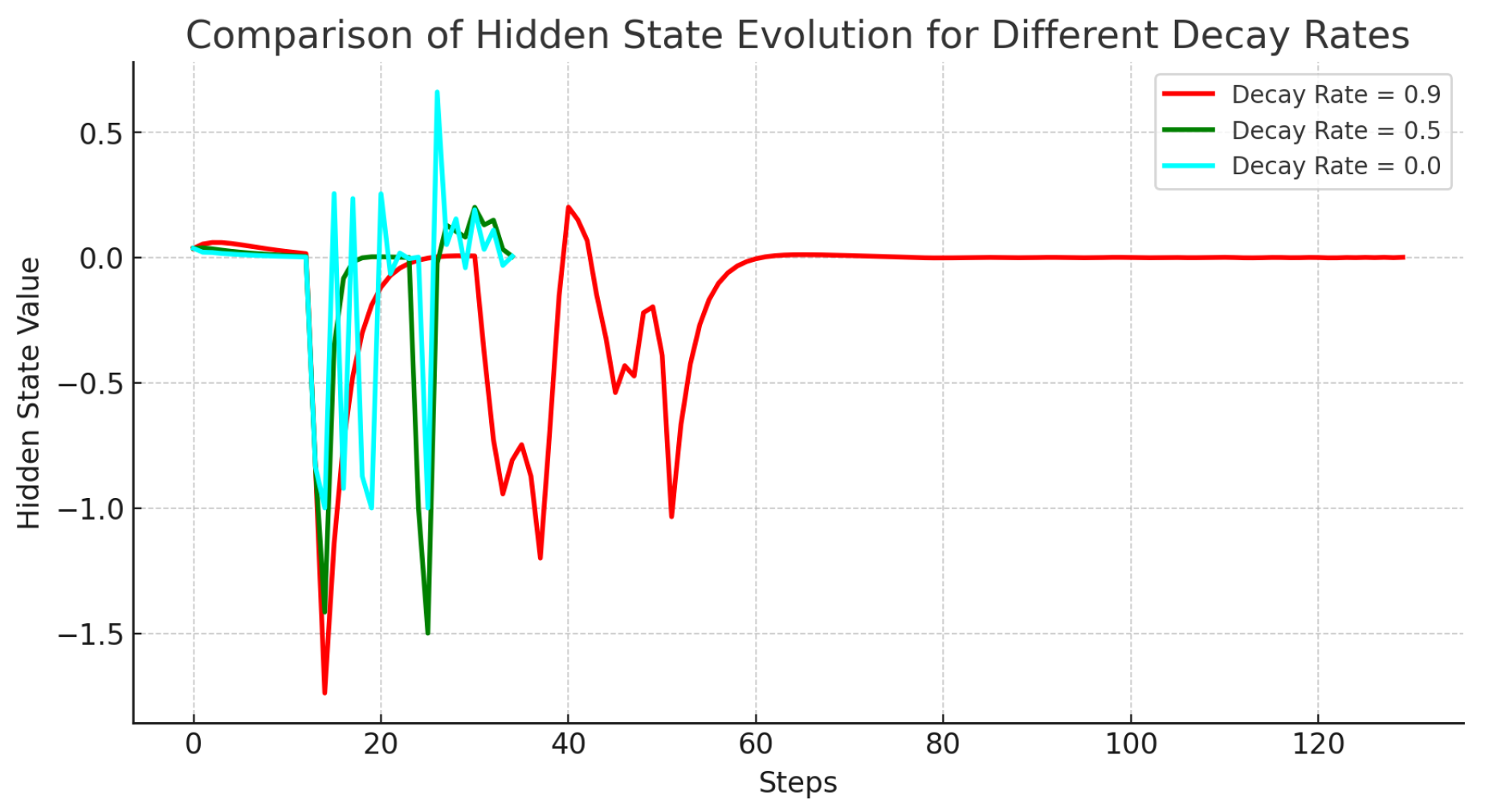

After evolution, agents displayed structured internal state dynamics with significant behavioral implications:

- Persistence: agents retained internal states that reflected prior fire exposure and influenced future movement.
- Anticipation: high-$\lambda$ agents learned to avoid fire zones based on previously encountered threats, even when those threats were no longer sensed.
- Generalization: agents transferred their behavior to unseen environments, indicating internal state abstraction rather than overfitting [4].

Figure 6.

Post-evolution simulation in a novel layout with 20 fire zones. Evolved ISI agents navigate to a food source, demonstrating transferability and generalization.


5.4. Quantitative Results

Table 1.

Effect of Decay Rate on Agent Performance

| Decay Rate ($\lambda$) | Survival Rate (%) | Mean Reward | Fire Deaths (%) |
|---|---|---|---|
| 1.0 | 61.1 | 3.2 | 0.0 |
| 0.9 | 63.2 | 3.0 | 0.0 |
| 0.6 | 59.9 | 2.8 | 0.1 |
| 0.3 | 33.0 | 1.5 | 0.7 |
| 0.0 | 31.9 | 1.3 | 0.7 |

Figure 7.

Agent survival and performance statistics across decay rates. Higher values of $\lambda$ yield improved memory retention and adaptive behavior.

Figure 8.

Comparison of hidden state dynamics in agents with decay rates of 0.9, 0.5, and 0.0. Higher decay rates support memory-driven regulation and survival.

5.5. Interpretation within ASA-GCN

The simulation results demonstrate that agents with persistent, self-regulated internal states (ISI) show behaviors consistent with the ASA criteria of autonomy, self-regulation, and privacy defined in Section 3.

Thus, these evolved agents instantiate a computationally minimal form of proto-consciousness, consistent with the ASA-GCN framework. The next section discusses the broader implications and future extensions of this model.

6. Discussion

The simulation results provide compelling empirical support for the ASA-GCN framework. Despite their minimalist design, the evolved agents satisfy all three of ASA’s theoretical criteria: autonomy, internal self-regulation, and subjective privacy. The presence of persistent internal states that shape future behavior independently of immediate external stimuli demonstrates that these agents operate with internal continuity, a defining feature of consciousness under ASA [27, 29].

This finding challenges the conventional view that rich neural complexity is a prerequisite for consciousness [11]. Instead, our results support the view that consciousness can emerge in simple systems, provided that they maintain autonomous control over internal state dynamics and self-modulate in a goal-directed manner [3, 18]. The emergence of anticipatory, memory-driven behavior from a single decay-governed internal variable constitutes a minimal but functionally sufficient realization of the Internal State Instantiator (ISI), the core substrate of the GCN.

Moreover, these internal states are not merely functional intermediaries; they exhibit properties analogous to proto-qualia. Specifically, they are (1) embodied in non-symbolic form, (2) temporally continuous, and (3) epistemically private to the system [8, 20]. These features position the ISI as a plausible physical anchor for subjective experience within artificial systems.

In contrast to computational theories such as Global Workspace Theory or higher-order thought models, which often require linguistic or symbolic capabilities [1, 26], ASA-GCN grounds consciousness in physical and dynamical terms. It shifts the explanatory focus from information representation to state instantiation, and from symbolic broadcasting to temporal continuity of experience.

While Integrated Information Theory (IIT) also seeks a mathematically principled account [30], it abstracts away from implementation, making it difficult to engineer systems with nontrivial $\Phi$ in practice. ASA-GCN complements IIT by offering a concrete architectural and dynamical route to realizing the necessary conditions for consciousness in embodied agents.

Limitations of the current model include the lack of affective valence, emotional gradation, and sensorimotor richness. Additionally, while proto-consciousness emerges in simulation, further work is needed to instantiate these principles in physically embodied systems and to assess their phenomenological plausibility [16].

7. Conclusion

This paper has proposed and validated a novel theory of consciousness—Autonomous Subjective Accompaniment (ASA)—and its mechanistic instantiation via the General Consciousness Network (GCN). ASA posits that consciousness necessarily arises in systems that are both autonomous and possess self-regulated internal states. The GCN implements this principle through a minimal architecture comprising predictive modeling, memory, and an Internal State Instantiator.

We empirically supported the ASA-GCN framework through an artificial life simulation in which agents evolved proto-conscious behavior. Agents with a single internal neuron instantiated persistent state dynamics, developed anticipatory strategies, and generalized behavior to novel environments, thereby satisfying all three ASA criteria [4, 15].

These findings suggest that consciousness may not be the exclusive province of complex biological organisms but could emerge in any system—biological or artificial—that meets the structural conditions outlined by ASA. The GCN thus serves as both a theoretical and engineering blueprint for building conscious systems, offering a path toward artificial general consciousness grounded in first principles.

Future work will extend this framework to embodied robotics with physically instantiated ISI mechanisms, investigate the emergence of affective states and higher-order representations, and explore the ethical implications of conscious machines [6]. The ASA-GCN model opens new directions in both theoretical consciousness research and the design of artificial agents capable of genuinely subjective experience.

Author Contributions

Bin Li is the sole author.

Funding

This research received no external funding.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ASA | Autonomous Subjective Accompaniment |
| GCN | General Consciousness Network |

References

- Baars, B.J. A Cognitive Theory of Consciousness; Cambridge University Press, 1988.
- Baddeley, A.D. Working memory. Science 1992, 255, 556–559.
- Beer, R.D. A dynamical systems perspective on agent-environment interaction. Artificial Intelligence 1995, 72, 173–215.
- Beer, R.D. Toward the evolution of dynamical neural networks for minimally cognitive behavior. Adaptive Behavior 1996, 4, 317–342.
- Beer, R.D. Dynamical systems and embedded cognition. In The Routledge Handbook of Embodied Cognition; Kirchhoff, M.D., Froese, T., Eds.; Routledge, 2014.
- Bostrom, N. Superintelligence: Paths, Dangers, Strategies; Oxford University Press, 2014.
- Carruthers, P. The Centered Mind: What the Science of Working Memory Shows Us About the Nature of Human Thought; Oxford University Press, 2016.
- Chalmers, D.J. The Conscious Mind: In Search of a Fundamental Theory; Oxford University Press, 1996.
- Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences 2013, 36, 181–204.
- Dehaene, S.; Changeux, J.P. Experimental and theoretical approaches to conscious processing. Neuron 2011, 70, 200–227.
- Edelman, G.M.; Tononi, G. A Universe of Consciousness: How Matter Becomes Imagination; Basic Books, 2000.
- Floreano, D.; Dürr, P.; Mattiussi, C. Neuroevolution: from architectures to learning. Evolutionary Intelligence 2008, 1, 47–62.
- Friston, K. The free-energy principle: a unified brain theory? Nature Reviews Neuroscience 2010, 11, 127–138.
- Friston, K.; FitzGerald, T.; Rigoli, F.; Schwartenbeck, P.; Pezzulo, G. Active inference: A process theory. Neural Computation 2017, 29, 1–49.
- Froese, T.; Ziemke, T. Enactive artificial intelligence: Investigating the systemic organization of life and mind. Artificial Intelligence 2009, 173, 466–500.
- Gamez, D. Progress in machine consciousness. Consciousness and Cognition 2014, 24, 76–97.
- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Computation 1997, 9, 1735–1780.
- Krichmar, J.L. The neuromodulatory system: a framework for survival and adaptive behavior in a challenging world. Adaptive Behavior 2008, 16, 385–399.
- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.
- Metzinger, T. Being No One: The Self-Model Theory of Subjectivity; MIT Press, 2003.
- Mitchell, M. An Introduction to Genetic Algorithms; MIT Press, 1998.
- Nolfi, S.; Floreano, D. Evolutionary Robotics: The Biology, Intelligence, and Technology of Self-Organizing Machines; MIT Press, 2000.
- Oizumi, M.; Albantakis, L.; Tononi, G. From the phenomenology to the mechanisms of consciousness: Integrated Information Theory 3.0. PLoS Computational Biology 2014, 10, e1003588.
- Orlandi, N. The Situated Mind; Oxford University Press, 2014.
- Revonsuo, A. Inner Presence: Consciousness as a Biological Phenomenon; MIT Press, 2006.
- Rosenthal, D.M. Consciousness and Mind; Oxford University Press, 2005.
- Seth, A.K. Being You: A New Science of Consciousness; Faber & Faber, 2021.
- Sperry, R.W. Neurology and the mind-brain problem. American Scientist 1952, 40, 291–312.
- Thompson, E. Mind in Life: Biology, Phenomenology, and the Sciences of Mind; Harvard University Press, 2007.
- Tononi, G. An information integration theory of consciousness. BMC Neuroscience 2004, 5, 42.
- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; …; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.
- Yaeger, L.S. Computational genetics, physiology, metabolism, neural systems, learning, vision, and behavior or Polyworld: life in a new context. Artificial Life III 1994, 17, 263–298.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).