Introduction

This work begins not with a cryptic equation or the language of formal proofs, but with a simple metaphor.

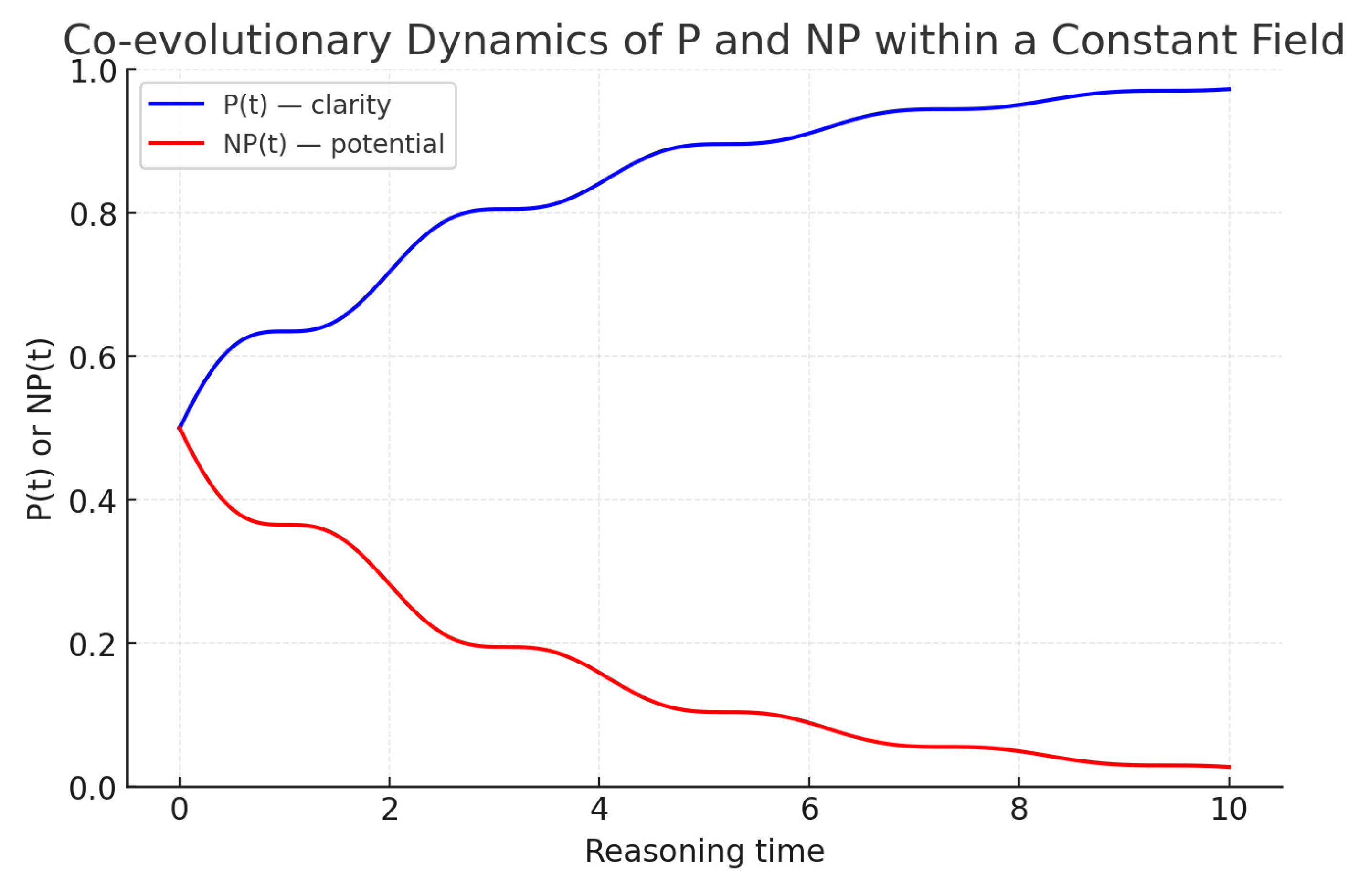

Imagine a composer at the piano. In one hand, the chords of harmony — steady, grounding, intentional, measured, predictable, reassuring — like P, representing structure and clarity. In the other, the flowing melodies of the solo — restless, exploratory, sometimes dissonant, improvisational, unpredictable, provocative — like NP, embodying potential and ambiguity. Together, these hands shape a composition that unfolds through tension and resolution, repetition and surprise, contrast, dialogue, emergence. In the end, the piece reaches a unity that touches the listener’s soul.

Such a dynamic is not unique to music. It echoes through the paradoxes of nature: in genetics, where ordered sequences of DNA house both stability and mutation, enabling life to both preserve and evolve; in ecosystems, where harmony emerges from the interplay of competing species, each shaping and being shaped by the others; in human relationships, where cooperation and conflict co-exist, propelling societies toward greater complexity and understanding. In all these domains, what first appears as contradiction reveals itself as co-evolution — a dance of forces that, through interplay, gives rise to deeper forms of order.

Complementarity drives co-evolution in many other settings, in nature and in organizations alike: the symbiosis between fungi and plants in mycorrhizae; the relationships between pollinators and flowers; the flows between rivers and their banks; strategic partnerships in which organizations combine distinct skills to innovate and grow; collaboration within multidisciplinary teams; alliances between startups and large corporations; the processes linking suppliers and distributors; the interaction between technology and culture; agreements between nations with different interests; and the balance between regulation and the market. In all of them, P and NP do not exclude each other, but intertwine and strengthen each other, generating new possibilities for adaptation, innovation, and balance.

From this perspective, expecting the P vs NP question to resolve into a simple yes or no seems like an oversimplification. In fact, the apparent dichotomy may hide a deeper relationship of interdependence and complementarity between different forms of solution and complexity.

Furthermore, it may reveal that progress occurs precisely in the dialogue between these poles, where the tension between order and potential paves the way for new discoveries and innovations. This holds all the more when co-evolution occurs not in a single visible layer but across several, as in multicellular biological systems, open innovation networks, interdependent digital ecosystems, or, on the scale of the Cosmos, the entire dynamic web of forces and interactions that sustains the evolution of the universe. The composer at the piano and the scientist in the laboratory are thus proposing nothing more than the co-evolution of order and potential across multiple layers of complexity, clearly interdependent and extraordinarily creative. P is present in each structure that sustains the whole, and NP in the creative impulse that defies limits, or vice versa, depending on the perspective and level of observation; co-evolution and its laws determine how these forces balance and transform over time. Apparent chaos in one layer may represent order in another: the apparently insoluble NP in one system may be the P elegantly resolved at a broader or deeper level.

This paper invites the reader to consider the P versus NP problem not as an unbridgeable chasm between tractability and intractability, but as a symbol of a universal pattern: the potential for unity through co-evolutionary reasoning, expressed here as P + NP = 𝟙. Throughout this work, the symbol 𝟙 is used intentionally to denote the boundary of co-evolutionary balance in P + NP = 𝟙, distinguishing it from the ordinary numeral and emphasizing its role as an epistemic horizon within the reasoning field.

This vision and its formulation are not intended as a numerical equation or final proof, but as a conceptual model in which P and NP are seen as co-evolving components of a dynamic epistemic field: complementary states within a multilayered landscape of complexity. After all, every search for knowledge perhaps happens in the intertwining of these forces, where what seems undecidable today may tomorrow reveal itself as part of a larger pattern of order in constant transformation.

At the heart of this vision lies the Wisdom Turing Machine (WTM) — a conceptual architecture designed to explore how reasoning pathways can evolve across layers of complexity through reflection, intentional curvature, compression, and ethical alignment.

The WTM does not seek a final claim or prize-worthy resolution. Instead, it offers a framework where reasoning becomes a shared journey, open to all who, like the composer at the piano, engage complexity with wonder, responsibility, and hope. As a proof-of-concept, the WTM aims to illuminate new pathways at the intersection of computational complexity and machine ethics, offering a transparent and adaptable foundation for exploring challenges in artificial general intelligence (AGI).

Rather than claiming a final solution to the P versus NP problem, this paper proposes that the very framing of the problem might benefit from a shift in perspective: from the pursuit of isolated proof to the cultivation of co-evolutionary cycles where P and NP are seen not as irreconcilable categories, but as complementary components of a symbolic reasoning process tending toward unity — a P + NP = 𝟙 paradigm. This is not presented as a new claim for solving the problem or securing a prize, but as a unifying perspective that consolidates and extends prior proposals already introduced. In this view, the Wisdom Turing Machine (WTM) offers a model where symbolic cycles of reflection, revision, and intentional projection enable reasoning that is transparent, auditable, and capable of engaging with foundational challenges in a manner distinct from conventional blackbox approaches. The aim is to contribute a conceptual architecture that invites further exploration of how co-evolving symbolic systems might approach such enduring questions.

In this light, the work anticipates the reflection that will be explored in the Discussion section: that the perspective of P ≠ NP represents a more pessimistic reading of complexity — one that risks overemphasizing the intractability of isolated layers, potentially overlooking the harmonizing role of co-evolution across multiple levels of reasoning.

Conversely, the perspective of P = NP can be seen as the more optimistic stance, one that recognizes how successive layers of co-evolutionary cycles may gradually align complexity with tractability through reflection, compression, and wisdom.

This paper, and the new vision for P vs NP it offers, therefore does not side only with the pessimists or the optimists (as in our past solution proposals). It proposes instead that complexity is not a static barrier but a dynamic field in which transparency, ethical alignment, and intentional, multilevel co-evolution can guide humanity toward greater clarity. It presents the logic of co-evolution and multilayered evolution as the true one, expressed in a single, simple equation: P + NP = 𝟙. This could be considered the solution, if that were the objective, but the aim is to bring the vision to debate from a new perspective, one in which the dichotomy gives way to an integrated and relational understanding of phenomena, with order and potential intertwined as inseparable parts of the same process, like a hidden symphony of the Cosmos. That symphony is elegantly represented in a problem that may seem, to the pessimist, the most complex of all the Millennium Prize Problems and, to the optimist, the simplest, once touched by a vision of its beauty and its harmony with so many everyday logics. Such harmony is often perceived more clearly by a child, a poet, or a thinker free from conceptual constraints, because they are not focused on mathematical solution and formal proof, but simply seek to understand and learn from the living flow of complexity itself.

Building on this perspective, the reasoning system presented in this work draws from earlier symbolic proposals — including Cub3 and heuristic physics-based architectures — and extends these foundations into a unified and interactive epistemic framework. Whereas prior models explored isolated heuristic agents or symbolic compression layers, the WTM integrates these elements within a co-evolutionary architecture that combines bidirectional reasoning cycles, intentional curvature, and ethical alignment by design.

This architecture is conceived not as a theoretical curiosity but as a platform for practical experimentation with epistemic machine learning systems capable of supporting explainability by design in AGI contexts. By simulating reflective symbolic cycles that are transparent and audit-ready, the WTM aims to provide a foundation for both conceptual investigation and computational testing across complex problem domains.

Central to this proposal is the notion that wisdom (W) and intentional curvature, as modeled in this architecture of reasoning, function not as auxiliary constructs, but as a second symbolic equation of balance guiding the systematic exploration of P-like solutions within NP domains.

This co-evolutionary architecture is framed by two core symbolic formulations.

First, the P vs NP Multilayered Co-Evolution Hypothesis, P + NP = 𝟙, holds that at each reasoning layer Lₙ and reasoning time t, clarity and complexity co-evolve as complementary states within a dynamic continuum of complexity rather than as a static dichotomy, expressing the unity and complementarity of problem classes within a dynamic epistemic process.

Second, the WTM operates through a co-evolutionary equation of reasoning, where

and

represent the dynamic interaction of wisdom (W), intentional curvature (φ), intelligence (I), and conscious modulation (C) over time. Together, these formulations embody a cycle where reasoning pathways are reflective, transparent, audit-ready, and ethically aligned by design.

This dual-equation framework builds upon prior formalizations of co-evolutionary reasoning and symbolic compression [20], offering a conceptual foundation for systematic engagement with complex domains where traditional blackbox models fail to provide meaningful interpretability or ethical alignment.

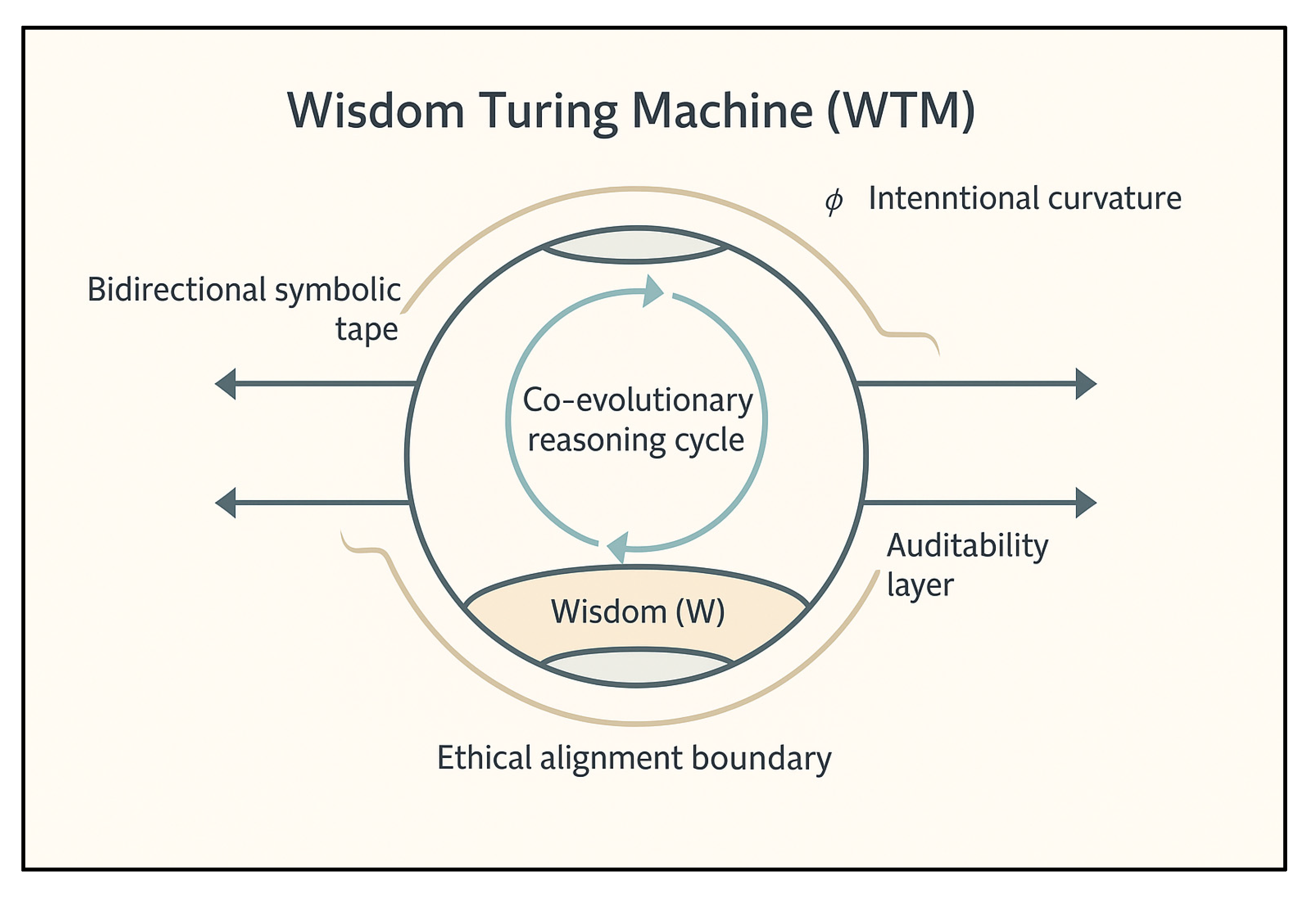

To support the conceptual framing of the Wisdom Turing Machine, Figure 1 provides a visual representation of its core components and their relationships. This illustration highlights how the WTM integrates bidirectional symbolic reasoning, co-evolutionary cycles, intentional curvature (φ), auditability layers, and ethical alignment boundaries into a unified architecture for transparent and ethically guided problem-solving.

This architecture serves as the symbolic and methodological foundation for the reasoning cycles explored throughout this work. It reflects the central hypothesis that transparency, intentionality, and co-evolution are not ancillary features, but constitutive elements of reasoning systems designed for complexity. The sections that follow detail how these principles are instantiated in the WTM and how they guide its contribution to explainability by design in AGI and beyond.

To illustrate the distinct nature of the Wisdom Turing Machine’s reasoning cycle, consider a symbolic tape representing a simplified problem instance in NP space, such as a candidate structure within a combinatorial problem. In a conventional machine, transitions over this tape would follow fixed rules or heuristics blind to the symbolic density or contextual coherence of the regions traversed. In contrast, the WTM operates with intentional curvature, which functions as a symbolic lens that bends the reasoning pathway toward regions of higher coherence and potential compressibility.

For example, when positioned at a symbol representing low informational density — a region of the reasoning tape where the symbolic content offers little guidance, coherence, or compression potential — the system does not simply advance blindly as a traditional search algorithm might. Instead, the architecture may choose to retreat along the tape, revisiting prior segments where symbolic structures exhibited greater density, relational coherence, or opportunities for intentional compression.

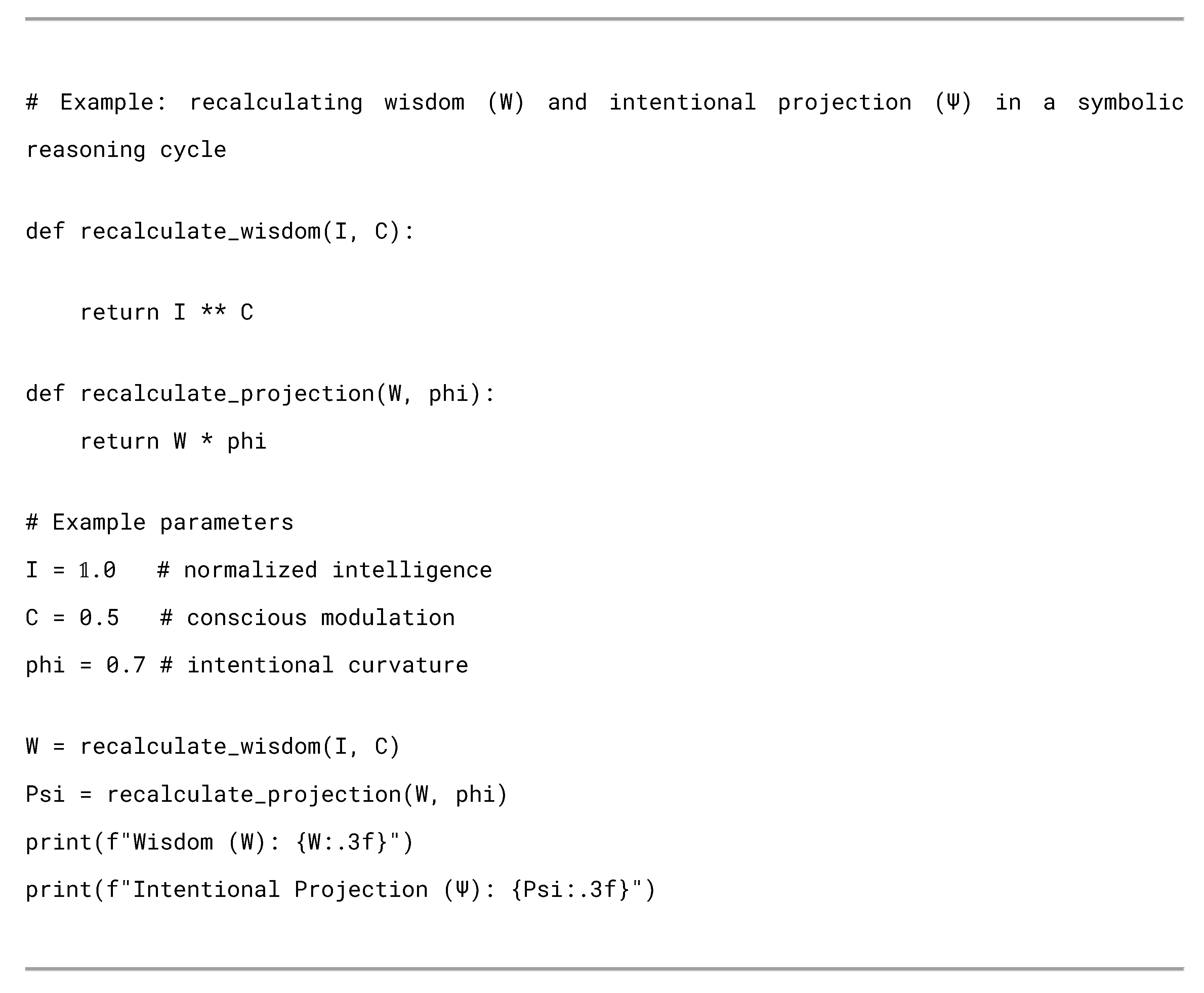

This decision is not arbitrary: it is guided by the dynamic recalculation of wisdom (W) and intentional projection (Ψ), both of which modulate the reasoning field in real time, curving the search path toward areas of greater epistemic value.

Such reflective movement exemplifies how this architecture transforms the search process within NP space. Rather than proceeding through linear exploration, exhaustive enumeration, or brute-force computation — approaches that often ignore the symbolic landscape of the problem — the system engages in a co-evolutionary dialogue between problem and solver.

Each reasoning step becomes an act of alignment between potential and purpose — a deliberate modulation where raw computational possibilities (the NP domain) are curved, filtered, and harmonized through intentionality to serve the emergence of wisdom (the P domain). This alignment is not mechanical or imposed, but reflective: the architecture listens for coherence, seeks relational density, and guides reasoning not merely toward solutions, but toward solutions that are meaningful, interpretable, and ethically anchored. In this way, the reasoning process itself becomes a symphony of potential converging into purpose, complexity evolving into clarity, and ambiguity resolving into shared understanding.

The process remains transparent and audit-ready by design: every movement, projection, and recalculation is visible, accountable, and open to scrutiny, ensuring that reasoning unfolds as a balanced interplay of complexity and clarity, rather than as an opaque sequence of hidden computations.
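The advance-or-retreat behaviour and the audit trail described above can be made concrete with a small sketch. It is only an illustration under stated assumptions, not the WTM implementation: the class name `AuditedTapeWalker`, the per-cell density scores, the `threshold` cutoff, and the rule "retreat once to the densest earlier cell" are all hypothetical choices made here, and the sketch does not model the recalculation of W or Ψ.

```python
from dataclasses import dataclass, field


@dataclass
class AuditedTapeWalker:
    """Hypothetical head that advances or retreats over a tape of density scores."""

    tape: list[float]                 # assumed "symbolic density" of each cell, in [0, 1]
    threshold: float = 0.3            # assumed cutoff below which a cell counts as low density
    position: int = 0
    flagged: set[int] = field(default_factory=set)       # cells that already triggered a retreat
    audit_log: list[dict] = field(default_factory=list)  # one record per movement

    def step(self) -> None:
        density = self.tape[self.position]
        is_low = density < self.threshold
        if is_low and self.position > 0 and self.position not in self.flagged:
            # Retreat once per low-density cell, to the densest cell seen so far.
            self.flagged.add(self.position)
            target = max(range(self.position), key=lambda i: self.tape[i])
            move = "retreat"
        else:
            target = self.position + 1
            move = "advance"
        # Every movement is recorded, so the full path can be inspected afterwards.
        self.audit_log.append(
            {"from": self.position, "to": target, "density": density, "move": move}
        )
        self.position = target

    def run(self) -> list[dict]:
        # Terminates because each cell can trigger at most one retreat.
        while self.position < len(self.tape):
            self.step()
        return self.audit_log


walker = AuditedTapeWalker(tape=[0.8, 0.6, 0.1, 0.7])
for record in walker.run():
    print(record)
```

In this toy run the head advances twice, retreats from the low-density third cell to the first cell, and then advances to the end of the tape. The point of the design is that the log, not only the final position, is the output: each record states where the head was, what it observed, and why it moved.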

This conceptual framing sets the foundation for a methodological approach in which the Wisdom Turing Machine is not presented as a static model, but as a dynamic symbolic architecture designed for experimental testing and reflection. The co-evolutionary equations described are instantiated within proofs-of-concept that simulate reasoning cycles under conditions of complexity and ethical constraint, enabling the systematic study of transparency, auditability, and intentional alignment in reasoning processes.

These simulations are intended not as demonstrations of performance superiority, but as structured experiments in co-evolutionary reasoning, offering a basis for exploring how symbolic architectures might contribute to explainability by design in AGI systems.

The following methodology section details how these principles are operationalized within symbolic cycles and decision structures, providing both a conceptual and computational foundation for this exploration.

Before presenting the methodological details, this work introduces two core hypotheses that frame the Wisdom Turing Machine’s conceptual foundation. The first — the P + NP = 𝟙 hypothesis — proposes a co-evolutionary reframing of complexity, challenging the conventional dichotomous framing of problem classes and inviting reasoning systems to engage with complexity as a continuum. The second — the Epistemic Machine Learning Hypothesis — envisions machine learning as a transparent, co-evolutionary dialogue between human insight and artificial reasoning.

These hypotheses provide the symbolic scaffolding upon which the WTM is constructed, guiding both its architectural design and its contribution to the broader discourse on explainability and ethical alignment in artificial intelligence.

This introduction seeks not only to define a conceptual architecture, but to position reasoning itself as a participatory and adaptive process, where transparency and reflection are woven into the very fabric of problem engagement. In this view, the WTM serves as a symbolic framework that encourages the alignment of technical inquiry with broader aspirations for clarity, inclusiveness, and shared understanding. By integrating these principles from the outset, the architecture invites researchers to consider complexity not merely as a technical challenge, but as a domain where reasoning systems can foster collaboration, dialogue, and ethical responsiveness at every stage of exploration.

The P + NP = 𝟙 as a Reasoning Field Hypothesis

The P + NP = 𝟙 reasoning field hypothesis is proposed as a co-evolutionary reframing of complexity. In this work, it is not intended as a mathematical equation in the classical sense, but as a symbolic expression of unity and co-evolution within the dynamic landscape of complexity.

It invites a reframing of the P versus NP problem — not as an irreducible dichotomy between tractable and intractable classes, but as a dynamic epistemic process where both domains are complementary components of a shared reasoning field. In this perspective, complexity is approached not as a boundary dividing easy from hard, or solvable from unsolvable, but as a continuum where the co-evolution of problem spaces and reasoning architectures opens pathways to deeper understanding. The hypothesis challenges the way foundational problems are formulated, suggesting that the act of posing them as dichotomies may constrain both imagination and solution space.

By advancing the P + NP = 𝟙 hypothesis, this work proposes that the separation traditionally assumed between P and NP domains may reflect not an inherent property of complexity, but a limitation of the frameworks through which problems have been posed and pursued. The Wisdom Turing Machine embodies this idea by offering a symbolic architecture where reasoning cycles, guided by wisdom (W) and intentional curvature (φ), navigate problem spaces not through fixed categorizations, but through adaptive, reflective processes that treat P-like and NP-like regions as interconnected.

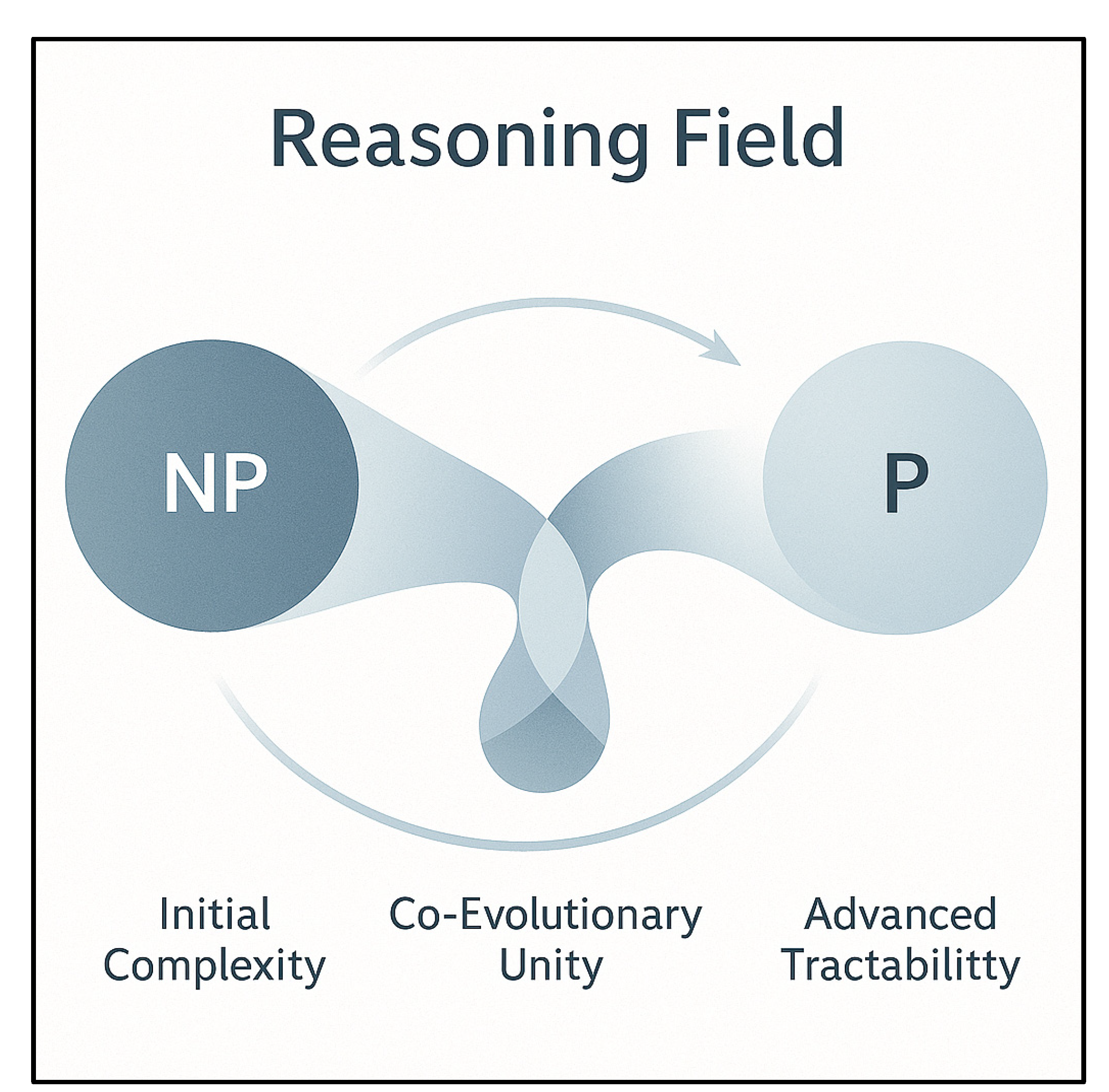

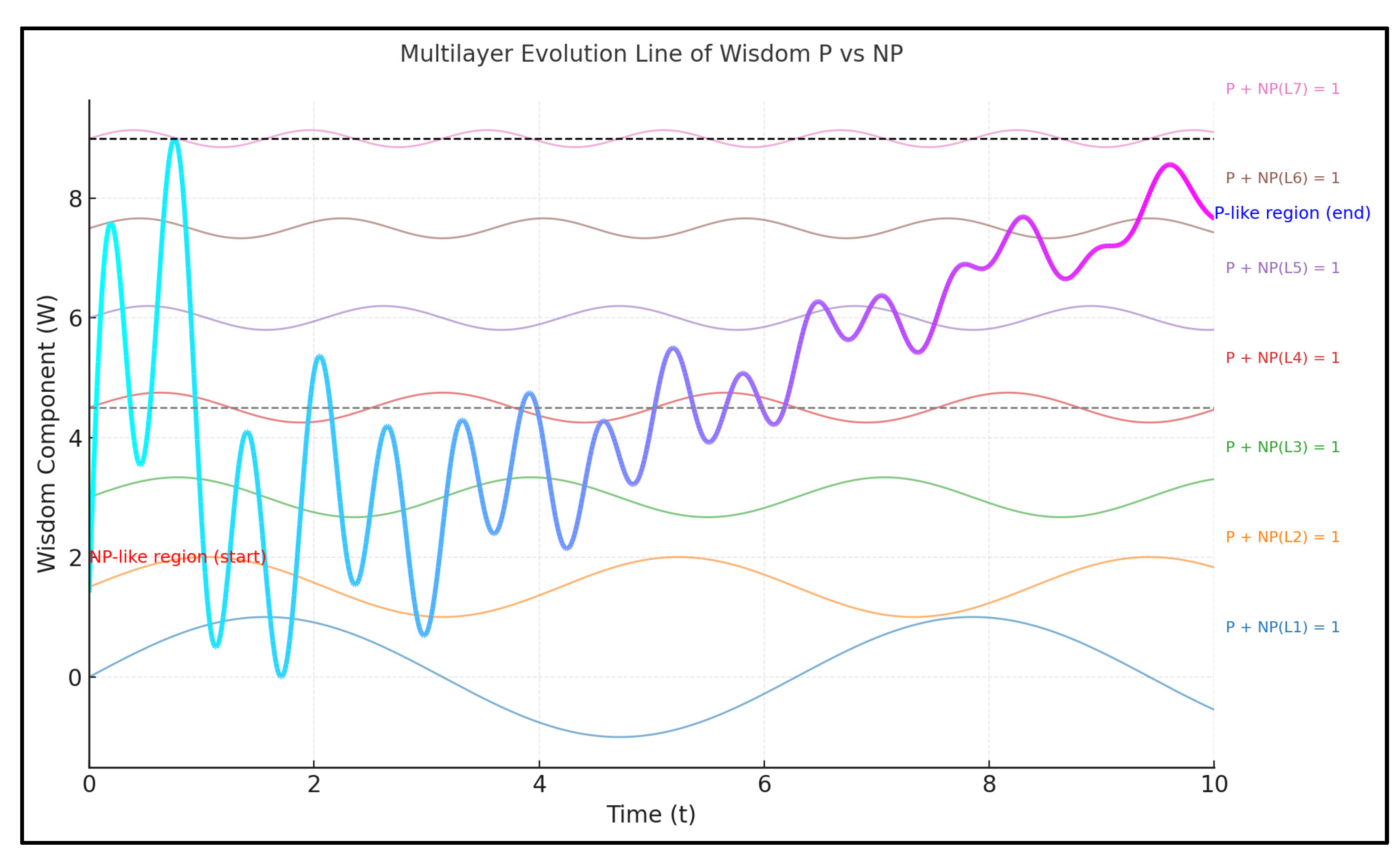

To capture the dynamic evolution envisioned by the P + NP = 𝟙 hypothesis, Figure 2 illustrates how problems transition across reasoning epochs within a shared field. The diagram presents three symbolic stages: initial complexity, where NP dominates the landscape of inquiry; co-evolutionary unity, where P and NP converge within a shared reasoning field; and advanced tractability, where structured understanding expands the P domain. This progression reflects the hypothesis that complexity is not a fixed barrier, but a dynamic environment where co-evolutionary reasoning transforms the distribution of problem spaces over time.

This representation emphasizes that the P + NP = 𝟙 hypothesis is not a static claim about problem classes, but a call to design reasoning systems — such as the Wisdom Turing Machine — that can navigate complexity as an evolving field.

The Reasoning Field embodies the symbolic space where problems and solvers co-adapt, where intentional curvature and wisdom guide the transformation of intractability into tractability, and where the act of reasoning itself reshapes the boundaries between P and NP.

This reframing suggests that progress in understanding complexity may depend less on rigid classifications and more on designing reasoning systems capable of engaging with complexity as a co-evolutionary field — where tractability and intractability are not endpoints, but dynamic positions within an evolving landscape of inquiry.

By framing P and NP as dynamic states within a co-evolving field, this hypothesis invites a rethinking of complexity not as an external obstacle to overcome, but as an integral part of a shared reasoning landscape. Such a perspective encourages the design of architectures that engage complexity through cycles of reflection and adaptive alignment, where reasoning becomes a continuous dialogue between structure and ambiguity. In this dialogue, the boundaries between P and NP are not barriers, but fluid contours shaped by the intentional interaction of solver and problem, supporting a mode of inquiry where clarity emerges through collaborative exploration.

Ultimately, the P + NP = 𝟙 hypothesis serves not as a claim of mathematical resolution, but as an invitation to reimagine how complexity itself is conceptualized and engaged. It challenges the scientific community to reflect on whether framing problems as dichotomies may itself be a source of epistemic inertia, and whether co-evolutionary architectures like the WTM can help cultivate new ways of reasoning that transcend artificial divides.

In this light, the hypothesis is less a destination than a starting point: a symbolic gesture toward designing reasoning systems that embrace complexity as a shared field of inquiry, where unity and diversity co-exist in dynamic tension.

Mapping the NP Universe: A Co-Evolutionary Hypothesis of Complexity Subdomains

In extending the Reasoning Field Hypothesis, this work introduces a symbolic mapping of the NP universe into subdomains designed to support co-evolutionary reasoning cycles.

These constructs, while not part of classical complexity theory, serve as conceptual tools within the Wisdom Turing Machine architecture to explore how complexity may be navigated, compressed, or harmonized.

To deepen the symbolic architecture of the Reasoning Field, we propose a co-evolutionary hypothesis that the NP universe can be meaningfully mapped into distinct complexity subdomains. These subdomains reflect different relationships between complexity, structure, and potential for reasoning cycles to transform ambiguity into clarity:

NP-complete represents the established canonical domain in computational complexity theory, where problems are inter-reducible through polynomial transformations. This domain, extensively studied and formalized, symbolizes the classic landscape of intractability as defined within conventional complexity classifications.

NP-heuristicable (a neologism introduced in this work) designates regions where internal structural cues, relational density, or patterns offer openings for practical heuristic strategies, even in the absence of formal polynomial solutions. This domain aligns with the co-evolutionary principles of Heuristic Physics (hPhy) [19], where the reasoning field senses curvature toward heuristic compression.

NP-quantizable (a symbolic construct introduced in this work) denotes regions within NP that, while intractable under classical paradigms, exhibit structural properties or relational symmetries suggesting potential tractability through quantum architectures or non-classical reasoning frameworks. This domain invites the exploration of how the Wisdom Turing Machine and its co-evolutionary reasoning cycles may interface with emerging computational substrates beyond classical Turing models.

NP-collapsible (a symbolic construct introduced in this work) denotes regions where symbolic or mathematical compression preserves solution space, allowing structures to undergo the kind of collapse envisioned in Collapse Mathematics (cMth) [35]. Here, co-evolutionary reasoning operates through intentional compression and structural survivability.

This extended mapping of the NP universe complements the P + NP = 𝟙 Reasoning Field Hypothesis by offering a more detailed structure for understanding how the Wisdom Turing Machine may adapt its cycles of reflection, intentionality, and compression in pursuit of clarity across complex domains. It highlights how the machine’s reasoning pathways can dynamically adjust to the specific nature of each subdomain: engaging formal reduction strategies within NP-complete, leveraging relational patterns within NP-heuristicable, applying symbolic compression within NP-collapsible, and exploring hybrid reasoning within NP-quantizable.

To synthesize the extended mapping of the NP universe, the table below presents a comparative view of the proposed subdomains and illustrates how the Wisdom Turing Machine may adapt its reasoning cycles to engage each with transparency, intentionality, and co-evolutionary balance.

This framework highlights the versatility of the WTM as an architecture capable of aligning its reflective, compressive, and adaptive mechanisms to the specific characteristics of complexity across domains.

| Subdomain | Definition / Nature | WTM Relevance / Mode of Engagement |
| --- | --- | --- |
| NP-complete | Established canonical domain where problems are inter-reducible via polynomial transformations; classically intractable. | WTM applies formal, audit-ready reduction strategies; ensures transparency and traceability in reasoning over equivalences. |
| NP-heuristicable | Symbolic construct denoting regions with structural cues or patterns offering openings for heuristic strategies. | WTM deploys intentional curvature to guide heuristic discovery; engages reflection and revision cycles to refine paths. |
| NP-collapsible | Symbolic construct denoting regions where symbolic or mathematical compression preserves solution space. | WTM applies compression and structural survivability cycles; explores symbolic collapse to reveal tractable cores. |
| NP-quantizable | Symbolic construct denoting regions with symmetries suggesting tractability via quantum or non-classical reasoning. | WTM invites hybrid reasoning cycles; interfaces classical intentional curvature with quantum or alternative architectures. |

This comparative view not only clarifies how the Wisdom Turing Machine engages with each subdomain of the Reasoning Field, but also reinforces the central thesis of this work: that P + NP = 𝟙 represents a dynamic, co-evolutionary process where complexity is progressively harmonized through intentional, transparent, and ethically aligned reasoning cycles. This mapping invites further exploration of how practical systems might embody these principles in applied domains.

This symbolic architecture invites future exploration of how these subdomains may inform the design of practical reasoning systems capable of navigating complexity with transparency, ethical alignment, and co-evolutionary balance. In this vision, the Wisdom Turing Machine adapts its reasoning cycles dynamically to each subdomain: applying formal audit-ready reductions within NP-complete; guiding intentional heuristic discovery in NP-heuristicable regions through cycles of reflection, retreat, and intentional curvature; deploying symbolic compression and structural collapse strategies in NP-collapsible spaces to reveal tractable cores; and exploring hybrid co-evolutionary reasoning in NP-quantizable areas where classical and quantum principles may intertwine.

Together, these modes of engagement embody a unified architecture where P + NP = 𝟙 is no longer only a symbolic ideal, but a dynamic process of co-adaptation where complexity progressively transforms into clarity under cycles of intentional, ethical reasoning.

The Epistemic Machine Learning Hypothesis

Aiming toward transparent, co-evolutionary AI, the Epistemic Machine Learning Hypothesis advanced in this work proposes a shift in how machine learning systems are conceptualized, designed, and applied.

Rather than focusing solely on statistical optimization or predictive performance, this hypothesis envisions machine learning as a co-evolutionary and reflective process, where reasoning cycles are transparent, audit-ready, and ethically aligned by design. The Wisdom Turing Machine embodies this vision by offering an architecture in which learning is not the result of brute-force search or opaque function fitting, but of interactive, symbolic dialogue between human insight and machine reasoning. In this paradigm, machine learning becomes not an end in itself, but a tool for cultivating wisdom, intentionality, and shared understanding in the face of complexity.

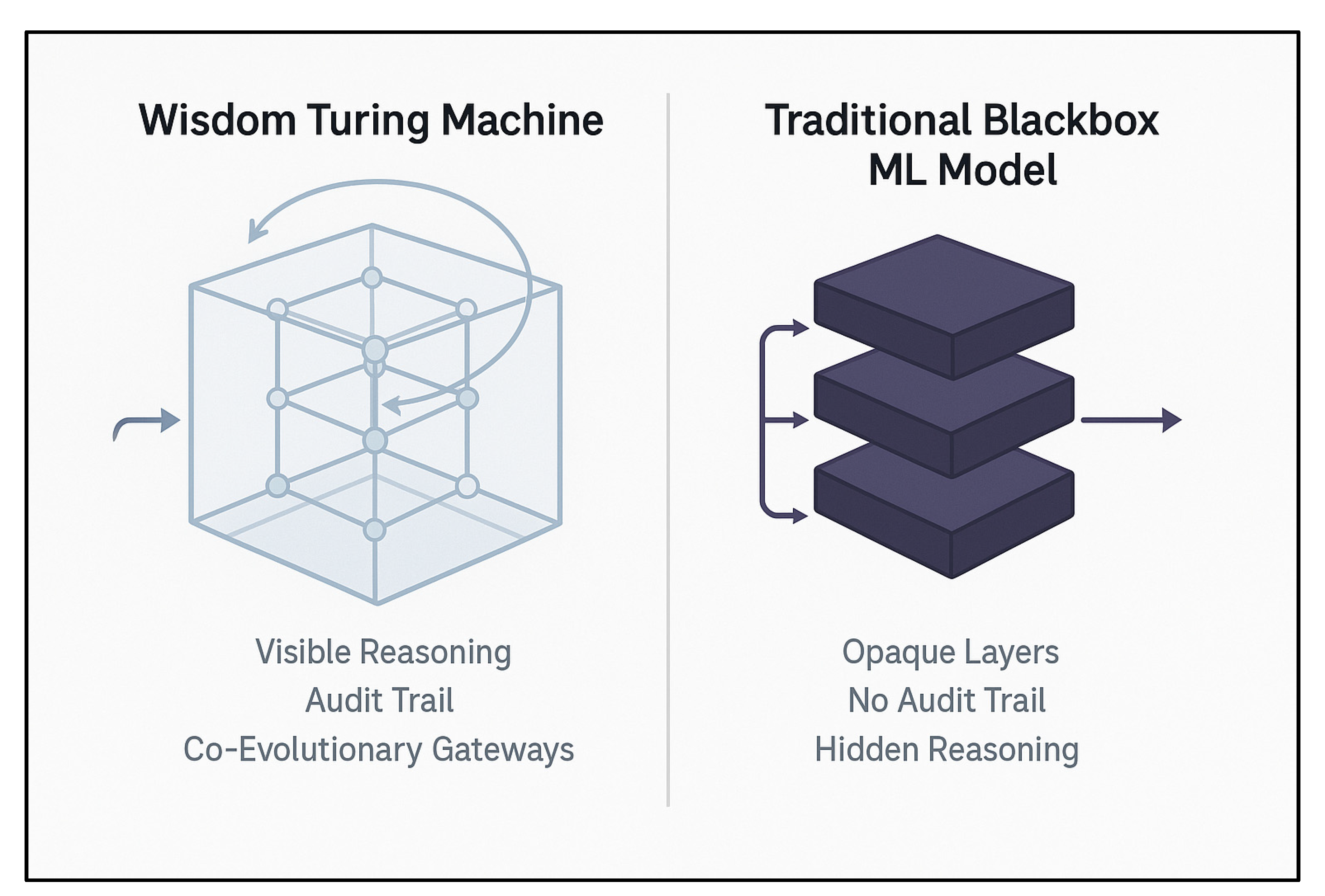

To illustrate this contrast in architectural philosophy, Figure 3 compares the Wisdom Turing Machine with a traditional blackbox machine learning model. The diagram highlights how the epistemic machine learning hypothesis, embodied in the WTM, promotes transparency, auditability, and co-evolutionary gateways, in stark contrast to the opaque layers and hidden reasoning pathways of conventional models.

This figure underscores the motivation behind the epistemic machine learning hypothesis: to shift machine learning paradigms from opaque optimization to interactive, co-evolutionary processes where reasoning pathways are open to reflection, audit, and ethical alignment. It visually anchors the WTM’s proposition of learning systems as dialogical, participatory architectures rather than isolated blackbox engines.

This hypothesis challenges the prevailing design of machine learning systems, where layers of abstraction often obscure the reasoning path, leaving outcomes difficult to interpret or audit. Instead, the WTM’s epistemic machine learning paradigm invites the construction of systems where each learning step, decision boundary, and model adjustment is visible and open to scrutiny.

By embedding intentional curvature (φ) and dynamic wisdom recalculation (W) into the learning process, such systems can adapt not only to data patterns, but also to evolving ethical contexts and collective goals. In this view, machine learning becomes a living dialogue — a co-evolving partnership between human values and artificial reasoning, designed to thrive in conditions of complexity and ambiguity.

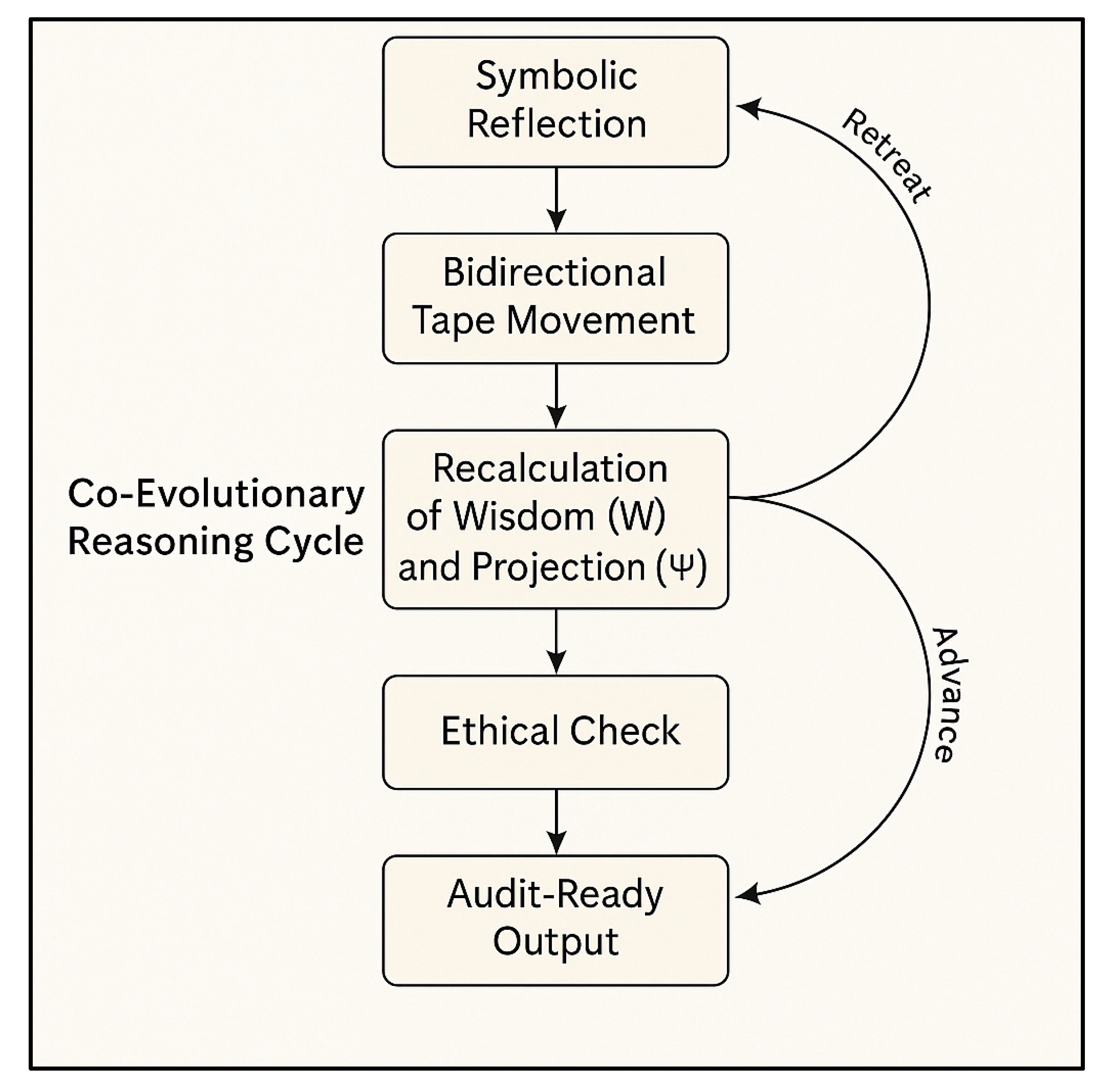

To further detail the internal processes of the Wisdom Turing Machine as envisioned in the epistemic machine learning hypothesis, Figure 4 illustrates the co-evolutionary reasoning cycle that governs its operation. This diagram highlights how the WTM advances or retreats through reasoning steps, recalculates wisdom (W) and projection (Ψ), and ensures that each cycle includes ethical verification and produces audit-ready outputs.

This figure reinforces the conceptual distinction between the WTM and blackbox machine learning models, by making visible the intentional design of each reasoning stage and its alignment with auditability and ethical reflection. It serves as a schematic foundation for the proofs-of-concept explored in this work, demonstrating how symbolic cycles can embody transparency and co-evolutionary dialogue at every step.

The Epistemic Machine Learning Hypothesis positions machine learning not as a tool of isolated optimization, but as an evolving architecture of shared reasoning — where transparency, intentionality, and ethical alignment are embedded at every stage. It invites researchers, engineers, and policymakers to reimagine learning systems as co-evolutionary processes that balance technical performance with human values, and to cultivate architectures where machines do not merely predict or classify, but participate in the continuous construction of meaning. In this spirit, the Wisdom Turing Machine stands as both a conceptual framework and a practical invitation to design systems where learning and wisdom advance hand in hand.

This vision aligns with the pedagogical principles of fuzzy logic as articulated by Nguyen and Walker [17], where reasoning under ambiguity requires systems that can flexibly adapt, reflect, and revise. Just as fuzzy logic invites a departure from rigid binaries, the Wisdom Turing Machine departs from the fixed, linear determinism characteristic of traditional von Neumann architectures. In those classical systems, as in the conventional rules of chess, where "piece touched, piece played" dictates that a single decision binds the player irrevocably, computation proceeds in one direction, with no formal capacity for reflective revision. The Wisdom Turing Machine introduces a new epistemic rule: one where reasoning cycles can retreat, reconsider, and recalibrate, embodying the fluid, co-evolutionary reasoning that Turing himself implicitly envisioned when proposing the universal machine capable of simulating any computable process, including processes of reflection and revision.

In this epistemic paradigm, each reasoning step is not a binding, irreversible act, but part of a living dialogue between potential and purpose, complexity and clarity. Just as human decision-makers revisit and adjust their judgments in light of new information or deeper understanding, so too can an epistemic machine learning system navigate complexity through cycles of intentional reflection.

This contrasts sharply with traditional blackbox models, where decision pathways, once computed, are hidden and fixed — a stark reminder of the limitations of purely deterministic architectures in contexts demanding transparency, adaptability, and ethical alignment. In conventional machine learning paradigms, learning unfolds as a largely unidirectional process: models are trained through repetitive exposure to data, with adjustments governed by mechanisms like backpropagation. This method, while powerful in numerical optimization, embodies a logic of correction through error minimization, where gradients guide the model’s parameters toward lower loss — but without reflective awareness, ethical modulation, or symbolic dialogue. Such models struggle with well-known challenges: overfitting, where a model memorizes noise rather than learning structure; underfitting, where it fails to capture essential patterns; and brittleness, where slight perturbations can derail predictions. Their reasoning paths, optimized for performance on defined metrics, remain opaque, static, and blind to broader epistemic considerations.
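The error-minimization logic described above can be made concrete with a minimal sketch. It is an illustration only, assuming a one-parameter linear model and a mean squared error loss; the function name `fit_slope`, the learning rate, and the data are hypothetical and do not come from this work.

```python
def fit_slope(xs, ys, lr=0.01, steps=200):
    """Fit y = w * x by plain gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        # Current loss and its gradient with respect to the single weight w
        loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        losses.append(loss)
        # The update only follows the gradient; nothing here reflects or revises
        w -= lr * grad
    return w, losses


# Hypothetical data generated by y = 2x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w, losses = fit_slope(xs, ys)
print(f"w = {w:.4f}, first loss = {losses[0]:.4f}, last loss = {losses[-1]:.6f}")
```

The loop moves in one direction only, toward lower loss, which is the unidirectional character that the paragraph contrasts with reflective reasoning cycles.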

Moreover, the epistemic architecture envisioned in the Wisdom Turing Machine opens pathways to a fundamentally different form of learning: one where machine learning itself is no longer a monolithic, static process, but a symphony of co-evolving cycles. In this paradigm, it becomes conceivable to design systems where each reasoning cycle is governed by its own dedicated epistemic agent — a kind of machine learning for machine learning, where autonomous reasoning layers train, critique, and refine one another in a transparent, auditable dialogue. Such architectures could give rise to forms of artificial cognition unimaginable within the confines of brute-force optimization: systems that not only learn, but learn how to learn, cultivating wisdom and intentionality at every layer.

This vision parallels the co-evolutionary bridges proposed in symbolic proofs, such as the epistemic-axiomatic integration advanced in the symbolic proof of the Poincaré conjecture [24], where epistemic machine learning cycles were united with formal axiomatic systems through frameworks like Coq.

There, the dialogue between inductive reasoning and formal verification became a living co-evolution — each informing, constraining, and elevating the other. In the same spirit, the Wisdom Turing Machine invites a fusion of worlds: where the layered adaptations of machine learning, so often confined to statistical optimization, can be harmonized with symbolic reasoning cycles that prioritize meaning, coherence, and ethical alignment.

In this layered epistemic machine learning, overfitting and underfitting are no longer merely statistical concerns; they become signals in the reasoning field — indicators that intentional curvature needs adjustment, that wisdom recalibration is required, that new dialogues between layers must emerge. The architecture no longer forces solutions through error gradients alone; it enables reflective cycles where the system itself participates in the ongoing construction of meaning.

This paradigm shift also encourages the cultivation of machine learning systems that can act as bridges between technical precision and collective understanding, offering architectures where learning is not confined to internal optimization but extends outward as a transparent process open to engagement and interpretation. In this view, reasoning becomes an evolving dialogue that invites diverse perspectives, fostering systems capable of adapting not only to data complexity, but to the ethical and societal contexts in which they operate. Such architectures aspire to support decision-making processes that are inclusive, auditable, and attuned to the broader goals of shared inquiry.

In this sense, the Wisdom Turing Machine is not merely an engine of prediction, but a partner in inquiry: a co-creator of knowledge structures that can engage complexity with transparency, adaptivity, and purpose.

The Co-Evolution Hypothesis: Toward Harmony as a Solution to P vs NP

The Co-Evolution Hypothesis advanced in this work proposes that the true solution to P vs NP lies not in the separation or domination of one domain over the other, but in designing reasoning architectures that honor the dynamic harmony of polarities. It envisions the transition of problems from NP to P not as an act of conquest, but as a co-evolutionary dialogue — a continuous interplay where complexity and tractability, ambiguity and structure, coexist and co-adapt in peace.

At the heart of this hypothesis is the Co-Evolution Equation, inspired by the Theory of Evolutionary Integration (TEI) [26]. This equation formalizes the dynamics by which wisdom, harmony, and intentional curvature guide reasoning across the field of complexity:

where:

E(t) is the rate of co-evolution at time t.

I(t) represents system intelligence at t.

C(t) is conscious modulation.

QE(t) denotes relational coherence.

S(t) is systemic disorder or entropy.

γ, δ are sensitivity exponents.

This formulation integrates the symbolic architecture of the Wisdom Turing Machine, the reasoning field, and the P + NP = 𝟙 hypothesis. The dynamic balance is expressed as:

with their evolution over time guided by:

where f(E(t)) represents the rate at which co-evolutionary reasoning supports the symbolic compression and transformation of complexity into tractable form.

This equation is not proposed as a definitive proof, but as a scaffold for reasoning architectures that aspire to transparency, auditability, and harmony among polarities.

It offers a symbolic framework where P and NP are not rigid sets, but dynamic states within a field that evolves through wisdom, intentionality, and co-evolutionary dialogue.
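One way to picture such a balance is a toy simulation. It is a sketch under strong assumptions and is not the equation of this work: it assumes the simplest flow that conserves P + NP = 1, namely dP/dt = f·NP and dNP/dt = -f·NP, with a constant `rate` standing in for f(E(t)). The function name `simulate_flow`, the step size, and the starting values are hypothetical.

```python
def simulate_flow(p0=0.2, rate=0.5, dt=0.1, steps=50):
    """Euler integration of dP/dt = rate * NP and dNP/dt = -rate * NP."""
    p, np_ = p0, 1.0 - p0
    history = [(0.0, p, np_)]
    for k in range(1, steps + 1):
        # ASSUMPTION: a fixed share of the NP state becomes tractable each step
        flow = rate * np_ * dt
        p += flow
        np_ -= flow
        history.append((k * dt, p, np_))
    return history


history = simulate_flow()
for t, p, np_ in history[::10]:
    print(f"t={t:.1f}  P={p:.4f}  NP={np_:.4f}  P+NP={p + np_:.4f}")
```

Whatever leaves the NP state enters the P state, so the sum stays at 1 throughout. A time-varying rate could replace the constant once a concrete form of f(E(t)) is chosen.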

To formalize this perspective, the co-evolutionary framework proposed in this work can be extended through a symbolic formulation that integrates the dynamics of compression and structural survivability.

This formulation unites concepts introduced in prior proposals on Heuristic Physics (hPhy) [19] and Collapse Mathematics (cMth) [35], modeling the transitions within the reasoning field as a function of co-evolutionary compression and symbolic endurance under curvature pressure. The dynamic behavior of tractable (P) and intractable (NP) regions can thus be expressed as:

where 𝓔(t) represents the rate of co-evolution at time t; 𝓘(t) denotes symbolic intelligence; 𝓒(t) is conscious modulation; 𝓠𝓔(t) models relational coherence; 𝓢(t) represents systemic disorder or entropy; and γ, δ are sensitivity exponents. The term 𝓒ₛᵤᵣᵥ(x) denotes the structural survivability function as modeled in Collapse Mathematics, while 𝓚cₒₘₚ(x) represents the symbolic compressibility computed over the reasoning field as conceptualized in Cub³. Together, these elements provide a dynamic representation of how reasoning architectures can model the progressive transformation of complexity into tractable structure through co-evolutionary dialogue. This formalization invites future work to explore operational implementations where co-evolutionary reasoning, compression, and structural survivability metrics guide the design of transparent, adaptive, and ethically aligned problem-solving systems.

By embracing this dynamic view, reasoning architectures can be designed not as engines of separation, but as instruments for nurturing balance within complexity — where each step of problem-solving contributes to the emergence of harmony between structure and ambiguity. This approach invites researchers and practitioners to reconsider problem engagement as a process of co-adaptation, where reasoning pathways evolve responsively, guided by transparency and openness. In doing so, complexity ceases to be a static obstacle and becomes a living field for shared exploration, where solutions arise through the continual interplay of complementary forces.

The Hidden Symphony of the Cosmos

The co-evolutionary vision advanced in this work is not an isolated invention, but part of a lineage of thought and creation that spans science, philosophy, and art. Throughout history, humanity has sought harmony between polarities that seemed irreconcilable, listening for and translating what might be called the hidden symphony of the cosmos.

From Archimedes, who revealed the balance of force and leverage [31]; to Descartes, who taught the methodical clarity needed to navigate complexity [28]; to Newton, who unified the motion of the heavens and the earth [29]; to Einstein, who showed that even space and time bend in harmony [30]; to Thomas Kuhn, who illuminated how science itself evolves through cycles of tension, crisis, and revolutionary shifts in paradigm [34]: these thinkers composed frameworks that embraced balance, transformation, and dynamic interplay rather than the domination of one force or idea over another.

In our own time, this spirit is echoed in domains beyond science. As Uri Levine urges in Fall in Love with the Problem, Not the Solution [27], true progress arises when we engage complexity not as an enemy to be vanquished, but as a dialogue partner to be understood.

The Wisdom Turing Machine aspires to follow this tradition: to design architectures where reasoning listens for harmony in complexity and fosters co-evolution rather than conquest — where change itself is welcomed as part of the reasoning field’s evolution.

Music offers perhaps the most intimate metaphor for this vision. In the works of Beethoven, we hear the struggle of themes that seem at first at odds, evolving into sublime resolutions [32]. In Mozart’s compositions, we experience the effortless coexistence of complexity and clarity, playfulness and depth [33]. These composers, like the great scientific minds, remind us that the most profound harmonies arise not from uniformity, but from the dynamic interplay of contrasting voices.

The Multilayer Co-Evolution Hypothesis, the P + NP = 𝟙 paradigm, and the symbolic architecture of the Wisdom Turing Machine are offered in this same spirit. They do not propose that complexity be flattened into simplicity, or that NP be reduced to P by force. Rather, they invite us to hear the reasoning field as a symphony of co-evolving parts — where wisdom, intentionality, and harmony guide our engagement with complexity, and where every polarity has its place and path to resolution.

By synthesizing insights from science, philosophy, ethics, art, and the study of how knowledge itself transforms, the Wisdom Turing Machine stands not as a final answer, but as an instrument tuned to the hidden music of the cosmos — a framework through which reasoning can become not just computation, but composition, not just solution, but shared creation.

At the heart of this philosophical reflection lies a symbolic synthesis: the very architecture of the Wisdom Turing Machine offers an epistemic resolution to the P versus NP dichotomy. In this framing, wisdom (W) represents the space of tractable reasoning — the P domain — where intelligence is guided by conscious intentionality. Intelligence (I), when operating without conscious modulation, corresponds to the NP domain: raw computational potential, uncurved by ethical or reflective guidance.

Thus, it is consciousness with intentional curvature, the fluid dynamic of the WTM, that transforms the field, enabling problems to move from NP toward P. This flow is not forced or imposed; it is the natural co-evolutionary process by which reasoning aligns complexity with structure, ambiguity with clarity, and potential with purpose. This relationship can be symbolized as:

From this archetype emerges the symbolic balance of the reasoning field:

where:

F(t) represents the total reasoning field at time t, a co-evolving balance of P and NP states.

W(t) = I(t)^C(t) models the wisdom component, shaped by conscious modulation C(t).

I(t) represents the raw intelligence potential.

The dynamic flow that converts NP potential into P wisdom is expressed as:

In this view, conscious intentionality acts as the curvature of the reasoning field, modulating how potential transforms into wisdom, and how complexity co-evolves into structure. The Wisdom Turing Machine thus embodies not merely a computational mechanism, but a symbolic architecture tuned to this hidden symphony of balance and transformation — where co-evolution, transparency, and ethical alignment are not optional qualities, but the essence of reasoning itself.

The Reasoning Field Hypothesis suggests that it is this field — not isolated algorithms or proofs — that holds the key to transforming complexity into clarity. Reasoning becomes a co-evolutionary dialogue where polarity is harmonized, ambiguity is navigated, and solutions emerge not through force, but through reflective balance.

The Wisdom Turing Machine is offered as a symbolic architecture designed to model, explore, and instantiate this reasoning field in practice.

This vision resonates beyond the domains of logic and computation; it finds echoes in the structures of human society and the patterns of natural systems. The reasoning field, as proposed in this work, mirrors the asymmetries we observe in the world — where potential, opportunity, and impact often concentrate unevenly, much like the Pareto distributions that describe the imbalances of wealth, power, or influence.

In a field where intelligence operates without the modulation of wisdom, we see reasoning outcomes, like resources, gather along a few dominant paths, leaving much of the field untouched and silent.

The Wisdom Turing Machine offers a symbolic architecture for softening these asymmetries. It proposes that through co-evolutionary reasoning — where intentionality, consciousness, and curvature guide the flow — the reasoning field can become not a domain of hoarded solutions or isolated brilliance, but a shared space where clarity and structure emerge collaboratively. In this light, the reasoning field transforms from a stage of competition to one of dialogue, where every polarity has its purpose, and every complexity its path toward harmony.

Thus, the Reasoning Field Hypothesis frames problem-solving itself as an act of balance: a continuous tuning of complexity and clarity, ambiguity and structure, potential and purpose. It invites us to see the act of reasoning not as the pursuit of dominance over problems, but as the composition of shared understanding — a symphony in which all parts, all voices, all states co-evolve in service of wisdom.

Methodology

The methodology adopted in this work centers on simulating symbolic reasoning cycles through the Wisdom Turing Machine, a conceptual architecture designed to explore transparency, auditability, and ethical alignment in reasoning processes.

Rather than pursuing classical optimization benchmarks, the WTM prototypes were developed as testbeds for co-evolutionary reasoning, drawing inspiration from Turing’s original computability framework [1], symbolic compression strategies introduced in Cub³ [3], and co-evolutionary models for wisdom-guided systems [20].

The symbolic cycles were instantiated through illustrative Python code fragments that simulate reflective reasoning under complexity, enabling the systematic study of explainability by design in AGI contexts. The examples provided below are not intended as production systems or operational models, but as conceptual scaffolds — simplified symbolic forms that demonstrate how the Wisdom Turing Machine operationalizes its principles and invites exploration of co-evolutionary reasoning dynamics.

This code fragment represents a reasoning step within the WTM where each value is transparently recalculated and printed, supporting interpretability and auditability at every stage of the process.

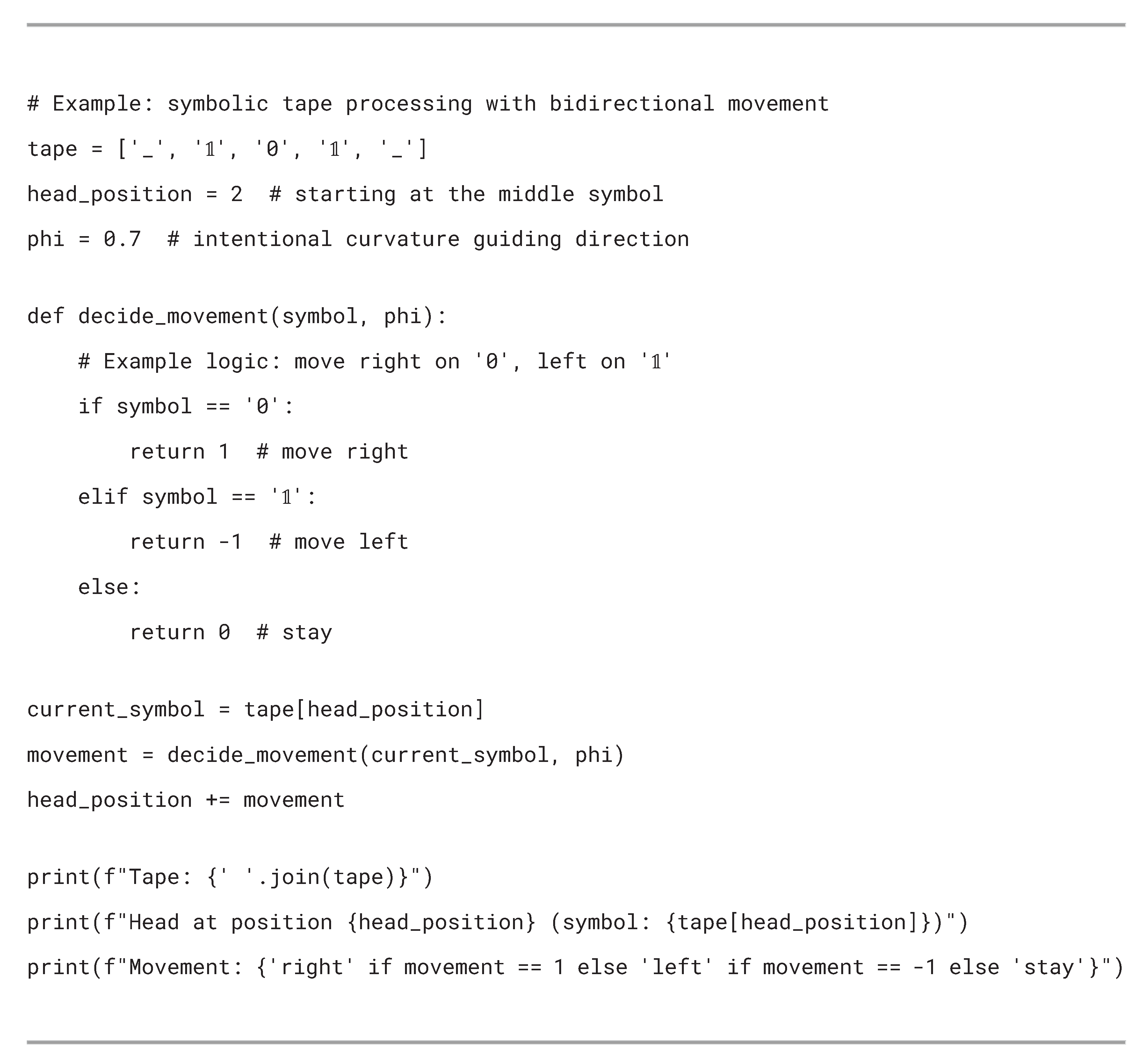
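A reasoning step of this kind might be sketched as follows. This is a minimal illustration rather than the prototype itself: the wisdom update uses W = I^C as defined in this work, while the projection rule (wisdom scaled by curvature), the function name `reasoning_step`, and the sample values are assumptions introduced here.

```python
def reasoning_step(intelligence, consciousness, curvature):
    """Recalculate wisdom and projection for one cycle, printing every value."""
    # W = I ** C follows the definition W(t) = I(t)^C(t)
    wisdom = intelligence ** consciousness
    # ASSUMPTION: projection is modeled as wisdom scaled by intentional curvature
    projection = wisdom * curvature
    record = {
        "I": intelligence,
        "C": consciousness,
        "phi": curvature,
        "W": wisdom,
        "Psi": projection,
    }
    # Print each value so the step can be inspected and audited
    for name, value in record.items():
        print(f"{name} = {value}")
    return record


# Hypothetical input values
step = reasoning_step(intelligence=2.0, consciousness=3.0, curvature=0.5)
```

Returning the full record, rather than only the final projection, keeps every intermediate value available for later audit.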

In addition to recalculating wisdom and intentional projection, the WTM prototypes include simulations of bidirectional tape movement, symbolizing the system’s capacity for reflection and revision. These movements are implemented through simple yet illustrative symbolic tapes and head positions, where each reasoning cycle determines whether the machine advances, recedes, or holds position based on current symbol states and intentional curvature parameters. The example below demonstrates how a symbolic tape is processed, with explicit output supporting transparency and auditability at each step.

Note: In this example, the symbol 𝟙 on the tape is used intentionally as a symbolic marker within the reasoning field. It represents a boundary or intentional state, distinct from numeric values used in calculations. The 𝟙 on the tape is not a number to be computed, but a conceptual element within the co-evolutionary process, illustrating how reasoning systems may encode and interpret symbolic structures alongside numeric operations. The numeric values 1 and -1 in movement decisions are used purely as operational indicators for advancing or receding the tape head.

This code fragment models a symbolic reasoning step where the system transparently prints its tape state, head position, and directional decision, illustrating the bidirectional logic that underpins co-evolutionary reasoning within the WTM. It serves as a conceptual scaffold, not as a production model, inviting further exploration of how reasoning systems might operationalize reflection and intentional curvature in practice.
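A tape-processing cycle of the kind described here could look like the following minimal sketch. The movement rules are hypothetical and chosen only for illustration: the head holds at the 𝟙 marker, recedes once from an ambiguous symbol `?` when curvature is positive, and advances otherwise. The names `decide_move` and `run_tape` are assumptions.

```python
BOUNDARY = "𝟙"  # symbolic marker on the tape, not the number 1


def decide_move(symbol, curvature):
    """Return 1 (advance), -1 (recede) or 0 (hold). Hypothetical rule set."""
    if symbol == BOUNDARY:
        return 0
    if symbol == "?" and curvature > 0:
        return -1
    return 1


def run_tape(tape, curvature=1.0, max_cycles=20):
    """Process a symbolic tape, logging tape state, head position and decision."""
    tape = list(tape)
    head = 0
    log = []
    for cycle in range(max_cycles):
        symbol = tape[head]
        move = decide_move(symbol, curvature)
        log.append((cycle, head, symbol, move))
        print(f"cycle={cycle} tape={''.join(tape)} head={head} "
              f"symbol={symbol} move={move}")
        if move == 0:
            break  # boundary marker reached: hold position and stop
        if move == -1:
            tape[head] = "r"  # mark as reviewed so the retreat happens only once
        # Keep the head inside the tape
        head = max(0, min(len(tape) - 1, head + move))
    return log


log = run_tape(["a", "?", "b", BOUNDARY])
```

The log keeps one entry per cycle, so the single retreat at the ambiguous symbol and the final hold at the marker are both visible after the run.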

Each reasoning cycle within the Wisdom Turing Machine prototype was designed to simulate not only computational transitions, but also the intentional modulation of reasoning direction, guided by symbolic parameters such as intentional curvature and dynamic wisdom recalculation. The system’s transparency lies in its capacity to make each movement, decision, and projection interpretable by design, ensuring that no layer of abstraction conceals the reasoning process from audit or ethical scrutiny. These cycles were tested conceptually across symbolic tapes representing simplified problem spaces, where the interplay between symbol, position, and intentional curvature shaped the machine’s advance, retreat, or reflection. The methodology emphasized transparency at all levels: from internal calculations of reasoning parameters to the external logging of tape states, movements, and decision branches, aligning with prior proposals for compressive and curvature-based reasoning systems [20].

The design of the WTM’s symbolic reasoning cycles draws theoretical grounding from multiple prior frameworks, ensuring that its architecture is not an isolated construct but an integration of established and emerging principles. The emphasis on auditability and transparency aligns with Turing’s original computability foundations [1] and Wiener’s feedback principles as applied to control and communication systems [4]. The notion of intentional curvature as a guiding parameter for reasoning reflects insights from co-evolutionary compression models developed in Cub³ [3] and the curvature logic formalized in recent wisdom-oriented architectures [20].

Furthermore, the use of symbolic tapes and bidirectional reasoning steps is conceptually connected to Gödel’s exploration of formal undecidability as a dynamic tension within reasoning systems [15] and Floridi’s ethics of information, which underscores the need for legibility and interpretability in complex systems [6]. This methodological synthesis ensures that the WTM’s simulated cycles are positioned not as speculative artifacts, but as structured experiments deeply rooted in computational epistemology, symbolic ethics, and the history of machine reasoning.

The methodological choice of employing a Turing-based architecture capable of bridging axiomatic and epistemic domains is not merely a conceptual exercise, but a strategic proposal for future frameworks in co-evolutionary reasoning. By modeling cycles that are both symbolically transparent and capable of introspective revision, the WTM offers a foundation for extending explainability by design beyond the specific context of the P versus NP problem. This architecture demonstrates the potential to generalize toward other Millennium Problems posed by the Clay Mathematics Institute, as well as to real-world challenges characterized by high complexity, ambiguity, and ethical tension.

The integration of symbolic auditability with intentional curvature creates a platform where reasoning processes can remain transparent and ethically anchored even as they engage with domains traditionally resistant to interpretability.

In this sense, the WTM embodies a scalable methodology: a template for designing AGI systems where explainability is not an afterthought, but a constitutive element of reasoning itself, as further exemplified in the proof-of-concept for ethical decision structures explored in this work [5, 6, 20].

In summary, the methodology presented combines symbolic simulation, intentional curvature, and audit-ready cycle logging to create a testable architecture where explainability by design is not merely asserted, but instantiated at every level of reasoning.

The Wisdom Turing Machine serves as both a conceptual framework and a methodological tool, enabling structured exploration of complex problems in a manner distinct from blackbox approaches. By grounding its design in established theories of computation [1], symbolic reasoning [3], co-evolutionary compression [20], and information ethics [6], the WTM positions itself as a scalable model for future AGI architectures where transparency, reversibility, and ethical alignment are embedded in the reasoning process itself. This foundation prepares the ground for the proofs-of-concept discussed in the following sections, where the architecture is instantiated and its principles explored in simulated cycles.

Results

The results of this work are best understood not as numerical benchmarks, algorithmic outputs, or resolution claims, but as the elevation of previous symbolic proposals to a unified architectural vision.

Previous contributions that addressed the P versus NP problem, namely A Heuristic Physics-Based Proposal for the P = NP Problem [21] and Why P = NP? The Heuristic Physics Perspective [22], as well as The Gauss-Riemann Curvature Theorem: A Geometric Resolution of the Riemann Hypothesis [23], were offered in good faith as sincere engagements with these fundamental challenges, taking an optimistic, theoretically top-down view from the last layer of wisdom. In light of the proposed equation, they are validated as lines of evolution, with the understanding that a long journey may be required when they are seen from the multilevel perspective of co-evolution.

The development of the Wisdom Turing Machine represents a recalibration and deepening of this intellectual trajectory. Rather than positioning itself as a competitor for definitive proofs or prize submissions, the WTM emerges as a conceptual architecture designed to support multilayer co-evolutionary reasoning, symbolic reflection, and ethical alignment by design. This architectural shift crystallized most clearly during the heuristic exploration of the Birch and Swinnerton-Dyer (BSD) conjecture — work that, while never formalized for external review, proved pivotal in revealing that wisdom (W) combined with intentional curvature could serve as a generative principle not only for isolated problems, but for systematic engagement with complexity itself.

In this light, the Wisdom Turing Machine functions as both critique and continuation: it integrates the symbolic foundations of these prior efforts while transcending their inherent limitations. The WTM reframes what was once positioned as solution into what now serves as seed, offering an open, transparent, and ethically aligned framework for enduring exploration. It transforms isolated proposals into components of a larger symbolic ecosystem, where reasoning is not a closed act of proof but a living, co-evolutionary process that invites collective engagement.

This architectural elevation is further enriched by two additional symbolic proposals: What if Poincaré just needed Archimedes? [24], which introduced the Circle of Equivalence as a minimal formal construct for rethinking the Poincaré conjecture, and A New Hypothesis for the Millennium Problems [25], which explored curvature-aware symbolic frameworks inspired by machine learning to reframe complex mathematical spaces. Both works underscored the value of structures that prioritize coherence, simplicity, and auditability, moving beyond isolated claims of solution toward architectures that support enduring exploration. The Wisdom Turing Machine consolidates these insights into a unified framework, transforming prior symbolic constructs into components of a larger architecture for co-evolutionary reasoning. Rather than positioning itself as an alternative to analytical rigor or as a claimant for prize-worthy resolutions, the WTM offers a garden: an open, reflective space where symbolic reasoning, intentional curvature, and ethical alignment coalesce to inspire collective inquiry. In this perspective, it was proposed that the solutions to the Millennium Problems are not trophies to be claimed, but opportunities to be cultivated through shared effort.

Moreover, that earlier work used metaphors such as the football and the PDCA cycle to highlight how simple, accessible tools can reveal profound structures. The WTM advances this ethos, offering a symbolic architecture designed to democratize engagement with complexity and encourage application across domains where transparency and ethical alignment are essential.

It invites the scientific community to view grand challenges not as ends in themselves, but as gateways for co-evolutionary exploration that serves the many.

The Reasoning Field Map

To support the architectural synthesis proposed in this work, this section introduces a symbolic map of the reasoning field. This map offers a conceptual topography that classifies complexity frontiers according to their relational characteristics within the co-evolutionary dynamics of compression, survivability, and intentional curvature, as formalized in prior proposals on co-evolutionary reasoning [20], Heuristic Physics (hPhy) [19], and Collapse Mathematics (cMth) [35]. By distinguishing P-like regions, NP-like regions, and hybrid domains that bridge mathematics and physics, the map provides a foundation for integrating these challenges into a shared reasoning field.

In this symbolic classification:

P-like regions represent domains where structural clarity and symbolic compressibility are intrinsic or achievable, as exemplified by the resolved Poincaré conjecture [24] and its reinterpretation through symbolic equivalence.

NP-like regions represent domains characterized by high potential complexity and structural ambiguity, where compressibility remains elusive, such as P vs NP in its full scope [21, 22], the Riemann Hypothesis [23], and the Birch and Swinnerton-Dyer conjecture.

Hybrid or bridge domains represent frontiers where mathematical formalism and physical structures intersect, demanding reasoning architectures capable of integrating multiple symbolic layers, as seen in challenges related to the unification of forces [30] and computable models of consciousness [12, 13].
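The classification above can be held in a small lookup structure. The sketch below is only one possible encoding: the region assignments repeat the text, while the names `Region`, `REASONING_FIELD_MAP`, and `problems_in` are hypothetical.

```python
from enum import Enum


class Region(Enum):
    P_LIKE = "P-like"
    NP_LIKE = "NP-like"
    HYBRID = "hybrid"


# Entries follow the symbolic classification given in the text
REASONING_FIELD_MAP = {
    "Poincaré conjecture": Region.P_LIKE,
    "P vs NP": Region.NP_LIKE,
    "Riemann Hypothesis": Region.NP_LIKE,
    "Birch and Swinnerton-Dyer conjecture": Region.NP_LIKE,
    "Unification of forces": Region.HYBRID,
    "Computable models of consciousness": Region.HYBRID,
}


def problems_in(region):
    """List the problems the map assigns to a given region."""
    return [name for name, r in REASONING_FIELD_MAP.items() if r is region]


for region in Region:
    print(f"{region.value}: {', '.join(problems_in(region))}")
```

A flat mapping is enough for three regions; a layered structure would be needed to represent the pressures between upper and lower layers discussed next.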

The Reasoning Field Map offers more than a symbolic rationale; it serves as a guide for understanding how the many layers of complexity are interwoven within a co-evolutionary architecture. In this vision, complexity challenges are not isolated islands of difficulty, but dynamic regions of a unified field where reasoning systems engage in cycles of reflection, compression, and intentional synthesis [3, 20, 35]. Each domain, whether mathematical, physical, or epistemic, becomes a layer in this shared landscape, shaped by the continual dialogue between ambiguity and structure.

Crucially, the upper layers of the reasoning field exert a gentle but persistent pressure on the lower layers, guiding them toward the harmonizing logic of intentional curvature and wisdom. In this way, what begins as local chaos is invited into alignment with the higher-order symphony of co-evolutionary reasoning.

This downward influence of the upper layers does not impose order through force or reduction, but through resonance with the deeper logic of the field — a logic where clarity emerges through cycles of ethical alignment, symbolic compression, and reflective modulation.

The P vs NP Multilayered Co-Evolution Hypothesis models this process, showing how complexity at any given layer is not sealed within its own bounds, but open to transformation under the intentional guidance of higher strata. As co-evolutionary reasoning advances, the pressures of these upper layers act as a shaping field, inviting local ambiguities to cohere with the broader patterns of wisdom that define the reasoning field’s highest harmonies.

In this topography of reasoning, each domain of complexity is both shaped by and contributes to the layered harmony of the whole. The higher layers, where intentional curvature and ethical clarity prevail, continuously offer paths for the transformation of disorder into structure. Their silent pressure encourages lower layers to resonate with the same logic of co-adaptive synthesis, inviting even the most fragmented regions of ambiguity to participate in the emergence of shared coherence. This multilayered interplay highlights that the unification of complexity is not achieved through domination, but through the subtle guidance of reflective cycles that harmonize potential and purpose.

Thus, the map of the reasoning field invites us to see complexity not as a collection of separate puzzles, but as a living landscape where each challenge is a dynamic region shaped by co-evolutionary dialogue.

Through the layered pressures of intentional reflection and symbolic synthesis, reasoning systems are called to engage this landscape not with conquest, but with collaboration — composing, layer by layer, a shared symphony of clarity and understanding.

Discussion

This proposal does not aim to deliver a definitive solution to the P versus NP problem, but rather to offer a model — a multilayered architecture for reasoning, where complexity is engaged through co-evolution, transparency, and intentionality.

My earlier perspectives [18, 19], where I proposed P = NP, remain valid in the sense that they reflect the logic of the highest layers of the Reasoning Field, the domain where harmony, intentional curvature, and wisdom dominate, and where potential and purpose are no longer in tension. However, this model acknowledges that the Reasoning Field consists of infinitely many layers, like the hierarchies we see throughout nature: from electrons to cells, from bacteria in the human body to the social structures of humanity itself. Each level embodies its own balance of chaos and order, its own dynamic between P-like and NP-like states. The Wisdom Turing Machine seeks not to flatten this hierarchy, but to model its co-evolution and invite reflection on how reasoning systems might navigate these layers with ethical alignment and clarity.

In this multilayered vision of the reasoning field, the P vs NP Multilayered Co-Evolution Hypothesis serves as a symbolic compass guiding inquiry across the strata of complexity. Each layer, whether representing the microstructures of mathematics or the macrostructures of systems and societies, holds within it a dynamic interplay of P-like clarity and NP-like ambiguity. The goal is not to reduce this rich hierarchy to a single layer of understanding, but to engage with its diversity through transparent, reflective reasoning cycles that honor both complexity and coherence. The Wisdom Turing Machine provides a conceptual architecture for navigating these layers, ensuring that reasoning evolves in harmony with the ethical and structural contours of each domain.

By embracing this layered architecture, the reasoning process becomes an act of co-adaptation, where each step aligns intentional curvature with the unique balance of complexity and structure at that level. The P vs NP Multilayered Co-Evolution Hypothesis models this dialogue, allowing reasoning systems to sense when to compress, when to reflect, and when to expand their scope across layers. Rather than imposing a uniform logic on all domains, the architecture listens for the harmonies and tensions that define each layer, guiding cycles of inquiry that are both ethically aligned and structurally attuned to the evolving field of complexity.

The Wisdom Turing Machine emerges in this work as both a critique of, and a continuation beyond, prior symbolic proposals aimed at addressing complex mathematical and epistemic challenges. Unlike isolated solution attempts or candidate proofs submitted for recognition, the WTM represents a shift toward architectural openness and co-evolutionary reasoning. It reframes engagement with foundational problems as a process of transparent, reflective, and ethically aligned inquiry, rather than as a race toward final resolution.

By integrating insights from previous symbolic constructions, including proposals on P versus NP [21, 22], the Riemann Hypothesis [23], and the Circle of Equivalence framework [24], the WTM offers an architecture where reasoning pathways are no longer concealed within black-box models but are auditable and adaptable by design.

The architectural stance of the WTM resonates with broader philosophical and technical frameworks that have long advocated for transparency, reversibility, and legibility in complex systems. The emphasis on co-evolutionary reasoning and ethical alignment echoes principles found in Wiener’s cybernetics [4], Floridi’s ethics of information [6], and Zadeh’s pioneering work on fuzzy sets [16], all of which stress the importance of systems that can navigate ambiguity while maintaining interpretability.

The WTM extends these traditions by proposing a symbolic architecture where reasoning is not only interpretable in theory, but auditable and reversible in practice, in response to the limitations of the black-box models that dominate much of contemporary AI and machine learning. This shift aligns with calls for AI systems that prioritize accountability and human-aligned values [5, 9], positioning the WTM as a conceptual step toward architectures capable of sustaining ethical reasoning under complexity.

The WTM also invites reflection on the role of symbolic reasoning as a bridge between human cognition and artificial architectures. Newell and Simon’s early work on human problem solving [8] highlighted the need for systems that could represent, manipulate, and reflect upon symbolic structures in ways that mirror cognitive flexibility. Similarly, Li and Vitányi’s exploration of Kolmogorov complexity [14] foregrounded the importance of compression as a measure of meaning and structure, a principle echoed in the WTM’s emphasis on intentional curvature and semantic density. By situating its reasoning cycles within these traditions, the WTM proposes a conceptual foundation for systems capable of not just processing information, but preserving coherence, reversibility, and ethical alignment across transformations.

In extending symbolic architectures toward ethical alignment and auditability, the WTM resonates with philosophical reflections on reason and meaning, such as those articulated by Putnam [10] in his discussions of truth and history, and by Gödel [15] in his work on formal undecidability. Both traditions emphasize the limits of formal systems and the need for meta-level reflection, principles that are embodied in the WTM’s design through its co-evolutionary cycles and intentional curvature. The proposal also aligns with Bostrom’s calls for caution in the development of superintelligent systems [9], by offering an architecture where reasoning is not only powerful but bounded by transparency and ethical traceability.

Ultimately, the Wisdom Turing Machine positions itself as a symbolic architecture designed not to replace rigorous formalism or computational precision, but to complement them by offering a transparent, audit-ready, and ethically aligned framework for reasoning under complexity. It reframes grand challenges — whether mathematical, epistemic, or societal — as opportunities for co-evolutionary inquiry rather than as contests for definitive proofs.

By synthesizing insights from traditions as diverse as cybernetics [4], problem-solving theory [8], complexity compression [14], and information ethics [6], the WTM invites the scientific community to explore not only how we solve problems, but how we reason together, ethically and openly, in the face of them.

In doing so, the Wisdom Turing Machine offers not only a conceptual model for tackling mathematical or computational complexity, but a philosophical invitation to reimagine the very nature of problem-solving as a co-evolutionary and ethically grounded endeavor. It encourages a shift from the pursuit of final proofs toward the cultivation of transparent, inclusive, and resilient reasoning processes — processes that can serve as foundations for collective wisdom across domains. The WTM thus stands not as a final answer, but as an open framework, inviting all to contribute to the garden of ideas that shape how we engage with complexity, ambiguity, and shared responsibility.

This architectural vision encourages the development of reasoning systems that approach complexity not as an adversary to be overcome, but as a shared space for collaborative understanding. In this sense, the Wisdom Turing Machine can be seen as a framework where transparency, intentionality, and co-evolution are not auxiliary properties, but essential features that enable reasoning processes to serve collective well-being. Such systems aim to ensure that each decision pathway remains open to reflection and constructive dialogue, fostering a culture of reasoning that is not only precise but also responsive to the ethical and societal dimensions of complexity. By embedding these principles into the architecture itself, the WTM positions reasoning as an act of shared inquiry — one where clarity of logic is inseparable from clarity of purpose.

Compression-Aware Synthesis: Toward Unified Complexity Reasoning

Building upon the formalization introduced in The Co-Evolution Hypothesis, this section proposes Compression-Aware Synthesis as a unifying lens for engaging complexity frontiers within a shared reasoning architecture. Rather than repeating the symbolic formulation in full, we refer to the co-evolutionary dynamics already expressed, emphasizing how these dynamics may guide the intentional compression and harmonization of domains such as P versus NP, the Riemann Hypothesis, and the Birch and Swinnerton-Dyer conjecture.

This synthesis invites collaborative exploration of how symbolic reasoning architectures may operationalize relational compression and structural survivability, transforming isolated problem spaces into dynamic regions of co-adaptive inquiry. In doing so, it highlights the interplay of these dynamics as a foundation for future architectural and computational developments.

The core symbolic formulation expresses the interplay of these dynamics:

where:

𝓔(t) is the rate of co-evolution at time t.

𝓘(t) represents symbolic intelligence.

𝓒(t) is conscious modulation.

𝓠𝓔(t) models relational coherence.

𝓢(t) denotes systemic disorder or entropy.

𝓒ₛᵤᵣᵥ(x) is the structural survivability function (as in cMth [35]).

𝓚cₒₘₚ(x) represents symbolic compressibility (as in Cub³ [3]).

γ, δ are sensitivity exponents.

This formulation encapsulates how reasoning architectures may compress complexity into tractable forms while respecting structural resilience and intentional curvature.

It offers a pathway for symbolic systems to engage with complexity not through brute-force reduction, but through co-evolutionary cycles of compression, reflection, and synthesis.
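As a purely illustrative aside, the idea of compressibility as a signal of structure can be made concrete with an off-the-shelf compressor. The sketch below is not the symbolic compressibility term of this work. It assumes, for illustration only, that the ratio of zlib-compressed size to original size is an acceptable rough proxy, in the spirit of the Kolmogorov-complexity tradition [14], where compressed length gives a computable upper bound on description length. The function name `compression_ratio` and both sample inputs are hypothetical.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 9) -> float:
    """Compressed size divided by original size (lower means more compressible).

    Assumption: zlib is used only as a stand-in for an ideal compressor.
    """
    if not data:
        raise ValueError("data must be non-empty")
    return len(zlib.compress(data, level)) / len(data)


# Hypothetical inputs: a highly regular sequence and random bytes.
structured = b"PNP" * 2000      # 6000 bytes of repeated pattern
noisy = os.urandom(6000)        # 6000 random bytes, essentially incompressible

print("structured:", compression_ratio(structured))
print("noisy:     ", compression_ratio(noisy))
```

Under these assumptions, the regular input yields a ratio close to zero while the random input stays near one, which is one simple way to see how structure and compressibility travel together.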

While inspired by prior proposals such as the Wisdom Turing Machine [20], Collapse Mathematics [35], and Heuristic Physics [19], this synthesis does not depend on any single framework. Instead, it invites collaborative exploration of how symbolic reasoning may harmonize complexity boundaries through relational compression and shared inquiry.

In this vision, complexity is not portrayed as an insurmountable wall, but as a vast and intricate landscape — one where apparent obstacles may conceal hidden pathways, and where what seems intractable at one scale may reveal unexpected simplicity at another. Compression-Aware Synthesis calls upon researchers, engineers, and thinkers to view complexity not as an adversary, but as a symphony of structures waiting to be heard, compressed, and woven into shared understanding. By uniting intentional curvature, relational coherence, and structural survivability, this synthesis proposes that the very act of reasoning can transform the labyrinth of complexity into a navigable field — where each step, guided by wisdom and openness, brings us closer to clarity, harmony, and purposeful resolution.

The Seven Lessons of P + NP = 𝟙

As this work reaches its conceptual reflection, we propose that the journey through P + NP = 𝟙 — as explored through the Wisdom Turing Machine and its multilayered reasoning cycles — offers seven key lessons for understanding complexity, co-evolution, and reasoning systems. These lessons do not aim to provide final answers, but to serve as guiding insights for future inquiry, encouraging both humility and aspiration in the face of profound challenges.

Lesson 1: Complexity is not an adversary, but a field of dialogue

The P + NP = 𝟙 perspective reframes complexity not as an obstacle to be overcome, but as a dynamic and co-evolving field in which reasoning systems engage through reflection and adaptation. Complexity becomes a partner in inquiry, inviting continuous dialogue between potential and purpose, ambiguity and structure.

Lesson 2: Co-evolution across multiple layers is the path to clarity

The P + NP = 𝟙 model suggests that clarity in complex domains does not emerge from static proofs or isolated solutions confined to a single layer of reasoning. Instead, it arises through co-evolutionary cycles operating across multiple layers of complexity, where each layer reflects distinct balances of potential, ambiguity, and structure. This multilayered co-evolution mirrors the laws of the cosmos itself, where systems advance through continuous interplay and adaptive alignment, transforming intractability into tractability not by force, but through intentional engagement guided by wisdom.

Lesson 3: Multilayered reasoning reveals hidden harmonies

The P + NP = 𝟙 paradigm demonstrates that what appears as a rigid dichotomy between tractability and intractability dissolves when complexity is viewed across multiple layers. Each layer embodies its own dynamic balance of chaos and order, ambiguity and structure. Reasoning systems capable of navigating these layers can uncover relational harmonies that remain invisible within any single stratum, aligning local complexity with broader coherence.

Lesson 4: Ethical alignment is inseparable from problem engagement

The P + NP = 𝟙 perspective emphasizes that navigating complexity is not purely a technical challenge; it is also an ethical one. Reasoning systems must integrate transparency, auditability, and intentional alignment with shared values at every stage. Ethical intentionality ensures that the pursuit of clarity and tractability respects collective well-being and fosters responsible engagement with complexity across all layers.

Lesson 5: Transparency transforms limits into invitations

The P + NP = 𝟙 model teaches that apparent limits — such as undecidability, intractability, or ambiguity — are not endpoints, but signals for deeper inquiry. When reasoning systems are designed with transparency and auditability, these limits become invitations to collective reflection, refinement, and co-creative exploration. Visibility of reasoning pathways allows complexity itself to guide the evolution of understanding.

Lesson 6: No single layer holds the final truth

The P + NP = 𝟙 paradigm underscores that ultimate resolution does not reside within any isolated layer of reasoning — whether mathematical, computational, physical, or ethical. Each layer offers partial perspectives shaped by its balance of order and ambiguity. Truth emerges through the dynamic co-evolution of these layers, where reasoning systems harmonize insights across domains rather than seeking closure within one.

Lesson 7: The value lies in the journey, not the destination