1. Introduction

Quantum mechanics allows a physical system to exist in a superposition of multiple eigenstates, yet upon measurement, we observe only a single, definite outcome. How and why does a quantum superposition transform into a concrete reality during measurement? This question, known as the quantum measurement problem, remains a fundamental and contentious issue in quantum theory. Several interpretations have been proposed to address this question, each with distinct conceptual strengths and weaknesses:

Copenhagen Interpretation: This interpretation postulates an explicit division between the quantum and classical domains. Upon measurement, the wavefunction collapses non-unitarily into a single eigenstate, with probabilities dictated by the Born rule. While widely used due to its simplicity and practical utility, the Copenhagen interpretation does not provide a clear dynamical mechanism for collapse, relying instead on an ambiguous "Heisenberg cut" separating quantum from classical behavior (von Neumann, Mathematical Foundations of Quantum Mechanics, 1932). As a result, it introduces two fundamentally different types of evolution (unitary evolution governed by Schrödinger’s equation, and non-unitary collapse) without a physically explicit criterion to distinguish when collapse occurs.

Many-Worlds Interpretation (MWI): Everett's formulation (Everett, The Relative State Formulation of Quantum Mechanics, 1957) avoids wavefunction collapse altogether, proposing that all possible outcomes simultaneously occur in a continuously branching universal wavefunction, effectively creating a multiverse. This interpretation removes the special role of measurement and maintains purely unitary dynamics. However, it raises significant conceptual issues, such as justifying why observers experience a unique outcome and deriving the Born rule probabilities from the universal wavefunction’s structure. Despite attempts based on decision theory and typicality arguments, achieving consensus on the Born rule derivation remains challenging, leaving open fundamental questions about probability and observer identity (Everett, et al., 1973).

Objective Collapse Models: Theories like Ghirardi-Rimini-Weber (Ghirardi, Rimini, & Weber, 1986) and Continuous Spontaneous Localization (CSL) introduce new nonlinear and stochastic elements that spontaneously localize the wavefunction, producing collapse independent of observation. These models effectively solve the measurement problem by providing a physical mechanism for collapse, testable through empirical phenomena such as spontaneous heating and decoherence. However, these theories require introducing new physical parameters absent from standard quantum mechanics, often conflicting with symmetries like Lorentz invariance and raising questions regarding faster-than-light signaling and preferred reference frames. (Diósi, 1989) (Penrose, 1996) (Pearle, 1989)

Environment-Induced Decoherence: Though not an interpretation itself, decoherence (Zeh, 1970) (Zurek, Decoherence, einselection, and the quantum origins of the classical, 2003) is a physical process crucial to interpreting quantum mechanics. Decoherence describes how a quantum system interacting with a large environment rapidly loses coherence in a preferred basis, known as the "pointer basis," becoming effectively classical. However, decoherence alone does not produce a single definite outcome. Instead, it yields a classical statistical mixture of possible outcomes without specifying why only one is perceived. Decoherence thus shifts the measurement problem rather than fully resolving it, emphasizing the need for an additional criterion to transition from a decohered mixture to an actual observed outcome.

Relational and Epistemic Interpretations: Interpretations like Relational Quantum Mechanics (Rovelli, 1996) and Quantum Bayesianism (Fuchs, Mermin, & Schack, An introduction to QBism with an application to the locality of quantum mechanics, 2014) hold an epistemic view, interpreting the quantum state not as a physical entity but as reflecting an observer’s knowledge or beliefs. Collapse, therefore, becomes a Bayesian update of information upon measurement. While elegantly avoiding the need for physical collapse, these views raise questions about intersubjective agreement, why multiple observers consistently perceive identical outcomes, and may be accused of sidestepping rather than solving the measurement problem, particularly regarding why certain outcomes are realized and others are not.

Our work seeks to propose a framework retaining the universal validity of quantum dynamics, as in Many-Worlds and decoherence approaches, without introducing fundamentally new physics or ad hoc elements. We aim to provide a clear, quantitative criterion for the emergence of definite measurement outcomes, addressing the interpretive ambiguities of existing approaches. Our solution centers on the concept of thermodynamic irreversibility, positing wavefunction collapse as an emergent phenomenon governed by the Second Law of Thermodynamics. When a measurement interaction produces sufficient entropy (e.g., dissipating heat or entropy into an environment), entanglement becomes effectively irreversible, suppressing interference and establishing classical definiteness.

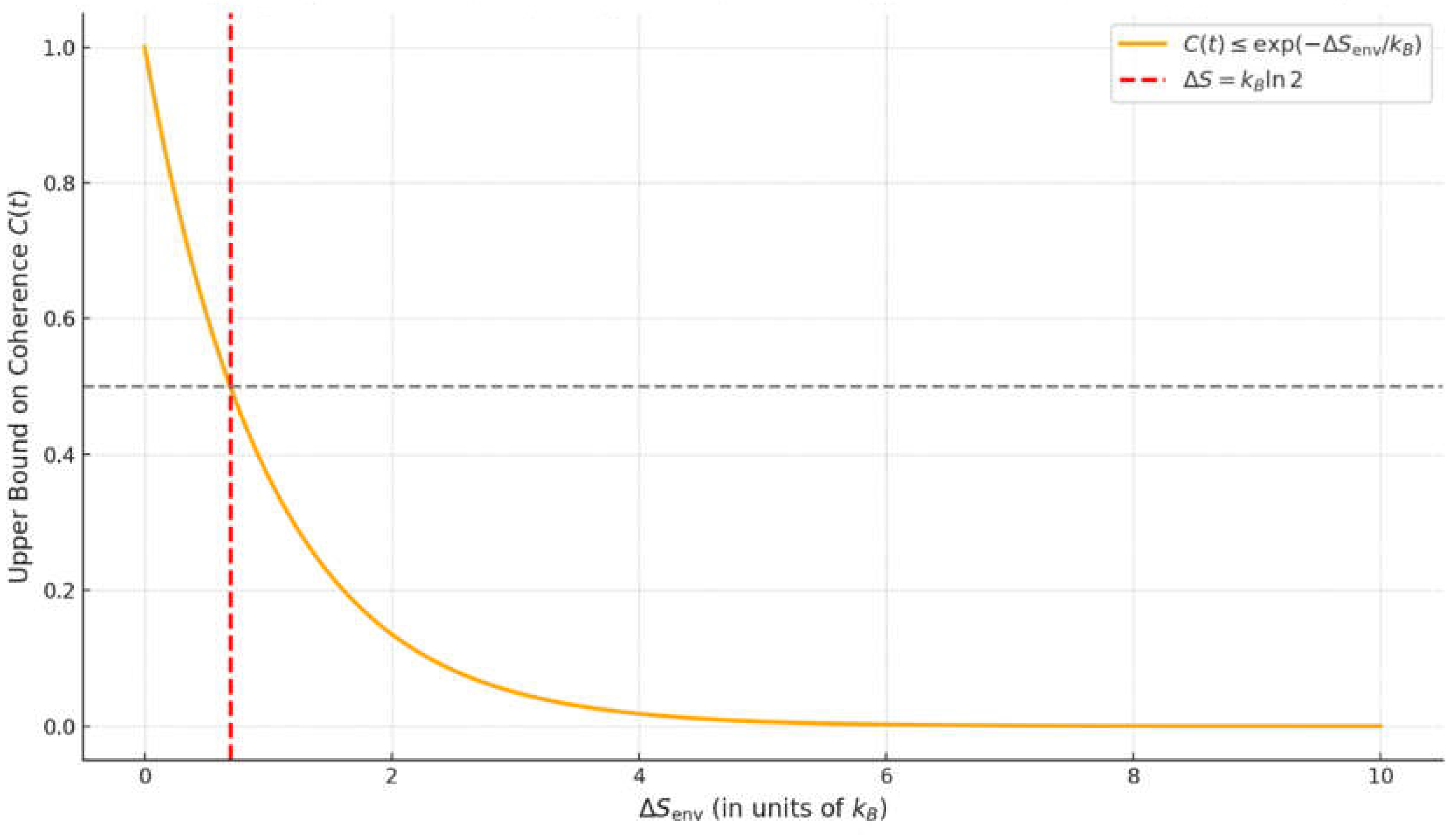

This thermodynamic collapse criterion is expressed rigorously via an entropy-coherence inequality, demonstrating that quantum coherence decays exponentially with entropy production in the environment:

$$\mathcal{C}(t) \lesssim \exp\!\left[-\frac{\Delta S_{\mathrm{env}}(t)}{k_B}\right].$$

Here, $\mathcal{C}(t)$ is the coherence remaining in the system and $\Delta S_{\mathrm{env}}(t)$ is the entropy produced in the environment up to time $t$. Each qubit of information recorded in the environment carries at least $k_B \ln 2$ of entropy, marking the threshold at which the environment fully encodes which-path information, thereby irreversibly destroying interference. Below this entropy threshold, coherence could, in principle, be restored, as exemplified by quantum eraser experiments. Beyond this threshold, however, recoherence becomes exponentially improbable, and classical definiteness emerges robustly.

By invoking fluctuation theorems like Jarzynski's equality and Crooks' relation, we quantify the practical irreversibility of measurement outcomes, formally linking wavefunction collapse to statistical thermodynamics. Our interpretation builds upon decoherence theory and Quantum Darwinism (Zurek, 2009), providing an explicit entropy-based boundary between reversible quantum dynamics and irreversible classical outcomes.

In the subsequent sections, we:

Formally describe open quantum system dynamics, decoherence, and entropy generation;

Derive the entropy-coherence inequality from foundational principles;

Outline operational methods to measure environmental entropy via calorimetry and photon scattering;

Derive the Born rule from symmetry considerations (envariance) and maximum entropy inference, without new physical assumptions;

Analyze observer-relative collapse, resolving paradoxes like Wigner's Friend and delayed-choice interference via entropy-based consistency;

Demonstrate relativistic consistency using the Tomonaga-Schwinger formalism, ensuring frame-independent collapse tied to local entropy;

Propose an optomechanical experiment to empirically test the entropy-collapse relationship, linking entropy production to measurable interference visibility.

Thus, our Entropy-Induced Collapse interpretation provides a coherent, falsifiable explanation for wavefunction collapse, grounded in established thermodynamics and quantum information theory. Rather than asserting new physics or ambiguous observer roles, it offers a clear, quantitative mechanism whereby quantum possibilities irreversibly become classical facts through entropy generation.

2. Theory and Literature Review

2.1. Measurement and the Problem of Outcomes

In standard quantum mechanics, the state $|\psi(t)\rangle$ of an isolated system evolves under the Schrödinger equation:

$$i\hbar\,\frac{\partial}{\partial t}|\psi(t)\rangle = \hat{H}\,|\psi(t)\rangle,$$

resulting in deterministic, unitary evolution. This evolution preserves quantum superpositions and is time-reversible: if $|\psi(0)\rangle$ evolves to $|\psi(t)\rangle$, one can, in principle, reverse the Hamiltonian dynamics to restore the original state.

However, the quantum measurement problem arises because this unitary evolution predicts superpositions of measurement outcomes rather than definite results. For instance, consider a quantum system initially in the superposition $|\psi\rangle_S = c_1|s_1\rangle + c_2|s_2\rangle$, where $|s_1\rangle$ and $|s_2\rangle$ are orthonormal eigenstates of the measured observable. The measuring apparatus $M$, initially in a "ready" state $|M_0\rangle$, interacts unitarily with the system to yield a combined, entangled state:

$$\big(c_1|s_1\rangle + c_2|s_2\rangle\big)\otimes|M_0\rangle \;\longrightarrow\; c_1|s_1\rangle|M_1\rangle + c_2|s_2\rangle|M_2\rangle.$$

Here $|M_1\rangle$ and $|M_2\rangle$ are apparatus pointer states that record outcomes 1 and 2, respectively. This entangled state is often referred to as the ‘measurement superposition’ or a ‘Schrödinger cat state’ involving the system $S$ and measurement device $M$. While a valid solution of the Schrödinger equation, it contradicts experience: we never perceive superpositions.

The traditional Copenhagen interpretation resolves this discrepancy by introducing a dual dynamics: during measurement, the wavefunction non-unitarily "collapses" to one outcome $i$, with probability $p_i = |c_i|^2$ (the squared magnitude of that outcome's amplitude $c_i$), as given by the Born rule. While pragmatically successful (von Neumann, Mathematical Foundations of Quantum Mechanics, 1932), this approach lacks a dynamical explanation for collapse, relying on an ambiguous division (the "Heisenberg cut") between quantum and classical regimes. Bell criticized this ad hoc dualism as conceptually problematic, leaving "measurement" ill-defined at the fundamental level (Bell, 1990).
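As a minimal sketch of the premeasurement step described above, the following Python fragment applies a permutation-type unitary to a two-outcome superposition and reads off the Born weights. The three-level pointer (labels 0 for "ready", 1 and 2 for the records), the function name `premeasure`, and the example amplitudes are illustrative assumptions, not part of the formal development.

```python
import math

def premeasure(state):
    """Apply a von Neumann premeasurement to amplitudes keyed by (system, pointer).

    For system label s the map swaps pointer labels 0 (ready) and s and leaves
    the third label alone. It permutes basis states, so it is unitary.
    """
    out = {}
    for (s, m), amp in state.items():
        if m == 0:
            new_m = s          # ready pointer records the outcome label
        elif m == s:
            new_m = 0          # inverse move, which makes the map a permutation
        else:
            new_m = m          # remaining basis state is left untouched
        out[(s, new_m)] = out.get((s, new_m), 0.0) + amp
    return out

# Example amplitudes (assumed values): probabilities 0.3 and 0.7.
c1, c2 = math.sqrt(0.3), math.sqrt(0.7)
initial = {(1, 0): c1, (2, 0): c2}   # (c1|s1> + c2|s2>) (x) |M0>
final = premeasure(initial)          # c1|s1,M1> + c2|s2,M2>

# Born weights attached to each perfectly correlated (system, pointer) pair.
born = {key: abs(amp) ** 2 for key, amp in final.items()}
print(final)
print(born)
```

Because the map is its own inverse, applying `premeasure` a second time restores the initial product state, which is the reversibility that the later entropy criterion is meant to rule out in practice.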

2.2. Decoherence and the Appearance of Classicality

Our approach makes no modification to Schrödinger evolution. Instead, we explain why observers effectively see stochastic state reduction in practice. Beginning in the 1970s and 1980s, Zeh, Zurek, and others developed the theory of environment-induced decoherence. Decoherence considers the system (S) coupled not just to an apparatus memory (M), but also to a large environment (E). Though the global state remains a superposition, the environment rapidly entangles with the system or apparatus, effectively measuring it. For example, air molecules, stray photons, and internal degrees of freedom of the apparatus become correlated with whether its pointer is in $|M_1\rangle$ or $|M_2\rangle$.

Denote the (normalized) environment states that correlate with each outcome by $|E_i\rangle$ (these might represent distinct states of billions of environment particles). With $c_i$ the amplitudes of the initial superposition, the total state after a very short decoherence time $\tau_D$ would be

$$|\Psi\rangle = c_1|s_1\rangle|M_1\rangle|E_1\rangle + c_2|s_2\rangle|M_2\rangle|E_2\rangle. \tag{2}$$

The reduced density matrix of the system and memory, obtained by tracing out the environment, becomes:

$$\rho_{SM} = \mathrm{Tr}_E\,|\Psi\rangle\langle\Psi| = \sum_{i,j} c_i c_j^{*}\,\langle E_j|E_i\rangle\;|s_i\rangle\langle s_j|\otimes|M_i\rangle\langle M_j|.$$

In fact, for a macroscopic environment, $\langle E_i|E_j\rangle \approx 0$ for $i \neq j$ (environment states for different outcomes are practically orthogonal), and the interference terms are negligible. Thus, decoherence yields exactly the type of mixture one would expect after collapse, at least for local observations of $S$ and $M$. Decoherence is extremely effective: even a single scattered photon can carry away enough phase information to visibly reduce interference of a massive object, and a macroscopic apparatus interacting with a thermal environment will decohere in incredibly short times (nanoseconds or less) for any discernible superposition. This explains why Schrödinger cat states are not seen in everyday life: they quickly decohere into apparently classical mixtures. However, a key point is that decoherence by itself does not select a single outcome: the state (2) is still a superposition (albeit of many degrees of freedom). If we include the environment in our description, no collapse has occurred; the exact quantum state remains a pure state with full information of both possibilities.
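To make the suppression of interference terms concrete, the sketch below assumes a deliberately simple environment model: $n$ independent particles, each ending in one of two states with the same real single-particle overlap, so that the total overlap $\langle E_2|E_1\rangle$ is that overlap raised to the power $n$. The model, the overlap value 0.9, and the function names are assumptions made for illustration only.

```python
import math

def env_overlap(per_particle_overlap, n_particles):
    """Overlap <E2|E1> for n independent particles with identical overlaps."""
    return per_particle_overlap ** n_particles

def reduced_density_matrix(c1, c2, overlap):
    """2x2 reduced state in the basis {|s1,M1>, |s2,M2>} after tracing out E.

    The off-diagonal entry is c1 * conj(c2) * <E2|E1>.
    """
    off = c1 * c2.conjugate() * overlap
    return [[abs(c1) ** 2, off],
            [off.conjugate(), abs(c2) ** 2]]

c1 = c2 = 1 / math.sqrt(2)           # equal-weight superposition (assumed)
for n in (0, 1, 10, 100):
    rho = reduced_density_matrix(c1, c2, env_overlap(0.9, n))
    # Populations stay fixed; only the coherence |rho_12| shrinks with n.
    print(n, rho[0][0], abs(rho[0][1]))
```

In this toy model the populations never change, while the coherence falls geometrically with the number of scattered particles, which is the sense in which the mixture is only apparent: the information has moved into the environment rather than disappeared.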

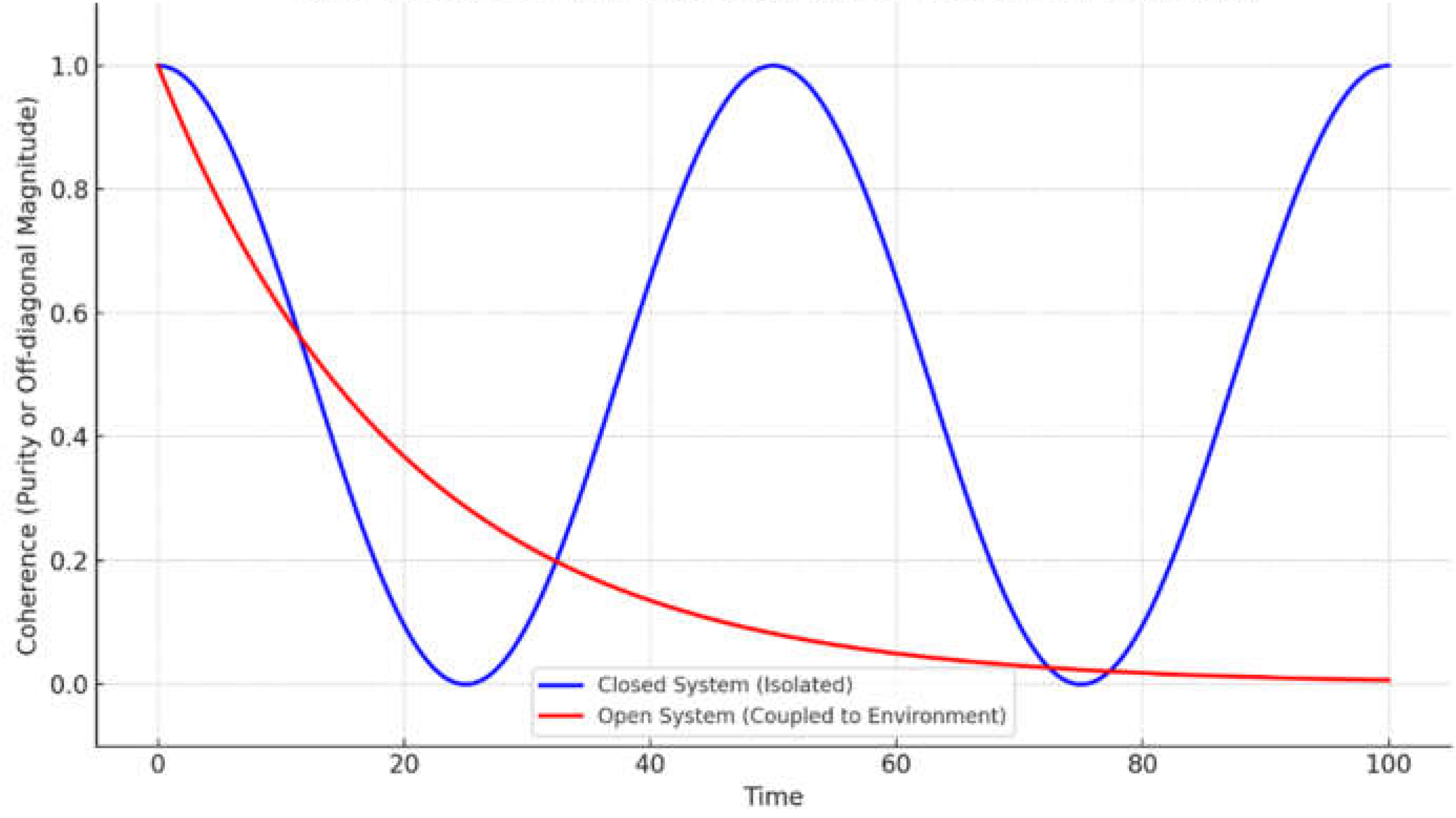

In principle, an uber-observer (like Wigner in the Wigner’s Friend thought experiment) who could control the environment might recohere the branches. For example, if one could make E interact in a way that causes the environment states correlated with the two outcomes, $|E_1\rangle$ and $|E_2\rangle$, to overlap again, the superposition (2) could be recombined, revealing interference between the outcomes. This is essentially what happens in quantum eraser experiments: if which-path information encoded in an environment-like degree of freedom is erased, interference fringes reappear. This shows that while decoherence is necessary for the appearance of collapse, it is not sufficient. It yields a diagonal reduced density matrix in the pointer basis, but this classicality is reversible in principle as long as unitarity and full information preservation hold.

2.3. Thermodynamic Irreversibility and Collapse Criterion

Our contribution is to identify irreversibility as the missing ingredient that distinguishes apparent collapse from mere decoherence. We assert that when the dispersal of information into the environment becomes thermodynamically irreversible, the superposition is, for all observational purposes, collapsed. This is not a new dynamical law, but a statement about how typical entropy-producing interactions are effectively irreversible. The distinction between reversible and irreversible decoherence can be quantified by entropy. Consider the entropy of the environment (or apparatus) after the measurement interaction. If the measurement only entangles a small number of environmental degrees of freedom, or encodes phase reversibly, the von Neumann entropy remains low, and reversal is, in principle, possible. If instead the entropy significantly increases (for example, many particles gain bits of which-path information, or heat is dissipated into a bath), then reversal would require reducing entropy, achievable only via rare fluctuations or external work. Indeed, no process that leaves a stable record can yield a total entropy change $\Delta S < 0$. Hence, any measurement that imprints a lasting outcome increases total entropy.

Collapse criterion formalization: We define the time of collapse $t_c$ (from a given observer’s perspective) as the moment when environmental entropy has increased sufficiently to make further unitary evolution incapable of restoring the initial coherence. Symbolically, one could say:

$$\Delta S_{\mathrm{env}}(t_c) \geq S_{\mathrm{crit}},$$

where $S_{\mathrm{crit}}$ is typically on the order of a few $k_B$, often approximated as $S_{\mathrm{crit}} \approx k_B \ln 2$ per qubit of recorded information. Once this threshold is crossed, the state may be treated as an incoherent mixture for any future observer who shares the same thermodynamic arrow of time.

To illustrate: if a single photon escapes into the environment carrying one bit of which-path information, $\Delta S \sim k_B \ln 2$ is reached. Beyond this point, interference is effectively lost unless that photon is intercepted and its information erased. In a typical measurement, $\Delta S \gg k_B \ln 2$: the apparatus dumps a large amount of heat into a reservoir, worth a macroscopic amount of entropy, making reversal hopeless. This criterion aligns with intuition: a ‘measurement’ amplifies a microscopic uncertainty into many macroscopic degrees of freedom (apparatus, lab, etc.), increasing entropy in the process. This is why one cannot ‘un-measure’ a typical outcome. This criterion sharpens the quantum-classical boundary: it is not about the mass of an object or some arbitrary Heisenberg cut, but about entropy and information flow. A microscopic system measured in a way that does not create a lot of entropy (e.g. a weak measurement that barely disturbs a system) might be partially reversible (one could “unmeasure” it), a possibility that is being explored experimentally in quantum information. Conversely, even a single qubit becomes irreversibly collapsed if its result is recorded in a thermodynamically irreversible way, such as being printed and burned, dispersing the information irretrievably.
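The criterion can be sketched as a simple threshold test. The fragment below assumes the threshold $k_B \ln 2$ per recorded bit and the Clausius estimate $\Delta S = Q/T$ for heat released into a bath at fixed temperature; the function names and the example heat of $10^{-12}$ J at 300 K are hypothetical values chosen only to show the orders of magnitude involved.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def entropy_threshold(bits=1):
    """Assumed collapse threshold: k_B ln 2 per recorded bit, in J/K."""
    return bits * K_B * math.log(2)

def entropy_from_heat(heat_joules, temperature_kelvin):
    """Clausius estimate dS = Q/T for heat dumped into a bath at fixed T."""
    return heat_joules / temperature_kelvin

def is_effectively_collapsed(delta_s_env, bits=1):
    """True when the environmental entropy gain meets the assumed threshold."""
    return delta_s_env >= entropy_threshold(bits)

# Sub-threshold case: half a bit's worth of entropy (still erasable in principle).
print(is_effectively_collapsed(0.5 * K_B * math.log(2)))

# Hypothetical detector event: 1e-12 J released into a 300 K bath.
delta_s = entropy_from_heat(1e-12, 300.0)
print(is_effectively_collapsed(delta_s), delta_s / entropy_threshold())
```

Even this tiny assumed heat release corresponds to hundreds of millions of bits' worth of entropy, which illustrates why ordinary detection events sit far beyond the reversible regime.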

2.4. Interpretative Synthesis and Clarification

Our model integrates aspects of several existing interpretations:

Like Many-Worlds (Everett, The Relative State Formulation of Quantum Mechanics, 1957), we maintain universal unitarity and no fundamental wavefunction collapse. However, we reject an ontology of infinite equally real branches, proposing instead that "collapse" arises when an outcome becomes thermodynamically irreversible from the observer’s perspective.

Borrowing from Relational Quantum Mechanics (Rovelli, 1996), we emphasize that collapse is observer-relative, occurring when a specific observer acquires irreversible thermodynamic records. Different observers may initially assign differing quantum states, but they reconcile their descriptions upon mutual interactions and shared irreversible entropy production.

Unlike Objective Collapse Models (GRW, CSL, Penrose), we introduce no new stochastic dynamics or hidden physics. Our predictions align strictly with standard quantum mechanics and known thermodynamics, avoiding the conceptual and empirical complications these models face (Bassi, Lochan, Satin, Singh, & Ulbricht, 2013).

Compared to QBism (Fuchs, Mermin, & Schack, An introduction to QBism with an application to the locality of quantum mechanics, 2014), we agree that wavefunction collapse corresponds to epistemic Bayesian updating. However, we retain the wavefunction’s ontic, objective character. Thermodynamic irreversibility, rather than subjective belief, constrains observers, ensuring intersubjective consistency.

In sum, we propose an entropy-induced collapse framework:

Quantum measurement outcomes arise from thermodynamic irreversibility.

Decoherence alone is insufficient; irreversibility distinguishes collapse.

The collapse criterion is rigorously defined by environmental entropy thresholds.

Subsequent sections will rigorously formalize these claims, demonstrate relativistic consistency, analyze observer-dependent collapse scenarios (e.g., Wigner’s Friend), and propose empirical tests to verify the model’s predictions, ensuring falsifiability and alignment with established physics.

3. Formalism: Entropy, Coherence Relations and Dynamics

3.1. Measurement Interaction and Entropy Production

Consider a quantum system $S$ measured by an apparatus $M$ (serving as the observer’s memory register), and coupled to an environment $E$. We denote the orthonormal eigenstates of the measured observable (and pointer basis of $M$) as $|s_i\rangle$ and $|M_i\rangle$, respectively, where $i$ labels the outcome (for simplicity, assume a discrete, nondegenerate spectrum). The total initial state (system + memory + environment) at time $t = 0$ is prepared as:

$$|\Psi(0)\rangle = \Big(\sum_i c_i\,|s_i\rangle\Big)\otimes|M_0\rangle\otimes|E_0\rangle.$$

Here $|M_0\rangle$ is the ready state of the apparatus (before recording any result), and $|E_0\rangle$ is the environment’s initial state. We assume $M$ and $E$ start in low-entropy states, e.g. pure or equilibrium reference states. The coefficients $c_i$ are the probability amplitudes for each outcome in the initial superposition (so the Born rule would later emerge as $p_i = |c_i|^2$).

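The first stage of measurement is a controlled unitary $U_{SM}$ between $S$ and $M$ that correlates the memory with the system’s state. Schematically, $U_{SM}$ is defined by

$$U_{SM}\,|s_i\rangle|M_0\rangle = |s_i\rangle|M_i\rangle.$$

This is the von Neumann premeasurement, which produces an entangled state across $S$ and $M$ at time $t_1$:

$$|\Psi_{SM}(t_1)\rangle = \sum_i c_i\,|s_i\rangle|M_i\rangle. \tag{5}$$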

At this stage, no environmental interaction has occurred. If $M$ were microscopic, the state would retain full coherence and be entirely reversible. However, $M$ is macroscopic, so its many internal degrees of freedom act as conduits to the external environment, causing decoherence to rapidly set in. Following $U_{SM}$, the memory’s state (now correlated with $S$) interacts with the environment $E$ (which could include the apparatus’s thermal bath, photons, air molecules, etc.). We can consider a unitary $U_{ME}$ that entangles $M$ (and $S$ indirectly) with $E$. Typically, this could be modeled as each pointer state $|M_i\rangle$ becoming correlated with an orthogonal environment state $|E_i\rangle$:

$$U_{ME}\,|M_i\rangle|E_0\rangle = |M_i\rangle|E_i\rangle,$$

such that $\langle E_i|E_j\rangle \approx \delta_{ij}$ (different outcomes lead to effectively orthogonal environment states). The total state of $S$, $M$, and $E$ for $t > t_1$ (after decoherence, i.e. once the decoherence time has elapsed) is then:

$$|\Psi(t)\rangle = \sum_i c_i\,|s_i\rangle|M_i\rangle|E_i\rangle. \tag{6}$$

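To analyze what an observer can access, we trace out $E$ to obtain the reduced density matrix of the system and memory:

$$\rho_{SM} = \mathrm{Tr}_E\,|\Psi\rangle\langle\Psi| = \sum_{i,j} c_i c_j^{*}\,\langle E_j|E_i\rangle\;|s_i\rangle\langle s_j|\otimes|M_i\rangle\langle M_j| \;\approx\; \sum_i |c_i|^2\,|s_i\rangle\langle s_i|\otimes|M_i\rangle\langle M_i|. \tag{7}$$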

This partial trace effectively suppresses off-diagonal coherence terms in the pointer basis, yielding an apparent classical mixture of outcomes. We emphasize explicitly that no physical collapse of the wavefunction occurs; the global quantum state remains pure and fully entangled. The loss of coherence is observer-relative, arising due to practical inaccessibility of the detailed environmental states.

This environment-induced decoherence mechanism clearly demonstrates how classical outcomes naturally emerge from quantum entanglement combined with partial trace operations over inaccessible degrees of freedom. However, the classicality produced here remains practically irreversible, rather than fundamentally irreversible, as coherence recovery (recoherence) remains theoretically possible under conditions where environmental states could be controlled or reversed, though practically infeasible in realistic macroscopic environments.
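The partial trace over the environment can be checked numerically in a small example. The sketch below assumes a two-outcome measurement with a two-dimensional environment whose record states are separated by an angle $\theta$ (so $\theta = 0$ means no which-outcome record and $\theta = \pi/2$ a perfect one). The parametrization and the helper names are assumptions introduced for illustration.

```python
import math

def env_states_for_angle(theta):
    """Two normalized environment states with overlap cos(theta)."""
    return [[1.0, 0.0], [math.cos(theta), math.sin(theta)]]

def partial_trace_env(coeffs, env_states):
    """Reduced state of S+M in the basis {|s_i, M_i>} for sum_i c_i |s_i, M_i>|E_i>.

    Entry (i, j) equals c_i * conj(c_j) * <E_j|E_i>.
    """
    n = len(coeffs)
    rho = [[0j] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # <E_j|E_i> = sum_k conj(E_j[k]) * E_i[k]
            overlap = sum(a * b.conjugate()
                          for a, b in zip(env_states[i], env_states[j]))
            rho[i][j] = coeffs[i] * coeffs[j].conjugate() * overlap
    return rho

coeffs = [1 / math.sqrt(2), 1 / math.sqrt(2)]   # assumed equal amplitudes
for theta in (0.0, math.pi / 4, math.pi / 2):
    rho = partial_trace_env(coeffs, env_states_for_angle(theta))
    print(round(theta, 3), abs(rho[0][0]), abs(rho[0][1]))
```

The diagonal entries stay at the Born weights for every $\theta$, while the off-diagonal entry interpolates between full coherence and none, matching the role of the environment overlap in the reduced density matrix.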

3.2. Thermodynamic Decoherence and the Coherence-Entropy Bound

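Following the interaction with the environment, the off-diagonal coherence terms in the reduced state $\rho_{SM}$ vanish due to approximate orthogonality of environmental states, $\langle E_i|E_j\rangle \approx \delta_{ij}$. Hence, $\rho_{SM}$ is (approximately) diagonal in the pointer basis with probabilities $p_i = |c_i|^2$ for each outcome: $\rho_{SM} \approx \sum_i p_i\,|s_i\rangle\langle s_i|\otimes|M_i\rangle\langle M_i|$.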

The von Neumann entropy of $\rho_{SM}$ has thus increased from 0 (pure initial state) to:

$$S(\rho_{SM}) = -k_B\,\mathrm{Tr}\big(\rho_{SM}\ln\rho_{SM}\big) = -k_B\sum_i p_i \ln p_i,$$

which equals the Shannon entropy of the outcome distribution (in units of $k_B$). This entropy quantifies our uncertainty when observing only $S$ and $M$, and equals the entanglement entropy between $SM$ and $E$, since the global state is pure. The environment $E$ has gained the same entropy (if $E$ was initially pure) because the global state is still pure, so $S(\rho_E) = S(\rho_{SM})$. At this stage, we have reproduced the standard decoherence result: the system+apparatus is in an apparent classical mixture. However, this apparent classicality is reversible in principle. An observer with access to $E$ could, in theory, restore the off-diagonal coherence by undoing the entanglement correlations. The entropy $S(\rho_{SM})$ is often called entanglement entropy; it is not true thermodynamic entropy because the total state is still pure.
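As a small numerical check of the statement that the entropy of the decohered state equals the Shannon entropy of the outcome distribution, the sketch below works in units of $k_B$ (nats) for a two-outcome measurement. The closed-form eigenvalues of a $2\times 2$ density matrix are used, and the function names and example probabilities are assumptions for illustration.

```python
import math

def shannon_entropy_nats(probs):
    """Shannon entropy -sum p ln p of an outcome distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def qubit_entropy_nats(p1, off_abs):
    """Von Neumann entropy (units of k_B) of [[p1, c], [c*, 1 - p1]], |c| = off_abs."""
    p2 = 1.0 - p1
    # Eigenvalues of a 2x2 Hermitian matrix with unit trace: 1/2 +/- radius.
    radius = math.sqrt(((p1 - p2) / 2) ** 2 + off_abs ** 2)
    eigenvalues = (0.5 + radius, 0.5 - radius)
    return -sum(x * math.log(x) for x in eigenvalues if x > 1e-15)

p1 = 0.3                                  # assumed outcome probability
full_coherence = math.sqrt(p1 * (1 - p1)) # |c1 c2*| for a pure superposition

print(qubit_entropy_nats(p1, full_coherence))   # pure state: entropy ~ 0
print(qubit_entropy_nats(p1, 0.0))              # fully decohered mixture
print(shannon_entropy_nats([p1, 1 - p1]))       # matches the line above
```

Intermediate values of the off-diagonal magnitude give entropies between these two limits, which is the partial-decoherence regime in which recoherence remains possible in principle.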

Recoherence remains possible because the outcome-distinguishing information resides in correlations with $E$; if these are reversed, the system can return to a pure state. Now, consider the case where the interaction is thermodynamically irreversible, e.g., $M$ dumps heat into $E$ or triggers macroscopic environmental differences. In such a case, the environment’s entropy truly increases (not just entanglement entropy, but thermodynamic entropy). For example, suppose $M$ had to perform amplification that released an amount $Q$ of heat into a reservoir (a part of $E$). That heat increases $E$’s entropy by $\Delta S = Q/T$ (if at temperature $T$) (Landauer, 1961). Or, $M$ might trigger a macroscopically different state in the environment (like different patterns of air molecule motion or different photon emissions), effectively increasing the coarse-grained entropy. The effective total state is no longer pure if $E$ is modeled as initially mixed, or is partially traced over because of its large number of uncontrolled degrees of freedom. Alternatively, we incorporate a statistical mixture in $E$’s initial state to mimic a thermal environment, so that the final state is mixed, not a single pure wavefunction like (6). To handle this formally, one can model the interaction as a completely positive trace-preserving (CPTP) quantum channel acting on $SM$ (with $E$ traced out).

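Such a channel $\Lambda$ takes the pre-decoherence state $\rho_{SM}(t_1)$ to $\rho_{SM}(t) = \Lambda[\rho_{SM}(t_1)]$, which is given by (7). Since $\Lambda$ involves coupling to a large environment (possibly at finite temperature), it will in general be irreversible (non-unitary) for $SM$. One can often approximate it by a Lindblad master equation for the $SM$ density matrix:

$$\frac{d\rho_{SM}}{dt} = -\frac{i}{\hbar}\,[H, \rho_{SM}] + \mathcal{D}[\rho_{SM}], \qquad \mathcal{D}[\rho] = \sum_k \Big(L_k\,\rho\,L_k^{\dagger} - \tfrac{1}{2}\{L_k^{\dagger}L_k, \rho\}\Big),$$

where $\mathcal{D}$ is a Lindblad dissipator that produces decoherence and damping. For dissipators of the pure-dephasing (unital) type relevant to measurement, the Lindblad form guarantees that the entropy $S(\rho_{SM})$ increases (or stays constant) as a result of the dissipative part (this is the quantum analog of the $H$-theorem for entropy in open systems). In that case one can rigorously show $dS(\rho_{SM})/dt \geq 0$; more generally, for a Lindbladian evolution with a stationary state (for instance one satisfying detailed balance), the entropy production is non-negative and $\rho_{SM}$ approaches that equilibrium monotonically in relative entropy (Spohn, 1978).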
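A minimal numerical sketch of this behavior, under stated assumptions: a single qubit with $H = 0$ and one jump operator $L = \sqrt{\gamma/2}\,\sigma_z$ (pure dephasing), integrated with a forward-Euler step. For this choice the dissipator reduces to $\mathcal{D}[\rho] = (\gamma/2)(\sigma_z\rho\sigma_z - \rho)$, which damps the off-diagonal entries at rate $\gamma$ and leaves populations fixed. The model, rate, step size, and function names are illustrative and are not taken from the text.

```python
import math

def dephasing_rhs(rho, gamma):
    """Right-hand side (gamma/2) * (sigma_z rho sigma_z - rho), with H = 0."""
    # sigma_z rho sigma_z flips the sign of the off-diagonal entries only.
    return [[0.0, -gamma * rho[0][1]],
            [-gamma * rho[1][0], 0.0]]

def evolve(rho, gamma, dt, steps):
    """Forward-Euler integration; returns the list of states at every step."""
    history = [rho]
    for _ in range(steps):
        rhs = dephasing_rhs(rho, gamma)
        rho = [[rho[i][j] + dt * rhs[i][j] for j in range(2)] for i in range(2)]
        history.append(rho)
    return history

def entropy_nats(rho):
    """Von Neumann entropy of a 2x2 density matrix, in units of k_B."""
    radius = math.sqrt(((rho[0][0] - rho[1][1]) / 2) ** 2 + abs(rho[0][1]) ** 2)
    return -sum(x * math.log(x) for x in (0.5 + radius, 0.5 - radius) if x > 1e-15)

rho0 = [[0.5, 0.5], [0.5, 0.5]]              # pure equal superposition
history = evolve(rho0, gamma=1.0, dt=0.001, steps=5000)
entropies = [entropy_nats(r) for r in history]

print(abs(history[-1][0][1]), 0.5 * math.exp(-5.0))  # numeric vs analytic coherence
print(entropies[0], entropies[-1], math.log(2))      # entropy rises toward ln 2
```

In this unital example the entropy rises monotonically from zero toward $\ln 2$; a dissipator with a non-maximally-mixed stationary state (such as amplitude damping) would not share that property, which is why the dephasing assumption is stated explicitly.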

In our case, the equilibrium (long-time) state of $SM$ under continuous measurement interactions would be a diagonal mixture (maximally mixed over whatever outcomes remain possible). We can now articulate an entropy-coherence tradeoff. Consider a measure of coherence in the system. A simple measure is the off-diagonal norm, e.g. $\sum_{i \neq j} |\rho_{ij}|$, or even the sum of absolute squares of the off-diagonal elements. We define coherence via standard measures such as the $\ell_1$-norm of coherence, $C_{\ell_1}(\rho) = \sum_{i \neq j} |\rho_{ij}|$, or the Frobenius norm of the off-diagonal part (Baumgratz, Cramer, & Plenio, 2014).

For pure states like (5), this coherence measure is maximal (of order 1). For the mixture (7), it is nearly 0. A more invariant measure of quantum coherence is the purity $\gamma = \mathrm{Tr}(\rho_{SM}^2)$. Initially $\gamma = 1$ (pure state). After decoherence (7), $\gamma = \sum_i p_i^2 < 1$ (unless one outcome had probability 1). Purity and von Neumann entropy are inversely related: both are functions of the spectrum of $\rho_{SM}$, and in two-outcome systems they are functionally equivalent. We can thus say qualitatively that as the entropy of $SM$ increases from 0 to $-k_B \sum_i p_i \ln p_i$, the coherence/purity decreases. If $E$ starts in a pure reference state, so that the global state remains pure, then $S(\rho_{SM})$ is the entanglement entropy between $SM$ and $E$, and coherence can, in principle, be restored. If instead $E$ is effectively a bath that irreversibly gains entropy $\Delta S_{\mathrm{env}}$, then $S(\rho_{SM})$ will not decrease even if we later act on $SM$ alone; some entropy has flowed to $E$ (and is inaccessible).

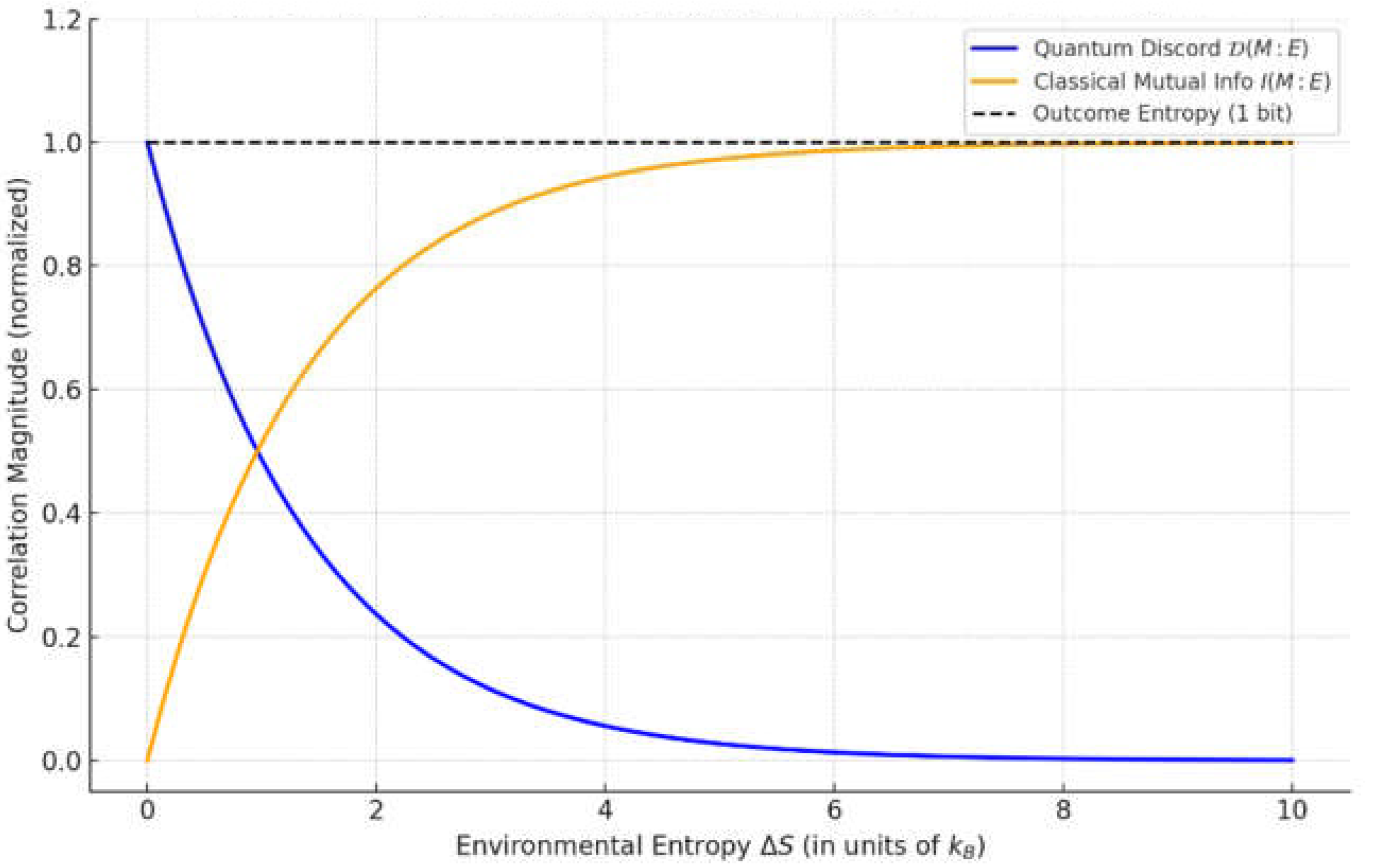

We propose that a useful quantitative indicator of collapse is the quantum discord between the memory $M$ and the rest (system $S$ or environment $E$) (Ollivier & Zurek, 2001). Discord is a measure of quantum correlations (more general than entanglement) between two subsystems X and Y. A state with 0 discord is essentially classical with respect to one of the subsystems (it can be written as a statistical mixture of product states that are orthogonal on one side). Prior to collapse, quantum discord between $M$ and $E$ is nonzero, reflecting entanglement. After effective collapse, these correlations become classical as outcome information is redundantly encoded in the environment. Generic decoherence processes tend to drive discord to zero. A related result by Shabani and Lidar shows that if the initial state has no discord (is classical on $E$’s side), then the reduced dynamics is completely positive, with no ambiguity in the dynamical map (Shabani & Lidar, 2009).

As measurement concludes, the joint state approaches a form with vanishing quantum discord, since the environment has decohered the memory into distinguishable outcome states. We can thus state:

Proposition 1 (Discord-Entropy relation):

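Let $\rho_{ME}(t)$ be the reduced density matrix of the memory and environment during a measurement interaction, and let $D(M{:}E)$ denote its quantum discord. Then:

If residual coherence is present, $D(M{:}E) > 0$.

As the environment’s entropy production $\Delta S_{\mathrm{env}}$ grows, the discord $D(M{:}E) \to 0$.

In the limit $\Delta S_{\mathrm{env}} \gg k_B$, the system is effectively classical.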

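In the limit where the environment has produced a large entropy $\Delta S_{\mathrm{env}} \gg k_B$, the post-measurement correlations are effectively classical, $\rho_{ME} \approx \sum_i p_i\,|M_i\rangle\langle M_i| \otimes \rho_E^{(i)}$ (with macroscopically distinguishable $\rho_E^{(i)}$ and $\rho_E^{(j)}$ for $i \neq j$). Indeed, one can check that this state has zero discord (it is a classical-quantum state). Before that point, in the partial decoherence regime, one can find a basis where $\rho_{ME}$ has some off-diagonal elements between $|M_i\rangle$ and $|M_j\rangle$, indicating $D(M{:}E) > 0$.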
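The zero-discord claim for a classical-quantum state can be verified in a few lines. The computation below uses the standard definition of discord with the measurement performed on the memory side; the symbols $H(p)$, $I$, $J$, and $\rho_E^{(i)}$ are notation assumed here for the check rather than taken from the original derivation.

```latex
% Classical-quantum state with orthonormal pointer states |M_i>:
\rho_{ME} = \sum_i p_i\, |M_i\rangle\langle M_i| \otimes \rho_E^{(i)},
\qquad \rho_E = \sum_i p_i\, \rho_E^{(i)} .

% Entropies, with H(p) = -\sum_i p_i \ln p_i (block-diagonal structure):
S(\rho_M) = H(p), \qquad
S(\rho_{ME}) = H(p) + \sum_i p_i\, S\big(\rho_E^{(i)}\big) .

% Quantum mutual information:
I(M{:}E) = S(\rho_M) + S(\rho_E) - S(\rho_{ME})
         = S(\rho_E) - \sum_i p_i\, S\big(\rho_E^{(i)}\big) .

% Classical correlation obtained by measuring M in the pointer basis:
J_{\{M_i\}}(M{:}E) = S(\rho_E) - \sum_i p_i\, S\big(\rho_E^{(i)}\big) = I(M{:}E) .

% Discord is the gap between I and the best measurement-based J, and is non-negative:
0 \le D(M{:}E) = I(M{:}E) - \max_{\Pi^M} J_{\Pi^M}(M{:}E)
  \le I(M{:}E) - J_{\{M_i\}}(M{:}E) = 0
\;\;\Longrightarrow\;\; D(M{:}E) = 0 .
```

The pointer-basis measurement already saturates the mutual information, so no optimization over measurements is needed for states of this form.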

In summary, the vanishing of discord coincides with effective wavefunction collapse. We can connect these ideas with a more thermodynamic statement. An informative scenario to analyze is the application of fluctuation theorems to the measurement process. Consider reversing a completed measurement. To successfully restore the coherent superposition, one must collect the information distributed in $E$ and feed it back in a controlled way, effectively performing erasure of the which-outcome information. According to Landauer’s principle, erasing one bit of logical information requires a minimum entropy increase of $k_B \ln 2$, corresponding to a work cost $W \geq k_B T \ln 2$ (Landauer, 1961). If the measurement generated an entropy $\Delta S$, then at minimum one must expend a work $T\,\Delta S$ to remove that entropy again.
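For a sense of scale, the Landauer bound can be evaluated directly. The sketch below assumes a bath at a fixed temperature and treats the minimum reversal work as $T\,\Delta S$; the function names and the choice of 300 K are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def landauer_work(bits, temperature_kelvin):
    """Minimum work in joules to erase `bits` of information: bits * k_B * T * ln 2."""
    return bits * K_B * temperature_kelvin * math.log(2)

def reversal_work(delta_s, temperature_kelvin):
    """Minimum work in joules to remove an entropy delta_s (J/K) at temperature T."""
    return temperature_kelvin * delta_s

one_bit = landauer_work(1, 300.0)
print(one_bit)                                        # about 2.87e-21 J per bit
print(reversal_work(K_B * math.log(2), 300.0))        # same quantity via T * dS
```

The per-bit cost is tiny, so the obstacle to reversing a macroscopic measurement is not the energy per bit but the number of bits that would have to be collected and erased in a controlled way.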

The Jarzynski equality states

$$\big\langle e^{-\beta W}\big\rangle = e^{-\beta\,\Delta F}$$

(where $\Delta F$ is the free energy difference, $\beta = 1/k_B T$, and $\langle\cdot\rangle$ denotes the average over the work distribution). In the context of measurements, it implies that on average one cannot do better than the second law, though rare single trajectories might temporarily violate it. The Crooks fluctuation theorem gives the relative probability of undoing a process. If a forward process (measurement) produces entropy $\Delta S$, then Crooks’ theorem implies that the probability of seeing a trajectory that decreases entropy by $\Delta S$ (i.e. the reverse) is exponentially small:

$$\frac{P_R(-\Delta S)}{P_F(+\Delta S)} = e^{-\Delta S/k_B}.$$

For $\Delta S$ much larger than a few $k_B$, this ratio is astronomically tiny. Thus, once a measurement has generated an entropy $\Delta S = N k_B$, the odds that it spontaneously “uncollapses” (recoheres) are of order $e^{-N}$, even if it were in principle possible. For macroscopic $N$, this is utterly negligible. In practice, interacting with a heat bath, one would have to perform extremely coordinated operations to get the entropy out; any random fluctuation is incredibly unlikely to bring the memory and environment back to their initial pure state. This formalizes the idea of irreversibility: although microscopic quantum theory is reversible, the probability of a spontaneous recoherence after entropy $\Delta S \gg k_B$ has been generated is effectively zero. We can sum up with an entropy-coherence inequality. While a rigorous general inequality would require specifying measures, an intuitive form is:

$$\mathcal{C}(t) \lesssim \exp\!\left[-\frac{\Delta S_{\mathrm{env}}(t)}{k_B}\right], \tag{9}$$

where $\mathcal{C}(t)$ is a measure of quantum coherence (off-diagonality) remaining in the system’s state (relative to the initial superposition basis), and $\Delta S_{\mathrm{env}}(t)$ is the entropy produced in the environment up to time $t$.
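To give a feel for these exponentials, the sketch below evaluates both the Crooks reverse-to-forward ratio and an exponential coherence bound of the assumed form $\exp(-\Delta S_{\mathrm{env}}/k_B)$, with entropy expressed in units of $k_B$. The ratio is reported as a base-10 logarithm so that large entropies do not underflow. The function names and the sample entropy values are hypothetical.

```python
import math

def coherence_bound(delta_s_over_kb):
    """Assumed upper bound exp(-dS/k_B) on the remaining coherence."""
    return math.exp(-delta_s_over_kb)

def log10_reversal_ratio(delta_s_over_kb):
    """log10 of the Crooks ratio P_reverse / P_forward = exp(-dS/k_B)."""
    return -delta_s_over_kb / math.log(10)

# Sample entropies in units of k_B: one bit, ten, one hundred, and a large value.
for x in (math.log(2), 10.0, 100.0, 1e6):
    print(x, coherence_bound(x), log10_reversal_ratio(x))
```

One bit of entropy ($\ln 2$ in these units) leaves a bound of one half, while an entropy of only $100\,k_B$, still microscopic by laboratory standards, already suppresses the reversal ratio by more than forty orders of magnitude.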

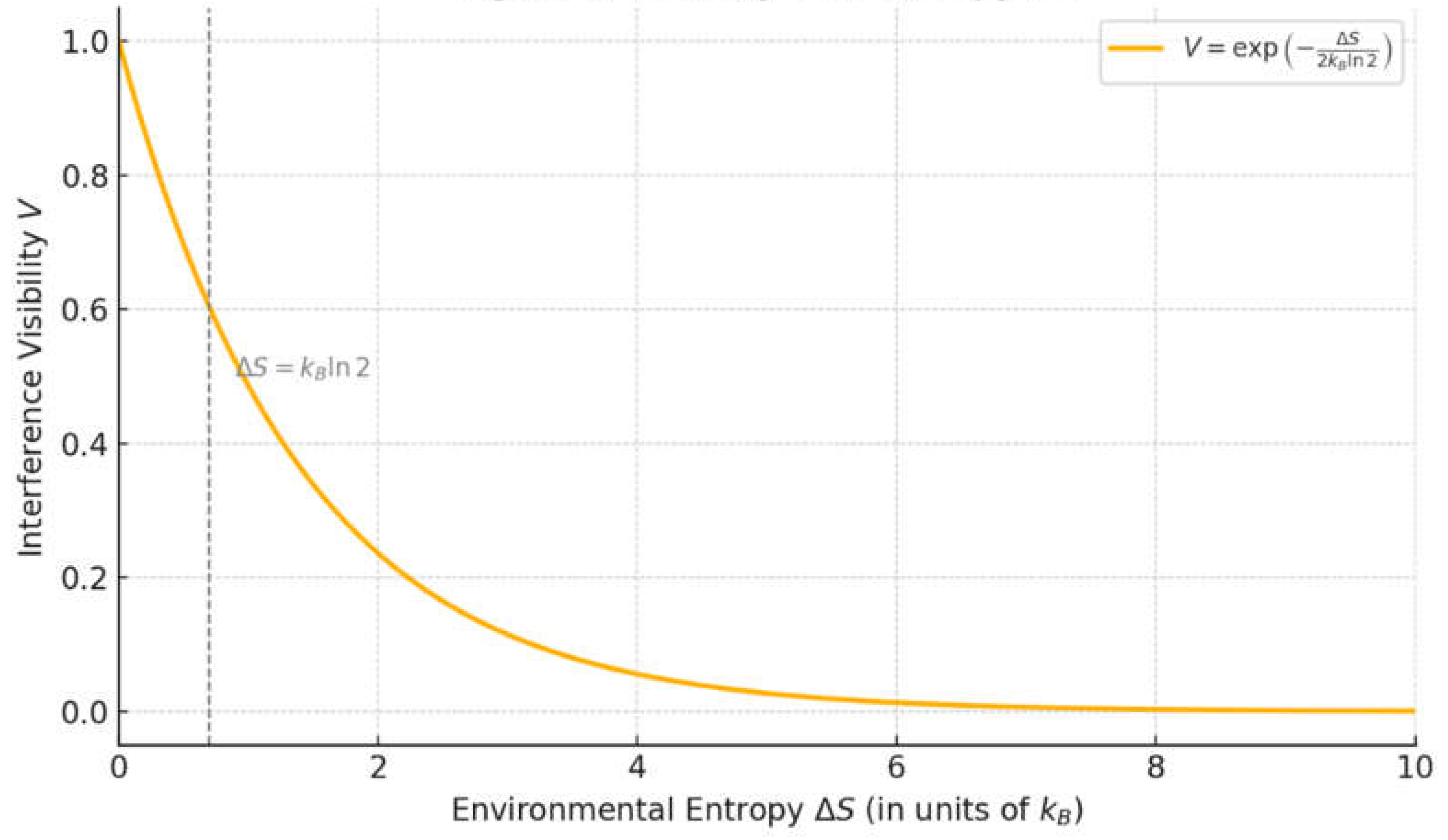

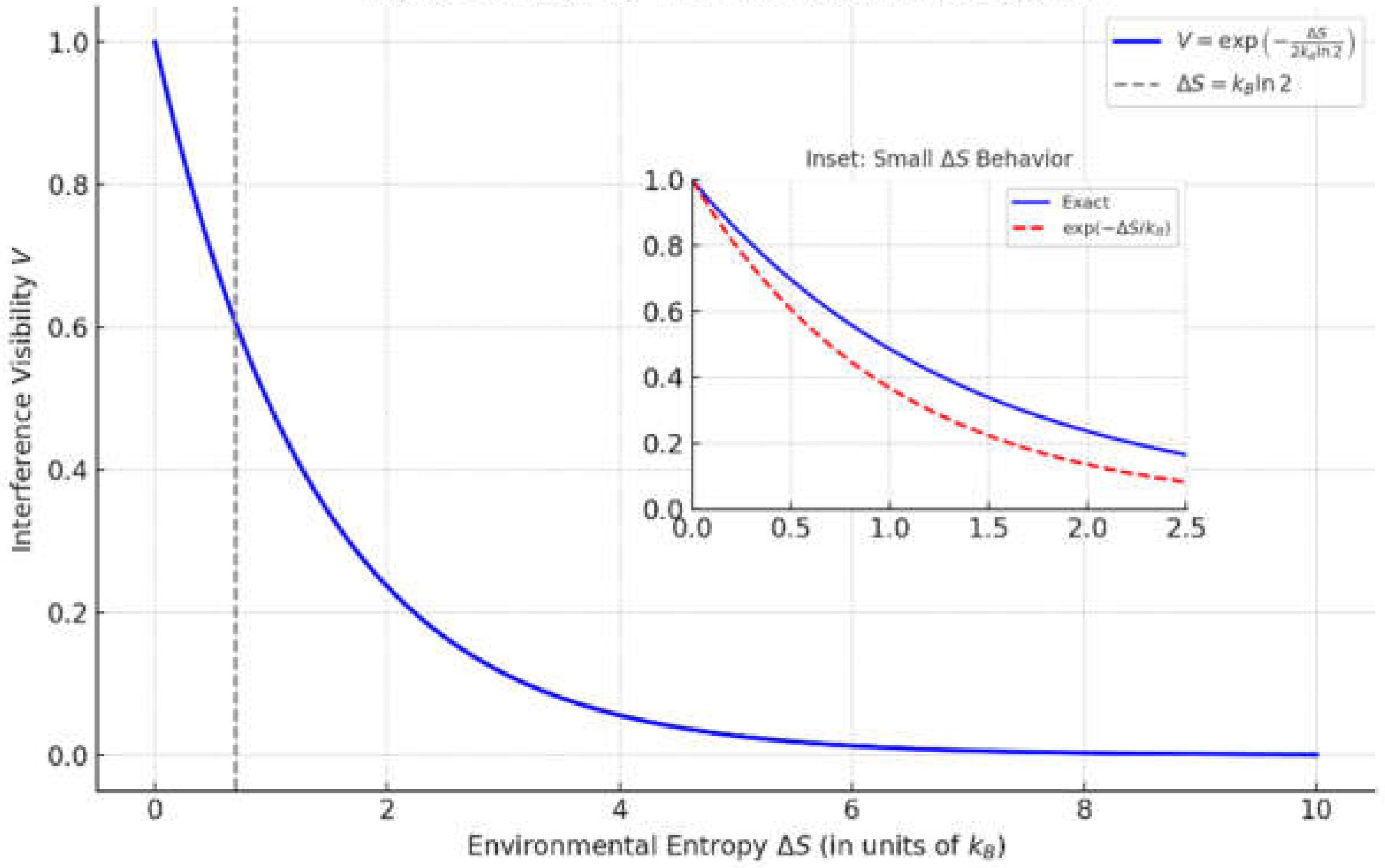

In the early stages ($\Delta S_{\mathrm{env}}$ small), coherence decays roughly linearly or quadratically (as is typical in decoherence theory (Zurek, Decoherence, einselection, and the quantum origins of the classical, 2003)). Once $\Delta S_{\mathrm{env}} \sim k_B$, coherence is suppressed to a few tens of percent at most. By the time $\Delta S_{\mathrm{env}} \gg k_B$ (many bits of entropy), $\mathcal{C}$ is exponentially tiny. This is consistent with detailed models in the decoherence literature (e.g., the “visibility” of interference fringes decays as $e^{-\Gamma}$, where $\Gamma$ is a decoherence functional often proportional to the number of emitted particles or to the entropy). Our inequality (9) encapsulates that beyond a certain entropy, the remaining coherence is bounded by an exponentially small factor. Thus, a large entropy production guarantees negligible coherence. In particular, if we set a threshold of $k_B \ln 2$ for one bit, we can say: if $\Delta S_{\mathrm{env}} < k_B \ln 2$, then it might be possible to erase the information and restore interference (the measurement has not fully collapsed). If $\Delta S_{\mathrm{env}} \geq k_B \ln 2$, at least one bit's worth of entropy is in the environment; in principle one bit could still be erased, but typically the actual $\Delta S_{\mathrm{env}}$ is orders of magnitude larger.

For a precise statement: in a measurement that writes $n$ bits of information (distinguishing $2^n$ outcomes), at least $n\,k_B \ln 2$ of entropy must be produced somewhere. Usually much more is produced in a macroscopic apparatus for one bit, but that is the fundamental lower bound. Therefore, effective collapse requires at least one bit of entropy. Conversely, any apparent wavefunction collapse that is accomplished with significantly less than $k_B \ln 2$ of entropy cost could potentially be reversible and should not be considered a true irreversible measurement.

3.3. Poincaré Recurrence and Its Suppression

A subtle issue in quantum mechanics is the Poincaré recurrence theorem. For a finite, closed quantum system with discrete energy spectrum, the state will evolve quasi-periodically and return arbitrarily close to its initial state after some (usually enormous) recurrence time $T_{\mathrm{rec}}$. This would imply that even after decoherence, given enough time, the system + environment could in principle recohere (the branches recombine and the wavefunction “uncollapses”), albeit after a time that might far exceed the age of the universe. While this is a theoretical possibility in a finite, closed universe, does it undermine our claim that wavefunction collapse is effectively permanent? The key lies in the scale of $T_{\mathrm{rec}}$ relative to any practical timescale. For an environment with $N$ effective degrees of freedom, the Hilbert space dimension is extremely large (exponential in $N$), and the recurrence time grows exponentially, or even super-exponentially, with $N$. For example, a system of $N$ particles has a Hilbert space dimension exponential in $N$ and a recurrence time of roughly that order or larger, in units of characteristic time steps. For $N$ qubits, the Hilbert space dimension is $2^N$, and the recurrence time typically grows at least in proportion to that dimension, and often exponentially in it. If $N$ is of Avogadro-number scale ($\sim 10^{23}$), $T_{\mathrm{rec}}$ is absurdly huge.

In the thermodynamic limit, the recurrence time diverges as the environment size $N \to \infty$; thus, an infinite environment will never recohere, and the evolution is effectively irreversible. This is analogous to how an ideal gas in a box (finite $N$) will theoretically have recurrences (Zermelo’s recurrence objection), but for macroscopic $N$ those recurrences occur only after fantastically long times. These timescales exceed any cosmological bound and are thus physically irrelevant. No observer will wait that long, and any slight perturbation breaks the perfect recurrence. In our quantum case, any coupling to external degrees of freedom (the universe is not perfectly closed) will destroy the exact recurrence. Thus, Poincaré recurrences are an extreme FAPP phenomenon: theoretically real, but practically irrelevant.

Our entropy criterion makes this quantitative. According to Crooks’ fluctuation theorem, the probability of a spontaneous recurrence, i.e. a trajectory that reduces the entropy by $\Delta S$, is suppressed relative to the forward process by a factor of approximately $e^{-\Delta S/k_B}$ (Crooks, 1999). For macroscopic entropy increases, with $\Delta S$ many orders of magnitude larger than $k_B$, the reverse probability becomes astronomically small, effectively zero. Thus, while the underlying quantum dynamics is formally time-symmetric, practical asymmetry, manifesting as irreversibility, arises due to the sheer size of the accessible state space. This statistical irreversibility validates the use of the Second Law in quantum contexts and justifies treating wavefunction collapse as effectively permanent for all practical purposes.
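To give a feel for the numbers, the following minimal sketch evaluates the suppression factor in base-10 logarithms, assuming the standard fluctuation-theorem form $e^{-\Delta S/k_B}$ and an entropy expressed in bits ($\Delta S = b\,k_B \ln 2$). The function names are illustrative choices, not notation from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def entropy_from_bits(entropy_bits: float) -> float:
    """Thermodynamic entropy (J/K) corresponding to a number of bits."""
    return entropy_bits * K_B * math.log(2.0)


def log10_reversal_probability(entropy_bits: float) -> float:
    """log10 of exp(-dS/k_B) for dS = entropy_bits * k_B * ln 2.

    Since exp(-b ln 2) = 2**(-b), the result is simply -b * log10(2).
    Working in logarithms avoids floating-point underflow for large b.
    """
    return -entropy_bits * math.log10(2.0)


if __name__ == "__main__":
    for bits in (1.0, 10.0, 100.0, 1e23):
        print(f"{bits:g} bits -> log10(P_reverse) = "
              f"{log10_reversal_probability(bits):.3g}")
```

Under these assumptions a single bit is reversed with relative weight one half, whereas an Avogadro-scale number of bits gives an exponent of order $-10^{22}$ in base 10.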

One could formalize this by looking at the fidelity $F(t) = |\langle \Psi(0) | \Psi(t) \rangle|^2$ of the total state. At $t = 0$, the fidelity is unity. Following decoherence, the system’s state becomes nearly orthogonal to the initial one, and the fidelity drops to near zero, especially if the environment states correlated with outcomes are orthogonal. Over extremely long timescales, the fidelity may exhibit rare peaks corresponding to partial Poincaré recurrences. The expected recurrence time can be estimated from the entropy or the state-space volume. If entropy $\Delta S$ is produced, the effective dimension of the accessible state space is, by Boltzmann’s relation, of order $e^{\Delta S/k_B}$ (Boltzmann, 1909). Since the recurrence time grows with the volume of the accessible Hilbert space, and for generic spectra exponentially in its dimension, it becomes for macroscopic systems a doubly exponential function of the entropy.

For example, for an entropy production comparable to that associated with 100 molecules, the recurrence time becomes hyperastronomical, far beyond any conceivable physical timescale. Therefore, once the entropy produced is large compared with $k_B$, the likelihood of branch recombination via recurrence becomes negligible. Collapse is thus practically irreversible.

In conclusion, our thermodynamic interpretation remains fully consistent with Poincaré’s theorem. While a closed system may, in theory, return arbitrarily close to its original state, such a recurrence would require a time so vast, and a reversal so precise, that it poses no practical challenge to our criterion. Indeed, it supports our view that collapse is not a fundamental process but one that emerges effectively for all practical purposes (FAPP). As Bell emphasized, any satisfactory resolution requires a precise definition of FAPP. In our case, this means: “irreversible except on timescales exponentially exceeding any reasonable multiple of the age of the universe,” a standard robust enough to warrant calling the process irreversible.

3.4. Relativistic Covariance with Tomonaga-Schwinger Formalism

We now address how to formulate our entropy-based collapse criterion in a way that is consistent with relativistic principles. A common concern for collapse-based interpretations is their apparent nonlocality: for instance, if two particles are entangled across light-years and one is measured, does collapse instantaneously affect the other, seemingly violating causality? In standard quantum theory, no physical signal is involved: correlations are revealed upon comparison, but each local outcome is random. Our interpretation preserves this feature: because collapse is not a physical process but an emergent one tied to entropy production, there is no superluminal propagation of physical effects. The appearance of collapse is frame-dependent, as in Relational QM (RQM) or the Many-Worlds Interpretation (MWI). Suppose Alice and Bob share an entangled pair. In Alice’s rest frame, when she performs a measurement, entropy is generated locally, and she can regard the state as having collapsed at that moment. In Bob’s frame, it may appear that his measurement occurred first. But since both observers’ conclusions depend on local entropy generation, and any eventual comparison requires subluminal communication, no causal paradox arises. The ordering of collapse is observer-relative, not physically absolute.

The condition “sufficient entropy has been generated” can be phrased in a covariant way: one can examine the quantum state on a space-like hypersurface. Using the Tomonaga-Schwinger formalism, one evolves the quantum state by moving a space-like surface through spacetime, rather than using a single time parameter for all space (Tomonaga, 1946) (Schwinger, 1948). The state $|\Psi[\sigma]\rangle$ is the state of the system on the hypersurface $\sigma$. Each observer traces a world-line through spacetime, interacting with the system and generating entropy locally through measurement-like events. Different Lorentz observers may slice spacetime differently, but if they are considering the same physical situation, the entanglement structure and entropy distribution will be such that all observers agree on invariant facts: for instance, an outcome recorded into many photons radiating outward is an invariant scenario.

Ghose & Home (1991) showed that the Tomonaga-Schwinger formalism can describe Einstein-Podolsky-Rosen (EPR) correlations covariantly, delineating measurement completion on one side and its instantaneous but acausal effect on the wavefunction of the other, in a way consistent with relativity. In our terms, one might say: on any given space-like slice after Alice’s measurement, the global state will be a decohered, entangled state including Alice’s environment. The reduced state for Bob’s particle will appear collapsed to any observer whose hypersurface places Alice’s measurement event in the past light cone. There is no invariant instantaneous “collapse moment”; what is invariant is the Heisenberg-picture correlation between the two outcomes, evaluated on a joint slice.

This has been extensively discussed in the context of relativistic quantum mechanics: the measurement outcome on one side and the conditional state on the other are connected via nonlocal correlations, but these do not entail causal violations (Maudlin, 2011) (Eberhard & Ross, 1989). Our entropy-based criterion adds no new physical content but offers interpretive clarity: each observer updates their quantum state description at the point where a local interaction has produced irretrievable entropy. This update proceeds via the global quantum state defined on a space-like hypersurface, allowing it to be expressed in a Lorentz-invariant formalism, such as Tomonaga-Schwinger evolution. An observer whose frame has not yet intersected the entropic interaction will still see a superposition, but this is inconsequential, as once they cross the interaction region, they too will observe the associated entropy and reach the same conclusion. The upshot is that Lorentz covariance is preserved precisely because our framework avoids any physically propagating collapse mechanism. Each event (e.g. a detector firing) is localized and just entangles whatever is in its future light cone. Observers may temporarily disagree on whether collapse has occurred in their respective frames, but they will never disagree on observable outcomes when comparing records. This is analogous to how different observers in relativity can disagree on the time order of spacelike-separated events but never on causally connected ones (Taylor & Wheeler, 1992). Because collapse in our model is epistemic, triggered by thermodynamically irreversible record formation, it adheres to the principle of locality in the propagation and accessibility of physical information.

The same reasoning extends to quantum field theory (QFT), where particle measurements correspond to local interactions that entangle quantum field modes, often involving the vacuum, which possesses an infinite number of degrees of freedom. A detector click (excitation) usually involves emitting many quanta (e.g. phonons, photons), again an entropic event. Our entropy criterion also applies to field degrees of freedom: if a superposition of distinct field configurations leads to different particle number states or energy distributions that thermalize, effective collapse has occurred. Using Tomonaga-Schwinger, one can propagate the state consistently and see that no paradox arises. Our interpretation’s strength is that it does not require specifying an absolute simultaneity for collapse, which is a notorious problem for objective collapse models (some, like GRW, choose a preferred frame, violating relativity slightly; others try to formulate relativistic versions with considerable difficulty) (Bassi, Lochan, Satin, Singh, & Ulbricht, 2013). Because we do not treat collapse as a physical process, our approach entirely sidesteps the problem of defining simultaneity, as in relational and many-worlds interpretations.

We have thus established the formal underpinnings of our approach: unitary quantum mechanics plus a criterion of thermodynamic irreversibility. We saw that once entropy is generated, quantum coherence and discord vanish, and any revival is exponentially unlikely. In the next section, we derive the Born rule within this framework, showing that the usual probability postulate emerges from considering symmetry (envariance) and maximum entropy principles. This will further cement that no extra postulates are needed: the usual rules of quantum measurement can be derived given our understanding of what constitutes a measurement.

3.5. Derivation of the Born Rule from Entropy and Envariance

A central requirement for any interpretation that preserves the formalism of standard quantum mechanics is to explain the origin of the Born rule; that is, why the probability of obtaining outcome $i$ is given by the squared modulus $|c_i|^2$ of the corresponding amplitude for the state described in (4). In Everettian many-worlds interpretations, deriving the Born rule remains contentious. Various strategies (including decision theory, relative frequencies, and symmetry arguments) have been proposed, but consensus remains elusive (Wallace, 2012) (Deutsch, 1999). Here, we present a derivation that aligns with our entropy-based perspective, building on Zurek’s concept of environment-assisted invariance (envariance).

To recap, Zurek introduced envariance as a symmetry property of entangled states (Zurek, Probabilities from entanglement, Born’s rule from envariance, 2005). For instance, consider a maximally entangled pure state of system $S$ and environment $E$: $|\psi_{SE}\rangle = \frac{1}{\sqrt{d}} \sum_{i=1}^{d} |s_i\rangle |e_i\rangle$, where $d$ is the dimension of the support. If one applies a unitary transformation to $S$ and a corresponding inverse transformation to $E$, the global state remains unchanged (up to a global phase), implying the system’s state is envariant under that transformation. Thus, an observer with access only to $S$ has no way to tell which basis state is which; they must assign equal probabilities to the outcomes by symmetry (indifference). From this, one concludes $p_i = 1/d$ for equal coefficients. Then, by splitting amplitudes into rational ratios and invoking continuity (a sort of Gleason’s argument, or using the additivity of entropy), one can deduce $p_i = |c_i|^2$ for general coefficients $c_i$ (Gleason, 1957).
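The swap symmetry behind envariance can be checked on a toy example. The sketch below is illustrative only: it represents a two-party state in Schmidt form as a dictionary from basis-label pairs to amplitudes (a representation chosen here for brevity), swaps two labels on the system, applies the counter-swap on the environment, and tests whether the original state is recovered. All function names are hypothetical.

```python
from math import sqrt


def swap_labels(state, subsystem, a, b):
    """Swap basis labels a <-> b on subsystem 0 (system) or 1 (environment).

    `state` maps (system_label, environment_label) -> amplitude.
    """
    def relabel(x):
        return b if x == a else a if x == b else x

    out = {}
    for (s, e), amp in state.items():
        key = (relabel(s), e) if subsystem == 0 else (s, relabel(e))
        out[key] = out.get(key, 0.0) + amp
    return out


def same_state(u, v, tol=1e-12):
    """Component-wise equality (no global phase is needed for pure swaps)."""
    return all(abs(u.get(k, 0.0) - v.get(k, 0.0)) <= tol for k in set(u) | set(v))


def is_envariant_under_swap(state, a, b):
    """True if a swap on the system is undone by the counter-swap on the environment."""
    swapped = swap_labels(state, 0, a, b)
    restored = swap_labels(swapped, 1, a, b)
    return same_state(state, restored)


equal = {(0, 0): 1 / sqrt(2), (1, 1): 1 / sqrt(2)}
unequal = {(0, 0): sqrt(0.8), (1, 1): sqrt(0.2)}

if __name__ == "__main__":
    print(is_envariant_under_swap(equal, 0, 1))    # equal amplitudes
    print(is_envariant_under_swap(unequal, 0, 1))  # unequal amplitudes
```

Only the equal-amplitude state is restored by the counter-swap, which is the symmetry that forces equal probabilities in that case.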

We incorporate an entropy principle: in our interpretation, prior to collapse the observer’s knowledge is described by a density matrix like (7). Lacking any further information (from outside the system), the maximum entropy principle of statistical mechanics suggests the observer should assign probabilities that maximize their entropy of uncertainty given the constraints (Jaynes, 1957). If the only constraint is that the state is known to be (7) with weights $|c_i|^2$, then the probabilities are already determined as $p_i = |c_i|^2$. But if one were in a situation of complete ignorance about the coefficients (for example, the system is entangled with an inaccessible environment and one knows only the dimension of the support), one should assign equal probabilities (the principle of indifference, which in this quantum context is justified by envariance symmetry). This is strengthened by recognizing that an observer can only assign a definite outcome probability once the system is part of an effectively classical mixture, emergent through decoherence and entropy production.

Symmetry Argument with Rational Weights: Suppose the combined state (6) has two terms of equal amplitude and orthogonal environment states. By symmetry, there is no distinguishing feature between outcomes 1 and 2 (the physical situation is symmetric under swapping those outcomes along with swapping environment states). Therefore, the probability an observer should assign to outcome 1 equals that of outcome 2, and they must sum to 1, giving $p_1 = p_2 = 1/2$. In the case of rational squared amplitudes, e.g., $|c_1|^2 : |c_2|^2 = m : n$ with integers $m$ and $n$, we can construct $m + n$ equal-amplitude sub-states ($m$ copies of type outcome-1, $n$ copies of outcome-2). By symmetry and frequency reasoning, the probabilities are $p_1 = m/(m+n)$ and $p_2 = n/(m+n)$. This is the frequency interpretation at the level of rational weights. Taking limits to irrational ratios gives the same conclusion (continuity argument). This is essentially Gleason’s theorem reasoning but can be made intuitive. Zurek’s derivation goes through these steps in detail.
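The counting step of the rational-weights argument can be written out explicitly. The following sketch assumes the squared amplitudes are given as exact rational numbers; it splits each outcome into equal-weight fine-grained branches and assigns each branch the same probability by symmetry. The function name and return format are illustrative choices.

```python
from fractions import Fraction
from math import gcd


def fine_grained_probabilities(weights):
    """Fine-graining argument for rational squared amplitudes.

    `weights` are the squared amplitudes (rationals summing to 1).
    Each outcome i is split into m_i equal-weight branches so that all
    M = sum(m_i) branches carry the same weight 1/M. Swap symmetry then
    gives every branch probability 1/M, so outcome i has probability m_i/M.
    Returns (probabilities, branch_counts).
    """
    weights = [Fraction(w) for w in weights]
    if any(w < 0 for w in weights) or sum(weights) != 1:
        raise ValueError("weights must be non-negative rationals summing to 1")

    # Smallest M such that every weight is an integer multiple of 1/M.
    total_branches = 1
    for w in weights:
        total_branches = total_branches * w.denominator // gcd(total_branches, w.denominator)

    counts = [(w * total_branches).numerator for w in weights]
    probabilities = [Fraction(c, total_branches) for c in counts]
    return probabilities, counts


if __name__ == "__main__":
    probs, counts = fine_grained_probabilities([Fraction(2, 3), Fraction(1, 3)])
    print(counts, probs)
```

By construction the resulting probabilities coincide with the input squared amplitudes, which is exactly the content of the argument: equal-weight counting reproduces the amplitude-squared rule for rational weights.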

The Born rule can also be derived by demanding that the observer’s probability assignment maximizes the Shannon entropy $H = -\sum_i p_i \ln p_i$, subject to normalization and consistency constraints imposed by the quantum state. Zurek noted that probabilities must be an “objective reflection of the state”. Suppose we have a pure quantum state $|\psi\rangle = \sum_i c_i |i\rangle$. Then, the assigned probabilities $p_i$ should depend only on the amplitudes $c_i$, and in the special case where all $d$ amplitudes are equal, they should reduce to $p_i = 1/d$. This follows from the principle of maximum entropy: for a fixed number of outcomes, the entropy is maximized when all $p_i$ are equal. When the amplitudes differ, the maximum entropy distribution under the constraint of the known state structure must reflect those differences, implying that $p_i$ is a function of $|c_i|$. Using the method of Lagrange multipliers to maximize $H$ under normalization and known expectation constraints leads to distributions of exponential form in the constraint function, assuming the constraint function reflects the known amplitudes. In the quantum case, the natural constraint derives from the squared amplitudes $|c_i|^2$, leading directly to the Born rule. In any case, we already know from Gleason’s theorem that the only consistent assignment is $p_i = |c_i|^2$ (Gleason, 1957).

A more intuitive argument is that the Born rule ensures consistency: for a given density matrix, which can be decomposed into pure-state mixtures in many ways, the predicted measurement outcomes must remain invariant under such decompositions. This uniqueness essentially pins down the $|c_i|^2$ form. As this has been extensively proven in the literature, we will not elaborate further. Our point is that we do not need to postulate the Born rule; it follows from symmetry and information-theoretic arguments that are fully in line with our interpretation’s philosophy. Moreover, our collapse criterion respects the Born rule: we do not claim that higher-weight branches collapse more quickly. Collapse is driven by entropy generation, which depends on record formation, not outcome bias. In a symmetric situation all outcomes produce similar entropy; in an asymmetric one, the entropy per outcome is likewise similar. As such, no bias is introduced. The selection of a particular outcome remains fundamentally random (or, in the global sense, every outcome occurs in a branch, but each branch is realized for observers within it). The probabilities must therefore be exactly the $|c_i|^2$ to match the frequencies observed and to avoid signaling. If one instead postulated a different rule, such as assigning probabilities proportional to some other power of $|c_i|$, it would conflict with experimental observations and violate envariance symmetry: swapping two equal-amplitude coefficients would no longer preserve outcome probabilities under such a rule. Only the assignment $p_i = |c_i|^2$ respects both empirical data and the symmetry principles fundamental to envariance. Thus, by combining environment-induced symmetry with the principle of maximum entropy under uncertainty, we uniquely recover the Born rule.

In essence, when the wavefunction collapse becomes relevant, the observer’s state of knowledge is such that they should treat the reduced density matrix as a classical probability distribution over outcomes. The only consistent choice for that distribution is the one equal to the diagonal of the reduced density matrix, which is $|c_i|^2$ by construction. Our interpretation therefore does not need to assume Born’s rule; it emerges naturally as the link between the quantum state’s amplitude-squared and the classical entropy of ignorance after decoherence.

In summary, Born’s rule is derived rather than assumed by appealing to the symmetry of entangled states (which forces equal outcomes to have equal probability) and the additivity of probabilities for composite events (which aligns with the quadratic norm property). The result is that the probability for each branch is the relative frequency given by the squared amplitude. This dovetails with the interpretation: those squared amplitudes also determine the entanglement entropy between branch and environment; in fact, $S = -k_B \sum_i |c_i|^2 \ln |c_i|^2$. Maximizing entropy under the constraint imposed by the amplitudes leads uniquely to the Born rule. While this may appear tautological, it reflects the self-consistency of the amplitude-squared interpretation across both informational and physical grounds.
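As a small numerical illustration of the link between squared amplitudes and entropy, the sketch below evaluates the standard entanglement entropy of a Schmidt-form state, $-\sum_i |c_i|^2 \ln |c_i|^2$ in units of $k_B$, and shows that it is largest for equal weights. The function name is a hypothetical choice made here.

```python
import math


def branch_entropy(squared_amplitudes):
    """Entanglement entropy in units of k_B for Schmidt weights |c_i|^2.

    Terms with zero weight contribute nothing (the limit x ln x -> 0).
    """
    total = sum(squared_amplitudes)
    if not math.isclose(total, 1.0, rel_tol=0.0, abs_tol=1e-9):
        raise ValueError("squared amplitudes must sum to 1")
    return -sum(w * math.log(w) for w in squared_amplitudes if w > 0.0)


if __name__ == "__main__":
    for weights in ([1.0], [0.9, 0.1], [0.5, 0.5], [0.25] * 4):
        print(weights, "->", round(branch_entropy(weights), 4), "k_B")
```

For two outcomes the maximum, reached at equal weights, is $\ln 2$ in units of $k_B$, i.e. one bit.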

Having established the Born rule, we can now move to a higher level: how to test and apply this interpretation. We will propose an experiment where the “amount of collapse” can be tuned by controlling entropy, and show how our criterion can be quantitatively supported or falsified.

3.6. Experimental Proposal: Optomechanical Test of Entropy-Induced Collapse

A defining strength of a physical interpretation lies in its testability. Whereas most quantum interpretations remain empirically indistinguishable, since they yield identical predictions, our framework permits a novel class of experiment: one in which the entropy generated during a measurement-like interaction is varied, and its influence on interference visibility is observed. The essential aim is to determine whether a quantifiable or abrupt transition in coherence occurs when a specific entropy threshold is surpassed. We propose an optomechanical interferometry experiment using a mesoscopic object, massive enough for tunable environmental decoherence to potentially induce collapse, yet sufficiently controllable to retain quantum coherence under low-noise conditions.

Consider a nanosphere or dielectric mirror of mesoscopic mass that can be prepared in a spatial superposition (for instance, in an optomechanical cavity or double-slit arrangement). While such an object is significantly more massive than electrons or photons, interferometric experiments with large molecules, and proposals extending to still larger masses, suggest that quantum control at this scale is becoming experimentally feasible. This object serves as the system $S$, while the measurement apparatus extracts which-path information by scattering or coupling. A possible configuration introduces a which-path detector, such as a laser that scatters differently depending on whether the object traverses path A or B. The interaction strength can be modulated to control the degree of which-path information extracted. The environment comprises all remaining degrees of freedom, e.g., thermal gas and blackbody radiation, parameterized by the ambient pressure and temperature.

The goal is to operate in a regime where, in the absence of environmental decoherence, interference fringes are fully visible (i.e., visibility $V \approx 1$). This likely means operating in extreme high vacuum and at cryogenic temperatures (a few K or less) so that the coherence time of the object is long (the mean free path of residual gas is huge and thermal emission is low) (Romero-Isart, et al., 2011). Though technically demanding, such conditions have been achieved in state-of-the-art systems, including LIGO and cryogenically cooled optomechanical oscillators. To test the collapse criterion, we propose deliberately introducing controlled decoherence, e.g., via increased gas pressure or tunable photon scattering, to induce varying degrees of environmental entropy. For example, one can allow a known partial pressure of gas in the chamber to collide with the object, or use a controlled laser that entangles with the object’s position (the scattered photons carry which-path information). By adjusting the gas pressure or the laser interaction time and power, one can tune the effective environment coupling. The interference visibility is defined as $V = (I_{\max} - I_{\min})/(I_{\max} + I_{\min})$, where $I_{\max}$ and $I_{\min}$ are the maximum and minimum detected intensities; $V = 1$ for perfect coherence and $V = 0$ for complete decoherence (no interference).
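For concreteness, the standard fringe-visibility definition, the difference of the maximum and minimum detected intensities divided by their sum, can be evaluated as in the following minimal sketch. The function name and the input validation are choices made here for illustration.

```python
def visibility(i_max: float, i_min: float) -> float:
    """Fringe visibility (I_max - I_min) / (I_max + I_min).

    Returns 1.0 for perfect contrast (I_min = 0) and 0.0 when the fringes
    have washed out completely (I_max = I_min).
    """
    if i_min < 0 or i_max < i_min:
        raise ValueError("require 0 <= i_min <= i_max")
    total = i_max + i_min
    if total == 0:
        raise ValueError("no detected intensity")
    return (i_max - i_min) / total


if __name__ == "__main__":
    print(visibility(1.0, 0.0))  # perfect coherence
    print(visibility(3.0, 1.0))  # partial coherence
    print(visibility(0.5, 0.5))  # no interference
```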

In our framework, the visibility $V$ is directly related to the entropy $\Delta S$ irreversibly produced in the environment during the measurement interaction. In the weak decoherence regime, perturbation theory suggests $V \approx 1 - \epsilon$, where $\epsilon \ll 1$ corresponds to a small leakage of which-path information, typically quantified in bits; the entropy produced is then a correspondingly small fraction of $k_B \ln 2$. But as $\Delta S$ increases, coherence decays exponentially: specifically, the visibility is expected to decrease approximately as $e^{-\Delta S/k_B}$, or faster in some scenarios. A more concrete expression, drawn from standard decoherence theory, is $V = e^{-\Lambda}$, where $\Lambda$ is the decoherence factor. For instance, a particle of mass $m$ and cross-sectional area $\sigma$, immersed in a gas with particle density $n$ and thermal velocity $v$, experiences decoherence characterized by $\Lambda \approx n \sigma v t$, representing the average number of scattering events in time $t$. Each collision typically encodes approximately one bit of which-path information, contributing $k_B \ln 2$ of entropy. Thus, $\Lambda$ is effectively proportional to the number of informational bits lost to the environment.

Hence, the environmental entropy can be approximated as $\Delta S \approx N_{\mathrm{coll}}\, k_B \ln 2$, where $N_{\mathrm{coll}}$ is the mean number of collisions, assuming each collision delivers path-distinguishing information. In this simplified model, the visibility decays exponentially in $N_{\mathrm{coll}}$, with a numerical factor in the exponent that arises from the particular geometry of the scattering setup, such as in a double-slit interference scenario. The central insight is that interference visibility decays exponentially with entropy production. Our experimental aim is to measure the visibility while systematically varying $\Delta S$. The question then becomes: how can $\Delta S$ be quantified or measured? This can be approached either by directly measuring the environment, for instance, by counting scattered photons or monitoring heat dissipation, or by inferring entropy indirectly from known parameters. For instance, in a photon-mediated decoherence setup, one could employ a faint probe laser initially in a coherent state. As it interacts with the object in a path-dependent manner, via scattering or phase shift, it becomes entangled with the system. Tomographic reconstruction of the laser’s post-interaction state reveals how much which-path information, and thus entropy, was transferred. Alternatively, if the primary entropy sink is a thermal reservoir, one can use the thermodynamic relation $\Delta S = Q/T$, where $Q$ is the heat exchanged and $T$ is the reservoir temperature.

We propose a concrete implementation using an optical interferometer (e.g., Mach-Zehnder type), wherein a lightweight mirror or membrane is suspended in each arm. The photonic superposition between the two arms becomes entangled with the mechanical position of the mirrors via optomechanical coupling. By tuning the intensity of the laser, one can control the extent to which the photon’s path imprints momentum onto the mirror, effectively acting as a tunable which-path detector. This momentum transfer thermalizes via phonon excitations, transferring entropy into the mirror’s internal degrees of freedom. Interference visibility is measured at the output. For a sufficiently massive mirror, even minimal photon-induced kicks can produce detectable entropy increases, particularly if the mirror's thermal reservoir (phonon bath) is not perfectly isolated.

Predictions: Our model predicts no sharp discontinuity in visibility at the entropy threshold $k_B \ln 2$. Rather, it anticipates a smooth crossover, with coherence loss becoming prominent once the entropy produced, $\Delta S$, significantly exceeds this value. Thus, a pronounced decline in visibility is expected around the point where entropy production crosses one bit, i.e. $\Delta S \approx k_B \ln 2$. As an illustrative case, suppose one increases background gas pressure in a double-slit interference setup. At ultra-high vacuum (effectively zero collisions), $V \approx 1$. While the average number of collisions during the superposition time stays well below one, visibility remains high. Around one collision on average, visibility falls markedly. These thresholds are illustrative and depend on the assumption that each collision provides nearly one bit of distinguishable which-path information. In reality, the amount of entropy generated per interaction depends on how well the environment can resolve the path: collisions that are gentle, symmetric, or lack spatial resolution may contribute less than one bit. Thus, the entropy-visibility scaling should be interpreted as an upper-bound trend, with full decoherence arising only when the cumulative information loss becomes thermodynamically irreversible.

As the number of collisions increases further, well beyond one, the visibility rapidly vanishes. While this visibility trend aligns with standard decoherence theory, our interpretation introduces a new layer: collapse is identified with the crossing of a thermodynamic entropy threshold. In situations where the environmental degrees of freedom remain accessible, e.g., a single photon carrying which-path information, a quantum erasure protocol can reverse the apparent collapse and restore interference. For example, if a single photon serves as the environment (so that the entropy produced is small and localized), one could perform a measurement on that photon that erases its information and see interference return. Conversely, once information is irreversibly disseminated into a large environment, entailing high entropy, the process is effectively irreversible and interference cannot be recovered.

This setup enables empirical discrimination among interpretations. The Many-Worlds Interpretation maintains that interference is always, in principle, recoverable, though it concedes that practical restoration becomes infeasible post-decoherence. Objective collapse theories (e.g., GRW) posit that interference is lost due to spontaneous, intrinsic collapse mechanisms, independent of environmental entropy. GRW, for instance, predicts a collapse rate $\lambda$ per nucleon, implying that a superposition involving $N$ nucleons should collapse within a time of order $1/(N\lambda)$, even in perfect isolation. By contrast, our interpretation predicts that coherence persists indefinitely in the absence of entropy production. Collapse is never spontaneous; it is always conditional upon thermodynamic irreversibility. Hence, an ideal falsification test would involve isolating a mesoscopic object to suppress environmental entropy generation. If interference persists beyond GRW’s predicted collapse time, it would falsify objective collapse models with those parameters and support entropy-induced collapse.
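The GRW timescale can be estimated with a one-line calculation. The sketch below assumes the simple amplification rule in which the collapse rate of a rigid object is the per-nucleon rate times the number of nucleons, valid when the superposed positions are separated by more than the GRW localization length. The default rate of $10^{-16}\,\mathrm{s}^{-1}$ is the value originally proposed by Ghirardi, Rimini and Weber, quoted here from the literature as an assumption rather than taken from the text above.

```python
GRW_RATE_PER_NUCLEON = 1e-16  # s^-1, original GRW proposal (literature value, assumed here)


def grw_collapse_time(n_nucleons: float, rate_per_nucleon: float = GRW_RATE_PER_NUCLEON) -> float:
    """Mean time to the first spontaneous localization, 1 / (N * lambda), in seconds."""
    if n_nucleons <= 0 or rate_per_nucleon <= 0:
        raise ValueError("nucleon number and rate must be positive")
    return 1.0 / (n_nucleons * rate_per_nucleon)


if __name__ == "__main__":
    for n in (1e9, 1e12, 1e16, 1e23):
        print(f"N = {n:.0e} nucleons -> collapse time ~ {grw_collapse_time(n):.1e} s")
```

An interference experiment that holds an isolated object of $N$ nucleons in superposition for longer than this time, with negligible entropy production, is the regime in which the two pictures make different predictions.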

Several ongoing experiments investigate matter-wave interference using macromolecules and nanoparticles. The current experimental record demonstrates interference for massive macromolecules. No deviation from standard quantum predictions has been observed, placing tighter constraints on objective collapse models. For instance, GRW’s collapse rate parameter $\lambda$ is forced to lower values, and CSL models require increased localization lengths to remain viable. The proposed experiment offers a means to detect whether decoherence exceeds the expected contribution from thermodynamic environmental interactions. If interference were to vanish despite negligible entropy production (e.g., under ultra-high vacuum conditions), it would suggest the presence of new physics, such as gravity-induced collapse mechanisms. To date, all observations align with standard decoherence theory: interference is suppressed only when identifiable environmental interactions are present. For instance, ongoing projects such as MAQRO aim to test spatial superpositions for particles in a substantially higher mass range, utilizing space-based environments to minimize decoherence. Observation of interference in such regimes would further confirm the absence of any unforeseen collapse mechanisms up to these mass scales. In summary, a tunable optomechanical interferometer provides a platform to empirically map visibility $V$ as a function of environmental entropy $\Delta S$. The expected behavior is illustrated in Figure 1:

Visibility remains near unity while the entropy produced, $\Delta S$, is below $k_B \ln 2$, followed by a rapid exponential decay in coherence as entropy increases further. The threshold $k_B \ln 2$ marks the boundary between reversible quantum dynamics and effectively irreversible collapse. A direct measurement of $\Delta S$ through environmental monitoring would allow verification that the visibility drops to approximately 50% when $\Delta S \approx k_B \ln 2$, consistent with one bit of which-path information being irreversibly recorded. In the low-entropy regime, a linear relationship between $\ln V$ and $\Delta S$ may emerge, supporting the predicted exponential suppression of coherence. Suppose a nanosphere is placed in a spatial superposition for a fixed interval. To preserve coherence, the system must undergo fewer than one gas collision during that interval. Given the mean thermal velocity of the gas and the collision cross-section, this sets an upper bound on the pressure. At a pressure high enough that one expects roughly 5 collisions in that interval, the entropy generated is about $5\,k_B \ln 2$ or greater, thereby suppressing interference; we predict no interference then. Intermediate pressures, giving of order one collision, should yield partial visibility. Such predictions can be tested by measuring the interference contrast under controlled variations in pressure or scattering rates.
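The pressure bound can be made explicit with the ideal-gas relation. The following is a rough sketch, assuming an ideal gas with number density $P/(k_B T)$ and a collision rate equal to density times cross-section times mean thermal speed; the numerical inputs in the example are hypothetical placeholders, not values from the text.

```python
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def mean_collisions_at_pressure(pressure, temperature, cross_section, speed, duration):
    """Expected collisions: (P / (k_B T)) * sigma * v * t, all in SI units."""
    number_density = pressure / (K_B * temperature)
    return number_density * cross_section * speed * duration


def max_pressure_for_one_collision(temperature, cross_section, speed, duration):
    """Pressure at which exactly one collision is expected during `duration`."""
    return K_B * temperature / (cross_section * speed * duration)


if __name__ == "__main__":
    # Hypothetical illustrative inputs (not taken from the text):
    T = 4.0          # gas temperature in K
    sigma = 1e-14    # collision cross-section in m^2
    v = 100.0        # mean thermal speed in m/s
    t = 1e-3         # superposition time in s

    p_max = max_pressure_for_one_collision(T, sigma, v, t)
    print(f"pressure bound: {p_max:.2e} Pa")
    for factor in (0.1, 1.0, 5.0):
        n = mean_collisions_at_pressure(factor * p_max, T, sigma, v, t)
        print(f"P = {factor:>3} x bound -> about {n:.1f} collisions")
```

Sweeping the pressure across this bound moves the expected collision number, and hence the estimated entropy production, through the crossover region where the visibility should change most rapidly.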

By plotting visibility as a function of pressure (or controlled scattering rate), and mapping this to the estimated entropy production $\Delta S$, one can directly test the functional dependence predicted by our model. Any observed deviation, such as a sudden visibility drop not attributable to entropy, or a slower-than-expected decay, would indicate new physics or failure of the entropy-based collapse hypothesis. Thus far, all data remain consistent with standard decoherence theory. Crucially, our interpretation implies a practical threshold of reversibility: coherence is maintainable as long as $\Delta S$ remains below a critical value, even in large systems, but once $\Delta S$ becomes large, quantum behavior is lost irrecoverably. This insight aligns with experimental practice and supports the development of entropy-minimizing techniques, such as quantum error correction, that preserve coherence by suppressing entropy flow.

A further test involves a Wigner’s Friend-type setup: a small observer (e.g., a qubit memory) measures a quantum system, followed by a delayed measurement by a larger observer. By controlling whether the ‘friend’s’ record is preserved or erased prior to the final measurement, one can probe the reversibility of collapse. Photonic experiments by Proietti et al. (2019) demonstrated violations of classical assumptions about observer-independent facts under reversible measurement conditions. In our framework, if the friend’s measurement is weak or thermodynamically reversible, no collapse has occurred, and Wigner can still observe interference. Conversely, if the friend’s interaction produces significant entropy, collapse occurs in the friend’s frame, and Wigner will no longer observe interference, only classical correlations. This removes the paradox: apparent contradictions only arise when entropy is low and records are reversible. Once irreversible records exist, all observers agree on a definite outcome. The experiment by Proietti et al. can be understood in these terms: the “friend” was effectively just another photon (with a quantum-controlled measurement). Such a measurement is reversible, leading to correlations that violate assumptions of observer-independent facts. Our interpretation maintains that observer-independent facts require thermodynamic irreversibility. In Proietti’s setup, no such irreversibility occurred.

These ideas can be tested further on small quantum computers, which can emulate Wigner’s Friend experiments by encoding the observer’s memory in qubits and introducing controllable coupling to an environment. When entropy is small, one expects entangled “super-observer” states in which the friend and Wigner remain entangled; when entropy is large, one expects classical records and decoherence. By varying the entropy, one can simulate and detect the transition from quantum superposition to classical definiteness. To date, all experimental results are consistent with our interpretation: collapse occurs precisely when entropy renders recoherence unfeasible. Nevertheless, exploring superpositions at larger scales remains essential, particularly where gravity-induced or other exotic collapse mechanisms may emerge.
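A minimal numerical sketch of such an emulation is given below, assuming a three-qubit toy model: a system qubit S, a friend memory qubit F, and a single environment qubit E. The leak angle theta is a hypothetical control parameter that sets how much which-outcome information reaches E, and the entanglement entropy between the lab (S+F) and E serves only as a stand-in for the thermodynamic entropy production discussed in the text. All names are illustrative.

```python
import numpy as np

def wigner_friend_sketch(theta):
    """Toy model: return (visibility, entropy_bits) for leak angle theta in [0, pi/2].

    theta = 0    -> environment learns nothing (reversible measurement)
    theta = pi/2 -> environment holds a perfect record (irreversible in practice)
    """
    # Lab branches |S F> = |00> and |11> after the friend's CNOT-type measurement
    # of a system prepared in (|0> + |1>)/sqrt(2).
    ket00 = np.zeros(4)
    ket00[0] = 1.0
    ket11 = np.zeros(4)
    ket11[3] = 1.0
    # Environment states attached to each branch; their overlap is cos(theta).
    e0 = np.array([1.0, 0.0])
    e1 = np.array([np.cos(theta), np.sin(theta)])
    # Global pure state of S+F+E (evolution is unitary throughout).
    psi = (np.kron(ket00, e0) + np.kron(ket11, e1)) / np.sqrt(2)
    # Reduced state of the lab: trace out the environment qubit.
    m = psi.reshape(4, 2)
    rho_lab = m @ m.conj().T
    # Visibility available to Wigner = 2 * |coherence between the two branches|.
    visibility = float(2.0 * abs(rho_lab[0, 3]))
    # von Neumann entropy of the lab state, in bits.
    evals = np.linalg.eigvalsh(rho_lab)
    evals = evals[evals > 1e-12]
    entropy_bits = float(-np.sum(evals * np.log2(evals))) + 0.0
    return visibility, entropy_bits

# Sweep the leak angle from fully reversible to fully recorded.
sweep = [wigner_friend_sketch(t) for t in np.linspace(0.0, np.pi / 2, 5)]
```

In this toy model the visibility equals cos(theta) and falls monotonically as the entropy rises from 0 to 1 bit, which mirrors qualitatively the transition described above. A realistic test would use many environment qubits, so that reversing the record becomes impractical rather than merely inconvenient.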

4. Ontology and Interpretational Implications

Our entropy-based criterion for collapse implies a specific ontological commitment: the universal wavefunction is ontic and evolves unitarily at all times. Collapse is not a fundamental dynamical event, but an emergent, observer-relative phenomenon, corresponding to an epistemic update once entropy growth renders further quantum interference practically impossible. We now clarify what is considered “real” in this framework, and reconcile ontic unitarity with observer-dependent collapse, while avoiding interpretational vagueness.

Wavefunction ontology: We regard the wavefunction, more precisely, the universal quantum state, which may be a state vector or density operator on a Hilbert space, as a representation of physical reality. This ontological view aligns with interpretations such as the Many-Worlds Interpretation (MWI), Bohmian Mechanics (where the wavefunction guides hidden variables), and objective collapse theories (where the wavefunction spontaneously localizes). However, unlike Bohmian Mechanics, we posit no hidden variables; and unlike collapse models, we do not invoke non-unitary dynamics. In this respect, our approach is closest to Everettian ontology: the universal wavefunction encompasses all possible outcomes in a continuous, unbroken superposition.

Branches and Relative Facts: Within this global state, a “branch” corresponds to a subset of degrees of freedom that have decohered into a consistent classical narrative. Branch 1, for example, comprises a system in the state associated with outcome 1, an apparatus recording outcome 1, and an environment encoding that result. Branch 2 is the analogous structure for outcome 2. These branches are (approximately) orthogonal and do not significantly interfere, owing to environmental decoherence. Each branch thus supports an emergent classical reality, within which observers find themselves embedded. We adopt a view informed by Relational Quantum Mechanics (Rovelli) and refined by the framework of Quantum Darwinism: namely, that “facts” are not absolute but emerge as stable, redundant records distributed across many environmental degrees of freedom. In our approach, it is the increase in entropy that guarantees the proliferation and irreversibility of these environmental fragments, thereby stabilizing a given outcome as effectively classical.

Observer-relative collapse: The Wigner’s Friend thought experiment offers a clear illustration of observer-relative collapse. Suppose the friend (F) measures a system (S), and the measurement entails amplification and entropy generation. From F’s perspective, a definite outcome i occurs, and the state of knowledge updates accordingly: “S is in state i, and I (the friend) have a memory of i.” F would say that the wavefunction collapsed. From the external perspective of Wigner (W), who has not yet interacted with the system or the friend, the combined state of S+F+lab remains, in principle, a coherent superposition over all outcomes i of the correlated system, friend, and lab states. Wigner could then perform an interference experiment on the entire lab to reveal coherence between branches, provided the lab remained sufficiently isolated and entropy production was negligible. However, if F’s measurement produced significant entropy, for example by irreversibly recording the result in the environment, then even Wigner would be unable to restore coherence in practice. From Wigner’s point of view, the friend’s lab has decohered into an effectively mixed state: a statistical mixture in which the friend has already obtained outcome i with the corresponding Born-rule probability. When Wigner opens the door, he finds that the friend has obtained a definite outcome, and he cannot observe any interference. At that point, both F and W agree that a collapse has occurred. Wigner might retroactively describe the collapse as happening “when I became entangled with the friend, and the irreversibility in the friend’s lab ensured that only one outcome was consistently observable.” Conversely, if the friend’s measurement were implemented in a fully reversible manner, for instance using a qubit-based memory that became entangled without dissipating entropy, then Wigner could, in principle, detect interference.

In such a scenario, our interpretation holds that the friend never formed an irreversible record. The friend’s state may have been a coherent superposition of seeing outcomes 0 and 1, with no stable memory, a possibility implausible for humans but feasible for qubit-based “observers.” No objective fact had yet been established, so Wigner finds a superposition, consistent with the absence of collapse. This agrees with the frameworks of consistent histories and relational quantum mechanics: if Wigner can erase the measurement, then any subjective experience the friend had must also be reversible, implying no stable memory and thus no classical outcome. Once entropy is generated, any observer that interacts with the environment becomes correlated with the outcome and hence joins that branch. At that point, all such observers share the same record, and the outcome becomes objectively real for them within that branch. This is how our approach avoids the problematic “many perceptions” issue associated with MWI. We do not posit a literal splitting of consciousness. Instead, each observer’s classical state, embodied in a memory correlated with an outcome, resides within a single branch, and those records remain consistent across all macroscopic observers.

No global collapse event: There is no single, absolute moment when “the wavefunction collapses for the universe.” Collapse is always relative to a subsystem that has lost access to coherence. On the global scale, there is only continuous unitary evolution: the state of the whole universe remains pure if it started pure, with ever-increasing entanglement, and entropy growth is confined to subsystems. One can visualize this structure as a branching tree in which the universal state continually divides into an ever-expanding web of outcomes, akin to Everett’s many worlds. Crucially, however, these “worlds” are not fundamental splits but emergent structures defined by high entropy and stable, redundant classical records. If entropy could somehow decrease dramatically, worlds could recombine, but as argued above, that is practically impossible. We emphasize that observer-dependent collapse does not mean that an observer can arbitrarily choose reality. The criterion is physical: any system that plays the role of an observer (i.e., acquires information) and increases entropy will find itself on one branch. This is objective in the sense that any other system that later interacts with it and shares that entropy flow will join the same branch. Ultimately, an unambiguous classical reality emerges within each branch.

QBist comparison: QBism holds that the wavefunction represents an agent’s personal belief about future experiences. In contrast, our view treats the wavefunction, and its linear evolution, as an objective, physical entity, not a personal belief. Probabilities in our interpretation arise from an observer’s ignorance about which decohered branch they inhabit, not from subjective Bayesian degrees of belief. We agree with QBism insofar as collapse can be viewed as a Bayesian update of knowledge upon acquiring new information. However, unlike QBism, we regard the wavefunction of the universe, or of systems not directly observed, as ontologically real, independent of any particular agent’s beliefs.

Classical reality and irreversibility: In our framework, classical reality emerges as the ensemble of macroscopic branches characterized by high entropy, which renders quantum interference effectively negligible. Each branch supports a consistent classical history, in the sense of the consistent histories formalism: interference between different decohered sequences is negligible because the off-diagonal terms are suppressed. The consistent histories approach, however, typically does not integrate thermodynamic irreversibility into the formalism. In our approach, the consistency of classical histories is guaranteed by entropy: once a fact is irreversibly recorded, alternative histories rapidly decohere and become inaccessible.

Solving apparent paradoxes:

Schrödinger’s Cat: The cat is entangled with a quantum event, so that its being alive or dead is correlated with the outcome. In our interpretation, if the system is perfectly isolated, the cat and the device may remain in quantum superposition. However, the cat is a complex thermodynamic system, and the “alive” and “dead” alternatives rapidly diverge into distinct high-entropy states. In practice, even if the initial cat-device entanglement is idealized, within milliseconds the two branches diverge thermodynamically and generate substantial entropy, whether through the physiological contrast between life and death or through recording mechanisms such as the Geiger counter, which irreversibly encodes the outcome. Thus, in the thermodynamic sense defined earlier, the superposition effectively collapses almost instantaneously. The cat is either alive or dead long before anyone opens the box, because the cat’s own environment (its body, the air in the box) makes the process irreversible. Our interpretation therefore aligns with “macro reality”: we would not expect to open the box and find a coherent half-alive, half-dead cat state. The cat, in this context, functions as an observer: it “registers” the outcome by physically embodying one branch of the superposition, life or death. Before opening the box, we are ignorant of the outcome and may describe the contents as a superposition, but by the time we look, entropy has made the cat’s state definite for all practical observers. There is no contradiction: we will see a definite outcome. (If one replaced the cat with a cryogenically preserved organism or minimized entropy in a highly controlled setup, collapse might be delayed, but with a living cat such control is unachievable.)

Bell’s Theorem and nonlocality: Our interpretation preserves the standard quantum predictions and introduces no hidden variables; thus, violations of Bell inequalities remain fully intact. We adopt the stance that outcomes are realized upon measurement, that is, that collapse corresponds to an observer becoming entangled with and embedded in a specific branch, and that quantum correlations exhibit nonlocality without enabling faster-than-light signaling. Since collapse is not a dynamical cause but a thermodynamic consequence, it does not involve any propagating influence or signal, and thus respects relativistic causality. Accordingly, our interpretation is consistent with Bell’s theorem: it embraces the nonlocal structure inherent to quantum entanglement rather than attempting to explain it away. Because we posit no hidden variables, our interpretation also avoids the difficulties that hidden-variable models must confront, such as parameter fine-tuning or explicitly nonlocal hidden dynamics. As with all interpretations that retain standard quantum mechanics, outcomes remain fundamentally random yet exhibit strong correlations dictated by the structure of entanglement.
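The statement that Bell-inequality violations remain intact can be checked directly from standard quantum mechanics, since nothing in our interpretation modifies these predictions. The sketch below evaluates the CHSH combination for a two-qubit singlet state; the measurement angles are the conventional optimal choice, and the function names are illustrative only.

```python
import numpy as np

PAULI_X = np.array([[0, 1], [1, 0]], dtype=complex)
PAULI_Z = np.array([[1, 0], [0, -1]], dtype=complex)

def spin(angle):
    """Spin observable along a direction in the x-z plane (angle from the z axis)."""
    return np.cos(angle) * PAULI_Z + np.sin(angle) * PAULI_X

def correlation(state, a, b):
    """Expectation value <state| spin(a) (x) spin(b) |state>."""
    op = np.kron(spin(a), spin(b))
    return float(np.real(state.conj() @ op @ state))

def chsh(state):
    """|E(a0,b0) + E(a0,b1) + E(a1,b0) - E(a1,b1)| for fixed standard angles."""
    a0, a1 = 0.0, np.pi / 2
    b0, b1 = np.pi / 4, -np.pi / 4
    s = (correlation(state, a0, b0) + correlation(state, a0, b1)
         + correlation(state, a1, b0) - correlation(state, a1, b1))
    return abs(s)

# Singlet state (|01> - |10>)/sqrt(2) and, for comparison, the product state |00>.
singlet = np.array([0, 1, -1, 0], dtype=complex) / np.sqrt(2)
product = np.array([1, 0, 0, 0], dtype=complex)

chsh_singlet = chsh(singlet)
chsh_product = chsh(product)
```

For the singlet, the value reaches the Tsirelson bound 2√2, above the bound of 2 that constrains local hidden-variable models, while the product state stays below 2. The computation involves only local observables and a fixed entangled state, so it exhibits the correlations without any signal passing between the two sides.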

Macroscopic superpositions in the universe: A natural question arises: if collapse is not fundamental, do branches of the wavefunction exist in which seemingly contradictory outcomes occur (e.g., one branch where a lab observes outcome A, and another where outcome B is seen)? Yes: such branches exist in the universal wavefunction, but they do not interact. In one branch, an observer might perceive “heads,” while in another, a counterpart observes “tails.” Is this the many-worlds interpretation? In effect, it resembles a many-worlds picture, where distinct outcomes emerge as decohered branches of a single, unitary wavefunction. However, we refrain from philosophically equating these branches with fully realized “other worlds” on par with our own. They may instead be regarded as counterfactual possibilities, present in the wavefunction’s structure but excluded from our experience by the thermodynamic arrow of time and decoherence. They persist mathematically but are physically inaccessible once decoherence has rendered them effectively orthogonal. Whether this constitutes “many worlds” or a single world with many unrealized alternatives is ultimately a matter of interpretive semantics. We conceive of these branches as irreversibly separated realities, akin to Everett’s many worlds, but defined by entropy-induced separation rather than ontological simultaneity. Everett held that all branches exist simultaneously and equally. In contrast, we argue that branches attain classical reality only once thermodynamic irreversibility renders their interference negligible. Prior to this entropy threshold, interference remains possible, indicating that the “worlds” were not yet truly distinct.