1. Introduction

Object detection, as a core technology in computer vision, has extensive application value in fields such as autonomous driving, security monitoring, and remote sensing analysis [1,2,3]. As environmental conditions become increasingly complex and variable, traditional single-modality detection systems face severe challenges. While visible images can provide rich color and texture information, they perform poorly in adverse weather or insufficient lighting conditions [4]. In contrast, infrared images can capture thermal information radiated by objects, performing excellently in nighttime and low-light environments, but they have lower resolution and less clear edge information [5]. Therefore, multispectral object detection technology that comprehensively utilizes the complementary modalities of visible and infrared light has become an effective approach to solving this problem [6,7].

In recent years, deep learning technology has driven the rapid development of multispectral object detection research. With the advancement of convolutional neural networks (CNNs) and object detection algorithms, detection frameworks such as the YOLO series [8,9,10] and Faster R-CNN [11] have demonstrated powerful feature extraction and object recognition capabilities. However, when facing multimodal data, how to effectively fuse complementary information from different spectral domains and suppress background interference becomes a key challenge [12]. Existing methods mainly adopt simple feature concatenation or element-wise addition/multiplication fusion strategies [13], which ignore the intrinsic correlation between modalities and therefore struggle to fully exploit the complementary advantages of multimodal data.

To address the above issues, this paper proposes the Spectral Dual-stream Recursive Fusion Perception Target Network (SDRFPT-Net), a novel multispectral object detection architecture designed to effectively integrate visible and infrared modal information and improve detection performance in complex environments. Unlike existing methods, SDRFPT-Net builds on YOLOv10 with three components. The Spectral Hierarchical Perception Architecture (SHPA) provides a solid foundation for multimodal feature extraction. The Spectral Recursive Fusion Module (SRFM) achieves deep feature interaction and efficient fusion. The Spectral Target Perception Enhancement Module (STPEM) enhances target region representation and suppresses background interference.

The SHPA module adopts a dual-stream structure to process visible and infrared spectral information separately, capturing modality-specific features through independent parameter networks to maintain the integrity of spectral information. The SRFM module is the core innovation of this architecture: it combines a hybrid attention mechanism (integrating self-attention, cross-modal attention, and channel attention) with a recursive progressive fusion strategy to achieve deep multi-modal feature interaction while maintaining parameter efficiency. The STPEM module focuses on enhancing target regions in features, significantly improving the detection capability for low-contrast targets through lightweight mask prediction and feature enhancement mechanisms.

Compared to traditional multispectral fusion methods, our proposed spectral dual-stream recursive fusion perception architecture has three significant advantages. First, it can more effectively capture complementary information between different modalities, showing excellent performance especially in low-light, adverse weather, and other complex environments. Second, the recursive progressive fusion strategy achieves deep feature interaction without significantly increasing the parameter count, improving computational efficiency. Finally, the target perception enhancement mechanism effectively distinguishes targets from backgrounds, improving detection accuracy and robustness.

To verify the effectiveness of SDRFPT-Net, we conducted extensive experiments on two multispectral object detection benchmark datasets: FLIR-aligned [14] and LLVIP [15]. The results show that SDRFPT-Net achieved state-of-the-art performance on both datasets, particularly with significant improvements over existing methods in the mAP50 and mAP50:95 metrics. For example, on the FLIR-aligned dataset, SDRFPT-Net achieved an mAP50 of 0.785, a relative improvement of 11.5% over the second-best performing BA-CAMF Net (0.704); on the LLVIP dataset, the mAP50 reached 0.963 and the mAP50:95 reached 0.706, the best results among the compared methods.
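The 11.5% figure is consistent with a relative gain computed from the two quoted mAP50 values. A minimal check in Python, where the helper name `relative_improvement` is only illustrative:

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Relative gain of `new` over `baseline`, returned as a fraction."""
    return (new - baseline) / baseline


# mAP50 values quoted in the text for the FLIR-aligned dataset.
sdrfpt_map50 = 0.785
ba_camf_map50 = 0.704

absolute_gain = sdrfpt_map50 - ba_camf_map50
relative_gain = relative_improvement(sdrfpt_map50, ba_camf_map50)

print(f"absolute gain: {absolute_gain:.3f} mAP50")
print(f"relative gain: {relative_gain:.1%}")
```

The absolute difference is 0.081 mAP50, so the percentage should be read as relative to the baseline rather than as percentage points.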

The contributions of this paper can be summarized as follows:

- (1) Propose SDRFPT-Net, a novel multispectral object detection architecture that effectively extracts and integrates multimodal features through a dual-stream separated spectral structure;

- (2) Design the Spectral Recursive Fusion Module (SRFM), achieving high-efficiency deep feature interaction through a hybrid attention mechanism and recursive progressive fusion strategy;

- (3) Develop the Spectral Target Perception Enhancement Module (STPEM), enhancing target feature representation and suppressing background interference;

- (4) Validate the effectiveness of SDRFPT-Net experimentally on multiple public datasets, achieving state-of-the-art detection performance while maintaining computational efficiency.

The remainder of this paper is organized as follows:

Section 2 reviews related work;

Section 3 introduces the architecture and key modules of SDRFPT-Net in detail;

Section 4 presents experimental results and analysis;

Section 5 concludes the paper and indicates future research directions.

3. Methodology

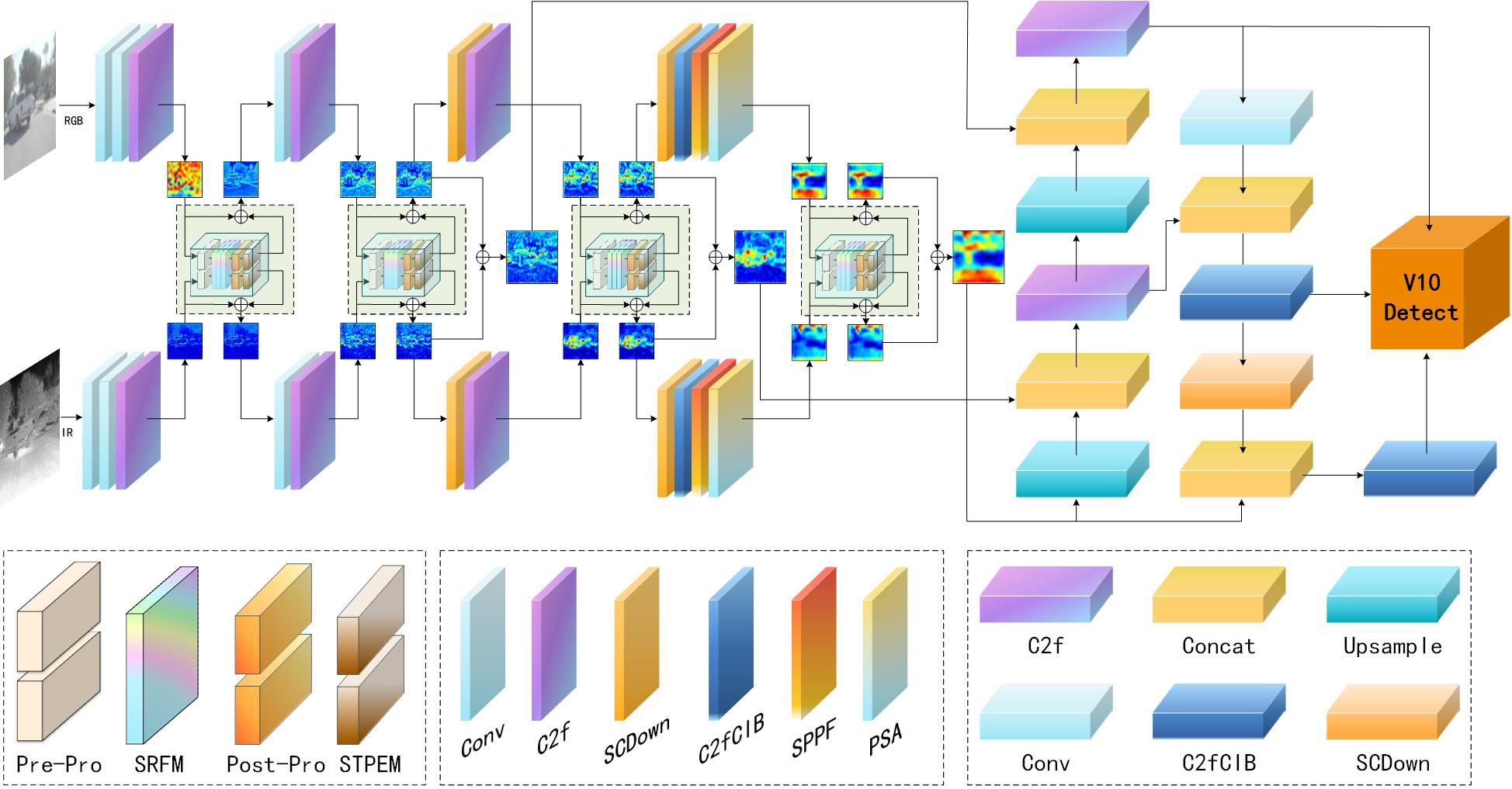

This section details the SDRFPT-Net algorithm, following the order of the system's data flow. The overall architecture of SDRFPT-Net is shown in Figure 2. Its dual-stream design, based on YOLOv10, supports multi-scale spectral feature extraction for the visible and infrared modalities and achieves a significant improvement in detection performance through the spectral self-adaptive recursive fusion mechanism and the target perception enhancement module.

The system's data processing flow is as follows. First, the input visible and infrared images are processed separately through dual-stream feature extraction networks, generating feature maps of different scales. Then, these feature maps undergo deep interaction and fusion through the spectral self-adaptive recursive fusion module. Next, the fused features are further enhanced by the self-adaptive target perception enhancement module to strengthen the representation of target regions. Finally, the enhanced multi-scale features are aggregated and passed to the detection head, which generates the final detection results.

Compared to traditional single-modality object detection methods, this architecture can more effectively utilize the complementary information of RGB and infrared images, especially showing greater detection accuracy and robustness in challenging scenarios such as low light, adverse weather, and complex backgrounds.

3.1. Spectral Hierarchical Perception Architecture (SHPA)

The SHPA architecture, as the core design of this algorithm, effectively processes visible and infrared spectral domain information through a dual-stream structure, laying the foundation for hierarchical perception and fusion of multi-scale features. This architecture is based on YOLOv10's excellent features and has been systematically improved for multi-modal perception.

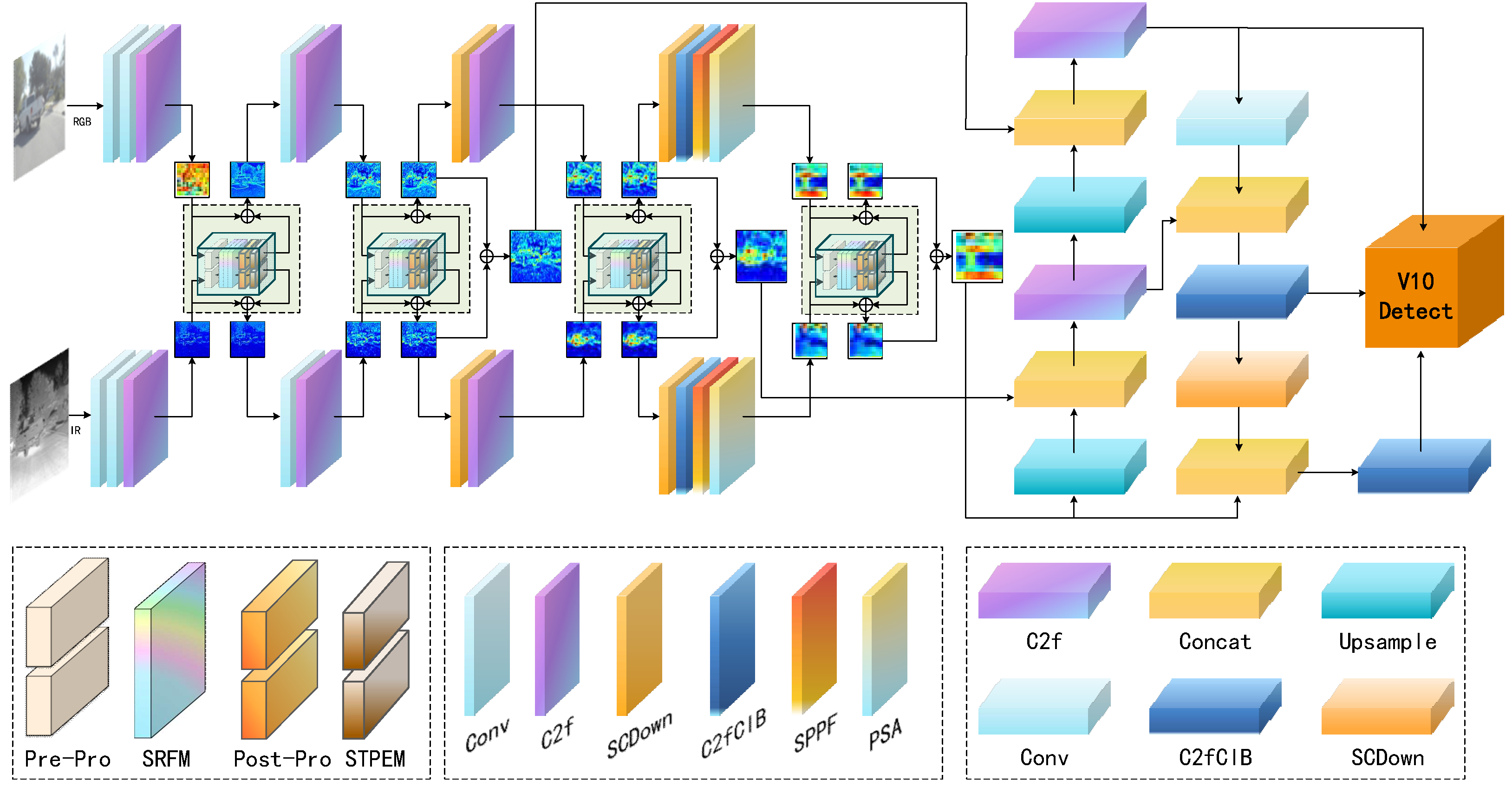

3.1.1. Dual-stream Separated Spectral Architecture Design

Compared to YOLOv10's single-backbone feature extraction mechanism, the dual-stream separated spectral architecture proposed in this paper can effectively handle the heterogeneous properties of RGB-IR dual-modal data, as shown in Figure 3.

This architecture expands a single feature extraction network into a dual-stream network, processing visible spectral and infrared spectral information separately. The two feature extraction streams share similar network structures but use independent parameters, and the feature extraction process of the dual-stream network can be formalized as:

$$F_{rgb} = \mathcal{F}_{rgb}\left(I_{rgb}; \theta_{rgb}\right), \qquad F_{ir} = \mathcal{F}_{ir}\left(I_{ir}; \theta_{ir}\right)$$

where $I_{rgb}$ and $I_{ir}$ represent RGB and infrared input images, $\mathcal{F}_{rgb}$ and $\mathcal{F}_{ir}$ represent the corresponding feature extraction functions, and $\theta_{rgb}$ and $\theta_{ir}$ represent their respective network parameters.
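As a rough illustration of "same structure, independent parameters", the sketch below runs two copies of a toy extractor with separately initialized weights. The single 1×1 projection is only a stand-in for the YOLOv10-based backbone, and the names `init_stream` and `extract`, the channel counts, and the image sizes are all assumptions made for the example.

```python
import numpy as np


def init_stream(rng, in_ch, out_ch):
    """Create one stream's parameters: a single 1x1 projection (toy backbone)."""
    return {"w": rng.standard_normal((out_ch, in_ch)) * 0.1,
            "b": np.zeros(out_ch)}


def extract(image, params):
    """Toy feature extractor: per-pixel linear map followed by ReLU.

    image: (C_in, H, W) -> features: (C_out, H, W)
    """
    feat = np.einsum("oc,chw->ohw", params["w"], image)
    feat = feat + params["b"][:, None, None]
    return np.maximum(feat, 0.0)


rng = np.random.default_rng(0)

# Identical structure, but each stream owns its own parameter set.
theta_rgb = init_stream(rng, in_ch=3, out_ch=8)
theta_ir = init_stream(rng, in_ch=3, out_ch=8)

i_rgb = rng.random((3, 32, 32))  # hypothetical visible image
i_ir = rng.random((3, 32, 32))   # hypothetical infrared image (stored as 3 channels)

f_rgb = extract(i_rgb, theta_rgb)
f_ir = extract(i_ir, theta_ir)
print(f_rgb.shape, f_ir.shape)
```

Because the two parameter dictionaries are separate objects, updating one stream during training would leave the other untouched, which is the property the dual-stream design relies on.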

The main advantages of the dual-stream architecture are:

- (1)

It can design specific extraction strategies for the characteristics of different spectral domains, thereby better adapting to the characteristics of data from each modality;

- (2)

It preserves the unique information of each spectral domain, avoiding the potential loss of information that might occur when processing in a single network;

- (3)

It captures the feature distributions of different spectral domains through independent parameters, improving the diversity of feature representations.

Compared to YOLOv10's single feature extraction path, the dual-stream architecture shows greater robustness in complex environments. In particular, when the quality of information from one modality decreases (such as insufficient RGB information at night or reduced infrared contrast during the day), the system can still maintain detection performance by relying on the stable information provided by the other modality.

3.1.2. Multi-scale Spectral Feature Expansion

To comprehensively capture the multi-scale representation of targets, this paper designs a multi-scale spectral feature expansion mechanism. In each spectral stream, features form a multi-scale feature pyramid through progressive downsampling. For each spectral domain $m \in \{rgb, ir\}$, the feature expansion process can be represented as:

$$F_m^{i} = \mathcal{D}_i\left(F_m^{i-1}; \theta_m^{i}\right)$$

where $F_m^{i}$ represents the level-$i$ feature, $\mathcal{D}_i$ represents the downsampling function, and $\theta_m^{i}$ is the corresponding parameter. Specifically, the spatial resolution and channel number of each level feature are:

3.1.3. Feature Aggregation and Detection

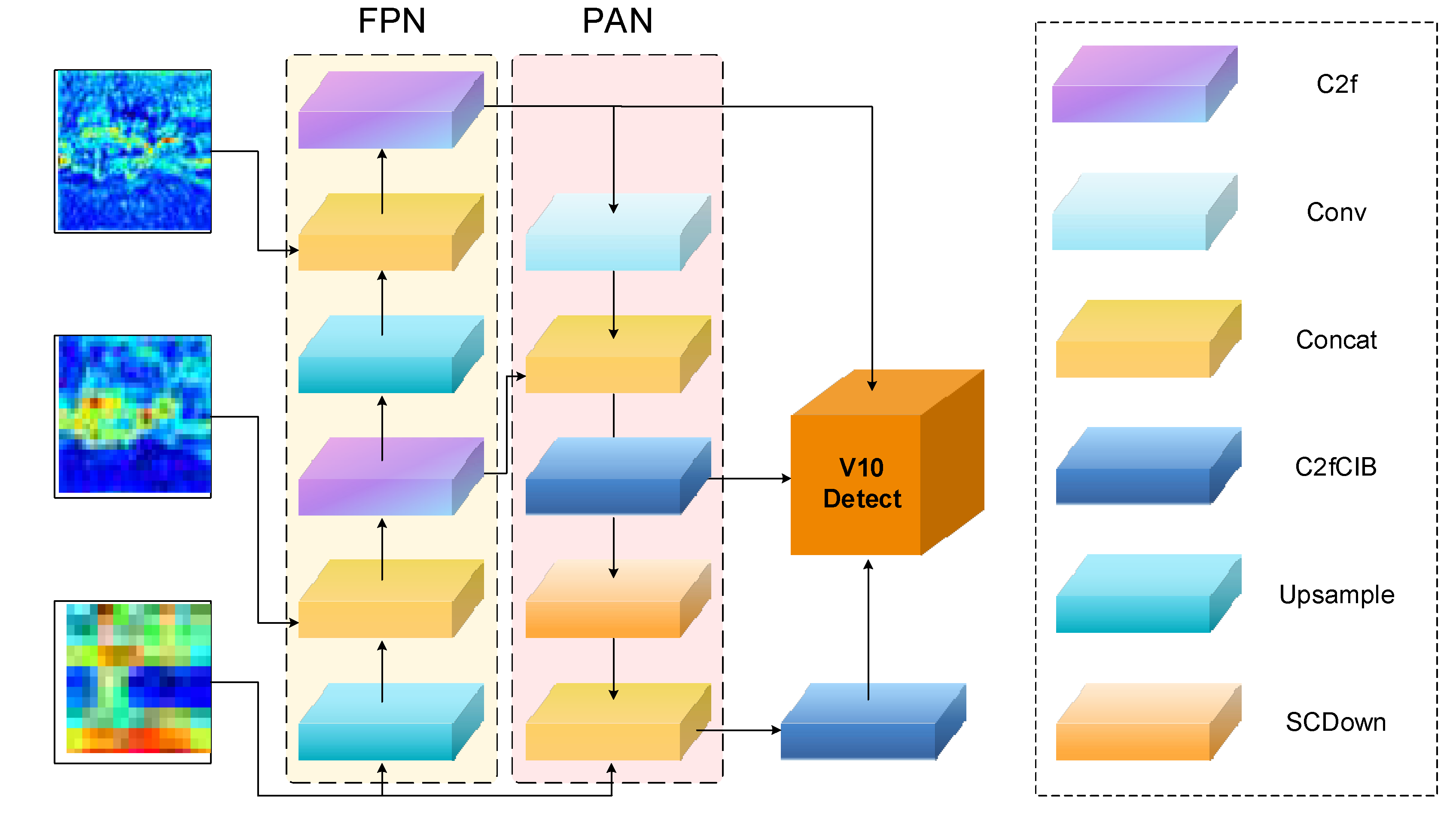

After multi-scale expansion, the features pass through the Pre-Pro, SRFM, Post-Pro, and STPEM modules for fusion, yielding high-quality multi-scale fusion features. These features need to be further aggregated and processed to generate the final object detection results, as shown in Figure 4.

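First, multi-scale fusion features are aggregated through the feature pyramid network (FPN) and path aggregation network (PAN), enhancing information exchange between features of different scales:

$$P_i^{FPN} = \mathrm{FPN}\left(F_i^{fused}, P_{i+1}^{FPN}\right), \qquad P_i^{PAN} = \mathrm{PAN}\left(P_i^{FPN}, P_{i-1}^{PAN}\right)$$

where $P_i^{FPN}$ represents the FPN output of the level-$i$ feature, $P_i^{PAN}$ represents the PAN output of the level-$i$ feature, and $F_i^{fused}$ represents the feature after fusion, containing complementary information from RGB and IR.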

FPN transmits semantic information from high levels to low levels, while PAN transmits spatial details from low levels to high levels, forming a powerful feature representation. This bidirectional feature flow mechanism ensures that features at each scale can incorporate both rich semantic information and fine spatial details.
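The bidirectional flow can be sketched with a top-down pass followed by a bottom-up pass over three levels. This is a simplified illustration, not the aggregation network itself: it assumes all levels share one channel count, merges by addition instead of concatenation plus convolution, and uses the hypothetical helpers `upsample2x` and `downsample2x`.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2x(x):
    """2x2 average pooling of a (C, H, W) map (H and W must be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def fpn_pan(p3, p4, p5):
    # Top-down path (FPN): semantics flow from coarse levels to fine levels.
    t5 = p5
    t4 = p4 + upsample2x(t5)
    t3 = p3 + upsample2x(t4)
    # Bottom-up path (PAN): spatial detail flows from fine levels to coarse levels.
    n3 = t3
    n4 = t4 + downsample2x(n3)
    n5 = t5 + downsample2x(n4)
    return n3, n4, n5


# Constant inputs make the information flow easy to trace by hand.
p3 = np.ones((4, 16, 16))
p4 = np.ones((4, 8, 8))
p5 = np.ones((4, 4, 4))
n3, n4, n5 = fpn_pan(p3, p4, p5)
print(n3[0, 0, 0], n4[0, 0, 0], n5[0, 0, 0])
```

With all-ones inputs, the finest output accumulates contributions from all three levels on the way down, and the coarsest output then accumulates them again on the way up, so every level ends up carrying information from every other level.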

Finally, the aggregated features pass through the v10Detect detection head for object detection:

$$\{\hat{c}, \hat{b}, \hat{s}\} = \mathcal{H}_{det}\left(\{P_i^{PAN}\}\right)$$

where $\{P_i^{PAN}\}$ denotes the aggregated multi-scale features and $\mathcal{H}_{det}$ represents the detection function, with outputs including object class $\hat{c}$, bounding box coordinates $\hat{b}$, and confidence information $\hat{s}$. v10Detect adopts a more efficient feature decoding method that includes dynamic convolution and a branch specialization design, with specialized branches for bounding box regression, feature processing, and classification, further improving detection accuracy and efficiency.

Compared to YOLOv10, our feature aggregation and detection stage utilizes the advantages brought by modal fusion and target perception enhancement, allowing the detection head to perform object detection based on richer and more accurate feature representations. This is particularly important in low light, adverse weather, and complex background conditions, as single-modal information is often unreliable in these scenarios.

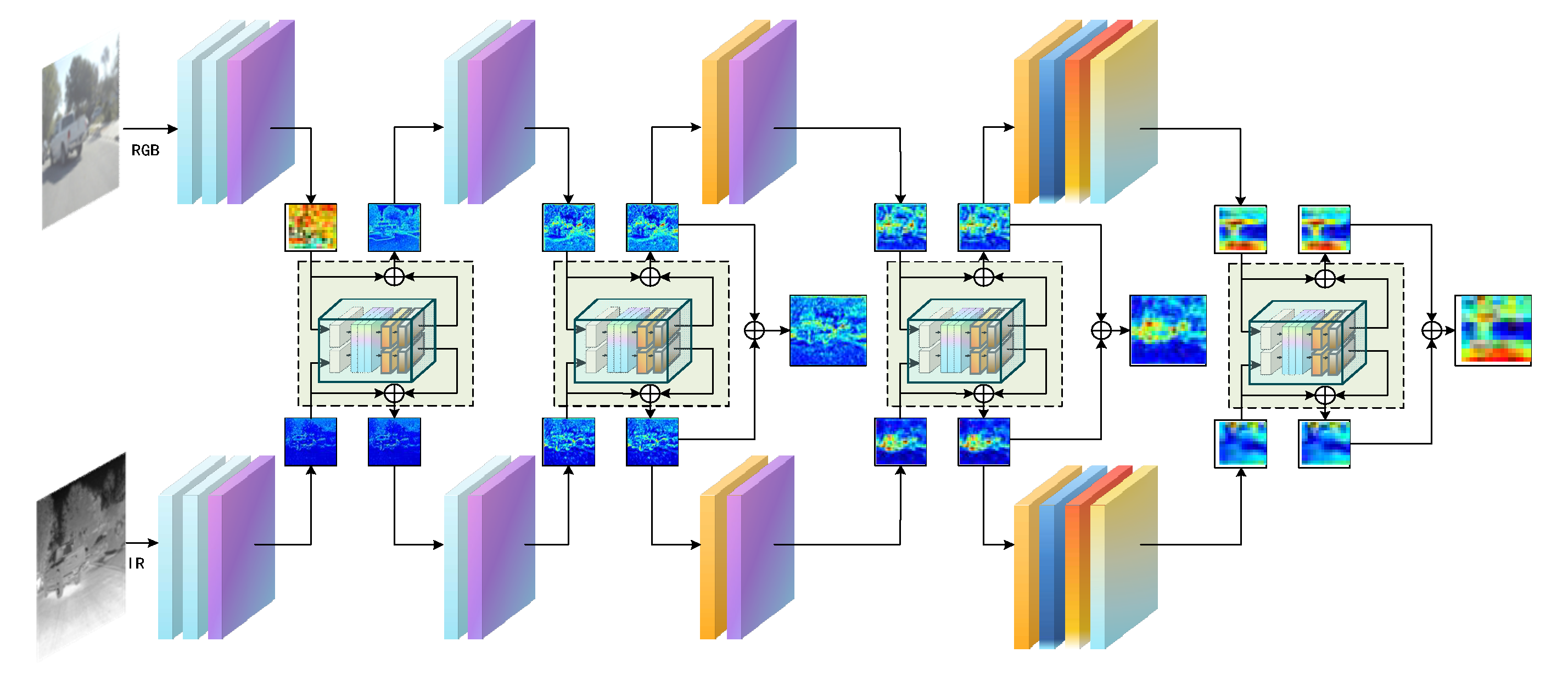

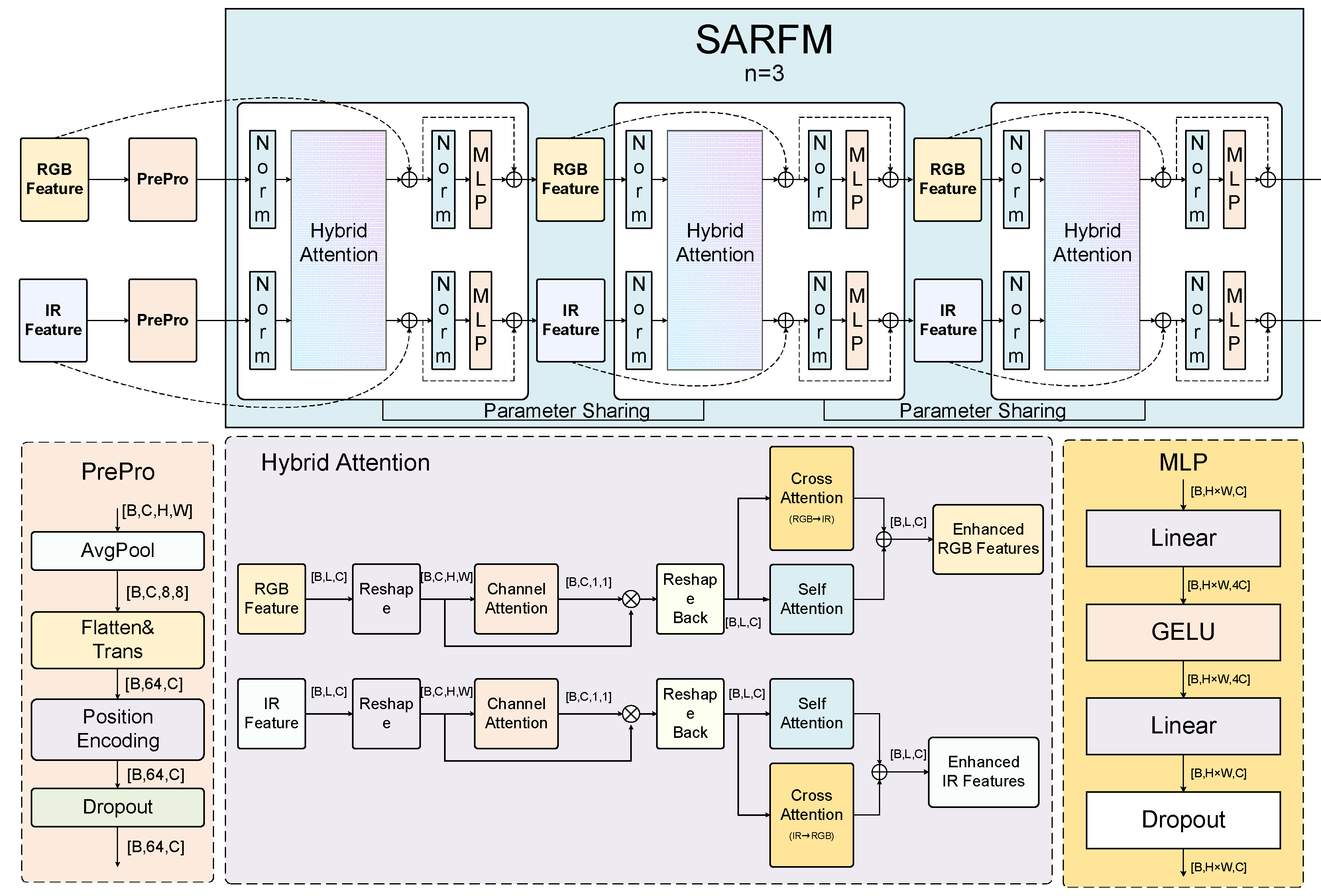

3.2. Spectral Recursive Fusion Module (SRFM)

The SRFM module achieves deep interaction and optimized integration of RGB-IR dual-modal features through innovative fusion mechanisms, significantly improving detection performance in complex environments. Unlike traditional fusion methods, SRFM combines hybrid attention mechanisms with recursive progressive fusion strategies organically, achieving deep multi-modal feature interaction while maintaining parameter efficiency, providing powerful feature representation capabilities for multispectral object detection.

As shown in Figure 5, SRFM receives dual-stream features from SHPA and outputs fused enhanced features after recursive progressive fusion. This section introduces the design principles, key components, and workflow of the mechanism in detail.

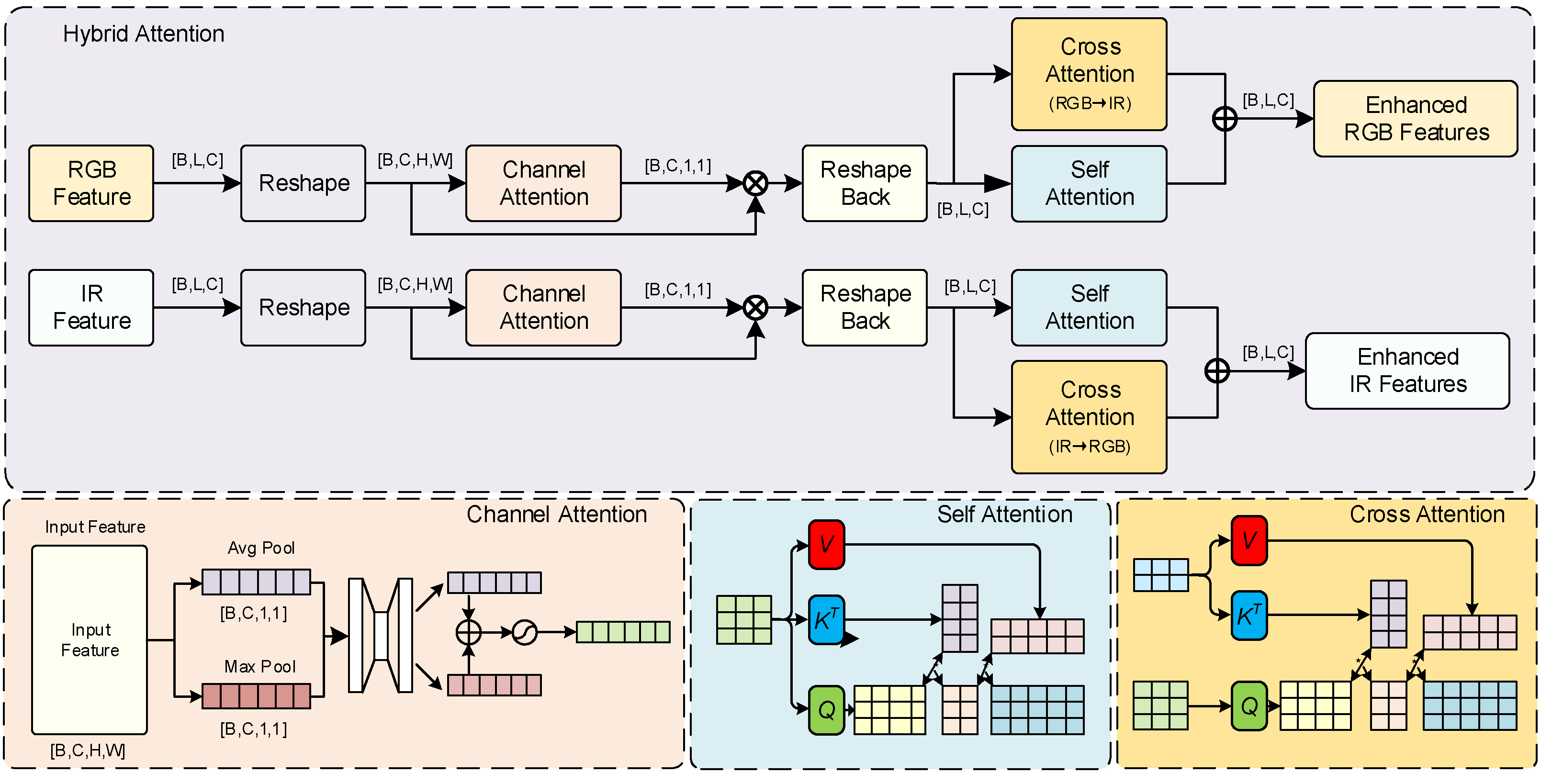

3.2.1. Hybrid Attention Mechanism

The hybrid attention mechanism builds a comprehensive feature enhancement system by integrating three complementary mechanisms: self-attention, cross-modal attention, and channel attention, capturing complex feature dependencies from spatial, modal relationship, and channel importance dimensions. This multi-dimensional feature enhancement design significantly improves the model's processing capability for different scenes.

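According to the data flow shown in the figure, the overall calculation process of the hybrid attention mechanism can be expressed as:

$$F'_{rgb} = \mathrm{CA}\left(F_{rgb}\right), \qquad F'_{ir} = \mathrm{CA}\left(F_{ir}\right)$$

$$F^{out}_{rgb} = \mathrm{SA}\left(F'_{rgb}\right) + \mathrm{CMA}\left(F'_{rgb}, F'_{ir}\right), \qquad F^{out}_{ir} = \mathrm{SA}\left(F'_{ir}\right) + \mathrm{CMA}\left(F'_{ir}, F'_{rgb}\right)$$

where $F'_{rgb}$ and $F'_{ir}$ respectively represent RGB and infrared features after channel attention processing, and $\mathrm{CA}$, $\mathrm{SA}$, and $\mathrm{CMA}$ denote channel attention, self-attention, and cross-modal attention.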

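Channel attention mechanism. The channel attention sub-module learns channel dependencies through global information modeling, providing all-around enhanced features for the spectral recursive progressive fusion strategy. Given input feature map $X \in \mathbb{R}^{B \times C \times H \times W}$, where $B$, $C$, $H$, $W$ respectively represent batch size, channel number, height, and width, the calculation process of channel attention can be expressed as:

$$M_c = \sigma\Big(W_2\,\delta\big(W_1\,\mathrm{GAP}(X)\big) + W_2\,\delta\big(W_1\,\mathrm{GMP}(X)\big)\Big), \qquad X' = M_c \otimes X$$

where $\mathrm{GAP}$ and $\mathrm{GMP}$ represent global average pooling and global maximum pooling operations; $W_1 \in \mathbb{R}^{C/r \times C}$ and $W_2 \in \mathbb{R}^{C \times C/r}$ are shared-weight fully connected layer parameter matrices, where $r$ is the reduction rate; and $\delta$ is the activation between the two layers. The sigmoid function $\sigma$ maps the weights to the $[0, 1]$ interval; finally, the channel attention weights are applied to the original features through element-wise multiplication.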
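The channel attention computation can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: ReLU is assumed as the activation between the two shared layers, biases are omitted, and the function name `channel_attention` and the tensor sizes are chosen for the example only.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x, w1, w2):
    """x: (B, C, H, W); w1: (C//r, C); w2: (C, C//r). Returns (x', weights)."""
    avg = x.mean(axis=(2, 3))  # global average pooling -> (B, C)
    mx = x.max(axis=(2, 3))    # global max pooling     -> (B, C)

    def shared_mlp(v):
        hidden = np.maximum(v @ w1.T, 0.0)  # reduce to C/r, then ReLU (assumed)
        return hidden @ w2.T                # restore to C

    # Both pooled descriptors go through the same two layers before the sigmoid.
    weights = sigmoid(shared_mlp(avg) + shared_mlp(mx))  # (B, C)
    return x * weights[:, :, None, None], weights


rng = np.random.default_rng(0)
b, c, h, w, r = 2, 8, 4, 4, 4
x = rng.standard_normal((b, c, h, w))
w1 = rng.standard_normal((c // r, c)) * 0.1
w2 = rng.standard_normal((c, c // r)) * 0.1

y, weights = channel_attention(x, w1, w2)
print(y.shape, weights.shape)
```

Each channel is rescaled by a single weight per sample, so the spatial layout of the feature map is unchanged and only the relative importance of channels is adjusted.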

Self-attention mechanism. The self-attention mechanism focuses on capturing spatial dependencies within a modality, allowing features to attend to related regions within the same modality, providing richer contextual information for the spectral hierarchical perception architecture. For input feature $X$, the calculation process of self-attention can be expressed as:

$$\mathrm{SA}(X) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V, \qquad Q = X W_Q,\; K = X W_K,\; V = X W_V$$

where $Q$, $K$, $V$ are query, key, and value matrices obtained through learnable parameter matrices $W_Q$, $W_K$, $W_V$; $d_k$ is the feature dimension, serving as a scaling factor that keeps the dot products from saturating the softmax and thus avoids gradient vanishing problems.
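A minimal NumPy sketch of scaled dot-product self-attention is given below. It assumes the feature map has already been flattened into a sequence of `N` tokens of width `d`; the function names and sizes are illustrative.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """x: (N, d) tokens, e.g. flattened spatial positions of one modality."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    d_k = q.shape[-1]
    attn = softmax(q @ k.T / np.sqrt(d_k))  # (N, N); each row sums to 1
    return attn @ v, attn


rng = np.random.default_rng(0)
n_tokens, d = 6, 8
x = rng.standard_normal((n_tokens, d))
w_q, w_k, w_v = (rng.standard_normal((d, d)) * 0.1 for _ in range(3))

out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.sum(axis=1))
```

Each output token is a weighted average of the value vectors of all tokens in the same modality, with weights given by the corresponding row of the attention matrix.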

Cross-modal attention mechanism. The cross-modal attention mechanism is used to capture complementary information between different modalities, establishing connections between visible and infrared features, and is the core component for achieving spectral information exchange. The unique aspect of cross-modal attention is that it uses the query from one modality to interact with the keys and values from another modality, thereby enabling information flow between modalities. For RGB and IR features, the calculation of cross-modal attention can be expressed as:

$$\mathrm{CMA}_{rgb \to ir} = \gamma \cdot \mathrm{softmax}\left(\frac{Q_{rgb} K_{ir}^{\top}}{\sqrt{d_k}}\right) V_{ir}, \qquad \mathrm{CMA}_{ir \to rgb} = \gamma \cdot \mathrm{softmax}\left(\frac{Q_{ir} K_{rgb}^{\top}}{\sqrt{d_k}}\right) V_{rgb}$$

where $Q_{rgb}$, $K_{ir}$, $V_{ir}$ are the matrices for the cross-modal attention calculation from RGB to IR; $Q_{ir}$, $K_{rgb}$, $V_{rgb}$ are the matrices for the IR to RGB calculation; and $\gamma$ is a learnable scaling factor that controls the strength of cross-modal information fusion.

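Finally, the outputs of self-attention and cross-modal attention are added to obtain the final enhanced features:

$$F^{out}_{rgb} = \mathrm{SA}_{rgb} + \mathrm{CMA}_{rgb \to ir}, \qquad F^{out}_{ir} = \mathrm{SA}_{ir} + \mathrm{CMA}_{ir \to rgb}$$

where $\mathrm{SA}_{rgb}$ and $\mathrm{SA}_{ir}$ are the self-attention outputs of the two modalities, and each cross-modal term is indexed by the modality that supplies the query.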

Through this design, the hybrid attention mechanism can simultaneously attend to channel importance, spatial dependency relationships, and modal complementary information, building a more comprehensive and robust feature representation.
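The interplay of self-attention and cross-modal attention can be sketched as follows. The sketch assumes token sequences of equal length for both modalities, a scalar `gamma` as the learnable scaling factor, and separate projection matrices for every attention path; these choices and all names are illustrative rather than taken from the implementation.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q_src, kv_src, w_q, w_k, w_v):
    """Queries come from q_src; keys and values come from kv_src."""
    q, k, v = q_src @ w_q, kv_src @ w_k, kv_src @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def make_proj(rng, d):
    """One (W_Q, W_K, W_V) triple."""
    return tuple(rng.standard_normal((d, d)) * 0.1 for _ in range(3))


def hybrid_spatial_attention(f_rgb, f_ir, proj, gamma):
    # Self-attention: each modality attends to itself.
    sa_rgb = attention(f_rgb, f_rgb, *proj["sa_rgb"])
    sa_ir = attention(f_ir, f_ir, *proj["sa_ir"])
    # Cross-modal attention: queries from one modality, keys/values from the other.
    cma_rgb = gamma * attention(f_rgb, f_ir, *proj["cma_rgb"])
    cma_ir = gamma * attention(f_ir, f_rgb, *proj["cma_ir"])
    # The two outputs are summed per modality.
    return sa_rgb + cma_rgb, sa_ir + cma_ir


rng = np.random.default_rng(0)
n_tokens, d = 6, 8
proj = {name: make_proj(rng, d) for name in ("sa_rgb", "sa_ir", "cma_rgb", "cma_ir")}
f_rgb = rng.standard_normal((n_tokens, d))
f_ir = rng.standard_normal((n_tokens, d))

out_rgb, out_ir = hybrid_spatial_attention(f_rgb, f_ir, proj, gamma=0.5)
print(out_rgb.shape, out_ir.shape)
```

Setting `gamma` to zero reduces the block to plain self-attention, which makes the role of the scaling factor as a gate on cross-modal information explicit.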

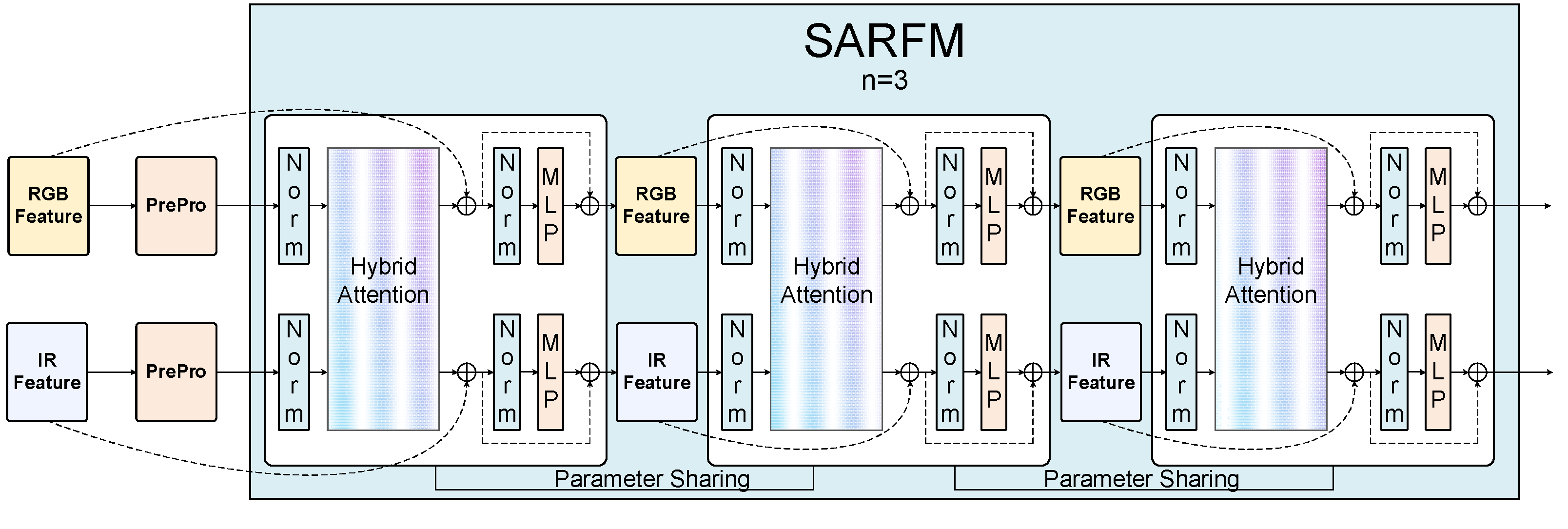

3.2.2. Recursive Progressive Fusion Strategy

Multi-modal feature fusion is a key challenge in RGB-T object detection. Traditional multi-modal feature fusion methods typically enhance performance by stacking multiple Transformer blocks, but this approach leads to dramatic increases in parameter count and computational complexity. Inspired by the "review-consolidate" mechanism in human learning processes, this paper proposes a spectral hierarchical recursive progressive fusion strategy, achieving feature progressive refinement through repeatedly applying the same feature transformation operations, thereby enhancing fusion effects without increasing model parameters.

Parameter Cycling Reuse Structure. The core idea of the spectral hierarchical recursive progressive fusion strategy is to use the same set of parameters for multiple rounds of feature refinement. Each refinement builds on the results of the previous round, forming a continuous, progressive feature fusion process. This process can be expressed as:

$$\left(F_{rgb}^{(t)}, F_{ir}^{(t)}\right) = \Phi\left(F_{rgb}^{(t-1)}, F_{ir}^{(t-1)}; \theta\right)$$

where $F_{rgb}^{(t)}$ and $F_{ir}^{(t)}$ respectively represent the visible and infrared features after the $t$-th round of cycling, $\Phi$ represents the feature transformation function, and $\theta$ is the reused model parameter.

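Through multiple cycles, the feature representation ability is continuously enhanced:

$$\left(F_{rgb}^{(N)}, F_{ir}^{(N)}\right) = \Phi^{N}\left(F_{rgb}^{(0)}, F_{ir}^{(0)}; \theta\right)$$

where $\Phi^{N}$ represents applying the transformation function $\Phi$ continuously $N$ times, and $F_{rgb}^{(0)}$ and $F_{ir}^{(0)}$ are the initial features.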

Compared to traditional methods, the cyclic weight reuse structure significantly reduces the model parameter count, while achieving deep feature interaction through multiple refinements. This design not only improves the model's representation ability but also alleviates the risk of overfitting.
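The parameter saving from weight reuse can be made concrete with a toy comparison between applying one block three times and stacking three independent blocks. The residual `tanh` block below merely stands in for the fusion unit; `make_block`, `apply_block`, and `count_params` are hypothetical helpers.

```python
import numpy as np


def make_block(rng, d):
    """Parameters of one toy transformation block."""
    return {"w": rng.standard_normal((d, d)) * 0.1, "b": np.zeros(d)}


def apply_block(x, block):
    """Toy residual transformation standing in for the fusion unit."""
    return x + np.tanh(x @ block["w"] + block["b"])


def run(x, blocks):
    for block in blocks:
        x = apply_block(x, block)
    return x


def count_params(blocks):
    """Count each distinct parameter set once, even if it is applied many times."""
    unique = {id(b): b for b in blocks}
    return sum(v.size for b in unique.values() for v in b.values())


rng = np.random.default_rng(0)
d, rounds = 16, 3

shared = make_block(rng, d)
recursive_blocks = [shared] * rounds                          # same parameters, 3 rounds
stacked_blocks = [make_block(rng, d) for _ in range(rounds)]  # 3 independent layers

x = rng.standard_normal((4, d))
y_recursive = run(x, recursive_blocks)
y_stacked = run(x, stacked_blocks)

print("recursive params:", count_params(recursive_blocks))
print("stacked params:  ", count_params(stacked_blocks))
```

Both variants apply three transformations in sequence, so the effective depth is the same, but the recursive variant stores a single parameter set while the stacked variant stores three.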

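Spectral Feature Progressive Fusion. Spectral feature progressive fusion is the core characteristic of this strategy, progressively fusing different spectral domain features. This progressive fusion process operates in the spectral dimension, ensuring each spectral property is fully preserved and mutually enhanced. With $F_{rgb}^{(t-1)}$ and $F_{ir}^{(t-1)}$ denoting the features entering round $t$, the fusion process includes the following key steps:

1. Spectral feature normalization: Normalization is performed separately on visible and infrared features:

$$\hat{F}_{rgb} = \mathrm{LN}\left(F_{rgb}^{(t-1)}\right), \qquad \hat{F}_{ir} = \mathrm{LN}\left(F_{ir}^{(t-1)}\right)$$

2. Hybrid attention calculation: The hybrid attention mechanism is applied to the normalized features:

$$\left(A_{rgb}, A_{ir}\right) = \mathrm{HA}\left(\hat{F}_{rgb}, \hat{F}_{ir}\right)$$

where $\mathrm{HA}$ represents the hybrid attention calculation.

3. Spectral residual connection: The attention outputs are combined with the original spectral features:

$$\tilde{F}_{rgb} = F_{rgb}^{(t-1)} + A_{rgb}, \qquad \tilde{F}_{ir} = F_{ir}^{(t-1)} + A_{ir}$$

4. Spectral feature enhancement: Each spectral feature is further enhanced through a multilayer perceptron and residual connection:

$$F_{rgb}^{(t)} = \tilde{F}_{rgb} + \mathrm{MLP}\left(\mathrm{LN}\left(\tilde{F}_{rgb}\right)\right), \qquad F_{ir}^{(t)} = \tilde{F}_{ir} + \mathrm{MLP}\left(\mathrm{LN}\left(\tilde{F}_{ir}\right)\right)$$

where $\mathrm{LN}$ represents the layer normalization operation and $\mathrm{MLP}$ represents the multilayer perceptron.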
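The four steps above can be sketched as one reusable function. This is a simplified illustration: layer normalization has no learned scale or shift, the MLP is two linear layers with a GELU in between and no dropout, and the hybrid attention is passed in as a callable. `toy_hybrid_attention` is a deliberately trivial placeholder, not the mechanism of Section 3.2.1.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Normalize each token over its feature dimension (no learned affine)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def gelu(x):
    """tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def mlp(x, w1, w2):
    return gelu(x @ w1) @ w2


def fusion_round(f_rgb, f_ir, hybrid_attention, params):
    # Step 1: normalize each spectral stream separately.
    n_rgb, n_ir = layer_norm(f_rgb), layer_norm(f_ir)
    # Step 2: hybrid attention on the normalized features.
    a_rgb, a_ir = hybrid_attention(n_rgb, n_ir)
    # Step 3: residual connection with the un-normalized inputs.
    r_rgb, r_ir = f_rgb + a_rgb, f_ir + a_ir
    # Step 4: MLP enhancement with a second residual connection.
    out_rgb = r_rgb + mlp(layer_norm(r_rgb), *params["mlp_rgb"])
    out_ir = r_ir + mlp(layer_norm(r_ir), *params["mlp_ir"])
    return out_rgb, out_ir


def toy_hybrid_attention(n_rgb, n_ir):
    """Placeholder: both modalities receive the mean of the two inputs."""
    mixed = 0.5 * (n_rgb + n_ir)
    return mixed, mixed


rng = np.random.default_rng(0)
n_tokens, d = 5, 8
params = {
    "mlp_rgb": (rng.standard_normal((d, 4 * d)) * 0.1, rng.standard_normal((4 * d, d)) * 0.1),
    "mlp_ir": (rng.standard_normal((d, 4 * d)) * 0.1, rng.standard_normal((4 * d, d)) * 0.1),
}
f_rgb = rng.standard_normal((n_tokens, d))
f_ir = rng.standard_normal((n_tokens, d))

# Three rounds with the same parameters, mirroring the recursive strategy.
for _ in range(3):
    f_rgb, f_ir = fusion_round(f_rgb, f_ir, toy_hybrid_attention, params)
print(f_rgb.shape, f_ir.shape)
```

Because both residual connections add to the incoming features, a round with zero attention output and zero MLP weights leaves the features unchanged, so each round can only refine what the previous one produced.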

Progressive feature refinement process. The progressive feature refinement process can be viewed as a "feature distillation" mechanism, where each round of cycling makes the feature representation more pure and effective. In this research, we adopt a fixed 3-round cycling structure, a design based on extensive experimental validation.

The refinement process can be divided into three stages:

First round of cycling: Initial fusion stage. Mainly captures basic intra-modal and inter-modal relationships, establishing initial feature interaction;

Second round of cycling: Feature reinforcement stage. Based on the already established initial relationships, further strengthens important feature connections, suppressing noise and irrelevant information;

Third round of cycling: Feature refinement stage. Performs final optimization and fine-tuning on features, forming high-quality fusion representations.

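This three-round progressive refinement process can be expressed as:

$$F^{(1)} = \Phi\left(F^{(0)}; \theta\right), \qquad F^{(2)} = \Phi\left(F^{(1)}; \theta\right), \qquad F^{(3)} = \Phi\left(F^{(2)}; \theta\right)$$

where $F^{(t)}$ denotes the pair of visible and infrared features after round $t$, $\Phi$ is the feature transformation function, and $\theta$ is the parameter set shared by all three rounds.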

The progressive refinement mechanism creates a "deep cascade" effect, achieving deep network feature representation capabilities within a fixed parameter space, which is fundamentally different from traditional "multi-layer stacking" approaches. Traditional methods require introducing new parameter sets for each additional layer, while our method achieves deeper effective network depth through parameter reuse while maintaining parameter efficiency.

Spectral Multi-scale Fusion Mechanism. The spectral multi-scale fusion mechanism is an important component of the recursive progressive fusion strategy, applying recursive progressive fusion on features of different scales to achieve comprehensive multi-scale feature optimization. This mechanism includes the following key designs:

Multi-scale feature selection: The fusion strategy is applied separately on three scales—P3/8, P4/16, and P5/32—ensuring thorough fusion of features at all three scales;

Inter-scale information flow: Information exchange between features of different scales is achieved through FPN and PAN structures;

The multi-scale fusion process can be expressed as:

$$\left(F_{rgb}^{s,out}, F_{ir}^{s,out}\right) = \Phi_s^{3}\left(F_{rgb}^{s}, F_{ir}^{s}; \theta_s\right), \qquad s \in \{P3, P4, P5\}$$

where $s$ represents the feature scale index, and $\Phi_s$ represents the feature transformation function for the $s$-th scale, applied for three rounds. By applying recursive progressive fusion across multiple scales, the system comprehensively enhances the representation capability of features at different scales, providing a solid foundation for detecting targets of various sizes.
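To make the scale notation concrete, the sketch below lists the feature-map size implied by strides 8, 16, and 32 and applies a per-scale transformation for a fixed number of rounds. The 640×640 input size, the function names, and the trivial stand-in transformations are assumptions made for the example.

```python
def pyramid_shapes(height, width, strides=(8, 16, 32)):
    """Spatial size and position count of each fused level for a given input size."""
    shapes = {}
    for name, stride in zip(("P3", "P4", "P5"), strides):
        h, w = height // stride, width // stride
        shapes[name] = {"stride": stride, "size": (h, w), "positions": h * w}
    return shapes


def fuse_all_scales(features, transforms, rounds=3):
    """Apply each scale's own transformation `rounds` times.

    features:   {scale: (f_rgb, f_ir)}
    transforms: {scale: callable taking and returning (f_rgb, f_ir)}
    """
    fused = {}
    for scale, (f_rgb, f_ir) in features.items():
        step = transforms[scale]
        for _ in range(rounds):
            f_rgb, f_ir = step(f_rgb, f_ir)
        fused[scale] = (f_rgb, f_ir)
    return fused


levels = pyramid_shapes(640, 640)  # hypothetical 640x640 input
for name, info in levels.items():
    print(name, info)

# Scalar stand-ins make the three rounds per scale easy to verify.
features = {name: (0, 0) for name in levels}
transforms = {name: (lambda a, b: (a + 1, b + 2)) for name in levels}
fused = fuse_all_scales(features, transforms)
print(fused)
```

The finest level has sixteen times as many spatial positions as the coarsest one, which is why the per-scale cost of attention-based fusion differs substantially between levels.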

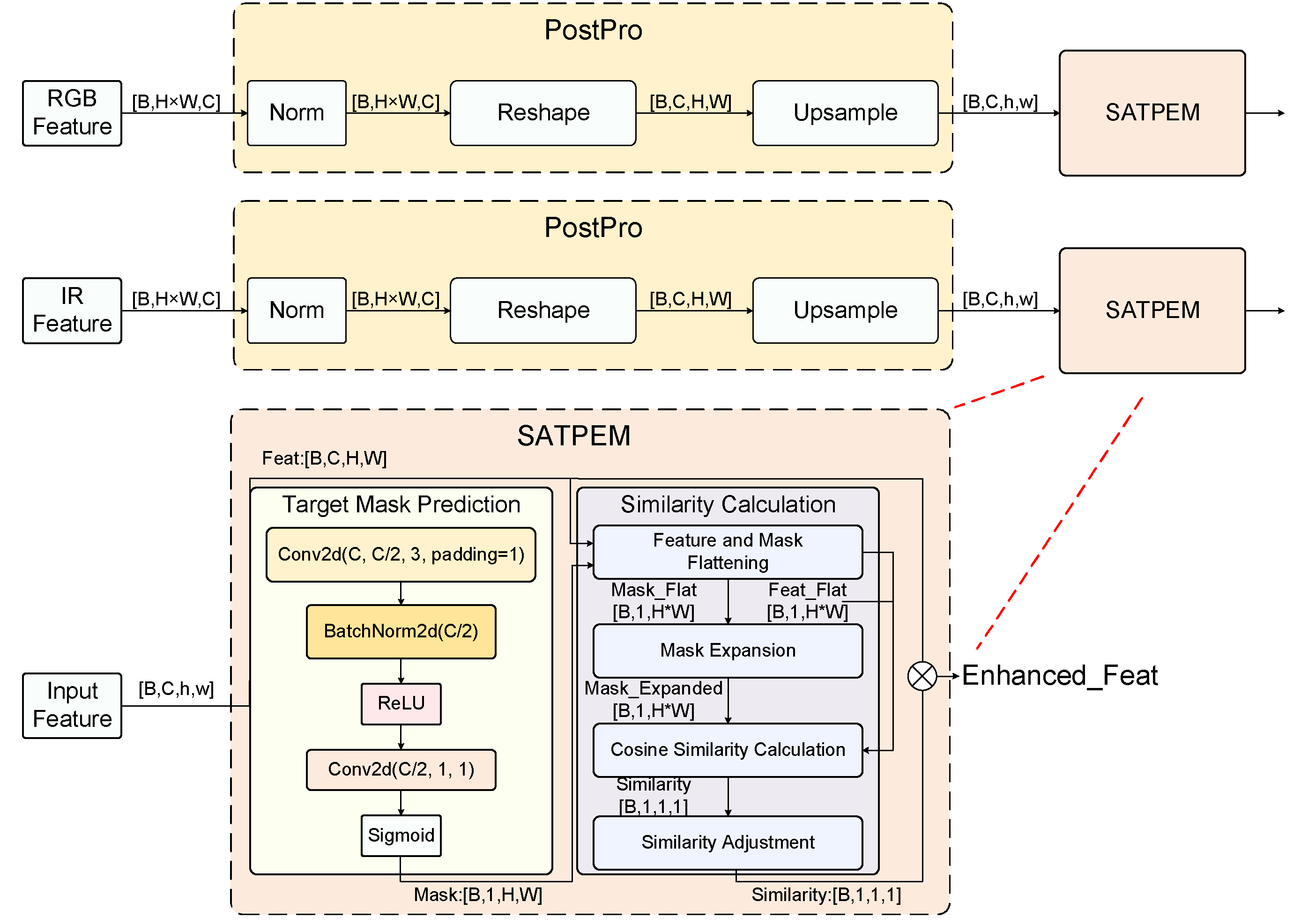

3.3. Spectral Target Perception Enhancement Module (STPEM)

The STPEM module focuses on enhancing target regions in features while reducing background interference. Through mask generation and feature enhancement mechanisms, this module significantly improves the model's detection capability for small and low-contrast targets, providing more precise feature representation for object detection in complex environments.

3.3.1. Lightweight Mask Prediction

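Lightweight mask prediction is the core component of STPEM. Given input feature $F \in \mathbb{R}^{B \times C \times H \times W}$, mask prediction first predicts target region masks through a lightweight convolutional network:

$$M = \sigma\left(f_{mask}(F)\right)$$

where $f_{mask}$ represents the mask prediction network, and $\sigma$ represents the sigmoid activation function. The mask prediction network adopts a two-layer convolutional structure:

$$f_{mask}(F) = \mathrm{Conv}_{1 \times 1}\Big(\mathrm{ReLU}\big(\mathrm{BN}\left(\mathrm{Conv}_{3 \times 3}(F)\right)\big)\Big)$$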

The first layer is a 3×3 convolution that reduces the number of channels from C to C/2, followed by batch normalization and ReLU activation; the second layer is a 1×1 convolution that reduces the number of channels from C/2 to 1, outputting a single-channel mask. Finally, the sigmoid function maps values to the [0,1] range, representing the probability that each position contains a target.

The mask prediction network is essentially learning "what feature patterns might correspond to target regions." For example, in RGB images, targets typically have distinct edges and texture features; in infrared images, targets often appear as regions with significant temperature differences from the background. The mask prediction network captures these feature patterns through convolutional operations to generate masks representing potential target regions.
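The two-layer mask predictor can be sketched in NumPy for a single feature map. This is an illustration under assumptions: convolutions have no bias, batch normalization is replaced by per-channel standardization without learned scale or shift, and the weights are random rather than trained. All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv3x3(x, w):
    """Zero-padded 3x3 convolution. x: (C_in, H, W); w: (C_out, C_in, 3, 3)."""
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    windows = sliding_window_view(padded, (3, 3), axis=(1, 2))  # (C_in, H, W, 3, 3)
    return np.einsum("chwij,ocij->ohw", windows, w)


def conv1x1(x, w):
    """x: (C_in, H, W); w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def batch_norm(x, eps=1e-5):
    """Stand-in for batch normalization: per-channel standardization only."""
    mu = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def predict_mask(x, w3, w1):
    hidden = np.maximum(batch_norm(conv3x3(x, w3)), 0.0)  # C -> C/2, BN, ReLU
    logits = conv1x1(hidden, w1)                           # C/2 -> 1
    return 1.0 / (1.0 + np.exp(-logits))                   # sigmoid, values in (0, 1)


rng = np.random.default_rng(0)
c, h, w = 8, 16, 16
feat = rng.standard_normal((c, h, w))
w3 = rng.standard_normal((c // 2, c, 3, 3)) * 0.1
w1 = rng.standard_normal((1, c // 2)) * 0.1

mask = predict_mask(feat, w3, w1)
print(mask.shape, float(mask.min()), float(mask.max()))
```

The output keeps the spatial size of the input and has a single channel, so it can be read directly as a per-position target probability map.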

3.3.2. Similarity Calculation and Adjustment

After mask generation, the module calculates the cosine similarity between features and masks to evaluate the correlation between each feature channel and the target region, thereby establishing explicit associations between feature channels and potential target regions:

$$S = \cos\Big(\mathrm{flatten}(F),\ \mathrm{flatten}\big(\mathrm{expand}(M)\big)\Big)$$

where the $\mathrm{flatten}$ operation flattens the spatial dimensions of the features, the $\mathrm{expand}$ operation expands the mask to the same number of channels as the features, and $\cos$ calculates the cosine similarity between two vectors.

After calculating the similarity between each channel and the mask, further processing is done through averaging operations and a learnable adjustment layer:

$$S' = \sigma\Big(f_{adj}\big(\mathrm{mean}(S)\big)\Big)$$

where $f_{adj}$ is a 1×1 convolutional layer for adjusting similarity, and $\sigma$ is the sigmoid activation function. This learnable similarity adjustment mechanism enables the module to adaptively adjust similarity calculations according to different scenes, improving the flexibility and adaptability of the module.

3.3.3. Feature Enhancement Mechanism

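Finally, the enhanced feature $F'$ is obtained through similarity weighting:

$$F' = F \otimes S'$$

where $S'$ is the adjusted similarity and $\otimes$ denotes element-wise multiplication with broadcasting.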

The core idea of this weighting mechanism is: if a feature has high similarity with the predicted target region, it is preserved or enhanced; if the similarity is low, the feature is suppressed. In this way, features of target regions are effectively enhanced while features of background regions are suppressed, thereby improving the signal-to-noise ratio of the features.
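The similarity and weighting steps can be sketched as follows for a single feature map. Several details are assumptions rather than facts from the text: the averaging is taken over channels, which yields one scalar gate per image, and a scalar affine map (`adjust_w`, `adjust_b`) stands in for the learnable 1×1 convolution. Other readings of the averaging step are possible.

```python
import numpy as np


def stpem_enhance(feat, mask, adjust_w=1.0, adjust_b=0.0, eps=1e-8):
    """feat: (C, H, W); mask: (1, H, W) with values in [0, 1]."""
    c = feat.shape[0]
    f_flat = feat.reshape(c, -1)                                  # flatten spatial dims
    m_flat = np.broadcast_to(mask.reshape(1, -1), f_flat.shape)   # expand mask to C channels

    # Cosine similarity between every channel and the mask -> (C,)
    dot = (f_flat * m_flat).sum(axis=1)
    norms = np.linalg.norm(f_flat, axis=1) * np.linalg.norm(m_flat, axis=1)
    sim = dot / (norms + eps)

    # Assumed reading: average over channels, adjust, then squash with a sigmoid.
    mean_sim = sim.mean()
    weight = 1.0 / (1.0 + np.exp(-(adjust_w * mean_sim + adjust_b)))

    # Similarity weighting of the input features.
    return feat * weight, sim, weight


# Toy example: channel 0 coincides with the mask, channel 1 is its complement.
mask = np.zeros((1, 4, 4))
mask[0, :2, :] = 1.0
feat = np.stack([mask[0], 1.0 - mask[0]])

enhanced, sim, weight = stpem_enhance(feat, mask)
print("per-channel similarity:", sim)
print("gate:", weight)
```

In the toy example the channel that overlaps the mask has a similarity close to one and the complementary channel a similarity of zero, which is the signal the adjustment layer uses to decide how strongly features are kept or suppressed.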

The STPEM module significantly improves the performance of multispectral object detection by effectively identifying and enhancing potential target regions, showing excellent performance especially when processing complex background scenes.

6. Conclusions

This paper presents SDRFPT-Net (Spectral Dual-stream Recursive Fusion Perception Target Network), a novel architecture for multispectral object detection that effectively integrates visible and infrared modalities through three innovative modules. First, the Spectral Hierarchical Perception Architecture (SHPA) based on YOLOv10 employs a dual-stream structure to extract modality-specific features. Second, the Spectral Recursive Fusion Module (SRFM) achieves deep cross-modal feature interaction through a hybrid attention mechanism and recursive fusion strategy. Finally, the Spectral Target Perception Enhancement Module (STPEM) enhances target region representation and suppresses background interference through lightweight mask prediction.

Extensive experiments on the FLIR-aligned and LLVIP datasets demonstrate that SDRFPT-Net outperforms existing methods across all key metrics. On the FLIR-aligned dataset, our model achieves 0.785 mAP50 and 0.426 mAP50:95, surpassing the second-best BA-CAMF Net (0.704 mAP50) by a relative 11.5% in mAP50. On the LLVIP dataset, it reaches 0.963 mAP50 and 0.706 mAP50:95, significantly outperforming all comparison methods.

Through comprehensive ablation studies, we validated the effectiveness and optimal configuration of each innovative module. Results indicate that the complete hybrid attention mechanism (combining self-attention, cross-modal attention, and channel attention), full-scale advanced fusion strategy, and three iterations of recursive progressive fusion collectively contribute to the model's superior performance. Notably, the recursive progressive fusion mechanism achieves an optimal performance balance at three iterations, creating a "deep cascade" effect that enables deep network representation capabilities within a fixed parameter space.

Despite SDRFPT-Net's excellent performance, several limitations warrant discussion. Although the recursive progressive fusion strategy enhances feature interaction depth, the optimal design of three iterations also reflects that excessive iterations may lead to feature "over-fusion," which actually impairs performance. Additionally, the current model still has room for improvement in processing distant small targets, particularly under adverse weather conditions. Furthermore, deploying the model on edge devices continues to face certain challenges.

Future work could consider further optimizing the network structure while exploring the integration of temporal information to improve adaptability to dynamic scenes. Meanwhile, we will focus on enhancing model robustness in complex environments and reducing computational complexity to meet various requirements in practical applications. Overall, SDRFPT-Net offers a promising solution for multispectral object detection in applications such as autonomous driving, security surveillance, and remote sensing analysis.

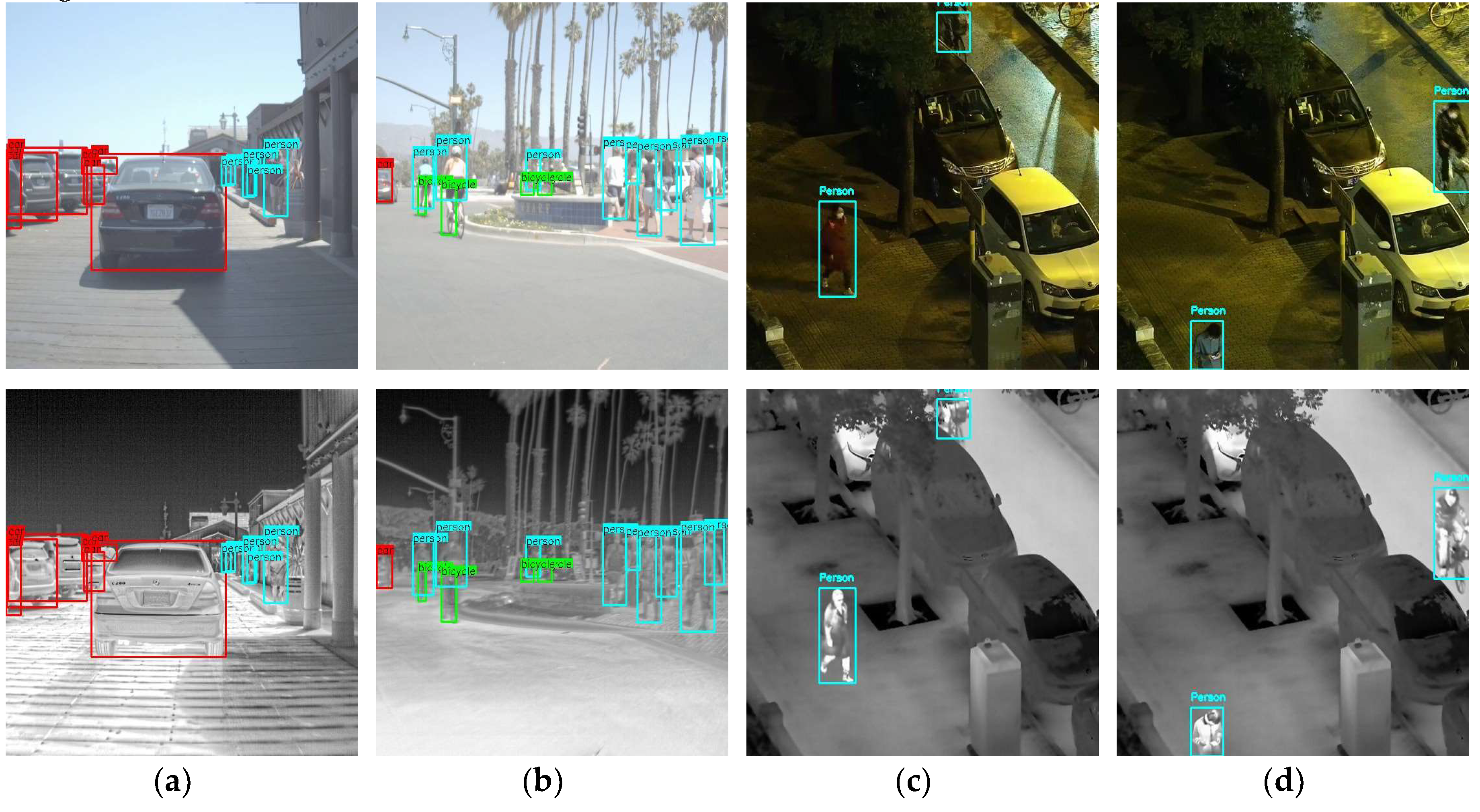

Figure 1.

Comparison of multispectral object detection advantages under different lighting conditions. The figure shows detection results for visible (top row) and infrared (bottom row) imaging in daytime (left two columns) and nighttime (right two columns) scenes. Red bounding boxes indicate cars, blue boxes indicate persons, and green boxes indicate bicycles. It clearly demonstrates that visible images (top-left) provide richer color and texture information for better detection in daylight, while infrared images (bottom-right) provide clearer object contours by capturing thermal radiation, showing significant advantages in low-light conditions. This complementarity proves the necessity of multispectral fusion for all-weather object detection, especially in complex and variable environmental conditions.

Figure 2.

Overall architecture of SDRFPT-Net (Spectral Dual-stream Recursive Fusion Perception Target Network). The architecture employs a dual-stream design with parallel processing paths for visible and infrared input images. The network consists of three key innovative modules: Spectral Hierarchical Perception Architecture (SHPA) for extracting modality-specific features, Spectral Recursive Fusion Module (SRFM) for deep cross-modal feature interaction, and Spectral Target Perception Enhancement Module (STPEM) for enhancing target region representation and suppressing background interference. The feature pyramid and detection head (V10 Detect) enable multi-scale object detection.

Figure 3.

Dual-stream separated spectral architecture design in SDRFPT-Net. The architecture expands a single feature extraction network into a dual-stream structure, where the upper stream processes visible spectral information while the lower stream handles infrared spectral information. Although both processing paths share similar network structures, they employ independent parameter sets for optimization, allowing each stream to specifically learn the feature distribution and representation of its respective modality.

Figure 4.

Multi-scale fusion feature aggregation and detection process in SDRFPT-Net. The figure shows features from three different scales (P3, P4, P5) that already contain fused information from visible and infrared modalities. The middle section presents two complementary information flow networks: Feature Pyramid Network (FPN) and Path Aggregation Network (PAN). FPN (light blue background) follows a top-down path, transferring high-level semantic information to low-level features, while PAN (light pink background) follows a bottom-up path, transferring low-level spatial details to high-level features. This bidirectional feature flow mechanism ensures that features at each scale incorporate both fine spatial localization information and rich semantic representation.

Figure 5.

Detailed architecture of the Spectral Recursive Fusion Module (SRFM). The framework is divided into two main parts: the upper light blue background area shows the overall recursive fusion process, labeled as 'SARFM n=3', indicating a three-round recursive fusion strategy. This part receives RGB and IR dual-stream features from SHPA and processes them through three cascaded hybrid attention units with parameter sharing to improve computational efficiency. The lower part shows the detailed internal structure of the hybrid attention unit, including preprocessing components (PrePro) such as AvgPool, Flatten, and Position Encoding; the hybrid attention mechanism implementation including Channel Attention, Self Attention, and Cross Attention; and the MLP module with Linear layers, GELU activation, and Dropout.

Figure 6.

Detailed structure of the hybrid attention mechanism in SDRFPT-Net. The mechanism integrates three complementary attention computation methods to achieve multi-dimensional feature enhancement. The upper part shows the overall processing flow: RGB and IR features first undergo reshaping and enter the Channel Attention module, which focuses on learning the importance weights of different feature channels. After reshaping back, the features simultaneously enter both Self Attention and Cross Attention modules, capturing intra-modal spatial dependencies and inter-modal complementary information. Finally, the outputs from both attention modules are added to generate enhanced RGB and IR feature representations.
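
The data flow in this caption (channel attention first, then self and cross attention in parallel, then addition) can be sketched as follows. This is a weight-free toy under stated assumptions: tokens are plain lists, identity projections replace the learned query/key/value projections, and the channel gate is a sigmoid of the per-channel mean. None of these choices are taken from the paper.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention with identity projections (assumption)."""
    scale = math.sqrt(len(queries[0]))
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


def channel_attention(tokens):
    """Gate each channel by a sigmoid of its mean over tokens (assumed form)."""
    n, dim = len(tokens), len(tokens[0])
    gates = [1.0 / (1.0 + math.exp(-sum(t[c] for t in tokens) / n)) for c in range(dim)]
    return [[t[c] * gates[c] for c in range(dim)] for t in tokens]


def add_tokens(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def hybrid_attention(rgb, ir):
    # Step 1: channel attention re-weights the channels of each modality.
    rgb_c, ir_c = channel_attention(rgb), channel_attention(ir)
    # Step 2: self attention (same modality) and cross attention (other
    # modality as keys/values) run in parallel; their outputs are added.
    rgb_out = add_tokens(attention(rgb_c, rgb_c, rgb_c), attention(rgb_c, ir_c, ir_c))
    ir_out = add_tokens(attention(ir_c, ir_c, ir_c), attention(ir_c, rgb_c, rgb_c))
    return rgb_out, ir_out


# Three tokens with two channels per modality (toy values).
rgb_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ir_tokens = [[0.5, 0.5], [1.0, 0.0], [0.0, 2.0]]
rgb_out, ir_out = hybrid_attention(rgb_tokens, ir_tokens)
```

The outputs keep the token count and channel width of the inputs, so a unit of this shape can be applied repeatedly.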

Figure 7.

Spectral recursive progressive fusion architecture in SDRFPT-Net. The light blue background area (labeled as 'SARFM n=3') shows the parameter-sharing three-round recursive fusion process. The left side includes RGB and IR input features after preprocessing (PrePro), which flow through three cascaded hybrid attention units. The key innovation is that these three processing units share the exact same parameter set (indicated by 'Parameter Sharing' connections), achieving deep recursive structure without increasing model complexity. Each processing unit contains normalization (Norm) components and MLP modules, forming a complete feature refinement path.
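
The parameter-sharing idea in this caption can be shown with a toy example: one unit applied three times has the same parameter count as one unit applied once, whereas three independent units triple it. The `FusionUnit` class and its two scalar parameters are hypothetical stand-ins for a hybrid attention unit, used only to make the counting concrete.

```python
class FusionUnit:
    """Toy stand-in for one hybrid attention unit (hypothetical).

    It mixes the two streams with two scalar parameters.
    """

    def __init__(self, keep=0.75, exchange=0.25):
        self.params = [keep, exchange]

    def __call__(self, rgb, ir):
        keep, exchange = self.params
        new_rgb = [keep * r + exchange * i for r, i in zip(rgb, ir)]
        new_ir = [keep * i + exchange * r for r, i in zip(rgb, ir)]
        return new_rgb, new_ir


def recursive_fusion(unit, rgb, ir, n=3):
    """Apply the same unit n times, feeding each output into the next round."""
    for _ in range(n):
        rgb, ir = unit(rgb, ir)
    return rgb, ir


def count_params(units):
    """Count parameters over distinct unit objects only."""
    distinct = {id(u): u for u in units}
    return sum(len(u.params) for u in distinct.values())


shared = FusionUnit()
shared_stack = [shared] * 3                          # one unit reused three times
unshared_stack = [FusionUnit() for _ in range(3)]    # three independent units

fused_rgb, fused_ir = recursive_fusion(shared, [1.0], [0.0], n=3)
```

In this toy each round moves the two streams closer together, which loosely mirrors how repeated fusion rounds exchange information between modalities while the parameter count stays fixed.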

Figure 8.

Spectral Target Perception Enhancement Module (STPEM) structure and data flow. The module aims to enhance target region representation while suppressing background interference to improve detection accuracy. The figure is divided into three main parts: the upper and middle parts show parallel processing paths for features from RGB and IR modalities. Both feature paths first go through post-processing (PostPro) modules, including feature normalization, reshaping, and upsampling, before entering the STPEM module for enhancement processing.

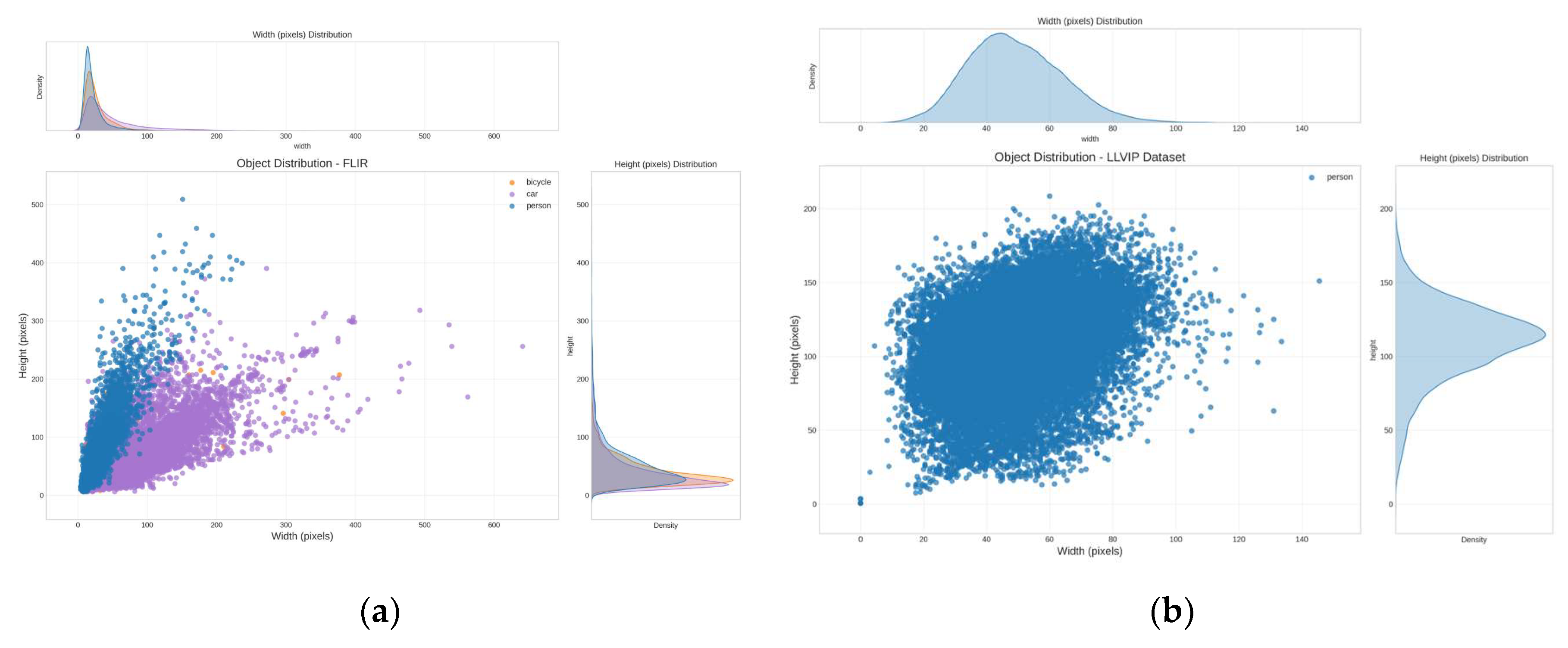

Figure 9.

Object size distribution characteristics in multispectral detection datasets. (a) FLIR-aligned dataset showing three object classes (blue: person, purple: car, orange: bicycle), where pedestrians exhibit slender features (width<100px, height<300px) and cars have wider distribution (width 50-300px, height 50-200px); (b) LLVIP dataset showing pedestrian size distribution with highly clustered characteristics (width 20-80px, height 40-150px), forming a high-density region. The density curves at the top and right of both figures show the statistical distribution of width and height, providing important reference for network design.

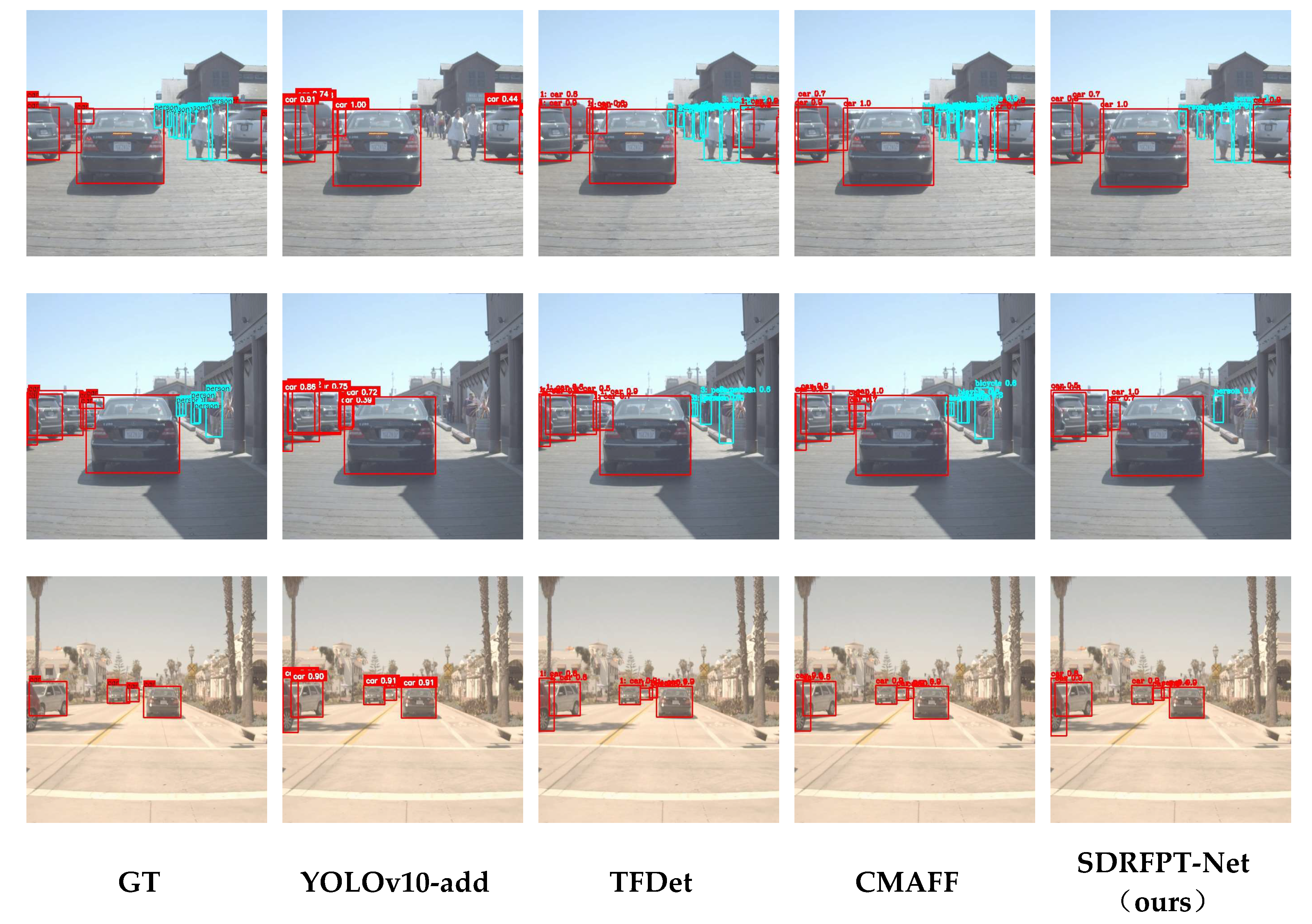

Figure 10.

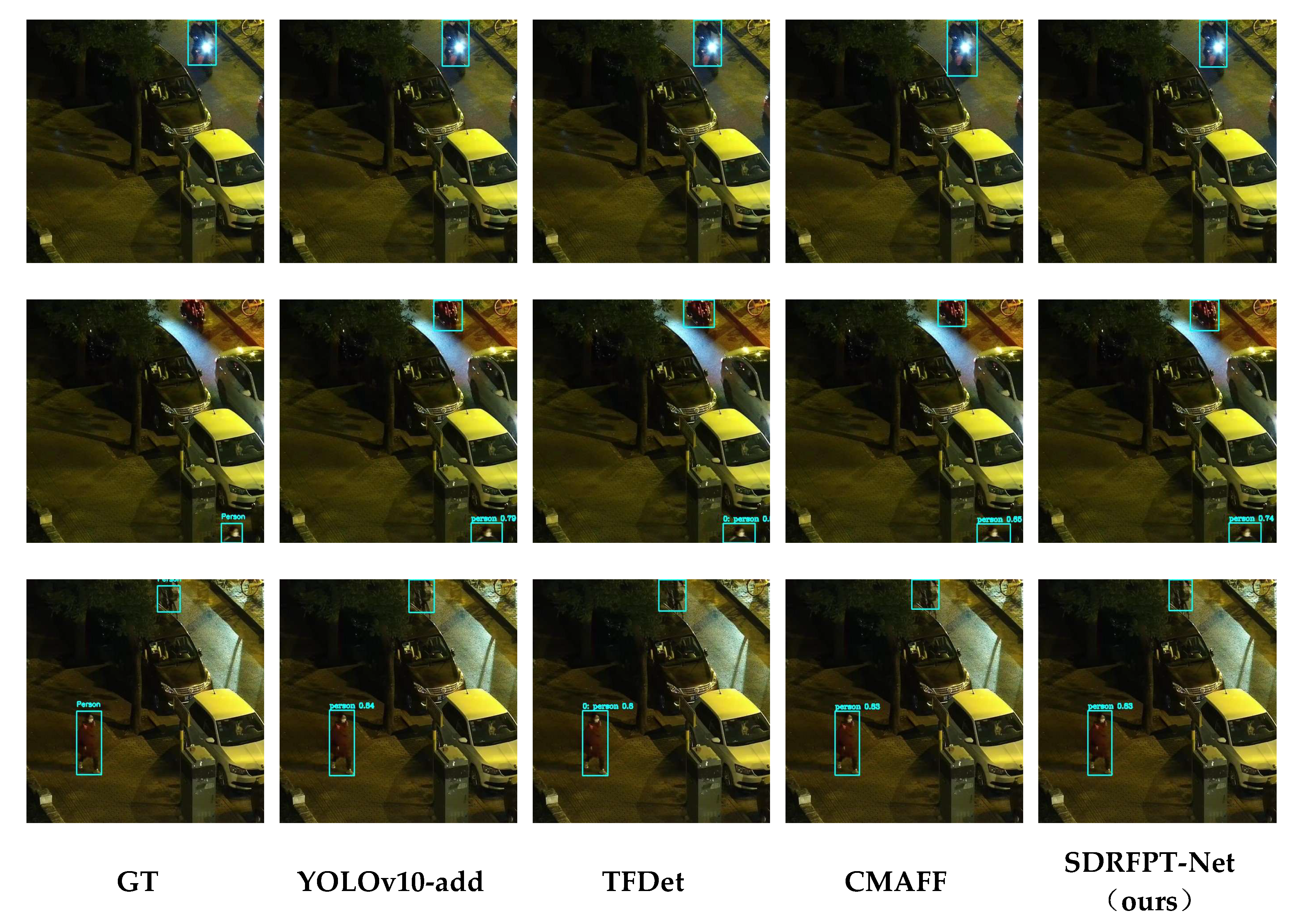

Comparison of detection performance for different multispectral object detection models on various scenarios in the FLIR-aligned dataset. Images are organized by columns from left to right: Ground Truth (GT), YOLOv10-add, TFDet, CMAFF, and our proposed SDRFPT-Net model. Each row shows typical scenarios with different environmental conditions and object distributions, including close-range vehicles, multiple roadway targets, parking areas, narrow streets, and open roads. The visualization results clearly demonstrate the advantages of SDRFPT-Net: in the first row's close-range vehicle scene, SDRFPT-Net's bounding boxes almost perfectly match GT; in the second row's complex multi-target scene, it successfully detects all pedestrians and bicycles without obvious misses; in the third row's parking lot scene, it accurately identifies multiple closely parked vehicles with precise bounding box localization.

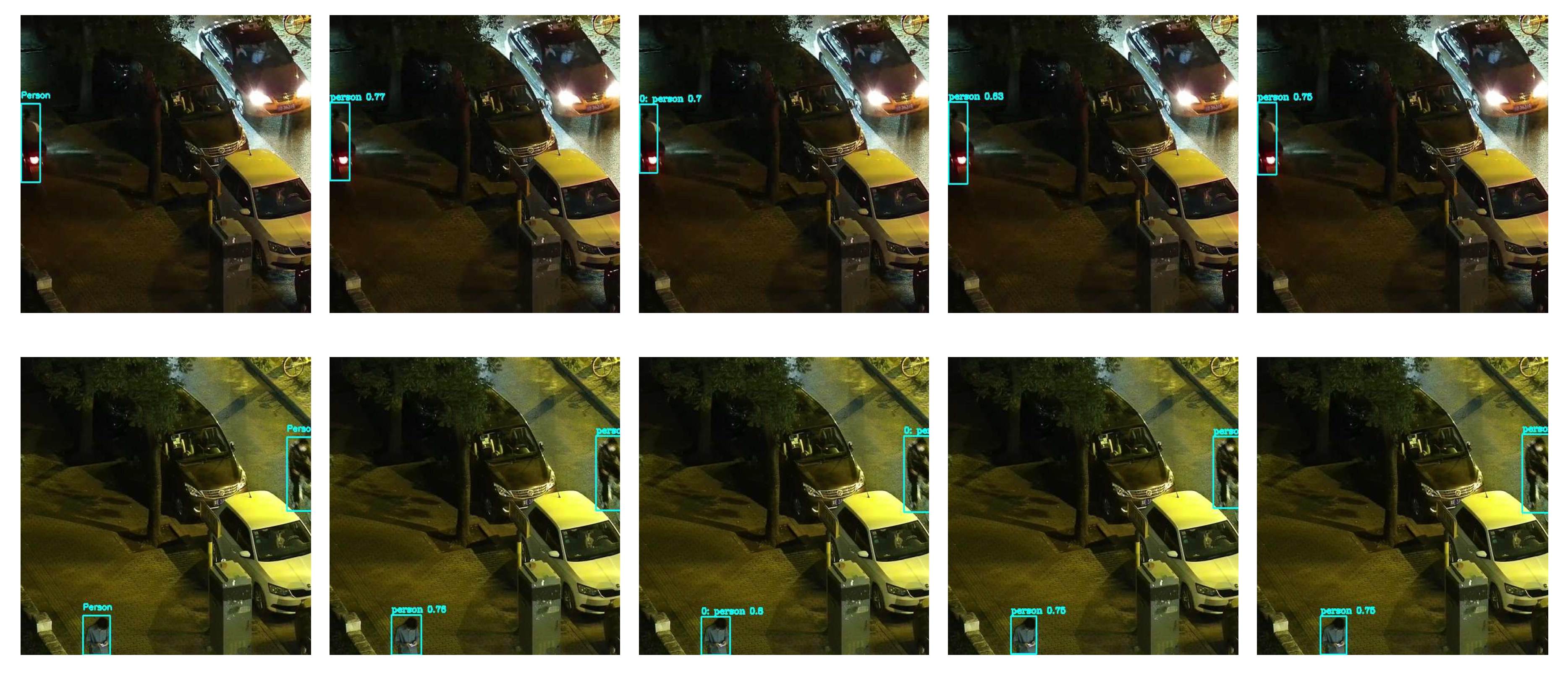

Figure 11.

Comparison of pedestrian detection performance for different detection models on nighttime low-light scenes from the LLVIP dataset. Images are organized by columns from left to right: Ground Truth (GT), YOLOv10-add, TFDet, CMAFF, and our proposed SDRFPT-Net model. The rows display five typical nighttime scenes representing challenging situations with different lighting conditions, viewing angles, and target distances. In all low-light scenes, SDRFPT-Net demonstrates excellent pedestrian detection capability: accurately identifying distant pedestrians with precise bounding boxes in the first row's street lighting scene; maintaining stable detection performance despite strong light interference in the second and fourth rows; successfully detecting distant pedestrians that other methods tend to miss in the fifth row's dark area.

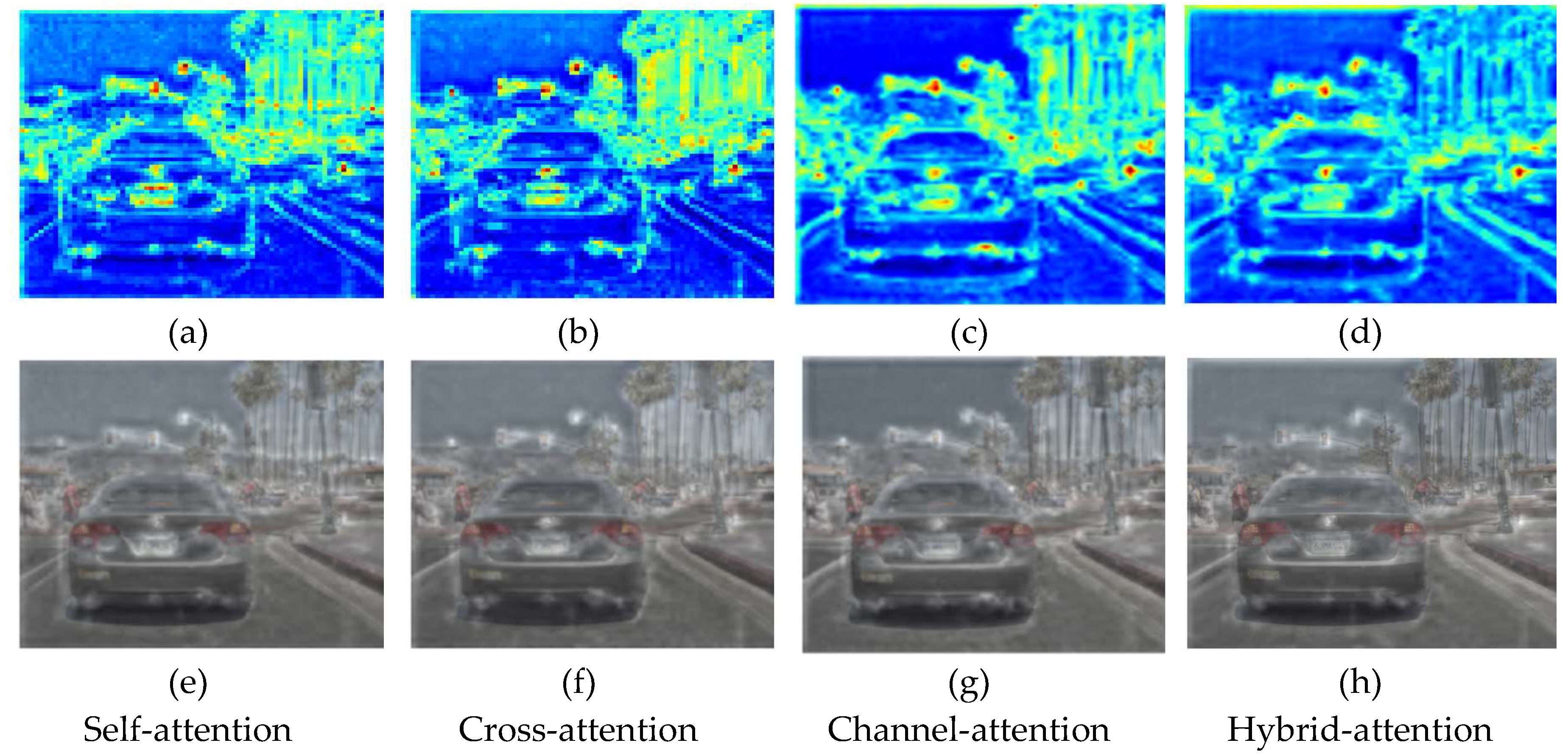

Figure 12.

Comparative impact of different attention mechanisms on the P3 feature layer (high-resolution features) in SDRFPT-Net. The top row (a-d) presents feature activation maps, while the bottom row (e-h) shows the corresponding original image heatmap overlay effects, demonstrating the differences in feature attention patterns. Self-attention (a,e) focuses on target contours and edge information; Cross-attention (b,f) presents overall attention to target areas with complementary information from RGB and IR modalities; Channel-attention (c,g) demonstrates selective enhancement of specific semantic information; Hybrid-attention (d,h) combines the advantages of all three mechanisms for optimal feature representation.

Figure 13.

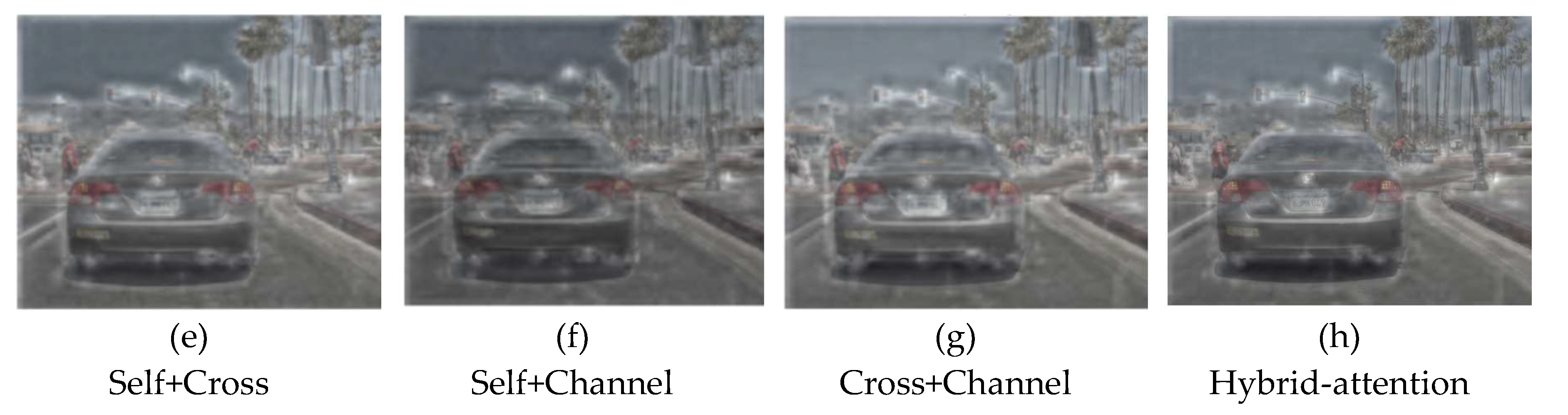

Representational differences between dual attention mechanism combinations and the complete triple attention mechanism on the P3 feature layer. The top row (a-d) shows feature activation maps, while the bottom row (e-h) shows original image heatmap overlay effects, revealing the complementarity and synergistic effects of different attention combinations. Self+Cross attention (a,e) simultaneously possesses excellent boundary localization and target region representation; Self+Channel attention (b,f) enhances specific semantic features while preserving boundary information; Cross+Channel attention (c,g) enhances channel representation based on multi-modal fusion but lacks spatial context; Hybrid-attention (d,h) achieves the most comprehensive and effective feature representation through synergistic integration of all three mechanisms.

Figure 14.

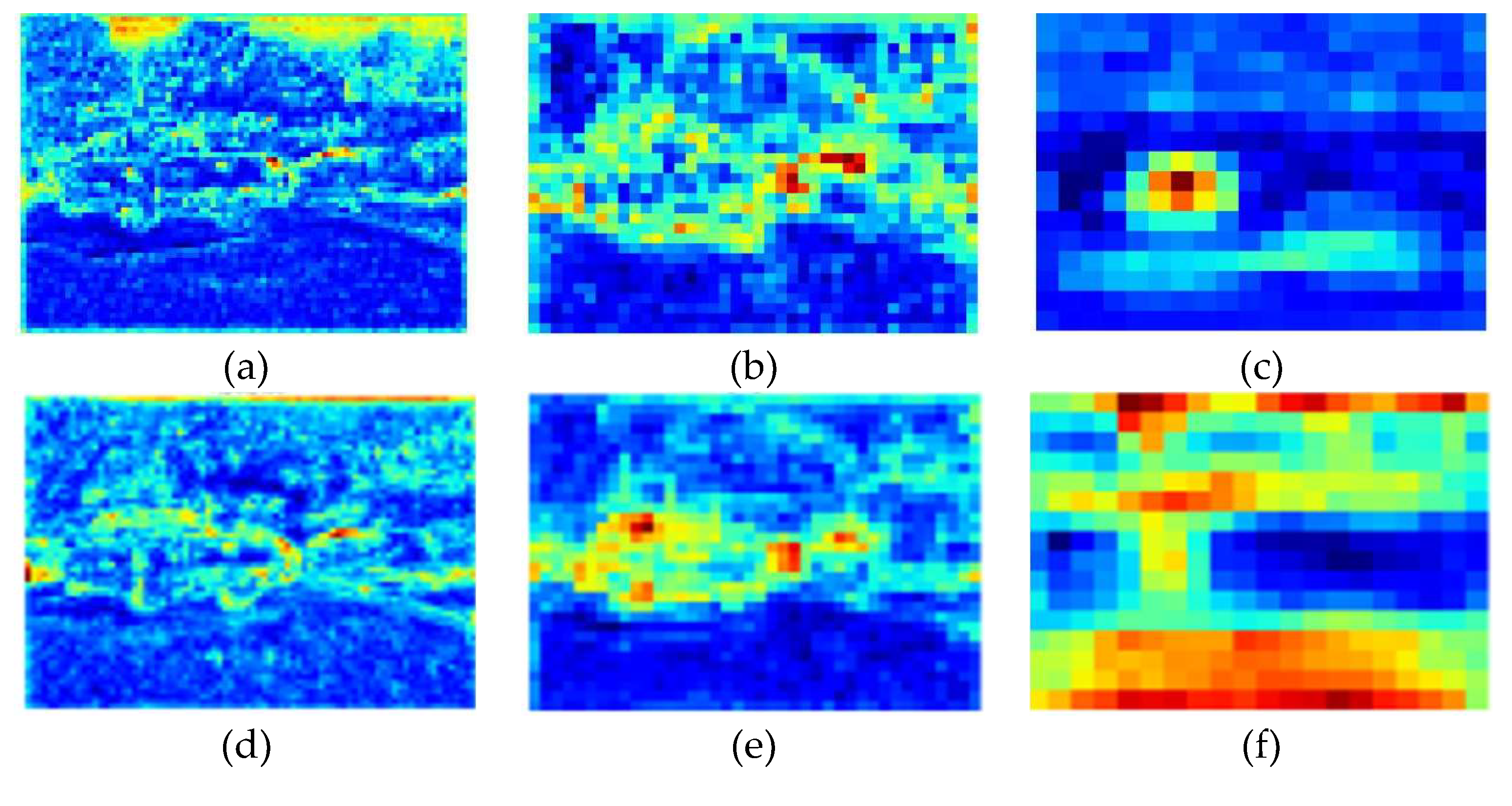

Comparison between simple addition fusion and innovative fusion strategies (SRFM+STPEM) on three feature scale layers. The upper row (a,b,c) presents traditional simple addition fusion at different scales: P3/8 high-resolution layer (a) shows dispersed activation with insufficient target-background differentiation; P4/16 medium-resolution layer (b) has some response to vehicle areas but with blurred boundaries; P5/32 low-resolution layer (c) only has rough response to the central vehicle. The lower row (d,e,f) shows feature maps of the innovative fusion strategy: P3/8 layer (d) provides clearer vehicle contour representation with precise edge localization; P4/16 layer (e) shows more concentrated target area activation; P5/32 layer (f) preserves richer scene semantic information while enhancing central target representation.

Figure 15.

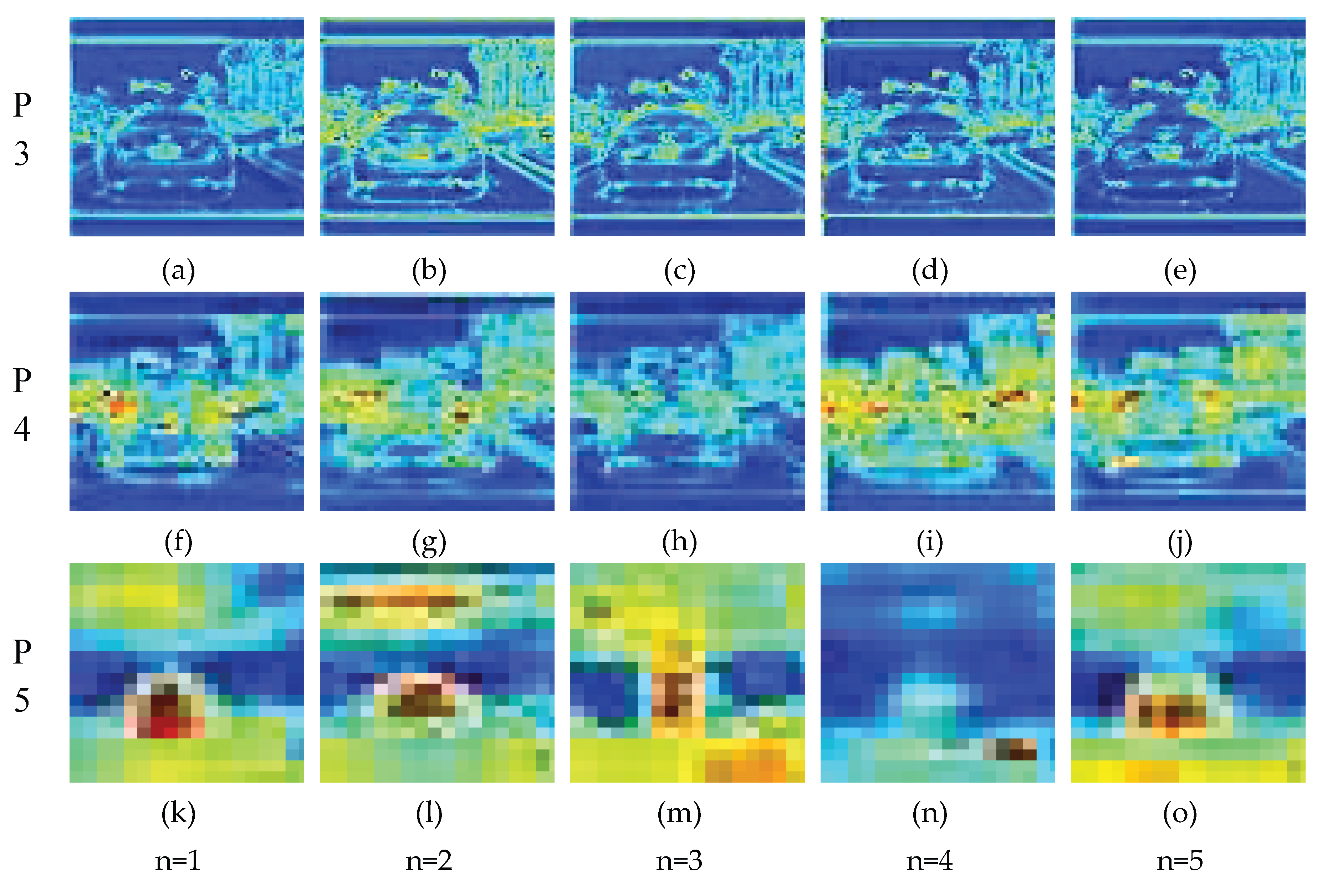

Impact of iteration counts (n=1 to n=5) in the recursive progressive fusion strategy on three feature scale layers of SDRFPT-Net. By comparing the evolution within the same row, changes in features with recursive depth can be observed; by comparing different rows, response characteristics at different scales can be understood. The P3 high-resolution layer (first row) shows feature representation gradually evolving from initial dispersed response (n=1) to more focused target contours (n=2,3), with clearer boundaries and stronger background suppression, but experiencing over-smoothing at n=4,5. The P4 medium-resolution layer (second row) shows optimal target-background differentiation at n=3, followed by feature response diffusion at n=4,5. The P5 low-resolution layer (third row) presents the most significant changes, achieving highly structured representation at n=3 that clearly distinguishes main scene elements, while showing obvious degradation at n=4 and n=5.

Table 1.

Performance comparison of SDRFPT-Net with state-of-the-art methods on the FLIR-aligned dataset. The table presents Precision (P), Recall (R), mean Average Precision at IoU threshold of 0.5 (mAP50), and mean Average Precision across IoU thresholds from 0.5 to 0.95 (mAP50:95). V-I indicates the fusion of visible and infrared modalities. The best results are highlighted in bold.

| Methods | Modality | P | R | mAP50 | mAP50:95 |
|---|---|---|---|---|---|
| YOLOv5 | Visible | 0.531 | 0.395 | 0.441 | 0.202 |
| YOLOv5 | Infrared | 0.625 | 0.468 | 0.539 | 0.272 |
| YOLOv8 | Visible | 0.532 | 0.396 | 0.448 | 0.218 |
| YOLOv8 | Infrared | 0.559 | 0.514 | 0.549 | 0.288 |
| YOLOv10 | Visible | 0.727 | 0.538 | 0.620 | 0.305 |
| YOLOv10 | Infrared | 0.773 | 0.618 | 0.727 | 0.424 |
| YOLOv10-add | V-I | 0.748 | 0.623 | 0.701 | 0.354 |
| CMA-Det | V-I | 0.812 | 0.468 | 0.518 | 0.237 |
| TFDet | V-I | 0.827 | 0.606 | 0.653 | 0.346 |
| CMAFF | V-I | 0.792 | 0.550 | 0.558 | 0.302 |
| BA-CAMF Net | V-I | 0.798 | 0.632 | 0.704 | 0.351 |
| SDRFPT-Net (ours) | V-I | **0.854** | **0.700** | **0.785** | **0.426** |
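
The metrics named in the Table 1 caption can be made concrete with a short sketch. It assumes axis-aligned boxes in `(x1, y1, x2, y2)` form and the common COCO-style convention of ten IoU thresholds in steps of 0.05 for mAP50:95; the caption gives only the 0.5 to 0.95 range, so the step size is an assumption. The counts passed to `precision_recall` are illustrative, not taken from the experiments.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# mAP50 uses the single threshold 0.5; mAP50:95 averages AP over
# 0.50, 0.55, ..., 0.95 (assumed step of 0.05).
IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]

prediction = (0, 0, 10, 10)
ground_truth = (0, 0, 9, 8)
overlap = iou(prediction, ground_truth)

# Number of thresholds at which this prediction still counts as a match.
matched_thresholds = sum(overlap >= t for t in IOU_THRESHOLDS)

precision, recall = precision_recall(tp=8, fp=2, fn=4)
```

A box that matches at IoU 0.5 but fails at stricter thresholds contributes to mAP50 and only partly to mAP50:95, which is why the second metric is more sensitive to localization accuracy.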

Table 2.

Performance comparison of SDRFPT-Net with state-of-the-art methods on the LLVIP dataset. The table presents Precision (P), Recall (R), mean Average Precision at IoU threshold of 0.5 (mAP50), and mean Average Precision across IoU thresholds from 0.5 to 0.95 (mAP50:95). V-I indicates the fusion of visible and infrared modalities. The best results are highlighted in bold.

| Methods | Modality | P | R | mAP50 | mAP50:95 |
|---|---|---|---|---|---|
| YOLOv5 | Visible | 0.906 | 0.820 | 0.895 | 0.504 |
| YOLOv5 | Infrared | 0.962 | 0.898 | 0.960 | 0.631 |
| YOLOv8 | Visible | 0.933 | 0.829 | 0.896 | 0.513 |
| YOLOv8 | Infrared | 0.956 | 0.901 | 0.961 | 0.645 |
| YOLOv10 | Visible | 0.914 | 0.833 | 0.892 | 0.512 |
| YOLOv10 | Infrared | 0.962 | 0.909 | 0.961 | 0.637 |
| YOLOv10-add | V-I | 0.961 | 0.893 | 0.957 | 0.628 |
| TFDet | V-I | 0.960 | 0.896 | 0.960 | 0.594 |
| CMAFF | V-I | 0.958 | 0.899 | 0.915 | 0.574 |
| BA-CAMF Net | V-I | 0.866 | 0.828 | 0.887 | 0.511 |
| SDRFPT-Net (ours) | V-I | **0.963** | **0.911** | **0.963** | **0.706** |

Table 3.

Ablation of the core modules. The table compares detection performance as SHPA, SRFM, and STPEM are added cumulatively. The best results are highlighted in bold.

| ID | SHPA | SRFM | STPEM | mAP50 | mAP50:95 |
|---|---|---|---|---|---|
| A1 | ✔ | | | 0.701 | 0.354 |
| A2 | ✔ | ✔ | | 0.775 | 0.373 |
| A3 | ✔ | ✔ | ✔ | **0.785** | **0.426** |
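
The step-by-step contribution of each module can be read off the rows A1 to A3 with simple differences. The sketch below only restates the values from the table above; the helper name `incremental_gains` is illustrative.

```python
# (ID, enabled modules, mAP50, mAP50:95), copied from rows A1-A3.
ABLATION = [
    ("A1", "SHPA", 0.701, 0.354),
    ("A2", "SHPA + SRFM", 0.775, 0.373),
    ("A3", "SHPA + SRFM + STPEM", 0.785, 0.426),
]


def incremental_gains(rows):
    """Difference in each metric between consecutive ablation rows."""
    gains = []
    for prev, cur in zip(rows, rows[1:]):
        gains.append((
            f"{prev[0]}->{cur[0]}",
            round(cur[2] - prev[2], 3),   # change in mAP50
            round(cur[3] - prev[3], 3),   # change in mAP50:95
        ))
    return gains


gains = incremental_gains(ABLATION)
for step, d_map50, d_map5095 in gains:
    print(f"{step}: mAP50 {d_map50:+.3f}, mAP50:95 {d_map5095:+.3f}")
```

On these numbers, adding SRFM accounts for most of the mAP50 gain (+0.074), while adding STPEM accounts for most of the mAP50:95 gain (+0.053).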

Table 4.

Impact of different attention combinations on detection performance. The table compares the effects of Self Attention, Cross-modal Attention, and Channel Attention in various combinations. The best results are highlighted in bold.

| ID | Self-attention | Cross-attention | Channel-attention | mAP50 | mAP50:95 |
|---|---|---|---|---|---|
| B1 | ✔ | | | 0.776 | 0.408 |
| B2 | | ✔ | | 0.749 | 0.372 |
| B3 | | | ✔ | 0.730 | 0.384 |
| B4 | ✔ | ✔ | | 0.774 | 0.424 |
| B5 | ✔ | | ✔ | 0.763 | 0.409 |
| B6 | | ✔ | ✔ | 0.729 | 0.362 |
| B7 | ✔ | ✔ | ✔ | **0.785** | **0.426** |

Table 5.

Ablation experiments on fusion positions. The table shows the impact of applying advanced fusion modules at different feature scales. P3/8, P4/16, and P5/32 represent feature maps at different scales, with numbers indicating the downsampling factor relative to the input image. The best results are highlighted in bold.

| ID | P3/8 | P4/16 | P5/32 | mAP50 | mAP50:95 |
|---|---|---|---|---|---|
| C1 | Add | Add | Add | 0.701 | 0.354 |
| C2 | SRFM+STPEM | Add | Add | 0.769 | 0.404 |
| C3 | SRFM+STPEM | SRFM+STPEM | Add | 0.776 | 0.410 |
| C4 | SRFM+STPEM | SRFM+STPEM | SRFM+STPEM | **0.785** | **0.426** |
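
The downsampling factors in the P3/8, P4/16, and P5/32 labels translate directly into feature map sizes. The sketch below assumes a 640×640 input, which is a common default for YOLO-style detectors but is not stated in this section, and a 64 px object width chosen purely for illustration.

```python
# Downsampling factor of each fusion position relative to the input image.
STRIDES = {"P3": 8, "P4": 16, "P5": 32}


def feature_map_size(input_size, stride):
    """Spatial size of a feature map, assuming the input divides evenly."""
    height, width = input_size
    return height // stride, width // stride


def cells_spanned(length_px, stride):
    """How many feature-map cells an object of the given pixel length covers."""
    return length_px / stride


INPUT_SIZE = (640, 640)  # assumed input resolution
sizes = {name: feature_map_size(INPUT_SIZE, s) for name, s in STRIDES.items()}
spans = {name: cells_spanned(64, s) for name, s in STRIDES.items()}

for name in STRIDES:
    print(f"{name}: map {sizes[name]}, a 64 px object spans {spans[name]} cells")
```

Under these assumptions a 64 px object covers 8 cells on P3 but only 2 on P5, which is one way to see why the high-resolution layer matters most for small targets.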

Table 6.

Impact of different iteration counts of the recursive progressive fusion strategy on model detection performance. The experiment compares performance with recursive depths from 1 to 5 iterations (D1-D5), evaluating detection accuracy using mAP50 and mAP50:95 metrics. The best results are highlighted in bold.

| ID | Iterations (n) | mAP50 | mAP50:95 |
|---|---|---|---|
| D1 | 1 | 0.769 | 0.395 |
| D2 | 2 | 0.783 | 0.418 |
| D3 | 3 | **0.785** | **0.426** |
| D4 | 4 | 0.783 | 0.400 |
| D5 | 5 | 0.761 | 0.417 |