4.1. Ablation Study

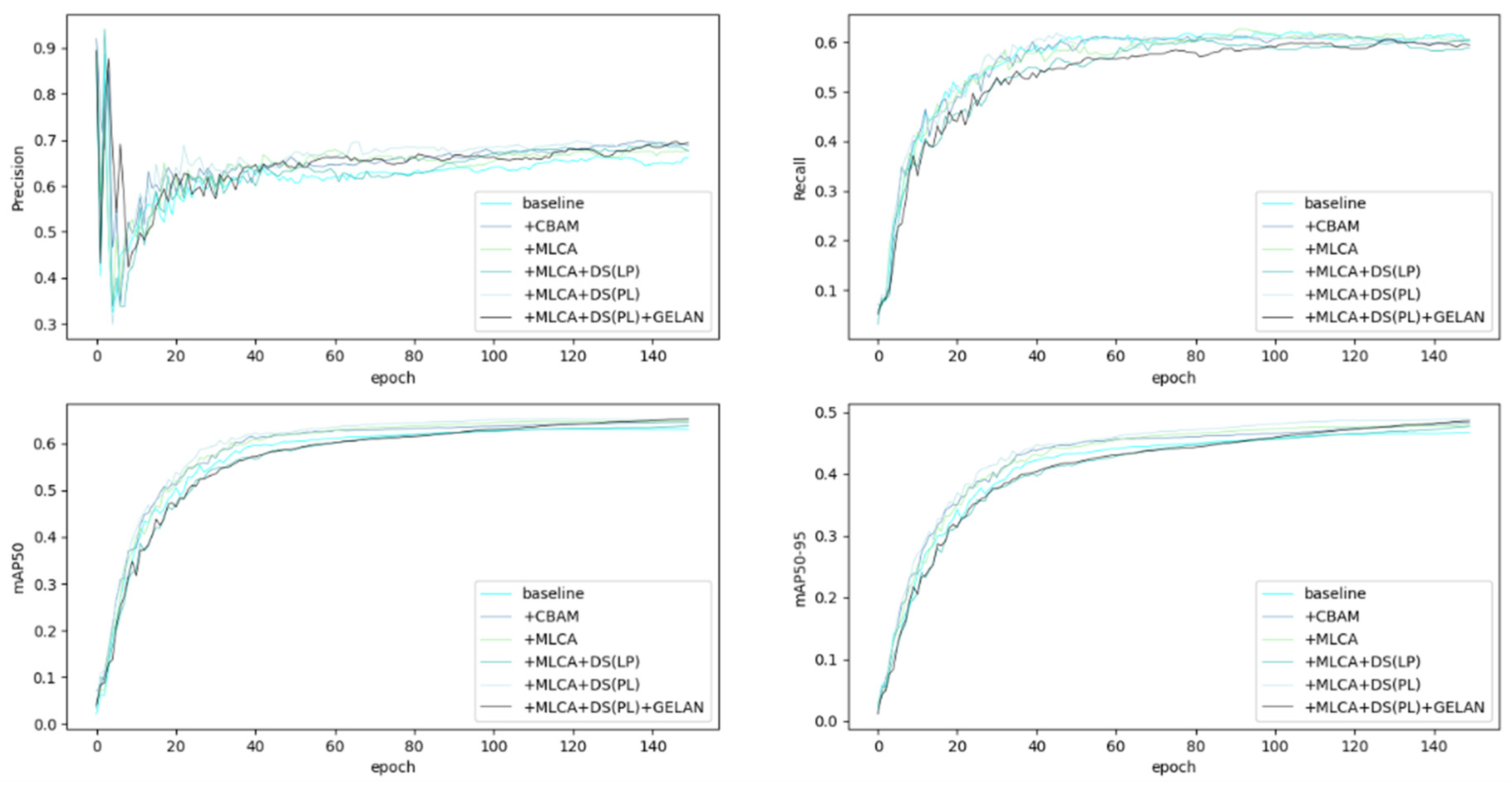

Figure 8 displays the training curves of multiple improved YOLOv8-obb models, showing similar convergence patterns where all metrics reach stable values after 150 epochs. Due to space constraints,

Figure 8 primarily illustrates the overall trends and approximate values of the metrics during training; therefore, this paper provides precise quantitative results in

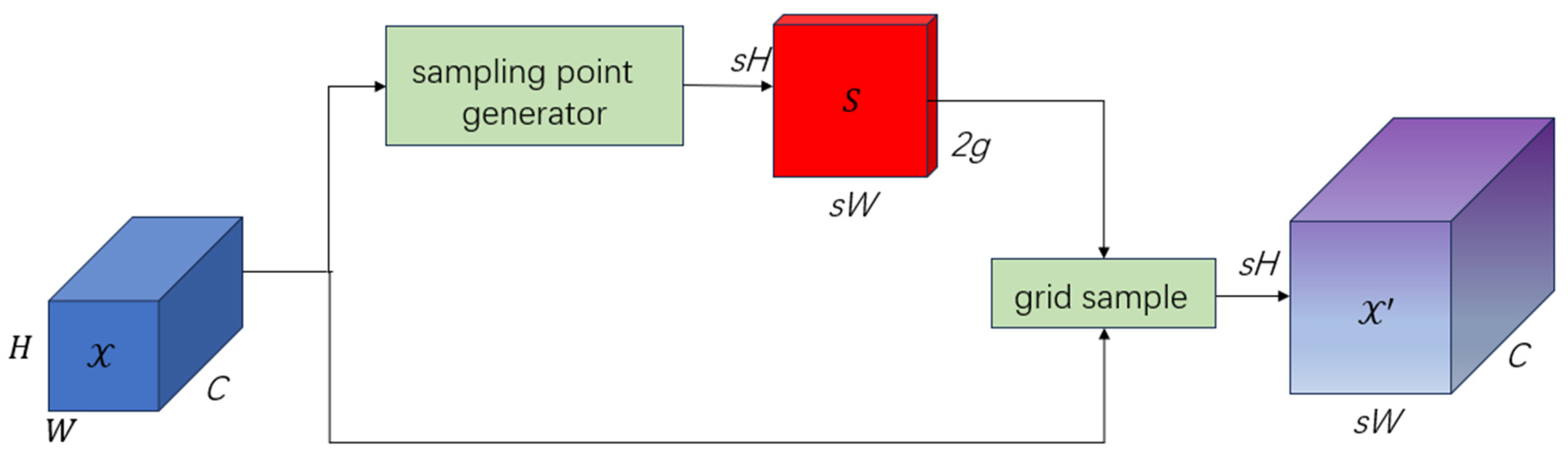

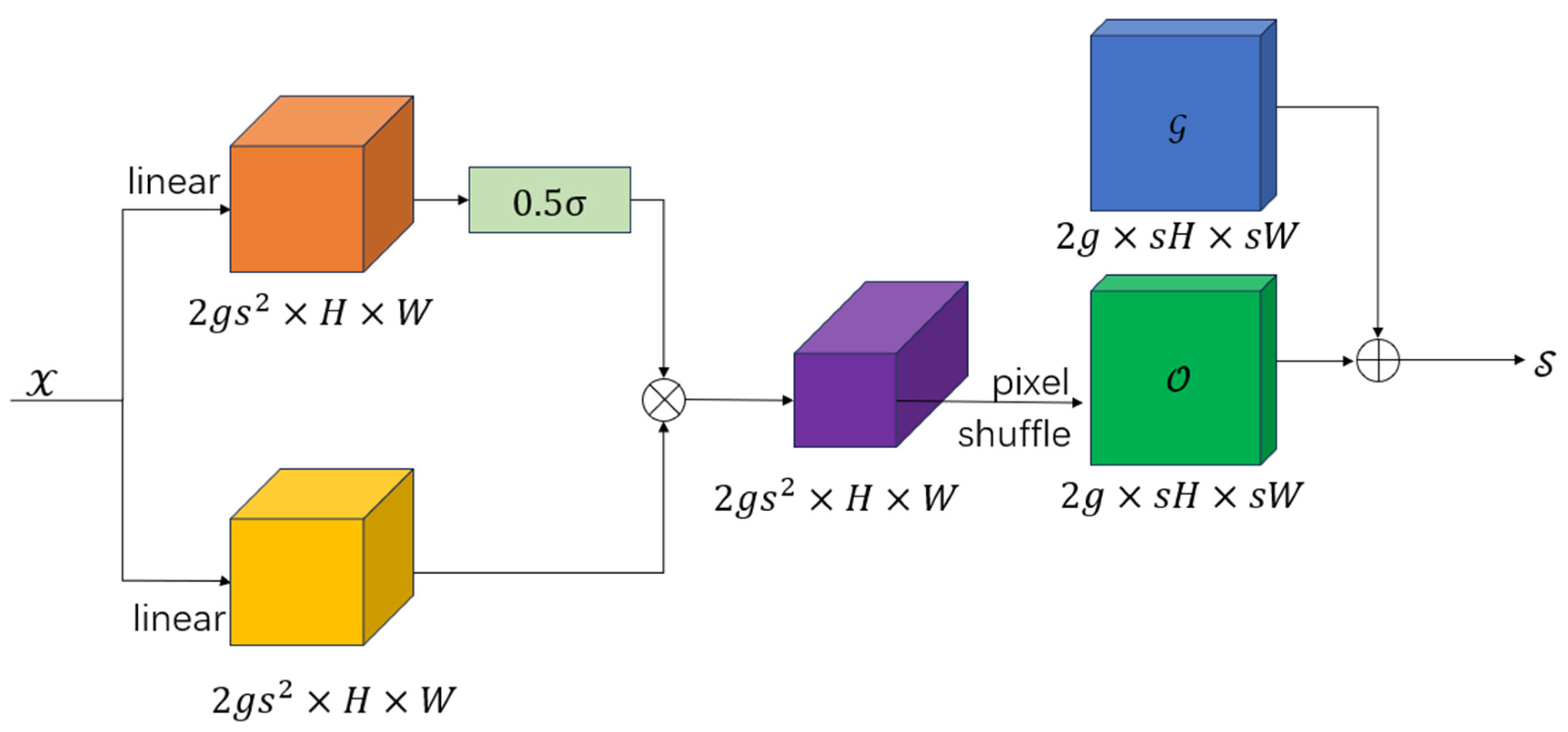

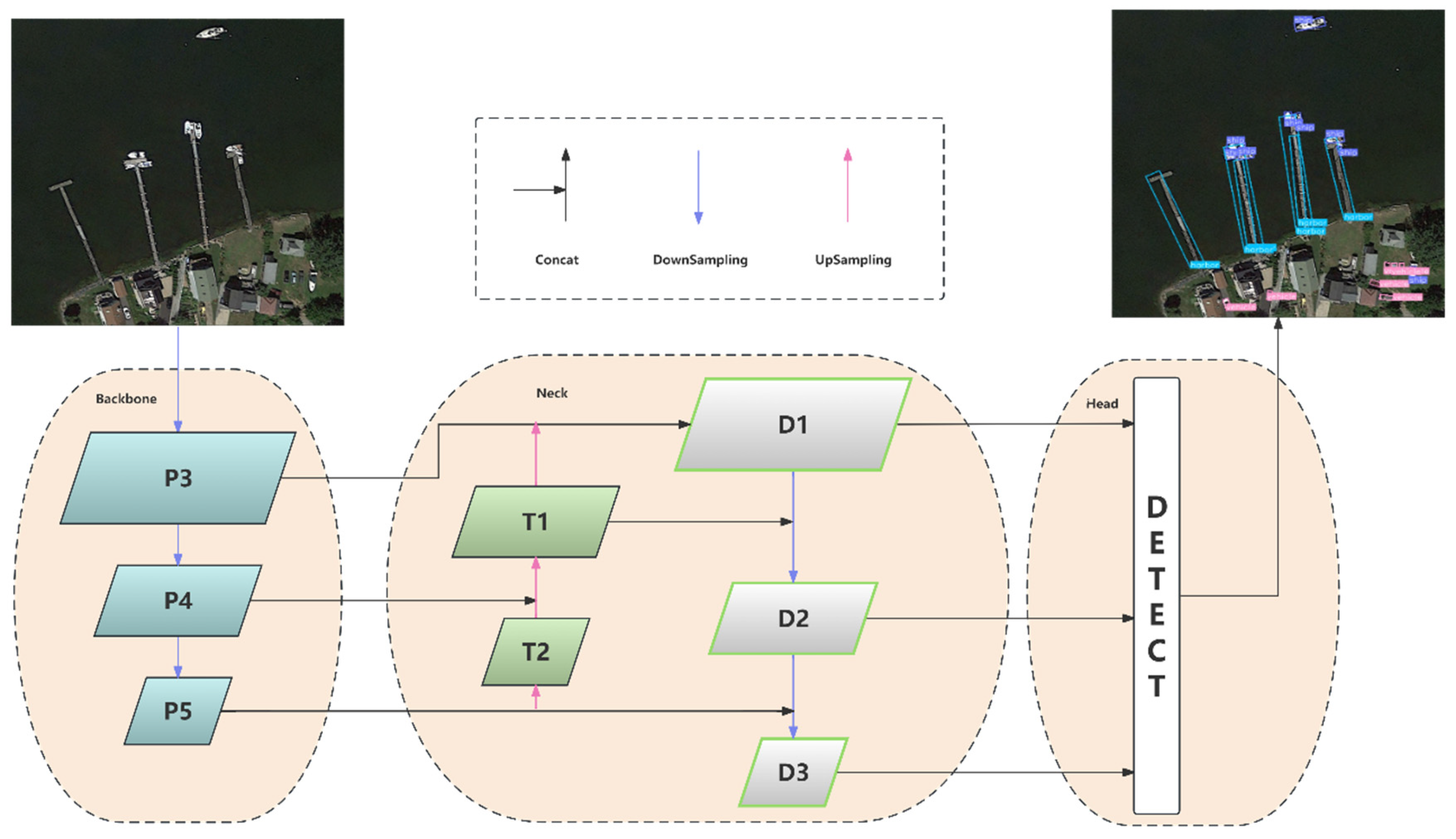

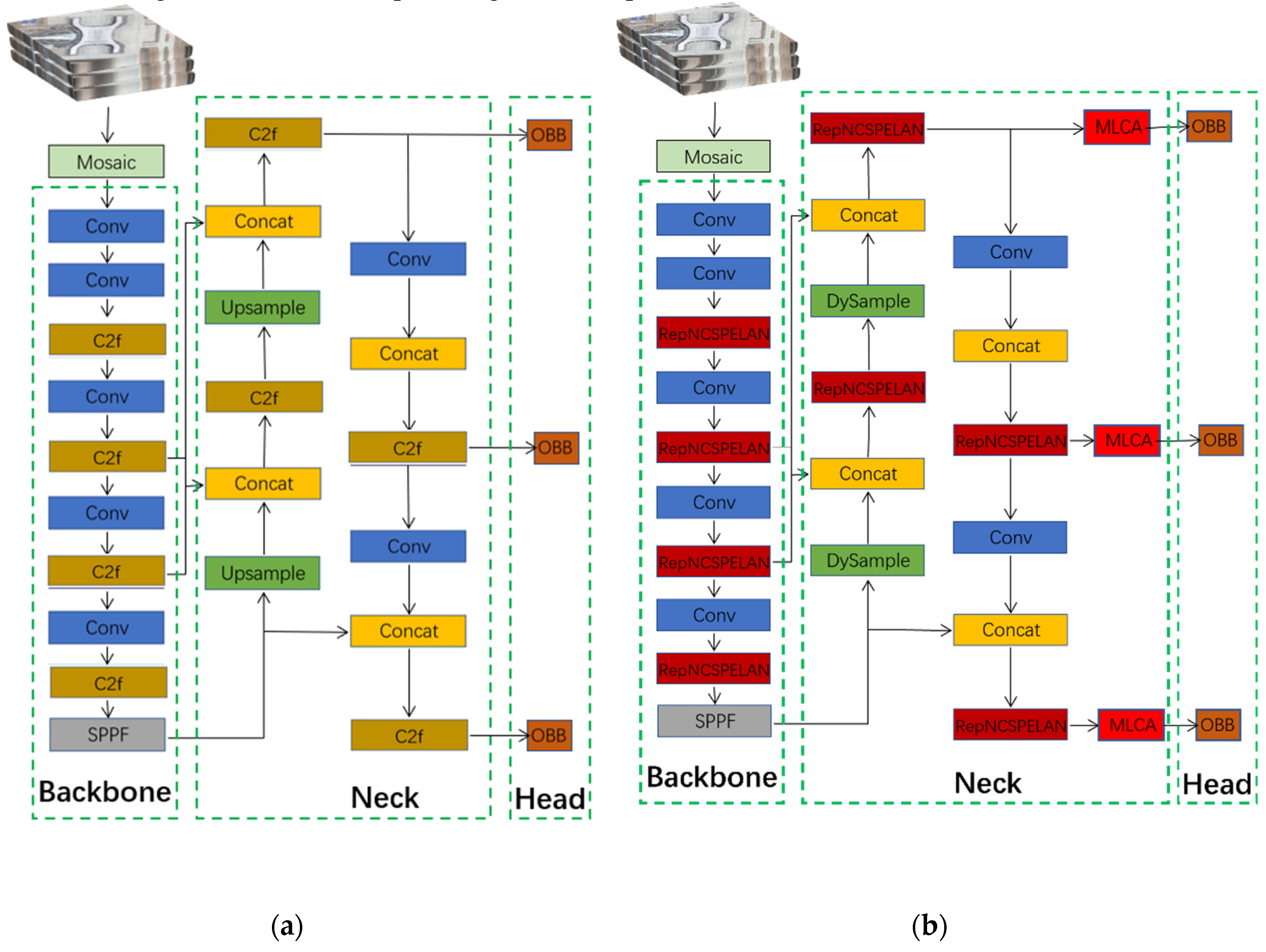

Table 6 to thoroughly evaluate each model’s performance. The table includes: MLCA (Mixed Local Channel Attention module added to YOLOv8-obb), DS (Dynamic Upsampling module), and GE (RepNCSPELAN layer). For comparison, we also implemented the CBAM attention module and two dynamic upsampling variants: LP (“linear + pixel shuffle” design) and PL (“pixel shuffle + linear” design), enabling systematic analysis of architectural modifications and their individual/combined effects on model optimization.
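To make the difference between the LP and PL orderings concrete, the sketch below counts the parameters of the 1×1 linear layer that generates sampling offsets under each design. It assumes a DySample-style formulation: in LP the layer maps C input channels to 2·g·s² offset channels before pixel shuffle, while in PL the parameter-free pixel shuffle comes first, so the layer maps only C/s² channels to 2·g channels. The function names, the group count `groups`, and the channel width of 256 are illustrative assumptions, not values taken from this paper.

```python
def lp_offset_params(channels: int, scale: int = 2, groups: int = 4) -> int:
    """LP: linear layer first (C -> 2*g*s^2), pixel shuffle afterwards."""
    out_channels = 2 * groups * scale ** 2
    return channels * out_channels + out_channels  # weights + bias


def pl_offset_params(channels: int, scale: int = 2, groups: int = 4) -> int:
    """PL: pixel shuffle first (C -> C/s^2), then linear layer to 2*g channels."""
    if channels % (scale ** 2) != 0:
        raise ValueError("channels must be divisible by scale**2")
    in_channels = channels // scale ** 2
    out_channels = 2 * groups
    return in_channels * out_channels + out_channels  # weights + bias


if __name__ == "__main__":
    width = 256  # hypothetical feature width, chosen only for illustration
    print("LP offset-layer parameters:", lp_offset_params(width))
    print("PL offset-layer parameters:", pl_offset_params(width))
```

Under these assumptions the PL ordering needs far fewer offset-layer parameters than LP, which is in line with PL being the lighter of the two variants compared here. The sketch says nothing about accuracy, which the ablation results address.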

The ablation experiments validate the effectiveness of the modules added to YOLOv8-obb. Incorporating the MLCA attention layer improves precision by 1.7%, recall by 0.2%, mAP50 by 1.8%, and mAP50-95 by 1.2%. This gain stems primarily from MLCA’s enhanced feature extraction capability, which improves multi-scale object detection, particularly for small targets, as the experimental data show. The CBAM module also improves performance, but the comparison shows MLCA to be more effective, which led to its selection for our final architecture.

Moreover, integrating the dynamic upsampling (PL) module improves detection accuracy and reduces the parameter count at the same time: parameters fall by 0.1M while mAP50 rises by 0.4%. By contrast, the structurally similar LP module degrades detection performance, which shows that the upsampling configuration must be chosen carefully in practice.

The RepNCSPELAN module yields relatively modest improvements across the evaluation metrics. The primary objective of replacing the C2f layer was to reduce the parameter count, making the network lighter while maintaining detection accuracy. The results show that substituting the more complex C2f layer not only preserves precision but raises it slightly, by 0.1%, which validates the new module. Taken together, these findings confirm that the improved algorithm meets both objectives: higher target detection accuracy (a 2.3% mAP50 improvement on the DOTA dataset) and a lightweight architecture (a 29% parameter reduction, from 3.1M to 2.2M). The RepNCSPELAN design balances computational efficiency against feature representation capability, which makes it suitable for deployment in resource-constrained edge computing scenarios while remaining competitive with conventional architectures.

Based on the ablation studies, this paper adopts the ‘+MLCA+DS(PL)+GE’ configuration (hereafter termed Improved YOLOv8-obb) for subsequent experiments. After 150 epochs it achieves 68.4% precision (P), 60.6% recall (R), 65.3% mAP50, and 48.2% mAP50-95, with detailed category-wise metrics documented in Table 6. These results reflect a balance between accuracy (2.3% mAP50 improvement over the baseline) and efficiency (29% parameter reduction, to 2.2M), obtained by combining Mixed Local Channel Attention for enhanced feature discrimination, dynamic upsampling (PL variant) for multi-scale adaptation, and RepNCSPELAN for lightweight feature fusion.

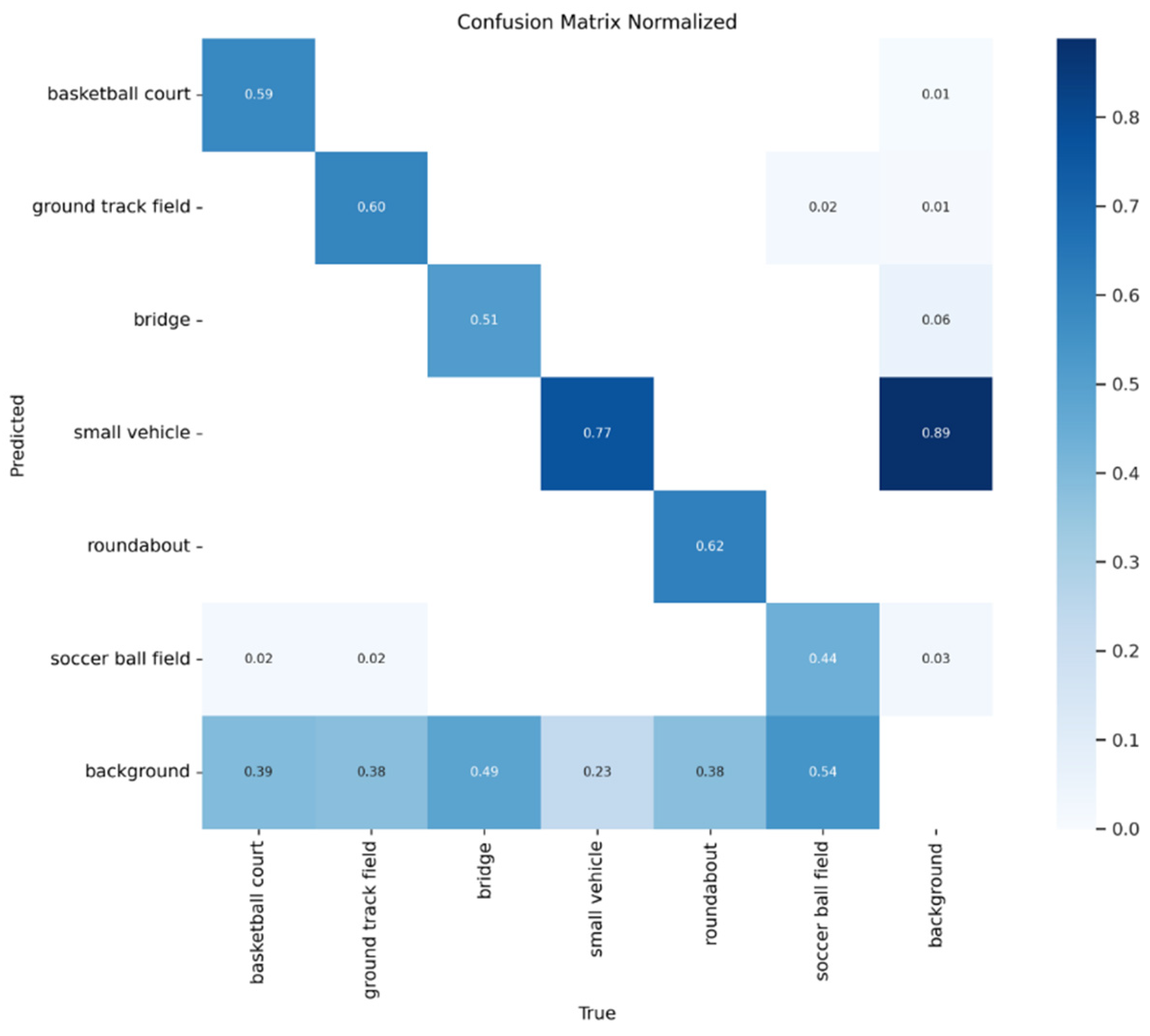

To further evaluate the detection performance, Figure 9 and Figure 10 present the confusion matrix and the normalized confusion matrix, respectively. The main diagonal elements give either the count or the proportion of correctly detected targets, and most categories exceed 0.5 detection accuracy. Off-diagonal elements represent false positives when read horizontally and false negatives when read vertically. A dominant main diagonal therefore signifies strong detection performance, whereas larger off-diagonal elements reflect higher misdetection and missed-detection rates. The distribution of values across the matrix thus shows the model’s classification capability for every category.
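The reading of the confusion matrix described above can be sketched in a few lines. The example assumes that rows index the predicted class and columns index the true class, so the rest of a row counts false positives and the rest of a column counts false negatives; this orientation and the 3×3 counts are assumptions made for illustration, not data from Figure 9.

```python
def normalize_columns(matrix):
    """Divide each column by its sum so entries become proportions per true class."""
    n = len(matrix)
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]
    return [
        [matrix[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n)]
        for r in range(n)
    ]


def per_class_errors(matrix):
    """Return (TP, FP, FN) per class for a rows=predicted, columns=true matrix."""
    n = len(matrix)
    stats = []
    for k in range(n):
        tp = matrix[k][k]
        fp = sum(matrix[k][c] for c in range(n)) - tp  # rest of row k
        fn = sum(matrix[r][k] for r in range(n)) - tp  # rest of column k
        stats.append((tp, fp, fn))
    return stats


if __name__ == "__main__":
    counts = [  # hypothetical counts for three classes
        [8, 1, 0],
        [2, 6, 1],
        [0, 3, 9],
    ]
    for row in normalize_columns(counts):
        print([round(v, 2) for v in row])
    print(per_class_errors(counts))
```

After column normalization, each diagonal entry is the proportion of that class's true instances that were detected correctly, which is the quantity compared against 0.5 in the text.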

The results demonstrate that the small vehicle category, which has the largest sample size, achieves notably higher overall accuracy after training. Additionally, the roundabout and ground track field categories exhibit relatively strong detection performance. Although the bridge and soccer ball field categories show comparatively lower accuracy rates, analysis of Table 3 reveals that their performance still improves over the baseline YOLOv8-obb model.

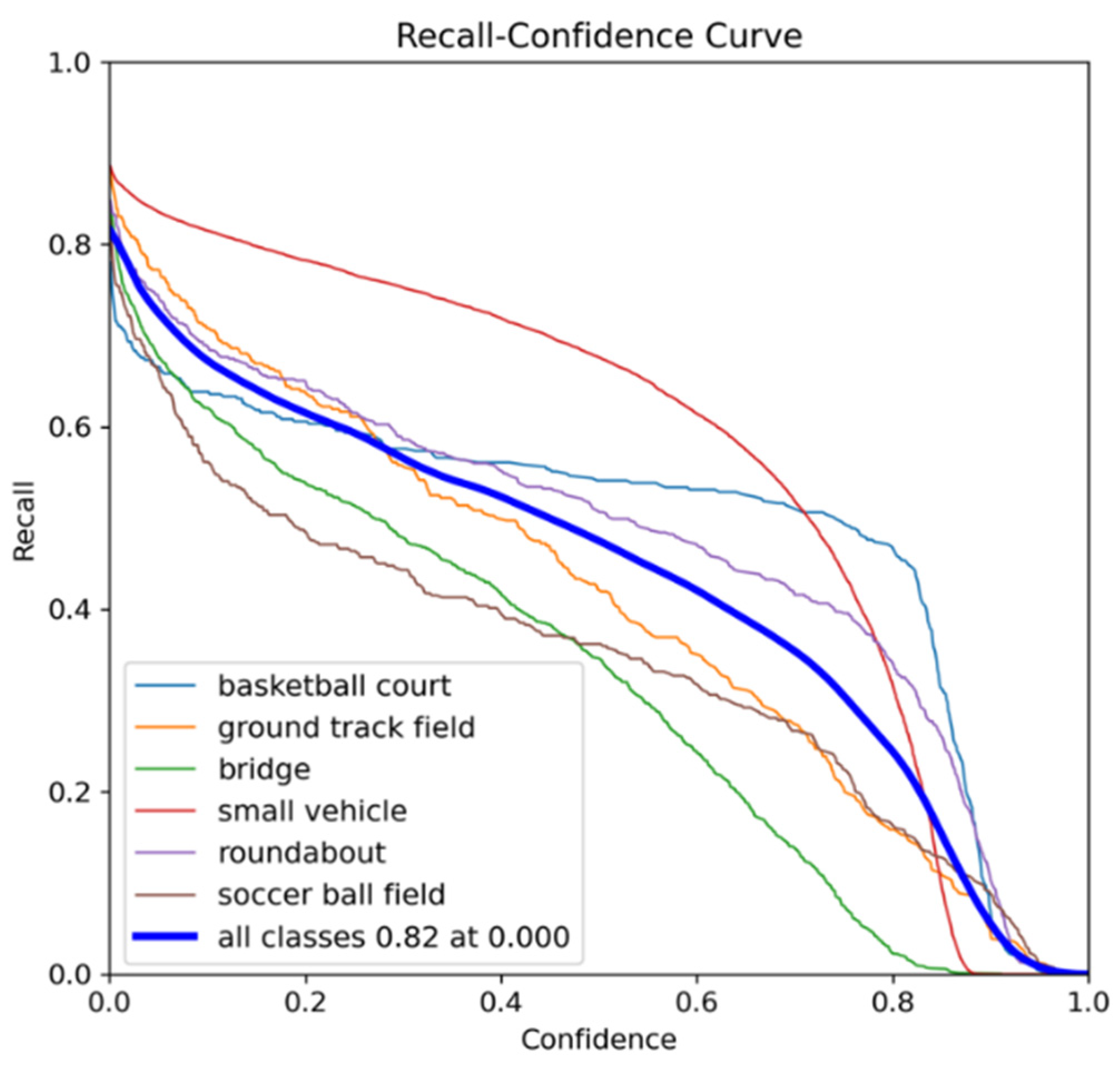

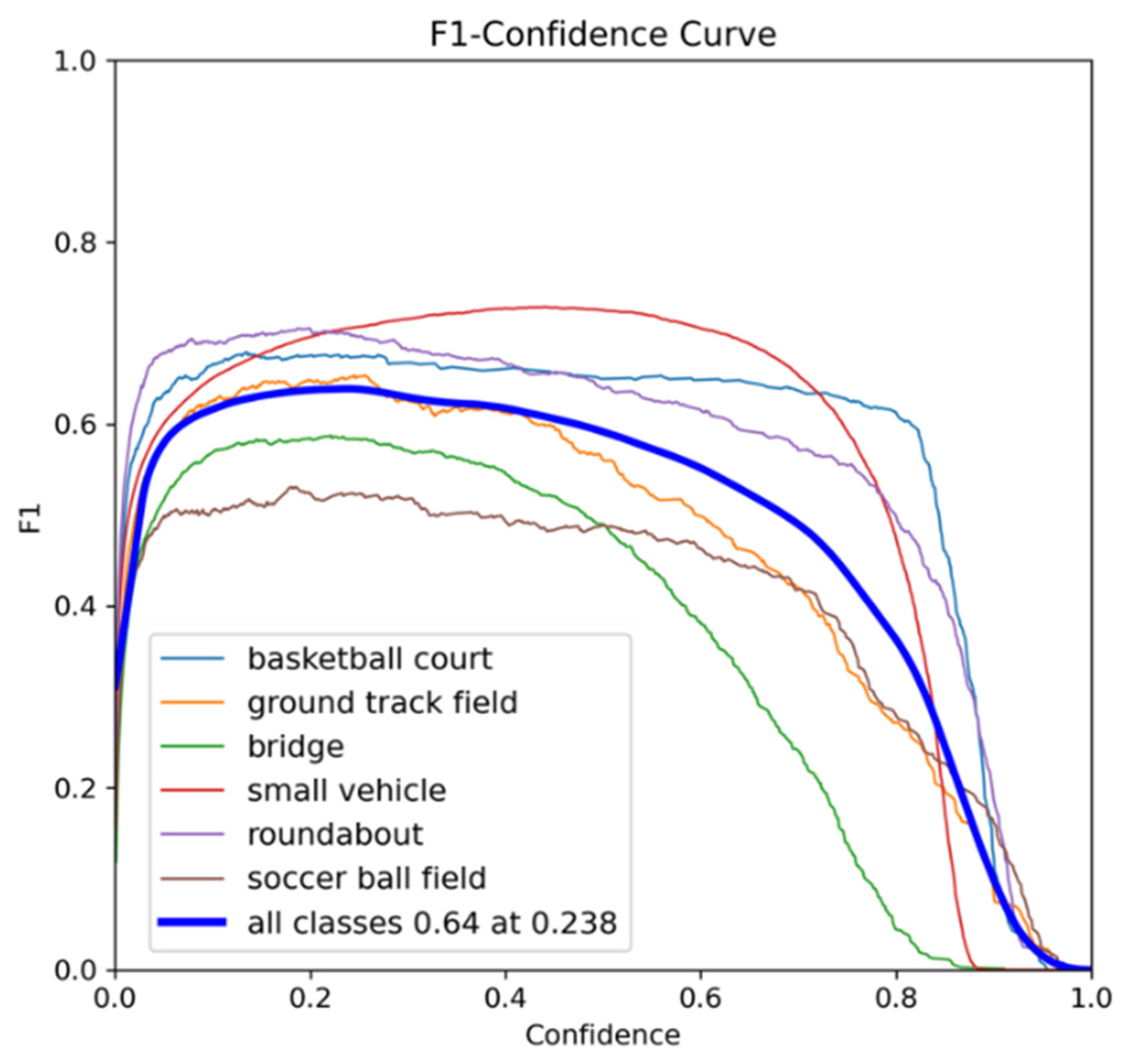

To further evaluate the detection capability of the improved YOLOv8-obb across different target categories, Figure 11, Figure 12 and Figure 13 present evaluation metric curves plotted against confidence scores, and Figure 14 presents the precision-recall curve.

Figure 11 shows the Precision-Confidence Curve, illustrating how precision varies across different confidence thresholds. The curve demonstrates higher precision at higher confidence levels, indicating fewer false positives (FP) in high-confidence predictions. While precision typically increases with confidence, the basketball court category shows an unusual precision drop at high confidence values.

Figure 12 presents the Recall-Confidence Curve, displaying recall rate changes at different thresholds. The curve reveals the fundamental trade-off: lower confidence thresholds achieve higher recall but lower precision, while higher thresholds result in lower recall but higher precision.
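The trade-off described for the precision and recall curves can be reproduced with a small threshold sweep. The sketch below assumes each detection has already been matched to the ground truth and is represented as a `(confidence, is_true_positive)` pair; the detection list, the ground-truth count, and the convention of reporting precision 1.0 when nothing is kept are illustrative assumptions.

```python
def precision_recall_at(detections, num_ground_truth, threshold):
    """Precision and recall when only detections with confidence >= threshold are kept."""
    kept = [is_tp for conf, is_tp in detections if conf >= threshold]
    tp = sum(kept)
    precision = tp / len(kept) if kept else 1.0  # assumed convention for an empty set
    recall = tp / num_ground_truth if num_ground_truth else 0.0
    return precision, recall


if __name__ == "__main__":
    detections = [  # hypothetical matched detections
        (0.95, True), (0.90, True), (0.80, True), (0.70, False),
        (0.55, True), (0.40, False), (0.30, False), (0.20, False),
    ]
    for t in (0.25, 0.50, 0.85):
        p, r = precision_recall_at(detections, num_ground_truth=5, threshold=t)
        print(f"threshold={t:.2f}  precision={p:.3f}  recall={r:.3f}")
```

In this toy data, raising the threshold from 0.25 to 0.85 lifts precision while recall falls, which is the pattern the two curves show.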

Figure 13 displays the F1-Confidence Curve, which evaluates the model’s balanced performance by plotting F1-score (the harmonic mean of precision and recall) against confidence thresholds. The peak of this curve corresponds to the confidence threshold that best balances precision and recall. This single-threshold optimum complements the 65.3% mAP50 obtained in our experiments, which summarizes precision over all recall levels.
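As a worked illustration of how the peak of an F1-confidence curve is located, the sketch below sweeps a set of thresholds and keeps the one with the highest F1. Detections are again assumed to be pre-matched `(confidence, is_true_positive)` pairs; the data and the threshold grid are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def best_f1_threshold(detections, num_ground_truth, thresholds):
    """Return (best_f1, threshold) over the given candidate thresholds."""
    best_score, best_threshold = 0.0, None
    for t in thresholds:
        kept = [is_tp for conf, is_tp in detections if conf >= t]
        tp = sum(kept)
        precision = tp / len(kept) if kept else 0.0
        recall = tp / num_ground_truth
        score = f1_score(precision, recall)
        if score > best_score:
            best_score, best_threshold = score, t
    return best_score, best_threshold


if __name__ == "__main__":
    detections = [  # hypothetical matched detections
        (0.95, True), (0.90, True), (0.80, True), (0.70, False),
        (0.55, True), (0.40, False), (0.30, False), (0.20, False),
    ]
    grid = [0.1, 0.3, 0.5, 0.6, 0.75, 0.85]
    score, threshold = best_f1_threshold(detections, 5, grid)
    print(f"best F1 = {score:.3f} at confidence threshold {threshold}")
```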

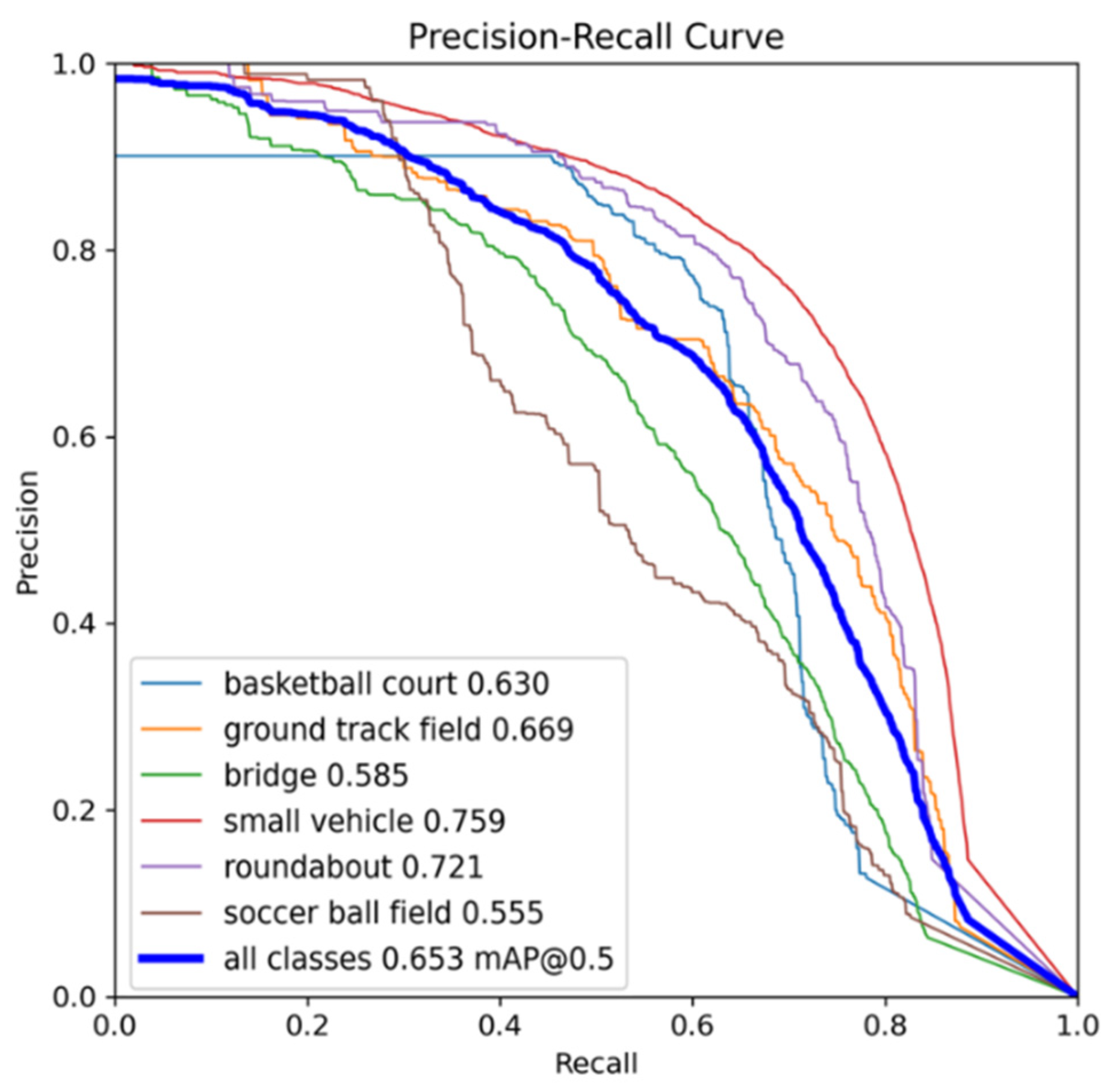

Figure 14 illustrates the Precision-Recall (PR) Curve, with recall on the x-axis and precision on the y-axis. The area under this curve (AP) represents average precision. Key observations include:

1) Curves closer to the upper-right corner indicate better performance

2) Strong convexity shows graceful precision decline as recall increases

3) Curves that stay low and flat suggest performance close to random guessing

Notably, all categories except soccer ball field show the desirable upper-right convexity, confirming the improved YOLOv8n-obb’s ability to balance precision and recall. Furthermore, the separation between category curves reflects detection difficulty. For example, the relatively flat curves for bridge and soccer ball field show that these categories are challenging for detection algorithms.
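The relation between the PR curve and AP can be made concrete with a short computation. The sketch below uses all-point interpolation (a monotone precision envelope followed by summing rectangle areas), which is one common convention and not necessarily the exact procedure used by the toolkit behind Figure 14. It assumes detections are pre-matched `(confidence, is_true_positive)` pairs, for example at an IoU threshold of 0.5; averaging the result over categories would then give mAP50.

```python
def average_precision(detections, num_ground_truth):
    """Area under the precision-recall curve using all-point interpolation."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    recalls, precisions = [], []
    tp = 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        tp += is_tp
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / rank)
    # Make precision non-increasing in recall (the interpolation envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum precision-weighted recall increments.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    toy = [(0.9, True), (0.8, False), (0.7, True)]  # hypothetical detections
    print(f"AP = {average_precision(toy, num_ground_truth=2):.4f}")
```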

4.2. Comparative Experiment

To verify the effectiveness and efficiency of our improved algorithm, we conducted comparative experiments with several classical models, including YOLOv5, YOLOv6, YOLOv8 and YOLOv8n-obb, using four key metrics: parameter count, FLOPs (floating-point operations), mAP (mean average precision), and model size. YOLO models come in five size levels (n, s, m, l, and x), and the experiments use the ‘nano’ (n) variants. For instance, YOLOv8n-obb contains only about 3.2M parameters, YOLOv8s-obb approximately 11.2M, and the largest, YOLOv8x-obb, more than 60M. Although models with more parameters typically achieve higher detection accuracy, they require significantly longer training times. Given the lightweight requirements, we therefore selected only the n-scale models for comparative testing; the experimental results are detailed in Table 8.

Experimental results demonstrate that the improved YOLOv8n-obb algorithm outperforms classical detectors (YOLOv5n, YOLOv6n, YOLOv8, and the original YOLOv8n-obb) in both model size and accuracy. The enhanced algorithm achieves 65.3% mAP50, a 2.3% improvement over the original YOLOv8n-obb and significantly higher than the other detectors. The additional parameters introduced by the OBB format substantially improve how closely bounding boxes fit their targets. Building on this advantage of the OBB data format, our improved algorithm further boosts detection accuracy by incorporating attention mechanisms, while the attention layers’ impact on model size remains negligible due to the model’s lightweight nature. Moreover, the enhanced YOLOv8n-obb improves on its original version in parameter count, FLOPs, and model size: parameters fall from 3.1M to 2.2M (a 29% decrease that significantly optimizes the network architecture), FLOPs from 8.5G to 6.2G, and model size from 6.5MB to 4.9MB. Despite the substantial accuracy improvement, the final model is even smaller than YOLOv5n, satisfying the lightweight requirements of practical deployment scenarios.
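The relative savings implied by these figures follow from simple arithmetic. The short check below uses only the before and after values quoted in the text; the helper name `relative_reduction` is introduced here for illustration.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100.0


if __name__ == "__main__":
    reported = {  # (original YOLOv8n-obb, improved model), as quoted in the text
        "parameters (M)": (3.1, 2.2),
        "FLOPs (G)": (8.5, 6.2),
        "model size (MB)": (6.5, 4.9),
    }
    for name, (before, after) in reported.items():
        print(f"{name}: {relative_reduction(before, after):.1f}% lower")
```

This gives roughly 29.0% for parameters, 27.1% for FLOPs, and 24.6% for model size.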

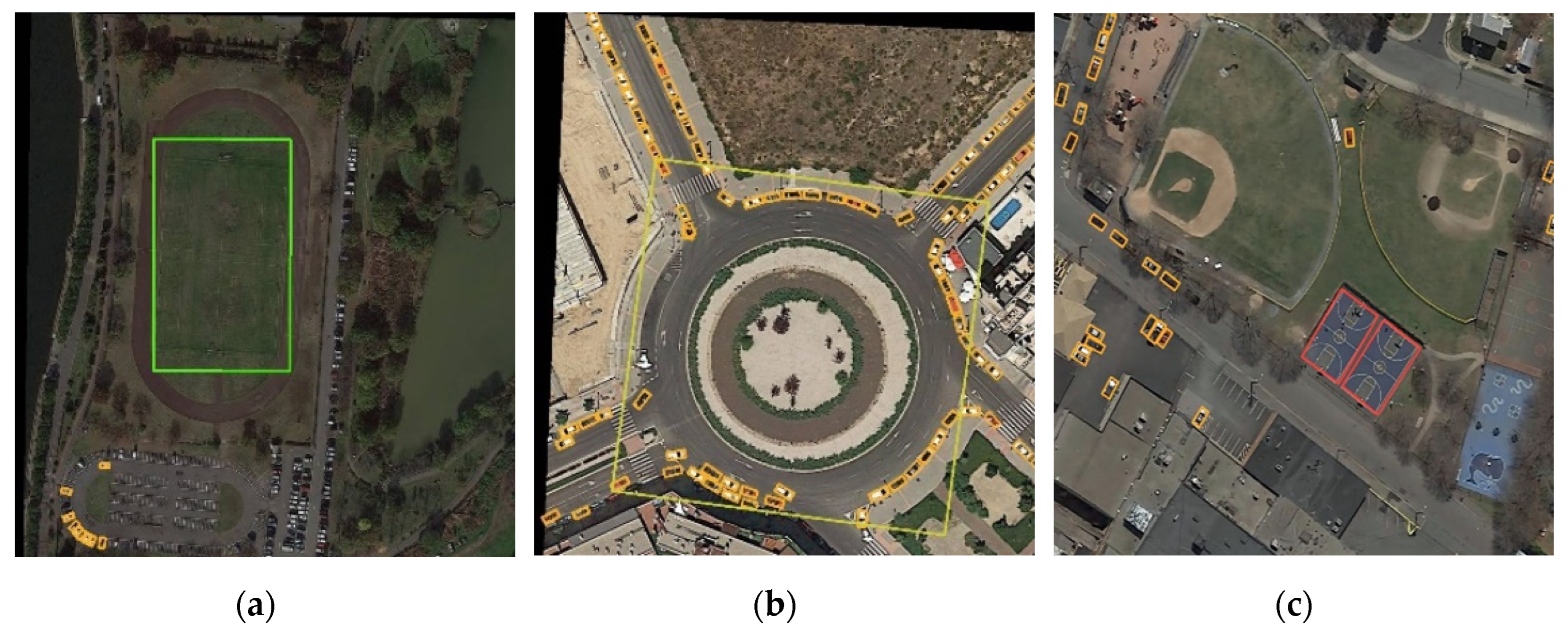

Figure 15 demonstrates the visual detection results of our enhanced YOLOv8-obb algorithm on the DOTA dataset. The visualization reveals that despite the dataset containing numerous small-scale instances (such as those in the small vehicle category) with complex background interference, our detector maintains consistently high detection accuracy.

Figure 15(a) exhibits a representative case of multi-scale characteristics in remote sensing object detection, simultaneously displaying both small vehicle targets and larger sports field targets, highlighting substantial scale variations.

Figure 15(b) presents a challenging scenario of nested and stacked objects, where a large roundabout instance encompasses multiple small vehicle targets, posing significant detection difficulties.

Figure 15(c) clearly illustrates that the basketball court possesses inherent rotation angles, necessitating rotated bounding boxes for optimal target fitting.
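To show why a rotation angle lets a box fit a tilted target, the sketch below converts an oriented box given as centre, width, height, and angle into its four corner points. The `(cx, cy, w, h, theta)` parameterization with `theta` in radians is assumed for illustration; annotation formats and angle conventions differ between datasets and toolkits.

```python
import math


def obb_corners(cx, cy, w, h, theta):
    """Corner points of a box centred at (cx, cy), rotated by theta radians."""
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_extents = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each corner offset about the centre, then translate.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in half_extents
    ]


if __name__ == "__main__":
    # Hypothetical court-like box: 28 x 15 units, tilted by 30 degrees.
    for x, y in obb_corners(100.0, 50.0, 28.0, 15.0, math.radians(30)):
        print(f"({x:.2f}, {y:.2f})")
```

With `theta = 0` the function reduces to an ordinary horizontal box, so the angle is the only extra parameter needed to follow the target's orientation.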

Our improved YOLOv8n-obb algorithm effectively addresses these challenges in remote sensing object detection, successfully completing detection tasks with high confidence scores, thereby demonstrating its superior performance in complex scenarios. The visualization comprehensively validates the algorithm’s capabilities in handling multi-scale objects, nested targets, and rotated instances while maintaining robust detection performance.

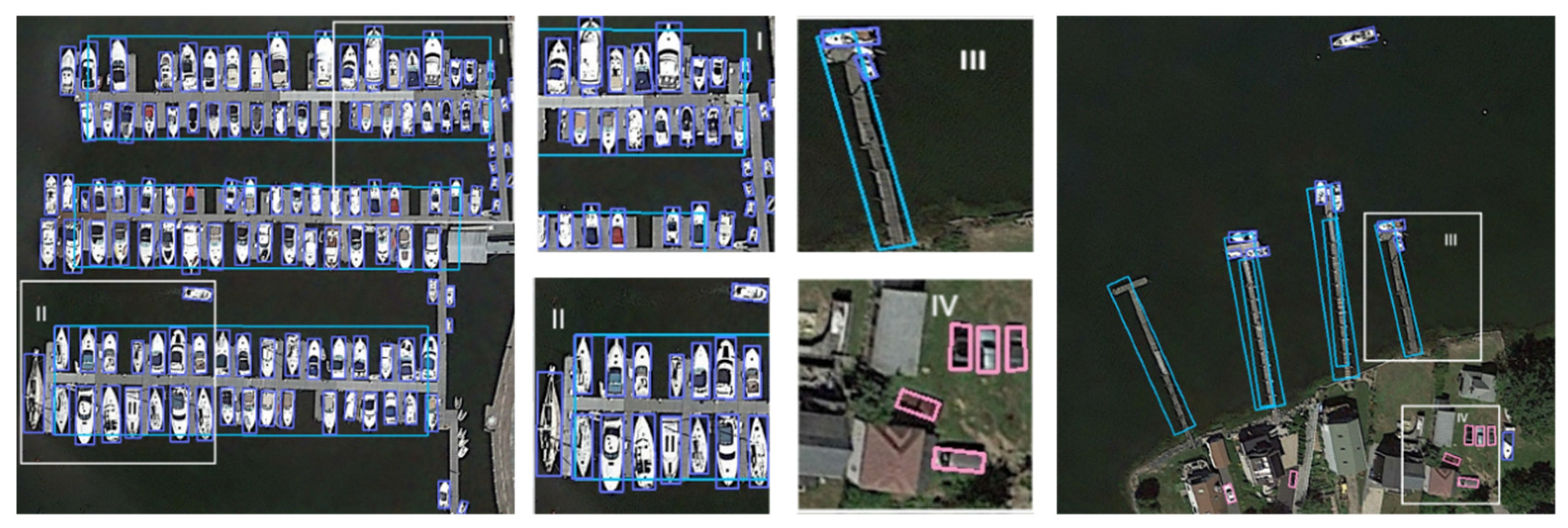

Furthermore, we validated our algorithm on the DIOR dataset, which comprises 23,463 images and 192,472 object instances spanning 20 distinct categories. The dataset provides both horizontal bounding box (HBB) and oriented bounding box (OBB) annotation formats. Experimental results of our improved YOLOv8-obb on the DIOR dataset are presented in Figure 16, with corresponding accuracy metrics detailed in Table 9.

Table 9 presents the experimental results comparing the original YOLOv8-obb and our improved algorithm on the DIOR dataset, with 3,488 labeled images per category. The results show our enhanced algorithm achieves higher mAP50 with fewer parameters than the original YOLOv8-obb.

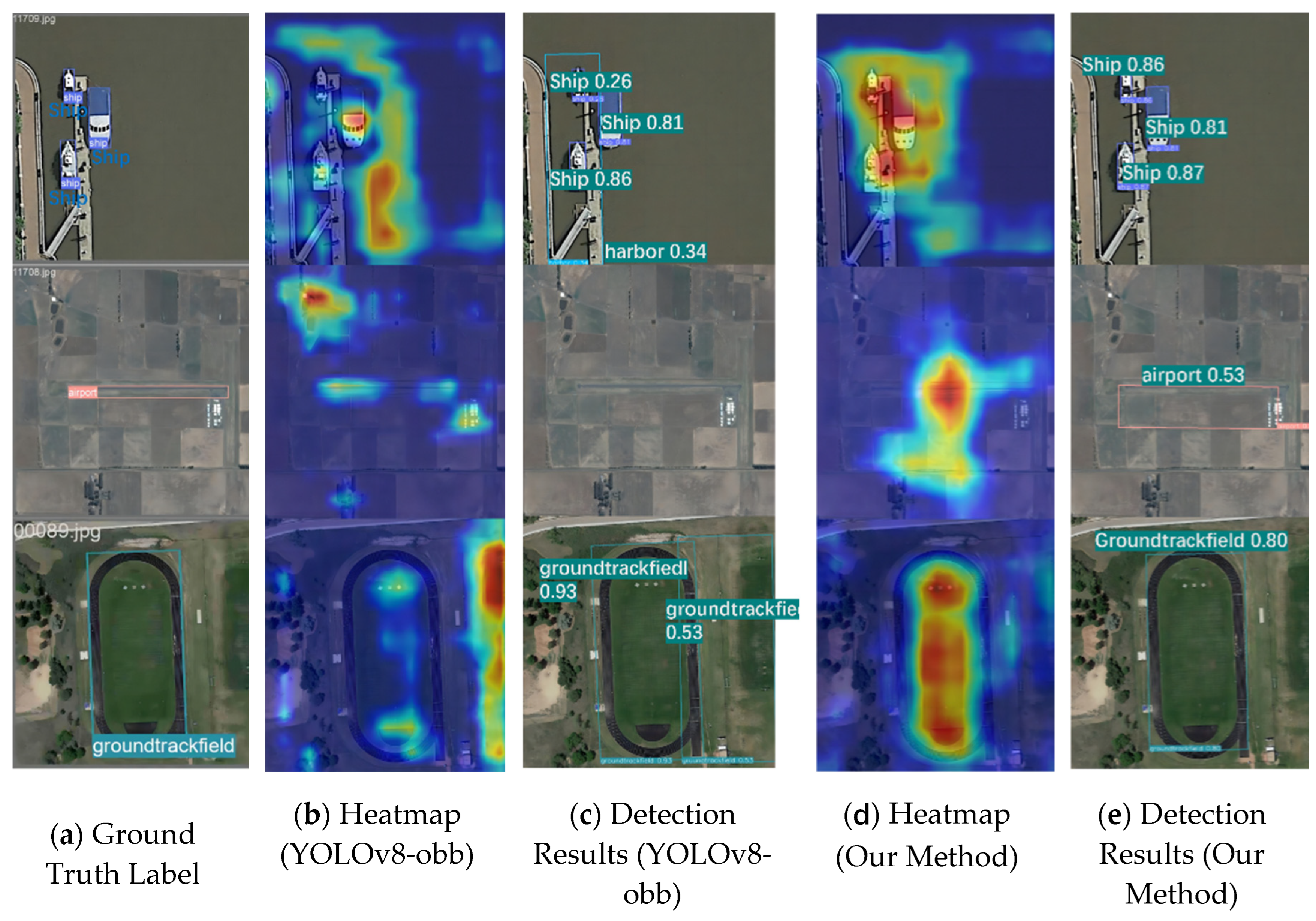

Figure 17 displays detection heatmaps that demonstrate our improved algorithm's more focused attention on target features like airports and runways, compared to the original version's tendency to attend to less relevant areas. These visualizations provide qualitative evidence supporting the quantitative improvements shown in Table 9.