To verify the effectiveness of each module in SDRFPT-Net, we conducted systematic ablation experiments on the FLIR-aligned dataset. These experiments evaluate the contribution of each component to the overall performance of the network and validate the proposed design.

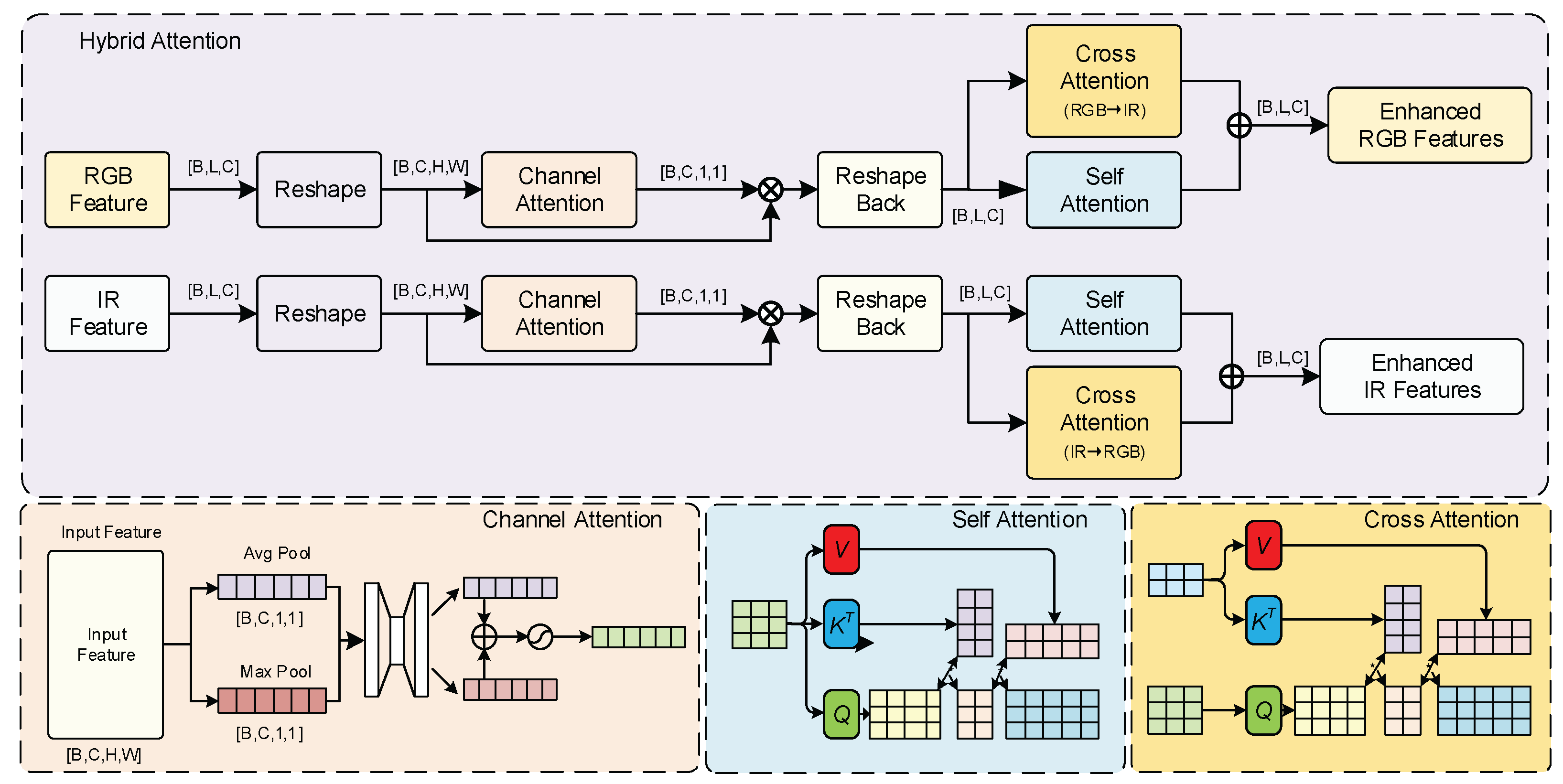

4.4.2. Ablation Experiments on Hybrid Attention Mechanism

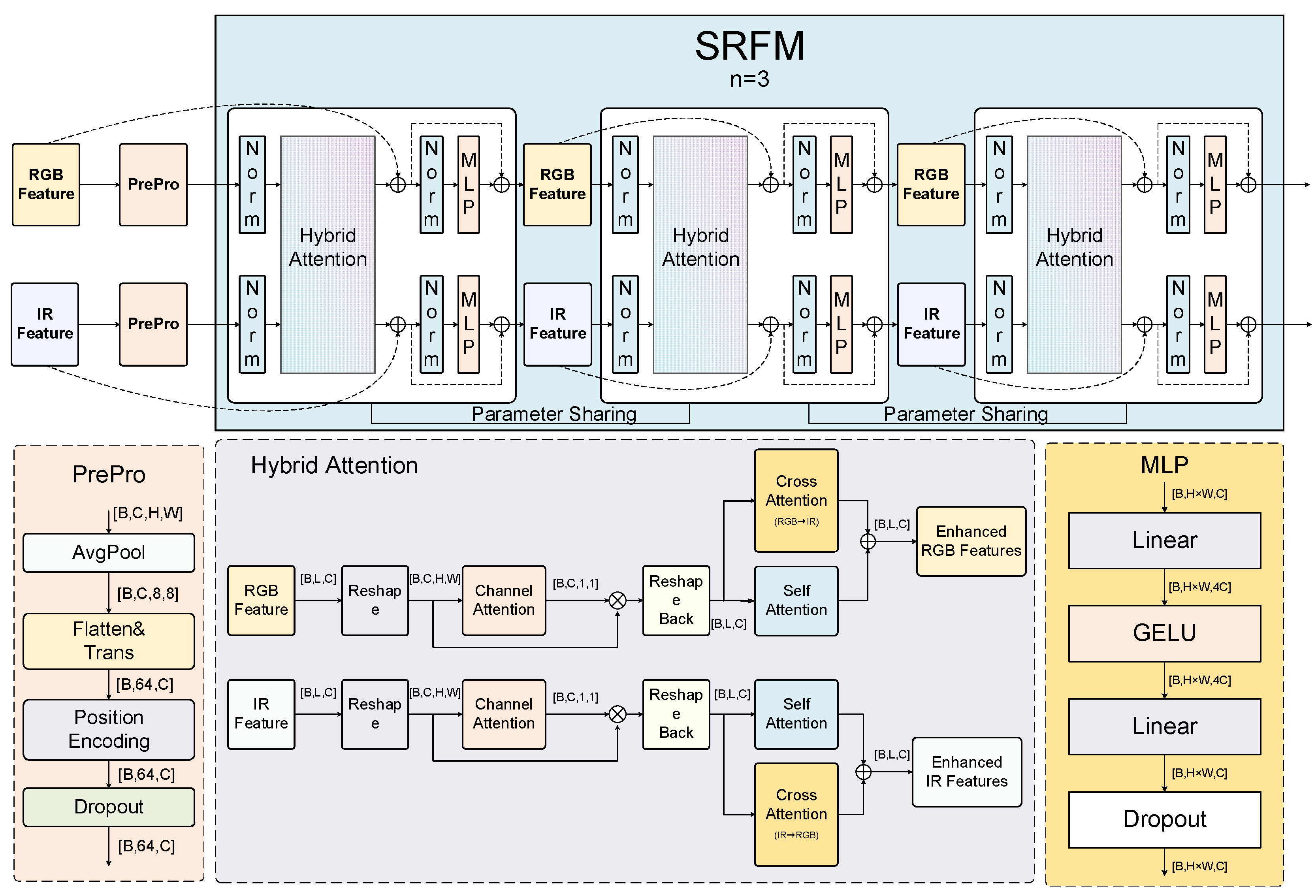

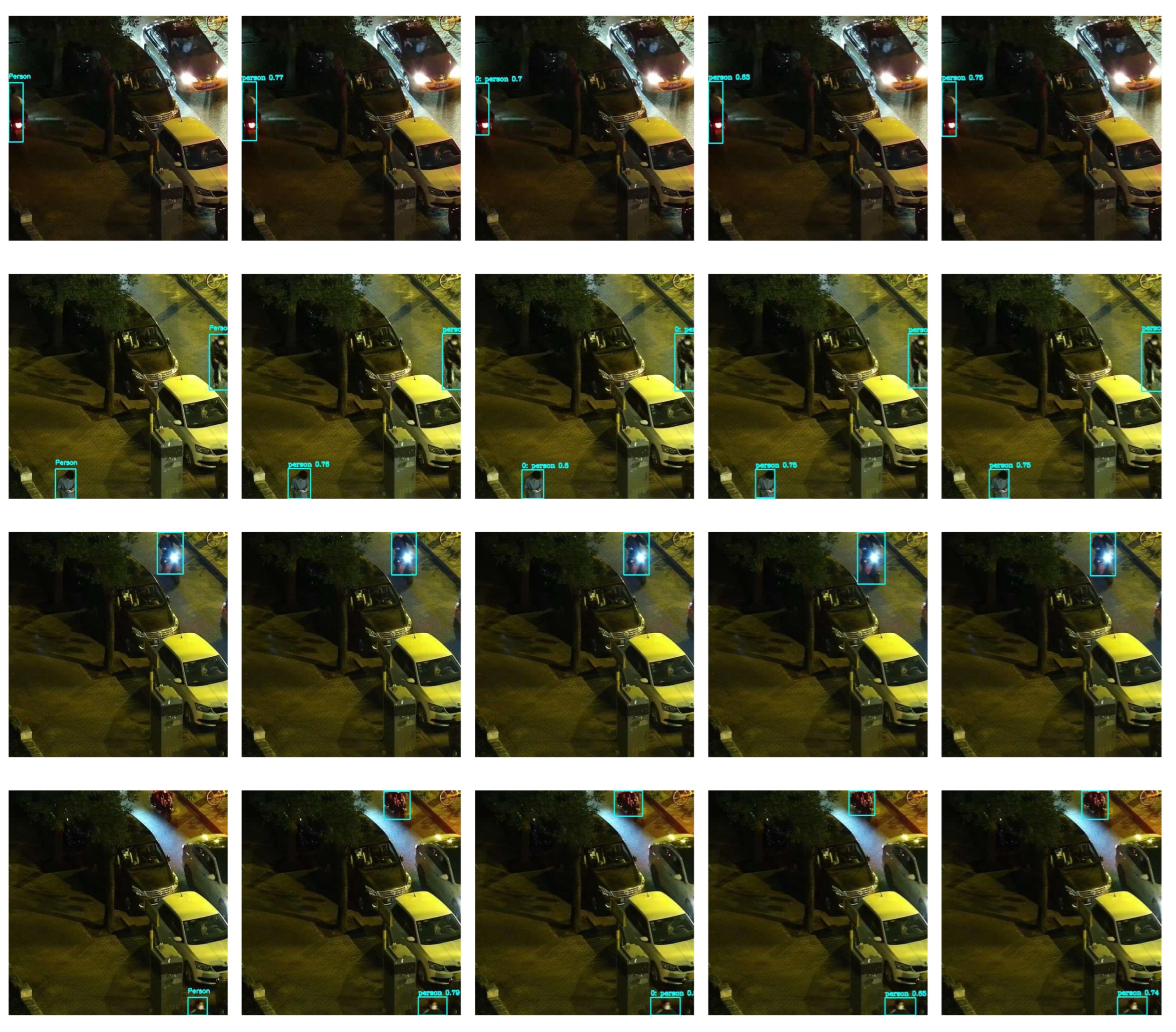

To evaluate the effectiveness of the individual attention mechanisms within the hybrid attention mechanism, we designed a series of comparative experiments, with results shown in Table 5.

The results show that different types of attention mechanisms have varying impacts on model performance. When used individually, the self-attention mechanism (B1) performs best with an mAP50 of 0.776 and mAP50:95 of 0.408, indicating that capturing spatial dependencies within modalities is critical for object detection. Although cross-modal attention (B2) and channel attention (B3) show slightly inferior performance when used alone, they provide feature enhancement capabilities in different dimensions.

In combinations of two attention mechanisms, the combination of self-attention and cross-modal attention (B4) performs best, with mAP50:95 reaching 0.424, approaching the performance of the complete model. The complete combination of three attention mechanisms (B7) achieves the best performance, confirming the rationality of the hybrid attention mechanism design, which can comprehensively capture the complex relationships in multi-modal data.
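The seven configurations B1 to B7 correspond to every non-empty subset of the three attention types. The following is a minimal sketch of that enumeration. The string labels are illustrative names, not identifiers from the SDRFPT-Net code, and the ID order is taken from the descriptions in this section.

```python
from itertools import combinations

# Illustrative labels for the three attention types (assumed names).
ATTENTION_TYPES = ("self", "cross_modal", "channel")


def ablation_configs():
    """Map B1..B7 to every non-empty subset of the attention types."""
    configs = {}
    index = 1
    for size in range(1, len(ATTENTION_TYPES) + 1):
        for combo in combinations(ATTENTION_TYPES, size):
            configs[f"B{index}"] = combo
            index += 1
    return configs


CONFIGS = ablation_configs()
for name, combo in CONFIGS.items():
    print(name, "+".join(combo))
```

Enumerating by subset size first reproduces the grouping used in the text: single mechanisms (B1 to B3), pairs (B4 to B6), and the full combination (B7).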

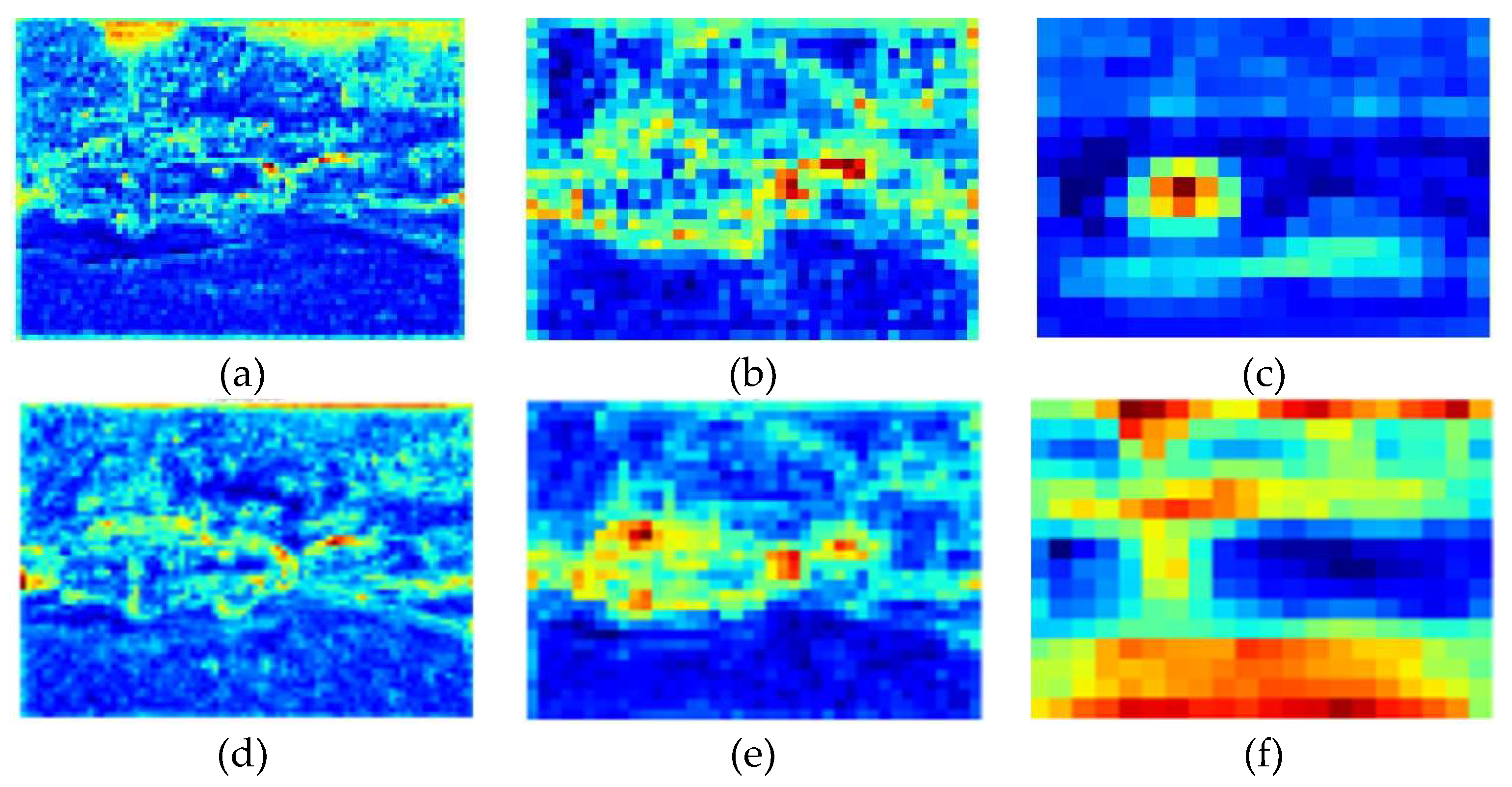

To gain a deeper understanding of the role of different attention mechanisms in multispectral object detection, we analyzed the visualization results of self-attention, cross-modal attention, and channel attention on the P3 feature layer.

From Figure 13, it can be observed that the feature maps of single attention mechanisms present different attention patterns:

Self-attention mechanism (B1): Mainly focuses on target contours and edge information, effectively capturing spatial contextual relationships, with strong response to target boundaries, helping to improve localization accuracy;

Cross-modal attention mechanism (B2): Presents overall attention to target areas, integrating complementary information from RGB and infrared modalities, but with relatively weak background suppression capability;

Channel attention mechanism (B3): Demonstrates selective enhancement of specific semantic information, highlighting important feature channels, with strong response to specific parts of targets, improving the discriminability of feature representation.
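This section does not state how the heatmaps are computed. One common approach, assumed here purely for illustration, is to average the absolute activations of a feature map over its channels and min-max normalize the result to [0, 1]. The function name and the toy input below are hypothetical.

```python
def feature_heatmap(feature):
    """Collapse a C x H x W feature map (nested lists) into an H x W heatmap in [0, 1]."""
    channels = len(feature)
    height, width = len(feature[0]), len(feature[0][0])
    # Mean absolute activation over channels at each spatial position.
    mean_map = [
        [sum(abs(feature[c][y][x]) for c in range(channels)) / channels
         for x in range(width)]
        for y in range(height)
    ]
    flat = [v for row in mean_map for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for constant maps
    return [[(v - lo) / span for v in row] for row in mean_map]


# Toy 2-channel, 2x2 feature map (made-up values).
toy = [
    [[0.0, 1.0], [2.0, 3.0]],
    [[0.0, -1.0], [2.0, 5.0]],
]
heat = feature_heatmap(toy)
print(heat)
```

Other reductions (channel maximum, gradient-weighted maps) would give different pictures, so the patterns described above should be read against whichever method was actually used.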

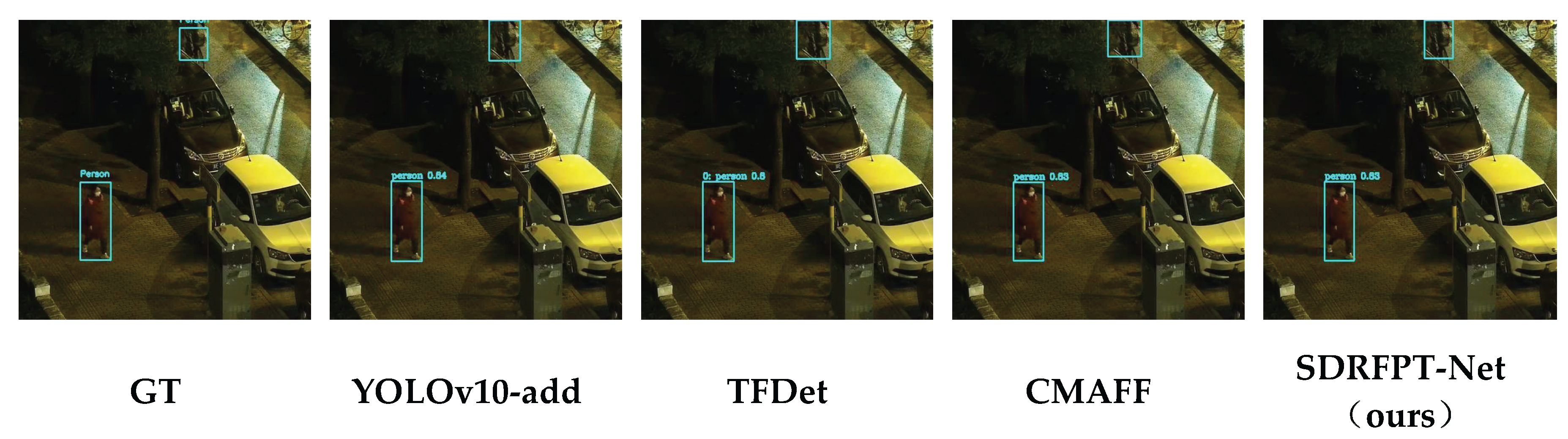

Furthermore, we conducted a comparative analysis of the visualization effects of dual attention mechanisms versus the full attention mechanism on the P3 feature layer, as shown in Figure 14.

Through the comparative visualization analysis in Figure 14, we observe that the feature maps of dual attention mechanisms present complex and differentiated feature representations:

Self-attention + Cross-modal attention (B4): The feature map simultaneously possesses excellent boundary localization capability and overall target region representation capability. The heatmap shows precise response to target regions with significant background suppression effect. This combination fully leverages the complementary advantages of self-attention in spatial modeling and cross-modal attention in multi-modal fusion, enabling it to reach 0.424 in mAP50:95, approaching the performance of the full attention mechanism.

Self-attention + Channel attention (B5): The feature map enhances the representation of specific semantic features while preserving target boundary information. The heatmap shows strong response to key parts of targets, enabling the model to better distinguish different categories of targets, achieving 0.409 in mAP50:95, outperforming any single attention mechanism.

Cross-modal attention + Channel attention (B6): The feature map enhances specific channel representation based on multi-modal fusion, but lacks the spatial context modeling capability of self-attention. The heatmap shows some response to target regions, but boundaries are not clear enough and background suppression effect is relatively weak, which explains its relatively lower performance.

Although dual attention mechanisms (especially self-attention + cross-modal attention) can improve feature representation capability to some extent, they cannot completely replace the comprehensive advantages of the full attention mechanism.

As shown in Figure 1d and Figure 14d, the full attention mechanism, through the synergy of the three attention types, produces the most precise and strongest response to target regions in the heatmap, with clear boundaries and the best background suppression. It unifies spatial context modeling, multi-modal information fusion, and channel feature enhancement, and obtains the best performance in the multispectral object detection task.

Based on the above experiments and visualization analysis, we verified the effectiveness of the proposed hybrid attention mechanism. The results show that, despite the advantages of single and dual attention mechanisms, the complete combination of three attention mechanisms achieves optimal performance across all evaluation metrics. This confirms the rationality of our proposed “spatial-modal-channel” multi-dimensional attention framework, which creates an efficient synergistic mechanism through self-attention capturing spatial contextual relationships, cross-modal attention fusing complementary information, and channel attention selectively enhancing key features. This multi-dimensional feature enhancement strategy provides a new feature fusion paradigm for multispectral object detection, offering valuable reference for research in related fields.
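The "spatial-modal-channel" combination can be sketched with three toggles, mirroring the B1 to B7 ablation. This is a simplified illustration under several assumptions: there are no learned projections, the three stages are applied in sequence with residual additions, and channel attention is reduced to a sigmoid gate on the per-channel mean. None of these details is specified in this section, and all function names are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries, keys, values):
    """Scaled dot-product attention over lists of d-dimensional token vectors."""
    d = len(queries[0])
    out = []
    for q in queries:
        weights = softmax(
            [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        )
        out.append(
            [sum(w * v[i] for w, v in zip(weights, values))
             for i in range(len(values[0]))]
        )
    return out


def channel_gate(tokens):
    """Scale each channel by a sigmoid of its mean over all tokens."""
    n = len(tokens)
    gates = [
        1.0 / (1.0 + math.exp(-sum(t[i] for t in tokens) / n))
        for i in range(len(tokens[0]))
    ]
    return [[g * x for g, x in zip(gates, t)] for t in tokens]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def hybrid_attention(rgb, ir, use_self=True, use_cross=True, use_channel=True):
    out = rgb
    if use_self:      # spatial dependencies within one modality
        out = add(out, attend(out, out, out))
    if use_cross:     # queries from RGB, keys and values from infrared
        out = add(out, attend(out, ir, ir))
    if use_channel:   # re-weight feature channels
        out = channel_gate(out)
    return out


rgb = [[1.0, 0.0], [0.0, 1.0]]   # two toy tokens, two channels
ir = [[0.5, 0.5], [0.2, 0.8]]
fused = hybrid_attention(rgb, ir)
print(fused)
```

Setting the flags to match a row of the ablation (for example `use_channel=False` for B4) gives the corresponding reduced variant of this sketch.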

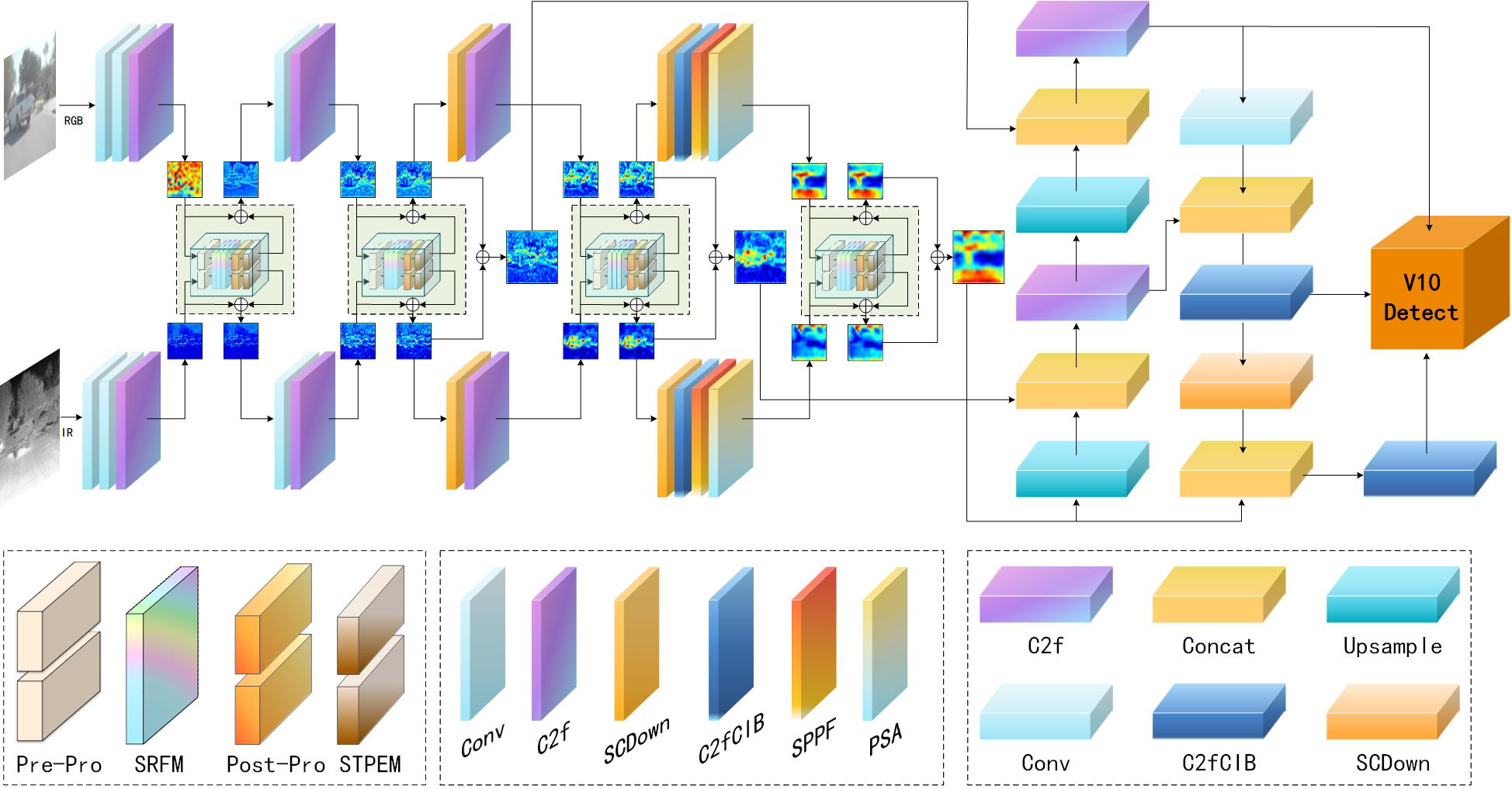

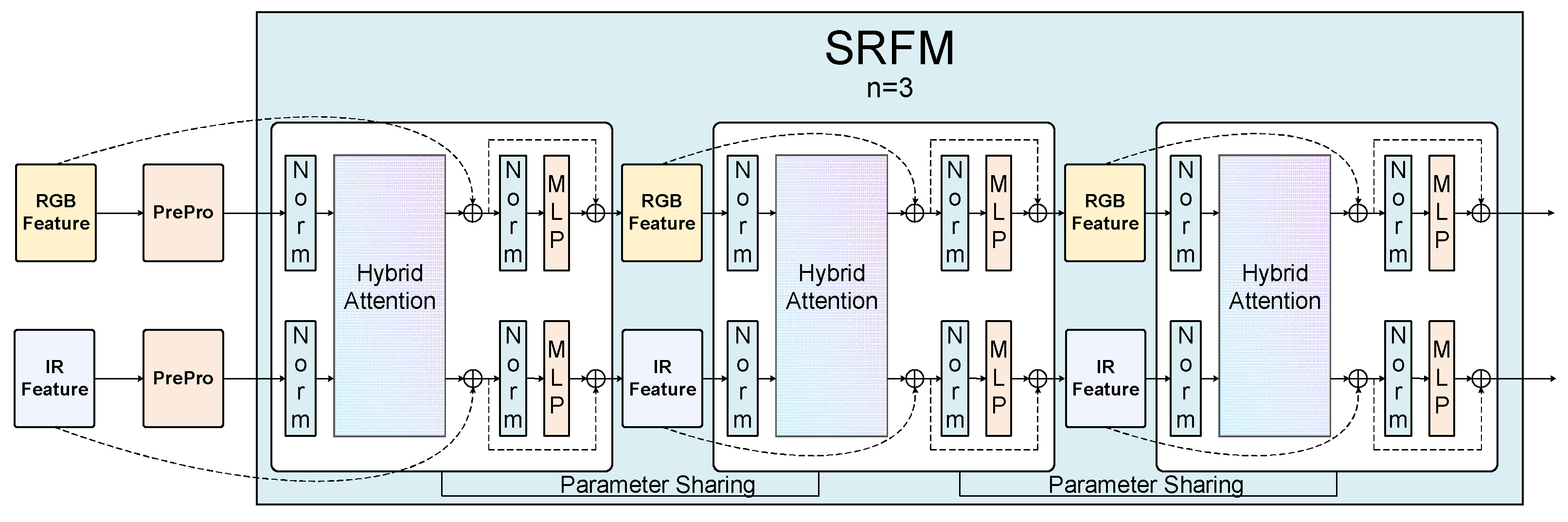

4.4.3. Ablation Experiments on Spectral Hierarchical Recursive Progressive Fusion Strategy

To verify the effectiveness of the spectral fusion strategy, we conducted ablation experiments from two aspects: fusion position selection and recursive progression iterations.

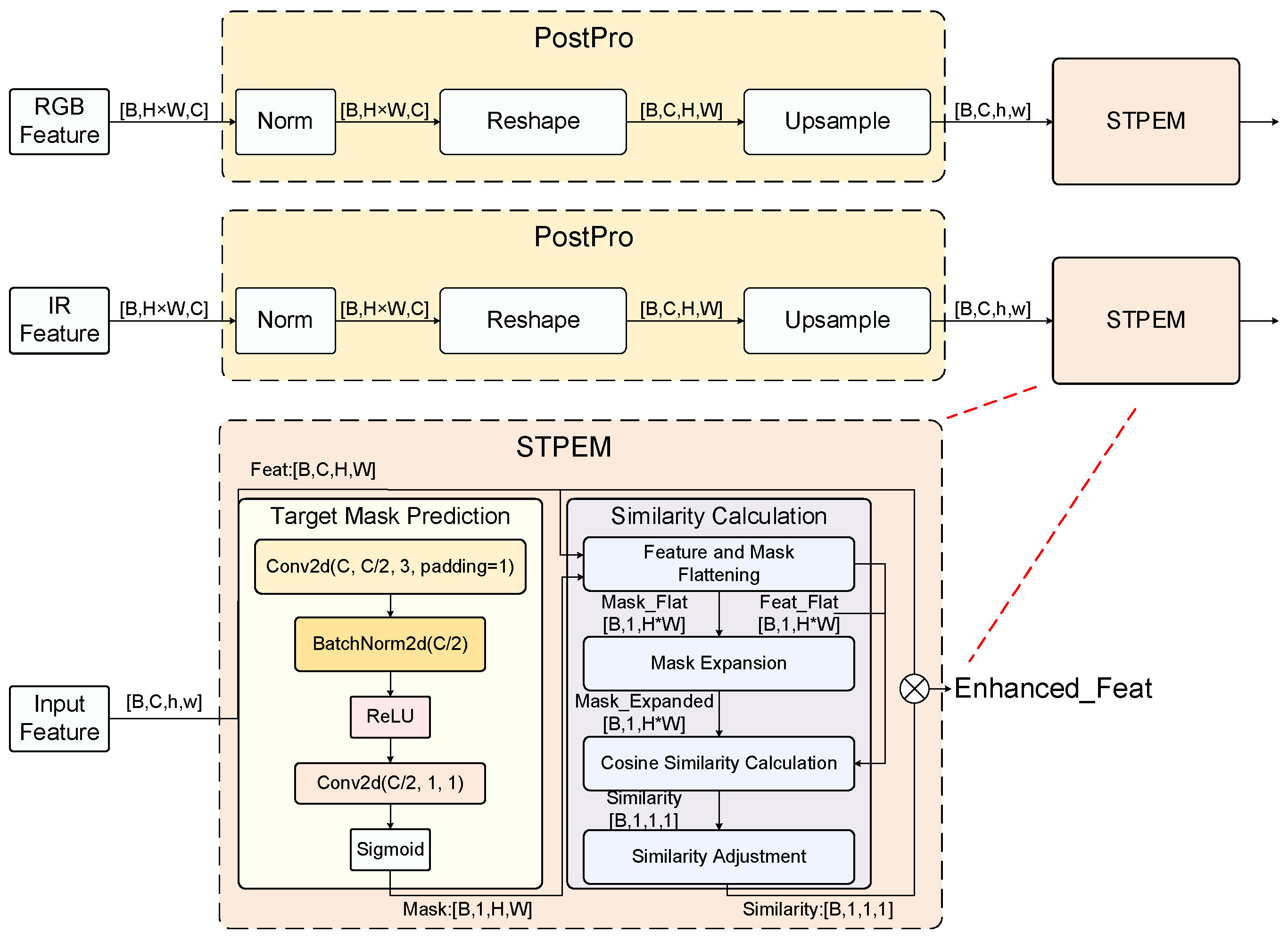

Impact of fusion positions. Multi-scale feature fusion is a key step in multispectral object detection, and how effectively features at different scales are fused strongly affects model performance. This section explores the impact of fusion positions and fusion strategies on detection performance through ablation experiments and visualization analysis.

To systematically study the impact of fusion positions on model performance, we designed a series of ablation experiments, as shown in Table 6. The experiments started from the baseline model (C1, using simple addition fusion at all scales), progressively applied our proposed fusion modules (a fusion mechanism combining SRFM and STPEM) at different scales, and finally evaluated the effect of applying the advanced fusion strategy at every scale.

As shown in Table 6, model performance improves progressively as the proposed fusion modules are applied at more positions. The baseline model (C1) only uses simple addition fusion at all feature scales, with an mAP50 of 0.701. When applying SRFM and STPEM modules at the P3/8 scale (C2), performance significantly improves to an mAP50 of 0.769. With further application of advanced fusion at the P4/16 scale (C3), mAP50 increases to 0.776. Finally, when the complete fusion strategy is applied to all three scales (C4), performance reaches optimal levels with an mAP50 of 0.785 and mAP50:95 of 0.426.
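The step-by-step gains can be read directly from the mAP50 values quoted above. The short sketch below records which scales use SRFM+STPEM in each configuration and computes the increment contributed by each step. The scale assignment for C3 (P3 and P4) is inferred from the phrase "further application" and should be treated as an assumption.

```python
# mAP50 values quoted in the text for configurations C1..C4.
MAP50 = {"C1": 0.701, "C2": 0.769, "C3": 0.776, "C4": 0.785}

# Scales that use SRFM+STPEM fusion in each configuration;
# all remaining scales fall back to element-wise addition.
ADVANCED_SCALES = {
    "C1": (),
    "C2": ("P3",),
    "C3": ("P3", "P4"),
    "C4": ("P3", "P4", "P5"),
}


def incremental_gains(scores):
    """Return the mAP50 change between consecutive configurations."""
    names = sorted(scores)
    return {
        f"{a}->{b}": round(scores[b] - scores[a], 3)
        for a, b in zip(names, names[1:])
    }


GAINS = incremental_gains(MAP50)
for step, gain in GAINS.items():
    print(step, gain)
```

Most of the total improvement of 0.084 comes from the first step, at the P3/8 scale (0.068); the P4/16 and P5/32 steps add 0.007 and 0.009 respectively.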

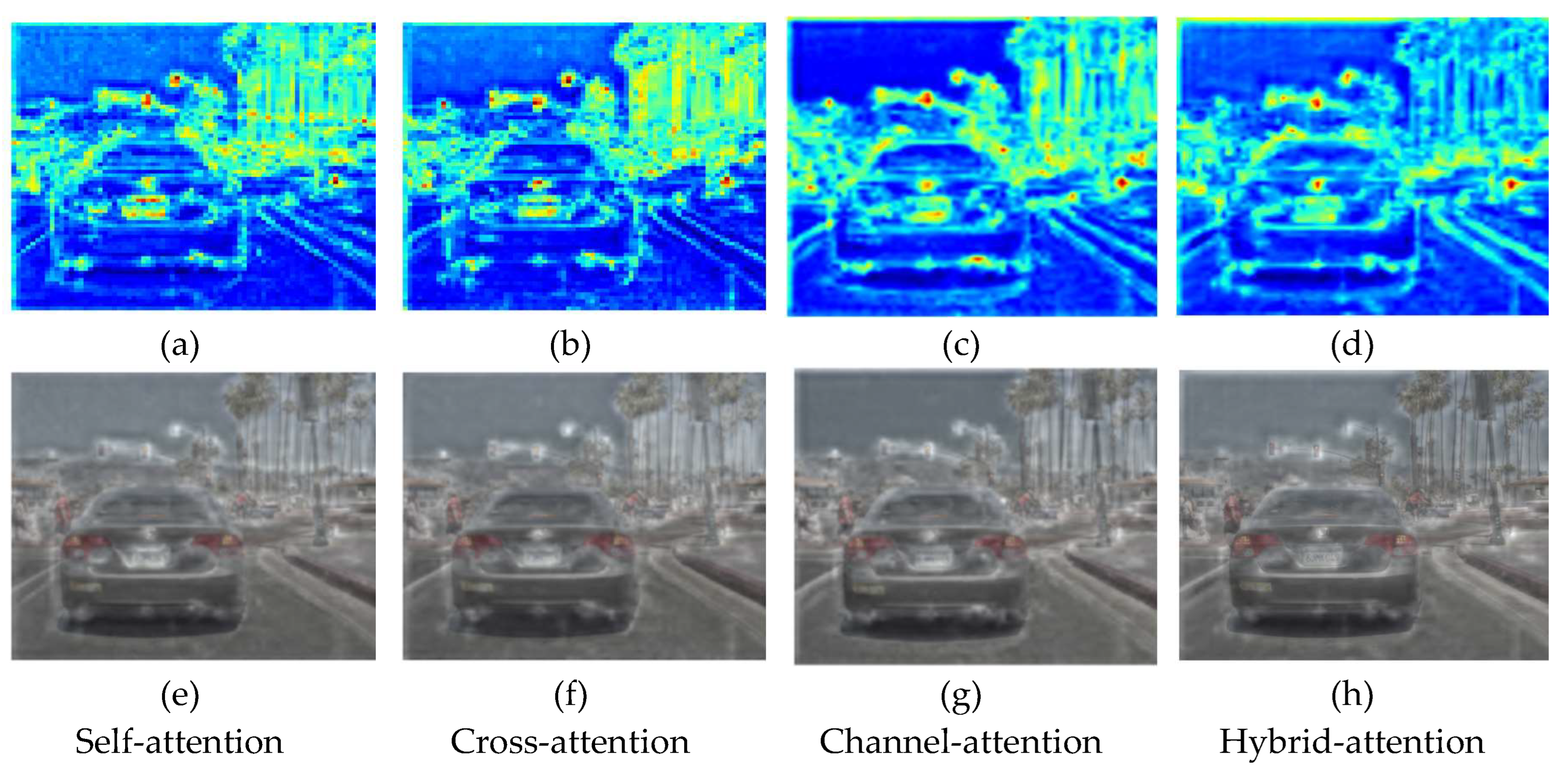

To understand the performance differences between the fusion strategies more intuitively, we visualized and compared feature maps of simple addition fusion and the advanced fusion strategy (SRFM+STPEM) at three scales: P3/8, P4/16, and P5/32, as shown in Figure 15.

At the P3/8 scale, simple addition fusion presents dispersed activation patterns with insufficient target-background differentiation, especially with suboptimal activation intensity for small vehicles; whereas the feature map generated by the SRFM+STPEM fusion strategy possesses more precise target localization capability and boundary representation, with significantly improved background suppression effect and activation intensity distribution more concentrated on target regions, effectively enhancing small target detection performance.

The comparison of P4/16 scale feature maps shows that although simple addition fusion can capture medium target positions, activation is not prominent enough and background noise interference exists; in contrast, the advanced fusion strategy produces more concentrated activation areas with higher target-background contrast and clearer boundaries between vehicles. As an intermediate resolution feature map, P4 (40×40) demonstrates superior structured representation and background suppression capability under the advanced fusion strategy.

At the P5/32 scale, rough semantic information generated by simple addition fusion makes it difficult to distinguish main vehicle targets; whereas the advanced fusion strategy can better capture overall scene semantics, accurately represent main vehicle targets, and effectively suppress background interference. Although the P5 feature map has the lowest resolution (20×20), it has the largest receptive field, and the advanced fusion strategy fully leverages its advantages in large target detection and scene understanding.
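The resolutions quoted above follow from dividing the input size by each stride. The sketch below assumes a 640×640 input, which is not stated in this section but is consistent with the 40×40 grid at stride 16 and the 20×20 grid at stride 32.

```python
# Downsampling stride of each pyramid level, from the P3/8, P4/16, P5/32 notation.
STRIDES = {"P3": 8, "P4": 16, "P5": 32}


def grid_sizes(input_size=640):
    """Side length of each feature map for a square input (640 is an assumption)."""
    return {name: input_size // stride for name, stride in STRIDES.items()}


SIZES = grid_sizes()
print(SIZES)
```

Under the same assumption, the P3 feature map is 80×80, which is why it carries the finest spatial detail of the three levels.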

Through comparative analysis, we observed three key synergistic effects of multi-scale fusion:

Complementarity enhancement: the advanced fusion strategy makes features at different scales complementary, with P3 focusing on details and small targets, P4 processing medium targets, and P5 capturing large-scale structures and semantic information;

Information flow optimization: features at different scales mutually enhance each other, with semantic information guiding small target detection and detail information precisely locating large target boundaries;

Noise suppression capability: the advanced fusion strategy demonstrates superior background noise suppression capability at all scales, effectively reducing false detections.

Impact of recursive iteration count. The recursive progression mechanism is a key strategy in our proposed SDRFPT-Net model, which can further enhance feature representation capability through multiple recursive progressive fusions. To explore the optimal number of recursive iterations, we designed a series of experiments to observe model performance changes by varying the number of iterations.

Table 7 shows the impact of iteration count on model performance.

The experimental results show that the number of recursive iterations significantly affects model performance. When the iteration count is 1 (D1), the model achieves an mAP50 of 0.769, indicating that even a single round of iteration can provide effective feature fusion. As the iteration count increases to 2 (D2) and 3 (D3), performance continues to improve, reaching mAP50 of 0.783 and 0.785, and mAP50:95 of 0.418 and 0.426 respectively. However, when the iteration count further increases to 4 (D4) and 5 (D5), performance begins to decline, with the mAP50 of 5 iterations significantly dropping to 0.761.

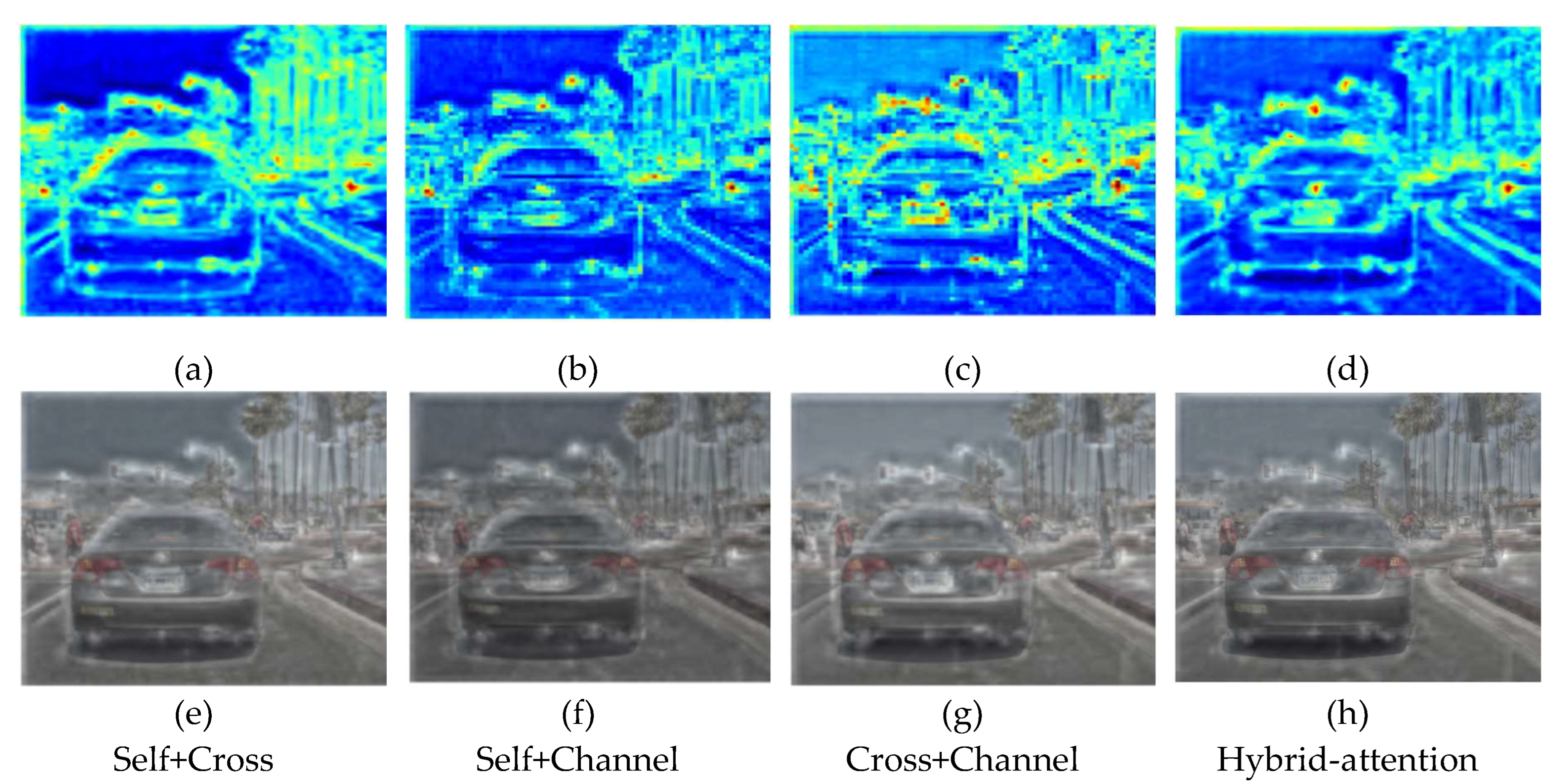

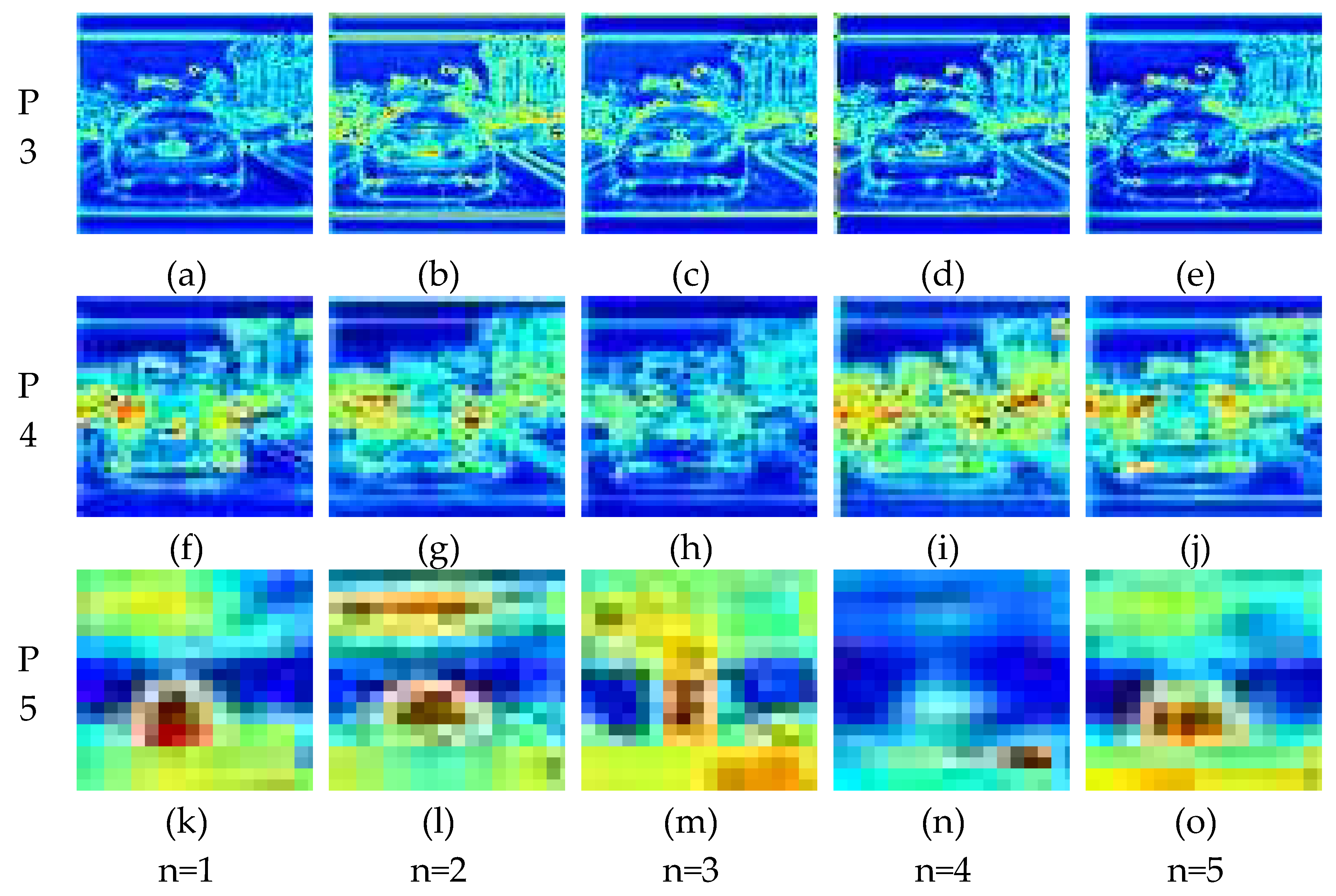

To more intuitively understand the impact of iteration count on feature representation, we conducted visualization analysis of feature maps at three feature scales (P3, P4, and P5) with different iteration counts, as shown in Figure 16.

Through visualization, we observed the following feature evolution patterns:

P3 feature layer (high resolution): As the iteration count increases, the feature map gradually evolves from initial dispersed response (n=1) to more focused target representation (n=2,3), with clearer boundaries and stronger background suppression effect. However, when the iteration count reaches 4 and 5, over-smoothing phenomena begin to appear, with some loss of boundary details.

P4 feature layer (medium resolution): At n=1, the feature map has basic response to targets but is not focused enough. After 2-3 rounds of iteration, the activation intensity of target areas significantly increases, improving target differentiation. Continuing to increase the iteration count to 4-5 rounds, feature response begins to diffuse, reducing precise localization capability.

P5 feature layer (low resolution): This layer demonstrates the most obvious evolution trend, gradually developing from blurred response at n=1 to highly structured representation at n=3 that can clearly distinguish main targets. However, obvious signs of overfitting appear at n=4 and n=5, with feature maps becoming overly smoothed and target representation degrading.

These observations reveal the working mechanism of recursive progressive fusion: moderate iteration count (n=3) can achieve progressive optimization of features through multiple rounds of interactive fusion of complementary information from different modalities, enhancing target feature representation and suppressing background interference. However, excessive iteration count may lead to "over-fusion" of features, i.e., the model overfits specific patterns in the training data, losing generalization capability.
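One possible intuition for why extra rounds stop helping can be shown with a toy linear model, which is not the SRFM/STPEM fusion itself. Assume each round lets every modality absorb a fixed fraction `alpha` of the other. The gap between the two feature vectors then shrinks by a factor of (1 - 2·alpha) per round, so modality-specific detail fades as `n` grows. All names and the value of `alpha` are hypothetical.

```python
def exchange(rgb, ir, alpha=0.2):
    """One toy fusion round: each modality absorbs a fraction alpha of the other."""
    new_rgb = [(1 - alpha) * r + alpha * i for r, i in zip(rgb, ir)]
    new_ir = [(1 - alpha) * i + alpha * r for r, i in zip(rgb, ir)]
    return new_rgb, new_ir


def recursive_fusion(rgb, ir, n, alpha=0.2):
    """Apply the toy exchange n times, feeding each round's output into the next."""
    for _ in range(n):
        rgb, ir = exchange(rgb, ir, alpha)
    return rgb, ir


def modality_gap(rgb, ir):
    """Largest per-element difference between the two modalities."""
    return max(abs(r - i) for r, i in zip(rgb, ir))


rgb0, ir0 = [1.0, 0.0], [0.0, 1.0]
for n in range(1, 6):
    fused_rgb, fused_ir = recursive_fusion(rgb0, ir0, n)
    print(n, round(modality_gap(fused_rgb, fused_ir), 5))
```

In this toy the gap decays geometrically (0.6 per round with `alpha = 0.2`), which is loosely analogous to the over-smoothing seen at n=4 and n=5. It does not model the learned behavior of the network or the overfitting explanation given above.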

Combining quantitative and visualization analysis results, we determined n=3 as the optimal iteration count, achieving the best balance between feature enhancement and computational efficiency. This finding is also consistent with similar observations in other research areas, such as the optimal unfolding steps in recurrent neural networks and the optimal iteration count in message passing neural networks, where similar "performance saturation points" exist.

Through the above ablation experiments, we verified the effectiveness and optimal configuration of each core component of SDRFPT-Net. The results show that the spectral hierarchical perception architecture (SHPA), the complete combination of three attention mechanisms, the full-scale advanced fusion strategy, and three rounds of recursive progressive fusion collectively contribute to the model's superior performance. The rationality of these design choices is not only validated through quantitative metrics but also intuitively explained through feature visualization, providing new ideas for multispectral object detection research.