Submitted: 27 April 2025

Posted: 28 April 2025


Abstract

Keywords:

1. Introduction

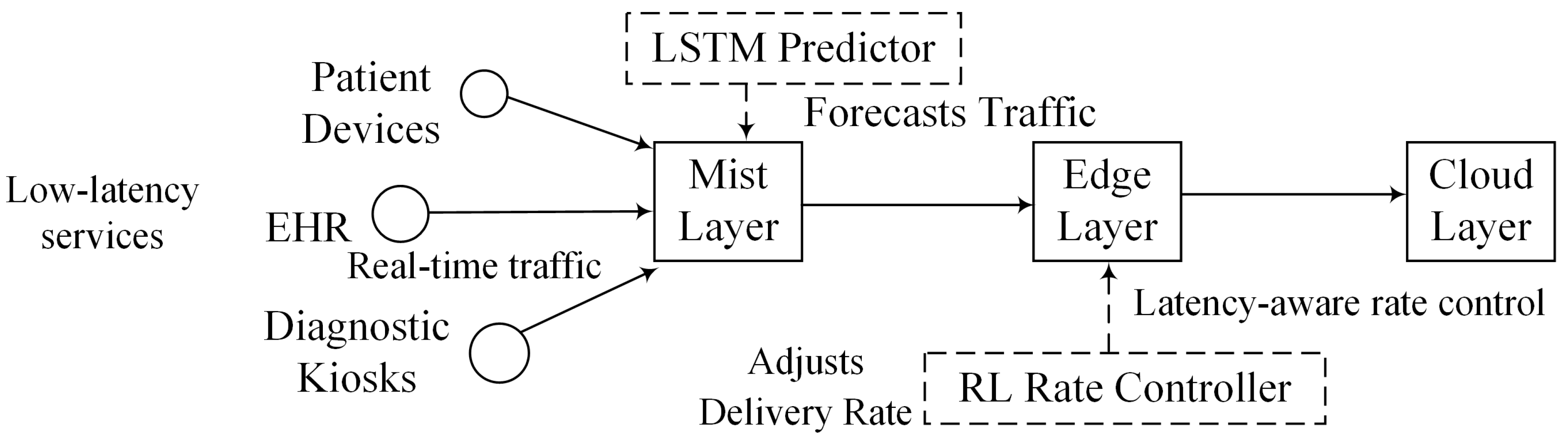

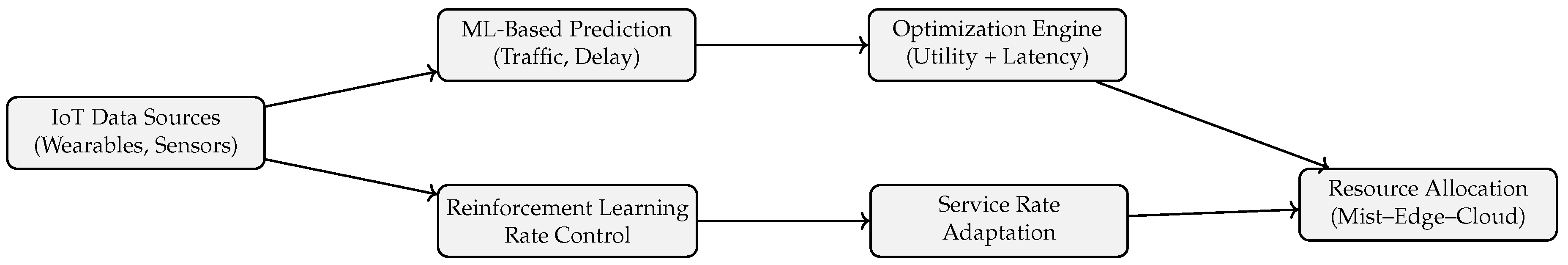

- We present ML-RASPF, a novel hybrid mist–edge–cloud framework for rate-adaptive and latency-aware IoT service provisioning in smart healthcare systems.

- We formulate the service provisioning problem as a joint optimization model that integrates both latency constraints and service delivery rates. This formulation enables intelligent, QoS-aware resource allocation across heterogeneous IoT environments.

- We propose a modular, ML-driven algorithmic suite that combines supervised learning for traffic prediction with reinforcement learning (RL) for real-time service rate adaptation.

- We evaluate the proposed framework in EdgeCloudSim using realistic smart healthcare workloads. The results show that ML-RASPF significantly outperforms state-of-the-art rate-adaptive methods, reducing latency, energy consumption, and bandwidth utilization while improving the service delivery rate.

2. Related Work

3. Optimal Service Provisioning Framework

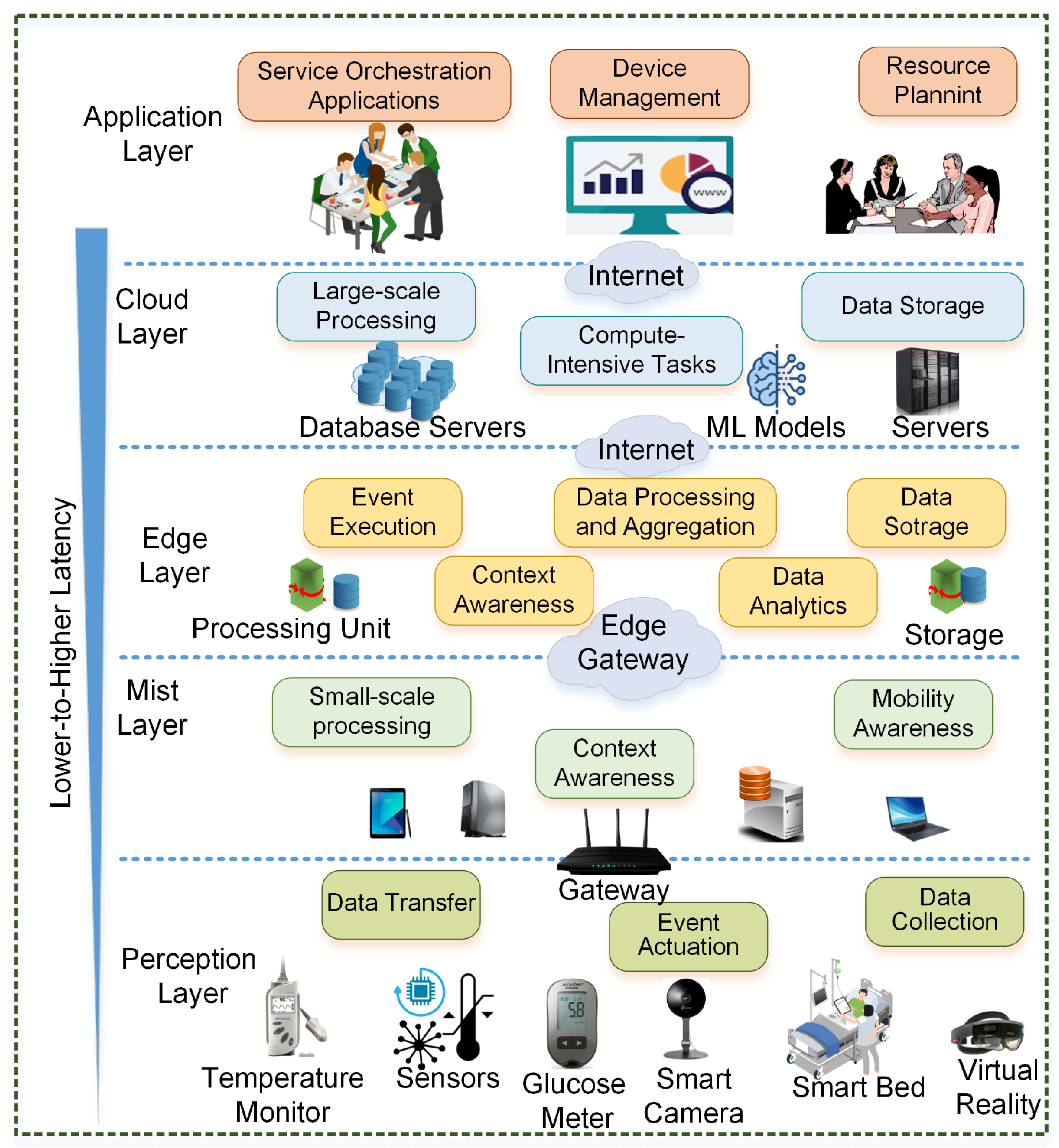

3.1. Customized Mist–Edge–Cloud Framework

3.1.1. Perception Layer

3.1.2. Mist Layer

3.1.3. Edge Computing Layer

3.1.4. Central Cloud Layer

3.1.5. Cloud Application Layer

3.2. Smart Healthcare with Emergency and Routine Services

4. Analytical Framework for Heterogeneous Service Provisioning

4.1. Problem Overview and Healthcare Service Requirements

4.1.1. Utility-Based Formulation for Service Delivery Rate

4.1.2. Delay-Aware Utility Adjustment

4.1.3. Approximation and Convex Transformation

4.1.4. Machine Learning Integration

4.1.5. Traffic and Demand Prediction

4.1.6. Delay Estimation and Latency Modeling

4.1.7. Reinforcement Learning for Adaptive Rate Control

4.1.8. Framework Integration

4.2. Algorithms for Rate-Adaptive Provisioning

| Algorithm 1: Network Data Collection and Initialization |
|---|
| Require: Set of services, links, and link capacities |
| Ensure: Initialized weight matrix W and price matrix P |
| 1: Initialize matrices W and P |
| 2: for all services do |
| 3: for all consumers i of service s do |
| 4: for all links do |
| 5: |
| 6: |
| 7: end for |
| 8: end for |
| 9: end for |
| 10: return W, P |
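The per-entry assignments in steps 5 and 6 of Algorithm 1 are not spelled out here. The following is a minimal Python sketch of one plausible initialization. It assumes, hypothetically, that `W[(s, i, t)]` is 1 when link `t` lies on the delivery path from the provider of service `s` to consumer `i` (0 otherwise) and that each link starts with a price of zero. The function name `initialize_weights_and_prices` and the `paths` input are illustrative, not the authors' code.

```python
def initialize_weights_and_prices(services, consumers, links, paths):
    """Hypothetical sketch of Algorithm 1.

    services:  iterable of service ids
    consumers: dict service -> list of consumer ids
    links:     iterable of link ids
    paths:     dict (service, consumer) -> set of link ids on the delivery path
    """
    links = list(links)
    # Assumption: one price per link, starting at zero (no congestion observed yet).
    P = {t: 0.0 for t in links}
    W = {}
    for s in services:              # step 2
        for i in consumers[s]:      # step 3
            for t in links:         # step 4
                # Assumed step 5: 1 if link t carries service s to consumer i.
                W[(s, i, t)] = 1 if t in paths[(s, i)] else 0
    return W, P


if __name__ == "__main__":
    W, P = initialize_weights_and_prices(
        ["diag"],
        {"diag": ["c1", "c2"]},
        ["t1", "t2"],
        {("diag", "c1"): {"t1"}, ("diag", "c2"): {"t1", "t2"}},
    )
    print(W)
    print(P)
```

Keeping `W` as a sparse dictionary keyed by `(service, consumer, link)` avoids fixing matrix dimensions up front. This matters when services and consumers join at runtime, as Algorithm 3 allows.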

| Algorithm 2: Price Computation and Weight Update |
|---|
| Require: Current weights W, prices P, link capacities C, service rates |
| Ensure: Updated W and P |
| 1: for all nodes e do |
| 2: for all links t connected to e do |
| 3: for all services s using t do |
| 4: |
| 5: end for |
| 6: |
| 7: |
| 8: for all services s and consumers i on t do |
| 9: |
| 10: if then |
| 11: |
| 12: end if |
| 13: |
| 14: Update W and P |
| 15: end for |
| 16: end for |
| 17: end for |
| 18: return W, P |
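Algorithm 2 computes per-link prices from link capacities and current service rates. This follows the pattern of dual decomposition for network utility maximization. The following minimal sketch shows one round of that pattern under stated assumptions. Each consumer is assumed to have a weighted logarithmic utility `w * log(x)`. Link prices take a projected gradient step using the 0.01 step size from the parameter table. Each rate is set to `w / path_price`, capped at a hypothetical `x_max` of 20 Mb/s. The conditional weight update in steps 9 to 13 is not modeled, and `price_iteration` is an illustrative name, not the authors' code.

```python
def price_iteration(W, P, C, rates, utility_weights, step=0.01, x_max=20.0):
    """One synchronous round of link-price and rate updates (hypothetical sketch).

    W: dict (s, i, t) -> 1 if link t carries service s to consumer i, else 0
    P: dict t -> current link price
    C: dict t -> link capacity in Mb/s
    rates: dict (s, i) -> current delivery rate in Mb/s
    utility_weights: dict (s, i) -> weight w in the assumed utility w * log(x)
    """
    # Aggregate the load each link currently carries.
    load = {t: 0.0 for t in C}
    for (s, i, t), on_path in W.items():
        if on_path:
            load[t] += rates[(s, i)]
    # Projected gradient step on the dual: prices rise on overloaded links
    # and fall (never below zero) on underused ones.
    new_P = {t: max(0.0, P[t] + step * (load[t] - C[t])) for t in C}
    # Each consumer maximizes w * log(x) - x * path_price, giving x = w / path_price.
    new_rates = {}
    for (s, i), w in utility_weights.items():
        path_price = sum(new_P[t] for t in C if W.get((s, i, t), 0))
        new_rates[(s, i)] = x_max if path_price <= 0.0 else min(x_max, w / path_price)
    return new_P, new_rates


if __name__ == "__main__":
    W = {("diag", "c1", "t1"): 1, ("diag", "c2", "t1"): 1}
    C = {"t1": 20.0}
    P = {"t1": 0.0}
    rates = {("diag", "c1"): 0.0, ("diag", "c2"): 0.0}
    weights = {("diag", "c1"): 2.0, ("diag", "c2"): 1.0}
    for _ in range(50):
        P, rates = price_iteration(W, P, C, rates, weights)
    print(P, rates)
```

Consider two consumers with weights 2 and 1 sharing one 20 Mb/s link. Repeated rounds settle at a link price of about 0.15 and rates of about 13.3 and 6.7 Mb/s, which is the weighted proportional-fair split of the link. Only local information (link load and path price) is needed. That property makes the scheme a natural fit for per-node execution, as in the outer loop of Algorithm 2.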

| Algorithm 3: Service Rate Adaptation and Delivery |
|---|
| Require: Updated link prices P, weights W, service paths |
| Ensure: Adapted delivery rates |
| 1: for all nodes e do |
| 2: if e is a provider of service s then |
| 3: for all consumers i of service s do |
| 4: |
| 5: Observe state |
| 6: Select action using RL policy: adjust |
| 7: Receive reward |
| 8: Update policy parameters using gradient of |
| 9: |
| 10: end for |
| 11: end if |
| 12: // Check for new service requests to trigger feedback-based re-optimization |
| 13: if any new service is requested by node e then |
| 14: Update network state and repeat Algorithm 2 |
| 15: end if |
| 16: end for |
| 17: return Updated rates |
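Steps 5 to 8 of Algorithm 3 describe an observe, act, reward, and policy-gradient update loop. The following toy Python sketch instantiates that loop with a tabular softmax policy trained by REINFORCE, in its one-step (gradient-bandit) form with a per-state reward baseline. Everything except the 20 Mb/s capacity is an assumption for illustration and not the authors' design. The state is a discretized delivery rate, the actions lower, keep, or raise the rate by 2 Mb/s, and the reward is the delivered rate minus an M/M/1-style delay penalty `20 / (C - rate)`.

```python
import math
import random

CAPACITY = 20.0        # Mb/s, the 20 Mb/s link capacity from the parameter table
LEVELS = 11            # hypothetical state space: rate = 2 * level Mb/s, 0..20 Mb/s
ACTIONS = (-1, 0, 1)   # decrease, keep, or increase the delivery rate by one level


def reward(rate, delay_weight=20.0):
    """Toy reward: delivered rate minus an M/M/1-style delay penalty 1/(C - rate)."""
    if rate >= CAPACITY:
        return -CAPACITY  # saturated link: flat penalty
    return rate - delay_weight / (CAPACITY - rate)


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(h - m) for h in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train(episodes=20000, alpha=0.05, seed=0):
    rng = random.Random(seed)
    prefs = [[0.0] * len(ACTIONS) for _ in range(LEVELS)]  # policy parameters per state
    baseline = [0.0] * LEVELS  # running mean reward per state (variance reduction)
    visits = [0] * LEVELS
    for _ in range(episodes):
        level = rng.randrange(LEVELS)                             # observe state
        probs = softmax(prefs[level])
        a = rng.choices(range(len(ACTIONS)), weights=probs)[0]    # sample action
        next_level = min(LEVELS - 1, max(0, level + ACTIONS[a]))
        r = reward(2.0 * next_level)                              # receive reward
        advantage = r - baseline[level]
        # REINFORCE: gradient of log softmax w.r.t. preferences is one_hot(a) - probs.
        for b in range(len(ACTIONS)):
            grad_log_pi = (1.0 if b == a else 0.0) - probs[b]
            prefs[level][b] += alpha * advantage * grad_log_pi
        visits[level] += 1
        baseline[level] += (r - baseline[level]) / visits[level]
    return prefs


def greedy_action(prefs, level):
    row = prefs[level]
    return ACTIONS[row.index(max(row))]


if __name__ == "__main__":
    policy = train()
    for level in range(LEVELS):
        print(f"{2 * level:>4.0f} Mb/s -> action {greedy_action(policy, level):+d}")
```

With this toy reward the best sustained rate is 16 Mb/s. A trained policy should therefore raise low rates and back off when the link approaches 18 to 20 Mb/s. A richer state (predicted traffic, measured delay) and a multi-step return would be needed to approach what Section 4.1.7 describes.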

4.3. Complexity Analysis

5. Experimental Evaluation

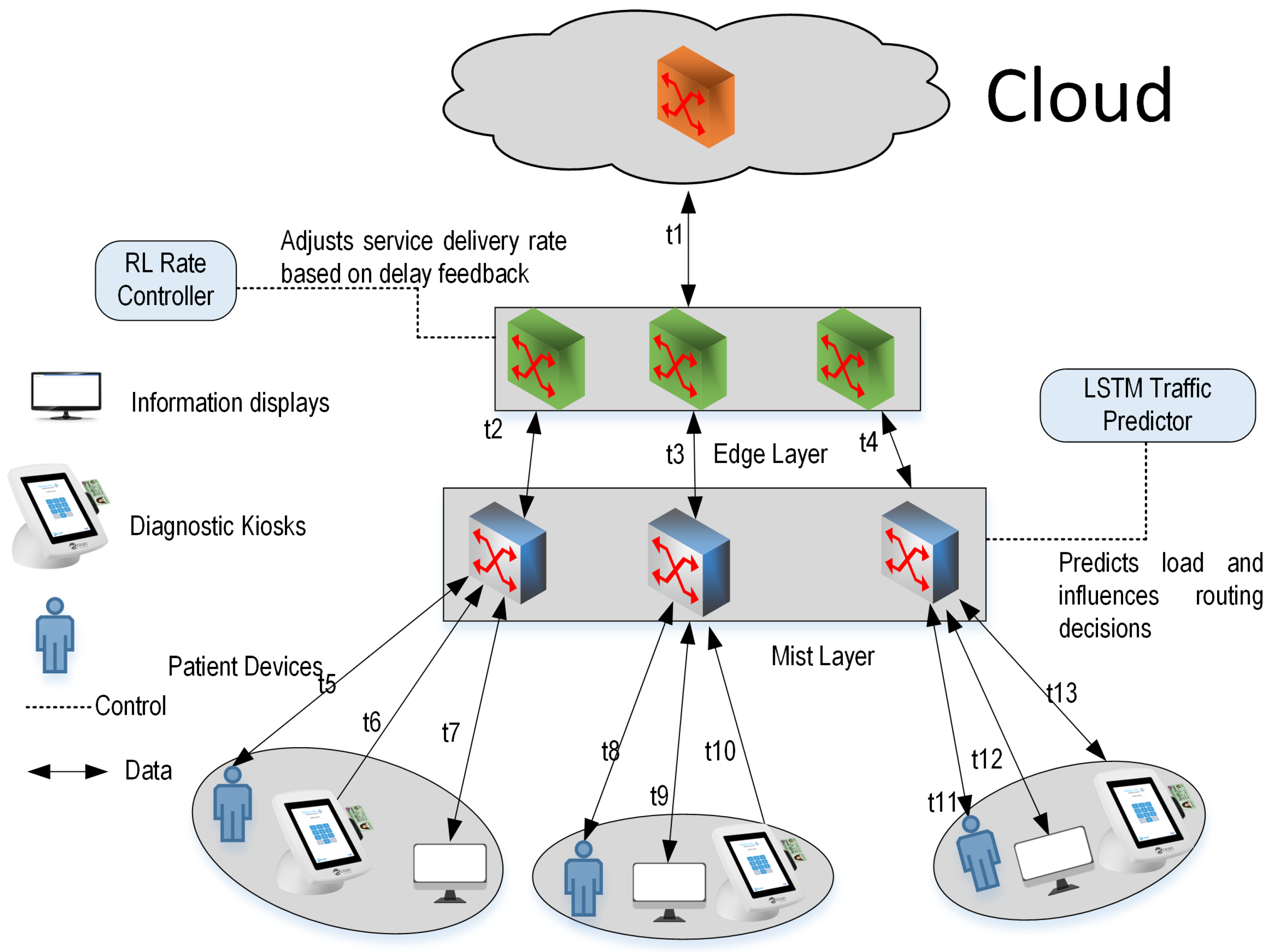

5.1. Simulation Setup

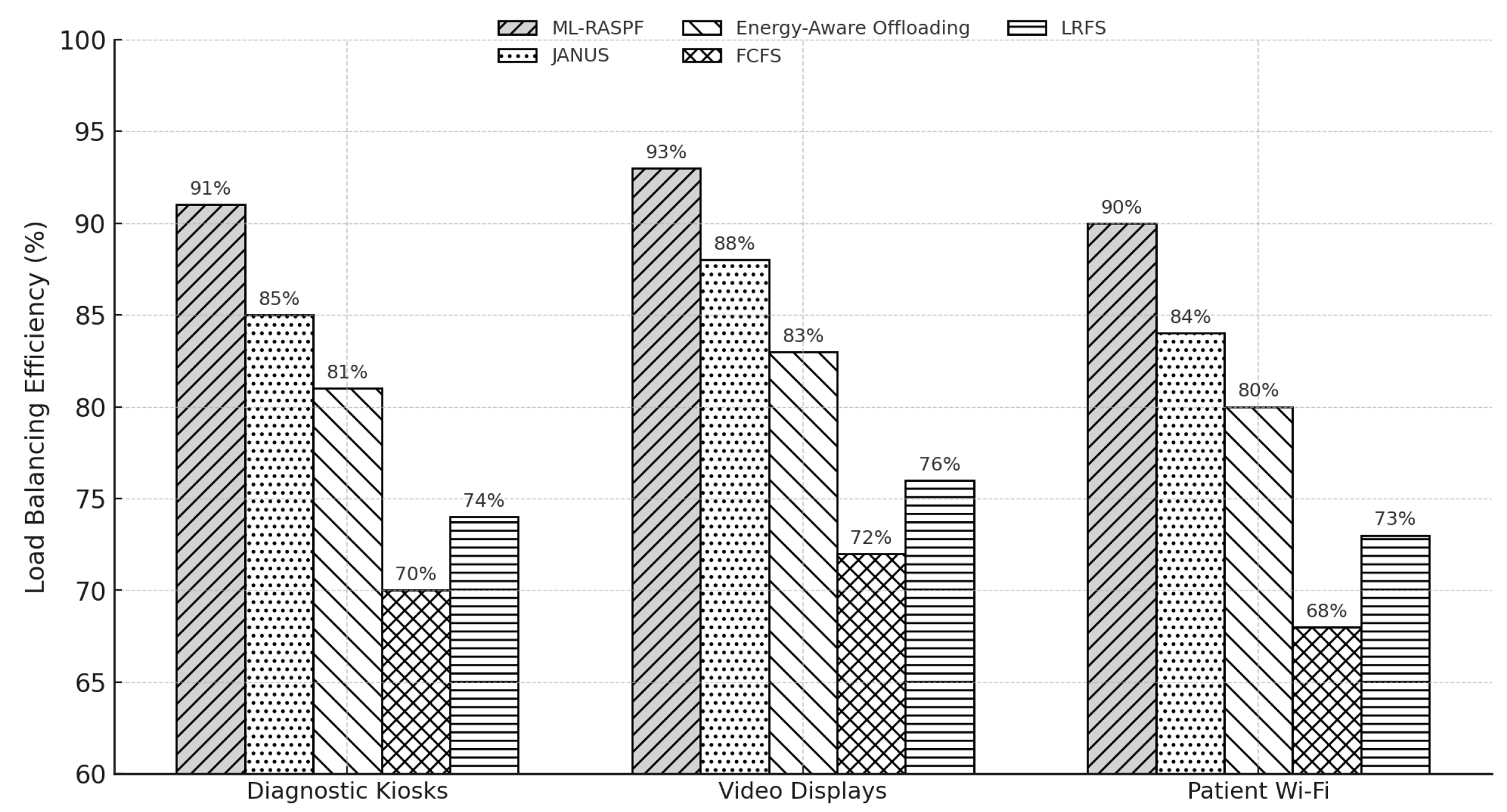

- Interactive Diagnostic Kiosks – provide patient-specific diagnostic support, lab report access, and symptom checkers. These require moderate delivery rates but have stringent latency requirements.

- Informational Displays – broadcast hospital alerts, safety protocols, and public health information. These involve high-bandwidth, video-rich content with stable rate requirements.

- Patient Devices – tablets or smartphones that inpatients or visitors use to access hospital Wi-Fi, electronic health records (EHRs), or teleconsultation. These represent latency-tolerant services.

5.2. Simulation Parameters

5.2.1. Traffic Trace Generation

5.3. Performance Metrics and Baselines

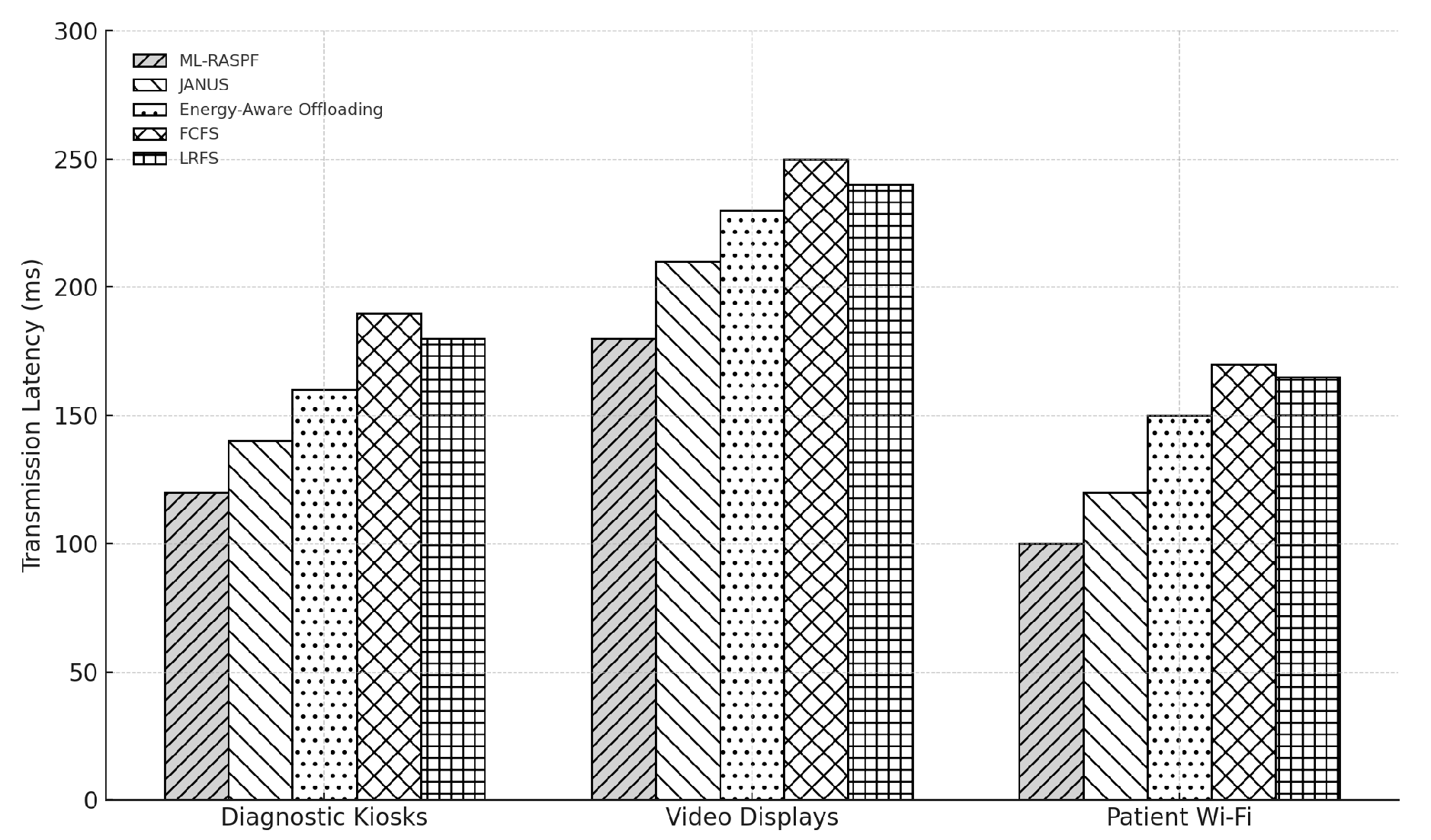

5.3.1. Latency

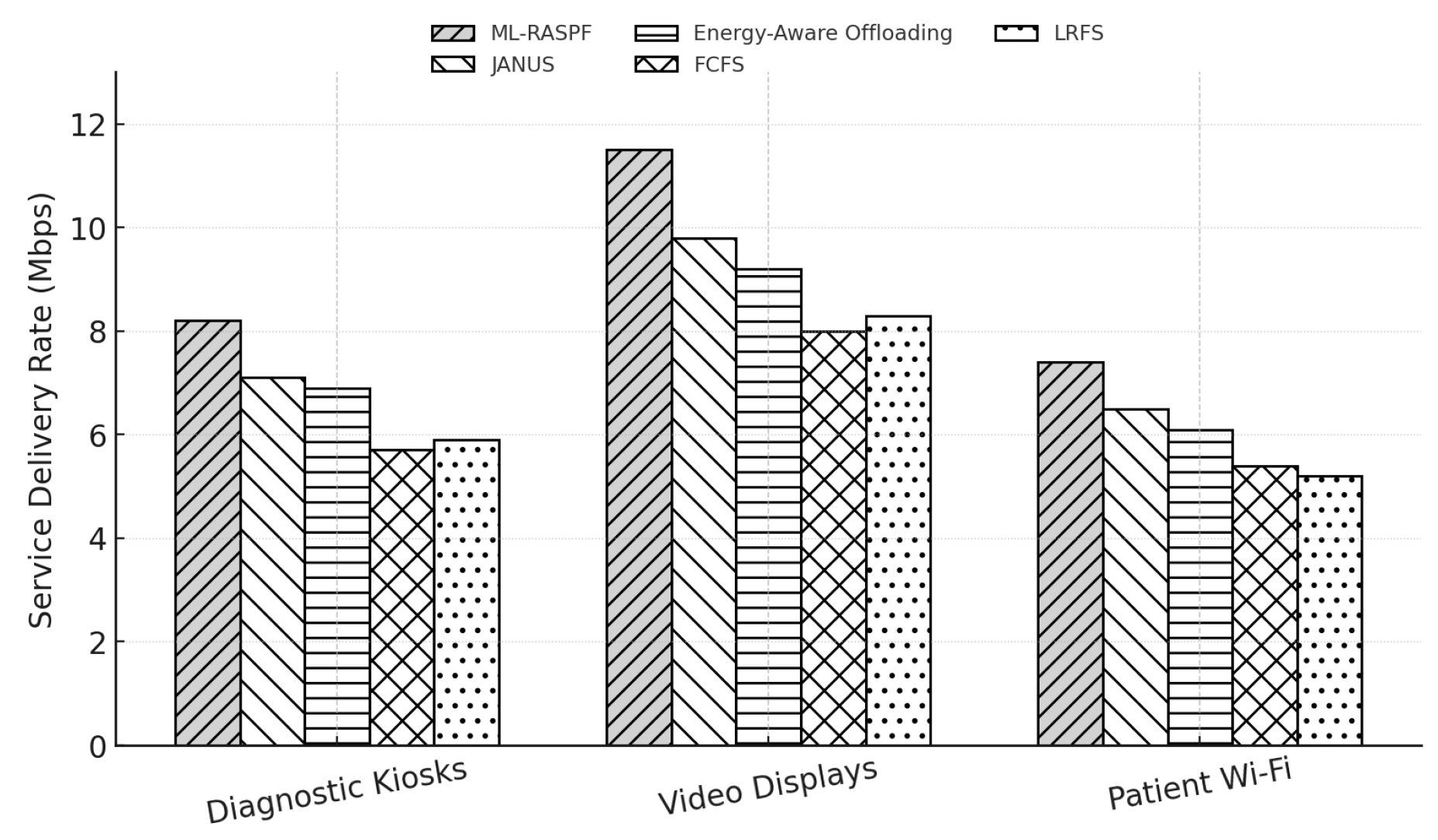

5.3.2. Service Delivery Rate

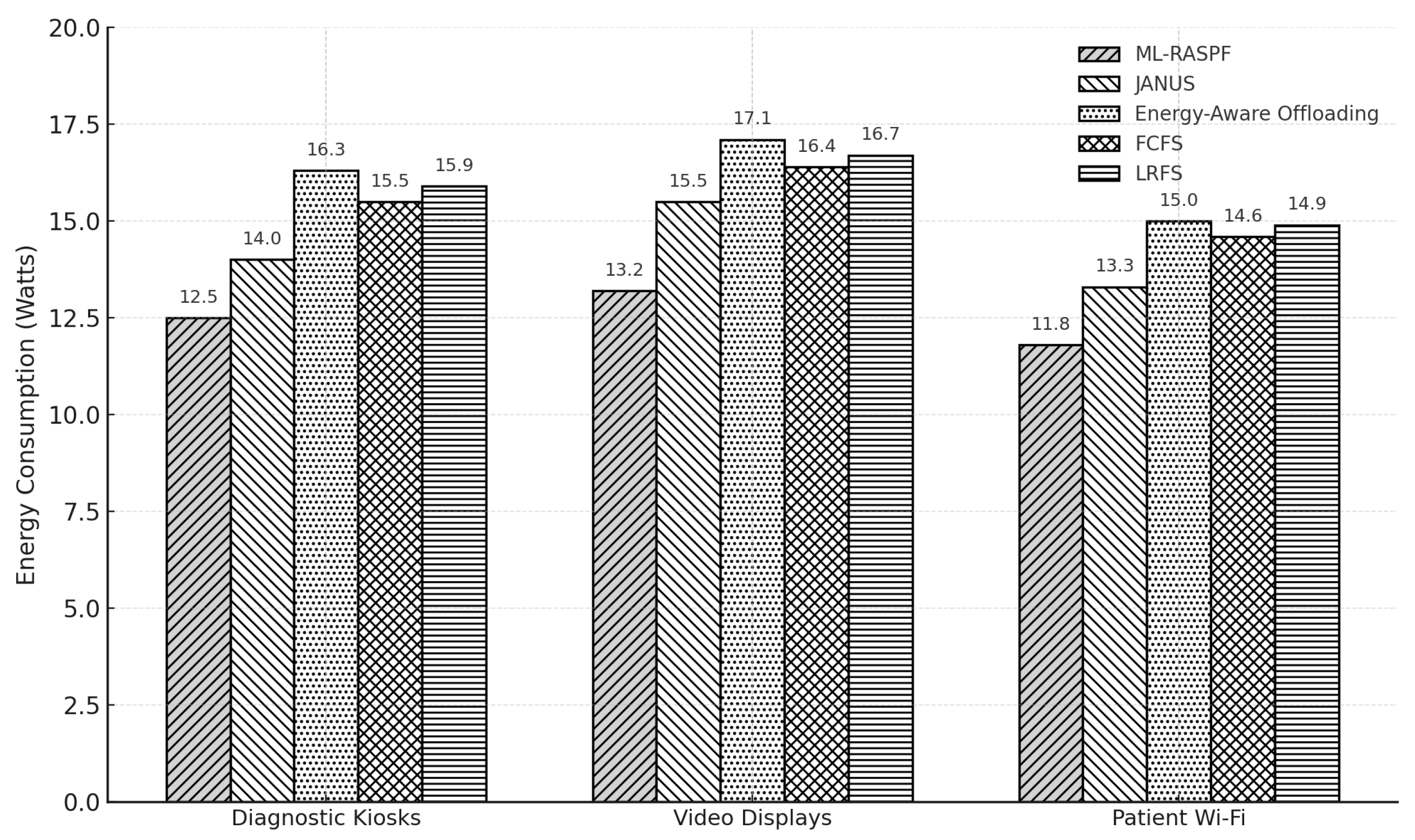

5.3.3. Energy Consumption

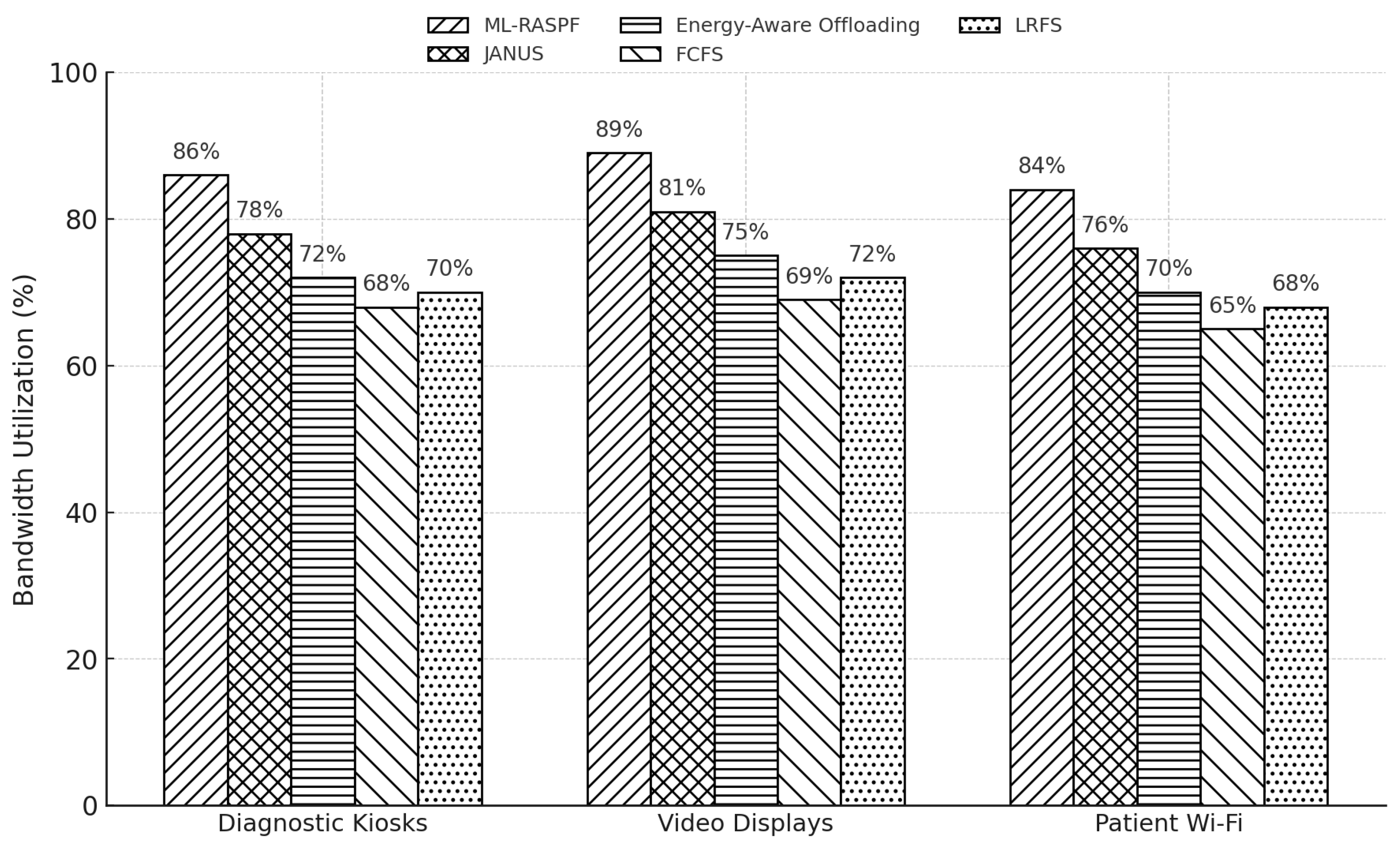

5.3.4. Bandwidth Utilization

5.3.5. Load Balancing Efficiency

5.3.6. Baselines for Comparison

- Energy-Aware Offloading [8]: An energy-aware task offloading framework that leverages dynamic load balancing and resource compatibility evaluation among fog nodes. The method uses lightweight metaheuristics to optimize offloading decisions based on task priority, fog availability, and energy profile, but does not consider ML-driven traffic prediction or RL-based rate control.

- JANUS [7]: A latency-aware traffic scheduling system for IoT data streaming in edge environments. JANUS employs multi-level queue management and global coordination using heuristic stream selection policies. Although effective in managing latency-sensitive streams, it does not perform joint rate-latency optimization or predictive traffic adaptation.

- First-Come-First-Served (FCFS): A baseline queuing strategy that serves incoming requests in arrival order, without considering bandwidth, latency sensitivity, or system state. FCFS represents non-prioritized resource allocation and serves as a lower-bound reference.

- LRFS: A heuristic baseline that prioritizes service requests with the smallest bandwidth requirements. While this may help reduce short-term congestion, it often leads to unfair treatment of larger or high-priority flows, particularly in healthcare workloads.
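The two non-adaptive baselines differ only in the order in which requests are considered. The following minimal Python sketch contrasts them on a single link. It assumes first-fit admission, so a request that does not fit in the remaining capacity is skipped and later ones are still tried. The request sizes are hypothetical, and only the 20 Mb/s capacity comes from the parameter table.

```python
from dataclasses import dataclass


@dataclass
class Request:
    name: str
    arrival: float    # arrival time in seconds
    bandwidth: float  # requested delivery rate in Mb/s


def fcfs_key(req: Request) -> float:
    """First-Come-First-Served: order purely by arrival time."""
    return req.arrival


def lrfs_key(req: Request) -> float:
    """LRFS as described above: smallest bandwidth requirement first."""
    return req.bandwidth


def admit(requests, capacity, order_key):
    """Admit requests in the given order while the link has spare capacity.

    Requests that do not fit are skipped (first-fit), an assumption of this sketch.
    """
    admitted, used = [], 0.0
    for req in sorted(requests, key=order_key):
        if used + req.bandwidth <= capacity:
            admitted.append(req.name)
            used += req.bandwidth
    return admitted


requests = [
    Request("display-1", 0.0, 12.0),  # video-rich informational display
    Request("kiosk-1", 1.0, 4.0),     # interactive diagnostic kiosk
    Request("patient-1", 2.0, 3.0),   # patient device
    Request("display-2", 3.0, 10.0),  # second display, arrives last
]
print(admit(requests, 20.0, fcfs_key))  # ['display-1', 'kiosk-1', 'patient-1']
print(admit(requests, 20.0, lrfs_key))  # ['patient-1', 'kiosk-1', 'display-2']
```

Under FCFS the late-arriving display is rejected regardless of its needs. Under LRFS the largest flow (`display-1`) is squeezed out even though it arrived first. This is the unfairness toward large flows noted above. Neither policy looks at latency sensitivity.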

5.4. Results and Analysis

5.4.1. Latency

5.4.2. Service Delivery Rate

5.4.3. Energy Consumption

5.4.4. Bandwidth Utilization

5.4.5. Load Balancing Efficiency

6. Conclusion

Conflicts of Interest

References

1. IDC. The Growth in Connected IoT Devices Is Expected to Generate 79.4ZB of Data in 2025, According to a New IDC Forecast. Framingham, MA, USA. https://www.idc.com/getdoc. Accessed on 16 April 2025.

2. Sun, M.; Quan, S.; Wang, X.; Huang, Z. Latency-aware scheduling for data-oriented service requests in collaborative IoT-edge-cloud networks. Future Generation Computer Systems 2025, 163, 107538.

3. Banitalebi Dehkordi, A. EDBLSD-IIoT: a comprehensive hybrid architecture for enhanced data security, reduced latency, and optimized energy in industrial IoT networks. The Journal of Supercomputing 2025, 81, 359.

4. Khan, S.; Khan, S. Latency aware graph-based microservice placement in the edge-cloud continuum. Cluster Computing 2025, 28, 88.

5. Pervez, F.; Zhao, L. Efficient Queue-Aware Communication and Computation Optimization for a MEC-Assisted Satellite-Aerial-Terrestrial Network. IEEE Internet of Things Journal 2025.

6. Tripathy, S.S.; Bebortta, S.; Mohammed, M.A.; Nedoma, J.; Martinek, R.; Marhoon, H.A. An SDN-enabled fog computing framework for WBAN applications in the healthcare sector. Internet of Things 2024, 26, 101150.

7. Wen, Z.; Yang, R.; Qian, B.; Xuan, Y.; Lu, L.; Wang, Z.; Peng, H.; Xu, J.; Zomaya, A.Y.; Ranjan, R. JANUS: Latency-aware traffic scheduling for IoT data streaming in edge environments. IEEE Transactions on Services Computing 2023, 16, 4302–4316.

8. Mahapatra, A.; Majhi, S.K.; Mishra, K.; Pradhan, R.; Rao, D.C.; Panda, S.K. An energy-aware task offloading and load balancing for latency-sensitive IoT applications in the Fog-Cloud continuum. IEEE Access 2024.

9. San José, S.G.; Marquès, J.M.; Panadero, J.; Calvet, L. NARA: Network-Aware Resource Allocation mechanism for minimizing quality-of-service impact while dealing with energy consumption in volunteer networks. Future Generation Computer Systems 2025, 164, 107593.

10. Du, A.; Jia, J.; Chen, J.; Wang, X.; Huang, M. Online Queue-Aware Service Migration and Resource Allocation in Mobile Edge Computing. IEEE Transactions on Vehicular Technology 2025.

11. Amzil, A.; Abid, M.; Hanini, M.; Zaaloul, A.; El Kafhali, S. Stochastic analysis of fog computing and machine learning for scalable low-latency healthcare monitoring. Cluster Computing 2024, 27, 6097–6117.

12. Centofanti, C.; Tiberti, W.; Marotta, A.; Graziosi, F.; Cassioli, D. Taming latency at the edge: A user-aware service placement approach. Computer Networks 2024, 247, 110444.

13. Li, Y.; Zhang, Q.; Yao, H.; Gao, R.; Xin, X.; Guizani, M. Next-Gen Service Function Chain Deployment: Combining Multi-Objective Optimization with AI Large Language Models. IEEE Network 2025.

14. Ahmed, W.; Iqbal, W.; Hassan, A.; Ahmad, A.; Ullah, F.; Srivastava, G. Elevating e-health excellence with IOTA distributed ledger technology: Sustaining data integrity in next-gen fog-driven systems. Future Generation Computer Systems 2025, 107755.

15. Fei, Y.; Fang, H.; Yan, Z.; Qi, L.; Bilal, M.; Li, Y.; Xu, X.; Zhou, X. Privacy-Aware Edge Computation Offloading With Federated Learning in Healthcare Consumer Electronics System. IEEE Transactions on Consumer Electronics 2025.

16. Ali, A.; Arafa, A. Delay sensitive hierarchical federated learning with stochastic local updates. IEEE Transactions on Cognitive Communications and Networking 2025.

17. Liu, Z.; Xu, X. Latency-aware service migration with decision theory for Internet of Vehicles in mobile edge computing. Wireless Networks 2024, 30, 4261–4273.

18. Ji, X.; Gong, F.; Wang, N.; Xu, J.; Yan, X. Cloud-Edge Collaborative Service Architecture With Large-Tiny Models Based on Deep Reinforcement Learning. IEEE Transactions on Cloud Computing 2025.

19. Najim, A.H.; Al-sharhanee, K.A.M.; Al-Joboury, I.M.; Kanellopoulos, D.; Sharma, V.K.; Hassan, M.Y.; Issa, W.; Abbas, F.H.; Abbas, A.H. An IoT healthcare system with deep learning functionality for patient monitoring. International Journal of Communication Systems 2025, 38, e6020.

20. Shang, L.; Zhang, Y.; Deng, Y.; Wang, D. MultiTec: A Data-Driven Multimodal Short Video Detection Framework for Healthcare Misinformation on TikTok. IEEE Transactions on Big Data 2025.

21. EdgeCloudSim. https://github.com/CagataySonmez/EdgeCloudSim. Accessed on 16 April 2025.

| Parameter | Value / Description |
|---|---|
| Gradient-based step size | 0.01 |
| Number of service consumers | 9 |
| Number of service types | 3 (diagnostics, info, Wi-Fi) |
| Number of communication links | 13 |
| Link capacity | 20 Mb/s |
| Link capacity – | 16, 15, 14 Mb/s |
| Link capacity – | 13 Mb/s |
| Edge forwarders (mist nodes) | 3 |
| Edge cloudlet nodes | 1 per forwarder |
| Central cloud nodes | 1 |
| Mist node energy consumption | 3.5 W (static baseline) |
| Edge node energy consumption | 3.7 W (static baseline) |
| Cloud energy consumption | 9.7 kW (data center model) |
| CPU | Intel Core i7 E3-1225 |
| Processor frequency | 3.3 GHz |
| RAM | 16 GB |
| Operating system | Windows 10 64-bit |
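The energy rows of the parameter table already determine the static power of the simulated topology. The following short Python sketch makes that arithmetic explicit. It assumes three edge cloudlets (one per forwarder), counts only the static baselines, and ignores load-dependent consumption. The one-hour duration in the usage line is hypothetical, since the simulation length is not stated here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    nodes: int
    static_power_w: float  # per-node static baseline from the parameter table


TIERS = (
    Tier("mist", 3, 3.5),       # 3 edge forwarders (mist nodes) at 3.5 W
    Tier("edge", 3, 3.7),       # 1 edge cloudlet per forwarder at 3.7 W
    Tier("cloud", 1, 9_700.0),  # 9.7 kW data-center model
)


def static_power_w(tiers):
    """Total static power draw in watts."""
    return sum(t.nodes * t.static_power_w for t in tiers)


def static_energy_kwh(tiers, hours):
    """Static energy over a run of the given length, in kWh."""
    return static_power_w(tiers) * hours / 1000.0


if __name__ == "__main__":
    total = static_power_w(TIERS)
    print(f"static power: {total:.1f} W")
    print(f"cloud share: {TIERS[2].static_power_w / total:.2%}")
    print(f"energy over 1 h: {static_energy_kwh(TIERS, 1.0):.4f} kWh")
```

The total is about 9721.6 W, and the single cloud node accounts for roughly 99.8% of it. Under this static-only view, any saving in the energy metric therefore comes mostly from how much work is kept off the cloud tier.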

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).