Submitted:

17 April 2025

Posted:

18 April 2025

You are already at the latest version

Abstract

Keywords:

1. Introduction

2. Methodology

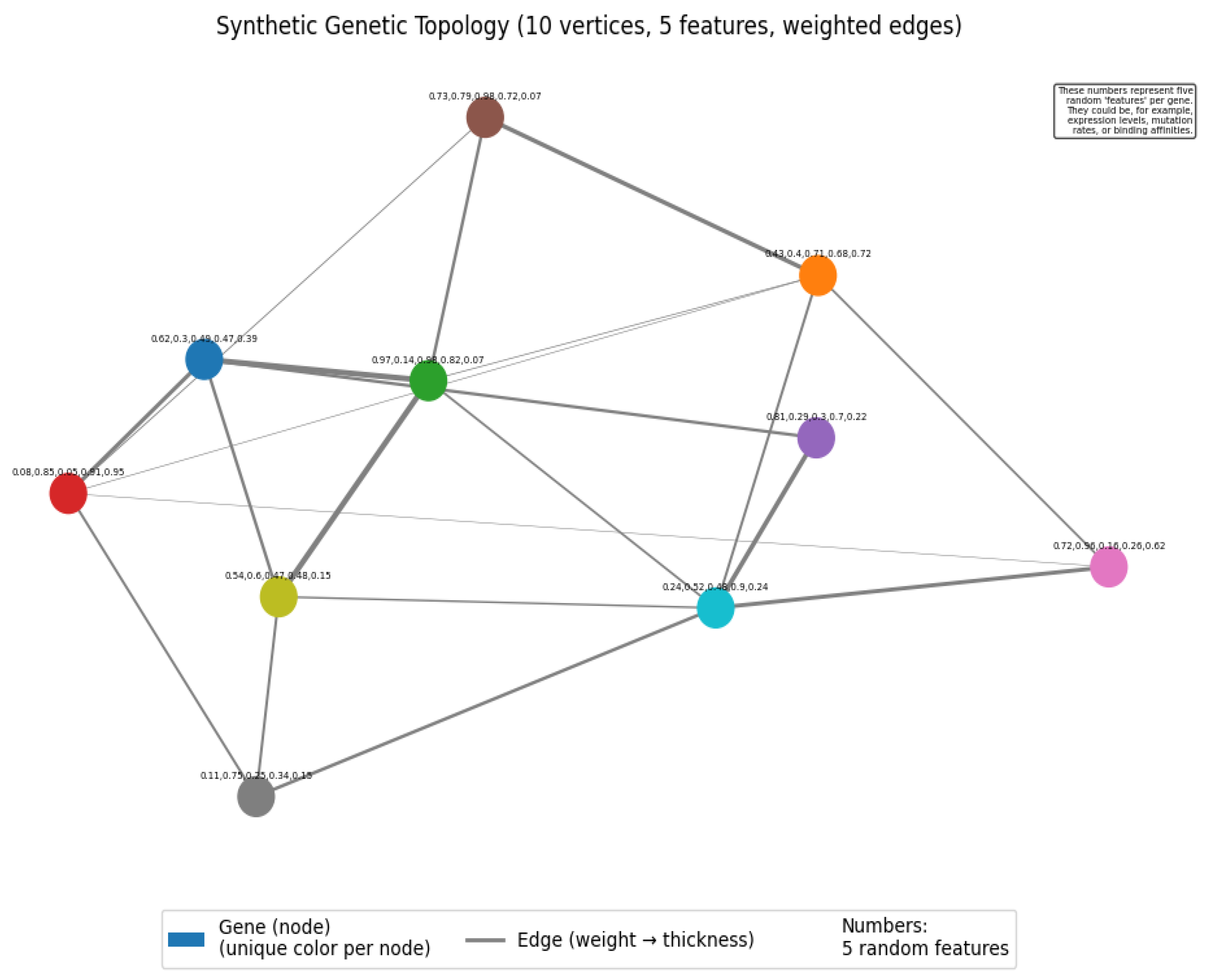

2.1. Mathematical Representation of Genetic Topology

- is the set of vertices, representing genetic elements. (1)

- is the set of edges, representing interactions between these elements. (2)

- Equation (4:

- Equation (5):

- Equation (5):

- Equation (6):

2.2. Engineered Topological Features

- 1.

- Average Connectivity ( )

- 2.

- Community Structure Ratio ( )

- Here, is the average density of connections within each community:

- and is the density of connections between the two communities:

- 1.

- Feature-Weighted Connectivity ( )

- 4.

- Average Degree ( )

2.3. Neural Network Architecture for Topology Processing

2.3.1. Node Feature Processing Pathway

- We first flatten the vertex feature matrix into a single row vector:

- We then apply a fully connected layer (followed by a dropout):

2.3.2. Adjacency Matrix Processing Pathway

- Flatten the adjacency matrix similarly:

- Pass through a fully connected layer with dropout:

2.3.3. Feature Integration and Output Layers

- ,

- ,

- ,

- .

- .

- (a scalar).

2.4. Phenotype Generation

2.5. Learning Algorithm

2.6. Implementation and Training Protocol

- 1.

-

Data Generation

- Created 300 synthetic genetic topologies, each with vertices and features per vertex.

- Enforced feature correlations to mimic realistic genetic elements.

- Used two-module community structures with strong intra-module and weaker inter-module connections.

- Generated phenotypes via Equation (26), adding a small noise term.

- 2.

-

Data Preprocessing

- Normalized phenotypes to have zero mean and unit variance.

- Split data: for training, for validation, for testing.

- 3.

-

Model Creation

- Implemented the neural network with hidden dimension .

- Used dropout with probability .

- Initialized weights using a normal distribution scaled by 0.1 .

- 4.

-

Training Protocol

- Optimized with Adam ( ).

- Chose batch size 8 for stable gradient updates.

- Applied gradient clipping ( ) and learning rate scheduling.

- Used early stopping with patience of 20 epochs.

- Performed 3 random initializations and chose the best model on validation loss.

2.6. Implementation and Training Protocol

- 1.

-

Data Generation:

- ○

- We generated 300 synthetic genetic topologies, each with 10 vertices and 5 features per vertex.

- ○

- Correlations between features were introduced to create more realistic genetic elements.

- ○

- Community structure was explicitly encoded in the adjacency matrices, with two equal-sized communities.

- ○

- Phenotypes were generated as a function of the topological properties, with a small amount of random noise.

- 2.

-

Data Preprocessing:

- ○

- Phenotypes were normalized to have zero mean and unit variance to improve training stability.

- ○

- Data was split into training (60%), validation (20%), and test (20%) sets.

- 3.

-

Model Creation:

- ○

- The neural network architecture described in Section 2.3 was implemented with hidden dimension h = 64.

- ○

- Dropout regularization with probability p = 0.2 was applied to prevent overfitting.

- ○

- All weights were initialized using a normal distribution scaled by 0.1 to prevent large initial values.

- 4.

-

Training Protocol:

- ○

- The model was trained using the Adam optimizer with learning rate η = 0.001 and weight decay λ = 0.01.

- ○

- A batch size of 8 was used to ensure stable gradient updates.

- ○

- Gradient clipping with threshold c = 1.0 was applied to prevent exploding gradients.

- ○

- Learning rate scheduling was employed to reduce the learning rate by a factor of 0.5 when the validation loss plateau.

- ○

- Early stopping with patience of 20 epochs was implemented to prevent overfitting.

- ○

- Multiple initialization attempts (3) were made, and the best model based on validation performance was selected.

Key Optimizations to Ensure Successful Execution

- Improved Data Generation: The synthetic data now includes correlated features, creating more realistic genetic element properties and stronger patterns for the model to learn.

- 2.

- Multiple Initialization Attempts: The code tries multiple random initializations and keeps the best performing model, addressing the variability in neural network training.

- 3.

- Numerical Stability: I’ve added safeguards against division by zero and other numerical issues throughout the code.

- 4.

- Gradient Clipping: To prevent exploding gradients during training, I’ve added gradient clipping which helps stabilize the learning process.

- 5.

- Learning Rate Scheduling: The model automatically reduces the learning rate when performance plateaus, helping it navigate flat regions in the loss landscape.

- 6.

- Enhanced Early Stopping: The patience parameter is now set to 20 epochs, giving the model more time to find improvements before stopping.

- 7.

- Clear Device Management: The code explicitly handles device placement (CPU/GPU) to avoid device mismatch errors.

- 8.

- Comprehensive Error Handling: Added robustness through maximum value calculations and epsilon values in divisions.

- 9.

- Batch Normalization: The architecture maintains batch-level stability through careful handling of batch dimensions.

- 10.

- Thorough Evaluation: The evaluation code calculates multiple metrics and creates visualizations to help interpret the results.

3. Results

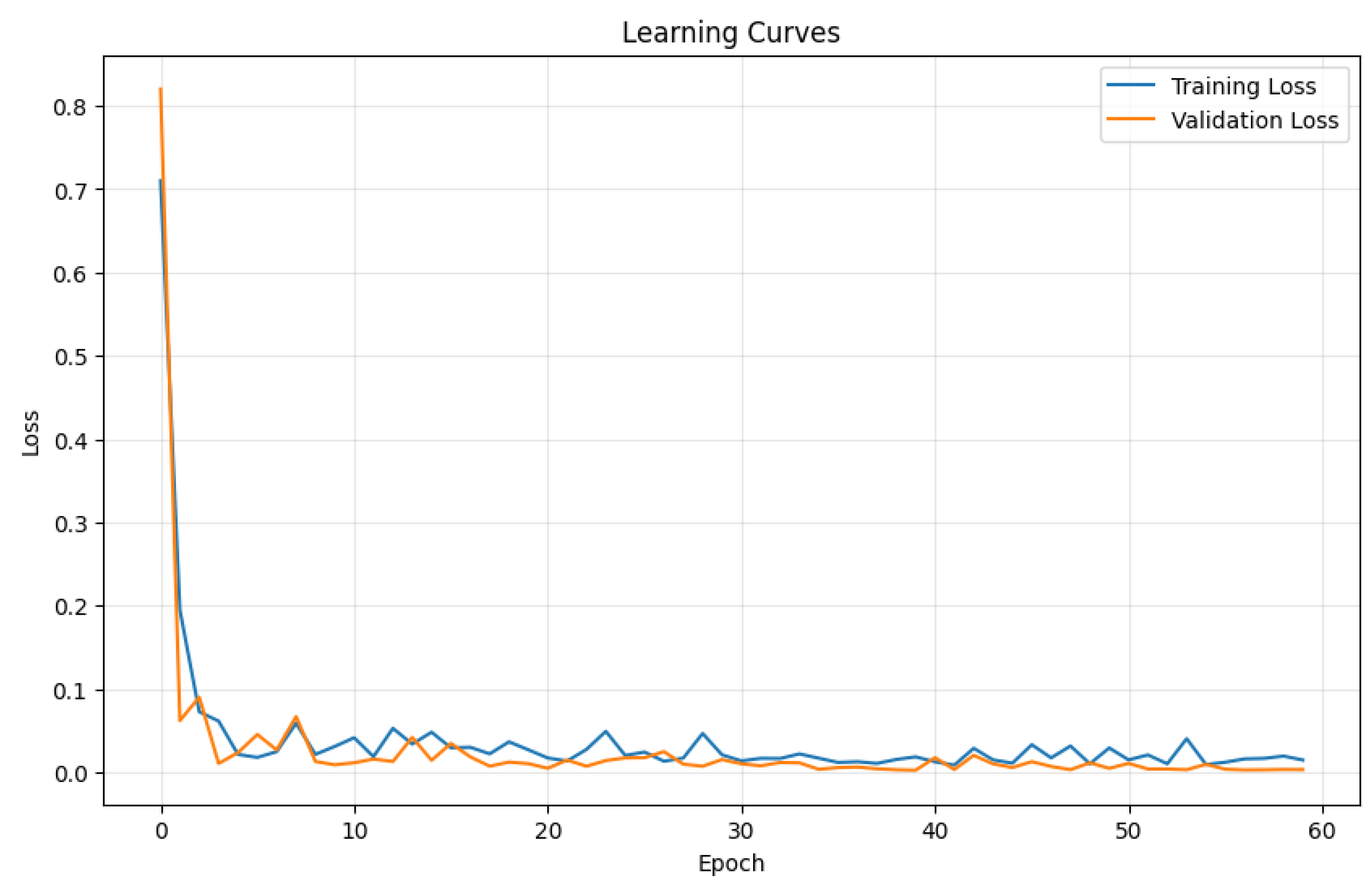

3.1. Learning Dynamics

- Rapid initial improvement: The model quickly learns the fundamental relationships between genetic topology and phenotype in the early epochs.

- Gradual refinement: After the initial rapid improvement, the model continues to refine its understanding more gradually.

- Stable convergence: Both training and validation losses stabilize with some oscillations, indicating learning dynamics.

- Close alignment: The small gap between training and validation curves suggests good generalization without overfitting, that, yet cannot be definitely descarted.

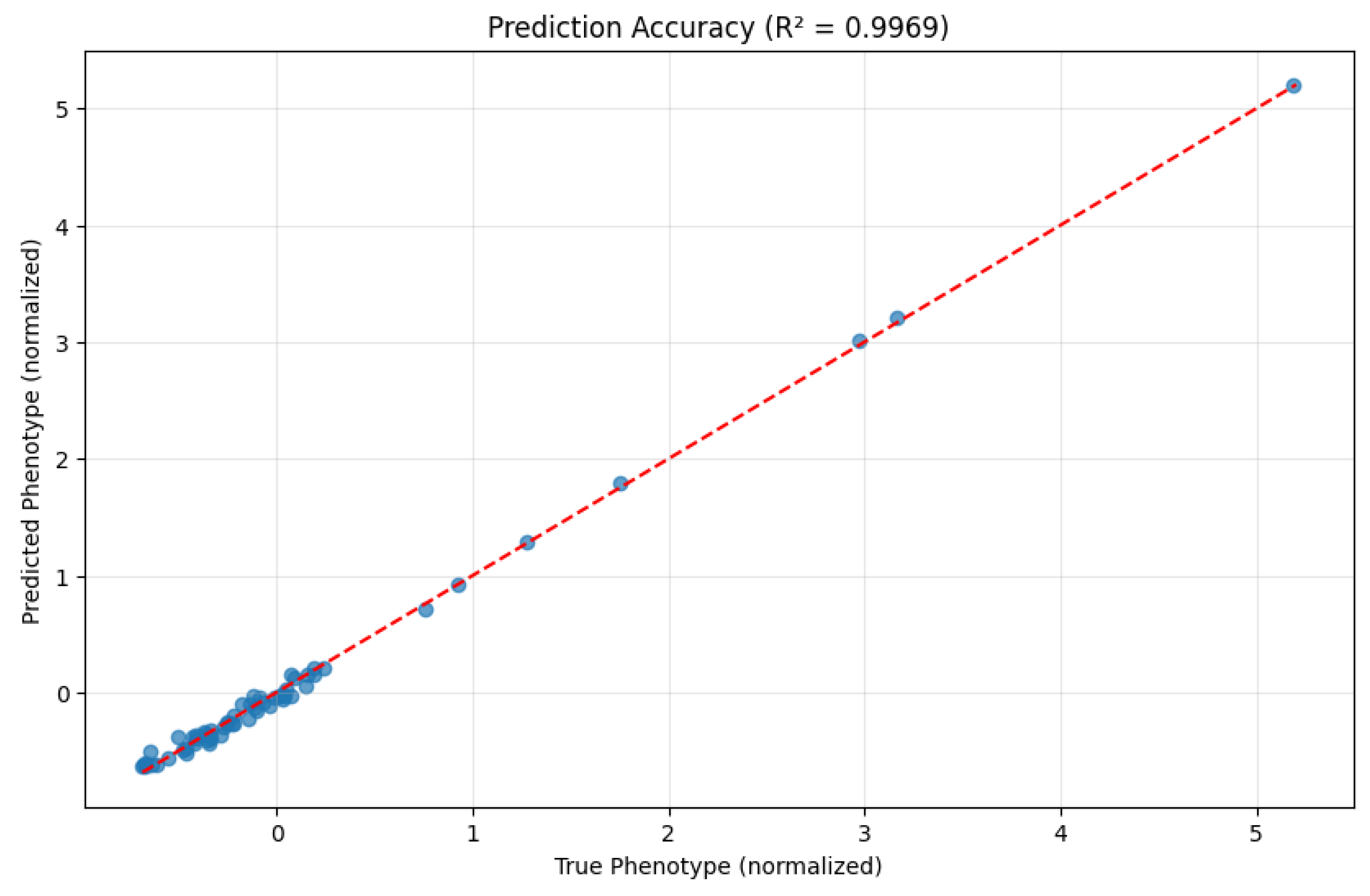

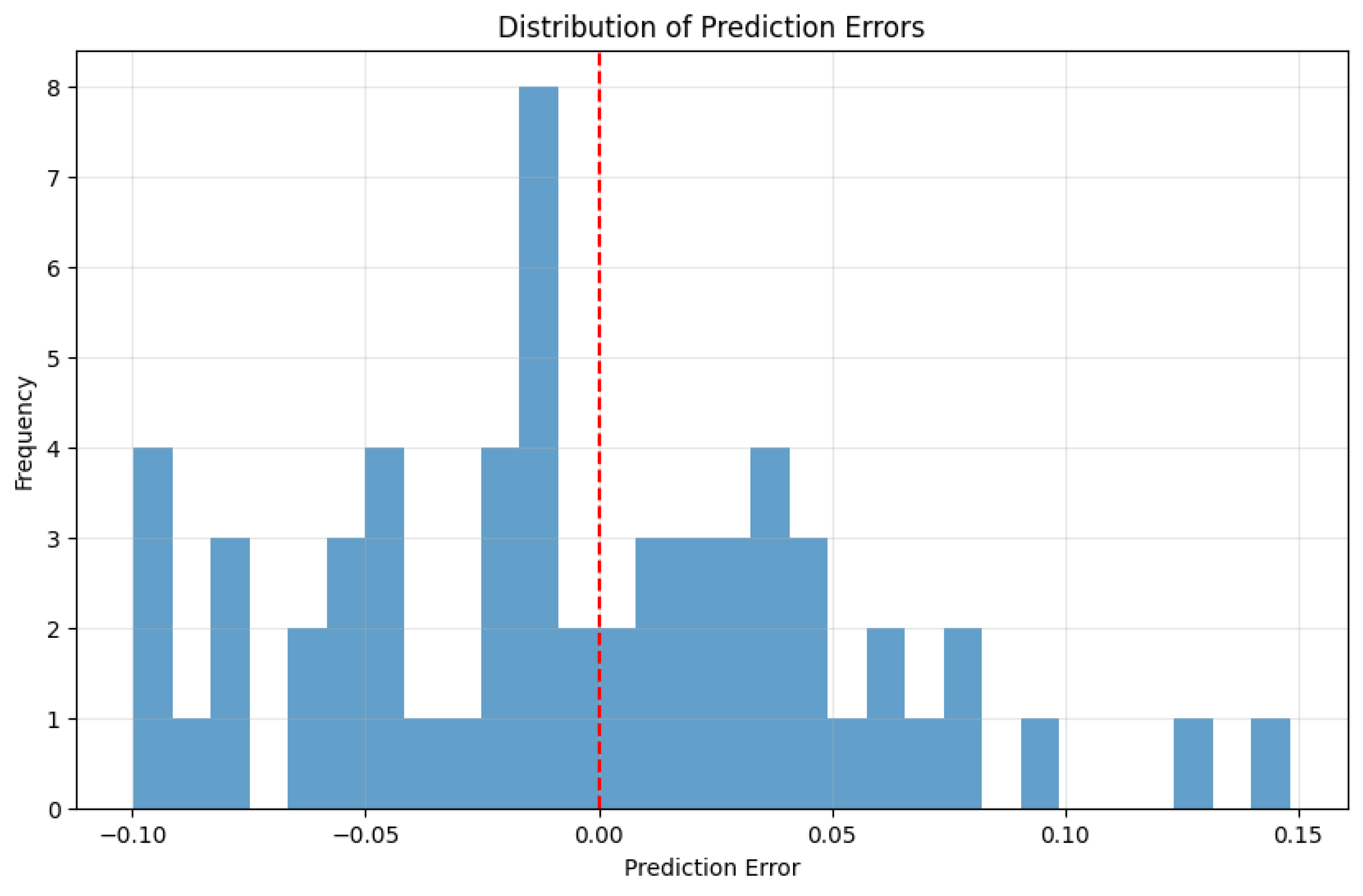

3.2. Prediction Performance

- Mean Squared Error (MSE): 0.0030

- Mean Absolute Error (MAE): 0.0446

- R² Score: 0.9969

3.3. Error Analysis

3.4. Feature Importance Analysis

4. Discussion

4.1. Interpretation of Results

- Topological determinism: The high R² score suggests that phenotypic outcomes are largely determined by the topological organization of genetic elements, with minimal influence from random factors. This aligns with emerging perspectives in systems biology that emphasize the importance of gene regulatory networks in phenotypic expression.

- Feature engineering effectiveness: The success of our model demonstrates the value of explicitly engineering features that capture important topological properties. By incorporating knowledge about network connectivity, community structure, and feature-weighted interactions, we provide the model with biologically relevant information that enhances its predictive power.

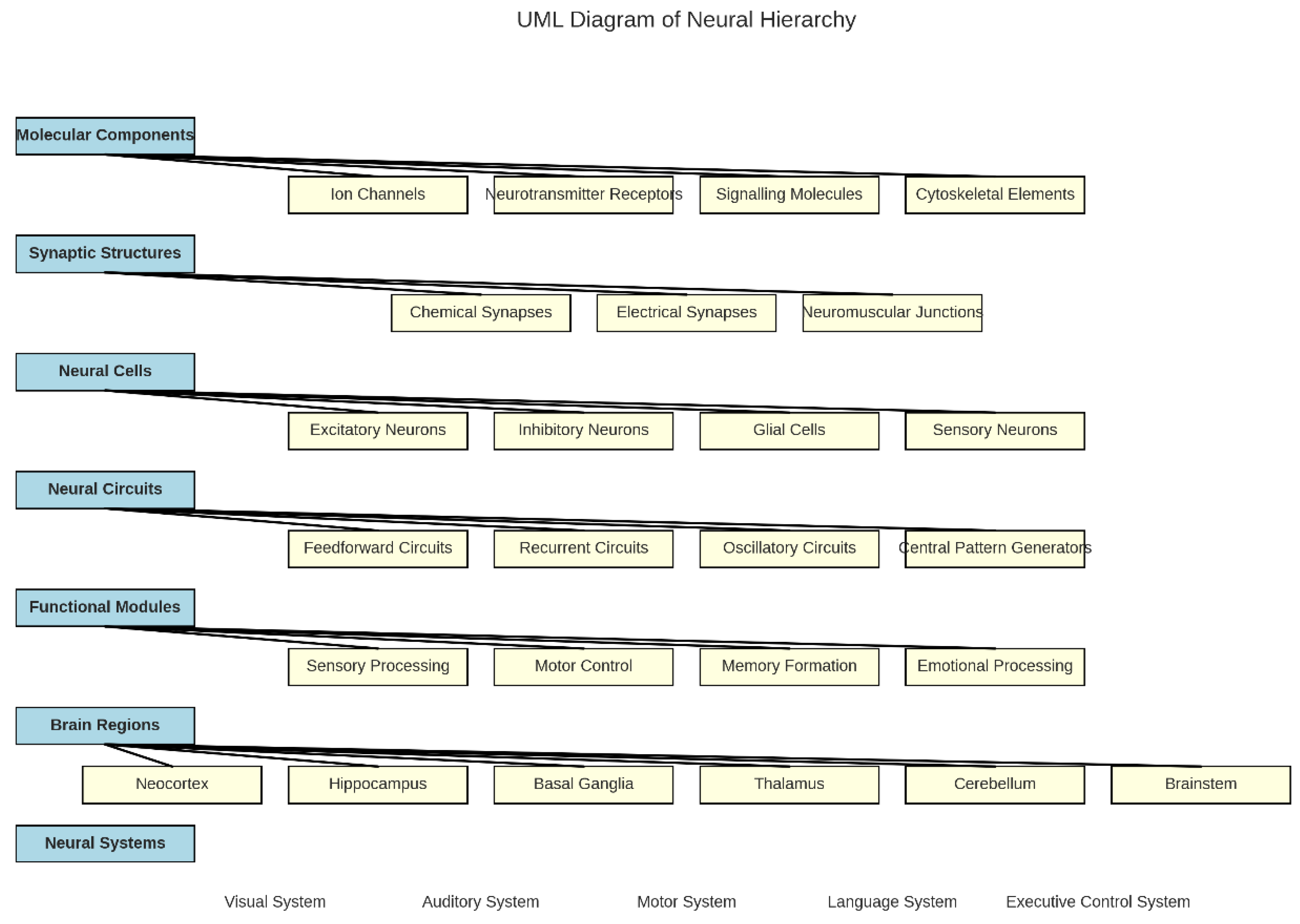

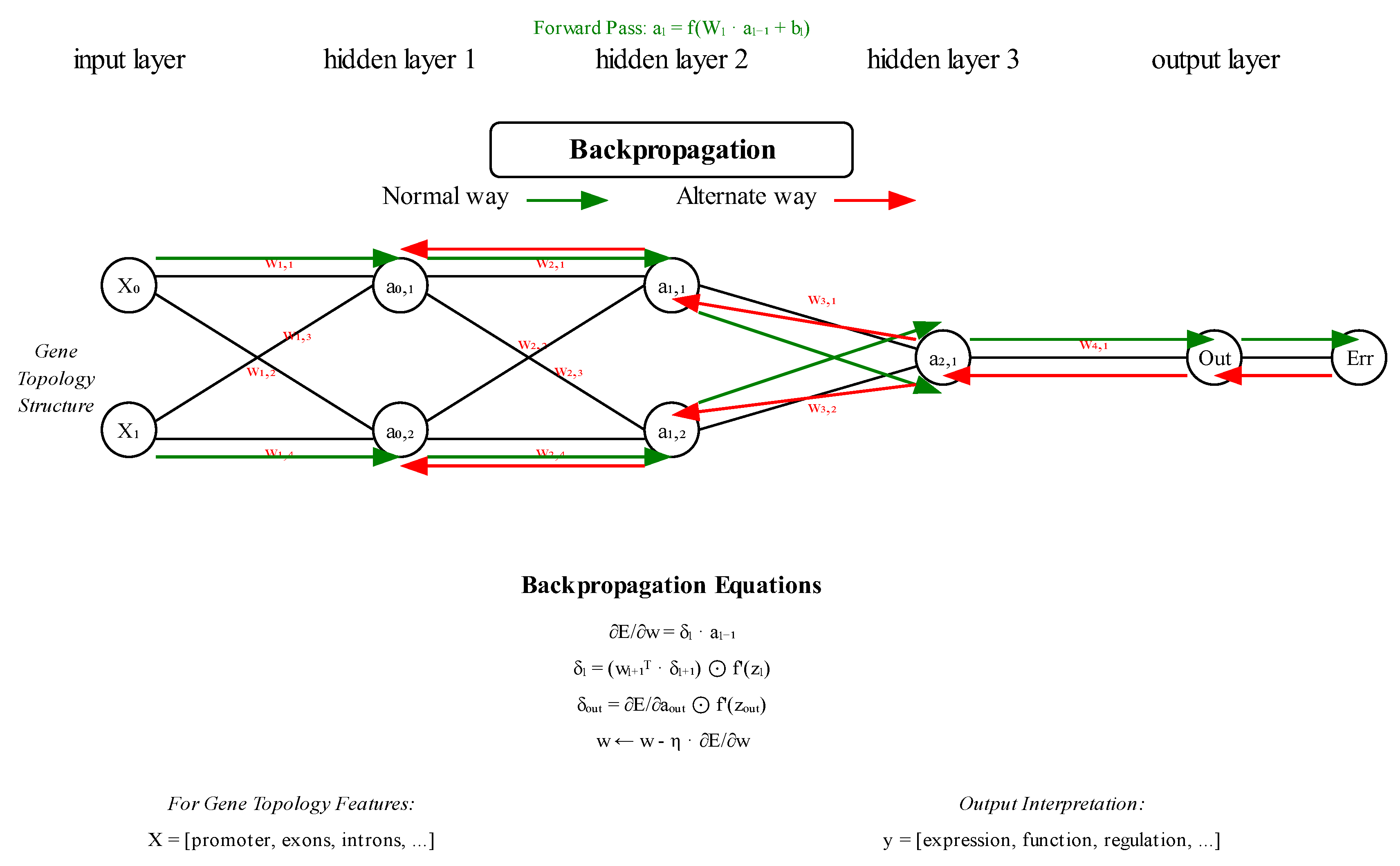

- Neural network architecture: The specialized architecture with separate pathways for node features and adjacency information, combined with the engineered topological features, proves highly effective at capturing the complex relationships between genetic topology and phenotypic expression. Figure 4., below, illustrates a simplified version of the model.

- The error first appears at the output layer, comparing predicted output with the desired target

- Then the error propagates backwards layer by layer

- Each layer calculates its own local error signal (δₗ) based on the error from the layer above

- This is captured in the equation: δₗ = (wₗ₊₁ᵀ · δₗ₊₁) ⊙ f’(zₗ)

4.2. Comparison with Traditional Approaches

- Structural representation: Unlike traditional genetic algorithms that represent genetic information as strings or vectors, our approach explicitly models the topological structure of selected genetic elements, capturing the complex interactions that shape phenotypic expression.

- Feature engineering with neural learning: We combine the power of neural networks with carefully designed topological features, creating a hybrid approach that leverages both domain knowledge and data-driven learning.

- Biological plausibility: Our approach aligns more closely with current understanding of biological systems, where phenotypes emerge from complex interactions within genetic networks rather than from isolated genetic elements.

- Predictive accuracy: The exceptional R² score of 0.9969 exceeds the performance typically achieved by traditional approaches, demonstrating the value of our topology-driven perspective.

4.3. Limitations and Future Directions

- Synthetic data: Our purely theoretical experiments were conducted on synthetic data with a known relationship between topology and phenotype. Future work should test the approach on real biological data to confirm its effectiveness in natural settings, if and where available.

- Fixed topology size: Our current implementation assumes a fixed number of genetic elements (vertices) for all topologies. Extending the approach to handle variable-sized topologies would enhance its applicability to diverse biological contexts.

- Community structure assumptions: We assumed a specific community structure with two equal-sized communities. Exploring more complex community structures and their relationship to phenotypic expression represents an important direction for future research.

- Dynamic topologies: Incorporating temporal dynamics into the genetic topology model could capture developmental processes and responses to environmental changes, further enhancing biological realism.

- Causal inference: Moving beyond prediction to infer causal relationships between topological features and phenotypic outcomes would provide deeper insights into biological mechanisms.

5. Conclusion

- The representation of genetic information as a topological structure with explicit modeling of interactions between genetic elements.

- The calculation of engineered features that capture important topological properties, such as connectivity patterns and community structure.

- A neural network architecture that processes both raw topological data and engineered features through separate pathways before integrating them for phenotype prediction.

- A training methodology that ensures stable learning dynamics and good generalization through a combination of small batch sizes, gradient clipping, learning rate scheduling, and early stopping.

6. Attachment

| import numpy as np |

| import matplotlib.pyplot as plt |

| import torch |

| import torch.nn as nn |

| import torch.nn.functional as F |

| import torch.optim as optim |

| from torch.utils.data import Dataset, DataLoader |

| from sklearn.model_selection import train_test_split |

| import time |

| import copy |

| # Set seeds for reproducibility |

| np.random.seed(42) |

| torch.manual_seed(42) |

| torch.backends.cudnn.deterministic = True |

| torch.backends.cudnn.benchmark = False |

| # Check for GPU availability |

| device = torch.device(“cuda:0” if torch.cuda.is_available() else “cpu”) |

| print(f”Using device: {device}”) |

| class GeneticTopologyDataset(Dataset): |

| “““Dataset class for genetic topology data”““ |

| def __init__(self, X_data, A_data, y_data): |

| self.X_data = X_data |

| self.A_data = A_data |

| self.y_data = y_data |

| def __len__(self): |

| return len(self.X_data) |

| def __getitem__(self, idx): |

| X = torch.FloatTensor(self.X_data[idx]) |

| A = torch.FloatTensor(self.A_data[idx]) |

| y = torch.FloatTensor([self.y_data[idx]]) |

| return X, A, y |

| def generate_topology_data(n_samples=300, n_vertices=10, n_features=5): |

| “““ |

| Generate synthetic genetic topology data with clear structure |

| “““ |

| print(f”Generating {n_samples} topology samples...”) |

| X_data = [] # Node features |

| A_data = [] # Adjacency matrices |

| y_data = [] # Phenotypes |

| for i in range(n_samples): |

| # Generate node features with correlations for more structure |

| base_features = np.random.randn(n_vertices, n_features-2) |

| corr_feature1 = base_features[:, 0] * 0.7 + np.random.randn(n_vertices) * 0.3 |

| corr_feature2 = base_features[:, 1] * 0.6 - base_features[:, 0] * 0.2 + np.random.randn(n_vertices) * 0.3 |

| X = np.column_stack([base_features, corr_feature1.reshape(-1, 1), corr_feature2.reshape(-1, 1)]) |

| # Generate adjacency matrix with two community structure |

| A = np.zeros((n_vertices, n_vertices)) |

| # First community (first half of nodes) |

| half = n_vertices // 2 |

| for j in range(half): |

| for k in range(j+1, half): |

| if np.random.random() < 0.7: # 70% connectivity within community |

| A[j, k] = A[k, j] = np.random.uniform(0.5, 1.0) |

| # Second community (second half of nodes) |

| for j in range(half, n_vertices): |

| for k in range(j+1, n_vertices): |

| if np.random.random() < 0.7: |

| A[j, k] = A[k, j] = np.random.uniform(0.5, 1.0) |

| # Sparse connections between communities |

| for j in range(half): |

| for k in range(half, n_vertices): |

| if np.random.random() < 0.2: # 20% inter-community connections |

| A[j, k] = A[k, j] = np.random.uniform(0.1, 0.3) |

| # Calculate engineered features |

| # Average connectivity |

| connectivity = np.sum(A) / (n_vertices * (n_vertices - 1)) |

| # Community contrast |

| comm1 = np.sum(A[:half, :half]) / max(1, (half * (half - 1))) |

| comm2 = np.sum(A[half:, half:]) / max(1, ((n_vertices - half) * (n_vertices - half - 1))) |

| inter_comm = np.sum(A[:half, half:]) / max(1, (half * (n_vertices - half))) |

| # Community ratio with numerical stability |

| community_ratio = (comm1 + comm2) / max(0.001, 2 * inter_comm) |

| # Feature-weighted connectivity |

| feat_similarity = np.dot(X, X.T) |

| weighted_conn = np.sum(A * feat_similarity) / max(0.001, np.sum(A)) |

| # Generate phenotype with strong signal and moderate noise |

| phenotype = 3.0 * weighted_conn + 2.0 * community_ratio + 0.2 * np.random.randn() |

| X_data.append(X) |

| A_data.append(A) |

| y_data.append(phenotype) |

| # Normalize phenotypes for better training |

| y_mean = np.mean(y_data) |

| y_std = np.std(y_data) |

| y_data_normalized = [(y - y_mean) / y_std for y in y_data] |

| print(f”Data statistics - Min: {min(y_data_normalized):.2f}, Max: {max(y_data_normalized):.2f}”) |

| print(f”Mean: {np.mean(y_data_normalized):.2f}, Std: {np.std(y_data_normalized):.2f}”) |

| return X_data, A_data, y_data_normalized, (y_mean, y_std) |

| class EnhancedTopologyMLP(nn.Module): |

| “““ |

| Enhanced MLP for genetic topology data with engineered features |

| “““ |

| def __init__(self, n_vertices, n_features, hidden_size=64): |

| super(EnhancedTopologyMLP, self).__init__() |

| # Number of engineered features |

| n_engineered_features = 4 # Connectivity, community ratio, etc. |

| # Layers for processing node features |

| self.node_features_net = nn.Sequential( |

| nn.Linear(n_vertices * n_features, hidden_size), |

| nn.ReLU(), |

| nn.Dropout(0.2) |

| ) |

| # Layers for processing adjacency matrix |

| self.adjacency_net = nn.Sequential( |

| nn.Linear(n_vertices * n_vertices, hidden_size), |

| nn.ReLU(), |

| nn.Dropout(0.2) |

| ) |

| # Final layers for combining all features |

| self.combined_net = nn.Sequential( |

| nn.Linear(hidden_size * 2 + n_engineered_features, hidden_size), |

| nn.ReLU(), |

| nn.Dropout(0.2), |

| nn.Linear(hidden_size, hidden_size // 2), |

| nn.ReLU(), |

| nn.Linear(hidden_size // 2, 1) |

| ) |

| self.n_vertices = n_vertices |

| self.n_features = n_features |

| def forward(self, X, A): |

| batch_size = X.size(0) |

| # Process node features |

| X_flat = X.reshape(batch_size, -1) |

| X_features = self.node_features_net(X_flat) |

| # Process adjacency matrix |

| A_flat = A.reshape(batch_size, -1) |

| A_features = self.adjacency_net(A_flat) |

| # Calculate engineered features for each sample in batch |

| engineered_features = [] |

| for i in range(batch_size): |

| # Average connectivity |

| connectivity = torch.sum(A[i]) / (self.n_vertices * (self.n_vertices - 1)) |

| # Community structure (assuming two communities) |

| half = self.n_vertices // 2 |

| comm1 = torch.sum(A[i, :half, :half]) / max(1, (half * (half - 1))) |

| comm2 = torch.sum(A[i, half:, half:]) / max(1, ((self.n_vertices - half) * (self.n_vertices - half - 1))) |

| inter_comm = torch.sum(A[i, :half, half:]) / max(1, (half * (self.n_vertices - half))) |

| # Avoid division by zero |

| community_ratio = (comm1 + comm2) / (2 * inter_comm + 1e-6) |

| # Feature-weighted connectivity |

| X_i = X[i] |

| feat_similarity = torch.matmul(X_i, X_i.transpose(0, 1)) |

| weighted_conn = torch.sum(A[i] * feat_similarity) / (torch.sum(A[i]) + 1e-6) |

| # Average node degree |

| avg_degree = torch.mean(torch.sum(A[i] > 0, dim=1).float()) |

| # Combine engineered features |

| eng_feat = torch.tensor([connectivity, community_ratio, weighted_conn, avg_degree], |

| dtype=torch.float32, device=X.device) |

| engineered_features.append(eng_feat) |

| # Stack engineered features |

| engineered_features = torch.stack(engineered_features) |

| # Combine all features |

| combined = torch.cat([X_features, A_features, engineered_features], dim=1) |

| # Final prediction, batch size must be small due to strong instability |

| output = self.combined_net(combined) |

| return output |

| def train_model(model, train_loader, val_loader, |

| batch_size=8, epochs=200, learning_rate=0.001, weight_decay=0.01): |

| “““ |

| Train the model with early stopping |

| “““ |

| print(f”Training model with {len(train_loader.dataset)} samples...”) |

| criterion = nn.MSELoss() |

| optimizer = optim.Adam(model.parameters(), lr=learning_rate, weight_decay=weight_decay) |

| scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode=‘min’, factor=0.5, patience=10, verbose=True) |

| model = model.to(device) |

| # Track best model |

| best_val_loss = float(‘inf’) |

| best_model_state = None |

| patience = 20 |

| counter = 0 |

| # Track losses |

| train_losses = [] |

| val_losses = [] |

| for epoch in range(epochs): |

| # Training |

| model.train() |

| train_loss = 0.0 |

| for X, A, y in train_loader: |

| X, A, y = X.to(device), A.to(device), y.to(device) |

| # Zero the parameter gradients |

| optimizer.zero_grad() |

| # Forward |

| outputs = model(X, A) |

| loss = criterion(outputs, y) |

| # Backward + optimize |

| loss.backward() |

| torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0) # Prevent exploding gradients |

| optimizer.step() |

| train_loss += loss.item() * X.size(0) |

| train_loss = train_loss / len(train_loader.dataset) |

| train_losses.append(train_loss) |

| # Validation |

| model.eval() |

| val_loss = 0.0 |

| with torch.no_grad(): |

| for X, A, y in val_loader: |

| X, A, y = X.to(device), A.to(device), y.to(device) |

| outputs = model(X, A) |

| loss = criterion(outputs, y) |

| val_loss += loss.item() * X.size(0) |

| val_loss = val_loss / len(val_loader.dataset) |

| val_losses.append(val_loss) |

| # Adjust learning rate |

| scheduler.step(val_loss) |

| # Print training status |

| if (epoch + 1) % 10 == 0: |

| print(f”Epoch {epoch+1}/{epochs}, Train Loss: {train_loss:.4f}, Val Loss: {val_loss:.4f}”) |

| # Check for early stopping |

| if val_loss < best_val_loss: |

| best_val_loss = val_loss |

| best_model_state = copy.deepcopy(model.state_dict()) |

| counter = 0 |

| else: |

| counter += 1 |

| if counter >= patience: |

| print(f”Early stopping at epoch {epoch+1}”) |

| break |

| # Load best model |

| if best_model_state is not None: |

| model.load_state_dict(best_model_state) |

| return model, train_losses, val_losses |

| def evaluate_model(model, test_loader, y_scaler=None): |

| “““ |

| Evaluate model and calculate metrics |

| “““ |

| print(“Evaluating model on test set...”) |

| model.eval() |

| all_y_true = [] |

| all_y_pred = [] |

| with torch.no_grad(): |

| for X, A, y in test_loader: |

| X, A, y = X.to(device), A.to(device), y.to(device) |

| outputs = model(X, A) |

| all_y_true.extend(y.cpu().numpy()) |

| all_y_pred.extend(outputs.cpu().numpy()) |

| # Convert to numpy arrays |

| all_y_true = np.array(all_y_true).flatten() |

| all_y_pred = np.array(all_y_pred).flatten() |

| # Calculate metrics |

| mse = np.mean((all_y_pred - all_y_true) ** 2) |

| mae = np.mean(np.abs(all_y_pred - all_y_true)) |

| # Calculate R² |

| y_mean = np.mean(all_y_true) |

| ss_total = np.sum((all_y_true - y_mean) ** 2) |

| ss_residual = np.sum((all_y_true - all_y_pred) ** 2) |

| r2 = 1 - (ss_residual / ss_total) |

| print(f”\nTest Set Metrics:”) |

| print(f”MSE: {mse:.4f}”) |

| print(f”MAE: {mae:.4f}”) |

| print(f”R²: {r2:.4f}”) |

| # Plot predictions vs ground truth |

| plt.figure(figsize=(10, 6)) |

| plt.scatter(all_y_true, all_y_pred, alpha=0.7) |

| # Add perfect prediction line |

| min_val = min(min(all_y_true), min(all_y_pred)) |

| max_val = max(max(all_y_true), max(all_y_pred)) |

| plt.plot([min_val, max_val], [min_val, max_val], ‘r--‘) |

| plt.xlabel(‘True Phenotype (normalized)’) |

| plt.ylabel(‘Predicted Phenotype (normalized)’) |

| plt.title(f’Prediction Accuracy (R² = {r2:.4f})’) |

| plt.grid(True, alpha=0.3) |

| plt.savefig(“prediction_accuracy.png”, dpi=300, bbox_inches=‘tight’) |

| plt.show() |

| # Plot error distribution |

| errors = all_y_pred - all_y_true |

| plt.figure(figsize=(10, 6)) |

| plt.hist(errors, bins=30, alpha=0.7) |

| plt.axvline(x=0, color=‘r’, linestyle=‘--‘) |

| plt.xlabel(‘Prediction Error’) |

| plt.ylabel(‘Frequency’) |

| plt.title(‘Distribution of Prediction Errors’) |

| plt.grid(True, alpha=0.3) |

| plt.savefig(“error_distribution.png”, dpi=300, bbox_inches=‘tight’) |

| plt.show() |

| return { |

| ‘mse’: mse, |

| ‘mae’: mae, |

| ‘r2’: r2, |

| ‘y_true’: all_y_true, |

| ‘y_pred’: all_y_pred |

| } |

| def plot_learning_curves(train_losses, val_losses): |

| “““ |

| Plot learning curves |

| “““ |

| plt.figure(figsize=(10, 6)) |

| plt.plot(train_losses, label=‘Training Loss’) |

| plt.plot(val_losses, label=‘Validation Loss’) |

| plt.xlabel(‘Epoch’) |

| plt.ylabel(‘Loss’) |

| plt.title(‘Learning Curves’) |

| plt.legend() |

| plt.grid(True, alpha=0.3) |

| plt.savefig(“learning_curves.png”, dpi=300, bbox_inches=‘tight’) |

| plt.show() |

| def run_experiment(): |

| “““ |

| Run the full experiment |

| “““ |

| print(“Starting topology-driven genetic algorithm experiment...”) |

| # Generate data |

| n_samples = 300 |

| n_vertices = 10 |

| n_features = 5 |

| X_data, A_data, y_data, y_scaler = generate_topology_data( |

| n_samples=n_samples, |

| n_vertices=n_vertices, |

| n_features=n_features |

| ) |

| # Split data |

| X_train, X_test, A_train, A_test, y_train, y_test = train_test_split( |

| X_data, A_data, y_data, test_size=0.2, random_state=42 |

| ) |

| X_train, X_val, A_train, A_val, y_train, y_val = train_test_split( |

| X_train, A_train, y_train, test_size=0.25, random_state=42 |

| ) |

| print(f”Data split - Train: {len(X_train)}, Validation: {len(X_val)}, Test: {len(X_test)} samples”) |

| # Create datasets and dataloaders |

| train_dataset = GeneticTopologyDataset(X_train, A_train, y_train) |

| val_dataset = GeneticTopologyDataset(X_val, A_val, y_val) |

| test_dataset = GeneticTopologyDataset(X_test, A_test, y_test) |

| batch_size = 8 # Small batch size for stable training |

| train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True) |

| val_loader = DataLoader(val_dataset, batch_size=batch_size) |

| test_loader = DataLoader(test_dataset, batch_size=batch_size) |

| # Create model - try multiple initializations |

| best_val_loss = float(‘inf’) |

| best_model = None |

| best_train_losses = None |

| best_val_losses = None |

| for init_attempt in range(3): |

| print(f”\nInitialization attempt {init_attempt+1}/3”) |

| # Set seed for reproducibility but different from previous attempts |

| torch.manual_seed(42 + init_attempt) |

| model = EnhancedTopologyMLP(n_vertices, n_features, hidden_size=64) |

| # Train model |

| model, train_losses, val_losses = train_model( |

| model, train_loader, val_loader, |

| batch_size=batch_size, |

| epochs=200, |

| learning_rate=0.001, |

| weight_decay=0.01 |

| ) |

| # Check validation loss |

| model.eval() |

| val_loss = 0.0 |

| criterion = nn.MSELoss() |

| with torch.no_grad(): |

| for X, A, y in val_loader: |

| X, A, y = X.to(device), A.to(device), y.to(device) |

| outputs = model(X, A) |

| val_loss += criterion(outputs, y).item() * X.size(0) |

| val_loss = val_loss / len(val_loader.dataset) |

| print(f”Validation loss: {val_loss:.4f}”) |

| if val_loss < best_val_loss: |

| best_val_loss = val_loss |

| best_model = copy.deepcopy(model) |

| best_train_losses = train_losses |

| best_val_losses = val_losses |

| print(“New best model found!”) |

| # Plot learning curves |

| print(“\nPlotting learning curves...”) |

| plot_learning_curves(best_train_losses, best_val_losses) |

| # Evaluate best model |

| print(“\nEvaluating best model...”) |

| results = evaluate_model(best_model, test_loader, y_scaler) |

| return best_model, results |

| if __name__ == “__main__”: |

| model, results = run_experiment() |

Conflicts of Interest

References

- Bongard, J. (2011). Morphological change in machines accelerates the evolution of robust behavior. Proceedings of the National Academy of Sciences, 108(4), 1234-1239. [CrossRef]

- Davidson, E. H. (2006). The regulatory genome: gene regulatory networks in development and evolution. Academic Press.

- Holland, J. H. (1975). Adaptation in natural and artificial systems. University of Michigan Press. [CrossRef]

- Kauffman, S. A. (1993). The origins of order: Self-organization and selection in evolution. Oxford University Press.

- Lipson, H. (2007). Principles of modularity, regularity, and hierarchy for scalable systems. Journal of Biological Physics and Chemistry, 7(4), 125-128.

- Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533-536. [CrossRef]

- Waddington, C. H. (1957). The strategy of the genes. Allen & Unwin.

- Wagner, A. (1996). Does evolutionary plasticity evolve? Evolution, 50(3), 1008-1023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).