1. Introduction

As public interest in three-dimensional (3D) images grows, there is a rising demand for solutions to the issues associated with stereoscopic or multi-view 3D imaging, such as discomfort and dizziness. Several 3D imaging and display methods have been developed [1,2], leading to increased research interest in holography, which enables highly accurate 3D imaging [3,4,5]. However, despite this potential, the generation of holograms is highly expensive, which remains a significant challenge [6,7].

Holography is a technique used to record and reconstruct the intensity and phase information of a signal wavefront using a reference wave. It was invented by Dennis Gabor in 1948, marking the beginning of a revolutionary imaging approach. The invention of coherent laser sources in the 1960s paved the way for substantial advancements in the field of holography. Notable milestones include Yuri Denisyuk’s single-beam reflection holography and Leith and Upatnieks’s off-axis transmission holography, which laid the groundwork for modern holographic display. These techniques not only simplified the recording process but also broadened the scope of holography, enabling its application in various fields, from art to scientific visualization [8,9,10].

In a typical hologram-recording setup, whether in reflection or transmission mode, a physical object is required to record a hologram, and the holographic image is usually captured at a 1:1 scale. These limitations can be addressed by adopting holographic printing techniques that use suitable datasets. There are two primary approaches to digital holographic printing [2,11]: the numerical computation of interference fringes and the optical multiplexing of digital perspective images. Numerical computation involves the algorithmic calculation of interference patterns and the encoding of the resulting data into a medium suitable for holographic displays. Although computationally intensive, this approach provides precise control over phase and intensity information, enhancing the quality of the holographic representation. By contrast, optical multiplexing integrates a series of digital perspective images into a holographic medium. This method is particularly advantageous for creating scaled holographic images and supporting dynamic features such as slight movements, animations, layering effects, and multiple encoding channels, thereby broadening the scope of holographic visualization applications [12,13].

Perspective image datasets can be derived from digital videos or photographs, or generated using 3D computer graphics software. This method allows the integration of natural scenes or real-world images with computer-generated 3D graphics within the same holographic print, thereby offering enhanced flexibility and creativity in hologram production. This capability opens up new possibilities for holography, making it accessible for applications ranging from entertainment and education to industrial and scientific visualization. Memory preservation through holography has seen significant advancements with the advent of digital technology.

Existing methods for generating digital holograms predominantly rely on stereo camera setups, photogrammetry, or high-resolution 3D models [14,15,16]. However, these approaches are often inadequate when only a single black-and-white photograph is available. Our approach addresses this limitation by facilitating the creation of digital stereoscopic holograms from a single black-and-white photograph. Digital holograms are generated from minimal input data by combining colorization techniques with depth mapping using video-editing software. The approach leverages recent advancements in image colorization and depth-mapping algorithms to transform a single monochromatic image into a rich dataset suitable for holographic reconstruction. Specifically, we first restore and enhance the source image, apply a colorization algorithm, and then employ a depth-estimation model to generate a depth map. The depth information is then used to synthesize a series of perspective images, which are subsequently encoded into a digital stereoscopic hologram. By integrating these techniques, accurate and emotionally resonant 3D holograms can be generated from legacy photographs, and the visual characteristics of the original black-and-white images are preserved in the final digital hologram portrait.

This study has significant implications for cultural heritage preservation, personal archiving, and other applications that require the creation of realistic holographic representations from sparse data. The remainder of this paper is organized as follows. Section 2 reviews prior work on depth-map generation and holographic display techniques. Section 3 explains the proposed method for generating digital stereoscopic holograms from a single black-and-white image, which is based on an image-processing pipeline, depth-map estimation, 3D reconstruction, and holographic encoding with a holographic stereogram printer. Section 4 reports the results and discusses the implications and limitations of the proposed approach. Section 5 concludes the study.

2. Related Work

Although our method focuses on generating 3D digital stereoscopic holograms from a single black-and-white image using depth maps, previous studies have investigated the use of depth information for various 3D reconstruction and visualization tasks. Lee et al. [17] introduced a method for generating digital holograms of real 3D objects, employing a time-of-flight 3D image sensor to acquire both color and depth images. A point cloud was then extracted from the depth maps to generate the hologram. Sarakinos et al. [12] used digital holographic printing to create color-reflection holograms of artwork and historic sites. They captured multiple perspective views via photography, videography, and 3D rendering and recorded interference patterns on holographic materials using specialized hardware. Dashdavaa et al. [18] proposed a method for generating holograms from a reduced number of high-resolution perspective images, which is closely related to our study. They used a pixel rearrangement technique to improve the angular resolution of a hogel image without increasing the hogel size or degrading the spatial resolution. This technique involves capturing the perspective images of a 3D scene with a recentering camera and transforming them into parallax-related images for holographic printing.

However, the existing methods often require multiple input images, specialized hardware, or color photographs. Our study differs in that it focuses on the generation of 3D digital stereoscopic holograms from single black-and-white images, a scenario not typically addressed in prior studies. To achieve this, we used deep learning to create and refine depth maps, offering an accessible approach for holographic reconstruction in cases where only limited visual data are available, such as historical photographs. This approach allows the generation of 3D holographic content from legacy images, for which traditional methods prove inadequate. Using the proposed method, a holographic portrait can be created within a viewing angle of 85°–100°.

3. Proposed Method

3.1. Generation of the 3D Model From a Single Image Based on Depth Maps

This section describes the method proposed for generating 3D digital stereoscopic holograms from a single black-and-white photograph. Our approach overcomes the limitations of existing methods by leveraging readily available deep-learning techniques to create and manipulate depth information from a single monochromatic input image. The process involves the following key stages: (1) image preprocessing and restoration, (2) colorization using the convolutional neural network (CNN) architecture proposed by Iizuka et al. [19], (3) depth-map estimation using the deep convolutional neural field (DCNF) model proposed by Liu et al. [20], followed by refinement using Adobe After Effects, and (4) perspective image synthesis.

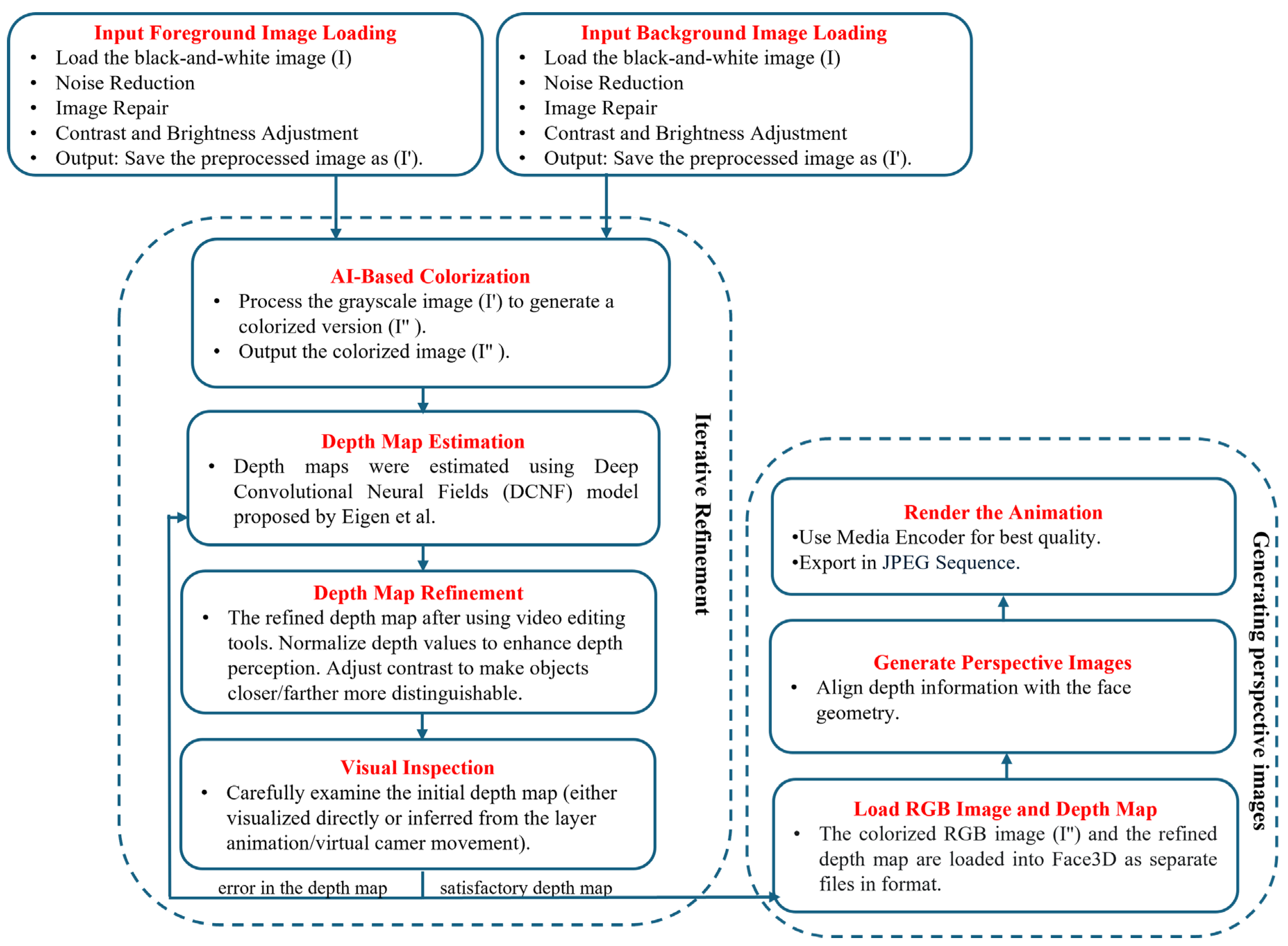

Figure 1 presents an overview of the proposed pipeline.

Our pipeline adopted a serial architecture in which colorization precedes depth estimation. The black-and-white input is first colorized, and a depth map is then generated from the colorized result. This approach is motivated by the observation that, compared to monochromatic images, RGB images have distinct color contrasts that enhance the accuracy of object edge detection, which is crucial for extracting high-quality depth maps. As illustrated in

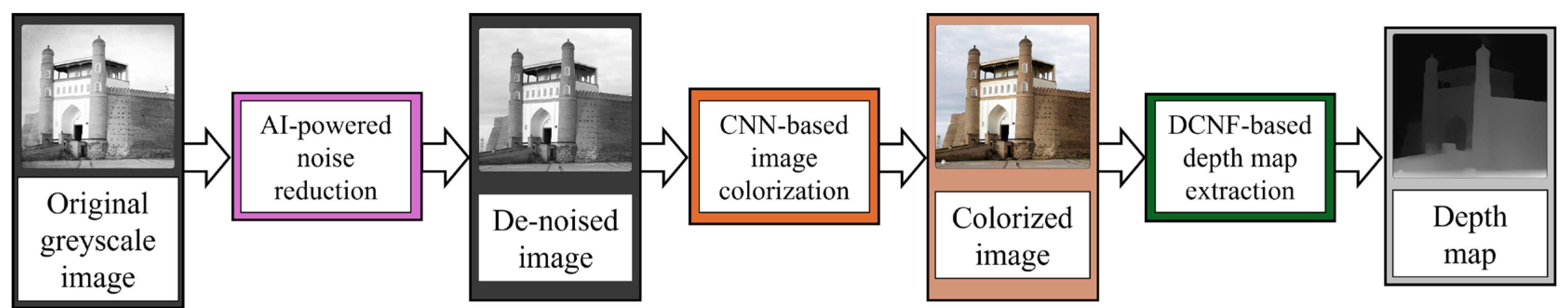

Figure 2, the process begins with noise reduction in the grayscale input image, using a pre-trained deep learning model from the Hugging Face Model Hub. This noise reduction step enhances the quality of the input for subsequent colorization and depth-map extraction.

For automatic image colorization, a joint end-to-end learning approach is used to integrate global image priors with local image features. The architecture comprises a low-level feature network, a mid-level feature network, a global feature network, and a colorization network arranged in a directed acyclic graph. This design allows the network to process images of arbitrary resolution and adapt colors based on the overall image context learned from large-scale scene classification datasets. The network outputs chrominance channels, which are then combined with the luminance channel of the original grayscale image to produce the colorized result. We chose the CNN-based colorization model proposed by Iizuka et al. [19] because its architecture, which incorporates both global scene priors and local image features, is well-suited for colorizing legacy black-and-white photographs. Global priors help infer plausible colors based on the overall scene context, whereas local features enable the detailed colorization of individual objects. This provides an advantage over simpler colorization methods that rely solely on local pixel relationships and often produce inconsistent or unrealistic colorizations.
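The final fusion step described above, in which predicted chrominance is combined with the original luminance, can be sketched as follows. This is a minimal illustration rather than the implementation of [19]: it uses the YIQ space from Python's standard `colorsys` module as a stand-in luminance-chrominance space, and the function name `fuse_luma_chroma` and the sample chrominance values are hypothetical.

```python
import colorsys

def fuse_luma_chroma(luma, chroma):
    """Combine a grayscale (luminance) image with predicted chrominance.

    luma:   2D list of Y values in [0, 1] (the original grayscale image).
    chroma: 2D list of (I, Q) pairs, standing in for the network output.
    Returns a 2D list of (R, G, B) tuples in [0, 1].
    """
    return [
        [colorsys.yiq_to_rgb(y, i, q) for y, (i, q) in zip(luma_row, chroma_row)]
        for luma_row, chroma_row in zip(luma, chroma)
    ]

# Hypothetical 1x2 image: the first pixel stays neutral gray,
# the second receives a warm tint while keeping the same luminance.
luma = [[0.5, 0.5]]
chroma = [[(0.0, 0.0), (0.1, 0.05)]]
rgb = fuse_luma_chroma(luma, chroma)
```

Because the luminance is taken directly from the source photograph, the tonal structure of the original image is carried over unchanged; only the color information is inferred.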

We employed a DCNF model for depth-map estimation [

20]. This model formulates depth estimation as a continuous conditional random field (CRF) learning problem by combining the strengths of deep CNNs and continuous CRFs in a unified CNN framework. The DCNF model learns the unary and pairwise potentials of a continuous CRF, facilitating depth estimation in general scenes without geometric prior or extra information. To learn the unary potential, it uses a CNN with five convolutional and four fully connected layers to regress the depth values from the image patches centered around the superpixel centroids. To learn the pairwise potential, it uses a fully connected layer to model the similarities between neighboring superpixel pairs. The CRF loss layer minimizes the negative log-likelihood using the unary and pairwise outputs. A DCNF model was selected because it offers a robust and accurate approach for inferring depth from a single image, providing more accurate and consistent depth maps than methods that rely solely on CNNs or traditional geometric cues. Although this approach involves integrating existing technologies, our primary contribution lies in the innovative implementation of the pipeline, specifically in optimizing the serial combination of these models for hologram-restoration from legacy images. As illustrated in

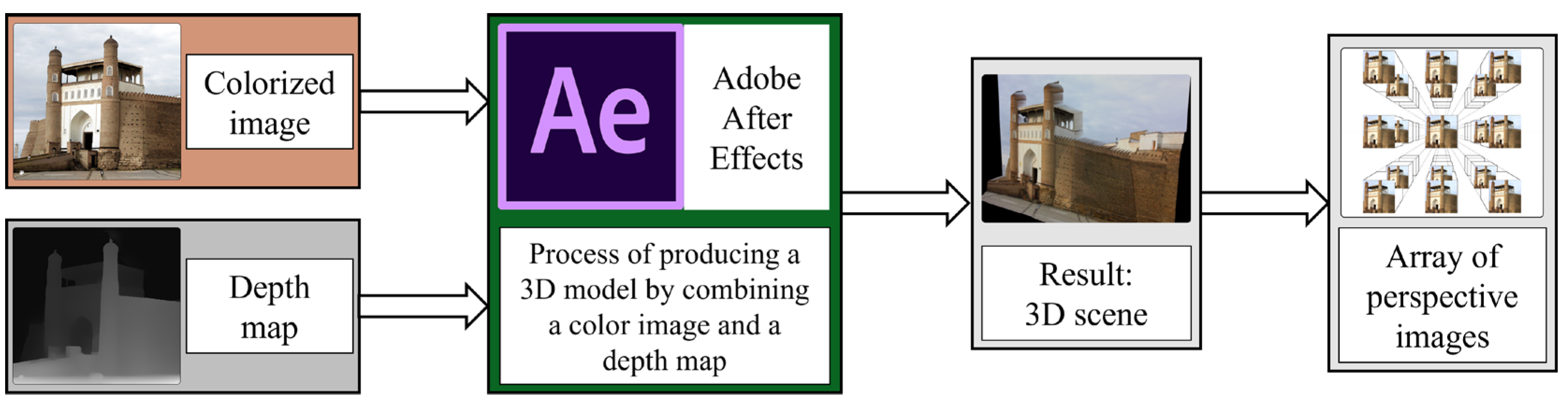

Figure 3, the colorized image and depth map were imported into the Adobe After Effects software to produce a 3D scene and an array of perspective images required for holographic encoding.
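For reference, the continuous CRF underlying the DCNF depth estimator can be summarized as below. This is a sketch of the formulation in [20] with paraphrased notation: $y_p$ is the depth of superpixel $p$, $z_p$ is the depth regressed by the unary CNN, $R_{pq}$ is the learned similarity between neighboring superpixels $p$ and $q$, and $\mathbf{D}$ is the diagonal matrix with entries $D_{pp} = \sum_q R_{pq}$. The symbols are chosen here for illustration and may differ from those of the original paper.

```latex
\begin{align}
  \Pr(\mathbf{y} \mid \mathbf{x}) &= \frac{1}{Z(\mathbf{x})} \exp\bigl(-E(\mathbf{y}, \mathbf{x})\bigr), \\
  E(\mathbf{y}, \mathbf{x}) &= \sum_{p} (y_p - z_p)^2
      + \sum_{(p,q)} \tfrac{1}{2} R_{pq}\,(y_p - y_q)^2, \\
  \mathcal{L} &= -\log \Pr(\mathbf{y} \mid \mathbf{x}), \\
  \mathbf{y}^{\star} &= \arg\max_{\mathbf{y}} \Pr(\mathbf{y} \mid \mathbf{x})
      = \mathbf{A}^{-1}\mathbf{z}, \qquad \mathbf{A} = \mathbf{I} + \mathbf{D} - \mathbf{R}.
\end{align}
```

Because the energy is quadratic in $\mathbf{y}$, the partition function and the most probable depth map have closed forms, which is what allows the negative log-likelihood to be minimized directly during training.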

3.2. Acquisition of Perspective Images

The generation of perspective images is essential for synthesizing holographic stereograms. These images are fundamental for achieving depth-dependent parallax effects, which are necessary for the accurate holographic visualization of a scene. In our approach, perspective images are generated from color-texture data, with the depth map providing the spatial information required to reconstruct multiple viewpoints. This process ensures that the holographic representation remains realistic by accurately portraying the relative positioning of the objects within the scene. Given that holograms are highly sensitive to the quality of input images, it is imperative to choose an appropriate method for generating the perspective images.
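The way a depth map supplies the parallax for a new viewpoint can be illustrated with a simple depth-image-based rendering sketch. This is not the After Effects procedure used in this work; it is a simplified forward warp of a single image row, and the function name `synthesize_view`, the `gain_px` parameter, and the hole-filling rule are assumptions made for illustration.

```python
def synthesize_view(row, depth_row, gain_px, zero_plane=0.5):
    """Forward-warp one image row to a horizontally shifted viewpoint.

    row:        pixel values of the colorized image row.
    depth_row:  depth values in [0, 1]; larger (brighter) means nearer.
    gain_px:    signed disparity scale in pixels for this viewpoint.
    zero_plane: depth value that stays fixed (the hologram plane).
    """
    width = len(row)
    out = [None] * width
    out_depth = [-1.0] * width
    for x in range(width):
        d = depth_row[x]
        new_x = x + round(gain_px * (d - zero_plane))
        # Nearer pixels win when two source pixels land on the same target.
        if 0 <= new_x < width and d > out_depth[new_x]:
            out[new_x] = row[x]
            out_depth[new_x] = d
    # Fill disocclusion holes from the nearest valid pixel on the left, then right.
    for x in range(1, width):
        if out[x] is None:
            out[x] = out[x - 1]
    for x in range(width - 2, -1, -1):
        if out[x] is None:
            out[x] = out[x + 1]
    return out

# Hypothetical 5-pixel row with one near pixel in the middle.
row = [10, 20, 30, 40, 50]
depth = [0.5, 0.5, 1.0, 0.5, 0.5]
shifted = synthesize_view(row, depth, gain_px=2)
```

Pixels at the zero plane do not move, nearer pixels shift in one direction, and farther pixels shift in the other, which is the depth-dependent parallax that a holographic stereogram encodes.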

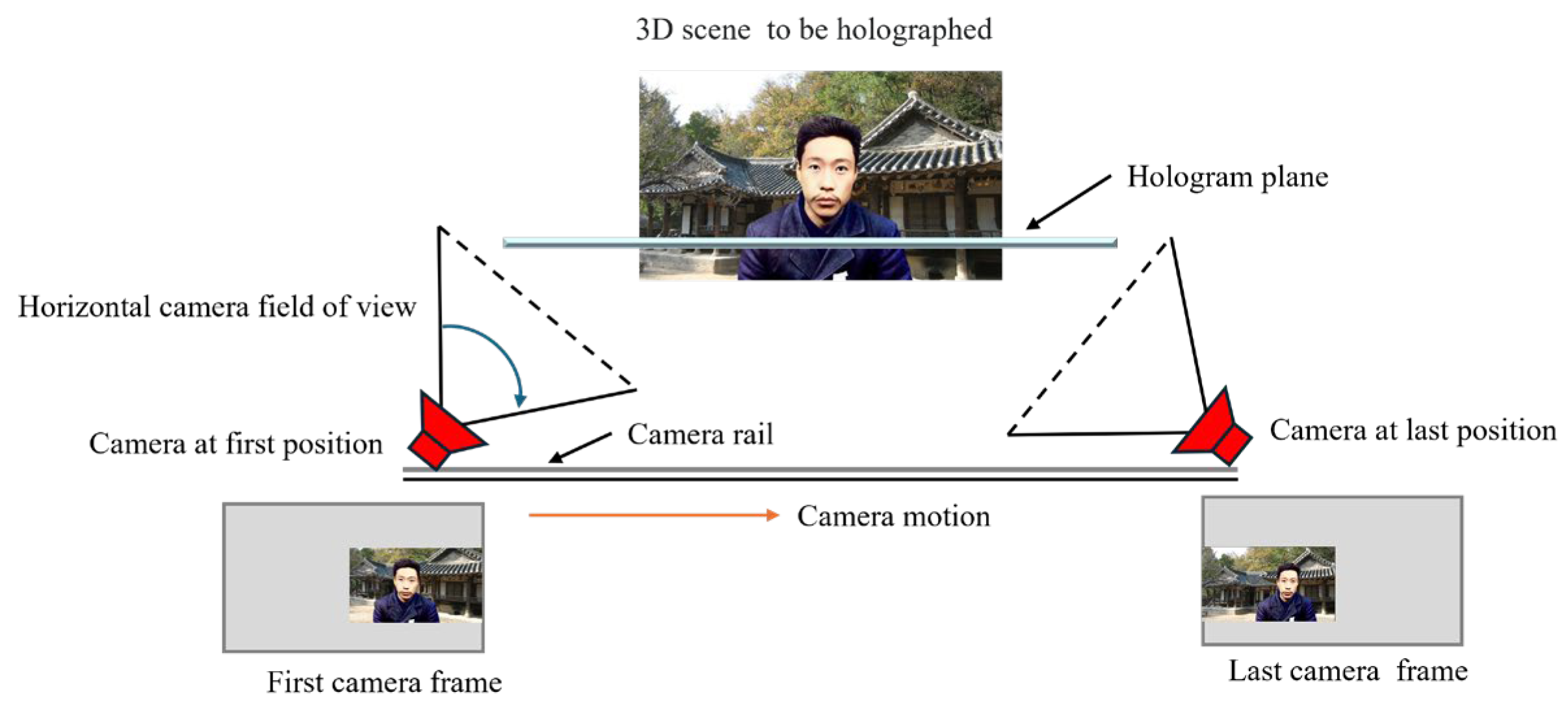

Holographic stereograms are traditionally created using three main steps: acquisition of perspective images, transformation of these images into parallax-related data, and hologram encoding. A 3D scene was constructed in After Effects, and perspective projections were captured using an array of virtual cameras arranged linearly. Crucially, this array employs a recentering camera model: each virtual camera not only translates along the linear path but also rotates to maintain focus on a fixed point at the center of the intended hologram surface (as depicted in Figure 4). This minimizes the required camera field of view, potentially improving data quality. The perspective views are then exported directly as a JPEG sequence, where each image represents a distinct viewpoint and contains the required parallax information. This approach bypasses the traditional computationally intensive step of transforming perspective images into parallax-related data. Finally, this sequence of multi-view images was used for hologram encoding.
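The geometry of the recentering camera model described above can be sketched in a few lines. The example assumes that the cameras are evenly spaced along a straight track, that the quoted arc is the angle subtended by the track at the fixed target point, and it borrows the 300-view, 95° configuration reported later in this paper. The function name `recentering_cameras` and the unit camera distance are hypothetical.

```python
import math

def recentering_cameras(n_views, distance, arc_deg):
    """Return (x_position, pan_angle_deg) for cameras on a linear track.

    Each camera sits at lateral offset x, at a fixed distance from the
    target plane, and is panned so that its optical axis passes through
    the fixed target point at the origin. The pan angle is measured from
    the track normal and carries the sign of x. Requires n_views >= 2.
    """
    half_track = distance * math.tan(math.radians(arc_deg / 2.0))
    cameras = []
    for k in range(n_views):
        x = -half_track + 2.0 * half_track * k / (n_views - 1)
        pan_deg = math.degrees(math.atan2(x, distance))
        cameras.append((x, pan_deg))
    return cameras

cams = recentering_cameras(n_views=300, distance=1.0, arc_deg=95.0)
track_length = cams[-1][0] - cams[0][0]  # equals 2 * distance * tan(arc / 2)
```

Under these assumptions the track length grows with the tangent of half the arc, so widening the arc requires a disproportionately longer camera path and steeper pan angles at the ends of the track.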

3.3. Digital Hologram Encoding and Hogel Generation

To replicate the optical field from multi-view image data, it is necessary to generate hogels that can be transferred to a digital hologram printer. This requires rearranging multi-view images into a data structure optimized for digital hologram printing [1,21].

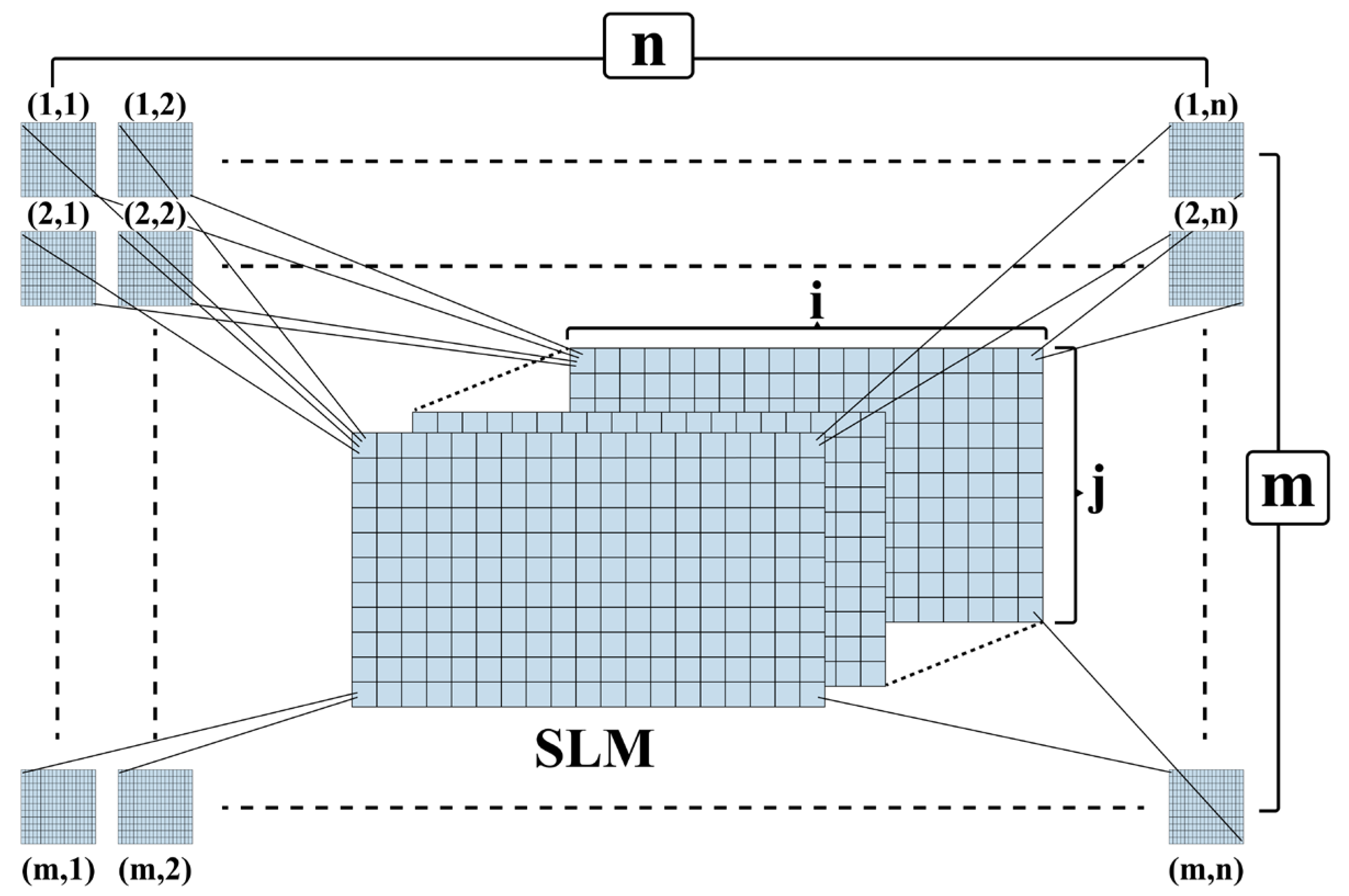

Figure 5 shows a conceptual diagram of digital-data rearrangement for hogel generation. It considers a 2D full-parallax 3D stereoscopic image composed of a grid of viewpoint images and a spatial light modulator (SLM) of fixed resolution, and shows how the data are rearranged in this scenario.

The creation of a digital hologram begins with a set of multi-view images, each representing a unique perspective on the desired scene, as shown in Figure 6. Using dedicated data-rearrangement software on a control computer, the pixels of the multi-view images are sequentially loaded onto the SLM. The loading process follows a left-to-right and top-to-bottom order, running from the first image to the last in the first iteration.

The SLM, typically a liquid-crystal-on-silicon device, modulates a collimated laser beam through phase modulation, thereby encoding the information from an uploaded image. Crucially, the modulated beam interferes with a separate unmodulated reference beam of coherent light. The interference pattern created by the interaction of these two beams is then focused onto a holographic plate, which is a photosensitive material designed to record this intricate pattern. This recording process creates a single holographic element known as a hogel.

The process is repeated iteratively. Each image is sequentially uploaded to the SLM, illuminated by the collimated laser and reference beams, and focused onto a holographic plate to record the corresponding hogel. This is repeated until the final iteration, in which the last set of pixels is loaded, illuminated, and recorded, thereby generating the final hogel. The patterns displayed on the SLM are computationally generated through computer-generated holography. These calculations are highly complex and require substantial processing power. If both the input images and the resulting hogels have a high-definition resolution of 1920 × 1080 pixels, each hogel encapsulates an immense amount of information. Unlike conventional image pixels that contain a single intensity value, each hogel contains 2,073,600 (1920 × 1080) unique data points, effectively encoding a complete perspective view. Consequently, to fabricate the digital hologram, the SLM displays a sequence of 2,073,600 distinct patterns for each hogel location, and each pattern is subtly modified to record a unique interference pattern on the holographic plate. These patterns are calculated to ensure accurate reconstruction of the optical field during viewing.

The hogel serves as the fundamental building block of the final digital hologram. When properly illuminated, the recorded interference patterns within each hogel diffract light, recreating the original 3D scene as perceived from a specific viewpoint. All hogels are combined to reconstruct the entire holographic image.
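The rearrangement of multi-view images into hogel data can be sketched as a transposition of indices: the hogel at plate position (y, x) collects pixel (y, x) from every viewpoint image. The snippet below is an illustrative sketch of this common mapping, not the printer's data-rearrangement software; the function name `views_to_hogels` and the toy data are hypothetical, and any mirroring or resampling required by a real printer is omitted.

```python
def views_to_hogels(views):
    """Rearrange views[v][u][y][x] into hogels[y][x][v][u].

    views is a grid of viewpoint images: v and u index the camera row and
    column, while y and x index the pixel inside each image. Each hogel is
    a small image holding one pixel from every viewpoint.
    """
    n_rows, n_cols = len(views), len(views[0])
    height, width = len(views[0][0]), len(views[0][0][0])
    return [
        [
            [[views[v][u][y][x] for u in range(n_cols)] for v in range(n_rows)]
            for x in range(width)
        ]
        for y in range(height)
    ]

# Toy half-parallax example: 1 x 3 viewpoints, each a 2 x 2 image whose
# pixel value encodes (u, y, x) as u * 100 + y * 10 + x.
views = [[[[u * 100 + y * 10 + x for x in range(2)] for y in range(2)]
          for u in range(3)]]
hogels = views_to_hogels(views)

# Data points per hogel when each hogel image is 1920 x 1080 pixels.
points_per_hogel = 1920 * 1080
```

In the toy example, `hogels[1][0]` gathers pixel (1, 0) from the three viewpoints, so a viewer moving horizontally across that hogel sees the scene point change exactly as it did across the camera track.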

4. Results and Discussion

4.1. Dataset

Experiments were conducted using a dataset consisting of two monochromatic images. The images are portrait photographs of Korean activists Yu Gwan-sun and An Jung-geun, sourced from Wikipedia, with a resolution of 220 × 280 pixels. The images were chosen not only for their historical significance but also for their varying levels of image quality and complexity, enabling an assessment of the robustness of our method.

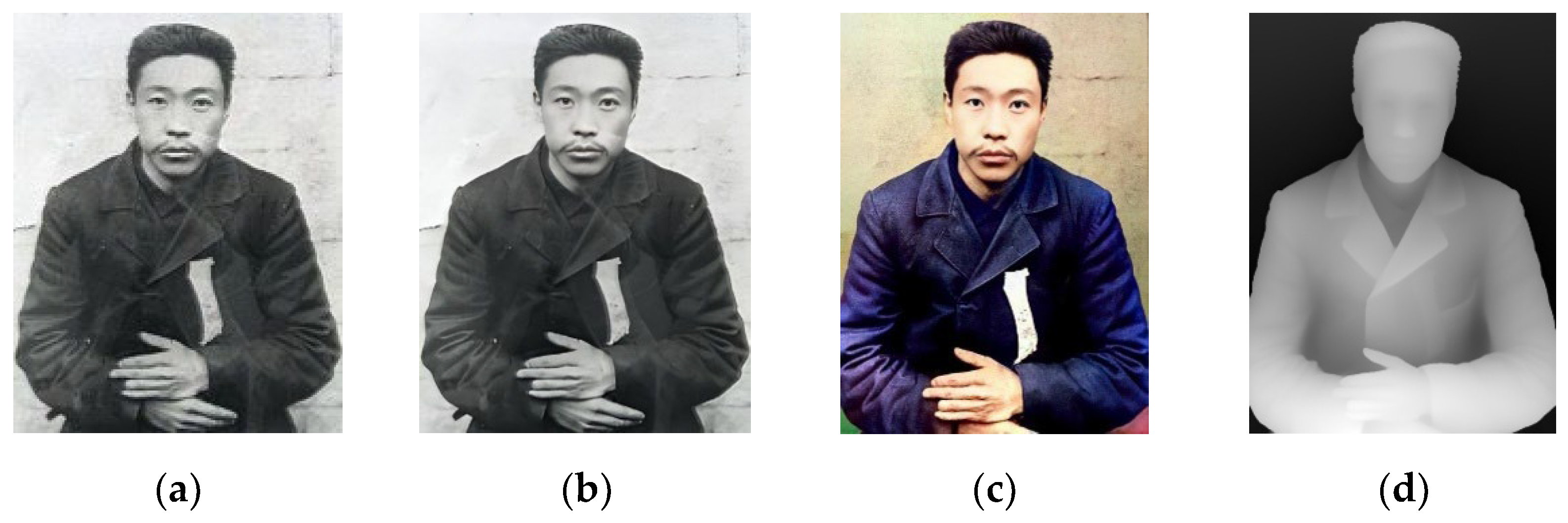

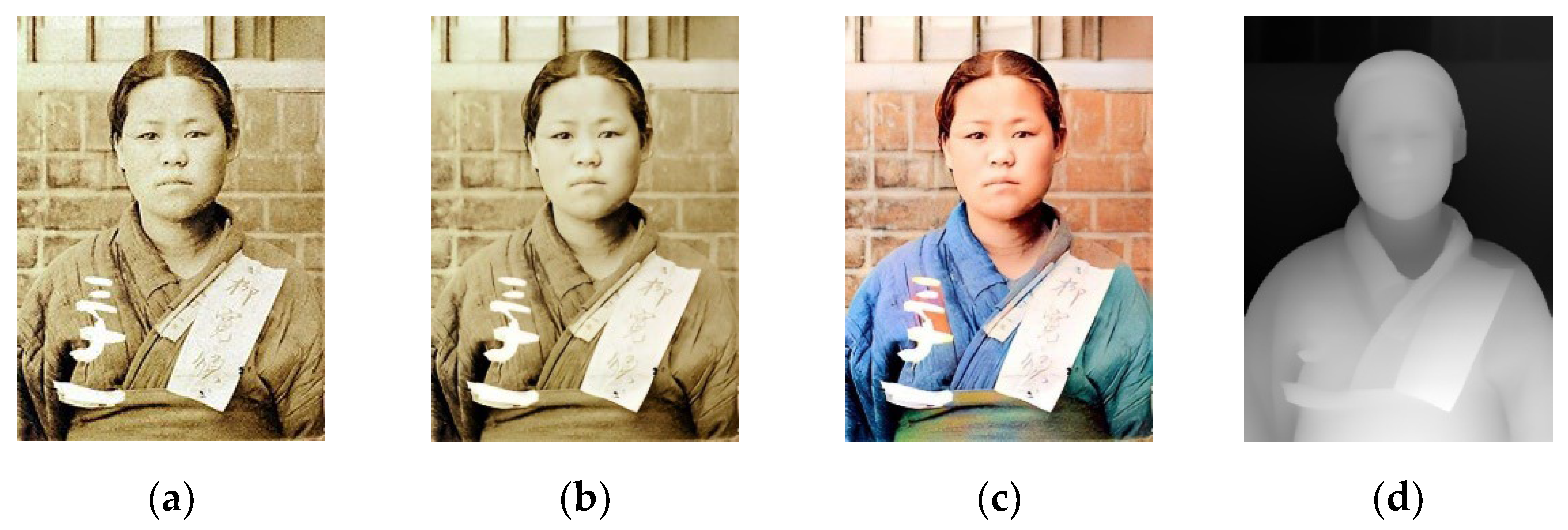

The original images (Figure 7a and Figure 8a) were first preprocessed to improve their quality. This involved removing artifacts and enhancing clarity through noise reduction. Figure 7b and Figure 8b show the resulting enhanced images. This initial step is crucial for improving the performance of the subsequent colorization and depth-estimation steps.

The CNN-based colorization method proposed by Iizuka et al. [19] was used to colorize the enhanced grayscale images. This model, pre-trained on a large-scale dataset, infers plausible color information based on both global and local image features. Figure 7c and Figure 8c show the resulting colorized images. Visual inspection suggests that the colorization step produced realistic images consistent with the historical context of the photographs. Depth maps were generated from the colorized images using the DCNF model [20]. This model efficiently infers depth from single images and produces more accurate and consistent depth maps than methods that rely solely on geometric cues. The generated depth maps are shown in Figure 7d and Figure 8d, where the brighter regions represent areas closer to the viewer.
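The "brighter is closer" convention of the depth maps can be made concrete with a small normalization sketch. It assumes that the estimator returns depth values in which larger numbers mean farther from the camera, and it maps them linearly to 8-bit gray levels; the function name `depth_to_gray` and the sample values are hypothetical and are not taken from the experiments.

```python
def depth_to_gray(depths):
    """Map a 2D list of depths (larger = farther) to 8-bit gray levels.

    The nearest point becomes 255 (white) and the farthest becomes 0 (black).
    A constant depth map is returned as all zeros.
    """
    flat = [d for row in depths for d in row]
    d_min, d_max = min(flat), max(flat)
    span = d_max - d_min
    if span == 0:
        return [[0 for _ in row] for row in depths]
    return [[round(255 * (d_max - d) / span) for d in row] for row in depths]

# Hypothetical 2 x 2 depth map in arbitrary units.
gray = depth_to_gray([[1.0, 2.0], [5.0, 5.0]])
```

A grayscale map of this kind is the form in which depth is typically handed to a displacement step, where the gray level controls how far each pixel is pushed toward or away from the viewer.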

Using the colorized images and their corresponding depth maps, 3D scenes were reconstructed using Adobe After Effects. Depth maps were used to displace the 2D images, creating a sense of depth and volume. Figure 9a and Figure 9b show the resulting 3D images of Yu Gwan-sun and An Jung-geun, respectively.

Perspective images, which are fundamental for holographic stereogram synthesis, were generated from the constructed 3D scenes in Adobe After Effects. A virtual camera array was employed, with the specific configuration of the array determined by the complexity and dimensions of the 3D scene. Each virtual camera was positioned and oriented to capture a distinct perspective of the scene. A recentering camera model was used to ensure that the focal point of each camera remained fixed at the center of the scene. This technique mitigates perspective distortion and maintains consistent scaling across the viewpoints. The trajectory and baseline of the camera array were chosen to balance capturing sufficient parallax information against minimizing geometric distortions. The After Effects rendering engine generated a sequence of perspective images, each corresponding to a unique camera viewpoint. The resolution and format of the images were selected based on the requirements of the subsequent hologram-generation process. The resulting image sequence encoded parallax information, thereby forming the foundation for constructing holographic stereograms. This sequence served as the input for the hologram-generation process, where each perspective image contributes to the overall 3D perception of the final hologram. The number of perspective images directly influenced the angular resolution and perceived depth of the reconstructed hologram.

4.2. Holographic Plates for Holographic Stereogram Printer Recordings

Ultimate U04 silver halide glass plates (Ultimate Holography, France) were used for holographic stereogram recording. These plates were specifically designed for full-color holography, minimizing diffusion and responding to all visible wavelengths. The plates were developed using simplified user-friendly chemical baths. Multiple perspective views of a 3D scene must be captured to print holographic stereograms [22]. In the half-parallax implementation, 300 horizontal perspective images were acquired over a 95° arc. This configuration creates a floating effect in which objects in front of the vertical axis of the hologram appear to project outward [23].

Table 1 lists the key parameters used for generating the digital holograms with a hologram printer, which had a hogel resolution of 250 μm. These parameters include the camera distance, the required camera track length, the horizontal camera field of view (FOV), the number of rendered frames, and the pixel dimensions of the individual photographs.

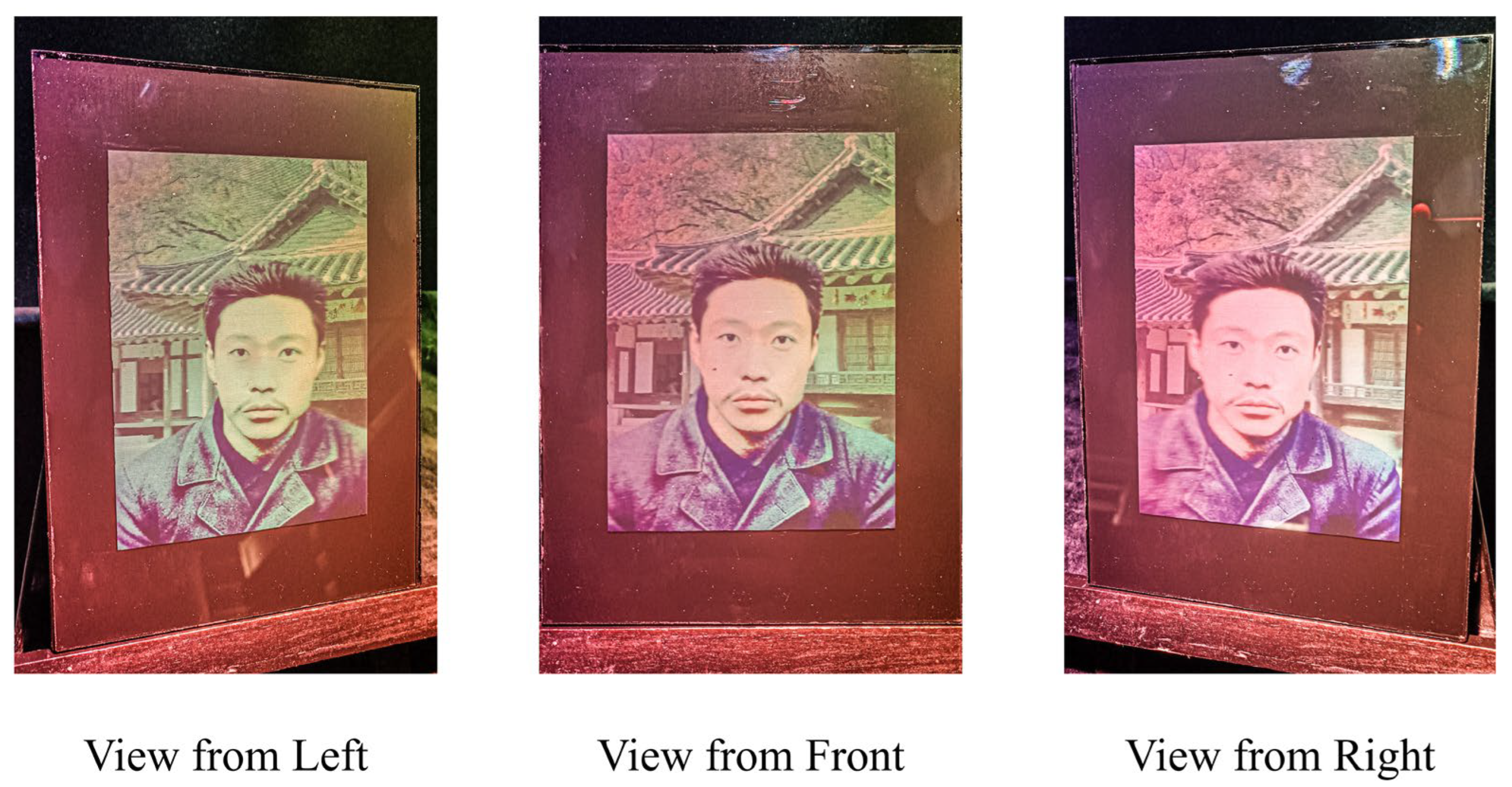

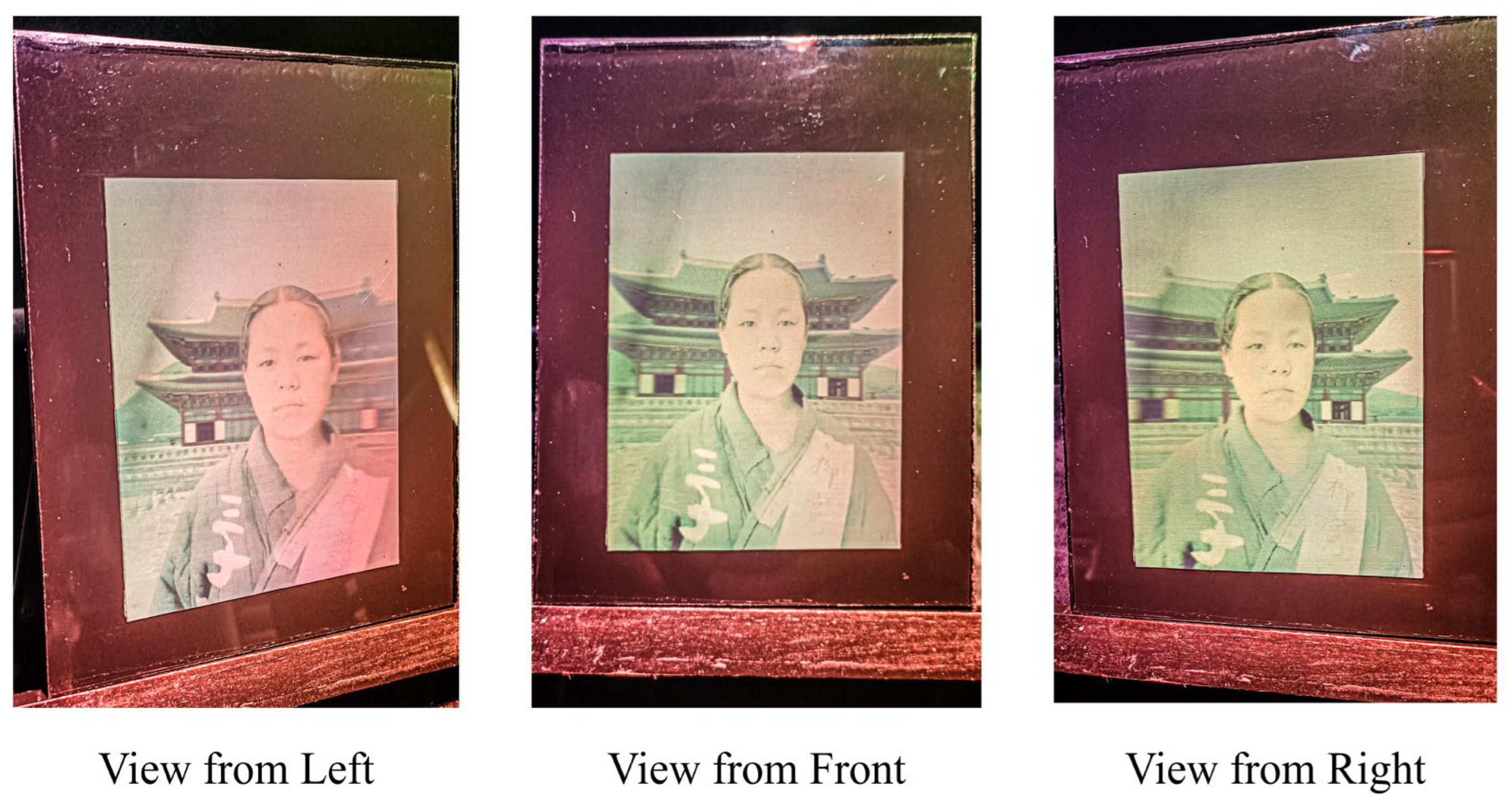
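As a rough illustration of scale, the hogel pitch of 250 μm can be related to the number of hogels on a plate. The calculation below assumes that the full area of a 20 cm × 15 cm plate is printed, which is an assumption made here rather than a parameter taken from Table 1; the function name `hogel_grid` is hypothetical.

```python
def hogel_grid(plate_width_um, plate_height_um, hogel_pitch_um):
    """Return the number of hogel columns and rows that fit on a plate.

    All lengths are given in micrometers so the arithmetic stays in integers.
    """
    return plate_width_um // hogel_pitch_um, plate_height_um // hogel_pitch_um

# 20 cm x 15 cm plate, 250 um hogel pitch (full-plate coverage assumed).
cols, rows = hogel_grid(200_000, 150_000, 250)
total_hogels = cols * rows
```

Under this assumption the plate holds 800 × 600 hogels, which gives a sense of how many exposures the printer must perform for a single portrait.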

4.3. Final Holograms

To evaluate visual and aesthetic qualities, we selected Yu Gwan-sun and An Jung-geun as subjects for our final hologram demonstrations because of their importance in Korean history. Yu Gwan-sun was involved in the March 1st Movement and An Jung-geun fought for Korean sovereignty, making them figures of immense national significance. We seek to respect and honor them, in addition to sharing their stories with a larger audience. Although limited in number, the black-and-white photographs of Yu Gwan-sun and An Jung-geun presented a diverse set of challenges for our method, including variations in image quality, posture, and facial expression. These challenges served as opportunities to assess the robustness of our 3D-hologram generation technique and demonstrate its capacity to produce lifelike and fascinating visual experiences. The holographic portraits showcased the effectiveness of our method in producing emotionally resonant 3D representations from vintage black-and-white images while preserving the likeness of the originals.

The images of An Jung-geun and Yu Gwan-sun were printed on U04 plates of dimensions 20 cm × 15 cm in the manner described above. The reconstructed images were highly realistic, as shown in Figures 10 and 11. Additionally, unnatural deformations present in some interpolated images were eliminated. In all cases, the plane of the hologram was at the center of the recorded object.

4.4. Results and Analysis

Using the proposed method, aesthetically pleasing 3D holographic images were generated from historical monochromatic photographs. While this approach shows promising performance in colorization and depth reconstruction, further research is required to address the limitations related to depth-map accuracy, half-parallax generation, and the processing of low-quality input images. Despite these challenges, our study contributes to digital holography by enabling 3D visualization from easily accessible historical sources. Our method achieves a horizontal viewing angle of 85°–100° for the holographic portrait, providing a noticeable parallax effect and a comfortable viewing experience for a single observer. Crucially, it requires only a single black-and-white image as input, whereas the methods discussed in Section 2 typically rely on multiple color images.

5. Conclusions

This paper presents an approach for generating 3D digital stereoscopic holograms from a single black-and-white image. Our method integrates AI-based colorization and depth-map estimation with video-editing techniques to create 3D scenes and render perspective images. This pipeline allows the generation of holographic portraits from historical images, offering a novel method for cultural preservation and personal archiving when traditional techniques are limited by the availability of input data.

Our results confirmed the feasibility of producing visually compelling 3D holograms from monochromatic images. The combination of AI-driven colorization and depth estimation is effective for reconstructing realistic 3D representations. Quantitative comparisons showed that our approach achieved an accuracy comparable to that of state-of-the-art single-image depth-estimation algorithms, whereas subjective evaluations indicated that the generated holograms exhibited convincing depth and realism.

One limitation of the proposed method is the achievable FOV of the generated holographic stereograms. While a wider FOV may seem desirable for a more immersive experience, an FOV beyond approximately 100° introduces significant distortions owing to increased parallax and perspective effects that are not accurately represented in our rendering pipeline. To maintain image quality and ensure a comfortable viewing experience, we limited the FOV to the range of 85°–100°.

Beyond this constraint, the quality of the final hologram remains highly dependent on the accuracy of the generated depth map and the FOV of the 3D scene. Future research should focus on addressing the complex challenge of generating 360° holographic portraits from single monocular images. Achieving this goal requires the development of new depth-estimation and 3D-reconstruction techniques that can accurately predict the complete geometry of a subject using minimal input data. We anticipate that advancements in both holographic display technology and deep learning models will be essential for realizing this aim. In addition, expanding the application of our method to a broader range of historical images and exploring alternative holographic printing techniques can further enhance the scope and impact of this study. Despite its limitations, our study introduces a valuable approach for bridging the gap between historical photography and modern holographic technology. By enabling the creation of lifelike 3D representations from minimal input data, this method opens new possibilities for memory preservation, historical visualization, and interactive engagement with the past.

Supplementary Materials

The following supporting information can be downloaded at: Preprints.org. Video S1: Supplementary Material.

Author Contributions

Conceptualization, O.M.N.; methodology, O.M.N. and J.F.U.A.; software, O.M.N., J.CH.; validation, O.M.N., P.G.; formal analysis, O.M.N.; investigation, O.M.N.; resources, P.G. and S.L.; data curation, O.M.N.; writing—original draft preparation, O.M.N.; writing—review and editing, O.M.N.; visualization, O.M.N.; supervision, L.H. and S.L.; project administration, L.H. and S.L.; funding acquisition, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by a Korea Creative Content Agency (KOCCA) grant funded by the Ministry of Culture, Sports and Tourism (MCST) in 2024 (Project Name: 3D holographic infotainment system design R&D professional human resources, Project Number: RS-2024-00401213, Contribution Rate: 50%) and by MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2024-0-01846, Rate: 50%) supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation). The present research has been conducted by the Excellent researcher support project of Kwangwoon University in 2022.

Institutional Review Board Statement

Not applicable

Informed Consent Statement

Not applicable

Data Availability Statement

The original contributions presented in the study are included in the article, and further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CNN | Convolutional neural network |
| DCNF | Deep convolutional neural field |
| CRF | Conditional random field |
| SLM | Spatial light modulator |
| FOV | Field of view |

References

1. Javidi, B.; Okano, F. Three-Dimensional Television, Video, and Display Technologies; Springer: Heidelberg, 2002.

2. Bjelkhagen, H.; Brotherton-Ratcliffe, D. Ultra-Realistic Imaging: Advanced Techniques in Analogue and Digital Colour Holography, 1st ed.; CRC Press, 2013.

3. Saxby, G.; Zacharovas, S. Practical Holography, 4th ed.; CRC Press, 2015.

4. Komar, V. G. Holographic motion picture systems compatible with both stereoscopic and conventional systems. Tekh. Kino Telev 1978, 10, 3–12.

5. Gentet, P.; Coffin, M.; Gentet, Y.; Lee, S.-H. Recording of Full-Color Snapshot Digital Holographic Portraits Using Neural Network Image Interpolation. Appl. Sci. 2023, 13, 12289.

6. Richardson, M. J.; Wiltshire, J. D. The Hologram: Principles and Techniques; John Wiley & Sons: Hoboken, NJ, 2018.

7. Javidi, B.; Okano, F. Three Dimensional Television, Video, and Display Technologies; Springer, 2011.

8. Gabor, D. A new microscopic principle. Nature 1948, 161, 777–778.

9. Denisyuk, Y. N. On the reproduction of the optical properties of an object by the wave field of its scattered radiation. Opt. Spectrosc. 1963, 14, 279–284.

10. Leith, E. N.; Upatnieks, J. Wavefront Reconstruction with Continuous-Tone Objects. J. Opt. Soc. Am. 1963, 53, 1377–1381.

11. Brotherton-Ratcliffe, D.; Zacharovas, S.; Bakanas, R.; Pileckas, J.; Nikolskij, A.; Kuchin, J. Digital Holographic Printing using Pulsed RGB Lasers. Opt. Eng. 2011, 50, 091307.

12. Sarakinos, A.; Zacharovas, S.; Lembessis, A.; Nikolskij, A. Direct-write digital holographic printing of color reflection holograms for visualization of artworks and historic sites. In 11th International Symposium on Display Holography, Aveiro, Portugal (June 2018).

13. Zacharovas, S. Advances in Digital Holography. In IWHM 2008 International Workshop on Holographic Memories Digests, Japan, 55–67 (2008).

14. Dashdavaa, E.; Khuderchuluun, A.; Lim, Y.-T.; Jeon, S.-H.; Kim, N. Holographic stereogram printing based on digitally computed content generation platform. Proc. SPIE 10944, 109440M (2019).

15. Gentet, P.; Coffin, M.; Gentet, Y.; Lee, S. H. From Text to Hologram: Creation of High-Quality Holographic Stereograms Using Artificial Intelligence. Photonics 2024, 11, 787.

16. Gentet, P.; Coffin, M.; Choi, B.H.; Kim, J.S.; Mirzaevich, N.O.; Kim, J.W.; Do Le Phuc, T.; Ugli, A.J.F.; Lee, S.H. Outdoor Content Creation for Holographic Stereograms with iPhone. Appl. Sci. 2024, 14, 6306.

17. Lee, S.; Kwon, S.; Chae, H.; Park, J.; Kang, H.; Kim, J. D. K. Digital hologram generation for a real 3D object using a depth camera. In Practical Holography XXXIII: Displays, Materials, and Applications, H. I. Bjelkhagen and V. M. Bove, eds., Proc. SPIE 10944, 109440J (2019).

18. Dashdavaa, E.; Khuderchuluun, A.; Wu, H.-Y.; Lim, Y.T.; Shin, C.W.; Kang, H.; Jeon, S.H.; Kim, N. Efficient Hogel-Based Hologram Synthesis Method for Holographic Stereogram Printing. Appl. Sci. 2020, 10, 8088.

19. Iizuka, S.; Simo-Serra, E.; Ishikawa, H. Let there be Color!: Joint End-to-end Learning of Global and Local Image Priors for Automatic Image Colorization with Simultaneous Classification. ACM Trans. Graph. 2016, 35, 1–11.

20. Liu, F.; Shen, C.; Lin, G. Deep convolutional neural fields for depth estimation from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 5162–5170 (2015).

21. Su, J.; Yuan, Q.; Huang, Y.; Jiang, X.; Yan, X. Method of single-step full parallax synthetic holographic stereogram printing based on effective perspective images’ segmentation and mosaicking. Opt. Express 2017, 25, 23523–23544.

22. Gentet, Y.; Gentet, P. CHIMERA, a new holoprinter technology combining low-power continuous lasers and fast printing. Appl. Opt. 2019, 58, G226–G230.

23. Gentet, P.; Gentet, Y.; Lee, S. CHIMERA and Denisyuk hologram: comparison and analysis. In Practical Holography XXXV: Displays, Materials, and Applications, Proc. SPIE 11710, 83–89 (2021).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).