1. Introduction

Three-dimensional (3D) reconstruction creates a detailed digital model of real-world objects or scenes using 2D images, providing precise spatial data for fields like engineering, medicine, and archaeology [1]. One area that could greatly benefit from this technology is crime investigation, where reconstructing crime scenes helps investigators understand what occurred. The challenge lies in interpreting the aftermath, as evidence often supports multiple possible scenarios. To develop a complete narrative, investigators must combine physical traces with additional information such as police reports, witness accounts, forensic data, and their own expertise.

This research focuses on the application of 3D reconstruction in indoor crime scene investigations and examines how the method of filming a crime scene affects the quality of the resulting reconstructions. The findings can also be relevant for other applications by offering insights into optimising 3D reconstruction processes across different fields. For example, architectural visualisation can benefit from accurate indoor 3D reconstructions for design and renovation projects [2,3,4]. In cultural heritage preservation, detailed reconstructions of historical sites and artefacts can aid in documentation and restoration efforts [5,6]. Similarly, virtual reality (VR) and augmented reality (AR) applications in gaming and simulation training can achieve higher realism and immersion with optimized 3D reconstruction techniques [7].

To achieve improvements in 3D reconstruction, Neural Radiance Fields (NeRF) [8] is utilized, a relatively new approach that uses deep learning to generate highly detailed and accurate 3D models from 2D images by leveraging spatial and light interactions within the captured images. Gaussian Splatting further enhances this process by refining and smoothing the spatial data points, leading to better-quality reconstructions. Additionally, 3D Gaussian Splatting (3D-GS) [9] is a promising technique in computer graphics for 3D rendering of scenes, gaining traction due to its ability to efficiently render high-quality images while maintaining a compact scene representation. Unlike NeRF, which relies on neural networks conditioned on viewpoint and position, 3D-GS employs Gaussian functions that can be rasterized directly into images, facilitating faster rendering speeds and improved visual fidelity.

NeRF is not the first step in this new evolution of 3D reconstruction but is, rather, the building block of a family of algorithms, which includes SNeRF [10], Tetra-NeRF [11], NeRFacto [12], Instant-NGP [13], SPIDR [14], MERF [15], and so on. Each of them solves particular problems and extends the overall capabilities in a different way.

Preliminary research highlighted the importance of the camera, lens, and their settings as critical factors. The impact of each parameter on 3D reconstruction was thoroughly examined [16,17]. Additionally, the method used to film an environment was found to have a significant influence on the quality of the 3D reconstruction.

This paper concentrates solely on the method of filming indoor environments. The experimental setup consists of a living room, kitchen, and hallway. Four comparison experiments were conducted, varying one parameter at a time, to identify the optimal scan method for high-quality 3D reconstructions in indoor crime scene scenarios.

We investigate various factors that influence the quality of 3D reconstructions, focusing primarily on camera orientation, operator walking speed, layering techniques, and scanning paths. The orientation of the camera during capture plays a significant role. Landscape mode offers a broader horizontal field of view, ideal for wide scenes, while portrait mode enhances vertical detail, which is better suited for tall subjects. Capturing the same environment in both modes enables an analysis of differences in data quality, level of detail, and the accuracy of 3D reconstructions.

Another key factor is the walking speed of the camera operator, as rapid movement can cause autofocus issues, resulting in blurry frames that negatively impact the reconstruction quality. By testing various walking speeds and comparing the resulting video lengths, we assess how motion clarity influences the final 3D model. Furthermore, the use of various layering techniques is examined to enhance data capture from multiple heights, along with the significance of scanning paths to achieve comprehensive environmental coverage. Together, these tests aim to optimize the methodology for achieving high-quality 3D reconstructions.

Accurate 3D reconstructions in crime scene investigations may provide crucial insights into the sequence of events and assist investigators in analysing evidence more efficiently. Traditional forms of representation, such as sketching or photographing the scene, can miss important details. 3D reconstruction, on the other hand, provides a complete, interactive way to view the scene. This technology aids in visualizing the crime scene, understanding the spatial relationships between various pieces of evidence, and displaying discoveries in court.

This paper contributes to the field of 3D reconstruction, with a special emphasis on its application within crime scene investigation. The contributions can be divided into theoretical and practical advancements.

Theoretical Contributions

• Impact analysis of the filming method: The study presents an in-depth theoretical look at how different filming methods, including camera orientation, filming speed, camera layers, and filming path, affect the quality of 3D reconstruction. It deepens the understanding of how these settings interact and how they influence noise levels and detail accuracy in the reconstructed models.

• Advancement in 3D reconstruction techniques: This paper uses Gaussian Splatting to advance current 3D reconstruction methodologies. It describes how these newer methods can be very effective in producing highly detailed, accurate 3D reconstructions from 2D images, and how they are well suited to difficulties such as complex lighting conditions and fine detail.

• Extension of theoretical framework: This research extends the theoretical framework related to 3D reconstruction. For example, a framework for optimizing camera capture could be applied to applications it has not been used for before. There is considerable potential for using advanced reconstruction techniques in architecture, archaeology, and digital media.

Practical Contributions

• Crime Scene Investigation Guidelines: The guidelines in the research demonstrate how forensic investigators can achieve an accurate 3D reconstruction of a crime scene. The primary focus of this paper was on the methodology for filming a crime scene. The key takeaway is the importance of capturing the scene from multiple angles by circling around specific objects in different zones.

• 3D Modelling Processes Optimization: The results of the research give very practical recommendations for optimization of 3D reconstruction processes within numerous fields. These recommendations can significantly improve the quality of 3D reconstructions used in architectural visualization, cultural heritage preservation, and VR/AR applications for enhanced realism and immersion.

This paper advances the methodology of 3D reconstructions in crime scene investigation with both theoretical and practical contributions, thus widening the scope of applicability of 3D reconstruction technology in many professional fields.

This paper is organized as follows:

Section 2 describes the methodology used in this study, including the methods employed during image capture and how they meet the demands of crime scene investigations.

Section 3 presents the results of our experiments and comparative analysis of different methods.

Section 4 provides a detailed discussion of these findings, comparing them with existing literature and exploring their implications for various applications.

Finally, Section 5 concludes the paper, summarizing our key contributions and suggesting directions for future research.

2. Materials and Methods

2.1. Experimental Setup

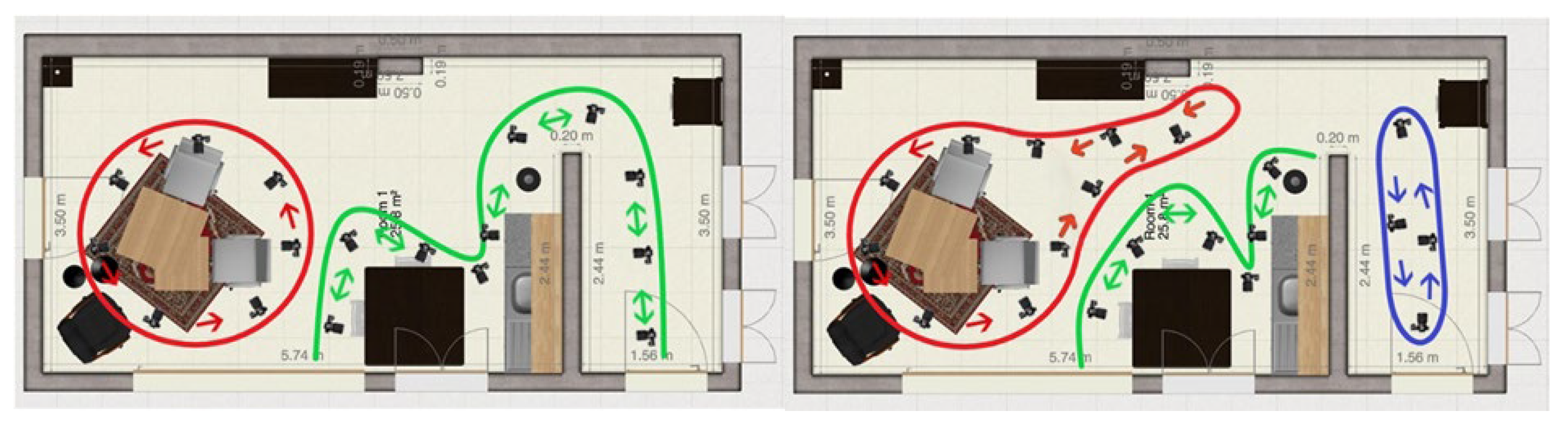

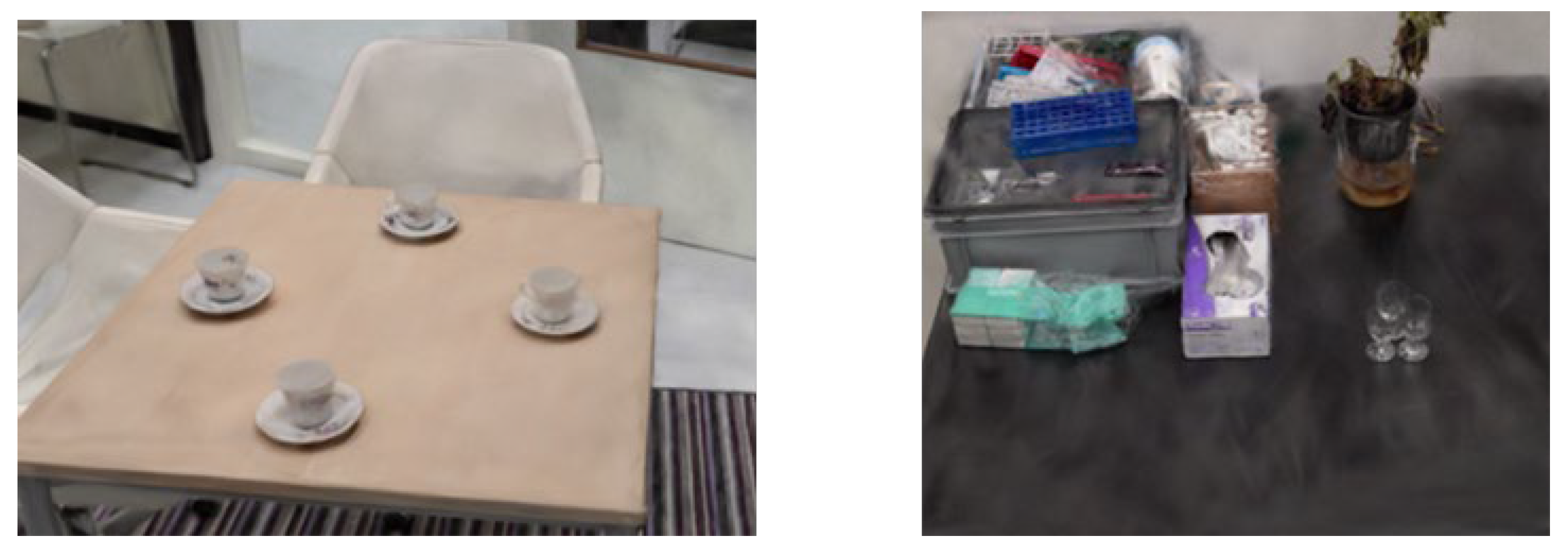

The experimental setup consists of a living room, kitchen, and hallway with several key features, such as tables, chairs, tableware, closets, ceiling, and lamps. These elements were chosen to provide a diverse set of objects with different geometric and textural information for the 3D-GS. The floor plan of the room is displayed in Figure 1.

Figure 1. Experimental setup.


The primary equipment was a Sony a7C [18] camera with a 24.2 MP full-frame Exmor R back-illuminated (BSI) CMOS sensor [18]. This camera was chosen for its full-frame sensor, which results in high image quality. The lens combined with the camera is a Sigma 14mm f/1.4 DG DN Art [19], a wide-angle lens that makes it possible to capture more of a room in a single frame. To create an even more consistent capturing method, a DJI RS 4 [20] gimbal was added to the camera setup. The gimbal results in a smoother capture of the environment, with less vibration and thus fewer unclear frames. Controlled lighting conditions were maintained across all captures.

To improve the quality of 3D reconstructions, particularly in noise reduction and detail accuracy, Postshot [21], an end-to-end software for Radiance Fields, is used to generate the 3DGS. The goal was to optimize the capturing method for rooms such as those mentioned above, while generating accurate 3D models.

We conducted four comparison experiments, each varying one capturing parameter at a time, using the same optimal camera settings and setup during all comparisons. The video footage was processed into 3D reconstructions, which were evaluated based on noise and detail accuracy. These criteria were chosen for their importance in forensic applications where both clarity and realism are essential for analysing evidence.

This approach allowed us to identify the optimal scan method for high-quality 3D reconstructions in indoor crime scene scenarios, providing valuable insights for future forensic investigations.

2.2. Camera Settings

Various camera settings significantly influence the quality of a 3D reconstruction. Because this study does not focus on camera settings, the camera is set to automatic mode. The one parameter not left to automatic mode is the aperture. A higher f-number (a smaller aperture opening) gives a greater depth of field and therefore a more detailed background [22]. The aperture is set to f/5.6 during capture.

2.3. Data Collection

2.3.1. Capturing Techniques

The camera was handheld with a gimbal throughout the capture process, allowing for flexible, controlled, and stable movements as needed. Four distinct camera movement techniques (truck, pedestal, boom, and arc) were utilized to achieve comprehensive scene coverage and capture high-quality data for the 3D reconstruction. Each technique was strategically used at different points along the paths based on the spatial characteristics of the scene and the specific details we aimed to capture.

These techniques were not used for comparison but combined into the different capturing methods to complement each other during the capturing process. Each technique was applied based on the specific spatial requirements of the scene.

2.3.2. Scanning Methods Comparison

Orientation Impact on 3D Reconstruction Quality

This test examines the impact of landscape and portrait modes on 3D reconstruction. Landscape mode (horizontal orientation) captures wide scenes, providing a broader field of view and more horizontal overlap between frames. This will ensure comprehensive scene coverage. Portrait mode (vertical orientation) is ideal for tall objects, offering better vertical detail with more overlap on the vertical axis. By capturing the same environment in both modes, the differences in data quality, level of detail, and accuracy of the 3D reconstructions were assessed.
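The difference in horizontal coverage between the two orientations can be estimated with the standard pinhole relation FOV = 2·atan(d / 2f), where d is the sensor dimension along the axis of interest and f is the focal length. The sketch below is illustrative only and is not part of the study's pipeline: it assumes a nominal 36 mm × 24 mm full-frame sensor, a rectilinear 14 mm lens, and no additional crop in video mode. The function name is hypothetical.

```python
import math


def field_of_view_deg(sensor_dim_mm: float, focal_length_mm: float) -> float:
    """Angular field of view along one sensor dimension (pinhole model)."""
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))


# Assumed nominal full-frame dimensions; the active area in video mode may differ.
SENSOR_LONG_MM = 36.0
SENSOR_SHORT_MM = 24.0
FOCAL_LENGTH_MM = 14.0  # focal length of the lens named in Section 2.1

# Landscape: the long sensor side lies along the horizontal axis.
landscape_hfov = field_of_view_deg(SENSOR_LONG_MM, FOCAL_LENGTH_MM)
# Portrait: the short sensor side lies along the horizontal axis.
portrait_hfov = field_of_view_deg(SENSOR_SHORT_MM, FOCAL_LENGTH_MM)

print(f"landscape horizontal FOV: {landscape_hfov:.1f} deg")
print(f"portrait horizontal FOV:  {portrait_hfov:.1f} deg")
```

Under these assumptions, a landscape frame spans roughly 104° horizontally against roughly 81° in portrait, so consecutive frames share more horizontal content when the operator moves sideways at the same speed.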

Effect of Walking Speed on 3D Reconstruction Quality

During filming, the camera operator navigates through the environment to collect data. If the camera operator moves too quickly, some video frames may become blurry because the autofocus cannot keep up. This test aims to examine the impact of blurry frames on 3D reconstruction. The difference in movement speed was assessed by comparing video lengths. Specifically, the duration of the video recorded at a slower movement speed is expected to be twice as long as the video recorded at a faster movement speed.
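One common way to quantify frame blur is the variance of the Laplacian, a focus measure that drops when fine detail is smeared out. The study does not state that it used this measure; the sketch below is a stand-in technique, written in pure Python on a synthetic grayscale grid, and all function names are hypothetical.

```python
from statistics import pvariance
from typing import List, Sequence

Image = Sequence[Sequence[float]]


def laplacian_variance(gray: Image) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Higher values indicate more high-frequency content (a sharper frame).
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses: List[float] = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4.0 * gray[y][x]
            )
    return pvariance(responses)


def box_blur(gray: Image) -> List[List[float]]:
    """3x3 mean filter with edge clamping, used here to simulate a soft frame."""
    height, width = len(gray), len(gray[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                gray[min(max(y + dy, 0), height - 1)][min(max(x + dx, 0), width - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(sum(window) / 9.0)
        blurred.append(row)
    return blurred


# Synthetic test pattern (not study data): a checkerboard of 2x2-pixel squares.
sharp_frame = [
    [255.0 if ((x // 2) + (y // 2)) % 2 == 0 else 0.0 for x in range(16)]
    for y in range(16)
]
soft_frame = box_blur(sharp_frame)

sharp_score = laplacian_variance(sharp_frame)
soft_score = laplacian_variance(soft_frame)
print(f"sharp: {sharp_score:.1f}, blurred: {soft_score:.1f}")
```

In practice such a score could be computed per video frame to compare the proportion of soft frames between a fast and a slow pass; any threshold separating "sharp" from "blurry" would have to be chosen empirically.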

Layering Technique for Enhanced 3D Reconstruction

Based on preliminary research, the importance of capturing an environment at various heights has been identified. For methods 1 to 10, we utilized a 3-layer technique, with the camera positioned at 0.3 meters, 1 meter, and 1.7 meters. This approach allows for more comprehensive data capture, leading to a better overall picture of the environment and improved 3D reconstruction. However, capturing the environment with more layers does increase the time required. The test aims to examine the difference in 3D reconstruction quality when capturing the environment with 1, 3, or 5 layers. The same object/zone was filmed using identical settings and setup, but the number of layers was varied. The five passes are shown in Figure 2. For 1 pass we used camera pose 3; for 3 passes, poses 1, 3, and 4; and for 5 passes, all five poses.
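The layer configurations of this test can be written down as a small lookup table. The sketch below records the pose selection stated in the text and adds a rough capture-time estimate; the per-pass duration is a hypothetical placeholder, and the assumption that every pass takes equally long is ours, not the study's.

```python
from typing import Dict, Tuple

# Camera poses used per layer count, as stated in the text
# (pose numbering follows Figure 2).
POSES_BY_LAYER_COUNT: Dict[int, Tuple[int, ...]] = {
    1: (3,),
    3: (1, 3, 4),
    5: (1, 2, 3, 4, 5),
}


def estimated_capture_time_s(layer_count: int, seconds_per_pass: float) -> float:
    """Total filming time, assuming every pass along the path takes equally long."""
    poses = POSES_BY_LAYER_COUNT[layer_count]
    return len(poses) * seconds_per_pass


# Hypothetical pass duration, for illustration only.
SECONDS_PER_PASS = 60.0
for count in sorted(POSES_BY_LAYER_COUNT):
    total = estimated_capture_time_s(count, SECONDS_PER_PASS)
    print(f"{count} layer(s): poses {POSES_BY_LAYER_COUNT[count]}, about {total:.0f} s")
```

Under this simple model, capture time grows linearly with the number of layers, which is the cost that has to be weighed against any gain in reconstruction quality.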

Scanning Paths

The previously mentioned parameters play a crucial role in data collection. Another significant factor is the specific path taken through an environment. Each path differs in some way from the others. The results from testing one filming path served as the basis for designing the next, and this continued until a suitable path for the use case was found. All paths were filmed with the same camera and the same settings. The scanning paths were captured with the optimal filming technique derived from tests 1, 2, and 3.

The scanning paths are shown and briefly explained below:

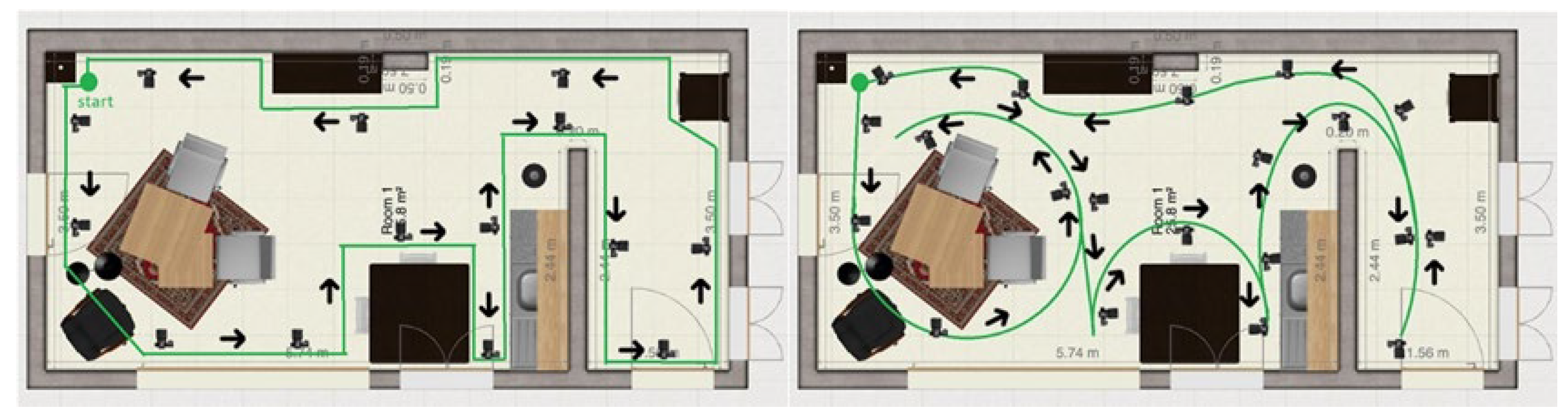

Method 1: Following the contours of the whole environment

The camera operator follows the contours of the environment while pointing the camera inwards. The path is shown in Figure 3 (1). The camera operator starts in the upper left corner.

Method 2: Circle around the main objects in the rooms in one path

The camera operator circles partly around the main objects in the room in one connected path. The objects are a dining table, kitchen table, kitchen, closet, and hallway. This method is expected to capture more detail of the individual objects because it focuses on them. The path is shown in Figure 3 (2). The camera operator starts in the upper left corner.

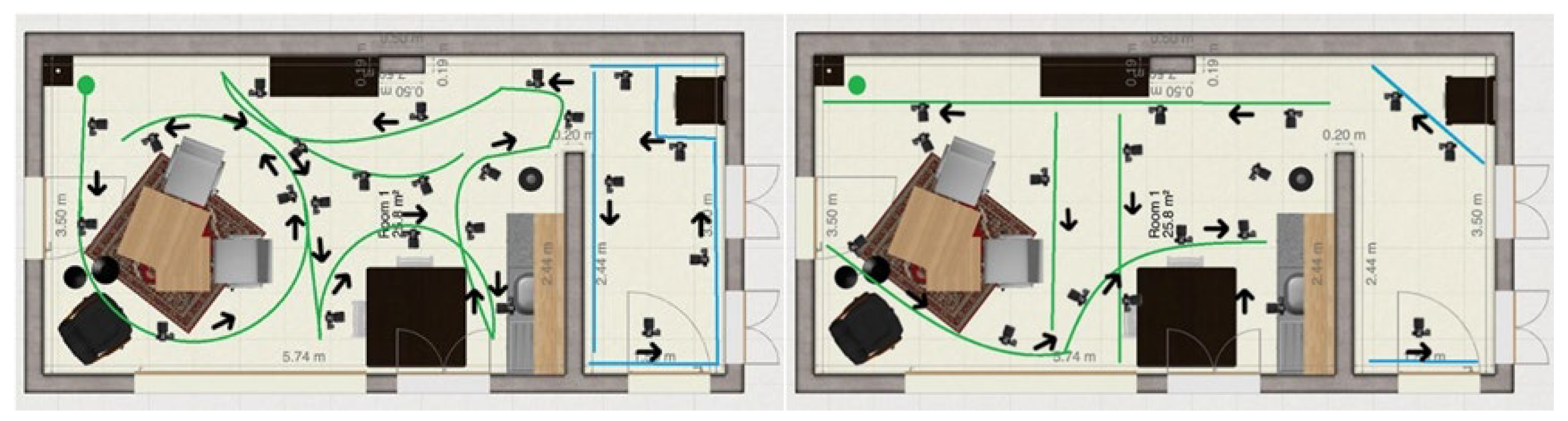

Method 3: Circle around the main objects in the room and separate hallway

This method is similar to Method 2; the main difference is that the hallway is filmed separately from the kitchen/living room. At the end of the path in the living room, an extra part is added to capture more data of the room from a distance. The path in the hallway follows the same principle as in Method 1. The two videos are processed separately by the 3D reconstruction algorithm to create two reconstructions. The paths are shown in Figure 4 (1).

Method 4: Four passes in the living room and a separated hallway

The camera operator makes two separate passes while facing the camera inward on the horizontal axis. On the vertical axis in the living room, the camera faces outwards. In the hallway, the camera operator makes two horizontal passes, one on either side, while facing the camera inwards. The paths are shown in Figure 4 (2).

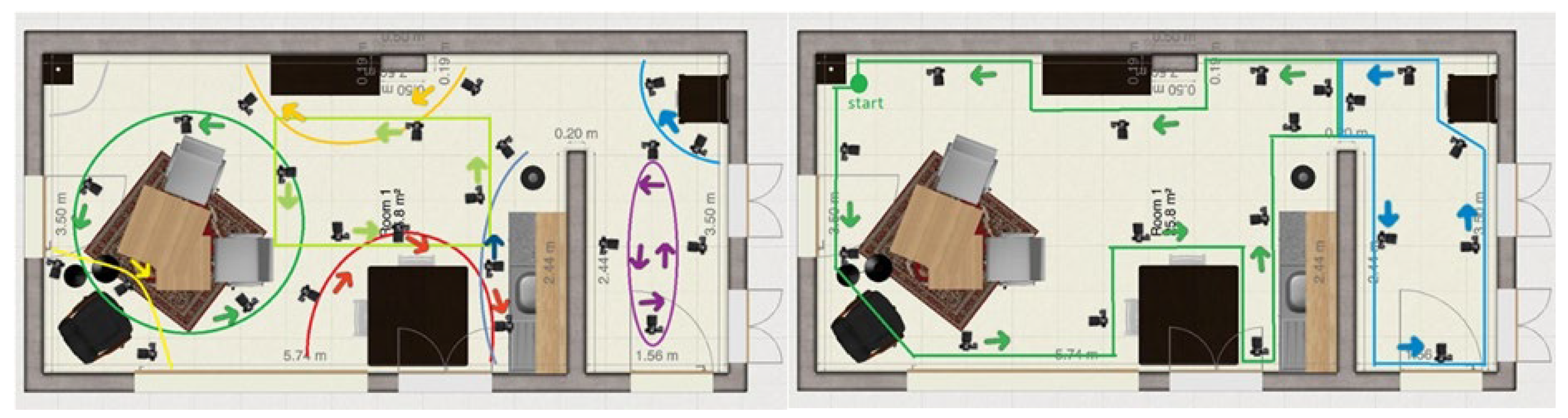

Method 5: Individual objects

Every main object/zone is filmed separately to capture more detail. The different paths are shown in Figure 5 (1). The room is separated into the following objects/zones: leather seat, side table, dining table, kitchen table, closet, kitchen, corner closet, and hallway. With this method, each video is processed separately by the 3D reconstruction algorithm.

Method 6: Alternative to Method 1

This method is the same as Method 1, but now the living room and the hallway are filmed separately. The two videos are processed separately by the 3D reconstruction algorithm. The path is shown in Figure 5 (2).

Method 7: Three-zone scans

The room is separated into three zones, and each zone is captured separately. The path is shown in Figure 6 (1). Zone one (blue) captures the living area, zone two (red) captures the kitchen area, and zone three (purple) captures the hallway. The three videos are each processed separately by the 3D reconstruction algorithm.

Method 8: Three-zone scans v2

The previous testing round showed that the best results were obtained when the room was divided into multiple zones. Method 8, shown in Figure 6 (2), involves two loop closures: one at the coffee table and one in the hallway.

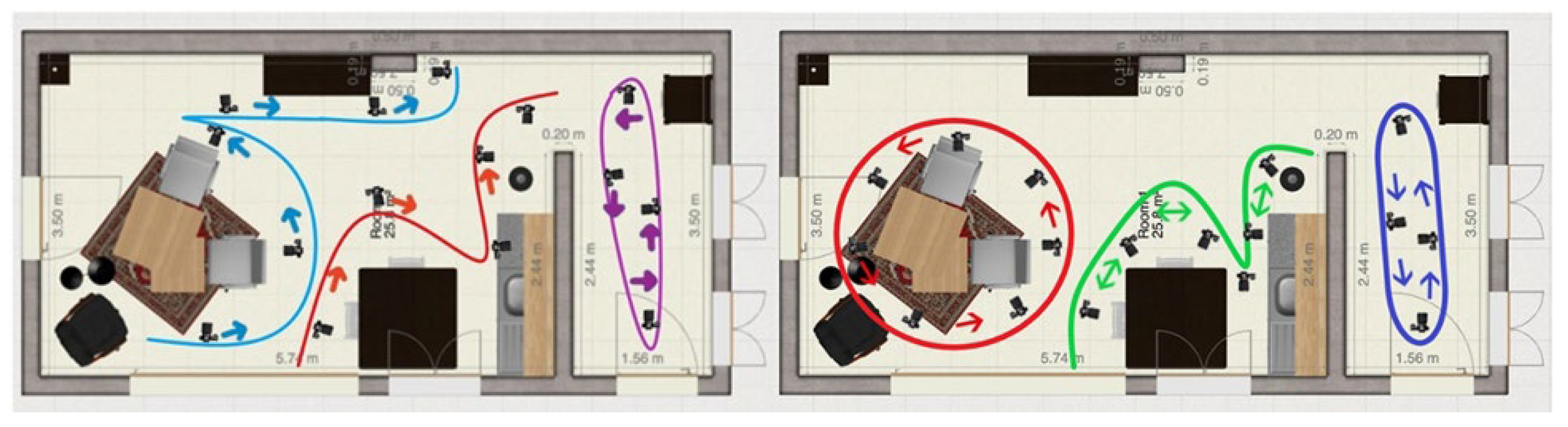

Method 9: Two zone scans

Method 9 involves capturing two videos and is presented in Figure 7 (1). One circles around the coffee table, while the other follows a continuous path around the dining table, kitchen, and hallway. This approach is expected to improve capture of the hallway. However, it may overlook some details, specifically the windows and the closet in the hallway corner. To address this, it is important to focus on capturing these features when rounding the corner.

Method 10: Alternative of Method 8

This method is similar to Method 8; the only difference is the extra loop around the closet. The path is shown in Figure 7 (2). This method is expected to produce a better reconstruction of the black cabinet.

2.4. Comparative analysis criteria

In this study, we captured a continuous video sequence using a handheld camera, which was then processed into a 3D reconstruction model. The 3D reconstructions are assessed from selected viewpoints within the reconstruction. These viewpoints were chosen for their ability to represent different textures, lighting conditions, and surfaces common in indoor crime scenes.

There are three focus areas: the living room with a coffee table, the kitchen with a dining table, and the hallway. These areas offer varying textures and lighting challenges, making them ideal for comparison. The 3D reconstructions were evaluated based on two criteria: (1) Noise (artifacts in the model), and (2) Details (fidelity of the reconstruction). In Table 1, these criteria are rated on a 1-5 scale, allowing us to systematically compare different scanning methods. This framework adds a novel approach for assessing 3D reconstructions in forensic applications.
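A two-criterion rating of this kind can be recorded and compared with a small data structure. The sketch below is a minimal illustration under stated assumptions: that a score of 5 is the best value on both criteria, and that the two criteria are weighted equally when ranking. Neither convention is specified in the text, the class and function names are hypothetical, and the example values are placeholders rather than the study's results.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class MethodRating:
    """Rating of one scanning method on the two 1-5 criteria."""
    method: str
    noise: int   # assumed convention: 5 = least noise
    detail: int  # assumed convention: 5 = most detail

    def __post_init__(self) -> None:
        for name in ("noise", "detail"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def total(self) -> int:
        # Equal weighting of both criteria is an assumption of this sketch.
        return self.noise + self.detail


def rank_methods(ratings: List[MethodRating]) -> List[MethodRating]:
    """Sort methods from best to worst by summed score (ties keep input order)."""
    return sorted(ratings, key=lambda r: r.total, reverse=True)


# Placeholder values for illustration; these are NOT the study's results.
example = [
    MethodRating("A", noise=2, detail=3),
    MethodRating("B", noise=4, detail=5),
    MethodRating("C", noise=3, detail=3),
]
for rating in rank_methods(example):
    print(rating.method, rating.total)
```

Validating the range in `__post_init__` keeps out-of-scale entries from silently skewing a comparison across methods.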

3. Results

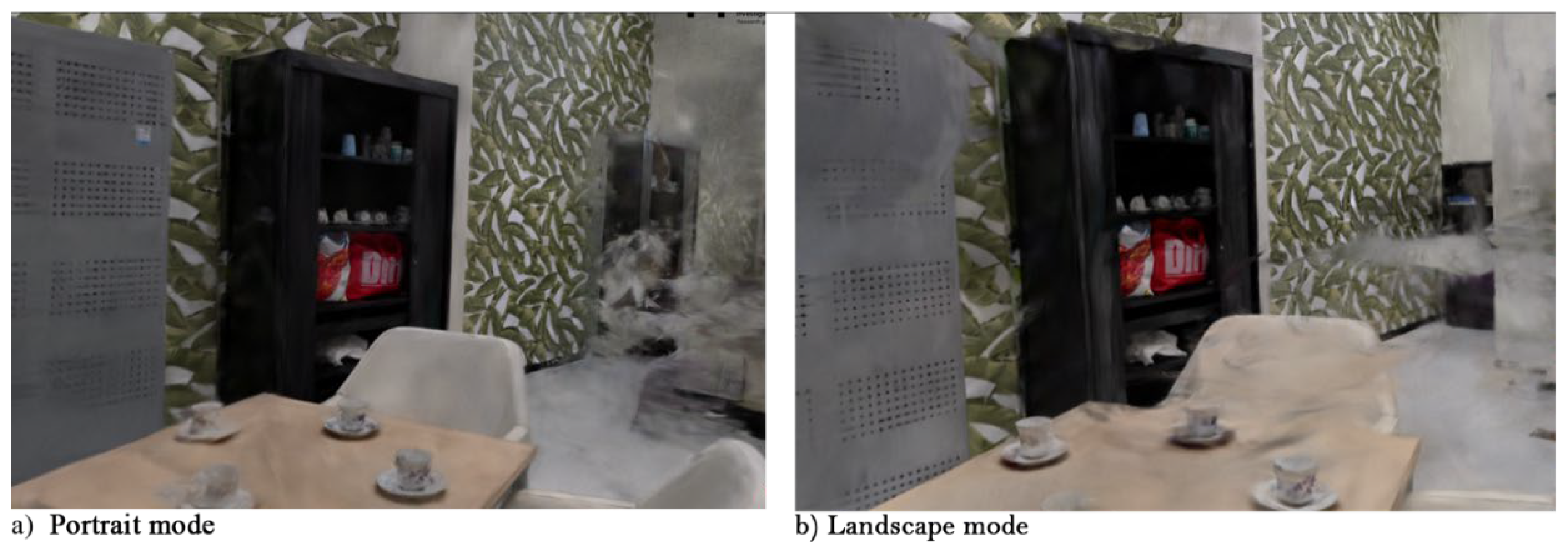

3.1. Orientation Impact on 3D Reconstruction Quality

The purpose of this test is to evaluate the difference in the quality of 3D reconstructions between environments captured in portrait mode and landscape mode. During the scanning process, Method 6 is employed. The difference between portrait and landscape modes is slight but noticeable. As illustrated in Figure 8, the quality is generally comparable. However, in portrait mode, the wall with leaves exhibits a duplicated black closet, and the wall continues beyond this duplicated closet. This anomaly is absent in landscape mode.

Additional scans comparing the two orientations consistently showed that landscape mode yields slightly better results. The primary conclusion is that filming in landscape mode is superior to portrait mode. We hypothesize that portrait mode is slightly inferior because most data overlaps on the vertical axis rather than the horizontal axis. Given that the camera operator moves along the horizontal axis, horizontal overlap is preferable to ensure features are recognized and matched more frequently.

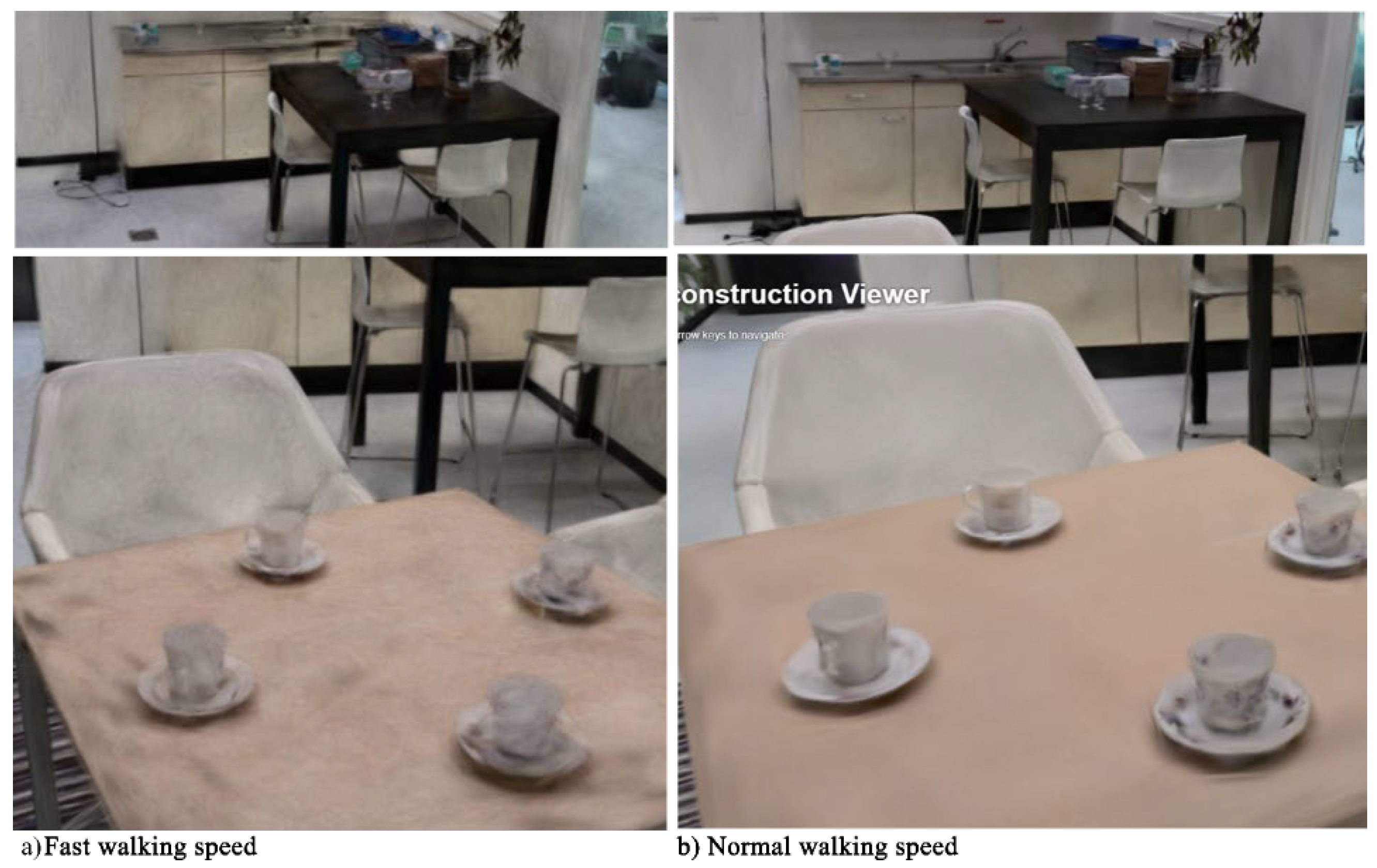

3.2. Effect of Walking Speed on 3D Reconstruction Quality

The duration of the video recording serves as a proxy for the walking speed of the camera operator. The trajectory followed by the camera operator is the same in both videos; only the speed at which the operator moves varies. The video recorded at normal speed (3:43 min) is approximately twice as long as the faster video (1:56 min).
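Because both recordings follow the same trajectory, the ratio of the two durations gives the ratio of the average walking speeds. The short calculation below makes this explicit using the two durations reported above; the helper name is hypothetical, and the equal-path-length premise is taken from the text.

```python
def duration_to_seconds(mm_ss: str) -> int:
    """Convert a 'minutes:seconds' string to whole seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


normal_s = duration_to_seconds("3:43")  # normal-speed recording
fast_s = duration_to_seconds("1:56")    # faster recording

# Same path in both videos, so average speed scales inversely with duration.
speed_ratio = normal_s / fast_s
print(f"normal: {normal_s} s, fast: {fast_s} s, fast/normal speed ratio: {speed_ratio:.2f}")
```

The durations are 223 s and 116 s, so the fast pass was on average about 1.9 times quicker; at a fixed frame rate it therefore also yields only about half as many frames of the same path.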

The differences between the fast and slow recordings are clearly evident. At a slower speed, more details, particularly smaller objects, become visible. Additionally, there is significantly less noise in the 3D reconstructions produced from the slower video. This experiment demonstrates that a slower filming speed enhances the detail of the reconstruction. The underlying reason is that a longer video provides more frames for the algorithm to select from. Furthermore, slower movements allow the camera more time to focus on the environment, thereby improving the quality of the 3D reconstruction. Another factor is that slower movements result in greater frame overlap, which increases the number of features that can be matched between frames. All this is visible in Figure 9.

In summary, a slower filming speed increases the amount of data, enhances the quality of the data, and facilitates the matching of more features between frames, all of which contribute to higher quality 3D reconstructions.

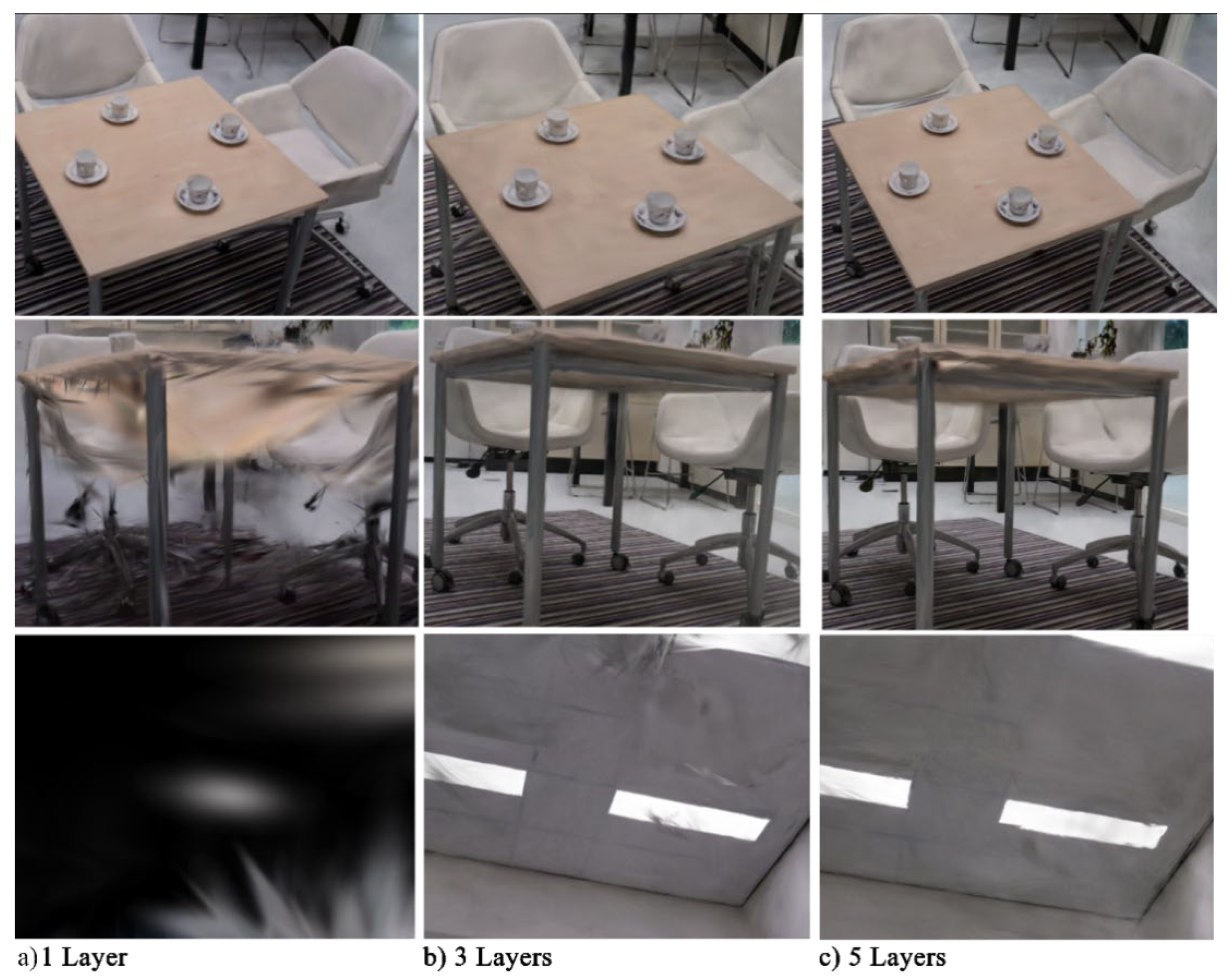

3.3. Layering Technique for Enhanced 3D Reconstruction

Preliminary research underscores the significance of capturing an environment at varying heights to maximize data acquisition. This test aims to analyse the impact of using 1, 3, or 5 layers on the quality of 3D reconstruction. To evaluate the quality of each reconstruction, three reference images are utilized: the top of the table, the bottom of the table, and the ceiling. For an optimal 3D reconstruction, all these components should be clearly visible and of high quality.

Top of the Table:

All three reconstructions (1, 3, and 5 layers) provided clear visibility of the tabletop.

The scan with a single layer showed the sharpest 3D reconstruction with minimal noise.

The scans with 3 and 5 layers, while acceptable, were slightly less sharp compared to the single-layer scan.

Bottom of the Table:

The single-layer scan failed to capture the bottom of the table and the sides, including the legs of the chairs.

Both the 3-layer and 5-layer scans successfully captured the bottom of the table and the chair legs.

The 3-layer scan performed better in visualizing the sides and bottom of the table compared to the 5-layer scan.

Ceiling:

The ceiling in the single-layer scan appeared pitch black due to the absence of data, which the AI algorithm filled with black.

The difference between the 3-layer and 5-layer scans was minimal, with the 3-layer scan being slightly sharper.

A single-layer scan is effective for capturing specific details, such as the surface of a table, but falls short when it comes to achieving a complete 3D reconstruction of an entire environment. The difference between the 3-layer and 5-layer scans was minimal, with the 3-layer scan often outperforming the 5-layer scan. This may be attributed to the 5-layer scan providing excessive data, complicating the algorithm’s ability to accurately align the frames. For this use case, a 3-layer scan is sufficient. All of the above is visible in Figure 10.

3.4. Most Optimal Scan Method

3.4.1. Summary of Methods

All the methods mentioned above have been tested. The chosen method within an environment significantly impacts the quality of the 3D reconstruction. Each scan was evaluated based on the amount of noise present and the level of detail achieved. The results varied across the different scans. The poorest quality was observed in scan number 3, where the 3D reconstructed environment was barely recognizable. In contrast, scans 5, 8, and 10 yielded excellent results, characterized by high detail and minimal noise. These three reconstructions will be discussed in detail in the next section.

The analysis primarily concludes that capturing the hallway, living room, and kitchen in a single video is ineffective. The algorithm receives an excessive amount of data, resulting in a poorly reconstructed model. Dividing the environment into multiple scans enhances the reconstruction quality, making it more detailed. However, this method is not ideal, as integrating all reconstructions into a single cohesive model remains challenging. Segmenting the room is nevertheless recommended; for the room used in these tests, a maximum of three segments is recommended.

Reflecting on Method 5, scanning around a specific object (loop closures) proved beneficial. For instance, the scan around the coffee table produced a high-quality reconstruction. For scans 8 and 10, the environment was divided into three paths, which resulted in the best reconstructions. However, reconstructing the hallway remains difficult due to its height, narrowness, and large monotone surfaces, which complicate the reconstruction process. The analysis of all scanning methods is visible in Table 2.

3.4.2. Method 5

Method 5 yielded promising results. In this scan, each object or area was individually scanned, resulting in nine separate 3D reconstructions. Most of these individual reconstructions were of high quality. Among the nine reconstructions, images were captured of the kitchen, the kitchen table, and the coffee table, as illustrated below. Figure 11 showcases the reconstruction of the kitchen table, where the table, coffee cups, and chairs are clearly visible with minimal noise in the 3D data. The objects on both the kitchen table and counter are well-defined and easily identifiable, as shown in Figure 12.

Overall, scanning specific objects or zones led to more detailed and accurate 3D reconstructions, confirming that isolating individual areas improves reconstruction quality. However, one limitation is that current 3D reconstruction technology still requires manual work to merge separate scans. This means that each scan must be cropped, resized, and aligned to assemble a complete 3D reconstruction of an entire room. While larger zones can be connected, merging nine separate reconstructions would be time-consuming and challenging. Given the current limitations in splat file editing applications, this process is cumbersome and impractical for now. In summary, while focusing on individual zones enhances the quality of 3D reconstructions, this method is not yet feasible for whole-room reconstructions until more advanced algorithms or tools are developed to automate merging and clean up multiple 3D scans.

3.4.3. Methods 8 and 10

Methods 8 and 10 are identical, with the exception that in Method 10, the area around the coffee table is extended to include the side of the black cabinet, resulting in a complete reconstruction of the cabinet. The images below show screenshots of the cabinet, kitchen, and coffee table, all of which are captured in detail. Some items even have readable text. However, there is still some noise in the reconstructions, although this can be removed with further processing.

Figure 11. Results from Method 8 and Method 10.


Figure 12. Tabletop results from Method 8 and Method 10.


In summary, Method 5 produced very detailed 3D reconstructions of individual objects and areas. However, due to the lack of effective techniques for stitching 3D reconstructions, this method is not suitable. It takes too much time to attach nine smaller 3D reconstructions to each other. Method 10 emerged as the best scan path. The general living/kitchen area was reconstructed well while maintaining an efficient workflow. However, despite its effectiveness in more complex areas, limitations persist, particularly in narrow or featureless spaces like hallways. The inability to effectively stitch reconstructions of individual objects into a cohesive whole reflects current technological constraints in handling multiple separate scans.

4. Discussion

This section evaluates the implications of the findings on capturing techniques for 3D reconstruction, emphasizing their practical applications, limitations, and alignment with existing literature. By comparing the observed results with previous studies, this section identifies how specific capturing methods influence reconstruction quality, noise reduction, and detail accuracy. Additionally, it highlights the potential for refining current workflows, addresses the limitations imposed by technological constraints, and proposes avenues for future research to enhance the efficiency and adaptability of 3D reconstruction processes.

4.1. Comparison with Literature

The importance of slow, deliberate camera movements in improving 3D reconstruction quality is well-documented. Zhang et al. [23] highlighted that optimized camera trajectories, such as those implemented in ROSEFusion, enhance feature matching and reduce noise artifacts even under dynamic conditions. Consistent with their findings, our study demonstrated that slower camera movements produced higher-quality reconstructions, with improved detail and reduced noise. This is attributed to increased frame overlap and better focus, aligning with the broader consensus in the literature.

Camera orientation also plays a pivotal role in the quality of 3D reconstructions. While portrait and landscape modes offer unique advantages, landscape orientation is generally preferred for its broader field of view and greater horizontal overlap. This observation is supported by principles in photogrammetry, which emphasize the importance of maximizing overlap for better feature recognition [24]. Our results reinforce this understanding, with landscape mode consistently yielding superior reconstructions compared to portrait mode, especially for horizontally expansive environments.

Reconstructing narrow or featureless spaces remains a persistent challenge. Lu et al. [25] identified that such environments hinder feature detection, impacting reconstruction accuracy. This was evident in our study, where hallways posed significant difficulties for reconstruction algorithms due to their monotone surfaces and lack of distinctive features. However, our segmentation-based methods, particularly Method 10, showed promise in mitigating these issues by dividing environments into manageable zones. This approach echoes similar strategies proposed in prior research but extends them by incorporating Gaussian Splatting techniques to further enhance reconstruction fidelity.

4.2. Potential Applications

The findings of this research have significant implications across multiple fields requiring high-quality 3D reconstructions. In crime scene investigations, accurate 3D models enhance evidence analysis and presentation by capturing spatial relationships and event sequences. Beyond forensics, these optimized methods support architecture, archaeology, and cultural heritage preservation, enabling precise, non-invasive documentation and restoration efforts while enhancing public engagement through virtual experiences.

In VR and AR, high-quality 3D models improve realism and immersion, benefiting training simulations, gaming, and education. By applying the recommended capturing techniques, developers can achieve clearer, more detailed models, broadening the applicability of 3D reconstruction technology across diverse professional domains.

4.3. Limitations and Future Research

4.3.1. Limitations

This study had several limitations. The experimental setup was constrained by the available space and objects, which may not be fully representative of typical crime scenes. Additionally, the inability to fully automate the merging of individual object scans into a single coherent model limited the study’s application to real-world crime scene reconstruction. The study was also limited by the specific technology and software used for 3D Gaussian Splatting (3D-GS), which may not have been the most advanced available at the time. The manual intervention required to align multiple scans remains a challenge, emphasizing the need for more sophisticated algorithms and tools in future studies.

4.3.2. Future Research

Future work should focus on overcoming the limitations of merging multiple scans into a cohesive whole, perhaps by advancing the software algorithms used in 3D reconstruction. Further exploration into using more advanced stabilizers or integrating markers within the scene could enhance the quality of the reconstructed models. Additionally, investigating how different lighting conditions or environments affect the 3D Gaussian Splatting process could broaden the applicability of this method in real-world crime scene reconstructions. There is also room for improvement in the reconstruction of narrow, featureless spaces like hallways, where more specialized techniques may be necessary.

5. Conclusions

This study explored various parameters influencing the quality of 3D reconstructions in forensic contexts, focusing on capturing methods using handheld cameras in indoor environments. By systematically adjusting factors such as camera orientation, filming speed, layering techniques, and scanning paths, the research identified the most effective strategies for achieving detailed and accurate reconstructions of crime scenes.

The findings highlight the advantages of using a landscape orientation over portrait mode, as it offers superior horizontal overlap, which is essential for aligning features effectively. This minimizes anomalies and ensures a more consistent reconstruction quality. Furthermore, the results demonstrated that slower camera movements significantly enhance the quality of reconstructions. The additional frames captured during slower movements allow for improved data quality and better feature matching, leading to reconstructions with higher detail and reduced noise.
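
The effect of filming speed on the amount of captured data follows from simple arithmetic. The sketch below assumes a constant walking speed along a straight path and a fixed video frame rate; the 10 m path, the 30 fps frame rate, and the two speeds are hypothetical values chosen for illustration, not measurements from this study.

```python
def frames_captured(path_length_m: float, speed_m_per_s: float, frames_per_second: float) -> int:
    """Number of video frames recorded while walking the path at constant speed."""
    return round(path_length_m / speed_m_per_s * frames_per_second)


def frame_spacing_m(speed_m_per_s: float, frames_per_second: float) -> float:
    """Distance the camera travels between two consecutive frames."""
    return speed_m_per_s / frames_per_second


# Assumed, illustrative values (not taken from the study).
PATH_LENGTH_M = 10.0
FRAME_RATE_FPS = 30.0

if __name__ == "__main__":
    for speed in (1.0, 0.25):  # metres per second
        frames = frames_captured(PATH_LENGTH_M, speed, FRAME_RATE_FPS)
        spacing_mm = frame_spacing_m(speed, FRAME_RATE_FPS) * 1000.0
        print(f"{speed:.2f} m/s: {frames} frames, {spacing_mm:.1f} mm between frames")
```

Quartering the speed quadruples the number of frames over the same path and reduces the spacing between neighboring viewpoints by the same factor, which is the additional redundancy that feature matching can draw on.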

In terms of layering, the study found that capturing the environment at three different heights provides an optimal balance between detail and processing efficiency. While additional layers occasionally introduced noise, the three-layer approach captured sufficient vertical data to reconstruct both the tops and bottoms of objects effectively. Scanning individual objects or areas, as seen in Method 5, produced high-quality reconstructions but was impractical due to the challenges of merging separate scans into a cohesive whole. Method 10, which employed segmented scanning with an additional loop for specific areas, emerged as the most practical approach, balancing detail and workflow efficiency. However, difficulties remained in reconstructing narrow, featureless spaces like hallways, underscoring limitations in current algorithms.

These findings provide valuable insights for forensic investigations, offering guidance on optimizing 3D reconstruction techniques with current technologies. While promising, the study also highlights the need for advancements in software to automate the merging of segmented scans into unified models. Future research could explore marker-based scanning techniques and more sophisticated reconstruction algorithms to address these challenges, enhancing the practical applicability of the proposed methods in real-world forensic scenarios.

By improving the quality and efficiency of 3D reconstructions, this study contributes to forensic science, architecture, and other fields requiring accurate spatial modeling, paving the way for more reliable and immersive applications of 3D technology.

Author Contributions

Conceptualization, K.W., S.W., R.M., M.v.K. and D.R.; methodology, D.R.; software, K.W., S.W. and D.R.; validation, K.W., S.W. and D.R.; formal analysis, D.R.; investigation, K.W., S.W. and D.R.; resources, D.R. and R.M.; data curation, D.R.; writing—original draft preparation, D.R.; writing—review and editing, D.R., M.v.K. and R.M.; visualization, K.W. and S.W.; supervision, M.v.K. and R.M.; project administration, D.R.; funding acquisition, D.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received financial support from the University of Twente and the research group Technologies for Criminal Investigations, part of Saxion University of Applied Sciences and the Police Academy of the Netherlands. This research is also part of the project “AI-aided robotic system for crime scene investigation and reconstruction”, funded by the Police and Science grant of the Police Academy of the Netherlands.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Acknowledgments

Special gratitude goes to the University of Twente in the Netherlands, the Technologies for Criminal Investigations (TCI) research group, part of Saxion University of Applied Sciences and the Police Academy of the Netherlands, the Technical University of Sofia in Bulgaria, and all researchers in the CrimeBots research line of the TCI research group. The authors also thank the Police Academy for the opportunity and the funding provided through the Police and Science grant.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Y. M. Mostafa, M. N. Al-Berry, H. A. Shedeed, and M. F. Tolba, “Data Driven 3D Reconstruction from 2D Images: A Review.” Lecture Notes on Data Engineering and Communications Technologies 2023, 152, 812–823.

- A. David, E. Joy, S. Kumar, and S. J. Bezaleel, “Integrating Virtual Reality with 3D Modeling for Interactive Architectural Visualization and Photorealistic Simulation: A Direction for Future Smart Construction Design Using a Game Engine.” Lecture Notes in Networks and Systems 2022, 300, 180–192.

- F. Remondino, S. El-Hakim, S. Girardi, A. Rizzi, S. Benedetti, and L. Gonzo, “3D VIRTUAL RECONSTRUCTION AND VISUALIZATION OF COMPLEX ARCHITECTURES-THE ‘3D-ARCH’ PROJECT.” Accessed: Jul. 15, 2024. [Online]. Available online: www.stefanobenedetti.com.

- M. G. Bevilacqua, M. Russo, A. Giordano, and R. Spallone, “3D Reconstruction, Digital Twinning, and Virtual Reality: Architectural Heritage Applications.” Proceedings - 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops 2022, 2022, 92–96.

- L. Gomes, O. Regina Pereira Bellon, and L. Silva, “3D reconstruction methods for digital preservation of cultural heritage: A survey.” Pattern Recognit Lett 2014, 50, 3–14.

- A. Cefalu, M. Abdel-Wahab, M. Peter, K. Wenzel, and D. Fritsch, “Image based 3D Reconstruction in Cultural Heritage Preservation.”

- B. Rodriguez-Garcia, H. Guillen-Sanz, D. Checa, and A. Bustillo, “A systematic review of virtual 3D reconstructions of Cultural Heritage in immersive Virtual Reality.” Multimedia Tools and Applications 2024, 2024, 1–51.

- B. Mildenhall, P. P. Srinivasan, M. Tancik, J. T. Barron, R. Ramamoorthi, and R. Ng, “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis.” Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2020; 12346, 405–421.

- B. Kerbl, G. Kopanas, T. Leimkuehler, and G. Drettakis, “3D Gaussian Splatting for Real-Time Radiance Field Rendering.” ACM Trans Graph 2023, 42, 14.

- T. Nguyen-Phuoc, F. Liu, and L. Xiao, “SNeRF: Stylized Neural Implicit Representations for 3D Scenes.” ACM Trans Graph 2022, 41, 11.

- J. Kulhanek and T. Sattler, “Tetra-NeRF: Representing Neural Radiance Fields Using Tetrahedra.” 2023, Accessed: Jul. 15, 2024. [Online]. Available online: https://github.com/jkulhanek/tetra-nerf.

- M. Tancik et al., “Nerfstudio: A Modular Framework for Neural Radiance Field Development.” Proceedings - SIGGRAPH 2023 Conference Papers, 2023.

- T. Müller, A. Evans, C. Schied, and A. Keller, “Instant Neural Graphics Primitives with a Multiresolution Hash Encoding.” ACM Trans Graph 2022, 41, 102.

- R. Liang, J. Zhang, H. Li, C. Yang, Y. Guan, and N. Vijaykumar, “SPIDR: SDF-based Neural Point Fields for Illumination and Deformation.” Oct. 2022, Accessed: Jul. 15, 2024. [Online]. Available online: https://arxiv.org/abs/2210.08398v3.

- C. Reiser et al., “MERF: Memory-Efficient Radiance Fields for Real-time View Synthesis in Unbounded Scenes.” ACM Trans Graph 2023, 42.

- D. Rangelov, J. D. Rangelov, J. Knotter, and R. Miltchev, “3D Reconstruction in Crime Scenes Investigation: Impacts, Benefits, and Limitations.” Lecture Notes in Networks and Systems, 2024; 1065, 46–64.

- D. Rangelov, S. Waanders, K. Waanders, M. van Keulen, and R. Miltchev, “Impact of Camera Settings on 3D Reconstruction Quality: Insights from NeRF and Gaussian Splatting.” Sensors 2024, 24, 7594.

- Sony, “Sony Alpha 7C Full-Frame Mirrorless Camera - Black| ILCE7C.” Accessed: Dec. 22, 2024. [Online]. Available online: https://electronics.sony.com/imaging/interchangeable-lens-cameras/all-interchangeable-lens-cameras/p/ilce7c-b?srsltid=AfmBOoo5N6vG9O3tR3d9p7ZKy9YqWMPZSzdnQnfZjfl4XP9WE2vRx1bz.

- Sigma, “14mm F1.4 DG DN | Art | Lenses | SIGMA Corporation.” Accessed: Dec. 22, 2024. [Online]. Available online: https://www.sigma-global.com/en/lenses/a023_14_14/.

- DJI, “DJI RS 4 - Gripping Storytelling - DJI.” Accessed: Dec. 22, 2024. [Online]. Available online: https://www.dji.com/bg/rs-4.

- Jawset, “Jawset Postshot.” Accessed: Dec. 22, 2024. [Online]. Available online: https://www.jawset.com/.

- Canon, “What Is Aperture Photography? | Canon U.S.A., Inc.” Accessed: Dec. 22, 2024. [Online]. Available online: https://www.usa.canon.com/learning/training-articles/training-articles-list/what-is-aperture.

- J. Zhang, C. Zhu, L. Zheng, and K. Xu, “ROSEFusion: Random Optimization for Online Dense Reconstruction under Fast Camera Motion.” ACM Trans Graph 2021, 40, 56.

- D. Lefcourt, “Portrait Vs Landscape Orientation In Photography (which Is Better?) - Lefcourt Photography.” Accessed: Dec. 22, 2024. [Online]. Available online: https://www.lefcourtphotography.com/portrait-vs-landscape-orientation-in-photography-which-is-better.

- F. Lu, B. Zhou, Y. Zhang, and Q. Zhao, “Real-time 3D scene reconstruction with dynamically moving object using a single depth camera.” Visual Computer 2018, 34, 753–763.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).