Submitted: 22 January 2025

Posted: 22 January 2025


Abstract

Keywords:

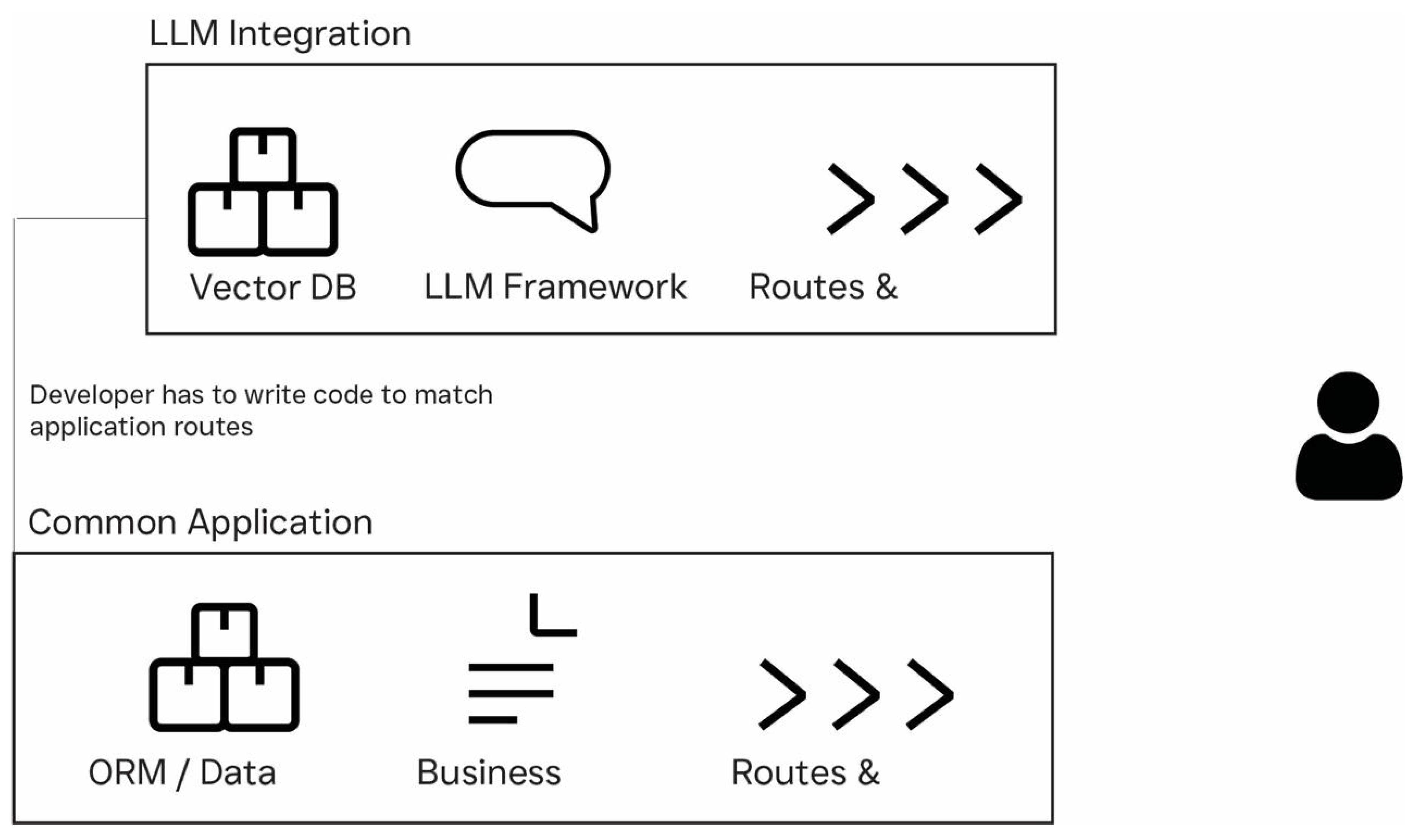

1. Introduction

2. Key Challenges in Enabling LLMs to Interact with Application Data

- Diverse and Non-Standardized Interfaces

- Context and Complexity of APIs

- Lack of Shared Knowledge Representation

- Safety, Reliability, and Operational Constraints

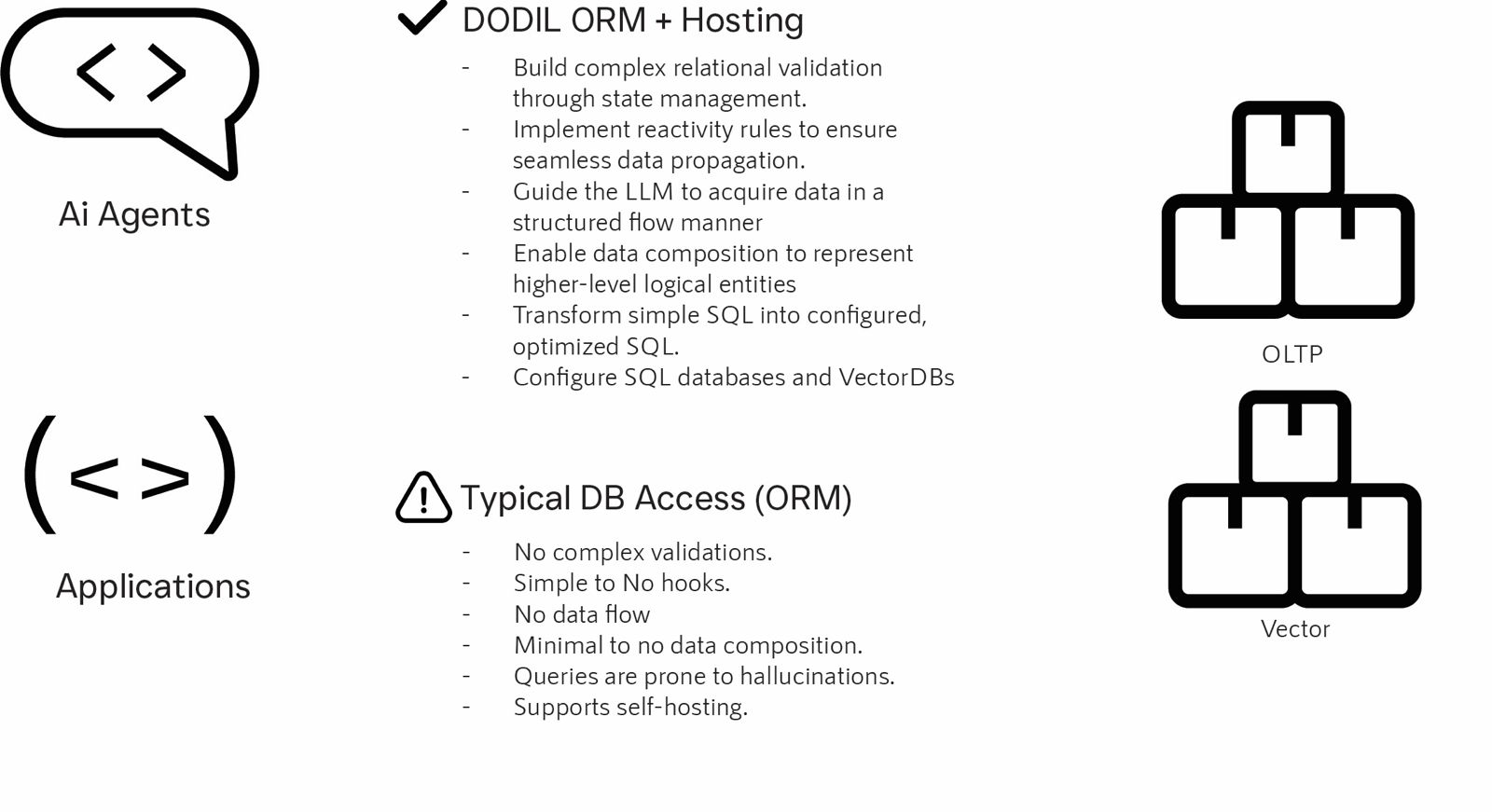

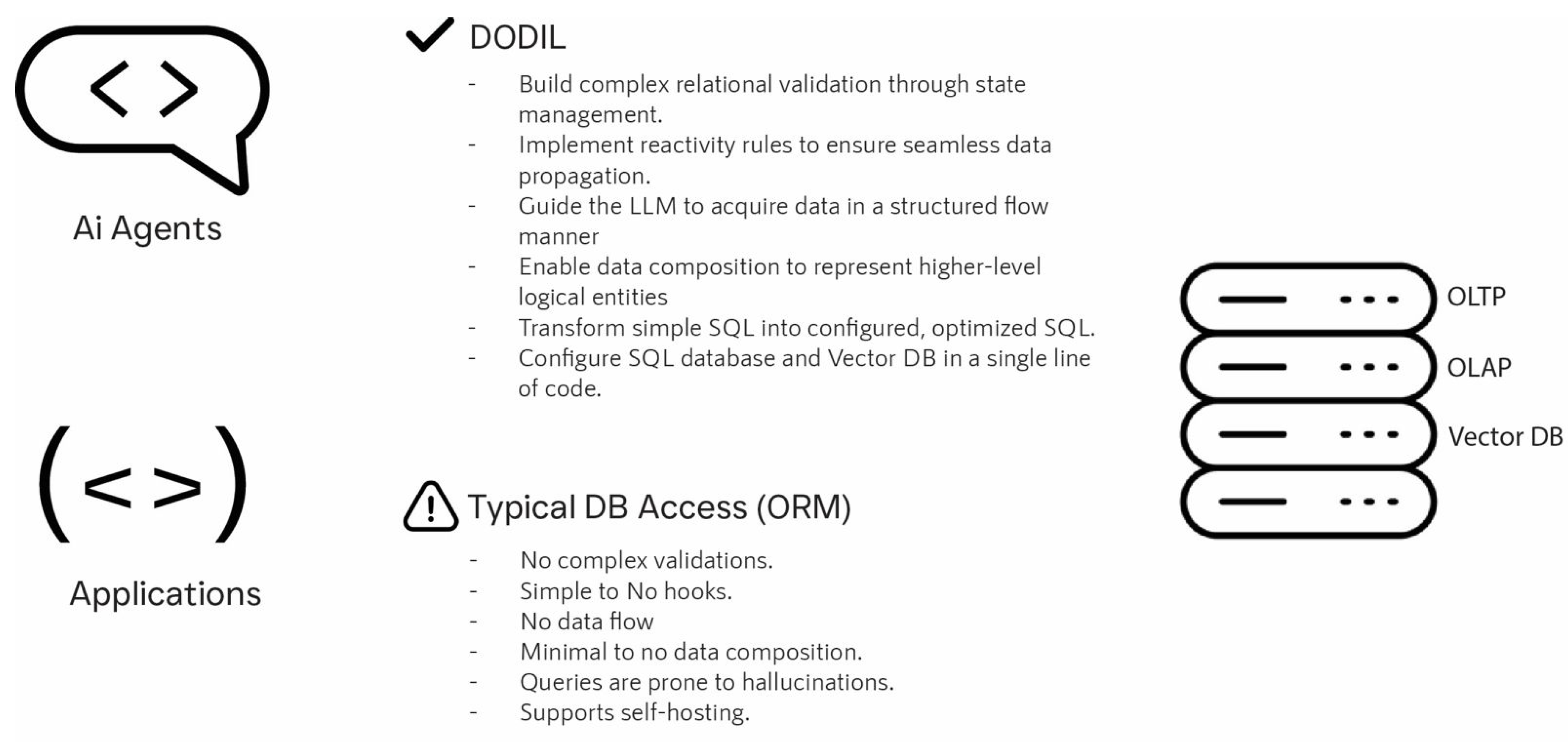

3. The Augmented Schema Framework: DODIL

4. Key Modules of the Augmented Schema Framework

- Relation

- Augment

- Compose

- State Management

- Reactivity

- Flow

- Prompt Management

- Datum: This is the singular, basic data form. It represents a single data point described by a single Schema.

- DataSet: This is a list of Datum from the same Schema, with predefined batch and math operations.

- DataComposite: This is an augmented data form of different Schemas connected via Relationships with the Eager or Lazy augmentation methods. An example is the composite that arises in an application involving the ordering of certain items.
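The three data forms can be pictured with a minimal Python sketch. This is an illustration only, not DODIL's actual API: the class layout, the `total` batch operation, and the `related` mapping are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Datum:
    """A single data point described by one schema."""
    schema: str
    values: dict[str, Any]


@dataclass
class DataSet:
    """A list of Datum objects that all share the same schema."""
    schema: str
    items: list[Datum] = field(default_factory=list)

    def add(self, datum: Datum) -> None:
        # Reject data points that belong to a different schema.
        if datum.schema != self.schema:
            raise ValueError(f"expected schema {self.schema!r}, got {datum.schema!r}")
        self.items.append(datum)

    def total(self, field_name: str) -> float:
        # Hypothetical batch/math operation: sum one numeric field.
        return sum(d.values[field_name] for d in self.items)


@dataclass
class DataComposite:
    """A root Datum plus related data from other schemas, keyed by relation name."""
    root: Datum
    related: dict[str, DataSet] = field(default_factory=dict)


# An order with two order items, combined into one composite.
order = Datum("Order", {"id": 1, "status": "open"})
items = DataSet("OrderItem")
items.add(Datum("OrderItem", {"order_id": 1, "quantity": 2}))
items.add(Datum("OrderItem", {"order_id": 1, "quantity": 3}))
composite = DataComposite(root=order, related={"items": items})
```

Keeping the schema name on every Datum lets a DataSet enforce that it only ever holds one kind of record.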

4.1. Relation

- An Order (parent) can have multiple Order Items (children)

- A Category (parent) can have multiple Subcategories (children)

- A User (parent) can have multiple Addresses (children)
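The parent/child pairs listed above could be declared along the following lines. This is a hedged sketch: the `Relation` class, the `foreign_key` field, and the helper functions are hypothetical names chosen for illustration and are not taken from DODIL.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Relation:
    parent: str
    child: str
    foreign_key: str  # assumption: the child field that stores the parent's id


RELATIONS = [
    Relation("Order", "OrderItem", "order_id"),
    Relation("Category", "Subcategory", "category_id"),
    Relation("User", "Address", "user_id"),
]


def children_of(parent_schema: str) -> list[str]:
    """Return the child schema names declared for a parent schema."""
    return [r.child for r in RELATIONS if r.parent == parent_schema]


def find_children(relation: Relation, parent_id: Any,
                  child_rows: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Select the child rows whose foreign key points at the given parent."""
    return [row for row in child_rows if row[relation.foreign_key] == parent_id]
```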

4.2. Augment

- Less Repetitive Code: Instead of manually querying child records, you add an augmentation rule or call an augmentation function, and the related data is fetched dynamically.

- Consistent Data Structures: Augmentation converts multiple related Datums into a single DataComposite, letting you treat them as one cohesive unit.

- Flexible Fetching: Depending on performance and domain requirements, augmentation can be Eager (all in one go) or Lazy (on demand).
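The difference between Eager and Lazy augmentation can be sketched as follows. The in-memory `ORDER_ITEMS` list stands in for a database, and `fetch_items`, `LazyRelation`, and `augment` are hypothetical names used only for this example, not DODIL functions.

```python
from typing import Any, Callable

Row = dict[str, Any]

# Stand-in for a database table of order items.
ORDER_ITEMS: list[Row] = [
    {"order_id": 1, "product": "pen", "quantity": 2},
    {"order_id": 1, "product": "ink", "quantity": 1},
    {"order_id": 2, "product": "pad", "quantity": 5},
]
FETCH_LOG: list[int] = []  # records every simulated query


def fetch_items(order_id: int) -> list[Row]:
    FETCH_LOG.append(order_id)
    return [row for row in ORDER_ITEMS if row["order_id"] == order_id]


class LazyRelation:
    """Defers the fetch until get() is first called, then caches the result."""

    def __init__(self, loader: Callable[[], list[Row]]) -> None:
        self._loader = loader
        self._cache: list[Row] | None = None

    def get(self) -> list[Row]:
        if self._cache is None:
            self._cache = self._loader()
        return self._cache


def augment(order: Row, mode: str = "eager") -> Row:
    """Return a composite of the order and its items."""
    composite = dict(order)
    if mode == "eager":
        composite["items"] = fetch_items(order["id"])  # fetched immediately
    elif mode == "lazy":
        composite["items"] = LazyRelation(lambda: fetch_items(order["id"]))
    else:
        raise ValueError(f"unknown mode {mode!r}")
    return composite
```

Eager mode pays the query cost up front, while lazy mode issues no query unless the related items are actually read.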

4.3. Compose

4.4. State Management

- Locking a field if a related status is set to a certain value.

- Making a field required or optional based on other field values.

- Restricting valid choices for a field once certain criteria are met.

- Conditions:

  - Each condition checks a field or a parent’s field using an operator and a value.

  - If the condition is true, the specified effect is applied to the target fields.

- Effects:

  - You cannot change this field’s value after the condition is met.

  - This field must have a value.

  - The valid enum values for that field are restricted.

- on Field:

  - Indicates which field(s) to apply the effect to (for example, quantity, status, or even * for all fields).

- References to parent/child fields:

  - A condition can reference a parent schema’s field.

  - This allows child/parent constraints based on the relation’s state.

- Computed state:

  - At runtime, each field’s final state is compiled into a single object.

  - This includes any combination of the effects that apply to the field.
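A minimal sketch of how such conditional rules might be compiled into a per-field state object is given below. The effect names (`immutable`, `required`, `restrict_enum`), the `parent.` prefix for parent references, and the operator names are all assumptions made for illustration; the source does not show DODIL's actual identifiers.

```python
import operator
from dataclasses import dataclass
from typing import Any, Optional

# Hypothetical operator names mapped to Python comparison functions.
OPERATORS = {"eq": operator.eq, "ne": operator.ne, "gt": operator.gt, "lt": operator.lt}


@dataclass
class Rule:
    field_name: str                      # "parent.<name>" reads the parent record
    op: str
    value: Any
    effect: str                          # "immutable", "required", or "restrict_enum"
    on: list[str]                        # target fields, or ["*"] for all fields
    allowed: Optional[list[str]] = None  # only used by "restrict_enum"


def lookup(field_name: str, record: dict[str, Any], parent: dict[str, Any]) -> Any:
    if field_name.startswith("parent."):
        return parent.get(field_name[len("parent."):])
    return record.get(field_name)


def default_state() -> dict[str, Any]:
    return {"immutable": False, "required": False, "allowed": None}


def compute_state(record: dict[str, Any], parent: dict[str, Any],
                  rules: list[Rule]) -> dict[str, dict[str, Any]]:
    """Compile every field's final state from all rules whose condition holds."""
    state = {name: default_state() for name in record}
    for rule in rules:
        actual = lookup(rule.field_name, record, parent)
        if not OPERATORS[rule.op](actual, rule.value):
            continue  # condition is false, so the effect is not applied
        targets = list(record) if rule.on == ["*"] else rule.on
        for name in targets:
            entry = state.setdefault(name, default_state())
            if rule.effect == "restrict_enum":
                entry["allowed"] = rule.allowed
            else:
                entry[rule.effect] = True
    return state


rules = [
    Rule("parent.status", "eq", "shipped", "immutable", ["*"]),
    Rule("status", "eq", "pending", "required", ["quantity"]),
    Rule("status", "eq", "pending", "restrict_enum", ["status"], ["pending", "cancelled"]),
]
item = {"quantity": 2, "status": "pending"}
state = compute_state(item, {"status": "shipped"}, rules)
```

Here every field of the order item becomes immutable once the parent order is shipped, which is the kind of child/parent constraint described above.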

4.5. Reactivity

- Reaction Rules: Each reaction rule specifies which schema or target it belongs to, when it triggers (such as on creation, update, or deletion), which conditions apply to it, and what actions are performed when it executes.

- Event Object: This represents the record or fields causing the event. For example, if an OrderItem is edited, the event object would contain the newly edited fields and their values.

- Modified Fields: This is the array of fields changed during an update. It is useful for checking whether a specific field was altered before triggering a reaction.

- Multi-Phase Filtering: In the first phase, rules are filtered by schema and event type (creation, modification, or deletion). In the second phase, the conditions on the event object and the modified fields are evaluated. In the third phase, any needed database queries are performed, and only for rules that passed the first two filters.

- Reaction Actions: An action defines how fields are updated or affected. A common pattern is setting a field in the current schema. A function can also be used to add further functionality.
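The multi-phase filtering described above can be sketched in a few lines of Python. The `ReactionRule` fields, the `react` function, and the simulated stock lookup are hypothetical and serve only to show the order in which the filters run; they are not DODIL's actual rule syntax.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

Record = dict[str, Any]
QUERY_CALLS: list[str] = []  # records every simulated database query


@dataclass
class ReactionRule:
    schema: str
    on: str                                           # "create", "update", or "delete"
    condition: Callable[[Record, list[str]], bool]    # sees event and modified fields
    query: Optional[Callable[[Record], bool]]         # optional database check
    action: Callable[[Record], Record]                # returns field updates


def react(rules: list[ReactionRule], schema: str, event_type: str,
          event: Record, modified: list[str]) -> Record:
    updates: Record = {}
    for rule in rules:
        # Phase 1: filter by schema and event type.
        if rule.schema != schema or rule.on != event_type:
            continue
        # Phase 2: check conditions on the event object and modified fields.
        if not rule.condition(event, modified):
            continue
        # Phase 3: run database queries only if the first two filters passed.
        if rule.query is not None and not rule.query(event):
            continue
        updates.update(rule.action(event))
    return updates


def product_in_stock(event: Record) -> bool:
    QUERY_CALLS.append(event["product"])  # stands in for a real query
    return True


RULES = [
    ReactionRule(
        schema="OrderItem",
        on="update",
        condition=lambda event, modified: "quantity" in modified and event["quantity"] > 0,
        query=product_in_stock,
        action=lambda event: {"line_total": event["quantity"] * event["unit_price"]},
    ),
]
```

Ordering the phases this way means the comparatively expensive query is skipped for every rule that the cheap in-memory checks already rule out.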

4.6. Flow

- Basic order information

- Multiple order items

- A single delivery address

- Compositional: Each step references a separate schema.

- Sequential or Parallel: Steps can be strictly ordered or configured for more flexible paths.

- Partial Data Handling: At each step, partial data can be saved or validated before moving on, minimizing errors and rework.

- Collect order details.

- Collect multiple order item entries.

- Collect a single delivery address.
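The three collection steps can be sketched as a sequential flow that validates each step and keeps the data saved so far. The `Step` class, the required-field lists, and `run_flow` are assumptions made for this example; the field names (`customer`, `product`, `street`, and so on) are invented and do not come from the source.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Step:
    name: str
    schema: str
    required: list[str]
    many: bool = False  # True when the step collects several entries


FLOW = [
    Step("order", "Order", ["customer"]),
    Step("items", "OrderItem", ["product", "quantity"], many=True),
    Step("address", "Address", ["street", "city"]),
]


def validate_step(step: Step, data: Any) -> list[str]:
    """Return a list of error messages for one step's data."""
    entries = data if step.many else [data]
    errors = []
    if step.many and not entries:
        errors.append(f"{step.name} needs at least one entry")
    for index, entry in enumerate(entries):
        for name in step.required:
            if entry.get(name) in (None, ""):
                errors.append(f"{step.name}[{index}].{name} is missing")
    return errors


def run_flow(flow: list[Step], answers: dict[str, Any]) -> tuple[dict[str, Any], list[str]]:
    """Walk the steps in order; stop at the first invalid step but keep earlier data."""
    saved: dict[str, Any] = {}
    for step in flow:
        data = answers.get(step.name, [] if step.many else {})
        errors = validate_step(step, data)
        if errors:
            return saved, errors
        saved[step.name] = data
    return saved, []
```

Because valid steps are saved before the flow stops, a user who omits the city only has to redo the address step, not the whole order.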

4.7. Prompt Management

- Reduce Hallucination: LLMs often invent SQL queries or misinterpret the schema [22]. With AI-Prompt-Management, queries are validated and generated using real DODIL schema definitions.

- Enforce Business Rules: Augmentation and state management automatically apply to AI-generated read/write requests, ensuring data consistency [1].

- Streamline AI-Driven Workflows: Whether the user wants to query, create, or update data, the module generates the necessary SQL or prompts the user for missing information, so no complex logic has to be crafted by hand [23].
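One way to picture schema-grounded query generation is the sketch below, which checks a structured create request (such as an LLM might emit) against a schema definition before producing parameterized SQL. The `SCHEMAS` layout, the request shape, and `handle_request` are hypothetical and only cover the create case; they are not the AI-Prompt-Management module's real interface.

```python
from typing import Any

# Hypothetical schema definition: field name -> whether it is required.
SCHEMAS: dict[str, dict[str, Any]] = {
    "OrderItem": {
        "table": "order_items",
        "fields": {"order_id": True, "product": True, "quantity": True, "note": False},
    },
}


def handle_request(request: dict[str, Any]) -> dict[str, Any]:
    """Validate a create request against the schema, then build SQL or a prompt."""
    schema = SCHEMAS.get(request.get("schema"))
    if schema is None:
        return {"kind": "error", "message": f"unknown schema {request.get('schema')!r}"}

    values = request.get("values", {})
    # Reject fields the model invented that the schema does not define.
    unknown = sorted(set(values) - set(schema["fields"]))
    if unknown:
        return {"kind": "error", "message": f"unknown fields: {', '.join(unknown)}"}

    # Ask the user for required fields that are still missing.
    missing = [name for name, required in schema["fields"].items()
               if required and name not in values]
    if missing:
        return {"kind": "prompt", "message": f"Please provide: {', '.join(missing)}"}

    # Only schema-checked identifiers reach the SQL text; values stay as parameters.
    columns = list(values)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {schema['table']} ({', '.join(columns)}) VALUES ({placeholders})"
    return {"kind": "sql", "sql": sql, "params": [values[c] for c in columns]}
```

Because column names are taken only from the schema definition and values are passed as parameters, an invented field is rejected before any SQL is produced.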

5. Conclusions and Future Works

- Bridging LLMs and Application Data: Enhances LLMs’ ability to interact with complex data, moving beyond static queries to dynamic, context-aware interactions.

- Automation and Dynamic Validation: Supports conditional immutability, field computations, and recursive logic validation for enhanced data consistency and automation.

- Semantic Augmentation: Enables the seamless integration of related schemas, ensuring relational data is dynamically fetched and processed.

- LLM Integration: By embedding schema and pipelines, the framework enables LLMs to understand system logic, automate workflows, and interact intelligently with data.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| DB | Database |
| DODIL | Data-Oriented Design Integration Layer |
| GPT | Generative Pre-trained Transformer |
| LLaMA | Large Language Model Meta AI |
| LLM | Large Language Model |
| NoSQL | Not Only SQL |
| OLAP | Online Analytical Processing |
| OLTP | Online Transaction Processing |
| ORM | Object-Relational Mapping |
| SAS | Smart Augmented Schemas |
| SQL | Structured Query Language |

References

1. Yao, Y.; Duan, J.; Xu, K.; Cai, Y.; Sun, Z.; Zhang, Y. A survey on large language model (llm) security and privacy: The good, the bad, and the ugly. High-Confidence Computing 2024, 100211.

2. Liang, W.; Zhang, Y.; Wu, Z.; Lepp, H.; Ji, W.; Zhao, X.; Cao, H.; Liu, S.; He, S.; Huang, Z.; et al. Mapping the increasing use of llms in scientific papers. arXiv 2024, arXiv:2404.01268.

3. Ganesh, A. Program Scalability Analysis for LLM Endpoints: Ahmdal’s Law Analysis of Parallelizability Benefits of LLM Completions Endpoints*. In Proceedings of the 2024 4th International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), 2024; pp. 1–6.

4. Madden, S.; Cafarella, M.; Franklin, M.; Kraska, T. Databases Unbound: Querying All of the World’s Bytes with AI. Proceedings of the VLDB Endowment 2024, 17, 4546–4554.

5. Chen, Y.; Cui, M.; Wang, D.; Cao, Y.; Yang, P.; Jiang, B.; Lu, Z.; Liu, B. A survey of large language models for cyber threat detection. Computers & Security 2024, 104016.

6. Jahić, J.; Sami, A. State of Practice: LLMs in Software Engineering and Software Architecture. In Proceedings of the 2024 IEEE 21st International Conference on Software Architecture Companion (ICSA-C). IEEE, 2024; pp. 311–318.

7. Weber, I. Large Language Models as Software Components: A Taxonomy for LLM-Integrated Applications. arXiv 2024, arXiv:2406.10300.

8. Raiaan, M.A.K.; Mukta, M.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J.; Ahmad, J.; Ali, M.E.; Azam, S. A review on large Language Models: Architectures, applications, taxonomies, open issues and challenges. IEEE Access 2024.

9. Yang, J.; Jin, H.; Tang, R.; Han, X.; Feng, Q.; Jiang, H.; Zhong, S.; Yin, B.; Hu, X. Harnessing the power of llms in practice: A survey on chatgpt and beyond. ACM Transactions on Knowledge Discovery from Data 2024, 18, 1–32.

10. Patil, R.; Gudivada, V. A review of current trends, techniques, and challenges in large language models (llms). Applied Sciences 2024, 14, 2074.

11. Jin, H.; Huang, L.; Cai, H.; Yan, J.; Li, B.; Chen, H. From llms to llm-based agents for software engineering: A survey of current, challenges and future. arXiv 2024, arXiv:2408.02479.

12. Yang, J.; Prabhakar, A.; Narasimhan, K.; Yao, S. Intercode: Standardizing and benchmarking interactive coding with execution feedback. Advances in Neural Information Processing Systems 2024, 36.

13. Patil, S.G. Teaching Large Language Models to Use Tools at Scale. PhD thesis, University of California, Berkeley, 2024.

14. Gupta, A.; Panda, M.; Gupta, A. Advancing API Security: A Comprehensive Evaluation of Authentication Mechanisms and Their Implications for Cybersecurity. International Journal of Global Innovations and Solutions (IJGIS) 2024.

15. Ganesh, S.; Sahlqvist, R. Exploring Patterns in LLM Integration-A study on architectural considerations and design patterns in LLM dependent applications. Master’s thesis, Chalmers University of Technology, 2024.

16. Xu, R.; Qi, Z.; Guo, Z.; Wang, C.; Wang, H.; Zhang, Y.; Xu, W. Knowledge conflicts for llms: A survey. arXiv 2024, arXiv:2403.08319.

17. Pawade, P.; Kulkarni, M.; Naik, S.; Raut, A.; Wagh, K. Efficiency Comparison of Dataset Generated by LLMs using Machine Learning Algorithms. In Proceedings of the 2024 International Conference on Emerging Smart Computing and Informatics (ESCI), 2024; pp. 1–6.

18. Majeed, A.; Hwang, S.O. Reliability Issues of LLMs: ChatGPT a Case Study. IEEE Reliability Magazine 2024.

19. Deng, Y.; Xia, C.S.; Yang, C.; Zhang, S.D.; Yang, S.; Zhang, L. Large language models are edge-case generators: Crafting unusual programs for fuzzing deep learning libraries. In Proceedings of the 46th IEEE/ACM International Conference on Software Engineering, 2024, pp.

20. Morales, S.; Clarisó, R.; Cabot, J. A DSL for testing LLMs for fairness and bias. In Proceedings of the ACM/IEEE 27th International Conference on Model Driven Engineering Languages and Systems, 2024, pp.

21. Patkar, N.; Fedosov, A.; Kropp, M. Challenges and Opportunities for Prompt Management: Empirical Investigation of Text-based GenAI Users. Mensch und Computer 2024-Workshopband 2024, 10–18420.

22. Martino, A.; Iannelli, M.; Truong, C. Knowledge injection to counter large language model (LLM) hallucination. In Proceedings of the European Semantic Web Conference. Springer, 2023; pp. 182–185.

23. Singh, A.; Shetty, A.; Ehtesham, A.; Kumar, S.; Khoei, T.T. A Survey of Large Language Model-Based Generative AI for Text-to-SQL: Benchmarks, Applications, Use Cases, and Challenges. arXiv 2024, arXiv:2412.05208.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).