1. Introduction

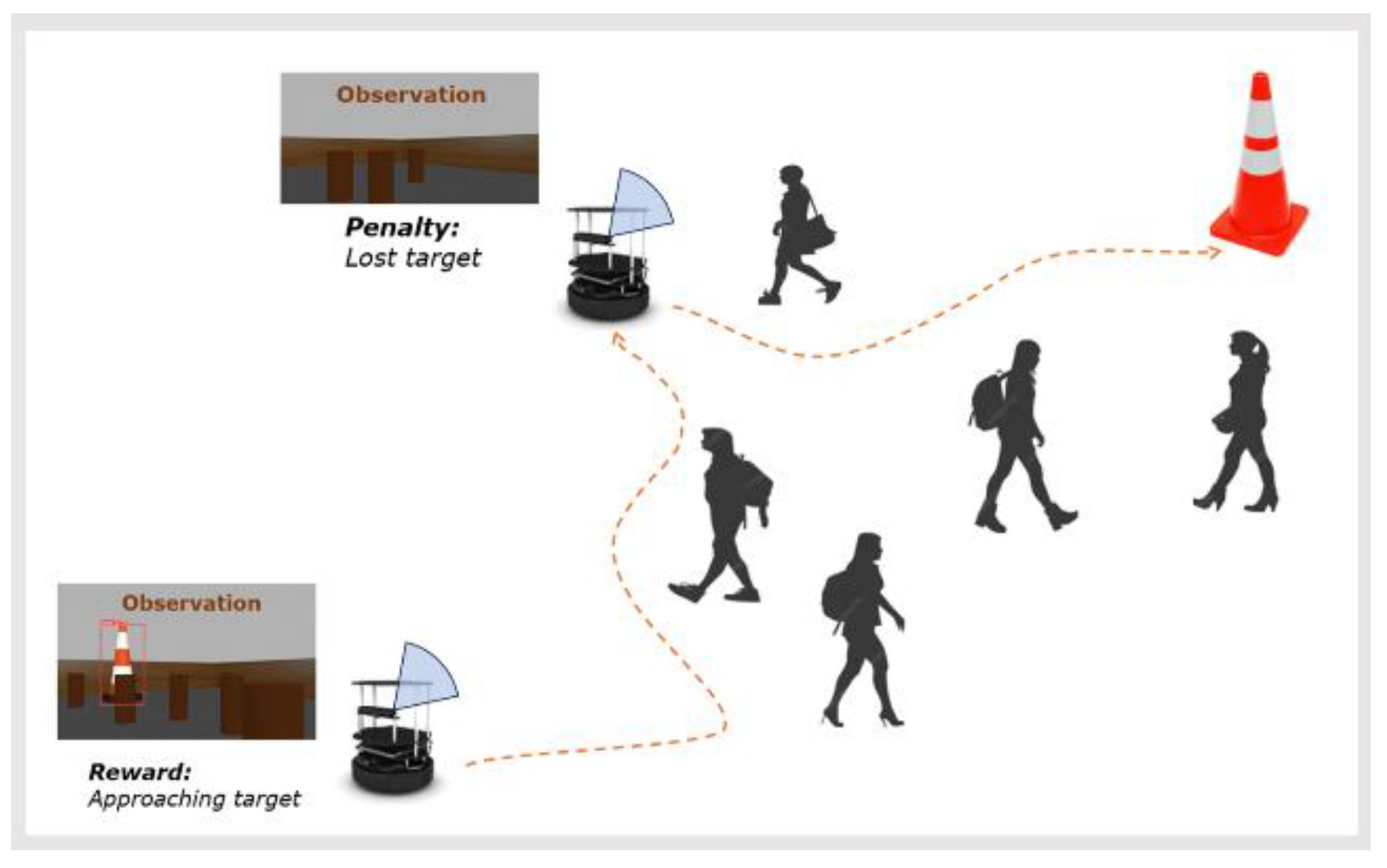

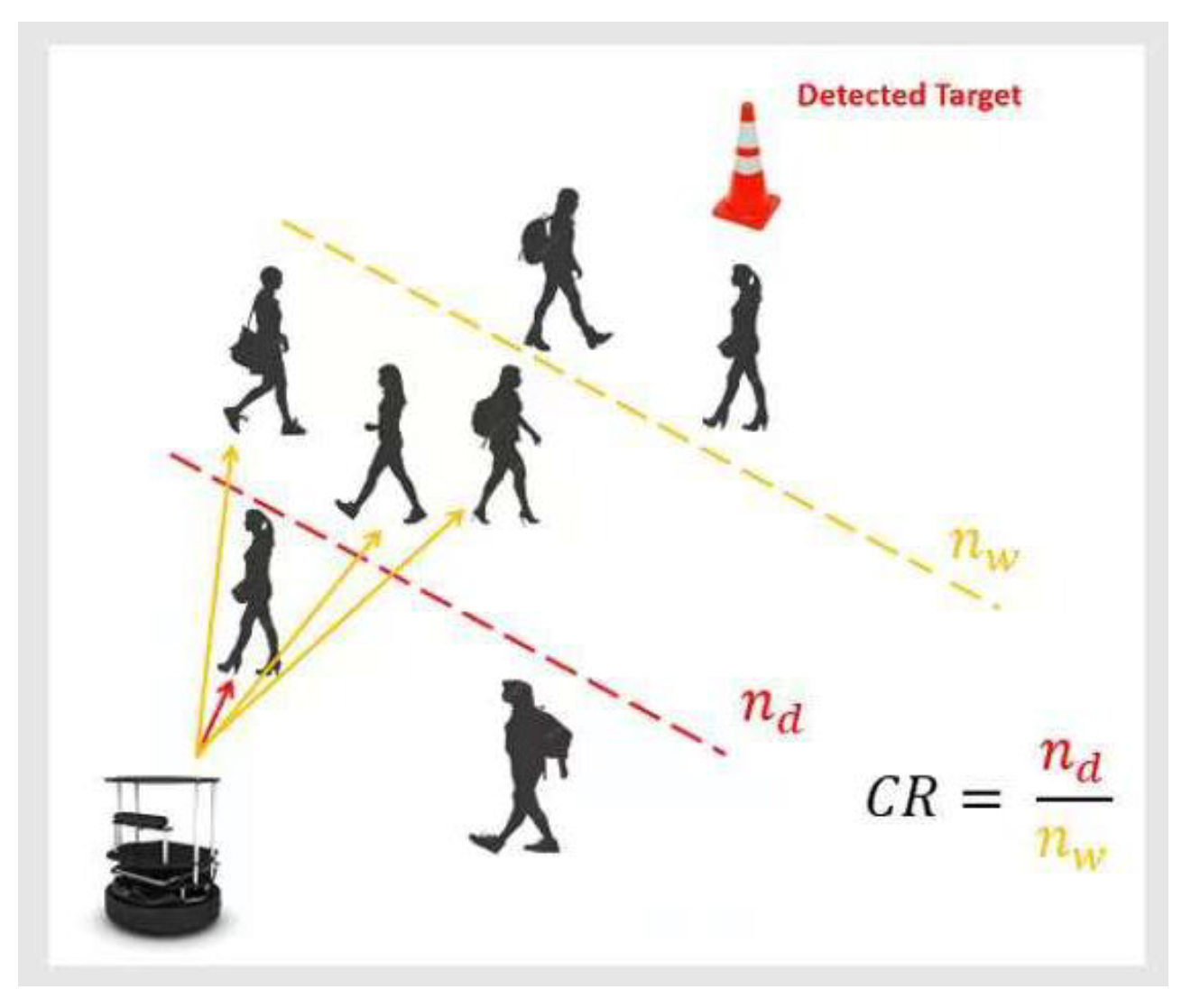

Effective navigation in crowded and dynamic environments, as illustrated in

Figure 1, is critical for a wide range of real-world robotic applications, such as autonomous delivery robots maneuvering through busy urban streets, service robots operating in congested shopping malls, and search-and-rescue robots deployed in disaster-stricken areas. Traditional navigation methods, such as those based on Simultaneous Localization and Mapping (SLAM), which rely on pre-mapping of environments, often underperform in these complex settings. The main limitation of SLAM-based approaches is their dependence on static maps and the assumption that the environment remains relatively unchanging [

1,

2], resulting in frequent localization errors and suboptimal navigation paths in dynamic environments.

In response to this challenge, Deep Reinforcement Learning (DRL) has emerged as a promising solution. DRL’s ability to learn directly from interactions with the environment enables it to adapt to a wide variety of scenarios, making it particularly effective in unknown and dynamic environments [

3,

4,

5]. Unlike conventional methods, DRL-based approaches do not require a predefined map, instead modeling navigation tasks as Partially Observable Markov Decision Processes (POMDPs) [

6,

7], an extension of Markov Decision Processes (MDPs). A POMDP is defined by the tuple:

where S represents the state space, A denotes the action space, T is the transition probability, R is the reward function, Ω is the observation space, O is a set of conditional observation probabilities and ϒ is the discount factor [

8,

9,

10]. DRL techniques, including specific algorithms such as Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO), as well as broader frameworks like Actor-Critic methods, have demonstrated significant potential in enabling robots to navigate dynamic environments without relying on pre-built maps. These approaches effectively handle the complex, sequential decision-making processes inherent in such tasks [

11,

12].

However, existing DRL-based mapless navigation solutions are often constrained by their reliance on complex feature extraction processes and sensor fusion strategies, which typically requires systems with a wide field of view (FOV), as shown in [

13,

14,

15,

16]. While other methods are effective in relatively static environments as shown in [

17,

18,

19], they struggle in highly dynamic settings characterized by partial observability. In such environments, robots receive incomplete observations, which increases decision uncertainty. Moreover, traditional MDP-based approaches face significant challenges in handling large or continuous state and action spaces, often requiring function approximation techniques that can complicate model convergence and degrade real-time performance. The heavy reliance on handcrafted feature extraction methods exacerbates these issues, reducing generalization capabilities of the learned models.

Navigating in environments with limited FOV introduces additional complexities. The restricted observational capacity hampers a robot’s ability to accurately characterize its state, thereby reducing situational awareness. Furthermore, high-dimensional state representations derived from multimodal sensing data pose substantial difficulties for conventional DRL algorithms, particularly when generating continuous action spaces. This can lead to overfitting, limiting robot's adaptability to unseen scenarios.

To address these challenges, this paper proposes a DRL-based method specifically for visual target-driven navigation in crowded environments with limited FOV. Our approach is grounded in key principles aimed at enhancing generalization and practical applicability. First, we minimize hardware requirements by employing a single RGB-D camera with limited FOV, thereby reducing dependency on complex localization techniques, and enhancing deployment versatility across a broad spectrum of robotic platforms. This minimalist sensor setup not only reduces costs but also simplifies the overall system architecture, making it more robust accessible for various applications.

Second, we mitigate the risk of overfitting by incorporating dynamic obstacles into the training process, ensuring that the learned strategies are robust and adaptable to changing conditions in real-world scenarios. By introducing a randomly positioned visual target during training, we prevent the model from becoming overly dependent on specific environmental configurations, thereby enhancing the generalization capabilities.

Furthermore, to tackle the challenge associated with target tracking within a limited FOV, our method integrates a self-attention network (SAN). The SAN infers positional information of the lost target based on past observations, enabling the robot to effectively search for the target.

At the core of our approach is the Twin-Delayed Deep Deterministic Policy Gradient (TD3) algorithm, known for its effectiveness in handling continuous action spaces, to enable efficient navigation in crowded environments. The TD3 algorithm addresses the challenges associated with traditional DRL approaches by introducing several innovations, including twin Q-networks and delayed policy updates, which improve stability and performance in complex environments.

In summary, this paper addresses the gaps in the current literature on DRL-based navigation in crowded environments. By proposing a practical solution that reduces both observational and computational demands while maximizing adaptability. Our contributions are threefold:

Introducing a novel DRL-based architecture for mapless navigation in unseen and dynamic environments, which relies exclusively on a visual target without requiring any environment modeling or prior mapping.

Integrating a SAN to enhance the robot’s ability to search for and track targets in environments with dynamic obstacles and randomly positioned targets.

Simplifying sensor configurations by eliminating the need for complex localization techniques, thereby ensuring broader applicability in diverse operational scenarios.

The remainder of this paper is organized as follows:

Section 2 provides a review of related work on DRL-based navigation, with emphasis on visual target-driven approaches.

Section 3 details the system architecture of the proposed method. In

Section 4, we present the navigation policy representation.

Section 5 introduces the training algorithms used for the proposed model.

Section 6 presents the experimental results and compares our approach with existing methods.

Section 7 discusses the experiment results. Finally,

Section 8 concludes the paper and discusses directions for future research.

2. Related Work

Current DRL-based navigation systems can be broadly classified into three categories based on the environmental context in which robots operate: map-based navigation, mapless navigation in static environments, and mapless navigation in dynamic environments.

2.1. Map-Based Navigation

In environments where a pre-built map is available, researchers have explored integrating DRL with conventional SLAM to enhance the obstacle avoidance capabilities. For instance, Chen et al. [

20] proposed a navigation system that combines a DQN-based planner with SLAM to improve navigation performance in dynamic environments by utilizing a pre-built costmap. Similarly, Shunyi Yao et al. [

21] developed a DRL-based local planner for maneuvering through crowded pedestrian areas using a pre-built map. Although these applications demonstrate improved obstacle avoidance, their reliance on static pre-built maps limits their adaptability to dynamic environments, reducing their generalization to unseen or evolving scenarios.

2.2. Mapless Navigation in Static Environment

In relatively static environments, DRL-based systems have been developed for target-driven navigation without the need for pre-built maps. Kulhánek et al. [

22] demonstrated the efficacy of visual navigation in a static living room environment, using a LSTM network to leverage past actions and rewards to track targets. They introduced the A2C with Auxiliary Tasks for Visual Navigation (A2CAT-VN) framework, optimized for static indoor environments. Hsu et al. [

23] proposed vision-based DRL approach for robot to navigate a large-scale static environment by combing current state image and last action. Zhu et al. [

24] proposed a Deep Siamese Actor-Critic Network, which employs a dual-stream network to embed current observations and target images into a shared space for target-driven navigation, achieving successful navigation in indoor environments without a pre-built map. Kulhánek et al. [

25] further integrated the Parallel Advantage Actor-Critic (PPAC) algorithm with LSTM for indoor navigation without dynamic obstacles, while Wu et al. [

26] incorporated an information-theoretic regularization into an A3C framework to enable mapless navigation towards novel targets. Despite these successes, most existing DRL-based visual navigation systems are constrained by their application to relatively static environments. They often rely on complex network architectures and deep feature extraction, which are insufficient to handle navigation in highly dynamic and crowded settings.

2.3. Mapless Navigation in Dynamic Environment

Mapless navigation in highly dynamic and crowded environments poses distinct challenges. Researchers have made progress in achieving navigation in dynamic settings without relying on pre-built maps, though their solutions often depend heavily on sensor fusion and feature extraction techniques. For instance, Anas et al. [

13] employed odometry and 2D laser data to develop a collision probability concept, while Shi et al. [

14] introduced the notions of Traversability, VO Feasibility, and Survivability to guide navigation. Sun et al. [

15] proposed a spatial feature encoder incorporating risk-aware and attention-based feature extraction strategies. Despite these advancements, the heavy reliance on handcrafted features and sophisticated sensor setups, such as combinations of lidar and depth cameras [

14], complicates real-world deployment and increases the risk of overfitting to specific environments. Furthermore, most current approaches are tailored to predefined target positions, which can cause overfitting and limit their generalization to unseen scenarios.

In contrast to existing approaches, our method presents several key advantages. First, unlike map-based techniques, our solution enables real-time navigation without requiring prior mapping, making it both more flexible and scalable in unknown scenarios. Second, while most DRL-based methods using visual input are optimized for static environments, our system is specifically designed to operate in dynamic settings. Furthermore, compared to other DRL approaches in dynamic environments, which often rely on complex sensor fusion, we achieve efficient navigation using only a single RGB-D camera. Finally, our approach handles randomly positioned visual targets and reduces the need for handcrafted feature extraction, enhancing adaptability in real-world applications.

3. System Architecture

3.1. Overall System Design

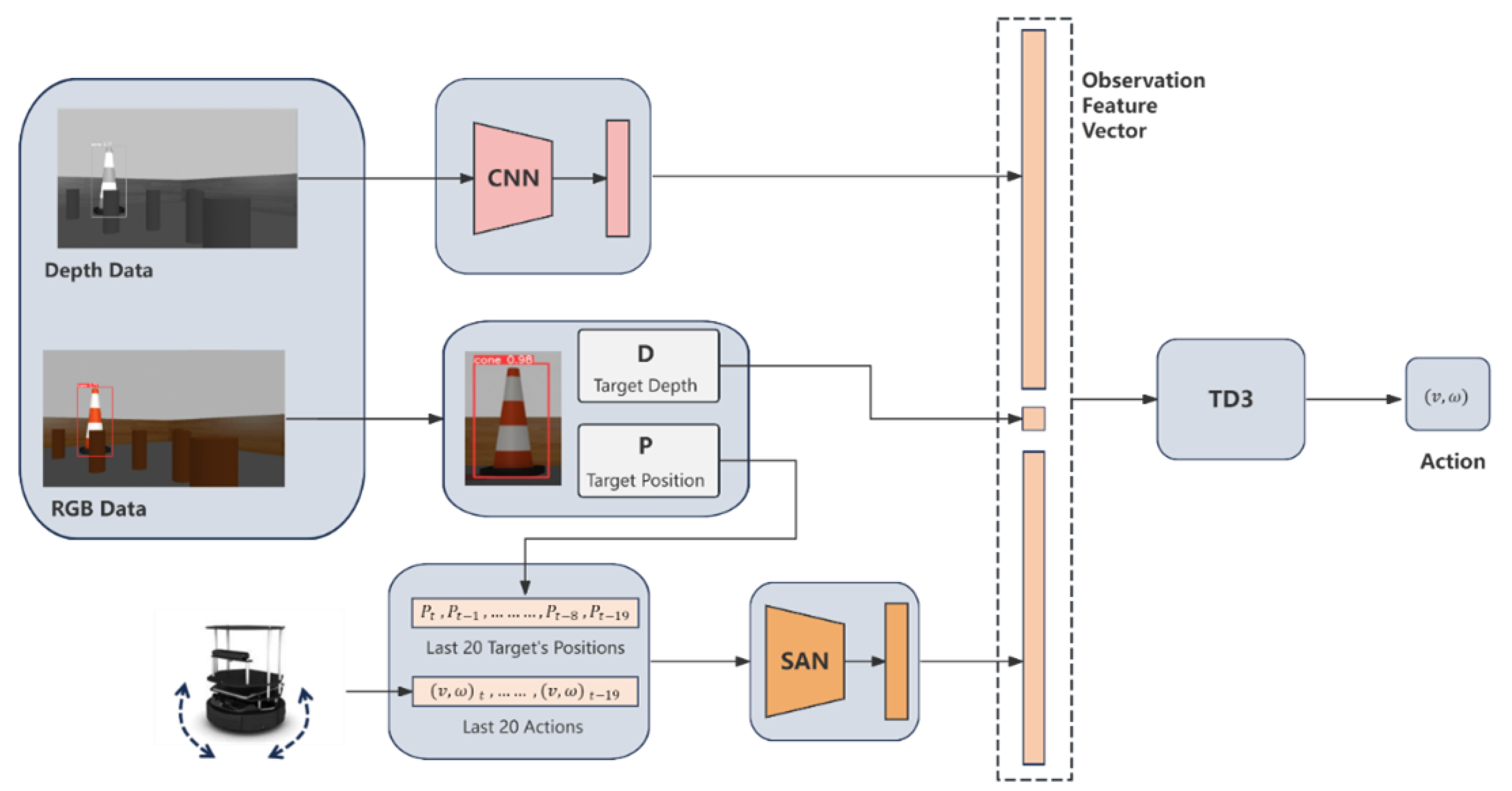

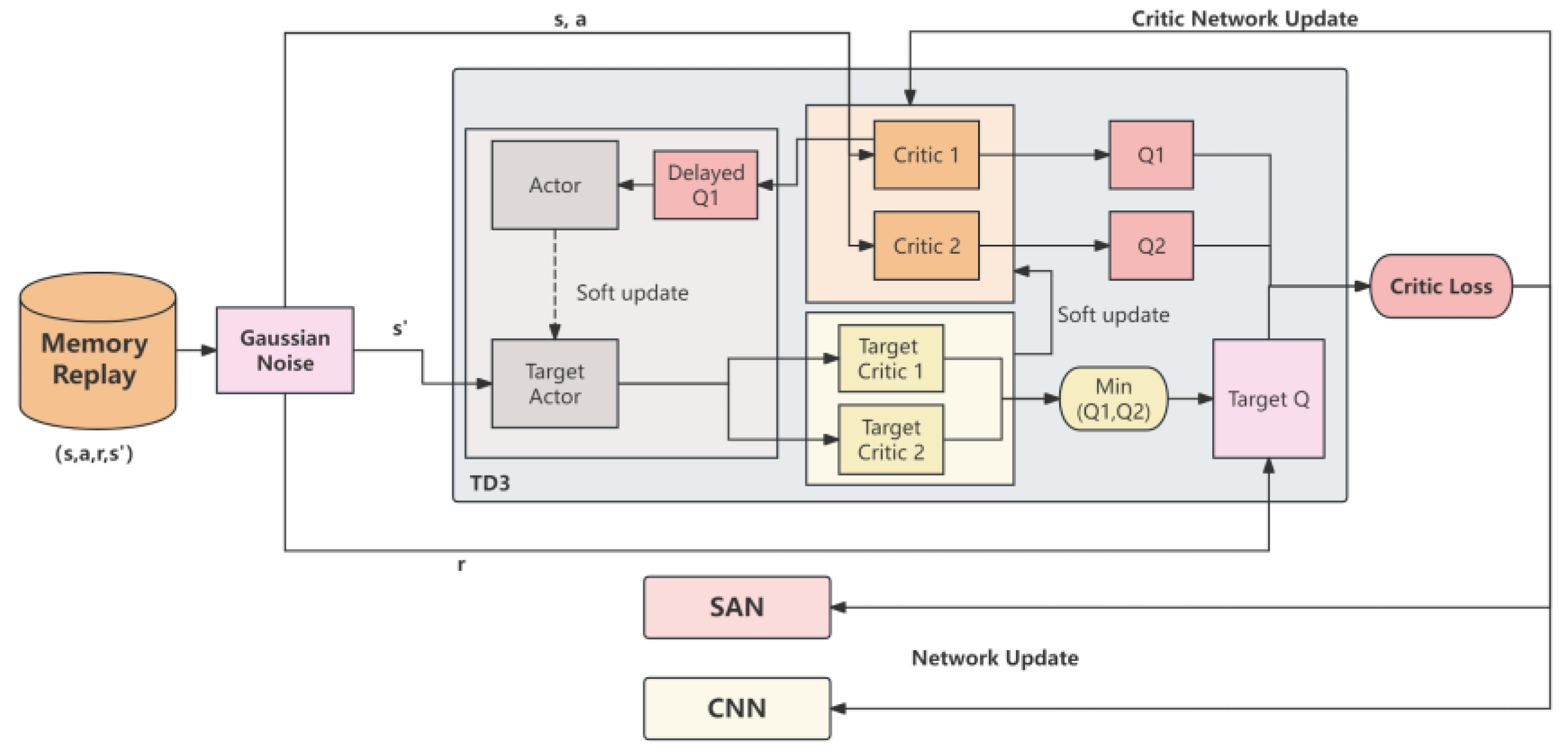

The architecture of the proposed DRL-based robot navigation approach, as shown in

Figure 2, integrates several components, including depth data processing via a convolutional neural network (CNN), YOLO-based target detection, a SAN for temporal feature extraction, and TD3 algorithm for policy learning. Collectively, these components form a robust framework for visual target-driven robot navigation in crowded environments.

3.2. Depth and Visual Data Processing

The system receives input from an onboard RGB-D camera with 70-degree horizontal FOV, a compact setup compared to systems in many existing works assuming a 360-degree FOV [

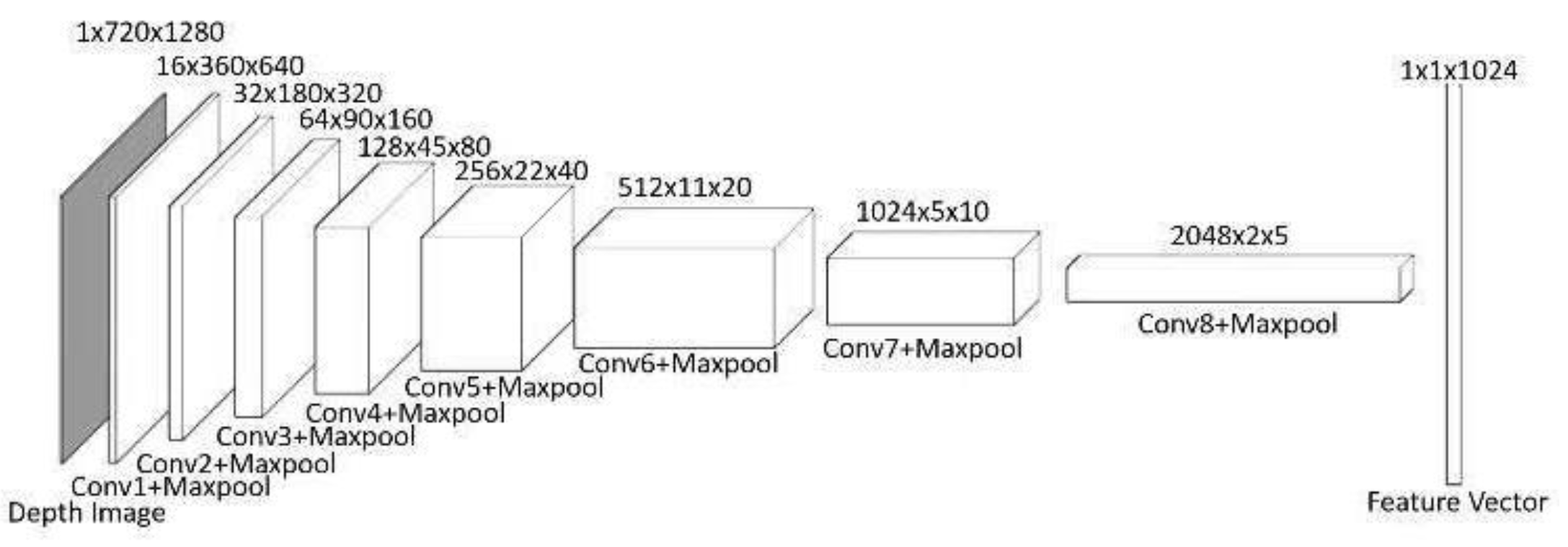

27]. The depth data (720 pixel × 1280 pixel), representing the 3D structure of the environment, is processed through a 8-layer CNN, as shown in

Figure 3. Each layer uses 3×3 kernels with 2×2 max-pooling to down-sample the data, reducing it to a feature representation (2048×2×5), which is further connected to a fully connected layer and then flattened to feature vector with dimension of 1024 for integration into the decision-making pipeline.

In parallel, YOLO is employed to perform real-time target detection using RGB data capture by the same RGB-D camera. YOLO predicts bounding boxes and class probabilities in a single pass, allowing continuous target tracking as the robot navigates. Once the target’s bounding box is detected, the depth value at its center is retrieved from the depth map, enabling the system to estimate the target’s distance and enhance situational awareness during navigation.

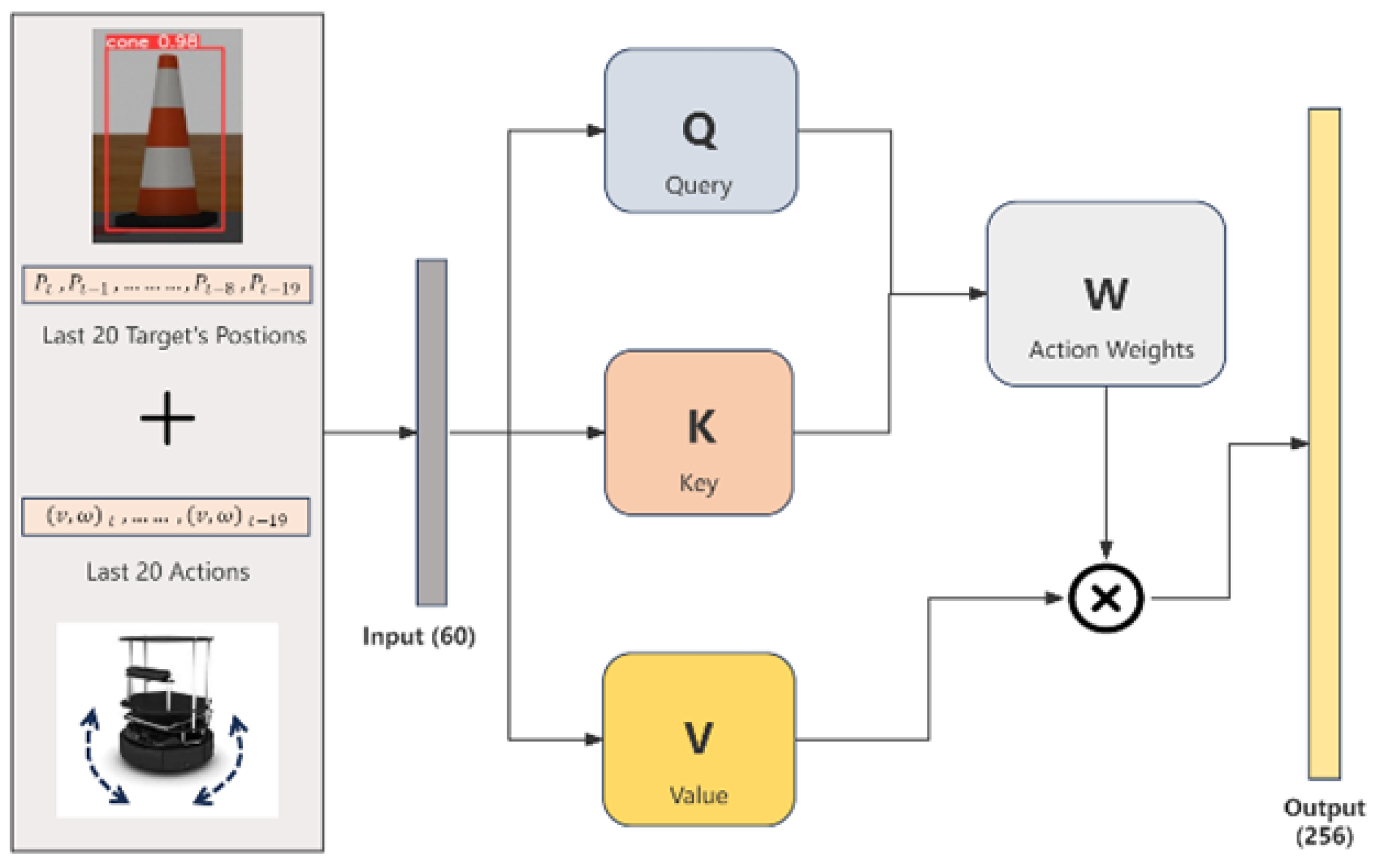

3.3. Self-Attention Network for Temporal Feature Processing

One key challenge posed by the robot’s limited 70-degree FOV is its restricted ability to perceive the entire environment, making it harder to track the target which moves outside its visual range. To mitigate this, the SAN is employed to compensate for the limited FOV by extracting temporal and spatial dependencies from past observations. This approach is inspired by SAN’s demonstrated effectiveness in capturing complex dependencies in previous research [

28,

29]. By learning patterns and relationships between actions and their outcomes over time, SAN enables the robot to maintain situational awareness beyond its current FOV.

In this study, SAN processes sequences of 20 past target positions and 20 corresponding actions and output a feature vector with dimension of 128, as illustrated in

Figure 4. This allows the robot to “reconstruct” a broader understanding of the environment, enabling it to track the target’s location, even when it temporarily falls outside the camera’s view. By leveraging these temporal patterns, SAN improves decision-making in target pursuit in dynamic environments.

The effectiveness of SAN in our approach lies in its ability to dynamically prioritize relevant spatial and temporal features, allowing the robot to respond when the target is lost from its current view. The self-attention mechanism works by allowing the model to assign varying levels of importance to different parts of its past observations, such as past target locations and actions. This means that when key information, like the target’s location, is missing from the current FOV, the model can attend to previous observations that still hold useful data about the target’s movement patterns. By computing relationships between queries, keys, and values, the robot can better predict where the target is likely to be, even when direct visual input is unavailable. This dynamic allocation of attention enables the robot to maintain robust situational awareness and make more informed navigation decisions, even in challenging environments.

Mathematically, this attention mechanism is represented by the following formula:

where the robot’s input sequence (i.e., past target positions and actions) is transformed into three distinct matrices—Query (Q), Key (K), and Value (V). Queries assess relevance, Keys represent the elements being attended to, and Values provide the actual data being aggregated. This allows the robot to focus on the most critical parts of its environment and adjust its behavior accordingly, despite the limited FOV.

3.4. Twin Delayed Deep Determinstic Policy Gradient (TD3)

Building on the feature extraction capabilities of the CNN and SAN, TD3 is employed as the core policy learning algorithm to manage continuous action spaces in the crow navigation task. TD3 enhances stability by using twin critics to reduce Q-value overestimation and delayed policy updates to improve training efficiency [

30]. Its strength in continuous action optimization makes it effective for real-time obstacle avoidance in dynamic environments, as demonstrated by its integration with methods like the Dynamic Window Approach (DWA) for LiDAR-based navigation [

31] and Long Short-Term Memory (LSTM) networks for path-following in autonomous systems [

32]. In dynamic environments with unpredictable obstacle movement, TD3 is advantageous in managing continuous action space for obstacle avoidance. Its twin critics compute conservative value estimates, addressing overestimation bias, which is particularly critical in environments where rapid changes occur. The TD3 loss function is defined as:

where

represents the action-value function approximated by the i-th Q-network, with

denoting its parameters. The term

refers to the target Q-value, which is calculated as the minimum value between the two Q-networks, addressing the issue of overestimation. Specifically, the target Q-value

is computed as follows:

Here, represents the target Q network, where are the parameters slowly updated from the current Q network parameters . This gradual update process helps stabilize training by providing more consistent target Q-values. The reward is obtained after executing action in state , and γ is the discount factor. The target policy is used to compute the next action, with ϵ being a small noise added to encourage exploration.

To further enhance stability, TD3 implements delayed policy updates and soft target network updates to further stabilize the learning process. These enhancements ensure more accurate Q-value estimation and robust training, setting TD3 apart from other reinforcement learning algorithms like DDPG. TD3’s robustness in managing continuous action spaces, coupled with its enhancements for stability and efficiency, makes it perform well in crowd navigation.

4. Navigation Policy Framework

In this section, we present the design of navigation policy for the proposed system.

4.1. Observation Space

In our proposed method, the observation feature representation, which is used as the input to the TD3 network, is composed of three distinct components, each providing crucial information for effective navigation. First, a 1024-dimensional vector is extracted from the depth image via a CNN. This vector captures spatial features of the environment, helping the robot identify obstacles and free spaces. Second, the depth value of the target object, represented as a scalar, is derived from the depth at the center point of the bounding box detected by YOLO, providing essential target distance information. Lastly, a 128-dimensional vector is produced by a SAN, which processes the historical context of the last 20 actions and the last 20 target positions. This vector captures temporal dependencies and relationships between past actions and target positions, enhancing the agent's ability to make informed decisions based on prior experiences. By integrating these three components, the TD3 network is equipped with comprehensive spatial and temporal information, allowing it to navigate complex environments and efficiently track the target.

4.2. Action Space

The action space is represented by a two-dimensional vector, ,, where denotes the linear velocity and represents the angular velocity. Actions are selected based on a deterministic policy , conditioned on the current observation o. Specifically, the action a is sampled from the policy distribution . The linear velocity ranges from 0 to 0.55 m/s, allowing control over the forward speed, while the angular velocity ω represents the angular velocity ranging from -1.5 to 1.5 rad/s, allowing the robot to adjust its heading during navigation.

4.3. Reward Function

The reward function in our approach is designed to guide the robot toward efficient and safe navigation in dynamic environment. It is defined as follows:

4.3.1. Step Penalty

This component encourages the robot to reach the target efficiently by imposing a constant penalty for each time step. It discourages prolonged navigation and oscillatory behavior. The penalty is set at a constant value of:

4.3.2. Distance Reward

The distance reward is designed to incentivize the robot to move closer to the target. The reward is proportional to the change in distance between the current position and the target, denoted by

, and the previous distance,

. The robot is penalized for moving away from the target:

4.3.3. Camera View Reward

This reward component encourages the robot to keep the target within the camera’s view. The robot is rewarded when the target is visible and penalized when it is lost. The reward is based on whether the target’s bounding box is detected:

4.3.4. Target Position Reward

This reward component encourages the robot to center the target in its view. The closer the target to the center of the camera’s view, the higher the reward. The position of the target within the view is denoted as

.

4.3.5. Obstacle Distance Penalty

This term penalizes the robot for moving too close to obstacles. The penalty is proportional to the change in distance between the agent and the nearest obstacle, where

and

represent the current and previous distance, respectively:

4.3.6. Collision Risk Penalty

A collision risk penalty is introduced to penalize the robot for operating in high-risk areas. As illustrated in

Figure 5, it is calculated as the as the ratio of the number of “dangerous-level” depth pixels

, where their depth is below a danger threshold, to the number of “warning-level” depth pixels

, where their depth is below a warning threshold:

The corresponding penalty is:

The collision risk penalty encourages the robot to avoid highly crowded area and choose a less crowded area for navigation.

5. Model Training

This section outlines the training process for the proposed model, which integrates both CNN and SAN modules within a DRL framework.

5.1. Network Training

By combining the spatial information extracted by the CNN with the temporal dependencies captured by the SAN, the model addresses the limitations posed by the robot’s constrained FOV. This fusion enables more informed and anticipatory decision-making, particularly in dynamic and crowded environments.

Figure 6 illustrates the overall framework of our proposed enhanced TD3 algorithm, where the critic loss of TD3 network is used not only to update the traditional critic network but also to simultaneously update the CNN and SAN networks. Specifically, the state-action-reward-next state

data in the experience replay buffer is first processed with Gaussian noise to generate a noisy next state

At this point, the TD3 algorithm uses two critic networks (Q1 and Q2) to compute the Q-values, and the target Q-value

is generated by taking the minimum of the two target critic networks (Target Critic 1 and Target Critic 2), which is then used to calculate critic loss.

In standard TD3, the critic loss is solely used to update the parameters of the critic network itself. However, in our design, the critic loss is further utilized to update the parameters of the CNN and SAN, enabling them to learn features that help reduce the Q-value prediction error. The parameters of CNN and SAN are updated simultaneously by minimizing this loss function. The gradient descent update rules are given by:

where α is the learning rate,

and

are the gradients of the loss function with respect to the CNN and SAN parameters, respectively. Specifically, the CNN is primarily responsible for extracting visual information from RGB-D images, while the SAN is used to extract temporal features from historical target positions and action sequences. By aligning the CNN and SAN networks with the target of the critic loss, they can collectively learn state and action features that are beneficial for Q-value prediction, thereby enhancing the accuracy of the Critic network's Q-value estimation.

Moreover, this joint optimization method ensures consistency between the feature extraction networks and the policy network, reducing the issue of feature mismatch among networks and improving the stability and overall performance of the training process.

We provide the pseudocode, outlined in

Table 1, which details comprehensive steps and procedures employed during the model training process. The pseudocode includes important aspects such as algorithm initialization, training process, and parameter updates to facilitate the reader's understanding of the algorithm's implementation.

In TD3, the critic network plays a crucial role in estimating Q-values by minimizing the critic loss, which measures the error between predicted and target Q-values. This process ensures accurate value functions and stable convergence. CNN and SAN are integral components of the framework, with the former extracting spatial features from RGB-D images and the latter capturing temporal dependencies from historical data. However, when optimized independently, these networks face limitations, as their feature extraction processes are not directly aligned with the reinforcement learning objective of minimizing Q-value prediction errors. This misalignment can lead to feature mismatch, reducing the effectiveness of the overall system.

To address this, we leverage the critic loss to simultaneously update CNN and SAN, aligning their training objectives with the reinforcement learning goal. This joint optimization provides several key benefits:

Direct Alignment with RL Objectives: CNN and SAN no longer optimize features independently but instead learn representations that directly minimize Q-value prediction errors, improving overall efficiency.

Reduced Feature Mismatch: By aligning feature extraction with the critic’s loss function, the features learned by CNN and SAN are more consistent and better suited to support the critic network.

Improved Stability and Performance: The unified training process enhances the stability of the learning process, accelerates convergence, and improves policy performance by ensuring that spatial and temporal features are optimized to complement each other.

Enhanced Feature Representation: CNN and SAN learn richer and more task-relevant features, enabling the critic network to model complex environments effectively.

Avoiding Optimization Conflicts: Jointly training CNN and SAN avoids potential conflicts or redundancies that may arise when these networks are optimized independently.

This integrated design allows the model to utilize spatial and temporal information more efficiently, compensating for limited FOV and enabling robust decision-making in dynamic and crowded environments.

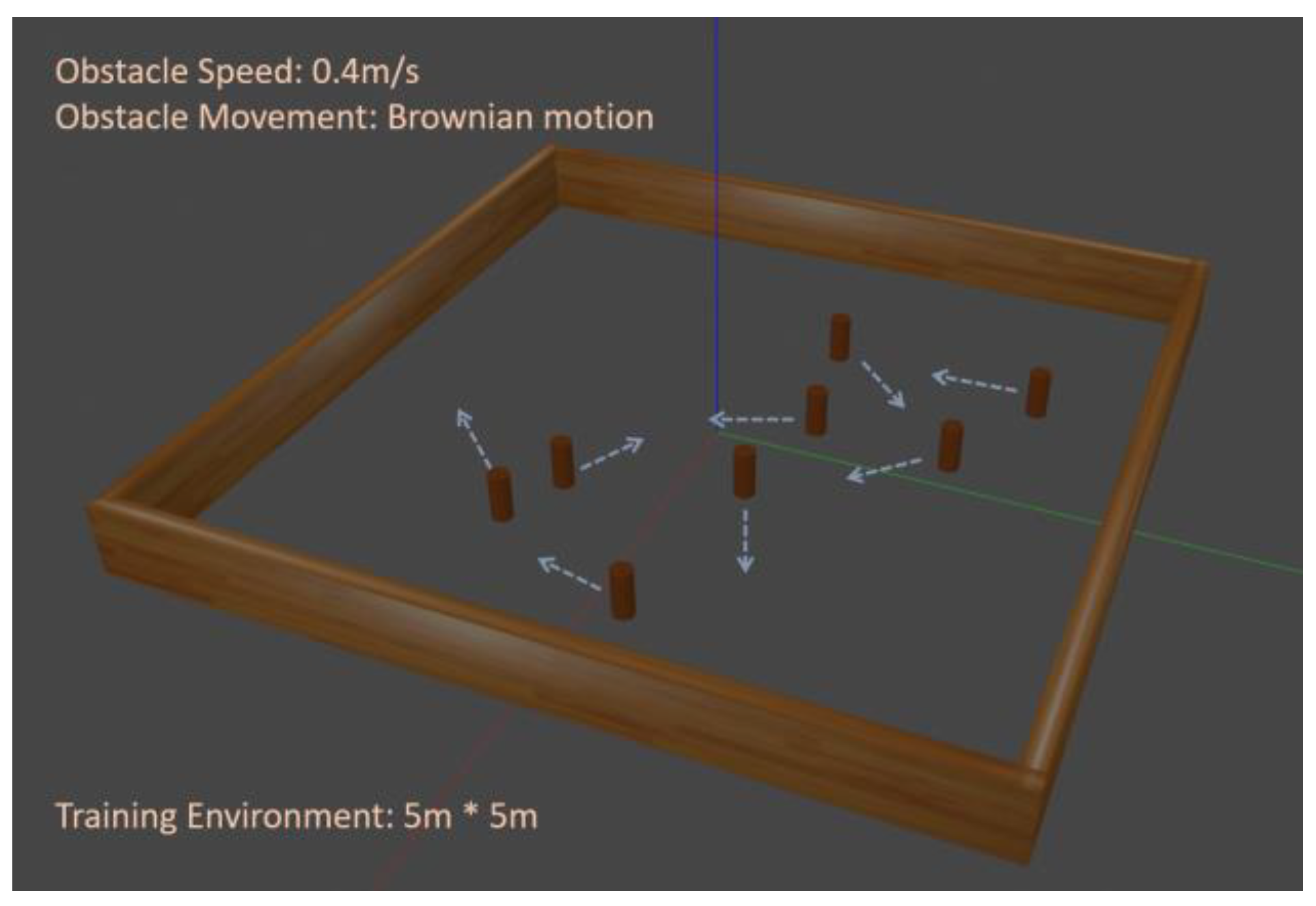

5.2. Training Environment and Procedure

The model training was conducted using an NVIDIA RTX 3090 GPU running Robot Operating System (ROS) Noetic distribution and Gazebo simulator. The simulated environment, as shown in

Figure 7, comprises a 5 m × 5 m square area populated with 12 dynamic obstacles.

These obstacles are cylindrical in shape with a height of 0.24 meters and a radius of 0.05 meters, exhibiting random Brownian motion with a maximum linear speed of 0.4 m/s. The autonomous robot, a TurtleBot Burger equipped with an Intel RealSense D435 depth camera, was tasked with navigating this environment. The robot’s mobility constraints were set with a maximum linear velocity of 0.55 m/s and a maximum angular velocity of 0.15 rad/s. The depth camera provides both depth and visual input, which are subsequently processed to extract crucial environment information for navigation.

Over the course of training, the robot underwent 120,000 episodes, totaling approximately 185 hours. Each episode initiated with the agent positioned randomly within the environment, aiming to reach a predefined target location while avoiding collisions with the moving obstacles. The primary training objective focused on maximizing the cumulative reward, designed to incentivize efficient and safe target pursuit. Throughout the training phase, the agent’s policy was continually refined via the TD3 algorithm. This enhancement was supported by a SAN, which leveraged both spatial and temporal information from historical observations and target.

This training setup enabled the robot to learn navigation strategies within a crowded and dynamically changing environment, achieving real-time decision-making under limited FOV.

6. Results

We tested our approach in a simulated environment with different obstacle movement patterns and conducted evaluations of our method across three distinct scenarios to assess its performance relative to existing DRL-based approaches. Our comparison was made with: (1) map-based DRL approaches in static environments, (2) vision-based DRL approaches in static environments, and (3) TD3-based approach in dynamic environments.

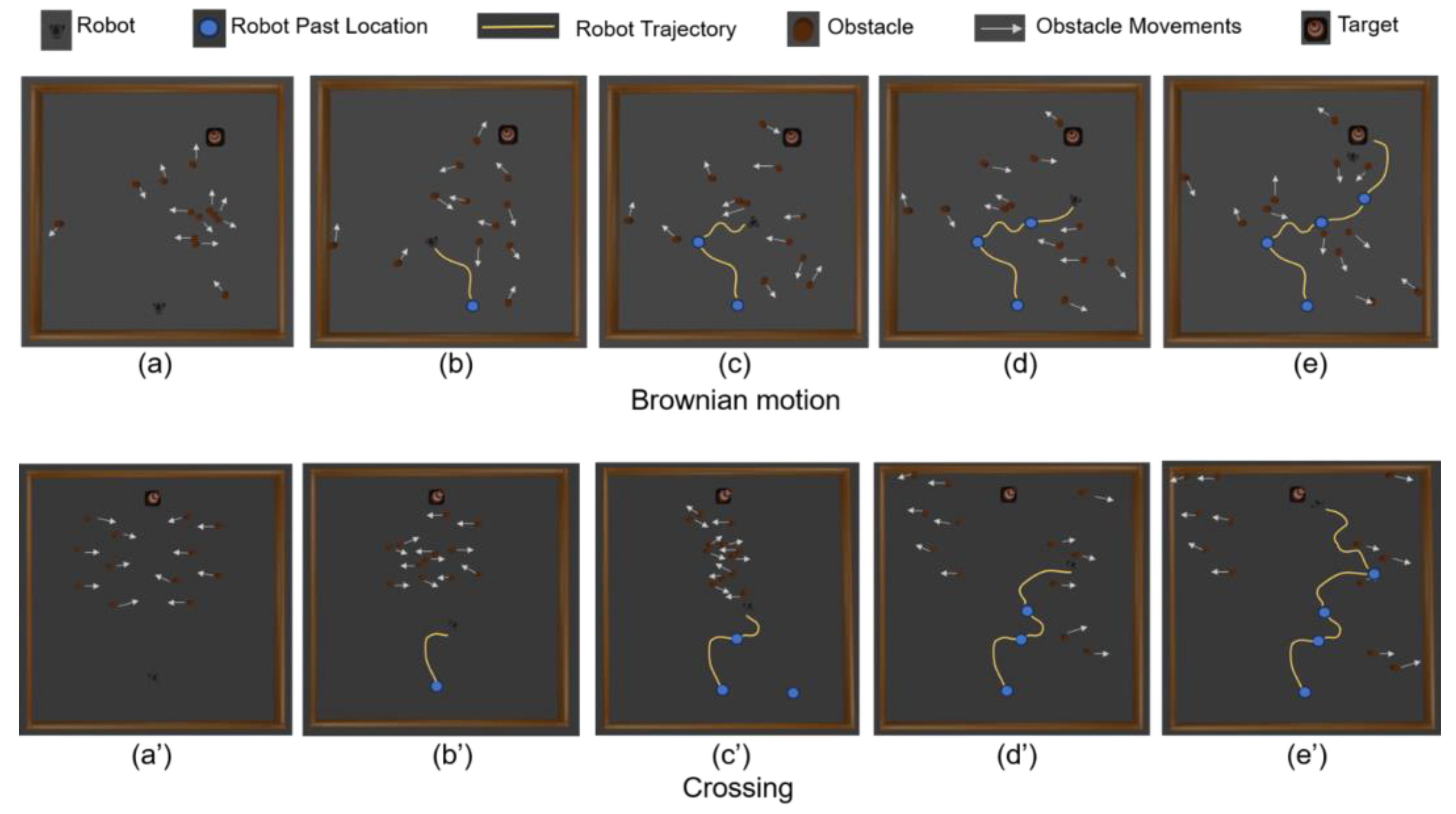

6.1. Trajectory Visualization in Dynamic Environment

To evaluate the robot's navigation performance in dynamic environments, we visualized its trajectory in a simulated space.

Figure 8 illustrates a typical trajectory of a robot navigating a 5 m × 5 m simulated space populated with eight cylindrical obstacles, each measuring 0.24 meters in height and 0.05 meters in radius. These obstacles are programmed to move in patterns of Brownian motion and crossing motion, with a maximum velocity of 0.4 m/s. Each frame captures the robot's state at different time intervals, showcasing its gradual approach toward the target in a dynamic environment. The variations in the trajectory confirm the robot's real-time adaptability to the movements of the obstacles, demonstrating the effectiveness and flexibility of the self-attention enhanced DRL approach in complex navigation tasks.

6.2. Comparison with Map-based DRL Approaches in Static Environment

Our experimental setup included a comparative evaluation against map-based DRL approaches, as summarized in

Table 2. Our method achieved a success rate of 0.97 in a map-less, target-driven simulation environment utilizing a single RGB-D camera. In the same environment, the DQN-SLAM approach achieved a success rate of 0.94 based on a pre-built map, utilizing LiDAR. Similarly, the PPO-SPD model reported a success rate of 0.996 within a narrow corridor populated by humans, employing both RGB-D and LiDAR sensors in an environment with a pre-built map.

It is important to highlight that these baseline methods depend on pre-built maps and a predefined fixed target location, substantially simplifying the navigation task compared to our map-less, visual target-driven setup. In our trials, we employed a 5 m × 5 m indoor environment with four randomly placed static cylindrical objects and a randomly located target object. Despite the challenging conditions, our method demonstrated superior navigation capabilities, achieving the highest success rate, and illustrating its adaptability and efficacy in unstructured, mapless environments, which is a crucial advantage for real-world applications where pre-built maps are unfeasible.

6.3. Comparision with Mapless Vision-based DRL Approaches in Static Environment

In further evaluations, our method was contrasted against other vision-based DRL strategies in a static environment, as detailed in

Table 3. Our approach recorded a success rate of 0.87 in an environment with eight moving obstacles, utilizing a single RGB-D sensor for perception. In contrast, the method developed by Wu et al. [

26], tested in a static indoor bathroom using the AI2-THOR framework, achieved a success rate of 0.627. The PPAC+LSTM method [

25] reported a perfect success rate of 1.0 in a simulated static indoor bathroom scenario, without any moving obstacle.

Our test environment, a 5 m × 5 m simulated space populated with eight cylindrical obstacles each 0.24 meters in height and 0.05 meters in radius moving at a maximum velocity of 0.4 m/s in a Brownian motion, represents a more complex and realistic challenge compared to the static conditions of the baseline methods. This demonstrates the robustness and adaptability of our method, offering significant benefits for practical robotic navigation, especially when employing a vision sensor with limited FOV.

6.4. Comparision with Mapless Vision-based DRL Approaches in Static Environment

In further tests, we compared our method with a baseline TD3 algorithm [

13] in varying obstacle densities (4, 8, and 12 moving obstacles). Our approach, utilizing a single RGB-D camera, achieving success rates of 0.93, 0.87, and 0.57, respectively, as shown in

Table 4.

We extensively tested the open-source program of [

13] in the same simulated environment and recorded its average performance in

Table 4. This baseline method, while achieving similar performance in low-density environments, showed significant performance declines in higher obstacle densities. This performance indicates that our approach not only maintains competitive success rates but also facilitates faster and more efficient navigation, validating its effectiveness in visual target-driven robot navigation tasks under varying dynamic conditions.

6.5. Ablation Study

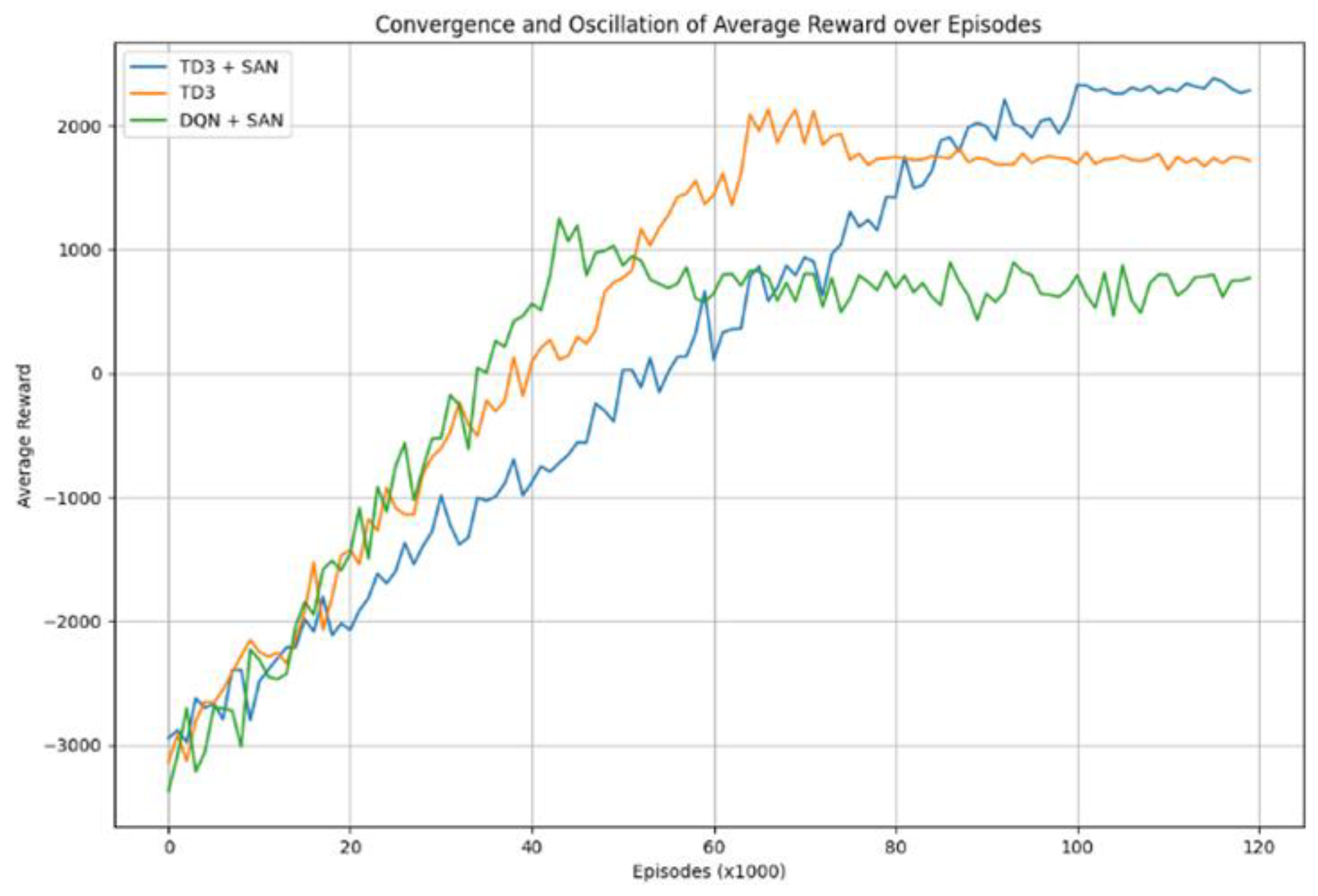

To determine the efficacy of various reinforcement learning configurations, we conducted an ablation study comparing three configurations with respect to their learning convergence and reward maximization, The result is shown in

Figure 9.

6.5.1. TD3 + SAN

This configuration exhibited stable convergence throughout the training period. Initially, the reward values progressively increased from -3000, approaching a level of approximately 2000 within the first 90000 episodes. After this phase, the reward plateaued around 2300, indicative of high performance and long-term stability.

6.5.2. Standalone TD3

Standalone TD3: In contrast, the standalone TD3 algorithm demonstrated faster convergence compared to TD3 + SAN, with rewards rising from the initial values and stabilizing near 1900. Despite some fluctuations observed between episodes 65000 and 75000, the final reward consistently hovered around 1900, suggesting quicker convergence but at a lower reward threshold compared to TD3 + SAN.

6.5.3. DQN + SAN

The DQN + SAN configuration showed the least favorable outcomes among the three tested configuration. Starting with an initial reward of -3400, it exhibited modest improvement during the first 45,000 episodes before stabilizing at a final reward of approximately 920. This configuration suffered from the highest degree of oscillation and demonstrated substantial instability, marking it as the least effective in this task.

It can be concluded from the ablation study that the TD3 + SAN configuration outperformed the others by achieving the highest final rewards and exhibiting significant training stability, albeit with a slower convergence rate. Conversely, the TD3 algorithm, though converging faster, failed to achieve the higher reward levels of TD3 + SAN. DQN + SAN displayed considerable instability and underperformance, reinforcing its unsuitability for robust task execution.

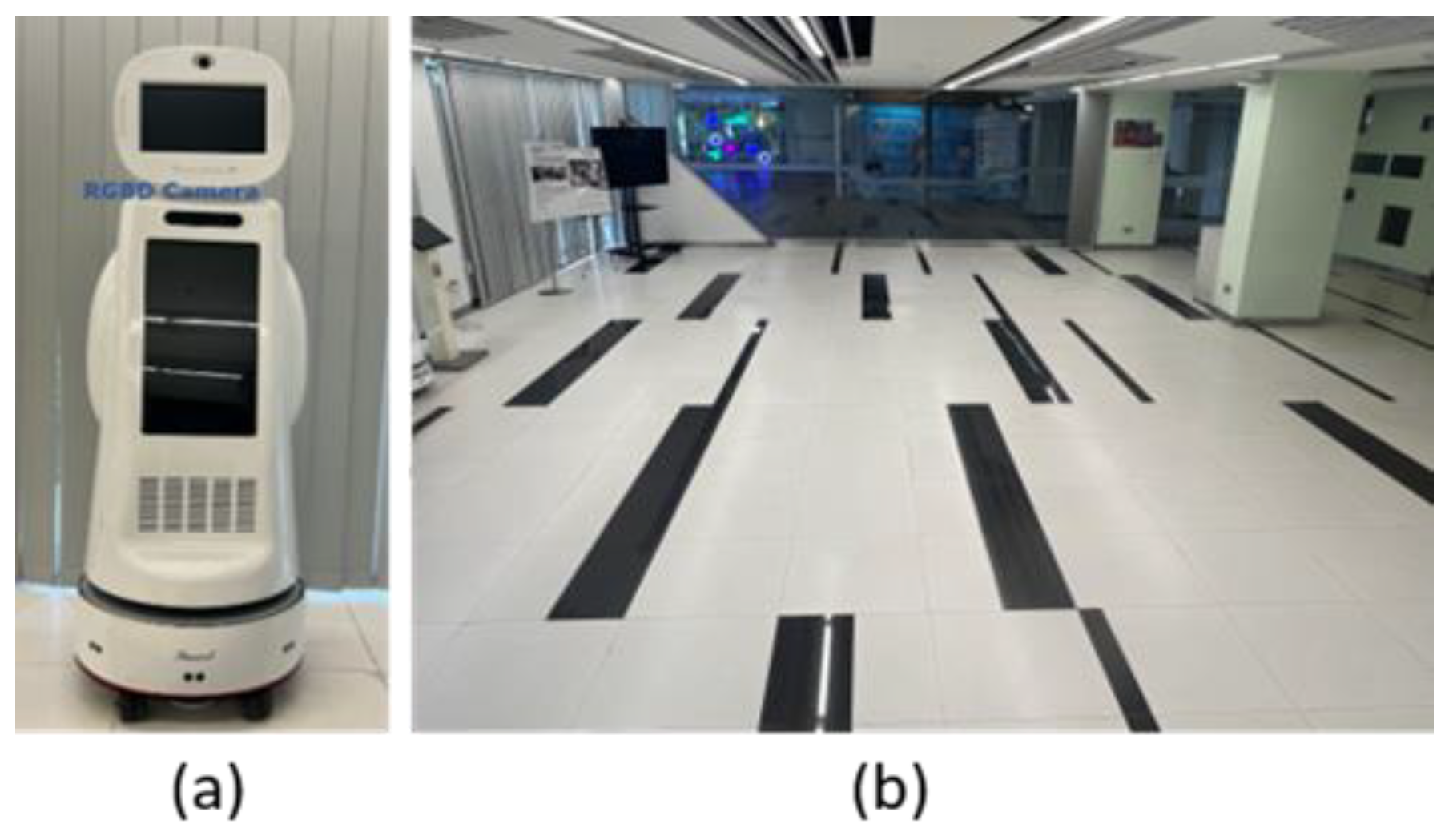

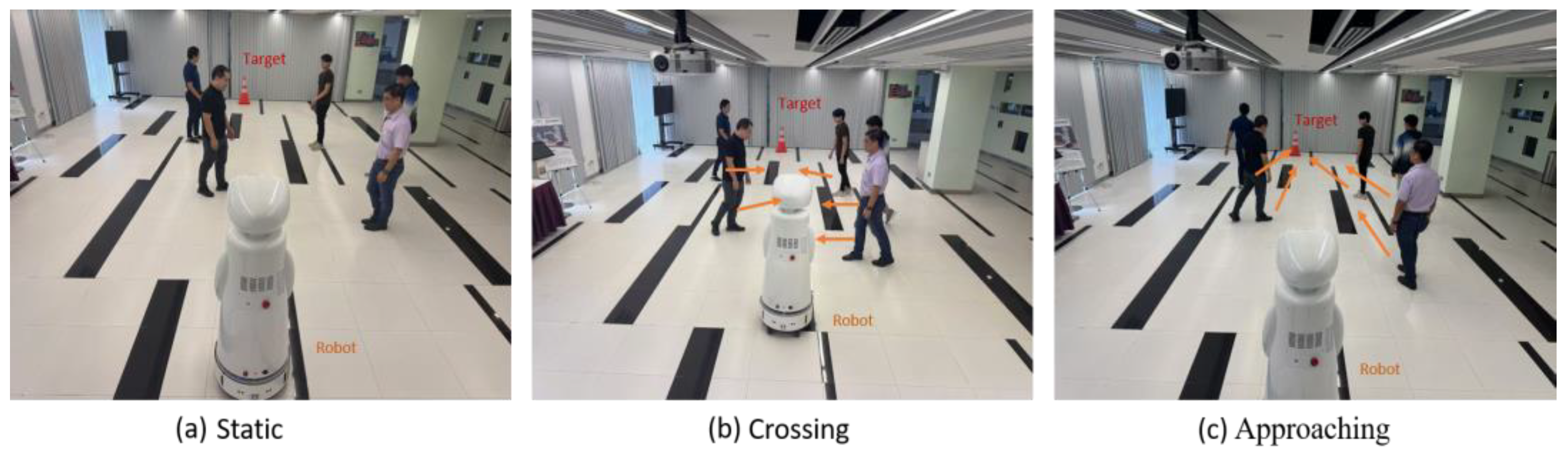

6.6. Experiment Using a Real Robot

To validate the proposed visual target-driven navigation approach, real-world experiments were conducted using a mobile robot, as shown in

Figure 10. The robot was equipped with an RGB-D camera, which provided a 70-degree field of view, and operated under ROS Noetic. The experiments were carried out in a 9.5 m × 7 m indoor environment, with the robot and the target positioned at opposite ends of the area. To simulate a dynamic environment, five pedestrians were included, moving at typical walking speeds to represent moving obstacles. The safety cone served as the visual target for the robot.

The failure condition was defined as the robot coming within 6 cm of a human or a wall, as detected by an ultrasonic sensor, to ensure safety. Additionally, if the robot failed to reach the target within 30 seconds, the trial was considered a failure. The success condition was defined as the robot coming within 15 cm of the target, as detected by the RGB-D camera.

Three distinct scenarios were tested to evaluate the performance of the trained policy: (a) pedestrians standing randomly along the pathway from the robot to the target without movement; (b) pedestrians positioned on either side of the pathway, with some crossing the pathway; and (c) pedestrians positioned on either side of the pathway, with some walking toward the target. The experimental setup is shown in

Figure 11. Each scenario was tested 20 times, and the success rate and average passing time for each trial are summarized in

Table 5.

7. Discussion

7.1. Benefits of TD3 + SAN

In

Figure 8, the incorporation of SAN led to a significant improvement in performance, particularly in the TD3 + SAN configuration. This improvement can be attributed to SAN's ability to enable the agent to dynamically focus on relevant information and recover lost targets when the target temporarily leaves its FOV. By leveraging past observations and capturing global contextual relationships, SAN helps the agent infer the target’s approximate position and resume tracking effectively. Specifically, SAN offers two key advantages:

Target Recovery and Tracking: Through its relational reasoning capability, SAN correlates spatial and temporal patterns in previous observations, allowing the agent to infer the target’s location even in scenarios with occlusions or limited visibility.

Dynamic Focus on Critical Regions: The mechanism of SAN enables robot to dynamically assign importance weights to different regions in the observation space. This allows the agent to prioritize target and obstacle-related information, enhancing decision-making under uncertainty.

Compared to traditional approaches, SAN improves the agent’s robustness to partial observability, reduces instability, and ensures smoother navigation trajectories. These advantages are evident in the TD3 + SAN results, where the agent achieved higher rewards and greater long-term stability than TD3 alone.

7.2. Overcoming the Challenge of Limited FOV

In visual target-driven crowd navigation, the limited FOV often causes the target to be lost, hindering navigation performance. Our method integrates the SAN, which leverages information from past frames to help the agent recover and track the target effectively. Additionally, we utilize TD3 algorithm, which is particularly well-suited for handling continuous action spaces, enabling the agent to efficiently navigate and reach the target. TD3’s actor-critic mechanism, along with its key features of target value smoothing and delayed updates, ensures stable learning, allowing the agent to adapt to dynamic crowd environments even with a limited FOV. Moreover, our reward function design of Camera View Reward and Target Position Reward effectively encourages the robot to keep the target within its view.

The results of TD3 + SAN demonstrate that the combination of SAN and TD3 enhances the agent's robustness and navigation performance under limited FOV conditions, validating the effectiveness of our approach.

7.3. Collaborate Training of CNN, SAN and TD3 for Better Performance

The experimental results are shown in

Figure 9. TD3 + SAN demonstrates significant advantages in dynamic and crowded environments with limited field of view. Compared to traditional TD3 and DQN + SAN, TD3 + SAN converges to a higher average reward more quickly, exhibits better stability, and achieves signifi6cantly better final reward values. This indicates that the collaborative optimization of CNN and SAN effectively utilizes both visual and temporal features, compensating for the perception limitations due to the restricted field of view, while enhancing decision-making robustness and performance in complex interaction scenarios.

8. Conclusions

In this study, we introduced a new visual target-driven navigation strategy for robots operating in crowded and dynamic environments with a limited FOV. Our approach utilizes the TD3 algorithm, integrated SAN, enabling effective navigation without the need for pre-mapped environments

Our contributions offer meaningful advancements in the field of autonomous robotic navigation. Firstly, we successfully reduced hardware and computational requirements by employing a single RGB-D camera, thereby simplifying the system's architecture, and lowering the overall cost and complexity. Secondly, the incorporation of a SAN enabled our system to maintain awareness of the navigation target even with a limited sensory field, enhancing the robot’s ability to relocate and track dynamic targets efficiently. Finally, the robust performance of our approach was demonstrated through extensive simulation and real robot experiments, which showed superior navigation capabilities in terms of both obstacle avoidance and target pursuit in complex environments.

The experimental results validated that our model outperforms traditional DRL-based methods in dynamic environments, achieving higher success rates and shorter average target-reaching times. Notably, our method exhibited greater adaptability to changes within the environment, proving particularly effective in real-world scenarios where unpredictability and the presence of dynamic obstacles are common.

Future work will focus on further enhancing the adaptability and efficiency of the navigation system under even more challenging conditions, such as outdoor environments with more extreme variability. Additionally, integrating multi-modal sensory inputs will be considered to broaden the applicability of the technology across various robotic applications.

Author Contributions

Conceptualization, Y.L.; methodology, Y.L.; Q.L.; software, Q.L.; formal analysis, Y.L.; Q.L.; Y.S.; resources, J.Y.; writing—original draft preparation, Y.L., Q.L.; writing—review and editing, J.Y., Y.S., B.W.; visualization, Q.L., B.W.; supervision, J.Y.; project administration, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- H. Yin, S. Li, Y. Tao, J. Guo, and B. Huang, "Dynam-SLAM: An Accurate, Robust Stereo Visual-Inertial SLAM Method in Dynamic Environments," IEEE Trans. Robot., vol. 39, no. 1, pp. 289-308, Feb. 2023. [CrossRef]

- S. M. Chaves, A. Kim, and R. M. Eustice, "Opportunistic sampling-based planning for active visual SLAM," in 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2014, pp. 3073-3080.

- K. Lobos-Tsunekawa, F. Leiva, and J. Ruiz-del-Solar, "Visual navigation for biped humanoid robots using deep reinforcement learning," *IEEE Robotics and Automation Letters*, vol. 3, no. 4, pp. 3247-3254, Oct. 2018. [CrossRef]

- J. Jin, N. M. Nguyen, N. Sakib, D. Graves, H. Yao, and M. Jagersand, "Mapless navigation among dynamics with social-safety-awareness: a reinforcement learning approach from 2D laser scans," *arXiv preprint arXiv:1911.03074 [cs.RO]*, Nov. 2019. [CrossRef]

- L. Tai, G. Paolo, and M. Liu, "Virtual-to-real deep reinforcement learning: Continuous control of mobile robots for mapless navigation," in 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 31-36, Sep. 2017.

- M. M. Ejaz, T. B. Tang and C. -K. Lu, "Autonomous Visual Navigation using Deep Reinforcement Learning: An Overview," 2019 IEEE Student Conference on Research and Development (SCOReD), Bandar Seri Iskandar, Malaysia, 2019, pp. 294-299. [CrossRef]

- H. Bai, S. Cai, N. Ye, D. Hsu, and W. S. Lee, "Intention-aware online POMDP planning for autonomous driving in a crowd," in *2015 IEEE International Conference on Robotics and Automation (ICRA)*, Seattle, WA, USA, 2015, pp. 454-460. [CrossRef]

- C. Wang, J. Wang, Y. Shen, and X. Zhang, "Autonomous Navigation of UAVs in Large-Scale Complex Environments: A Deep Reinforcement Learning Approach," IEEE Trans. Veh. Technol., vol. 68, no. 3, pp. 2124-2136, Mar. 2019. [CrossRef]

- J. Zeng, R. Ju, L. Qin, Y. Hu, Q. Yin, and C. Hu, "Navigation in Unknown Dynamic Environments Based on Deep Reinforcement Learning," Sensors, vol. 19, no. 18, p. 3837, Sep. 2019. [CrossRef]

- K. Zhu and T. Zhang, "Deep reinforcement learning based mobile robot navigation: A review," Tsinghua Sci. Technol., vol. 26, no. 5, pp. 674-691, Oct. 2021. [CrossRef]

- N. D. Toan and K. G. Woo, "Mapless navigation with deep reinforcement learning based on the convolutional proximal policy optimization network," in *Proc. 2021 IEEE International Conference on Big Data and Smart Computing (BigComp)*, Jeju Island, Korea (South), 2021, pp. 298-301. [CrossRef]

- L. Sun, J. Zhai, and W. Qin, "Crowd navigation in an unknown and dynamic environment based on deep reinforcement learning," *IEEE Access*, vol. 7, pp. 109544-109554, 2019. [CrossRef]

- H. Anas, O. W. Hong, and O. A. Malik, "Deep reinforcement learning-based mapless crowd navigation with perceived risk of the moving crowd for mobile robots," *arXiv preprint arXiv:2304.03593 [cs.RO]*, Apr. 2023. [CrossRef]

- M. Shi, G. Chen, Á. Serra Gómez, S. Wu, and J. Alonso-Mora, "Evaluating dynamic environment difficulty for obstacle avoidance benchmarking," *arXiv preprint arXiv:2404.14848 [cs.RO]*, Apr. 2024. [CrossRef]

- X. Sun, Q. Zhang, Y. Wei, and M. Liu, "Risk-aware deep reinforcement learning for robot crowd navigation," *Electronics*, vol. 12, no. 23, Art. no. 4744, 2023. [CrossRef]

- C. Chen, Y. Liu, S. Kreiss, and A. Alahi, "Crowd-robot interaction: Crowd-aware robot navigation with attention-based deep reinforcement learning," arXiv preprint arXiv:1809.08835 [cs.RO], Sep. 2018. [CrossRef]

- N. Duo, Q. Wang, Q. Lv, H. Wei, and P. Zhang, "A deep reinforcement learning based mapless navigation algorithm using continuous actions," in *2019 International Conference on Robots & Intelligent System (ICRIS)*, Haikou, China, 2019, pp. 63-68. [CrossRef]

- M. Dobrevski and D. Skočaj, "Deep reinforcement learning for map-less goal-driven robot navigation," *International Journal of Advanced Robotic Systems*, vol. 18, no. 1, 2021. [CrossRef]

- X. Lei, Z. Zhang, and P. Dong, "Dynamic path planning of unknown environment based on deep reinforcement learning," Journal of Robotics, vol. 2018, pp. 1-10, 2018. [CrossRef]

- G. Chen, L. Pan, Y. Chen, P. Xu, Z. Wang, P. Wu, J. Ji, and X. Chen, "Robot navigation with map-based deep reinforcement learning," arXiv preprint arXiv:2002.04349 [cs.RO], Feb. 2020. [CrossRef]

- S. Yao, G. Chen, Q. Qiu, J. Ma, X. Chen and J. Ji, "Crowd-Aware Robot Navigation for Pedestrians with Multiple Collision Avoidance Strategies via Map-based Deep Reinforcement Learning," 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 2021, pp. 8144-8150. [CrossRef]

- J. Kulhánek, E. Derner, T. de Bruin, and R. Babuška, "Vision-based navigation using deep reinforcement learning," *arXiv preprint arXiv:1908.03627 [cs.RO]*, Aug. 2019. [CrossRef]

- S. Hsu, S. Chan, P. Wu, K. Xiao, and L. Fu, "Distributed deep reinforcement learning based indoor visual navigation," in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018, pp. 2532-2537. [CrossRef]

- Y. Zhu, R. Mottaghi, E. Kolve, J. J. Lim, A. Gupta, L. Fei-Fei, and A. Farhadi, "Target-driven visual navigation in indoor scenes using deep reinforcement learning," arXiv preprint arXiv:1609.05143 [cs.CV], Sep. 2016. [CrossRef]

- J. Kulhánek, E. Derner, and R. Babuška, "Visual navigation in real-world indoor environments using end-to-end deep reinforcement learning," arXiv:2010.10903v1 [cs.RO] , Oct. 2020. arXiv:2010.10903v1 [cs.RO], Oct. 2020.

- Q. Wu, K. Xu, J. Wang, M. Xu, X. Gong, and D. Manocha, "Reinforcement learning-based visual navigation with information-theoretic regularization," IEEE ROBOTICS AND AUTOMATION LETTERS, vol. 6, no. 2, April. 2021.

- L. Liu, D. Dugas, G. Cesari, R. Siegwart, and R. Dubé, "Robot navigation in crowded environments using deep reinforcement learning," in *Proc. 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)*, Las Vegas, NV, USA, 2020, pp. 5671-5677. [CrossRef]

- H. Zhao, J. Jia, and V. Koltun, "Exploring Self-Attention for Image Recognition," in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), 2020, pp. 100-110. [CrossRef]

- Humphreys, Glyn & Sui, Jie. (2015). Attentional control and the self: The Self Attention Network (SAN). Cognitive neuroscience. 21. [CrossRef]

- S. Fujimoto, H. van Hoof, and D. Meger, "Addressing function approximation error in actor-critic methods," *arXiv preprint arXiv:1802.09477 [cs.AI]*, Feb. 2018. [CrossRef]

- H. Liu, Y. Shen, C. Zhou, Y. Zou, Z. Gao, and Q. Wang, "TD3 based collision free motion planning for robot navigation," arXiv preprint arXiv:2405.15460 [cs.RO*, May 2024. [CrossRef]

- X. Qu, Y. Jiang, R. Zhang, and F. Long, "A deep reinforcement learning-based path-following control scheme for an uncertain under-actuated autonomous marine vehicle," *J. Mar. Sci. Eng.*, vol. 11, no. 9, p. 1762, Sep. 2023. [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).