1. Introduction

The field of Human-Robot Interaction (HRI) has been rapidly evolving, with recent years seeing a significant focus on integrating Augmented Reality (AR). HRI, which combines the efforts of humans and robots, offers considerable flexibility and benefits, such as reduced production time [1, 2]. Although HRI is used in various industries, such as medical and automotive, it is predominantly applied in collaborative industrial settings, such as assembly lines and manufacturing [3].

Recent research has used AR to address these challenges in HRI. AR has been shown to improve accuracy and efficiency, reduce cognitive workload, and minimize errors [

4,

5]. In HRI, AR is implemented through devices such as headsets, smartwatches, and projections, each tailored to tackle different aspects of the aforementioned issues [

6,

7,

8]. However, the rise in AR usage has introduced a new challenge related to information visualization. Current research on AR in HRI focuses more on information architecture rather than the presentation of information to the user [

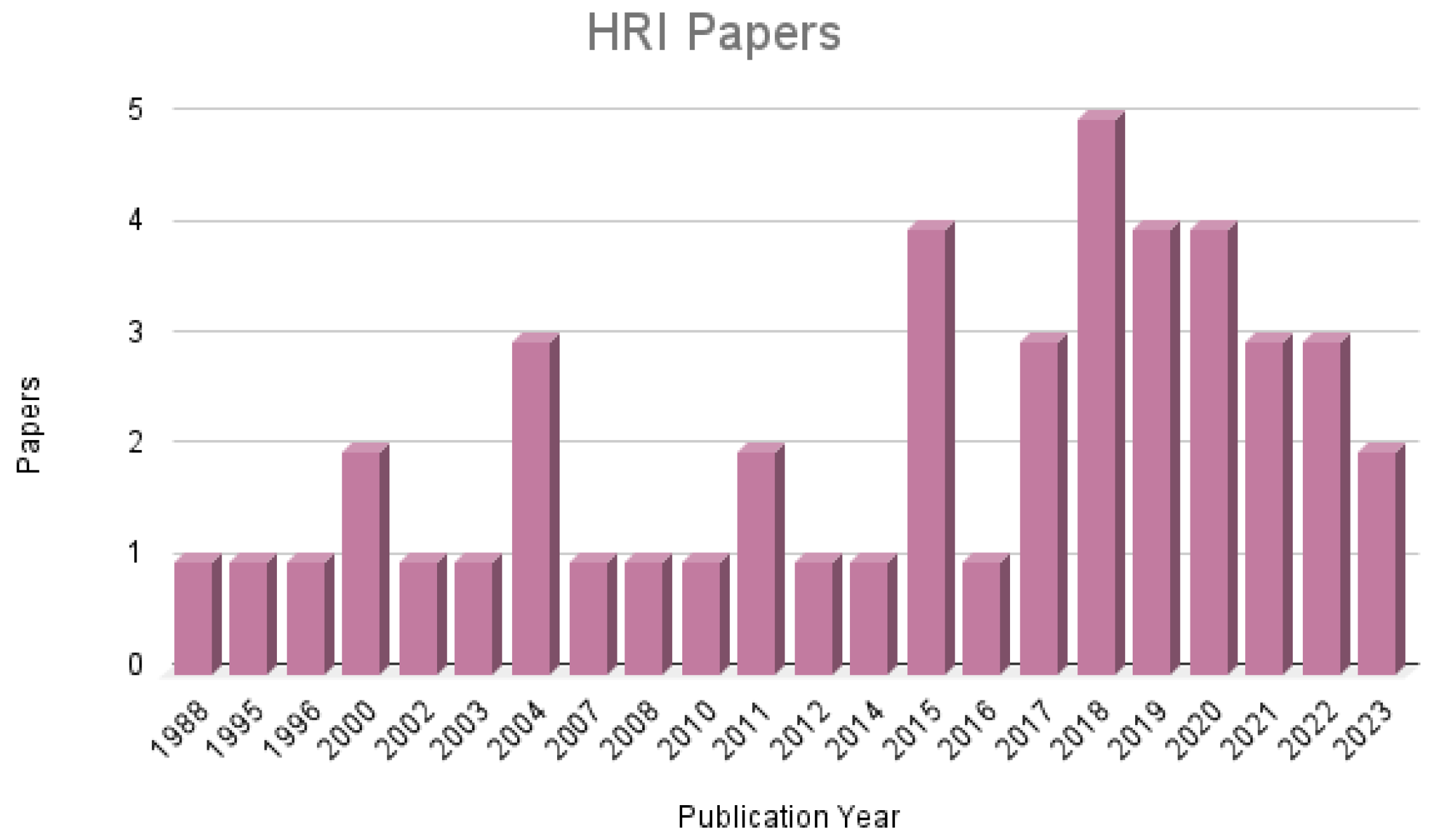

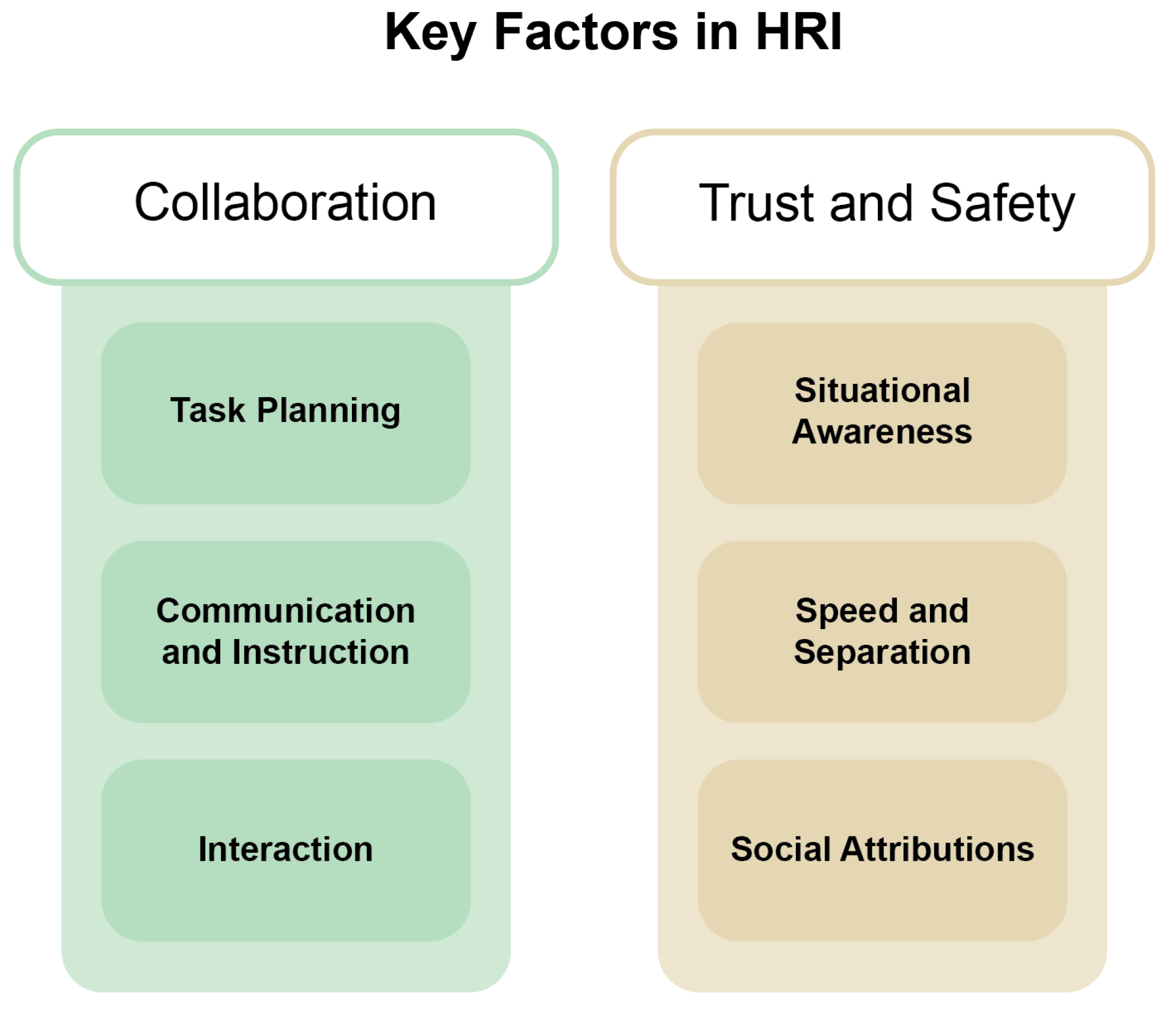

9] The two graphs below illustrate references and publication trends based on their year of publication. The first graph,

Figure 1, shows the papers that focus on HRI. The second graph,

Figure 2, displays the articles that discuss AR in HRI.

It is crucial to emphasize the user interface (UI) in AR applications, as it directly affects how users perceive, comprehend, and interact with the instructions and information provided [10]. This is especially important in high-speed, high-stress environments where humans work in close proximity to robots. Integrating Human-Computer Interaction (HCI) principles into AR interfaces can enhance both visualization and usability.

Most research on AR in robotics focuses on AR in manufacturing rather than on HRI as a whole [4, 11, 12, 13]. These papers solely address the applications and benefits of AR in aiding the work between humans and robots in manufacturing settings. Regarding HRI as a whole, prior research also includes a survey paper on the use of AR in HRI [5]. That paper is highly technical and introduces specialized terminology to categorize different AR hardware, overlays, and interaction points. However, none of the papers mentioned above address HCI, user interface design, or how visual information should be displayed. An AR survey paper briefly touches on HCI, but it is not focused on the field of HRI [9]. Instead, it looks at AR used for situational awareness across many fields of research.

There is a gap in research where HRI meets HCI. The principles of good AR design from HCI can be used to improve the visualization of information within AR systems in HRI. This survey paper aims to provide a comprehensive literature review of key factors in HRI, AR visualizations that enhance HRI, and potential applications of HCI within AR in HRI. The paper begins by discussing the key factors of HRI and the research that has improved collaboration, trust, and safety within HRI. It then looks at common challenges within HRI and prior work that uses AR to address these challenges. Lastly, it discusses the implementation of UI design practices within AR in HRI, presents good design practices drawn from HCI principles, and provides a framework for future work in AR with respect to HRI.

Having established the significance of AR in enhancing HRI, this study now turns to the methods employed to compile and analyze the relevant literature, which provide the foundation for the proposed framework.

2. Materials and Methods

In this study, four researchers collaborated to analyze and compile more than 115 papers to explore the integration of Augmented Reality (AR) into Human-Robot Interaction (HRI). The first author focused on HRI- and AR-related content, the second author concentrated on Human-Computer Interaction (HCI) materials, the third author specialized in situational awareness, and the fourth author supervised the entire research process, ensuring coherence and scientific rigor. The articles were sourced from reputable databases, including, but not limited to, Google Scholar, the ACM Digital Library, Elsevier, and IEEE, using keywords such as "AR in HRI," "HCI in HRI," and "Mixed Reality in HRI," to mention a few. The collected papers were reviewed and categorized based on their contributions to key areas within HRI, such as communication, safety, interaction, and other relevant aspects mentioned in this article. This categorization allowed for the development of a framework that outlines the critical elements of AR necessary for the advancement of HRI, focusing on improving collaboration, safety, and effective communication between humans and robots. With the review methodology in place, we can now explore the key factors that drive successful Human-Robot Interaction, particularly collaboration, trust, and safety.

3. Key Factors in HRI

Human-robot interaction (HRI) is the combination of robots and humans working together. It combines the accuracy and speed of automated machines with the adjustability and adaptability of human labor [3, 14]. HRI requires robots to be complex: they must be flexible enough to adapt to changing work environments and situations while also being autonomous [2, 15]. All robots have control systems and software controlled by their human counterparts; some require full direction, such as teleoperated robots, while others operate alone but are programmed in advance to complete certain tasks [1]. Human-robot collaboration (HRC) refers to humans and robots working together towards a common goal. HRC is a subsection of HRI and often utilizes cognitive robots that can plan their own actions by gathering information from the environment, understanding the human counterpart’s intent, and determining what steps need to be completed in order to finish the task [16]. These types of cognitive robots are advancing more in the field of HCI and require modern machine learning solutions that can build both the cognitive model and the behavioral block [17]. Typically, HRC is seen in industrial work settings, including manufacturing and fabrication [18].

This paper mostly looks at HRC research in industrial settings but also includes HRI and HRC research in other fields. Therefore, the umbrella term HRI will be used unless it is necessary to specify the research was done for HRC. HRI can be beneficial for a number of reasons including low production costs, flexible adaptation to changing production layouts and tasks, and ergonomic benefits for humans [

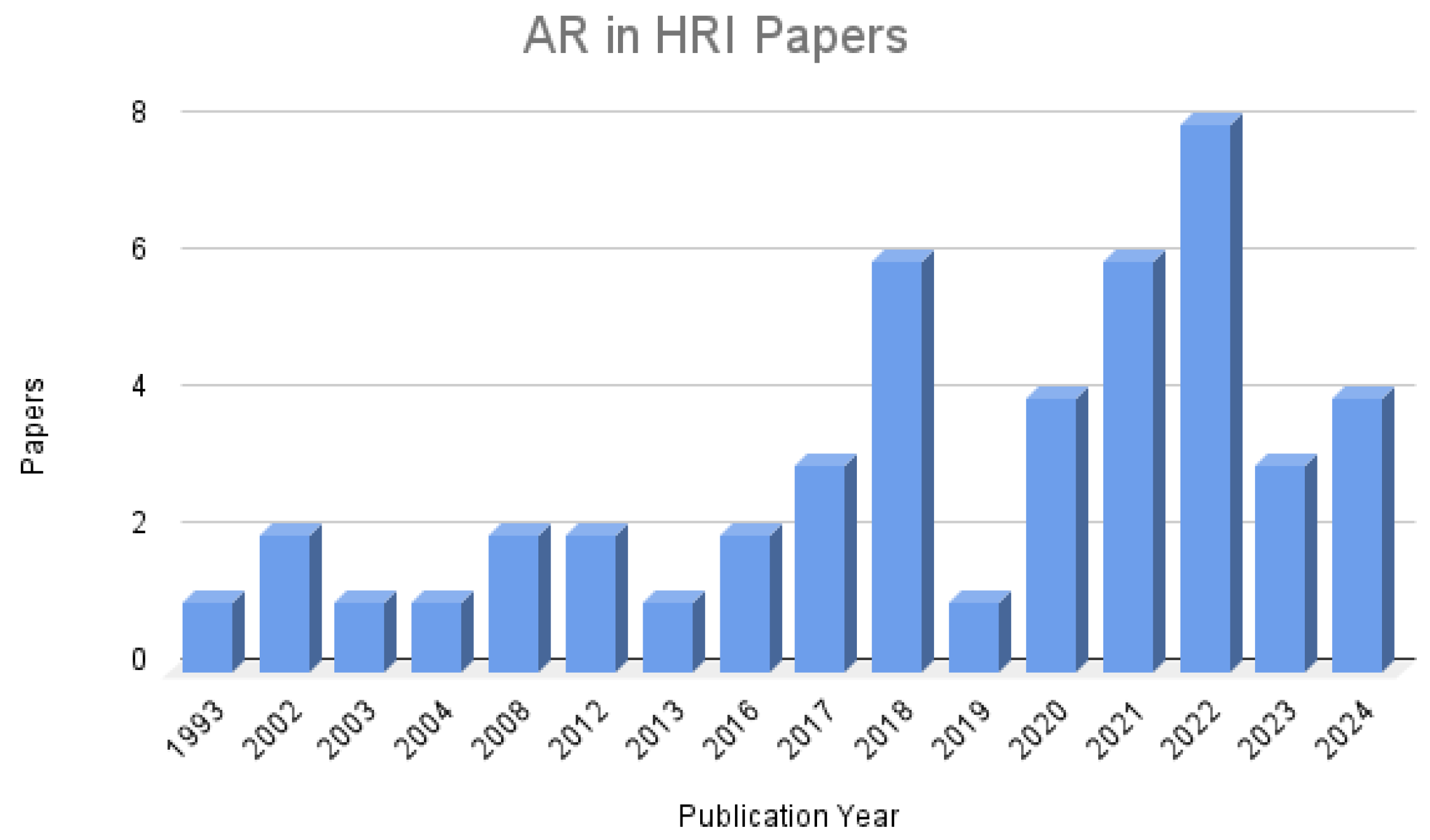

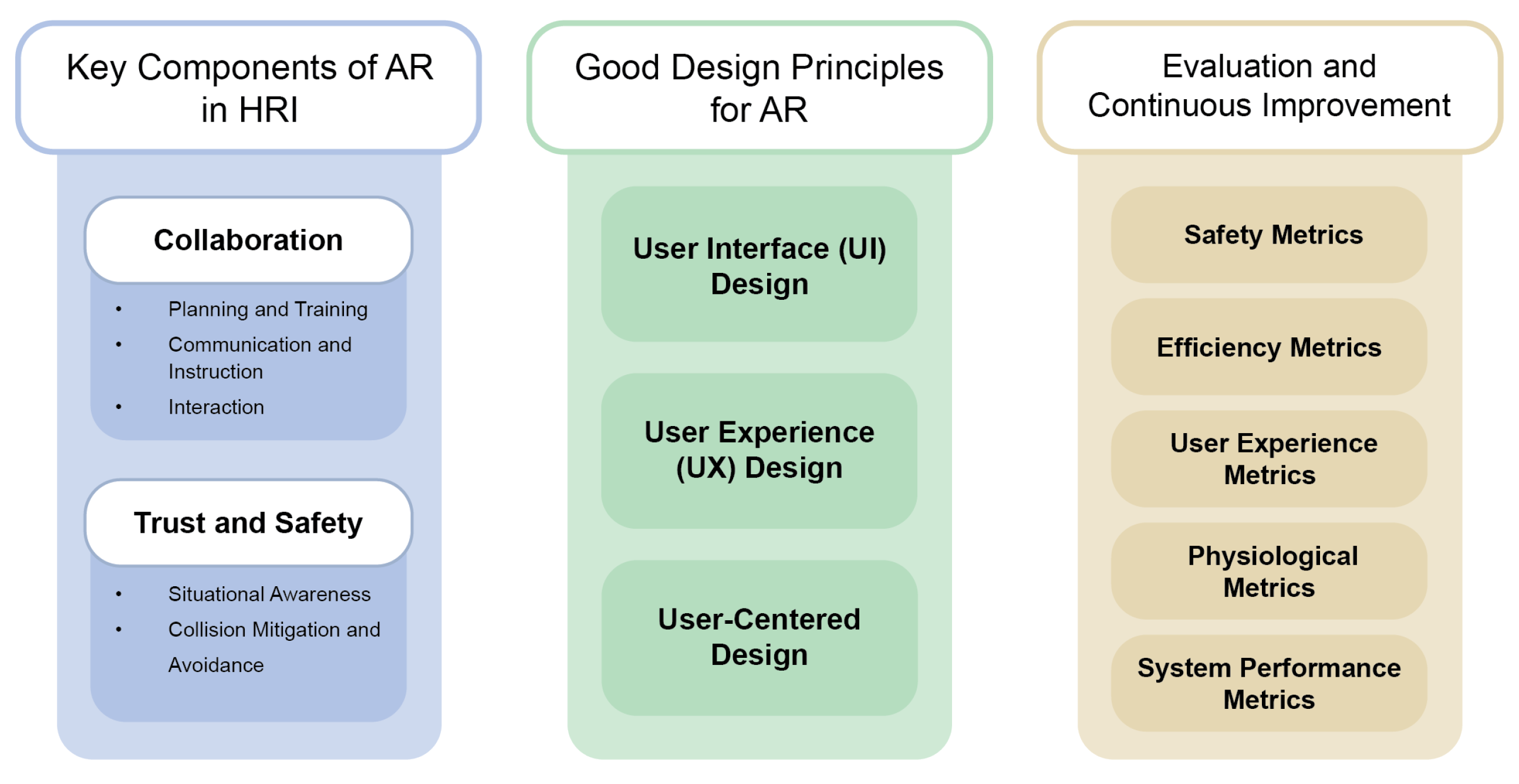

19]. Research in HRI tends to focus on improving fluency and efficiency between humans and robots through 2 key factors: Collaboration and Trust and Safety. The framework for this section has been visualized in

Figure 3

3.1. Collaboration

Collaboration between humans and robots refers to how they work together. Bauer et al. categorize HRI collaboration into five categories: cell, coexistence, sequential collaboration, cooperation, and responsive collaboration [16]. Each successive level increases how closely robots and humans work together and how much they interact with each other.

Efficient collaboration requires planning, communication and instruction, and interaction. Planning entails the allocation of tasks and deciding the pathways of the robots [16]. Communication and instruction pertain to how humans and robots send each other feedback or instructions. With proper planning and communication, successful interaction between humans and robots can be achieved. Interaction can also proceed smoothly and quickly with the integration of action planning, in which robots anticipate a human’s movement and then plan their own actions accordingly. This subsection explores current research that aims to improve planning, communication, and interaction in HRI.

3.1.1. Task Planning

When robots and humans work together, proper planning is required to ensure safe interaction and a productive environment. One aspect of planning is task allocation or assigning proper tasks to both human individuals and robot entities. This ensures a smooth workflow and prevents overloading for any single participant. Lamon et al. [

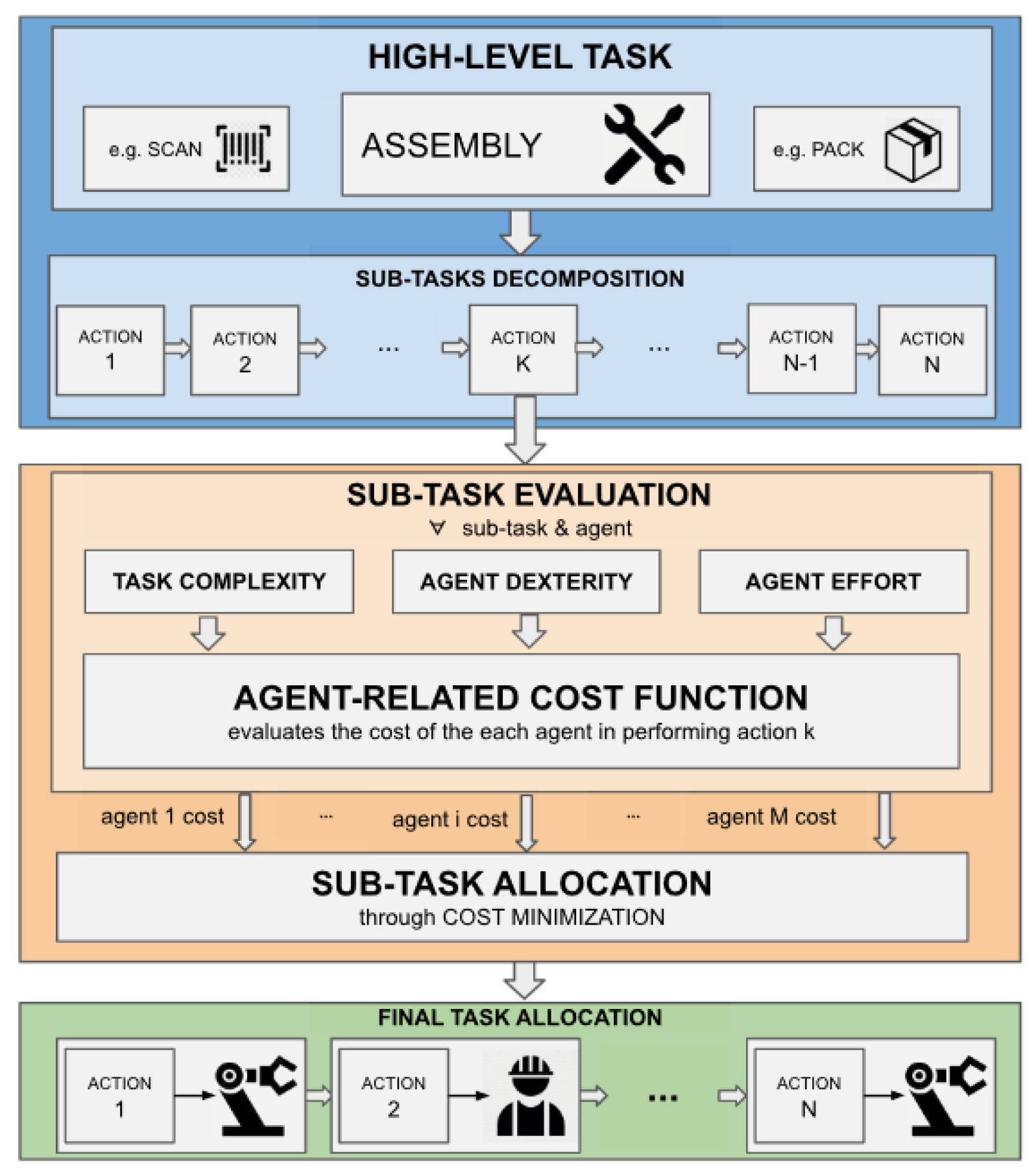

20] look at allocating tasks in manufacturing scenarios based on a robot’s physical characteristics. The experiment looks at the physical capabilities of robot agents and human workers and then allocates tasks accordingly.

Figure 4, taken from the paper, explains how tasks are broken down into a series of actions. These actions are assessed by the algorithm to determine whether they should be completed by a human or robot agent and are then allocated accordingly. The solution proposes which tasks are better suited for robot agents to remove easily physically fatiguing tasks from human workers. Results proved that tasks allocated by physical characteristics are suitable for fast-reconfigurable manufacturing environments. Another way to allocate tasks is by looking at trust [

20]. Rahman et al. [

21] consider the two-way trust of human trust in robots and human trust when creating a system that allocates and reallocates subtasks based on trust levels. Real-time trust methods and computational models of trust are used to calculate trust levels in both humans and robots. Subtasks are allocated using these calculations and reallocated in real time if trust levels drop. The results show that the allocation of subtasks and re-allocation based on trust lead to improved assembly performance compared to considering human trust alone or no trust at all.
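The capability-based allocation described above can be sketched in a few lines. This is a minimal illustration only, not the algorithm of Lamon et al. [20]: the `Action` fields, the fatigue scores, and the `fatigue_threshold` value are all hypothetical and chosen purely for the example.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    fatigue_cost: float   # hypothetical 0..1 score of physical load on a human
    robot_capable: bool   # hypothetical flag: can the robot physically do it?


def allocate(actions, fatigue_threshold=0.5):
    """Assign each action to 'robot' or 'human'.

    An action goes to the robot only if the robot can perform it and it
    would be physically fatiguing for a human; everything else stays human.
    """
    plan = {}
    for action in actions:
        if action.robot_capable and action.fatigue_cost >= fatigue_threshold:
            plan[action.name] = "robot"
        else:
            plan[action.name] = "human"
    return plan


# Hypothetical assembly task broken down into three actions.
task = [
    Action("lift_panel", fatigue_cost=0.9, robot_capable=True),
    Action("insert_clip", fatigue_cost=0.2, robot_capable=True),
    Action("route_cable", fatigue_cost=0.7, robot_capable=False),
]
plan = allocate(task)
```

In this sketch only `lift_panel` is handed to the robot: `insert_clip` is not fatiguing, and `route_cable` is beyond the assumed robot capabilities. A trust-based scheme would replace the fixed threshold with trust estimates that are updated during the task.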

3.1.2. Communication and Instruction

Instruction and communication are crucial in any industrial task, but they are especially important when robot entities collaborate with humans. In typical HRI settings, most communication occurs with a human operator who controls the robots in the environment and instructs all human workers. The case is different for teleoperated robots, which are controlled by a human operator from a different location. Teleoperated robots often enter locations that are not suitable for humans, such as underground caves or sewage systems. These robots are fully operated from a machine, and communication can be difficult when the robot’s environment is dark and cannot be properly viewed through its camera [22]. Glassmire et al. [23] add force feedback to teleoperated humanoid robot astronauts. The addition of haptic feedback was shown to improve the performance of the operator and decrease completion times.

3.1.3. Interaction

When humans and robots work together, it often involves taking turns or working on separate tasks, resulting in rigid actions and limited flow. In contrast, when humans work with other humans, they adapt to each other and begin to predict each other’s actions, creating a more seamless and efficient workflow [

24]. This type of flow is often attempted to be replicated by making robots autonomous using various systems that involve prediction and adaptation. Hoffman et al. [

24] introduce an adaptive action selection system to robots that allows for anticipatory decision-making. The robots adapt to the human workers and use predictions to automate their next steps. The researchers found an increase in fluency in human-robot interaction when the robots used anticipatory actions through a higher increase in concurrent motion and a decrease in time between human and robot action. Additionally, participants who interacted with robots that did not use anticipatory actions were often annoyed by the robots’ lack of predictive behavior. Lasota et al. [

25] conducted a similar study in which participants worked with an adaptive robot that used human-aware motion planning. Fluency between humans and robots were evaluated along with human satisfaction and perceived safety. The results concluded that team fluency increased with the adaptive robot, leading to faster task completion times, decreased idle times, and increased concurrent motion. Additionally, participants reported feeling comfortable and satisfied working with the adaptive robot. Yao et al. [

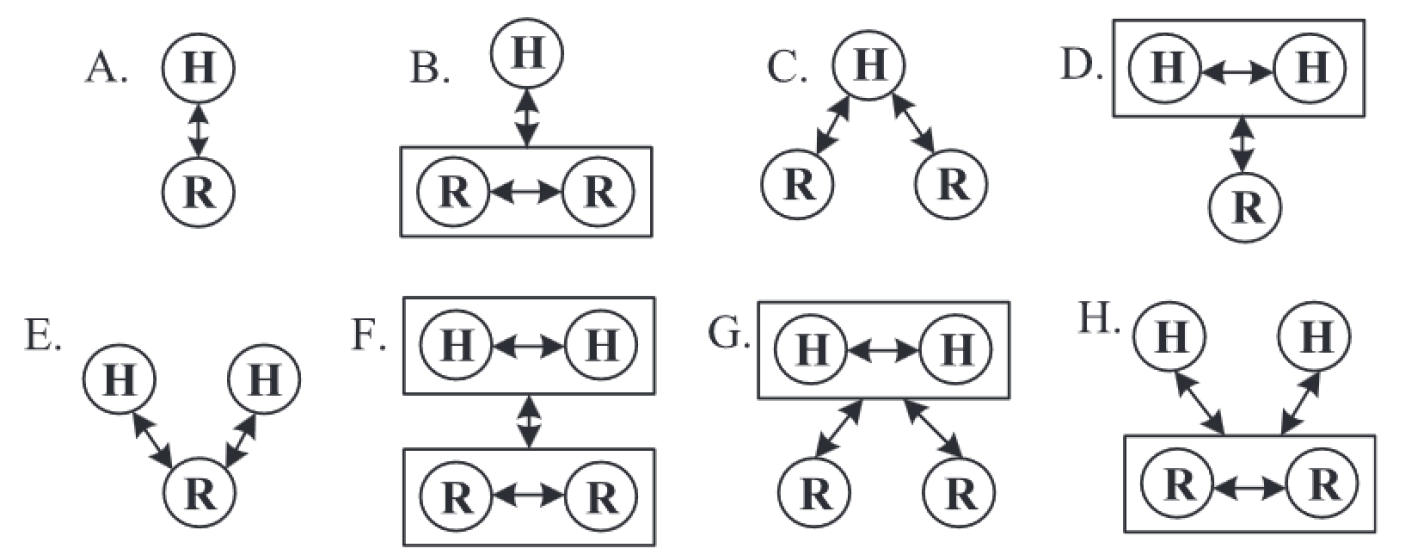

26] use a different approach to increasing autonomy in robots, they use Cyber-Physical Production System (CPPS) and IEC 6199 (International Electrotechnical Commission) function blocks. The system was designed to allow for robot autonomy during various human-robot interaction scenarios.

Figure 5 shows eight categories of human-robot teamwork that are addressed by this system. The feasibility of this proposed system was tested in an assembly line case and proven to have potential capabilities to improve HRC fluency and increase the flexibility of manufacturing systems in future work.

Another way to increase autonomy in robots is through the use of digital twins. Digital twins are digital representations of physical objects. In HRI, digital twins are typically used to model robotic mechanisms and simulate changes in tasks and manufacturing scenarios before implementing them in real life [27]. This virtual visualization enables workers to devise optimal solutions and saves time, money, and effort, as not all changes need to be physically tested [28]. Liu et al. [28] create a digital twin-based design platform using a quad-play CMCO (Configuration design-Motion planning-Control development-Optimization decoupling) model. The platform is used to design flow-type smart manufacturing systems that can be easily adjusted. A completed case study demonstrated that the digital twin-based platform is both efficient and feasible. Rosen et al. [29] explore how digital twins can increase the autonomy of robots in manufacturing settings. Information gathered by sensors or generated by the system is stored within a digital twin, allowing it to understand and represent the full environment and process state. The researchers propose that this information, along with the models of the digital twin, can be used to create simulations that anticipate the consequences of actions. This capability can enable the development of action-planning autonomous robots.

3.2. Trust and Safety

Trust and safety are important aspects of HRI [18, 30, 31]. When working with automated machines, it is necessary to ensure the safety of humans. Safety leads to human trust, which in turn increases fluency and productivity [18]. Neglecting the importance of safety in HRI can lead to serious injuries. Collision mitigation or avoidance is a necessary and critical system in HRI, especially in human-robot collaboration. One way to avoid injuries is through situational awareness (SA), which ensures that both humans and robots are aware of each other’s locations at all times. Another key approach is Speed and Separation Monitoring (SSM), a fundamental safety strategy that dynamically adjusts robot operations based on real-time proximity and the relative velocity of humans in a shared workspace. This method is guided by standards such as ISO 10218 and ISO/TS 15066:2016 [32], which outline the requirements for safe SSM. Additionally, trust in robots can be enhanced by introducing social attributes like facial expressions, which help create a sense of bonding between humans and robots. The presence of robust safety measures like SSM and SA, combined with the introduction of social attributes in robots, can significantly boost human workers’ trust and acceptance of robotic systems. When workers feel confident that their safety is prioritized, they are more likely to engage positively with robots, leading to better collaboration and higher productivity.

Haddadin et al. [33] systematically evaluated safety in HRI, looking at real-world threats, dangers, and injuries. The paper also included a summary and classification of possible human injuries when working with robots, including soft-tissue injuries and blunt trauma. Topics like trust and safety can be very broad and difficult to define, as they encompass human emotions, behavior, and psychology. Lee et al. [34] provide a detailed paper defining trust, its various aspects, and strategies for designing automation devices, such as robots, to gain trust.

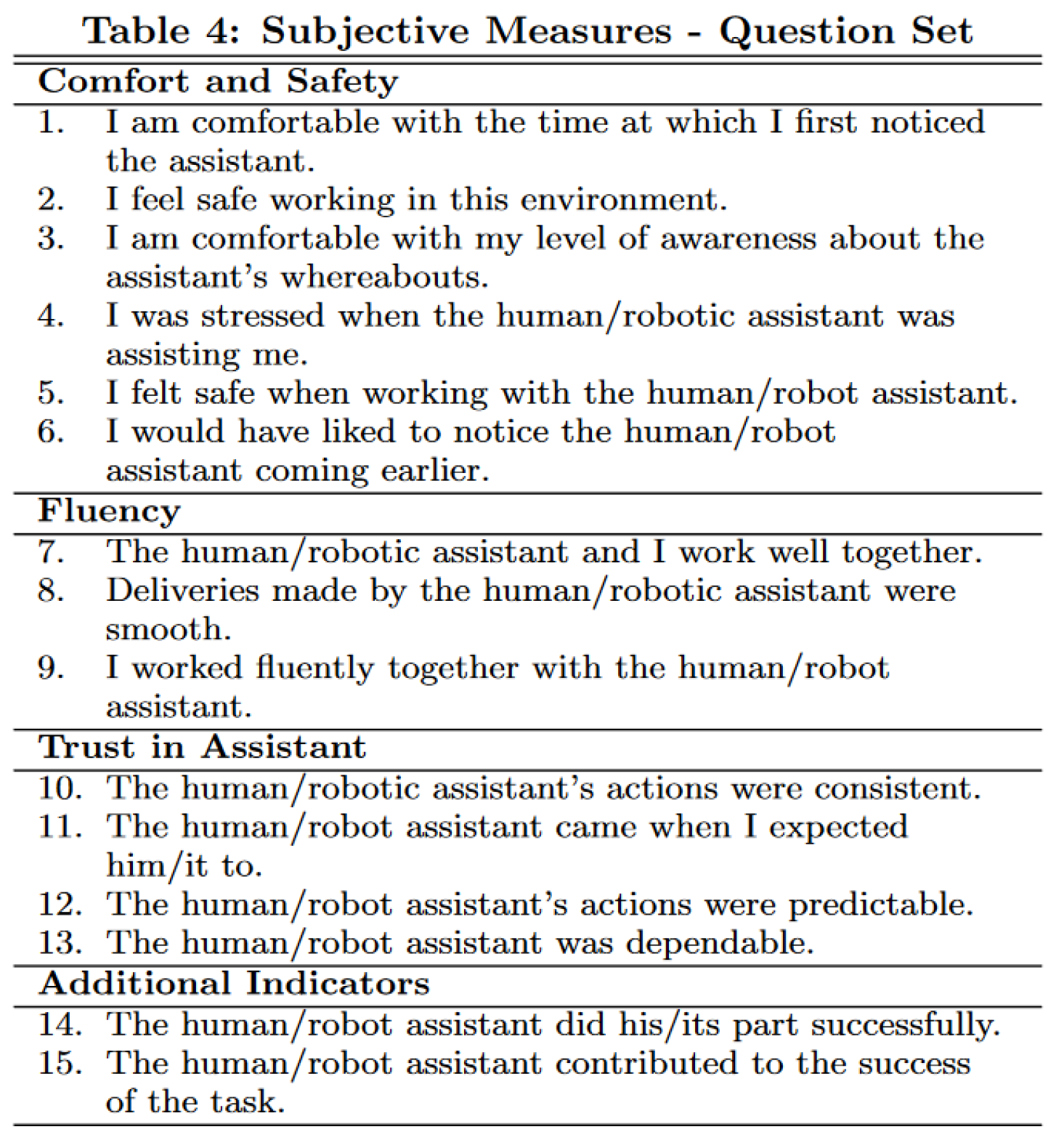

A key challenge in HRI is measuring or evaluating trust and safety. Qualitative data, often collected through questionnaires, is typically used to assess perceived safety or trust. For example, Sahin et al. [30] conducted an experiment on perceived safety when working with robots, gathering participant data through questionnaires during and after trials. Similarly, Maurtua et al. [35] used questionnaires as a qualitative measure of trust in their series of HRI experiments. On the other hand, Hancock et al. [36] explored various quantitative methods for measuring perceived trust in HRI. Their survey paper analyzed numerous papers and categorized quantitative measures into three sections: human-related, robot-related, and environmental. They concluded that robot-related measurements were most commonly associated with determining trust in HRI. There is no single correct method for measurement; qualitative and quantitative measures can be combined. For instance, Soh et al. [31] used both quantitative and qualitative methods to measure perceived safety, cross-checking quantitative data with qualitative questionnaire responses to ensure consistency.

3.2.1. Situational Awareness

A significant area of research concerning safety and trust in HRI is Situational Awareness (SA). In HRI, SA involves both the human being aware of the robot’s position relative to themselves and the robot being aware of the human’s position relative to itself [37]. SA is crucial for establishing trust, safety, and effective collaboration between humans and robots. Additionally, SA directly corresponds with staying "in the loop." Low SA can lead to an "out-of-the-loop" problem, where operators are unable to effectively take control when automation and robots fail. This lack of awareness hinders their ability to respond promptly and accurately in critical situations, compromising safety and efficiency [38]. Situational awareness can improve collaboration between humans and robots, but the way it is displayed is crucial to its benefits. For instance, in an experiment by Unhelkar et al. [39] comparing human and robotic assistants, a red light was used to indicate when the robot was getting close. This visual cue frightened the workers and put them on edge. As a result, they preferred the human assistant over the robot due to the unsettling nature of the red light. The most prominent technique for measuring SA is the Situational Awareness Global Assessment Technique (SAGAT). It was developed by Mica R. Endsley [40, 41] in 1988 and is still used today. SAGAT involves pausing the machine at random times so the user cannot predict the robot’s whereabouts or next moves. This allows the human’s perception of the robot’s location to be compared with the robot’s actual location. The method is often paired with questions to the user about the robot’s location or action. Figure 6, taken from Unhelkar et al. [39], shows the series of awareness questions asked during their user testing to measure the human’s situational awareness. Newer techniques for measuring SA include the Situation Present Assessment Method (SPAM) and the Tactical Situational Awareness Test (TSAT) for the small-unit tactical level [42, 43].
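The comparison at the heart of a SAGAT-style measurement, perceived robot location versus actual robot location at each random pause, can be illustrated with a short sketch. The scoring rule below (a response counts as correct if it falls within a distance tolerance) and the names `sagat_score` and `tolerance_m` are assumptions made for illustration; they are not taken from Endsley’s procedure or from Unhelkar et al. [39].

```python
import math


def sagat_score(freezes, tolerance_m=0.25):
    """Fraction of pauses at which the participant located the robot correctly.

    `freezes` is a list of (perceived_xy, actual_xy) pairs, one per random
    pause. A response is counted as correct when the Euclidean error is
    within `tolerance_m` metres (a hypothetical threshold).
    """
    if not freezes:
        raise ValueError("at least one freeze is required")
    correct = sum(
        1 for perceived, actual in freezes
        if math.dist(perceived, actual) <= tolerance_m
    )
    return correct / len(freezes)


# Three hypothetical pauses: (where the human thought the robot was, where it was).
freezes = [
    ((0.0, 0.0), (0.1, 0.0)),   # error 0.1 m, counted as correct
    ((1.0, 1.0), (2.0, 1.0)),   # error 1.0 m, counted as incorrect
    ((3.0, 4.0), (3.0, 4.2)),   # error 0.2 m, counted as correct
]
score = sagat_score(freezes)
```

A real study would typically combine such a location check with the query responses collected at each pause rather than rely on a single distance threshold.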

3.2.2. Speed and Separation Monitoring

Speed and Separation Monitoring (SSM) is a safety mechanism in human-robot collaboration that continuously tracks the distance between humans and robots. When a human enters a designated safety zone, the system prompts the robot to reduce speed or halt, thereby preventing collisions and ensuring a safe working environment. The framework for SSM, as established by Marvel and Norcross, underscores the importance of calculating the minimum protective distance at which a robot needs to stop, using parameters such as human speed, robot speed, and the robot’s stopping time (TS). This is further enhanced by implementing external observer systems that continuously monitor the workspace, ensuring the robot can initiate a controlled stop if the separation distance is breached [44]. Complementing this approach, Kumar et al. [45] demonstrated the efficacy of using Time-of-Flight (ToF) sensor arrays mounted on robot links to dynamically adjust the robot’s operational speed based on real-time distance measurements and relative velocities between the human and the robot. This methodology not only improves safety but also integrates self-occlusion detection to filter out false readings, thus enhancing system reliability. Additionally, Rosenstrauch et al. [46] highlighted the use of Microsoft Kinect V2 for skeleton tracking, which continuously detects and tracks human workers within the shared workspace. This allows for dynamic adjustments in the robot’s speed, ensuring compliance with ISO/TS 15066. Furthermore, Ganglbauer et al. [47] introduced a computer-aided safety assessment tool that incorporates real-time digitization of human body parts and dynamic robot models to estimate potential contact forces, thereby providing immediate feedback on safety risks. Integrating these SSM methodologies into HRI supports adherence to international safety standards and fosters safe and efficient working environments.
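To make the minimum protective distance concrete, the sketch below uses a simplified constant-speed form of the protective separation distance commonly associated with ISO/TS 15066 and the SSM literature: human travel during the robot’s reaction and stopping time, robot travel during its reaction time, the robot’s braking distance, and allowances for intrusion and sensing uncertainty. The function names, the `slow_margin` band, and all numeric values are assumptions for illustration; the standard itself remains the normative reference.

```python
def protective_distance(v_h, v_r, t_r, t_s, braking_dist,
                        c=0.0, z_d=0.0, z_r=0.0):
    """Simplified protective separation distance in metres.

    v_h, v_r      human and robot speeds toward each other (m/s)
    t_r, t_s      robot reaction time and stopping time (s)
    braking_dist  distance the robot travels while braking (m)
    c             intrusion distance allowance (m)
    z_d, z_r      human and robot position uncertainties (m)
    """
    s_h = v_h * (t_r + t_s)   # human keeps moving until the robot has stopped
    s_r = v_r * t_r           # robot keeps moving until it starts to brake
    return s_h + s_r + braking_dist + c + z_d + z_r


def robot_command(separation, s_p, slow_margin=0.5):
    """Map the measured separation to a speed command (hypothetical policy)."""
    if separation <= s_p:
        return "stop"
    if separation <= s_p + slow_margin:
        return "slow"
    return "full_speed"


# Hypothetical values: a walking human, a moderately fast robot.
s_p = protective_distance(v_h=1.6, v_r=1.0, t_r=0.1, t_s=0.3,
                          braking_dist=0.2, c=0.1, z_d=0.05, z_r=0.05)
command = robot_command(separation=1.5, s_p=s_p)
```

With these assumed numbers the protective distance is about 1.14 m, so a measured separation of 1.5 m falls inside the slow-down band rather than triggering a stop.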

3.2.3. Social Attributions

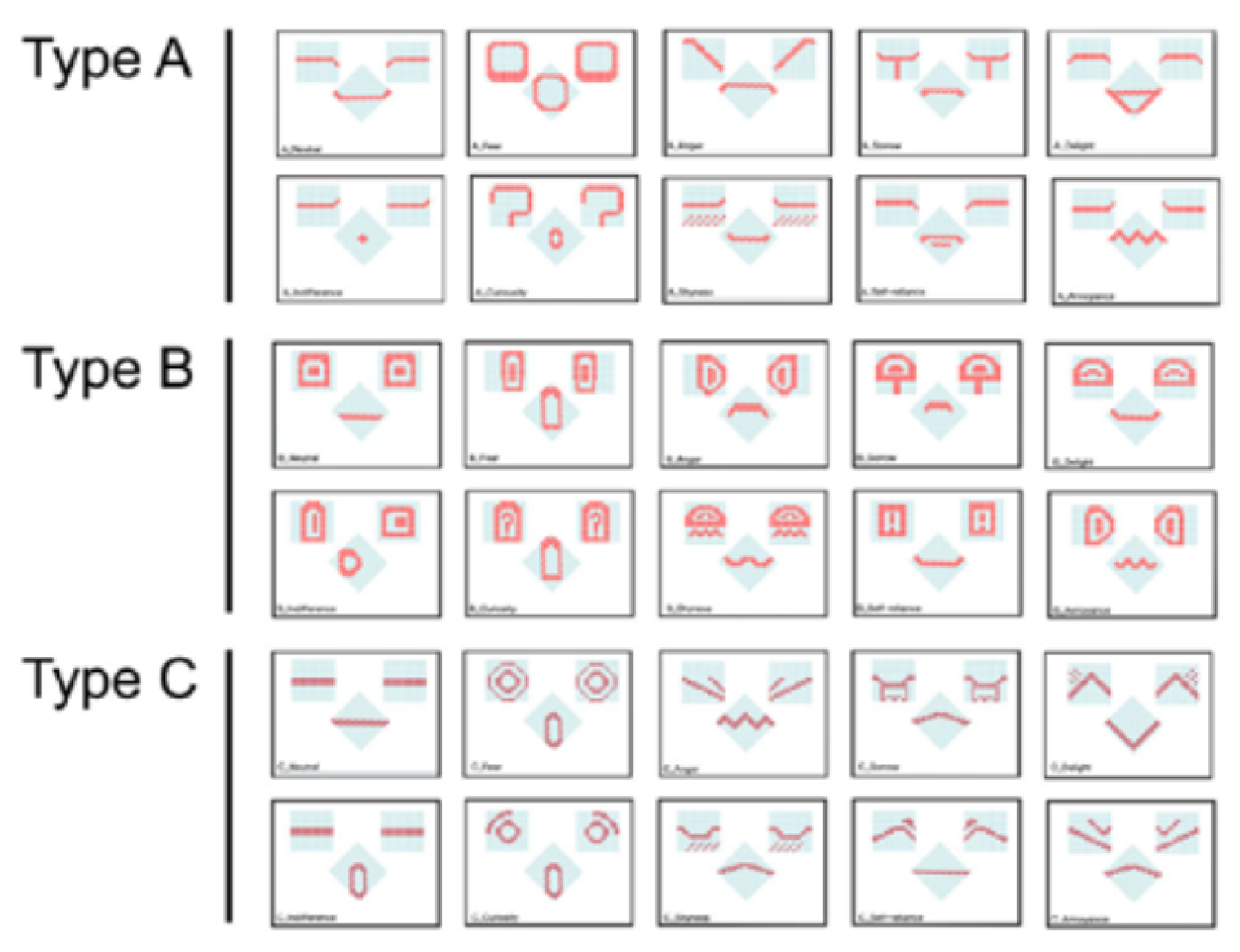

A smaller but still important area of research within safety and trust in HRI is promoting social interactions between humans and robots. This includes exploring how humans work socially with robots, adding emotional cues and social body language to robots, and understanding mental stress when working with robots. Through a Wizard of Oz and video ethnographic study at an elementary school, Oh and Kim [48] discovered that over time, children develop an emotional connection with robots. The character-like robots displayed a variety of facial expressions, as shown in Figure 7. A total of 30 emotions were displayed, including sorrow, anger, fear, delight, curiosity, and indifference. The emotional bond created with robots can lead to long-term use of robots, thereby extending their typically short lifespans. A similar emotional connection was created between workers and a robot co-worker in a study conducted by Sauppe et al. [49]. This study concluded that the social attributes of the robot facilitated this emotional connection, which in turn made the workers feel safe when near the robot. They began perceiving the robot as they would a human co-worker. Additionally, Bruce et al. [50] explore how robots that express facial features and engage with humans socially, such as by turning towards them, are more compelling to interact with and thereby increase human trust. The mental stress of human workers is also a crucial factor to consider when aiming to ensure safety and trust around robots. This is important because mental stress directly correlates with productivity and comfort. Lu et al. [51] document a number of measurements that can be used to gauge the mental stress of workers when collaborating with robots. Monitoring mental stress and giving robots social attributes can together improve the interaction between humans and robots, fostering trust and creating safer workspaces.

Although understanding the essential factors that contribute to effective HRI is crucial, it is equally important to recognize the challenges that persist in this field, which the following section addresses.

4. Challenges in HRI

The field of Human-Robot Interaction (HRI) faces several key challenges that must be addressed to ensure effective and safe collaboration between humans and robots. Kumar et al. [18] identify the three main challenges in HRC as human safety, human trust in automation, and productivity. This paper identifies similar challenges, which include safety concerns, low levels of communication and collaboration, and the necessity for clear instruction and planning. Although recent research offers innovative solutions and improvements, HRI still struggles with the need for constant feedback to enable natural interactions. Currently, most HRI scenarios involve pre-planned actions on computers or adaptive robots that lack real-time interactive capabilities.

Communication and collaboration between humans and robots also remain inadequate. Effective HRI requires seamless interaction, where both parties can understand and predict each other’s actions. However, current systems often lack the capability to provide dynamic, ongoing communication. Rather, the communication is one-sided, with only the robot predicting, while the human cannot see its predictions or upcoming actions [24, 25, 26]. This deficiency hinders the fluidity of teamwork and reduces overall efficiency, leading to misunderstandings and delays.

Figure 8 (left) depicts the robot’s workspace setup where human interaction takes place. On the right, it illustrates how the robot from Lasota et al.’s [25] experiment predicts human actions. While the most likely and frequently used paths are predicted, this method does not account for random actions or movements by humans. Additionally, the human cannot predict the robot’s path, which could further assist in avoiding collisions.

Furthermore, clear instruction and planning are crucial for optimizing HRI. Robots typically require precise instructions to perform tasks accurately. However, in dynamic and fast-paced environments, the ability to provide and update these instructions in real time is limited [52, 53]. This limitation results in inefficiencies and increased cognitive workload for human operators, who must continuously monitor and adjust the robots’ actions.

Lastly, safety concerns are important in HRI, as the close physical interaction between humans and robots in environments such as manufacturing and assembly lines introduces the risk of collision. Without real-time feedback and communication, it is challenging to ensure that both humans and robots can anticipate and respond to each other’s movements, potentially leading to hazardous situations [

54,

55,

56,

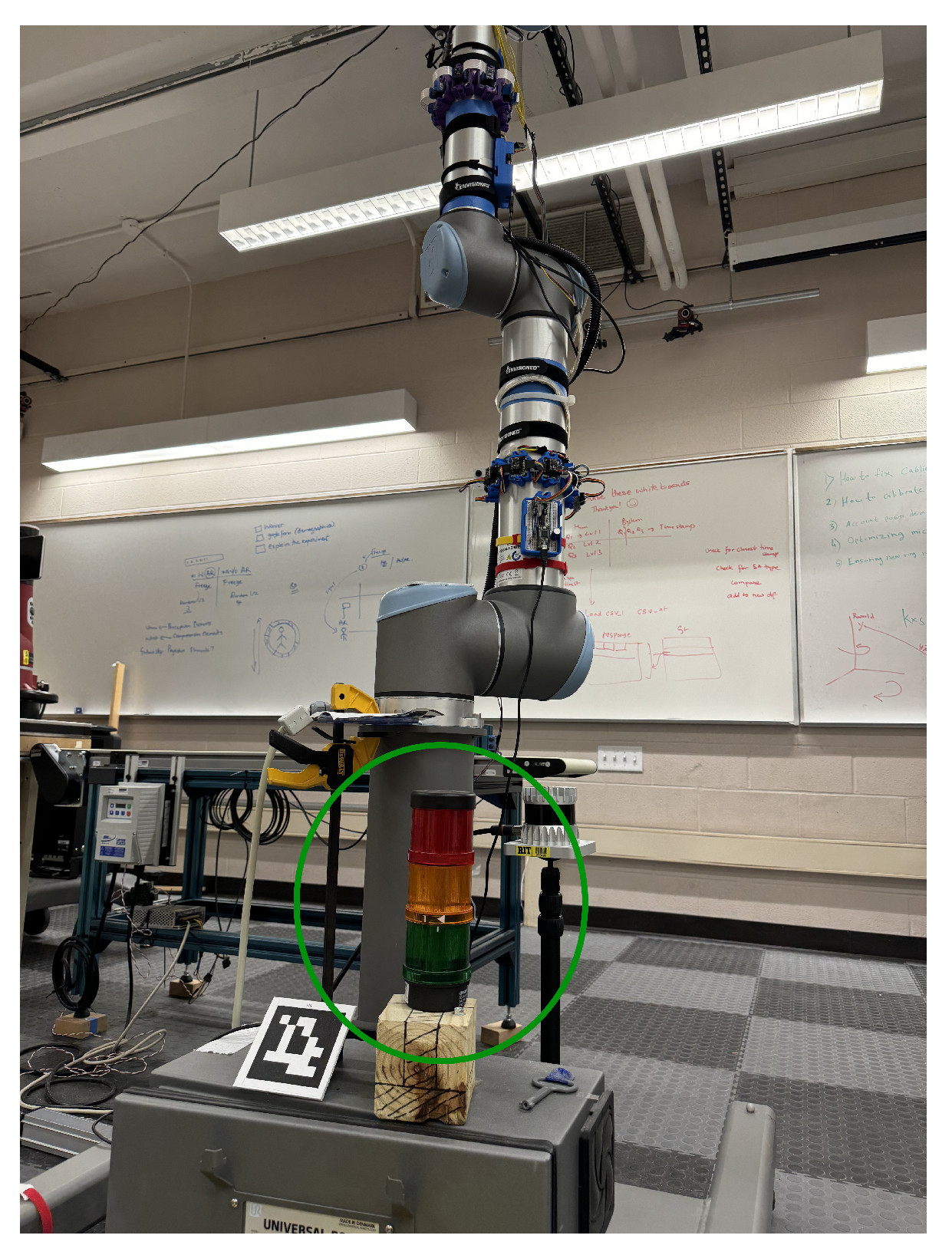

57]. Although solutions such as flashing lights have been created to show the whereabouts of the robots, unless the light is always in constant view of the user, it cannot always be useful and reach its full potential for ensuring safety and situational awareness [

39].

Figure 9 shows an example of lights near a collaborative robot that lights up to show the robot is in motion. The next section demonstrates how Augmented Reality can enhance HRI and provide solutions to the challenges that currently exist in the field, showcasing relevant research that addresses these issues.

5. Augmented Visualizations Used to Improve HRI

The integration of Augmented Reality (AR) presents a promising solution to the challenges in HRI. AR overlays virtual elements on a real-world environment. It is typically used to convey important information needed while performing a task or viewing an environment [4, 11]. AR enables humans to receive immediate visual feedback about the status and intentions of robots. This capability allows for more intuitive and responsive interactions. Additionally, AR has been integrated into a variety of fields, including medical, entertainment, military, product design, and manufacturing [4]. More recently, it has been used in HRI to improve collaboration and feelings of safety between robots and humans [58, 59]. Within HRI, AR is typically presented in the form of wearable devices, such as head-mounted displays (HMDs) and smartwatches. It is also seen in the form of projections onto real-life surfaces [60]. This section will explore research demonstrating that AR can enhance planning and training, communication and instruction, and interaction within the field of HRI. Additionally, the use of AR to increase situational awareness and collision mitigation will be examined to understand how it improves trust and safety. Table 1 below categorizes all of the papers reviewed in this section.

5.1. Collaboration Utilizing AR

Similar to traditional HRI collaboration, collaboration in HRI using AR focuses on three main components: planning and training, communication and instruction, and interaction. The key difference is that AR is used to enhance the features and systems involved in these components, ensuring more efficient collaboration. Additionally, there are various ways in which AR can be integrated into the collaboration, expanding the interaction between humans and robots to include how AR interfaces with both. Suzuki et al. [5] provide a detailed categorization of the various approaches, interactions, and characteristics commonly found in AR applications within HRI. They categorize the three main approaches of how AR works with humans and robots as on-body, on-environment, and on-robot. This subsection will explore how AR is used in the field of HRI to improve planning and training, communication and instruction, and interaction, ultimately making collaboration more effective.

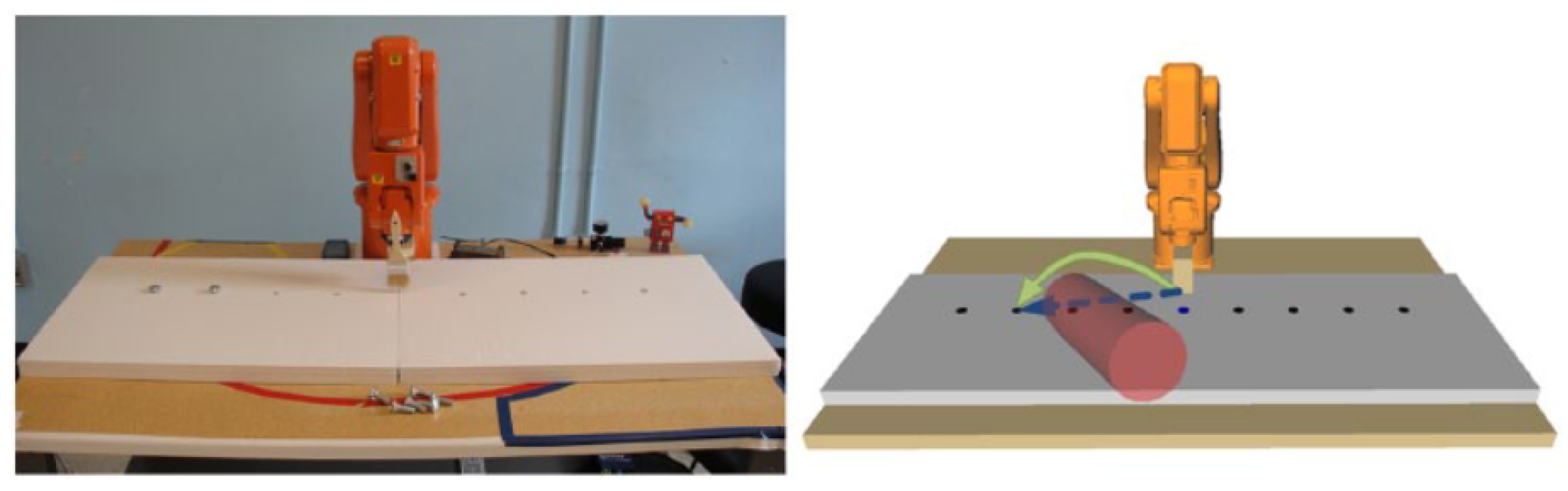

5.1.1. Planning and Training Using AR

Recent research has found that AR enriches path planning and training by offering a virtual approach rather than a physical one, making operations safer, easier, and more flexible [

61]. AR can be used to understand a robot’s status and planned actions, discuss and review plans with robots and generate optimal plans before implementing them. Fang et al. [

61] utilize AR to plan the path of an industrial robot. Despite encountering accuracy errors in their case study due to the application system, the concept of AR path planning was generally considered a success. Doil et al. [

62] explore the use of AR in planning manufacturing tasks by placing virtual robots and machines within a real-world workspace to map out a manufacturing layout. This approach eliminates the need to model the work environment as required in a VR-based version, streamlining the planning process. Similarly, assembly workstation planning using AR is explored by Wang et al. [

63] The researchers developed an AR system that enables HRC assembly simulation based on current human worker mapping and motion data. This system allows operators to design optimal HRC assembly lines that mimic human assembly lines efficiently and cost-effectively. In HRI training, AR technology often provides virtual simulations of real-life interactions and situations, helping individuals better understand and become comfortable working with robots. Bischoff et al. [

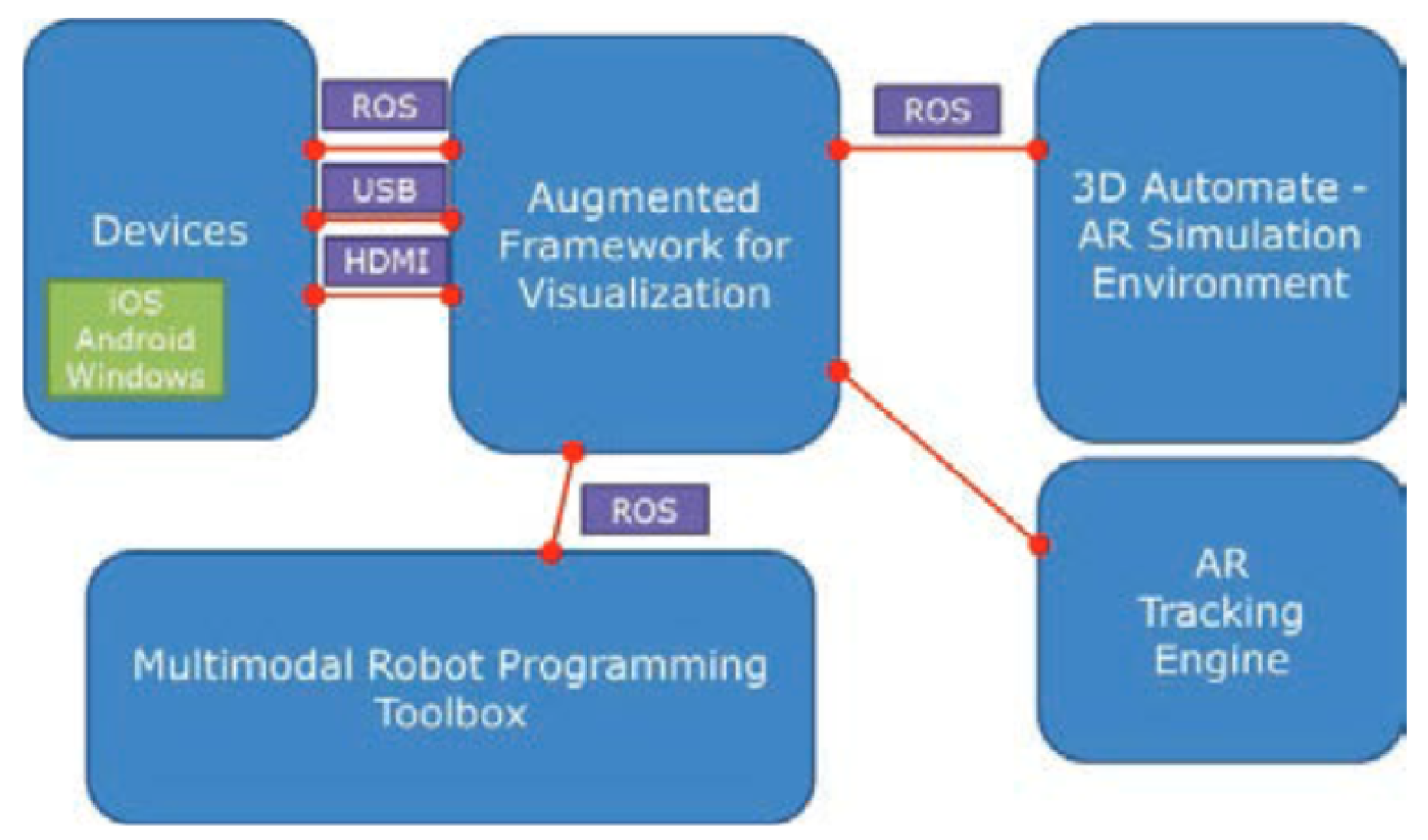

79] identified robot operation training as the most promising area of AR research in this field. When training with AR, human operators can safely practice complex tasks. Andersson et al. [

64] developed a system that facilitates AR-based training, enabling this safe and effective learning environment.The system is divided into four main parts, interconnected via the Robot Operating System (ROS) to ensure flexibility and extensibility. The multimodal robot programming toolbox allows for various programming tools to interact with a data model through parameter requests, enabling the user to select desired tools during runtime, such as PbD or free drive mode, to define robot poses or trajectories. A this is presented visually in

Figure 10. In contrast, Matsas et al. [

80] present a similar training system that utilizes VR and is entirely virtual, without incorporating real-world elements.

5.1.2. Employing AR for Communication and Instruction

In HRI, AR can be used both for communication and instruction. When giving instructions, AR is used for robots to give humans instructions proactively during work. When receiving instructions, AR can be used to view instruction plans during manufacturing and project instructions onto objects in assembly work. In an AR manufacturing case study by Saaski et al. [

53], researchers found that participants completed assembly tasks faster when instructions were displayed virtually using AR and an HMD rather than paper instructions. Participants identified the 3D animations of parts being assembled as the most useful AR feature. AR systems that provide instructions are not only helpful for workers but also for operators who oversee entire workplaces, including both human and robot workers. Michalos et al. [

65] created an AR system that supports operators by allowing them to send AR instructions and maintain an overview of the workplace to ensure safety and display additional information through AR visualization. The results of the study demonstrated that the system significantly enhanced working conditions and the integration of the operator. Additionally, AR systems can support robots in giving instructions to human workers. Liu et al. [

81] designed an AR system that virtually augments instructions intuitively to humans. These instructions are provided by robots through recognizing human motions and determining the next best course of action.

When using HMDs to display instructions and other important information, it is vital to ensure that virtual screen coordinates, eye level, and calibrations are properly adjusted to the wearer. Janin et al. [

82] provide detailed procedures for finding the correct calibration parameters for displaying visuals on HMDs. Additionally, it is important to note that although utilizing HMDs for AR instructions can lead to positive results, they may not yet be ready for real-world applications. For example, an evaluation study by Evans et al. [

52] concluded that while the Microsoft HoloLens has the potential to display AR instructions in an assembly line, it still requires improvements in accuracy before it can be applied in a real factory setting. In addition to just HMDs, Lunding et al. [

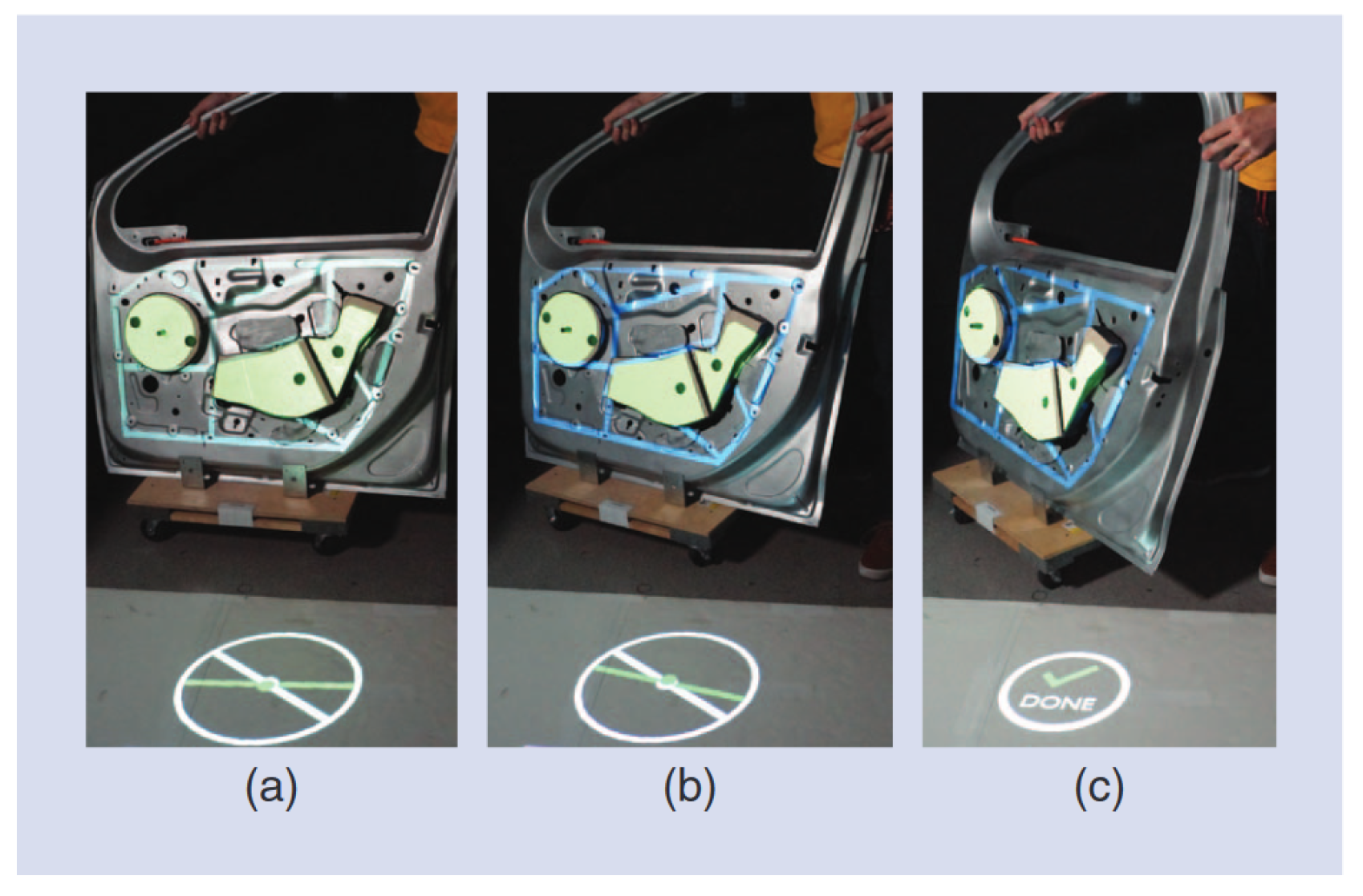

66] use a hybrid approach to provide instruction and enhance communication. Instead of relying solely on HMD-based AR, they incorporate a web interface to achieve a more accurate visual representation when displaying assembly instructions. Additionally, this hybrid method establishes two-way communication between the operator and the robot. Aside from HMDs, projection mapping can also be used to display instructions and informational cues from the robot to a human worker. Kalpangam et al. [

8] use vision-based object tracking to map out an environmental space, determine the location of various objects, and then utilize projection mapping to overlay important cues and instructions onto tracked objects.

Figure 11 illustrates the steps for aligning a car door projected over the physical door. A projected circle on the floor moves in real time with the car door until it reaches the correct alignment. Once aligned, the circle provides feedback to let the user know that the task has been successfully completed.

Communication in HRI is vital for making informed decisions and adapting to unknown situations. AR-based communication and location guidance between robots and humans is explored by Tabrez et al. [

67]. They use AR for both prescriptive and descriptive guidance techniques. For teleoperated robots, an AR interaction system can address the challenges of maintaining awareness due to a single ego-centric view. Green et al. [

22] explore how AR enables operators to view a teleoperated robot through a 3D visual representation of the work environment and anticipate the robot’s actions. This capability facilitates improved communication and collaboration between the operator and the teleoperated robot.

5.1.3. AR Promoting Interaction

Interaction in HRI often uses AR to promote fluency and productivity between humans and robots. This typically involves conveying the robots’ upcoming plans and actions, allowing users to interact with robots intuitively. De Franco et al. [

68] take a similar approach to promote natural interaction between robots and humans by creating an AR system that allowed humans to view robot status and future actions for collaborative tasks. Using an AR headset, the robot provided feedback to the human user, enabling them to understand the upcoming steps and be prepared. This improved their performance and was deemed helpful by 10 participants [

68]. Andronas et al. [

69] also promote natural interactions using a Human-System and System-Human interaction framework. This framework allows robots and humans to communicate important information back and forth while completing their tasks. In general, the system increased operator trust and awareness and reduced human errors. Similar interaction systems can also be operated through smartwatches. Gkournelos et al. [

70] promote HRI interactions through a hybrid approach involving smartwatches. This method significantly aided the operator in integrating into an assembly line. Another approach to human-robot interaction using AR is by allowing robots to manipulate virtual elements in a shared augmented reality workspace. In this setup, robots do not assist in the physical world but rather work proactively with humans by offering cues in the virtual augmented world. Qiu et al. [

71] developed an experiment to test this set-up and found that it worked effectively and could be useful for the future of HRC. Outside of the virtual world and elements, the AR interaction can also be projected onto a work surface to provide information about the robot’s upcoming actions and intentions. Sonawami et al. [

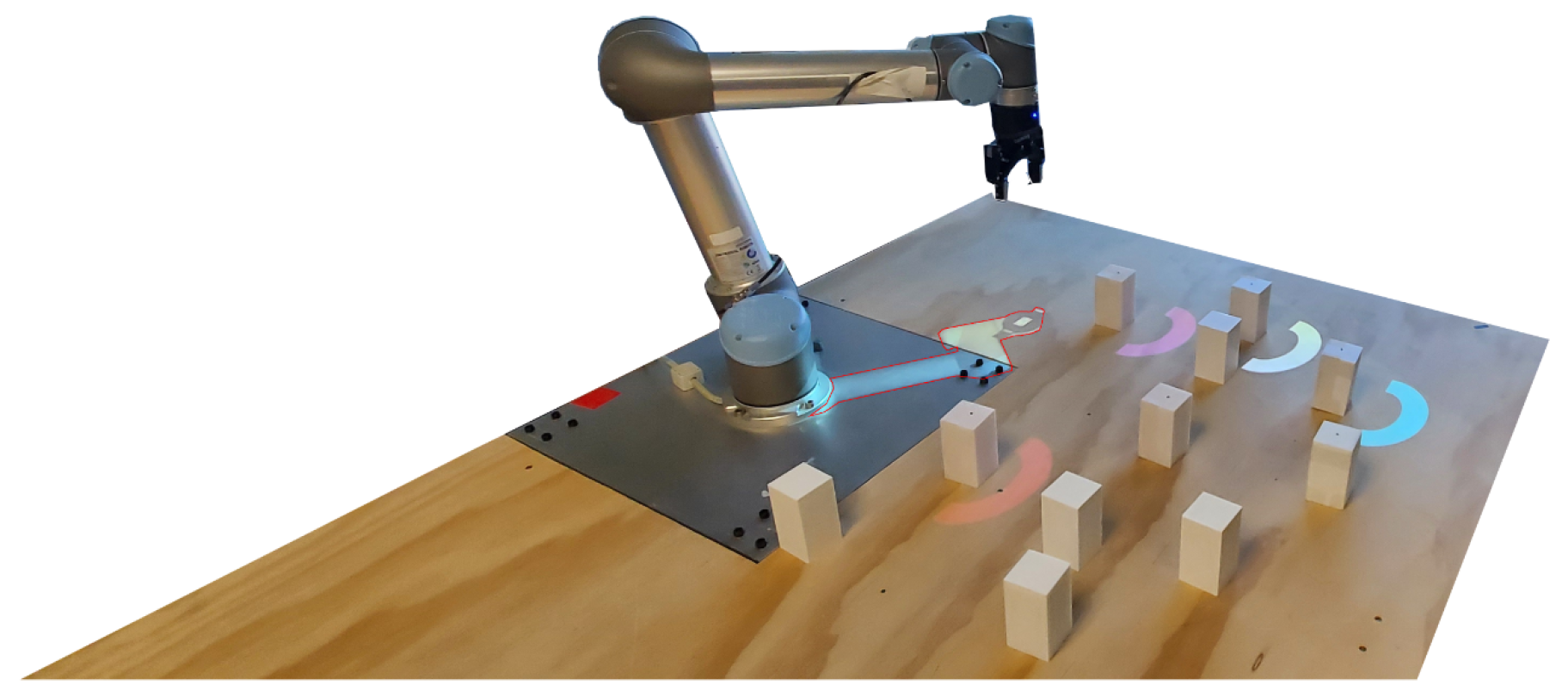

72] use projection mapping to display visual cues, highlighting the objects that human beings need to interact with. As seen in

Figure 12, the robot’s shadow is projected, highlighting its status. Additionally, the colored semi-circles show users which blocks are safe to pick up. The results showed that projection mapping improved safety and task efficiency.

5.2. Applying AR to Improve Trust and Safety

AR can enhance trust and safety in HRI through two main approaches: prevention and correction. Prevention is achieved through situational awareness, where humans are actively shown the next steps and whereabouts of the robot to avoid collisions. This approach helps both robots and humans understand each other’s locations, enabling safe interactions and reducing the likelihood of being taken by surprise if they are too close to each other. Correction is achieved through collision mitigation and avoidance. This includes maintenance, which refers to the upkeep of a robotic system or environment to ensure that it complies with safety protocols and functions appropriately. It also includes collision avoidance through the operator’s overall view and management of an active HRI environment, and the ability of robots to stop automatically if humans get too close. In this subsection, we examine research that demonstrates how AR systems can be integrated into HRI through situational awareness and collision mitigation to increase trust and safety for users in HRI environments.

5.2.1. Situational Awareness with AR

When it comes to AR, situational awareness is a widely researched topic in many different fields, including medical, military, and manufacturing [

9,

83]. In HRI, many researchers aim to promote safety by increasing user awareness of robots they are working with and their surroundings. These approaches may be similar to those discussed in the interaction section, but are grouped here because they are specifically designed to enhance situational awareness for safety and trust, rather than interaction.

The first approach to improving situational awareness involves visualizing the intended path or motion of the robot. Both Tsamis et al. [

55] and Palmarini et al. [

54] use AR to show users the robot’s next actions. Their goal is to help users become more context-aware and trust the robots more. Although Tsamis et al. [

55] use an HMD and Palmarini et al. [

54] use a handheld tablet, both experiments resulted in positive sentiments and increased perceived trust from the participants.

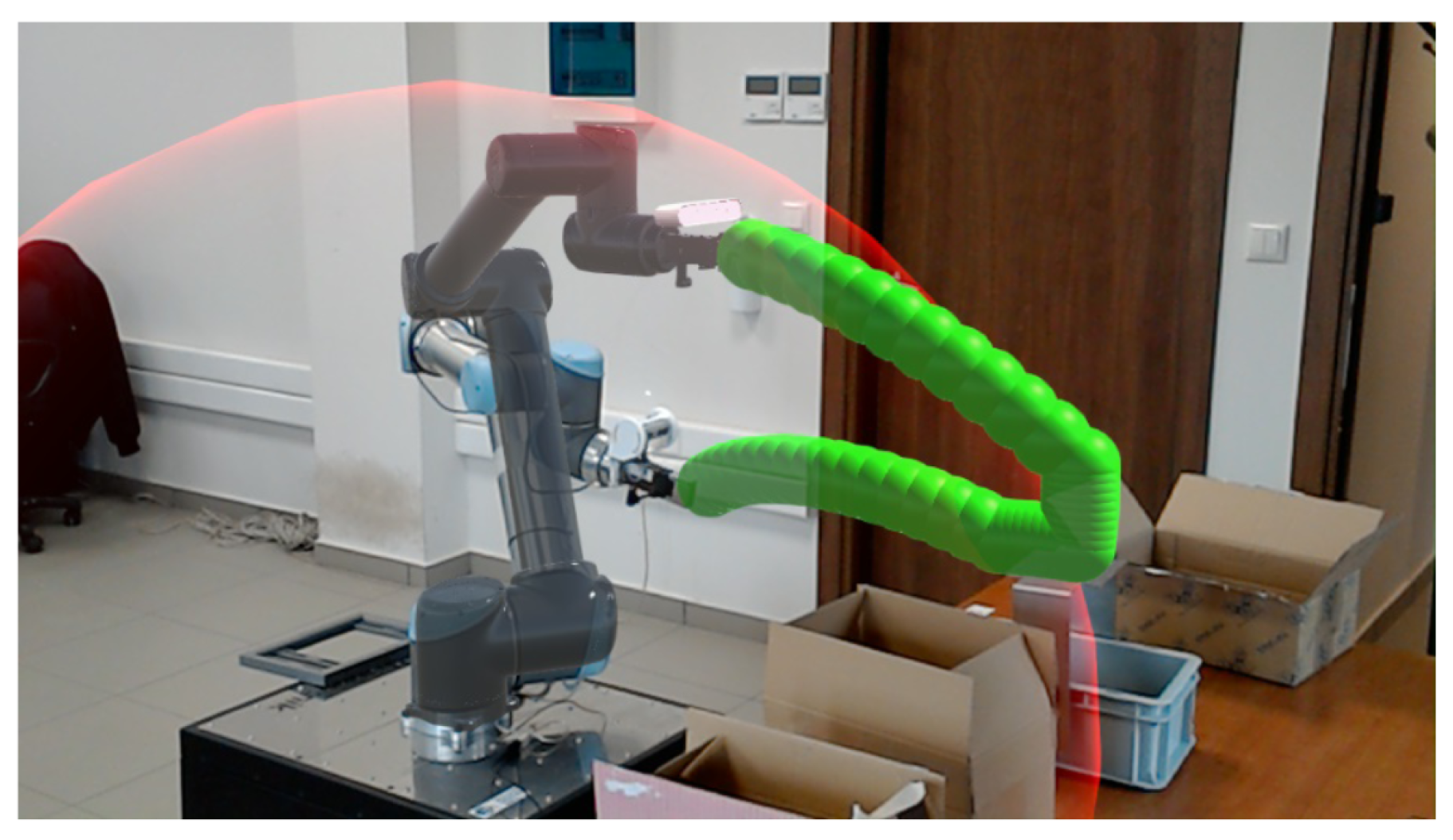

Figure 13 shows the AR seen through an HMD. The green tube indicates the robot’s next path of motion, while the red bubble encloses the danger zone, where humans should be cautious of collisions.

The second approach, similar to the red bubble, uses AR to visualize safety zones. This allows users to see the area in which a robot operates and know when they are too close. Vogel et al. [

56] display safety zones using multiple projectors around the room, while Choi et al. [

57] use an HMD and calculate the 3D locations of both the human and the robot using digital twins. Neither of these papers evaluated their system designs; instead, they focused on design architecture and making the system functional. Hietanen et al. [

73] use both an HMD and projection mapping to display safety zones. Both types of visualizations were tested in a user study. The AR in the HMD and the projection mapping both reduced task completion time and robot idle time. However, the projector showed more noticeable improvements in safety.

Lunding et al. [

74] combine both approaches by displaying robot safety zones and upcoming actions using an HMD. Their system, called RoboVisAR, also allows users to easily customize the visualizations shown in AR. The papers discussed each employed one of three different methods to present visualizations of situational awareness. While each method was found to enhance situational awareness, some approaches have proven more effective than others [

12]. Generally, HMDs are found to improve efficiency. Furthermore, when compared to handheld displays and radio links, HMDs have been shown to offer better performance and reduce workload [

6].

5.2.2. Using AR for Collision Mitigation and Avoidance

AR can make HRI conditions safer, but there are also mental and physical risks associated with the use of AR in environments with potentially dangerous robots. Bahaei and Gallina [

84] created a framework called FRAAR (Framework for Risk Assessment in AR-equipped socio-technical systems) that can be used to assess the risk of both social and technical issues that can arise when AR is introduced into any robot manufacturing system. Safe practices can also mean maintenance and upkeep of a running system. Eschen et al. [

75] utilize AR for inspection and maintenance in the aviation industry. In a broader sense, Papanastiou et al. [

76] and Makris et al. [

77] both developed AR systems designed for operators to maintain safe and integrated environments in human-robot manufacturing settings. These safety measures are usually not precise; instead, they activate early to avoid human-robot collisions. This means that robots are frequently interrupted and forced to stop moving, which can hurt the flow of a workspace. To minimize interruptions and maximize workflow, Matsas et al. [

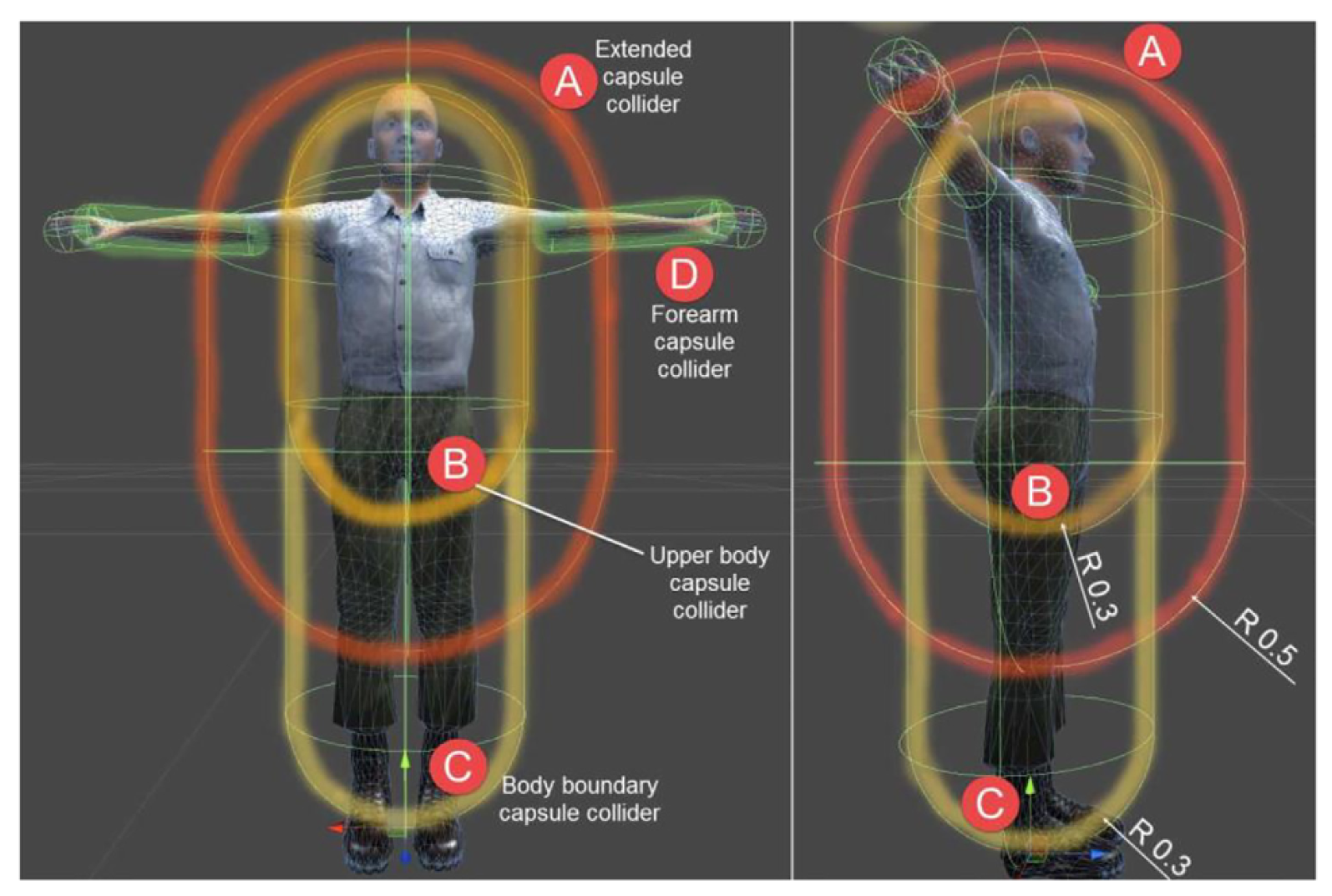

78] use proactive and adaptive techniques in a virtual immersive environment to test performance metrics of a robot’s safety enhancements. This approach aims to obtain precise measurements of how close a human can be before a robot is forced to stop. A virtual human body model is created and analyzed to determine the appropriate distances from the body that should trigger the robot’s motion-stopping feature.

Figure 14 shows the diagram of this body model.

The use of digital twins in human-robot collaboration has shown significant promise in improving safety and mitigating collisions. According to Feddoul et al. [

85], digital twins facilitate real-time monitoring, simulation, and prediction of potential safety hazards, providing a comprehensive approach to preemptively address safety issues. Similarly, Maruyama et al. [

86] propose a twin-based digital safety control framework that focuses on accurate distance calculations and real-time safety monitoring, ultimately preventing collisions by maintaining a safe distance between humans and robots. Lee et al. [

87] detail the application of digital twins in collaborative robotic systems through the implementation of safety controllers and validation techniques, focusing on their role in offline testing, real-time monitoring, and predictive analysis. Their paper also provides an overview of the simulation environments used for different types of collaborative applications. As we examine the impact of augmented visualizations on improving HRI, it becomes clear that good design principles are critical to maximizing their effectiveness, guiding the development of user-friendly AR systems.

6. Good Design Principles for AR

Although there is extensive research and development on AR in HRI, there is a lack of focus on visual information, with more emphasis placed on information architecture [

9]. The way information is displayed is crucial because it directly affects how users comprehend and act on it. To properly design how information is presented in AR, we must look to Human-Computer Interaction (HCI) design principles. HCI is a multidisciplinary study focused on understanding how humans use and interact with technology, and plays a crucial role in design and research [

88]. This field intersects with HRI when it comes to understanding how humans will interact with visual information received from or regarding robots. HCI differs from HRI particularly in terms of visual design, specifically looking at the User Interface (UI) and User Experience (UX) of AR features implemented in human-robot collaboration. From Hamidli and others [

10,

89], we can summarize the top principles for both UI and UX design. UI design refers to the visual elements users see and interact with, which include both static elements, such as icons, colors, typography, and layout, and interactive elements, such as buttons, scroll bars, and animations. The goal of UI design is to create a product that is intuitive, easy to learn, and easy to navigate. To create user-friendly interfaces, the top four principles to follow are:

Simplicity: Designs should be simple, clutter-free, and easy to navigate. Avoid unnecessary elements and keep the interface as straightforward as possible.

Consistency: Maintain internal and external consistency. The design elements should be consistent throughout the system and align with universal design standards. For example, a floppy disk icon universally signifies "save."

Feedback: Provide feedback through visual, auditory, or haptic means to indicate that an action has been successfully completed. This helps users understand the outcome of their interactions.

Visibility: Ensure that the cues are easy to see and understand. Visibility also refers to making the current state of the system visible to users. This helps users stay informed about what is happening within the system.

UX (User Experience) Design focuses on the flow of an app and how users interact with the system, rather than its appearance. It involves understanding users’ behaviors, pain points, and motivations. Good UX design improves engagement and usability. The top four principles for good UX design include:

Usability: Ensure the user flow of the system is easy to navigate, learn, and use. The design should be intuitive and provide a seamless experience for users.

Clarity: The design should be straightforward and provide users with only essential information, avoiding unnecessary elements. Clarity ensures that users understand the interface and its functions easily.

Efficiency: Design the system for performance optimization, enabling users to accomplish their goals quickly. Efficient design minimizes the time and effort required to complete tasks.

Accessibility: Ensure that the system is accessible to all users, regardless of their abilities. This includes considering different devices, screen sizes, and assistive technologies to create an inclusive user experience.

Lastly, a good practice in design is always to consider the user. This is known as User-Centered Design (UCD). It refers to designing with the user in mind, catering to their needs and considering their technology level and skill set. This approach ensures that the interface is accessible and user-friendly for the intended audience. UCD involves continuous user participation and feedback throughout the design process, ensuring that the final product effectively meets user requirements and provides a positive user experience [

89,

90]. When it comes to evaluating the interface and user experience of systems, including AR systems, two common subjective measures are the System Usability Scale (SUS) and the User Experience Questionnaire (UEQ) [

91,

92]. Both measures are well-established with benchmarks and prior work involving user studies. The SUS asks participants to rate the usability of an interface using a 5-point Likert scale. It includes 10 questions, each focusing on different aspects of UI usability [

92]. The UEQ is a 26-question survey that uses a 7-point Likert scale. Participants rate the user experience of a system based on six attributes, including dependability and attractiveness [

91].
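As a worked illustration of how SUS responses become a single number, the sketch below applies the standard SUS scoring rule: each odd-numbered item contributes its rating minus 1, each even-numbered item contributes 5 minus its rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` and the sample ratings are hypothetical and are not taken from any study surveyed here.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant's ten ratings (1-5)."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded.
            total += rating - 1
        else:
            # Even-numbered items are negatively worded, so they are reversed.
            total += 5 - rating
    return total * 2.5


# Hypothetical ratings from a single participant.
example_ratings = [4, 2, 5, 1, 4, 2, 5, 1, 4, 2]
print(sus_score(example_ratings))
```

Scores from several participants are typically averaged before being compared against published benchmarks.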

Current Design Principles Used for AR in HRI

Within HRI research, few papers acknowledge or explore HCI principles such as UI/UX design. Only four papers considered UX and UI design strategies when developing the AR systems used to improve HRI. Fang et al. [

93] used interface design strategies when creating an AR system to aid in seamless interaction between humans and robots. Their paper includes a section labeled "Visualization," which discusses each visual element in the AR and the purpose of its design. The user experience was evaluated based on how well the users understood the visual elements, their cues, and the information they were displaying. Alt et al. [

94] directly examine principles for UI design, such as adaptability and workflow, when creating a user interface to explain an AI-based robot program. User experience was assessed based on users’ perceptions of the interface’s usefulness, assistance, readability, and understanding. Similarly, when developing an AR head-mounted display for robot team collaboration, Chan et al. [

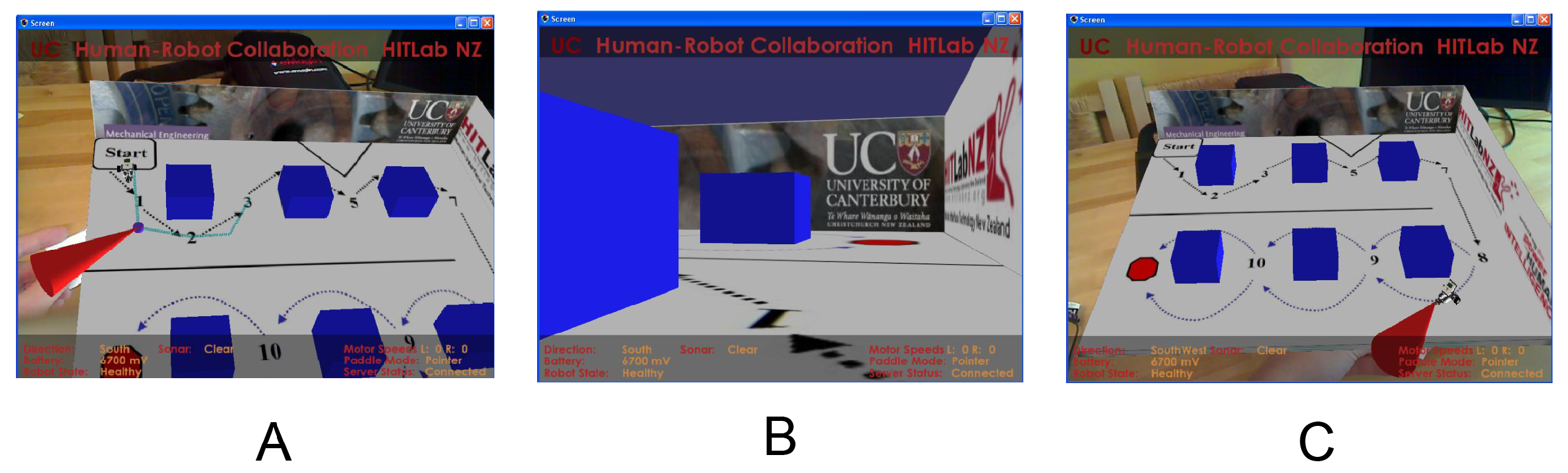

7] designed the system with user workflow in mind. The system’s user experience was evaluated based on task load and the increased efficiency of collaboration. Green et al. [

22] is the only one of these papers to offer multiple design variations. They present three different UI designs for their AR system to increase situational awareness, shown in

Figure 15. The user experience of these various UI designs was evaluated based on users’ comfort and their ability to accurately read the robot’s location at any given time. The papers above all evaluate user experience based on various qualitative and quantitative measurements that correspond to what they are designing to improve. For example, Chan et al. [

7] use both the SUS and UEQ, mentioned above, to evaluate the usability and user experience of the system design. There are also more universal methodologies for evaluating UX. Lindblom and Alenjung [

95] created ANEMONE, an approach to evaluating UX in HRI. They conducted extensive research on HRI evaluation principles and UX evaluation principles, deriving from HCI, to develop this combined methodology that can be implemented into any AR user study in the field of HRI. Aside from incorporating HCI principles and evaluation methods, HRI research also includes a few papers regarding design guidelines, but they tend to be limited or highly specific. Jeffri and Rambli [

96] offer guidelines for interface design in AR, but they focus specifically on AR used in manual assemblies. Wewerka et al.’s [

97] design guidelines are tailored for robotic process automation, and Zhao et al.’s [

98] work is centered on the fabrication process. While these guidelines and tools can potentially be applied to design UI for AR in various HRI fields, they have only been tested in their respective domains. Consequently, their effectiveness in other fields remains uncertain. A better approach is to use HCI design guidelines for AR. These broader guidelines can be applied to any use of AR, including HRI. Since they are not specific to a particular industry, they offer flexibility and can be adapted to suit the specific needs and contexts of various designs, leading to more optimal outcomes [

99].

Additionally, in HCI there is a focus on designing for inclusion, accessibility and sustainability. These principles can be considered when designing AR visual elements for HRI to ensure that all workers can properly use and interact with the technology [

100]. Furthermore, designing for diversity, equality, and inclusion can help ensure that unintended bias does not occur within the AR system [

101]. This paper does not go into detail on the design guidelines needed for accessibility, sustainability, and inclusion, but numerous resources can be referenced, including design guidelines and previous work [

99,

102,

103,

104]. Building upon these design principles, we propose a comprehensive framework for developing AR applications in HRI, ensuring that these systems are not only effective but also adaptable to the needs of human operators.

7. Framework for Developing Augmented Reality Applications in Human-Robot Collaboration

The framework for designing augmented reality (AR) systems in human-robot interaction (HRI) is structured to address the critical aspects of collaboration, trust, and safety. This framework is built upon the foundational principles of Human-Computer Interaction (HCI) and is supported by the research findings outlined in the AR in HRI survey above. The framework, visualized in

Figure 16, is divided into three key components: Collaboration, Implementing HCI Principles for Good AR Design, and Evaluation and Continuous Improvement.

Collaboration focuses on enhancing the synergy between humans and robots through effective planning, training, communication, and interaction. It emphasizes the need for AR systems to facilitate seamless communication and instruction, thereby improving task efficiency and reducing the likelihood of errors.

Trust and safety are paramount in HRI, and the framework highlights situational awareness and control as essential elements. By leveraging AR technologies, the framework aims to enhance users’ situational awareness, enabling them to perceive, comprehend, and project the state of their environment accurately. The integration of control mechanisms, such as Speed and Separation Monitoring (SSM) algorithms, ensures that robots operate safely around human operators, dynamically adjusting their actions to maintain safe distances and prevent collisions.

Implementing HCI Principles for Good AR Design involves creating user interfaces (UI) and user experiences (UX) that are intuitive, user-centered, and designed to meet the specific needs of human operators. This includes utilizing AR to provide real-time feedback, improve usability, and enhance the overall user experience.

Evaluation and Continuous Improvement underscores the importance of using both quantitative and qualitative measures to assess the effectiveness of AR systems. By continuously collecting and analyzing data on system performance and user feedback, the framework advocates for an iterative design process that ensures that AR systems remain effective, user-friendly, and safe.

The framework outlined in this paper serves as a foundational guide for developing augmented reality (AR) applications in human-robot interaction (HRI). Its practical relevance is demonstrated through specific experiments and the design of the elements that leverage different components of the framework. The following subsections describe implementations that focus on Key Components of AR in HRI and Good Design principles of AR. Finally, they list some of the metrics needed to evaluate and continuously improve AR applications in the field of HRI.

7.1. Improving Collaboration, Safety and Trust Using AR in HRI

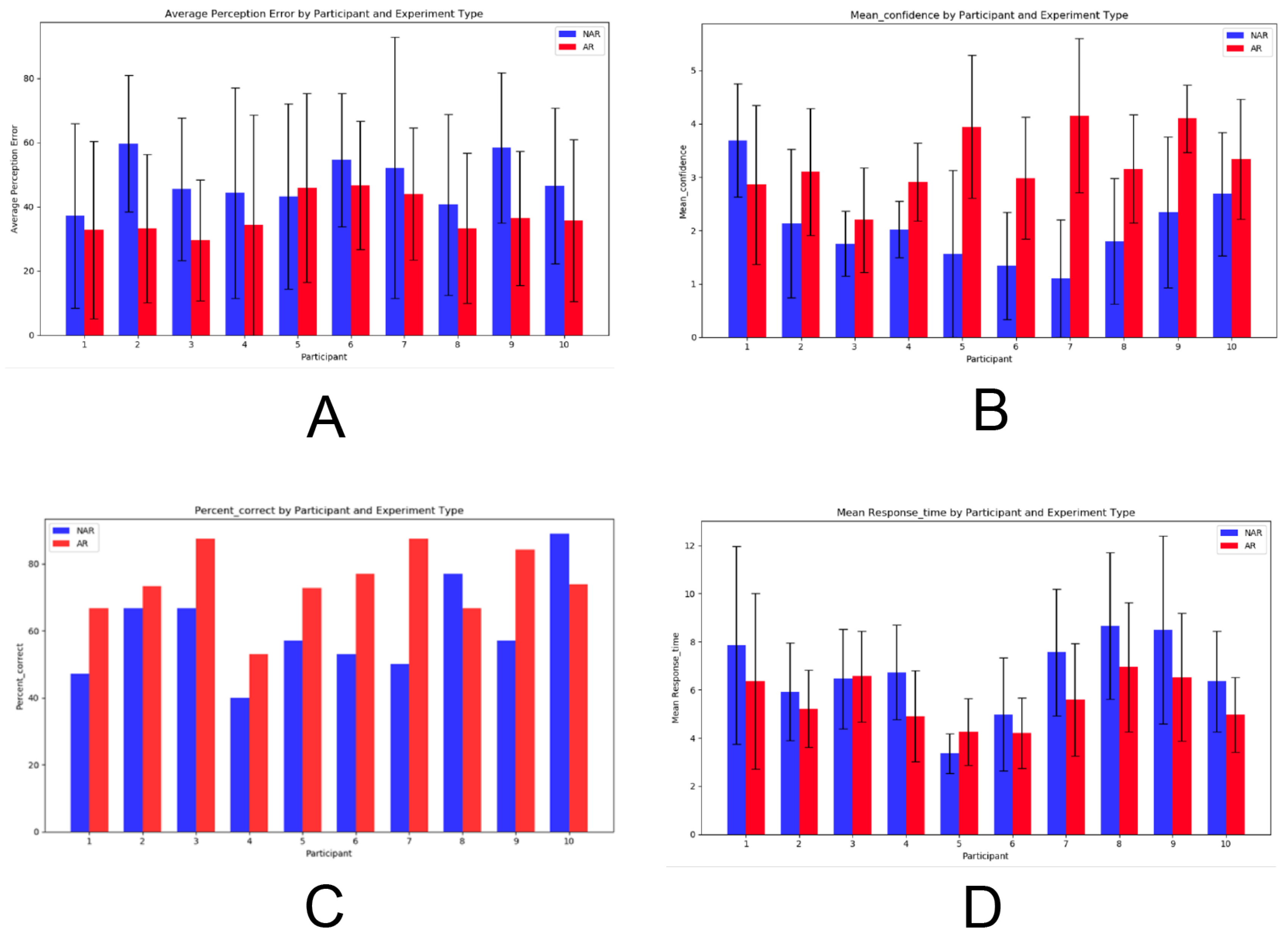

Ensuring trust and safety in human-robot interaction (HRI) is paramount, particularly in dynamic industrial environments. The presented framework for designing augmented reality (AR) systems in HRI underscores the importance of these components, as validated by recent experimental studies. The first study utilizes AR to enhance situational awareness, employing the Situational Awareness Global Assessment Technique (SAGAT) to measure improvements [

105]. Wearing an HMD, the user can see a green arrow that moves to point at the end effector of the robot. When the user is turned away from the robot, the arrow indicates where the robot is located in relation to the user. The green arrow is circled in

Figure 17 below. Results indicate a significant reduction in average perception error and an increase in mean operator confidence, reinforcing the framework’s emphasis on situational awareness as a cornerstone of safety. The study also reported an increase in the percentage of correct responses and a trend towards faster response times, highlighting the effectiveness of AR displays in improving situational awareness.

Figure 18 shows the results of the experiment [

105].

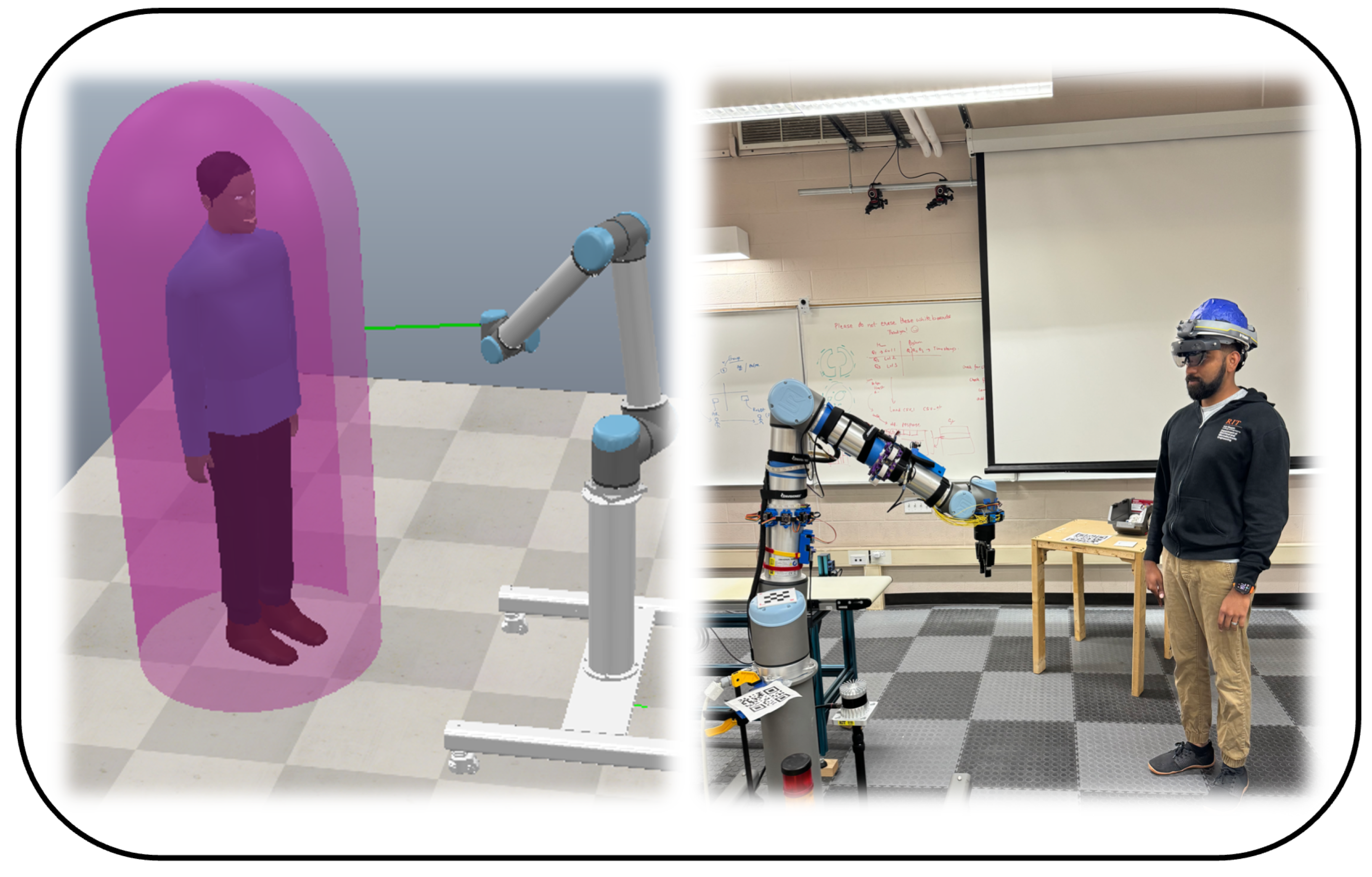

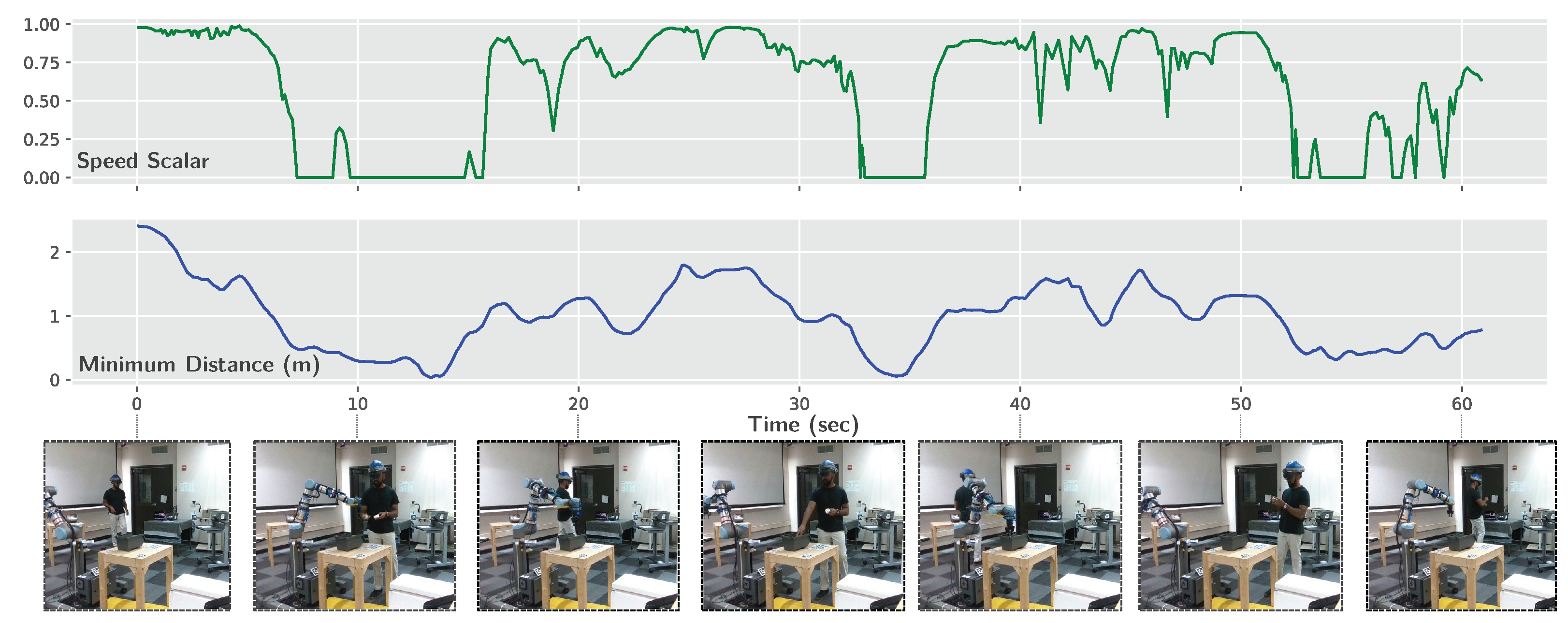

The second study leverages mixed reality (MR) and digital twin technology to implement a Speed and Separation Monitoring (SSM) algorithm, which dynamically adjusts robot speed to prevent collisions [

106]. This real-time control mechanism aligns with the framework’s focus on maintaining safety through effective control strategies. The study’s results, in

Table 3, showed a maximum tracking error of 0.0743 meters and a mean error of 0.0315 meters, demonstrating high positional accuracy. Additionally, the SSM algorithm effectively adjusted the robot’s speed in response to changes in separation distance, ensuring a safe distance between humans and robots. An example of this can be seen in

Figure 20.
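To make the idea of adjusting robot speed to separation distance concrete, the following is a minimal sketch of one possible speed-scaling rule. It is not the algorithm used in the study: the function name `ssm_speed_scale`, the linear ramp, and the two threshold values are assumptions chosen only for illustration.

```python
def ssm_speed_scale(separation_m, stop_dist_m=0.5, full_speed_dist_m=1.5):
    """Return a speed multiplier in [0, 1] for a given human-robot separation.

    Assumed behavior (illustrative only): the robot stops at or below
    stop_dist_m, runs at full speed at or beyond full_speed_dist_m, and
    ramps linearly in between.
    """
    if full_speed_dist_m <= stop_dist_m:
        raise ValueError("full_speed_dist_m must exceed stop_dist_m")
    if separation_m <= stop_dist_m:
        return 0.0
    if separation_m >= full_speed_dist_m:
        return 1.0
    return (separation_m - stop_dist_m) / (full_speed_dist_m - stop_dist_m)


# Hypothetical separation distances in meters.
for distance in (0.3, 1.0, 2.0):
    print(distance, ssm_speed_scale(distance))
```

A gradual ramp of this kind slows the robot before a full stop becomes necessary, which is one way to trade off safety against workflow interruptions.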

Both studies utilize quantitative measures such as tracking error, perception accuracy, and response times, alongside qualitative feedback on user confidence and system usability, providing robust empirical support for the framework. By prioritizing trust and safety, the framework ensures that AR systems are designed to enhance operational security and build user trust, making it an invaluable tool for advancing HRI in industrial applications. These findings show the practical relevance of the framework, demonstrating its ability to guide the development of AR systems that significantly improve safety and trust in HRI environments.

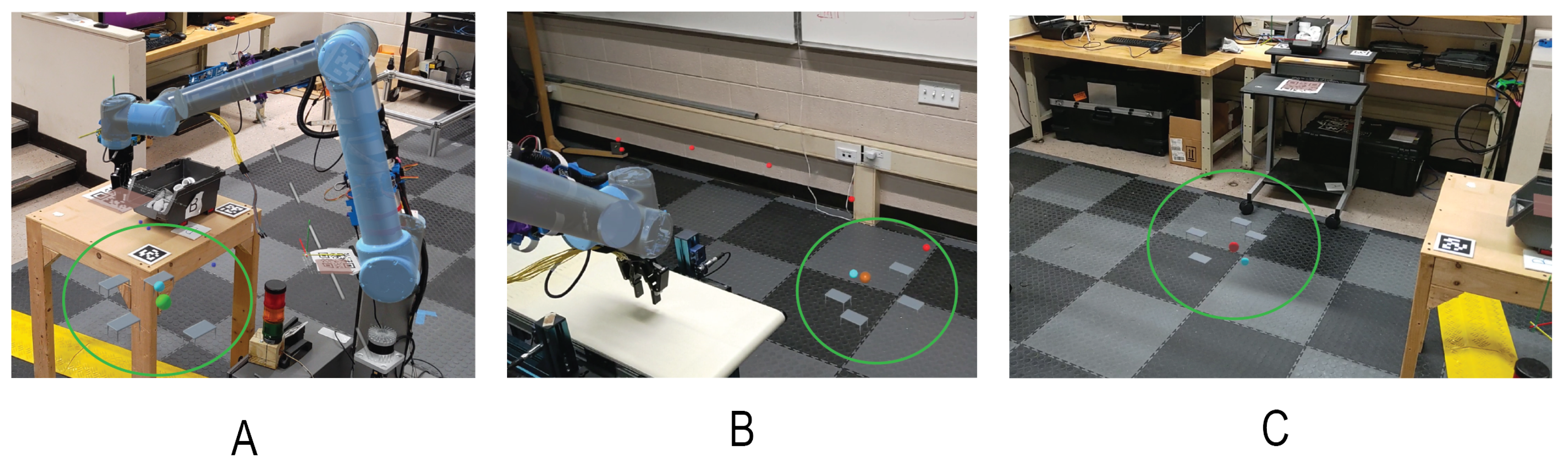

7.2. Collision Warnings Using Virtual Elements

When utilizing the augmented reality (AR) system, the wearer is immersed in a sophisticated, real-time environment that significantly enhances their situational awareness and safety during human-robot interactions (HRI). The wearer can expect to see a series of dynamic visual indicators, represented by spheres, which trace the path of the robot end effector’s movements. These spheres provide an intuitive visual cue, changing color based on the proximity of the wearer to the robot’s path. When the human operator is within a safe distance, the spheres remain blue (

Figure 21 A), indicating no immediate threat. However, if the wearer approaches a critical proximity threshold, the spheres turn red (

Figure 21 B), signaling a potential collision risk. This visual feedback allows the wearer to anticipate the robot’s trajectory and adjust their movements accordingly, thereby preventing accidents. The system continuously updates these indicators, ensuring that the wearer has up-to-date information about the robot’s position and path.
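The color rule described above can be sketched as a simple distance check. This is an illustrative assumption rather than the system's actual implementation: the function name `path_sphere_color`, the 1.0 m threshold, and the choice to color all spheres from the wearer's distance to the nearest path sphere are hypothetical.

```python
import math


def path_sphere_color(wearer_pos, path_points, critical_dist_m=1.0):
    """Return "red" if the wearer is within the critical distance of the path.

    wearer_pos and each entry of path_points are (x, y, z) positions in
    meters. The threshold value is an assumption for illustration.
    """
    if not path_points:
        # No path is being displayed, so there is nothing to warn about.
        return "blue"
    nearest = min(math.dist(wearer_pos, point) for point in path_points)
    return "red" if nearest < critical_dist_m else "blue"


# Hypothetical end-effector path sampled at three points.
path = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.0), (1.0, 0.0, 1.0)]
print(path_sphere_color((3.0, 0.0, 1.0), path))
print(path_sphere_color((1.5, 0.0, 1.0), path))
```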

Implementing effective HCI practices should begin with user research and a thorough understanding of how the user will interact with the system. The minimap design started with understanding user preferences and the optimal system design. Prior work by Green et al. suggested that an overview of the robot’s path was more successful in supporting situational awareness than an immersive view. In the current lab, users have an immersive view. To provide them with an overview or bird’s-eye view, a minimap was implemented to show the user, the robot, and the tables at all times. The mini-map overlay provides real-time updates on the positions of key elements within the workspace, including the base and tool of the robot, the main camera, and additional objects of interest. These elements are represented by distinct indicator objects on the mini-map, which are dynamically updated to reflect their relative positions, scaled down to a manageable size for the wearer’s view.
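The scaling of relative positions onto the mini-map can be illustrated with a short sketch. The function name `to_minimap`, the choice of the robot base as the origin, and the scale factor are assumptions made for this example and do not describe the actual implementation.

```python
def to_minimap(world_pos, base_pos, scale=0.05):
    """Map a world position to mini-map coordinates.

    The position is first expressed relative to the robot base and then
    scaled down uniformly. Both positions are (x, y, z) tuples in meters;
    the default scale factor is a hypothetical value.
    """
    return tuple((w - b) * scale for w, b in zip(world_pos, base_pos))


# Hypothetical positions: a tool 1 m and 2 m away from the base along x and z.
base = (1.0, 0.0, 2.0)
tool = (2.0, 0.0, 4.0)
print(to_minimap(tool, base, scale=0.1))
```

Recomputing this mapping every frame for each tracked element is enough to keep the indicators in step with the workspace.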

The mini-map serves as a crucial tool for spatial orientation, allowing the wearer to easily track the movements of both the robot and surrounding objects. Each indicator is color-coded for quick visual recognition: the base indicator is semi-transparent white, the tool indicator is cyan, the human indicator is green (

Figure 22 A) (changing to orange and then red based on proximity to the human operator) (

Figure 22 B & C), and other object indicators are grey. This color-coding not only helps in distinguishing between different elements but also provides immediate visual cues about the current operational status and potential hazards. The locations of the objects are linked to a fiducial and will be updated as the HoloLens 2 is able to scan them.

As the wearer navigates the workspace, the mini-map continuously updates, showing the real-time positions of the robot and other objects relative to the base position. This dynamic update mechanism ensures that the wearer always has the latest information about the robot’s location, enhancing their ability to predict movements and avoid collisions. Furthermore, the camera indicator changes color based on the distance between the robot and the human operator, turning red when within a critical threshold, thus providing an immediate warning of potential collision risks.

For the visualization of the design, UI principles such as simplicity, consistency, and visibility were all considered. The design of the minimap is kept simple, including only the essential elements: the robot, the human, and the tables. Additionally, the human and robot are both represented as spheres to avoid cluttering the minimap. The tables take the shape of actual tables rather than cubes or other 3D shapes to remain consistent with the real world. This way, users don’t have to assume or remember the real-world item corresponding to the elements in the AR.

Visibility is maintained because the map moves with the person, so they always see the map in the correct orientation. This means users don’t have to calculate their direction relative to the map’s direction to know where the robot is, enhancing ease of use and safety.

7.3. Evaluation and Continuous Improvement

The framework’s emphasis on continuous evaluation and improvement is pivotal to ensuring that AR systems in HRI remain effective, user-friendly, and safe. This section elaborates on the methods and strategies for evaluating AR systems and outlines a process for continuous improvement.

7.3.1. Safety Metrics

Safety metrics are critical for evaluating risk and potential hazards in Human-Robot Interaction (HRI) environments. They focus on keeping interactions between humans and robots free from accidents and injuries by assessing how well the system maintains safe distances, prevents collisions, and handles emergency situations. Monitoring these metrics makes it possible to identify and mitigate risks, which improves overall safety and operators’ trust in robotic systems. The key safety and efficiency metrics for robot safety using Speed and Separation Monitoring (SSM) algorithms are defined in [

107,

108].

Collision Count: The number of collisions or near-misses between robots and humans. A lower count indicates that the system effectively prevents accidents and protects human operators. Monitoring this metric quantifies the direct impact of the AR-HRI system on safety, confirms that the implemented safety features function as intended, and identifies areas needing improvement.

Safe Distance Maintenance: The percentage of time the robot maintains a predefined safe distance from the human operator. This metric shows how consistently the system keeps humans out of harm’s way. Maintaining a safe distance is crucial to prevent injuries and build trust between human operators and robots. Continuous monitoring of this metric ensures that the system adapts to dynamic environments while prioritizing human safety.

Emergency Stops: The frequency of emergency stops triggered by the system to prevent collisions. While emergency stops are necessary to prevent collisions, a high frequency may indicate overly conservative settings, which can disrupt workflow. Balancing safety and efficiency is key, and this metric helps in tuning the system. Tracking emergency stops helps in refining the sensitivity and response parameters of the system for optimal performance.
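As a rough illustration, the three safety metrics above could be computed from a time-stamped interaction log as follows. The log format (`Sample`), the function names, and the contact and safe-distance thresholds are assumptions made for this sketch and are not taken from [107, 108].

```python
from dataclasses import dataclass


@dataclass
class Sample:
    t: float           # timestamp in seconds
    separation: float  # human-robot distance in metres
    estop: bool        # True while an emergency stop is active


def count_onsets(flags):
    """Count False-to-True transitions so one long event is counted once."""
    onsets = 0
    previous = False
    for flag in flags:
        if flag and not previous:
            onsets += 1
        previous = flag
    return onsets


def safety_metrics(samples, safe_distance, contact_distance):
    """Summarize a log with at least two samples spanning a non-zero duration."""
    duration = samples[-1].t - samples[0].t
    # Time-weighted: each interval is classified by the sample that starts it.
    safe_time = sum(
        nxt.t - cur.t
        for cur, nxt in zip(samples, samples[1:])
        if cur.separation >= safe_distance
    )
    estops = count_onsets(s.estop for s in samples)
    return {
        "collision_count": count_onsets(
            s.separation <= contact_distance for s in samples
        ),
        "safe_distance_pct": 100.0 * safe_time / duration,
        "estops_per_minute": 60.0 * estops / duration,
    }
```

Counting onsets rather than individual samples avoids inflating the collision and emergency-stop figures when a single event spans several consecutive samples.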

7.3.2. Efficiency Metrics

Efficiency metrics measure the productivity and effectiveness of the HRI system. They focus on how well tasks are completed. These metrics assess factors like task completion time, idle time, and path optimization. By evaluating efficiency, it is possible to determine if the system is not only safe but also effective in improving workflow and productivity in an HRI environment.

Task Completion Time: The time taken to complete a task before and after implementing the AR-HRI system. Reducing task completion time while maintaining safety shows that the system is efficient [

109]. This metric is vital for demonstrating that the system not only keeps humans safe but also enhances productivity. It provides insights into how well the AR integration and SSM are streamlining the workflow.

Idle Time: The amount of time the robot is idle due to safety interventions. Minimizing idle time indicates that the system is effective without unnecessarily halting operations [

109]. This balance is critical for maintaining a smooth and efficient workflow. Tracking idle time shows how well the SSM algorithm distinguishes genuine hazards from false positives, and therefore how often the robot is halted unnecessarily.

Path Optimization: Changes in the robot’s path efficiency, such as distance traveled and smoothness of movements. Efficient path planning ensures that the robot performs its tasks optimally while avoiding humans [

110]. Measuring path optimization helps in understanding the overall efficiency of the system. This metric is important for identifying any unnecessary detours or delays caused by the AR-HRI system and for optimizing the robot’s navigation algorithms.
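The efficiency metrics above reduce to simple arithmetic once task timings, safety-pause intervals, and robot waypoints are logged. The sketch below assumes such logs exist; all function names are hypothetical, and the straight-line ratio is only one of several possible path-efficiency measures.

```python
import math


def percent_change(before, after):
    """Relative change in percent; negative values mean a reduction."""
    return 100.0 * (after - before) / before


def idle_time(pauses):
    """Total idle seconds from (start, end) pauses caused by safety interventions."""
    return sum(end - start for start, end in pauses)


def path_length(waypoints):
    """Distance travelled along a sequence of (x, y) waypoints, in metres."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def path_efficiency(waypoints):
    """Straight-line distance divided by travelled distance (1.0 is a direct path)."""
    travelled = path_length(waypoints)
    if travelled == 0:
        raise ValueError("path has zero length")
    return math.dist(waypoints[0], waypoints[-1]) / travelled
```

For example, a task that took 120 s before the AR-HRI system and 90 s afterwards gives `percent_change(120, 90)` of -25.0, a 25% reduction in completion time.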

7.3.3. User Experience Metrics

User experience metrics evaluate the subjective perceptions and satisfaction of human operators interacting with robotic systems. These metrics focus on how safe, comfortable, and intuitive the interactions are for users. They often include assessments of perceived safety, workload, and emotional responses. Understanding user experience is crucial for ensuring that the system is user-friendly and meets the needs and expectations of its operators, leading to higher acceptance and better overall performance.

Perceived Safety: User feedback on how safe they feel working alongside the robot. Perceived safety is crucial for user acceptance of robotic systems [

111]. This qualitative metric helps to understand the psychological impact of the system on human operators. Gathering user feedback through surveys and interviews helps in assessing the effectiveness of the AR visualizations and the SSM in making users feel secure.

NASA TLX Scores: Scores from the NASA Task Load Index, assessing perceived workload across six dimensions: mental demand, physical demand, temporal demand, performance, effort, and frustration [

40]. Assessing workload using NASA TLX provides insights into how the system affects the cognitive and physical demands on human operators [

112]. Lower scores indicate a more user-friendly and less stressful interaction. This metric is essential for understanding how the AR-HRI system impacts overall user workload and for identifying areas that can be improved to reduce user strain.

SAM Scale Scores: Measurements from the Self-Assessment Manikin (SAM) scale, assessing user arousal and valence. The SAM scale provides a subjective measure of users’ emotional responses [

113]. These scores help characterize how workers feel while interacting with the robots through AR.

Figure 23. Self-Assessment Manikin (SAM), commonly used to obtain subjective responses from human subjects [

114].

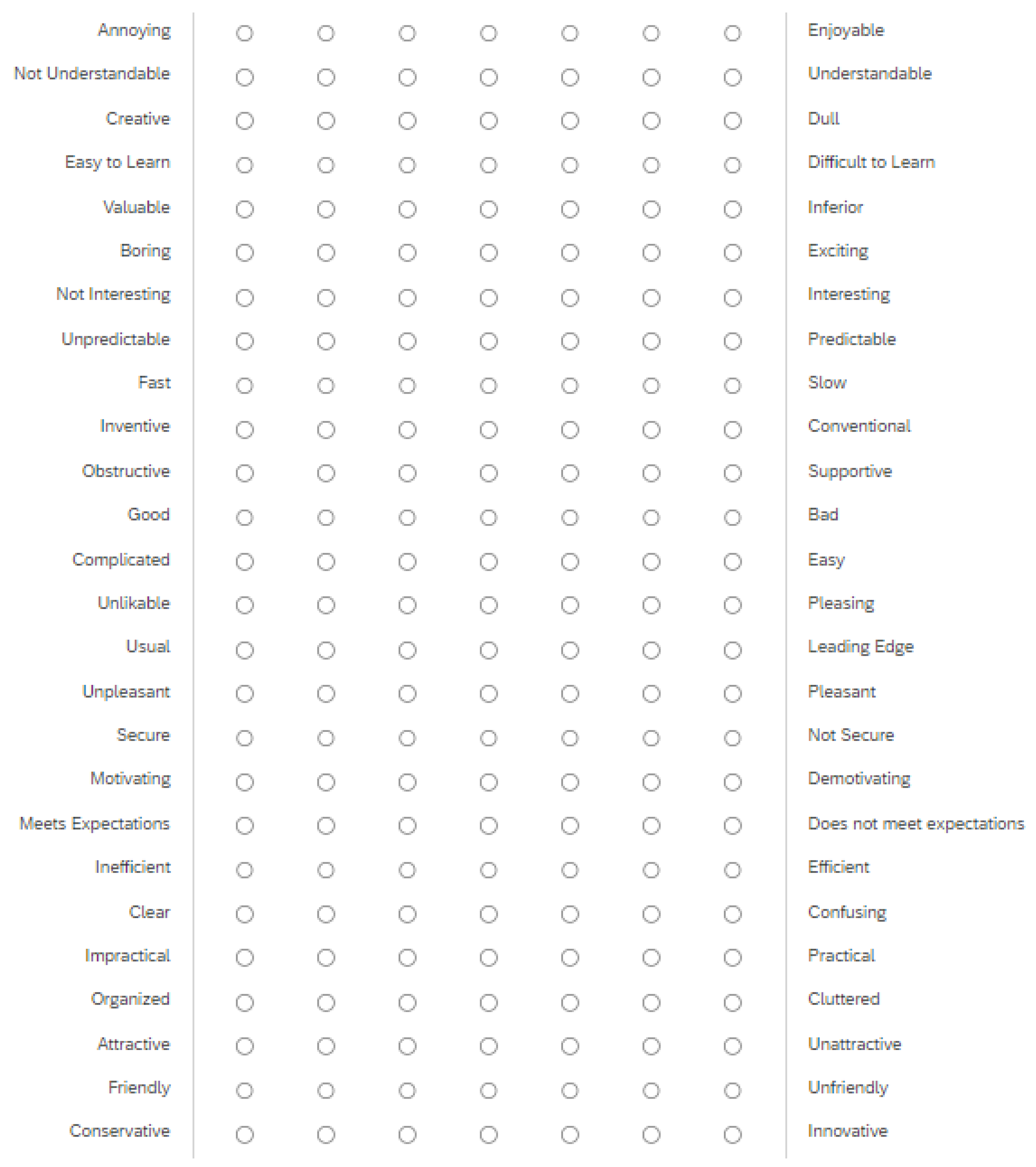

User Experience Questionnaire (UEQ): The UEQ consists of 26 items rated on a 7-point Likert scale, with which participants evaluate the user experience of a system across six attributes, including dependability and attractiveness [

91].

Figure 24 shows an example of the UEQ.

Positive emotional experiences are essential for user satisfaction and acceptance. Correlating these scores with physiological data can provide a holistic view of user experience and emotional state [

115].
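To make the NASA TLX item above concrete, the sketch below computes an overall workload score from the six dimensions. It assumes the unweighted "Raw TLX" variant, in which the overall score is the plain mean of the six subscale ratings on a 0 to 100 scale; the text does not say whether the raw or the pairwise-weighted procedure is intended, and the dictionary keys are hypothetical names.

```python
# Hypothetical keys for the six NASA TLX dimensions listed in the text.
TLX_DIMENSIONS = (
    "mental", "physical", "temporal", "performance", "effort", "frustration",
)


def raw_tlx(ratings):
    """Unweighted (Raw TLX) workload: mean of six subscale ratings in [0, 100]."""
    missing = [d for d in TLX_DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing ratings: {missing}")
    for name in TLX_DIMENSIONS:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} rating must be between 0 and 100")
    return sum(ratings[d] for d in TLX_DIMENSIONS) / len(TLX_DIMENSIONS)
```

Comparing this score between conditions with and without the AR interface gives a single workload figure per participant, while the individual subscales show which demand changed.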

7.3.4. Physiological Metrics

Physiological metrics involve measuring the physical and emotional responses of human operators through biometric data. These metrics can include heart rate, skin conductance, and other physiological signals that indicate stress, arousal, or emotional states. By monitoring these physiological responses, it is possible to gain insights into how the HRI system affects users’ well-being and to make necessary adjustments to improve their comfort and performance. Savur and Sahin [

116] compile methods of physiological computing used in human-robot collaboration.

ECG and Galvanic Skin Response (GSR): Electrocardiogram (ECG) and galvanic skin response (GSR) signals recorded from the human operator can be used to train models that estimate arousal and valence [

111]. These models can also provide data on the emotional states of users, which can be correlated with subjective measures from the SAM scale to validate the user experiences. Implementing these models helps in real-time monitoring and analysis of user states, enabling proactive adjustments to the AR-HRI system to improve user comfort and performance.
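One simple way to carry out the correlation mentioned above is a Pearson coefficient between model-estimated arousal and the corresponding SAM ratings, computed per trial. This is a sketch under assumptions: the source does not specify which correlation measure is used, the function name is hypothetical, and a rank-based measure may suit ordinal SAM ratings better.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equally long sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)
```

A coefficient near 1 would suggest that the physiological model and the self-reports agree, while a value near 0 would indicate that one of the two measures needs closer inspection.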

7.3.5. System Performance Metrics

System performance metrics evaluate the technical effectiveness and reliability of the HRI system. These metrics measure response time, accuracy, and the system’s ability to detect and respond to issues. High system performance ensures reliable, efficient, and safe operations.

Response Time: The time it takes for the system to detect a potential collision and respond. Faster response times are crucial for preventing accidents. This metric demonstrates the system’s efficiency in real-time monitoring and intervention. Reducing response time is essential for ensuring that safety interventions are timely and effective, thereby minimizing the risk of accidents.

Positional Accuracy: The accuracy of the system in detecting the positions of both the robot and the human. High positional accuracy is essential for the effective functioning of the system. This metric ensures that the system can reliably monitor and react to the positions of humans and robots. Accurate position tracking is vital for the SSM algorithm to function correctly and for providing precise AR visualizations.
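Both system performance metrics can be computed from logged events and tracked positions. The sketch below assumes that hazard-onset and response timestamps are recorded in seconds and that ground-truth positions are available from an external reference; the function names and the use of root-mean-square error as the accuracy measure are assumptions.

```python
import math


def response_times(hazard_times, response_times_s):
    """Per-event delay between a hazard arising and the system responding."""
    return [r - h for h, r in zip(hazard_times, response_times_s)]


def position_rmse(estimated, ground_truth):
    """Root-mean-square error between estimated and reference (x, y) positions."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need two non-empty position lists of equal length")
    squared = [math.dist(e, g) ** 2 for e, g in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))
```

Reporting the worst-case response delay alongside the mean is usually informative for safety, because a single slow response can matter more than the average.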

8. Conclusions

This paper has explored the significant advancements in integrating Augmented Reality (AR) into Human-Robot Interaction (HRI), emphasizing the enhancement of collaboration, trust, and safety. By synthesizing findings from numerous studies, we have identified key challenges and proposed a comprehensive framework designed to address these issues effectively.

Our framework emphasizes the importance of situational awareness and control, leveraging AR technologies to enhance users’ ability to perceive, comprehend, and project the state of their environment. The integration of Speed and Separation Monitoring (SSM) algorithms ensures that robots operate safely around human operators, dynamically adjusting their actions to maintain safe distances and prevent collisions.

Moreover, the implementation of Human-Computer Interaction (HCI) principles for AR design ensures that user interfaces (UI) and user experiences (UX) are intuitive, user-centered, and tailored to meet the specific needs of human operators. Continuous evaluation and improvement, through both quantitative and qualitative measures, are essential to maintain the effectiveness, usability, and safety of AR systems in HRI.

In conclusion, the proposed framework not only addresses the critical aspects of collaboration, trust, and safety but also provides a structured approach for the continuous development and refinement of AR applications in HRI. This research paves the way for safer and more efficient human-robot collaborations, ultimately contributing to the advancement of the field.

Author Contributions

Subramanian, K. studied various AR devices, such as headsets and projections, to understand their impact on HRI, while Thomas, L. contributed towards HCI principles to enhance the usability and effectiveness of AR interfaces in HRI. Sahin, M. studied existing methods of evaluating Situational Awareness in HRI and contributed towards integrating SA in the proposed framework mentioned in this paper. Under the supervision of Sahin, F., the team synthesized their findings to provide a comprehensive review of AR applications within HRI, highlighting key challenges and proposing solutions for enhancing collaboration, trust, and safety in HRI systems.

Funding

This material is based upon work supported by the National Science Foundation under Award No. DGE-2125362. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Thrun, S. Toward a Framework for Human-Robot Interaction. Human–Computer Interaction 2004, 19, 9–24.

- Scholtz, J. Theory and evaluation of human robot interactions. Proceedings of the 36th Annual Hawaii International Conference on System Sciences, 2003; 10 pp.

- Sidobre, D.; Broquère, X.; Mainprice, J.; Burattini, E.; Finzi, A.; Rossi, S.; Staffa, M. Human–Robot Interaction. In Advanced Bimanual Manipulation: Results from the DEXMART Project; Siciliano, B., Ed.; Springer Tracts in Advanced Robotics, Springer: Berlin, Heidelberg, 2012; pp. 123–172.

- Ong, S.K.; Yuan, M.L.; Nee, A.Y.C. Augmented reality applications in manufacturing: a survey. International Journal of Production Research 2008, 46, 2707–2742.

- Suzuki, R.; Karim, A.; Xia, T.; Hedayati, H.; Marquardt, N. Augmented Reality and Robotics: A Survey and Taxonomy for AR-enhanced Human-Robot Interaction and Robotic Interfaces. Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2022; CHI ′22; pp. 1–33.

- Ruiz, J.; Escalera, M.; Viguria, A.; Ollero, A. A simulation framework to validate the use of head-mounted displays and tablets for information exchange with the UAV safety pilot. 2015 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), 2015, pp. 336–341.

- Chan, W.P.; Hanks, G.; Sakr, M.; Zhang, H.; Zuo, T.; van der Loos, H.F.M.; Croft, E. Design and Evaluation of an Augmented Reality Head-mounted Display Interface for Human Robot Teams Collaborating in Physically Shared Manufacturing Tasks. ACM Transactions on Human-Robot Interaction 2022, 11, 31:1–31:19.

- Kalpagam Ganesan, R.; Rathore, Y.K.; Ross, H.M.; Ben Amor, H. Better Teaming Through Visual Cues: How Projecting Imagery in a Workspace Can Improve Human-Robot Collaboration. IEEE Robotics & Automation Magazine 2018, 25, 59–71.

- Woodward, J.; Ruiz, J. Analytic Review of Using Augmented Reality for Situational Awareness. IEEE Transactions on Visualization and Computer Graphics 2023, 29, 2166–2183.

- Sharp, H.; Preece, J.; Rogers, Y. Interaction Design: Beyond Human-Computer Interaction, 6th ed.

- Blaga, A.; Tamas, L. Augmented Reality for Digital Manufacturing. 2018, pp. 173–178.

- Caudell, T.; Mizell, D. Augmented reality: an application of heads-up display technology to manual manufacturing processes. Proceedings of the Twenty-Fifth Hawaii International Conference on System Sciences, 1992, Vol. 2, pp. 659–669.