Submitted: 26 January 2024

Posted: 29 January 2024

Abstract

Keywords:

1. Introduction

-

What is the state of the art of literature about nonverbal interaction in HRI with social service robots? We are interested in the following general metrics:

- a)

- the number of available articles;

- b)

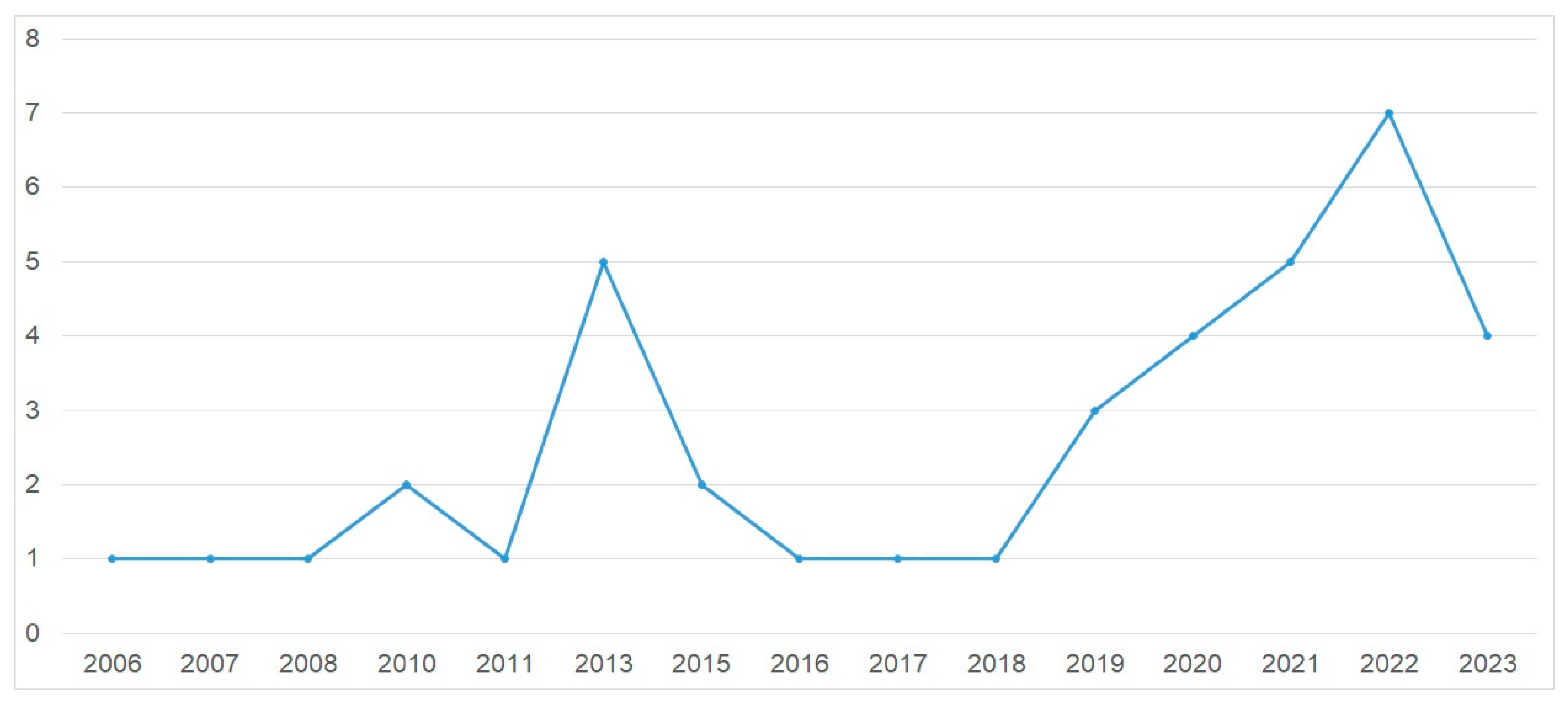

- the publishing years of the papers;

- c)

- the main contributing countries; and

- d)

- the main contributing authors.

- In addition, our interests lie in the following specific aspects of the studies:

  - a) What robots were mainly used in the studies?
  - b) What were the main research aims?
  - c) What types of nonverbal communication were mostly examined?
  - d) What research methods were used?
  - e) What are the main results of the studies?

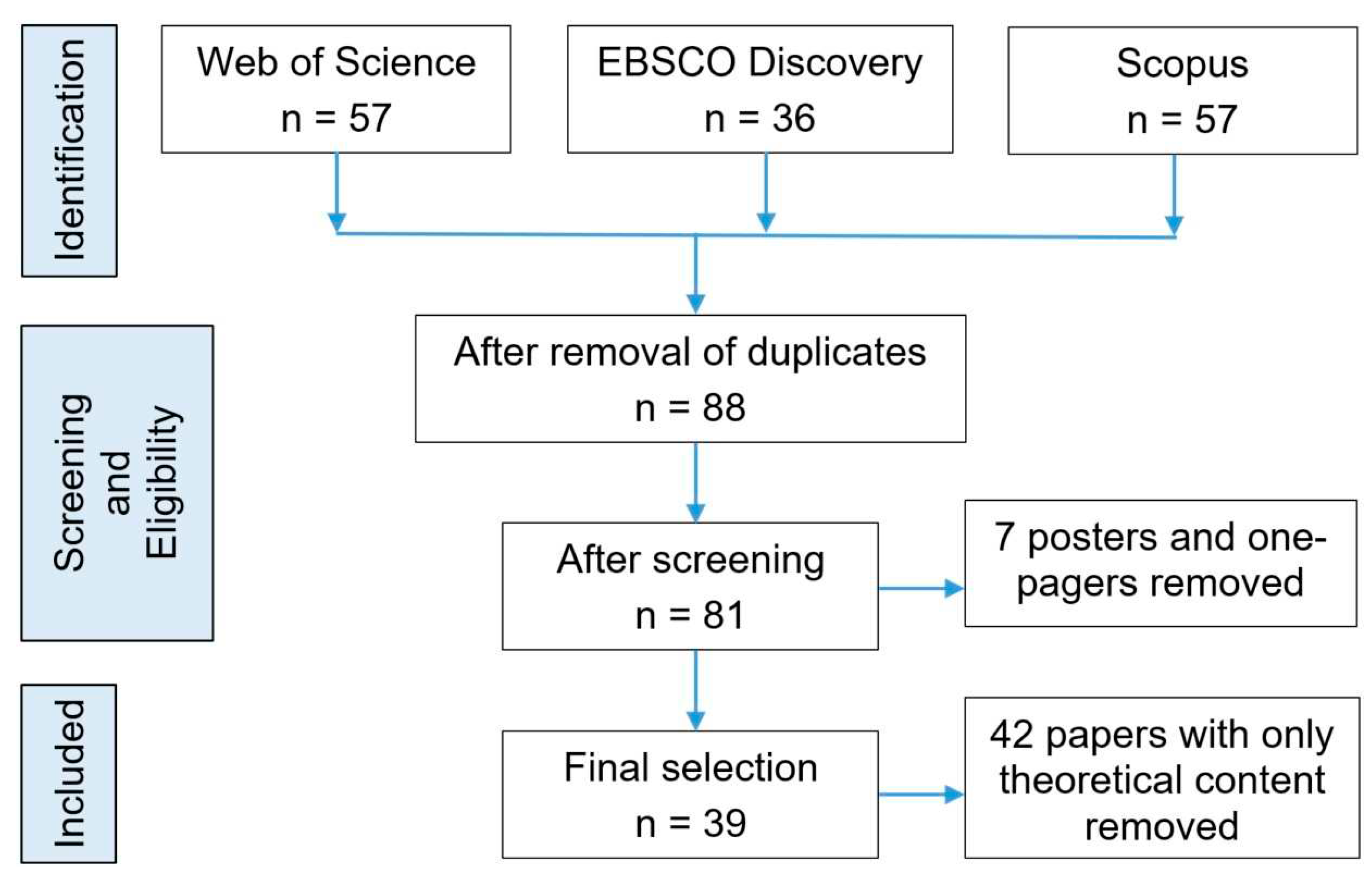

2. Materials and Methods

3. Results

3.1. Robots Used in the Examined Studies

3.2. Main Research Aims

3.3. Types of Nonverbal Communication Examined

3.4. Sample and Methods Used

3.4.1. Sample Size and Characteristics

3.4.2. Data Collection

3.5. Main Empirical Results

3.6. Theoretical Approaches Proposed

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. The Results of the Literature Selection Process

- Ahmad, M. I., Shahid, S., & Tahir, A. (2017). Towards the applicability of NAO robot for children with autism in Pakistan. Human-Computer Interaction – INTERACT 2017: 16th IFIP TC 13 International Conference, Mumbai, India, September 25–29, 2017, Proceedings, Part III, 463-472. https://doi.org/10.1007/978-3-319-67687-6_32

- Aly, A., & Tapus, A. (2013). A model for synthesizing a combined verbal and nonverbal behavior based on personality traits in human-robot interaction. 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 325-332.

- Arts, E., Zörner, S., Bhatia, K., Mir, G., Schmalzl, F., Srivastava, A., Vasiljevic, B., Alpay, T., Peters, A., Strahl, E., & Wermter, S. (2020). Exploring Human-Robot Trust Through the Investment Game: An Immersive Space Mission Scenario. Proceedings of the 8th International Conference on Human-Agent Interaction. https://doi.org/10.1145/3406499.3415078

- Baddoura, R., & Venture, G. (2013). Social vs. Useful HRI: Experiencing the Familiar, Perceiving the Robot as a Sociable Partner and Responding to Its Actions. International Journal of Social Robotics, 5, 529-547. https://doi.org/10.1007/s12369-013-0207-x

- Capy, S., Osorio, P., Hagane, S., Aznar, C., Garcin, D., Coronado, E., Deuff, D., Ocnarescu, I., Milleville, I., & Venture, G. (2022). Yōkobo: A Robot to Strengthen Links Amongst Users with Non-Verbal Behaviours. Machines. https://doi.org/10.3390/machines10080708

- Chao, C., Cakmak, M., & Thomaz, A.L. (2010). Transparent active learning for robots. 2010 5th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 317-324. https://doi.org/10.1109/HRI.2010.5453178

- Cooper, S., Fensome, S.F., Kourtis, D.A., Gow, S., & Dragone, M. (2020). An EEG investigation on planning human-robot handover tasks. 2020 IEEE International Conference on Human-Machine Systems (ICHMS), 1-6. https://doi.org/10.1109/ICHMS49158.2020.9209543

- Devillers, L., Rosset, S., Duplessis, G.D., Bechade, L., Yemez, Y., Türker, B.B., Sezgin, T.M., Erzin, E., Haddad, K.E., Dupont, S., Deléglise, P., Estève, Y., Lailler, C., Gilmartin, E., & Campbell, N. (2018). Multifaceted Engagement in Social Interaction with a Machine: The JOKER Project. 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), 697-701. https://doi.org/10.1109/FG.2018.00110

- Esteban, P.G., Bagheri, E., Elprama, S.A., Jewell, C.I., Cao, H., De Beir, A., Jacobs, A., & Vanderborght, B. (2021). Should I be Introvert or Extrovert? A Pairwise Robot Comparison Assessing the Perception of Personality-Based Social Robot Behaviors. International Journal of Social Robotics, 14, 115-125. https://doi.org/10.1007/s12369-020-00715-z

- Feng, H., Mahoor, M.H., & Dino, F. (2022). A Music-Therapy Robotic Platform for Children With Autism: A Pilot Study. Frontiers in Robotics and AI, 9. https://doi.org/10.3389/frobt.2022.855819

- Ghazali, A.S., Ham, J., Markopoulos, P.P., & Barakova, E.I. (2019). Investigating the Effect of Social Cues on Social Agency Judgement. 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 586-587. https://doi.org/10.1109/HRI.2019.8673266

- Gillet, S., Parreira, M.T., Vázquez, M., & Leite, I. (2022). Learning Gaze Behaviors for Balancing Participation in Group Human-Robot Interactions. 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 265-274. https://doi.org/10.1109/HRI53351.2022.9889416

- Gunes, H., Çeliktutan, O., & Sariyanidi, E. (2019). Live human–robot interactive public demonstrations with automatic emotion and personality prediction. Philosophical Transactions of the Royal Society B, 374. https://doi.org/10.1098/rstb.2018.0026

- Jirak, D., Aoki, M., Yanagi, T., Takamatsu, A., Bouet, S., Yamamura, T., Sandini, G., & Rea, F. (2022). Is It Me or the Robot? A Critical Evaluation of Human Affective State Recognition in a Cognitive Task. Frontiers in Neurorobotics, 16. https://doi.org/10.3389/fnbot.2022.882483

- Kanda, T., Miyashita, T., Osada, T., Haikawa, Y., & Ishiguro, H. (2008). Analysis of Humanoid Appearances in Human–Robot Interaction. IEEE Transactions on Robotics, 24, 725-735. https://doi.org/10.1109/TRO.2008.921566

- Kennedy, J., Baxter, P.E., Senft, E., & Belpaeme, T. (2016). Social robot tutoring for child second language learning. 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 231-238. https://doi.org/10.1109/HRI.2016.7451757

- Kose-Bagci, H., Dautenhahn, K., Syrdal, D.S., & Nehaniv, C.L. (2010). Drum-mate: interaction dynamics and gestures in human–humanoid drumming experiments. Connection Science, 22, 103-134. https://doi.org/10.1080/09540090903383189

- Lallée, S., Hamann, K., Steinwender, J., Warneken, F., Martinez-Hernandez, U., Barron-Gonzalez, H., Pattacini, U., Gori, I., Petit, M., Metta, G., Verschure, P.F., & Dominey, P.F. (2013). Cooperative human robot interaction systems: IV. Communication of shared plans with Naïve humans using gaze and speech. 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, 129-136. https://doi.org/10.1109/IROS.2013.6696343

- Lee, K.M., Peng, W., Jin, S.A., & Yan, C. (2006). Can robots manifest personality?: An empirical test of personality recognition, social responses, and social presence in human-robot interaction. Journal of Communication, 56, 754-772. https://doi.org/10.1111/j.1460-2466.2006.00318.x

- Liu, L., Liu, Y., & Gao, X.Z. (2021). Impacts of Human Robot Proxemics on Human Concentration-Training Games with Humanoid Robots. Healthcare, 9. https://doi.org/10.3390/healthcare9070894

- Matarese, M., Rea, F., & Sciutti, A. (2022). Perception is Only Real When Shared: A Mathematical Model for Collaborative Shared Perception in Human-Robot Interaction. Frontiers in Robotics and AI, 9. https://doi.org/10.3389/frobt.2022.733954

- Matarese, M., Sciutti, A., Rea, F., & Rossi, S. (2021). Toward Robots’ Behavioral Transparency of Temporal Difference Reinforcement Learning With a Human Teacher. IEEE Transactions on Human-Machine Systems, 51, 578-589. https://doi.org/10.1109/THMS.2021.3116119

- Michalowski, M.P., & Kozima, H. (2007). Methodological Issues in Facilitating Rhythmic Play with Robots. RO-MAN 2007 - The 16th IEEE International Symposium on Robot and Human Interactive Communication, 95-100. https://doi.org/10.1109/ROMAN.2007.4415060

- Mora, A., Glas, D.F., Kanda, T., & Hagita, N. (2013). A Teleoperation Approach for Mobile Social Robots Incorporating Automatic Gaze Control and Three-Dimensional Spatial Visualization. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 43, 630-642. https://doi.org/10.1109/TSMCA.2012.2212187

- Oetringer, D., Wolfert, P., Deschuyteneer, J., Thill, S., & Belpaeme, T. (2021). Communicative Function of Eye Blinks of Virtual Avatars May Not Translate onto Physical Platforms. Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction. https://doi.org/10.1145/3434074.3447136

- Parreira, M.T., Gillet, S., Winkle, K., & Leite, I. (2023). How Did We Miss This?: A Case Study on Unintended Biases in Robot Social Behavior. Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction. https://doi.org/10.1145/3568294.3580032

- Perugia, G., Díaz-Boladeras, M., Català-Mallofré, A., Barakova, E.I., & Rauterberg, G. (2020). ENGAGE-DEM: A Model of Engagement of People With Dementia. IEEE Transactions on Affective Computing, 13, 926-943. https://doi.org/10.1109/TAFFC.2020.2980275

- Redondo, M.E., Niewiadomski, R., Francesco, R., & Sciutti, A. (2022). Comfortability Recognition from Visual Non-verbal Cues. Proceedings of the 2022 International Conference on Multimodal Interaction. https://doi.org/10.1145/3536221.3556631

- Redondo, M.E., Sciutti, A., Incao, S., Rea, F., & Niewiadomski, R. (2021). Can Robots Impact Human Comfortability During a Live Interview? Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction. https://doi.org/10.1145/3434074.3447156

- Saulnier, P., Sharlin, E., & Greenberg, S. (2011). Exploring minimal nonverbal interruption in HRI. 2011 RO-MAN, 79-86. https://doi.org/10.1109/ROMAN.2011.6005257

- Shao, M., Snyder, M., Nejat, G., & Benhabib, B. (2020). User Affect Elicitation with a Socially Emotional Robot. Robotics, 9, 44. https://doi.org/10.3390/robotics9020044

- Sheikhi, S., & Odobez, J. (2015). Combining dynamic head pose-gaze mapping with the robot conversational state for attention recognition in human-robot interactions. Pattern Recognition Letters, 66, 81-90. https://doi.org/10.1016/j.patrec.2014.10.002

- Song, S., & Yamada, S. (2019). Designing LED lights for a robot to communicate gaze. Advanced Robotics, 33, 360-368. https://doi.org/10.1080/01691864.2019.1600426

- Soomro, Z.A., Bin Shamsudin, A.U., Abdul Rahim, R., Adrianshah, A., & Hazeli, M. (2023). Non-Verbal Human-Robot Interaction Using Neural Network for The Application of Service Robot. IIUM Engineering Journal. https://doi.org/10.31436/iiumej.v24i1.2577

- Trovato, G., Do, M., Kuramochi, M., Zecca, M., Terlemez, Ö., Asfour, T., & Takanishi, A. (2014). A Novel Culture-Dependent Gesture Selection System for a Humanoid Robot Performing Greeting Interaction. International Conference on Social Robotics. https://doi.org/10.1007/978-3-319-11973-1_35

- Umbrico, A., De Benedictis, R., Fracasso, F., Cesta, A., Orlandini, A., & Cortellessa, G. (2022). A Mind-inspired Architecture for Adaptive HRI. International Journal of Social Robotics, 15, 371-391. https://doi.org/10.1007/s12369-022-00897-8

- Xu, J., Broekens, J., Hindriks, K.V., & Neerincx, M.A. (2013). Bodily Mood Expression: Recognize Moods from Functional Behaviors of Humanoid Robots. International Conference on Social Robotics. https://doi.org/10.1007/978-3-319-02675-6_51

- Xu, J., Broekens, J., Hindriks, K.V., & Neerincx, M.A. (2015). Mood contagion of robot body language in human robot interaction. Autonomous Agents and Multi-Agent Systems, 29, 1216-1248. https://doi.org/10.1007/s10458-015-9307-3

- Yang, Y., & Williams, A.B. (2021). Improving Human-Robot Collaboration Efficiency and Robustness through Non-Verbal Emotional Communication. Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction. https://doi.org/10.1145/3371382.3378385

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).