Submitted:

26 November 2025

Posted:

27 November 2025


Abstract

Keywords:

1. Introduction

1.1. Survey Objective

- Exploration of Object Detection Applications in Autonomous Vehicles: This involves investigating real-world use cases of object detection in autonomous vehicles.

- Exploration of YOLO Applications in Diverse Weather Conditions: We then explore the specific applications of YOLO variants in diverse weather conditions.

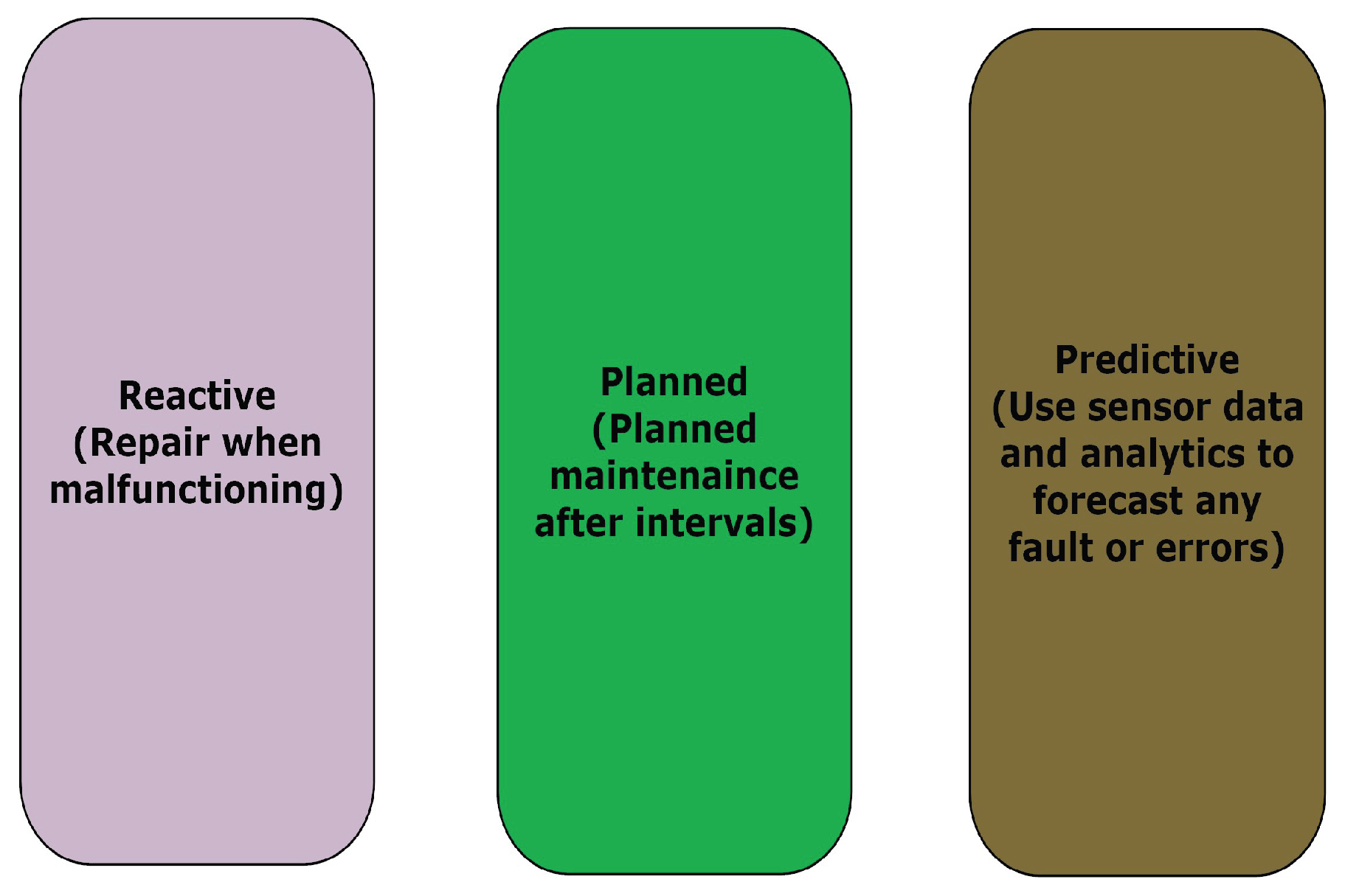

- Predictive maintenance: In this survey, we propose applying predictive maintenance (PM) techniques to object detection algorithms. The complexity and risks of autonomous vehicle systems make predictive maintenance extremely challenging. Because malfunctioning hardware and software in autonomous vehicle systems could cause fatal collisions, regular maintenance is required to ensure human safety. Large-scale product design for automotive systems depends heavily on anticipating future failures and adopting preventive measures to preserve system reliability and security.

- Proposed Methodology for Object Detection: Finally, we present a general object detection framework that shows how PM can be adopted in autonomous vehicles (AVs).

2. Predictive Maintenance

2.1. Predictive Maintenance in Autonomous Vehicles

- Integration with IoT: Autonomous vehicle (AV) predictive maintenance system architectures rely heavily on Internet of Things (IoT) frameworks. These frameworks make it possible to gather and interpret real-time data from a variety of sensors mounted throughout the car, including sensors that track battery health, tire pressure, and engine performance [9], as well as cameras, gyroscopes, and accelerometers. The data is sent to a central processing unit and analyzed with machine learning algorithms to find trends and anticipate problems. IoT integration therefore enables continuous monitoring and diagnostics, so that irregularities are identified early and addressed promptly. In addition to improving the safety and dependability of AVs, this reduces maintenance costs and downtime.

- Sensor Networks: The sensors in an IoT-based predictive maintenance system are usually small wireless devices that are easy to mount on equipment and vehicles. They contain several sensing components that can detect changes in the equipment's operating conditions. The data gathered by these sensors is sent to a central processing unit over cellular networks, Bluetooth, Wi-Fi, and other wireless communication protocols.

- Real-time Monitoring: The real-time monitoring features of IoT frameworks are essential for improving the dependability and security of driverless cars. By continuously gathering and analyzing data from sensors and vehicle components, these frameworks allow issues to be identified early. Detecting deviations from standard operating conditions in real time enables fast intervention and corrective action, reducing the risks associated with unplanned breakdowns.
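The real-time deviation detection described above can be illustrated with a deliberately simple statistical stand-in for the machine learning algorithms mentioned in the text. Each new sensor reading (here, hypothetical tire-pressure values) is compared with the mean and standard deviation of a sliding window of recent readings and flagged when its z-score exceeds a threshold. The function name, the window length of 10, and the threshold of 3.0 are assumptions for illustration, not values taken from the surveyed work.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List


def detect_anomalies(readings: Iterable[float], window: int = 10, z_threshold: float = 3.0) -> List[int]:
    """Return the indices of readings that deviate strongly from the preceding window.

    A reading is flagged when |value - mean| / std of the previous `window`
    readings exceeds `z_threshold`. Flagged readings are still added to the
    window, so a persistent shift is gradually absorbed as the new baseline.
    """
    recent: Deque[float] = deque(maxlen=window)
    flagged: List[int] = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # A perfectly constant window has zero spread; skip it to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > z_threshold:
                flagged.append(i)
        recent.append(value)
    return flagged


# Hypothetical tire-pressure stream (psi): a small oscillation, then one sudden drop at index 30.
normal = [32.0 + 0.1 * (i % 3) for i in range(30)]
stream = normal + [25.0] + [32.1] * 5
print(detect_anomalies(stream))  # [30]
```

In a deployed system this check would run per sensor channel as data arrives; a learned model could replace the z-score rule without changing the surrounding monitoring loop.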

2.2. You Only Look Once (YOLO)

3. Object Detection in Autonomous Vehicles

- Object classification within an image

- Object localization

- Object detection

- Image segmentation

| Paper | Approach | Performance Metrics | Outcome |
|---|---|---|---|
| [19] | Latency was reduced using MobileNet and SSD. | Latency | |
| [24] | Comprehensive examination of object detection strategies for self-driving cars, covering conventional and deep learning-based strategies as well as recent developments. | Adaptability and reliability | The study concludes by outlining potential future directions, such as edge computing, transformer-based designs, and continuous learning to increase adaptability. |
| [27] | Sandy weather makes object detection extremely difficult because of environmental factors such as opacity, low visibility, and shifting lighting. The study assessed the performance of various activation functions in sandy weather using the You Only Look Once (YOLO) version 5 and version 7 architectures. | Mean average precision (mAP), recall, and precision | On the original DAWN dataset, YOLOv5 with the LeakyReLU activation function outperformed the other designs and previously documented results in sandy conditions, achieving 88% mAP. YOLOv7 with SiLU obtained 94% mAP on the expanded DAWN dataset. |
| [20] | Tackles accurate object detection in foggy conditions, a significant challenge in computer vision. The authors introduce a new method using a real-world dataset gathered from various foggy weather scenarios, emphasizing different levels of fog density. | Mean average precision (mAP) | |

| Paper | Approach | Performance Metrics | Outcome |
|---|---|---|---|
| [8] | Tested on 19 short videos obtained from an automated driving lab. | Reliability and effectiveness, compared with the Aggregate Channel Features (ACF) detector | The model outperformed the ACF detector. A sensor fusion approach was recommended to enhance the reliability and effectiveness of the collision warning system. |
| [12] | Object detection accuracy was improved using a novel YOLO algorithm. | Accuracy and confidence intervals | YOLO achieved higher accuracy/precision than MobileNet. |
| [13] | Detection accuracy was improved using the Flip-Mosaic algorithm, which enhances YOLOv5's perception of small targets. | Accuracy and false detection rate | Flip-Mosaic data augmentation can lower the false detection rate and increase vehicle detection accuracy. |
| [14] | The traditional YOLOv5 model is enhanced with a prediction extension box that accounts for the coverage area and redundancy of actual targets, ensuring the safety of image perception. | Range of detected targets and perception safety | Adding the prediction extension box to YOLOv5 widens the range of detected targets, which considerably improves perception safety throughout the autonomous driving procedure. |
| [15] | Introduces an extensive dataset of 8,388 annotated images of various vehicles, with 27,766 labels in 11 categories, gathered under various weather conditions in the province of Quebec, Canada. The study demonstrates the effective use of deep neural networks for detecting road-related objects in the context of smart cities and communities. | Precision, recall, and mAP | Improved precision, recall, robustness, and mAP. |
| [16] | Offers a thorough review of object detection approaches under challenging weather conditions, beginning with the difficulties caused by weather effects such as rain, snow, fog, and varying lighting, all of which can greatly degrade detection performance. | | Classifies current object detection methods into three groups: direct detection models; distributed models that perform image restoration (enhancement) before detection; and end-to-end models that combine image restoration and object detection in a single framework. |
| [17] | Introduces the SCOPE (Stacked Classifiers for Object Prediction and Estimation) algorithm to improve object detection in autonomous vehicles. The goal is a robust, high-performance model that accurately identifies cars, trucks, pedestrians, bicyclists, and traffic lights while addressing detection accuracy and efficiency. | Accuracy, precision, and recall | SCOPE demonstrated strong performance for self-driving cars, with a reduced false positive rate. |

| Paper | Approach | Performance Metrics | Outcome |
|---|---|---|---|
| [21] | Aims to enhance object detection with YOLOv5, YOLOv7, and YOLOv9 in adverse weather conditions (AWCs) for autonomous driving. Because the hyperparameters are numerous and span broad ranges, finding the best values manually by trial and error is difficult, so the study applies three optimization algorithms to independently fine-tune the hyperparameters of each model: Gray Wolf Optimizer (GWO), Artificial Rabbit Optimizer (ARO), and Chimpanzee Leader Selection Optimization (CLEO). | Detection performance after hyperparameter optimization | The optimization techniques significantly enhance YOLO object detection in AWCs, with an overall improvement of 6.146%, a 6.277% improvement for YOLOv7 combined with CLEO, and a 6.764% improvement for YOLOv9 combined with GWO. |
| [18] | Develops robust detection and classification algorithms tailored to the challenges found in India, including diverse traffic patterns, unpredictable driving behavior, and varying weather conditions. | Average accuracy and peak accuracy | Precision and accuracy were improved using YOLOv8. |

3.1. Object Detection and Classification Based on Weather Conditions

4. Analysis of Current Work

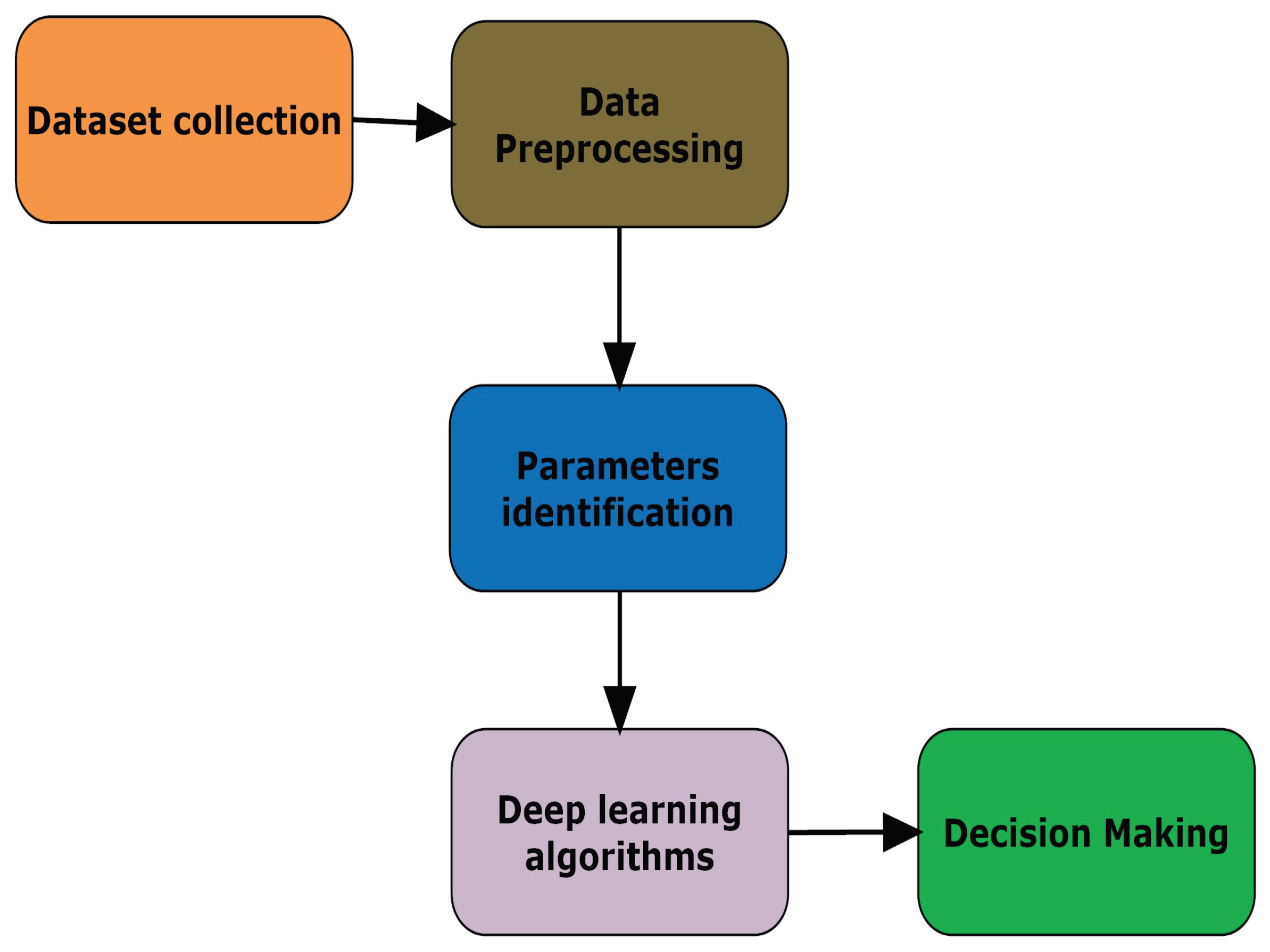

5. Proposed Generic Methodology for Object Detection in AVs

- Data collection: Vehicle data will be collected from different car companies using a script.

- Data pre-processing: A dataset comprising different cars will be created.

- Parameter identification: The YOLO algorithm will be used to detect objects, and different performance parameters will be identified. In this work, the objects of interest are vehicles: a script will be implemented to collect vehicle data from car companies, YOLO will then detect and classify the vehicles, and the resulting performance metrics will be computed. The following performance metrics will be used both to evaluate performance and to train a Long Short-Term Memory (LSTM) network to predict anomalous behaviour in detection.

- Intersection over Union (IoU): IoU provides a numerical value that reflects how closely the model's predicted bounding box matches the actual object position. A higher IoU score indicates a better match between the predicted and ground-truth bounding boxes, implying superior localisation accuracy. It is computed with Equation (1.1), where AoO is the area of overlap and AoU is the area of union:

$$\mathrm{IoU} = \frac{\mathrm{AoO}}{\mathrm{AoU}} \tag{1.1}$$

IoU also has a wide range of applications. In self-driving cars, it supports object recognition and tracking, resulting in safer and more efficient driving; security systems use it to recognise and identify objects or individuals in surveillance footage; and in medical imaging, it helps to accurately identify anatomical structures and anomalies.

- Precision, recall, and F1-score are fundamental metrics for assessing object detection models, providing insight into a model's ability to identify objects of interest within images. They are based on the following concepts:

- (a) True Positive (TP): The model correctly detects an object that is present; the predicted box overlaps the ground-truth box with an IoU at or above the chosen threshold.

- (b) False Positive (FP): The model predicts an object that does not exist, or its prediction overlaps the ground truth with an IoU below the threshold.

- (c) False Negative (FN): The model fails to detect an object that is present.

- (d) True Negative (TN): Not applicable in object detection, because the number of background regions correctly left without a detection is effectively unbounded.

- Precision: Precision quantifies the accuracy of the positive predictions made by the model. It is calculated using Equation (1.2):

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{1.2}$$

- Recall: Recall evaluates the model's completeness in detecting objects of interest; a high recall score shows that the model correctly recognises most of the relevant objects in the dataset. It is computed using Equation (1.3):

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{1.3}$$

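The IoU, TP/FP/FN, precision, and recall definitions above can be tied together in a short sketch. It assumes axis-aligned boxes given as (x_min, y_min, x_max, y_max), ignores class labels and confidence scores, and matches predictions greedily in the order they are listed; the function names are hypothetical. Standard benchmark evaluators (e.g. PASCAL VOC or COCO) instead sort predictions by confidence and match per class, so this is an illustration of the definitions rather than a benchmark implementation. The F1-score is computed as the harmonic mean of precision and recall.

```python
from typing import List, Optional, Tuple

# Axis-aligned box as (x_min, y_min, x_max, y_max); this layout is an assumption.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union, Equation (1.1): area of overlap / area of union."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    aoo = overlap_w * overlap_h                      # area of overlap (AoO)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    aou = area_a + area_b - aoo                      # area of union (AoU)
    return aoo / aou if aou > 0 else 0.0


def count_outcomes(preds: List[Box], truths: List[Box], iou_threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedily match each prediction to its best unmatched ground truth; return (TP, FP, FN)."""
    unmatched = list(range(len(truths)))
    tp = 0
    for p in preds:
        best_j: Optional[int] = None
        best_iou = 0.0
        for j in unmatched:
            score = iou(p, truths[j])
            if score > best_iou:
                best_j, best_iou = j, score
        if best_j is not None and best_iou >= iou_threshold:
            tp += 1                    # correct detection (TP)
            unmatched.remove(best_j)   # each ground truth can be matched only once
    fp = len(preds) - tp               # predictions with no valid match (FP)
    fn = len(truths) - tp              # ground-truth objects that were missed (FN)
    return tp, fp, fn


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Equations (1.2) and (1.3), plus F1 as the harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 10, 10), (50, 50, 60, 60)]
tp, fp, fn = count_outcomes(preds, truths)
print(tp, fp, fn)                        # 1 1 1
print(precision_recall_f1(tp, fp, fn))   # (0.5, 0.5, 0.5)
```

Here the first prediction overlaps the first ground truth with IoU 0.81 and counts as a TP, the second prediction overlaps nothing (FP), and the second ground truth is missed (FN).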

5.1. Loss Function

- Deep learning algorithms: Deep learning techniques are the core of PM systems. They analyze data to identify the features most likely to cause errors or failures, and they learn from historical data to predict equipment failures and anomalies. In this work, once the metric values above have been computed, an LSTM network will be trained on them, and its output will be the probability that the detection model fails.

- Decision Making: The insights and predictions generated by the LSTM network are processed by decision-making modules, which determine what maintenance actions are needed and can recommend preventive or corrective maintenance tasks.
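One way to connect the LSTM and decision-making steps is sketched below, under stated assumptions. Per-interval detection metrics are stored as snapshots, fixed-length windows of them form the LSTM input sequences, and a window is labelled as a failure if the next interval's mean IoU drops below a floor. The `MetricSnapshot` type, the 0.5 IoU floor, and the 0.5/0.8 action thresholds are hypothetical choices, and the LSTM itself is omitted: any sequence model that outputs a failure probability could feed `recommend_action`.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


# Hypothetical per-interval summary of detection quality (e.g. one driving session).
@dataclass
class MetricSnapshot:
    mean_iou: float
    precision: float
    recall: float


Window = List[Tuple[float, float, float]]


def make_training_pairs(
    history: Sequence[MetricSnapshot], window: int, iou_floor: float = 0.5
) -> Tuple[List[Window], List[int]]:
    """Build (sequence, label) pairs for a sequence model such as an LSTM.

    Each sequence holds `window` consecutive snapshots as (IoU, precision, recall)
    rows; the label is 1 if the snapshot right after the window has a mean IoU
    below `iou_floor` (treated here as a detection failure), else 0.
    """
    sequences: List[Window] = []
    labels: List[int] = []
    for start in range(len(history) - window):
        chunk = history[start:start + window]
        sequences.append([(s.mean_iou, s.precision, s.recall) for s in chunk])
        nxt = history[start + window]
        labels.append(1 if nxt.mean_iou < iou_floor else 0)
    return sequences, labels


def recommend_action(
    failure_prob: float, preventive_at: float = 0.5, corrective_at: float = 0.8
) -> str:
    """Map a predicted failure probability to a maintenance recommendation."""
    if not 0.0 <= failure_prob <= 1.0:
        raise ValueError("failure_prob must lie in [0, 1]")
    if failure_prob >= corrective_at:
        return "corrective maintenance"
    if failure_prob >= preventive_at:
        return "preventive maintenance"
    return "no action"


# Hypothetical history in which localisation quality degrades over time.
history = [MetricSnapshot(v, 0.9, 0.9) for v in (0.8, 0.78, 0.75, 0.6, 0.4)]
sequences, labels = make_training_pairs(history, window=3)
print(labels)                   # [0, 1]
print(recommend_action(0.65))   # preventive maintenance
```

Labelling by a future metric drop is only one possible definition of "failure"; the thresholds would in practice be chosen from historical maintenance records.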

6. Conclusions

Abbreviations

| Abbreviation | Definition |
|---|---|
| AoO | Area of Overlap |
| AoU | Area of Union |
| AV | Autonomous Vehicles |
| ADS | Automated Driving Systems |
| FP | False Positive |
| FN | False Negative |
| ITS | Intelligent Transport Systems |
| IoU | Intersection over Union |
| IoT | Internet of Things |
| LiDAR | Light Detection and Ranging |
| PM | Predictive Maintenance |
| TP | True Positive |
| WHO | World Health Organization |
| YOLO | You Only Look Once |

References

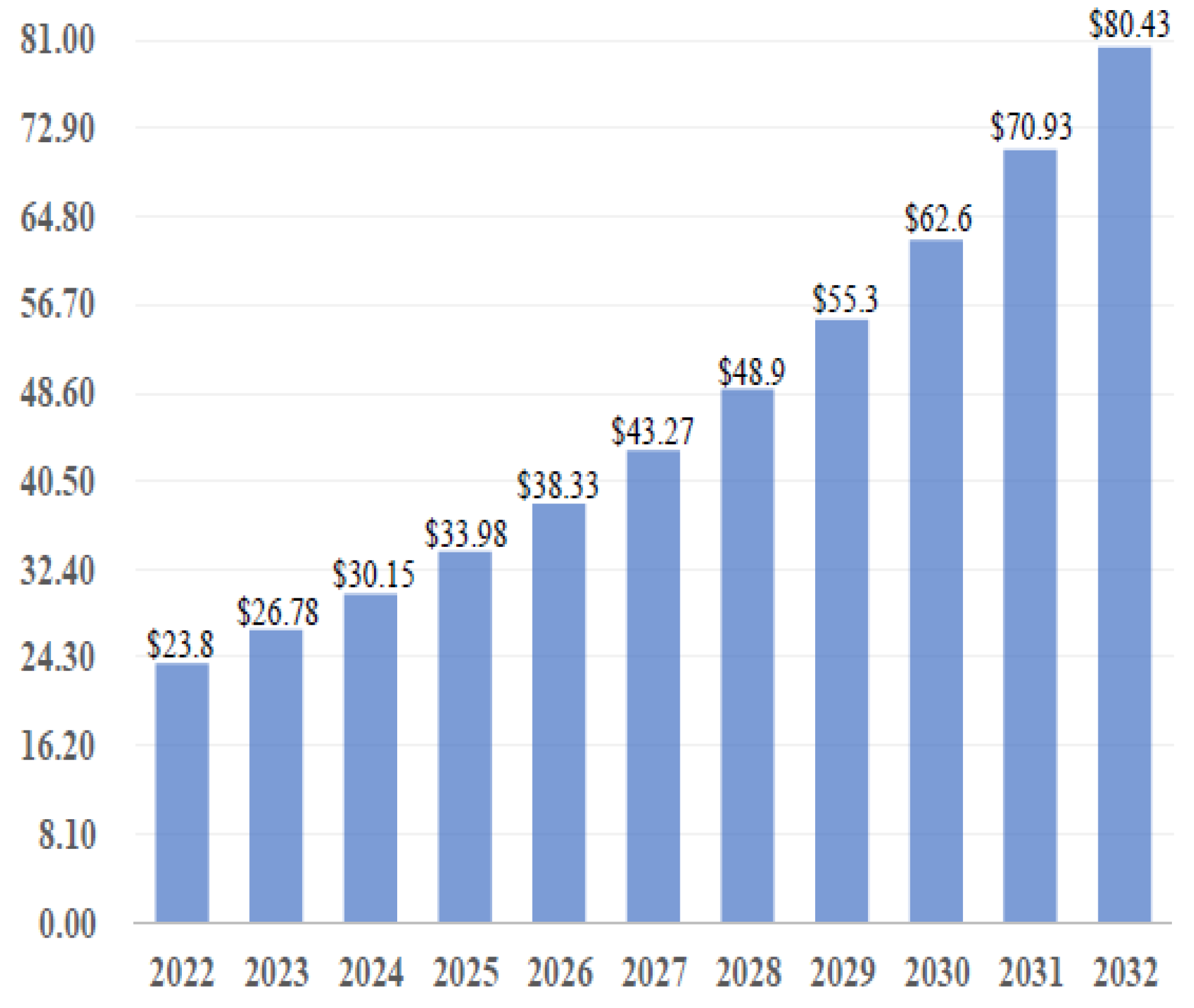

[1] Self-Driving Cars Global Market Size. Available online: https://precedenceresearch.com/self-driving-cars-market (accessed on 18 December 2023).

[2] Balasubramaniam, Abhishek and Pasricha, Sudeep, "Object detection in autonomous vehicles: Status and open challenges", arXiv:2201.07706, 2022.

[3] Kang, Chang Ho and Kim, Sun Young, "Real-time object detection and segmentation technology: an analysis of the YOLO algorithm", JMST Advances, vol. 5, pp. 69–76, Springer, 2023.

[4] Dalzochio, Jovani and Kunst, Rafael and Pignaton, Edison and Binotto, Alecio and Sanyal, Srijnan and Favilla, Jose and Barbosa, Jorge, "Machine learning and reasoning for predictive maintenance in Industry 4.0: Current status and challenges", Computers in Industry, vol. 123, Elsevier, 2020.

[5] Zhang, Weiting and Yang, Dong and Wang, Hongchao, "Data-driven methods for predictive maintenance of industrial equipment: A survey", IEEE Systems Journal, vol. 13, pp. 2213–2227, IEEE, 2019.

[6] Arena, Fabio and Collotta, Mario and Luca, Liliana and Ruggieri, Marianna and Termine, Francesco Gaetano, "Predictive maintenance in the automotive sector: A literature review", Mathematical and Computational Applications, vol. 27, MDPI, 2021.

[7] Saoudi, Oussama and Singh, Ishwar and Mahyar, Hamidreza, "Autonomous vehicles: open-source technologies, considerations, and development", 2022.

[8] Rajesh, B and Ramakrishna, D and Raju, A Ramakrishna and Chavan, Ameet, "Object detection and classification for autonomous vehicle", Journal of Physics: Conference Series, vol. 1817, p. 012004, IOP Publishing, 2021.

[9] Gohel, Hardik A and Upadhyay, Himanshu and Lagos, Leonel and Cooper, Kevin and Sanzetenea, Andrew, "Predictive maintenance architecture development for nuclear infrastructure using machine learning", Nuclear Engineering and Technology, Elsevier, 2022.

[10] Shah, Chirag Vinalbhai, "Machine learning algorithms for predictive maintenance in autonomous vehicles", International Journal of Engineering and Computer Science, vol. 13, 2024.

[11] Naik, Abhir and Naveen, GVVS and Satardhan, J and Chavan, Ameet, "Liebid: A LiDAR-based early blind spot detection and warning system for traditional steering mechanism", 2020 International Conference on Smart Electronics and Communication (ICOSEC), pp. 604–609, IEEE, 2020.

[12] Mittapalli, Bharath Kumar and Thangaraj, S John Justin, "Elevating object detection precision in autonomous vehicles using YOLO algorithm over mobile net algorithm for image classification", AIP Conference Proceedings, 2025.

[13] Zhang, Yu and Guo, Zhongyin and Wu, Jianqing and Tian, Yuan and Tang, Haotian and Guo, Xinming, "Real-time vehicle detection based on improved YOLO v5", Sustainability, MDPI, 2022.

[14] Wang, Sifen and Wang, Zhangyu and Hong, Sheng and Wang, Pengcheng and Zhang, Shaowei, "Ensuring SOTIF: Enhanced object detection techniques for autonomous driving", Accident Analysis & Prevention, Elsevier, 2025.

[15] Sharma, Teena and Chehri, Abdellah and Fofana, Issouf and Jadhav, Shubham and Khare, Siddhartha and Debaque, Benoit and Duclos-Hindie, Nicolas and Arya, Deeksha, "Deep learning-based object detection and classification for autonomous vehicles in different weather scenarios of Quebec, Canada", IEEE Access, vol. 12, pp. 13648–13662, 2024.

[16] Chen, Zhige and Zhang, Zhigang and Su, Qizheng and Yang, Kai and Wu, Yandong and He, Lei and Tang, Xiaolin, "Object Detection for Autonomous Vehicles under Adverse Weather Conditions", Expert Systems with Applications, Elsevier, 2025.

[17] Zhang, Zhenzhong and Zhang, Quanyu and Liu, Huanxue and Gao, Yanan and Wang, Liang, "Artificial intelligence-based model for efficient object detection in autonomous vehicles", Journal of Intelligent Transportation Systems, pp. 1–12, Taylor & Francis, 2025.

[18] Padia, Aayushi and TN, Aryan and Thummagunti, Sharan and Sharma, Vivaan and K. Vanahalli, Manjunath and BM, Prabhu Prasad and GN, Girish and Kim, Yong-Guk and BN, Pavan Kumar, "Object Detection and Classification Framework for Analysis of Video Data Acquired from Indian Roads", Sensors, vol. 24, 6319, 2024.

[19] Chaturvedee, Adarsh and Al-Shabandar, Raghad and Mohammed, Ammar H, "Small Object Detection in Autonomous Cars Using a Deep Learning", International Journal of Data Science and Advanced Analytics, pp. 307–314, 2024.

[20] Faiz, Muhammad and Ahmad, Tauqir and Mustafa, Ghulam, "Object Detection in Foggy Weather using Deep Learning Model", The Nucleus, pp. 117–125, 2024.

[21] Özcan, İbrahim and Altun, Yusuf and Parlak, Cevahir, "Improving YOLO detection performance of autonomous vehicles in adverse weather conditions using metaheuristic algorithms", Applied Sciences, MDPI, 2024.

[22] Tahir, Noor Ul Ain and Zhang, Zuping and Asim, Muhammad and Chen, Junhong and ELAffendi, Mohammed, "Object detection in autonomous vehicles under adverse weather: A review of traditional and deep learning approaches", Algorithms, vol. 17, MDPI, 2024.

[23] Murat, Ayşe Aybilge and Kiran, Mustafa Servet, "A comprehensive review on YOLO versions for object detection", Engineering Science and Technology, an International Journal, Elsevier, 2025.

[24] Oyedokun, Eunice and William, Barnty, "Thorough Analysis of Object Detection for Autonomous Vehicles", Preprints, 2025.

[25] Bratulescu, Razvan-Alexandru and Vatasoiu, Robert-Ionut and Sucic, George and Mitroi, Sorina-Andreea and Vochin, Marius-Constantin and Sachian, Mari-Anais, "Object detection in autonomous vehicles", 2022 25th International Symposium on Wireless Personal Multimedia Communications (WPMC), pp. 375–380, IEEE, 2022.

[26] Ahmed, Arshee and Rasheed, Haroon, "Analyzing relative humidity and BER relation in terahertz VANET using BCH coding", 2022 Third International Conference on Latest Trends in Electrical Engineering and Computing Technologies (INTELLECT), pp. 1–5, IEEE, 2022.

[27] Aloufi, Nasser and Alnori, Abdulaziz and Thayananthan, Vijey and Basuhail, Abdullah, "Object detection performance evaluation for autonomous vehicles in sandy weather environments", Applied Sciences, p. 10259, MDPI.

[28] Ali, ML and Zhang, Z, "The YOLO Framework: A Comprehensive Review of Evolution, Applications, and Benchmarks in Object Detection", Computers, vol. 13, 336, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).