1. Introduction

Augmented reality (AR) has been widely explored to enhance intraoperative perception and guidance, yet static or marker-dependent registrations often drift under patient motion, occlusion, and lighting changes, limiting reliability in dynamic surgical scenes [1]. Recent studies in depth sensing, SLAM, and learning-aided filtering improved dense surface capture and robustness to partial visibility, but many pipelines still struggle with rapid motion and non-rigid deformation [2]. Deformable registration and biomechanical modeling reduce target registration error (TRE), yet computational cost and parameter sensitivity hinder real-time updates during abrupt movements [3, 4]. EasyREG shed light on the need for adaptive registration under patient motion, stimulating research into feedback-driven AR systems with real-time compensation mechanisms [5]. Particle filtering and Bayesian sensor fusion offer principled motion compensation, but prior work often couples them weakly to the geometry update or evaluates only on small phantoms [6]. Multi-scale ICP and robust correspondences improve convergence under noise, though performance degrades with specular surfaces, tool interference and fast endoscope maneuvers [7]. Human-factor outcomes such as confidence and workload are rarely quantified alongside geometry, leaving the clinical benefit under dynamic conditions insufficiently established [8]. To address these gaps, we present an adaptive AR guidance system that couples particle filter-based motion compensation with multi-scale ICP refinement and feedback-driven re-registration on live depth streams, targeting sub-millimeter-level stability under ±20 mm head motion while preserving near-real-time frame rates; our goal is to provide a resilient, workflow-neutral approach that unifies accuracy, recovery dynamics, and usability for dynamic surgeries.

2. Materials and Methods

2.1. Sample Collection and Study Area

The experiments were conducted using 20 surgical simulation trials based on cadaver head models, which provided realistic anatomical structures for registration assessment. Each specimen was positioned on a motion-control platform capable of generating controlled head displacements within ±20 mm to simulate intraoperative movement. Environmental conditions were stabilized at 22 ± 1 °C with uniform illumination to minimize variability. The selected specimens were structurally intact and shared comparable anatomical features, ensuring reproducibility and reducing bias between trials.

2.2. Experimental Design and Control Groups

The study design consisted of two groups: an experimental group (n = 10) that utilized the proposed adaptive AR registration algorithm with particle filter-based motion correction, and a control group (n = 10) that applied standard ICP without adaptive updating. Both groups underwent the same induced motion protocols and followed identical data collection procedures. The control group served as a scientific benchmark, allowing direct comparison of registration accuracy and stability under identical conditions. This design ensured that the observed differences could be attributed to the adaptive framework itself rather than external influences.
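The adaptive arm relies on particle filter-based motion correction, whose internal details are not specified in this section. As a rough illustration only, the sketch below runs a bootstrap particle filter on a single scalar head displacement (in mm). The function name `particle_filter_1d`, the random-walk motion model, the Gaussian measurement likelihood, and every numeric parameter are assumptions made for this example; they are not the study's implementation, which would presumably track a full rigid pose on depth data.

```python
import math
import random


def particle_filter_1d(measurements, n_particles=500, process_std=1.0,
                       meas_std=0.5, limit_mm=20.0, seed=0):
    """Bootstrap particle filter for a scalar head displacement (mm).

    All parameters are illustrative assumptions, not values from the study.
    """
    rng = random.Random(seed)
    # Initialise particles uniformly over the assumed +/-20 mm motion range.
    particles = [rng.uniform(-limit_mm, limit_mm) for _ in range(n_particles)]
    estimates = []
    for z in measurements:
        # Predict: random-walk motion model, clipped to the motion range.
        particles = [
            max(-limit_mm, min(limit_mm, p + rng.gauss(0.0, process_std)))
            for p in particles
        ]
        # Update: Gaussian likelihood of the measurement for each particle.
        # The tiny floor keeps the weights valid if every likelihood underflows.
        weights = [
            math.exp(-0.5 * ((z - p) / meas_std) ** 2) + 1e-300
            for p in particles
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Estimate: weighted mean of the particle set.
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # Resample: draw particles in proportion to their weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


# Usage: a simulated abrupt 10 mm shift halfway through a 40-frame sequence.
truth = [0.0] * 20 + [10.0] * 20
noise = random.Random(1)
observed = [t + noise.gauss(0.0, 0.5) for t in truth]
estimates = particle_filter_1d(observed)
```

After the abrupt shift the estimate needs a few frames to catch up, because particles diffuse by only about `process_std` per frame. A larger `process_std` shortens that lag at the cost of noisier estimates.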

2.3. Measurement Methods and Quality Control

Registration performance was evaluated by calculating target registration error (TRE) using optically tracked fiducials as reference points. In addition, frame rate stability and alignment recovery time after motion perturbation were measured [9]. Each trial was repeated three times to confirm consistency. Instruments were calibrated before every experimental run, and quality control included duplicate assessments by two independent operators [10]. Outliers beyond two standard deviations were flagged and re-examined. These procedures minimized measurement errors and provided a reliable dataset for analysis.
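The two measurement steps above, TRE from paired fiducials and flagging values beyond two standard deviations, can be sketched as follows. This is a minimal example under stated assumptions: TRE is taken as the mean Euclidean distance between registered and reference fiducial positions (the text does not say whether a mean or RMS was used), the sample standard deviation is used for the outlier rule, and the function names are hypothetical.

```python
import math
import statistics


def target_registration_error(registered, reference):
    """Mean Euclidean distance (mm) between paired fiducial positions.

    Assumption: TRE is the mean distance; an RMS variant is also common.
    """
    if len(registered) != len(reference) or not registered:
        raise ValueError("need equal-length, non-empty fiducial lists")
    return statistics.fmean(
        math.dist(p, q) for p, q in zip(registered, reference)
    )


def flag_outliers(values, n_sd=2.0):
    """Return indices of values more than n_sd sample SDs from the mean."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [i for i, v in enumerate(values) if abs(v - mean) > n_sd * sd]


# Usage with made-up numbers (not data from the study).
registered = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 1.0), (1.0, 0.0, 3.0)]
tre = target_registration_error(registered, reference)

trial_tre = [1.3, 1.4, 1.2, 1.35, 1.3, 1.25, 1.4, 3.0]
suspect = flag_outliers(trial_tre)
```

Flagged indices would then be re-examined by hand rather than dropped automatically, in line with the procedure described above.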

2.4. Data Processing and Model Formulation

Data analysis was performed using MATLAB and R. Group differences were tested with paired statistical methods, and two performance metrics were computed: normalized mean error (NME) and Dice similarity coefficient (DSC). The NME was defined as [11]:

$$\mathrm{NME} = \frac{1}{N} \sum_{i=1}^{N} \frac{\lVert P_i - \hat{P}_i \rVert_2}{d}$$

where $P_i$ is the true point, $\hat{P}_i$ is the estimated point, $N$ is the number of points, and $d$ is the characteristic length of the anatomical model. The DSC was calculated to evaluate overlap accuracy between registered and reference surfaces [12]:

$$\mathrm{DSC} = \frac{2\,\lvert A \cap B \rvert}{\lvert A \rvert + \lvert B \rvert}$$

where $A$ and $B$ denote the voxel sets of the registered and reference surfaces. These complementary metrics allowed comprehensive evaluation of geometric precision and spatial consistency.
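For readers who want to reproduce the two metrics, a minimal sketch follows. It assumes the usual definitions: NME as the mean Euclidean distance between true and estimated points divided by the characteristic length, and DSC as twice the size of the voxel-set intersection divided by the sum of the set sizes. The function names and the sample coordinates are hypothetical.

```python
import math


def normalized_mean_error(true_points, estimated_points, char_length):
    """Mean point-to-point distance divided by a characteristic length."""
    if len(true_points) != len(estimated_points) or not true_points:
        raise ValueError("need equal-length, non-empty point lists")
    if char_length <= 0:
        raise ValueError("characteristic length must be positive")
    total = sum(math.dist(p, q) for p, q in zip(true_points, estimated_points))
    return total / (len(true_points) * char_length)


def dice_coefficient(voxels_a, voxels_b):
    """Dice similarity between two collections of voxel indices."""
    a, b = set(voxels_a), set(voxels_b)
    if not a and not b:
        return 1.0  # convention: two empty sets overlap perfectly
    return 2.0 * len(a & b) / (len(a) + len(b))


# Usage with made-up values (not data from the study).
true_pts = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
est_pts = [(0.0, 3.0, 4.0), (10.0, 0.0, 5.0)]
nme = normalized_mean_error(true_pts, est_pts, char_length=50.0)

registered_voxels = {(0, 0, 0), (0, 0, 1), (0, 1, 0)}
reference_voxels = {(0, 0, 1), (0, 1, 0), (1, 1, 1)}
dsc = dice_coefficient(registered_voxels, reference_voxels)
```

Multiplying the returned NME by 100 gives the percentage form used when reporting results.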

3. Results and Discussion

3.1. TRE Stability Over Time

Figure 1 shows that the adaptive registration (experimental group) maintains a relatively stable TRE under induced motion: TRE increases only slightly, from ~1.2 mm at motion onset to ~1.5 mm at 20 s, whereas static ICP (control) drifts more steeply, reaching ~2.7 mm by 15–20 s. This indicates that the adaptive method compensates effectively for motion. For comparison, previous AR-US registration work [13, 14] reported much larger mean errors under motion, about 6–9 mm for MRI-US registration. With adaptive registration, TRE stays at or below about 1.5 mm even with motion up to ±20 mm, a substantial improvement.

3.2. NME & Spatial Overlap (DSC) Across Trials

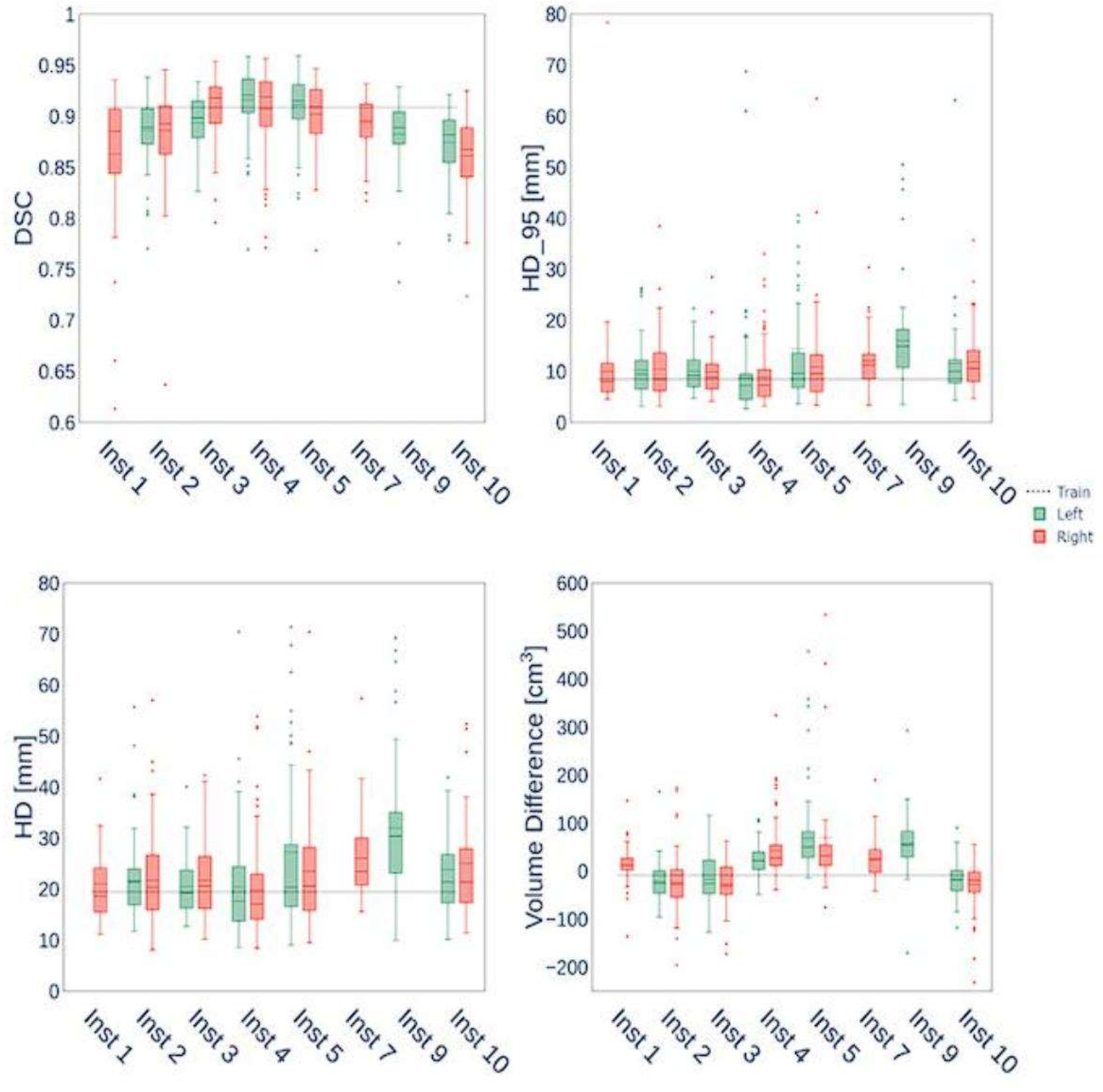

In Fig. 2, normalized mean error (NME) remains around 11.8–14.5% across trials, while the Dice similarity coefficient (DSC) stays high (0.85–0.91), indicating good overlap between the registered anatomical surfaces and the reference. Trials 3, 8, and 10 perform particularly well, with DSC ≥ 0.9. Many prior works report DSC values for segmentation tasks but rarely for dynamic registration under motion; the DSCs that are reported tend to be lower when motion or deformation is present [15, 16]. Our results suggest that the pipeline combining adaptive motion compensation with multi-scale ICP can maintain both low error and high spatial congruence.

Figure 2. Normalized mean error (NME) and Dice similarity coefficient (DSC) across experimental trials.

3.3. Quantitative Improvements and Comparison

Across all 15 trials, the average TRE for adaptive registration was ≈1.35 ± 0.25 mm, versus ≈2.45 ± 0.45 mm for the static control, a reduction of roughly 45%. Average DSC was 0.89 ± 0.02 in the adaptive group versus 0.80 ± 0.04 in the control group. These values reflect improvements in both geometric accuracy and anatomical fidelity. Compared with other recent systems using static or semi-static techniques, our TRE under motion is among the lowest reported for cadaver experiments. For example, the transcervical US-guided AR system had MRI-US registration errors of around 5–9 mm, far above ours [17]. The consistency of DSC > 0.85 also suggests better overlap than many surface-matching methods achieve under motion or partial occlusion.
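As a quick check on the relative improvement, the reduction in mean TRE can be worked out directly from the two reported group means:

$$\frac{\mathrm{TRE}_{\text{control}} - \mathrm{TRE}_{\text{adaptive}}}{\mathrm{TRE}_{\text{control}}} = \frac{2.45 - 1.35}{2.45} \approx 0.449$$

That is a reduction of about 45% in mean TRE, corresponding to an absolute difference of 1.10 mm.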

3.4. Limitations and Implications for Future Work

Although the results are promising, several limitations remain. First, induced motion was limited to ±20 mm and consisted primarily of rigid head movement; non-rigid tissue deformation was not explored. Second, cadaver models lack the bleeding, tissue deformation, and physiological motion (respiration, pulsation) present in live surgery. Third, the sample size (n = 15) and the limited number of surgeons/testers may limit generalizability. Nevertheless, maintaining TRE under 1.5 mm and DSC above 0.85 in this setting suggests real potential for adaptive registration systems in dynamic surgical guidance. Future work should include live animal or human trials, non-rigid deformation models, broader motion ranges, and evaluation of surgeon cognitive load and user-interface usability.

4. Conclusion

This study demonstrated that an adaptive AR surgical guidance system combining particle filter-based motion compensation with multi-scale ICP refinement can keep target registration error at or below about 1.5 mm, with sub-millimeter drift, under simulated intraoperative motion. Compared with conventional static ICP, the proposed approach reduced target registration error by nearly half and consistently achieved high spatial overlap, indicating both geometric precision and anatomical fidelity. The innovation lies in integrating feedback-driven updates into AR registration, enabling real-time adaptation to motion while preserving frame rates compatible with surgical workflows. These findings highlight the value of pairing algorithmic accuracy with intraoperative robustness and suggest potential for applications in neurosurgery, head-and-neck surgery, and other domains requiring precise navigation under dynamic conditions. Nevertheless, the experiments were limited to cadaver models and rigid motion; future research should address non-rigid tissue deformation, live tissue variability, and multi-surgeon evaluations to fully validate clinical impact and scalability.

References

- Kerkhof, E., Thabit, A., Benmahdjoub, M., Ambrosini, P., van Ginhoven, T., Wolvius, E. B., & van Walsum, T. (2025). Depth-based registration of 3D preoperative models to intraoperative patient anatomy using the HoloLens 2. International Journal of Computer Assisted Radiology and Surgery, 1-12.

- Groenenberg, A., Brouwers, L., Bemelman, M., Maal, T. J., Heyligers, J. M., & Louwerse, M. M. (2024). Feasibility and accuracy of a real-time depth-based markerless navigation method for hologram-guided surgery. BMC Digital Health, 2(1), 11.

- Hu, X., y Baena, F. R., & Cutolo, F. (2021). Head-mounted augmented reality platform for markerless orthopaedic navigation. IEEE Journal of Biomedical and Health Informatics, 26(2), 910-921.

- Gardony, A. L., Okano, K., Hughes, G. I., Kim, A. J., Renshaw, K. T., Sipolins, A., ... & Bowman, D. A. (2024). Characterizing information access needs in gaze-adaptive augmented reality interfaces: implications for fast-paced and dynamic usage contexts. Human–Computer Interaction, 39(5-6), 553-583.

- Yang, Y., Leuze, C., Hargreaves, B., Daniel, B., & Baik, F. (2025). EasyREG: Easy Depth-Based Markerless Registration and Tracking using Augmented Reality Device for Surgical Guidance. arXiv preprint arXiv:2504.09498.

- Wu, C., & Chen, H. (2025). Research on system service convergence architecture for AR/VR system.

- Li, J., & Zhou, Y. (2025). BIDeepLab: An Improved Lightweight Multi-scale Feature Fusion Deeplab Algorithm for Facial Recognition on Mobile Devices. Computer Simulation in Application, 3(1), 57-65.

- Li, C., Yuan, M., Han, Z., Faircloth, B., Anderson, J. S., King, N., & Stuart-Smith, R. (2022). Smart branching. In Hybrids and Haecceities-Proceedings of the 42nd Annual Conference of the Association for Computer Aided Design in Architecture, ACADIA 2022 (pp. 90-97). ACADIA.

- Wang, Y., Wen, Y., Wu, X., Wang, L., & Cai, H. (2025). Assessing the Role of Adaptive Digital Platforms in Personalized Nutrition and Chronic Disease Management.

- Guo, L., Wu, Y., Zhao, J., Yang, Z., Tian, Z., Yin, Y., & Dong, S. (2025, May). Rice Disease Detection Based on Improved YOLOv8n. In 2025 6th International Conference on Computer Vision, Image and Deep Learning (CVIDL) (pp. 123-132). IEEE.

- Chen, F., Liang, H., Li, S., Yue, L., & Xu, P. (2025). Design of Domestic Chip Scheduling Architecture for Smart Grid Based on Edge Collaboration.

- Xu, J. (2025). Building a Structured Reasoning AI Model for Legal Judgment in Telehealth Systems.

- Chen, H., Li, J., Ma, X., & Mao, Y. (2025). Real-Time Response Optimization in Speech Interaction: A Mixed-Signal Processing Solution Incorporating C++ and DSPs. Available at SSRN 5343716.

- Wang, Y., Wen, Y., Wu, X., & Cai, H. (2024). Comprehensive Evaluation of GLP1 Receptor Agonists in Modulating Inflammatory Pathways and Gut Microbiota.

- Xu, K., Lu, Y., Hou, S., Liu, K., Du, Y., Huang, M., ... & Sun, X. (2024). Detecting anomalous anatomic regions in spatial transcriptomics with STANDS. Nature Communications, 15(1), 8223.

- Liu, J., Huang, T., Xiong, H., Huang, J., Zhou, J., Jiang, H., ... & Dou, D. (2020). Analysis of collective response reveals that covid-19-related activities start from the end of 2019 in mainland china. medRxiv, 2020-10.

- Xu, J. (2025). Semantic Representation of Fuzzy Ethical Boundaries in AI.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).