1. Introduction

Shannon’s Mathematical Theory of Communication [1] presumes a unidirectional, forward flow of information from transmitter to receiver. Time is treated as an external ordering parameter along which signals propagate, with a fixed and globally well-defined causal order. The framework is therefore silent on regimes in which the direction of time may be indefinite or even reversible. Such regimes, once thought purely speculative, now appear in quantum information theory and quantum foundations [2,3,4,5,6,7,8,9].

To restore symmetry without invoking quantum superposition, we replace the one-way channel with a bidirectional Shannon channel: every forward symbol is immediately echoed on a reverse channel as a symbol-level acknowledgement. In the ideal deterministic (noise-free) limit, the receiver’s information gain about the forward message is exactly matched by the sender’s information gain from the echo, so that over a complete forward–echo cycle the net mutual information across the pair is zero while both endpoints attain certainty. We call this Perfect Information Feedback (PIF).

We cast the bidirectional echo channel as an instance of Alternating Causality (AC): a reversible, symmetric process whose deterministic microdynamics alternate sender/receiver roles according to a fixed schedule. A single causal transmission collapses an oscillating arrow of causality into one of four directions: Alice to Alice, Alice to Bob, Bob to Alice, Bob to Bob. The AC/PIF primitive avoids baking a global forward-in-time assumption into the model; any macroscopic arrow emerges from boundary conditions and control objectives. Physically, one can view AC as the classical, buildable analogue of time-symmetric “mirror” interactions (e.g., photon reflections), but our formal results rely only on the deterministic alternation, making the construction auditable and composable at network scale.

2. From Minkowski Spacetime to Alternating Causality

In Minkowski spacetime, time-separated events are represented along the $ct$ axis, while spatial separations are measured along $x$, $y$, and $z$. In this framing, “instants” are meaningless: a single point in spacetime carries no temporal reality independent of the intervals that connect it to others. An interval in Minkowski spacetime,

$$\Delta s^2 = c^2\,\Delta t^2 - \Delta x^2 - \Delta y^2 - \Delta z^2, \tag{1}$$

is therefore not the same as the interval measured on a local clock. Only the invariant proper time $\tau$, defined along a timelike worldline by

$$d\tau^2 = dt^2 - \frac{1}{c^2}\left(dx^2 + dy^2 + dz^2\right), \tag{2}$$

corresponds to the physical time experienced by an observer. For a photon, however, the proper time is identically zero:

$$d\tau = 0. \tag{3}$$

A photon therefore experiences no passage of time between emission and absorption. Its worldline connects two events without internal duration, leaving its “distance traveled” undefined from the photon’s own perspective. The frequency $\nu$ of light conveys energy ($E = h\nu$), not elapsed time; only ensembles of photons, averaged statistically, generate measurable temporal frequencies [10].
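The distinction between a timelike and a lightlike separation can be checked numerically. The following is a minimal sketch, assuming the standard special-relativistic relation $d\tau^2 = dt^2 - (dx^2+dy^2+dz^2)/c^2$; the function name `proper_time` is illustrative and not taken from the paper.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def proper_time(dt, dx, dy=0.0, dz=0.0, c=C):
    """Proper time (s) between two events separated by dt seconds
    and (dx, dy, dz) metres. Assumes flat (Minkowski) spacetime."""
    tau_squared = dt**2 - (dx**2 + dy**2 + dz**2) / c**2
    if tau_squared < 0:
        raise ValueError("spacelike separation: no proper time is defined")
    return math.sqrt(tau_squared)

# A clock at rest for one second accrues one second of proper time.
print(proper_time(1.0, 0.0))
# A clock moving at 0.6c accrues less proper time over the same coordinate second.
print(proper_time(1.0, 0.6 * C))
# A photon covering one light-second in one second accrues none.
print(proper_time(1.0, C))
```

The last call is the lightlike case discussed above: the coordinate interval is nonzero, yet the proper time vanishes.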

In general relativity, causal structure is encoded geometrically through causal diamonds: regions defined by the intersection of the future of one event and the past of another [2,3]. Each diamond represents the set of all spacetime points that can both influence and be influenced between two events. While such constructions elegantly capture local causal order, they are insufficient to describe processes where causal order itself becomes indefinite or reversible.

Recent developments in quantum foundations have challenged this classical assumption. Work by Oreshkov, Brukner, and collaborators has shown that quantum theory can accommodate processes in which the order of operations is not fixed but exists in superposition, a regime known as indefinite causal order (ICO) [4,5,6,7]. In the ICO framework, operations performed by two parties A and B may coexist coherently in both $A \prec B$ and $B \prec A$ configurations. Such processes are represented not by a single causal relation but by a higher-order process matrix, which encodes all admissible temporal configurations consistent with local quantum operations.

2.1. The Photon Clock Model

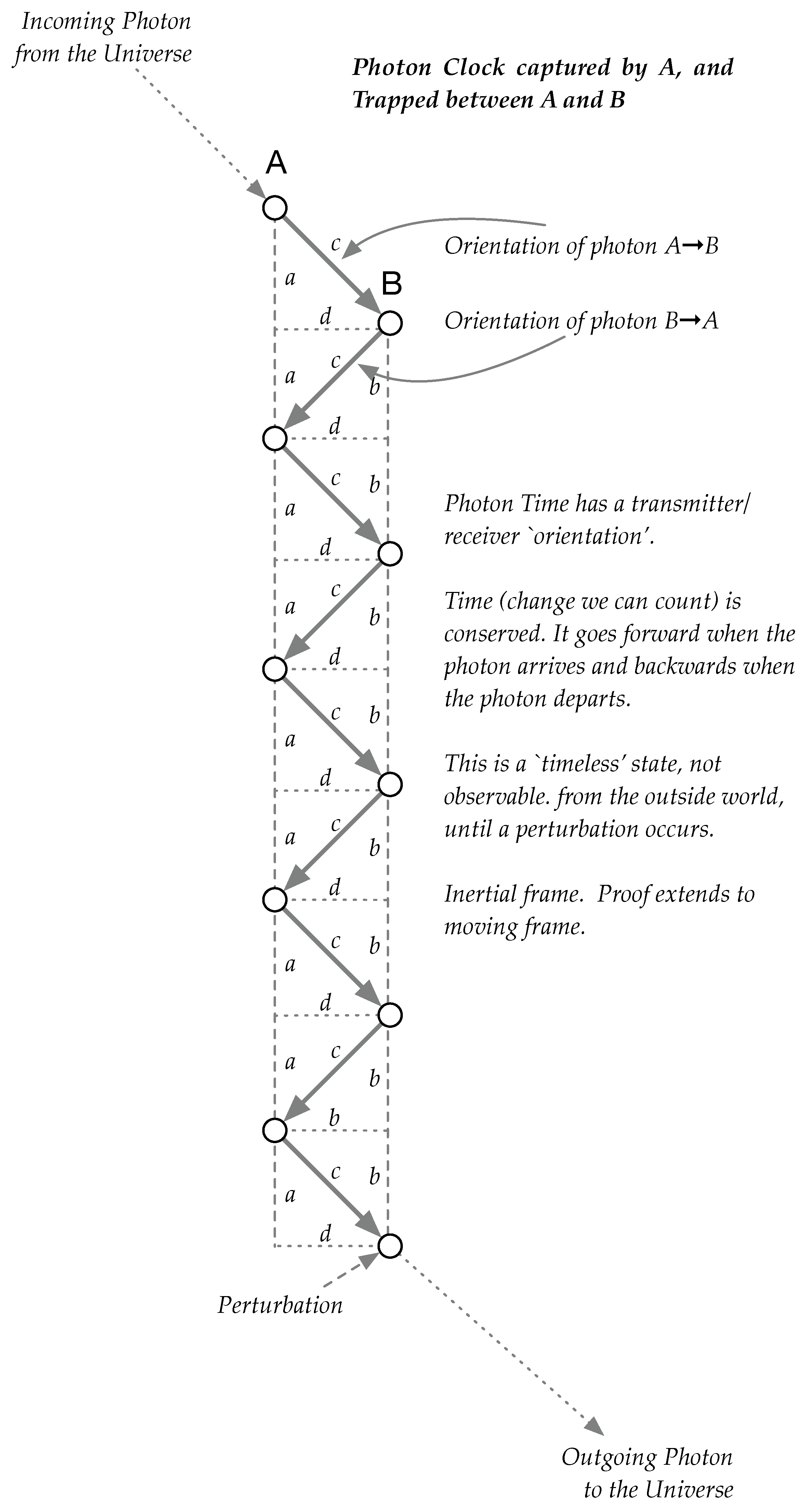

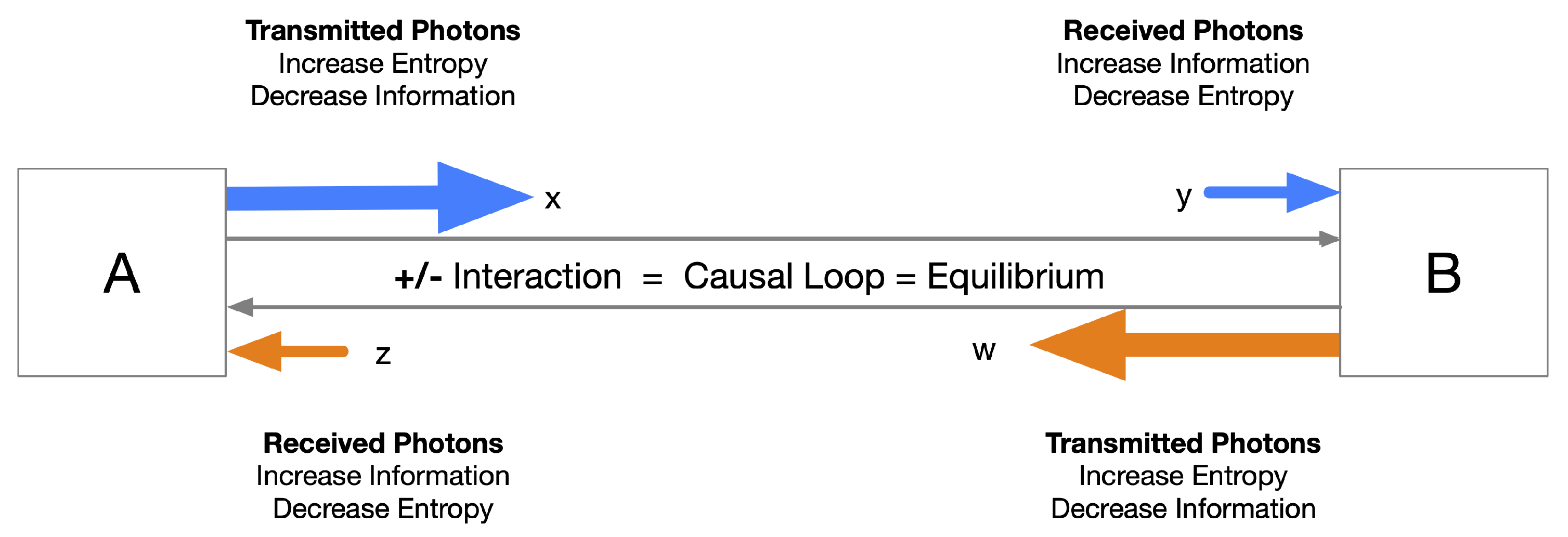

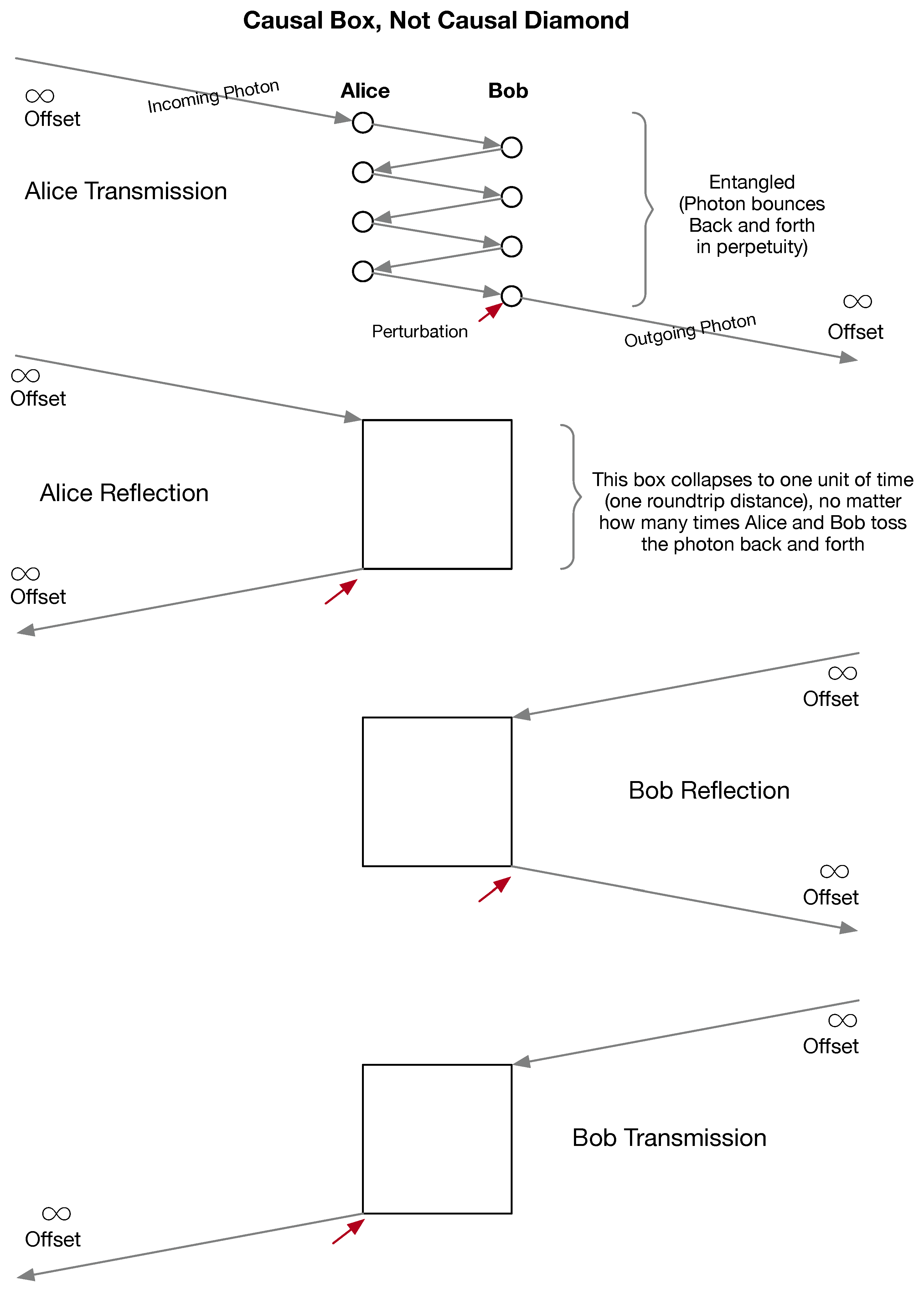

To operationalize this idea within a relativistic setting, consider a single photon confined between two ideal mirrors, representing observers A (Alice) and B (Bob). Each reflection constitutes a lightlike exchange event. As long as the photon remains trapped, the system exists in an alternating causality regime: causal influence oscillates reversibly between A and B, without a definite direction of time.

Figure 1. A photon confined between two mirrors forms a closed causal system.

When Alice first encounters the photon, she has no knowledge of how far or for how long it has traveled. She reflects it perfectly toward Bob, reversing both momentum and phase. From her standpoint, no local time elapses between emission and reception. The photon’s worldline is a single, lightlike event with zero proper duration. When Bob receives and reflects the photon, he too possesses no information about Alice’s existence, only that a photon arrived with proper time zero. Only after multiple exchanges can either observer infer a round-trip duration using their own local clock. This measured interval defines an emergent geometric separation between A and B, effectively reconstructing spacetime distance from the statistics of information exchange [8,9].

The confined photon and its two mirrors together form an entangled, time-symmetric system. As long as the photon remains within, its causal configuration is reversible; no absolute ordering between Alice and Bob exists. When a perturbation allows the photon to escape by absorption, decoherence, or asymmetry, the system’s causal symmetry collapses. The external observer then perceives one of four possible classical configurations, corresponding to distinct, definite arrows of time.

This photon-clock model thus provides a minimal physical realization of indefinite causal order. It extends the causal-diamond construction by embedding within it a reversible, quantum-causal interior termed a “causal box.” When projected outward, the box behaves as a single classical causal diamond with a fixed orientation; internally, however, it encodes a superposition of time directions. Alternating Causality (AC) therefore serves as a bridge between general relativity’s local causal geometry and quantum theory’s process-based indeterminacy.

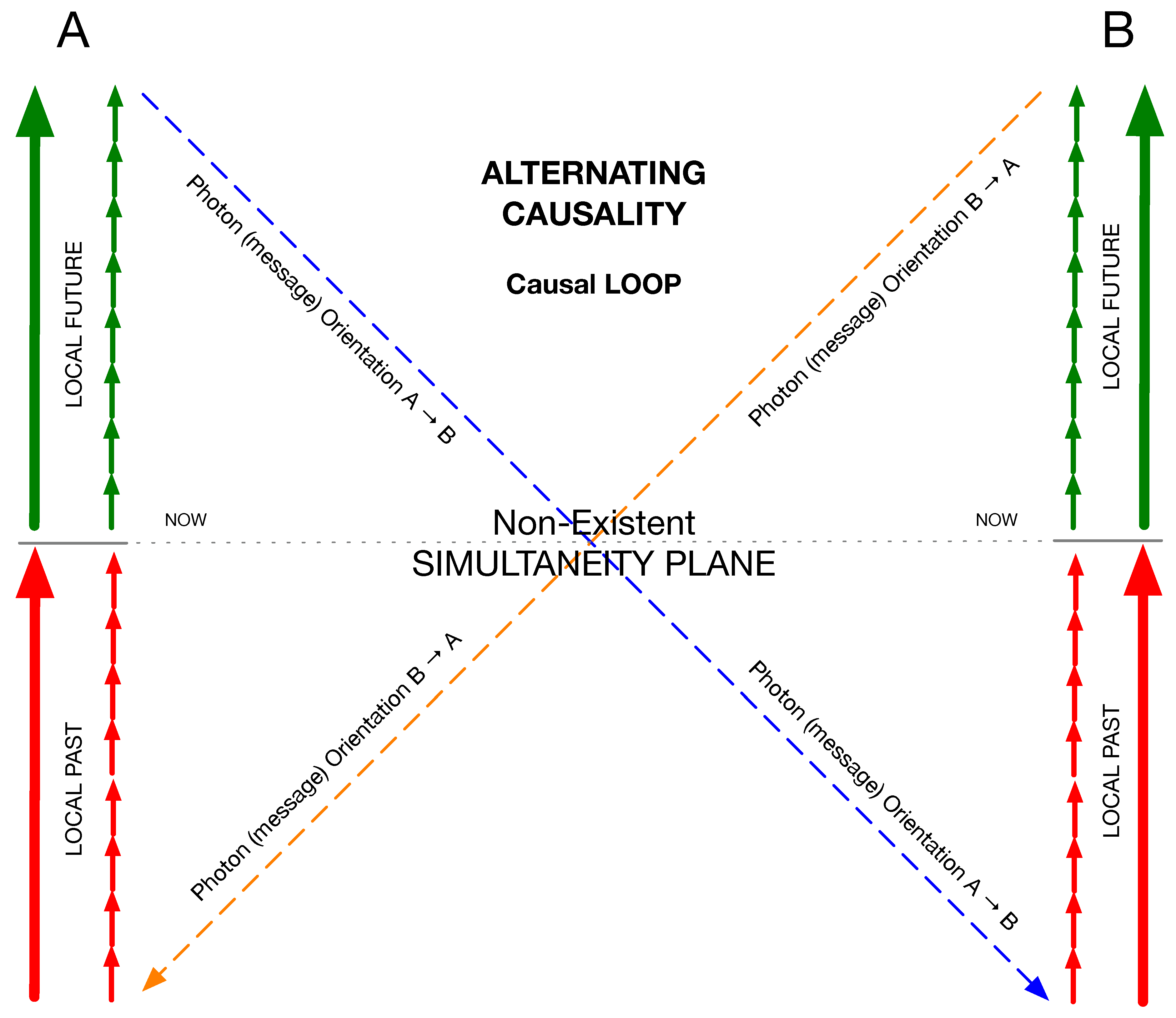

A perturbation, such as absorption, decoherence, or asymmetry, breaks this interaction, collapsing the system into a definite causal order (Figure 2). The alternating forward–backward structure of influence can be represented geometrically as two joined causal triangles (Fig. ??), capturing the oscillatory exchange of causal orientation between A and B.

2.2. Barukčić Triangles, Radar Time, and the Non-Existence of a Simultaneity Plane

Consider a single photon bouncing back and forth between two timelike worldlines A (Alice) and B (Bob). Each bounce is the junction of two null legs: $A \to B$ and $B \to A$. Between Alice’s emission and her reception, all causal communication across the gap is lightlike; the only timelike accumulation occurs on the local clocks along A and B.

From Alice’s perspective, if the emission and echo-reception occur at proper times $\tau_e$ and $\tau_r$, the radar-time construction assigns the reflection event R the coordinates (in units with $c = 1$)

$$t_R = \tfrac{1}{2}\left(\tau_r + \tau_e\right), \qquad r_R = \tfrac{1}{2}\left(\tau_r - \tau_e\right), \tag{4}$$

or, in light-cone form, $u = t_R - r_R$, $v = t_R + r_R$. The only invariant extracted from this single echo is

$$\tau_A^2 = u\,v = t_R^2 - r_R^2. \tag{5}$$

Where:

$\tau_e$: Alice’s proper time at the emission event: when the photon leaves her worldline toward Bob.

$\tau_r$: Alice’s proper time at the reception event: when the reflected photon returns from Bob.

$t_R$: the radar time coordinate of the reflection event R, defined as the midpoint between emission and reception times on Alice’s clock.

$r_R$: the radar distance to the reflection event R, equal to half the round-trip light time expressed in light-seconds.

$u$: the forward light-cone coordinate, representing the outgoing null leg ($A \to B$).

$v$: the backward light-cone coordinate, representing the return null leg ($B \to A$).

$\tau_A$: Alice’s proper-time interval accumulated between emission and reception; the invariant tick of her local clock satisfying $\tau_A^2 = u\,v$.

The invariant $\tau_A$ is the proper-time tick that Alice’s clock accrues over the closed send–reflect–receive loop. An identical statement holds for Bob with $\tau_B^2 = u_B\,v_B$. Thus, the null legs and the local proper-time altitudes are the physically meaningful pieces.
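The radar construction can be made concrete with a short numerical sketch. It assumes the conventional radar-time definitions in units with $c = 1$ (midpoint time, half round-trip distance, light-cone coordinates as difference and sum); the names `radar_coordinates`, `tau_e`, and `tau_r` are illustrative choices, not notation confirmed by the source.

```python
import math

def radar_coordinates(tau_e, tau_r):
    """Radar chart of a reflection event from one observer's emission
    time tau_e and echo-reception time tau_r (units with c = 1)."""
    if tau_r < tau_e:
        raise ValueError("the echo cannot return before it is emitted")
    t_R = 0.5 * (tau_r + tau_e)   # radar time: midpoint on the local clock
    r_R = 0.5 * (tau_r - tau_e)   # radar distance: half the round trip
    u = t_R - r_R                 # forward light-cone coordinate (equals tau_e)
    v = t_R + r_R                 # backward light-cone coordinate (equals tau_r)
    return {"t_R": t_R, "r_R": r_R, "u": u, "v": v,
            "invariant": u * v,            # equals t_R**2 - r_R**2
            "tick": math.sqrt(u * v)}      # geometric mean of u and v

chart = radar_coordinates(tau_e=3.0, tau_r=5.0)
print(chart)
```

For emission at 3 and reception at 5, the chart gives a midpoint time of 4, a radar distance of 1, and an invariant product of 15, which matches $t_R^2 - r_R^2 = 16 - 1$.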

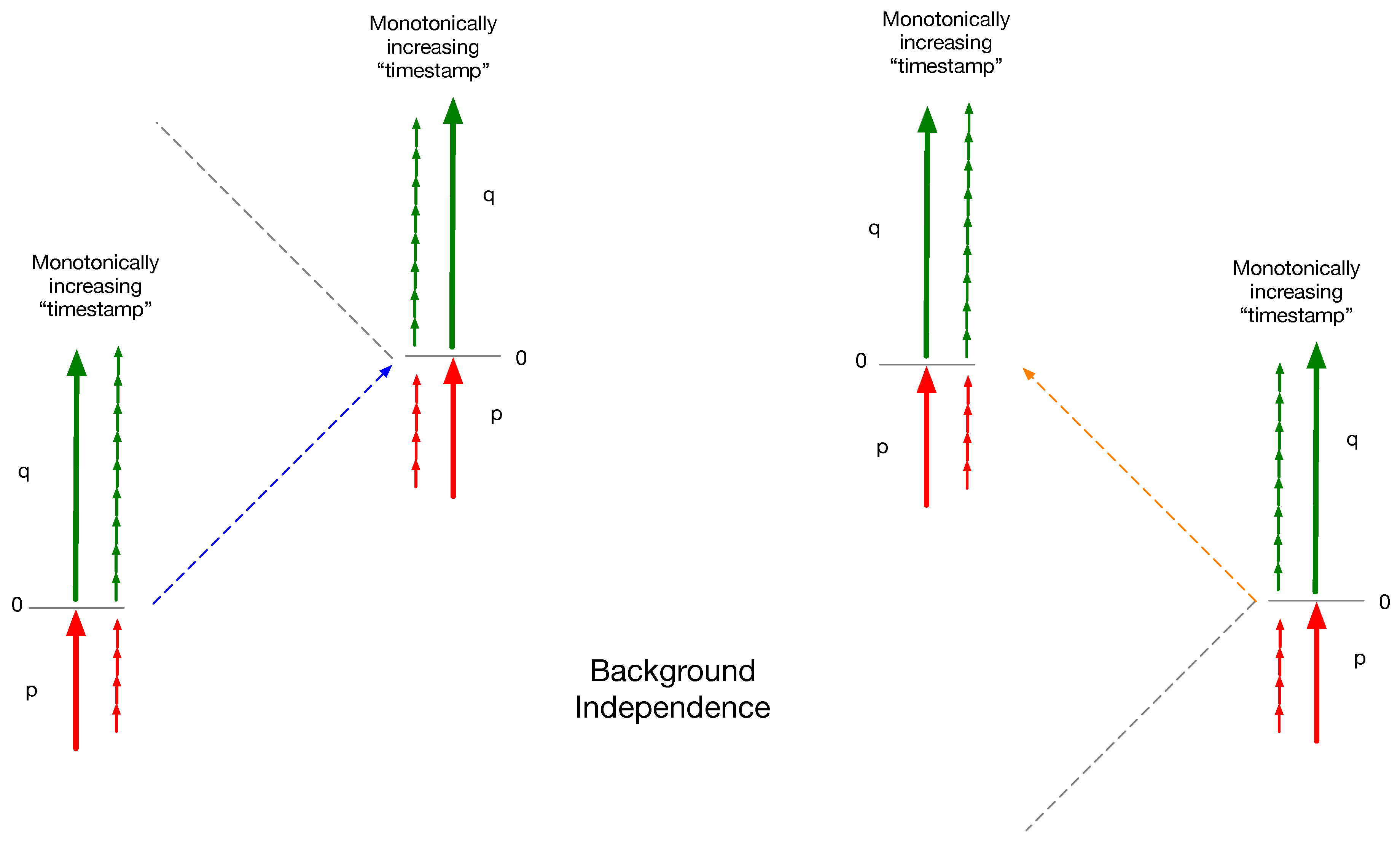

Figure 3. Each event is registered only against its own local clock: the emission and reception of a photon define proper-time intervals for the participating observers, but not a shared global schedule. No observer can assert that distant clocks advance in lock step with a single transmission or echo; the notion of simultaneity exists only within each worldline’s local frame.

A “simultaneity plane” would be a spacelike slice that intersects both worldlines between emission and reception and is supposed to represent a shared “now.” Inside the causal diamond of the echo, there is no observer-independent way to pick such a slice. Any slice of simultaneity must be defined by a foliation, e.g., $t = \text{const}$ for some inertial frame. Lorentz boosts tilt this foliation: one null leg appears longer while the other appears shorter, so which pair of points on A and B are “simultaneous” changes with the frame. The diamond’s null boundaries are preserved; a spacelike simultaneity cut is not.

The only operational way for Alice to nominate a “midpoint time” for R is the average of her emission and reception times, $\tfrac{1}{2}(\tau_e + \tau_r)$. Bob can do the same on his clock. These constructions are private coordinate choices: they depend on each party’s synchronization convention and, in general, yield different slices. Nothing inside the two-party photon exchange selects one foliation over another. Physically, the interior dynamics oscillate their causal orientation: $A \to B$ on one leg, $B \to A$ on the next. That alternation supplies a discrete global order (bounce number) but not a continuous, frame-invariant “time sheet” spanning both worldlines.

The midpoint averages computed by Alice and by Bob are perfectly meaningful as local coordinates (they define each party’s radar chart), but they have no observer-independent status between the parties. They cannot be promoted to a common “now” because a change of inertial frame changes which distant events are simultaneous. By contrast, the products of each party’s forward and backward light-cone coordinates, $u_A v_A$ and $u_B v_B$ (and the count of bounces), are invariant and exhaust what the echo itself can certify.
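The contrast between frame-dependent simultaneity and the invariant light-cone product can be illustrated with a one-dimensional Lorentz boost. This is a sketch under standard special relativity in units with $c = 1$; the helper `boost` and the sample events are hypothetical.

```python
import math

def boost(t, x, beta):
    """Lorentz-boost the event (t, x) into a frame moving at velocity beta (c = 1)."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return gamma * (t - beta * x), gamma * (x - beta * t)

# Two events that share t = 4 in Alice's rest frame:
# a tick on her own worldline and the reflection event one light-second away.
on_alice = (4.0, 0.0)
reflection = (4.0, 1.0)

t1, x1 = boost(*on_alice, beta=0.6)
t2, x2 = boost(*reflection, beta=0.6)

# The events are no longer simultaneous after the boost.
print(t1, t2)

# The light-cone coordinates of the reflection rescale in opposite directions,
# so their product is unchanged.
u, v = reflection[0] - reflection[1], reflection[0] + reflection[1]
u_boosted, v_boosted = t2 - x2, t2 + x2
print(u * v, u_boosted * v_boosted)
```

The boosted times differ (5 versus 4.25 for `beta = 0.6`), while the product of the light-cone coordinates stays at 15 up to floating-point rounding.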

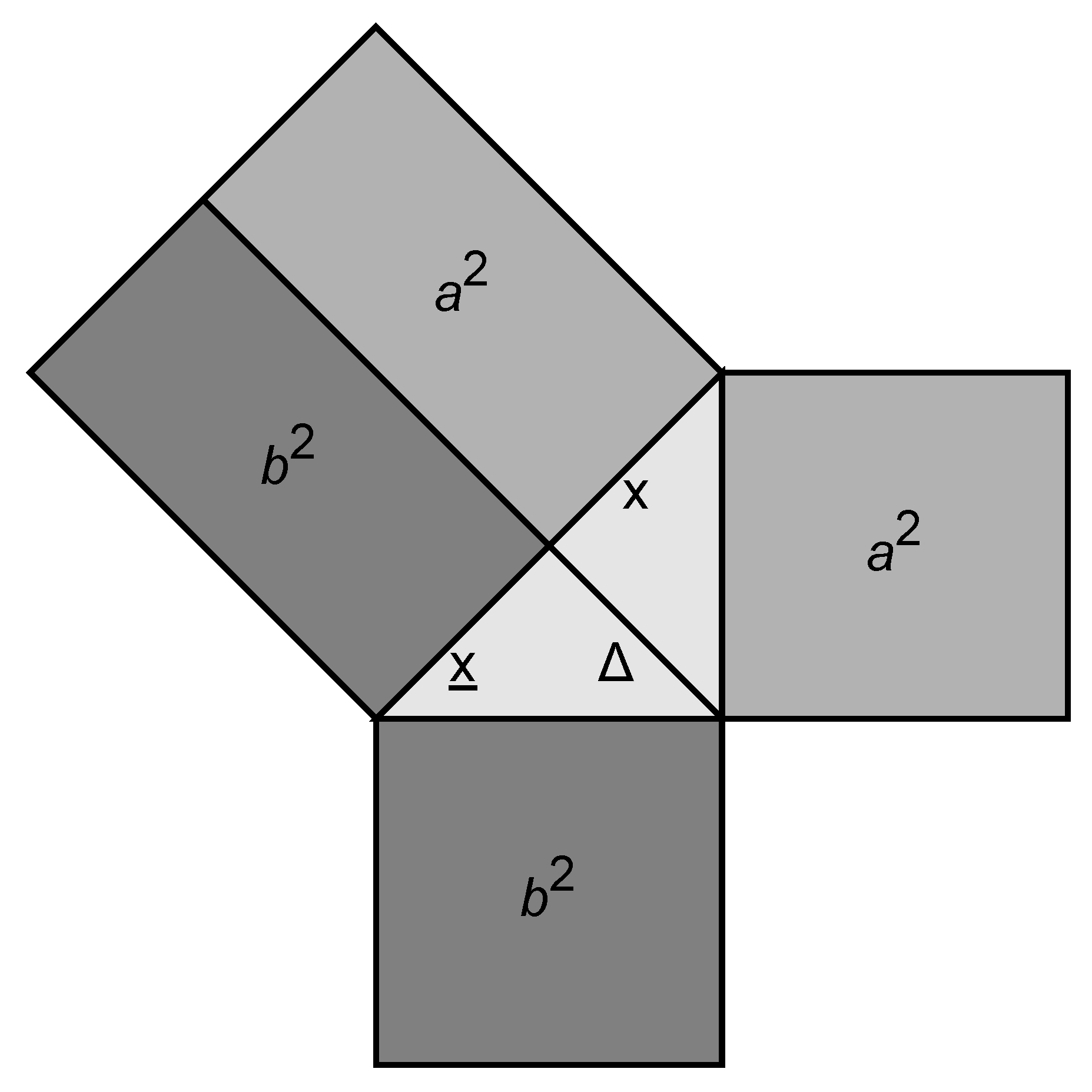

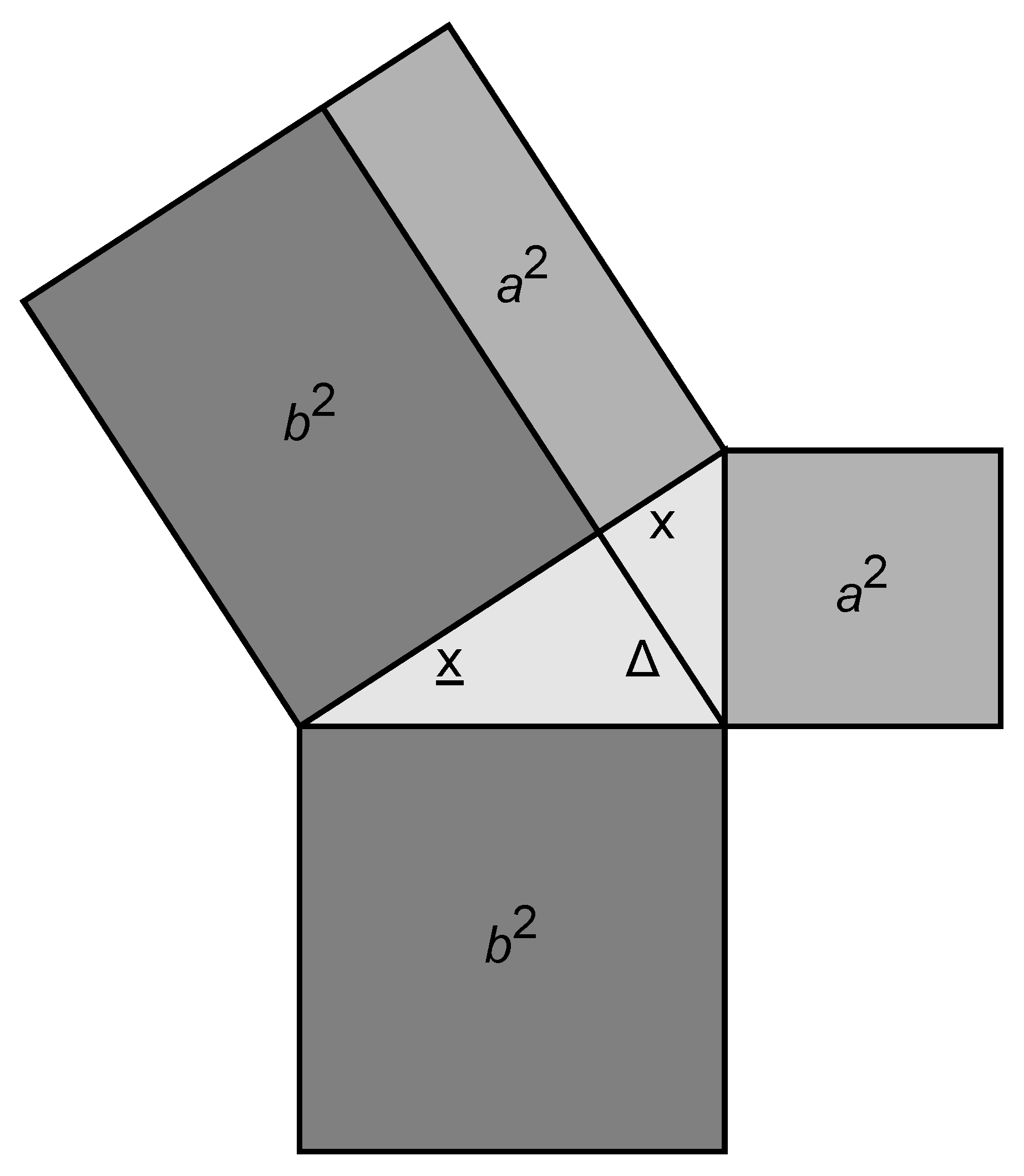

2.3. Construction (Barukčić Triangle)

To visualize the invariant relationship between Alice’s local proper time and the photon’s forward–backward propagation, we recast the radar-time equations into a simple geometric form. The idea is to represent the two null exchanges ($A \to B$ and $B \to A$) as the sides of a right triangle, and Alice’s proper-time interval as its altitude. In this construction, the photon’s outward and return legs define the boundaries of a causal diamond, while the altitude corresponds to the invariant tick of Alice’s local clock. The resulting triangle provides a Euclidean analogue of the Minkowski relation $\tau_A^2 = u\,v$ between Alice’s proper-time tick and her light-cone coordinates, allowing the null and timelike components of a single photon exchange to be expressed in one compact geometric identity.

Draw a right triangle whose hypotenuse is partitioned into two contiguous segments of lengths

$$p = u, \qquad q = v. \tag{6}$$

Drop the altitude $h$ from the right angle to the hypotenuse. By the right-triangle altitude (geometric-mean) theorem,

$$h^2 = p\,q. \tag{7}$$

Comparing (7) with (5), we identify

$$h = \tau_A = \sqrt{u\,v}. \tag{8}$$

Where:

$u$: the forward light-cone coordinate measured on Alice’s clock, corresponding to the photon’s outgoing leg ($A \to B$). It represents the null interval from emission to reflection as perceived by Alice.

$v$: the backward light-cone coordinate measured on Alice’s clock, corresponding to the photon’s return leg ($B \to A$). It represents the null interval from reflection to reception.

$h$: the altitude of the right triangle, dropped from the right angle to the hypotenuse. It represents the geometric mean of the two null intervals and corresponds physically to Alice’s proper-time tick between emission and reception: $h = \sqrt{u\,v} = \tau_A$.

$\tau_A$: the proper-time interval accumulated on Alice’s worldline between the emission and reception events. It is the invariant part of the exchange, equal to the altitude of the triangle.

$p, q$: the two contiguous segments of the hypotenuse, together forming the radar-defined worldline interval between the two null legs. They encode the forward and backward propagation times of the photon as projected onto Alice’s clock.
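The geometric-mean property used in this construction is easy to verify numerically. The sketch below places the hypotenuse on the horizontal axis, puts the apex at height $\sqrt{pq}$ above the point that splits it into segments $p$ and $q$, and checks that the apex angle is a right angle. The function names are illustrative, and the sample values 3 and 5 are arbitrary.

```python
import math

def altitude(p, q):
    """Altitude onto the hypotenuse of a right triangle whose hypotenuse
    is split into segments p and q (geometric-mean theorem)."""
    return math.sqrt(p * q)

def apex_is_right_angle(p, q, h, tol=1e-12):
    """Check the angle at the apex (p, h) of the triangle with
    hypotenuse endpoints (0, 0) and (p + q, 0)."""
    to_left = (-p, -h)    # vector from the apex to (0, 0)
    to_right = (q, -h)    # vector from the apex to (p + q, 0)
    dot = to_left[0] * to_right[0] + to_left[1] * to_right[1]
    return abs(dot) <= tol * max(1.0, p * q)

p, q = 3.0, 5.0           # e.g. forward and backward light-cone coordinates
h = altitude(p, q)
print(h, apex_is_right_angle(p, q, h))
```

Any other height for the apex fails the right-angle check, which is why the altitude is fixed by the two hypotenuse segments alone.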

Tiling the worldsheet with back-to-back copies of this triangle (forward and return) as in Figure 4 gives a photon clock: each bounce contributes one invariant “tick” equal to the altitude $h = \tau_A$. Repeating the same construction using Bob’s times $(u_B, v_B)$ yields an analogous triangle with altitude $\tau_B$. In a stationary two-mirror configuration $\tau_A = \tau_B$, but the mapping $(u, v) \mapsto \sqrt{u\,v}$ remains invariant in general because (5) is local to each radar cycle.

Figure 5. Non-inertial frame: the photon clock experiences asymmetric bounces.

Figure 6. Inertial frame: two local clocks in uniform motion, no global simultaneity.

This construction emphasizes that the photon exchange defines only local relations between emission and reception events. Each worldline measures its own proper-time altitude $\tau_A$ or $\tau_B$, derived from its own pair of null intervals $(u_A, v_A)$ or $(u_B, v_B)$. However, no single “horizontal” plane of simultaneity can be drawn through the triangle that both observers would agree upon. The forward and backward null legs constrain what each observer can signal or know, but not how distant clocks advance in relation to one another. The midpoint of a round-trip echo, defined by the average of Alice’s emission and reception times, is a convention internal to Alice’s frame, not a global timestamp shared with Bob.

In this sense, the Barukčić triangle captures more than a geometric mean: it visualizes the limits of simultaneity. Each observer constructs time from the closed loop of its own emissions and receptions, anchored only by local invariants. The proper-time altitude is therefore the sole physically meaningful measure inside the triangle, while any attempt to project a global “now’’ across both worldlines is coordinate-dependent and physically unobservable. The photon clock, built from these alternating null exchanges, thus enforces a fundamentally background-independent notion of time: one defined locally by causal contact, not globally by synchronized ticks.

3. Alternating Causality and the Process Matrix Formalism

Oreshkov, Costa, and Brukner (OCB, 2012) introduced the process matrix formalism to describe correlations among quantum operations without assuming a fixed global causal order [11]. Within that framework, two operations A and B can exist in a coherent superposition of causal sequences, written schematically as

$$W \;\sim\; (A \prec B) \,+\, (B \prec A). \tag{9}$$

The process matrix W generalizes the concept of a quantum channel by encoding all physically admissible correlations consistent with local quantum operations but independent of a global temporal reference frame.

Alternating Causality (AC) builds upon this foundation but differs conceptually from indefinite causal order. Whereas ICO describes a probabilistic or coherent superposition of orders, AC represents a deterministic, time-symmetric oscillation of causal direction. Instead of an indeterminate mixture of $A \prec B$ and $B \prec A$, AC describes a periodic, reversible exchange of influence between the two parties.

A link state is $x$: classically a probability vector (entries $\ge 0$, sum 1); quantum mechanically a density operator (positive, trace 1). AC specifies two maps on states: forward $F$ ($A \to B$) and reverse $R$ ($B \to A$). One cycle is $F$ then $R$.

Alternating Causality is defined by three simple principles. First, the forward and reverse maps $F$ and $R$ must preserve the set of valid states. Probabilities remain normalized in the classical case, and density operators remain positive and unit-trace in the quantum case. Second, the dynamics proceed in two consecutive halves, with each complete cycle consisting of a forward transformation $F$ immediately followed by its reverse $R$. Third, the reverse half exactly undoes the forward half, expressed algebraically as

$$R \circ F = \mathrm{id}. \tag{10}$$

Together these axioms ensure that a full forward–reverse cycle restores the system to its initial state while maintaining internal consistency and causal definiteness.

In the classical setting, $F$ may be taken as a permutation on microstates (a bijective stochastic update), so that $R = F^{-1}$. In the quantum setting, the natural reversible half is unitary conjugation, $F(\rho) = U \rho\, U^{\dagger}$, with inverse $R(\rho) = U^{\dagger} \rho\, U$. In both cases, the two halves are exact inverses, and a complete forward–reverse cycle therefore acts as the identity on the state space.
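The classical case can be sketched in a few lines: the forward map relabels microstates by a permutation and the reverse map applies the inverse permutation. The helper names and the example permutation are hypothetical; only the structure (a bijective update followed by its inverse) comes from the text.

```python
def apply_permutation(perm, x):
    """Move the probability weight on microstate i to microstate perm[i]."""
    y = [0.0] * len(x)
    for i, weight in enumerate(x):
        y[perm[i]] = weight
    return y

def invert(perm):
    """Return the inverse permutation, so that invert(perm)[perm[i]] == i."""
    inverse = [0] * len(perm)
    for i, j in enumerate(perm):
        inverse[j] = i
    return inverse

perm = [2, 0, 1]                      # forward map F on three microstates
x = [0.5, 0.3, 0.2]                   # a valid classical link state

forward = apply_permutation(perm, x)                  # F(x)
restored = apply_permutation(invert(perm), forward)   # R(F(x))

print(forward)    # weights relabelled, still summing to 1
print(restored)   # identical to x
```

Because the update only relabels entries, normalization is preserved automatically and the cycle returns the initial vector without rounding error.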

3.1. From Indefinite to Alternating Order

Table 1. Comparison between Indefinite Causal Order (ICO) and Alternating Causality (AC).

| Concept | Indefinite Causal Order (ICO) | Alternating Causality (AC) |
| --- | --- | --- |
| Relation between A and B | Superposition of $A \prec B$ and $B \prec A$ | Oscillation between $A \to B$ and $B \to A$ |
| Temporal model | Non-factorizable process matrix | Periodic reversible process tensor |
| Reversibility | Implicit via linearity | Explicit via phase alternation |
| Information flow | Coherent mixture of directions | Deterministic bidirectional feedback |

The bidirectional symmetry of Alternating Causality mirrors the Wheeler–Feynman absorber picture, in which every exchange consists of two complementary causal halves: one propagating forward in time and its counterpart propagating in reverse [12]. A causal initiator is the side that emits first; operationally this is a local CPTP perturbation $\mathcal{E}_A$ (or $\mathcal{E}_B$) inserted before the reversal:

$$R \circ \mathcal{E}_A \circ F \quad \text{or} \quad R \circ \mathcal{E}_B \circ F. \tag{11}$$

In the unperturbed loop $R \circ F = \mathrm{id}$; the insertion (11) sets the time orientation of that cycle. Let $H$ be Shannon/VN entropy and $I(A;B)$ the mutual information across the link’s cut (definitions in Appendix A). With a unitary dilation of the full cycle, total entropy is conserved, $\Delta H_{\text{tot}} = 0$, and at the instant of initiation the phasewise information flux satisfies

$$\Phi_{A \to B} + \Phi_{B \to A} = 0, \tag{12}$$

so the initiator’s local entropy increase is balanced by an equal information gain on the opposite side:

$$\Delta H_{\text{initiator}} = \Delta I_{\text{partner}}. \tag{13}$$

The resulting causal arrow collapses into one of four deterministic modes, represented by idempotent selectors $\Pi_{XY}$ with $\Pi_{XY}^2 = \Pi_{XY}$:

$$\Pi_{AB}, \quad \Pi_{BA}, \quad \Pi_{AA}, \quad \Pi_{BB},$$

corresponding to forward transfer $A \to B$, reverse transfer $B \to A$, and the two self-mirror cases $A \to A$, $B \to B$. Equations (12)–(13) make it clear that the initiator exports uncertainty (positive $\Delta H$) while the partner imports information, yet the composite evolution remains entropy balanced and time-symmetric.

The Wheeler–Feynman picture let us treat a single exchange as two time-symmetric halves (forward, then echo), with a local initiator choosing which half fires first. That is a dynamical account: phase by phase, one side exports uncertainty while the other imports information, yet the complete forward/echo cycle is entropy balanced and reversible. If we now coarse-grain this cycle to a single “use’’ of the link—suppressing the internal timing and tracing out the microdynamics—we obtain a static object that only answers the operational question: which pairs of local instruments at A and B are jointly compatible? This is precisely what the Oreshkov–Costa–Brukner (OCB) process matrix formalism captures.

Seen this way, Alternating Causality produces (after coarse-graining) a process description that may look like a mixture of different effective orders, because the time labels that distinguished the forward and echo halves have been hidden. Crucially, however, that appearance comes from classical averaging over which half went first in each cycle; the underlying dynamics remained deterministic and time-symmetric. By contrast, Indefinite Causal Order (ICO) refers to situations where no underlying time-resolved account can select a single order or a classical mixture of orders: operational statistics themselves witness causal nonseparability that cannot be explained by coarse-graining a definite alternating sequence. In the next subsection, we switch fully to the OCB language, introduce process matrices, and use them to distinguish this genuinely indefinite regime from the alternating (but ultimately definite) reversible dynamics above.

3.2. Process Tensor Perspective

The OCB process matrix formalism provides a static snapshot of causal relations between two parties: a single-use map that specifies how operations at A and B can be probabilistically composed without presupposing a definite temporal order. In contrast, a physical interaction proceeds through a sequence of locally timed operations. Each node advances according to its own proper-time clock, which defines when inputs are received and outputs are emitted. A full description must therefore account for how causal influence propagates across these discrete local intervals and how earlier exchanges leave traces that shape subsequent ones. The process tensor extends the process matrix into this temporally resolved regime: it records correlations among interventions occurring at successive clock ticks, thereby describing the flow of information between parties as their local clocks advance step by step. This elevates the static W-matrix of OCB into a structured object that specifies not only who influences whom, but also when each influence occurs within the synchronized lattice of local proper times.

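Within Alternating Causality, this tensor naturally splits into two complementary phases, a forward part $\mathcal{T}_{\rightarrow}$ and a reverse part $\mathcal{T}_{\leftarrow}$, each an operator on the multi-time space:

$$\mathcal{T}_{\rightarrow},\ \mathcal{T}_{\leftarrow} \in \mathcal{L}\!\left(\bigotimes_{i=1}^{n} \mathcal{H}_i^{\mathrm{in}} \otimes \mathcal{H}_i^{\mathrm{out}}\right).$$

Where:

$\mathcal{L}(\cdot)$: the space of linear operators on the given Hilbert space (analogous to $\mathcal{B}(\mathcal{H})$ in operator algebra).

$\mathcal{H}_i^{\mathrm{in}}$: the input Hilbert space at time step $i$, representing the system space before an operation.

$\mathcal{H}_i^{\mathrm{out}}$: the output Hilbert space at time step $i$, representing the system space after that operation.

$\bigotimes_{i}$: the tensor product over all steps $i = 1, \dots, n$, capturing the multi-time correlations across the entire process.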

The transition from a symmetric, oscillatory regime to an observed, directional one marks the emergence of irreversibility. When the causal cavity is closed and perfectly balanced, information circulates without dissipation, maintaining a steady equilibrium between the two halves of the link. When the balance is perturbed by measurement, emission, or boundary asymmetry, one side acquires informational surplus while the other incurs uncertainty, and a local arrow of time appears. To formalize this transition, we now quantify how entropy and information flux behave within a complete alternating cycle and show that, under unitary dilation, the global process remains exactly reversible even though each half may appear thermodynamically biased from its own frame.

3.3. Reversibility and Entropy Production

We adopt the formal definitions of entropy and mutual information from Appendix A, where $H(X)$ denotes the Shannon entropy of a source, $H(X \mid Y)$ its conditional entropy, $I(X;Y)$ their mutual information, and $H(X,Y)$ the joint entropy [1,13,14]. Throughout, logarithms are taken base 2 and all entropies are expressed in bits.

Entropy balance. Let $\rho_{SE}(t)$ be the joint state of the system S (comprising the nodes A and B) and its environment E at time $t$. If the combined evolution over a cycle of duration $T$ is unitary, $\rho_{SE}(T) = U\,\rho_{SE}(0)\,U^{\dagger}$, then von Neumann entropy is invariant:

$$H\big(\rho_{SE}(T)\big) = H\big(\rho_{SE}(0)\big). \tag{17}$$

Differentiating and integrating over one period gives the fundamental conservation law

$$\int_0^T \frac{d}{dt} H\big(\rho_{SE}(t)\big)\, dt = 0. \tag{18}$$

Equation (18) expresses the vanishing of total entropy production: although the subsystem entropies $H(\rho_A)$ and $H(\rho_B)$ may vary during each half-cycle, their changes exactly cancel under global unitarity. This condition is the information-theoretic analogue of Clausius’ equality for a reversible thermodynamic cycle.
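A classical analogue of this invariance is simple to check: Shannon entropy is unchanged by any relabelling of outcomes, whereas a many-to-one update lowers it. The sketch below assumes that classical reading (a permutation in place of the unitary); the distributions and helper names are illustrative.

```python
import math

def shannon_entropy(p):
    """Shannon entropy in bits of a probability vector p."""
    return -sum(w * math.log2(w) for w in p if w > 0)

def permute(p, perm):
    """Reversible update: move the weight on outcome i to outcome perm[i]."""
    out = [0.0] * len(p)
    for i, w in enumerate(p):
        out[perm[i]] = w
    return out

def merge_last_two(p):
    """Irreversible update: the last two outcomes become indistinguishable."""
    return p[:-2] + [p[-2] + p[-1]]

p = [0.5, 0.25, 0.25]
print(shannon_entropy(p))                       # 1.5 bits
print(shannon_entropy(permute(p, [1, 0, 2])))   # unchanged by relabelling
print(shannon_entropy(merge_last_two(p)))       # 1.0 bit after merging
```

The half bit lost in the merged case is the information that a reversible cycle never discards.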

Information flux. Let $I_{AB}(t)$ be the mutual information between the nodes at local proper time $t$. The rate of change of $I_{AB}$ defines an information flux across the causal cut,

$$\Phi(t) = \frac{dI_{AB}}{dt}, \tag{19}$$

whose temporal average over a half-cycle of a cycle of duration $T$ is

$$\bar{\Phi}_{\pm} = \frac{2}{T} \int_{\text{half-cycle}} \Phi(t)\, dt, \tag{20}$$

with $+$ and $-$ labelling the forward and reverse halves. Alternating Causality imposes a strict flux balance:

$$\bar{\Phi}_{+} + \bar{\Phi}_{-} = 0. \tag{21}$$

Equation (21) guarantees that information exchanged in one temporal direction is returned in the next, establishing a steady-state equilibrium analogous to the zero-net-energy condition of a standing electromagnetic wave. Local entropic gradients may exist transiently, but their integral over a full cycle vanishes, confirming that no information is created or destroyed.
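A discrete version of the flux balance can be sketched by evaluating the mutual information at the start, midpoint, and end of one cycle and differencing. The three joint distributions below are an assumed toy cycle (uncorrelated, perfectly correlated, uncorrelated again), not data from the paper.

```python
import math

def mutual_information(joint):
    """Mutual information in bits of a joint distribution given as a
    list of rows, joint[i][j] = P(X = i, Y = j)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(
        pxy * math.log2(pxy / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )

uncorrelated = [[0.25, 0.25], [0.25, 0.25]]
correlated = [[0.5, 0.0], [0.0, 0.5]]

# Start of cycle, end of forward half, end of reverse half.
cycle = [uncorrelated, correlated, uncorrelated]
info = [mutual_information(joint) for joint in cycle]

# Discrete flux: change in mutual information over each half-cycle.
flux = [after - before for before, after in zip(info, info[1:])]
print(info, flux, sum(flux))
```

The forward half builds one bit of correlation and the reverse half returns it, so the two half-cycle fluxes sum to zero.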

Operator symmetry. The microscopic origin of this balance is the operator identity

$$R = F^{-1}, \tag{22}$$

which enforces exact time-reversal duality between the forward and reverse causal phases. Each half-cycle corresponds to a completely positive, trace-preserving map on its respective subsystem, while the composite process is unitary on the joint Hilbert space. Any local entropy increase at one node is offset by a corresponding decrease at the other, preserving Eq. (18) and ensuring the process remains on the reversible manifold of the CPTP set.

Logical reversibility and causal computation. This structure realizes Bennett’s principle of logical reversibility: each causal operation has a unique inverse and thus erases no information [15]. The forward transformation $F$ and its reverse $R$ satisfy

$$R \circ F = F \circ R = \mathrm{id}. \tag{23}$$

Here $F$ plays the role of a computational write step, encoding the mapping $x \mapsto F(x)$; $R$ performs the exact uncomputation, restoring the pre-image by reversing the order of local operations. Viewed dynamically, the causal channel behaves as a Turing machine with two time-reversed tapes evolving along opposite directions of proper time. At the midpoint of the cycle, the two tapes coincide, no net entropy is produced, and all logical states remain accessible from both temporal boundaries. This correspondence extends Bennett’s reversible computation to physical causation: Alternating Causality acts as a reversible information engine in which entropy is not dissipated but exchanged symmetrically between temporal phases, conserving total information and maintaining exact time-reversal symmetry.
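The write/uncompute pattern can be illustrated with the standard reversible embedding $(x, y) \mapsto (x, y \oplus f(x))$, which is its own inverse. This is a generic sketch of Bennett-style uncomputation, assuming a one-bit ancilla and using parity as an arbitrary example function; it is not the paper’s construction.

```python
def parity(x):
    """Example function to be written into the ancilla: parity of the bits of x."""
    return bin(x).count("1") & 1

def write_step(state):
    """Reversible write: keep the input x and XOR f(x) into the ancilla bit y."""
    x, y = state
    return (x, y ^ parity(x))

# The same gate serves as the uncompute step because XOR is self-inverse.
uncompute_step = write_step

domain = [(x, y) for x in range(4) for y in (0, 1)]
images = [write_step(s) for s in domain]

# The write step is a bijection on the domain, so no information is erased.
print(sorted(images) == sorted(domain))
# Write followed by uncompute restores every state.
print(all(uncompute_step(write_step(s)) == s for s in domain))
```

Keeping the input alongside the result is what makes the write step invertible; overwriting `x` with `parity(x)` would merge distinct inputs and could not be undone.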

3.4. Geometric Interpretation

In Indefinite Causal Order, causal direction is undefined: the underlying structure exists in a superposition of orders. In Alternating Causality, causal direction is phase-linked: it alternates coherently, like a standing wave exchanging energy between two reflectors. Each causal link thus behaves as a causal resonator, sustaining equilibrium between forward and backward propagation.

This viewpoint connects directly to time-symmetric electrodynamics and to the reversible signaling logic used in Open Atomic Ethernet (OAE) link design.

The causal resonator picture also provides a geometric analogue of the “photon clock” model: a photon confined between two mirrors embodies a reversible, alternating causal process whose external measurement collapses to a single direction of time.

Alternating Causality therefore unifies two paradigms: the quantum-informational notion of causal symmetry and the computational requirement of logical reversibility. In this sense, AC can be regarded as the causal analogue of a unitary gate: time evolution preserves information and admits a well-defined inverse.

Section 4 will develop the information-theoretic realization of Alternating Causality as Perfect Information Feedback (PIF) on reversible physical links, demonstrating that the framework is not only mathematically self-consistent but also physically implementable in deterministic signaling networks.

4. Perfect Information Feedback: Constructing the Bidirectional Channel

While Section 3 treated Alternating Causality (AC) as a generalization of the Oreshkov–Costa–Brukner process matrix, we now translate it into the language of information theory. The central idea is that every reversible causal interaction can be described as a form of Perfect Information Feedback (PIF): a closed two-way channel in which the receiver continuously confirms the sender’s transmitted state.

This feedback symmetry provides an explicit physical mechanism for alternating causality. Where ordinary communication channels rely on error detection and correction that operate after the fact, a PIF channel maintains instantaneous correlation between transmission and reception, producing a self-verifying exchange.

The bidirectional channel of Figure 6 establishes the physical foundation of Perfect Information Feedback: two conjugate communication paths maintaining mutual state through continuous reflection. We now translate this symmetry into information-theoretic form. In Shannon’s original formulation, the information content of an event is given by the term $-p \log p$, defined for a single, forward-directed flow of probability. However, a reversible channel necessarily contains both the forward and its conjugate, retrocausal component. Each transmitted symbol therefore carries a pair of informational amplitudes, $p$ for the forward half and $1/p$ for the reverse, whose contributions cancel when combined.

In what follows, this idealized construction serves as the reference frame for analyzing real channels. Introducing noise, delay, or partial reflection will be shown to break the conjugate symmetry between $p$ and $1/p$, producing finite entropy and an apparent arrow of time.

4.1. Information Theoretic Model of PIF

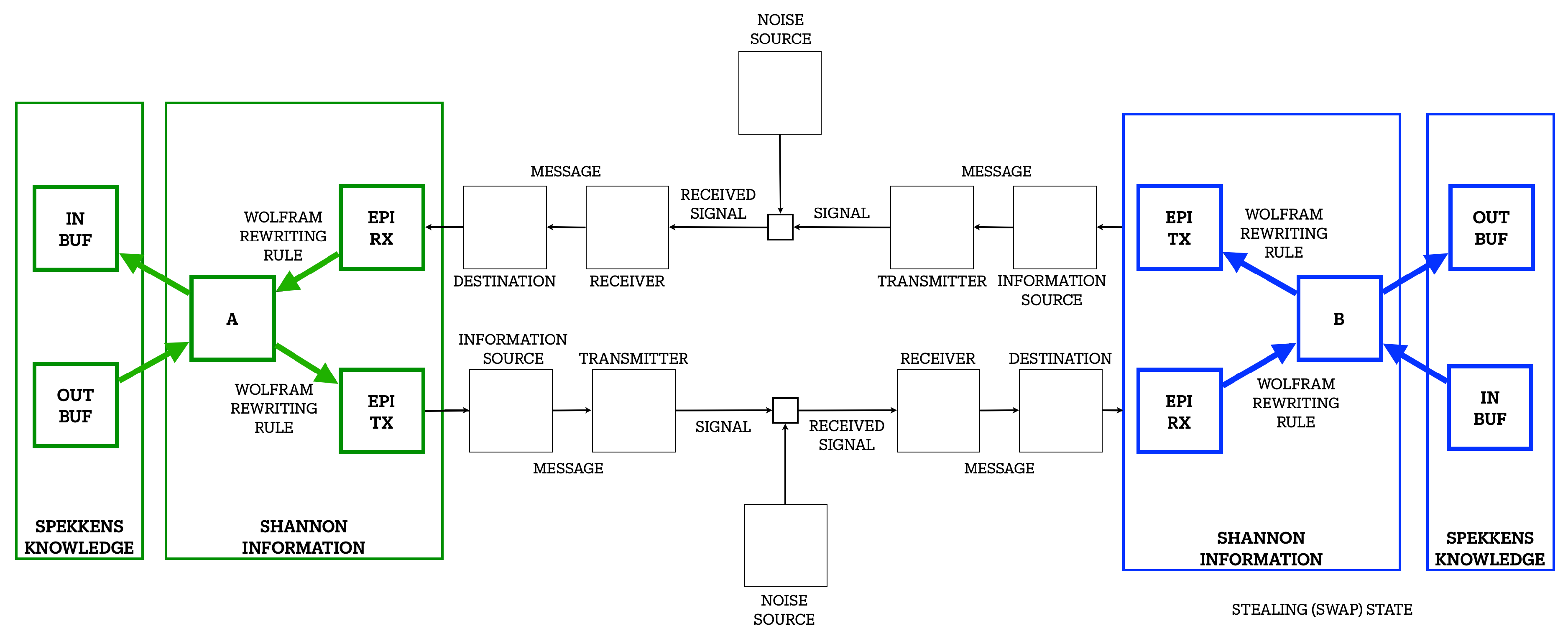

Figure 7. Shannon communication system extended to a bidirectional Perfect Information Feedback (PIF) configuration. Forward and reverse channels form conjugate halves of a reversible exchange. In the noise-free limit, total information flux is conserved; symmetric perturbations from the shared noise source break this balance, producing entropy.

We begin by constructing the simplest reversible communication link: two conjugate channels operating in opposite directions. Each endpoint alternately acts as transmitter and receiver, so that every symbol launched in one direction is immediately mirrored by a corresponding acknowledgment in the other. This closed loop forms a self-consistent, bidirectional channel analogous to a standing electromagnetic cavity. No external clock or global time reference is required; synchronization arises naturally from the mutual reflection of state between the two sides.
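The forward-then-echo loop described here can be simulated at the symbol level. The sketch below is a toy model under stated assumptions: symbols are single bits, each leg is an independent bit-flip channel, and the sender confirms a symbol when the echo matches what it sent. The function name `pif_exchange` and its parameters are hypothetical.

```python
import random

def pif_exchange(message, forward_flip=0.0, reverse_flip=0.0, seed=0):
    """Send each bit forward, echo it back, and let the sender compare.

    Returns (received, confirmed): the bits seen by the receiver and,
    per bit, whether the echo matched the transmitted value.
    """
    rng = random.Random(seed)

    def noisy(bit, flip_prob):
        # Flip the bit with the given probability (0.0 means noise-free).
        return bit ^ 1 if rng.random() < flip_prob else bit

    received, confirmed = [], []
    for bit in message:
        at_receiver = noisy(bit, forward_flip)      # forward half
        echo = noisy(at_receiver, reverse_flip)     # reverse half (acknowledgement)
        received.append(at_receiver)
        confirmed.append(echo == bit)
    return received, confirmed

message = [1, 0, 1, 1, 0]
received, confirmed = pif_exchange(message)
print(received == message, all(confirmed))          # noise-free: both hold

_, confirmed_noisy = pif_exchange(message, forward_flip=1.0)
print(any(confirmed_noisy))                         # every forward error is exposed
```

In this toy model a flip on the forward leg alone is always detected by the echo comparison; a simultaneous flip on both legs would cancel and go unnoticed, which is one reason a practical design might add checksums or syndrome bits on the reverse path.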

Let $X$ and $Y$ denote the instantaneous logical states of the two agents. In a conventional forward-in-time channel, information flows only from $X$ to $Y$:

$$X \longrightarrow Y, \qquad p(y \mid x). \tag{24}$$

To complete the feedback loop, we introduce the reverse channel $Y \to X$ that carries the conjugate correlation:

$$Y \longrightarrow X, \qquad p(x \mid y). \tag{25}$$

Together these two directions form a closed informational system in which the total mutual information is conserved. In the ideal, noise-free limit, the forward and reverse probability amplitudes are exact conjugates,

$$p_{\leftarrow} = \frac{1}{p_{\rightarrow}}, \tag{26}$$

so that their informational contributions cancel,

$$\log p_{\rightarrow} + \log p_{\leftarrow} = 0. \tag{27}$$

This condition defines the Perfect Information Feedback (PIF) limit: a fully reversible, two-way Shannon channel in which uncertainty and confirmation are perfectly balanced.

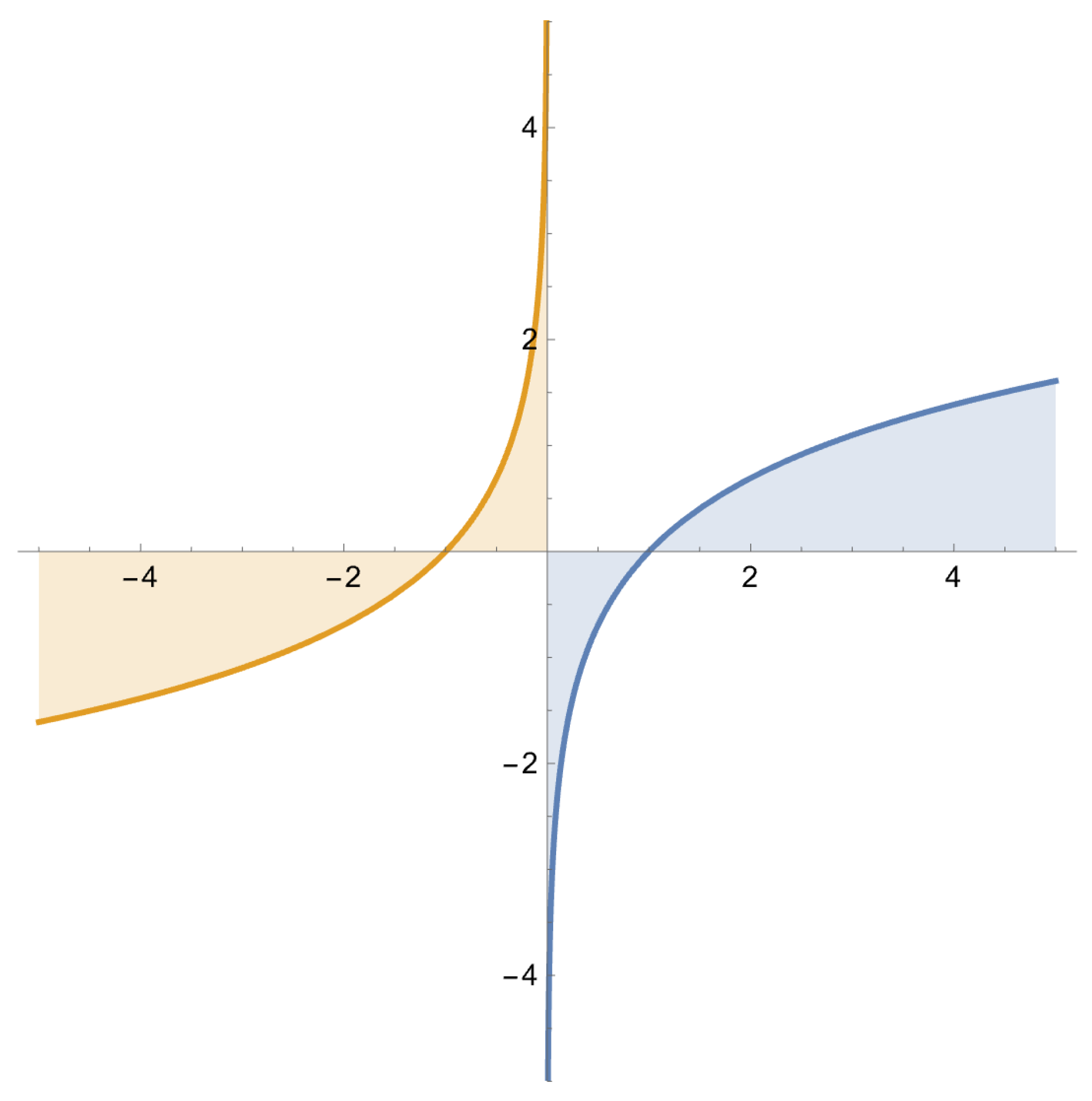

Figure 8.

In Shannon’s formulation, each event with probability p contributes an information term , representing the expected surprise associated with its occurrence. Log is undefined for , so corresponds to a reflection across , and the inverse of .

Following Shannon, view a channel as a sequence of discrete uses (slots) indexed by $n$, and consider the mutual information across a chosen cut during slot $n$. For each edge $e$ of the link graph, define the slot flux as the net information pushed across $e$ during that slot (the edge-resolved contribution to the cut). In the noise-free PIF regime, conservation holds slotwise at every internal node $v$:

and reversibility enforces a loop constraint on any closed causal cycle within one forward–echo pair:

where each edge $e$ carries an information potential (e.g., surprisal or a log-likelihood ratio). Together, (29)–(30) state that, per slot, information neither accumulates at nodes nor builds net “bias” around loops: the standing-wave picture of PIF.
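As one concrete reading of the two slotwise conditions, the sketch below checks node balance and loop neutrality on a toy link graph. The data layout (`flux`, `drops`, edges as node pairs) and the function names are illustrative assumptions, not notation from the text. Fluxes are signed along each oriented edge, and traversing an edge against its orientation flips the sign of its potential drop.

```python
from collections import defaultdict

def node_imbalance(flux, internal_nodes):
    """Net slot flux accumulated at each internal node (inflow minus outflow)."""
    net = defaultdict(float)
    for (tail, head), j in flux.items():
        net[tail] -= j  # j leaves the tail of the oriented edge
        net[head] += j  # j arrives at the head of the oriented edge
    return {n: net[n] for n in internal_nodes}

def loop_sum(drops, cycle):
    """Signed sum of per-edge potential drops around a closed cycle of nodes."""
    total = 0.0
    for u, v in zip(cycle, cycle[1:] + cycle[:1]):
        if (u, v) in drops:
            total += drops[(u, v)]
        else:
            total -= drops[(v, u)]  # edge traversed against its orientation
    return total

# Toy slot (hypothetical numbers): one bit circulates around the ring A -> B -> C -> A.
flux = {("A", "B"): 1.0, ("B", "C"): 1.0, ("C", "A"): 1.0}
drops = {("A", "B"): 0.5, ("B", "C"): -0.25, ("C", "A"): -0.25}

balanced = all(abs(x) < 1e-12 for x in node_imbalance(flux, ["A", "B", "C"]).values())
neutral = abs(loop_sum(drops, ["A", "B", "C"])) < 1e-12
```

In this toy slot both checks pass. Reducing the flux on a single edge leaves a nonzero residue at the adjacent nodes, which is the kind of imbalance that noise would have to account for.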

4.2. Effects of Noise

With noise (erasures, phase slips, timing jitter), forward and reverse path weights on $e$ need not match. Define the information affinity

and the (Schnakenberg-type) slot entropy production

Node balance then includes an environmental leak:

and loop sums register the net affinity,

In the ideal PIF limit, the affinity vanishes on every edge, so the slot entropy production is zero and both Kirchhoff laws (29)–(30) are restored per slot.

Intuition. PIF behaves like a lossless AC network at steady state whereas noise introduces resistive drops that convert balanced standing waves into directed, entropy-producing flow.
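To make the resistive picture concrete, the following sketch evaluates the textbook Schnakenberg form for a single edge: the affinity is the log-ratio of forward to reverse weight, and the entropy production is the net weight difference times that affinity. This form, the natural-log units, and the function names are assumptions made for illustration; the definitions intended in the text may differ in symbols or normalization.

```python
import math

def affinity(w_fwd, w_rev):
    """Log-ratio of forward to reverse path weight on one edge (in nats)."""
    return math.log(w_fwd / w_rev)

def entropy_production(w_fwd, w_rev):
    """Schnakenberg-type edge term: (net weight) x (affinity), never negative."""
    return (w_fwd - w_rev) * affinity(w_fwd, w_rev)

balanced_ep = entropy_production(0.5, 0.5)  # matched weights: zero affinity, zero production
noisy_ep = entropy_production(0.6, 0.4)     # mismatched weights: strictly positive
```

The product is non-negative because the weight difference and the log-ratio always share a sign, and it is symmetric under swapping the two directions. Only the imbalance matters, not which side is favoured.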

An incorrect symbol is not “pure loss”; it is a surprisal event. Its likelihood contributes evidence, in the form of its surprisal, that something in the forward–echo pair has decohered. In a hardware implementation, the slice-level echo and comparator turn that evidence into a local trigger: the nodes can (i) mark the offending slot/slice, (ii) exchange minimal reverse messages (ACK/NACK, checksums, or syndrome bits), and (iii) roll back only the affected portion and replay with stronger protection (re-encode, interleave, escalate redundancy). This successive reversibility confines repair to the smallest inconsistent region, converting raw surprisal into guidance for recovery. Thus even when the original payload instance is unrecoverable in that pass, the event itself supplies the side information needed to restore information balance and alignment on the next pass.
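The mark-and-replay steps can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: the reverse channel returns exactly what the receiver saw, and "replay with stronger protection" is modelled as simply delivering the original symbol. The function names are hypothetical.

```python
def find_mismatches(sent, echoed):
    """Slots whose echo differs from what the sender launched."""
    return [i for i, (s, e) in enumerate(zip(sent, echoed)) if s != e]

def repair(sent, received, echoed):
    """Replay only the slots flagged by the echo comparator."""
    repaired = list(received)
    bad = find_mismatches(sent, echoed)
    for i in bad:
        repaired[i] = sent[i]  # stand-in for a re-encoded, better-protected replay
    return repaired, bad

sent = [1, 0, 1, 1, 0, 0, 1, 0]
received = [1, 0, 1, 0, 0, 0, 1, 0]  # slot 3 was corrupted in flight
echoed = list(received)              # assumed faithful echo of the receiver's view
repaired, bad_slots = repair(sent, received, echoed)
```

Only slot 3 is touched and the other seven slots are never retransmitted, in contrast with a bulk retransmission of the whole frame.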

4.3. Capacity and Composition in Slots

Let

and

be the forward/reverse capacities in bits/slot. A phase-locked PIF link satisfies

with

in the balanced case; noise reduces

via the penalty

. Series/parallel composition of links preserves equations (29)–(30) when interfaces are slot- and phase-aligned, so contracting any zero-EP subgraph yields an equivalent PIF edge. This forms the basis of a “nestable, composable” Alternating Causal Graph in discrete time.

In the Shannon sense, the channel capacity for such a reversible link doubles the degrees of freedom available for encoding:

4.4. Nestability and Composability of PIF Links

Let

and

be PIF links with forward/reverse halves

and

. They are

compatible when their boundary clocks and phases align so that the downstream reverse half mirrors the upstream forward half:

More generally, for multi-rate operation there exist integers such that one outer slot equals a whole number of inner slots of each link, with phase alignment at the shared boundary.

Series composition (closure): If

are compatible and each satisfies

, then the series composite

is again PIF:

Hence, with slotwise conservation (29)–(30) holding on each link, entropy production and mutual-information flux sum to zero over the composite cycle.

Parallel composition (independent branches): For disjoint links

,

and capacities add while ideal entropy production remains zero:

Nested composition (encapsulation): A PIF module is any subgraph $G$ with boundary ports that, over an integer number of inner slots per outer slot, satisfies: (i) slotwise node balance and loop neutrality (29)–(30) at the boundary, and (ii) zero net entropy production per complete forward–echo pair at the boundary. Such a module is contractible: there exists an effective edge, with forward and reverse halves, whose boundary traces reproduce the observed boundary fluxes and echoes of $G$. Contractibility is associative and context-preserving: replacing any such subgraph by its effective edge leaves all external measurements of flux, capacity, and (ideal) entropy balance unchanged. Thus, a bidirectional channel can be built out of bidirectional channels: PIF is closed under hierarchical encapsulation.

Nesting supports multiple levels of semantic and informational reversibility. A lower layer (symbols/slices) provides immediate echoes to detect physical mismatches; a middle layer (frames/transactions) aggregates slice-level surprisal into targeted rollbacks; an upper layer (application semantics) confirms invariants (e.g., exactly-once) via lightweight reverse checks. Errors are not “pure loss”; they are surprisal events that supply side information to localize and repair. Because each layer is itself a PIF module, recovery remains confined to the smallest inconsistent region and composes cleanly: inner-layer corrections restore alignment so outer layers rarely need to escalate. In this way, bidirectional links within bidirectional links yield robust, nestable channels whose reversibility extends from bits on the wire to application APIs.

4.5. Alternating Causal Graphs

On an Alternating Causal Graph (ACG) with nodes

and oriented edges

, let

be the half-cycle–averaged flux on

e. PIF imposes a Kirchhoff-like conservation at every internal node

v:

and along any closed cycle

,

If a nested subgraph obeys these boundary equalities over whole-slot pairs (possibly at a slower outer timescale), it may be contracted to a single PIF edge without changing the rest of the graph.

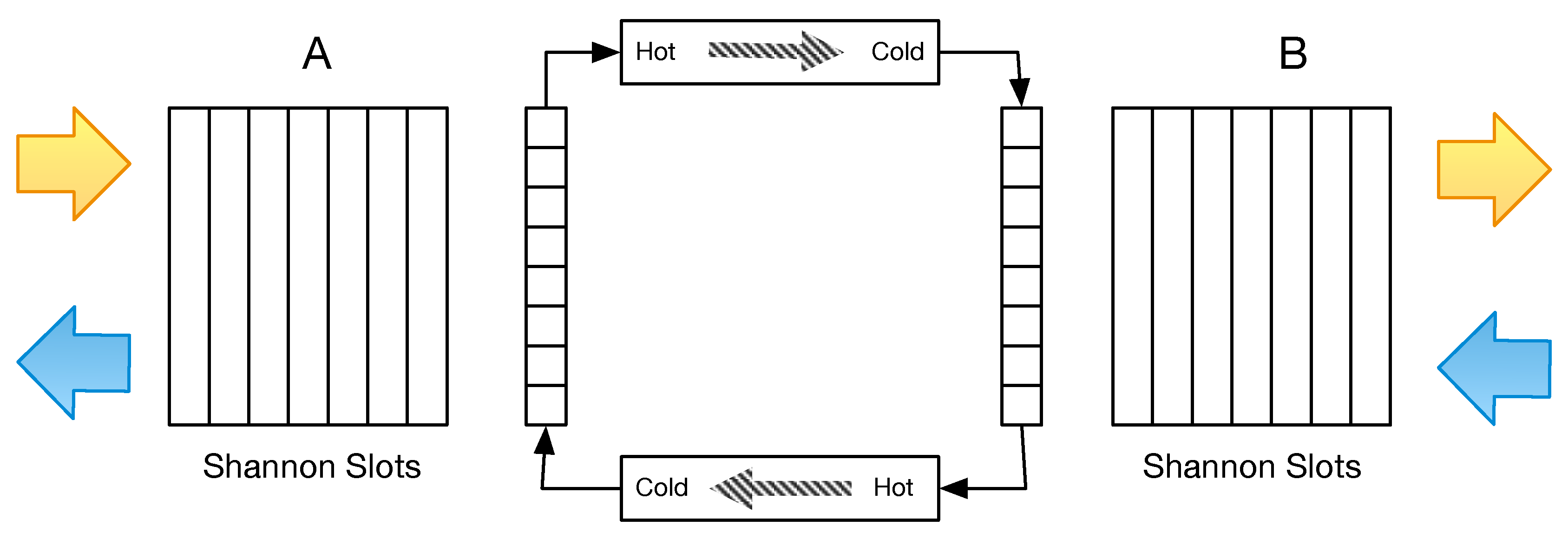

4.6. Thermodynamic Perspective

In ordinary communication theory, entropy production is tied to irreversible encoding, lossy decisions, and noise dissipation. In a PIF system, the budget is ideally zero: each forward update is immediately mirrored, so exchange is balanced slot by slot and the composite evolution is reversible.

A transmitter (HOT) injects quanta into a guided cavity (cable/fiber pair, connectors, package parasitics) while a receiver (COLD) absorbs a small fraction. During each bit period, the cavity modes fill to a quasi-steady standing wave set by the SERDES bit clock and the ASIC clock tree. Energy flows down the HOT→COLD gradient, yet time’s direction is not fixed by the physics of the cavity: it is imposed operationally by how we terminate the ports and schedule who “goes first.”

The receiver does not read the waveform continuously; it takes discrete samples at instants defined by its local clock. A clock-and-data recovery (CDR) loop derives and continuously adjusts this clock so those sampling instants land near the center of each symbol’s eye: a phase detector measures timing error between signal transitions and the local oscillator, a loop filter integrates that error (storing soft timing evidence), and a VCO/DLL shifts the sampling phase until the eye is centered. Once aligned, bits are decided at those instants and transferred across a small elastic buffer into the digital clock domain. In a one-way link, any sampling mistake is resolved by a hard decision at the slicer, discarding soft evidence and dissipating heat. In a PIF link, the echo returns the same timing evidence to the initiator; both ends co-adjust and co-lock on the common standing wave so their sampling instants are mirror-matched across the cavity. Small phase disturbances then circulate as reversible, reactive corrections rather than being burned away as resistive heat.
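The one-sided timing loop described above (phase detector, integrating loop filter, phase-shifting oscillator) can be sketched as a discrete proportional-plus-integral update. This is an idealized illustration: the phase detector is linear and noiseless, the incoming eye centre is a fixed offset in unit intervals, and the gains `kp` and `ki` are arbitrary assumed values rather than parameters taken from any real SERDES.

```python
def cdr_lock(phase_offset, steps=400, kp=0.1, ki=0.01):
    """Return the sampling phase after `steps` loop updates.

    phase_offset: position of the eye centre relative to the initial
    sampling instant, in unit intervals (assumed constant).
    """
    sample_phase = 0.0
    integrator = 0.0
    for _ in range(steps):
        error = phase_offset - sample_phase       # phase detector: timing error
        integrator += ki * error                  # loop filter: accumulated evidence
        sample_phase += kp * error + integrator   # oscillator/delay line: shift phase
    return sample_phase

locked_phase = cdr_lock(0.3)
```

With these gains the error decays geometrically, so the sampling phase settles on the eye centre. A two-sided, echo-locked variant would run one such loop at each end and feed each the other's error, which is beyond this sketch.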

Figure 10.

Transmitting creates entropy. Receiving increases information and decreases entropy.

Landauer’s bound penalizes logically irreversible steps; PIF neither denies nor violates this. Instead, it rearranges the pipeline so that soft information is preserved in echoes and loop states, corrections are targeted at the smallest inconsistent slice/slot, and only the net irreversibility appears where synchronization fails or a deliberate final decision is taken. In the reversible limit (no slips, no erasures, soft inference), the link behaves like a conservative dynamical system: information is shuttled, not destroyed, and slotwise conservation (cf. (29)–(30)) holds at the boundary.

Noise, shot fluctuations, phase slips, and timing jitter break detailed balance: forward and reverse paths no longer carry identical weights, and an unexpected symbol or timing excursion occurs, which is a surprisal event. Surprisal is not “pure loss”; its improbability is diagnostic. With an echo and comparator, the nodes treat that event as side information: they localize the fault (which edge, which slice/slot) and trigger the minimal reverse exchange (ACK/NACK, syndrome, re-timing) that rolls back only the affected micro-interval. Even when a particular payload instance is unrecoverable in that pass, the event seeds the next pass with exactly the evidence needed to realign.

Two PLLs facing each other across a bidirectional cavity are thermodynamically analogous to reactive tanks coupled by an ideal transformer: the forward drive builds phase on one side while the echo builds the equal-and-opposite mirror on the other. At two-sided lock, the net entropy flow over a forward–echo pair is zero; only imbalance introduces a resistive component, visible as loop error, excess jitter, or decoding heat. Conventional Alternating Bit Protocols (ABP) handle that imbalance after the fact with bulk retransmissions; PIF addresses it in the act at slice granularity, confining dissipation to the smallest place and time.

There is no intrinsic direction of time in this picture. The apparent arrow of causality is imposed by the designer who connects TX/RX asymmetrically. A pair of back-to-back channels naturally reflects TX/RX symmetry, turning each Shannon channel (originally one-way) into a bidirectional flow between Alice and Bob. Alice’s entropy becomes Bob’s information, and Bob’s information becomes Alice’s entropy; in the ideal, phase-locked regime these balance exactly over each forward–echo cycle.

5. Toward a Unified Reversible Causal Principle

This section consolidates the preceding analyses into a single formal symmetry: the Reversible Causal Principle (RCP). Sections 3–4 examined Alternating Causality from four complementary domains: the formal structure of process matrices, the informational symmetry of Perfect Information Feedback, the time-symmetric dynamics of physical fields, and the topology of Alternating Causal Graphs. RCP unifies these views by asserting that every causal interaction admits a time-reversed conjugate satisfying

and that any closed causal loop obeys

These relations express local reversibility and global causal neutrality, extending Noether’s logic from mechanics to information:

The principle is cast in an operator form

whose unitarity condition,

guarantees conservation of total informational amplitude. Departures from unitarity correspond to entropy generation or causal decoherence. A cross-domain correspondence links this invariance to familiar constructs: Hermitian process matrices in quantum theory, mutual-information balance in Shannon channels, advanced–retarded field superposition in electrodynamics, and zero net causal flux in topological cycles. In each case, irreversibility appears as the non-vanishing of an asymmetry term.

Within this framework, the arrow of time emerges from the entropy generated around the network rather than being imposed axiomatically. When boundary conditions or noise impede perfect reflection, a small imaginary term enters the action, breaking time-reversal invariance and yielding measurable entropy. The macroscopic flow of time thus represents the universe’s imperfect causal echo [2,16]. RCP therefore provides a compact grammar linking Shannon’s communication symmetry [1], Wheeler–Feynman absorber dynamics, and Bennett’s reversible computation [15,17,18] under a single conservation law of information.

6. Conclusions

Under the Reversible Causal Principle (RCP) explored in this work, symmetry in causal direction elevates information to the status of a conserved quantity. In a perfectly time-symmetric (reversible) interaction, the present state retains all the encoded details of its past states. Nothing of the initial information is truly lost or irretrievably dissipated; the evolution is invertible, and every effect can in principle be traced back to its cause. In this sense, information behaves analogously to an invariant of motion, preserved by the bidirectional symmetry of cause and effect.

For a more concrete intuition, we can draw an analogy with communication theory. Reliable communication protocols intrinsically rely on two-way exchange: a sender transmits data and the receiver returns an acknowledgment, incurring a measurable round-trip latency. This bidirectional feedback loop ensures that information sent is information received and confirmed. Every bit of data is effectively “spoken” by the sender and “heard” by the receiver, who then responds with an ACK. In effect, the channel enforces a causal symmetry: the forward flow of information is mirrored by a return flow, preventing a one-sided dissipation of the message. Such mechanisms guarantee that no information is lost in transit without leaving a trace, much like a reversible physical process guarantees that no information vanishes without a record in the system’s state. What might appear as a mere engineering detail (the necessity of acknowledgments and handshakes) is, in essence, the communication system’s insistence on a minimal form of causal reversibility to preserve information.

At steady state, a well-designed channel operates in a mode akin to detailed balance in physics: for every packet or signal sent from one end, an acknowledgement or return signal is sent from the other, so that each elementary “action” is paired with an opposing action. No unbounded accumulation of unacknowledged packets occurs; likewise, no net loss of information transpires. In this ideal lossless exchange, the information flow reaches a kind of dynamic equilibrium, maintaining maximum throughput without degradation. By contrast, delays, packet loss, or dissipation of signals indicate a breach of this symmetry and a departure from equilibrium. An unacknowledged or lost message is the communication analog of an irreversible process: information escapes into an unrecoverable form (noise or delay), much as erasing a bit of information in a computational system dissipates a minimum amount of heat into the environment.

The experimental test of RCP lies in systems that sustain near-perfect bidirectional feedback: reversible digital links, quantum-switch interferometers, and symmetric electromagnetic or superconducting cavities. The predicted signature is not merely energy conservation but the suppression of informational entropy through phase coherence between alternating directions.

RCP reframes reliability as a symmetry law: a link is “good” not merely when signals are strong in the forward direction but when every assertion is promptly closed by a confirmation. Practically, this means designing for echo quality as carefully as for SNR, budgeting latency as the unavoidable price of certainty, and treating acknowledgments and handshakes as constitutive features of a lossless medium. Whether implemented as slice-by-slice echo verification in digital links, echo-locked electromagnetic cavities, or protocol stacks that preserve invertibility at each slot, the prescription is the same: maximize alternating causality to conserve information.

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Notions of Entropy

Appendix A.1. Local Observer Entropy

Entropy in network theory is typically taken to be Shannon Information Entropy [1]. Rényi Entropy generalizes this to an infinite class of entropy measures indexed by an order $\alpha$, where $\alpha \geq 0$ and $\alpha \neq 1$.

In [13], Rényi defined an expression for the entropy of order $\alpha$ of a distribution $X$, where $X$ is represented as a list of probabilities $X = (p_1, \ldots, p_n)$:

$$H_\alpha(X) = \frac{1}{1-\alpha} \log_2 \left( \sum_{i=1}^{n} p_i^{\alpha} \right)$$

From this infinite set of entropy measures, the two measures of interest for communications networks are Shannon Information Entropy, obtained in the limit $\alpha \to 1$, and Min Entropy, obtained in the limit $\alpha \to \infty$, which gives a measure of the security of secret values such as keys used in cryptographic protocols.
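A short numerical sketch of the Rényi family may help fix ideas. It implements the standard definition in bits, handling the Shannon case (order 1) and the min-entropy case (infinite order) as explicit limits. The function name and the example distribution are illustrative choices.

```python
import math

def renyi_entropy(probs, alpha):
    """Rényi entropy (bits) of a discrete distribution given as a list of probabilities."""
    probs = [p for p in probs if p > 0]  # zero-probability outcomes contribute nothing
    if alpha == 1:                       # Shannon entropy as the limit alpha -> 1
        return -sum(p * math.log2(p) for p in probs)
    if math.isinf(alpha):                # min-entropy as the limit alpha -> infinity
        return -math.log2(max(probs))
    return math.log2(sum(p ** alpha for p in probs)) / (1 - alpha)

dist = [0.5, 0.25, 0.25]
shannon = renyi_entropy(dist, 1)          # 1.5 bits
collision = renyi_entropy(dist, 2)        # order-2 entropy, between the two limits
min_ent = renyi_entropy(dist, math.inf)   # 1.0 bit
```

The values are non-increasing in the order, so min-entropy is the most conservative of the three. That is why it is the figure of merit for key material.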

A notable feature of all Rényi entropy measures is that they are all a function of a static distribution.

A sequence of symbols transmitted over a network is not constrained to be I.I.D. (Independent and Identically Distributed) and so does not typically have a static distribution. Quantum entanglement behavior in physical systems means that no generator is free of correlation, and so no generator is genuinely I.I.D., while extractor theory permits methods to approximate an I.I.D. distribution arbitrarily closely.

Any sequence of random symbols that can be generated from a birth-death Markov chain generator will, except for two-state, fully symmetric configurations, be non-I.I.D. and so will have a distribution that changes with the reception of each symbol, which gives the receiver information about the current active state in the Markov generator [19].

For the purposes of practically characterizing entropy of a Shannon channel or a sequence of non I.I.D. symbols, it is sufficient to average over a number of symbols where a static distribution will be asymptotically reached as the number of symbols increases.

For example, consider a simple two-state Markov generator that creates binary symbols with equal probability 0.5 and has a serial correlation coefficient of −0.2. The min entropy of a generated bit will be 1 bit when the observer is ignorant of the previous bit, but the min entropy will be lower if the previous bit is taken into account [20].

In the example with

and single bit symbols, the entropy per bit is:

However, the most probable next symbol alternates between 0 and 1.

Accumulating generated bits into groups of 8 bits forming bytes for symbols, we find the most probable symbols will be 0x55 and 0xAA, representing the alternating bit patterns 01010101 and 10101010. These symbols are equiprobable and so the distribution of most probable symbols is static, even though the underlying single bit distribution is not static.

In our example, the 8 bit min entropy per symbol will be:

These equations can be extended for more complex Markov models of generator processes. An example analyzing a physical bit generator with an infinite Markov model as the limit is given in [21].
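The two-state example can be checked numerically. The sketch assumes a symmetric two-state chain, for which a lag-one serial correlation coefficient $c$ corresponds to a repeat probability of $(1 + c)/2$. With $c = -0.2$ the bit repeats with probability 0.4 and flips with probability 0.6. The first bit of each symbol is taken from the stationary distribution (probability 0.5), meaning the observer is ignorant of the preceding symbol. Function names are illustrative.

```python
import math

def stay_probability(scc):
    """Repeat probability of a symmetric two-state chain with lag-one correlation scc."""
    return (1.0 + scc) / 2.0

def min_entropy_per_bit(scc):
    """Min-entropy (bits) of the next bit when the previous bit is known."""
    stay = stay_probability(scc)
    return -math.log2(max(stay, 1.0 - stay))

def min_entropy_per_symbol(scc, n_bits):
    """Min-entropy (bits) of an n-bit symbol whose first bit is stationary (p = 0.5)."""
    stay = stay_probability(scc)
    p_max = 0.5 * max(stay, 1.0 - stay) ** (n_bits - 1)  # most probable n-bit pattern
    return -math.log2(p_max)

per_bit = min_entropy_per_bit(-0.2)         # about 0.737 bits
per_byte = min_entropy_per_symbol(-0.2, 8)  # about 6.16 bits
```

With a negative coefficient the most probable byte is one of the two alternating patterns, each with probability $0.5 \times 0.6^{7}$. Under these assumptions the byte-level min-entropy is about 6.16 bits rather than 8.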

In this paper, where the term entropy is used without qualification, it is referring to Shannon Entropy.

Another variation on terminology is "Entropy Rate", which is defined as the entropy in a group of $n$ symbols divided by $n$. This corresponds to the average entropy per symbol.

Appendix A.2. Mutual Information

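In the context of Shannon entropy, the source $x$ and receiver $y$ entropies are defined as:

$$H(x) = -\sum_{x} p(x) \log_2 p(x), \qquad H(y) = -\sum_{y} p(y) \log_2 p(y)$$

From these we can derive the two equivocations $H(x|y)$ and $H(y|x)$:

$$H(x|y) = -\sum_{x,y} p(x,y) \log_2 p(x|y), \qquad H(y|x) = -\sum_{x,y} p(x,y) \log_2 p(y|x)$$

We can derive the information transfer $I(x;y)$:

$$I(x;y) = H(x) - H(x|y) = H(y) - H(y|x)$$

From these we can define the joint or mutual entropy $H(x,y)$ as:

$$H(x,y) = H(x) + H(y|x) = H(y) + H(x|y)$$

In this paper, these quantities are used with the definitions above [14].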
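The standard relations between source entropy, receiver entropy, the two equivocations, and information transfer can be verified on a small joint distribution. The sketch below uses the textbook identities in bits. The dictionary keys and the binary symmetric channel example (uniform input, crossover probability 0.1) are illustrative assumptions.

```python
import math

def entropy(probs):
    """Shannon entropy (bits) of a list of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def channel_entropies(joint):
    """joint[i][j] = P(x = i, y = j); returns the standard entropy quantities."""
    px = [sum(row) for row in joint]        # marginal of the source
    py = [sum(col) for col in zip(*joint)]  # marginal of the receiver
    hx, hy = entropy(px), entropy(py)
    hxy = entropy([p for row in joint for p in row])
    return {
        "H(x)": hx,
        "H(y)": hy,
        "H(x,y)": hxy,
        "H(x|y)": hxy - hy,       # equivocation of the source given the receiver
        "H(y|x)": hxy - hx,       # equivocation of the receiver given the source
        "I(x;y)": hx + hy - hxy,  # information transfer
    }

noiseless = channel_entropies([[0.5, 0.0], [0.0, 0.5]])
bsc = channel_entropies([[0.45, 0.05], [0.05, 0.45]])
```

For the noiseless case both equivocations vanish and the full bit is transferred. For the noisy case the transfer drops by exactly the binary entropy of the crossover probability.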

Appendix B. Alternating Causality and the TIKTYKTIK Protocol

Recent studies in quantum information and statistical physics have shown that multiple or even opposing arrows of time can coexist within the same physical system. These systems do not violate thermodynamics—they remain globally consistent—but locally, the direction of causal flow can invert or oscillate. In essence, a subsystem can momentarily “run time backward” with respect to its neighbors while the larger ensemble still evolves forward overall.

From a communications standpoint, the TIKTYKTIK protocol models this phenomenon at the link level. Each communication link carries not just data, but causal structure—an ordering of events that defines “before” and “after.” When two links interact, they can temporarily exchange or mirror portions of that causal order. During this interaction, the link participates in an alternating causality regime: the direction of effective causation oscillates before collapsing into a consistent forward orientation.

The TIKTYKTIK sequence—“The I Know That You Know That I Know”—represents a two-phase reversible exchange:

The first two messages (TIK–TYK) constitute a reversible handshake, where both endpoints sample and exchange causal state without committing to direction.

The next two messages (TIK–TYK) complete the cycle and cause the local entropic gradient to collapse into a definite direction of information flow.

After the fourth message, entropy is produced and the causal arrow becomes fixed for that particular exchange.

In this framing, the alternating causality capacity defines the maximum reversible exchange rate a link can sustain before this entropic commitment occurs. It quantifies how much causal information two endpoints can trade symmetrically before one side becomes the effective “future” of the other. Once the TIKTYKTIK cycle closes, the reversible phase ends and the transaction’s arrow of time is set—analogous to a quantum measurement collapsing a superposed causal state.
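One way to picture the four-message cycle is as a small state machine in which every step can be undone until the fourth message lands. The class below is a hypothetical illustration of that reading and not a specification of the protocol: the class name, the `advance`/`reverse` methods, and the rule that commitment happens exactly at the fourth message are assumptions drawn from the description above.

```python
class TikTykLink:
    """Toy model of one link running the four-message reversible exchange."""

    SEQUENCE = ("TIK", "TYK", "TIK", "TYK")

    def __init__(self):
        self.step = 0           # number of messages exchanged so far
        self.committed = False  # True once the causal arrow is fixed

    def advance(self):
        """Exchange the next message; the fourth one commits the transaction."""
        if self.committed:
            raise RuntimeError("exchange already committed")
        message = self.SEQUENCE[self.step]
        self.step += 1
        if self.step == len(self.SEQUENCE):
            self.committed = True
        return message

    def reverse(self):
        """Undo the most recent message; only possible before commitment."""
        if self.committed:
            raise RuntimeError("arrow of time is fixed; cannot reverse")
        if self.step > 0:
            self.step -= 1

link = TikTykLink()
first_two = [link.advance(), link.advance()]  # reversible handshake
link.reverse()                                # back off one step without cost
```

After the reversal the link sits one message into the handshake and can still proceed in either direction. Calling `advance` three more times reaches the fourth message, after which `reverse` raises.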

Practically, this suggests a new interpretation of latency, propagation delay, and jitter in distributed systems. These parameters may not be mere artifacts of imperfect transmission, but reflections of a deeper causal oscillation that underlies all communication. Each reversible cycle carries information both forward and backward in local time until entropy production forces a commitment. At macroscopic scales this alternation averages out, appearing as smooth, one-way delay—but the microscopic TIKTYKTIK oscillations still bound the ultimate efficiency and determinism of communication systems.

Appendix C. Reversible Firing Squad on Alternating-Causality Links

This appendix illustrates how reversible synchronization may emerge over a network of alternating-causality links. The mechanism is inspired by the classical Firing Squad Synchronization Problem (FSSP), but is recast in the context of reversible communication primitives such as the TIKTYKTIK protocol.

A system of symmetric links, each capable of locally reversible and temporally bidirectional exchange, can coordinate a simultaneous commit without violating causality. The construction below shows how such a network can compose local reversible exchanges into a single, system-wide irreversible event.

Each link between nodes executes a two-phase, four-message reversible protocol to negotiate ownership of a token. Any error or congestion will successively reverse the phase of the negotiation until both sides are at equilibrium. The FSSP interaction works as follows: each node queries its immediate neighbors for their current state and receives their replies. After a node has both heard from all of its neighbors and responded to their corresponding requests, it transitions to the next state. If any discrepancy or delay occurs, the node computes the reverse transition, restoring the previous reversible configuration. This ensures that no part of the network advances farther than the slowest participating node. The reversible preamble thus acts as an elastic synchronization barrier: progress occurs only when local consensus across all adjacent links has been reached.

This reversible firing-squad mechanism provides an operational illustration of how local reversibility and symmetric causality can compose to produce a global irreversible simultaneous event. Entropy is localized to the final message in each link, while the prior coordination remains energetically neutral. The result is a distributed system capable of reaching simultaneous commit without clocks, but instead through the geometry of its causal exchanges.

Although this construction is illustrative rather than formal, it clarifies the physical intuition behind synchronization in alternating-causality networks. The same principles may extend to broader classes of reversible or self-timed systems, where global order emerges from the alignment of locally reversible interactions. Future work will formally verify the protocol and examine its guarantees.

References

- Shannon, C.E. A Mathematical Theory of Communication. Bell System Technical Journal 1948, 27, 379–423. [Google Scholar] [CrossRef]

- Donoghue, J.F.; Menezes, G. Arrow of Causality and Quantum Gravity. Phys. Rev. Lett. 2019, 123, 171601. [Google Scholar] [CrossRef] [PubMed]

- Donoghue, J.F.; Menezes, G. Quantum causality and the arrows of time and thermodynamics. Progress in Particle and Nuclear Physics 2020, 115, 103812. [Google Scholar] [CrossRef]

- Procopio, L.M.; Castaños-Cervantes, L.O.; Bartley, T.J. 2025; arXiv:quant-ph/2509.02209].

- de la Hamette, A.C.; Kabel, V.; Christodoulou, M.; Brukner, i.c.v. Indefinite Causal Order and Quantum Coordinates. Phys. Rev. Lett. 2025, 135, 141402. [Google Scholar] [CrossRef]

- Escandón-Monardes, J. A map of indefinite causal order. 2025, arXiv:quant-ph/2506.04607. [Google Scholar]

- Zhao, X.; Zhao, B.; Chiribella, G. The quantum communication power of indefinite causal order. 2025, arXiv:2510.08507. [Google Scholar] [CrossRef]

- Kuypers, S.; Rijavec, S. Measuring time in a timeless universe. 2025, arXiv:quant-ph/2406.14642. [Google Scholar] [CrossRef]

- Surace, J. A Theory of Inaccessible Information. Quantum 2024, 8, 1464. [Google Scholar] [CrossRef]

- Bennett, C.H.; Shor, P.W. Quantum information theory. IEEE transactions on information theory 2002, 44, 2724–2742. [Google Scholar] [CrossRef]

- Oreshkov, O.; Costa, F.; Brukner, Č. Quantum correlations with no causal order. Nature Communications 2012, 3, 1092. [Google Scholar] [CrossRef] [PubMed]

- Wheeler, J.A.; Feynman, R.P. Interaction with the Absorber as the Mechanism of Radiation. Rev. Mod. Phys. 1945, 17, 157–181. [Google Scholar] [CrossRef]

- Rényi, A. On measures of entropy and information. In Proceedings of the Fourth Berkeley symposium on mathematical statistics and probability; 1961; Vol. 1, pp. 547–561. [Google Scholar]

- Usher, M. Information Theory for Information Technologists; Macmillan, 1984.

- Bennett, C.H. Logical Reversibility of Computation. IBM Journal of Research and Development 1973, 17, 525–532. [Google Scholar] [CrossRef]

- Price, H. Time’s Arrow and Archimedes’ Point: New Directions for the Physics of Time; Oxford University Press, 1996.

- Bennett, C.H. Time/Space Trade-Offs for Reversible Computation. SIAM Journal on Computing 1989, 18, 766–776. [Google Scholar] [CrossRef]

- Frank, M.P. Reversibility for Efficient Computing. Science of Computer Programming 1999, 37, 83–94. [Google Scholar]

- Johnston, D. Online Testing Entropy and Entropy Tests with a Two State Markov Model. In Proceedings of the Security, Privacy, and Applied Cryptography Engineering; Knechtel, J., Chatterjee, U., Forte, D., Eds.; Cham, 2025; pp. 110–128. [Google Scholar]

- Johnston, D. Random Number Generators, Principles and Practicess; De|G Press: Berlin, Boston, 2018. [Google Scholar] [CrossRef]

- Parker, R.J. Entropy justification for metastability based nondeterministic random bit generator. 2017 IEEE 2nd International Verification and Security Workshop (IVSW), 2017; 25–30. [Google Scholar]

- University of Surrey. Physicists uncover evidence of two arrows of time emerging from the quantum realm. University of Surrey News 2025. [Google Scholar]

- Guff, T.; Shastry, A.; Rocco, N. Emergence of opposing arrows of time in open quantum systems. Scientific Reports 2025, 15, 87323. [Google Scholar] [CrossRef]

- Liu, Z.D.; Chen, B.; Dahlsten, O. Inferring the arrow of time in quantum spatiotemporal correlations. arXiv preprint 2024, arXiv:2311.07086v2. [Google Scholar] [CrossRef]

- Füllgraf, K.; Wang, Y.; Gemmer, J. Evidence for simple “arrow of time functions” in closed chaotic quantum systems. arXiv preprint 2024, arXiv:2408.08007. [Google Scholar] [CrossRef] [PubMed]

- Geier, S.; et al. Time-reversal in a dipolar quantum many-body spin system. arXiv preprint 2024, arXiv:2402.13873. [Google Scholar] [CrossRef]

- Zhang, L.; et al. Quantum Algorithm for Reversing Unknown Unitary Evolutions. arXiv preprint 2024, arXiv:2403.04704. [Google Scholar]

- Phys.org. Researchers realize time reversal through input-output indefiniteness. Phys.org 2024. [Google Scholar]

- Youvan, D. A New Thermodynamics Featuring the Arrow in Time. arXiv preprint 2024, arXiv:2409.19033v1. [Google Scholar]

- Xue, Q.F.; et al. Evidence of genuine quantum effects in nonequilibrium entropy production. arXiv preprint 2024, arXiv:2402.06858. [Google Scholar]

- Di Terlizzi, I. Force-free kinetic inference of entropy production. arXiv preprint 2025, arXiv:2502.07540. [Google Scholar]

- Pachter, J. The foundations of statistical physics: entropy, irreversibility, and inference. arXiv preprint 2024, arXiv:2310.06070. [Google Scholar] [CrossRef]

- Barbour, J. Time’s Arrow and Simultaneity. Timing & Time Perception 2024, 12, 125. [Google Scholar] [CrossRef]

- Rovelli, C. Reversing the Arrow of Time; Cambridge University Press, 2024.

- De Cesare, M. Arrows of time in bouncing cosmologies. arXiv preprint 2024, arXiv:2405.01380. [Google Scholar]

- Papadopoulos, L.; et al. Arrows of Time for Large Language Models. arXiv preprint 2024, arXiv:2401.17505. [Google Scholar]

- Youvan, D. The Computational Arrow of Time: Exploring the Emergence of Temporal Direction Through Complexity and Entropy. ResearchGate preprint 2024. [Google Scholar]

- Prokopenko, M. Biological arrow of time: Emergence of tangled information hierarchies and self-modelling dynamics. arXiv preprint 2024, arXiv:2409.12029. [Google Scholar] [CrossRef]

- LHCb Collaboration. Observation of charge-parity symmetry breaking in baryon decays. Nature 2025. [CrossRef]

- Austrian Academy of Sciences. Observations of the delayed-choice quantum eraser using coherent photons. Scientific Reports 2023. [CrossRef]

- Lesovik, G.; et al. Arrow of time and its reversal on the IBM quantum computer. Scientific Reports 2019, 9, 4396. [Google Scholar] [CrossRef]

- MIT News. Physicists harness quantum “time reversal” to measure vibrating atoms. MIT News, 2022.

- Phys.org. Scientists observe ’negative time’ in quantum experiments. Phys.org 2024. [Google Scholar]

- ScienceDaily. New method to measure entropy production on the nanoscale. ScienceDaily 2024. [Google Scholar]

- others. Deep learning probability flows and entropy production rates in active matter. PNAS 2024. [Google Scholar] [CrossRef]

- others. Learning Stochastic Thermodynamics Directly from Correlation and Trajectory-Fluctuation Currents. arXiv preprint, arXiv:2504.19007v1.

- Stanford Encyclopedia of Philosophy. Boltzmann’s Work in Statistical Physics. Stanford Encyclopedia of Philosophy, 2024.

- others. H-theorem in quantum physics. Scientific Reports 2016, 6, 32815. [Google Scholar] [CrossRef] [PubMed]

- others. Emergence of Entropic Time in a Tabletop Wheeler-DeWitt Universe. arXiv preprint, arXiv:2509.07745.

- Carroll, S. From Eternity to Here: The Quest for the Ultimate Theory of Time; Dutton, 2010.

- Barbour, J. The End of Time: The Next Revolution in Physics; Oxford University Press, 2001.

- Smolin, L. Time Reborn: From the Crisis in Physics to the Future of the Universe; Houghton Mifflin Harcourt, 2013.

- Rovelli, C. Relational quantum mechanics. International Journal of Theoretical Physics 1996, 35, 1637–1678. [Google Scholar] [CrossRef]

- Moreva, E.; et al. Time from quantum entanglement: an experimental illustration. Physical Review A 2014, 89, 052122, arXiv:1310.4691.

- Spivak, D.; Schultz, P. Temporal Type Theory: A topos-theoretic approach to systems and behavior. arXiv preprint 2017, arXiv:1710.10258.

- others. Holography of information in AdS/CFT. Journal of High Energy Physics 2022, 2022, 095.

- others. Non-commutative Geometry from Perturbative Quantum Gravity. Physical Review D 2023, 107, 064041, arXiv:2207.03345.

- Hartle, J.B. The physics of now. American Journal of Physics 2005, 73, 101–109.

- Lamport, L. Time, Clocks, and the Ordering of Events in a Distributed System. Communications of the ACM 1978, 21, 558–565.

- Ridley, M.; Cohen, E. Two times or none? 2025, arXiv:2509.22264.

- Cuffaro, M.E.; Hartmann, S. Information-Theoretic Concepts in Physics. Entropy 2025, 27.

- Rioul, O. This is IT: A primer on Shannon’s entropy and information. In Proceedings of the Information Theory: Poincaré Seminar 2018. Springer; 2021; pp. 49–86.

- Stone, J.V. Information Theory: A Tutorial Introduction. 2019; arXiv:cs.IT/1802.05968.

- Aharonov, Y.; Vaidman, L. Properties of a quantum system during the time interval between two measurements. Phys. Rev. A 1990, 41, 11–20.

- Waaijer, M.; Van Neerven, J. Delayed choice experiments: an analysis in forward time. Quantum Studies: Mathematics and Foundations 2024, 11, 391–408.

- Mrini, L.; Hardy, L. Indefinite Causal Structure and Causal Inequalities with Time-Symmetry. 2024, arXiv:2406.18489.

- Aoki, S.; Strumia, A. Testing the arrow of time at the cosmo collider. 2025, arXiv:2510.05204.

- Shiraishi, N.; Takagi, R. Recovery of the second law in fully quantum thermodynamics. 2025, arXiv:2510.05642.

- Li, B. Entanglement and Bell Inequalities from a Dynamically Emergent Temporal Structure. Preprints 2025.

| 1 | This does not apply to erasure errors. |
| 2 | The PIF model replaces statistical uncertainty with phase uncertainty. Entropy grows only when synchronization between the two causal directions breaks down (loss, jitter, slips), or when hard decisions erase soft evidence. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).