Submitted: 27 October 2025

Posted: 29 October 2025


Abstract

Keywords:

1. Introduction

1.1. Background and Contextual Settings

1.2. Literature Review

1.3. Questions, Aims and Objectives

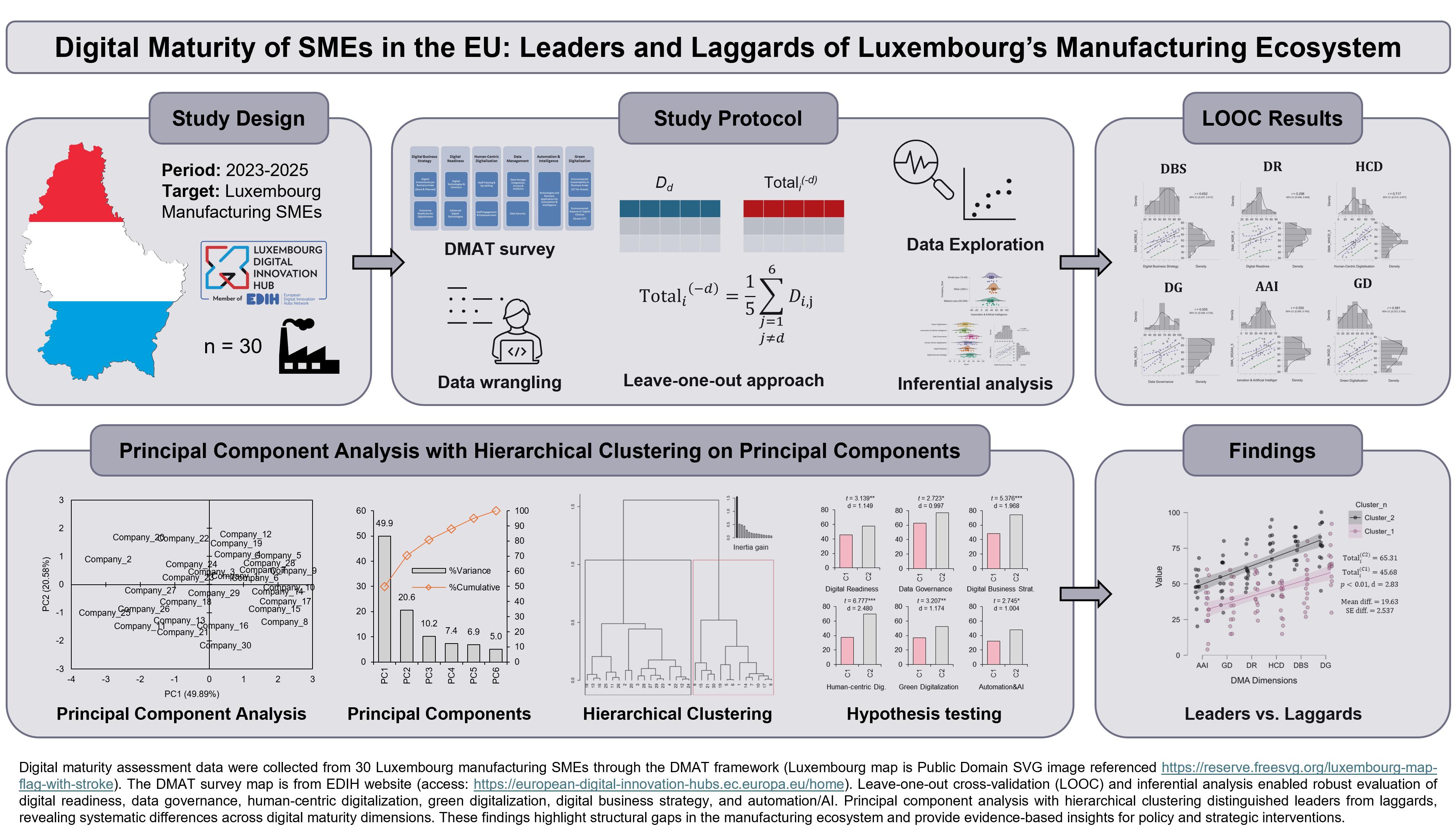

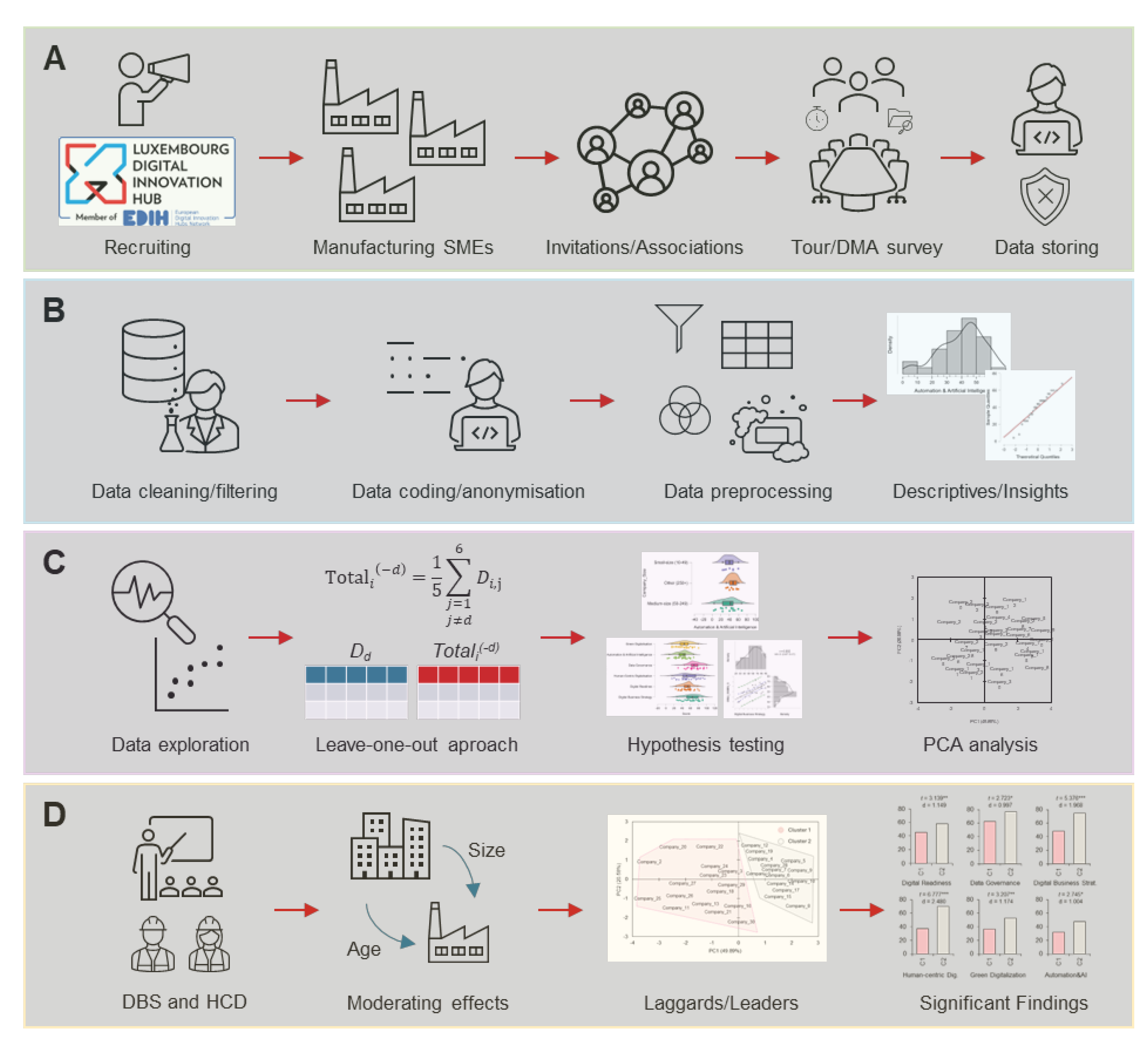

2. Methodology

2.1. Study Protocol

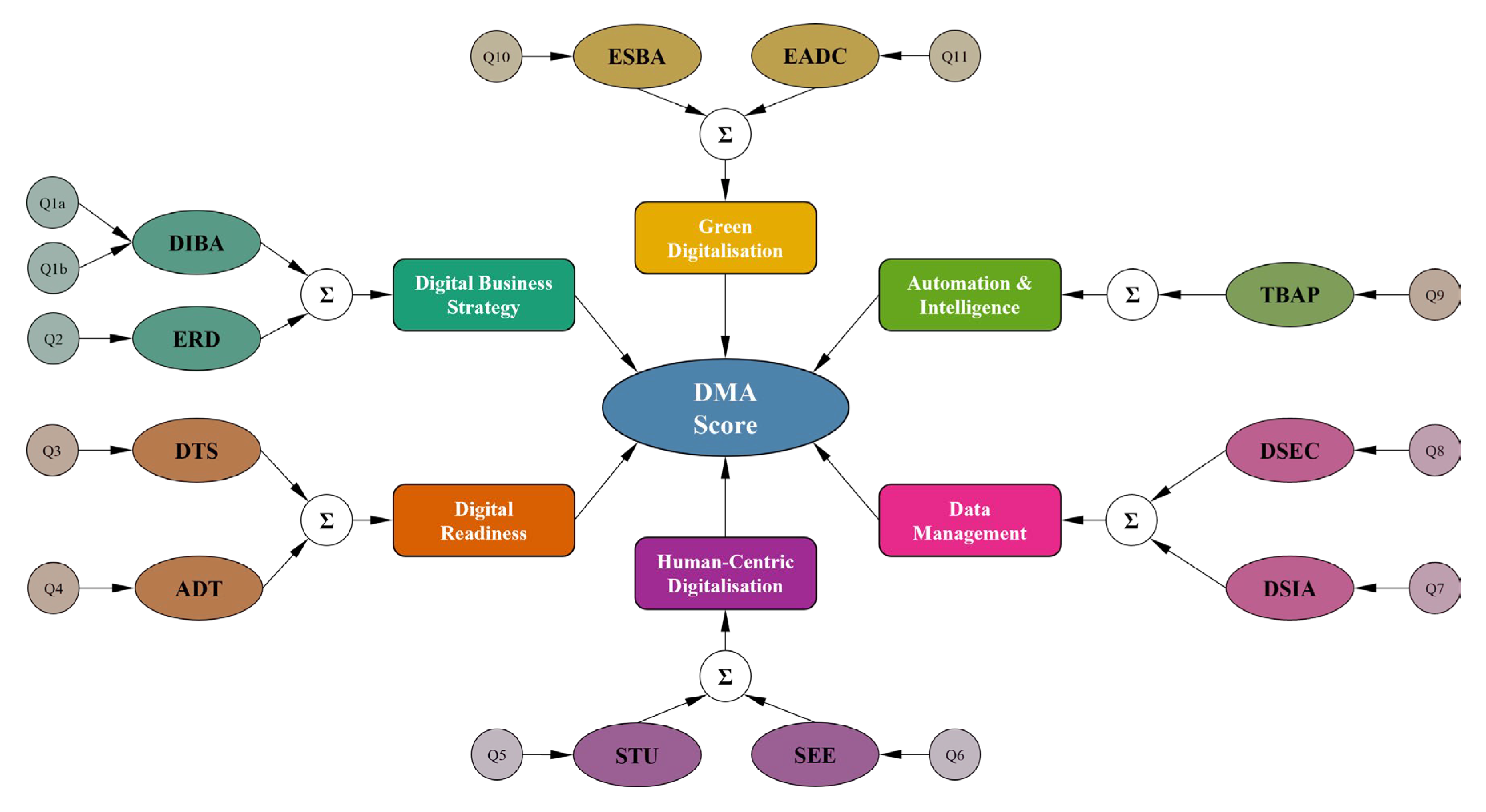

2.2. DMAT Survey and Scoring System
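Using the notation listed under Equations and Abbreviations, the scoring chain runs from option weights $w_{d,q,o}$ and graded responses $g_{i,d,q,o}$ to question scores $Q_{i,d,q}$ (0–10) and dimension scores $D_{i,d}$ (0–100). The Python sketch below is an illustration under stated assumptions, not the official DMAT implementation. It assumes $R_{i,d,q} = \sum_o w_{d,q,o}\, g_{i,d,q,o}$, $Q_{i,d,q} = 10\,R_{i,d,q}/W_{d,q}$, and $D_{i,d}$ equal to ten times the mean question score. The helper names and the two example questions are hypothetical, and $\mathrm{Total}_i$ is left out because its aggregation rule is not reproduced here.

```python
from statistics import mean


def question_score(weights, graded, max_points):
    """Q_{i,d,q}: normalised 0-10 score of one question (assumed rule).

    weights    -- w_{d,q,o} for every response option o
    graded     -- g_{i,d,q,o}, e.g. 1 if enterprise i ticked option o, else 0
    max_points -- W_{d,q}, the maximum attainable points for the question
    """
    raw_points = sum(w * g for w, g in zip(weights, graded))  # R_{i,d,q}
    return 10 * raw_points / max_points


def dimension_score(question_scores):
    """D_{i,d}: assumed to be 10 x the mean question score, giving a 0-100 scale."""
    return 10 * mean(question_scores)


# Hypothetical dimension with two questions (illustrative weights and answers only)
q1 = question_score([1, 1, 1, 1], [1, 0, 1, 0], max_points=4)  # 2 of 4 equally weighted options ticked
q2 = question_score([0, 1, 2, 3], [0, 0, 1, 0], max_points=3)  # graded scale, third level selected
print(q1, round(q2, 2), round(dimension_score([q1, q2]), 2))
```

This prints `5.0 6.67 58.33`. Passing $W_{d,q}$ explicitly keeps the sketch neutral about whether a question is multi-select (maximum = sum of weights) or single-choice (maximum = largest weight).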

2.3. Analytical Strategy

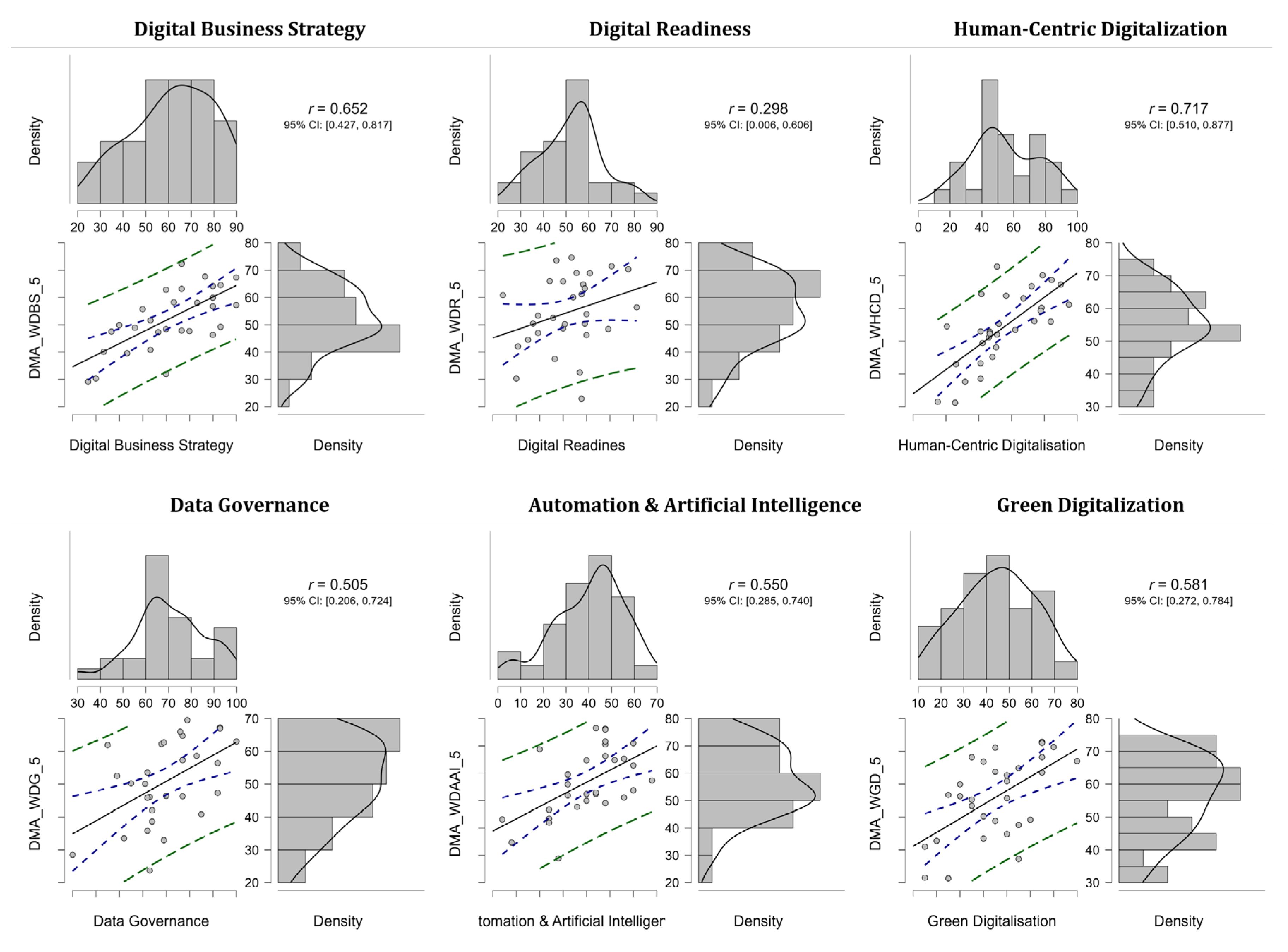

2.3.1. Correlation and Regression Analysis

2.3.2. Moderator Analysis

2.3.3. Within-Subject Comparisons Across Dimensions

2.3.4. PCA with Agglomerative Hierarchical Clustering

2.3.5. Post-hoc Power Analysis

3. Results

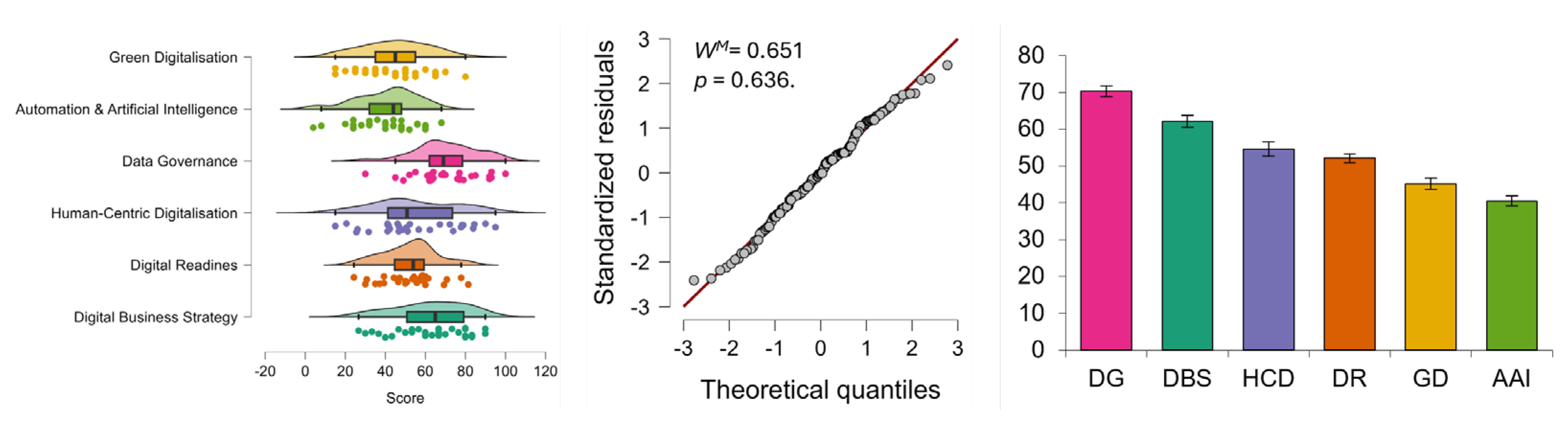

3.1. Descriptive Analysis

3.2. Predictive Strength of Maturity Dimensions

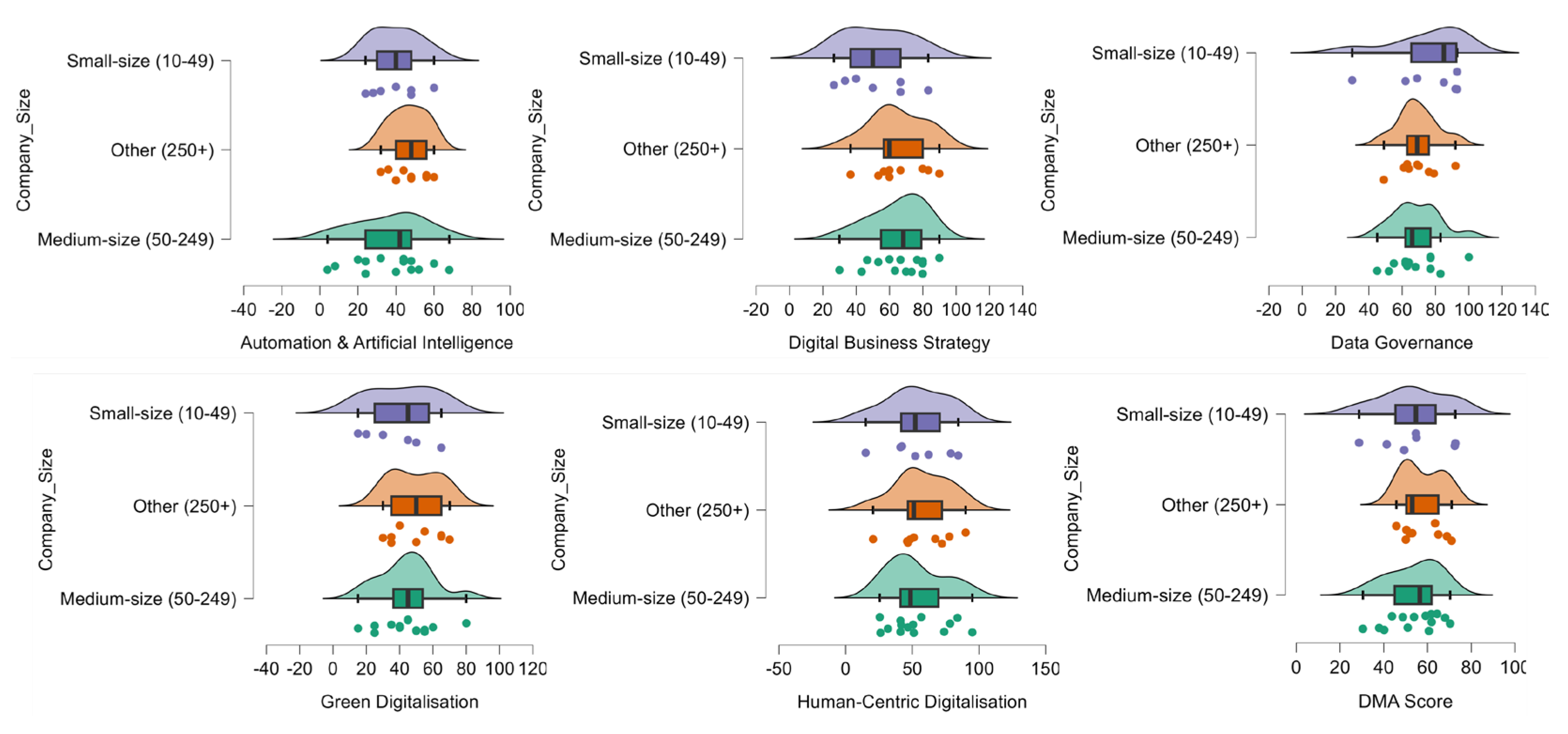

3.3. Structural Moderators of Maturity

3.4. Dimension-Specific Performance

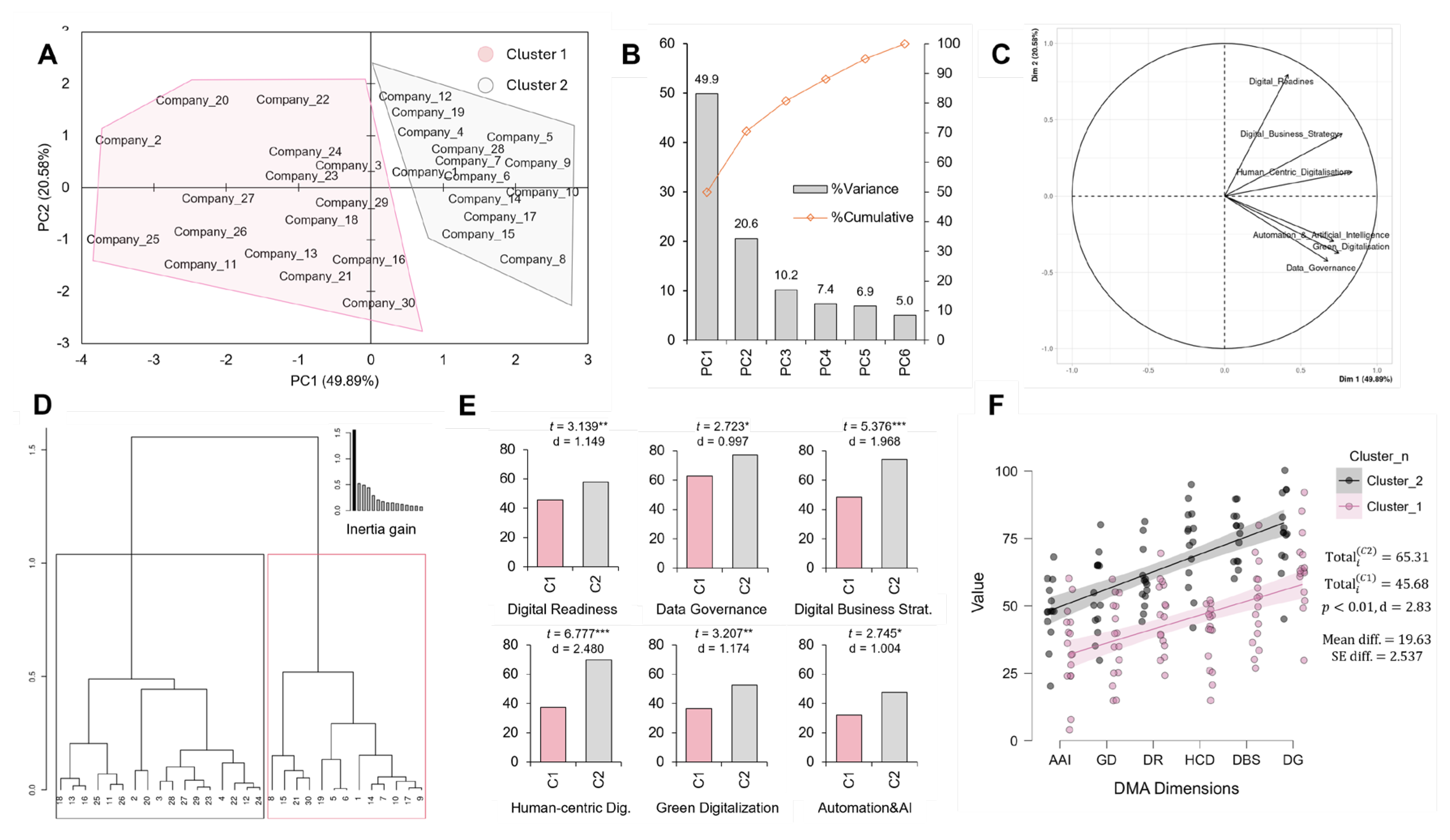

3.5. Emergence of Maturity Profiles Through Clustering

4. Discussion

4.1. Strategic and Human-Centric Enablers as Core Maturity Drivers

4.2. Limited Influence of Structural SME Attributes

4.3. Internal Disparities Between Maturity Dimensions

4.4. Divergent SME Maturity Profiles

5. Conclusions

5.1. Concluding Remarks

5.2. Implications and Limitations

5.3. Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Equations and Abbreviations

| Symbol | Description |
|---|---|
| $i$ | Index for enterprises |
| $n$ | Sample size (number of enterprises) |
| $d$ | Index for dimensions of digital maturity ($d = 1, \ldots, 6$) |
| $q$ | Index for questions within a dimension ($q = 1, \ldots, Q_d$) |
| $o$ | Index for response options within a question $q$ |
| $k$ | Index for principal component axis |
| $w_{d,q,o}$ | Weight assigned to option $o$ of question $q$ in dimension $d$ |
| $g_{i,d,q,o}$ | Graded response value of enterprise $i$ for option $o$ of question $q$ in dimension $d$ |
| $R_{i,d,q}$ | Raw points accrued by enterprise $i$ on question $q$ in dimension $d$ |
| $W_{d,q}$ | Maximum attainable points for question $q$ in dimension $d$ |
| $Q_{i,d,q}$ | Normalised score of enterprise $i$ on question $q$ in dimension $d$, on a 0–10 scale |
| $D_{i,d}$ | Score of enterprise $i$ in dimension $d$, on a 0–100 scale |
| $\mathrm{Total}_i$ | Total digital maturity score of enterprise $i$, on a 0–100 scale |
| $X$ | Data matrix of size $n \times d$ ($30 \times 6$: enterprises by dimensions) used in PCA |
| $Z$ | Standardised data matrix used in PCA |
| $z_{i,d}$ | Standardised score of enterprise $i$ on dimension $d$ |
| $C$ | Correlation matrix in PCA |
| $\lambda_k$ | Eigenvalue of axis $k$, i.e. the variance explained by component $k$ |
| $v_k$ | Eigenvector (loadings) associated with axis $k$ |
| $f_{i,k}$ | Principal component score of enterprise $i$ on axis $k$ |
| $\cos^2_{i,k}$ | Squared cosine (quality of representation) of enterprise $i$ on axis $k$ |
| $\mathrm{ctr}_{i,k}$ | Contribution of enterprise $i$ to axis $k$ |
| $t_{\mathrm{test}}$ | Independent Student’s t-test statistic |
| $s$ | Sample standard deviation |
| $r$ | Pearson’s sample correlation coefficient |
| $\delta$ | Pearson’s population correlation coefficient |
| $R$ | Regression coefficient |
| $\beta$ | Regression weight coefficient |
| SE | Standard error |
| $\Delta(A,B)$ | Increase in within-cluster inertia when clusters $A$ and $B$ are merged (Ward’s criterion) |
| $W_M$ | Mauchly’s test of sphericity statistic |

| Abbreviation | Definition |
|---|---|
| AAI | Automation & Artificial Intelligence |
| ANOVA | Analysis of Variance |
| CEO | Chief Executive Officer |
| CoV | Coefficient of Variation |
| DBS | Digital Business Strategy |
| DG | Data Governance / Data Management and Connectedness |
| DMA | Digital Maturity Assessment |
| DMAM | Digital Maturity Assessment Model |
| DMAT | Digital Maturity Assessment Tool |
| DR | Digital Readiness |
| EC | European Commission |
| EDIH | European Digital Innovation Hub |
| EIB | European Investment Bank |
| EU | European Union |
| GD | Green Digitalisation |
| GDPR | General Data Protection Regulation |
| HCD | Human-Centric Digitalisation |
| HCPC | Hierarchical Clustering on Principal Components |
| IT | Information Technology |
| KPI | Key Performance Indicator |
| L-DIH | Luxembourg Digital Innovation Hub |
| LNDS | Luxembourg National Data Service |
| LOO / LOOC | Leave-One-Out Correlation |
| MADM | Multi-Attribute Decision-Making |
| ML | Machine Learning |
| MLOps | Machine Learning Operations |
| NACE | Statistical Classification of Economic Activities in the European Community |
| NR | Non-Related (NR1 / NR2 in context) |
| PCA | Principal Component Analysis |
| PSO | Public Sector Organisation |
| RMSE | Root Mean Square Error |
| RQ | Research Question |
| SD | Standard Deviation |
| SLR | Systematic Literature Review |
| SME | Small and Medium-Sized Enterprise |
| SW | Shapiro–Wilk test |
| TBI | Test Before Invest |
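The PCA symbols defined above ($X$, $Z$, $C$, $\lambda_k$, $v_k$, $f_{i,k}$, $\cos^2_{i,k}$, $\mathrm{ctr}_{i,k}$) fit together as a standard correlation-matrix PCA. The NumPy sketch below is illustrative only and is not the FactoMineR routine cited in the references: it assumes equal row weights $1/n$ and standardisation with the population ($1/n$) standard deviation. The function name `standardised_pca` and the random $30 \times 6$ input are hypothetical stand-ins for the study's dimension scores.

```python
import numpy as np


def standardised_pca(X):
    """Correlation-matrix PCA of an n x p score matrix X (rows = enterprises).

    Assumes equal row weights 1/n and population (1/n) standard deviations.
    Returns eigenvalues, eigenvectors, scores, squared cosines and contributions (%).
    """
    X = np.asarray(X, dtype=float)
    n, _ = X.shape
    Z = (X - X.mean(axis=0)) / X.std(axis=0)  # z_{i,d}: centred, unit variance
    C = Z.T @ Z / n                            # correlation matrix C
    eigvals, eigvecs = np.linalg.eigh(C)       # eigh, because C is symmetric
    order = np.argsort(eigvals)[::-1]          # sort axes by decreasing lambda_k
    lam, V = eigvals[order], eigvecs[:, order] # lambda_k and v_k (columns of V)
    F = Z @ V                                  # f_{i,k}: scores on each axis
    sq_dist = (Z ** 2).sum(axis=1, keepdims=True)
    cos2 = F ** 2 / sq_dist                    # share of row i's squared distance carried by axis k
    ctr = 100 * F ** 2 / (n * lam)             # % contribution of row i to axis k
    return lam, V, F, cos2, ctr


# Hypothetical 30 x 6 matrix of dimension scores (random, not the study's data)
rng = np.random.default_rng(0)
X = rng.uniform(0, 100, size=(30, 6))
lam, V, F, cos2, ctr = standardised_pca(X)
print(np.round(lam, 3))            # eigenvalues, largest first
print(round(float(lam.sum()), 6))  # equals p = 6 up to floating-point error
```

Because all $p$ axes are kept here, each row of `cos2` sums to 1 and each column of `ctr` sums to 100; when only the first few axes are retained for clustering, an enterprise's squared cosines sum to less than 1.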

References

1. Milošević, I.M.; Plotnic, O.; Tick, A.; Stanković, Z.; Buzdugan, A. Digital Transformation in Manufacturing: Enhancing Competitiveness Through Industry 4.0 Technologies. Precision Mechanics & Digital Fabrication 2024, 1, 31–40.

2. Ghobakhloo, M.; Iranmanesh, M. Digital Transformation Success under Industry 4.0: A Strategic Guideline for Manufacturing SMEs. Journal of Manufacturing Technology Management 2021, 32, 1533–1556.

3. Yaqub, M.Z.; Alsabban, A. Industry-4.0-Enabled Digital Transformation: Prospects, Instruments, Challenges, and Implications for Business Strategies. Sustainability 2023, 15, 8553.

4. Sharma, M.; Paliwal, T.; Baniwal, P. Challenges in Digital Transformation and Automation for Industry 4.0. In AI-Driven IoT Systems for Industry 4.0; CRC Press: Boca Raton, 2024; pp. 143–163.

5. Agustian, K.; Mubarok, E.S.; Zen, A.; Wiwin, W.; Malik, A.J. The Impact of Digital Transformation on Business Models and Competitive Advantage. Technology and Society Perspectives (TACIT) 2023, 1, 79–93.

6. European Commission. 2030 Digital Compass: The European Way for the Digital Decade; Brussels, 2021.

7. Brodny, J.; Tutak, M. Digitalization of Small and Medium-Sized Enterprises and Economic Growth: Evidence for the EU-27 Countries. Journal of Open Innovation: Technology, Market, and Complexity 2022, 8, 67.

8. Strilets, V.; Frolov, S.; Datsenko, V.; Tymoshenko, O.; Yatsko, M. State Support for the Digitalization of SMEs in European Countries. Problems and Perspectives in Management 2022, 20, 290–305.

9. European Commission. The Digital Europe Programme. Available online: https://digital-strategy.ec.europa.eu/en/activities/digital-programme#:~:text=The%20Digital%20Europe%20Programme%20,EDIH (accessed on 19 September 2025).

10. Belciu, A.-C. Performance in Accessing Funding through Digital Europe Programme. Eastern European Journal for Regional Studies 2025, 11, 45–62.

11. Kanellopoulou, D.; Giannakopoulos, G.; Terlixidis, P.; Karkaletsis, V. Smart Attica EDIH: A Paradigm for DIH Governance and a Novel Methodology for AI-Powered One-Stop-Shop Projects Design. In Springer Proceedings in Business and Economics; Springer Nature, 2025; pp. 151–159.

12. Rudawska, J. The One Stop Shop Model – A Case Study of a Digital Innovation Hub. Zeszyty Naukowe Politechniki Częstochowskiej. Zarządzanie 2022, 47, 31–42.

13. Carpentier, E.; D’Adda, D.; Nepelski, D.; Stake, J. European Digital Innovation Hubs Network’s Activities and Customers; Luxembourg, 2025.

14. Maurer, F. Business Intelligence and Innovation: A Digital Innovation Hub as Intermediate for Service Interaction and System Innovation for Small and Medium-Sized Enterprises. In IFIP Advances in Information and Communication Technology; Springer Science+Business Media, 2021; pp. 449–459; ISSN 1868-4238.

15. Sassanelli, C.; Terzi, S. The D-BEST Based Digital Innovation Hub Customer Journey Analysis Method: Configuring DIHs Unique Value Proposition. International Journal of Engineering Business Management 2022, 14.

16. Gyulai, T.; Nagy, M.; Cibu-Buzac, R. Smart Development with Digital Intelligent Cities in Cross-Border Regions. In Pandémia – fenntartható gazdálkodás – környezettudatosság; Soproni Egyetem Kiadó, 2022; pp. 264–677.

17. Kalpaka, A.; Rissola, G.; De Nigris, S.; Nepelski, D. Digital Maturity Assessment (DMA) Framework & Questionnaires for SMEs/PSOs: A Guidance Document for EDIHs; Seville, 2023.

18. Kulchytsky, O. Assessment of Digital Maturity of Business Companies According to the Methodology of European Digital Innovation Hubs. Economics Time Realities 2024, 5, 117–122.

19. European Commission. Commission Unveils New Tool to Help SMEs Self-Assess Their Digital Maturity. Available online: https://digital-strategy.ec.europa.eu/en/news/commission-unveils-new-tool-help-smes-self-assess-their-digital-maturity (accessed on 19 September 2025).

20. European Commission. Luxembourg 2025 Digital Decade Country Report. Available online: https://digital-strategy.ec.europa.eu/en/factpages/luxembourg-2025-digital-decade-country-report (accessed on 19 September 2025).

21. The Government of the Grand Duchy of Luxembourg. Digital Decade: National Strategic Roadmap for Luxembourg 2.0; Luxembourg, 2024.

22. European Commission. State of the Digital Decade 2024; Brussels, 2024.

23. Eurostat. Digitalisation in Europe – 2025 Edition. Available online: https://ec.europa.eu/eurostat/web/interactive-publications/digitalisation-2025#:~:text=The%20proportion%20of%20SMEs%20with,in%20Finland (accessed on 25 September 2025).

24. Ministry of the Economy. Ons Wirtschaft vu Muer: Roadmap for a Competitive and Sustainable Economy 2025; Luxembourg, 2021.

25. The Government of the Grand Duchy of Luxembourg. Luxembourg’s AI Strategy: Accelerating Digital Sovereignty 2030; Luxembourg, 2025.

26. LNDS. Annual Report 2024; Esch-sur-Alzette, 2024.

27. Sobczak, E. Digital Transformation of Enterprises and Employment in Technologically Advanced and Knowledge-Intensive Sectors in the European Union Countries. Sustainability 2025, 17, 5868.

28. Tanhua, D.; Tuomi, E.O.; Kesti, K.; Ogilvie, B.; Delgado Sahagún, C.; Nicolas, J.; Rodríguez, A.; Pajares, J.; Banville, L.; Arcusin, L.; et al. Digital Maturity of the Companies in Smart Industry Era. Scientific Papers Series Management, Economic Engineering in Agriculture and Rural Development 2024, 24, 855–876.

29. Kljajić Borštnar, M.; Pucihar, A. Multi-Attribute Assessment of Digital Maturity of SMEs. Electronics (Basel) 2021, 10, 885.

30. Volf, L.; Dohnal, G.; Beranek, L.; Kyncl, J. Navigating the Fourth Industrial Revolution: SBRI - A Comprehensive Digital Maturity Assessment Tool and Road to Industry 4.0 for Small Manufacturing Enterprises. Manufacturing Technology 2024, 24, 668–680.

31. Novoa, R. Training Needs in Digital Technologies in Companies in the Valencian Region: An Empirical Study Carried out in the Framework of the European Network of EDIHs (European Digital Innovation Hubs). Research Square 2024.

32. Rahamaddulla, S.R. Bin; Leman, Z.; Baharudin, B.T.H.T. Bin; Ahmad, S.A. Conceptualizing Smart Manufacturing Readiness-Maturity Model for Small and Medium Enterprise (SME) in Malaysia. Sustainability 2021, 13.

33. Semrádová Zvolánková, S.; Krajčík, V. Digital Maturity of Czech SMEs Concerning the Demographic Characteristics of Entrepreneurs and Enterprises. Equilibrium. Quarterly Journal of Economics and Economic Policy 2024, 19, 1363–1404.

34. Di Felice, P.; Paolone, G.; Di Valerio, D.; Pilotti, F.; Sciamanna, M. Transforming DIGROW into a Multi-Attribute Digital Maturity Model. Formalization and Implementation of the Proposal. In Lecture Notes in Computer Science; Springer Science and Business Media Deutschland GmbH, 2022; Vol. 13378; pp. 541–557.

35. Serrano-Ruiz, J.C.; Ferreira, J.; Jardim-Gonçalves, R.; Ortiz Bas, Á. Relational Network of Innovation Ecosystems Generated by Digital Innovation Hubs: A Conceptual Framework for the Interaction Processes of DIHs from the Perspective of Collaboration within and between Their Relationship Levels. J Intell Manuf 2025, 36, 1505–1545.

36. Crupi, A.; del Sarto, N.; Di Minin, A.; Gregori, G.L.; Lepore, D.; Marinelli, L.; Spigarelli, F. The Digital Transformation of SMEs – a New Knowledge Broker Called the Digital Innovation Hub. Journal of Knowledge Management 2020, 24, 1263–1288.

37. Kääriäinen, J.; Perätalo, S.; Saari, L.; Koivumäki, T.; Tihinen, M. Supporting the Digital Transformation of SMEs – Trained Digital Evangelists Facilitating the Positioning Phase. International Journal of Information Systems and Project Management 2023, 11, 5–27.

38. Leino, S.-P.; Kuusisto, O.; Paasi, J.; Tihinen, M. VTT Model of Digimaturity. In Towards a New Era in Manufacturing: Final Report of VTT’s For Industry Spearhead Programme; 2017; pp. 1–186.

39. Kudryavtsev, D.; Moilanen, T.; Laatikainen, E.; Ali Khan, U. Towards Actionable AI Implementation Capability Maturity Assessment for SMEs. In Herman Hollerith Conference 2024; 2025; pp. 7–17.

40. Krčelić, G.; Lorencin, I.; Tanković, N. Digital Maturity of Private and Public Organizations in RH. In Proceedings of the 2025 MIPRO 48th ICT and Electronics Convention; 2025; pp. 1857–1861.

41. Shirwa, A.M.; Hassan, A.M.; Hassan, A.Q.; Kilinc, M. A Cooperative Governance Framework for Sustainable Digital Transformation in Construction: The Role of Digital Enablement and Digital Strategy. Results in Engineering 2025, 25, 104139.

42. Grigorescu, A.; Lincaru, C.; Ciuca, V.; Priciog, S. Trajectories toward Digital Transformation of Business Post 2027 in Romania. In Proceedings of the Managing Business Transformations during Uncertain Times; Bucharest, 26 October 2023; pp. 244–259.

43. Zare, L.; Ali, M. Ben; Rauch, E.; Matt, D.T. Navigating Challenges of Small and Medium-Sized Enterprises in the Era of Industry 5.0. Results in Engineering 2025, 27, 106457.

44. Mazgajczyk, E.; Pietrusewicz, K.; Kujawski, K. Digital Maturity in Mapping the European Digital Innovation Hub Services. Pomiary Automatyka Robotyka 2024, 28, 125–140.

45. Lorencin, I.; Krčelić, G.; Blašković, L.; Žužić, A.; Licardo, J.T.; Babić, S.; Etinger, D.; Tanković, N. From Innovation to Impact: Case Studies of EDIH Adria TBI Projects. In Proceedings of the 2025 MIPRO 48th ICT and Electronics Convention; 2025; pp. 1851–1856.

46. Quenum, G.G.Y.; Vallée, S.; Ertz, M. The Digital Maturity of Small- and Medium-Sized Enterprises in the Saguenay-Lac-Saint-Jean Region. Machines 2025, 13, 835.

47. Yezhebay, A.; Sengirova, V.; Igali, D.; Abdallah, Y.O.; Shehab, E. Digital Maturity and Readiness Model for Kazakhstan SMEs. In Proceedings of the SIST 2021 - 2021 IEEE International Conference on Smart Information Systems and Technologies; Institute of Electrical and Electronics Engineers Inc., 28 April 2021.

48. Omol, E.J.; Mburu, L.W.; Abuonji, P.A. Digital Maturity Assessment Model (DMAM): Assimilation of Design Science Research (DSR) and Capability Maturity Model Integration (CMMI). Digital Transformation and Society 2025, 4, 128–152.

49. Alqoud, A.; Milisavljevic-Syed, J.; Salonitis, K. Self-Assessment Model for Digital Retrofitting of Legacy Manufacturing Systems in the Context of Industry 4.0. International Journal of Industrial Engineering and Management 2025, 16, 316–336.

50. M. Saari, L.; Kääriäinen, J.; Ylikerälä, M. Maturity Model for the Manufacturing Industry with Case Experiences. Intelligent and Sustainable Manufacturing 2024, 1, 10010.

51. Spaltini, M.; Acerbi, F.; Pinzone, M.; Gusmeroli, S.; Taisch, M. Defining the Roadmap towards Industry 4.0: The 6Ps Maturity Model for Manufacturing SMEs. In Procedia CIRP; Elsevier B.V., 2022; Vol. 105; pp. 631–636.

52. Krulčić, E.; Doboviček, S.; Pavletić, D.; Čabrijan, I. A MCDA Based Model for Assessing Digital Maturity in Manufacturing SMEs. Tehnicki Glasnik 2025, 19, 37–42.

53. Krulčić, E.; Doboviček, S.; Pavletić, D.; Čabrijan, I. A Dynamic Assessment of Digital Maturity in Industrial SMEs: An Adaptive AHP-Based Digital Maturity Model (DMM) with Customizable Weighting and Multidimensional Classification (DAMA-AHP). Technologies (Basel) 2025, 13.

54. Almeida, F. The Value of Digital Transformation Initiatives in Manufacturing Firms. Master Thesis, NOVA Information Management School, 2024.

55. De Carolis, A.; Sassanelli, C.; Acerbi, F.; Macchi, M.; Terzi, S.; Taisch, M. The Digital REadiness Assessment MaturitY (DREAMY) Framework to Guide Manufacturing Companies towards a Digitalisation Roadmap. Int J Prod Res 2025, 63, 5555–5581.

56. Schuh, G.; Anderl, R.; Dumitrescu, R.; Krüger, A.; ten Hompel, M. Industrie 4.0 Maturity Index: Managing the Digital Transformation of Companies. Available online: https://en.acatech.de/publication/industrie-4-0-maturity-index-update-2020/ (accessed on 23 September 2025).

57. Pandey, A.; Branson, D. 2020 Digital Operations Study for Energy: Oil and Gas. Available online: https://www.strategyand.pwc.com/gx/en/insights/2020/digital-operations-study-for-oil-and-gas/2020-digital-operations-study-for-energy-oil-and-gas.pdf (accessed on 23 September 2025).

58. Deloitte. Digital Maturity Model: Achieving Digital Maturity to Drive Growth. Available online: https://www.readkong.com/page/digital-maturity-model-achieving-digital-maturity-to-8032400 (accessed on 23 September 2025).

59. van Tonder, C.; Bossink, B.; Schachtebeck, C.; Nieuwenhuizen, C. Key Dimensions That Measure the Digital Maturity Levels of Small and Medium-Sized Enterprises (SMEs). Journal of Technology Management & Innovation 2024, 19, 110–130.

60. Durst, S.; Eðvarðsson, I.; Foli, S. Knowledge Management in SMEs: A Follow-up Literature Review. Journal of Knowledge Management 2023, 27, 25–58.

61. Cognet, B.; Pernot, J.-P.; Rivest, L.; Danjou, C. Systematic Comparison of Digital Maturity Assessment Models. Journal of Industrial and Production Engineering 2023, 40, 519–537.

62. Orošnjak, M.; Brkljač, N.; Ristić, K. Fostering Cleaner Production through the Adoption of Sustainable Maintenance: An Umbrella Review with a Questionnaire-Based Survey Analysis. Cleaner Production Letters 2025, 8, 100095.

63. Shi, Q.; Shen, L. Advancing Firm-Level Digital Technology Diffusion: A Hybrid Bibliometric and Framework-Based Systematic Literature Review. Systems 2025, 13, 262.

64. Vadana, I.-I.; Kuivalainen, O.; Torkkeli, L.; Saarenketo, S. The Role of Digitalization on the Internationalization Strategy of Born-Digital Companies. Sustainability 2021, 13, 14002.

65. EIB. Who Is Prepared for the New Digital Age? 2019.

66. McElheran, K.; Li, J.F.; Brynjolfsson, E.; Kroff, Z.; Dinlersoz, E.; Foster, L.; Zolas, N. AI Adoption in America: Who, What, and Where. J Econ Manag Strategy 2024, 33, 375–415.

67. Steiber, A.; Alänge, S.; Ghosh, S.; Goncalves, D. Digital Transformation of Industrial Firms: An Innovation Diffusion Perspective. European Journal of Innovation Management 2021, 24, 799–819.

68. Marin, R.; Santos-Arteaga, F.J.; Tavana, M.; Di Caprio, D. Value Chain Digitalization and Technological Development as Innovation Catalysts in Small and Medium-Sized Enterprises. Journal of Innovation & Knowledge 2023, 8.

69. Kalpaka, A. A Deep Dive into the Digital Maturity Assessment Tool. Available online: https://european-digital-innovation-hubs.ec.europa.eu/system/files/2023-02/dma_tool_16.02.2023_presentation.pdf (accessed on 25 September 2025).

70. European Commission. Digital Maturity Assessment Questionnaire for SMEs. Available online: https://european-digital-innovation-hubs.ec.europa.eu/system/files/2024-02/DMA%20Questionnaire%20for%20SMEs_EDIH_network_EN_v2.1.pdf (accessed on 25 September 2025).

71. Husson, F.; Le, S.; Pages, J. Exploratory Multivariate Analysis by Example Using R, 2nd ed.; Chapman & Hall/CRC Taylor and Francis Group: Milton Park, Abingdon, OX, UK, 2017.

72. Husson, F.; Josse, J.; Pages, J. Principal Component Methods - Hierarchical Clustering - Partitional Clustering: Why Would We Need to Choose for Visualizing Data? 2010.

73. Husson, F.; Josse, J.; Le, S.; Mazet, J. FactoMineR: Multivariate Exploratory Data Analysis and Data Mining; R package, 2024.

74. Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Routledge, 2013; ISBN 9781134742707.

75. Faul, F.; Erdfelder, E.; Lang, A.-G.; Buchner, A. G*Power 3: A Flexible Statistical Power Analysis Program for the Social, Behavioral, and Biomedical Sciences. Behav Res Methods 2007, 39, 175–191.

76. Eurostat. NACE Rev. 2: Statistical Classification of Economic Activities in the European Community; Office for Official Publications of the European Communities: Luxembourg, 2008.

77. European Commission. Commission Recommendation 2003/361/EC of 6 May 2003 Concerning the Definition of Micro, Small and Medium-Sized Enterprises. Official Journal of the European Union 2003, 124, 36–41.

78. Potvin, P.J.; Schutz, R.W. Statistical Power for the Two-Factor Repeated Measures ANOVA. Behavior Research Methods, Instruments, & Computers 2000, 32, 347–356.

79. Boral, S.; Black, L.; Velis, C. Conceptualizing Systems Thinking and Complexity Modelling for Circular Economy Quantification: A Systematic Review and Critical Analysis; 2025.

| Reference | Survey tool | Region | Sample pool | Sample size |
|---|---|---|---|---|
| [46] | Custom DM tool | Canada | SMEs | 30 |
| [39] | FAIR EDIH AI | Finland | SMEs | >60 |
| [29] | MADM model | Slovenia | SMEs | 7 |
| [47] | DM and Readiness | Kazakhstan | SMEs | 12 (managers) |
| [30] | SBRI DMA | Czech | SMEs | 23 |
| [48] | DMAM | Kenya | SMEs | 382 |
| [49] | Digital retrofitting | UK | SMEs | 32 |
| [50] | OSME Tool | Finland | SMEs+Large | 9 |
| [28] | DM Scan | EU (Mixed) | SMEs+Large | 70 |
| [33] | Custom DM tool | Czech | SMEs | >100 |
| [51] | 6P Maturity Model | Italy | SMEs | 9 |
| [52] | MCDA DMA | Croatia | SMEs | 3 |
| [53] | Custom DMA | Croatia | SMEs | 6 |
| [32] | Smart Readiness | Global | SMEs | Concept* |
| [54] | TOE-based survey | Portugal | SMEs+Large | 9 |
| [27] | EU indicators | EU | Enterprises | Countries† |
| [55] | DREAMY | Italy | SMEs+Large | 1 (380 test) |
| [37] | VTT’s VMoD | Finland | SMEs | 19 |
| [38] | VTT’s VMoD | Finland | SMEs | Concept* |
| [31] | Custom DM tool | Spain | SMEs+Large | 30 SMEs (72) |
| [40] | EU DMAT | Croatia | SMEs/PSOs | 48 SMEs/62 PSOs |
| This study | EU DMAT | Luxembourg | SMEs | 30 |

Notes: Concept* = the survey tool is not demonstrated on a sample, or data are provided without stating the sample size; Countries† = the DMA analysis is performed on a sample of EU countries.

| Dimension | Median | Mean | SE | 95% CI (mean) | SD | CoV | IQR | p (SW) | Range | Min | Max |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DBS | 64.94 | 62.16 | 3.29 | [55.44, 68.88] | 17.99 | 0.29 | 28.31 | 0.29 | 63.27 | 26.00 | 89.00 |
| DR | 53.93 | 52.14 | 2.48 | [47.06, 57.22] | 13.60 | 0.26 | 14.64 | 0.78 | 57.14 | 24.00 | 81.00 |
| HCD | 50.83 | 54.65 | 3.91 | [46.66, 62.64] | 21.40 | 0.39 | 32.22 | 0.35 | 80.00 | 15.00 | 95.00 |
| DG | 69.00 | 70.30 | 2.90 | [64.38, 76.22] | 15.86 | 0.23 | 16.50 | 0.63 | 70.00 | 30.00 | 100.00 |
| AAI | 44.00 | 40.53 | 2.82 | [34.77, 46.29] | 15.43 | 0.38 | 16.00 | 0.37 | 64.00 | 4.00 | 68.00 |
| GD | 45.00 | 45.17 | 3.06 | [38.92, 51.42] | 16.74 | 0.37 | 20.00 | 0.78 | 65.00 | 15.00 | 80.00 |
| DMA Total | 54.26 | 54.84 | 2.20 | [50.34, 59.35] | 12.07 | 0.22 | 15.28 | 0.96 | 44.01 | 28.00 | 72.00 |
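The SE, CoV and 95% CI columns can be recomputed from $n = 30$ and each row's mean and SD. The sketch below assumes a two-sided Student $t$ interval, mean $\pm\, t_{0.975,29} \cdot SE$, with $t_{0.975,29} \approx 2.0452$ hard-coded because Python's standard library has no $t$ quantile function; the function name is hypothetical.

```python
import math

T_CRIT_975_DF29 = 2.0452  # two-sided 95% Student t critical value for df = n - 1 = 29


def summary_from_moments(mean, sd, n=30, t_crit=T_CRIT_975_DF29):
    """Return (SE, CoV, CI lower, CI upper) from a sample mean and SD."""
    se = sd / math.sqrt(n)    # standard error of the mean
    cov = sd / mean           # coefficient of variation
    half_width = t_crit * se  # half-width of the t-based 95% CI
    return se, cov, mean - half_width, mean + half_width


# DBS row of the descriptive table: mean 62.16, SD 17.99
se, cov, lo, hi = summary_from_moments(62.16, 17.99)
print(f"SE={se:.2f} CoV={cov:.2f} CI=[{lo:.2f}, {hi:.2f}]")
```

This prints `SE=3.28 CoV=0.29 CI=[55.44, 68.88]` for DBS; the SE differs from the tabulated 3.29 only because the published SD is itself rounded.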

| Dimension | r (β coeff.) | 95% CI [r] | R² | adj. R² | RMSE | SW statistic | p (SW) |
|---|---|---|---|---|---|---|---|
| DBS | 0.652*** | [0.43, 0.82] | 0.425 | 0.405 | 9.07 | 0.971 | 0.643 |
| DR | 0.298 | [0.01, 0.61] | 0.089 | 0.056 | 12.94 | 0.974 | 0.768 |
| HCD | 0.717*** | [0.51, 0.88] | 0.514 | 0.497 | 7.79 | 0.978 | 0.885 |
| DG | 0.505** | [0.21, 0.72] | 0.255 | 0.229 | 10.98 | 0.966 | 0.458 |
| AAI | 0.550** | [0.28, 0.74] | 0.302 | 0.277 | 10.57 | 0.965 | 0.437 |
| GD | 0.581*** | [0.27, 0.78] | 0.337 | 0.314 | 10.07 | 0.957 | 0.234 |

Note: * p < 0.05, ** p < 0.01, *** p < 0.001.
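Assuming each row is a one-predictor regression (as the shared r (β coeff.) column suggests), the standardised β equals Pearson's $r$, so $R^2 = r^2$ and $\text{adj.}R^2 = 1 - (1 - R^2)(n - 1)/(n - p - 1)$ with $p = 1$ predictor. A short Python check for three rows, assuming $n = 30$; the function name is hypothetical.

```python
def r2_and_adjusted(r, n=30, n_predictors=1):
    """Return (R^2, adjusted R^2) for a one-predictor OLS fit with correlation r."""
    r2 = r ** 2  # with a single predictor, R^2 equals r^2
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1)
    return r2, adj_r2


for dimension, r in [("DBS", 0.652), ("HCD", 0.717), ("DR", 0.298)]:
    r2, adj_r2 = r2_and_adjusted(r)
    print(f"{dimension}: R2={r2:.3f} adj.R2={adj_r2:.3f}")
```

For DBS, HCD and DR this reproduces the tabulated pairs 0.425/0.405, 0.514/0.497 and 0.089/0.056.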

| Moderator | Dimension | Pearson’s r | p | Spearman’s ρ | p |
|---|---|---|---|---|---|
| Age | Total | -0.040 | 0.832 | -0.081 | 0.669 |
| Age | DBS | 0.193 | 0.306 | 0.198 | 0.295 |
| Age | DR | 0.086 | 0.650 | -0.016 | 0.933 |
| Age | HCD | -0.182 | 0.335 | -0.191 | 0.312 |
| Age | DG | -0.263 | 0.160 | -0.310 | 0.095 |
| Age | AAI | 0.068 | 0.723 | -0.006 | 0.998 |
| Age | GD | 0.025 | 0.894 | 0.060 | 0.751 |
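With $n = 30$, small correlations such as those between age and the maturity scores are hard to distinguish from zero. As a rough companion to the post-hoc power analysis (2.3.5), the sketch below approximates the power of a two-sided test of $H_0\!: \delta = 0$ using the Fisher $z$ transformation. It is a normal approximation, not G*Power's exact routine, so results can differ slightly from G*Power output; the function name and the effect sizes tried (Cohen's conventional $r$ = 0.1, 0.3, 0.5) are illustrative inputs, not results from this study.

```python
import math
from statistics import NormalDist


def correlation_power(rho, n, alpha=0.05):
    """Approximate power of a two-sided test of H0: rho = 0 (Fisher z approximation)."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)       # about 1.96 for alpha = 0.05
    shift = math.atanh(abs(rho)) * math.sqrt(n - 3)  # expected value of the Fisher z statistic
    # Probability that |Z| exceeds z_crit when Z ~ N(shift, 1)
    return (1 - std_normal.cdf(z_crit - shift)) + std_normal.cdf(-z_crit - shift)


for rho in (0.1, 0.3, 0.5):
    print(rho, round(correlation_power(rho, n=30), 2))
```

Under this approximation, $n = 30$ gives power of roughly 0.36 for a population correlation of 0.3 and roughly 0.81 for 0.5, so non-significant moderators are weak evidence of no effect.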

| Comparison | Mean diff. | 95% CI (mean diff.) | SE | df | t | Cohen’s d | 95% CI (Cohen’s d) | p (Bonferroni) | p (Holm) |
|---|---|---|---|---|---|---|---|---|---|
| DBS-DR | 10.02 | [0.43, 19.60] | 2.996 | 29 | 3.343 | 0.589 | [-0.03, 1.20] | 0.034 | 0.018 |
| DBS-HCD | 7.51 | [-2.15, 17.2] | 3.022 | 29 | 2.485 | 0.442 | [-0.16, 1.04] | 0.284 | 0.095 |
| DBS-DG | -8.14 | [-19.6, 3.33] | 3.587 | 29 | -2.269 | -0.478 | [-1.18, 0.22] | 0.463 | 0.123 |
| DBS-AAI | 21.63 | [10.56, 32.7] | 3.461 | 29 | 6.248 | 1.271 | [0.43, 2.11] | < 0.001 | < 0.001 |
| DBS-GD | 16.99 | [5.86, 28.13] | 3.481 | 29 | 4.882 | 0.999 | [0.22, 1.78] | < 0.001 | < 0.001 |
| DR-HCD | -2.50 | [-14.68, 9.67] | 3.807 | 29 | -0.658 | -0.147 | [-0.87, 0.57] | 1.000 | 0.516 |
| DR-DG | -18.16 | [-30.3, -5.99] | 3.803 | 29 | -4.775 | -1.067 | [-1.91, -0.22] | < 0.001 | < 0.001 |
| DR-AAI | 11.61 | [0.35, 22.87] | 3.521 | 29 | 3.297 | 0.682 | [-0.04, 1.40] | 0.039 | 0.018 |
| DR-GD | 6.98 | [-5.10, 19.06] | 3.777 | 29 | 1.847 | 0.410 | [-0.32, 1.14] | 1.000 | 0.225 |
| HCD-DG | -15.65 | [-27.04, -4.27] | 3.559 | 29 | -4.397 | -0.920 | [-1.69, -0.15] | 0.002 | 0.001 |
| HCD-AAI | 14.11 | [2.55, 25.67] | 3.614 | 29 | 3.905 | 0.830 | [0.07, 1.59] | 0.008 | 0.005 |
| HCD-GD | 9.48 | [-2.25, 21.21] | 3.667 | 29 | 2.585 | 0.557 | [-0.17, 1.28] | 0.225 | 0.090 |
| DG-AAI | 29.77 | [19.89, 39.65] | 3.089 | 29 | 9.635 | 1.750 | [0.81, 2.69] | < 0.001 | < 0.001 |
| DG-GD | 25.13 | [15.81, 34.46] | 2.917 | 29 | 8.617 | 1.477 | [0.65, 2.30] | < 0.001 | < 0.001 |
| AAI-GD | -4.63 | [-13.38, 4.11] | 2.736 | 29 | -1.693 | -0.272 | [-0.80, 0.25] | 1.000 | 0.225 |
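In the pairwise table, $t$ is the mean difference divided by its SE (for DBS-DR, 10.02/2.996 ≈ 3.34, with $df = n - 1 = 29$ for paired scores), and the last two columns correct the 15 pairwise $p$-values for multiple testing. Below is a minimal Python sketch of the Bonferroni and Holm step-down adjustments; the function names are hypothetical, and the four raw $p$-values are made up for illustration because the table does not report unadjusted ones.

```python
def bonferroni(pvals):
    """Bonferroni adjustment: multiply each p by the number of tests m, capped at 1."""
    m = len(pvals)
    return [min(1.0, p * m) for p in pvals]


def holm(pvals):
    """Holm step-down adjustment, returned in the original order of pvals."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The (rank + 1)-th smallest p is multiplied by (m - rank);
        # the running maximum keeps the adjusted p-values monotone.
        running_max = max(running_max, min(1.0, (m - rank) * pvals[i]))
        adjusted[i] = running_max
    return adjusted


raw = [0.01, 0.04, 0.03, 0.005]  # hypothetical raw p-values
print(bonferroni(raw))
print(holm(raw))
```

Holm never exceeds Bonferroni for the same inputs, which is why several comparisons in the table are significant under p (Holm) but not under p (Bonferroni).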

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).