Submitted: 20 October 2025

Posted: 21 October 2025


Abstract

Keywords:

1. Introduction

2. Materials and Methods

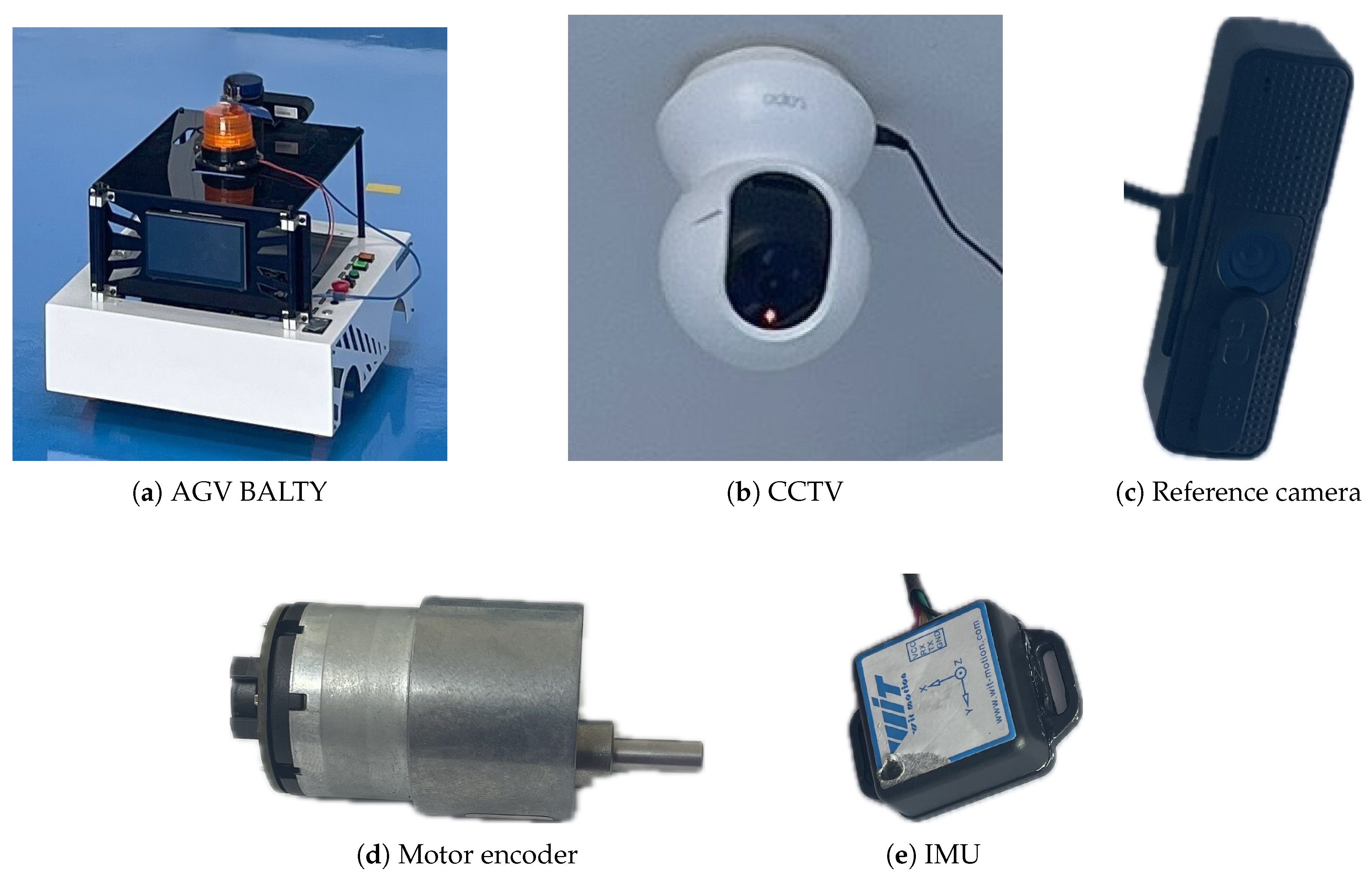

2.1. Preparation and Equipment Used in the Experiment

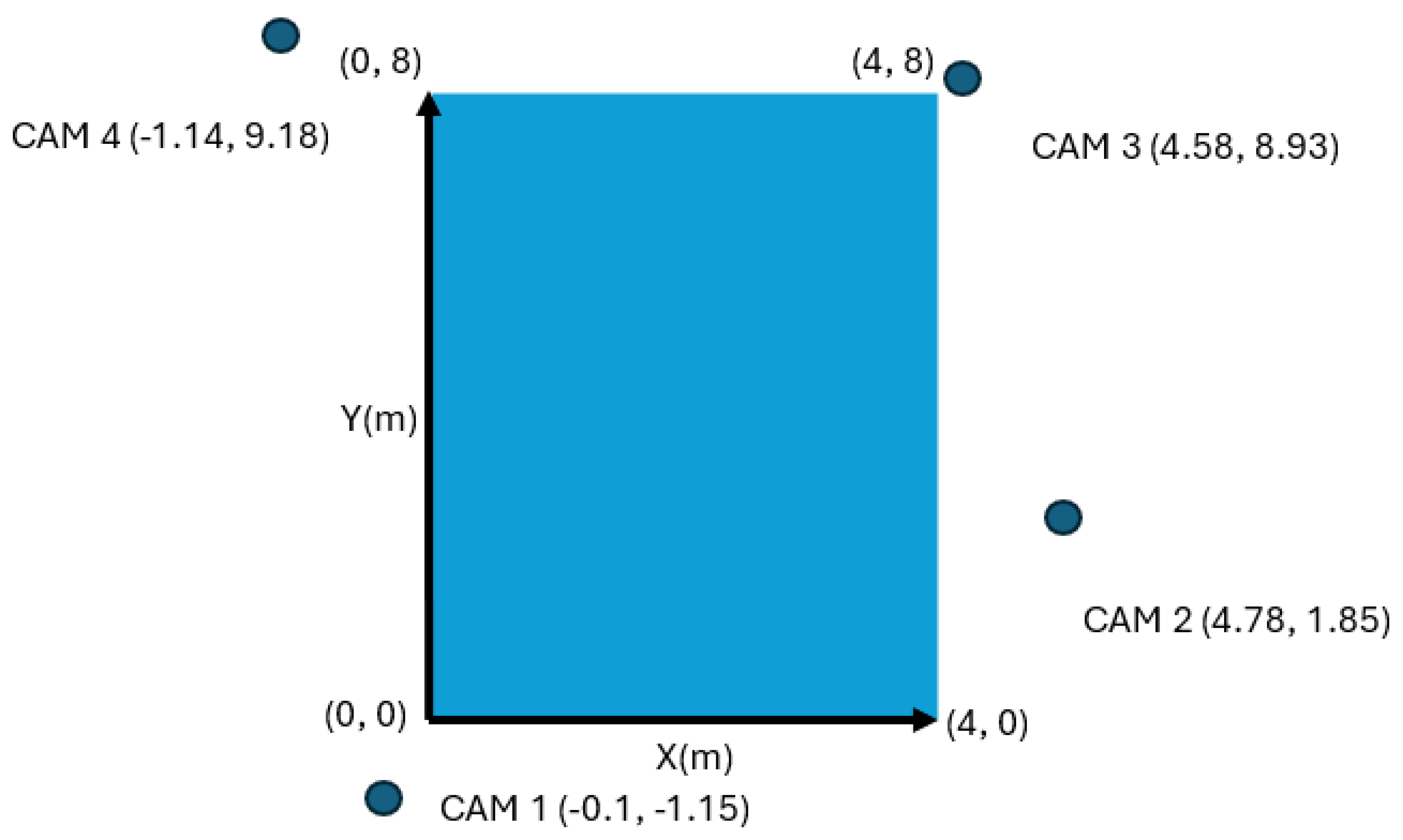

2.1.1. Preparation Used in the Experiment

2.1.2. Equipment Used in the Experiment

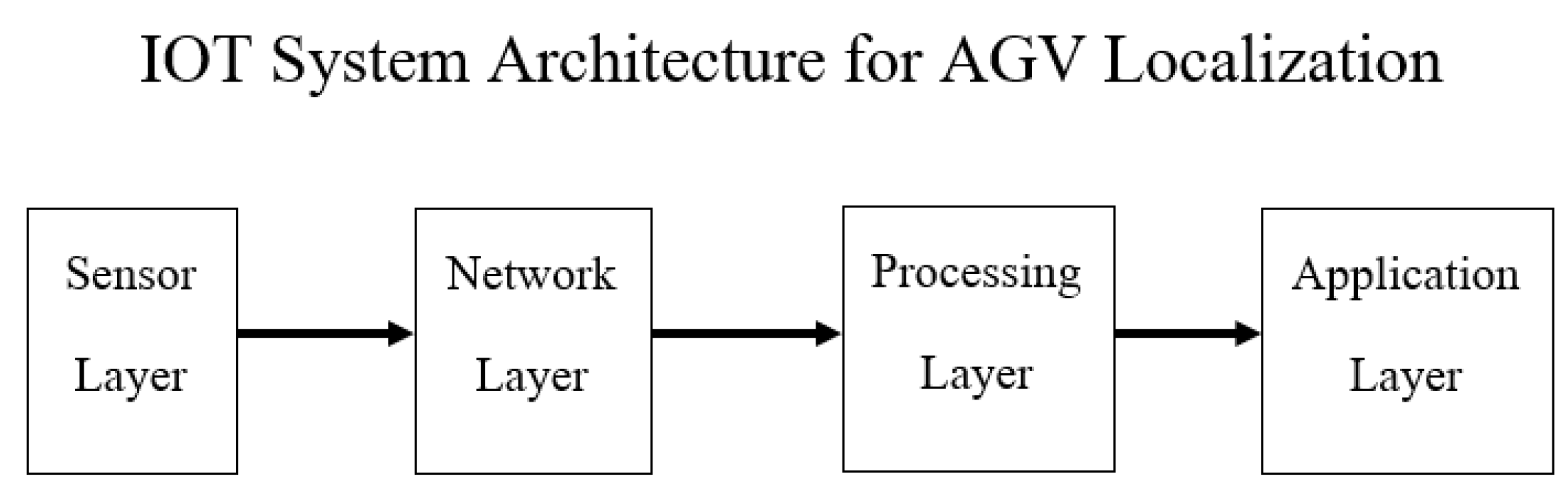

2.1.3. IoT System Architecture Concepts

- Sensor Layer: Consists of the wheel encoder, the IMU, and a CCTV camera that records data from the experimental area.

- Network (Communication) Layer: Uses Wi-Fi wireless communication with the MQTT protocol for lightweight messaging to exchange sensor data with low latency.

- Processing Layer (Central Server): The central server collects sensor data streams, performs synchronization, integrates the data, and stores the records for analysis.

- Application Layer: Provides visualization and location identification for the experimental area.
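
The synchronization performed in the Processing Layer can be illustrated with a minimal Python sketch. It is not the authors' implementation: the JSON payload schema, the function names, and the 0.05 s matching tolerance are all assumptions made for illustration, and the MQTT transport itself is omitted so that the example stays self-contained.

```python
import json
from bisect import bisect_left

def encode_sample(sensor, t, values):
    """Serialise one sensor sample as a compact JSON payload (hypothetical schema)."""
    return json.dumps({"sensor": sensor, "t": t, "values": values},
                      separators=(",", ":"))

def decode_sample(payload):
    """Parse a payload produced by encode_sample back into a dict."""
    return json.loads(payload)

def nearest_sample(samples, t):
    """Return the sample whose timestamp is closest to t.

    `samples` must be non-empty and sorted by timestamp.
    """
    times = [s["t"] for s in samples]
    i = bisect_left(times, t)
    candidates = samples[max(i - 1, 0): i + 1]
    return min(candidates, key=lambda s: abs(s["t"] - t))

def synchronise(cam_samples, dr_samples, max_gap=0.05):
    """Pair each camera sample with the nearest DR sample within max_gap seconds."""
    pairs = []
    for cam in cam_samples:
        dr = nearest_sample(dr_samples, cam["t"])
        if abs(dr["t"] - cam["t"]) <= max_gap:
            pairs.append((cam, dr))
    return pairs
```

Camera samples with no dead-reckoning sample inside the tolerance are dropped rather than interpolated, which keeps the sketch simple at the cost of discarding some data.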

2.2. Localization Methods

2.2.1. Dead Reckoning Localization

- Distance measurement from encoder: An encoder mounted on the drive motor provides wheel rotation data that are converted to linear and angular velocity using the vehicle kinematics model. However, this method is limited by accumulated errors caused by wheel slip, calibration inaccuracies, and surface irregularities [18,23].

- Direction from IMU: A 9-DOF IMU provides angular velocity and linear acceleration. The gyroscope output is crucial for direction calculations, because accelerometer data are highly sensitive to noise and to bias errors that grow over time [8].
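
The two dead-reckoning inputs above can be combined as in the following sketch. It assumes a differential-drive kinematics model, which the text does not specify; `wheel_radius`, `track_width`, and the function names are hypothetical, and `ticks_per_rev` must account for any gearbox ratio and quadrature decoding.

```python
import math

def wheel_speeds(d_ticks_left, d_ticks_right, dt, ticks_per_rev, wheel_radius):
    """Convert encoder tick increments over dt into wheel linear speeds (m/s)."""
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    return (d_ticks_left * metres_per_tick / dt,
            d_ticks_right * metres_per_tick / dt)

def body_velocity(v_left, v_right, track_width):
    """Differential-drive model (assumed): linear velocity v and angular velocity omega."""
    v = 0.5 * (v_left + v_right)
    omega = (v_right - v_left) / track_width
    return v, omega

def dead_reckon_step(pose, v, gyro_z, dt):
    """Advance (x, y, theta): distance from the encoders, heading from the gyro yaw rate."""
    x, y, theta = pose
    theta_mid = theta + 0.5 * gyro_z * dt  # midpoint heading over the step
    x += v * math.cos(theta_mid) * dt
    y += v * math.sin(theta_mid) * dt
    theta += gyro_z * dt
    return (x, y, theta)
```

Because each step adds to the previous pose, any error in `v` or `gyro_z` accumulates, which is the drift behaviour the text attributes to this method.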

2.2.2. Camera Localization

- Feature Detection: The system detects the features of the AGV to be located using standard computer vision algorithms.

- Coordinate Transformation: The AGV’s position in the image plane is converted to its real-world coordinates (world frame) via perspective transformation or a pre-calibrated homography matrix.
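
The coordinate transformation step can be sketched as follows, assuming a planar floor and a pre-calibrated 3×3 homography. The matrix `H_example` is a made-up calibration (a pure scale of 0.005 m per pixel plus an offset) used only to make the example runnable; a real matrix would come from surveyed point correspondences.

```python
def apply_homography(H, u, v):
    """Map pixel coordinates (u, v) to world-plane coordinates (X, Y).

    H is a 3x3 homography given as a list of three rows.
    """
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    return x / w, y / w  # divide by the homogeneous coordinate

# Hypothetical calibration: 0.005 m per pixel, origin shifted by (-1.0, -0.5) m
H_example = [[0.005, 0.0, -1.0],
             [0.0, 0.005, -0.5],
             [0.0, 0.0, 1.0]]
```

The division by `w` is what distinguishes a perspective mapping from an affine one; with the last row `[0, 0, 1]`, as in this example, the mapping reduces to the affine case.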

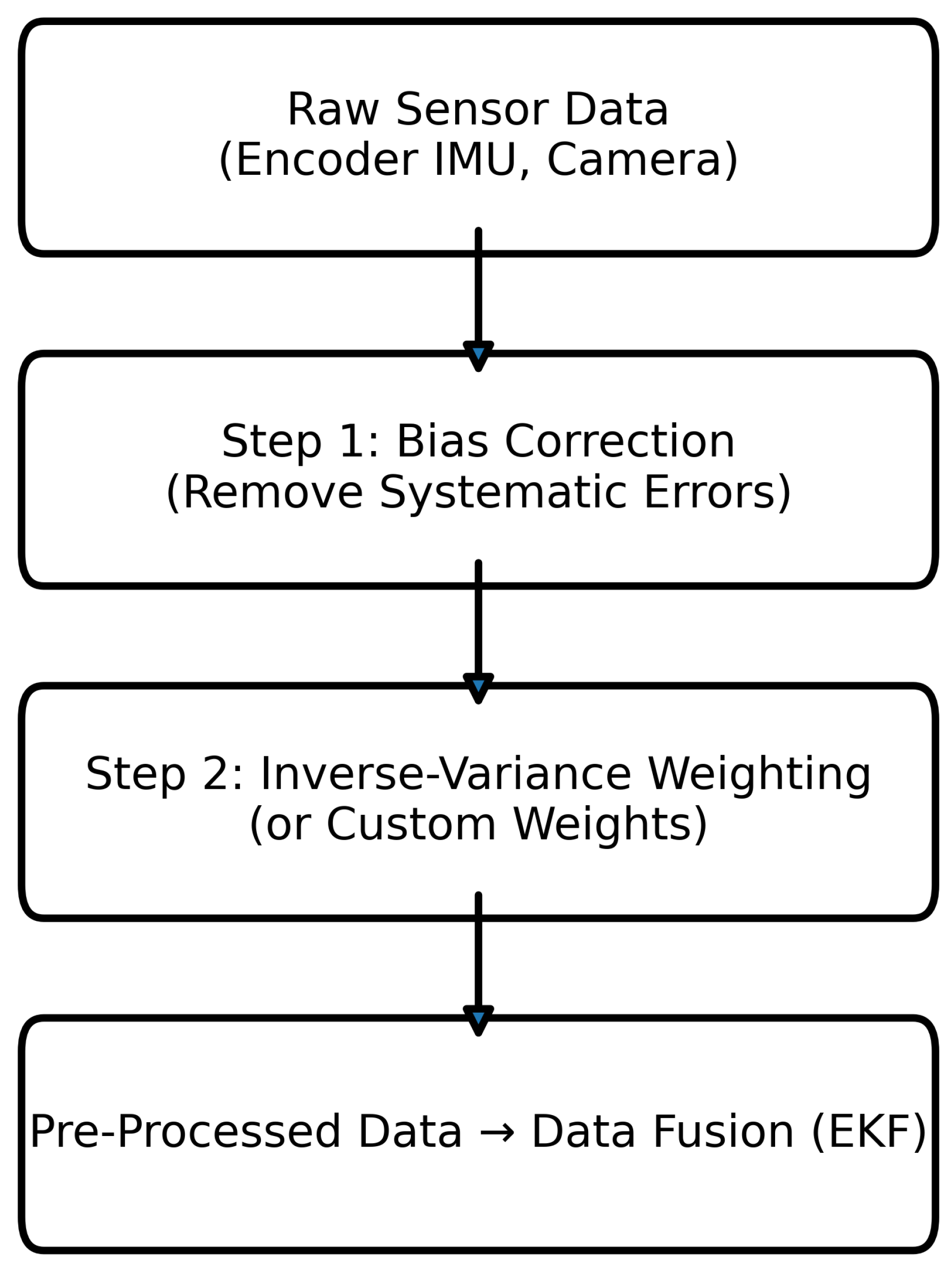

2.3. Pre-Processing Data Analysis

2.3.1. Bias Correction

2.3.2. Inverse-Variance Weighting

2.4. Extended Kalman Filter (EKF)

2.4.1. Normal Series Fusion EKF (Normal EKF)

2.4.2. Blended EKF

- is the weight assigned to the Camera (CAM) measurements

- is the weight assigned to the Dead Reckoning (DR) measurements
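
A minimal per-axis sketch of the blending idea is given below, under loudly stated assumptions: the measurement model is taken as the identity, so the update reduces to the linear Kalman form; CAM and DR errors are treated as independent; and the names `w_cam`, `w_dr`, `r_cam`, and `r_dr` are illustrative rather than the paper's notation. It shows only how two weighted measurements could be merged before a single update, not the authors' full filter.

```python
def blend_measurements(z_cam, z_dr, w_cam, w_dr, r_cam, r_dr):
    """Weighted blend of two scalar measurements and the variance of the blend."""
    total = w_cam + w_dr
    w_cam, w_dr = w_cam / total, w_dr / total  # normalise so the weights sum to 1
    z = w_cam * z_cam + w_dr * z_dr
    r = w_cam ** 2 * r_cam + w_dr ** 2 * r_dr  # assumes independent errors
    return z, r

def kalman_update(x_pred, p_pred, z, r):
    """Scalar Kalman update with an identity measurement model (assumed)."""
    k = p_pred / (p_pred + r)          # Kalman gain
    x_new = x_pred + k * (z - x_pred)  # corrected state
    p_new = (1.0 - k) * p_pred         # corrected variance
    return x_new, p_new

def blended_update(x_pred, p_pred, z_cam, z_dr, w_cam, w_dr, r_cam, r_dr):
    """Blend CAM and DR measurements first, then apply one update."""
    z, r = blend_measurements(z_cam, z_dr, w_cam, w_dr, r_cam, r_dr)
    return kalman_update(x_pred, p_pred, z, r)
```

Running one update on the blended measurement, instead of two sequential updates, means the weights directly control how much each sensor contributes to the corrected state.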

3. Experimental Design

- Bias Correction Analysis: The raw data obtained from each sensor type were analyzed against Ground Truth (GT) data, which serve as the "true path," to determine the systematic bias and variance. This step is crucial for reducing the Root Mean Squared Error (RMSE) of the raw data and enhancing the reliability of the data before further use.

- Weighting with Inverse-Variance Weighting: The bias-corrected data were then statistically weighted. The principle is that sensors with lower error variance (and therefore higher reliability) receive a higher weight in the data fusion.

- Data Fusion with EKF: The pre-processed data are fed into the EKF to estimate the state (position and orientation). Two EKF models were developed and compared: (1) a conventional EKF and (2) a blended EKF, which fuses measurements from the CAM and DR sensors before the update process.

- Straight Section: The AGV moves at constant speed with no sudden changes of direction; this section assesses the ability to maintain a precise position under normal conditions.

- Curved Section: This is a dynamic movement that readily causes accumulated drift in the dead reckoning system; this section assesses the capability and stability of the system while the direction of movement is changing.
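
The bias-correction and inverse-variance weighting steps described in this section can be sketched in plain Python. This is an illustration, not the authors' code: the function names are hypothetical, the bias is taken as the mean signed error against ground truth, and the variance is the sample variance of that error.

```python
import math

def mean(values):
    return sum(values) / len(values)

def rmse(estimates, truth):
    """Root mean squared error of estimates against ground truth."""
    return math.sqrt(mean([(e - t) ** 2 for e, t in zip(estimates, truth)]))

def estimate_bias(estimates, truth):
    """Systematic bias, taken here as the mean signed error."""
    return mean([e - t for e, t in zip(estimates, truth)])

def error_variance(estimates, truth):
    """Sample variance of the error about its mean."""
    errors = [e - t for e, t in zip(estimates, truth)]
    m = mean(errors)
    return sum((err - m) ** 2 for err in errors) / (len(errors) - 1)

def bias_correct(estimates, bias):
    """Subtract a constant bias from every estimate."""
    return [e - bias for e in estimates]

def inverse_variance_weights(variances):
    """Normalised weights proportional to 1 / variance."""
    inv = [1.0 / v for v in variances]
    total = sum(inv)
    return [i / total for i in inv]

def fuse(values, weights):
    """Weighted combination of simultaneous sensor readings."""
    return sum(w * v for w, v in zip(weights, values))
```

Subtracting a constant bias shifts the mean error without changing the error variance, so under this scheme the weights would be identical for raw and bias-corrected data.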

4. Results and Discussion

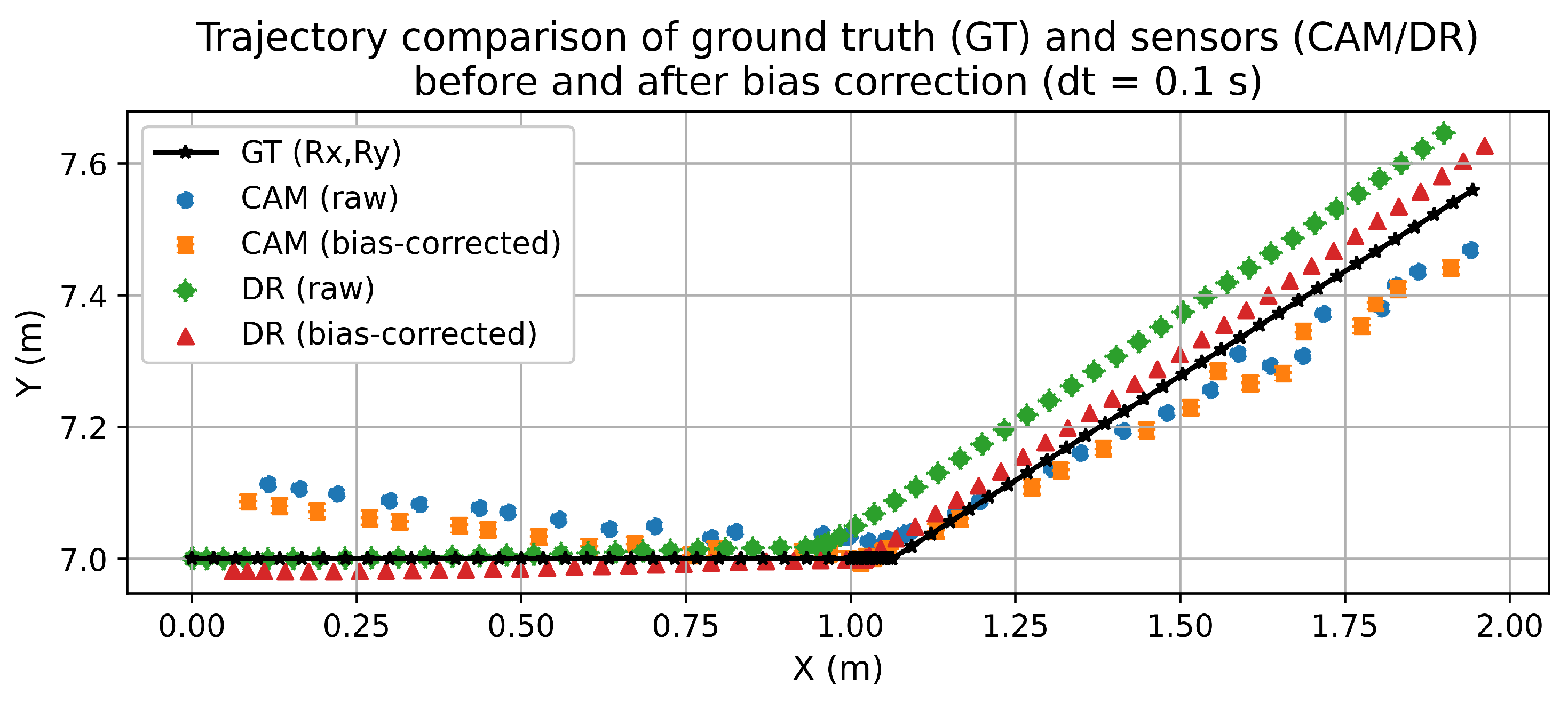

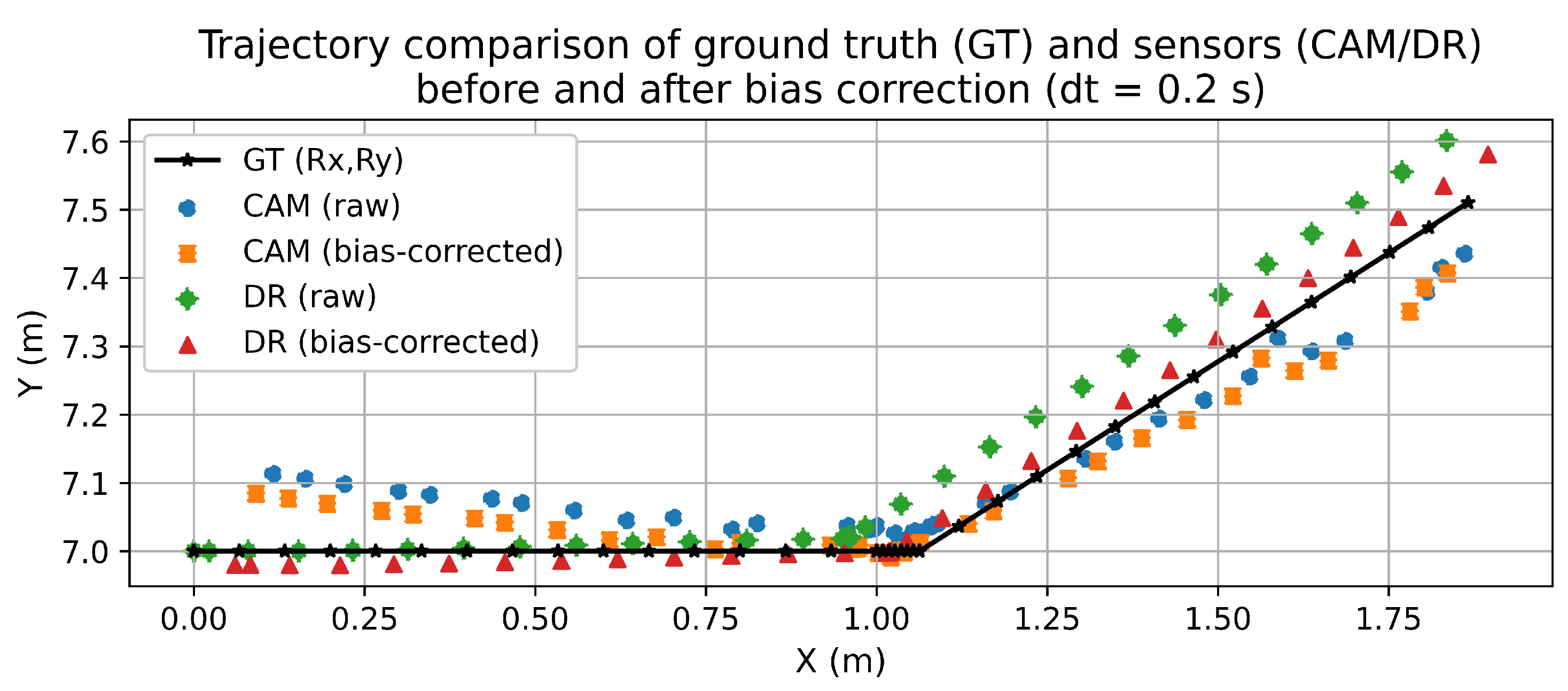

4.1. Analysis of Bias Correction (BC) Results

- Camera Sensor (CAM): The bias values in the X-axis (Mean Error X) and Y-axis (Mean Error Y) were positive for both sampling intervals (0.1 s and 0.2 s); that is, the estimated position exceeded the actual value on both axes. The camera does not measure the rotation angle, so no mean angular error is reported for it.

- Dead Reckoning (DR) Sensor: For DR, the error values in the X-axis (Mean Error X) were negative, while those in the Y-axis (Mean Error Y) were positive, indicating a systematic offset on both axes, with opposite signs.

- Inertial Measurement Unit (IMU) Sensor: The IMU is used to measure changes in the rotation angle, so the only error reported for it is the mean angular error (in rad).

- Camera Sensor (CAM):

  - Before Correction (Raw): CAM had RMSE X values of 0.0617 m and 0.0483 m, and RMSE Y values of 0.0467 m and 0.0459 m, for sampling intervals of 0.1 s and 0.2 s, respectively.

  - After Correction (Corrected): RMSE X decreased to 0.0536 m and 0.0414 m, and RMSE Y decreased to 0.0382 m and 0.0355 m, respectively (a reduction of roughly 13–23%).

- Dead Reckoning (DR) Sensor:

  - Before Correction (Raw): DR had RMSE X values of 0.0792 m and 0.0780 m, and RMSE Y values of 0.0241 m and 0.0255 m, for sampling intervals of 0.1 s and 0.2 s, respectively.

  - After Correction (Corrected): Bias correction reduced the RMSE of DR by roughly 37–39%. RMSE X decreased to 0.0497 m and 0.0495 m, while RMSE Y decreased to 0.0148 m and 0.0157 m, respectively.

- Inertial Measurement Unit (IMU) Sensor:

  - Before Correction (Raw): The IMU is used to measure the rotation angle. The RMSE values before correction were approximately 0.2256 rad and 0.2317 rad for sampling intervals of 0.1 s and 0.2 s, respectively.

  - After Correction (Corrected): The RMSE values decreased to approximately 0.2018 rad and 0.2072 rad (a reduction of roughly 11%).

- True Trajectory (GT): The blue line represents the actual trajectory of the object, which is the reference line.

- CAM (Raw): The dark blue dots represent the CAM path before correction.

- CAM (Bias-Corrected): The orange dots represent the CAM path after correction.

- DR (Raw): The green dots represent the DR path before correction.

- DR (Bias-Corrected): The red dots represent the DR path after correction.

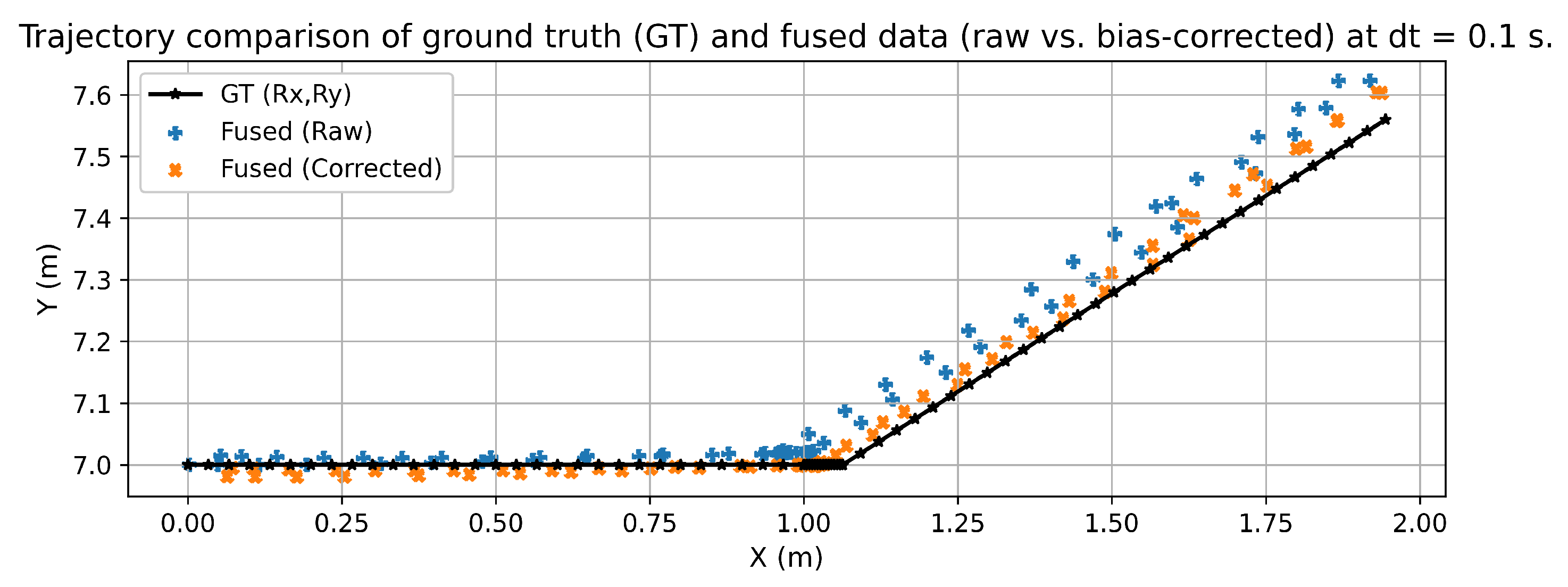

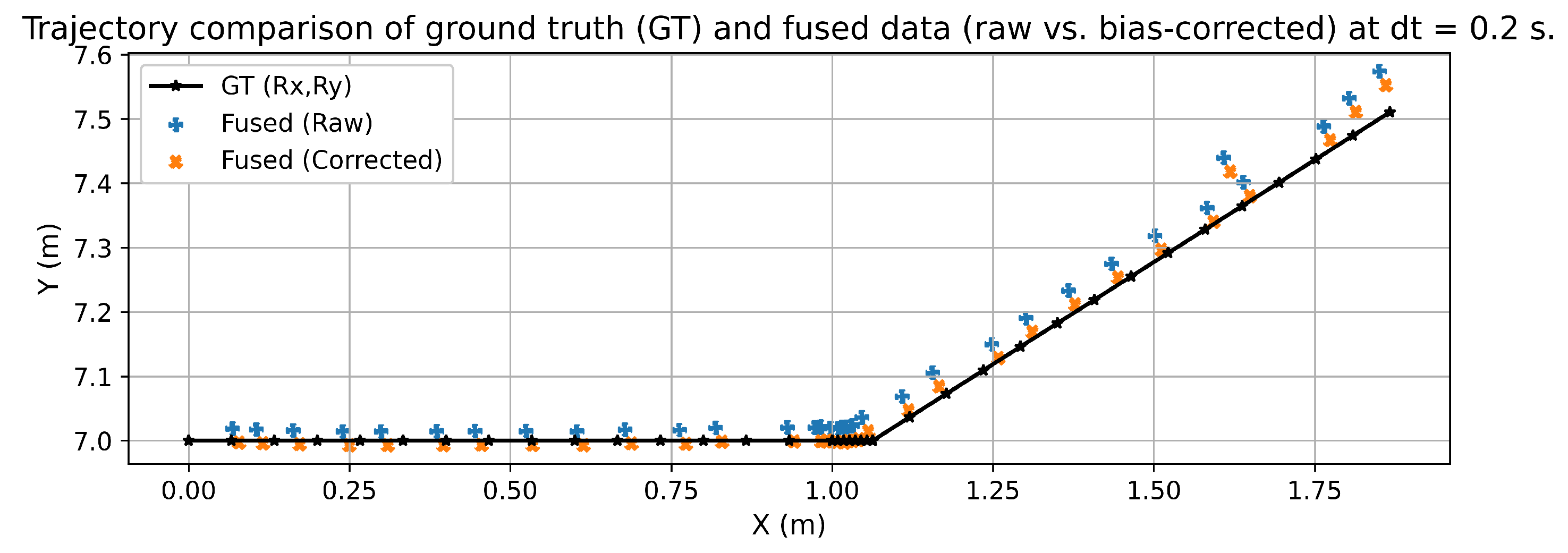

4.2. Analysis of the Results of Sensor Data Fusion Using Inverse-Variance Weighting

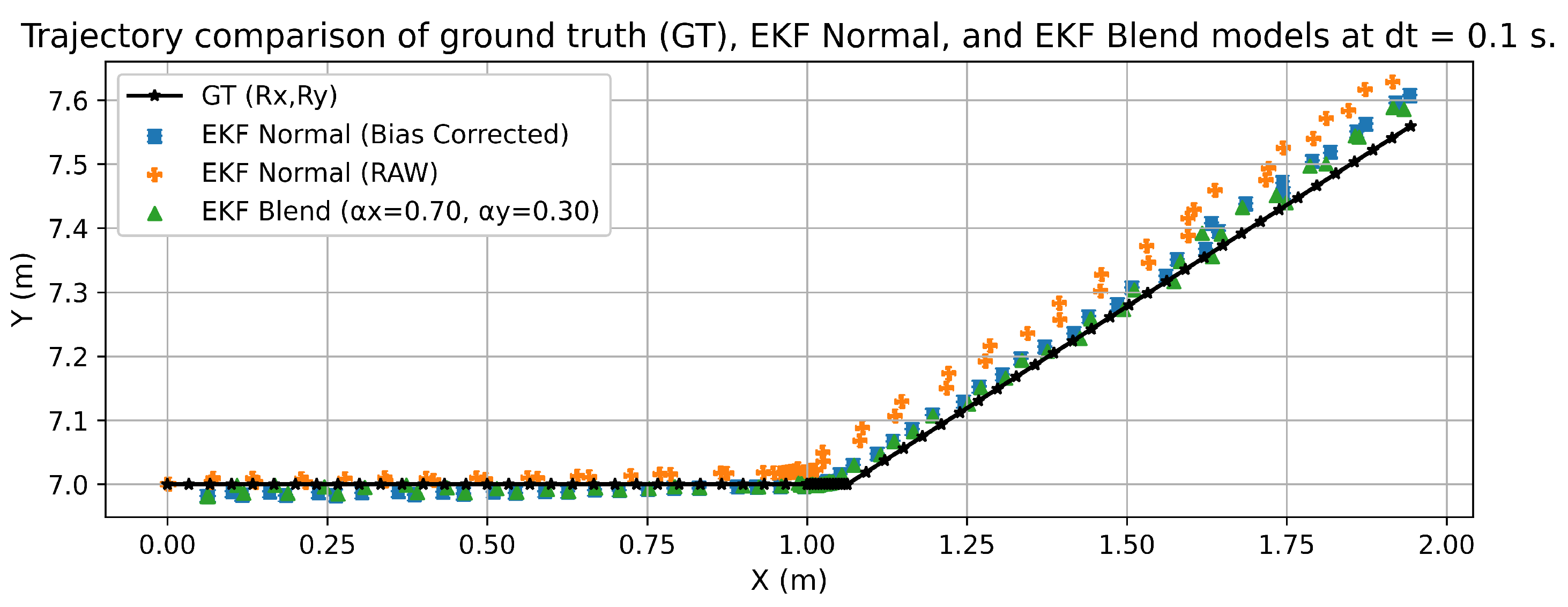

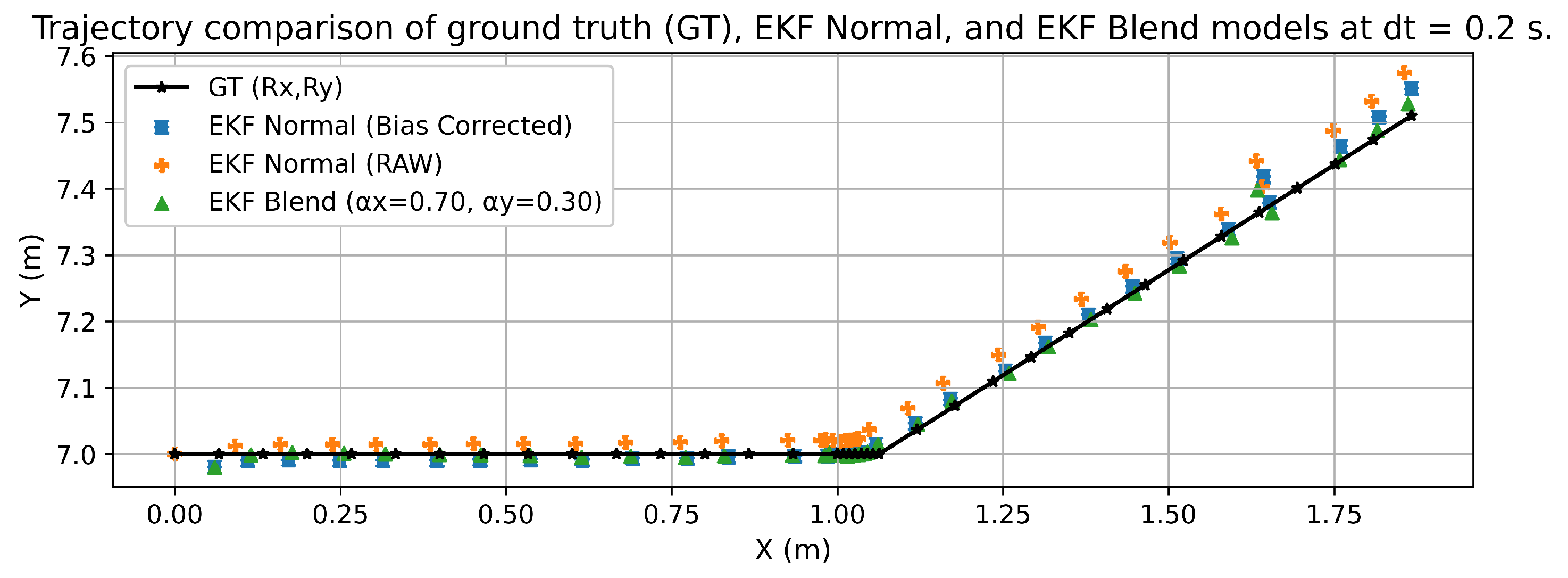

4.3. Analysis of Data Integration Results Using EKF

4.4. Discussion and Suggestions

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Akhlaghi, S.; Zhou, N.; Huang, Z. Adaptive Adjustment of Noise Covariance in Kalman Filter for Dynamic State Estimation. 2017 IEEE Power & Energy Society General Meeting 2017, 1–5.

2. Bereszyński, K.; Pelic, M.; Paszkowiak, W.; Pabiszczak, S.; Myszkowski, A.; Walas, K.; Czechmanowski, G.; Węgrzynowski, J.; Bartkowiak, T. Passive Wheels – A New Localization System for Automated Guided Vehicles. Heliyon 2024, 10(15), e34967.

3. Brooks, A.; Makarenko, A.; Upcroft, B. Gaussian Process Models for Sensor-Centric Robot Localisation. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA), 2006, 56–61.

4. Chen, Y.; Cui, Q.; Wang, S. Fusion Ranging Method of Monocular Camera and Millimeter-Wave Radar Based on Improved Extended Kalman Filtering. Sensors (Basel) 2025, 25.

5. Fan, Z.; Zhang, L.; Wang, X.; Shen, Y.; Deng, F. LiDAR, IMU, and Camera Fusion for Simultaneous Localization and Mapping: A Systematic Review. Artificial Intelligence Review 2025, 58(6), 174.

6. Fragapane, G.; de Koster, R.; Sgarbossa, F.; Strandhagen, J. O. Planning and Control of Autonomous Mobile Robots for Intralogistics: Literature Review and Research Agenda. European Journal of Operational Research 2021, 294(2), 405–426.

7. Grzechca, D.; Ziebinski, A.; Paszek, K.; Hanzel, K.; Giel, A.; Czerny, M.; Becker, A. How Accurate Can UWB and Dead Reckoning Positioning Systems Be? Comparison to SLAM Using the RPLidar System. Sensors (Basel) 2020, 20.

8. Hesch, J. A.; Kottas, D. G.; Bowman, S. L.; Roumeliotis, S. I. Camera–IMU-Based Localization: Observability Analysis and Consistency Improvement. The International Journal of Robotics Research 2013, 33(1), 182–201.

9. Ito, S.; Hiratsuka, S.; Ohta, M.; Matsubara, H.; Ogawa, M. Small Imaging Depth LIDAR and DCNN-Based Localization for Automated Guided Vehicle. Sensors (Basel) 2018, 18.

10. Khaleghi, B.; Khamis, A.; Karray, F. O.; Razavi, S. N. Multisensor Data Fusion: A Review of the State-of-the-Art. Information Fusion 2013, 14(1), 28–44.

11. Li, M.; Mourikis, A. I. High-Precision, Consistent EKF-Based Visual–Inertial Odometry. The International Journal of Robotics Research 2013, 32(6), 690–711.

12. Liu, Y.; Wang, S.; Xie, Y.; Xiong, T.; Wu, M. A Review of Sensing Technologies for Indoor Autonomous Mobile Robots. Sensors (Basel) 2024, 24.

13. Milam, G.; Xie, B.; Liu, R.; Zhu, X.; Park, J.; Kim, G.; Park, C. H. Trainable Quaternion Extended Kalman Filter with Multi-Head Attention for Dead Reckoning in Autonomous Ground Vehicles. Sensors (Basel) 2022, 22.

14. Niu, X.; Wu, Y.; Kuang, J. Wheel-INS: A Wheel-Mounted MEMS IMU-Based Dead Reckoning System. IEEE Transactions on Vehicular Technology 2021, 70(10), 9814–9825.

15. Perera, L. D. L.; Wijesoma, W. S.; Adams, M. D. The Estimation Theoretic Sensor Bias Correction Problem in Map Aided Localization. The International Journal of Robotics Research 2006, 25(7), 645–667.

16. Sousa, L. C.; Silva, Y. M. R.; Schettino, V. B.; Santos, T. M. B.; Zachi, A. R. L.; Gouvea, J. A.; Pinto, M. F. Obstacle Avoidance Technique for Mobile Robots at Autonomous Human–Robot Collaborative Warehouse Environments. Sensors (Basel) 2025, 25.

17. Sousa, R. B.; Sobreira, H. M.; Moreira, A. P. A Systematic Literature Review on Long-Term Localization and Mapping for Mobile Robots. Journal of Field Robotics 2023, 40(5), 1245–1322.

18. Teng, X.; Shen, Z.; Huang, L.; Li, H.; Li, W. Multi-Sensor Fusion Based Wheeled Robot Research on Indoor Positioning Method. Results in Engineering 2024, 22, 102268.

19. Urrea, C.; Agramonte, R. Kalman Filter: Historical Overview and Review of Its Use in Robotics 60 Years after Its Creation. Journal of Sensors 2021, 2021(1), 9674015.

20. Vignarca, D.; Vignati, M.; Arrigoni, S.; Sabbioni, E. Infrastructure-Based Vehicle Localization through Camera Calibration for I2V Communication Warning. Sensors (Basel) 2023, 23.

21. Wang, Q.; Wu, J.; Liao, Y.; Huang, B.; Li, H.; Zhou, J. Research on Multi-Sensor Fusion Localization for Forklift AGV Based on Adaptive Weight Extended Kalman Filter. Sensors (Basel) 2025, 25.

22. Xu, H.; Li, Y.; Lu, Y. Research on Indoor AGV Fusion Localization Based on Adaptive Weight EKF Using Multi-Sensor. Journal of Physics: Conference Series 2023, 2428, 012028.

23. Yan, Y.; Zhang, B.; Zhou, J.; Zhang, Y.; Liu, X. Real-Time Localization and Mapping Utilizing Multi-Sensor Fusion and Visual–IMU–Wheel Odometry for Agricultural Robots. Agronomy 2022, 12.

24. Zeghmi, L.; Amamou, A.; Kelouwani, S.; Boisclair, J.; Agbossou, K. A Kalman–Particle Hybrid Filter for Improved Localization of AGV in Indoor Environment. In Proceedings of the 2nd International Conference on Robotics, Automation and Artificial Intelligence (RAAI), 2022, 141–147.

25. Zheng, Z.; Lu, Y. Research on AGV Trackless Guidance Technology Based on the Global Vision. Science Progress 2022, 105(3), 00368504221103766.

| Sensor | Model | Specifications |
|---|---|---|
| Encoder | DC Motor JGB37-520 with Double Magnetic Hall Encoder | AB-phase (quadrature) output for direction and revolution detection; 90 pulses/rev; operating voltage: 3.3–5 V DC. |
| IMU | WitMotion WT901C (9-DOF) | Measures linear acceleration ( g), angular velocity (/s), and orientation; sampling rate: 10 Hz. |
| CCTV | TP-Link Tapo C200 | Wireless IP camera; 1080p at 30 fps; H.264 compression; IR LED (850 nm, up to 30 ft); Wi-Fi video streaming. |
| Reference Camera (GT) | OKER HD-869 | Full HD (1920×1080) resolution; auto-focus lens; used as the ground-truth reference camera. |

| Sampling interval (s) | Sensor | Mean Error X (m) | Mean Error Y (m) | Mean Error, angle (rad) |
|---|---|---|---|---|
| 0.1 | CAM | 0.0305 | 0.0268 | – |
| 0.1 | DR | -0.0617 | 0.0190 | – |
| 0.1 | IMU | – | – | 0.0977 |
| 0.2 | CAM | 0.0250 | 0.0290 | – |
| 0.2 | DR | -0.0604 | 0.0201 | – |
| 0.2 | IMU | – | – | 0.1006 |

| Sampling interval (s) | Sensor | RMSE X Raw (m) | RMSE X BC (m) | RMSE Y Raw (m) | RMSE Y BC (m) | RMSE angle Raw (rad) | RMSE angle BC (rad) |
|---|---|---|---|---|---|---|---|
| 0.1 | CAM | 0.0617 | 0.0536 | 0.0467 | 0.0382 | – | – |
| 0.1 | DR | 0.0792 | 0.0497 | 0.0241 | 0.0148 | – | – |
| 0.1 | IMU | – | – | – | – | 0.2256 | 0.2018 |
| 0.2 | CAM | 0.0483 | 0.0414 | 0.0459 | 0.0355 | – | – |
| 0.2 | DR | 0.0780 | 0.0495 | 0.0255 | 0.0157 | – | – |
| 0.2 | IMU | – | – | – | – | 0.2317 | 0.2072 |

| Data type | Sensor | Var X (m²) | Var Y (m²) | Var angle (rad²) | Weight X | Weight Y | Weight angle |
|---|---|---|---|---|---|---|---|
| Raw | CAM | 0.002904 | 0.001478 | – | 0.460989 | 0.129245 | – |
| Raw | DR | 0.002483 | 0.000219 | – | 0.539011 | 0.870755 | – |
| Raw | IMU | – | – | 0.041277 | – | – | 1.000000 |
| Bias corrected | CAM | 0.002904 | 0.001478 | – | 0.460989 | 0.129245 | – |
| Bias corrected | DR | 0.002483 | 0.000219 | – | 0.539011 | 0.870755 | – |
| Bias corrected | IMU | – | – | 0.041277 | – | – | 1.000000 |
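
The three rightmost columns of the table above are consistent with normalised inverse-variance weights computed from the variance columns. As a check, using the tabulated CAM and DR variances (the symbols below are illustrative notation, not necessarily the paper's):

```latex
\begin{aligned}
w_{\mathrm{CAM},x}
  &= \frac{1/\sigma^2_{\mathrm{CAM},x}}{1/\sigma^2_{\mathrm{CAM},x} + 1/\sigma^2_{\mathrm{DR},x}}
   = \frac{\sigma^2_{\mathrm{DR},x}}{\sigma^2_{\mathrm{CAM},x} + \sigma^2_{\mathrm{DR},x}}
   = \frac{0.002483}{0.002904 + 0.002483} \approx 0.461, \\
w_{\mathrm{CAM},y}
  &= \frac{\sigma^2_{\mathrm{DR},y}}{\sigma^2_{\mathrm{CAM},y} + \sigma^2_{\mathrm{DR},y}}
   = \frac{0.000219}{0.001478 + 0.000219} \approx 0.129 .
\end{aligned}
```

These agree with the tabulated 0.460989 and 0.129245 to about three decimal places; the small residual is presumably due to rounding of the printed variances. The DR weights follow as the complements, and the IMU weight is 1 because it is the only source of the rotation angle.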

| Data type | Sensor | Var X (m²) | Var Y (m²) | Var angle (rad²) | Weight X | Weight Y | Weight angle |
|---|---|---|---|---|---|---|---|
| Raw | CAM | 0.001732 | 0.001278 | – | 0.588462 | 0.164134 | – |
| Raw | DR | 0.002477 | 0.000251 | – | 0.411538 | 0.835866 | – |
| Raw | IMU | – | – | 0.044070 | – | – | 1.000000 |
| Bias corrected | CAM | 0.001732 | 0.001278 | – | 0.588462 | 0.164134 | – |
| Bias corrected | DR | 0.002477 | 0.000251 | – | 0.411538 | 0.835866 | – |
| Bias corrected | IMU | – | – | 0.044070 | – | – | 1.000000 |

| Sampling interval (s) | Fusion type | RMSE X (m) | RMSE Y (m) |
|---|---|---|---|
| 0.1 | Fused (Raw) | 0.0661 | 0.0230 |
| 0.1 | Fused (Corrected) | 0.0483 | 0.0122 |
| 0.2 | Fused (Raw) | 0.0415 | 0.0235 |
| 0.2 | Fused (Corrected) | 0.0402 | 0.0095 |

| Sampling interval (s) | EKF model | RMSE X (m) | RMSE Y (m) | RMSE XY (m) |
|---|---|---|---|---|
| 0.1 | Normal (Raw) | 0.060541 | 0.022919 | 0.064734 |
| 0.1 | Normal (Bias Corrected) | 0.047174 | 0.012047 | 0.048688 |
| 0.1 | Blend () | 0.047108 | 0.009638 | 0.048084 |
| 0.1 | Blend () | 0.047699 | 0.009897 | 0.048715 |
| 0.1 | Blend () | 0.047114 | 0.009983 | 0.048160 |
| 0.1 | Blend () | 0.047696 | 0.009983 | 0.048729 |
| 0.1 | Blend () | 0.047118 | 0.009897 | 0.048146 |
| 0.2 | Normal (Raw) | 0.040356 | 0.023574 | 0.046737 |
| 0.2 | Normal (Bias Corrected) | 0.039453 | 0.009881 | 0.040672 |
| 0.2 | Blend () | 0.040282 | 0.011603 | 0.041920 |
| 0.2 | Blend () | 0.043155 | 0.007732 | 0.043842 |
| 0.2 | Blend () | 0.038740 | 0.018405 | 0.042889 |
| 0.2 | Blend () | 0.043131 | 0.018432 | 0.046905 |
| 0.2 | Blend () | 0.038761 | 0.007731 | 0.039524 |
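
The RMSE XY column in the table above appears to be the Euclidean combination of the per-axis values. This reading is inferred from the numbers rather than stated in the text; for the first row it gives:

```latex
\mathrm{RMSE}_{XY}
  = \sqrt{\mathrm{RMSE}_X^{2} + \mathrm{RMSE}_Y^{2}}
  = \sqrt{0.060541^{2} + 0.022919^{2}}
  \approx \sqrt{0.0041905}
  \approx 0.064734\ \text{m}
```

The same relation reproduces the bias-corrected Normal EKF row at 0.1 s: \(\sqrt{0.047174^{2} + 0.012047^{2}} \approx 0.048688\) m.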

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).