Submitted: 09 October 2025

Posted: 09 October 2025

Abstract

Keywords:

1. Introduction

1.1. Research Background and Significance

1.2. The Bottlenecks and Challenges of Traditional Research Methods

1.3. The Rise and Limitations of Deep Learning Proxy Models

1.4. The Innovation and Contribution of This Article

- We propose Geo-PhysNet, a framework for learning the differential structure of surface fields, built around a dual-output head architecture tailored to vehicle surface manifolds. Unlike previous methods that predict only scalar values [8,9,10,16], our network simultaneously predicts the pressure scalar field and its tangential gradient vector field on the surface manifold within a unified framework. The motivation is to elevate the gradient from an implicit quantity obtained by post-processing to an explicit, supervised learning objective, so that the network must learn the local mechanical causes of pressure changes rather than merely memorize and imitate discrete pressure values. The architecture integrates multi-level geometric priors, including curvature, through a multi-scale graph attention network, which provides the basis for accurate prediction of the differential structure.

- We construct a hybrid loss function that enforces mathematical consistency and physical plausibility, providing a new paradigm for imposing field-structure constraints in geometric deep learning. This is the key mechanism behind the proposed "differential representation learning" and distinguishes it from traditional data-driven methods [13]. At its core is a "pressure/gradient consistency loss", which requires the pressure field output by the network to be a potential function of the jointly predicted gradient field, thereby ensuring that the predicted gradient field is structurally consistent and irrotational (curl-free). In addition, a physical regularization term based on the surface Laplacian operator penalizes non-smooth solutions that violate fluid-physics priors and suppresses high-frequency noise in the predictions. Together, these terms guide the model toward a pressure field that is not only numerically accurate but also smooth, continuous, and physically realistic.
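
To make the consistency idea concrete, the following NumPy sketch penalizes the mismatch between the pressure difference along each graph edge and the first-order change implied by the predicted gradient. It is only one possible reading of the description above: the function name `consistency_loss`, the inverse-squared-distance edge weights, and the toy data are assumptions made for illustration, not the formulation used in the paper.

```python
import numpy as np

def consistency_loss(x, p, g, edges, eps=1e-12):
    """Weighted mean squared mismatch between pressure differences and the
    first-order change predicted by the gradient along each edge (hypothetical form)."""
    src, dst = edges
    dx = x[dst] - x[src]                         # (E, 3) edge vectors x_j - x_i
    predicted_dp = np.sum(g[src] * dx, axis=1)   # g_i . (x_j - x_i)
    actual_dp = p[dst] - p[src]                  # p_j - p_i
    w = 1.0 / (np.sum(dx ** 2, axis=1) + eps)    # assumed inverse-squared-distance weights
    return float(np.sum(w * (actual_dp - predicted_dp) ** 2) / np.sum(w))

# Toy check on a flat patch (z = 0) with a linear pressure field p = 2x + 3y.
rng = np.random.default_rng(0)
x = np.column_stack([rng.uniform(size=(50, 2)), np.zeros(50)])
p = 2.0 * x[:, 0] + 3.0 * x[:, 1]
g_exact = np.tile([2.0, 3.0, 0.0], (50, 1))      # exact gradient of p
edges = np.array([[i, (i + 1) % 50] for i in range(50)]).T

loss_exact = consistency_loss(x, p, g_exact, edges)
loss_wrong = consistency_loss(x, p, g_exact + [0.5, 0.0, 0.0], edges)
```

With the exact gradient the loss vanishes up to rounding error, while a perturbed gradient yields a strictly positive value, which is the behaviour a consistency term of this kind is meant to reward.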

2. Methodology

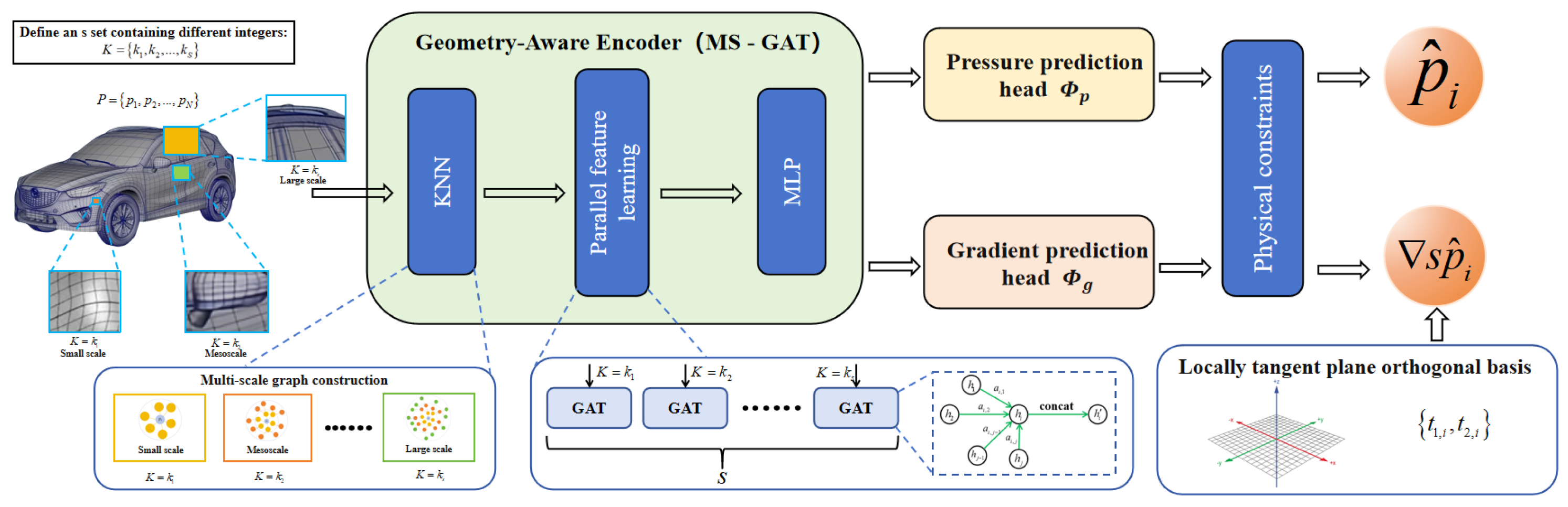

2.1. Geometry-Aware Encoder

2.1.1. Input Feature Representation

2.1.2. Multi-Scale Graph Attention Network (MS-GAT)

- Define the scale set: First, we define a set of S distinct integers $\{k_1, \dots, k_S\}$, each of which defines an independent graph scale. For example, we can use three scales, $\{16, 32, 64\}$, which represent the small scale, the mesoscale, and the large scale, respectively.

- Parallel construction of adjacency relationships: Next, for the same point cloud P and its features, we run the k-NN algorithm S times in parallel:

  - For the small-scale graph ($G_1$, $k_1 = 16$): We calculate the feature-space distance between each point in the point cloud and all other points, and take the 16 closest points as its neighbor set $\mathcal{N}_1(i)$. This defines the edge set $E_1$ of graph $G_1$. In this graph, each node is connected only to its 16 closest neighbors, so the range it can "see" is very local and focuses on fine geometric details, such as sharp chamfers or minor changes in surface curvature.

  - For the medium-scale graph ($G_2$, $k_2 = 32$): We repeat the process above, but this time we find the 32 nearest neighbors of each point to form the neighbor set $\mathcal{N}_2(i)$ and the edge set $E_2$ of graph $G_2$. In this graph, the "field of view" of each node is wider, and it can perceive the shape of a local region composed of more points, such as the overall contour of a door handle or a rearview mirror.

  - For the large-scale graph ($G_3$, $k_3 = 64$): Similarly, we find the 64 nearest neighbors of each point to form the neighbor set $\mathcal{N}_3(i)$ and the edge set $E_3$ of graph $G_3$. At this scale, the model can aggregate information from a fairly broad region and thereby capture macroscopic geometric trends, such as the streamlined shape of the roof or the overall posture of the car body.

- Parallel feature learning: The S graphs ($G_1, \dots, G_S$) constructed in this way share exactly the same node set; only their edge sets differ. We feed the shared initial node features into S parallel graph attention (GAT) branches that have the same structure but do not share weights. The GAT layer of the s-th branch aggregates information strictly according to the adjacency relationship of graph $G_s$. In the small-scale branch, information is therefore passed and updated only among 16 neighbors, whereas in the large-scale branch it is exchanged among 64 neighbors.
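
As an illustration of the multi-scale graph construction described above, the following NumPy sketch builds one k-NN edge list per scale. The function names `knn_edges` and `multi_scale_graphs`, the brute-force distance computation, and the random test points are assumptions made for this example, not the implementation used in the paper.

```python
import numpy as np

def knn_edges(features: np.ndarray, k: int) -> np.ndarray:
    """Return a (2, N*k) array of directed edges i -> j, where j runs over
    the k nearest neighbours of i in feature space (brute force)."""
    # Pairwise squared Euclidean distances, shape (N, N).
    sq_norms = np.sum(features ** 2, axis=1)
    dist2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dist2, np.inf)  # a point is not its own neighbour
    # Indices of the k smallest distances per row (unordered within the k).
    nbrs = np.argpartition(dist2, k - 1, axis=1)[:, :k]
    src = np.repeat(np.arange(features.shape[0]), k)
    return np.stack([src, nbrs.reshape(-1)])

def multi_scale_graphs(features: np.ndarray, scales=(16, 32, 64)) -> dict:
    """Build one edge list per scale; the node set is shared by all graphs."""
    return {k: knn_edges(features, k) for k in scales}

# Toy point cloud: 200 random points with 3D coordinates as features.
rng = np.random.default_rng(0)
points = rng.normal(size=(200, 3))
graphs = multi_scale_graphs(points)
```

Each edge list could then be handed to its own GAT branch. For large point clouds, a spatial index (for example a KD-tree) would likely replace the dense distance matrix used here.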

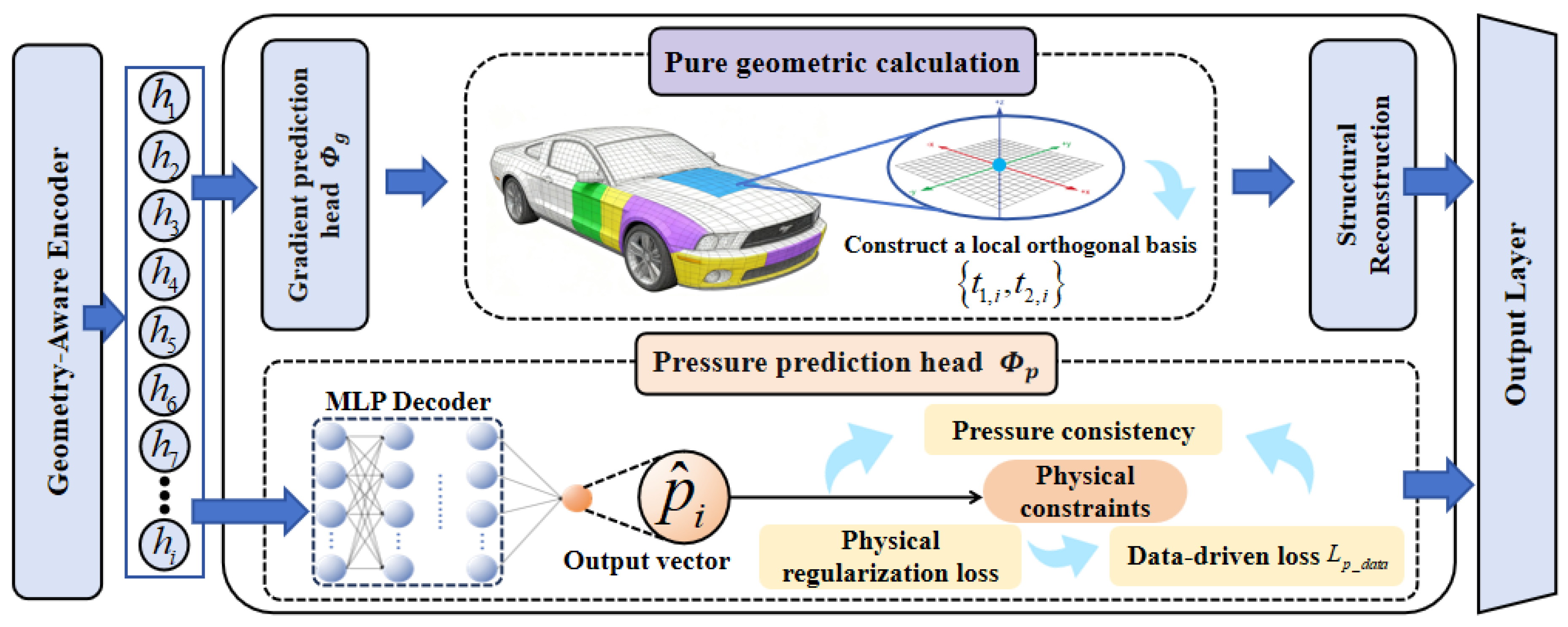

2.2. Dual Output Head

2.2.1. Motivation and Rationale

- Richer physical characterization: The pressure gradient is the bridge between the pressure field and the flow dynamics. For example, an adverse pressure gradient is the direct cause of boundary-layer separation and of the resulting pressure drag. By having the network learn and predict gradients directly, we force it to capture the local mechanical mechanisms behind these key aerodynamic phenomena, rather than merely learning a numerical mapping to pressure.

- Stronger learning constraints and generalization ability: Compared with a pressure scalar field alone, a gradient field provides stronger supervision signals and geometric constraints. To predict the magnitude and direction of the gradient accurately, the network must capture the relationship among local geometric curvature, surface orientation, and flow direction. This more demanding and more structured learning task acts as a strong regularizer that helps prevent overfitting, and thereby improves generalization to unseen cases.

2.2.2. Differential Representation Learning

- Step 1: Define the local tangent plane at each surface point $i$, that is, the plane orthogonal to the unit surface normal $\mathbf{n}_i$.

- Step 2: Construct an orthonormal basis $\{\mathbf{t}_{i,1}, \mathbf{t}_{i,2}\}$ of the local tangent plane.

  - First, we select a global reference vector $\mathbf{r}$ that is not collinear with the normal, usually a unit vector along one of the coordinate axes. To avoid the numerical singularity that may occur when $\mathbf{r}$ is approximately parallel to $\mathbf{n}_i$, we check their alignment: if $\mathbf{r}$ and $\mathbf{n}_i$ are parallel to within a very small tolerance $\epsilon$, we switch the reference vector to a different coordinate axis.

  - The first tangent vector $\mathbf{t}_{i,1}$ is obtained by taking the cross product of the reference vector and the normal vector and then normalizing the result: $\mathbf{t}_{i,1} = (\mathbf{r} \times \mathbf{n}_i) / \lVert \mathbf{r} \times \mathbf{n}_i \rVert$. This ensures that $\mathbf{t}_{i,1}$ is orthogonal to $\mathbf{n}_i$, that is, it lies within the tangent plane.

  - The second tangent vector $\mathbf{t}_{i,2}$ is obtained as the cross product of the normal vector and the first tangent vector: $\mathbf{t}_{i,2} = \mathbf{n}_i \times \mathbf{t}_{i,1}$. Since $\mathbf{n}_i$ and $\mathbf{t}_{i,1}$ are already orthogonal unit vectors, their cross product is automatically a unit vector orthogonal to both, so $\mathbf{t}_{i,2}$ also lies within the tangent plane. This completes the construction of the orthonormal basis.

- Step 3: Define the network outputs.

  - Pressure prediction head: Outputs a scalar $\hat{p}_i$, which directly corresponds to the predicted pressure value.

  - Gradient prediction head: Outputs a two-dimensional vector $(\hat{g}_{i,1}, \hat{g}_{i,2})$. The two components are the coordinates of the predicted tangential gradient vector in the local orthonormal basis constructed in Step 2. Therefore, in the three-dimensional global coordinate system, the complete predicted gradient vector can be reconstructed as:

    $$\hat{\mathbf{g}}_i = \hat{g}_{i,1}\,\mathbf{t}_{i,1} + \hat{g}_{i,2}\,\mathbf{t}_{i,2}$$
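
The basis construction and gradient reconstruction described in Steps 1 to 3 can be sketched in NumPy as follows. The specific choices here are assumptions for illustration only: the x-axis as the default reference vector, the y-axis as the fallback, the tolerance `eps = 1e-6`, the dot-product form of the parallelism check, and the function names `tangent_basis` and `reconstruct_gradient`.

```python
import numpy as np

def tangent_basis(normals: np.ndarray, eps: float = 1e-6):
    """Build unit tangent vectors t1, t2 (each of shape (N, 3)) for every normal."""
    n = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    x_axis = np.array([1.0, 0.0, 0.0])   # assumed default reference vector
    y_axis = np.array([0.0, 1.0, 0.0])   # assumed fallback reference vector
    ref = np.tile(x_axis, (n.shape[0], 1))
    # Switch the reference where the normal is nearly parallel to the x-axis.
    nearly_parallel = np.abs(n @ x_axis) > 1.0 - eps
    ref[nearly_parallel] = y_axis
    t1 = np.cross(ref, n)                               # orthogonal to n
    t1 /= np.linalg.norm(t1, axis=1, keepdims=True)     # normalize
    t2 = np.cross(n, t1)                                # unit length by construction
    return t1, t2

def reconstruct_gradient(coeffs: np.ndarray, t1: np.ndarray, t2: np.ndarray):
    """Map 2D tangent-plane coordinates (N, 2) to 3D gradient vectors (N, 3)."""
    return coeffs[:, :1] * t1 + coeffs[:, 1:2] * t2

# Three example normals, including one aligned with the default reference axis.
normals = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [1.0, 1.0, 1.0]])
t1, t2 = tangent_basis(normals)
g = reconstruct_gradient(np.array([[1.0, 2.0], [0.5, -1.0], [0.0, 3.0]]), t1, t2)
```

By construction, every reconstructed vector in `g` has no component along the corresponding normal, which is why two output channels suffice to describe a tangential gradient.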

2.3. Physics-Informed Hybrid Loss Function

2.3.1. Data-Driven Loss

- Pressure-fitting loss: This is the most basic supervision signal. The mean squared error (MSE) measures the deviation between the predicted pressure $\hat{p}_i$ and the ground-truth CFD pressure $p_i$, averaged over the $N$ surface points: $\frac{1}{N}\sum_{i=1}^{N}(\hat{p}_i - p_i)^2$.

- Gradient-fitting loss: To provide direct supervision for the gradient prediction head, we first compute the surface tangential gradient at each point from the ground-truth CFD pressure field and use it as the gradient label. We then use this label, which is derived from the simulation data rather than measured directly, to supervise the output of the gradient head.
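
One plausible way to obtain such gradient labels is a local least-squares fit of the pressure differences to neighbouring points, restricted to the tangent plane. The sketch below shows this for a single point. The function names `tangential_gradient_label` and `mse`, the inverse-distance weights, and the planar toy data are assumptions for illustration; the text does not state which estimator was actually used.

```python
import numpy as np

def tangential_gradient_label(x_i, p_i, x_nbrs, p_nbrs, t1, t2):
    """Estimate the tangent-plane gradient coordinates (a, b) at one point by
    weighted least squares: p_j - p_i ~ a * (dx . t1) + b * (dx . t2)."""
    dx = x_nbrs - x_i                                  # (k, 3) offsets to neighbours
    A = np.stack([dx @ t1, dx @ t2], axis=1)           # (k, 2) offsets in tangent coordinates
    w = 1.0 / (np.linalg.norm(dx, axis=1) + 1e-12)     # assumed inverse-distance weights
    sw = np.sqrt(w)
    coeffs, *_ = np.linalg.lstsq(A * sw[:, None], (p_nbrs - p_i) * sw, rcond=None)
    return coeffs

def mse(pred, target):
    """Mean squared error, usable for both the pressure and the gradient head."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))

# Toy check on the plane z = 0 with a linear pressure field p = 2x + 3y.
def pressure(x):
    return 2.0 * x[..., 0] + 3.0 * x[..., 1]

rng = np.random.default_rng(1)
x_i = np.array([0.2, 0.4, 0.0])
x_nbrs = np.column_stack([rng.uniform(size=(16, 2)), np.zeros(16)])
t1 = np.array([0.0, -1.0, 0.0])   # tangent basis for the normal (0, 0, 1)
t2 = np.array([1.0, 0.0, 0.0])

coeffs = tangential_gradient_label(x_i, pressure(x_i), x_nbrs, pressure(x_nbrs), t1, t2)
label_3d = coeffs[0] * t1 + coeffs[1] * t2   # gradient label in global coordinates
```

For this linear field the fit recovers the exact gradient, so `label_3d` matches (2, 3, 0) up to rounding. On a curved CFD surface the estimate would only be approximate and would depend on the neighbourhood size.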

2.3.2. Pressure/Gradient Consistency Loss

2.3.3. Surface Physical Regularization Loss

3. Verification Experiment

3.1. Experimental Setup

3.1.1. Dataset and Preprocessing

3.1.2. Benchmark Model

- PointNet++: A landmark architecture for point cloud processing, PointNet++ performs strongly on 3D understanding tasks thanks to its multi-scale, hierarchical feature extraction. We use it as a benchmark to test how well an advanced point cloud network can predict fields directly from geometry.

- DGCNN: This is the baseline model used in the DrivAerNet paper; it captures local geometric information through dynamic graph convolution. We include it because it represents a powerful geometric encoder that has already been validated on the same dataset.

- GCN: A basic graph convolutional network. This model uses a simple graph Laplacian operator for neighborhood information aggregation and does not incorporate attention or multi-scale mechanisms. It serves as a "minimalist" benchmark for evaluating the performance gains brought about by more complex graph structure designs.

- Transolver: This model efficiently captures physical correlations in complex geometric domains through a slicing and attention mechanism based on physical states, achieving leading PDE solution performance and excellent scalability.

- Geo-PhysNet (w/o Physics): This is a key ablation version of our model. It has exactly the same geometry-aware encoder and dual-output head architecture as Geo-PhysNet. However, during training only the data-driven loss is used, and the two physical constraint terms (the pressure/gradient consistency loss and the surface physical regularization loss) are excluded entirely. This model quantifies the actual contribution of the proposed physical constraints relative to a purely data-driven model with the same architecture.

3.1.3. Evaluation Index

3.1.4. Implementation Details

3.2. Implementation Details

3.2.1. Experimental Comparison of Effectiveness of Pressure Gradient Consistency Measurement Methods

3.2.2. Ablation Study

| Consistency Measurement Method | GME (↓) | GCS (↑) | Convergence Stability |
| --- | --- | --- | --- |
| Simple Finite Difference | 0.055 (expected) | 0.968 (expected) | Oscillations may occur |
| Direct Curl-Free Constraint | 0.049 (expected) | 0.975 (expected) | Stable |
| Weighted Least-Squares (Ours) | 0.0416 | 0.981 | Stable and efficient |

| Ablation Model | MAE (↓) | Laplacian MAE (↓) | RMSE (↓) | GME (↓) | GCS (↑) |
| --- | --- | --- | --- | --- | --- |
| Geo-PhysNet (Full Model) | 0.0112 | 0.0197 | 0.0163 | 0.0616 | 0.981 |
| w/o consistency loss | 0.0129 | 0.0451 | 0.0188 | 0.0921 | 0.935 |
| w/o physical regularization loss | 0.0115 | 0.0431 | 0.0169 | 0.0834 | 0.943 |
| w/o Geo-Features (geometric features removed) | 0.0151 | 0.0487 | 0.0224 | 0.1097 | 0.921 |

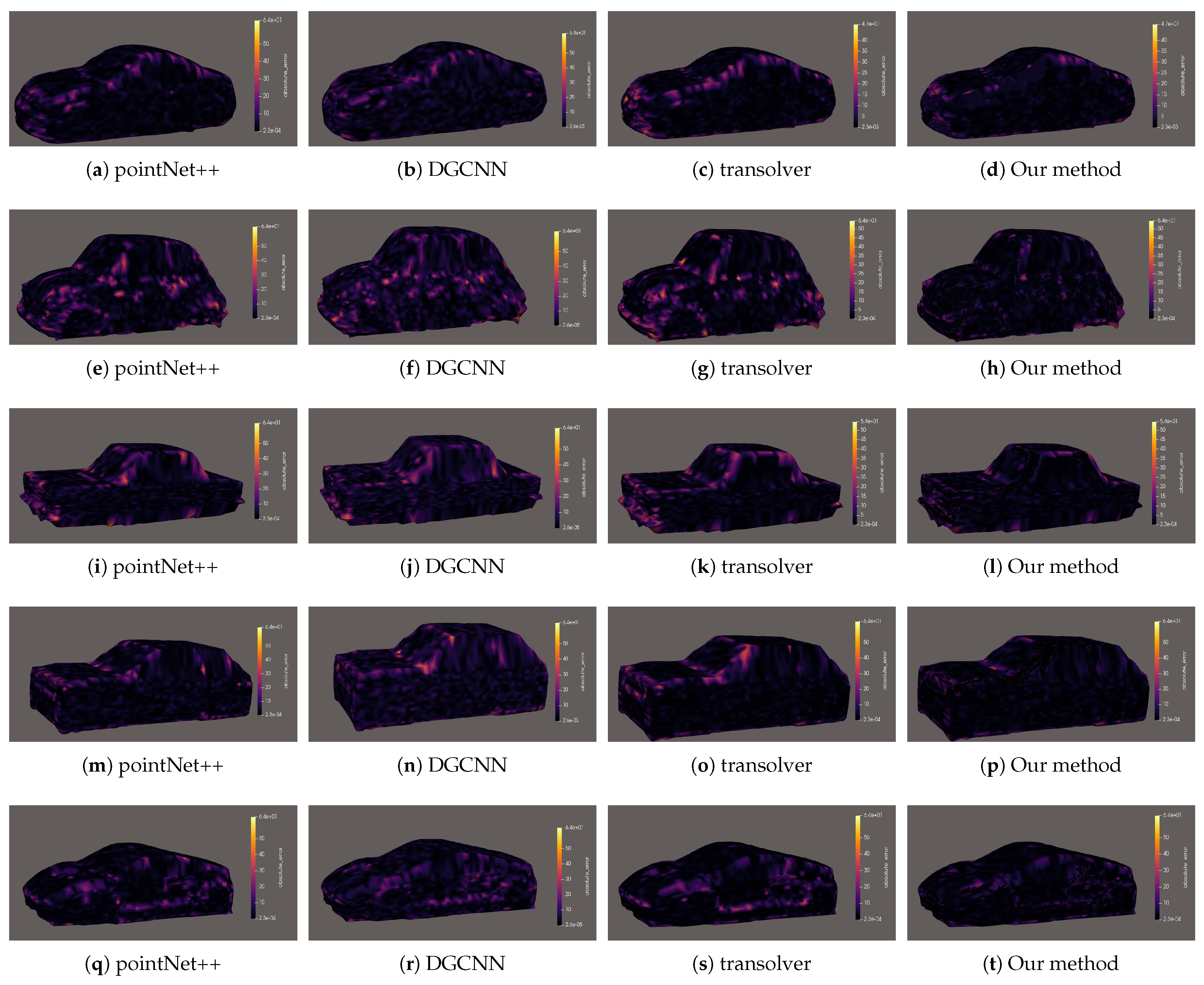

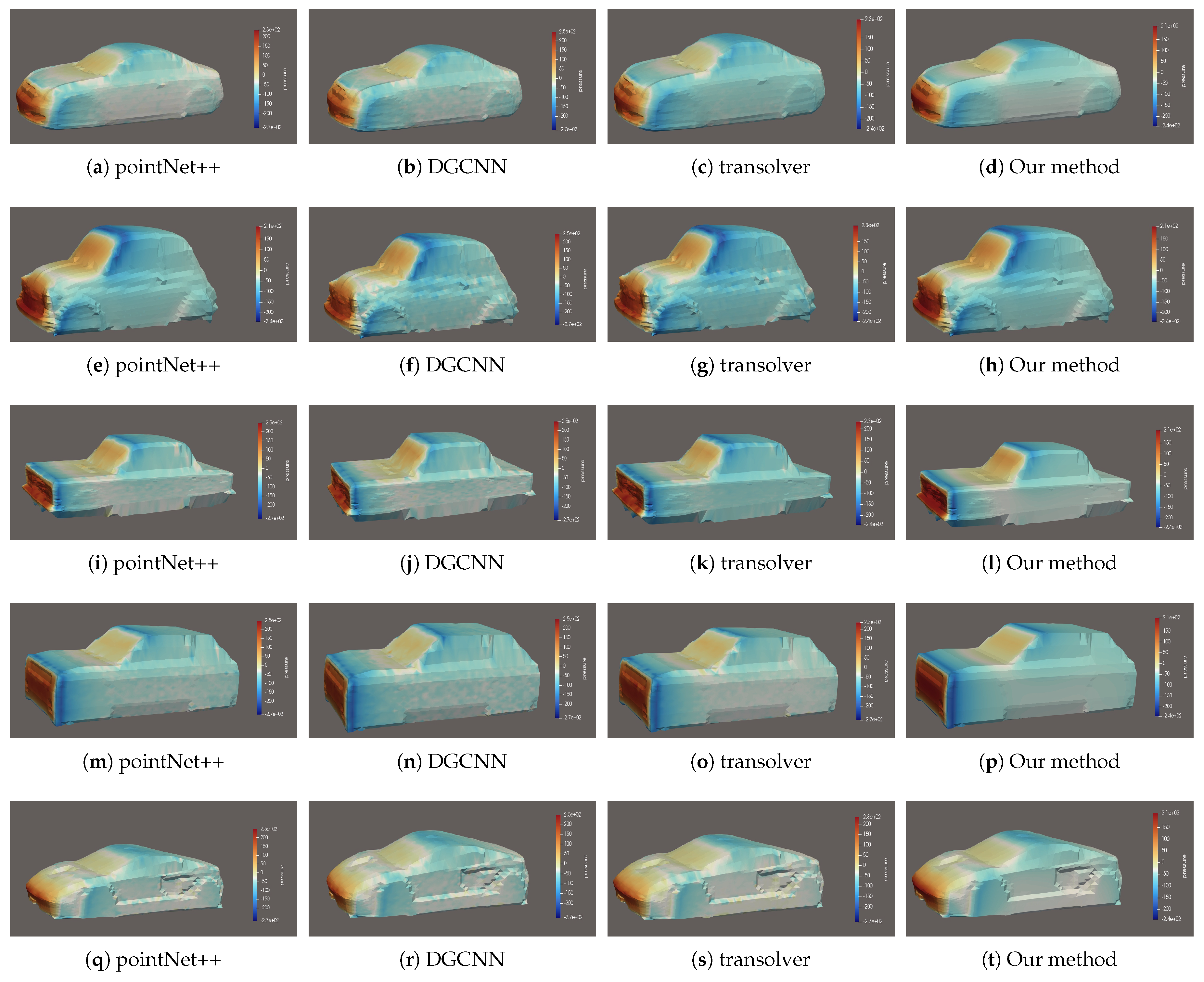

3.2.3. Visual Comparison of Prediction Results

3.2.4. Visual Comparison of Prediction Errors

4. Conclusions

References

1. Brand, C.; Anable, J.; Ketsopoulou, I.; Watson, J. Road to zero or road to nowhere? Disrupting transport and energy in a zero carbon world. Energy Policy 2020, 139, 111334.

2. Ahmed, S.R.; Ramm, G.; Faltin, G. Some salient features of the time-averaged ground vehicle wake. SAE Transactions 1984, pp. 473–503.

3. Heft, A.I.; Indinger, T.; Adams, N.A. Experimental and numerical investigation of the DrivAer model. In Proceedings of the Fluids Engineering Division Summer Meeting. American Society of Mechanical Engineers, 2012, Vol. 44755, pp. 41–51.

4. Heft, A.I.; Indinger, T.; Adams, N.A. Introduction of a new realistic generic car model for aerodynamic investigations. Technical report, SAE Technical Paper, 2012.

5. Aultman, M.; Wang, Z.; Auza-Gutierrez, R.; Duan, L. Evaluation of CFD methodologies for prediction of flows around simplified and complex automotive models. Computers & Fluids 2022, 236, 105297.

6. Thuerey, N.; Weißenow, K.; Prantl, L.; Hu, X. Deep learning methods for Reynolds-averaged Navier–Stokes simulations of airfoil flows. AIAA Journal 2020, 58, 25–36.

7. Gunpinar, E.; Coskun, U.C.; Ozsipahi, M.; Gunpinar, S. A generative design and drag coefficient prediction system for sedan car side silhouettes based on computational fluid dynamics. Computer-Aided Design 2019, 111, 65–79.

8. Song, B.; Yuan, C.; Permenter, F.; Arechiga, N.; Ahmed, F. Surrogate modeling of car drag coefficient with depth and normal renderings. In Proceedings of the International Design Engineering Technical Conferences and Computers and Information in Engineering Conference. American Society of Mechanical Engineers, 2023, Vol. 87301, p. V03AT03A029.

9. Remelli, E.; Lukoianov, A.; Richter, S.; Guillard, B.; Bagautdinov, T.; Baque, P.; Fua, P. MeshSDF: Differentiable iso-surface extraction. Advances in Neural Information Processing Systems 2020, 33, 22468–22478.

10. Jacob, S.J.; Mrosek, M.; Othmer, C.; Köstler, H. Deep learning for real-time aerodynamic evaluations of arbitrary vehicle shapes. arXiv preprint arXiv:2108.05798 2021.

11. Li, Z.; Kovachki, N.; Choy, C.; Li, B.; Kossaifi, J.; Otta, S.; Nabian, M.A.; Stadler, M.; Hundt, C.; Azizzadenesheli, K.; et al. Geometry-informed neural operator for large-scale 3D PDEs. Advances in Neural Information Processing Systems 2023, 36, 35836–35854.

12. Pfaff, T.; Fortunato, M.; Sanchez-Gonzalez, A.; Battaglia, P. Learning mesh-based simulation with graph networks. In Proceedings of the International Conference on Learning Representations, 2020.

13. Kashefi, A.; Mukerji, T. Physics-informed PointNet: A deep learning solver for steady-state incompressible flows and thermal fields on multiple sets of irregular geometries. Journal of Computational Physics 2022, 468, 111510.

14. Deng, J.; Li, X.; Xiong, H.; Hu, X.; Ma, J. Geometry-guided conditional adaption for surrogate models of large-scale 3D PDEs on arbitrary geometries 2024.

15. Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An information-rich 3D model repository. arXiv preprint arXiv:1512.03012 2015.

16. Wu, H.; Luo, H.; Wang, H.; Wang, J.; Long, M. Transolver: A fast transformer solver for PDEs on general geometries. arXiv preprint arXiv:2402.02366 2024.

| Model | MAE (↓) | RMSE (↓) | GME (↓) | GCS (↑) | Laplacian MAE (↓) |
| --- | --- | --- | --- | --- | --- |
| GCN | 0.0264 | 0.0381 | 0.1512 | 0.887 | 0.0286 |
| PointNet++ | 0.0217 | 0.0315 | 0.1305 | 0.911 | 0.0263 |
| DGCNN | 0.0165 | 0.0240 | 0.1189 | 0.946 | 0.0231 |
| Transolver | 0.0135 | 0.0200 | 0.0862 | 0.965 | 0.0225 |
| Geo-PhysNet (w/o Physics) | 0.0138 | 0.0201 | 0.0953 | 0.956 | 0.0230 |
| Geo-PhysNet (Ours) | 0.0112 | 0.0163 | 0.0616 | 0.981 | 0.0197 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).