Submitted: 04 October 2025

Posted: 06 October 2025

Abstract

Keywords:

1. Introduction

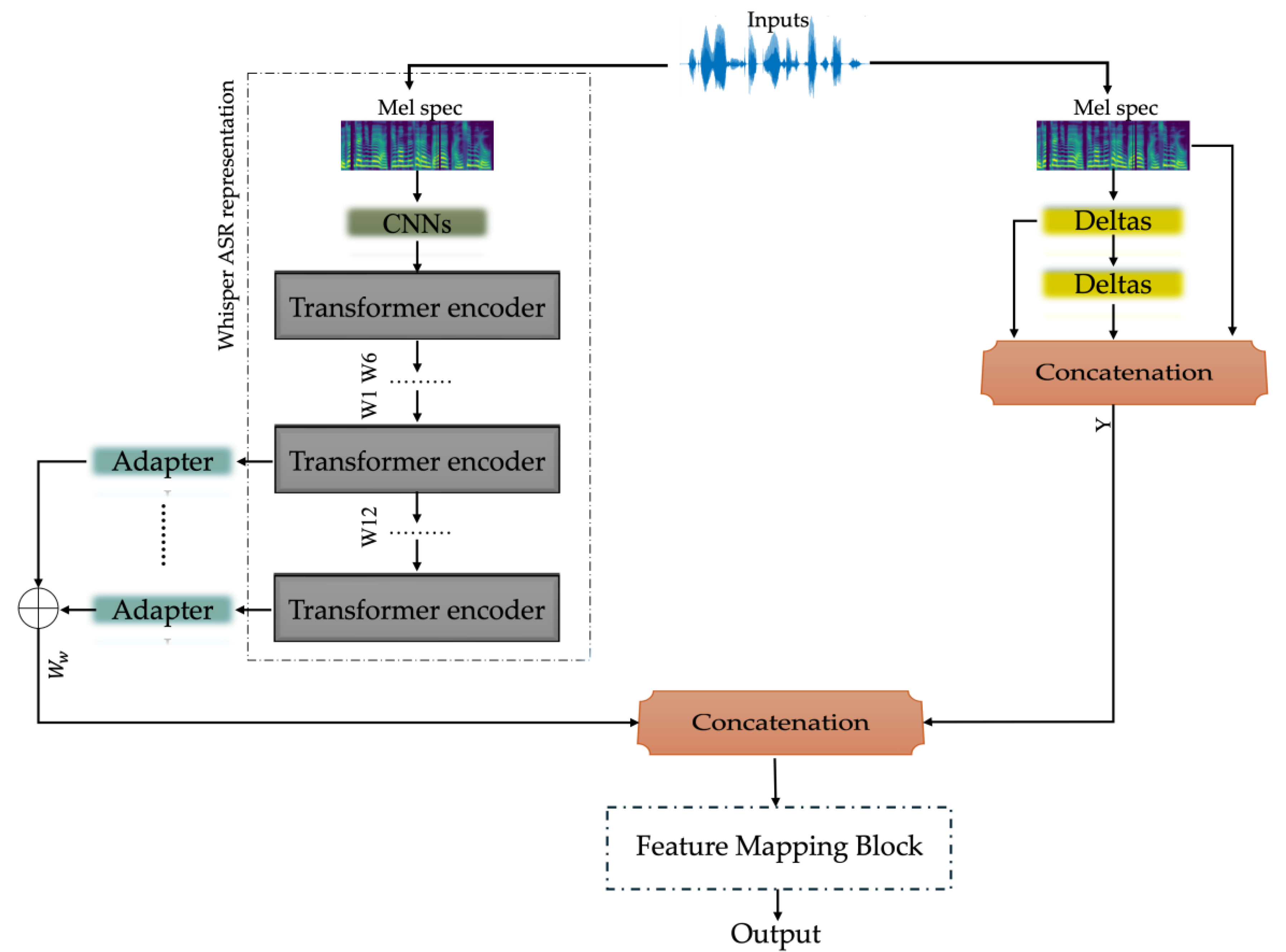

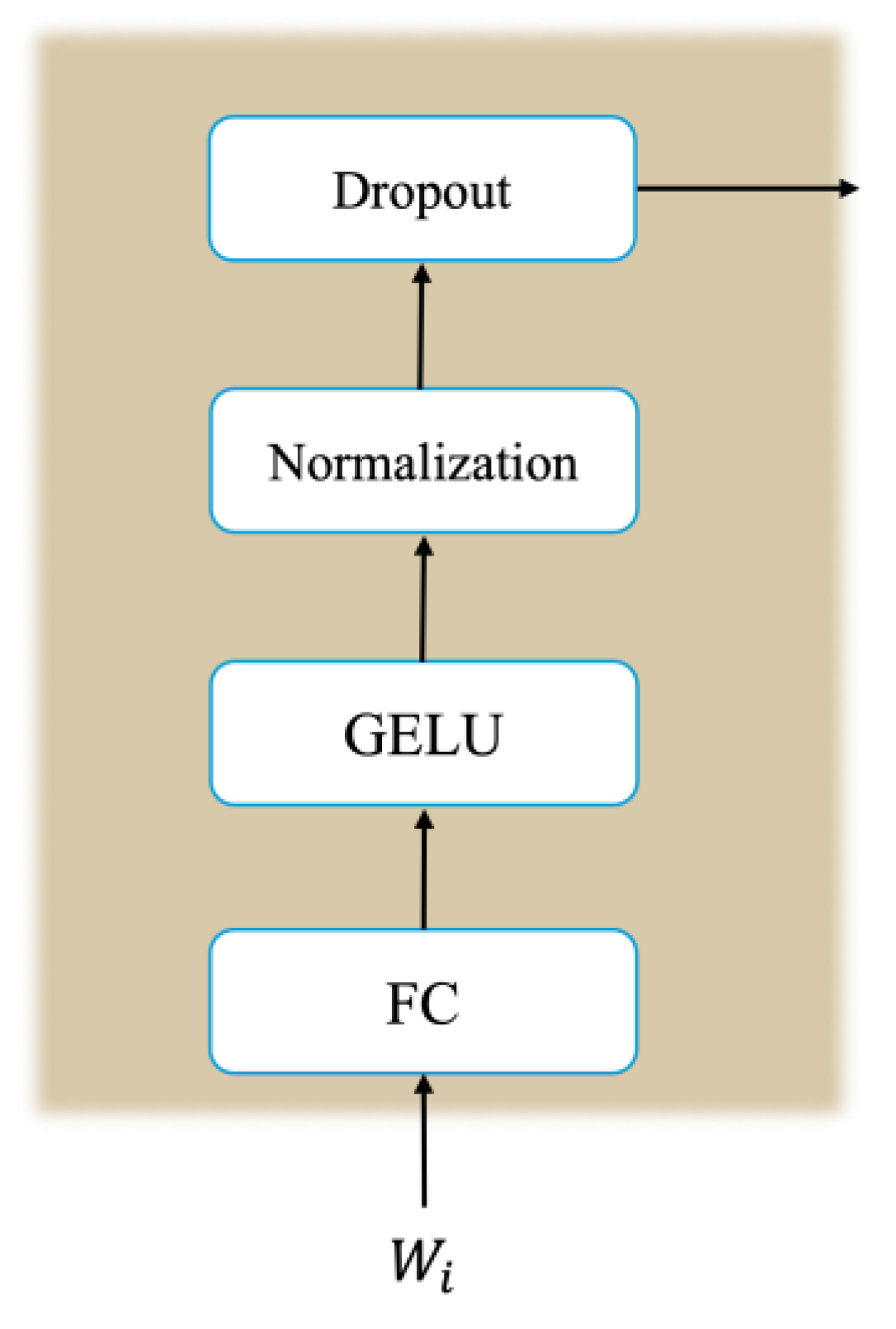

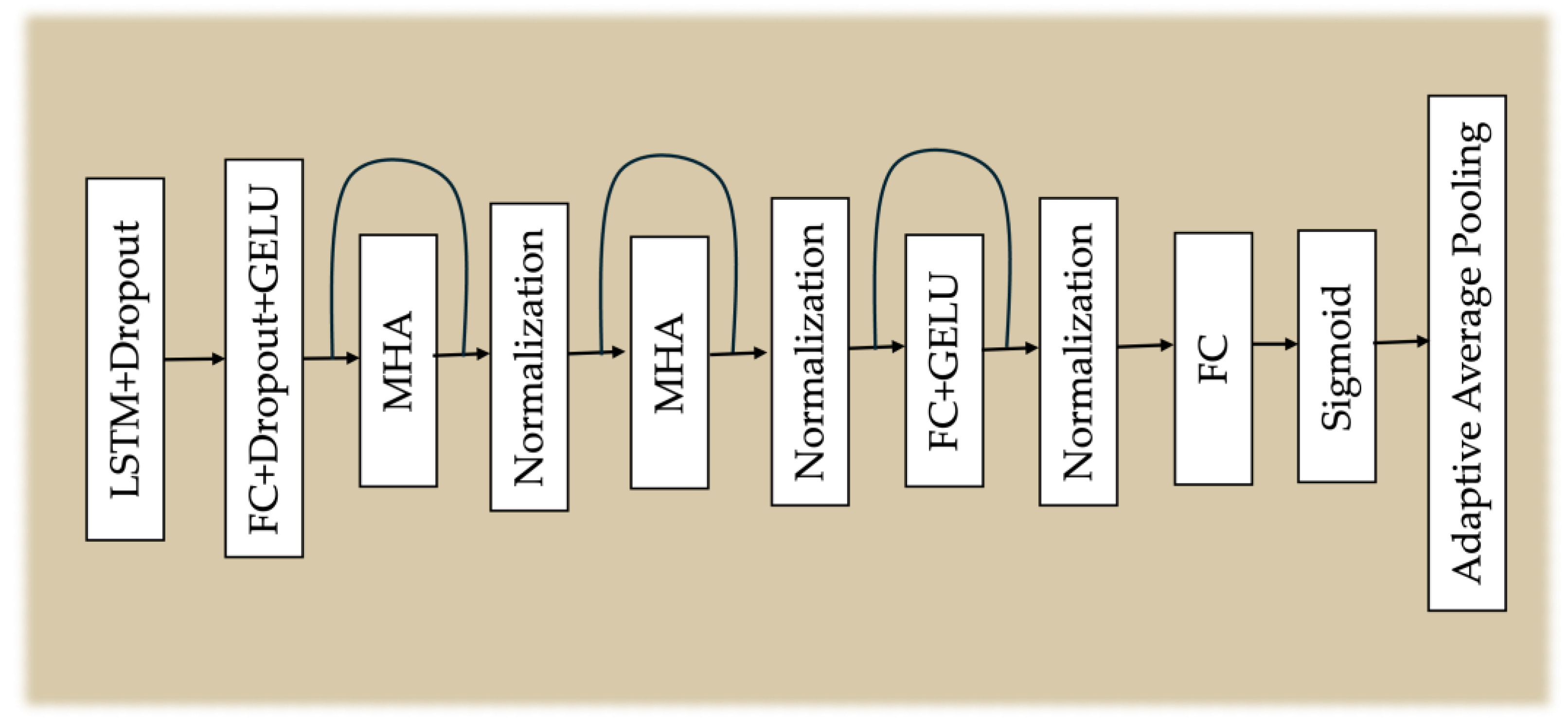

2. Proposed Model

3. Methodology

3.1. Dataset

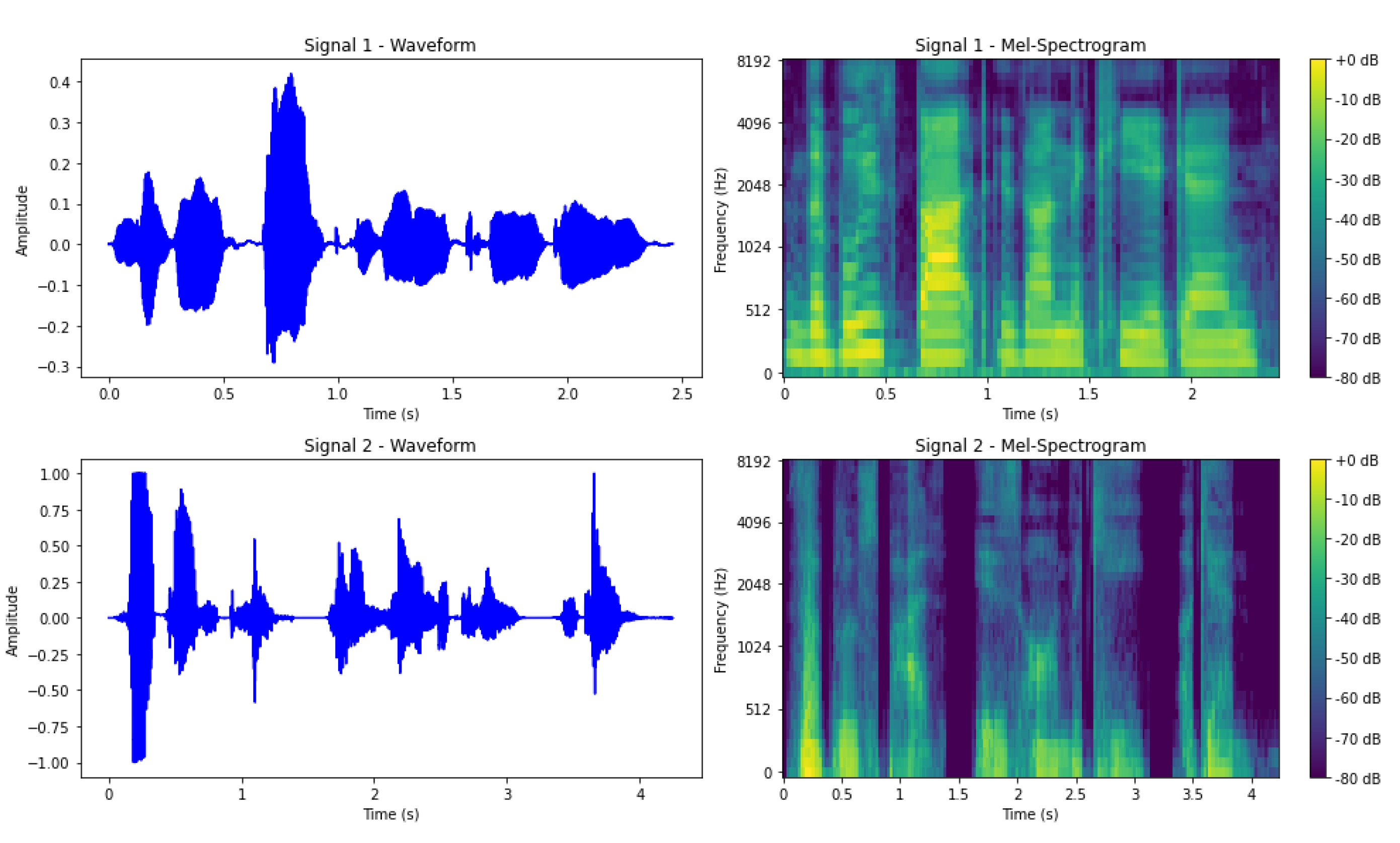

3.2. Input Data

3.3. Training Setup

3.4. Results

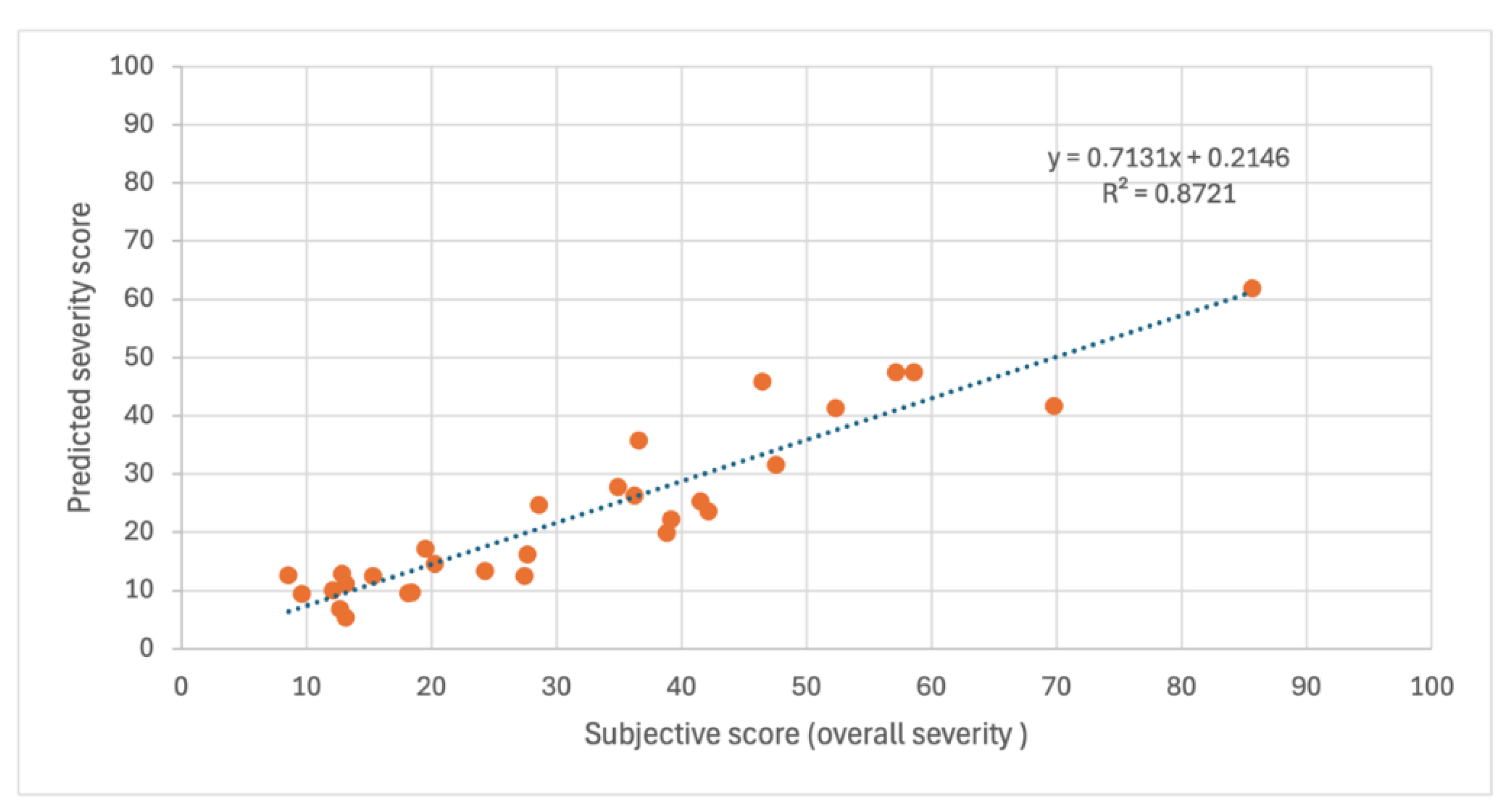

3.5. Generalization to an Unseen Dataset

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASR | Automatic Speech Recognition |
| DNN | Deep Neural Network |
| LSTM | Long Short-Term Memory |
| MFCC | Mel-Frequency Cepstral Coefficients |
| Whisper ASR | Whisper Automatic Speech Recognition |
| SQ | Speech Quality |
| SI | Speech Intelligibility |
| SFMs | Speech Foundation Models |
| CPP | Cepstral Peak Prominence |
| AVQI | Acoustic Voice Quality Index |
| CAPE-V | Consensus Auditory-Perceptual Evaluation of Voice |
| GRBAS | Grade, Roughness, Breathiness, Asthenia, and Strain |
| PD | Parkinson’s Disease |
| CPC2 | Clarity Prediction Challenge 2 |
| SSL | Self-Supervised Learning |
| SAFN | Sequential-Attention Fusion Network |
| FC | Fully Connected |
| GAP | Global Average Pooling |
| PVQD | Perceptual Voice Qualities Database |
| RMSE | Root Mean Square Error |

References

- Barsties, B.; De Bodt, M. Assessment of voice quality: Current state-of-the-art. Auris Nasus Larynx 2015, 42, 183–188. [Google Scholar] [CrossRef] [PubMed]

- Kreiman, J.; Gerratt, B.R. Perceptual Assessment of Voice Quality: Past, Present, and Future. Perspect. Voice Voice Disord. 2010, 20, 62–67. [Google Scholar] [CrossRef]

- Tsuboi, T.; Watanabe, H.; Tanaka, Y.; Ohdake, R.; Yoneyama, N.; Hara, K.; Nakamura, R.; Watanabe, H.; Senda, J.; Atsuta, N.; et al. Distinct phenotypes of speech and voice disorders in Parkinson's disease after subthalamic nucleus deep brain stimulation. J. Neurol. Neurosurg. Psychiatry 2014, 86, 856–864. [Google Scholar] [CrossRef] [PubMed]

- Tsuboi, T.; Watanabe, H.; Tanaka, Y.; Ohdake, R.; Hattori, M.; Kawabata, K.; Hara, K.; Ito, M.; Fujimoto, Y.; Nakatsubo, D.; et al. Early detection of speech and voice disorders in Parkinson’s disease patients treated with subthalamic nucleus deep brain stimulation: a 1-year follow-up study. J. Neural Transm. 2017, 124, 1547–1556. [Google Scholar] [CrossRef] [PubMed]

- Kim, S.; et al. 05 July 2022; arXiv:2110.05376. [CrossRef]

- Hidaka, S.; Lee, Y.; Nakanishi, M.; Wakamiya, K.; Nakagawa, T.; Kaburagi, T. Automatic GRBAS Scoring of Pathological Voices using Deep Learning and a Small Set of Labeled Voice Data. J. Voice 2022, 39, 846.e1–846.e23. [Google Scholar] [CrossRef] [PubMed]

- Kent, R.D. Hearing and Believing. Am. J. Speech-Language Pathol. 1996, 5, 7–23. [Google Scholar] [CrossRef]

- Mehta, D.D.; Hillman, R.E. Voice assessment: updates on perceptual, acoustic, aerodynamic, and endoscopic imaging methods. Curr. Opin. Otolaryngol. Head Neck Surg. 2008, 16, 211–215. [Google Scholar] [CrossRef] [PubMed]

- Nagle, K.F. Clinical Use of the CAPE-V Scales: Agreement, Reliability and Notes on Voice Quality. J. Voice 2022, 39, 685–698. [Google Scholar] [CrossRef] [PubMed]

- Maryn, Y.; Roy, N.; De Bodt, M.; Van Cauwenberge, P.; Corthals, P. Acoustic measurement of overall voice quality: A meta-analysis. J. Acoust. Soc. Am. 2009, 126, 2619–2634. [Google Scholar] [CrossRef] [PubMed]

- Gómez-García, J.; Moro-Velázquez, L.; Mendes-Laureano, J.; Castellanos-Dominguez, G.; Godino-Llorente, J. Emulating the perceptual capabilities of a human evaluator to map the GRB scale for the assessment of voice disorders. Eng. Appl. Artif. Intell. 2019, 82, 236–251. [Google Scholar] [CrossRef]

- Lin, Y.-H.; Tseng, W.-H.; et al. Lightly Weighted Automatic Audio Parameter Extraction for the Quality Assessment of Consensus Auditory-Perceptual Evaluation of Voice, in 2024 IEEE International Conference on Consumer Electronics (ICCE), Jan. 2024, pp. 1–6. [CrossRef]

- Maryn, Y.; Weenink, D. Objective Dysphonia Measures in the Program Praat: Smoothed Cepstral Peak Prominence and Acoustic Voice Quality Index. J. Voice 2015, 29, 35–43. [Google Scholar] [CrossRef] [PubMed]

- Falk, T.H.; Parsa, V.; Santos, J.F.; Arehart, K.; Hazrati, O.; Huber, R.; Kates, J.M.; Scollie, S. Objective Quality and Intelligibility Prediction for Users of Assistive Listening Devices: Advantages and limitations of existing tools. IEEE Signal Process. Mag. 2015, 32, 114–124. [Google Scholar] [CrossRef] [PubMed]

- Andersen, A.H.; de Haan, J.M.; Tan, Z.-H.; Jensen, J. Nonintrusive Speech Intelligibility Prediction Using Convolutional Neural Networks. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2018, 26, 1925–1939. [Google Scholar] [CrossRef]

- Leng, Y.; Tan, X.; et al. MBNET: MOS Prediction for Synthesized Speech with Mean-Bias Network, in ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), June 2021, pp. 391–395. [CrossRef]

- Zezario, R.E.; Fu, S.-W.; Chen, F.; Fuh, C.-S.; Wang, H.-M.; Tsao, Y. Deep Learning-Based Non-Intrusive Multi-Objective Speech Assessment Model With Cross-Domain Features. IEEE/ACM Trans. Audio, Speech, Lang. Process. 2022, 31, 54–70. [Google Scholar] [CrossRef]

- Dong, X.; Williamson, D.S. An Attention Enhanced Multi-Task Model for Objective Speech Assessment in Real-World Environments, in ICASSP 2020 - 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), May 2020, pp. 911–915. [CrossRef]

- Zezario, R.E.; Fu, S.-W.; Fuh, C.-S.; et al. STOI-Net: A Deep Learning based Non-Intrusive Speech Intelligibility Assessment Model, in 2020 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Dec. 2020, pp. 482–486. Accessed: 18 May 2025. [Online]. Available: https://ieeexplore.ieee. 9306. [Google Scholar]

- Fu, S.-W.; Tsao, Y.; et al. Quality-Net: An End-to-End Non-intrusive Speech Quality Assessment Model based on BLSTM, arXiv, Aug. 2018, arXiv:1808.05344. [CrossRef]

- Liu, Y.; Yang, L.-C.; Pawlicki, A.; and Stamenovic, M. CCATMos: Convolutional Context-aware Transformer Network for Non-intrusive Speech Quality Assessment, in Interspeech 2022, Sept. 2022, pp. 3318–3322. [CrossRef]

- Kumar, A.; et al. Torchaudio-Squim: Reference-Less Speech Quality and Intelligibility Measures in Torchaudio, in ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), June 2023, pp. 1–5. [CrossRef]

- Gao, Y.; Shi, H.; Chu, C.; and Kawahara, T. Enhancing Two-Stage Finetuning for Speech Emotion Recognition Using Adapters, in ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Apr. 2024, pp. 11316–11320. [CrossRef]

- Gao, Y.; Chu, C.; and Kawahara, T. Two-stage Finetuning of Wav2vec 2.0 for Speech Emotion Recognition with ASR and Gender Pretraining, in INTERSPEECH 2023, ISCA, Aug. 2023, pp. 3637–3641. [CrossRef]

- Tian, J.; et al. Semi-supervised Multimodal Emotion Recognition with Consensus Decision-making and Label Correction, in Proceedings of the 1st International Workshop on Multimodal and Responsible Affective Computing (MRAC ’23), New York, NY, USA: Association for Computing Machinery, Oct. 2023, pp. 67–73. [CrossRef]

- Dang, S.; Matsumoto, T.; Takeuchi, Y.; and Kudo, H. Using Semi-supervised Learning for Monaural Time-domain Speech Separation with a Self-supervised Learning-based SI-SNR Estimator, in INTERSPEECH 2023, ISCA, Aug. 2023, pp. 3759–3763. [CrossRef]

- Sun, H.; Zhao, S.; et al. Fine-Grained Disentangled Representation Learning For Multimodal Emotion Recognition, in ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Apr. 2024, pp. 11051–11055. [CrossRef]

- Cuervo, S.; and Marxer, R. Speech Foundation Models on Intelligibility Prediction for Hearing-Impaired Listeners, in ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Apr. 2024, pp. 1421–1425. [CrossRef]

- Mogridge, R.; et al. Non-Intrusive Speech Intelligibility Prediction for Hearing-Impaired Users Using Intermediate ASR Features and Human Memory Models, in ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Apr. 2024, pp. 306–310. [CrossRef]

- Liu, G.S.; Jovanovic, N.; Sung, C.K.; Doyle, P.C. A Scoping Review of Artificial Intelligence Detection of Voice Pathology: Challenges and Opportunities. Otolaryngol. Neck Surg. 2024, 171, 658–666. [Google Scholar] [CrossRef] [PubMed]

- Chen, Z.; Zhu, P.; Qiu, W.; Guo, J.; Li, Y. Deep learning in automatic detection of dysphonia: Comparing acoustic features and developing a generalizable framework. Int. J. Lang. Commun. Disord. 2022, 58, 279–294. [Google Scholar] [CrossRef] [PubMed]

- García, M.A.; Rosset, A.L. Deep Neural Network for Automatic Assessment of Dysphonia, 25 Feb. 2022, arXiv:2202.12957. [Google Scholar] [CrossRef]

- Dang, S.; et al. Developing vocal system impaired patient-aimed voice quality assessment approach using ASR representation-included multiple features, 22 Aug. 2024, arXiv:2408.12279. [Google Scholar] [CrossRef]

- van der Woerd, B.; Chen, Z.; Flemotomos, N.; Oljaca, M.; Sund, L.T.; Narayanan, S.; Johns, M.M. A Machine-Learning Algorithm for the Automated Perceptual Evaluation of Dysphonia Severity. J. Voice 2023. [Google Scholar] [CrossRef] [PubMed]

- Lee, M. Mathematical Analysis and Performance Evaluation of the GELU Activation Function in Deep Learning. J. Math. 2023, 2023, 1–13. [Google Scholar] [CrossRef]

- Walden, P.R. Perceptual Voice Qualities Database (PVQD): Database Characteristics. J. Voice 2022, 36, 875.e15–875.e23. [Google Scholar] [CrossRef] [PubMed]

- Murton, O. M.; Haenssler, A. E.; Maffei, M. F.; et al. Validation of a Task-Independent Cepstral Peak Prominence Measure with Voice Activity Detection, in INTERSPEECH 2023, ISCA, Aug. 2023, pp. 4993–4997. [CrossRef]

- Dindamrongkul, R.; Liabsuetrakul, T.; Pitathawatchai, P. Prediction of pure tone thresholds using the speech reception threshold and age in elderly individuals with hearing loss. BMC Res. Notes 2024, 17, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Ensar, B.; Searl, J.; Doyle, P. Stability of Auditory-Perceptual Judgments of Vocal Quality by Inexperienced Listeners, presented at the American Speech and Hearing Convention, Seattle, WA, United States, Dec. 2024. [Google Scholar]

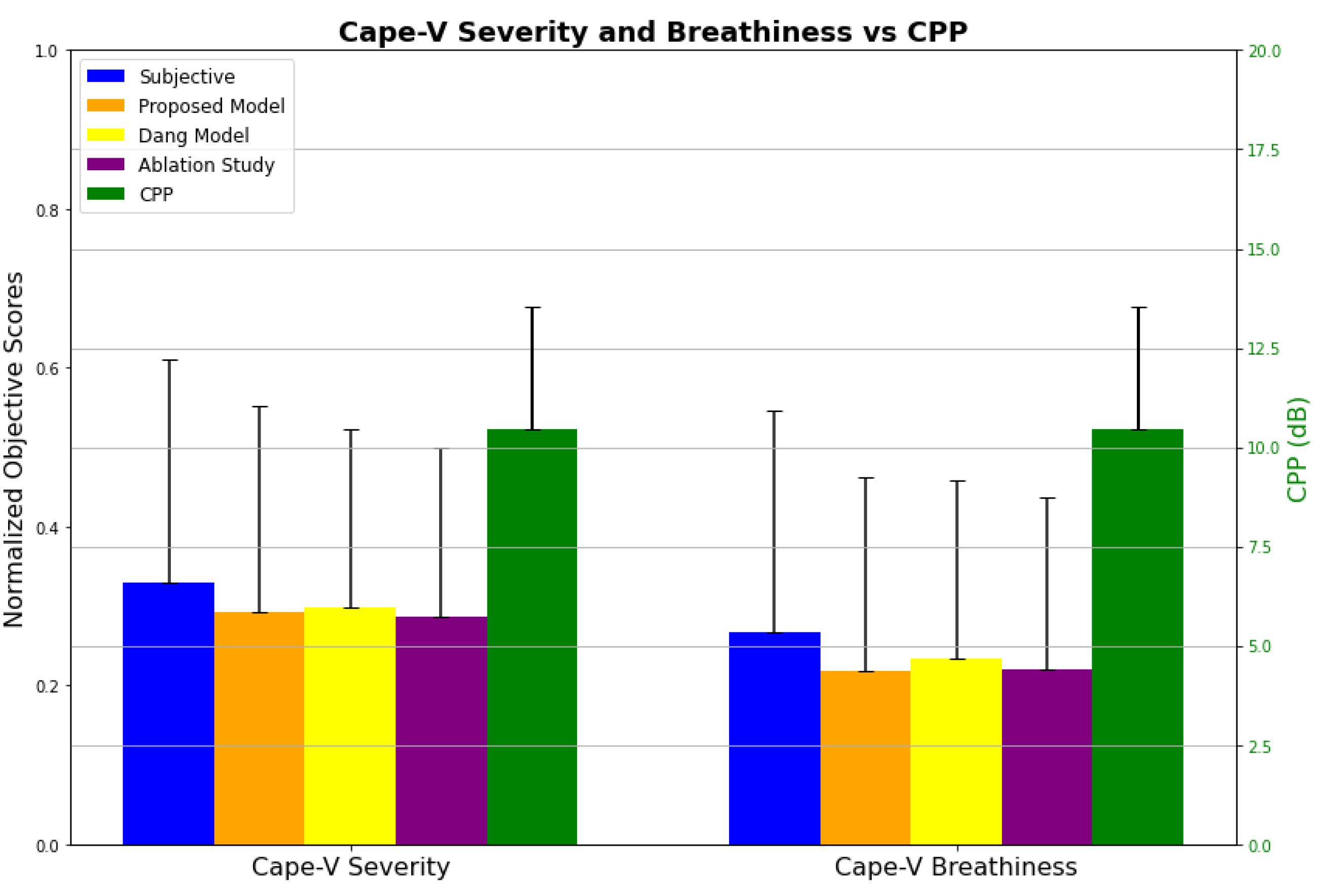

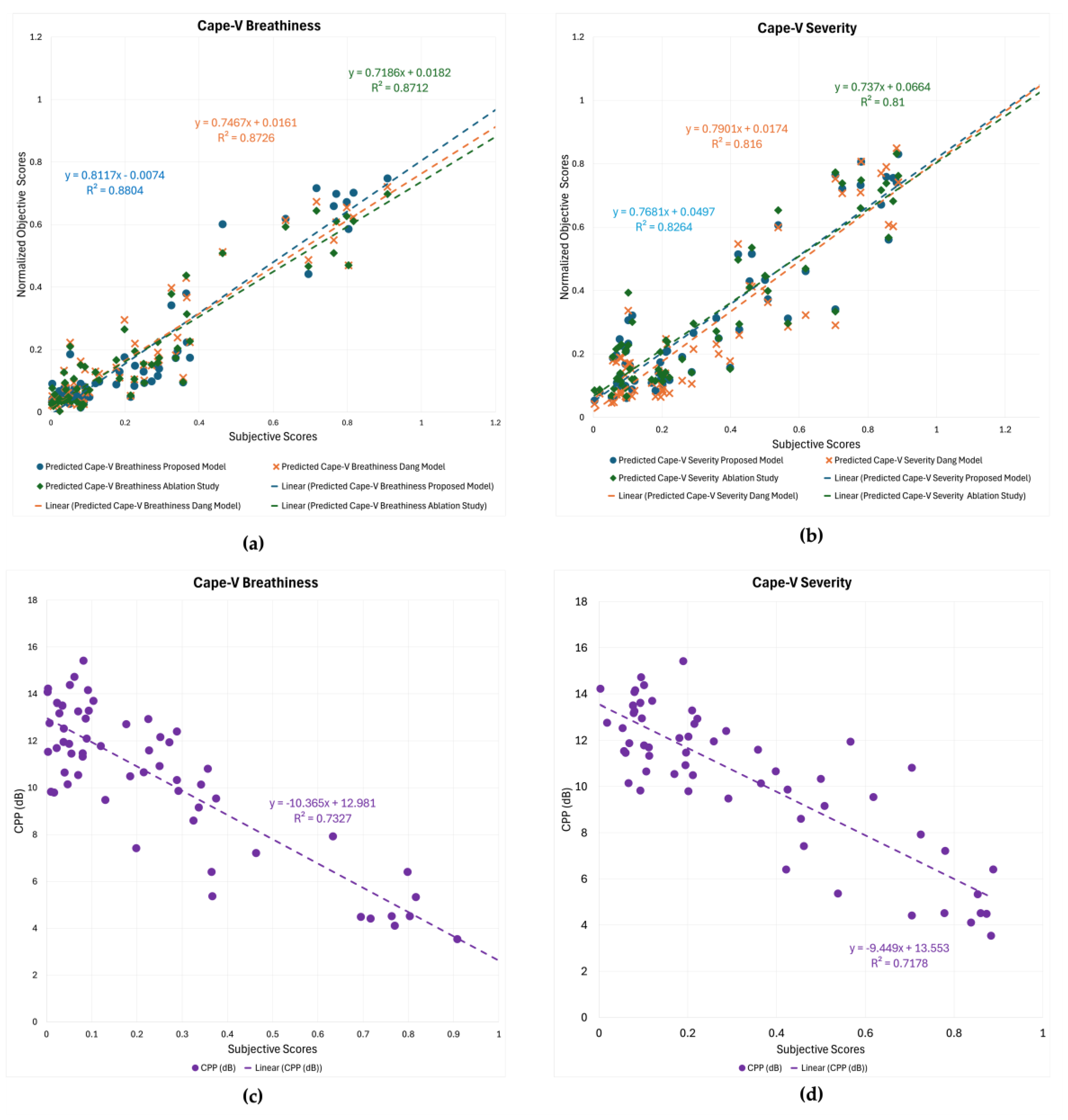

| Method | CAPE-V severity: Correlation | CAPE-V severity: RMSE | CAPE-V breathiness: Correlation | CAPE-V breathiness: RMSE | Trainable Parameters |
|---|---|---|---|---|---|
| Sentence Level: | | | | | |
| Proposed | 0.8810 | 0.1335 | 0.9244 | 0.1118 | 242,423,655 |
| Dang et al. [33] | 0.8784 | 0.1423 | 0.9155 | 0.1159 | 336,119,692 |
| Dang et al. [33], Ablated | 0.8685 | 0.1386 | 0.9104 | 0.1216 | 241,177,500 |
| CPP (dB) | -0.7468 | 0.1835 | -0.7577 | 0.1665 | - |
| HNR (dB) | -0.4916 | 0.2402 | -0.4898 | 0.2225 | - |
| Talker Level: | | | | | |
| Proposed | 0.9092 | 0.1189 | 0.9394 | 0.1029 | |
| Dang et al. [33] | 0.9034 | 0.1286 | 0.9352 | 0.1060 | |
| Dang et al. [33], Ablated | 0.9000 | 0.1237 | 0.9342 | 0.1111 | |
| van der Woerd et al. [34] * | 0.8460 | 0.1423 | - | - | |
| CPP (dB) | -0.8489 | 0.1458 | -0.8576 | 0.1307 | |
| HNR (dB) | -0.5330 | 0.2333 | -0.5296 | 0.2156 | |
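The table reports a correlation and an RMSE at both the sentence level and the talker level. As a rough illustration of how such figures can be computed, the sketch below uses plain Python. It rests on assumptions not confirmed by the source: that "Correlation" means the Pearson coefficient, and that talker-level scores are obtained by averaging each talker's sentence-level values. The function names and the sample numbers are hypothetical.

```python
import math
from collections import defaultdict


def pearson(x, y):
    """Pearson correlation between two equal-length sequences (assumed metric)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def rmse(x, y):
    """Root mean square error between predictions and reference ratings."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


def talker_level(talker_ids, values):
    """Average sentence-level values per talker (assumed aggregation rule)."""
    groups = defaultdict(list)
    for talker, value in zip(talker_ids, values):
        groups[talker].append(value)
    return {talker: sum(v) / len(v) for talker, v in groups.items()}


if __name__ == "__main__":
    # Hypothetical sentence-level data: talker id, predicted score, rated score.
    talkers = ["t1", "t1", "t2", "t2", "t3", "t3"]
    predicted = [0.10, 0.14, 0.55, 0.61, 0.80, 0.90]
    rated = [0.12, 0.12, 0.50, 0.50, 0.95, 0.95]

    print("sentence-level r:", pearson(predicted, rated))
    print("sentence-level RMSE:", rmse(predicted, rated))

    pred_by_talker = talker_level(talkers, predicted)
    rated_by_talker = talker_level(talkers, rated)
    keys = sorted(pred_by_talker)
    print("talker-level r:",
          pearson([pred_by_talker[k] for k in keys],
                  [rated_by_talker[k] for k in keys]))
    print("talker-level RMSE:",
          rmse([pred_by_talker[k] for k in keys],
               [rated_by_talker[k] for k in keys]))
```

Under this assumed aggregation, averaging over a talker's sentences smooths out sentence-to-sentence prediction noise, which is one possible reason talker-level correlations in the table are higher than sentence-level ones.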

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).