Submitted: 16 September 2025

Posted: 17 September 2025


Abstract

Keywords:

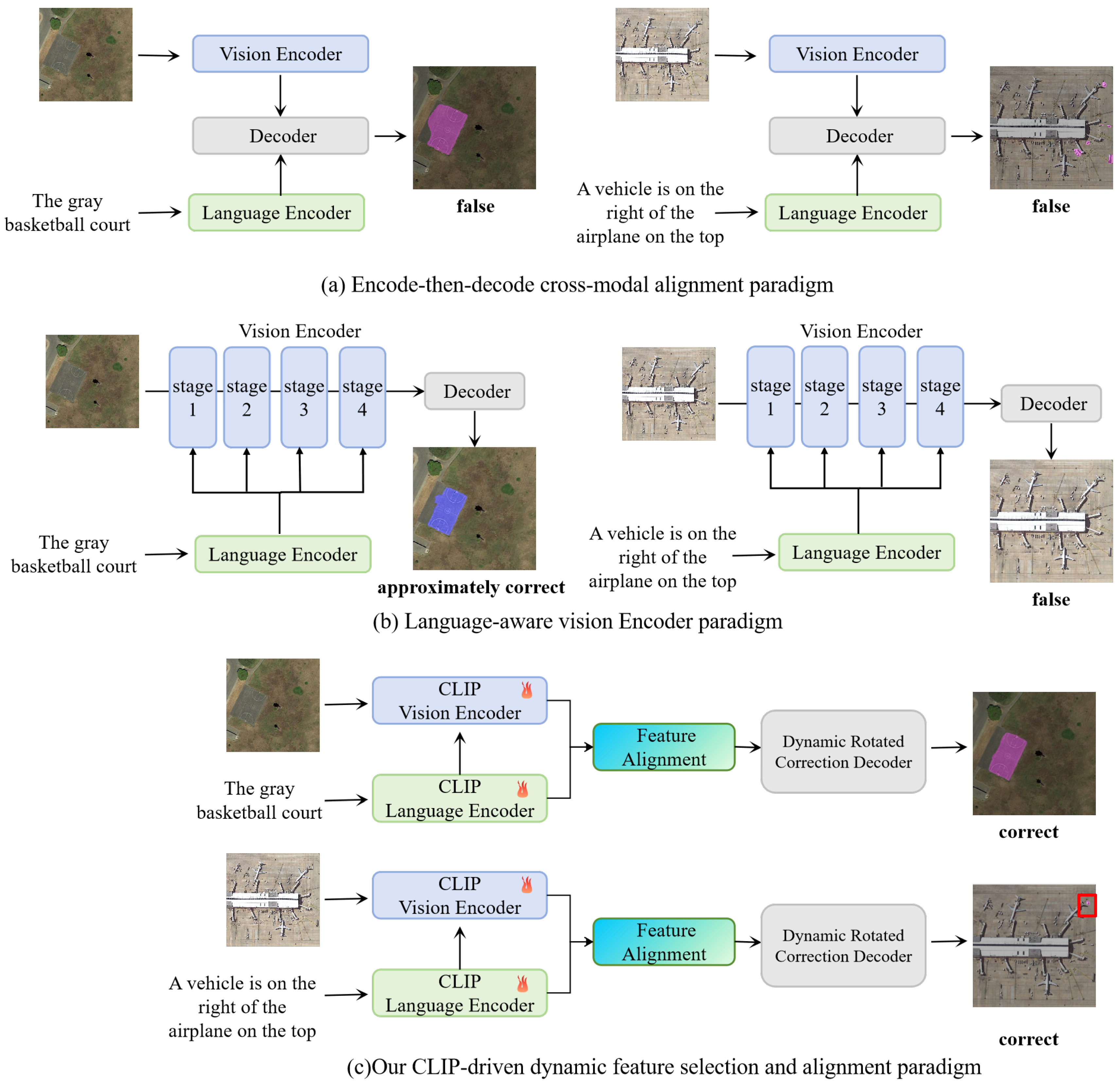

1. Introduction

- We propose CD2FSAN (CLIP-Driven Dynamic Feature Selection and Alignment Network), a CLIP-tailored, single-stage framework for referring remote sensing image segmentation (RRSIS). It is jointly optimized to improve cross-modal alignment, small-object localization, and geometry-aware decoding in complex aerial scenes.

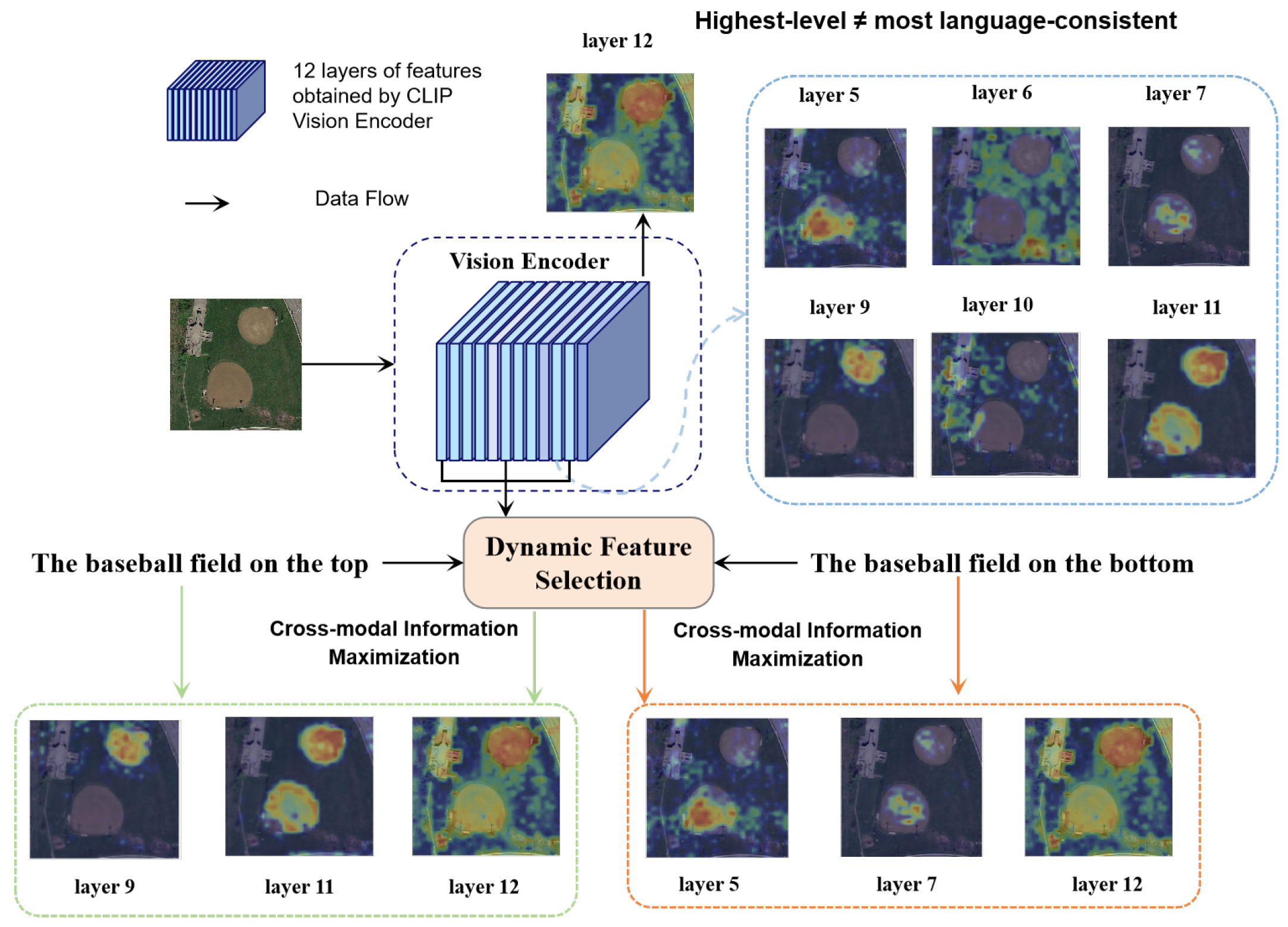

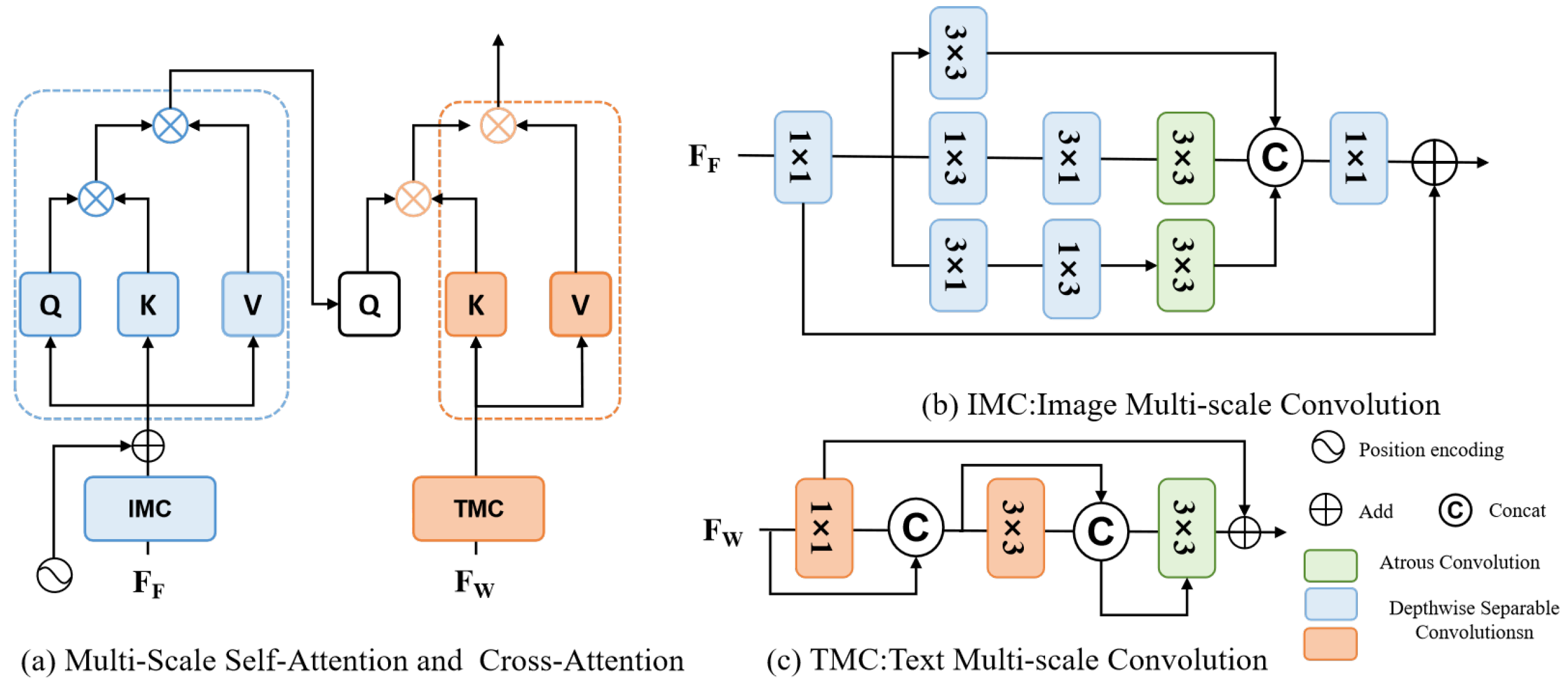

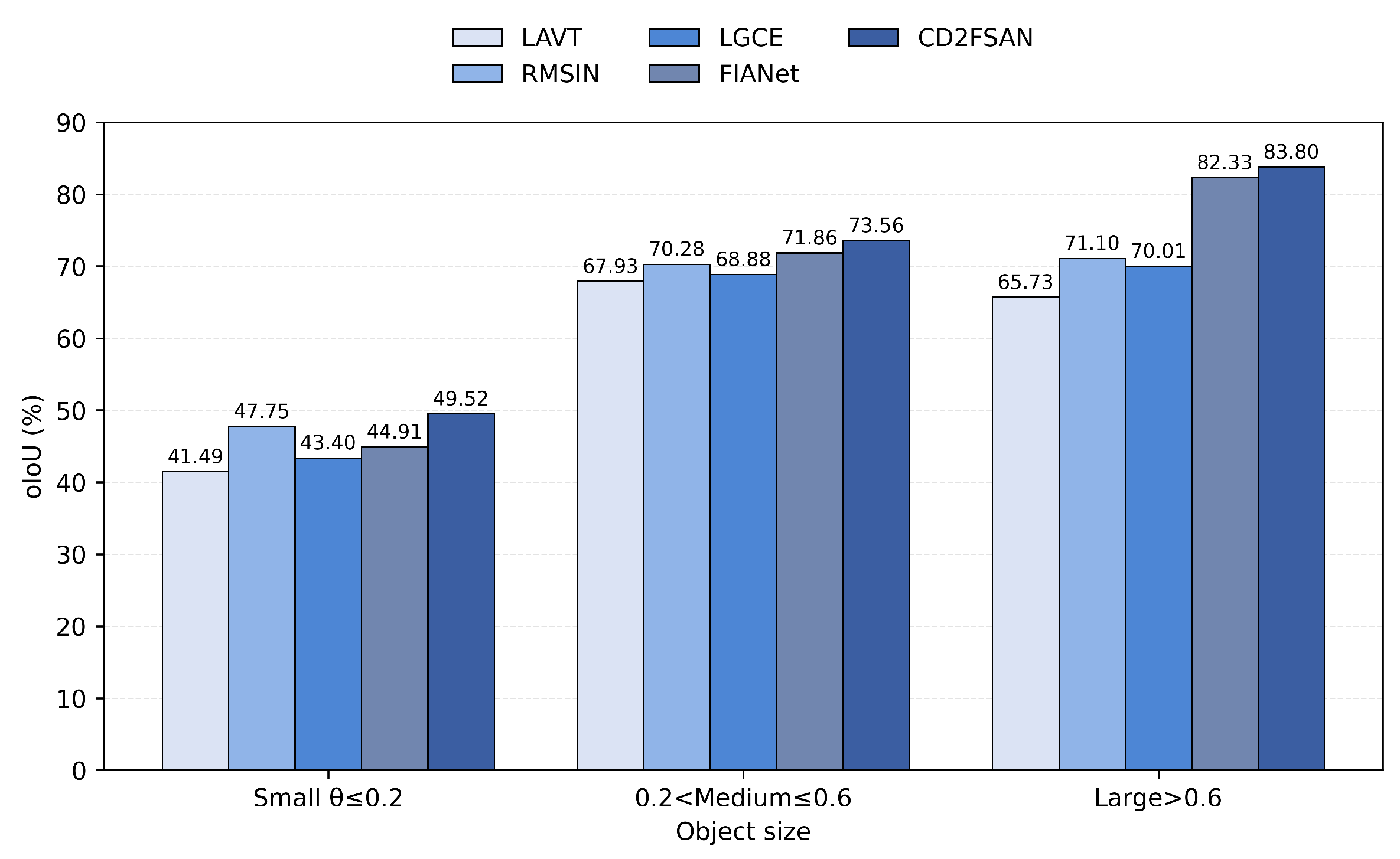

- To enhance cross-modal alignment and fine-grained segmentation, we introduce an integrated design for visual–language alignment and geometry-aware decoding. It has three components:
  - A mutual-information–inspired Dynamic Feature Selection Mechanism (DFSM) selects language-consistent features across CLIP's layer hierarchy.
  - A Multi-Scale Aggregation and Alignment Module (MAAM) builds scale-consistent representations to sharpen small-object localization.
  - A Dynamic Rotation Correction Decoder (DRCD) predicts a per-sample rotation angle and re-parameterizes convolutional kernels through differentiable affine transformations. This aligns receptive fields with object orientations and improves the delineation of rotated targets.

- Extensive experiments on three public RRSIS benchmarks (RefSegRS, RRSIS-D, and RISBench) show that CD2FSAN achieves state-of-the-art accuracy with competitive efficiency. Ablation studies and visualizations further confirm the effectiveness and interpretability of each component.

2. Related Work

2.1. Referring Image Segmentation

2.2. Referring Remote Sensing Image Segmentation

3. Materials and Methods

3.1. Overview

3.2. Image and Text Feature Encoding

3.3. Dynamic Feature Selection Mechanism
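The Introduction describes the DFSM only at a high level: a mutual-information–inspired selection of language-consistent features across CLIP's layer hierarchy. For contrast, the ablation rows RFS(4,7,12) and RFS(6,9,12) appear to use fixed layer sets.

The sketch below is a hypothetical illustration, not the authors' implementation. It rests on these assumptions:

- Each layer's pooled visual feature is scored by cosine similarity with the sentence embedding. This is a crude stand-in for a real mutual-information estimate.
- Only the top-k layers are kept.
- The kept layers are weighted with a temperature softmax.

The names `select_layers`, `k`, and `temperature` are ours, and layer indices are 0-based.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)


def select_layers(layer_feats: List[Sequence[float]],
                  text_emb: Sequence[float],
                  k: int = 3,
                  temperature: float = 0.1) -> List[Tuple[int, float]]:
    """Hypothetical DFSM-style layer selection (not the authors' code).

    1. Score each layer's pooled visual feature against the sentence
       embedding (cosine similarity as an ASSUMED proxy for mutual information).
    2. Keep the k highest-scoring layers.
    3. Convert the kept scores into fusion weights with a temperature softmax.
    Returns (layer_index, weight) pairs, 0-based, in shallow-to-deep order.
    """
    scores = [cosine(f, text_emb) for f in layer_feats]
    # sorted() is stable even with reverse=True, so ties keep index order.
    kept = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    kept.sort()  # restore shallow-to-deep order for later fusion
    top = max(scores[i] for i in kept)  # subtract the max for numerical stability
    exps = [math.exp((scores[i] - top) / temperature) for i in kept]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(kept, exps)]


if __name__ == "__main__":
    # Toy example: 12 layers (assumed, e.g. a 12-layer CLIP ViT-B encoder)
    # with 2-D features; only layers 5, 8 and 11 align with the text.
    feats = [[0.0, 1.0] for _ in range(12)]
    for i in (5, 8, 11):
        feats[i] = [1.0, 0.0]
    print(select_layers(feats, [1.0, 0.0], k=3))
```

Because the selection is recomputed for every image-text pair, different expressions can draw on different CLIP layers. A fixed choice such as layers (4, 7, 12) cannot do this.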

3.4. Multi-Scale Aggregation and Alignment Module

3.5. Dynamic Rotation Correction Decoder
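According to the Introduction, the DRCD predicts a per-sample rotation angle and re-parameterizes convolutional kernels through differentiable affine transformations. The pure-Python sketch below illustrates only the kernel re-parameterization step, under our own assumptions:

- The angle `theta` is passed in directly rather than predicted by a network head.
- The kernel is square.
- Resampling uses bilinear interpolation with zero padding.

In a deep-learning framework the same operation is commonly expressed with an affine sampling grid, for example PyTorch's `torch.nn.functional.affine_grid` followed by `grid_sample`. `rotate_kernel` is a hypothetical name, not part of the paper.

```python
import math
from typing import List

Kernel = List[List[float]]  # square k x k convolution kernel


def rotate_kernel(kernel: Kernel, theta: float) -> Kernel:
    """Rotate a square kernel by `theta` radians about its centre (sketch).

    Each output tap is inverse-mapped into the source kernel with a 2x2
    rotation matrix and read by bilinear interpolation; positions outside
    the kernel read as zero. Bilinear sampling varies smoothly with theta
    (almost everywhere), which is what lets an angle-predicting head be
    trained by gradient descent in a real framework.
    """
    n = len(kernel)
    c = (n - 1) / 2.0  # kernel centre, e.g. 1.0 for a 3x3 kernel
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    def tap(y: int, x: int) -> float:
        # Zero padding outside the kernel support.
        return kernel[y][x] if 0 <= y < n and 0 <= x < n else 0.0

    out = [[0.0] * n for _ in range(n)]
    for y in range(n):
        for x in range(n):
            dx, dy = x - c, y - c
            # Inverse rotation R(-theta): source position for output tap (y, x).
            sx = cos_t * dx + sin_t * dy + c
            sy = -sin_t * dx + cos_t * dy + c
            x0, y0 = math.floor(sx), math.floor(sy)
            fx, fy = sx - x0, sy - y0
            # Bilinear blend of the four neighbouring source taps.
            out[y][x] = ((1 - fx) * (1 - fy) * tap(y0, x0)
                         + fx * (1 - fy) * tap(y0, x0 + 1)
                         + (1 - fx) * fy * tap(y0 + 1, x0)
                         + fx * fy * tap(y0 + 1, x0 + 1))
    return out


if __name__ == "__main__":
    k = [[0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0]]
    for row in rotate_kernel(k, math.pi / 2):
        print([round(v, 3) for v in row])
```

Consider a 3×3 kernel whose only non-zero tap is the top-middle entry. Rotating it by π/2 moves that tap to the middle-right entry (in image coordinates, with y pointing down). In other words, the kernel's sampling pattern turns with the predicted orientation, while the weights themselves are reused.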

4. Results

4.1. Dataset and Implementation Details

- RefSegRS. The dataset comprises 4,420 image–text–label triplets drawn from 285 scenes. The training, validation, and test splits contain 2,172, 431, and 1,817 triplets, respectively, corresponding to 151, 31, and 103 scenes. Fourteen categories (for example, road, vehicle, car, van, and building) and five attributes are annotated. Images are 512×512 pixels at a ground sampling distance (GSD) of 0.13 m.

- RRSIS-D. This benchmark contains 17,402 triplets of images, segmentation masks, and referring expressions. The training, validation, and test sets include 12,181, 1,740, and 3,481 triplets, respectively. It covers 20 semantic categories (for example, airplane, golf field, expressway service area, baseball field, and stadium) and seven attributes. Images are 800×800 pixels, with GSD ranging from 0.5 m to 30 m.

- RISBench. The dataset includes 52,472 image–language–label triplets. The training, validation, and test partitions contain 26,300, 10,013, and 16,159 triplets, respectively. It features 26 categories with eight attributes. All images are resized to 512×512 pixels, with GSD ranging from 0.1 m to 30 m.

- Overall Intersection over Union (oIoU): This metric is the ratio of the cumulative intersection area to the cumulative union area across all test samples. Because every pixel contributes equally, larger objects dominate the score.

- Mean Intersection over Union (mIoU): mIoU is computed by averaging the IoU values between predicted masks and ground truth annotations for each test sample, treating both small and large objects equally.

- Precision@X: Precision@X measures the percentage of test samples for which the IoU between the predicted mask and the ground truth exceeds a threshold X. The reported tables use X ∈ {0.5, 0.6, 0.7, 0.8, 0.9}. Higher thresholds demand increasingly accurate masks, so Precision@X reflects localization quality at different levels of strictness.
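The three metrics above can be written out in a few lines. This is a minimal pure-Python sketch with the following choices:

- Each mask is a flattened sequence of booleans.
- Precision@X uses the strict "exceeds" comparison as worded above.
- An empty prediction on an empty target scores IoU 1. This convention is our own, and published evaluation scripts may handle that case differently.

`rrsis_metrics` is a hypothetical helper name.

```python
from typing import Dict, List, Sequence, Tuple

Mask = Sequence[bool]  # flattened binary mask


def inter_union(pred: Mask, gt: Mask) -> Tuple[int, int]:
    """Pixel counts of intersection and union for one sample."""
    inter = sum(1 for p, g in zip(pred, gt) if p and g)
    union = sum(1 for p, g in zip(pred, gt) if p or g)
    return inter, union


def rrsis_metrics(preds: List[Mask], gts: List[Mask],
                  thresholds=(0.5, 0.6, 0.7, 0.8, 0.9)) -> Dict[str, float]:
    """oIoU, mIoU and Precision@X (all in %) over a list of samples."""
    total_i = total_u = 0
    ious = []
    for pred, gt in zip(preds, gts):
        i, u = inter_union(pred, gt)
        total_i += i  # oIoU pools pixels across the whole test set
        total_u += u
        ious.append(i / u if u else 1.0)  # assumed convention for empty/empty
    out = {
        "oIoU": 100.0 * total_i / total_u if total_u else 100.0,
        "mIoU": 100.0 * sum(ious) / len(ious),  # every sample weighted equally
    }
    for t in thresholds:
        # Strict ">" to match "exceeds a threshold X".
        out[f"Pr@{t}"] = 100.0 * sum(iou > t for iou in ious) / len(ious)
    return out


if __name__ == "__main__":
    gt_large, pred_large = [True] * 100, [True] * 90 + [False] * 10  # IoU 0.90
    gt_small = [True] * 4 + [False] * 6
    pred_small = [True] + [False] * 3 + [True] + [False] * 5  # IoU 0.20
    print(rrsis_metrics([pred_large, pred_small], [gt_large, gt_small]))
```

The toy example has one large object segmented at IoU 0.90 (100-pixel target) and one small object at IoU 0.20 (4-pixel target). It yields oIoU ≈ 86.67 but mIoU = 55.00, because the pooled ratio is dominated by the large object. This is why the two metrics can rank methods differently.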

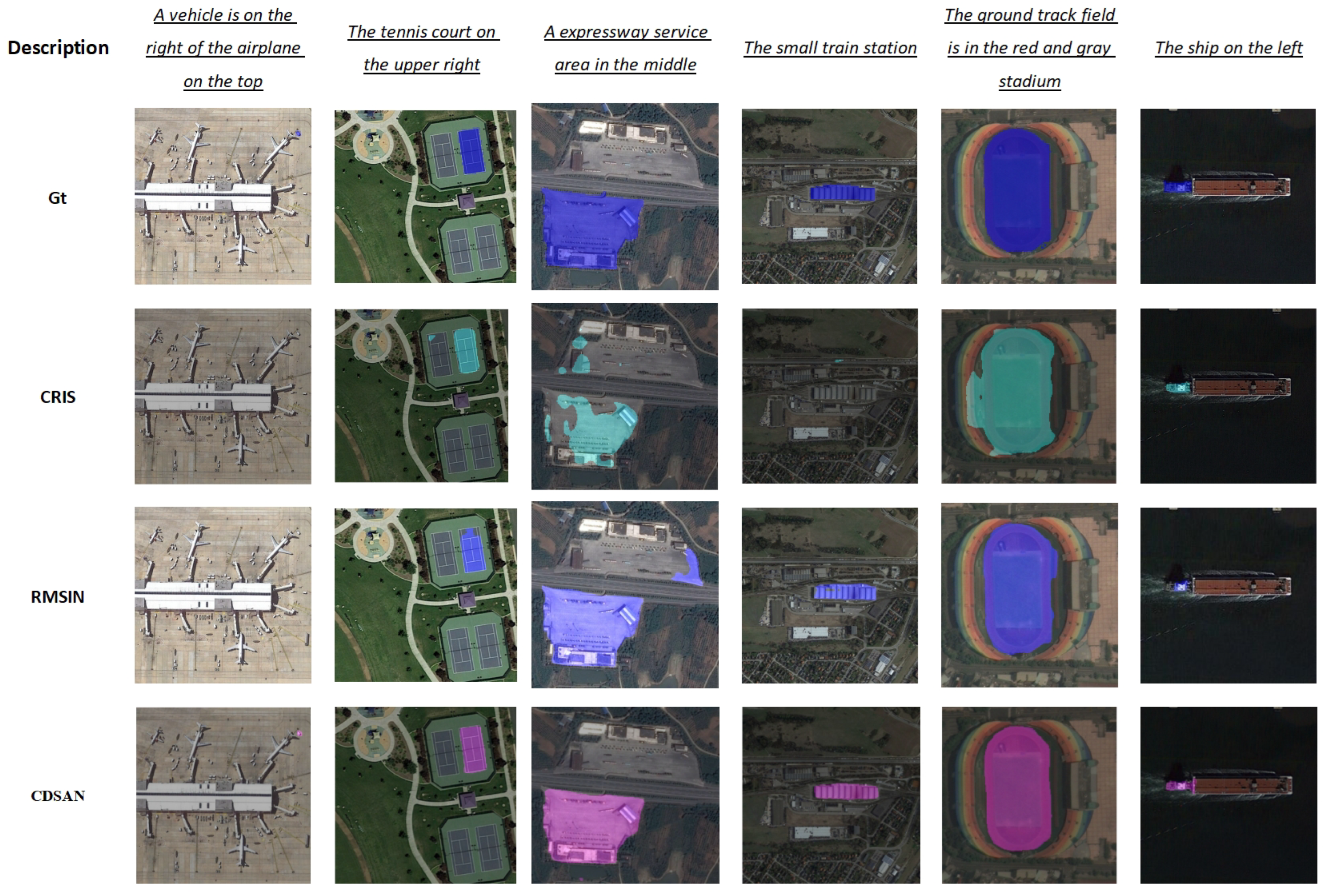

4.2. Comparisons with Other Methods

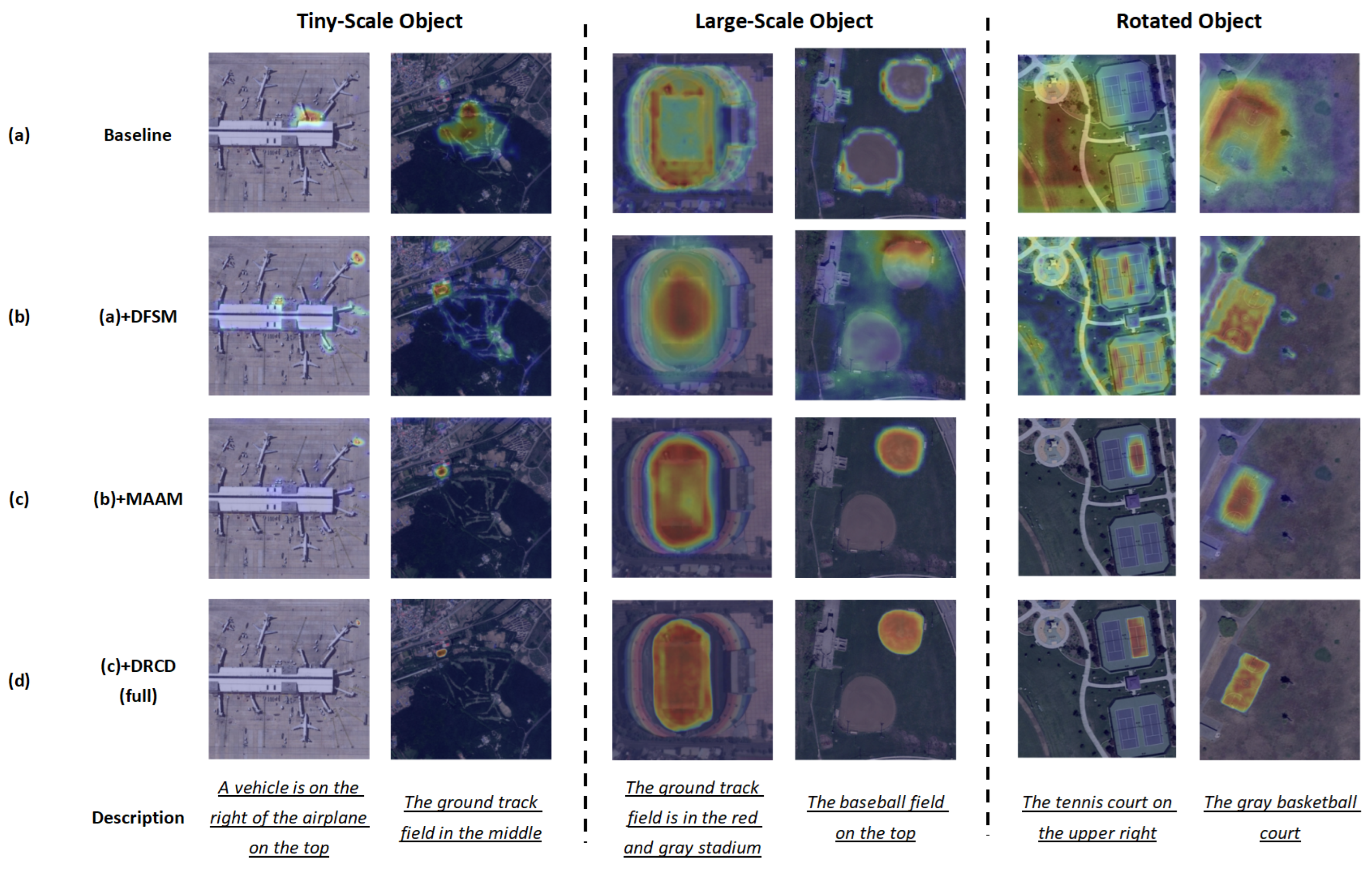

4.3. Ablation Study

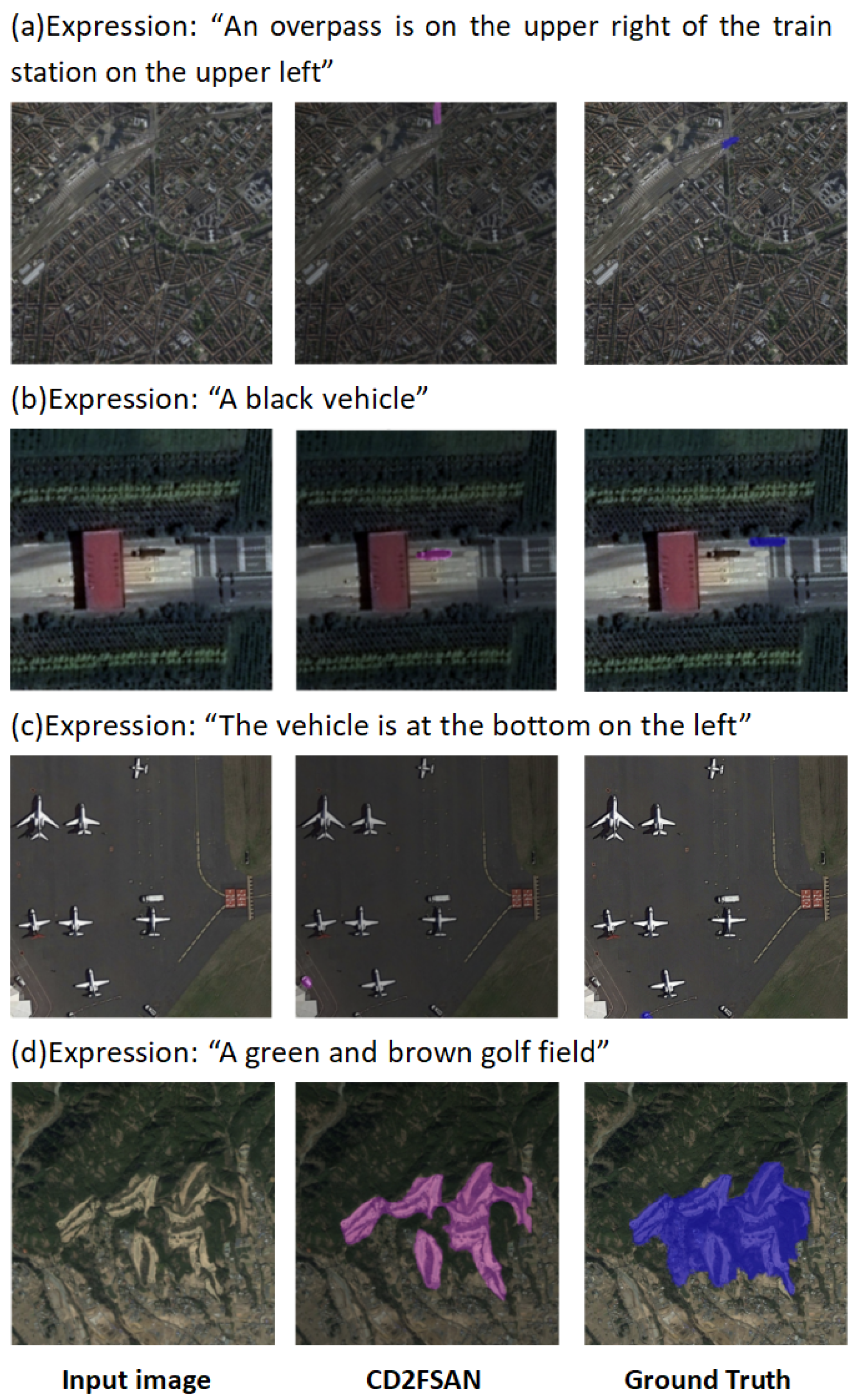

4.4. Visualization and Qualitative Analysis

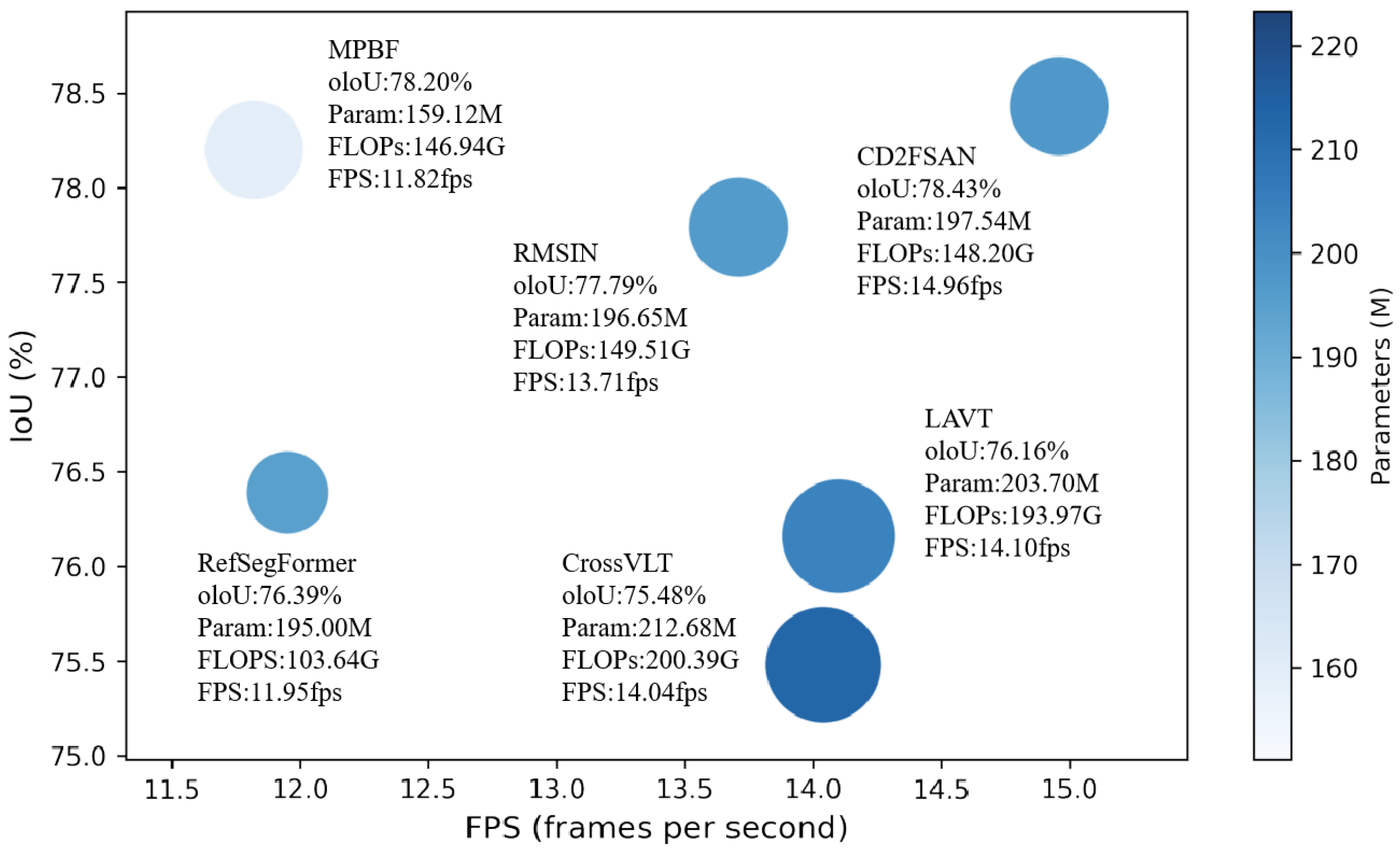

4.5. Efficiency and Complexity Analysis

5. Conclusion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Yuan, Z.; Mou, L.; Hua, Y.; Zhu, X.X. RRSIS: Referring Remote Sensing Image Segmentation. IEEE Transactions on Geoscience and Remote Sensing 2024, 62, 1–12.

2. Liu, S.; Ma, Y.; Zhang, X.; Wang, H.; Ji, J.; Sun, X.; Ji, R. Rotated Multi-Scale Interaction Network for Referring Remote Sensing Image Segmentation. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2024; pp. 26648–26658.

3. Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS Journal of Photogrammetry and Remote Sensing 2016, 117, 11–28.

4. Cheng, J.; Deng, C.; Su, Y.; An, Z.; Wang, Q. Methods and datasets on semantic segmentation for Unmanned Aerial Vehicle remote sensing images: A review. ISPRS Journal of Photogrammetry and Remote Sensing 2024, 211, 1–34.

5. Yuan, X.; Shi, J.; Gu, L. A review of deep learning methods for semantic segmentation of remote sensing imagery. Expert Systems with Applications 2021, 169, 114417.

6. Duan, L.; Lafarge, F. Towards Large-Scale City Reconstruction from Satellites. In Proceedings of the Computer Vision – ECCV 2016; Leibe, B.; Matas, J.; Sebe, N.; Welling, M., Eds.; Cham, 2016; pp. 89–104.

7. Abid, S.K.; Chan, S.W.; Sulaiman, N.; Bhatti, U.; Nazir, U. Present and Future of Artificial Intelligence in Disaster Management. In Proceedings of the 2023 International Conference on Engineering Management of Communication and Technology (EMCTECH); 2023; pp. 1–7.

8. Zhao, W.; Lyu, R.; Zhang, J.; Pang, J.; Zhang, J. A fast hybrid approach for continuous land cover change monitoring and semantic segmentation using satellite time series. International Journal of Applied Earth Observation and Geoinformation 2024, 134, 104222.

9. Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sensing of Environment 2020, 236, 111402.

10. Ji, R.; Tan, K.; Wang, X.; Tang, S.; Sun, J.; Niu, C.; Pan, C. PatchOut: A novel patch-free approach based on a transformer-CNN hybrid framework for fine-grained land-cover classification on large-scale airborne hyperspectral images. International Journal of Applied Earth Observation and Geoinformation 2025, 138, 104457.

11. Ding, H.; Liu, C.; Wang, S.; Jiang, X. Vision-Language Transformer and Query Generation for Referring Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV); 2021; pp. 16321–16330.

12. Yu, L.; Lin, Z.; Shen, X.; Yang, J.; Lu, X.; Bansal, M.; Berg, T.L. MAttNet: Modular Attention Network for Referring Expression Comprehension. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2018.

13. Yang, Z.; Wang, J.; Tang, Y.; Chen, K.; Zhao, H.; Torr, P.H. LAVT: Language-Aware Vision Transformer for Referring Image Segmentation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2022; pp. 18134–18144.

14. Liu, S.; Ma, Y.; Zhang, X.; Wang, H.; Ji, J.; Sun, X.; Ji, R. Rotated Multi-Scale Interaction Network for Referring Remote Sensing Image Segmentation. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2024; pp. 26648–26658.

15. Jing, Y.; Kong, T.; Wang, W.; Wang, L.; Li, L.; Tan, T. Locate then Segment: A Strong Pipeline for Referring Image Segmentation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2021; pp. 9853–9862.

16. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning; Meila, M.; Zhang, T., Eds.; PMLR, 18–24 July 2021; Proceedings of Machine Learning Research, Vol. 139, pp. 8748–8763.

17. Wang, Z.; Lu, Y.; Li, Q.; Tao, X.; Guo, Y.; Gong, M.; Liu, T. CRIS: CLIP-Driven Referring Image Segmentation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2022; pp. 11676–11685.

18. Mao, J.; Huang, J.; Toshev, A.; Camburu, O.; Yuille, A.; Murphy, K. Generation and Comprehension of Unambiguous Object Descriptions. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016; pp. 11–20.

19. Hu, R.; Rohrbach, M.; Darrell, T. Segmentation from Natural Language Expressions. In Proceedings of the Computer Vision – ECCV 2016; Leibe, B.; Matas, J.; Sebe, N.; Welling, M., Eds.; Cham, 2016; pp. 108–124.

20. Li, R.; Li, K.; Kuo, Y.C.; Shu, M.; Qi, X.; Shen, X.; Jia, J. Referring Image Segmentation via Recurrent Refinement Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2018; pp. 5745–5753.

21. Ye, L.; Rochan, M.; Liu, Z.; Wang, Y. Cross-Modal Self-Attention Network for Referring Image Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2019; pp. 10494–10503.

22. Hu, Z.; Feng, G.; Sun, J.; Zhang, L.; Lu, H. Bi-Directional Relationship Inferring Network for Referring Image Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020; pp. 4423–4432.

23. Shi, H.; Li, H.; Meng, F.; Wu, Q. Key-Word-Aware Network for Referring Expression Image Segmentation. In Proceedings of the Computer Vision – ECCV 2018; Ferrari, V.; Hebert, M.; Sminchisescu, C.; Weiss, Y., Eds.; Cham, 2018; pp. 38–54.

24. Kamath, A.; Singh, M.; LeCun, Y.; Synnaeve, G.; Misra, I.; Carion, N. MDETR - Modulated Detection for End-to-End Multi-Modal Understanding. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV); 2021; pp. 1760–1770.

25. Ding, H.; Liu, C.; Wang, S.; Jiang, X. VLT: Vision-Language Transformer and Query Generation for Referring Segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 2022, 45, 7900–7916.

26. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV); 2021; pp. 9992–10002.

27. Kim, N.; Kim, D.; Kwak, S.; Lan, C.; Zeng, W. ReSTR: Convolution-free Referring Image Segmentation Using Transformers. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2022; pp. 18124–18133.

28. Liu, J.; Ding, H.; Cai, Z.; Zhang, Y.; Kumar Satzoda, R.; Mahadevan, V.; Manmatha, R. PolyFormer: Referring Image Segmentation as Sequential Polygon Generation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2023; pp. 18653–18663.

29. Zhu, C.; Zhou, Y.; Shen, Y.; Luo, G.; Pan, X.; Lin, M.; Chen, C.; Cao, L.; Sun, X.; Ji, R. SeqTR: A Simple Yet Universal Network for Visual Grounding. In Proceedings of the Computer Vision – ECCV 2022; Avidan, S.; Brostow, G.; Cissé, M.; Farinella, G.M.; Hassner, T., Eds.; Cham, 2022; pp. 598–615.

30. Liu, C.; Ding, H.; Jiang, X. GRES: Generalized Referring Expression Segmentation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2023; pp. 23592–23601.

31. Quan, W.; Deng, P.; Wang, K.; Yan, D.M. CGFormer: ViT-Based Network for Identifying Computer-Generated Images With Token Labeling. IEEE Transactions on Information Forensics and Security 2024, 19, 235–250.

32. Yuan, Z.; Mou, L.; Hua, Y.; Zhu, X.X. RRSIS: Referring Remote Sensing Image Segmentation. IEEE Transactions on Geoscience and Remote Sensing 2024, 62, 1–12.

33. Wu, J.; Li, X.; Xu, S.; Yuan, H.; Ding, H.; Yang, Y.; Li, X.; Zhang, J.; Tong, Y.; Jiang, X.; et al. Towards Open Vocabulary Learning: A Survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 2024, 46, 5092–5113.

34. Dong, Z.; Sun, Y.; Liu, T.; Zuo, W.; Gu, Y. Cross-Modal Bidirectional Interaction Model for Referring Remote Sensing Image Segmentation. arXiv 2025, arXiv:2410.08613.

35. Lei, S.; Xiao, X.; Zhang, T.; Li, H.C.; Shi, Z.; Zhu, Q. Exploring Fine-Grained Image-Text Alignment for Referring Remote Sensing Image Segmentation. IEEE Transactions on Geoscience and Remote Sensing 2025, 63, 1–11.

36. Shi, L.; Zhang, J. Multimodal-Aware Fusion Network for Referring Remote Sensing Image Segmentation. IEEE Geoscience and Remote Sensing Letters 2025, 22, 1–5.

37. Chen, K.; Zhang, J.; Liu, C.; Zou, Z.; Shi, Z. RSRefSeg: Referring Remote Sensing Image Segmentation with Foundation Models. arXiv 2025, arXiv:2501.06809.

38. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. In Proceedings of the Advances in Neural Information Processing Systems; Ranzato, M.; Beygelzimer, A.; Dauphin, Y.; Liang, P.; Vaughan, J.W., Eds.; Curran Associates, Inc., 2021; Vol. 34, pp. 12077–12090.

39. Li, Y.; Li, Z.Y.; Zeng, Q.; Hou, Q.; Cheng, M.M. Cascade-CLIP: cascaded vision-language embeddings alignment for zero-shot semantic segmentation. In Proceedings of the 41st International Conference on Machine Learning (ICML'24); JMLR.org, 2024.

40. Cho, Y.; Yu, H.; Kang, S.J. Cross-Aware Early Fusion With Stage-Divided Vision and Language Transformer Encoders for Referring Image Segmentation. IEEE Transactions on Multimedia 2024, 26, 5823–5833.

41. Li, R.; Li, K.; Kuo, Y.C.; Shu, M.; Qi, X.; Shen, X.; Jia, J. Referring Image Segmentation via Recurrent Refinement Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2018; pp. 5745–5753.

42. Hu, Z.; Feng, G.; Sun, J.; Zhang, L.; Lu, H. Bi-Directional Relationship Inferring Network for Referring Image Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020; pp. 4423–4432.

43. Ye, L.; Rochan, M.; Liu, Z.; Wang, Y. Cross-Modal Self-Attention Network for Referring Image Segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); Los Alamitos, CA, USA, 2019; pp. 10494–10503.

| Metric | Model | Publication | Visual Encoder | Text Encoder | RefSegRS Val | RefSegRS Test | RRSIS-D Val | RRSIS-D Test | RISBench Val | RISBench Test |
|---|---|---|---|---|---|---|---|---|---|---|
| oIoU | RRN [41] | CVPR-18 | ResNet-101 | LSTM | 69.24 | 65.06 | 66.53 | 66.43 | 47.28 | 49.67 |
| oIoU | BRINet [42] | CVPR-20 | ResNet-101 | LSTM | 61.59 | 58.22 | 70.73 | 69.68 | 46.27 | 48.73 |
| oIoU | ETRIS [43] | ICCV-23 | ResNet-101 | CLIP | 72.89 | 65.96 | 72.75 | 71.06 | 64.09 | 67.61 |
| oIoU | CRIS [17] | CVPR-22 | CLIP | CLIP | 72.14 | 65.87 | 70.98 | 70.46 | 66.26 | 69.11 |
| oIoU | CrossVLT [40] | TMM-23 | Swin-B | BERT | 76.12 | 69.73 | 76.25 | 75.48 | 69.77 | 74.33 |
| oIoU | LAVT [13] | CVPR-22 | Swin-B | BERT | 78.50 | 71.86 | 76.27 | 76.16 | 69.39 | 74.15 |
| oIoU | LGCE [1] | TGRS-24 | Swin-B | BERT | 83.56 | 76.81 | 76.68 | 76.34 | 68.81 | 73.87 |
| oIoU | RMSIN [14] | CVPR-24 | Swin-B | BERT | 74.40 | 68.31 | 78.27 | 77.79 | 69.51 | 74.09 |
| oIoU | FIANet [35] | TGRS-25 | Swin-B | BERT | 85.51 | 78.28 | 76.77 | 76.05 | - | - |
| oIoU | CroBIM [34] | arXiv-25 | Swin-B | BERT | 78.85 | 72.30 | 76.24 | 76.37 | 69.08 | 73.61 |
| oIoU | CD2FSAN (Ours) | - | CLIP | CLIP | 87.04 | 79.88 | 79.04 | 78.43 | 70.32 | 74.84 |
| mIoU | RRN [41] | CVPR-18 | ResNet-101 | LSTM | 50.81 | 41.88 | 46.06 | 45.64 | 42.65 | 43.18 |
| mIoU | BRINet [42] | CVPR-20 | ResNet-101 | LSTM | 38.73 | 31.51 | 51.41 | 49.45 | 41.54 | 42.91 |
| mIoU | ETRIS [43] | ICCV-23 | ResNet-101 | CLIP | 54.03 | 43.11 | 55.21 | 54.21 | 51.13 | 53.06 |
| mIoU | CRIS [17] | CVPR-22 | CLIP | CLIP | 53.74 | 43.26 | 50.75 | 49.69 | 53.64 | 55.18 |
| mIoU | CrossVLT [40] | TMM-23 | Swin-B | BERT | 55.27 | 42.81 | 59.87 | 58.48 | 61.54 | 62.84 |
| mIoU | LAVT [13] | CVPR-22 | Swin-B | BERT | 61.53 | 47.40 | 57.72 | 56.82 | 60.45 | 61.93 |
| mIoU | LGCE [1] | TGRS-24 | Swin-B | BERT | 72.51 | 59.96 | 60.16 | 59.37 | 60.44 | 62.13 |
| mIoU | RMSIN [14] | CVPR-24 | Swin-B | BERT | 54.24 | 42.63 | 65.10 | 64.20 | 61.78 | 63.07 |
| mIoU | FIANet [35] | TGRS-25 | Swin-B | BERT | 80.61 | 68.63 | 62.99 | 63.64 | - | - |
| mIoU | CroBIM [34] | arXiv-25 | Swin-B | BERT | 65.79 | 52.69 | 63.99 | 64.24 | 67.52 | 67.32 |
| mIoU | CD2FSAN (Ours) | - | CLIP | CLIP | 76.95 | 66.96 | 66.47 | 65.37 | 68.36 | 69.74 |

| Method | oIoU | mIoU | Pr@0.5 | Pr@0.6 | Pr@0.7 | Pr@0.8 | Pr@0.9 |
|---|---|---|---|---|---|---|---|
| LAVT [13] | 76.27 | 57.72 | 65.23 | 58.79 | 50.29 | 40.11 | 23.05 |
| CRIS [17] | 70.98 | 50.75 | 56.44 | 47.87 | 39.77 | 29.31 | 11.84 |
| LGCE [1] | 76.68 | 60.16 | 68.10 | 60.61 | 51.45 | 42.34 | 23.85 |
| FIANet [35] | 76.77 | 62.99 | 74.20 | 66.15 | 54.08 | 41.27 | 22.30 |
| RMSIN [14] | 78.27 | 65.10 | 68.39 | 61.72 | 52.24 | 41.44 | 23.16 |
| CroBIM [34] | 76.24 | 63.99 | 74.20 | 66.15 | 54.08 | 41.38 | 22.30 |
| CD2FSAN (Ours) | 79.04 | 66.47 | 78.28 | 70.11 | 56.78 | 41.38 | 20.57 |

| Category | LAVT [13] | RMSIN [14] | LGCE [1] | FIANet [35] | CD2FSAN (Ours) |
|---|---|---|---|---|---|
| Airport | 66.44 | 68.08 | 68.11 | 68.66 | 68.61 |
| Golf field | 56.53 | 56.11 | 56.43 | 57.07 | 64.22 |
| Expressway service area | 76.08 | 76.68 | 71.19 | 77.35 | 72.31 |
| Baseball field | 68.56 | 66.93 | 70.93 | 70.44 | 88.43 |
| Stadium | 81.77 | 83.09 | 84.90 | 84.87 | 84.43 |
| Ground track field | 81.84 | 81.91 | 82.54 | 82.00 | 83.06 |
| Storage tank | 71.33 | 73.65 | 73.33 | 76.99 | 74.89 |
| Basketball court | 70.71 | 72.26 | 74.37 | 74.86 | 88.43 |
| Chimney | 65.54 | 68.42 | 68.44 | 68.41 | 79.85 |
| Tennis court | 74.98 | 76.68 | 75.63 | 78.48 | 72.88 |
| Overpass | 66.17 | 70.14 | 67.67 | 70.01 | 65.63 |
| Train station | 57.02 | 62.67 | 58.19 | 61.30 | 68.32 |
| Ship | 63.47 | 64.64 | 63.48 | 65.96 | 68.32 |
| Expressway toll station | 63.01 | 65.71 | 61.63 | 64.82 | 72.32 |
| Dam | 61.61 | 68.70 | 64.54 | 71.31 | 66.11 |
| Harbor | 60.05 | 60.40 | 60.47 | 62.03 | 57.77 |
| Bridge | 30.48 | 36.74 | 34.24 | 37.94 | 43.53 |
| Vehicle | 42.60 | 47.63 | 43.12 | 49.66 | 49.72 |
| Windmill | 35.32 | 41.99 | 40.76 | 46.72 | 63.76 |
| Average | 62.82 | 65.39 | 64.21 | 66.78 | 69.98 |

| # | Method | Val P@0.5 | Val P@0.6 | Val P@0.7 | Val P@0.8 | Val P@0.9 | Val mIoU | Test P@0.5 | Test P@0.6 | Test P@0.7 | Test P@0.8 | Test P@0.9 | Test mIoU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (a) | Baseline | 45.98 | 35.92 | 26.26 | 15.86 | 4.48 | 42.59 | 39.73 | 29.93 | 22.01 | 12.41 | 4.14 | 38.58 |
| (b) | (a) + RFS(4,7,12) | 44.19 | 34.02 | 24.71 | 14.65 | 3.67 | 41.75 | 38.67 | 28.51 | 18.10 | 10.34 | 2.12 | 37.39 |
| (c) | (a) + RFS(6,9,12) | 50.97 | 40.45 | 31.43 | 19.31 | 6.14 | 46.21 | 45.51 | 36.09 | 26.61 | 15.86 | 4.31 | 43.66 |
| (d) | (a) + DFSM | 57.82 | 51.32 | 42.53 | 32.30 | 15.40 | 50.62 | 52.24 | 42.53 | 31.15 | 12.53 | 5.74 | 48.22 |
| (e) | (d) + Transformer | 71.84 | 62.29 | 51.21 | 37.59 | 17.30 | 60.31 | 71.65 | 61.96 | 50.47 | 35.94 | 18.04 | 59.18 |
| (f) | (d) + MAAM | 76.32 | 66.60 | 54.82 | 40.80 | 19.71 | 64.87 | 72.39 | 62.37 | 50.83 | 36.11 | 17.81 | 62.55 |
| (g) | (f) + DRCD (full) | 78.28 | 70.11 | 56.78 | 41.38 | 20.57 | 66.47 | 73.14 | 63.46 | 51.19 | 36.37 | 19.42 | 65.37 |
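The incremental gains implied by the ablation table can be computed directly. The mIoU values below are copied from rows (a), (d), (e), (f), and (g). Rows (e) and (f) both extend (d), so their difference compares MAAM with a generic Transformer block on the same base. The dictionary and function names are our own.

```python
# mIoU (%) copied from the ablation table; keys are the row labels.
VAL_MIOU = {"a": 42.59, "d": 50.62, "e": 60.31, "f": 64.87, "g": 66.47}
TEST_MIOU = {"a": 38.58, "d": 48.22, "e": 59.18, "f": 62.55, "g": 65.37}

# (description, new row, reference row)
STEPS = [
    ("DFSM over baseline", "d", "a"),
    ("MAAM over DFSM", "f", "d"),
    ("MAAM vs. generic Transformer", "f", "e"),
    ("DRCD over MAAM (full model)", "g", "f"),
]


def gain(table: dict, new: str, ref: str) -> float:
    """mIoU difference in percentage points, rounded to the table's precision."""
    return round(table[new] - table[ref], 2)


if __name__ == "__main__":
    for name, new, ref in STEPS:
        print(f"{name:30s} val {gain(VAL_MIOU, new, ref):+6.2f}  "
              f"test {gain(TEST_MIOU, new, ref):+6.2f}")
```

Among the components of the full model, MAAM gives the largest single jump (+14.25 val / +14.33 test mIoU over (d)). It also outperforms the Transformer variant by +4.56 / +3.37. DFSM contributes +8.03 / +9.64 over the baseline, and DRCD adds a further +1.60 / +2.82.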

| Method | Params (M) | FLOPs (G) | oIoU (%) | FPS |
|---|---|---|---|---|
| LAVT | 203.70 | 193.97 | 76.16 | 14.10 |
| CrossVLT | 212.68 | 200.39 | 75.48 | 14.04 |
| RefSegFormer | 195.00 | 103.64 | 76.39 | 11.95 |
| RMSIN | 196.65 | 149.51 | 77.79 | 13.71 |
| MPBF | 159.12 | 146.94 | 78.20 | 11.82 |
| CD2FSAN (Ours) | 197.54 | 148.20 | 78.43 | 14.96 |
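The efficiency table reports FPS, but this section does not state the measurement protocol (hardware, batch size, input resolution). A common way to obtain such numbers, sketched here as an assumption rather than the authors' setup, is to warm the model up and then time a fixed number of forward passes. `measure_fps` and its defaults are hypothetical. On a GPU, `step` must wait for the forward pass to finish, for example by calling `torch.cuda.synchronize()` inside it. Otherwise asynchronous kernel launches make FPS look higher than it is.

```python
import time
from typing import Callable


def measure_fps(step: Callable[[], object], warmup: int = 10, iters: int = 100) -> float:
    """Return forward passes per second for `step`, measured after warm-up.

    `step` should run one complete inference (e.g. one image-text pair)
    and return only when the result is ready.
    """
    for _ in range(warmup):
        step()  # warm-up: lazy initialisation, caches, autotuning
    start = time.perf_counter()
    for _ in range(iters):
        step()
    elapsed = time.perf_counter() - start
    return iters / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Stand-in workload; replace with a call to the model under test.
    print(f"{measure_fps(lambda: sum(range(100_000))):.1f} calls/s")
```

Used this way, FPS is single-sample throughput. Figures like those in the table are only comparable across methods measured on the same hardware and at the same input size.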

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).