Introduction

Attractiveness refers to the degree to which a person’s sexual appeal or beauty captures the perceiver’s attention and elicits positive emotional experiences and a desire to approach (Hill et al., 2017; Rhodes, 2006). Individuals who are physically attractive or have an appealing voice are commonly believed to possess more socially desirable, positive personality traits, reflecting the stereotype of “what is beautiful is good” (Dion et al., 1972; Zuckerman et al., 1990). In turn, personality traits can also influence evaluations of facial and vocal attractiveness; that is, individuals with positive personality traits are often perceived as more attractive, aligning with the stereotype of “what is good is beautiful” (Langlois et al., 2000; Wu et al., 2022). This stereotype represents the reverse direction of the halo effect and evolved from the notion that “what is beautiful is good”, which is supported by meta-analytic evidence (Eagly et al., 1991). People may infer others’ personality traits through external features such as facial, vocal, and body attractiveness. Similarly, ascribed personality traits, such as morality (which is often considered a dimension of personality traits in social perception contexts; McAdams & Mayukha, 2024), can influence the perceived attractiveness of external features. However, the perceptual or cognitive processes through which personality trait information influences perceived attractiveness are not well understood. By examining how personality traits modulate perceptions of attractiveness across facial and vocal modalities, this work seeks to expand our understanding of the cognitive and neural mechanisms underlying these stereotypes. The present study investigated this question by combining a Stroop-like paradigm with the event-related potential (ERP) technique.

The Perception of Facial Attractiveness and Its Relationship with Personality Traits

ERP studies have shown that facial attractiveness affects brain responses such as the P1, N170, P2, and LPC components (Halit et al., 2000; Marzi & Viggiano, 2010; Pizzagalli et al., 2002; Schacht et al., 2008), with highly attractive faces eliciting a larger N170 than less attractive faces (Marzi & Viggiano, 2010), indicating more pronounced structural encoding of faces and motivated attention. Additionally, interacting with attractive individuals, or anticipating such an interaction, triggers positive emotional responses and a desire for a closer relationship (Pataki & Clark, 2004).

Facial attractiveness influences various social and psychological outcomes, including mate selection, kin relationships, and career prospects (Busetta et al., 2013), as well as the inference of certain personality traits (). According to the “what is beautiful is good” stereotype, attractive individuals are attributed more positive behaviors, characteristics, and personality traits and are treated more favorably (Langlois et al., 2000). For example, teachers’ evaluations of students across multiple dimensions, such as academic potential and intelligence, and students’ evaluations of teachers in areas such as organizational skills and classroom management are influenced by attractiveness levels (Liu et al., 2013). Preferences for attractiveness also exist in the labor market, social interactions, and even in politics (Kamenica, 2012; Johnston, 2010; Berggren et al., 2010; Rosar et al., 2008).

Personality traits are amongst the most important factors influencing mate selection, cooperation, and decision-making. Li et al. (2024) and Zhang et al. (2014) have shown that positive personality traits can increase the acceptance of unfair proposals and improve the rating of facial attractiveness. Research by Little et al. (2006) and Niimi and Goto (2023) explored how individuals’ expectation of their partner’s personality traits affected their preference for faces; they found that expected personality traits could significantly affect the attractiveness evaluation of faces. He et al. (2022) reported that faces related to prosocial moral vignettes were considered more attractive, and further supported the bidirectional relationship between moral character and aesthetic judgment.

The Perception of Vocal Attractiveness and Its Relationship with Personality Traits

ERP studies on vocal attractiveness have found that, compared to low-attractive voices, high-attractive voices elicited larger N1 amplitudes (Zhang et al., 2020; Shang & Liu, 2022; Yuan et al., 2024) and LPC amplitudes (Zhang et al., 2020; Shang & Liu, 2022) in both conscious vocal attractiveness judgments and passive listening. These studies suggest that vocal attractiveness processing involves early, rapid, and mandatory processes as well as late, strategy-based aesthetic decisions.

Voice also plays a role in shaping impressions related to social evaluations (Mileva et al., 2018). When visual cues are absent, such as hearing voices on the radio or telephone, listeners make judgments about the speaker based on vocal information (Borkenau & Liebler, 1992). Listeners exhibit high consistency in social evaluations and personality judgments of others even when faced with different speech content or in different language contexts (Mahrholz et al., 2018; McAleer et al., 2014). This phenomenon occurs partly because vocal acoustic information is correlated with actual personality traits (McAleer et al., 2014), and we can make judgments based on non-visual cues alone (for a review, see Groyecka et al., 2017). Indeed, vocal acoustic characteristics influence personality trait attribution, such as trustworthiness (Belin et al., 2017). During first impression formation vocal attractiveness was positively correlated with judgments of the speaker’s confidence, openness, and conscientiousness (Zuckerman et al., 1991). For example, when experts are asked to provide professional advice, they lower their average pitch and vocal tract resonance, presumably in order to obtain favorable evaluations in social environments (Groyecka et al., 2017).

Research on the influence of ascribed personality traits on vocal attractiveness is relatively scarce. One study examined the bidirectional relationship between vocal attractiveness and morality in the impression formation process (Wu et al., 2022). The results revealed a tendency to rate the voices of individuals associated with positive moral impressions as more attractive, in addition to associating highly attractive voices with better moral qualities. While these findings illustrate a harmonious bidirectional influence when perceptual and semantic cues align, a key question remains: How are evaluations formed when vocal attractiveness and ascribed personality traits provide conflicting signals, such as an appealing voice paired with negative moral descriptors?

One useful framework for understanding this apparent contradiction is provided by cognitive conflict and conflict-monitoring theories (Botvinick et al., 2001; Stürmer et al., 2009). This framework posits that conflicts in information processing, such as mismatches between perceptual attractiveness signals and semantic trait valence, trigger an evaluative monitoring system (potentially involving the anterior cingulate cortex, ACC) that detects the demand for control and leads to compensatory adjustments in attention and integration. According to this account, conflict can arise when concurrently active representations imply different evaluations; in our study, this is the case when externally derived perceptual signals (e.g., voice or face attractiveness) and internally represented semantic trait information favor different responses. When both types of cues are presented simultaneously (for example, a perceptually attractive face paired with a negative personality descriptor), these inputs create a representational mismatch that triggers conflict detection and resolution processes (Botvinick et al., 2001; Festinger, 1957). Such a representational mismatch constitutes a form of cognitive conflict, which is thought to be detected by the ACC and subsequently resolved through the recruitment of prefrontal control systems. This framework predicts that external cues, given their perceptual salience, often dominate initial impressions, while internal cues exert stronger influence when perceptual information is ambiguous or uncertain. Neurocognitively, this conflict-resolution process is indexed by distinct ERP signatures: semantic mismatch arising from internal trait information is reflected in N400 modulations (Kutas & Federmeier, 2011), whereas later evaluative re-processing and control-related adjustments are reflected in late positive potentials (LPCs) associated with motivated attention (e.g., Hajcak et al., 2009).
In this way, conflict-monitoring theory provides a mechanistic account of how external perceptual signals and internal personality information are weighted and integrated in attractiveness evaluations.

The Present Study

Previous research has found that facial/vocal attractiveness and personality traits mutually influence each other. However, the neural mechanisms underlying the influence of (putative) personality traits on attractiveness perception remain unclear because pertinent studies are lacking. In this study, we used electroencephalography (EEG) and a Stroop-like paradigm to investigate the neurocognitive processes involved in the influence of personality traits on perceiving facial and vocal attractiveness. The classic Stroop paradigm is a widely used task to measure cognitive conflict arising from interference between relevant and irrelevant stimulus dimensions (Kornblum et al., 1990). Stroop-like paradigms are variations of this classic paradigm, adapted to probe cognitive conflict in other domains, such as semantic, emotional, or cross-modal incongruencies (e.g., spatial Stroop tasks where stimulus location conflicts with content). In the present study, we employed a Stroop-like paradigm in which facial or vocal stimuli of high or low attractiveness were presented simultaneously with positive or negative personality trait words, analogous to the classic Stroop task’s simultaneous presentation of conflicting dimensions. Trait words presented concurrently with face/voice stimuli are expected to automatically activate semantic associations or valuations (e.g., “honest” with positive), which can be congruent or incongruent with the perceptual attractiveness cue, thereby producing interference analogous to Stroop interference.

Our experiment included two tasks. In the face task, we presented participants with face stimuli of high or low attractiveness along with written positive or negative personality trait words and required them to judge facial attractiveness. The voice task was identical to the face task except that voice stimuli of high or low attractiveness were presented and vocal attractiveness was to be judged. Both tasks included congruent conditions (high facial/vocal attractiveness-positive personality traits, low facial/vocal attractiveness-negative personality traits) and incongruent conditions (high facial/vocal attractiveness-negative personality traits, low facial/vocal attractiveness-positive personality traits).

In terms of behavioral outcomes, based on previous research on stereotypes, we hypothesized that personality trait words semantically congruent with the attractiveness levels would facilitate attractiveness judgments, whereas incongruent trait information would counteract these evaluations. Furthermore, as a strong signal, the face may be relatively less affected by this effect. As for the ERP results, according to previous studies (e.g., Marzi & Viggiano, 2010) we predicted that, compared to low-attractive faces, high-attractive faces would elicit larger N170 and LPC amplitudes, but low-attractive faces would elicit larger P1 amplitudes compared to high-attractive faces (Halit et al., 2000). Similarly, compared to low-attractive voices, high-attractive voices should elicit larger N1, P2, and LPC amplitudes (Zhang et al. 2020; Shang & Liu, 2022; Yuan et al., 2024). More importantly, we expected incongruent conditions to elicit greater cognitive conflicts compared to congruent conditions, enhancing N400 amplitudes (e.g., Zahedi et al., 2019). The N400 typically begins 200-300 ms after the presentation of visual or auditory stimuli and peaks around 400 ms (Lau et al., 2008), and is thought to be triggered among others by semantic conflict (Zahedi et al., 2019), conflict control (Pan et al., 2020) and cognitive resource allocation (Paulmann & Kotz, 2008). Many studies have found that incongruent (vs. congruent) stimuli elicit larger N400 amplitudes, and this effect has been observed across different types of stimuli, such as speech, words, faces, and pictures (Ishii et al., 2010; Lau et al., 2008). Based on the conflict-monitoring account and prior ERP findings, we predicted that incongruent pairings would induce greater semantic/representational conflict — manifesting as enhanced N400 amplitudes — followed by increased LPC amplitudes reflecting control-related re-evaluation. 
If a positive personality trait is presented, it is usually associated with high attractiveness (according to stereotypes) and would facilitate the corresponding response. However, when the face/voice is unattractive, the response primed by a positive personality trait is incongruent with the required response. Moreover, because external perceptual cues (especially faces) may be highly salient and dominate initial processing, semantic conflict may be resolved later for faces (producing stronger LPC effects), whereas vocal attractiveness, being comparatively more susceptible to semantic/contextual information, may more readily elicit early semantic conflict indexed by a larger N400 (Liu et al., 2023).

Previous research has found that gender affects attractiveness preferences (O’Connor et al., 2013; Feinberg et al., 2011). Specifically, men are more inclined to be attracted to attractive female faces or voices, while women prefer attractive male faces or voices and, furthermore, women who prefer male faces tend to also prefer male voices (O’Connor et al., 2012). Therefore, in order to reduce the complexity of the experimental design, we decided to recruit only female participants and to use only male faces and male voice stimuli.

2. Methods

2.1. Participants

Based on previous research (Thiruchselvam et al., 2016), the sample size was estimated using G*Power 3.1 (Faul et al., 2007). We specified an F-test for an ANOVA: “Repeated measures, within factors” (type of power analysis: a priori), set the effect size to f = 0.25 (medium), α = 0.05, power (1 − β) = 0.80, number of groups = 1 (within-subjects design), and number of measurements = 4 (four within-subject conditions). The correlation among repeated measures and the nonsphericity correction were left at their default values (correlation among repeated measures = 0.50 and nonsphericity correction ε = 1). Under these settings, G*Power indicated that a sample of approximately 23 participants (N ≈ 23) was required. A total of 30 female college students from Liaoning Normal University were recruited. All participants reported being heterosexual, having no history of mental illness, and having normal or corrected-to-normal hearing and vision. Three participants were excluded from the behavioral data analysis due to an error rate exceeding 50%. Due to equipment failure, EEG data from two additional participants were not recorded, and EEG data from two further participants were excluded due to low quality (low number of valid trials after preprocessing and excessive artifacts). Consequently, the final sample for data analysis included 23 female participants with an average age of 22.12 ± 1.92 years. The experiment was approved by the Research Ethics Committee of Liaoning Normal University. All participants read and signed an informed consent form before the experiment and received compensation thereafter.
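For orientation, the quantities that enter this kind of a priori calculation can be written out explicitly. The expressions below follow the G*Power documentation for the “Repeated measures, within factors” ANOVA as we understand it; they are a sketch for checking the inputs, not a reproduction of the reported analysis. The symbols m (number of measurements), k (number of groups), ρ (correlation among repeated measures), and λ (noncentrality parameter) are notation introduced here.

```latex
\lambda = \frac{f^{2}\, N\, m\, \varepsilon}{1-\rho},
\qquad
df_{1} = (m-1)\,\varepsilon,
\qquad
df_{2} = (N-k)\,(m-1)\,\varepsilon
```

With f = 0.25, m = 4, ρ = 0.50, ε = 1, and k = 1, this gives λ = 0.5 N, df₁ = 3, and df₂ = 3(N − 1); for N = 23, λ = 11.5 and df₂ = 66. Power is then the probability that a noncentral F variable with these parameters exceeds the critical F value at α = 0.05.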

2.2. Stimuli

The stimuli included voice recordings, face images, and personality trait words. All voice recordings were selected from our own database and consisted of the emotionally neutral Chinese words “历史 (history)”, “事物 (things)”, and “心脏 (heart)”. The recordings had been made in a quiet environment using a high-quality microphone (Bluebird SL, Blue Microphones) and Adobe Audition CS6 software, with a sampling rate of 44,100 Hz at a bit rate of 32 kbps, and stored in .wav format. Praat software was used for editing: only the voiced segments were retained, loudness was standardized at 70 dB, and recordings with noticeable background noise, mispronunciations, or unnatural speech were removed. F0 and duration were not equalized in order to preserve attractiveness cues. For the experiment, 150 voice recordings from 50 male speakers were selected.

Photographs of faces were partly taken from previous research (Yang et al., 2015) and partly newly taken, resulting in 80 images of adult male faces. All portraits were taken from a frontal view, with eyes looking straight ahead and displaying a neutral expression. The images were first cropped to 260 × 300 pixels and converted to grayscale with a black background using Adobe Photoshop CS6, ensuring uniformity in external features (e.g., no hair, ears, or clothing visible). Brightness and contrast were then standardized using the SHINE toolbox in MATLAB (Willenbockel et al., 2010), which applies histogram equalization to match luminance distributions across images. This process minimized low-level perceptual confounds (e.g., variations in lighting or contrast) while preserving the natural structural features of the faces.

Since the formal experiment involved only female participants evaluating male stimuli, we selected high- and low-attractive stimuli based on ratings by female participants. A total of 23 females (aged 19-26 years, M = 21.75, SD = 2.12) who did not participate in the formal experiment rated the attractiveness of all faces and voices. The stimuli were presented using E-Prime software and rated on 7-point scales (1 = very unattractive, 7 = very attractive) based on subjective impressions. The final stimuli for the ERP experiment included 30 high-attractive (M = 5.46, SD = 0.28) and 30 low-attractive male voices (M = 3.29, SD = 0.42), as well as 30 high-attractive (M = 4.61, SD = 0.39) and 30 low-attractive male faces (M = 2.15, SD = 0.22). Statistical testing confirmed the differences between the high- and low-attractive stimuli for both voices (t = 23.38, p < 0.001) and faces (t = 29.77, p < 0.001).
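As a plausibility check, the reported t values can be approximated from the summary statistics alone. The sketch below assumes an item-wise, independent-samples comparison with 30 stimuli per category; the text does not state which test was run, so this is an assumption, and `welch_t` is a helper name introduced here. Because the means and SDs are rounded, the recomputed values are expected to be close to, but not identical with, the reported ones.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic computed from summary statistics only."""
    standard_error = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return (mean_a - mean_b) / standard_error


# Assumption: the test compared items, with 30 stimuli per category.
N_ITEMS = 30

t_voices = welch_t(5.46, 0.28, N_ITEMS, 3.29, 0.42, N_ITEMS)
t_faces = welch_t(4.61, 0.39, N_ITEMS, 2.15, 0.22, N_ITEMS)

print(f"voices: t = {t_voices:.2f}, faces: t = {t_faces:.2f}")
```

With equal group sizes, the Welch statistic coincides with the pooled-variance Student statistic, so the choice between the two does not affect this check.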

Personality trait words (adjectives, e.g., “honest”, “evil”) were selected partly from standardized personality trait databases (Kong et al., 2012) and partly from online sources, resulting in a total of 235 two-character Chinese words. Personality trait words were rated by a mixed-gender sample, as these descriptors are not gender-specific and our aim was to obtain normative semantic valence ratings. Forty participants (21 females, 19 males, aged 19-26 years, M = 21.89, SD = 2.12) were recruited to rate each word on valence, familiarity, and gender typicality. In the valence rating task, 1 indicated a very negative word, 4 indicated neutral, and 7 indicated very positive. In the familiarity rating task, 1 indicated very unfamiliar, 4 indicated moderate familiarity, and 7 indicated very familiar. In the gender typicality rating task, 1 indicated that the word described only females, 4 indicated that the word could describe both males and females, and 7 indicated that the word described only males. The mean and standard deviation were calculated for the valence, familiarity, and gender typicality dimensions. Finally, 90 negative words (valence: M = 2.55, SD = 0.34, familiarity: M = 5.11, SD = 0.22, gender typicality: M = 3.93, SD = 0.29) and 90 positive words (valence: M = 5.72, SD = 0.25, familiarity: M = 5.70, SD = 0.22, gender typicality: M = 4.00, SD = 0.32) were selected as the final experimental materials. Statistical testing showed that negative and positive words differed in valence (t = -72.13, p < 0.001) and familiarity (t = -18.05, p < 0.001), but not in gender typicality (t = -1.48, p = 0.14).

2.3. Procedure

The experiment was conducted in a quiet, comfortable, electromagnetically shielded room with soft lighting. Participants sat 75 cm in front of a computer monitor. The monitor was 17 inches with a resolution of 1920 × 1080 pixels, and stimuli subtended a visual angle of approximately 5°. Auditory stimuli were presented through headphones at a subjectively comfortable volume. Each participant completed two Stroop-like tasks, one for facial and one for vocal attractiveness judgements, in which they were asked to classify the face or voice as attractive or unattractive according to their own criteria (they were not informed about the preselection of stimuli) while ignoring the personality words. In each task, faces/voices and personality trait words were presented simultaneously to induce automatic semantic activation and thereby probe semantic interference in attractiveness judgments. Participants were to classify each face/voice according to attractiveness quickly and spontaneously (without much deliberation).
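To make the viewing geometry concrete, the physical extent that corresponds to a given visual angle follows from size = 2 · d · tan(θ/2). The sketch below applies this to the stated 75 cm distance and 5° angle; the text does not say whether the 5° refers to stimulus width or height, so the result should be read as the extent along whichever dimension was meant. The function name is introduced here for illustration.

```python
import math


def stimulus_size_cm(visual_angle_deg, viewing_distance_cm):
    """Physical extent on the screen that subtends the given visual angle."""
    half_angle_rad = math.radians(visual_angle_deg) / 2
    return 2 * viewing_distance_cm * math.tan(half_angle_rad)


size_cm = stimulus_size_cm(visual_angle_deg=5.0, viewing_distance_cm=75.0)
print(f"{size_cm:.2f} cm")
```

Under these values the stimulus extent comes out at roughly 6.5 cm on the screen.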

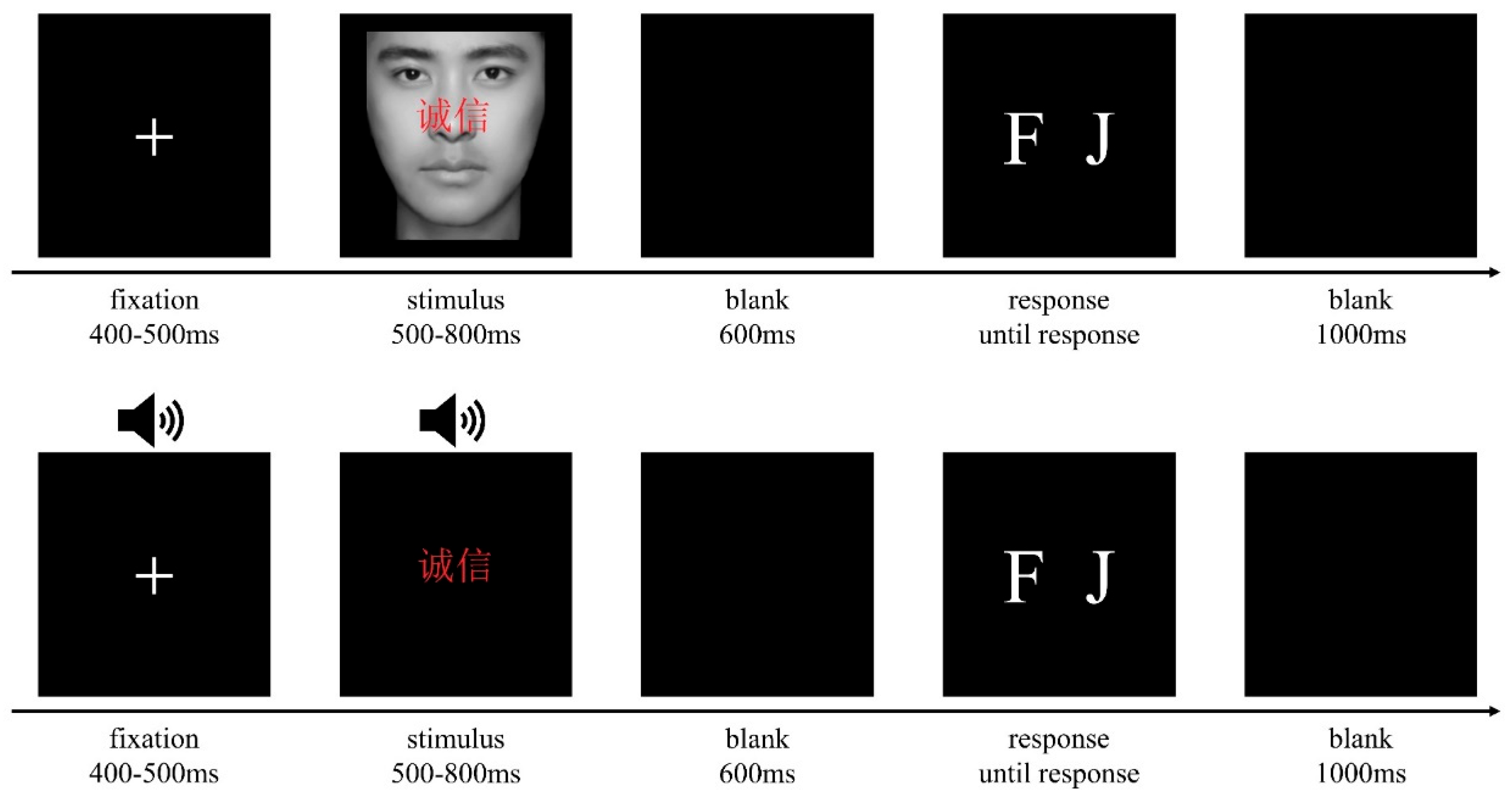

As shown in Figure 1, each trial began with a fixation point (accompanied by a “beep” sound in the voice task) that lasted 400-500 ms. This was followed by the simultaneous presentation of a face (or voice) and a personality trait word for 500-800 ms, with the personality word positioned above the nose of the face (and at the same screen location in the voice task). After a subsequent 600 ms blank screen, participants classified the attractiveness of each face/voice by pressing the “F” or “J” key on the keyboard, with key assignments counterbalanced between participants. A final 1000 ms blank screen was presented before the next trial began.

All face and voice stimuli were presented across four blocks, with two blocks each for faces and voices; the order of face and voice tasks was counterbalanced across participants. In both tasks, stimuli were pseudo-randomly assigned to two blocks, each containing 90 trials. In each task, the paired stimuli yielded four conditions with 45 trials each (e.g., in the voice task: high-attractive voices/positive traits, high-attractive voices/negative traits, low-attractive voices/positive traits, low-attractive voices/negative traits). A practice phase with 16 additional trials was included to ensure that participants understood the experimental procedure. The entire experiment took approximately one hour, including preparation, practice, and the main experiment.
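The design of one task can be sketched as a trial list: a 2 × 2 crossing of attractiveness and trait valence with 45 trials per cell, split into two blocks of 90. The code below is an illustration only. A plain seeded shuffle stands in for the pseudo-randomization, whose actual constraints are not described, and the function and field names are hypothetical.

```python
import random


def congruency(attractiveness, valence):
    """High + positive and low + negative are congruent; the rest are not."""
    matches = (attractiveness == "high") == (valence == "positive")
    return "congruent" if matches else "incongruent"


def build_task_trials(seed=0, trials_per_cell=45, n_blocks=2):
    """Return n_blocks lists of trials for one task (face or voice)."""
    rng = random.Random(seed)
    trials = [
        {"attractiveness": a, "valence": v, "congruency": congruency(a, v)}
        for a in ("high", "low")
        for v in ("positive", "negative")
        for _ in range(trials_per_cell)
    ]
    # Assumption: an unconstrained shuffle approximates the pseudo-random order.
    rng.shuffle(trials)
    block_size = len(trials) // n_blocks
    return [trials[i * block_size:(i + 1) * block_size] for i in range(n_blocks)]


blocks = build_task_trials()
print([len(block) for block in blocks])
```

Each task thus comprises 180 trials, half congruent and half incongruent.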

2.4. Data Collection and Analysis

The EEG signals were recorded using a 64-channel Ag/AgCl electrode cap (Brain Products, Germany) based on the extended international 10-20 system. Continuous EEG signals were recorded with FCz as the common online reference, a 100 Hz low-pass filter, and a sampling rate of 500 Hz. The impedance of all scalp electrodes was maintained below 5 kΩ. During offline analysis, the data were re-referenced to the averaged mastoid channels using EEGLAB v2023.1 in MATLAB (MathWorks, Natick, USA). Data were band-pass filtered from 0.01 Hz (24 dB/octave) to 30 Hz (24 dB/octave). Obvious artifacts such as EMG and head movements were manually removed. Further artifact correction was performed using independent component analysis (ICA) (Delorme & Makeig, 2004). Components were visually inspected and rejected if they clearly reflected ocular artifacts (e.g., blinks or eye movements, characterized by frontal topography and slow waveforms), muscular artifacts (e.g., high-frequency noise with peripheral topography), or other non-neural sources (e.g., line noise or electrode pops). Trials with amplitudes exceeding ±100 μV were excluded. The EEG data were segmented from 200 ms before to 800 ms after the onset of the face or voice stimuli, with a baseline correction based on the 200 ms pre-stimulus period. Only trials with participant responses consistent with the independent pre-ratings (labeled as “correct” for analytical purposes, as a proxy for agreement with baseline attractiveness categories) were retained for ERP averaging. Overall, approximately 74.1% of trials were retained.
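The segmentation, baseline correction, and amplitude criterion can be illustrated for a single channel in plain Python. This is a simplified sketch and not the EEGLAB pipeline that was used: it assumes the ±100 μV criterion is applied to the baseline-corrected epoch (the text does not specify this), and the function names are introduced here.

```python
SAMPLING_RATE_HZ = 500   # sampling rate stated in the text
PRE_MS, POST_MS = 200, 800
REJECT_UV = 100.0        # absolute amplitude criterion in microvolts


def ms_to_samples(ms, rate_hz=SAMPLING_RATE_HZ):
    """Convert a duration in milliseconds to a number of samples."""
    return int(round(ms * rate_hz / 1000))


def extract_epoch(signal_uv, onset_sample):
    """Cut one epoch around onset and subtract the pre-stimulus mean.

    Returns None if the epoch does not fit into the recording or if any
    baseline-corrected sample exceeds the rejection criterion.
    """
    n_pre, n_post = ms_to_samples(PRE_MS), ms_to_samples(POST_MS)
    start, stop = onset_sample - n_pre, onset_sample + n_post
    if start < 0 or stop > len(signal_uv):
        return None
    segment = signal_uv[start:stop]
    baseline = sum(segment[:n_pre]) / n_pre
    corrected = [value - baseline for value in segment]
    if max(abs(value) for value in corrected) > REJECT_UV:
        return None
    return corrected


# Toy signal: a 10 uV offset that steps up by 5 uV at stimulus onset.
signal = [10.0] * 300 + [15.0] * 700
epoch = extract_epoch(signal, onset_sample=300)
print(len(epoch), epoch[0], epoch[-1])
```

At 500 Hz, the −200 to 800 ms epoch spans 500 samples, of which the first 100 form the baseline.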

In the ERPs of both face and voice tasks, the N400 component was measured in the 300-500 ms time window at a fronto-central ROI (FCz, FC1, FC2, FC3, FC4, Cz, C1, C2, C3, C4) (Caldara et al., 2004; Zahedi et al., 2019). The LPC typically shows a parietal scalp distribution for attractive relative to non-attractive faces (Schacht et al., 2008; Werheid et al., 2007) and voices (Liu et al., 2023), although with some variability. In the present results, the differences between attractive and non-attractive stimuli in the late ERPs differed in topography and time window between the face and voice tasks. Therefore, in both the face and voice tasks, the LPC component was analyzed in two consecutive time windows: 500–650 ms and 650–800 ms. For the face task, mean amplitudes were extracted from a centro-parietal ROI (CP1, CP2, CPz, P1, P2, Pz), whereas for the voice task, a fronto-central ROI (FCz, FC1, FC2, FC3, FC4, Cz, C1, C2, C3, C4) was used.

Additionally, in the face task ERPs, we measured the P1 in the 90-140 ms time window at an occipital ROI (O1, Oz, O2) (Halit et al., 2000) and the N170 component in the 160-220 ms time window at an occipito-temporal ROI (O1, Oz, O2, POz, PO3, PO4, PO7, PO8) (Doi & Shinohara, 2015). In the voice task ERPs, the N1 component was measured in the 90-140 ms time window at a fronto-central ROI (F1, Fz, F2, FC1, FCz, FC2, C1, Cz, C2) (e.g., Yuan et al., 2024; Marzi & Viggiano, 2010); the P2 component was measured in the 160-230 ms time window at the same fronto-central ROI (F1, Fz, F2, FC1, FCz, FC2, C1, Cz, C2) (Zhang & Deng, 2012).
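Mean-amplitude extraction for a component amounts to averaging an ERP over a time window and over the channels of an ROI. The sketch below uses the P1 window and ROI as an example. It assumes epochs that start at −200 ms and are sampled at 500 Hz, treats the window as half-open (the end sample is excluded), and uses toy data; the function names are hypothetical.

```python
SAMPLING_RATE_HZ = 500
EPOCH_START_MS = -200  # epochs begin 200 ms before stimulus onset


def window_indices(start_ms, end_ms):
    """Map a time window in ms to half-open sample indices within the epoch."""
    first = int(round((start_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000))
    last = int(round((end_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000))
    return first, last


def mean_amplitude(erp_by_channel, roi, start_ms, end_ms):
    """Average over the time window first, then over the ROI channels."""
    first, last = window_indices(start_ms, end_ms)
    channel_means = [
        sum(erp_by_channel[channel][first:last]) / (last - first)
        for channel in roi
    ]
    return sum(channel_means) / len(channel_means)


# Toy ERP: flat waveforms of 1, 2, and 3 uV on the three occipital channels.
toy_erp = {"O1": [1.0] * 500, "Oz": [2.0] * 500, "O2": [3.0] * 500}
p1_amplitude = mean_amplitude(toy_erp, roi=("O1", "Oz", "O2"), start_ms=90, end_ms=140)
print(window_indices(90, 140), p1_amplitude)
```

The same function applies to the other components by swapping in the corresponding window and ROI.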

ERP amplitudes were statistically analyzed using repeated measures ANOVAs with factors Attractiveness (high attractiveness, low attractiveness) and Congruency (congruent, incongruent). Our analytic strategy followed a theory-driven ROI approach: each ERP component/time window was pre-specified based on prior literature, and Bonferroni correction was applied in SPSS for post-hoc pairwise comparisons following significant ANOVA effects. This was done for each component to control Type I error in targeted comparisons.
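One common formulation of the Bonferroni adjustment multiplies each p value by the number of comparisons and caps the result at 1. The sketch below illustrates this with hypothetical p values that are not taken from the study; it is meant to show the logic of the correction rather than the SPSS output.

```python
def bonferroni(p_values, alpha=0.05):
    """Return (adjusted p, significant?) pairs for a family of comparisons."""
    n_tests = len(p_values)
    adjusted = [min(1.0, p * n_tests) for p in p_values]
    return [(p_adj, p_adj < alpha) for p_adj in adjusted]


# Hypothetical p values for three pairwise comparisons (illustration only).
results = bonferroni([0.004, 0.020, 0.300])
for p_adj, significant in results:
    print(f"adjusted p = {p_adj:.3f}, significant = {significant}")
```

In this toy family, only the first comparison remains significant after adjustment, although the second would pass an uncorrected threshold of 0.05.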

4. Discussion

Using the ERP technique in a Stroop-like paradigm, we examined the effects of positive or negative personality traits on the classification of facial and vocal attractiveness. The behavioral and ERP results show that personality traits modulate the perception of attractiveness and that this modulation differs between the face and voice tasks. At the behavioral level, we found that the influence of personality trait words on perceived attractiveness differed between facial and vocal stimuli. Analysis of the EEG data further revealed the temporal dynamics underlying this effect. In fact, the influence of personality traits on attractiveness judgments is not expressed in isolation but emerges through their interaction with perceptual cues. It is this interplay, in which trait information can either reinforce or conflict with facial/vocal attractiveness, that reveals the dynamic contribution of personality to impression formation. In the following, we discuss these findings in greater detail.

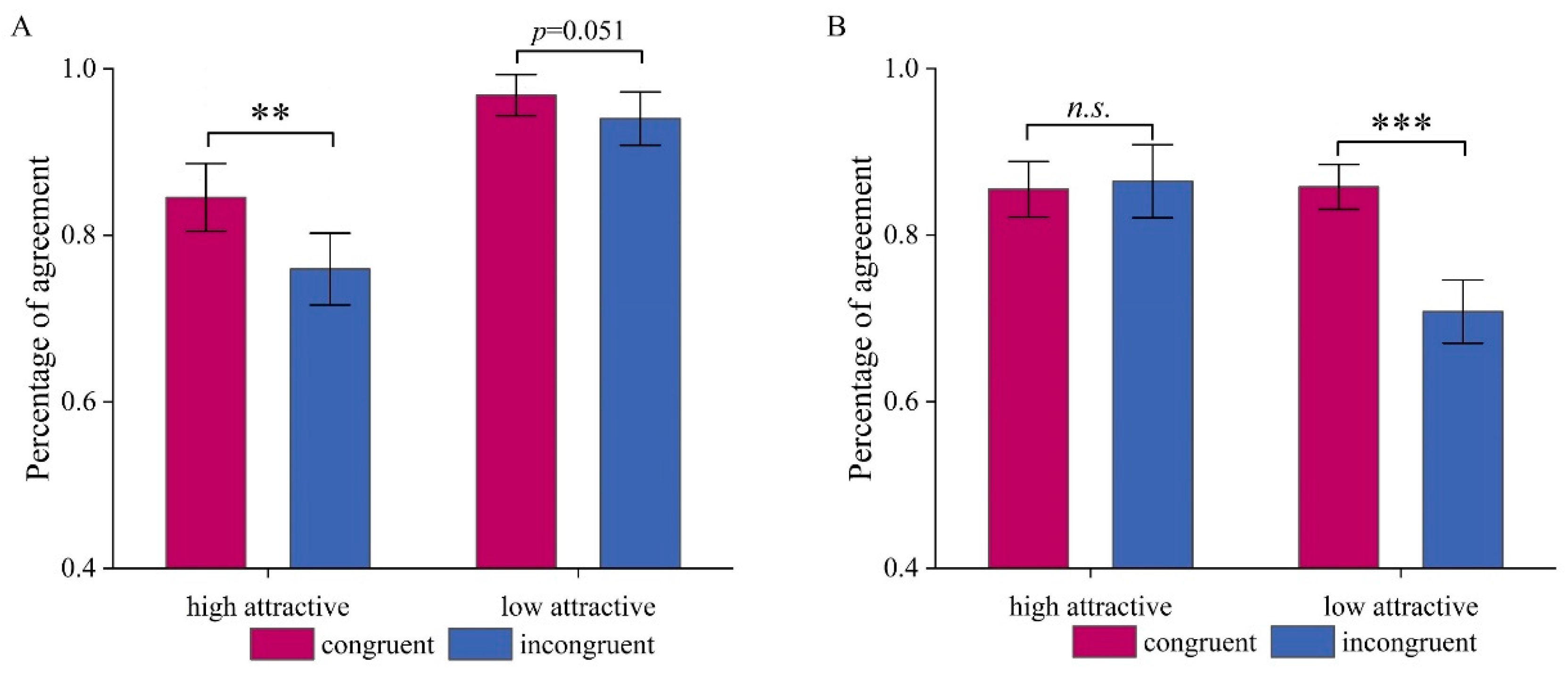

4.1. Performance Evidence

Significant main effects of congruency revealed that the agreement of participants’ attractiveness ratings with the corresponding stimulus categories was higher when personality traits were congruent rather than incongruent with the stimulus category. This result suggests that personality trait information influences participants’ attractiveness judgements for both faces and voices, consistent with the “what is good is beautiful” stereotype, where positive traits enhance perceived attractiveness and negative traits diminish it (Dion et al., 1972).

In the face task, the percentage of agreement for low-attractive faces was significantly higher than for high-attractive faces, whereas in the voice task, the percentage of agreement for high-attractive voices was significantly higher than for low-attractive voices. One reason may be that people’s opinions on unattractive faces tend to be more consistent. Moreover, these seemingly opposite results across the two tasks are not truly contradictory. One possible explanation is that the high-attractive face stimuli and the low-attractive voice stimuli were closer to the intermediate level than their respective counterparts, as shown in the pre-experimental rating results. This may have restricted the influence of personality trait information on attractiveness judgments for low-attractive faces and for high-attractive voices, as reflected in our results. This pattern aligns with stereotype theory, as moderately attractive stimuli may be more susceptible to trait-based modulation due to their ambiguity, amplifying the influence of congruent or incongruent personality cues (Langlois et al., 2000).

The interaction effect revealed that the congruency effect was stronger in the high-attractive face condition and the low-attractive voice condition. A stronger congruency effect for highly attractive faces suggests that a mismatch between the expected positive traits and the personality trait words presented disrupts the processing of attractive faces more strongly. This may be due to stronger stereotypes or expectations associated with attractive individuals (Dion et al., 1972). When negative trait words appeared, classification accuracy for highly attractive faces significantly declined, indicating that this mismatch induced cognitive conflict. In contrast, in the voice task, the distinct acoustic features of attractive voices may have aided participants in their classification. For low-attractive voices, the percentage of agreement decreased under incongruent conditions, suggesting that congruent trait words provided more critical information for judging attractiveness. Meanwhile, high-attractive voices remained relatively stable across conditions. These findings indicate that low-attractive voices may be characterized by more ambiguous perceptual cues and therefore rely more heavily on semantic context to guide judgments. The same explanation may hold for the “high-attractive” faces, which in reality were as intermediate as the low-attractive voices. This differential susceptibility to trait influences underscores the stereotype-driven expectation that positive traits enhance attractiveness, particularly when perceptual cues are less definitive, as seen in our low-attractive voice and moderately attractive face conditions.

Overall, our findings aligned with our expectations: personality trait words that were semantically congruent with attractiveness facilitated attractiveness judgments. In contrast, incongruent trait information led to altered evaluations. The impact of this effect also varied across different levels of attractiveness—being more pronounced when attractiveness was closer to a moderate level, and attenuated as it approached the extremes. These behavioral results underscore the complexity of attractiveness judgments, suggesting that they are shaped not only by perceptual cues but also by contextual semantic information.

4.2. Effect of Personality Traits on the Perception of Facial Attractiveness

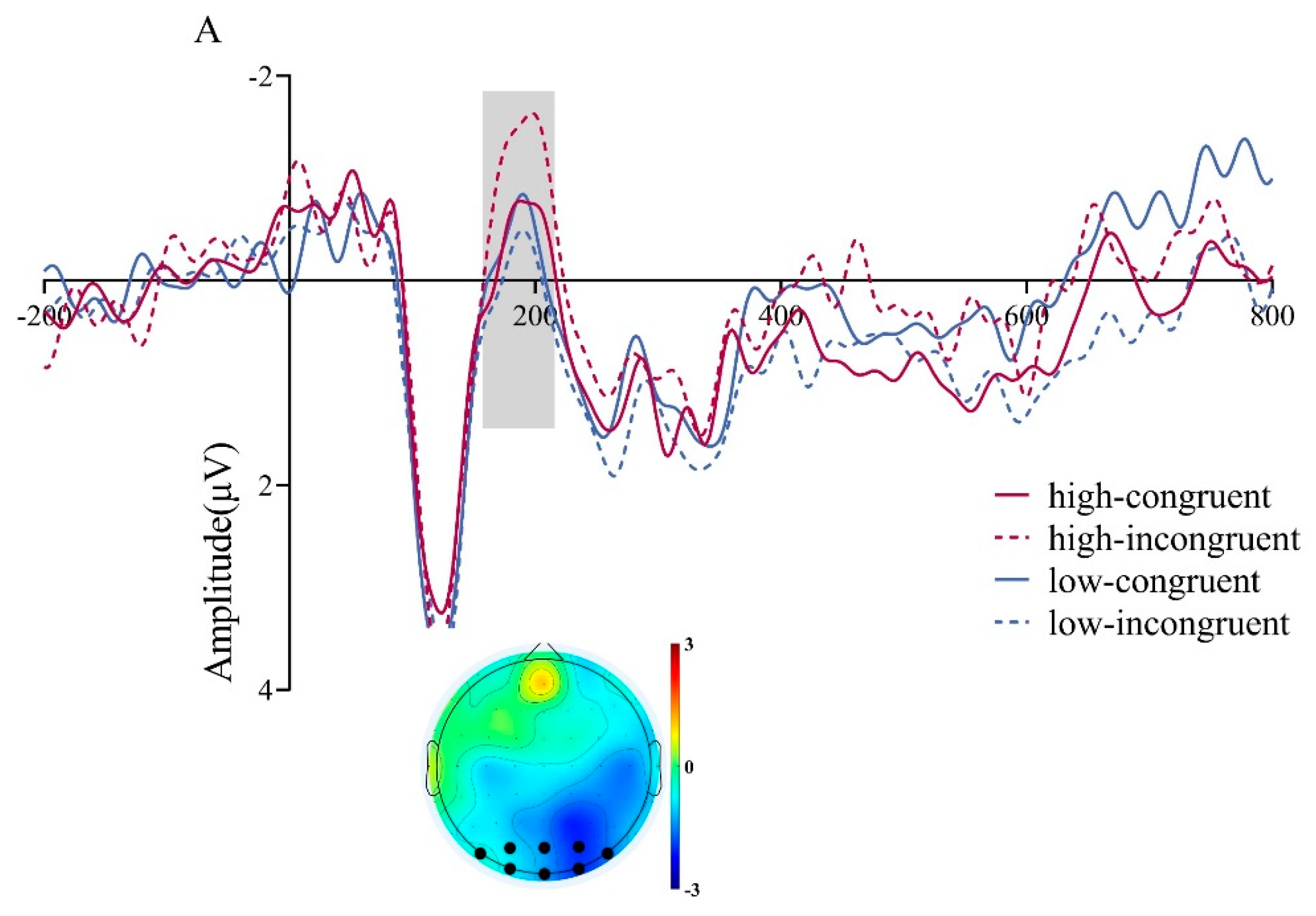

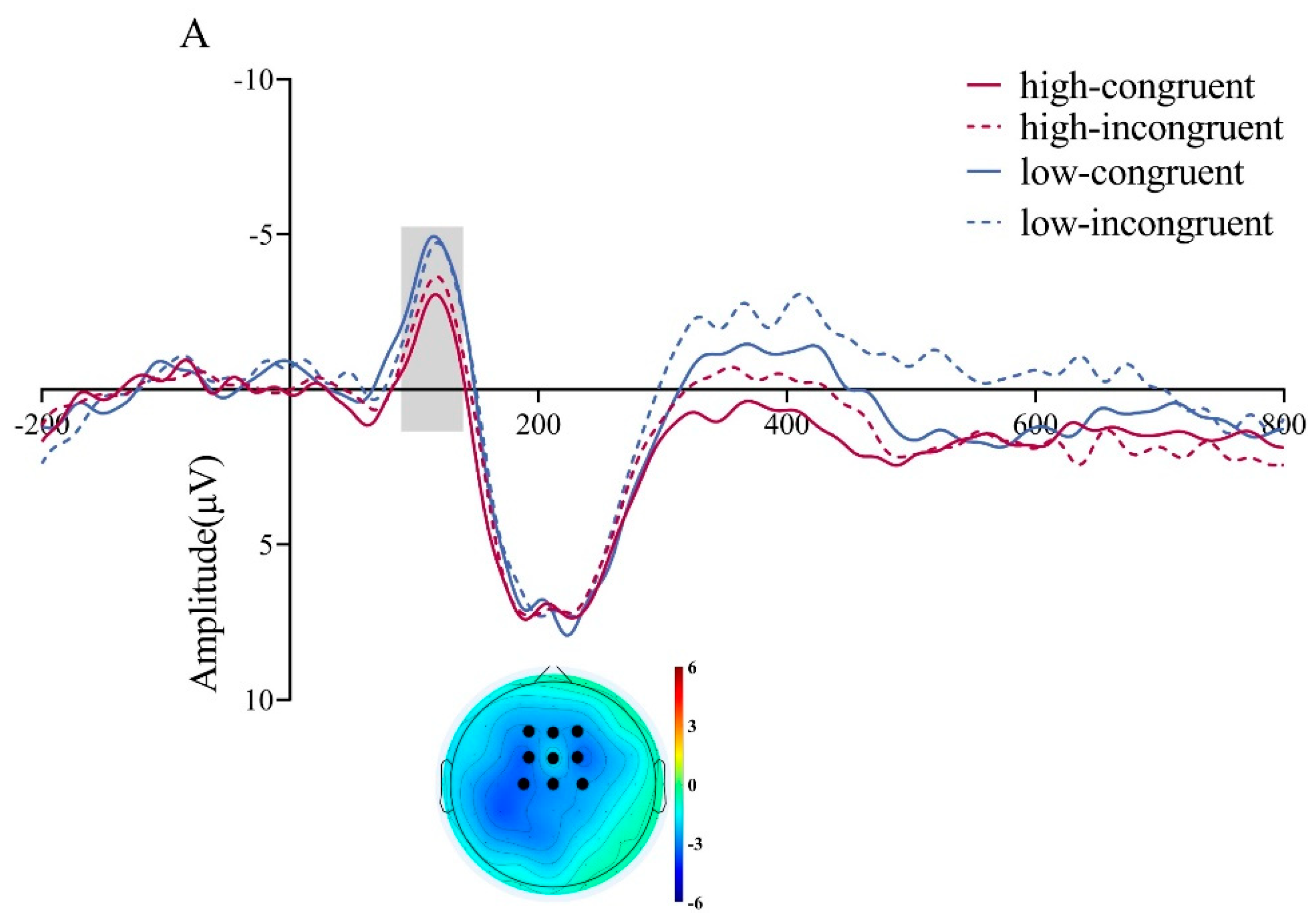

In our study, we found that low-attractive faces elicited larger P1 amplitudes than high attractive faces. This result is consistent with results of Halit et al. (2000), which showed that facial morphology is processed at a very early stage, and that unattractive and atypical faces elicit larger P1 amplitudes than attractive and typical faces. The amplitude of P1 is generally thought to reflect the initial perception and processing of visual stimuli, especially in the allocation of attention and early analysis of external visual features. The low-attractive faces in our study may have been less typical than the high-attractive faces, which may have caused participants to attend to these faces earlier. This early effect may reflect low-level perceptual differences, such as atypical facial features or asymmetry in low-attractive faces, which draw attention due to their deviation from prototypical face templates (Rhodes, 2006).

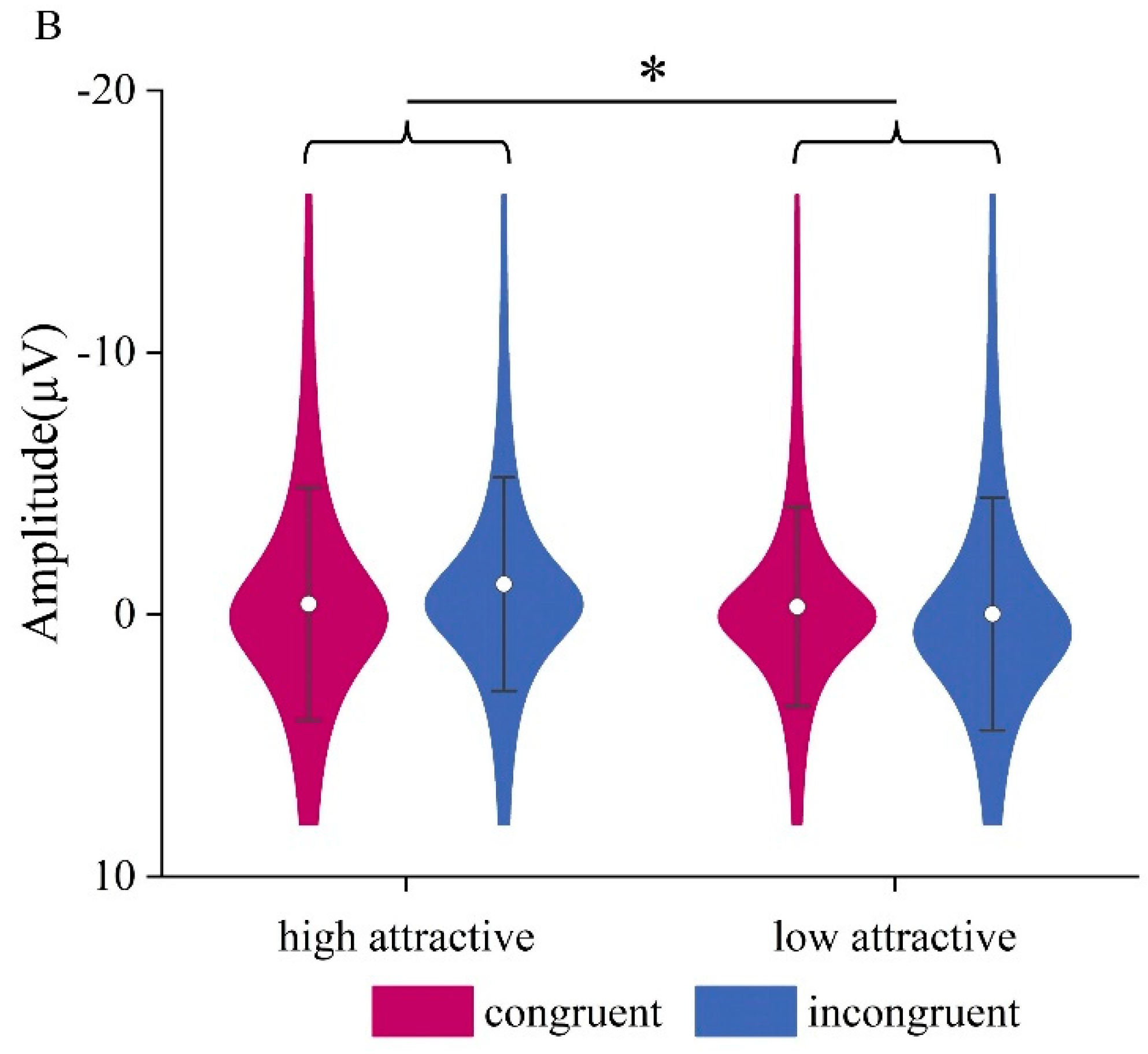

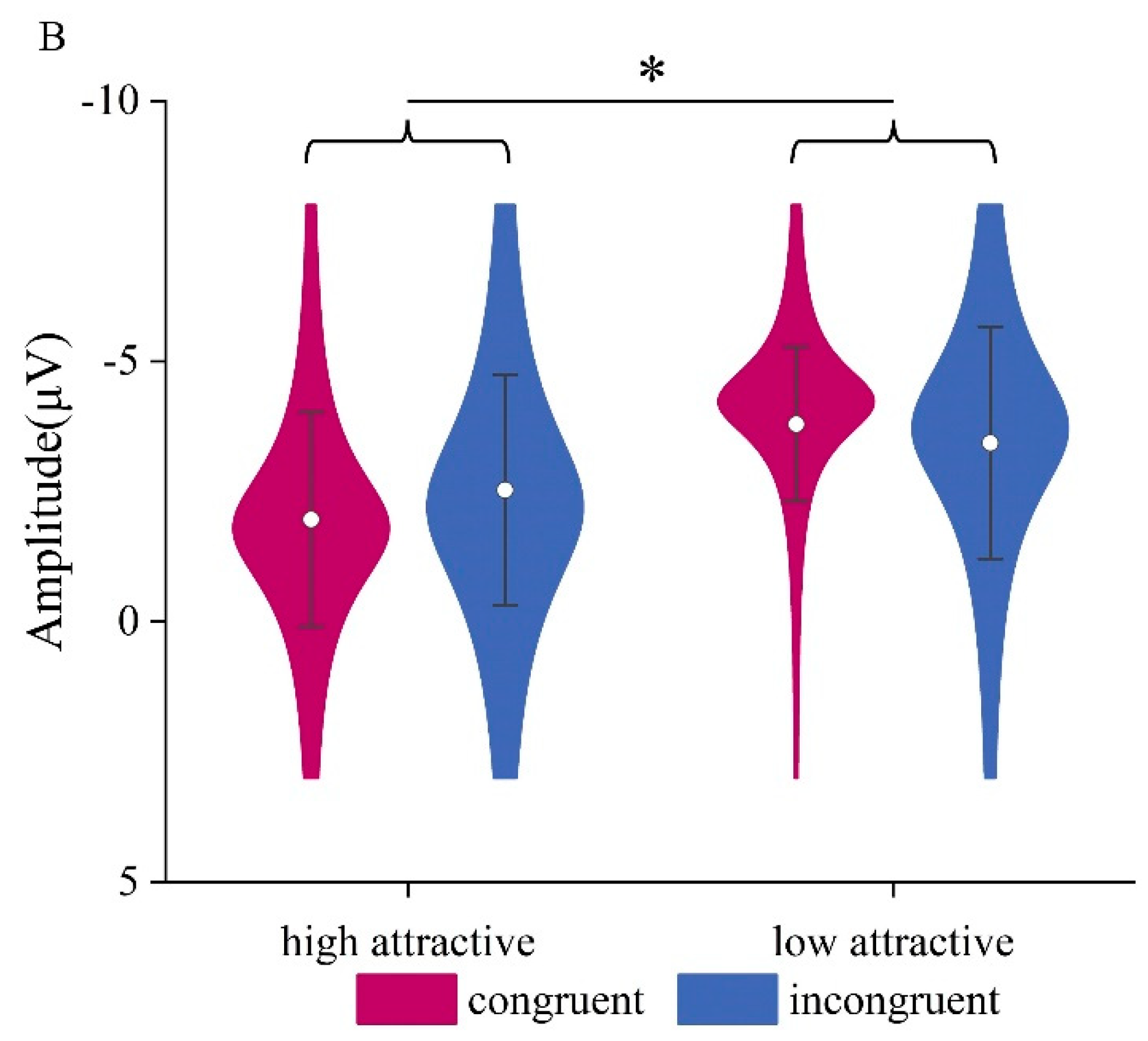

Next, we found that high-attractive faces elicited larger N170 amplitudes than low-attractive faces. The N170 is sensitive to face processing and, in some studies, has been found to be independent of facial expression; attractive faces may elicit larger amplitudes associated with structural encoding and recognition memory (Marzi & Viggiano, 2010; Liu et al., 2023; Pizzagalli et al., 2002). The current result is consistent with this previous research.

Nevertheless, our result is inconsistent with Hahn et al. (2016) and Halit et al. (2000), who, using adult and infant faces as stimuli, found larger N170 amplitudes for less attractive than for more attractive versions of faces. A possible explanation for this inconsistency is that in those two studies the faces of the same individuals were manipulated to create high- and low-attractive versions, whereas we selected faces of different individuals; such differences in material selection could lead to different results.

In the face task, no effect was observed on the N400 component, which is noteworthy. Given that the N400 is typically linked to semantic processing, this may suggest that personality trait words did not affect first-pass semantic integration or memory retrieval. In addition, the visual salience of faces may dominate early processing, delaying the integration of personality traits until later stages, potentially because of the holistic nature of face perception (Rhodes, 2006).

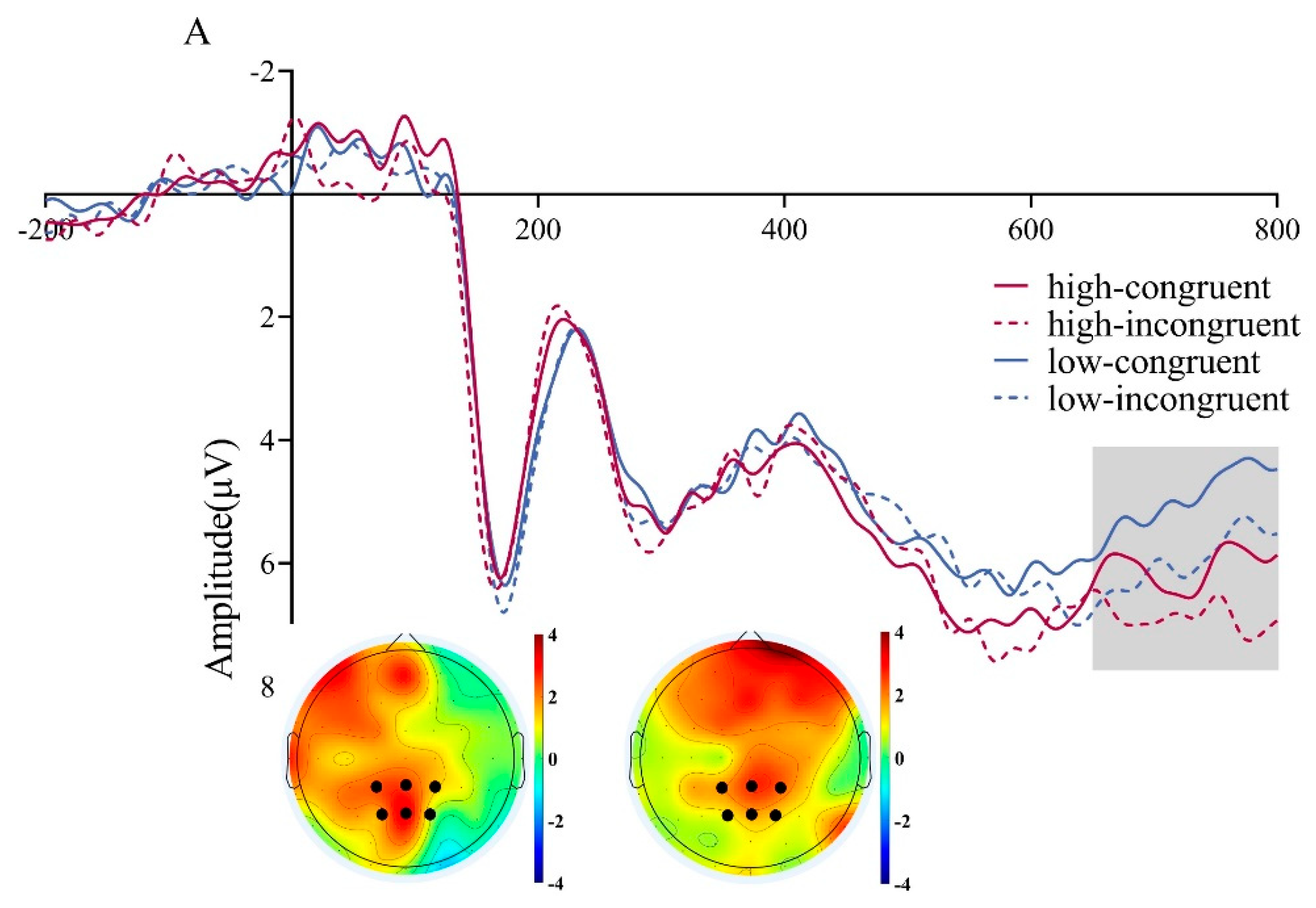

The LPC segment was divided into two phases. In the early LPC phase, no main effects or interaction were observed. In the later phase, however, the LPC showed a trend toward larger amplitudes for highly attractive faces as well as a significant effect of congruency, with incongruent conditions eliciting larger amplitudes. The long latency of this effect may arise because personality traits influence face processing mainly at later stages than they influence voice processing. The marginal effect of attractiveness on the LPC is consistent with previous studies (Li et al., 2024; Shang & Liu, 2022). However, the main effect of congruency on the LPC, with significantly larger amplitudes in the incongruent than in the congruent condition, is not consistent with the results of previous studies. High-attractive stimuli provide profound intrinsic rewards (Wiese et al., 2014; Andreoni & Petrie, 2008), leading to larger LPC amplitudes in both face and voice tasks. However, our results suggest that personality traits exert less influence on the perception of facial attractiveness than on the perception of vocal attractiveness. One possible explanation for this pattern is that when participants encounter an incongruent stimulus that does not align with their stereotype, the processing of this information occurs in stages. In the early stages of cognitive processing, although face and personality information were presented together, the face stimulus dominated processing and overshadowed the accompanying personality information. As processing advances to later stages, the personality information becomes more prominent. This shift indicates that participants needed more attentional resources to process the incongruency at a higher level of cognitive processing, which also reflects the difference in the influence of personality traits on the processing of faces and voices.
This late-stage effect supports the stereotype that positive traits enhance attractiveness judgments (Dion et al., 1972), but the delayed integration may reflect low-level perceptual factors, such as the holistic processing of faces, which prioritizes visual features over semantic traits until later cognitive stages.

4.3. Effect of Personality Traits on the Perception of Vocal Attractiveness

In the vocal attractiveness judgment task, the N1 component served as an early indicator of auditory attention toward target stimuli (Shehabi et al., 2025). N1 amplitude was larger for low- than for high-attractive voices, suggesting that in the initial stages of cognitive processing, individuals paid greater attention to less attractive voices. Alternatively, it could reflect the greater difficulty of categorizing low-attractive voices, which were closer to the neutral point than high-attractive voices.

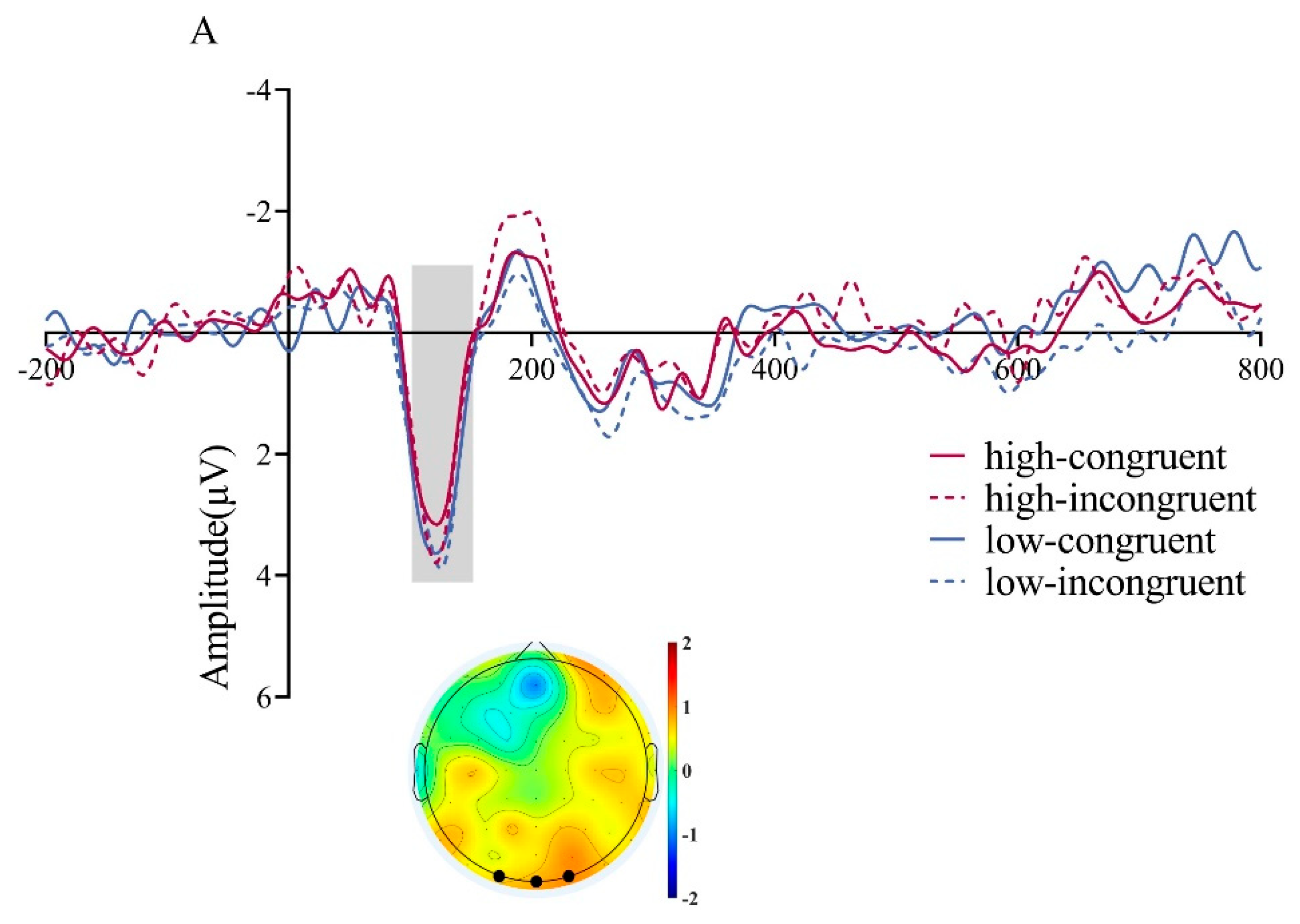

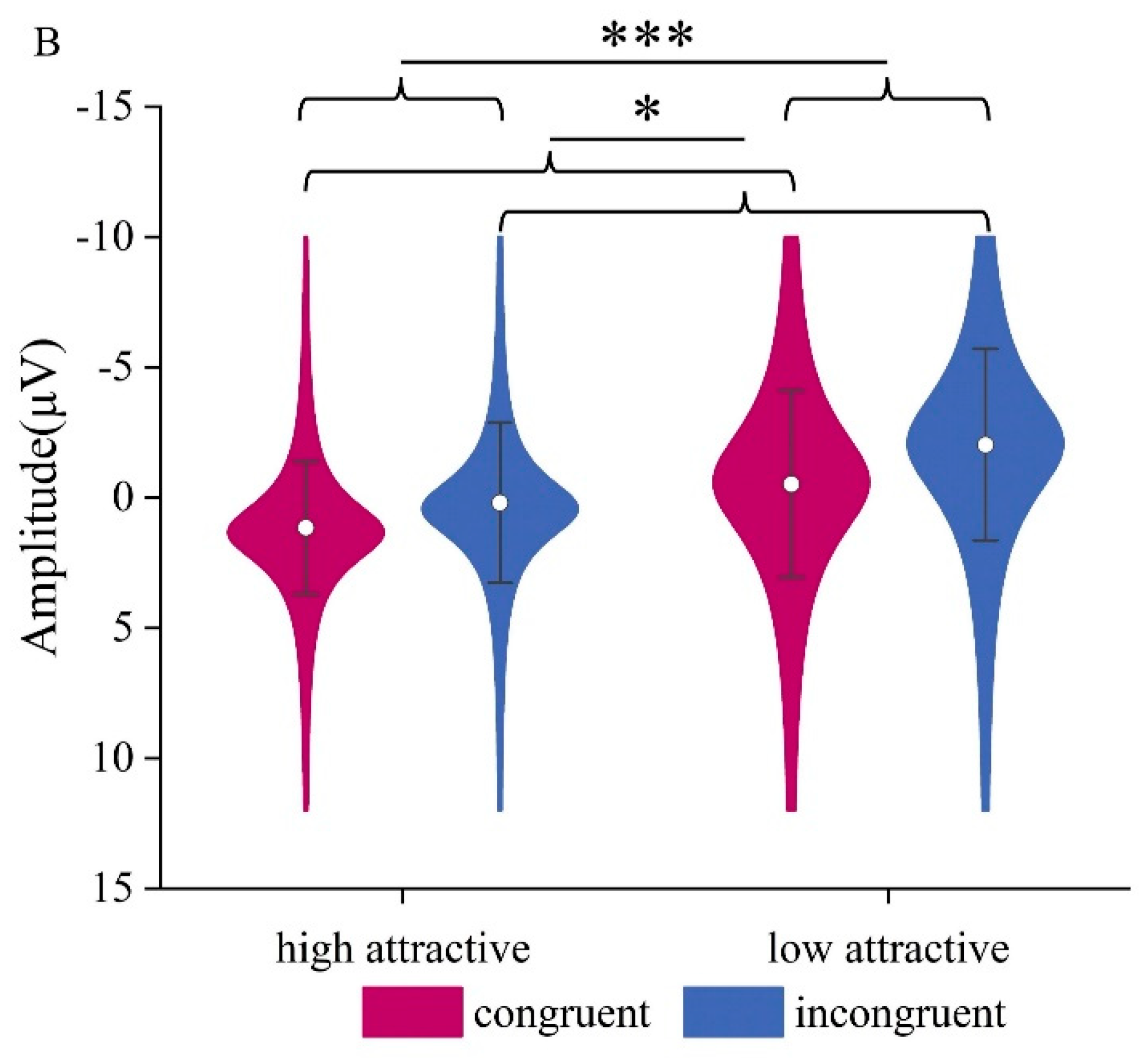

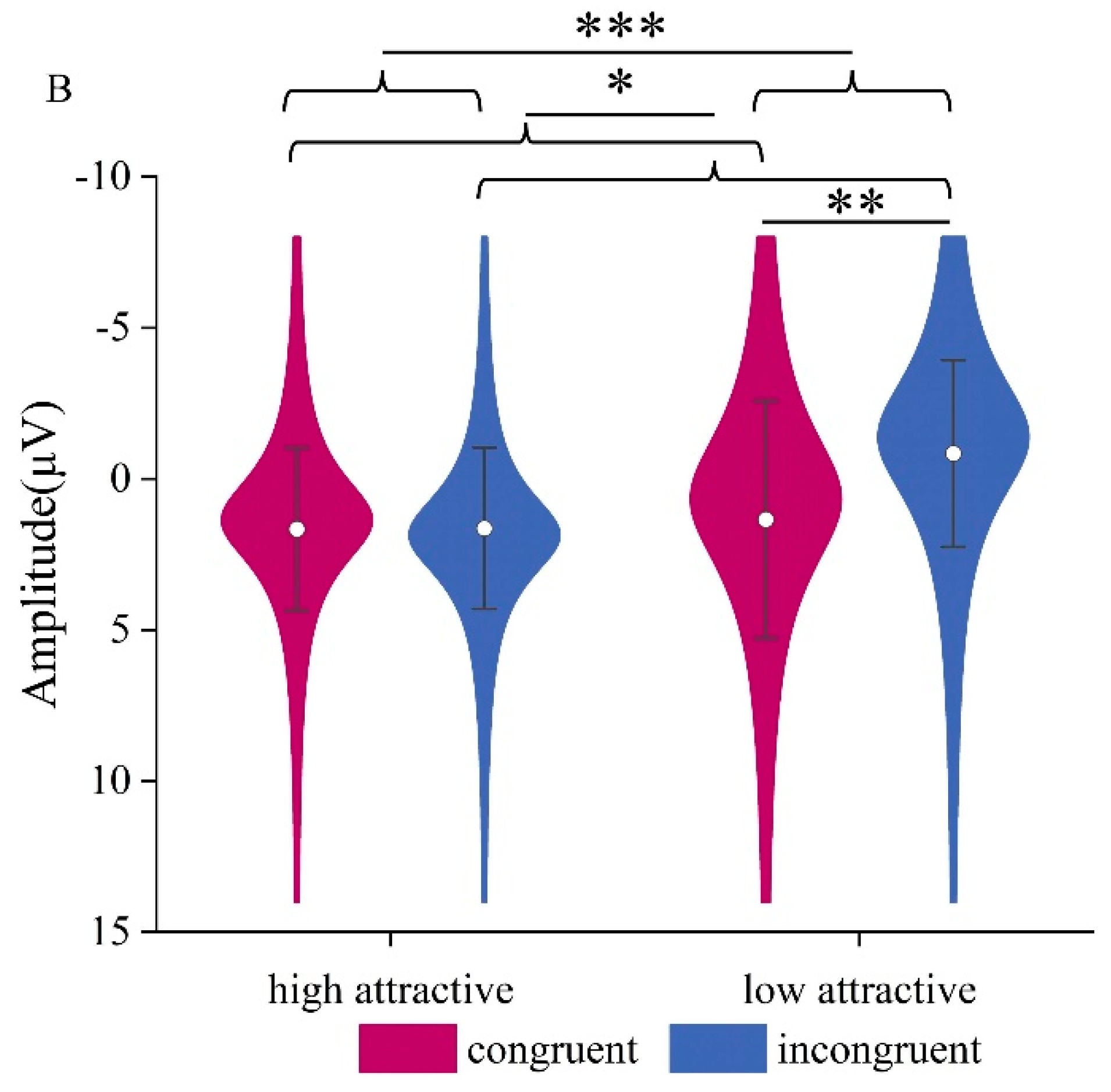

The influence of personality traits on attractiveness was manifested in the N400 component. The N400 is generally considered to reflect the processing of mismatched semantic information or the retrieval of semantic information (Ishii et al., 2010; Kutas & Federmeier, 2011; Zahedi et al., 2019), depending on the consistency of stimulus conditions rather than the type of stimulus (Caldara et al., 2004). Other meaningful stimuli, such as faces (Barrett et al., 1988), can also elicit this component. Most studies have found that incongruent conditions elicit larger N400 amplitudes than congruent conditions (Bobes et al., 2000). Our results are consistent with those studies, possibly because participants held specific impressions of attractive or unattractive voices, and the simultaneously presented personality trait information was inconsistent with these impressions, eliciting larger N400 amplitudes.

In addition to integration processing, the N400 is related to conflict control (Pan et al., 2020) and cognitive resource allocation (Paulmann & Kotz, 2008); the increased cognitive resources needed to resolve conflicting information may lead to larger N400 amplitudes. In our study, participants’ task was to assess vocal attractiveness, which may involve suppressing conflicting, task-irrelevant personality trait information (Liu et al., 2015; Paulmann & Pell, 2010). Thus, the larger N400 amplitude observed in the incongruent condition may indicate that personality trait information interfered with the attractiveness evaluation process. Further, the N400 effect occurred only for voices and not for faces. One possible reason is that the greater difficulty of vocal attractiveness judgments led to deeper and more elaborate processing than in the easier facial attractiveness task, which in turn led to the interaction at the conceptual/semantic stage.

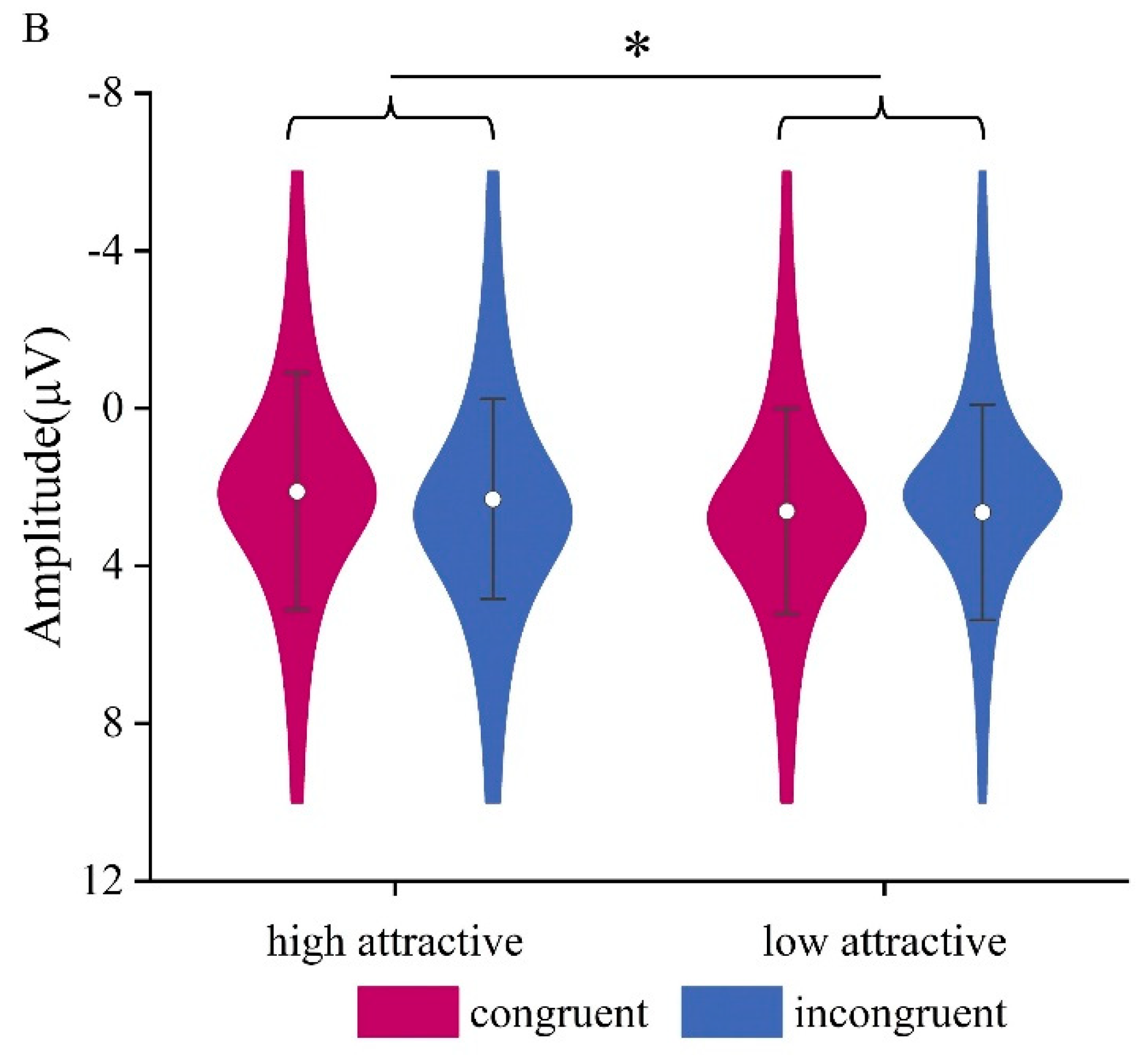

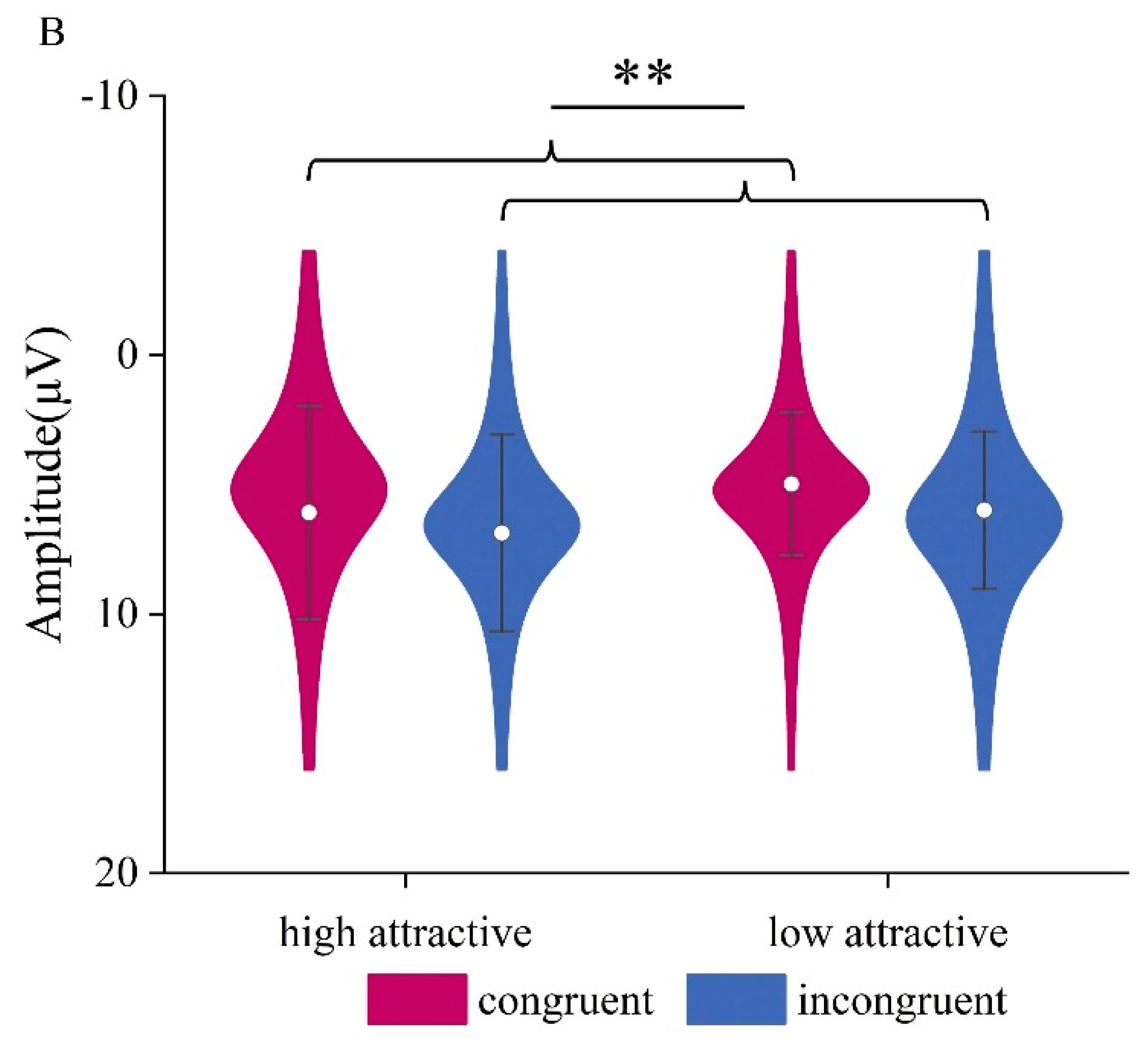

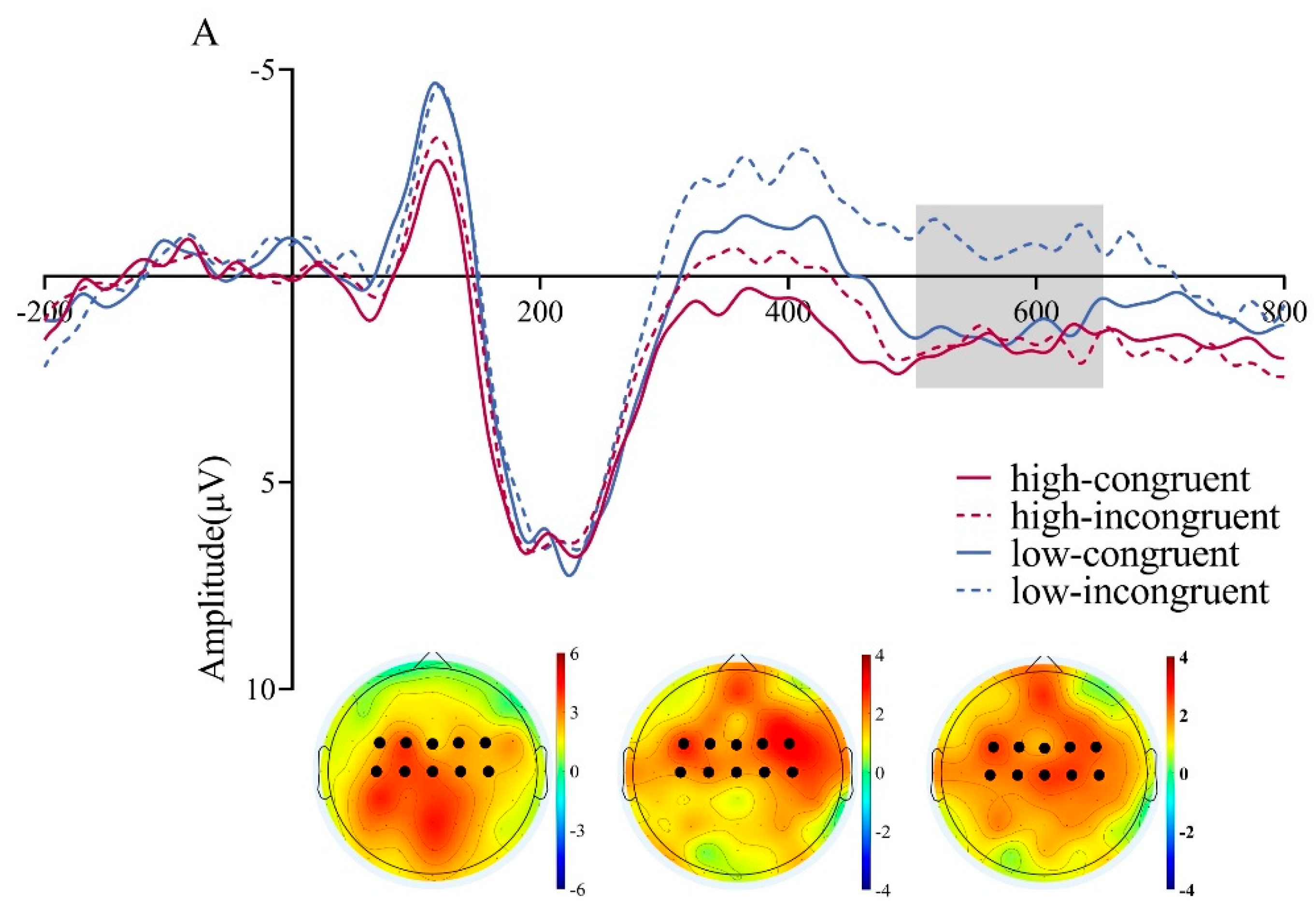

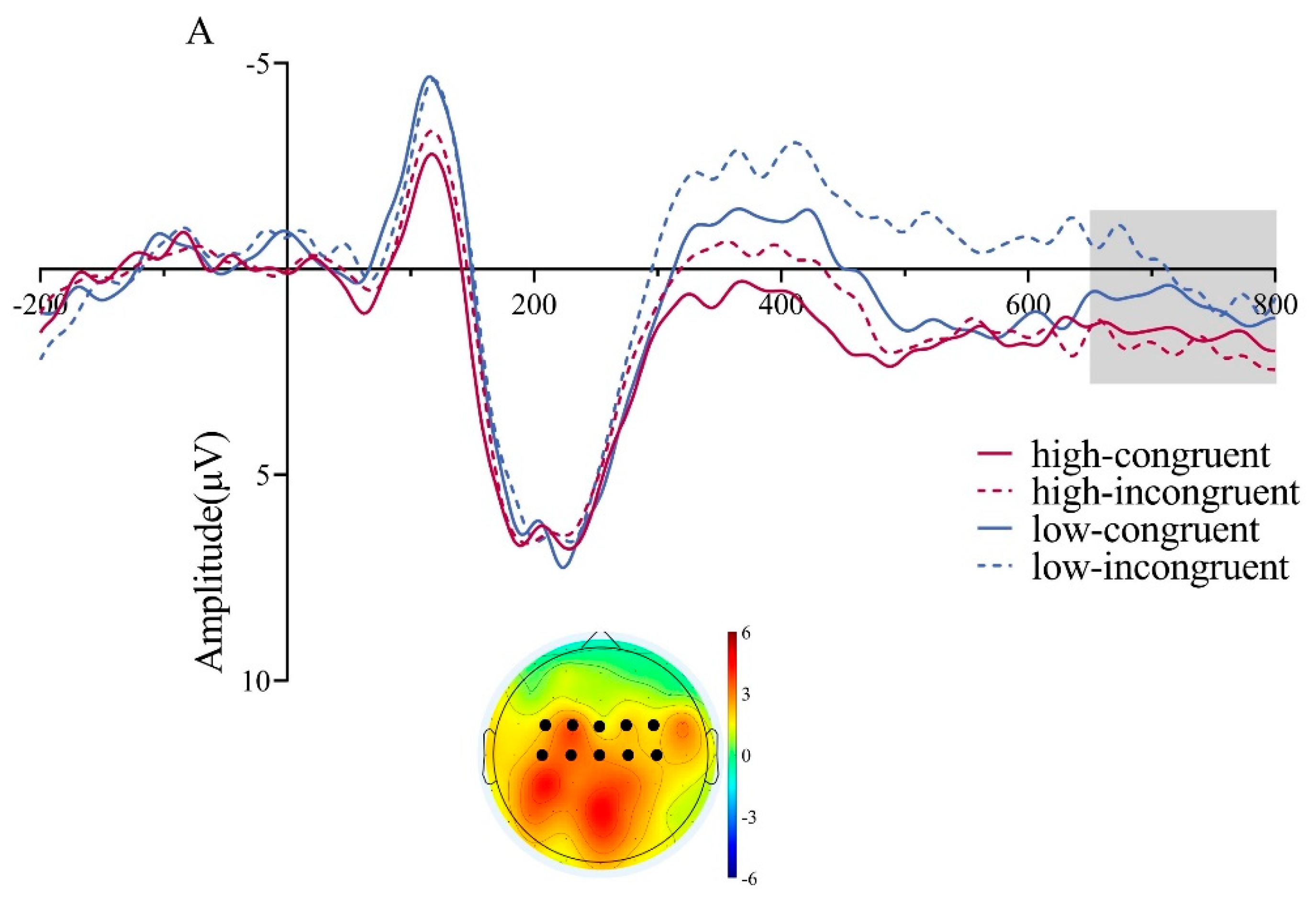

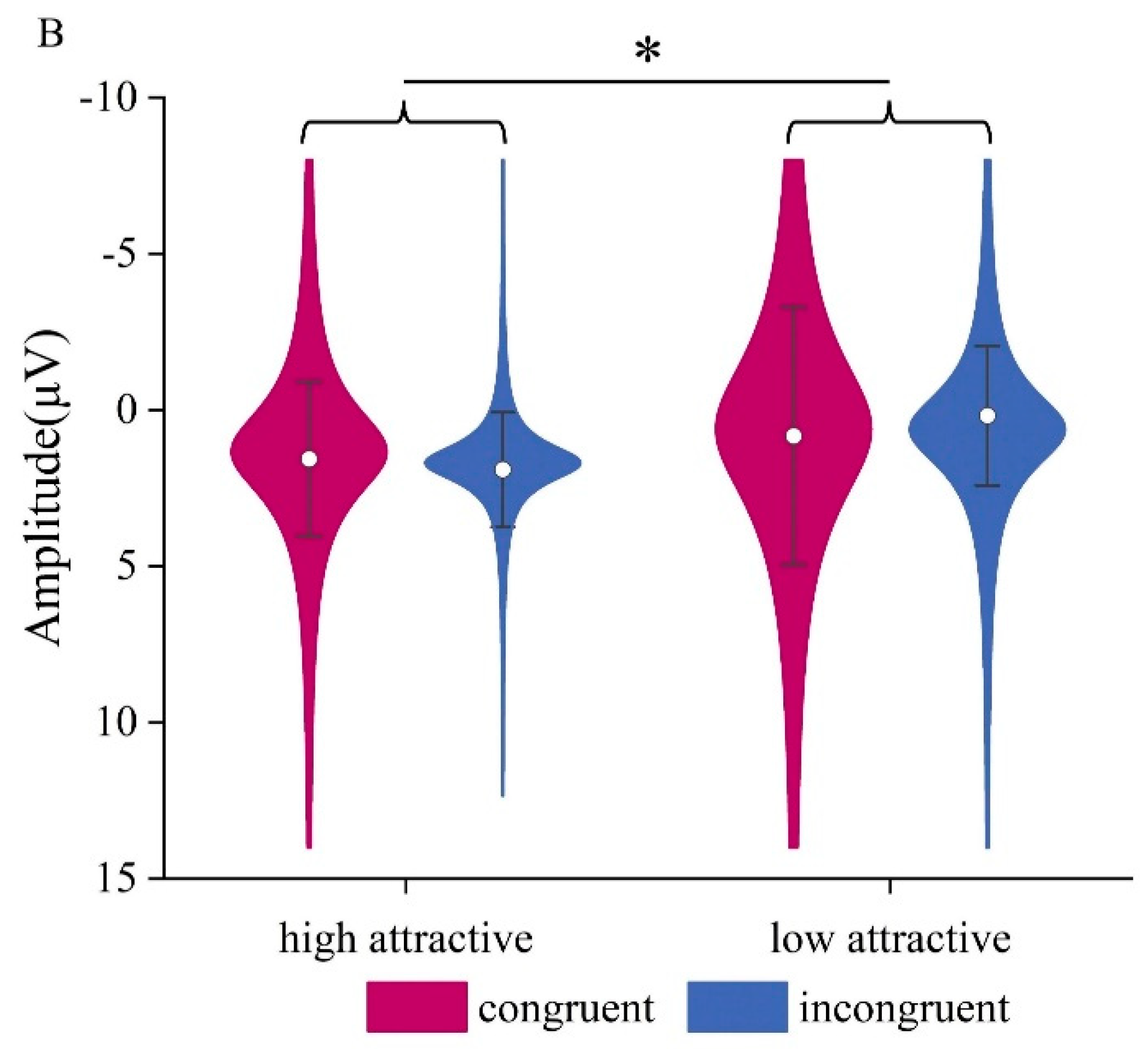

In the 500–650 ms time window, the LPC was modulated by attractiveness: high-attractive voices elicited larger LPC amplitudes than low-attractive voices. Previous research has shown that attractive voice or face stimuli elicit larger LPC amplitudes than unattractive stimuli (Li et al., 2024; Liu et al., 2023; Shang & Liu, 2022; Thiruchselvam et al., 2016), and that high- and low-attractive stimuli elicit larger LPC amplitudes than moderately attractive stimuli (Revers et al., 2023). High-attractive voices have greater intrinsic reward value (Wiese et al., 2014; Andreoni & Petrie, 2008) and thus capture more of participants’ attentional resources, resulting in larger LPC amplitudes. We further observed a main effect of congruency, with larger LPC amplitudes in the congruent than in the incongruent condition, consistent with previous research (Shang & Liu, 2022; Liu et al., 2023). LPC amplitude increases with the importance of the stimulus and with motivated attention (Werheid et al., 2007). In the present study, task-unrelated personality traits may enhance perceived vocal attractiveness under congruent conditions. This may reflect that under congruent conditions, participants invest more attention and cognitive resources in the aesthetic evaluation. The interaction indicates that congruency influenced the LPC for low-attractive voices but not for high-attractive voices. This pattern suggests that the congruency of trait words affected evaluative processing for low-attractive voices. This finding is consistent with the behavioral results, in which only judgments of low-attractive voices were modulated by congruency. It is also consistent with the larger N1 amplitudes elicited by low-attractive voices, which indicate that greater early attention was allocated to less attractive stimuli.
For these perceptually ambiguous signals, the integration process appears to rely more heavily on contextual information. In the later 650–800 ms window, only the main effect of attractiveness persisted, with high-attractive voices eliciting larger amplitudes, possibly reflecting sustained positive appraisal or reward processing (Liu et al., 2023). These effects support stereotype theory, as congruent positive traits reinforce the reward value of attractive voices, enhancing motivated attention, while low-level sequential processing of auditory cues may amplify the role of semantic context in early evaluation stages.
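The LPC effects above refer to amplitudes in the 500–650 ms and 650–800 ms windows. Purely as an illustration, the following sketch shows how a mean amplitude in such a window could be computed from a single averaged waveform using NumPy. The sampling rate, the epoch limits, the function name, and the synthetic waveform are assumptions made for this example; they are not parameters or data reported in this section.

```python
import numpy as np


def mean_amplitude(erp, times_ms, start_ms, end_ms):
    """Mean of `erp` over samples with start_ms <= time < end_ms."""
    mask = (times_ms >= start_ms) & (times_ms < end_ms)
    if not mask.any():
        raise ValueError("time window lies outside the epoch")
    return float(erp[mask].mean())


# Assumed epoch: -200 to 1000 ms sampled every 2 ms (500 Hz); hypothetical.
times_ms = np.arange(-200, 1000, 2)
# Synthetic waveform: 0 µV everywhere except 2 µV between 500 and 800 ms.
erp = np.where((times_ms >= 500) & (times_ms < 800), 2.0, 0.0)

early_lpc = mean_amplitude(erp, times_ms, 500, 650)  # early LPC window
late_lpc = mean_amplitude(erp, times_ms, 650, 800)   # late LPC window
baseline = mean_amplitude(erp, times_ms, -200, 0)    # pre-stimulus interval
```

Window means of this kind, computed per participant and condition within a region of interest, would be the values entering an attractiveness by congruency analysis.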

4.4. Integration of Results on Face and Voice Attractiveness

Overall, we found that personality traits exert both overlapping and distinct influences on the perception of facial and vocal attractiveness. Our findings highlight the complex interaction between personality traits and attractiveness perception, which appears to be temporally and modality specific. Behaviorally, our results indicate that in both the face and the voice domain, the congruency between personality traits and attractiveness categories significantly affects attractiveness judgments, supporting the “what is good is beautiful” stereotype, in which positive traits amplify perceived attractiveness across modalities (Dion et al., 1972; Langlois et al., 2000). In terms of ERP findings, we observed modality-specific differences in the cognitive processing of these influences. In both the facial and the vocal task, early ERP components, such as the P1 and N1, likely reflect initial sensory processing and attention allocation to the stimulus (Luck, 2014). These early effects may be driven by low-level perceptual differences, with faces processed holistically and voices sequentially, influencing the timing of trait integration (Groyecka et al., 2017; Rhodes, 2006). However, the processing of personality trait information diverged temporally between the two modalities. In the face task, the impact of personality traits became apparent only in the late-stage LPC, reflecting higher-level cognitive integration and evaluative processing. Conversely, in the voice task, this influence was already evident in the N400 component, indicating that semantic incongruency between personality traits and vocal attractiveness was detected and processed at an earlier stage of semantic integration. These differences highlight the complex interplay between perceptual cues and semantic information in shaping attractiveness judgments across sensory modalities and underscore the need for further research to explore these interactions in more diverse populations and contexts.
Future studies could further disentangle whether low-level perceptual factors, such as face configuration versus vocal prosody, or higher-level stereotype expectations drive these modality-specific effects.

4.5. Limitations

The present study has several limitations. First, the sample size was relatively small. Although the large effect sizes observed lend support to the findings, a larger and more diverse sample would enhance the stability of the experimental results and improve ecological validity. Second, the 600-ms blank screen before responses may introduce minor motor preparation effects, though our preprocessing and trial retention of 74.1% suggest minimal impact on key ERP components. Future studies could use longer delays or response-locked analyses to further isolate cognitive signals. Third, subsequent studies should more thoroughly investigate the degree to which personality traits influence attractiveness. For example, including a neutral-attractiveness level in the stimulus set would allow a clearer comparison of how personality trait descriptors independently influence attractiveness ratings and how they interact with baseline perceptual attractiveness, thereby improving the separation between attractiveness baselines and trait-driven effects. Fourth, although counterbalancing was used to rule out order effects such as fatigue or practice, there may be carry-over effects between tasks, which provide a caveat for the present results and could be ruled out in research with between-group designs. Finally, a potential problem may lie in the asymmetric differences between attractive and unattractive stimuli. This makes it unclear whether the asymmetric congruency effects relate to the valence of the stimuli or to the asymmetric difficulty of classification. Further research should use more symmetrical stimuli to examine the influence of personality traits on attractiveness.

Figure 1.

Trial scheme of the face and voice task (top vs. bottom).

Figure 2.

Percent agreement of attractiveness judgments with stimulus categories. (A) Attractiveness judgment in the face task. (B) Attractiveness judgment in the voice task. Only interaction effects are marked. **p<0.01, ***p<0.001, n.s. p>0.01.

Figure 3.

P1 component in the Facial Attractiveness Classification task. (A) The ERP waveforms in the region of interest (ROI; see bold points in the topography) for the P1 component, along with topographical maps showing the low-attractive condition minus the high-attractive condition within the 90-140 ms time window. (B) The ERP amplitudes of the P1 component in each condition. *p<0.05.

Figure 4.

N170 component in the Facial Attractiveness Judgment task. (A) The ERP waveforms in the region of interest (ROI) for the N170 component, along with topographical maps showing the high attractive condition minus the low attractive condition within the 160-220 ms time window. (B) ERP amplitudes of the N170 component for each condition. *p<0.05.

Figure 5.

LPC Component in Facial Attractiveness Judgment. (A) The ERP waveforms in the region of interest (ROI) for the LPC component, along with topographical maps showing the high attractive condition minus the low attractive condition (left) and the incongruent condition minus the congruent condition (right) within the 650-800 ms time window. (B) ERP amplitudes of the LPC component for each condition combination. **p < 0.01.

Figure 6.

N1 component in the Vocal Attractiveness Judgment task. (A) Grand mean ERP waveforms of the N1 (ROI) during classification of vocal attractiveness, and the difference topography of the low attractive minus the high attractive conditions in the 90-140 ms time window. (B) ERP amplitudes of the N1 component for four condition combinations. ***p < 0.001.

Figure 7.

N400 component in the Vocal Attractiveness Judgment task. (A) Grand mean ERP waveforms for the N400 ROI during vocal attractiveness classification, and the difference topography of the low attractive minus high attractive voice condition (left) and the incongruent minus congruent condition (right) in the 300-500 ms time window. (B) ERP amplitude of the N400 component under different conditions. *p<0.05, ***p<0.001.

Figure 8.

LPC component in the Vocal Attractiveness Judgment task. (A) Grand mean ERP waveforms for the LPC ROI during vocal attractiveness classification, and the difference topography of the high attractive voice condition minus the low attractive voice condition (left), the congruent condition minus the incongruent condition (middle), and the low attractive-congruent condition minus the low attractive-incongruent condition (right) in the 500-650 ms time window. (B) ERP amplitude of the LPC component under different conditions. *p<0.05, **p<0.01, ***p<0.001.

Figure 9.

(A) Grand mean ERP waveforms for the LPC ROI during vocal attractiveness classification, and the difference topography of the high attractive minus the low attractive voice condition in the 650-800 ms time window. (B) ERP amplitude of the LPC component under different conditions. *p<0.05.
