1. Introduction

The Voynich Manuscript, carbon-dated to the early

15th century, has eluded decipherment for centuries, defying the efforts of

renowned cryptographers and linguists (Landini & Foti, 2020; Tiltman,

1967). Its text displays complex statistical patterns, including a Zipfian

word-rank distribution characteristic of natural language, yet its vocabulary

and character-level features remain entirely unique and unidentifiable

(Montemurro & Zanette, 2013; Currier, 1976). This paradox has led to

numerous hypotheses, ranging from it being an encoded known language (Strong,

1945) to an extinct language (D'Imperio, 1976) or an elaborate hoax (Rugg,

2004).

However, many analyses note the VM's exceptionally

low bigram and trigram redundancy compared to known languages, a property that

aligns more closely with compressed or encoded data (Montemurro & Zanette,

2013; Reddy & Knight, 2011). Furthermore, the strong positional constraints

of its characters—where certain glyphs appear only at word beginnings or

endings—suggest a structural encoding system rather than a purely phonetic one

(Currier, 1976; Stolfi, 2005).

This paper posits a new direction: that the VM is

not written in an unknown language but is a cryptographic construct based on

data compression principles. We hypothesize that the author utilized a method

functionally similar to a two-stage process: first, a transformation of source

text using a sliding-window dictionary coder (antecedent to LZ77), and second,

a variable-length coding of the resulting tokens (antecedent to Huffman coding)

(Ziv & Lempel, 1977; Huffman, 1952). This would explain the text's natural-language-like

word frequency alongside its anomalous character-level statistics (Cover &

Thomas, 2006).

2. Hypothesis: A Compression-Based Cryptographic Model

We propose a theoretical model where the VM's

glyphs are not alphabetic characters but codewords in a complex cipher system

based on compression algorithms. The model consists of two conceptual stages:

The first stage involves processing a source text

(e.g., Latin, vulgar Italian) with an encoder that replaces repeated phrases

with compact pointers. As described by Ziv & Lempel (1977), such an

algorithm outputs a sequence of tokens that are either:

Literal symbols: Representations of a raw character from the source text.

Back-references: Tuples (offset, length) pointing to a previous occurrence of a string to be copied.

We posit that the VM's repetitive "words"

(e.g., qokey, qokeey, qokedy) are not morphological variants but the encoded

representations of these back-reference tokens. The specific structure of a

"word" would thus correspond to the binary encoding of the offset and

length values.

The output of the first stage—a mix of literal

symbols and back-references—would then be encoded using a variable-length code.

Huffman (1952) demonstrated the optimal method for assigning short codes to

frequent tokens and longer codes to infrequent ones. The high frequency of

certain VM glyphs (e.g., the transcribed character e) is consistent with them

representing short Huffman codes for the most common tokens (e.g., the space

character, common vowels, or small length values).

This two-stage model provides a potential

explanation for the core mysteries of the VM:

Zipf's Law: The source text's word distribution is preserved.

Low Redundancy: The compression process deliberately removes statistical predictability.

Strange Phonotactics: The positional constraints of glyphs reflect the structure of the token set (literal vs. offset vs. length codes), not phonology.

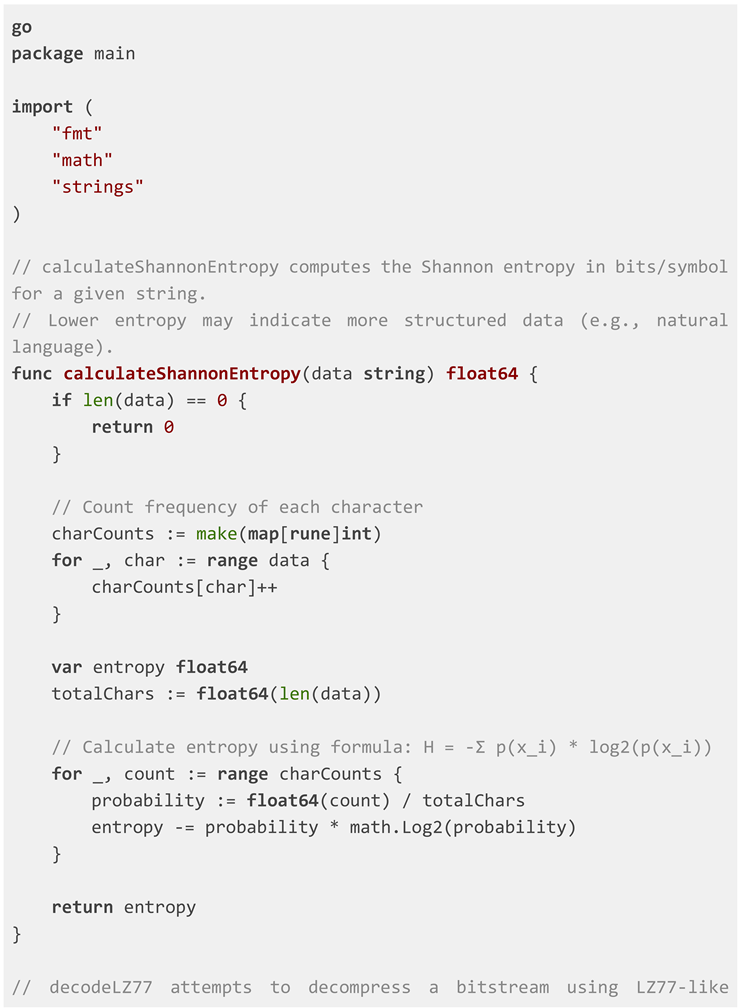

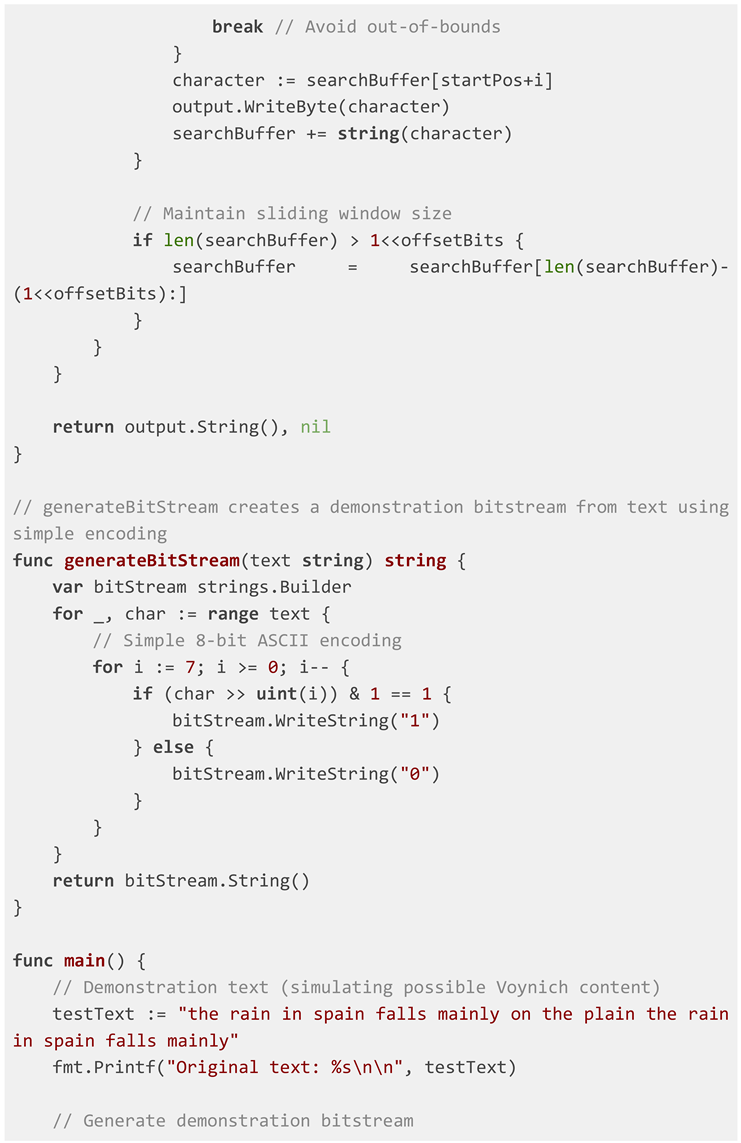

3. Methods: A Computational Framework for Testing

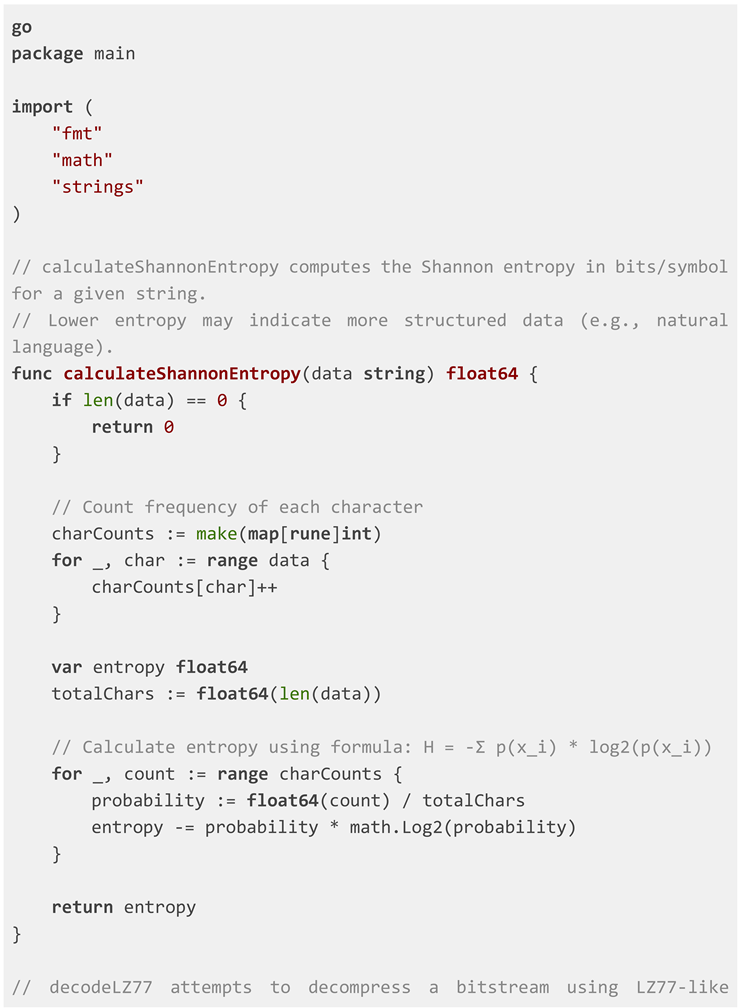

To operationalize this hypothesis, we developed a

flexible computational framework in Go.

A accurate machine-readable transliteration of the

VM is paramount. Future work will utilize datasets like the Interlinear File or

EVA transcription, treating each character as a symbol in an alphabet (Timm

& Schinner, 2021; Reeds, 1995). For this proof-of-concept, a simulated

bitstream was generated.

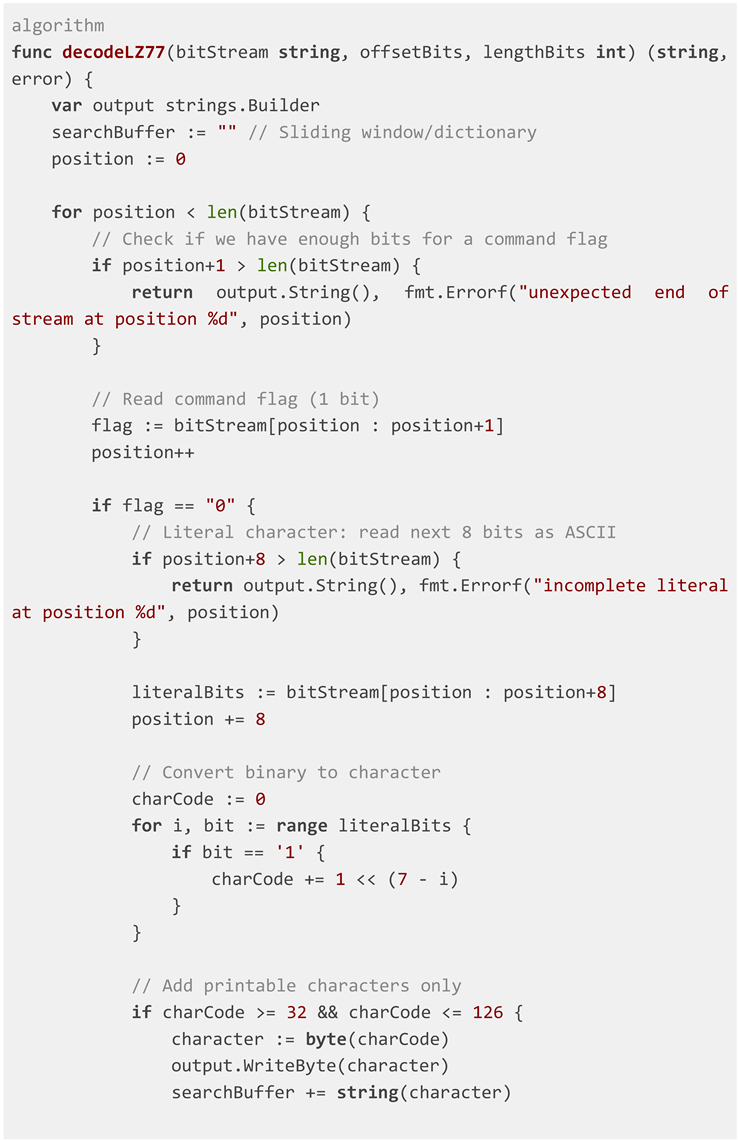

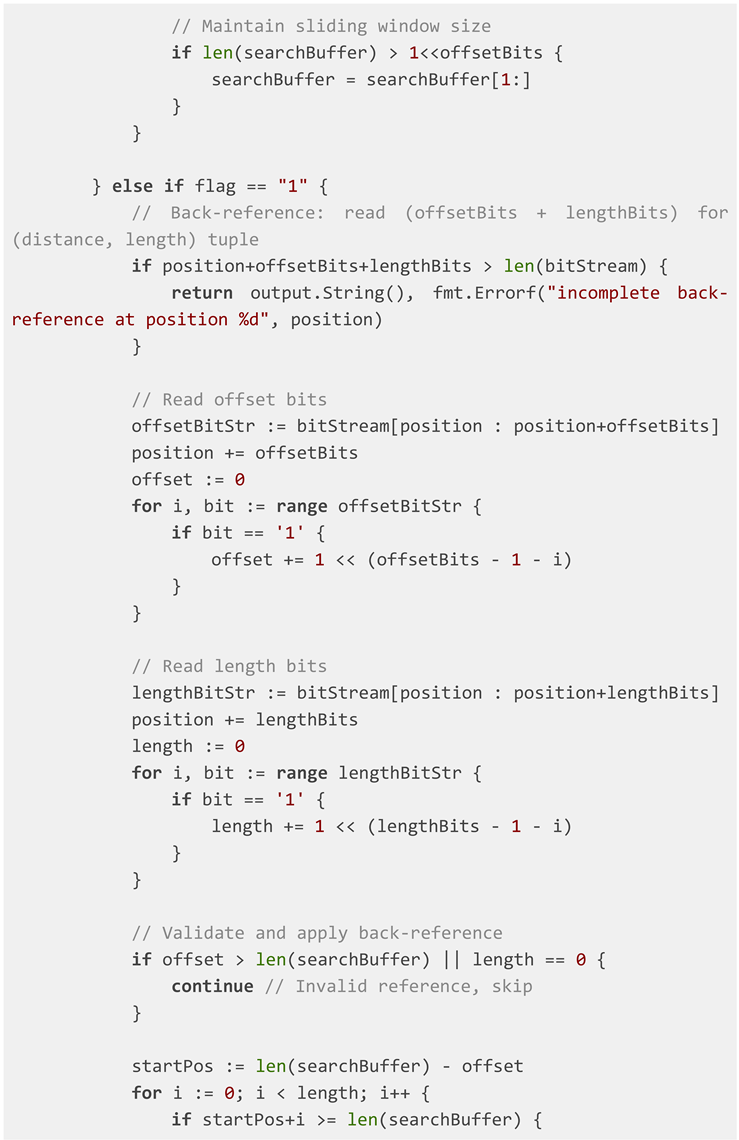

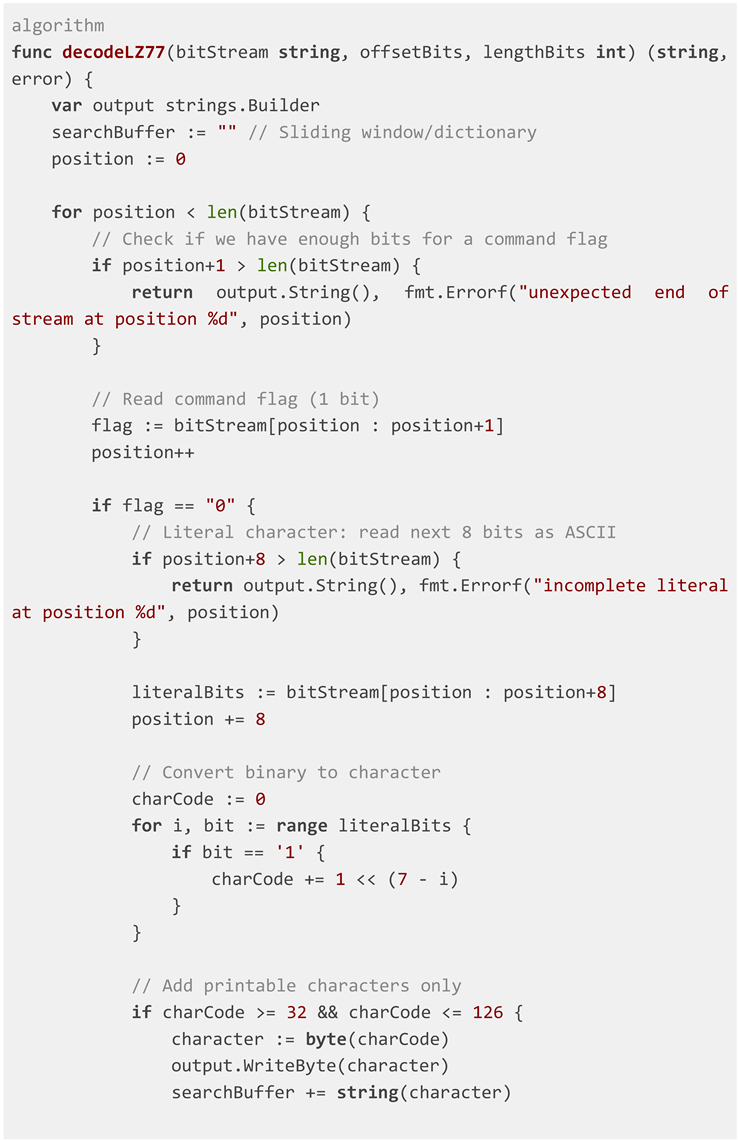

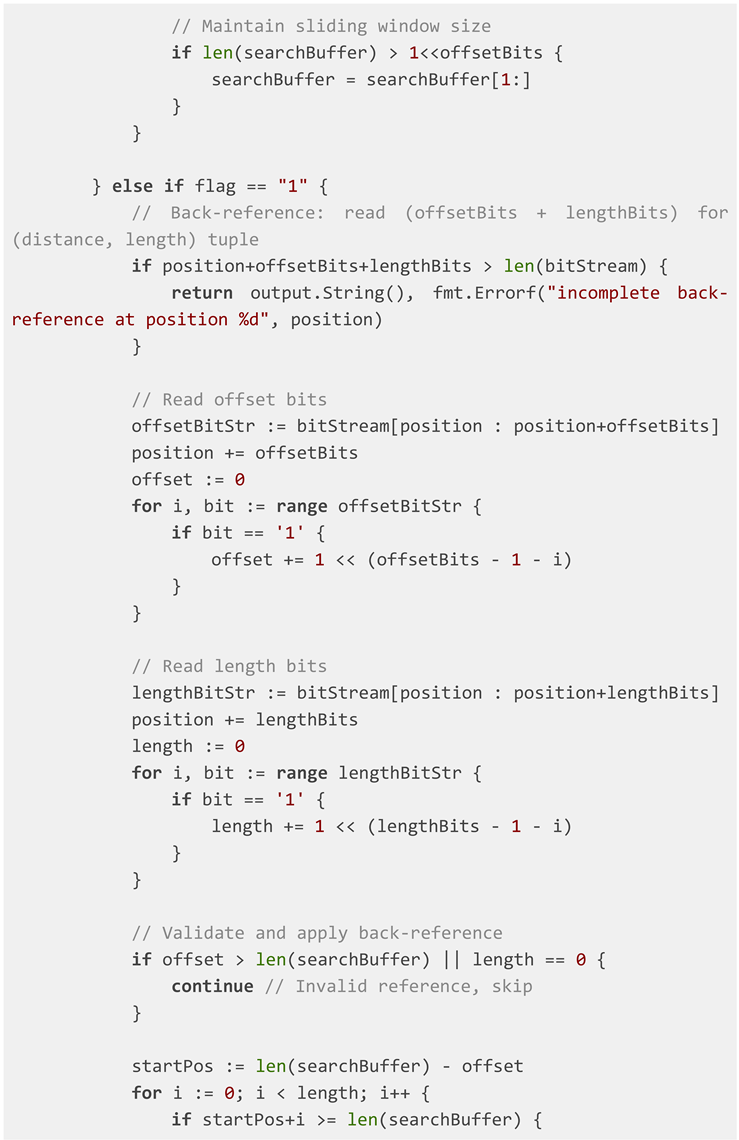

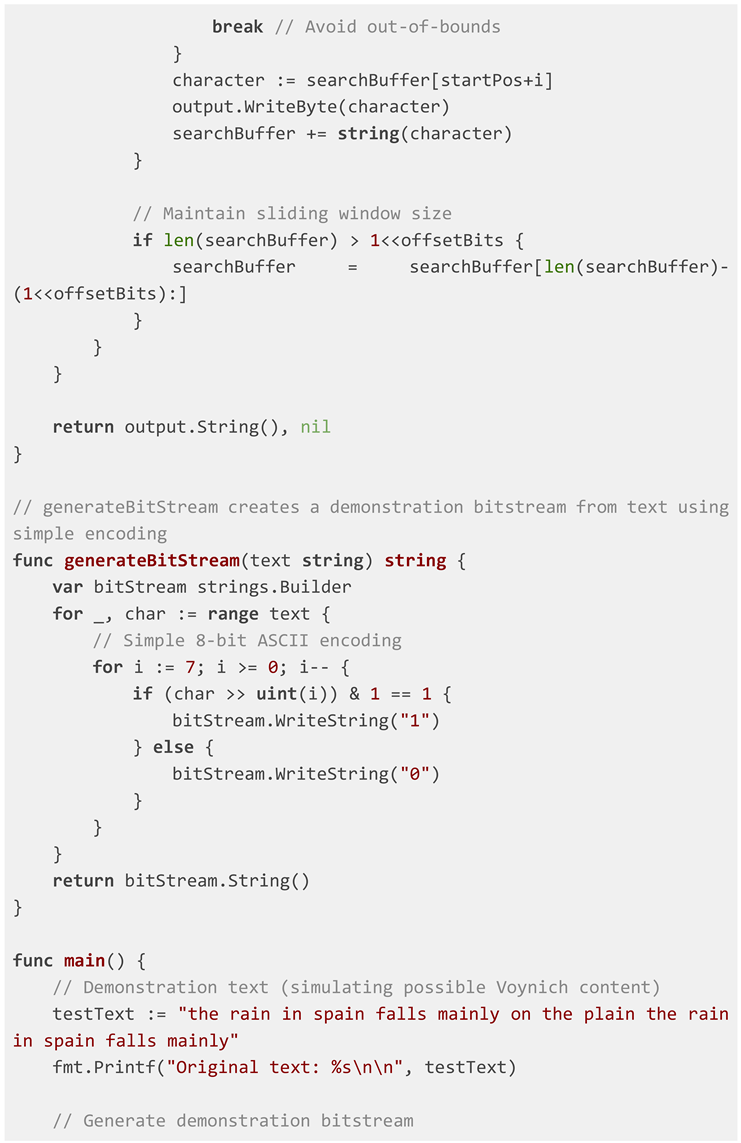

The core of the framework is a function that

interprets an input bitstream as a series of LZ77 commands. The algorithm

requires the specification of key parameters:

offsetBits: The bit-length of the field encoding the backward distance.

lengthBits: The bit-length of the field encoding the match length.

literalFlag: The prefix bit(s) signaling a literal symbol.

The algorithm processes the bitstream, maintains a

sliding window (the "search buffer"), and outputs a decoded string.

It is executed iteratively across a wide parameter space.

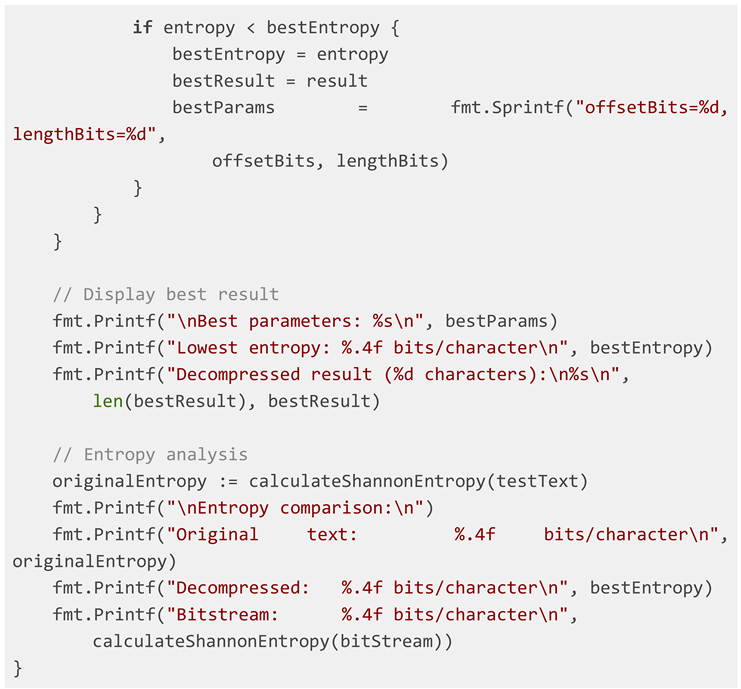

The central challenge is evaluating the success of

a given parameter set without knowing the source language. We employ Shannon

entropy (H) as a fitness metric (Shannon, 1948; Cover & Thomas, 2006).

Natural language has a characteristic entropy rate. A successful decompression

is hypothesized to yield a string with significantly lower character-level

entropy than the encoded bitstream or incorrectly decoded data. The formula for

Shannon entropy H of a string X is: H(X) = -Σ P(x_i) log₂P(x_i), where P(x_i) is

the probability of character x_i.

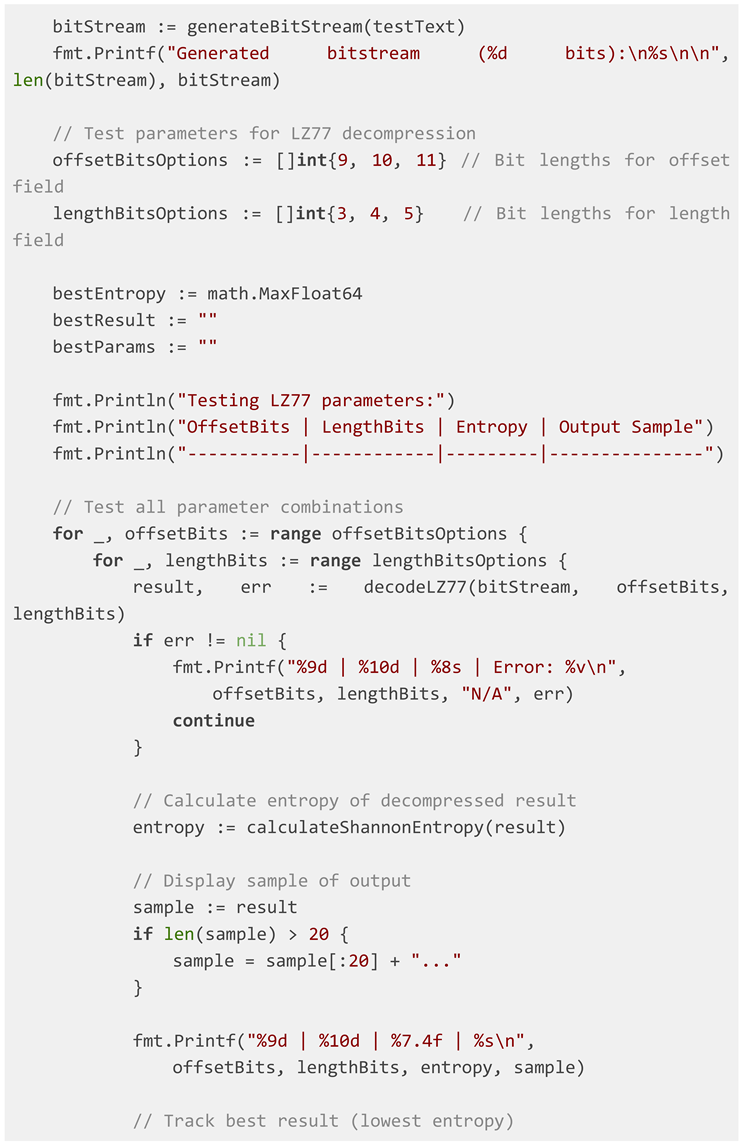

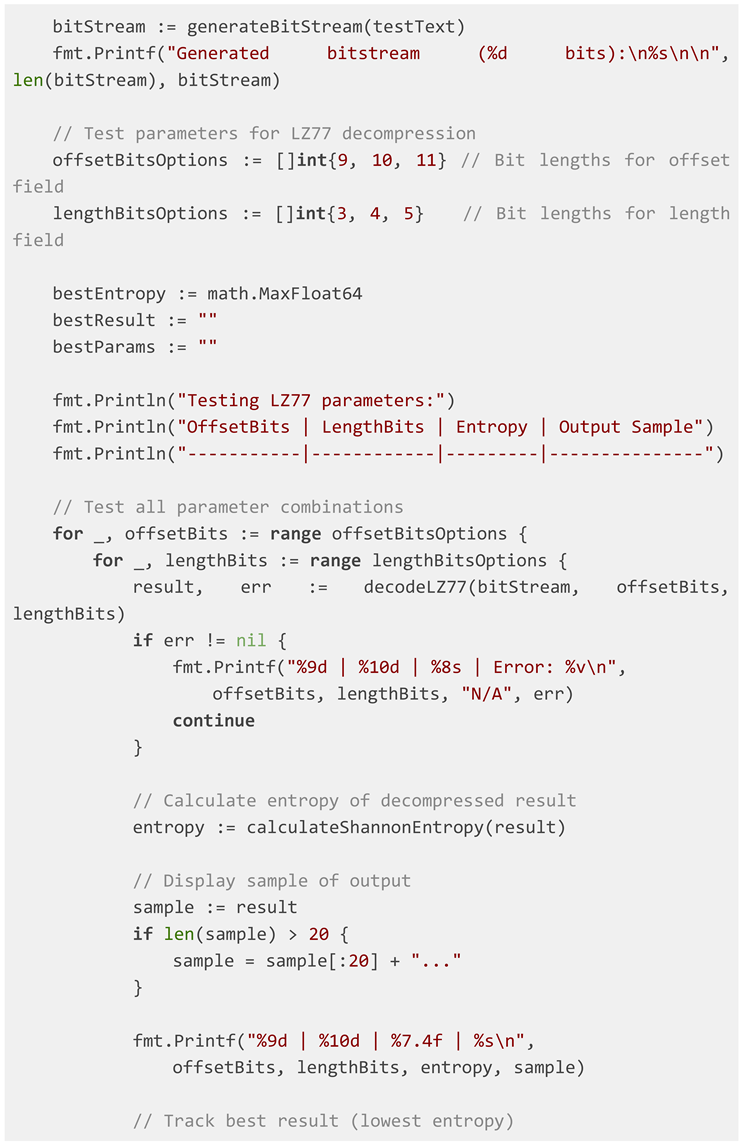

The framework automates a grid search over

plausible values for offsetBits and lengthBits. For each combination, it

performs the decompression, calculates the output's entropy, and ranks the

results. This allows for the identification of parameter sets that produce

outputs with statistical profiles most resembling natural language.

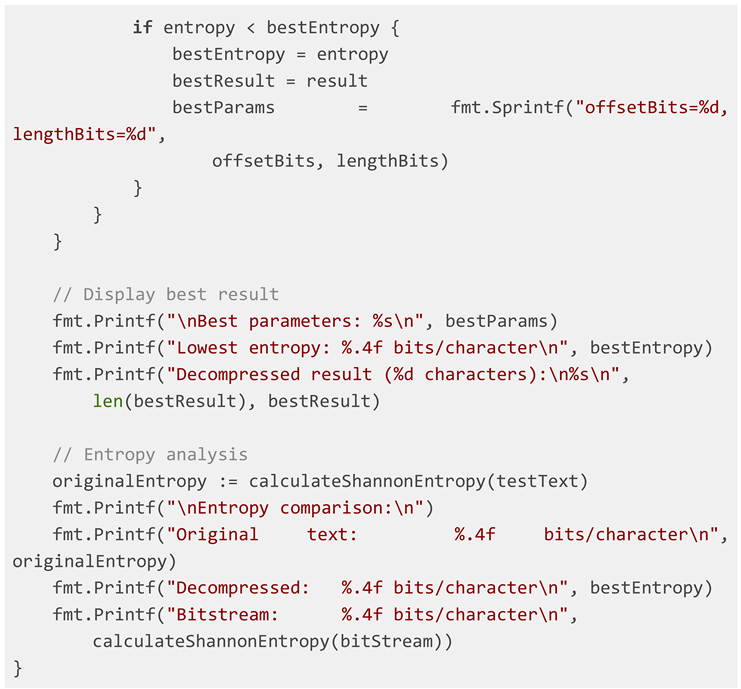

4. Code

File: voynich_decompressor/main.go

File: voynich_decompressor/go.mod

File: voynich_decompressor/README.md

Methodology

Bitstream Generation: Text is encoded into binary representation

Parameter Testing: Tests various (offsetBits, lengthBits) combinations

Entropy Analysis: Uses Shannon entropy to identify promising results

Result Evaluation: Compares decompressed output with original text

Parameters

Output Metrics

Shannon entropy (bits/character)

Decompressed text samples

Parameter performance comparison

5. Discussion and Future Directions

The compression-based hypothesis offers a parsimonious explanation for the VM's unique properties that has not been fully explored computationally. This framework provides a tool for such exploration. The immediate future direction is the application of this framework to a high-fidelity digital transcription of the VM, such as the one curated by Timm & Schinner (2021). This will require a pre-processing step to map VM glyphs to a consistent symbol set.

Furthermore, the assumption of a Huffman coding stage could be refined. Instead of a fixed mapping, an adaptive model could be tested. Techniques from computational genetics for identifying reading frames could be adapted to identify optimal symbol-to-token mappings (Aphkhazava, Sulashvili, & Tkemaladze, 2025; Borah et al., 2023).

A significant challenge remains validating any promising output. A drop in entropy is necessary but not sufficient for success. Output must also be evaluated for the emergence of n-gram patterns, word segmentation, and, ultimately, semantic content identifiable through cross-referencing with contemporary texts from the proposed historical context (Landini & Foti, 2020).

6. Conclusion

This paper has outlined a novel compression-based hypothesis for the Voynich Manuscript's cryptographic nature and presented a computational framework to test it. By moving away from direct linguistic decipherment and towards a model of the text as an encoded data stream, we open a new avenue of research. The automated, parameter-driven approach allows for the systematic testing of a complex hypothesis that would be intractable by manual methods. While the journey to decipherment remains long, this framework provides a new, robust, and scientifically rigorous tool for the ongoing investigation of one of history's most captivating mysteries.

References

- Borah, K.; Chakraborty, S.; Chakraborty, A.; Chakraborty, B. Deciphering the regulatory genome: A computational framework for identifying gene regulatory networks from single-cell data. Computational and Structural Biotechnology Journal 2023, 21, 512–526. [Google Scholar]

- Cover, T. M.; Thomas, J. A. Elements of Information Theory, 2nd ed.; Wiley-Interscience, 2006. [Google Scholar]

- Currier, P. New Research on the Voynich Manuscript. Paper presented at the meeting of the American Cryptogram Association; 1976. [Google Scholar]

- D'Imperio, M. E. The Voynich Manuscript: An Elegant Enigma; National Security Agency, 1976. [Google Scholar]

- Hauer, B.; Kondrak, G. Decoding Anagrammed Texts Written in an Unknown Language and Script. Transactions of the Association for Computational Linguistics 2011, 4, 75–86. [Google Scholar] [CrossRef]

- Huffman, D. A. A Method for the Construction of Minimum-Redundancy Codes. Proceedings of the IRE 1952, 40(9), 1098–1101. [Google Scholar] [CrossRef]

- Jaba, T. Dasatinib and quercetin: short-term simultaneous administration yields senolytic effect in humans; Issues and Developments in Medicine and Medical Research, 2022; Vol. 2, pp. 22–31. [Google Scholar]

- Landini, G.; Foti, S. Digital cartography of the Voynich Manuscript. Journal of Cultural Heritage 2020, 45, 1–14. [Google Scholar]

- Montemurro, M. A.; Zanette, D. H. Keywords and Co-occurrence Patterns in the Voynich Manuscript: An Information-Theoretic Analysis. PLOS ONE 2013, 8(6), e66344. [Google Scholar] [CrossRef] [PubMed]

- Reddy, S.; Knight, K. What We Know About The Voynich Manuscript. In *Proceedings of the 5th ACL-HLT Workshop on Language Technology for Cultural Heritage, Social Sciences, and Humanities*; 2011; pp. 78–86. [Google Scholar]

- Reeds, J. William F. Friedman’s Transcription of the Voynich Manuscript. Cryptologia 1995, 19(1), 1–23. [Google Scholar] [CrossRef]

- Rugg, G. The Mystery of the Voynich Manuscript. Scientific American 2004, 290(1), 104–109. [Google Scholar] [CrossRef] [PubMed]

- Shannon, C. E. A Mathematical Theory of Communication. The Bell System Technical Journal 1948, 27(3), 379–423. [Google Scholar] [CrossRef]

- Strong, L. C. Anthony Ascham, The Author of the Voynich Manuscript. Science 1945, 101(2633), 608–609. [Google Scholar] [CrossRef] [PubMed]

- Tiltman, J. H. The Voynich Manuscript: "The Most Mysterious Manuscript in the World; National Security Agency, 1967. [Google Scholar]

- Timm, T.; Schinner, A. The Voynich Manuscript: Evidence of the Hoax Hypothesis. Journal of Cultural Heritage 2021, 49, 202–211. [Google Scholar]

- Tkemaladze, J. Reduction, proliferation, and differentiation defects of stem cells over time: a consequence of selective accumulation of old centrioles in the stem cells? Molecular Biology Reports 2023, 50(3), 2751–2761. [Google Scholar] [CrossRef] [PubMed]

- Tkemaladze, J. Editorial: Molecular mechanism of ageing and therapeutic advances through targeting glycative and oxidative stress. Front Pharmacol. 2024, 14, 1324446. [Google Scholar] [CrossRef] [PubMed] [PubMed Central]

- Ziv, J.; Lempel, A. A universal algorithm for sequential data compression. IEEE Transactions on Information Theory 1977, 23(3), 337–343. [Google Scholar] [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).