Submitted:

15 August 2025

Posted:

18 August 2025


Abstract

Keywords:

1. Introduction

- Continuous Iteration and Feedback Loops: Modern energy forecasting Machine Learning (ML) pipelines often run in a continuous development environment, such as development operations (DevOps) [13] or MLOps, where models are retrained and redeployed regularly in response to new data, seasonal changes, or performance triggers [14]. While CRISP-DM permits backtracking between phases, it does not explicitly formalize decision points or gating criteria at each phase beyond a general waterfall-style progression [15]. This can make it unclear when or how to iterate or rollback if issues arise.

- Domain Expert Involvement: Ensuring that analytical findings make sense in context is critical, especially in high-stakes or domain-specific applications [16]. CRISP-DM emphasizes business understanding at the start and evaluation before deployment, which implicitly calls for domain knowledge input. In practice, however, many ML pipelines implemented in pure technical terms neglect sustained involvement of energy domain experts after the initial requirements stage [11]. The separation of domain experts from data scientists can lead to models that technically optimize a metric but fail to align with real-world needs and constraints [17].

- Documentation, Traceability, and Compliance: With growing concerns about Artificial Intelligence (AI) accountability and regulations, such as the General Data Protection Regulation (GDPR) [18] and the recently adopted European Union Artificial Intelligence Act (EU AI Act) [19], there is a need for rigorous documentation of data sources, modeling decisions, and model outcomes at each step of the pipeline. Traceability, that is, maintaining a complete provenance of data, processes, and artifacts, is now seen as a key requirement for trustworthy AI [20].

- Modularity and Reusability: The rise of pipeline orchestration tools (Kubeflow [21], Apache Airflow [22], TensorFlow Extended (TFX) [23], etc.) reflects a best practice of designing ML workflows as modular components arranged in a Directed Acyclic Graph (DAG) [24]. Each component is self-contained (for example, data ingestion, validation, training, evaluation, and deployment as separate steps) and can be versioned or reused. Classical process models were conceptually modular but did not consider the technical implementation aspect. Integrating the conceptual framework with modern pipeline architecture can enhance the clarity and maintainability of data science projects.

The proposed framework addresses these gaps by combining the strengths of established methodologies with new enhancements for iterative control, expert validation, and traceability. In essence, we extend the CRISP-DM philosophy with formal decision gates at each phase, each of which requires validation by multiple stakeholders, all underpinned by thorough documentation practices. The framework is enhanced through selective automation and continuous improvement. Technical validation steps, such as quality checks, artifact versioning, and forecast anomaly detection, can be partially or fully automated, while manual governance gates ensure expert oversight and compliance. The framework remains modular and tool-agnostic, aligning with contemporary MLOps principles so that it can be implemented in practice using pipeline orchestration platforms.

- Formalized Feedback Loops: Introduction of explicit decision gates at each phase, enabling iteration, rollback, or re-processing when predefined success criteria are not met. This makes the iterative nature of data science explicit rather than implicit [25], and supports partial automation of loopback triggers and alerts.

- Systematic Energy Domain Expert Integration: Incorporation of energy domain expertise throughout the pipeline, not just at the beginning and end, to ensure results are plausible and actionable in the energy system context. This goes beyond typical consultation, embedding energy domain feedback formally into the pipeline, particularly for validating data patterns, feature engineering decisions, and model behavior against energy system knowledge.

- Enhanced Documentation & Traceability: Each phase produces traceable outputs (datasets, analysis reports, model artifacts, evaluation reports, etc.) that are versioned and documented. This provides end-to-end provenance for the project, responding to calls for AI traceability as a foundation of trust in critical energy infrastructure applications [26].

- Modularity and Phase Independence: The process is structured into self-contained phases with well-defined inputs and outputs, making it easier to integrate with pipeline orchestration tools and to swap or repeat components as needed. This modular design mirrors the implementation of workflows in platforms like Kubeflow Pipelines and Apache Airflow, which treat each step as a reusable component in a DAG [27]. It facilitates collaboration between data scientists, engineers, and domain experts, and supports consistent, repeatable runs of the pipeline.
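The phase independence described above can be illustrated with a minimal Python sketch that treats the phases as nodes of a DAG. The phase identifiers, the dependency map, and the helper functions `execution_order` and `downstream_of` are hypothetical names chosen for illustration; they are not taken from the paper or from any orchestration platform's API.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each phase lists the phases whose outputs it consumes.
PHASE_DEPENDENCIES = {
    "project_foundation": set(),
    "data_foundation": {"project_foundation"},
    "data_preprocessing": {"data_foundation"},
    "feature_engineering": {"data_preprocessing"},
    "model_development": {"feature_engineering"},
    "deployment": {"model_development"},
    "monitoring": {"deployment"},
}


def execution_order(dependencies):
    """Return one valid run order; graphlib raises CycleError if the graph is not a DAG."""
    return list(TopologicalSorter(dependencies).static_order())


def downstream_of(phase, dependencies):
    """Return every phase that consumes, directly or indirectly, the output of `phase`."""
    affected = set()
    frontier = [phase]
    while frontier:
        current = frontier.pop()
        for name, deps in dependencies.items():
            if current in deps and name not in affected:
                affected.add(name)
                frontier.append(name)
    return affected


order = execution_order(PHASE_DEPENDENCIES)
rerun = downstream_of("feature_engineering", PHASE_DEPENDENCIES)
```

Under these assumptions, `downstream_of` indicates which later phases would need to be repeated when an earlier phase is re-run, which is one way to reason about swapping or repeating components.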

2. Background and Related Work

2.1. Existing Data Science Process Models

- Framework for Machine Learning with Quality Assurance (QA): CRISP-ML(Q) extends CRISP-DM by adding an explicit Monitoring and Maintenance phase at the end of the lifecycle to handle model degradation in changing environments [11]. It also merges the Business Understanding and Data Understanding phases into one, recognizing their interdependence in practice [11]. Most notably, CRISP-ML(Q) introduces a comprehensive QA methodology across all phases: for every phase, specific requirements, constraints, and risk metrics are defined, and if quality risks are identified, additional tasks (e.g., data augmentation, bias checks) are performed to mitigate them [29]. This ensures that errors or issues in each step are caught as early as possible, improving the overall reliability of the project [11]. This quality-driven approach is informed by established risk management standards such as International Organization for Standardization (ISO) 9001 (Quality Management Systems: Requirements) [30] and requires that each stage produce verifiable outputs to demonstrate compliance with quality criteria. Although CRISP-ML(Q) improves overall model governance and reliability, it is designed to be application-neutral and does not specifically address challenges unique to energy forecasting, such as handling temporal structures, integrating weather-related inputs, or capturing domain-specific operational constraints.

- Frameworks for Specific Domains: In regulated or specialized industries, variants of CRISP-DM have emerged. For instance, Financial Industry Business Data Model (FIN-DM) [31] augments CRISP-DM by adding a dedicated Compliance phase that focuses on regulatory requirements and risk mitigation (including privacy laws like GDPR), as well as a Requirements phase and a Post-Deployment review phase [32]. This reflects the necessity of embedding governance and legal compliance steps explicitly into the data science lifecycle in domains where oversight is critical.

- Industrial Data Analysis Improvement Cycle (IDAIC) [33] and Domain-Specific CRISP-DM Variants: Other researchers have modified CRISP-DM to better involve domain experts or address maintenance. For example, Ahern et al. (2022) propose a process to enable domain experts to become citizen data scientists in industrial settings, by renaming and adjusting CRISP-DM phases and adding new ones like “Domain Exploration” and “Operation Assessment” for equipment maintenance scenarios [32]. These changes underscore the importance of domain knowledge and continuous operation in certain contexts.

2.2. Limitations of Current Approaches

- Implicit vs. Explicit Iteration: CRISP-DM and its variants generally allow iteration (e.g., CRISP-DM’s arrows looping back, CRISP-ML(Q)’s risk-based checks). However, the decision to loop back is often left implicit or to the judgment of the team, without a formal structure. For instance, CRISP-DM’s Evaluation phase suggests checking if the model meets business objectives; if not, one might return to an earlier stage [11]. But this is a single checkpoint near the end. The proposed framework introduces multiple gated checkpoints with clear criteria (e.g., data quality metrics, model performance thresholds, validation acceptance by domain experts) that must be met to proceed forward, which increases process automation opportunities while also making the workflow logic explicit and auditable.

- Domain Knowledge Integration: Many pipelines emphasize technical validation (metrics, statistical tests) but underemphasize semantic validation. As noted by Studer et al. in CRISP-ML(Q), separating domain experts from data scientists can risk producing solutions that miss the mark [11]. Some domain-specific processes have tried to bridge this, as in Ahern et al.’s work and other “CRISP-DM for X” proposals [33], but a general solution is not common. The proposed framework elevates domain expert involvement to the formal phases and suggests including domain experts not only in defining requirements but also in reviewing intermediate results and final models. This provides a structured feedback loop where expert insight can trigger model adjustments.

- Comprehensive Traceability: Reproducibility and traceability are increasingly seen as essential for both scientific rigor and regulatory compliance. Tools exist to version data and models, but few conceptual frameworks explicitly require that each step’s outputs be recorded and linked to inputs. The High-Level Expert Group on AI’s guidelines for Trustworthy AI [38] enumerate traceability as a requirement, meaning one should document the provenance of training data, the development process, and all model iterations [20]. The proposed framework builds this in by design: every phase yields an artifact (or set of artifacts) that is stored with metadata. This could be implemented with existing MLOps tooling, but methodologically we ensure that no phase is “ephemeral”: even exploratory analysis and interim results should be captured in writing or code for future reference. This emphasis exceeds what CRISP-DM prescribed and aligns with emerging standards in AI governance [20].

- Modularity and Extensibility: Traditional methodologies were described in documents and weren’t directly operational. Modern pipelines are often executed in software. A good process framework today should be easily translated into pipeline implementations. CRISP-ML(Q) explicitly chose to remain tool-agnostic so it can be instantiated with various technologies [11]. The proposed framework shares that philosophy: the framework is conceptual, but each phase corresponds to tasks that could be implemented as modules in a pipeline. By designing with clear modular boundaries, we make it easier to integrate new techniques (for instance, if a new data validation library arises, it can replace the validation component without affecting other parts of the process). This modularity also aids reusability: components of the pipeline can be reused across projects, improving efficiency.
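The requirement that each phase's outputs be recorded and linked to their inputs can be sketched as a small provenance record. This is a minimal illustration under stated assumptions: the `ArtifactRecord` fields, the `record_artifact` and `lineage` helpers, the artifact names, and the use of SHA-256 content hashes are all hypothetical choices, not the paper's implementation.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(payload: bytes) -> str:
    """Content hash used as a stable identifier for an artifact version."""
    return hashlib.sha256(payload).hexdigest()


@dataclass
class ArtifactRecord:
    # Hypothetical metadata fields; a real project would define its own schema.
    name: str
    phase: str
    content_hash: str
    input_hashes: list = field(default_factory=list)


def record_artifact(name, phase, payload, inputs=()):
    """Create a record that links an artifact to the artifacts it was derived from."""
    return ArtifactRecord(
        name=name,
        phase=phase,
        content_hash=fingerprint(payload),
        input_hashes=[parent.content_hash for parent in inputs],
    )


def lineage(record, registry):
    """Walk the input links backwards and return the names of all ancestors."""
    names = []
    pending = list(record.input_hashes)
    while pending:
        parent = registry[pending.pop()]
        names.append(parent.name)
        pending.extend(parent.input_hashes)
    return names


raw = record_artifact("raw_energy_dataset", "phase_1", b"timestamp,load\n0,10.0\n")
cleaned = record_artifact("cleaned_dataset", "phase_2", b"timestamp,load\n0,10.0\n1,10.5\n", inputs=[raw])
model = record_artifact("trained_model", "phase_4", b"serialized-model-bytes", inputs=[cleaned])

registry = {r.content_hash: r for r in (raw, cleaned, model)}
ancestors = lineage(model, registry)
```

Because each record stores the hashes of its inputs, a change to an upstream dataset yields a different hash and therefore a visibly different lineage for anything derived from it.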

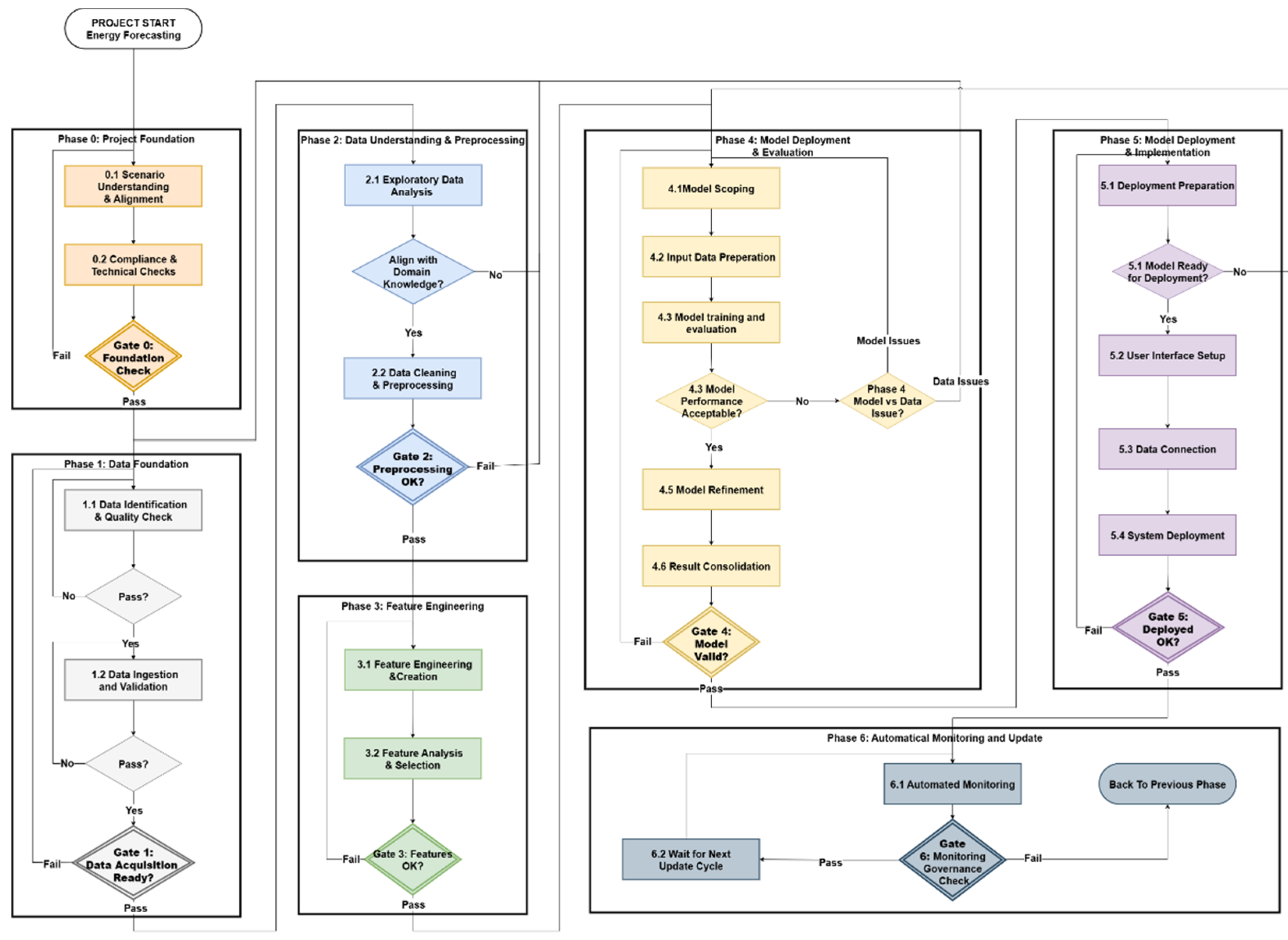

3. Proposed Energy Forecasting Pipeline Framework

- All the internal decision points pass the predefined technical requirements and criteria.

- Artifacts are documented, versioned, and traceable.

- Outputs and processes comply with technical, operational, regulatory, and domain standards.

- Risks are evaluated and either mitigated or accepted.

- The project is ready to transition to the next phase without carrying forward unresolved issues.
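The five gate criteria listed above can be expressed as an explicit, auditable check. The sketch below is illustrative only: the criterion identifiers, the `GateDecision` values, and the rule that a missing criterion counts as failed are assumptions introduced here, not definitions from the framework.

```python
from dataclasses import dataclass
from enum import Enum


class GateDecision(Enum):
    PROCEED = "proceed"
    LOOP_BACK = "loop_back"


@dataclass(frozen=True)
class GateResult:
    decision: GateDecision
    failed_criteria: tuple


# Hypothetical identifiers for the five gate criteria.
GATE_CRITERIA = (
    "technical_requirements_met",
    "artifacts_documented",
    "compliance_confirmed",
    "risks_mitigated_or_accepted",
    "no_unresolved_issues",
)


def evaluate_gate(checks: dict) -> GateResult:
    """Pass only if every criterion is explicitly True; missing entries count as failed."""
    failed = tuple(name for name in GATE_CRITERIA if not checks.get(name, False))
    decision = GateDecision.LOOP_BACK if failed else GateDecision.PROCEED
    return GateResult(decision, failed)


all_clear = evaluate_gate({name: True for name in GATE_CRITERIA})
blocked = evaluate_gate({
    "technical_requirements_met": True,
    "artifacts_documented": True,
    "compliance_confirmed": False,
    "risks_mitigated_or_accepted": True,
})
```

Returning the failed criteria alongside the decision keeps the reason for a loop-back on record, which fits the framework's emphasis on documented, traceable decisions.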

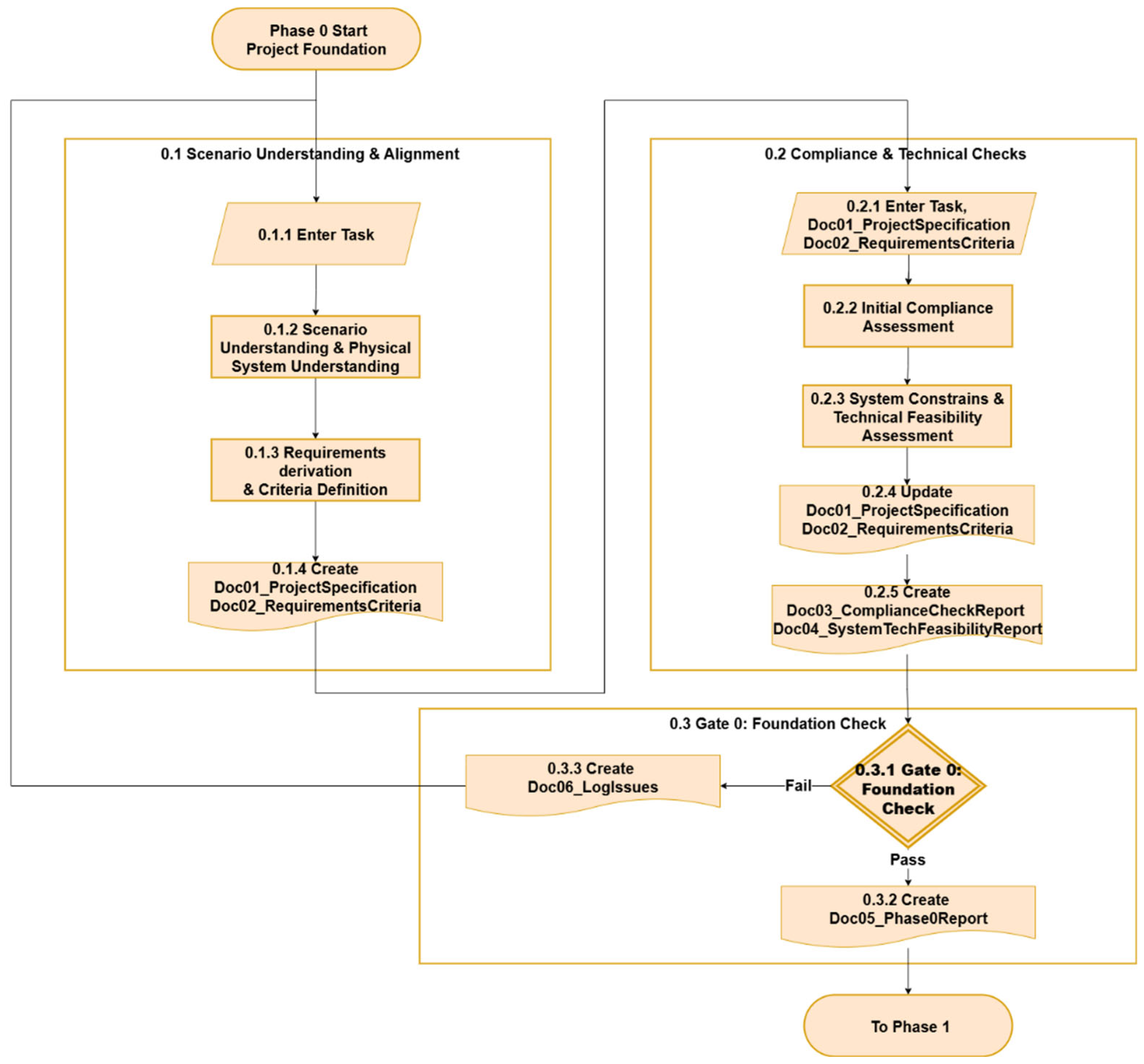

3.1. Phase 0 - Project Foundation

3.1.1. Scenario Understanding & Alignment

3.1.2. Compliance & Technical Checks

3.1.3. Gate 0 - Foundation Check

- Specifications, requirements, and success criteria are clearly defined and documented. Compliance obligations are clearly identified and documented. System and infrastructure feasibility has been confirmed.

- All output artifacts are documented.

- Outputs and processes are compliant.

- Risks are identified and accepted.

- There are no unresolved issues.

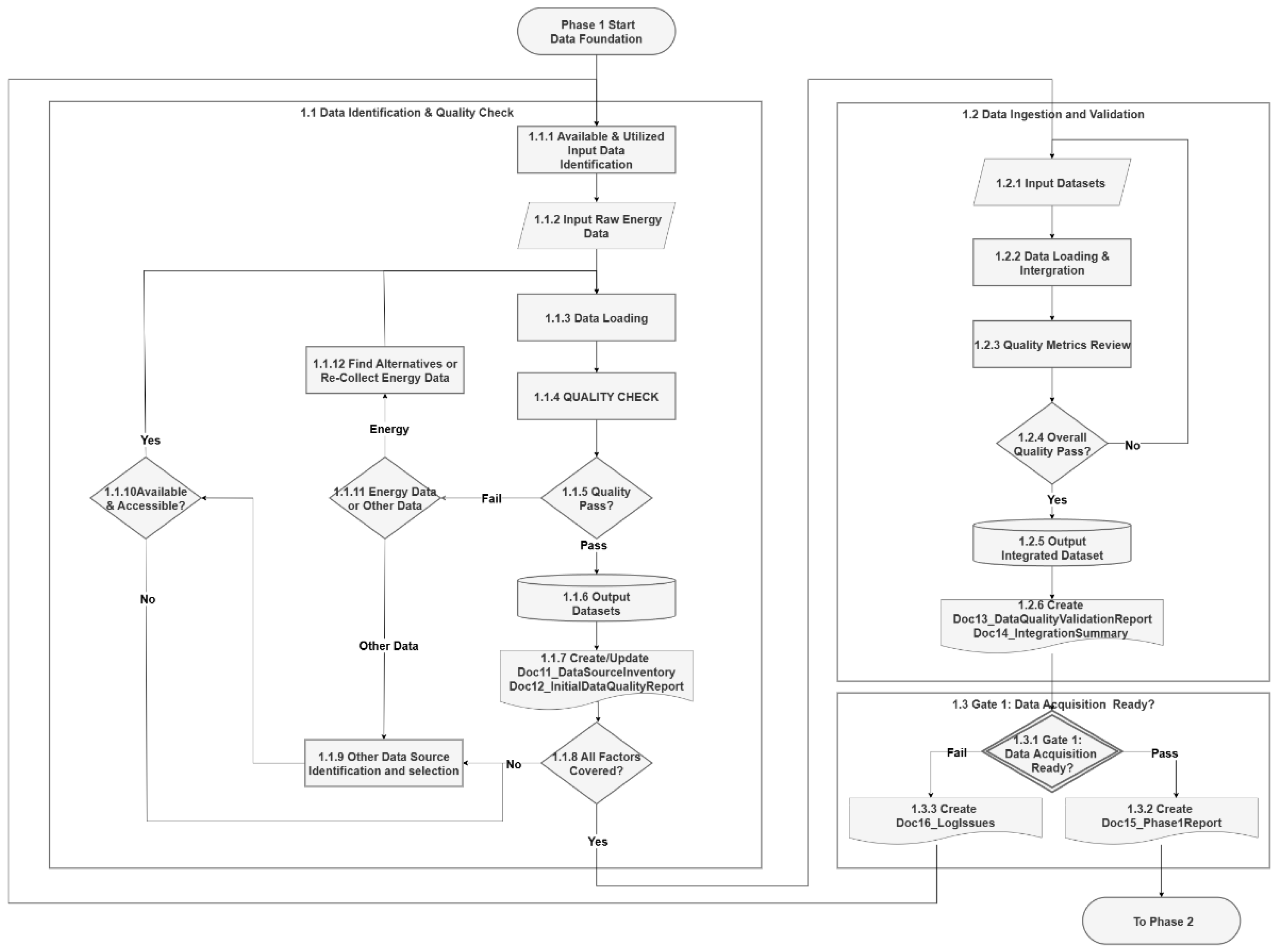

3.2. Phase 1 - Data Foundation

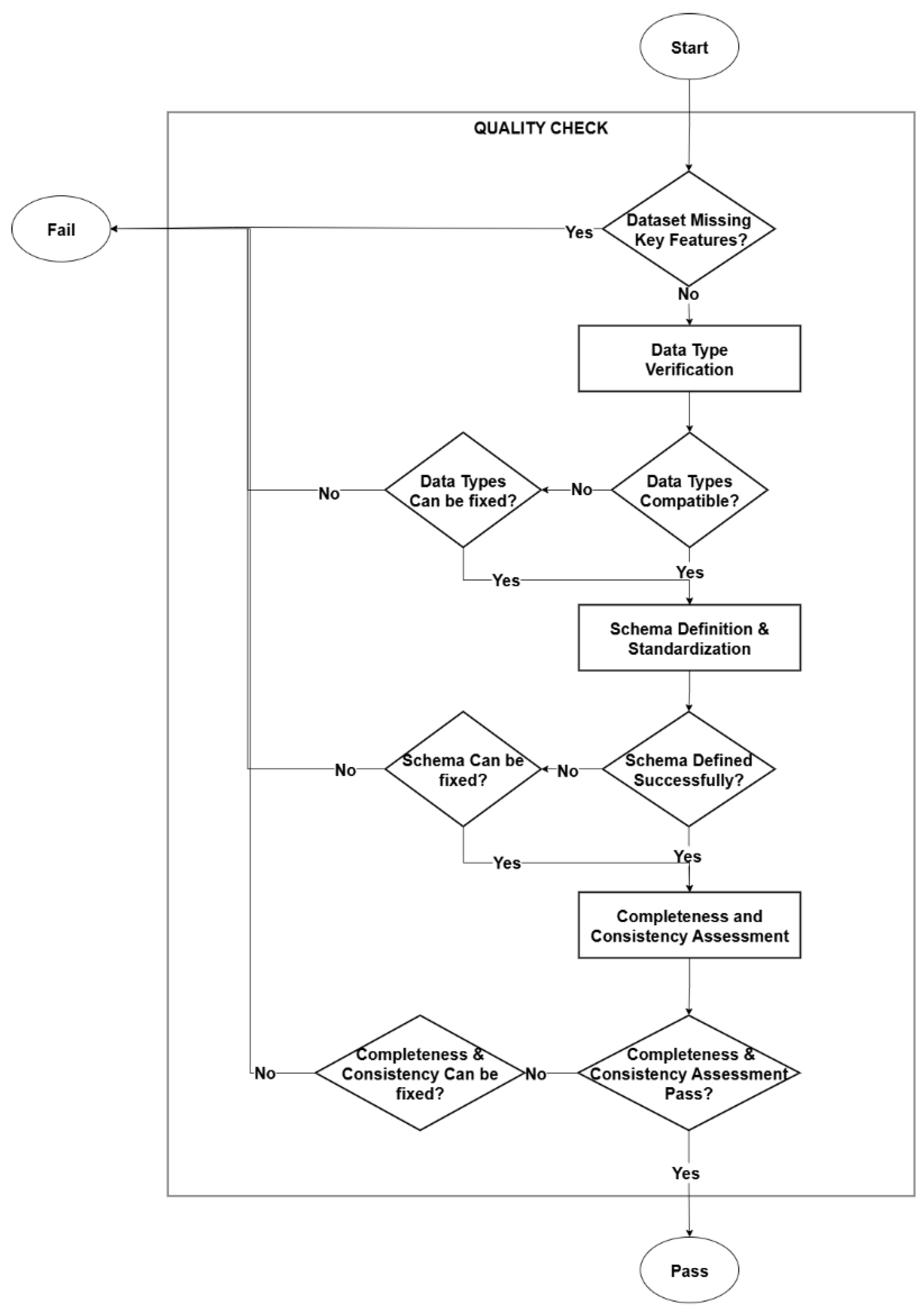

3.2.1. Data Identification & Quality Check

3.2.2. Data Ingestion and Validation

3.2.3. Gate 1 - Data Acquisition Ready

- All the necessary data have been identified and collected. Datasets have been successfully ingested and validated. Quality metrics meet established thresholds.

- All documents and datasets are correctly created.

- The process complies with data-related technical and process requirements.

- Risks are evaluated and accepted.

- There are no unresolved issues.
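As a hedged illustration of "quality metrics meet established thresholds", the sketch below computes two simple checks on a time series: completeness and timestamp continuity. The function names, the 95% completeness threshold, the zero-gap tolerance, and the sample readings are assumptions made for this example; actual thresholds would come from the project's requirements documents.

```python
from datetime import datetime, timedelta


def completeness(values):
    """Share of non-missing readings, where None marks a missing value."""
    if not values:
        return 0.0
    return sum(v is not None for v in values) / len(values)


def timestamp_gaps(timestamps, expected_step):
    """Return consecutive timestamp pairs whose spacing differs from the expected step."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a != expected_step]


def quality_report(timestamps, values, expected_step, min_completeness=0.95, max_gaps=0):
    """Compare both metrics with (hypothetical) thresholds and report pass/fail."""
    share = completeness(values)
    gaps = timestamp_gaps(timestamps, expected_step)
    return {
        "completeness": share,
        "gap_count": len(gaps),
        "passed": share >= min_completeness and len(gaps) <= max_gaps,
    }


# Illustrative hourly readings with one missing value and one missing hour (03:00).
start = datetime(2025, 1, 1)
timestamps = [start + timedelta(hours=h) for h in (0, 1, 2, 4, 5)]
values = [10.0, 11.5, None, 12.0, 11.0]
report = quality_report(timestamps, values, timedelta(hours=1))
```

In this example the series fails on both counts, so a gate using these thresholds would send the work back to data identification or ingestion rather than forward.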

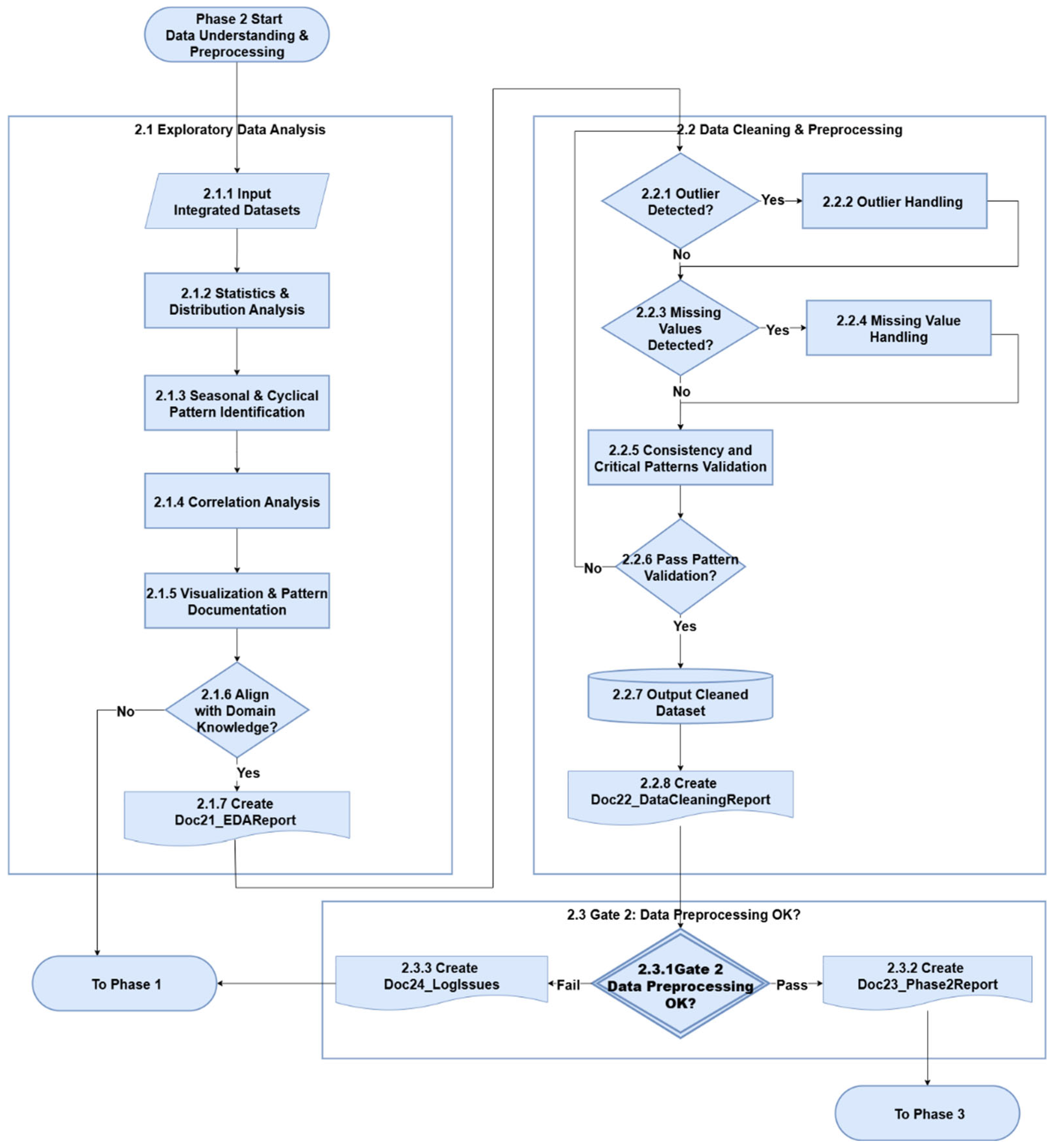

3.3. Phase 2 - Data Understanding & Preprocessing

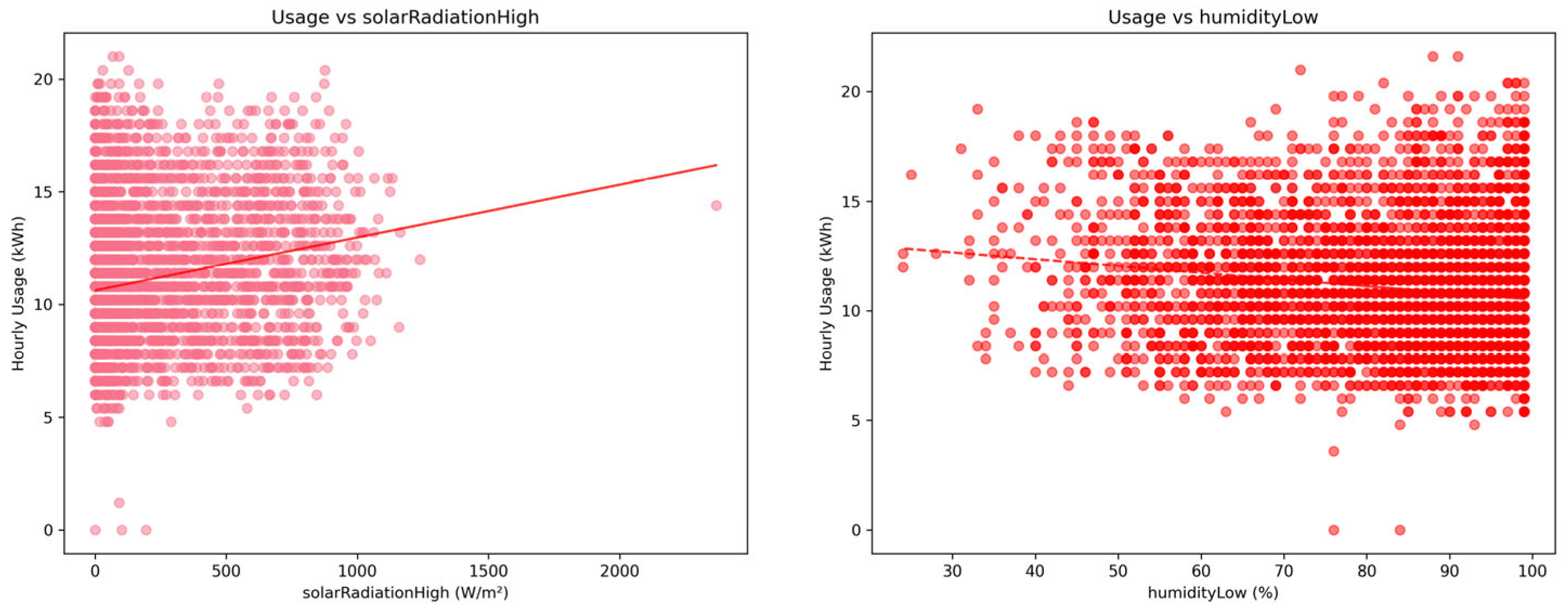

3.3.1. EDA

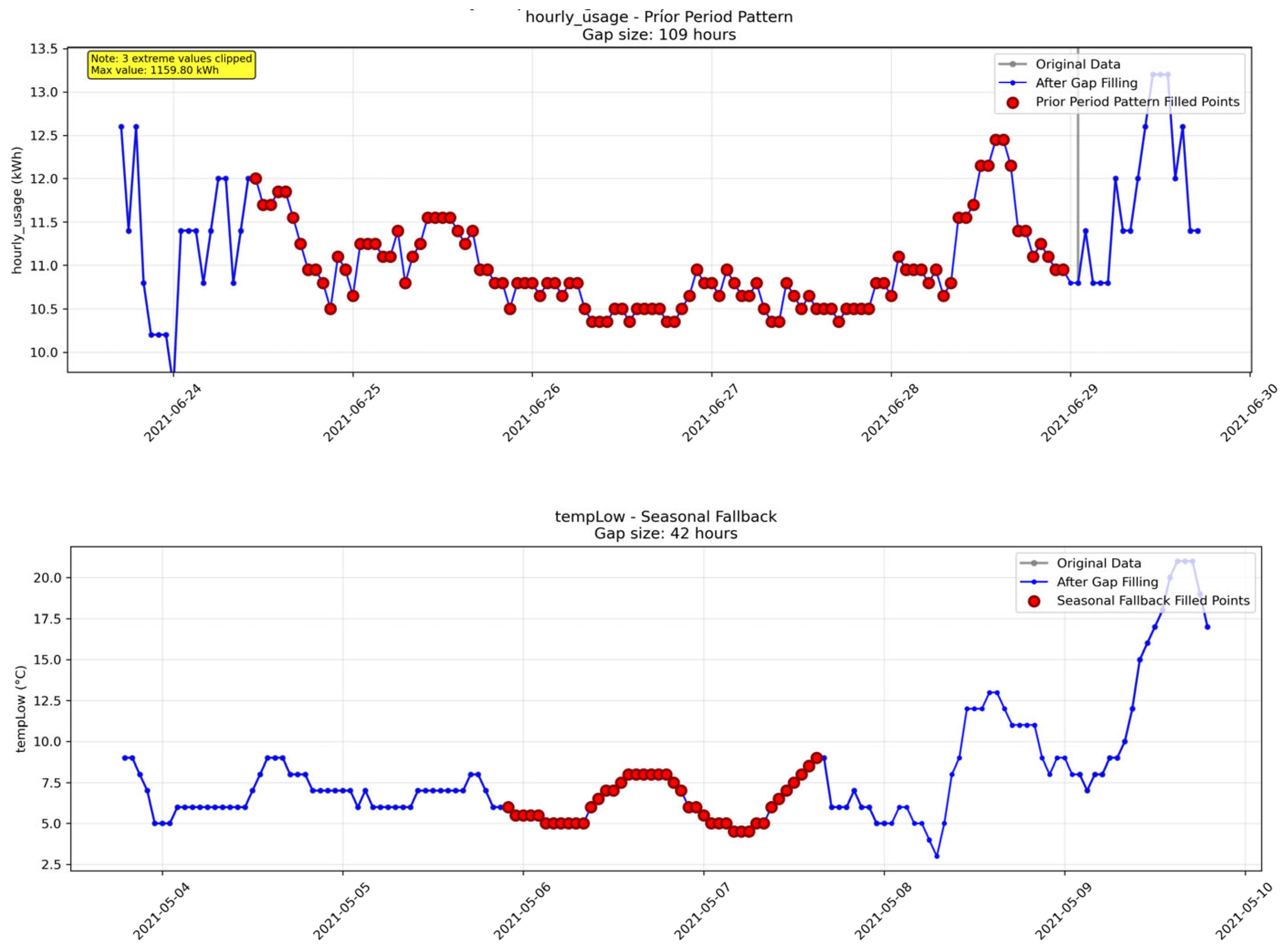

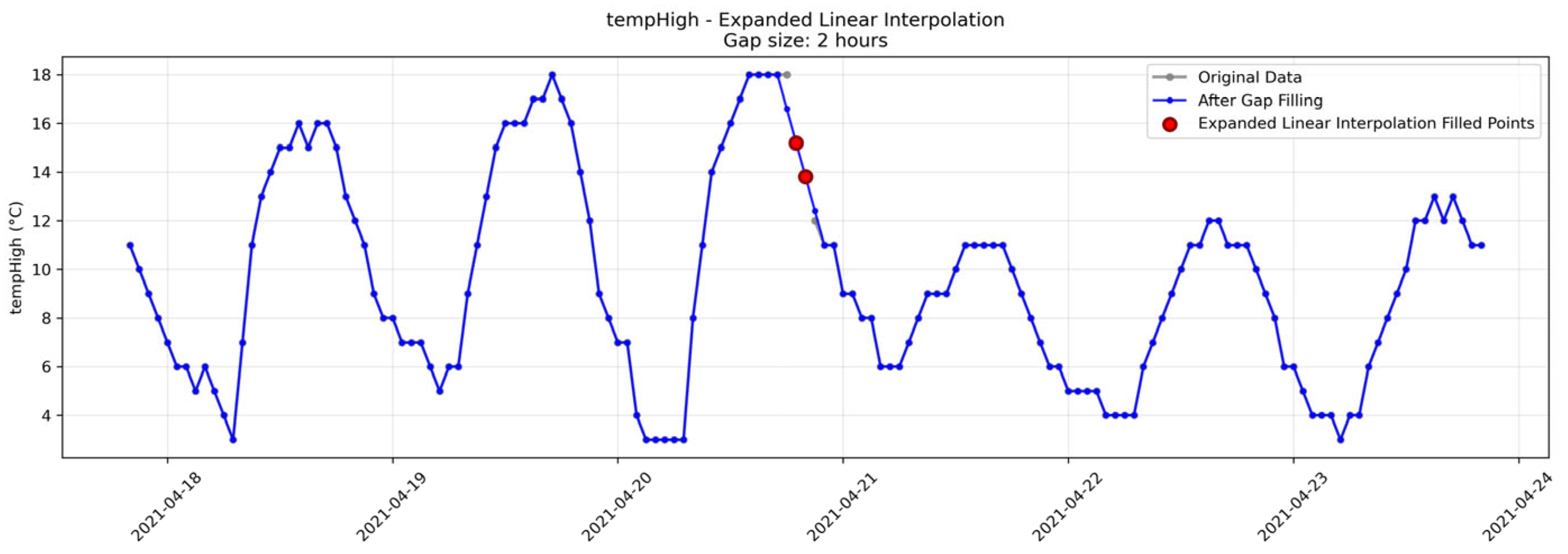

3.3.2. Data Cleaning & Preprocessing

3.3.3. Gate 2 - Data Preprocessing OK

- Exploratory insights align with domain knowledge, and all critical data anomalies have been addressed.

- Preprocessing procedures are transparently documented.

- Outputs and processes comply with technical, operational, regulatory, and domain standards.

- Risks are evaluated and either mitigated or accepted.

- The project is ready to transition to the next phase without carrying forward unresolved issues.

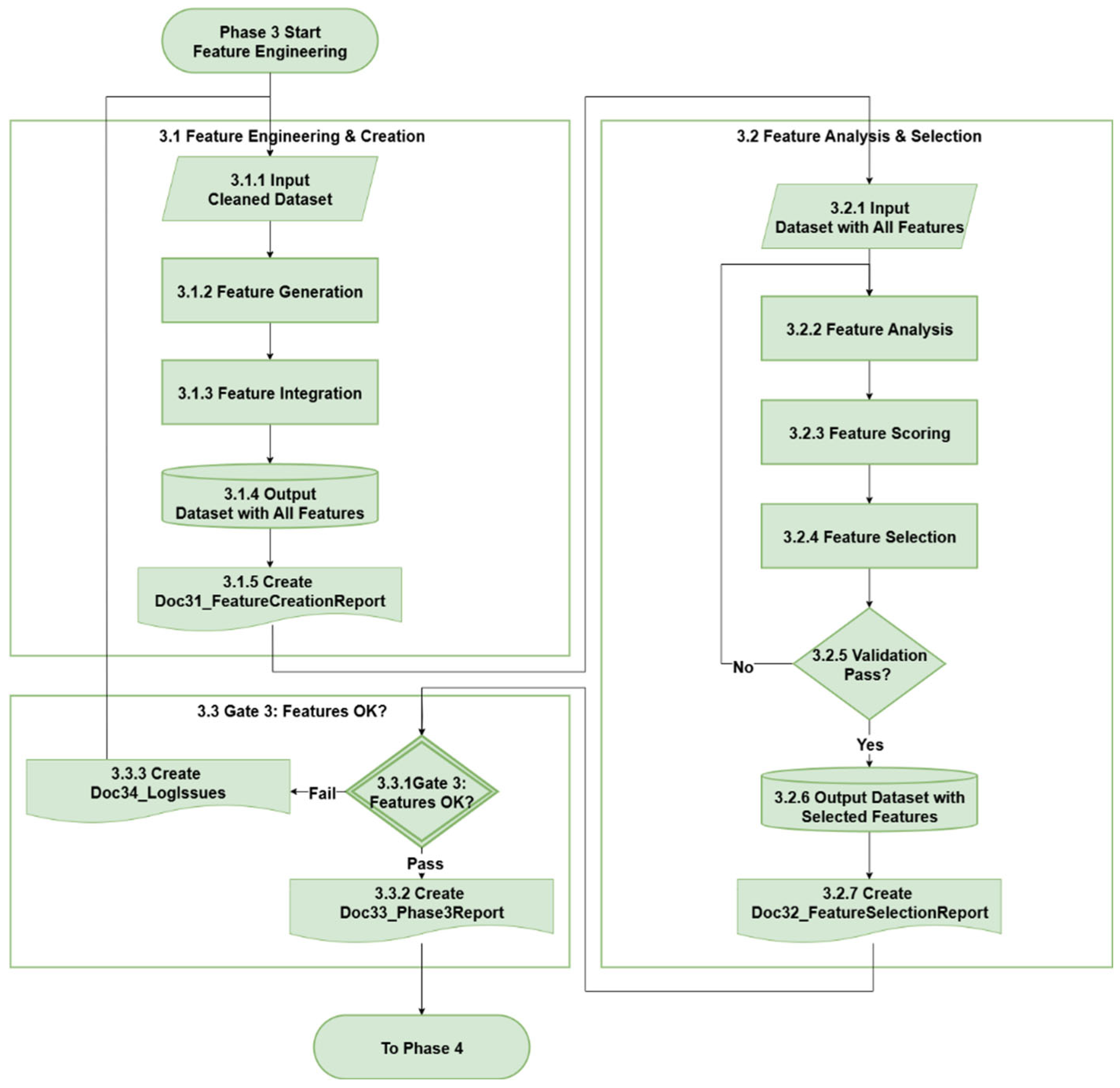

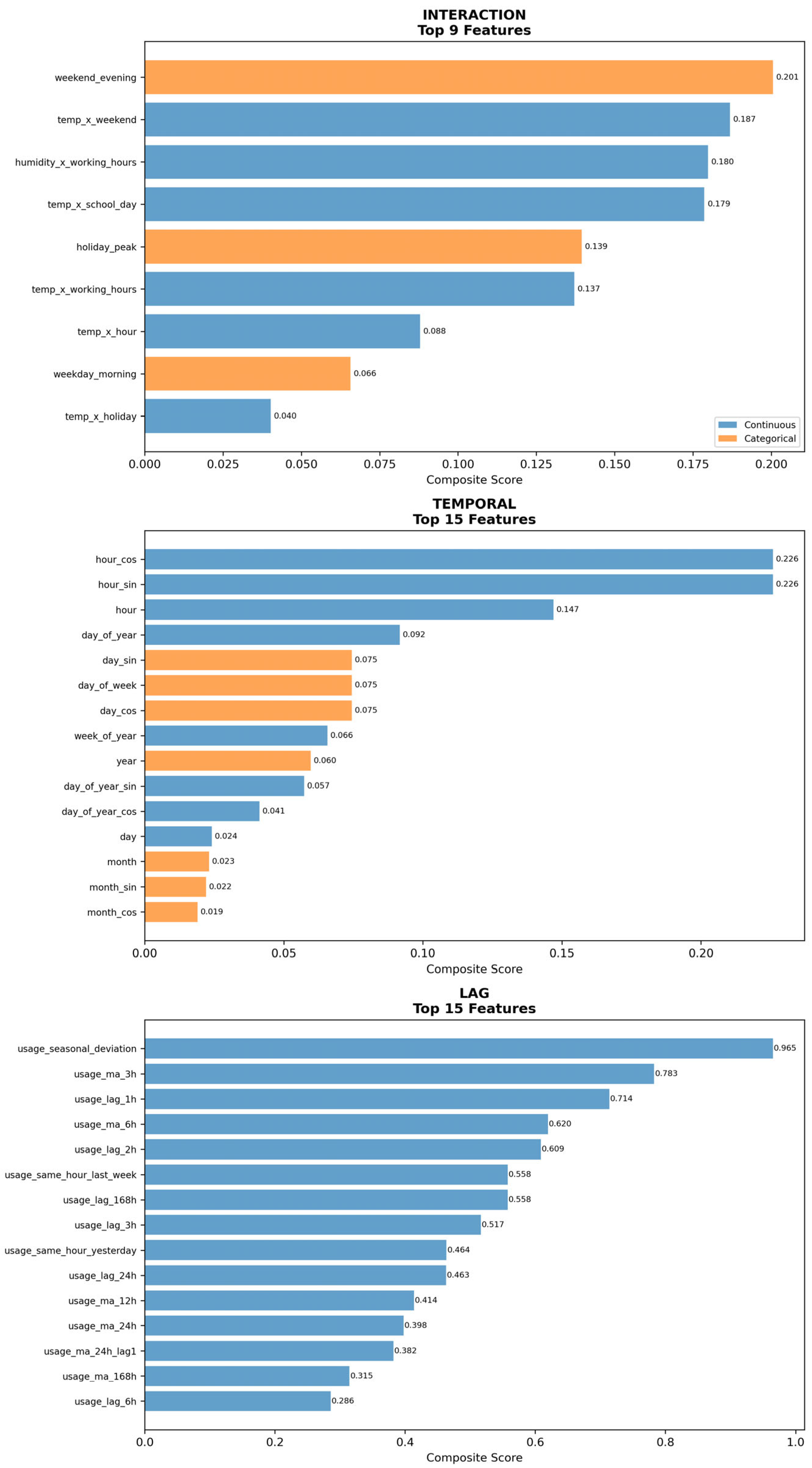

3.4. Phase 3 - Feature Engineering

3.4.1. Feature Engineering & Creation

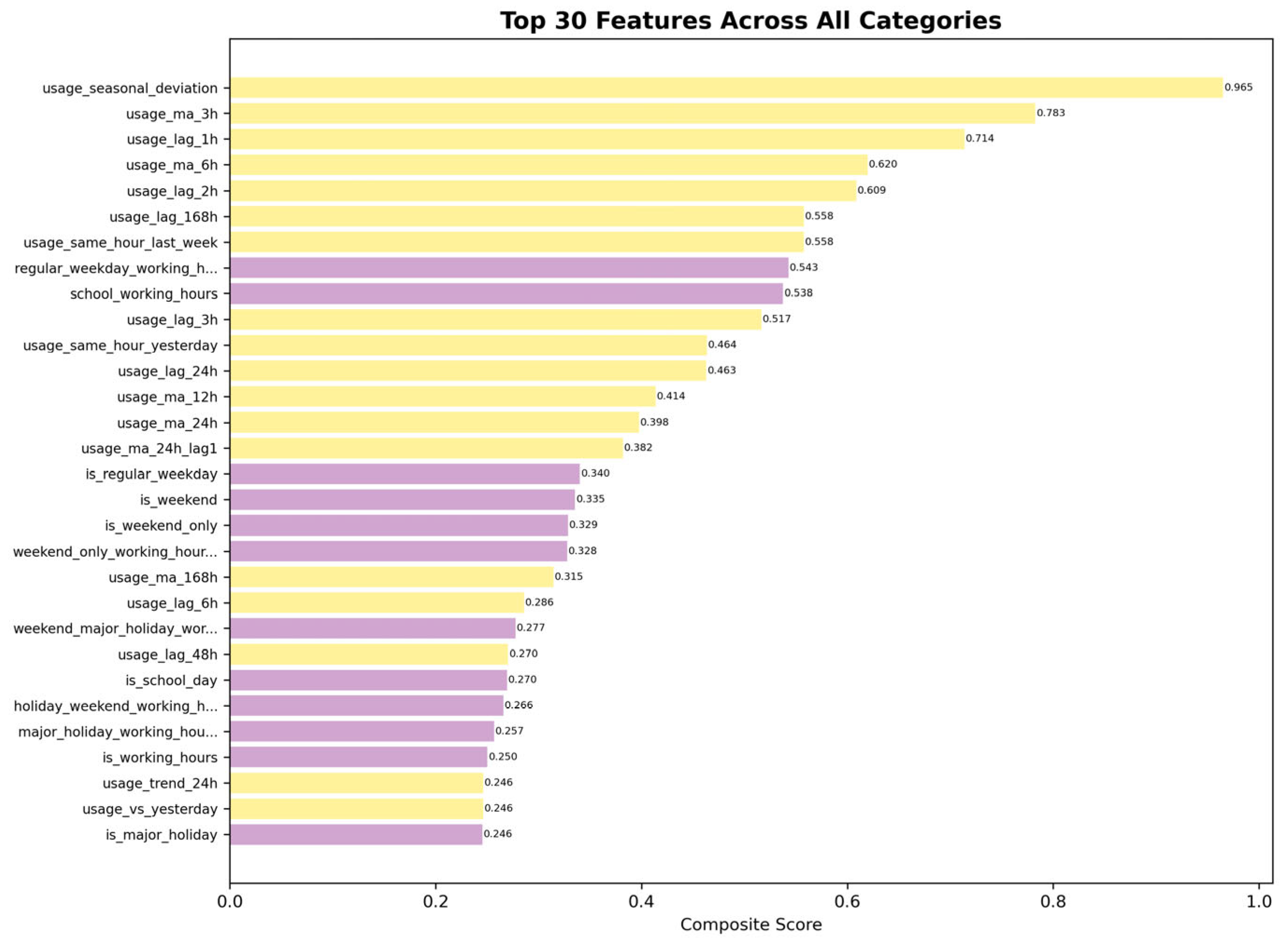

3.4.2. Feature Analysis & Selection

3.4.3. Gate 3 - Features OK

- Feature engineering has been thoroughly conducted, and selected features are sufficient, relevant, and validated for the modeling objective.

- All artifacts are documented appropriately.

- The process complies with regulations and standards if applicable.

- Risks are evaluated and accepted.

- No unresolved issues remain.

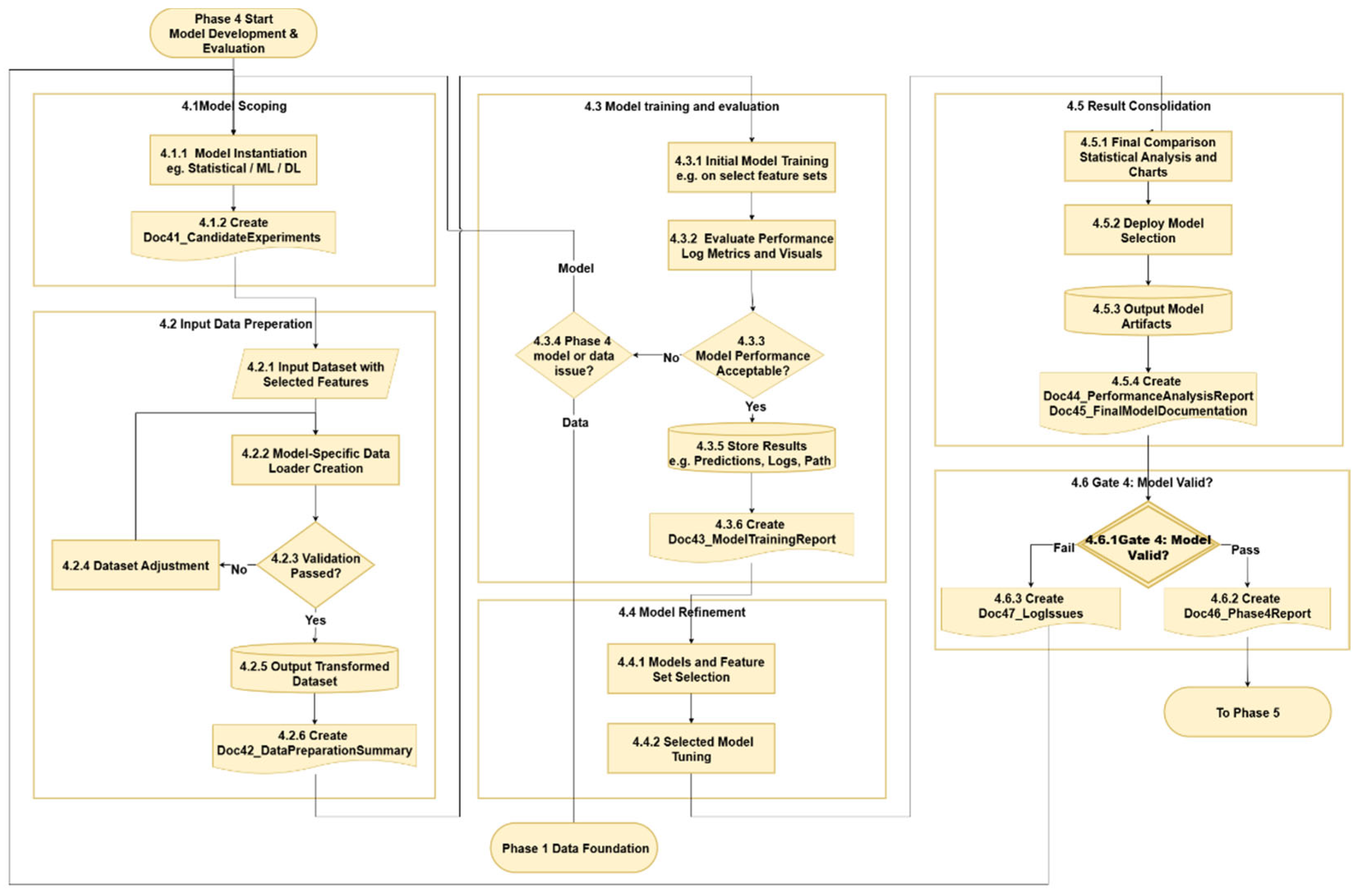

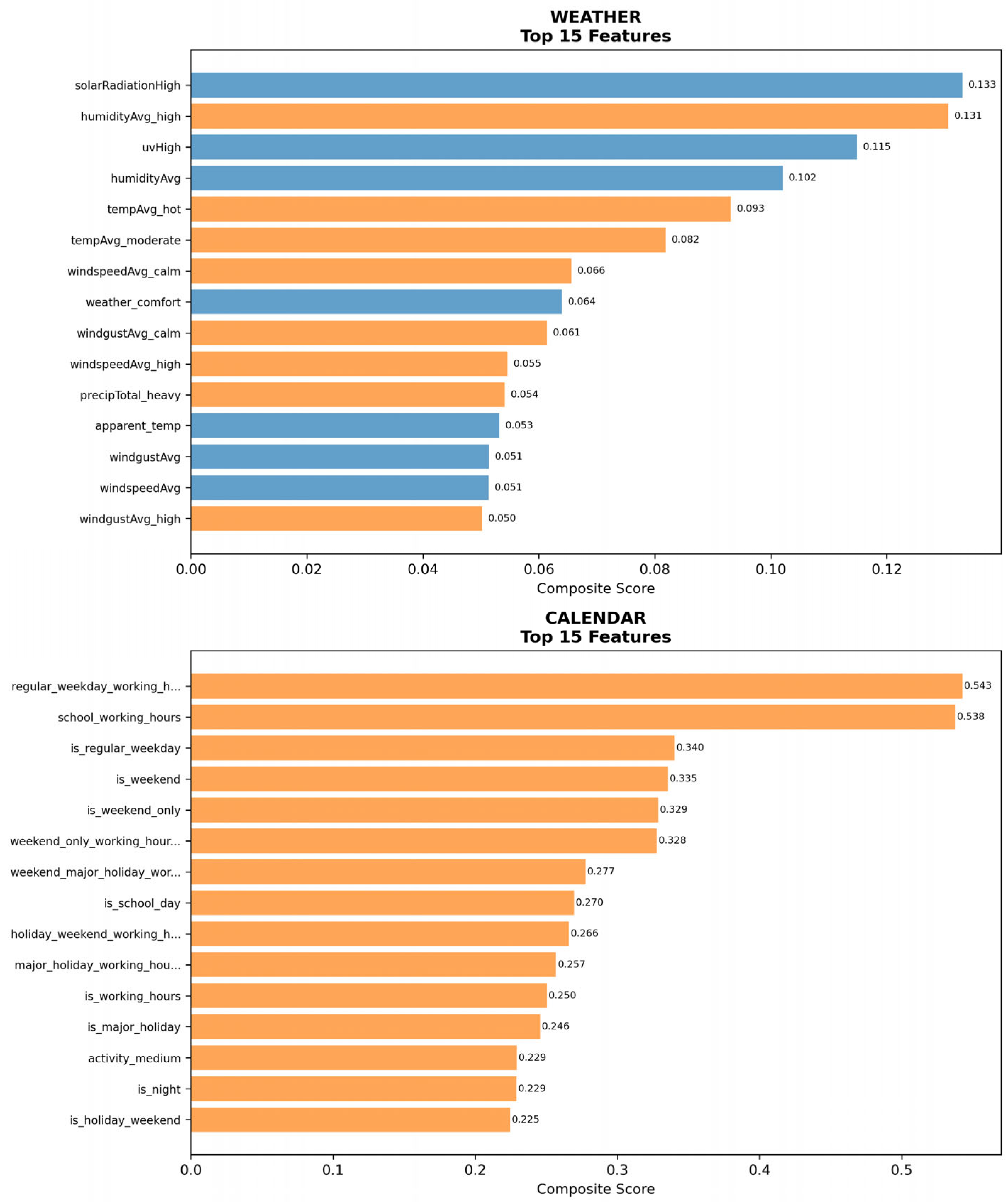

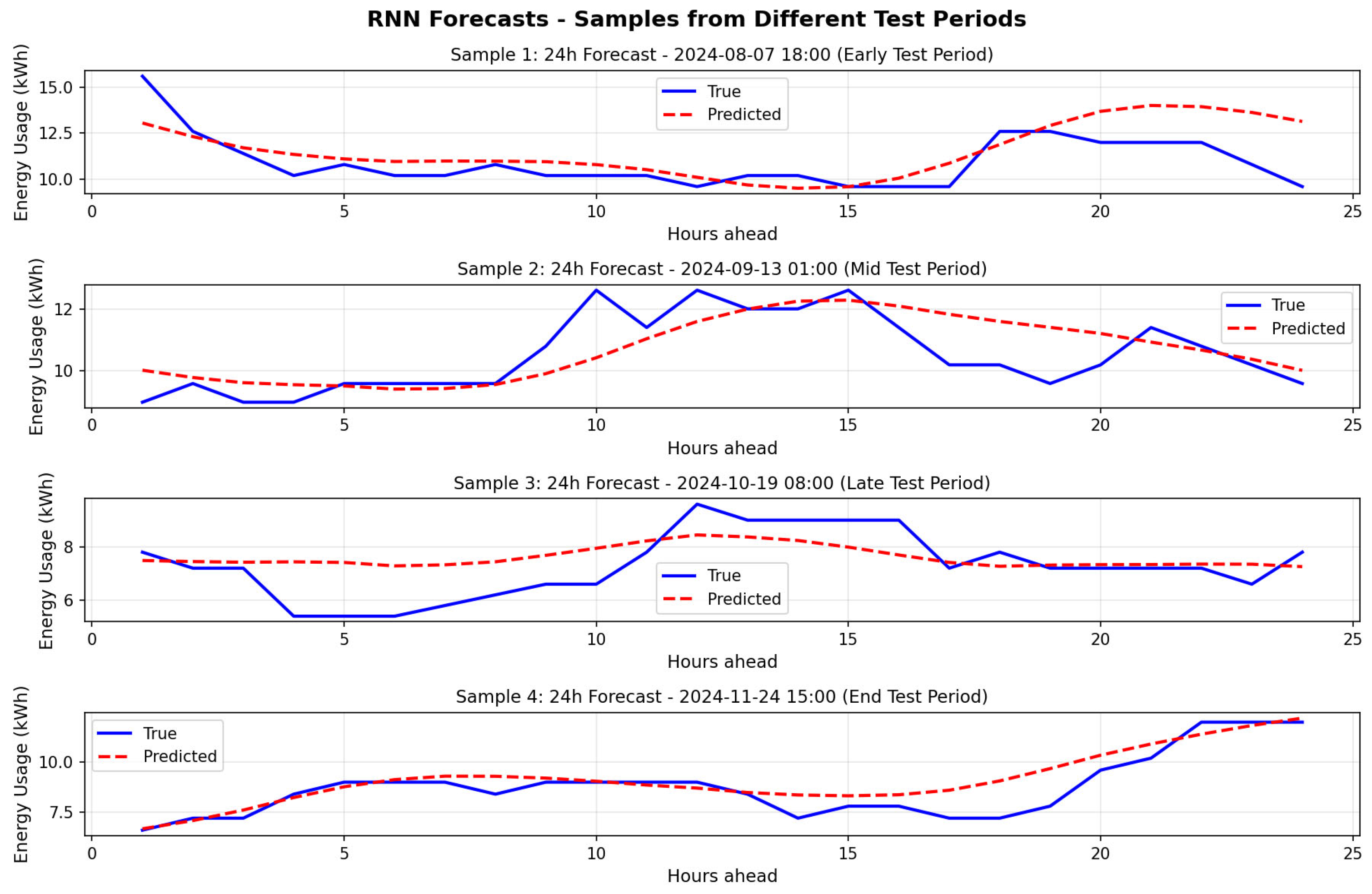

3.5. Phase 4 - Model Development & Evaluation

3.5.1. Model Scoping

3.5.2. Input Data Preparation

3.5.3. Model Training and Evaluation

3.5.4. Model Refinement

3.5.5. Result Consolidation

3.5.6. Gate 4 - Model Valid

- Data preparation steps, including transformations and splits, have passed integrity checks. Model performance meets defined criteria across relevant metrics and datasets. Selected models are reproducible and aligned with forecasting objectives.

- All modeling procedures, configurations, and decisions are fully documented.

- The process meets compliance requirements.

- Risks are evaluated and accepted.

- There are no other unresolved issues.
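One integrity check on data splits that is common in time-series forecasting is to confirm that the training, validation, and test sets are ordered in time and do not overlap. The sketch below assumes that this is the kind of check meant by "splits have passed integrity checks"; the function names, the 70/15/15 proportions, and the sample records are hypothetical.

```python
from datetime import datetime, timedelta


def chronological_split(records, train_frac=0.7, val_frac=0.15):
    """Split (timestamp, value) records in time order; proportions are illustrative."""
    ordered = sorted(records, key=lambda r: r[0])
    n = len(ordered)
    train_end = round(n * train_frac)
    val_end = train_end + round(n * val_frac)
    return ordered[:train_end], ordered[train_end:val_end], ordered[val_end:]


def has_temporal_leakage(train, val, test):
    """True if an earlier split contains a timestamp at or after the start of a later one."""
    splits = [s for s in (train, val, test) if s]
    return any(
        max(t for t, _ in earlier) >= min(t for t, _ in later)
        for earlier, later in zip(splits, splits[1:])
    )


start = datetime(2025, 1, 1)
records = [(start + timedelta(hours=h), 100.0 + h) for h in range(20)]

train, val, test = chronological_split(records)
clean_split_leaks = has_temporal_leakage(train, val, test)

# A deliberately interleaved split, shown only to demonstrate what the check catches.
interleaved_leaks = has_temporal_leakage(records[::2], [], records[1::2])
```

An interleaved or randomly shuffled split lets the model see observations from the period it is later evaluated on, which is why the check compares the time ranges of consecutive splits.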

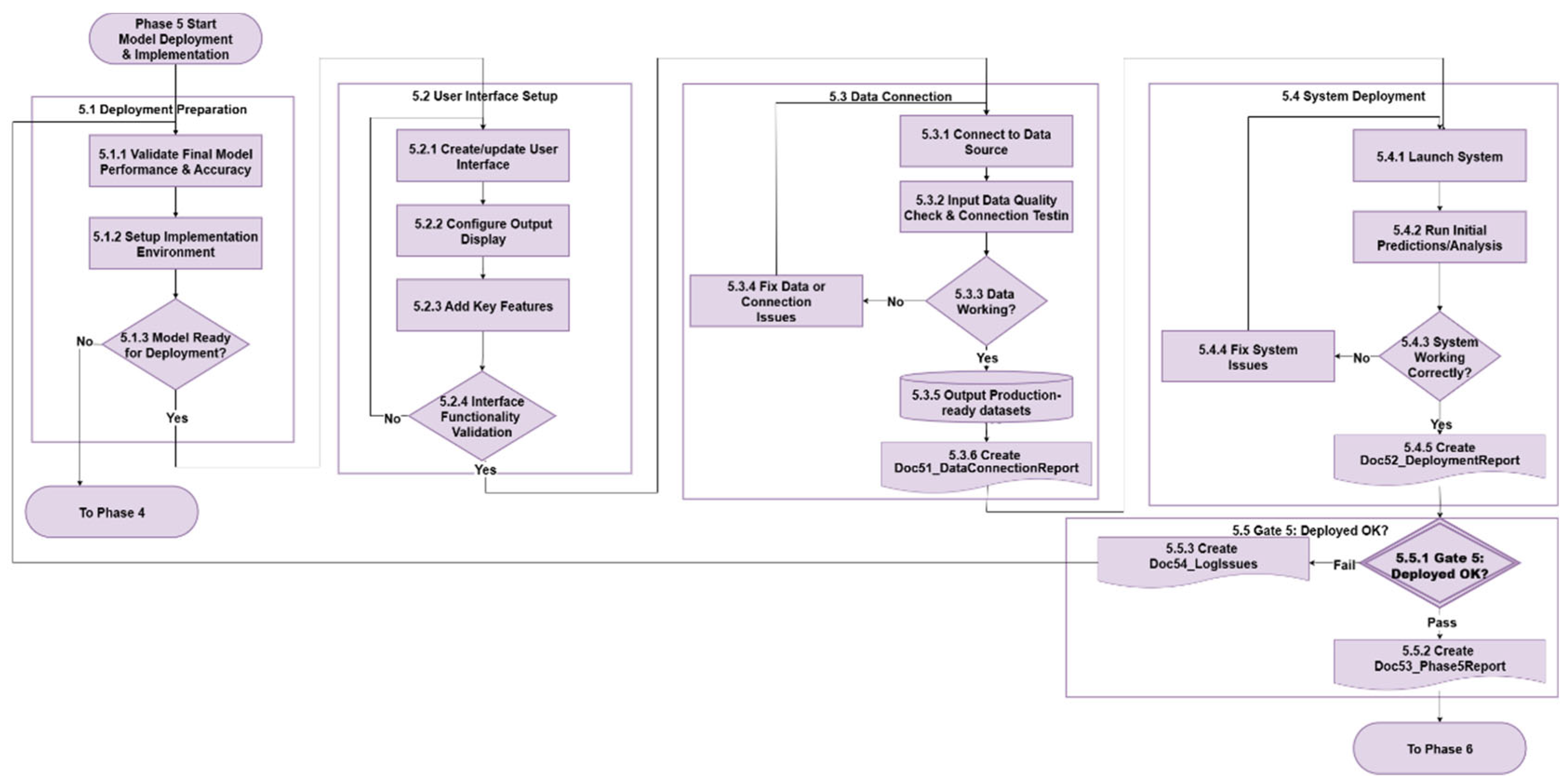

3.6. Phase 5 - Model Deployment & Implementation

3.6.1. Deployment Preparation

3.6.2. User Interface (UI) Setup

3.6.3. Data Connection

3.6.4. System Deployment

3.6.5. Gate 5 - Deployment OK

- The model is fully validated and operational in its target environment. Data connections are stable and accurate. UIs are functional and deliver correct outputs.

- Deployment documentation and system configuration have been properly captured and archived.

- No compliance-related requirements remain unfulfilled.

- No unacceptable risks have been identified.

- No further issues have been identified by any stakeholder.

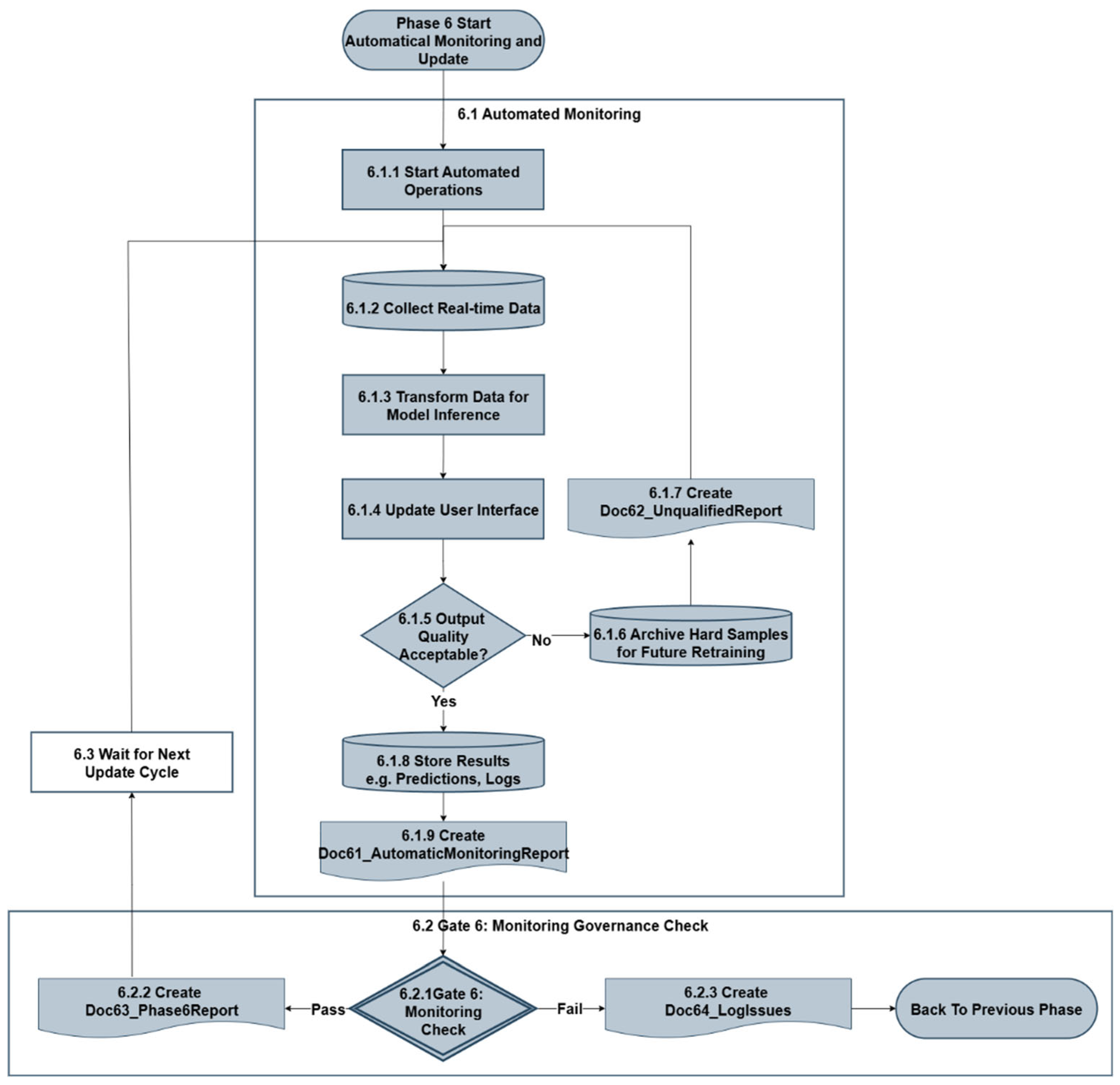

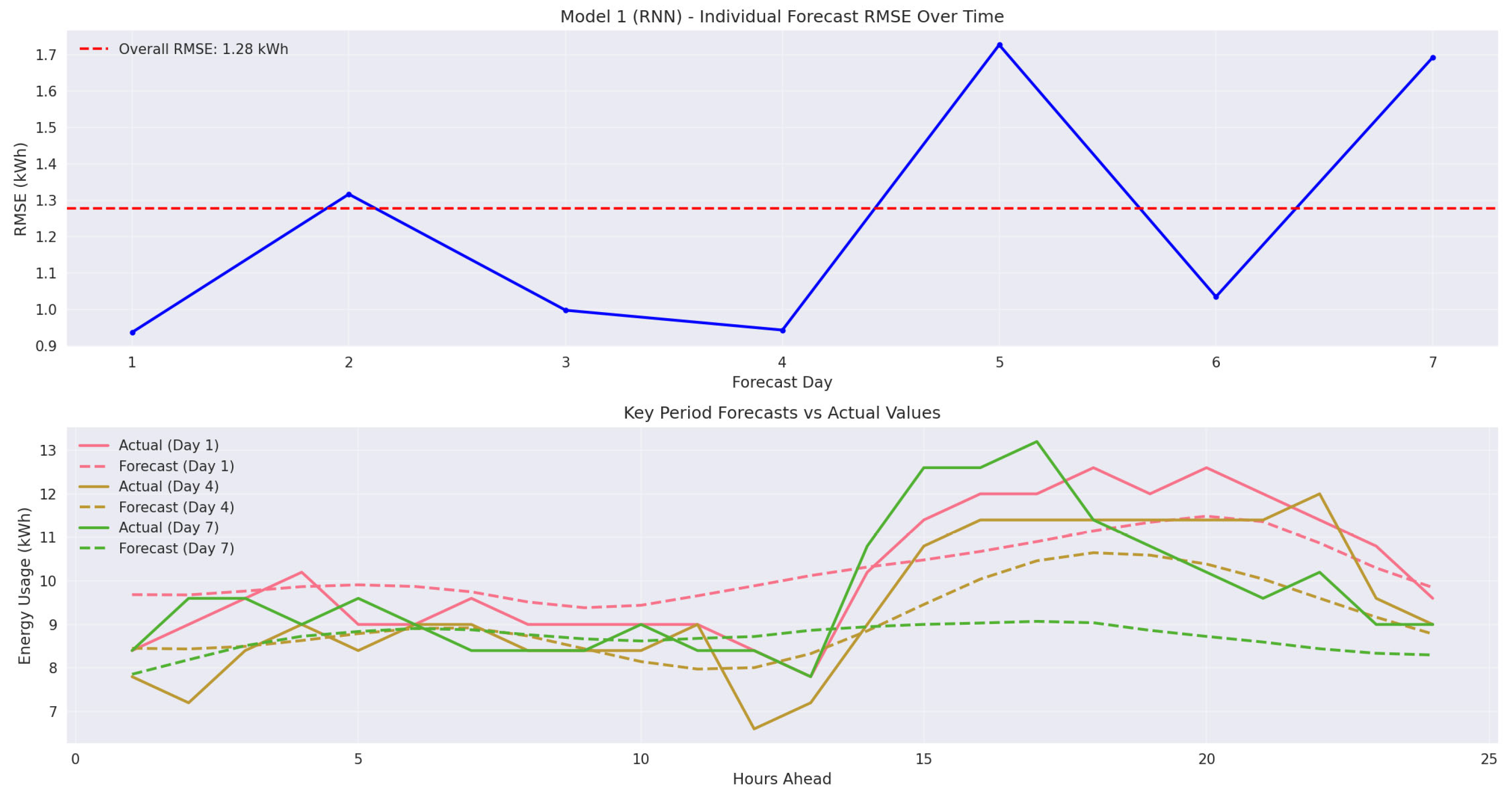

3.7. Phase 6 - Automated Monitoring and Update

3.7.1. Automated Monitoring

3.7.2. Gate 6 - Monitoring Governance Check

- Output predictions remain within acceptable accuracy ranges.

- Documents are recorded according to the pipeline.

- Operational and monitoring workflows are compliant and auditable.

- Risks are identified and acceptable.

- No unresolved anomalies or system failures persist.
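The first criterion, that predictions remain within acceptable accuracy ranges, can be checked with the error metrics listed in the abbreviations (MAE, RMSE, MAPE). The sketch below is a minimal illustration; the `accuracy_check` helper, the limit values, and the sample numbers are assumptions, and real limits would be taken from the project's requirements.

```python
import math


def mae(actual, predicted):
    """Mean Absolute Error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Squared Error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean Absolute Percentage Error, in percent; undefined for zero actual values."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def accuracy_check(actual, predicted, limits):
    """Return the computed metrics and the names of metrics that exceed their limit."""
    metrics = {
        "mae": mae(actual, predicted),
        "rmse": rmse(actual, predicted),
        "mape": mape(actual, predicted),
    }
    breaches = sorted(name for name, limit in limits.items() if metrics[name] > limit)
    return metrics, breaches


# Illustrative values and hypothetical limits.
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 400.0]
metrics, breaches = accuracy_check(actual, predicted, {"mae": 10.0, "mape": 4.0})
```

A non-empty `breaches` list is the kind of signal that could trigger an automated alert or a loop-back to retraining in the monitoring phase.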

3.7.3. Wait for Next Update Cycle

3.8. Stakeholder Roles and Responsibilities

3.8.1. Responsibility Distribution and Governance Structure

3.8.2. Core Stakeholder Functions

4. Comparison of Existing Frameworks

5. Case Study

5.1. Phase 0 - Project Foundation

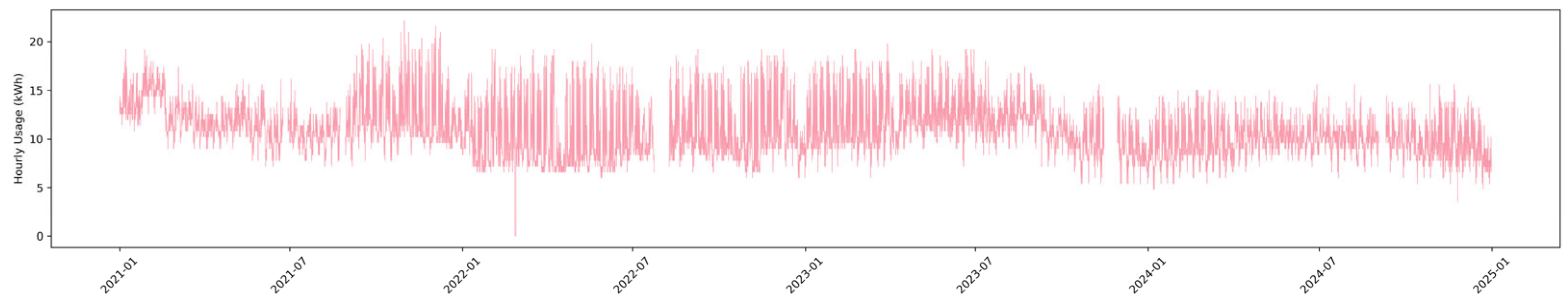

5.2. Phase 1 - Data Foundation

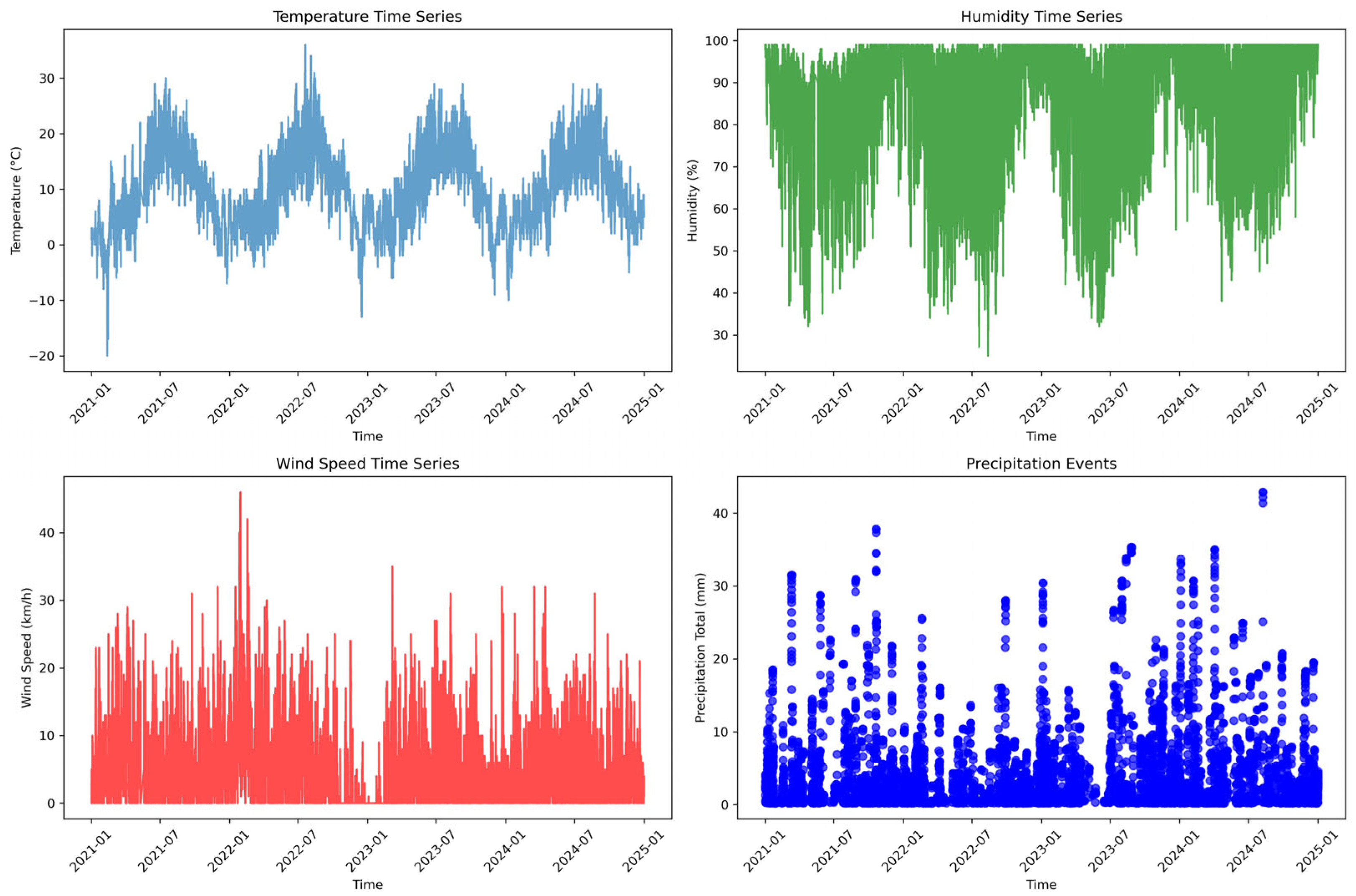

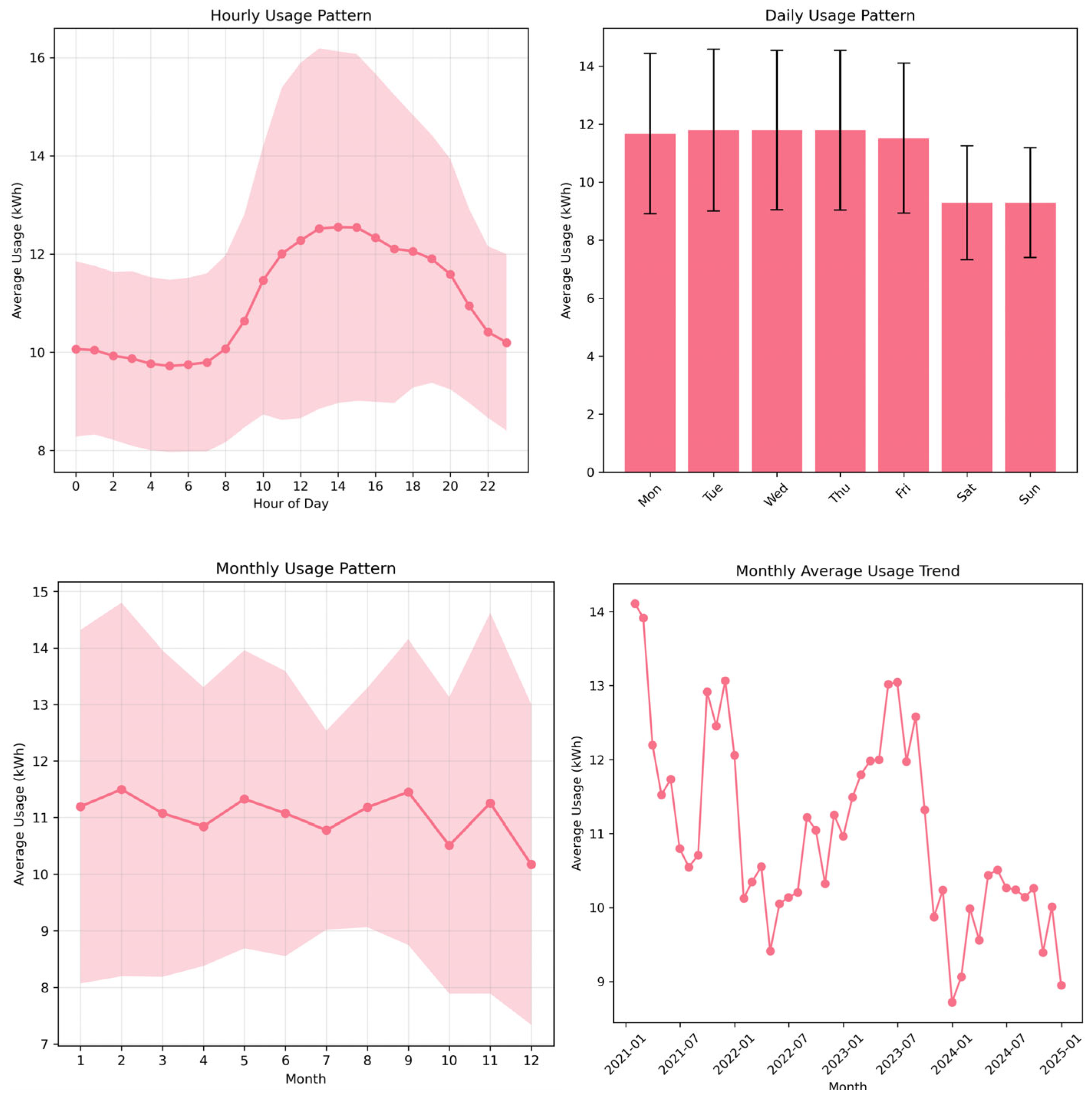

5.3. Phase 2 - Data Understanding & Preprocessing

5.4. Phase 3 - Feature Engineering

5.5. Phase 4 - Model Development & Evaluation

5.6. Phase 5 - Deployment & Implementation

5.7. Phase 6 - Automated Monitoring & Update

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| APIs | Application Programming Interfaces |
| CI/CD | Continuous Integration / Continuous Delivery |
| CPU | Central Processing Unit |
| CRISP-DM | Cross-Industry Standard Process for Data Mining |
| CRISP-ML(Q) | CRISP for Machine Learning with Quality |
| DAG | Directed Acyclic Graph |
| DevOps | Development and Operations |
| DL | Deep Learning |
| EDA | Exploratory Data Analysis |
| EU | European Union |
| EU AI Act | European Union Artificial Intelligence Act |
| FIN-DM | Financial Industry Business Data Model |
| GDPR | General Data Protection Regulation |
| GPU | Graphics Processing Unit |
| IDAIC | Industrial Data Analysis Improvement Cycle |
| ISO | International Organization for Standardization |
| IoT | Internet of Things |
| IQR | Interquartile Range |
| JSON | JavaScript Object Notation |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| ML | Machine Learning |
| MLOps | Machine Learning Operations |
| NaN | Not a Number |
| QA | Quality Assurance |
| RAM | Random Access Memory |
| RMSE | Root Mean Squared Error |
| RNN | Recurrent Neural Network |
| SARIMA | Seasonal Autoregressive Integrated Moving Average |
| SDU | University of Southern Denmark |
| SHAP | SHapley Additive exPlanations |
| TFX | TensorFlow Extended |
| UI | User Interface |
| XGBoost | Extreme Gradient Boosting |

Appendix A. Summary of Key Phase Artifacts in the Energy Forecasting Framework

| Phase | Document ID & Title | Main Content | Output Datasets |
| --- | --- | --- | --- |
| Phase 0: Project Foundation | Doc01_ProjectSpecification | Defines the overall project scope, objectives, forecasting purpose, energy type, and domain. It outlines technical requirements, infrastructure readiness, and modeling preferences to guide downstream phases. | / |
| | Doc02_RequirementsCriteria | Details the functional, technical, and operational requirements across all project phases. It specifies accuracy targets, data quality standards, model validation criteria, and system performance thresholds. | |
| | Doc03_ComplianceCheckReport | Assesses the completeness and compliance of foundational documents with institutional, technical, and regulatory standards. It evaluates workflow adherence, documentation quality, and privacy/security compliance prior to Phase 1 launch. | |
| | Doc04_SystemTechFeasibilityReport | Evaluates the project's technical feasibility across system architecture, data pipelines, model infrastructure, UI requirements, and resource needs. | |
| | Doc05_Phase0Report | Summarizes all Phase 0 activities, confirms completion of key artifacts, and consolidates stakeholder approvals. It serves as the official checkpoint for Gate 0 and authorizes the transition to Phase 1. | |
| | Doc06_LogIssues | Records issues identified during Phase 0, categorized by severity and type (e.g., compliance, technical). It documents unresolved items, required actions before re-evaluation, and the feedback loop for rework. | |
| Phase 1: Data Foundation | Doc11_DataSourceInventory | Catalogs all available internal and external data sources, assesses their availability, and verifies data loading success. | Raw energy datasets; supplementary datasets; integrated datasets |
| | Doc12_InitialDataQualityReport | Conducts an initial assessment of raw data completeness, timestamp continuity, and overall timeline coverage for each source. Provides pass/fail evaluations against quality thresholds. | |
| | Doc13_DataQualityValidationReport | Validates the integrated datasets against predefined coverage and overlap thresholds. Confirms ingestion completeness, assesses quality across sources, and provides a go/no-go recommendation for proceeding to the next step. | |
| | Doc14_IntegrationSummary | Documents the data integration process, including merge strategy, record preservation logic, and overlaps between datasets. | |
| | Doc15_Phase1Report | Summarizes all Phase 1 activities including data acquisition, quality assessment, integration, and documentation. Confirms completion of Gate 1 requirements with stakeholder approvals and formally transitions the project to Phase 2. | |
| | Doc16_LogIssues | Records issues encountered during Phase 1, especially data quality-related problems and integration anomalies. Lists unresolved items, assigns responsibilities, and documents actions required before re-evaluation of Gate 1. | |
| Phase 2: Data Understanding & Preprocessing | Doc21_EDAReport | Presents exploratory analysis of the cleaned and integrated datasets, highlighting temporal and seasonal trends, correlations with external variables, and deviations from domain expectations. Includes validation of findings against expert knowledge and system behavior. | Cleaned datasets |
| | Doc22_DataCleaningReport | Documents all data cleaning procedures including outlier detection, gap filling, and context-aware imputation. Provides validation results for completeness, accuracy, and statistical consistency to confirm feature engineering readiness. | |
| | Doc23_Phase2Report | Summarizes all Phase 2 activities including exploratory analysis, cleaning, and preprocessing. Confirms all quality checks and domain alignments were met, with stakeholder sign-offs authorizing transition to Phase 3. | |
| | Doc24_LogIssues | Logs all unresolved issues identified, including those affecting data completeness and alignment with domain knowledge. Lists required corrective actions prior to re-evaluation of Gate 2. | |
| Phase 3: Feature Engineering | Doc31_FeatureCreationReport | Details the engineering of a comprehensive feature set from raw and cleaned data using domain-informed strategies. Features were organized into interpretable categories with documented coverage, correlation structure, and quality validation. | Feature-engineered datasets; selected feature subsets; training/validation/test splits; transformed model-ready datasets |
| | Doc32_FeatureSelectionReport | Summarizes the feature selection process involving redundancy elimination, normalization, and scoring. Features were evaluated by category and ranked based on statistical performance and domain relevance, resulting in a final set optimized for model training. | |
| | Doc33_Phase3Report | Consolidates all Phase 3 activities including feature generation, selection, and data preparation. Confirms dataset readiness for modeling through rigorous validation checks and stakeholder sign-offs, supporting transition to Phase 4. | |
| | Doc34_LogIssues | Logs issues and challenges encountered during feature engineering, such as problems with derived variable consistency, transformation logic, or dataset leakage risks. It identifies required fixes before re-evaluation of Gate 3. | |
| Phase 4: Model Development & Evaluation | Doc41_CandidateExperiments | Provides a comprehensive record of all candidate modeling experiments, including feature exploration and hyperparameter optimization. Summarizes tested models, feature combinations, and experimental design across forecasting horizons. | Trained model artifacts; deployment-ready model packages |
| | Doc42_DataPreparationSummary | Describes the transformation of selected feature datasets into model-ready formats, including scaling, splitting, and structure adaptation for different model types. Validates reproducibility, leakage prevention, and data consistency across training and test sets. | |
| | Doc43_ModelTrainingReport | Details the end-to-end training process for all model-feature combinations, including experiment configurations, validation strategy, and training metrics. Confirms successful execution of all planned runs with results stored and traceable. | |
| | Doc44_PerformanceAnalysisReport | Presents evaluation of model performance, identifying the top-performing models based on RMSE, MAE, and efficiency metrics. Includes rankings, model category comparisons, and stability assessments. | |
| | Doc45_FinalModelDocumentation | Documents the architecture, configuration, hyperparameters, and feature usage of the final selected model. Highlights performance, robustness, and production suitability based on comprehensive testing. | |
| | Doc46_Phase4Report | Summarizes all Phase 4 activities including model scoping, training, refinement, evaluation, and documentation. Confirms compliance, readiness for deployment, and fulfillment of Gate 4 acceptance criteria. | |
| | Doc47_LogIssues | Records issues related to model configuration, performance discrepancies, and documentation gaps. Specifies actions required for Gate 4 re-evaluation and areas for improvement in modeling or validation. | |
| Phase 5: Deployment & Implementation | Doc51_DataConnectionReport | Summarizes the successful establishment and validation of data connections between the system and external sources (e.g., sensors, databases, APIs). Confirms input quality, system access, and the generation of production-ready datasets for real-time or scheduled operations. | Production-ready datasets |
| | Doc52_DeploymentReport | Documents the final deployment activities including system launch, interface rollout, initial prediction testing, and APIs/configuration packaging. Confirms operational readiness and system stability through validation of predictions, logs, and interface behavior. | |
| | Doc53_Phase5Report | Provides a consolidated summary of Phase 5 milestones, including deployment validation across system, data, and UI layers. Captures stakeholder approvals and confirms satisfaction of Gate 5 acceptance criteria for transition into live operation. | |
| | Doc54_LogIssues | Captures issues encountered during deployment such as interface errors, system downtime, or misconfigured outputs. Lists root causes, assigned actions, and resolution status for any system deployment failure points or rollback triggers. | |
| Phase 6: Automated Monitoring & Update | Doc61_AutomaticMonitoringReport | Reports on the system’s automated monitoring activities including real-time data ingestion, prediction updates, and UI refreshes. Confirms performance metrics, logs operational status, and verifies ongoing model effectiveness in production. | Real-time monitoring results (e.g., predictions, logs); archived hard samples for future retraining |
| | Doc62_UnqualifiedReport | Identifies and documents cases where prediction outputs fall below acceptable quality thresholds. Flags performance degradation, specifies unqualified data samples, and archives cases for potential future retraining or manual review. | |
| | Doc63_Phase6Report | Summarizes all monitoring results and update cycle performance, including model consistency, drift detection, and operational stability. Confirms Gate 6 completion through governance checks and outlines next steps for continuous improvement or retraining cycles. | |
| | Doc64_LogIssues | Tracks incidents such as data stream interruptions, interface failures, or prediction anomalies. Serves as the operational log for Phase 6, capturing diagnostics, recovery actions, and escalation records. | |

References

- Hong, T.; Pinson, P.; Wang, Y.; Weron, R.; Yang, D.; Zareipour, H. Energy forecasting: A review and outlook. IEEE Open Access Journal of Power and Energy 2020, 7, 376–388. [Google Scholar] [CrossRef]

- Mystakidis, A.; Koukaras, P.; Tsalikidis, N.; Ioannidis, D.; Tjortjis, C. Energy forecasting: A comprehensive review of techniques and technologies. Energies 2024, 17, 1662. [Google Scholar] [CrossRef]

- Im, J.; Lee, J.; Lee, S.; Kwon, H.-Y. Data pipeline for real-time energy consumption data management and pre diction. FRONTIERS IN BIG DATA 2024, 7. [Google Scholar] [CrossRef]

- Kilkenny, M.F.; Robinson, K.M. Data quality: “Garbage in – garbage out”. Health Information Management Journal 2018, 47, 1833358318774357. [Google Scholar] [CrossRef]

- Amin, A.; Mourshed, M. Weather and climate data for energy applications. Renewable & Sustainable Energy Reviews 2024, 192, 114247. [Google Scholar] [CrossRef]

- Bansal, A.; Balaji, K.; Lalani, Z. Temporal Encoding Strategies for Energy Time Series Prediction. 2025. [CrossRef]

- Gonçalves, A.C.R.; Costoya, X.; Nieto, R.; Liberato, M.L.R. Extreme Weather Events on Energy Systems: A Comprehensive Review on Impacts, Mitigation, and Adaptation Measures. Sustainable Energy Research 2024, 11, 1–23. [Google Scholar] [CrossRef]

- Chen, G.; Lu, S.; Zhou, S.; Tian, Z.; Kim, M.K.; Liu, J.; Liu, X. A Systematic Review of Building Energy Consumption Prediction: From Perspectives of Load Classification, Data-Driven Frameworks, and Future Directions. Applied Sciences 2025, 15. [Google Scholar] [CrossRef]

- Kreuzberger, D.; Kühl, N.; Hirschl, S. Machine Learning Operations (MLOps): Overview, Definition, and Architecture. IEEE Access 2023, 11, 31866–31879. [Google Scholar] [CrossRef]

- Nguyen, K.; Koch, K.; Chandna, S.; Vu, B. Energy Performance Analysis and Output Prediction Pipeline for East-West Solar Microgrids. J 2024, 7, 421–438. [Google Scholar] [CrossRef]

- Studer, S.; Pieschel, L.; Nass, C. Towards CRISP-ML(Q): A Machine Learning Process Model with Quality Assurance Methodology. Informatics 2020, 3, 20. [Google Scholar] [CrossRef]

- Tripathi, S.; Muhr, D.; Brunner, M.; Jodlbauer, H.; Dehmer, M.; Emmert-Streib, F. Ensuring the Robustness and Reliability of Data-Driven Knowledge Discovery Models in Production and Manufacturing. Frontiers in Artificial Intelligence 2021, 4, 576892. [Google Scholar] [CrossRef]

- EverythingDevOps.dev. A Brief History of DevOps and Its Impact on Software Development. Available online: https://www.everythingdevops.dev/blog/a-brief-history-of-devops-and-its-impact-on-software-development (accessed on 13 August 2025).

- Steidl, M.; Zirpins, C.; Salomon, T. The Pipeline for the Continuous Development of AI Models – Current State of Research and Practice. In Proceedings of the Proceedings of the 2023 IEEE International Conference on Software Architecture Companion (ICSA-C), 2023; pp. 75-83.

- Saltz, J.S. CRISP-DM for Data Science: Strengths, Weaknesses and Potential Next Steps. In Proceedings of the 2021 IEEE International Conference on Big Data (Big Data), 2021, 15-18 December 2021; pp. 2337–2344. [Google Scholar]

- Pei, Z.; Liu, L.; Wang, C.; Wang, J. Requirements Engineering for Machine Learning: A Review and Reflection. In Proceedings of the 30th IEEE International Requirements Engineering Conference Workshops (RE 2022 Workshops), 2022, 2022; pp. 166-175.

- Andrei, A.-V.; Velev, G.; Toma, F.-M.; Pele, D.T.; Lessmann, S. Energy Price Modelling: A Comparative Evaluation of Four Generations of Forecasting Methods. 2024. [CrossRef]

- European, P.; Council of the European, U. Regulation (EU) 2016/679 – General Data Protection Regulation (GDPR). Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj/eng (accessed on 13 August 2025).

- European, P.; Council of the European, U. Regulation (EU) 2024/1689 – Artificial Intelligence Act (EU AI Act). Available online: https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng (accessed on 13 August 2025).

- Mora-Cantallops, M.; Pérez-Rodríguez, A.; Jiménez-Domingo, E. Traceability for Trustworthy AI: A Review of Models and Tools. Big Data and Cognitive Computing 2021, 5, 15. [Google Scholar] [CrossRef]

- Kubeflow Contributors, G. Introduction to Kubeflow. Available online: https://www.kubeflow.org/docs/started/introduction/ (accessed on 13 August 2025).

- Beauchemin, M. Apache Airflow – Workflow Management Platform. Available online: https://en.wikipedia.org/wiki/Apache_Airflow (accessed on 13 August 2025).

- Google. TensorFlow Extended (TFX): Real-World Machine Learning in Production. Available online: https://blog.tensorflow.org/2019/06/tensorflow-extended-tfx-real-world_26.html (accessed on 13 August 2025).

- Miller, T.; Durlik, I.; Łobodzińska, A.; Dorobczyński, L.; Jasionowski, R. AI in Context: Harnessing Domain Knowledge for Smarter Machine Learning. Applied Sciences 2024, 14.

- Oakes, B.J.; Famelis, M.; Sahraoui, H. Building Domain-Specific Machine Learning Workflows: A Conceptual Framework for the State-of-the-Practice. ACM Transactions on Software Engineering and Methodology 2024, 33, 1–50.

- Kale, A.; Nguyen, T.; Harris, F.C., Jr.; Li, C.; Zhang, J.; Ma, X. Provenance Documentation to Enable Explainable and Trustworthy AI: A Literature Review. Data Intelligence 2023, 5, 139–162.

- Theusch, F.; Heisterkamp, P. Comparative Analysis of Open-Source ML Pipeline Orchestration Platforms. 2024.

- Schröer, C.; Kruse, F.; Gómez, J.M. A Systematic Literature Review on Applying CRISP-DM Process Model. Procedia Computer Science 2021, 181, 526–534.

- Chatterjee, A.; Ahmed, B.; Hallin, E.; Engman, A. Quality Assurance in MLOps Setting: An Industrial Perspective; 2022.

- International Organization for Standardization. ISO 9001:2015 – Quality management systems – Requirements. Available online: https://www.iso.org/standard/62085.html (accessed on 13 August 2025).

- Jayzed Data Models Inc. Financial Industry Business Data Model (FIB-DM). Available online: https://fib-dm.com/ (accessed on 13 August 2025).

- Shimaoka, A.M.; Ferreira, R.C.; Goldman, A. The evolution of CRISP-DM for Data Science: Methods, processes and frameworks. SBC Reviews on Computer Science 2024, 4, 28–43.

- Ahern, M.; O’Sullivan, D.T.J.; Bruton, K. Development of a Framework to Aid the Transition from Reactive to Proactive Maintenance Approaches to Enable Energy Reduction. Applied Sciences 2022, 12, 6704–6721.

- Oliveira, T. Implement MLOps with Kubeflow Pipelines. Available online: https://developers.redhat.com/articles/2024/01/25/implement-mlops-kubeflow-pipelines (accessed on 13 August 2025).

- Apache Software Foundation. Apache Beam – Orchestration. Available online: https://beam.apache.org/documentation/ml/orchestration/ (accessed on 13 August 2025).

- Boettiger, C. An Introduction to Docker for Reproducible Research, with Examples from the R Environment. 2014.

- Amershi, S.; Begel, A.; Bird, C.; DeLine, R.; Gall, H.; Kamar, E.; Nagappan, N.; Nushi, B.; Zimmermann, T. Software Engineering for Machine Learning: A Case Study. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), 25–31 May 2019; pp. 291–300.

- High-Level Expert Group on Artificial Intelligence. Ethics Guidelines for Trustworthy Artificial Intelligence; 2019.

- Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 4768–4777.

- Stigler, S.M. Statistics on the Table: The History of Statistical Concepts and Methods; Harvard University Press: 2002.

- Tukey, J.W. Exploratory Data Analysis; Addison-Wesley: 1977.

- Encyclopaedia Britannica. Pearson’s correlation coefficient. Available online: https://www.britannica.com/topic/Pearsons-correlation-coefficient (accessed on 13 August 2025).

- Shannon, C.E. A Mathematical Theory of Communication. The Bell System Technical Journal 1948, 27, 379–423.

- OpenStax. Cohen's Standards for Small, Medium, and Large Effect Sizes. Available online: https://stats.libretexts.org/Bookshelves/Introductory_Statistics/Introductory_Statistics_2e_(OpenStax)/10%3A_Hypothesis_Testing_with_Two_Samples/10.03%3A_Cohen%27s_Standards_for_Small_Medium_and_Large_Effect_Sizes (accessed on 13 August 2025).

- Elman, J.L. Finding Structure in Time. Cognitive Science 1990, 14, 179–211.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Computation 1997, 9, 1735–1780.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD 2016), San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2019), 2019; pp. 8024–8035.

- scikit-learn developers. MinMaxScaler – scikit-learn preprocessing. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.MinMaxScaler.html (accessed on 13 August 2025).

- DataCamp. RMSE Explained: A Guide to Regression Prediction Accuracy. Available online: https://www.datacamp.com/tutorial/rmse (accessed on 13 August 2025).

- Frost, J. Mean Absolute Error (MAE). Available online: https://statisticsbyjim.com/glossary/mean-absolute-error/ (accessed on 13 August 2025).

| Phase | Activity | Data Scientists & ML Engineers | Energy Domain Experts | Project Managers | DevOps & MLOps Engineers | Compliance Officers & QA |
| --- | --- | --- | --- | --- | --- | --- |
| Phase 0 | Scenario Understanding & Alignment | A | A | P | C | A |
| | Compliance & Technical Checks | A | A | P | A | P |
| | Gate 0: Foundation Check | A | A | P | A | P |
| Phase 1 | Data Identification & Quality Check | P | A | G | C | C |
| | Data Ingestion & Validation | P | C | G | C | A |
| | Gate 1: Data Acquisition Ready | P | A | P | C | A |
| Phase 2 | Exploratory Data Analysis | P | A | G | C | C |
| | Data Cleaning & Preprocessing | P | A | G | C | C |
| | Gate 2: Preprocessing OK | P | A | P | C | A |
| Phase 3 | Feature Engineering & Creation | P | A | G | C | C |
| | Feature Analysis & Selection | P | A | G | C | C |
| | Gate 3: Features OK | P | A | P | C | A |
| Phase 4 | Model Scoping | P | A | G | C | C |
| | Input Data Preparation | P | C | G | C | C |
| | Model Training & Evaluation | P | A | G | C | C |
| | Model Refinement | P | A | G | C | C |
| | Result Consolidation | P | A | G | C | C |
| | Gate 4: Model Valid | P | A | P | C | A |
| Phase 5 | Deployment Preparation | A | C | G | P | C |
| | UI Setup | C | A | G | P | C |
| | Data Connection | A | C | G | P | C |
| | System Deployment | A | C | G | P | C |
| | Gate 5: Deployment OK | A | A | P | P | A |
| Phase 6 | Automated Monitoring | C | A | G | P | C |
| | Gate 6: Monitoring Governance | C | A | P | P | A |
| | Update Cycle Management | A | C | G | P | C |

| Aspect | CRISP-DM | Modern MLOps Pipeline | Proposed Energy Framework |
| --- | --- | --- | --- |
| Phases / Coverage | 6 phases: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, Deployment. No explicit monitoring phase (left implicit in Deployment). | Varies, but generally covers data ingestion, preparation, model training, evaluation, deployment, and adds monitoring through continuous integration and continuous deployment (CI/CD) for ML [14]. Business understanding is often assumed but not formally part of the pipeline; the focus is on technical steps. | 7 phases: Project Foundation (Phase 0), Data Foundation (Phase 1), Data Understanding & Preprocessing (Phase 2), Feature Engineering (Phase 3), Model Development & Evaluation (Phase 4), Model Deployment & Implementation (Phase 5), Automated Monitoring & Update (Phase 6). Each phase contains 2–4 sequential workflows with explicit decision gates. |
| Iteration & Feedback Loops | Iterative in theory (waterfall with backtracking): one can loop back if criteria are not met [11], but there are no fixed decision points except perhaps after Evaluation. Iteration is ad hoc and relies on team judgement. | Continuous triggers for iteration (e.g., retrain the model if drift is detected, or on a schedule) are often implemented [14]. Pipelines can be re-run automatically. However, decision logic is usually encoded in monitoring scripts or not explicitly formalized as “gates”; it is event-driven. | Systematic iteration through formal decision gates (Gates 0–6) with predetermined validation criteria. Each gate requires multi-stakeholder review and explicit sign-off. Controlled loopback mechanisms enable returns to earlier phases with documented rationale and tracked iterations. |
| Domain Expert Involvement | Present at start (Business Understanding) and end (Evaluation) implicitly. Domain knowledge guides initial goals and final evaluation, but there is no dedicated phase for domain feedback mid-process. Often, the domain experts’ role in model development is informal. | Minimal integration within automated pipelines. Domain experts typically provide initial requirements and review final outputs, but lack formal validation checkpoints within technical workflows. Manual review stages are often implemented separately from the core pipeline. | Structured domain expert involvement across all phases with mandatory validation checkpoints. Energy domain expertise is formally required for EDA alignment verification (Phase 2), feature interpretability assessment (Phase 3), and model plausibility validation (Phase 4). A five-stakeholder governance model ensures continuous domain-technical alignment. |
| Documentation & Traceability | Emphasized conceptually in CRISP-DM documentation, but not enforced. Artifacts like data or models are managed as part of project assets, but with no standard format. Traceability largely depends on the team’s practices. No specific guidance on versioning or provenance. | Strong tool support for technical traceability: version control, experiment tracking, data lineage through pipeline metadata. Every run can be recorded. However, the focus is on data and model artifacts; business context or rationale is often outside these tools. Documentation is usually separate from the pipeline (Confluence pages, etc.). | Comprehensive traceability built in: each phase produces artifacts with recorded metadata. The framework requires documentation at each gate transition. It combines tool-based tracking (for data/model versions) with process documentation (decision logs, validation reports), and satisfies traceability requirements for trust and compliance by maintaining provenance from business goal to model deployment. |
| Regulatory Compliance | No explicit compliance framework. Regulatory considerations are addressed ad hoc by practitioners without systematic integration into the methodology structure. | Compliance capabilities are added as optional pipeline components (bias audits, model cards) when domain requirements dictate. No native compliance framework is integrated into the core methodology. | Embedded compliance management throughout the pipeline lifecycle. Phase 0 establishes a comprehensive compliance framework through jurisdictional assessment, privacy evaluation, and regulatory constraint identification. All subsequent phases incorporate compliance validation at decision gates with dedicated Compliance Officer oversight and audit-ready documentation. |
| Modularity & Reusability | High-level phases are conceptually modular, but CRISP-DM does not define technical modules. Reusability depends on how the project is executed. Not tied to any tooling. | Highly modular in implementation: each pipeline step is a distinct component (e.g., container) that can be reused in other pipelines. Encourages code reuse and standardized components. However, each pipeline is specific to an organization's stack. | Explicitly modular at concept level with energy-specific phases that correspond to pipeline components. Tool-agnostic design allows implementation in any workflow orchestration environment. Clear phase inputs/outputs promote creation of reusable energy forecasting templates for each phase. |
| QA | Relies on final Evaluation; quality is mostly measured in model performance and in meeting the business objective qualitatively. No explicit risk management steps in each phase. | Quality control is often automated via tests in CI/CD that fail the pipeline if the data schema changes or performance drops. However, these are set up by engineers, not defined in a high-level methodology. | Multi-layered QA with phase-specific success criteria and validation protocols. Energy-specific quality metrics are defined for each phase outcome (data completeness thresholds, feature interpretability requirements, model performance benchmarks). Structured reviews and formal validation artifacts ensure comprehensive quality coverage from foundation through deployment. |
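
The gate mechanism summarized in the rightmost column (predetermined criteria, multi-stakeholder sign-off, documented loopback) can be illustrated with a minimal Python sketch. The class names `DecisionGate` and `GateDecision`, the role labels, the criterion name, and the choice of which phase to loop back to are all hypothetical and serve only as an illustration; the framework itself is tool-agnostic and does not prescribe this structure.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Set


@dataclass
class GateDecision:
    """One logged gate outcome (hypothetical record layout)."""
    gate: str
    passed: bool
    next_phase: int
    rationale: str


@dataclass
class DecisionGate:
    """A gate that passes only if every criterion holds and every role signs off."""
    name: str
    phase: int
    required_signoffs: FrozenSet[str]
    loopback_phase: int  # assumption: the phase to return to on failure
    log: List[GateDecision] = field(default_factory=list)

    def review(self, criteria: Dict[str, bool], signoffs: Set[str]) -> GateDecision:
        failed = sorted(name for name, ok in criteria.items() if not ok)
        missing = sorted(self.required_signoffs - signoffs)
        passed = not failed and not missing
        if passed:
            next_phase = self.phase + 1
            rationale = "all criteria met and all sign-offs given"
        else:
            next_phase = self.loopback_phase
            rationale = f"failed criteria: {failed}; missing sign-offs: {missing}"
        decision = GateDecision(self.name, passed, next_phase, rationale)
        self.log.append(decision)  # keeps the iteration history traceable
        return decision


# Illustrative use for Gate 2 with made-up role labels.
gate2 = DecisionGate(
    name="Gate 2: Preprocessing OK",
    phase=2,
    required_signoffs=frozenset({"data_scientist", "domain_expert"}),
    loopback_phase=1,
)
first = gate2.review({"outliers_below_5_percent": True}, {"data_scientist"})
second = gate2.review(
    {"outliers_below_5_percent": True}, {"data_scientist", "domain_expert"}
)
print(first.passed, first.next_phase)
print(second.passed, second.next_phase)
```

In this sketch the first review fails because the domain expert has not signed off, so the gate loops back and records the reason; the second review passes and advances to the next phase. Both decisions remain in `gate2.log`, which mirrors the idea of tracked iterations with documented rationale.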

| Category | Specification Details |
| --- | --- |
| Use Case Context | Electricity forecasting for office building optimization. |
| Installation & Infrastructure | Existing smart meter infrastructure with hourly data collection. Automated hourly readings from building management system. |
| Factor Impact Analysis | Weather conditions, academic calendar, occupancy patterns. |
| Model Scoping | Tree-Based Models and Neural Network-Based Models. |

| Phase | Requirements | Success Criteria |
| --- | --- | --- |
| Overall | Define forecasting value; Establish appropriate forecasting horizon; Define the automatic update interval | Forecasting value: electricity usage; Forecast horizon: 24 hours; Automatic update interval: 24 hours |
| Phase 1 | Establish data quality metrics; Ensure key factors (e.g., weather, school calendar) are covered; Confirm data source availability; Define data ingestion success; Set overall data quality standards | ≥ 85% data completeness; All primary factors represented; Accessible data sources; ≥ 98% successful ingestions; Missing values < 15%, consistent time intervals, correct data types |
| Phase 2 | Align data patterns with domain knowledge; Establish data cleaning success benchmarks | Temporal cycles visible in data; Outliers < 5% post-cleaning |
| Phase 3 | Generate sufficient, relevant features (lag, calendar, temporal, weather, and interaction features) | Feature set covers key drivers |
| Phase 4 | Validate data preparation pipeline; Model meets performance metrics | No data leaks after transform; MAPE < 15% on validation |
| Phase 5 | Validate model in production; Confirm data quality and connectivity; Verify system-level integration | Live model predictions match dev-stage metrics within ±25%; Quality good, uptime > 99%, API response time < 2 s; Data pipeline integrated and producing outputs without error |
| Phase 6 | Define output quality acceptance | Forecast degradation < 25% from baseline |
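
Several of the success criteria above are numeric thresholds and lend themselves to automated checks. The following Python sketch shows one way such checks might look for data completeness (Phase 1), validation MAPE (Phase 4), and forecast degradation (Phase 6). The function names and sample values are hypothetical, and two interpretations are assumptions rather than definitions taken from the table: completeness is computed as the share of non-missing readings, and degradation as the relative increase of the current MAPE over a baseline MAPE.

```python
from typing import Dict, Optional, Sequence


def completeness(values: Sequence[Optional[float]]) -> float:
    """Share of non-missing readings, in percent (assumed definition)."""
    if not values:
        raise ValueError("no readings supplied")
    return 100.0 * sum(v is not None for v in values) / len(values)


def mape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; zero actuals are skipped."""
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when all actual values are zero")
    return 100.0 * sum(abs((a - f) / a) for a, f in pairs) / len(pairs)


def degradation(baseline_error: float, current_error: float) -> float:
    """Relative increase of the current error over the baseline, in percent
    (assumed reading of 'forecast degradation from baseline')."""
    if baseline_error <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (current_error - baseline_error) / baseline_error


def check_criteria(
    readings: Sequence[Optional[float]],
    actual: Sequence[float],
    forecast: Sequence[float],
    baseline_mape: float,
) -> Dict[str, bool]:
    """Evaluate three thresholds from the success-criteria table."""
    current_mape = mape(actual, forecast)
    return {
        "phase1_completeness_ge_85": completeness(readings) >= 85.0,
        "phase4_mape_lt_15": current_mape < 15.0,
        "phase6_degradation_lt_25": degradation(baseline_mape, current_mape) < 25.0,
    }


# Made-up sample values, for illustration only.
readings = [1.0, None, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
actual = [100.0, 200.0, 400.0]
forecast = [110.0, 180.0, 400.0]
result = check_criteria(readings, actual, forecast, baseline_mape=6.0)
print(result)
```

Checks of this kind cover only the quantitative criteria; qualitative ones, such as whether temporal cycles match domain knowledge, would still require expert review at the corresponding gate.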

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).