1. Introduction

Defect detection on wood surfaces is a critical task in the furniture and woodworking industries, directly influencing product quality, customer satisfaction, and production efficiency. While most modern manufacturing lines have adopted automation in machining and finishing processes, visual quality inspection remains predominantly manual. Such human-dependent inspection is prone to inconsistencies, fatigue-induced errors, and reduced throughput, leading to both false acceptance (leakage) and false rejection (overkill) of products. The integration of automated optical inspection (AOI) systems into production lines offers a practicable path toward smart manufacturing, enabling real-time, objective, and rapid defect detection. Recent advances in artificial intelligence (AI), particularly deep learning (DL), have significantly improved image-based defect detection in various industrial domains [1, 2]. However, despite the progress in generic defect detection, research on wood grain defect detection remains limited [3, 4, 5], primarily due to the complex texture patterns and wide variability in defect appearance.

Gabor functions are based on a sinusoidal plane wave with a particular frequency and orientation, which characterizes the spatial frequency information of the image. A set of Gabor filters with a variety of frequencies and orientations can effectively extract invariant features from an image. Due to these capabilities, Gabor filters are widely employed in image processing applications such as texture classification, image retrieval, and wood defect detection [6]. Through multi-scale and multi-orientation design, Gabor filters extract rich texture features and can capture slight texture variations on wood surfaces, aiding in the identification of defect locations and types. However, relying solely on Gabor filters for feature extraction may not be sufficient to handle the complexity and diversity of wood defects. Since wood defects exhibit diverse characteristics, more advanced feature learning and recognition techniques are required for accurate detection. To overcome this limitation, integrating Gabor filters with deep learning techniques has proven to be a highly effective strategy [7, 8, 9].
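To make the role of frequency, orientation, and phase offset concrete, the following is a minimal sketch of a real-valued Gabor kernel and a small filter bank in plain Python. The function names, the Gaussian envelope parameters `sigma` and `gamma`, and the example frequencies and orientations are illustrative assumptions, not the settings used in this study.

```python
import math

def gabor_kernel(size, omega, theta, psi=0.0, sigma=2.0, gamma=1.0):
    """Real-valued Gabor kernel as a size x size nested list (size must be odd).

    omega: angular spatial frequency (radians per pixel)
    theta: orientation in radians; psi: phase offset in radians
    sigma, gamma: envelope width and aspect ratio (illustrative defaults)
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the wave travels along direction theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(omega * xr + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel

def gabor_bank(size, omegas, thetas, psi=0.0):
    """One kernel per (frequency, orientation) pair."""
    return [gabor_kernel(size, w, t, psi) for w in omegas for t in thetas]

# Hypothetical bank: 2 frequencies x 4 orientations = 8 kernels of size 7 x 7.
bank = gabor_bank(
    7,
    omegas=[math.pi / 4, math.pi / 2],
    thetas=[k * math.pi / 4 for k in range(4)],
)
```

Each kernel in the bank responds most strongly to texture whose local frequency and direction match its own, which is why a bank covering several frequencies and orientations is used rather than a single filter.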

Convolutional neural networks (CNNs) are a deep learning architecture that leverages multiple layers of convolution and pooling operations to efficiently extract hierarchical features from images. CNNs have proven highly effective for tasks such as image classification, object detection, and segmentation [2]. Gabor convolutional networks (GCNs) integrate Gabor filters into CNNs, leveraging both the local feature extraction capability of Gabor filters and the feature learning and classification abilities of CNNs. This integration enhances the robustness of learned features against variations in orientation and scale [8]. However, despite these advantages, GCNs suffer from a more complex network architecture. Thus, optimizing the network architecture to enhance GCN performance and computational efficiency has become a valuable research topic.

The Taguchi method, developed by Dr. Genichi Taguchi, is a quality engineering approach primarily used for product design and process optimization. It employs design of experiments (DOE), particularly orthogonal arrays (OAs), to efficiently evaluate multiple factors affecting quality. It also incorporates the signal-to-noise (S/N) ratio to measure system robustness, with the goal of reducing variation and improving product reliability. The Taguchi method offers the following advantages: (i) Reduced experimental cost and time: by leveraging orthogonal arrays, the method significantly reduces the number of experimental runs while still achieving optimal design parameters. (ii) Systematic problem-solving approach: through parameter design and tolerance design, the method optimizes product performance during the development phase, thereby minimizing the need for costly modifications. Due to these benefits, the Taguchi method has been widely adopted in industries such as manufacturing, electronics, biomedical engineering, automotive, and semiconductor [10, 11].

Generally speaking, the design of CNN and GCN involves numerous hyperparameters, such as convolutional kernel size, Gabor convolutional filters, pooling strategies, number of layers, and learning rate. Traditional hyperparameter optimization methods such as grid search and random search suffer from high computational costs and may fail to capture robust parameter settings under small-sample conditions. In contrast, the Taguchi method utilizes orthogonal arrays to efficiently select representative parameter sets, significantly reducing the number of experimental runs and computational costs. This advantage motivates the adoption of the Taguchi method for CNN and GCN optimization, as it not only reduces the computational burden of hyperparameter tuning but also enhances model robustness, convergence speed, and generalization ability. Furthermore, the Taguchi method is particularly well-suited for applications with limited data and computational resources, such as texture image analysis, industrial inspection, and intelligent surveillance systems. Therefore, it provides a highly efficient and systematic approach for optimizing CNN and GCN architectures.

Based on the aforementioned reasons and advantages, this study proposes a wood grain defect recognition model based on Gabor convolutional networks, integrating convolutional neural networks, Gabor filters, and the Taguchi method. The proposed GCN model employs the Taguchi method to optimize the network architecture. Furthermore, to address the issue of limited training samples, this study utilizes image tiling and data augmentation techniques to effectively increase the number of training samples, thereby enhancing the stability and accuracy of the model.

This study addresses these gaps by proposing a Taguchi-optimized Gabor convolutional network for wood grain defect detection. The main contributions of this work are as follows:

i) Integration of interpretable texture feature extraction and deep feature learning through a GCN architecture specifically adapted for wood surface inspection.

ii) Systematic optimization of GCN hyperparameters using the Taguchi method, enabling high performance with reduced computational cost.

iii) Data augmentation and tiling strategies to overcome limited training data, enhancing model stability and generalization.

iv) Extensive comparative evaluation against a baseline CNN on the MVTec AD wood category dataset, demonstrating a 2.73% accuracy improvement.

The remainder of this study is organized as follows. Section 2 briefly reviews some studies on Gabor filters, CNNs, and their combination. The proposed optimization of Gabor convolutional networks using the Taguchi method and their application in wood grain defect detection are given in Section 3. Finally, Section 4 presents some conclusions of this study.

3. Proposed Methodology

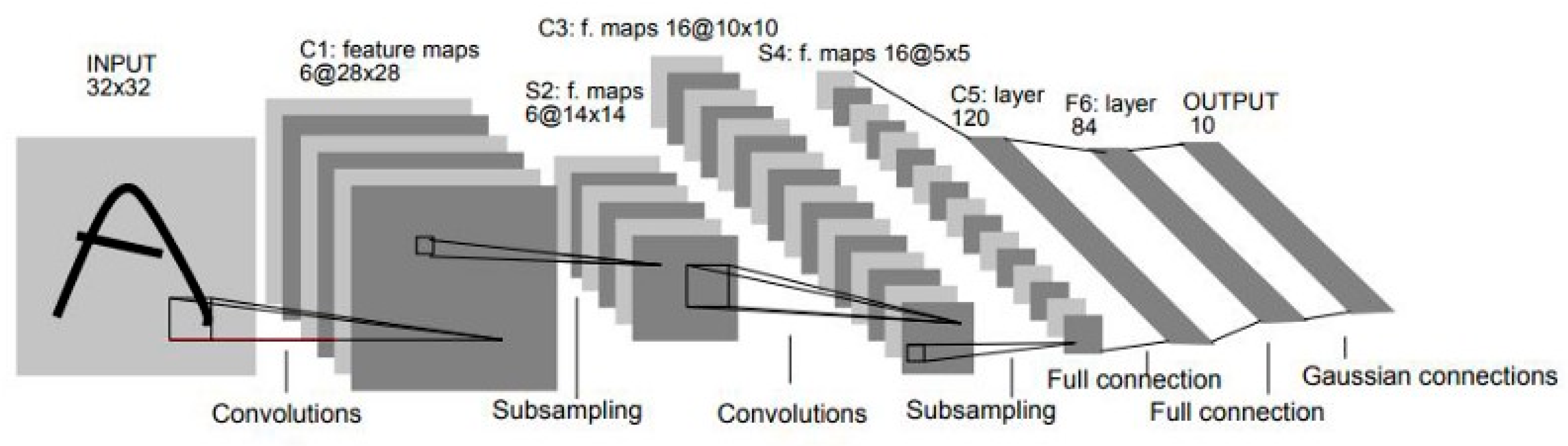

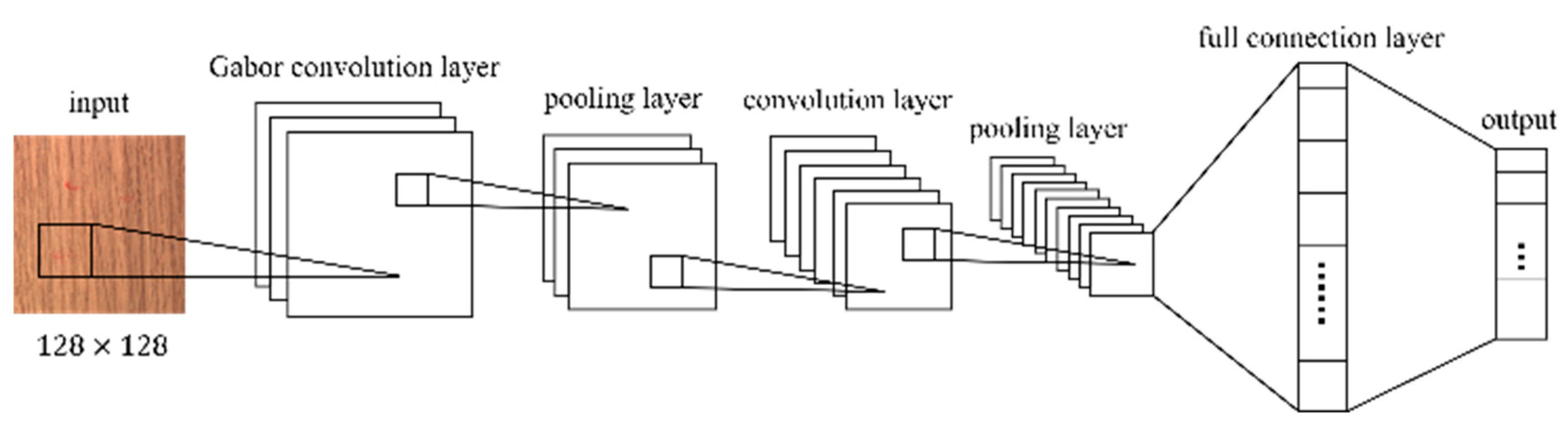

The architecture of the Gabor convolutional network (GCN) is illustrated in Figure 2. This design builds upon the foundational LeNet-5 architecture depicted in Figure 1. Notably, in the GCN architecture, the initial layer is a convolutional layer that employs Gabor kernels [17]. This modification preserves the filtering and feature extraction capabilities inherent to traditional convolutional layers. Additionally, it offers advantages such as reducing the need for extensive data preprocessing and enhancing the computational efficiency of the network.

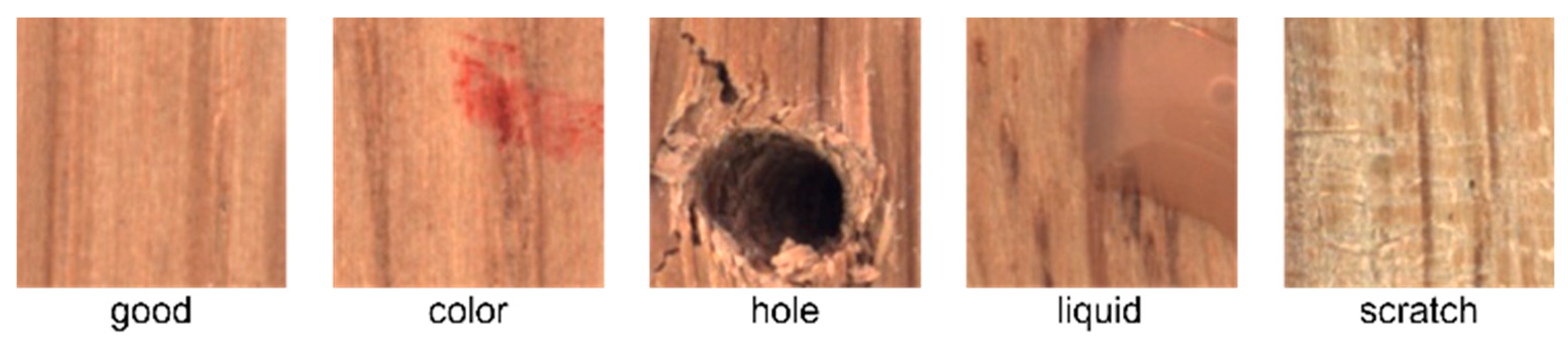

This study utilizes the wood category from the MVTec Anomaly Detection (MVTec AD) dataset [18]. The MVTec AD dataset comprises 15 categories with 3,629 images for training and validation and 1,725 images for testing. The training set contains only defect-free images, whereas the test set contains both defect-free images and images with various types of defects. Within this dataset, the wood category includes images of defects such as color anomalies, holes, liquid, and scratches, as illustrated in Figure 3.

Table 1 presents the number of images for each type within the wood category, where “Good” represents defect-free. Note that the wood category of the MVTec AD dataset includes 247 defect-free images for training and validation and 19 defect-free images for testing. Therefore, the total number of defect-free images, as represented in the “Good” category (second row of the table), is 266.

In the experimental setup, the model-building environment utilized PyTorch 2.3.0, Jupyter Notebook 7.0.6, and TensorFlow 2.10.0. The test environment comprised an Intel(R) Core™ i7-13700H CPU @ 3.40 GHz, 32.0 GB of RAM, an Nvidia GeForce RTX 3060 Ti GPU, and a Windows 11 64-bit operating system.

3.1. Data Preprocessing

Due to the lack of training samples for wood defect detection, this study employs two approaches to increase the number of training samples and reduce computational load:

i) Image tiling: The original wood grain images are divided into smaller tiles to increase the number of defect samples while simultaneously reducing computational complexity. In this study, a 1024×1024-pixel image is tiled into 64 sub-images of 128×128 pixels. Furthermore, when the tiled images serve as input to the defect detection model, if a specific sub-image is classified as a certain defect category, the corresponding region in the original image can be marked as the defect location. This enables both defect classification and localization within wood grain images.

ii) Image augmentation: To enhance the diversity of training samples and expand the dataset, this study applies various image augmentation techniques, including rotation, horizontal translation, vertical translation, and random scaling.
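The tiling and localization idea in item i) can be sketched as follows. This is a minimal illustration that works only with tile coordinates; the function names, the label strings, and the per-tile predictions are hypothetical stand-ins for the output of a trained classifier.

```python
def tile_boxes(width, height, tile):
    """Return (left, top, right, bottom) boxes of non-overlapping tiles, row by row."""
    if width % tile or height % tile:
        raise ValueError("image size must be a multiple of the tile size")
    return [
        (x, y, x + tile, y + tile)
        for y in range(0, height, tile)
        for x in range(0, width, tile)
    ]

def locate_defects(boxes, tile_labels, good_label="good"):
    """Map per-tile predictions back to regions of the original image."""
    return [(label, box) for box, label in zip(boxes, tile_labels) if label != good_label]

# A 1024 x 1024 image split into 128 x 128 tiles gives an 8 x 8 grid.
boxes = tile_boxes(1024, 1024, 128)

# Hypothetical classifier output: every tile is defect-free except tile 9.
labels = ["good"] * len(boxes)
labels[9] = "hole"

defects = locate_defects(boxes, labels)
```

In this example tile 9 lies in the second row and second column of the grid, so the reported defect region is the square from (128, 128) to (256, 256) in the original image.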

After applying the two data preprocessing methods described above, the training dataset for this study consists of a total of 10,000 samples (2,000 per category), while the test dataset contains 2,000 samples (400 per category), as shown in Table 1. During the training process, 80% of the training samples are used to optimize the model parameters, while the remaining 20% serve as the validation set to evaluate the model’s performance and confirm the optimal configuration.

3.2. Steps for Implementing the Taguchi Method

The Taguchi method is a systematic approach for optimizing process parameters by minimizing variability and improving robustness. It employs a structured experimental design using orthogonal arrays (OAs) to efficiently determine the optimal factor levels [10, 11]. The following steps outline its implementation in optimizing a GCN or CNN for wood grain defect detection.

i) Define the problem and objective: The first step involves identifying the need for optimization, such as improving the accuracy of wood grain defect detection using a GCN or CNN. To achieve this, it is crucial to determine the key factors influencing model performance. These factors include convolutional kernel size, the number of filters, and Gabor filter properties like frequency, orientation, and phase offset.

ii) Select control factors and levels: After defining the problem, the next step is to choose the parameters (control factors) that will be optimized and assign appropriate levels to each. For instance, convolutional kernel size may have three levels: 3×3, 5×5, and 7×7, while the number of filters in different layers may vary across experiments. Proper selection of these factors ensures that the experimental design captures a wide range of potential improvements.

iii) Design the experiment using an orthogonal array: Instead of testing all possible combinations, which would be computationally expensive, an orthogonal array (OA) is selected to systematically conduct experiments with reduced trials. The OA helps distribute experiments evenly across factor levels, ensuring a balanced analysis.

iv) Conduct experiments and record performance: Each experimental configuration is implemented by training and testing the GCN or CNN under the selected parameter settings.

v) Calculate the signal-to-noise (S/N) ratio: To measure the robustness of each configuration, the signal-to-noise (S/N) ratio is calculated.

vi) Analyze results and determine optimal factor levels: Once the S/N ratios are computed, the average S/N ratio for each factor level is analyzed to determine the optimal combination. The best parameter settings are selected based on the highest S/N ratios, and response plots are generated to visualize their effects on model performance.

vii) Confirm the optimal configuration: The GCN is then retrained using the optimized parameters to validate its performance. A comparison with a baseline CNN is conducted to assess improvements in accuracy and computational efficiency. The results confirm whether the optimized model outperforms the traditional approach.

viii) Implement and verify performance: Finally, the optimized model is applied to real-world wood defect detection tasks. Further evaluations are conducted to ensure its robustness and generalization ability. If needed, additional refinements can be made to enhance the model’s effectiveness.
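The balance property mentioned in step iii) can be checked directly. The sketch below, offered as an illustration rather than as part of the study's pipeline, takes the L8 array listed in Table 7 and verifies that every pair of columns contains each combination of levels equally often; the function name `is_orthogonal` is an assumption introduced here.

```python
from collections import Counter
from itertools import combinations, product

# L8 orthogonal array for seven two-level factors A..G (rows as in Table 7).
L8 = [
    [1, 1, 1, 1, 1, 1, 1],
    [1, 1, 1, 2, 2, 2, 2],
    [1, 2, 2, 1, 1, 2, 2],
    [1, 2, 2, 2, 2, 1, 1],
    [2, 1, 2, 1, 2, 1, 2],
    [2, 1, 2, 2, 1, 2, 1],
    [2, 2, 1, 1, 2, 2, 1],
    [2, 2, 1, 2, 1, 1, 2],
]

def is_orthogonal(array):
    """True if every pair of columns shows each level pair equally often."""
    n_cols = len(array[0])
    for i, j in combinations(range(n_cols), 2):
        counts = Counter((row[i], row[j]) for row in array)
        levels_i = {row[i] for row in array}
        levels_j = {row[j] for row in array}
        expected = len(array) / (len(levels_i) * len(levels_j))
        if any(counts[pair] != expected for pair in product(levels_i, levels_j)):
            return False
    return True

balanced = is_orthogonal(L8)

# Runs needed to test every combination of seven two-level factors.
full_factorial_runs = 2 ** 7
```

Because the array is balanced in this sense, the effect of each factor can be estimated by averaging over the runs at each of its levels, using 8 runs instead of the 128 a full factorial design would need.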

3.3. Optimizing Gabor Convolutional Networks Using the Taguchi Method

This study employs the Taguchi method to optimize the architecture of the Gabor convolutional network. The control factors to be optimized, along with their corresponding levels, are shown in Table 2. Among these, three control factors (frequency ω, orientation θ, and phase offset ψ) are related to the Gabor filter parameters, while the remaining five control factors are hyperparameters associated with the convolutional layers. As shown in Table 2, there is one factor with two levels and seven factors with three levels, resulting in a total of 1 × (2 − 1) + 7 × (3 − 1) = 15 degrees of freedom for the eight control factors. Consequently, an orthogonal array with at least 15 degrees of freedom is required for the Taguchi experiment. In this study, the L18 (2¹×3⁷) orthogonal array, as listed in Table 3, is selected, requiring 18 experimental runs to systematically evaluate the factor-level combinations. Without the orthogonal array, a full factorial design would require 4,374 (2¹×3⁷) experiments; with it, only 18 experiments are needed.
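The counting argument above can be reproduced in a few lines. This is a small sketch under the usual convention that an orthogonal array with N runs provides N − 1 degrees of freedom; the variable names are illustrative.

```python
from math import prod

# Number of levels per control factor: one two-level and seven three-level factors.
levels = [2] + [3] * 7

# Each factor contributes (levels - 1) degrees of freedom.
required_dof = sum(n - 1 for n in levels)

# A full factorial design tests every combination of levels.
full_factorial_runs = prod(levels)

# An orthogonal array with N runs offers N - 1 degrees of freedom.
l18_runs = 18
available_dof = l18_runs - 1

array_is_large_enough = available_dof >= required_dof
```

With these numbers the L18 array offers 17 degrees of freedom against the 15 required, while cutting the run count from 4,374 to 18.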

To reduce the influence of randomness and enhance reliability, each experimental configuration is independently executed 10 times. The accuracy of each independent run is recorded, and the mean of these 10 runs is used to represent the experimental performance of that configuration, as shown in Table 4. For convenience, the average accuracy is also included in Table 3. Since the objective of applying the Taguchi method is to improve the recognition accuracy of the Gabor convolutional network, this study considers the “larger-the-better” quality characteristic. Therefore, the “larger-the-better” formulation of the Taguchi method is applied for the calculation of the signal-to-noise (S/N) ratio, as expressed in Eq. 4:

$$\mathrm{S/N} = -10 \log_{10}\left( \frac{1}{n} \sum_{i=1}^{n} \frac{1}{y_i^{2}} \right) \qquad (4)$$

where $y_i$ represents the ith observed value, and n denotes the total number of observations.
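As a worked illustration, the standard larger-the-better S/N ratio, −10 log₁₀ of the mean of 1/y², can be evaluated on the ten runs of CNN experiment 1 from Table 8. The sketch assumes that accuracies are expressed as fractions rather than percentages, which is the scaling under which the result agrees with the value reported for that experiment in Table 7; the function name is hypothetical.

```python
import math

def sn_larger_the_better(values):
    """Larger-the-better S/N ratio in dB: -10 * log10(mean(1 / y^2))."""
    n = len(values)
    return -10.0 * math.log10(sum(1.0 / (y * y) for y in values) / n)

# Ten run accuracies of CNN experiment 1 (Table 8), written as fractions.
exp1 = [0.9360, 0.9765, 0.9560, 0.9675, 0.9805,
        0.9915, 0.9476, 0.9700, 0.9302, 0.9385]

sn_exp1 = sn_larger_the_better(exp1)  # roughly -0.365 dB
```

Because the mean is taken over 1/y², low-accuracy runs are penalized more heavily than high-accuracy runs are rewarded, so a configuration with the same average accuracy but larger run-to-run variation receives a lower S/N ratio.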

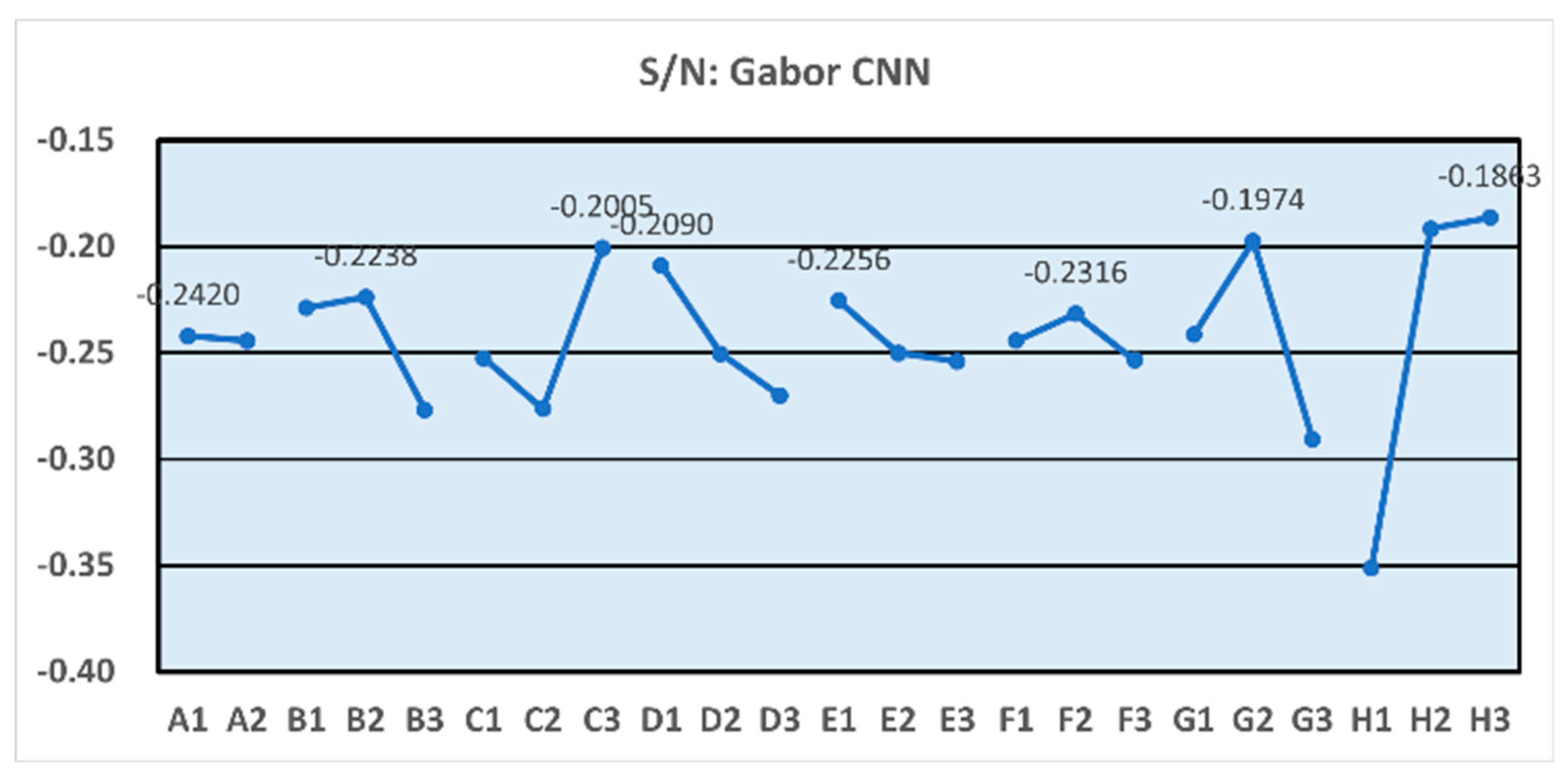

Based on the L18 orthogonal array and the recognition results in Table 4 (at the end of the paper), the S/N ratio for each experimental setup is listed in the last column of Table 3. The average S/N ratio of each factor at each level is listed in Table 5. A higher S/N ratio indicates more stable quality. The optimal level of each factor is shown in the last row of Table 5.

Figure 4 presents the factor S/N ratio response plot, which illustrates the mean response values calculated for each control factor and its corresponding levels. Since the quality characteristic of this study follows the “larger-the-better” criterion, the optimal factor settings can be identified from the response plot in Figure 4 as follows: the pooling method is max pooling, the number of filters in the first convolutional layer is 256, the kernel size of the first convolutional layer is (7,7), the number of filters in the second convolutional layer is 128, the kernel size of the second convolutional layer is (3,3), the number of frequency components is 6, the number of orientations is 4, and the number of phase offsets is 3.

3.4. Optimizing Convolutional Neural Networks Using the Taguchi Method

In this study, the control group employs a traditional convolutional neural network (CNN) with an architecture similar to the Gabor convolutional network shown in Figure 2. This network comprises two convolutional layers with corresponding pooling layers, followed by two fully connected layers. The Taguchi method is applied to optimize the CNN’s hyperparameters. The control factors and their corresponding levels are listed in Table 6, while Table 7 presents the L8 orthogonal array used in this study. The experimental results and S/N ratios are provided in Table 8 and the last column of Table 7, respectively.

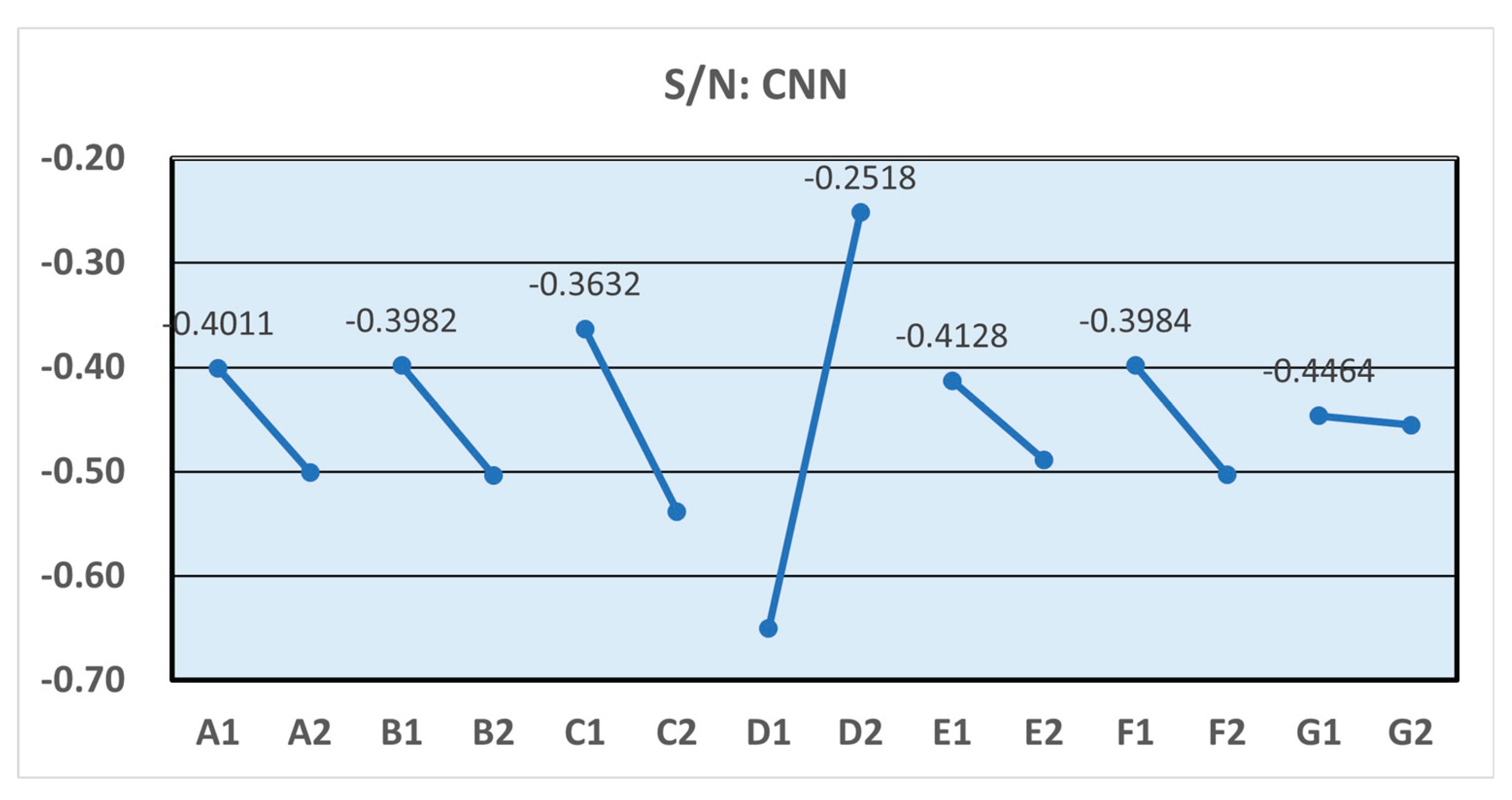

The average S/N ratio of each factor and level, along with the optimal parameter of each factor, is presented in Table 9. Additionally, Figure 5 depicts the corresponding Taguchi response plot, which illustrates the mean response values calculated for each control factor and its associated levels. Based on these results, the optimized control factors for the CNN are identified as follows: the pooling method is max pooling, the number of filters in the first convolutional layer is 128, the kernel size of the first convolutional layer is (3,3), the pooling size of the first pooling layer is 4, the number of filters in the second convolutional layer is 128, the kernel size of the second convolutional layer is (3,3), and the pooling size of the second pooling layer is 2.

Table 6. Levels of control factors: CNNs.

| No. | Control Factors | Level 1 | Level 2 |
| --- | --- | --- | --- |
| A | Pooling function | Max | Average |
| B | Conv1_filters | 128 | 512 |
| C | Conv1_kernel size | (3,3) | (7,7) |
| D | Conv1_pool_size | 2 | 4 |
| E | Conv2_filters | 128 | 512 |
| F | Conv2_kernel size | (3,3) | (7,7) |
| G | Conv2_pool_size | 2 | 4 |

Table 7. Average accuracy and S/N ratio of L8 orthogonal array: CNNs.

| No. | A | B | C | D | E | F | G | Ave. Acc. | S/N |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 95.94% | -0.3654 |
| 2 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 98.22% | -0.1568 |
| 3 | 1 | 2 | 2 | 1 | 1 | 2 | 2 | 92.65% | -0.7590 |
| 4 | 1 | 2 | 2 | 2 | 2 | 1 | 1 | 96.38% | -0.3233 |
| 5 | 2 | 1 | 2 | 1 | 2 | 1 | 2 | 92.33% | -0.7243 |
| 6 | 2 | 1 | 2 | 2 | 1 | 2 | 1 | 96.15% | -0.3465 |
| 7 | 2 | 2 | 1 | 1 | 2 | 2 | 1 | 91.82% | -0.7503 |
| 8 | 2 | 2 | 1 | 2 | 1 | 1 | 2 | 97.95% | -0.1805 |

Table 8. Recognition accuracy of convolutional neural networks.

| Exp. | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 | Run 6 | Run 7 | Run 8 | Run 9 | Run 10 | Average |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 93.60% | 97.65% | 95.60% | 96.75% | 98.05% | 99.15% | 94.76% | 97.00% | 93.02% | 93.85% | 95.94% |
| 2 | 98.85% | 96.80% | 98.40% | 98.75% | 98.85% | 97.03% | 98.21% | 99.12% | 98.26% | 97.93% | 98.22% |
| 3 | 97.50% | 79.90% | 96.40% | 97.65% | 98.20% | 91.97% | 97.23% | 93.78% | 96.81% | 77.03% | 92.65% |
| 4 | 93.70% | 97.45% | 94.10% | 98.00% | 97.90% | 96.24% | 95.95% | 97.05% | 97.65% | 95.76% | 96.38% |
| 5 | 97.40% | 95.50% | 86.15% | 97.65% | 91.00% | 87.99% | 87.83% | 92.28% | 99.08% | 88.39% | 92.33% |
| 6 | 93.85% | 96.80% | 92.45% | 96.80% | 96.35% | 98.13% | 94.85% | 95.69% | 98.69% | 97.85% | 96.15% |
| 7 | 89.65% | 95.75% | 87.60% | 91.15% | 92.65% | 91.64% | 90.87% | 90.83% | 96.27% | 91.82% | 91.82% |
| 8 | 97.90% | 98.15% | 97.10% | 98.65% | 98.45% | 97.04% | 98.71% | 97.31% | 97.83% | 98.35% | 97.95% |

Table 9. Factor S/N ratio response table: CNNs.

| Level | A | B | C | D | E | F | G |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | -0.4011 | -0.3982 | -0.3632 | -0.6498 | -0.4128 | -0.3984 | -0.4464 |
| 2 | -0.5004 | -0.5033 | -0.5383 | -0.2518 | -0.4887 | -0.5031 | -0.4552 |
| Rank | 5 | 3 | 2 | 1 | 6 | 4 | 7 |
| Best | Max | 128 | (3,3) | 4 | 128 | (3,3) | 2 |
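The response table can be recomputed from the L8 array and the S/N column of Table 7. The following is a minimal sketch of that main-effects analysis; the function and variable names are illustrative, and the ranking is taken to be by the spread between the best and worst level means, which is the usual convention.

```python
# L8 design and per-experiment S/N ratios, as listed in Table 7.
L8 = [
    [1, 1, 1, 1, 1, 1, 1],
    [1, 1, 1, 2, 2, 2, 2],
    [1, 2, 2, 1, 1, 2, 2],
    [1, 2, 2, 2, 2, 1, 1],
    [2, 1, 2, 1, 2, 1, 2],
    [2, 1, 2, 2, 1, 2, 1],
    [2, 2, 1, 1, 2, 2, 1],
    [2, 2, 1, 2, 1, 1, 2],
]
SN = [-0.3654, -0.1568, -0.7590, -0.3233, -0.7243, -0.3465, -0.7503, -0.1805]
FACTORS = "ABCDEFG"

def response_table(array, sn, factors):
    """Mean S/N ratio of each factor at each of its levels."""
    table = {}
    for col, name in enumerate(factors):
        by_level = {}
        for row, value in zip(array, sn):
            by_level.setdefault(row[col], []).append(value)
        table[name] = {lvl: sum(v) / len(v) for lvl, v in sorted(by_level.items())}
    return table

table = response_table(L8, SN, FACTORS)

# Larger-the-better: pick the level with the highest mean S/N for each factor.
best_level = {f: max(means, key=means.get) for f, means in table.items()}

# Rank factors by the spread between their best and worst level means.
effect = {f: max(m.values()) - min(m.values()) for f, m in table.items()}
ranking = sorted(effect, key=effect.get, reverse=True)
```

Run on these inputs, the level means round to the entries of Table 9, and the ranking places factor D (the first pooling size) first and factor G (the second pooling size) last.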

3.5. Comparative Analysis of Optimal Factor Combinations

The final experiment was performed using the optimal factor combinations. To ensure the reliability and stability of the results, the optimized Gabor convolutional network and the optimized convolutional neural network were each independently executed ten times, with the average accuracy serving as the primary metric for evaluating their predictive performance. The experimental results and corresponding average values are presented in Table 10. The optimized GCN achieved an average accuracy of 98.92%, whereas the optimized CNN attained 96.19%.

These findings demonstrate that the optimized GCN outperforms the optimized CNN by 2.73 percentage points in wood grain defect detection. This improvement can be attributed to the integration of the Gabor filter with the CNN architecture, which enhances the model's ability to extract texture features. By leveraging the edge and texture detection capabilities of the Gabor filter, the GCN achieves higher detection accuracy in this defect detection task.