1. Introduction

Over the past three decades, consecutive r-out-of-n systems and their variants have attracted significant attention due to their broad applicability across engineering and industrial domains. These systems effectively model real-world configurations such as microwave relay stations in telecommunications, oil pipeline segments, and vacuum assemblies in particle accelerators. Depending on the arrangement and operational logic of the components, several types of consecutive systems can be defined. In particular, a linear consecutive r-out-of-n:G system comprises n components arranged in sequence, whose lifetimes are assumed to be independent and identically distributed (i.i.d.). The system remains functional if at least r consecutive components are operational. Notably, classical series and parallel systems arise as special cases: the series system corresponds to the n-out-of-n:G configuration, while the parallel system corresponds to the 1-out-of-n:G case. The reliability properties of these systems have been extensively examined under a range of assumptions. Seminal contributions in this area include the works of Jung and Kim [

1], Shen and Zuo [

2], Kuo and Zuo [

3], Chang et al. [

4], Boland and Samaniego [

5], and Eryılmaz [

6,

7], among others. Among the various configurations, linear systems that satisfy the condition $2r \ge n$ are of particular interest, as they strike a balance between mathematical tractability and practical applicability in reliability analysis. In these systems, the lifetime of the i-th component is denoted by $T_i$, where $i = 1, 2, \ldots, n$. The lifetime of each component is assumed to follow a continuous distribution with probability density function (pdf) $f(t)$, cumulative distribution function (cdf) $F(t)$, and survival function $\bar{F}(t) = 1 - F(t)$. The system lifetime is denoted by $T_{r|n:G}$. Notably, Eryilmaz [8] showed that when $2r \ge n$, the reliability function of the consecutive $r$-out-of-$n$:G system is given by:

$$\bar{F}_{r|n:G}(t) = (n-r+1)\,\bar{F}^{r}(t) - (n-r)\,\bar{F}^{r+1}(t), \quad t > 0. \tag{1}$$

In information theory, a central objective is to quantify the uncertainty inherent in probability distributions. Within this framework, we focus on the application of Tsallis entropy to consecutive r-out-of-n:G systems, where component lifetimes follow continuous probability distributions. Let $T$ be a nonnegative random variable with cumulative distribution function $F$ and probability density function $f$. The Tsallis entropy of order $\alpha$ ($\alpha > 0$, $\alpha \neq 1$), introduced by Tsallis [9], serves as a valuable measure of uncertainty and is defined as:

$$H_\alpha(T) = \frac{1}{\alpha-1}\left[1 - \int_0^\infty f^{\alpha}(t)\,dt\right] = \frac{1}{\alpha-1}\left[1 - \int_0^1 f^{\alpha-1}\big(F^{-1}(u)\big)\,du\right], \tag{2}$$

where $F^{-1}(u) = \inf\{t : F(t) \ge u\}$, for $u \in [0, 1]$, represents the quantile function of $F$.
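The quantile form of the definition lends itself to a quick numerical check. The sketch below is illustrative only: it assumes the Tsallis entropy is $\frac{1}{\alpha-1}\big[1-\int_0^1 f^{\alpha-1}(F^{-1}(u))\,du\big]$, uses a plain midpoint rule, and compares the result with the closed form for an exponential lifetime. The function names and the rate value are hypothetical choices, not taken from the paper.

```python
import math


def tsallis_entropy(density_quantile, alpha, n_grid=200_000):
    """Approximate (1 - int_0^1 f(F^{-1}(u))^(alpha-1) du) / (alpha - 1).

    density_quantile(u) must return f(F^{-1}(u)) for 0 < u < 1.
    The midpoint rule never evaluates the endpoints u = 0 and u = 1.
    """
    if alpha <= 0 or alpha == 1:
        raise ValueError("alpha must be positive and different from 1")
    h = 1.0 / n_grid
    integral = h * sum(
        density_quantile((k + 0.5) * h) ** (alpha - 1.0) for k in range(n_grid)
    )
    return (1.0 - integral) / (alpha - 1.0)


def exponential_density_quantile(rate):
    # For F(t) = 1 - exp(-rate * t), f(F^{-1}(u)) = rate * (1 - u).
    return lambda u: rate * (1.0 - u)


def tsallis_exponential_closed_form(rate, alpha):
    # int_0^inf f^alpha(t) dt = rate^(alpha - 1) / alpha for the exponential pdf.
    return (1.0 - rate ** (alpha - 1.0) / alpha) / (alpha - 1.0)


if __name__ == "__main__":
    for alpha in (0.5, 2.0, 3.0):
        numeric = tsallis_entropy(exponential_density_quantile(2.0), alpha)
        exact = tsallis_exponential_closed_form(2.0, alpha)
        print(f"alpha={alpha}: numeric={numeric:.4f}, closed form={exact:.4f}")
```

For $\alpha < 1$ the integrand is singular at $u = 1$, so the midpoint rule converges more slowly there; a finer grid or an adaptive integrator would be a reasonable substitute.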

In particular, the Shannon differential entropy, a foundational concept in information theory introduced by Shannon [10], can be obtained as the limiting case of the Tsallis entropy as $\alpha \to 1$. Specifically, it is defined by:

$$H(T) = \lim_{\alpha \to 1} H_\alpha(T) = -\int_0^\infty f(t)\log f(t)\,dt. \tag{3}$$

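An alternative and insightful representation of Tsallis entropy can be derived from Equation (2) by expressing it in terms of the hazard rate function. Specifically, it takes the form:

$$H_\alpha(T) = \frac{1}{\alpha-1}\left[1 - \frac{1}{\alpha}\,E\big[\lambda^{\alpha-1}(T_\alpha)\big]\right], \tag{4}$$

where $\lambda(t) = f(t)/\bar{F}(t)$ represents the hazard rate function, with $\bar{F} = 1 - F$ the survival function, $E[\cdot]$ means the expectation, and $T_\alpha$ follows a pdf given by:

$$f_\alpha(t) = \alpha f(t)\,\bar{F}^{\alpha-1}(t), \quad t > 0. \tag{5}$$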
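The step from Equation (2) to the hazard rate form is a one-line factorization. The derivation below is a sketch under stated assumptions: $\lambda(t) = f(t)/\bar{F}(t)$ with $\bar{F} = 1 - F$, and $T_\alpha$ is taken to have the proportional hazards pdf $\alpha f(t)\bar{F}^{\alpha-1}(t)$, which integrates to one because $\int_0^\infty \alpha f(t)\bar{F}^{\alpha-1}(t)\,dt = \big[-\bar{F}^{\alpha}(t)\big]_0^\infty = 1$.

```latex
\begin{align*}
\int_0^\infty f^{\alpha}(t)\,dt
  &= \int_0^\infty \left(\frac{f(t)}{\bar F(t)}\right)^{\alpha-1} f(t)\,\bar F^{\alpha-1}(t)\,dt \\
  &= \frac{1}{\alpha}\int_0^\infty \lambda^{\alpha-1}(t)\,\alpha f(t)\,\bar F^{\alpha-1}(t)\,dt
   = \frac{1}{\alpha}\,E\!\left[\lambda^{\alpha-1}(T_\alpha)\right], \\
H_\alpha(T)
  &= \frac{1}{\alpha-1}\left[1-\int_0^\infty f^{\alpha}(t)\,dt\right]
   = \frac{1}{\alpha-1}\left[1-\frac{1}{\alpha}\,E\!\left[\lambda^{\alpha-1}(T_\alpha)\right]\right].
\end{align*}
```

As a sanity check, a constant hazard rate $\lambda$ (the exponential case) gives $E[\lambda^{\alpha-1}(T_\alpha)] = \lambda^{\alpha-1}$, so the expression reduces to $\frac{1}{\alpha-1}\big[1 - \lambda^{\alpha-1}/\alpha\big]$.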

It is important to note that Tsallis entropy does not necessarily yield positive values for all $\alpha$. Moreover, $H_\alpha(T)$ remains invariant under location transformations, though it is sensitive to changes in scale. Unlike Shannon entropy, which is additive for independent variables, Tsallis entropy exhibits non-additive behavior. Specifically, for a bivariate random vector $(X, Y)$ with independent components, we have:

$$H_\alpha(X, Y) = H_\alpha(X) + H_\alpha(Y) + (1-\alpha)\,H_\alpha(X)\,H_\alpha(Y).$$

The investigation of information-theoretic properties in reliability systems and order statistics has garnered significant scholarly attention. Foundational contributions in this domain include the works of Wong and Chen [

11], Park [

12], Ebrahimi et al. [

13], Zarezadeh and Asadi [

14], Toomaj and Doostparast [

15], Toomaj [

16] and Mesfioui et al. [

17], among others. More recently, Alomani and Kayid [

18] explored additional aspects of Tsallis entropy, focusing on coherent and mixed systems under an i.i.d. assumption. Baratpour and Khammar [

19] examined its behavior in relation to order statistics and record values, while Kumar [

20] studied its role in the context of

r-records. Further advancements were made by Kayid and Alshehri [

21], who derived an explicit expression for the lifetime entropy of consecutive

r-out-of-

n:G systems, established a characterization result, proposed practical bounds, and introduced a nonparametric estimator. Complementing this research, Kayid and Shrahili [

22] investigated the fractional generalized cumulative residual entropy for similar systems, providing a computational formulation, preservation properties, and two nonparametric estimators supported by empirical validation.

Building upon the existing body of research, this paper aims to further elucidate the role of Tsallis entropy in the analysis of consecutive r-out-of-n:G systems. By extending earlier results, we offer deeper insights into its structural characteristics, propose refined bounding techniques, and develop estimation methods specifically adapted to these reliability models.

The remainder of this paper is organized as follows.

Section 2 presents a novel representation for the Tsallis entropy of consecutive

r-out-of-

n:G systems, denoted by

, where component lifetimes are drawn from an arbitrary continuous distribution function

F. This representation is derived using the case of the uniform distribution as a baseline. Given the inherent difficulty in deriving exact expressions for Tsallis entropy in complex reliability structures, we address this challenge by establishing several analytical bounds, illustrated through numerical examples. In

Section 3, we explore characterization results for Tsallis entropy in the context of consecutive systems, establishing key theoretical properties.

Section 4 provides computational evidence supporting our results and introduces a nonparametric estimator tailored for system-level Tsallis entropy. The estimator’s performance is demonstrated using both simulated and real-world data. Finally,

Section 5 concludes with a summary of the main findings and their implications.

2. Tsallis Entropy of Consecutive r-out-of-n:G System

This section is divided into three main parts. First, we provide a concise review of key results concerning Tsallis entropy and its connections to Rényi and Shannon differential entropies. In the second part, we derive an explicit expression for the Tsallis entropy of a consecutive r-out-of-n:G system and examine its behavior under various stochastic orders. Finally, we present a set of analytical bounds that shed additional light on the entropy structure of these systems.

2.1. Results on Tsallis Entropy

Differential entropy serves as a measure of the average uncertainty associated with a continuous random variable. Broadly speaking, the more a probability density function $f$ deviates from the uniform distribution, the lower its differential entropy. In essence, this quantity reflects the expected amount of information gained from observing a specific realization of the random variable. Rényi entropy offers a more flexible framework for quantifying uncertainty compared to differential entropy. For a nonnegative random variable $T$ with probability density function $f$, Rényi entropy introduces a parameter $\alpha$ that enables the exploration of different aspects of uncertainty depending on the weight assigned to various probability outcomes. It is defined as:

$$R_\alpha(T) = \frac{1}{1-\alpha}\log\int_0^\infty f^{\alpha}(t)\,dt, \quad \alpha > 0,\ \alpha \neq 1.$$

The Tsallis and Renyi entropy are also measures of the disparity of the pdf from the uniform distribution. Additionally, these information measures take values in . It is known that for an absolutely continuous nonnegative random variable , we have and . Moreover, one can see that the connection of Renyi and Tsallis entropies is given by and where is defined in (3). In the next theorem, we investigate the relationship of the Shannon differential entropy of proportional hazards rate model defined by (5).

Theorem 2.1.

For an absolutely continuous nonnegative random variable , we have

Proof. By the log-sum inequality, we have

where

denotes the hazard rate function of

. By noting that

we get the results for all , and hence the theorem.

2.2. Expression and Stochastic Orders

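To obtain the Tsallis entropy expression for the consecutive r-out-of-n:G system, we apply the probability integral transformation $U_{r|n:G} = F(T_{r|n:G})$. It is well known that the transformed component lifetimes $U_i = F(T_i)$ follow a uniform distribution on $(0, 1)$ whenever $F$ is continuous. Using this transformation, we proceed to obtain an explicit expression for the Tsallis entropy of the system lifetime $T_{r|n:G}$, assuming that the component lifetimes are independently drawn from a continuous distribution function F. Based on Equation (1), the probability density function of $T_{r|n:G}$ is given by:

$$f_{r|n:G}(t) = f(t)\left[r(n-r+1)\,\bar{F}^{r-1}(t) - (r+1)(n-r)\,\bar{F}^{r}(t)\right], \quad t > 0. \tag{9}$$

Furthermore, when $2r \ge n$, the pdf of $U_{r|n:G}$ can be represented as follows:

$$g_{r|n:G}(u) = r(n-r+1)(1-u)^{r-1} - (r+1)(n-r)(1-u)^{r}, \quad 0 < u < 1. \tag{10}$$

We are now ready to present the following result based on the previous analysis.

Proposition 2.1.

For $2r \ge n$ and all $\alpha > 0$, $\alpha \neq 1$, the Tsallis entropy of $T_{r|n:G}$ can be expressed as follows:

$$H_\alpha(T_{r|n:G}) = \frac{1}{\alpha-1}\left[1 - \int_0^1 g^{\alpha}_{r|n:G}(u)\, f^{\alpha-1}\big(F^{-1}(u)\big)\,du\right], \tag{11}$$

where $g_{r|n:G}(u)$ is given by (10).

Proof. Applying the transformation $u = F(t)$ and referencing Equations (2) and (9), we obtain:

$$H_\alpha(T_{r|n:G}) = \frac{1}{\alpha-1}\left[1 - \int_0^\infty f^{\alpha}_{r|n:G}(t)\,dt\right] = \frac{1}{\alpha-1}\left[1 - \int_0^\infty f^{\alpha}(t)\, g^{\alpha}_{r|n:G}\big(F(t)\big)\,dt\right] = \frac{1}{\alpha-1}\left[1 - \int_0^1 g^{\alpha}_{r|n:G}(u)\, f^{\alpha-1}\big(F^{-1}(u)\big)\,du\right],$$

and this completes the proof.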
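A small numerical sketch can make the representation in Proposition 2.1 concrete. It assumes the system-level integral has the form $\int_0^1 g^{\alpha}(u) f^{\alpha-1}(F^{-1}(u))\,du$ with $g(u) = r(n-r+1)(1-u)^{r-1} - (r+1)(n-r)(1-u)^{r}$ for $2r \ge n$, which follows from differentiating the reliability function of the system. The function names and the choice of standard exponential components are illustrative assumptions.

```python
def g_pdf(u, r, n):
    """Pdf of U = F(T) for a linear consecutive r-out-of-n:G system with 2r >= n."""
    v = 1.0 - u
    return r * (n - r + 1) * v ** (r - 1) - (r + 1) * (n - r) * v ** r


def system_tsallis_entropy(density_quantile, r, n, alpha, n_grid=100_000):
    """Midpoint-rule approximation of the system Tsallis entropy.

    density_quantile(u) must return f(F^{-1}(u)) for the component lifetimes.
    """
    if not (1 <= r <= n and 2 * r >= n):
        raise ValueError("requires 1 <= r <= n and 2r >= n")
    if alpha <= 0 or alpha == 1:
        raise ValueError("alpha must be positive and different from 1")
    h = 1.0 / n_grid
    total = 0.0
    for k in range(n_grid):
        u = (k + 0.5) * h
        total += g_pdf(u, r, n) ** alpha * density_quantile(u) ** (alpha - 1.0)
    return (1.0 - h * total) / (alpha - 1.0)


def standard_exponential_density_quantile(u):
    # For F(t) = 1 - exp(-t), f(F^{-1}(u)) = 1 - u.
    return 1.0 - u


if __name__ == "__main__":
    # 2-out-of-3:G system and the series (3-out-of-3:G) system, alpha = 2.
    print(system_tsallis_entropy(standard_exponential_density_quantile, 2, 3, 2.0))
    print(system_tsallis_entropy(standard_exponential_density_quantile, 3, 3, 2.0))
```

For the series case $r = n$, the system lifetime of exponential components is again exponential with rate $n$, so the numerical value can be compared with the single-component closed form $\frac{1}{\alpha-1}\big[1 - n^{\alpha-1}/\alpha\big]$.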

In the subsequent theorem, we present an alternative representation for by employing Newton's generalized binomial theorem and Theorem 2.1.

Theorem 2.2.

Under the conditions of Proposition 2.1, we get

where and for all .

Proof. By defining

and

, and referring to (10) and (11), we find that

where the third equality follows directly from Newton's generalized binomial series

. This result, in conjunction with Eq. (11), completes the proof.

We now illustrate the utility of the representation in (11) through the following example.

Example 2.1. Consider a linear consecutive 2-out-of-4:G system with the following lifetime:

$$T_{2|4:G} = \max\{\min(T_1, T_2),\ \min(T_2, T_3),\ \min(T_3, T_4)\}.$$

Let us assume that the component lifetimes are i.i.d. and follow the log-logistic distribution (known as the Fisk distribution in economics). The pdf of this distribution is represented as follows:

After appropriate algebraic manipulation, the following identity is obtained:

As a result of specific algebraic manipulations, we obtain the following expression for the Tsallis entropy:

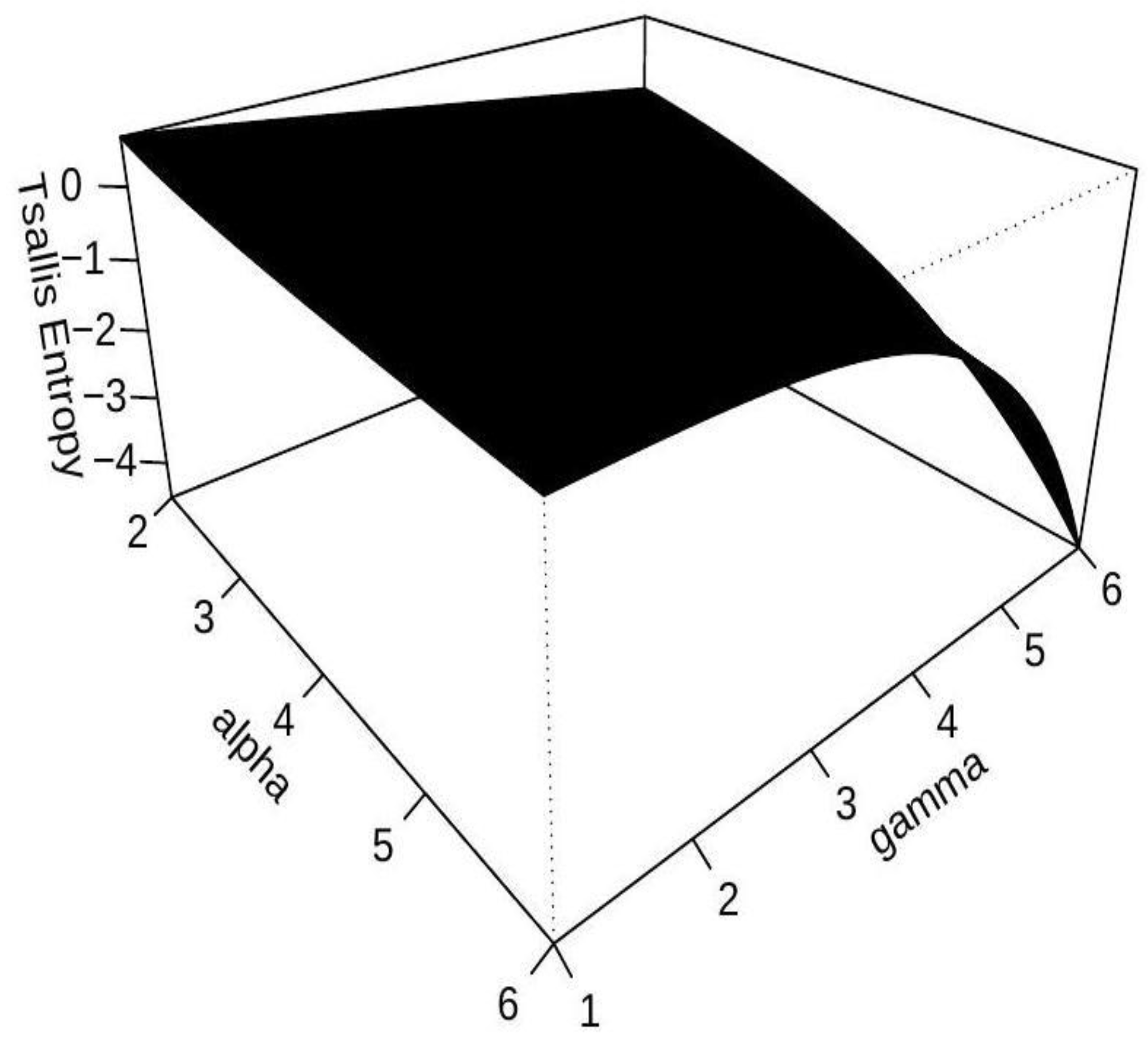

Due to the difficulty of obtaining a closed-form expression, numerical methods are employed to examine the relationship between and the parameters and . The analysis focuses on the consecutive 2-out-of-4:G system and is carried out for values and , as the integral diverges for and .

As illustrated in

Figure 1, the Tsallis entropy decreases as the parameters

and

increase. This trend reflects the sensitivity of the entropy measure to these parameters and underscores their substantial impact on the system's information-theoretic characteristics.
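The numerical evaluation described in Example 2.1 can be sketched as follows. This is an illustration under assumptions, not the computation used for Figure 1: the log-logistic distribution is parameterized here as $F(t) = 1/(1 + (t/\gamma)^{-\beta})$ with hypothetical names `shape` ($\beta$) and `scale` ($\gamma$), which may differ from the parameterization used in the example, and the system pdf on the uniform scale is taken as $g(u) = 6(1-u) - 6(1-u)^2$ for the 2-out-of-4:G system.

```python
import math


def g_2_of_4(u):
    # r(n-r+1)(1-u)^(r-1) - (r+1)(n-r)(1-u)^r with r = 2, n = 4.
    v = 1.0 - u
    return 6.0 * v - 6.0 * v * v


def loglogistic_density_quantile(u, shape, scale):
    # f(F^{-1}(u)) for the assumed cdf F(t) = 1 / (1 + (t / scale)^(-shape)).
    return (shape / scale) * u ** (1.0 - 1.0 / shape) * (1.0 - u) ** (1.0 + 1.0 / shape)


def tsallis_2_of_4_loglogistic(alpha, shape, scale=1.0, n_grid=100_000):
    """Midpoint-rule value of the system Tsallis entropy for log-logistic components."""
    h = 1.0 / n_grid
    total = 0.0
    for k in range(n_grid):
        u = (k + 0.5) * h
        total += (
            g_2_of_4(u) ** alpha
            * loglogistic_density_quantile(u, shape, scale) ** (alpha - 1.0)
        )
    return (1.0 - h * total) / (alpha - 1.0)


if __name__ == "__main__":
    for shape in (2.0, 3.0, 4.0):
        print(shape, tsallis_2_of_4_loglogistic(alpha=2.0, shape=shape))
```

With $\alpha = 2$ and unit scale, the integral reduces to $72\,\Gamma(3.5)\Gamma(4.5)/\Gamma(8)$ at shape 2, which gives an independent check of the quadrature; under this parameterization the computed entropy decreases as the shape increases.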

Definition 2.1.

Let $X$ and $Y$ be two absolutely continuous nonnegative random variables with pdfs $f_X$ and $f_Y$, cdfs $F_X$ and $F_Y$, and survival functions $\bar{F}_X$ and $\bar{F}_Y$, respectively. Then, (i) $X$ is smaller than or equal to $Y$ in the dispersive order, denoted by $X \le_{disp} Y$, if and only if $f_X(F_X^{-1}(u)) \ge f_Y(F_Y^{-1}(u))$ for all $0 < u < 1$; (ii) $X$ is smaller than $Y$ in the hazard rate order, denoted by $X \le_{hr} Y$, if $\bar{F}_Y(t)/\bar{F}_X(t)$ is increasing in $t > 0$; (iii) $X$ is said to exhibit the decreasing failure rate (DFR) property if $f_X(t)/\bar{F}_X(t)$ is decreasing in $t$.

Additional details on these ordering concepts can be found in the comprehensive work of Shaked and Shanthikumar [

23]. The following theorem is a direct consequence of the expression given in Equation (11).

Theorem 2.3.

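Let $T^{X}_{r|n:G}$ and $T^{Y}_{r|n:G}$ be the lifetimes of two consecutive r-out-of-n:G systems consisting of i.i.d. components having cdfs $F_X$ and $F_Y$, respectively. If $X \le_{disp} Y$, then $H_\alpha(T^{X}_{r|n:G}) \le H_\alpha(T^{Y}_{r|n:G})$ for all $\alpha > 0$, $\alpha \neq 1$.

Proof. If $X \le_{disp} Y$, then for all $\alpha > 1$ ($0 < \alpha < 1$), we have

$$\int_0^1 g^{\alpha}_{r|n:G}(u)\, f_X^{\alpha-1}\big(F_X^{-1}(u)\big)\,du \ \ge\ (\le)\ \int_0^1 g^{\alpha}_{r|n:G}(u)\, f_Y^{\alpha-1}\big(F_Y^{-1}(u)\big)\,du.$$

Since the factor $1/(\alpha-1)$ in (11) is positive for $\alpha > 1$ and negative for $0 < \alpha < 1$, this yields that $H_\alpha(T^{X}_{r|n:G}) \le H_\alpha(T^{Y}_{r|n:G})$ for all $\alpha > 0$, $\alpha \neq 1$, and this completes the proof.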

The following result rigorously demonstrates that, within the class of consecutive r-out-of-n:G systems composed of components with the DFR property, the series system yields the minimum Tsallis entropy.

Proposition 2.2. Consider the lifetime of a consecutive r-out-of- system, comprising i.i.d. components that exhibit the DFR property. Then, for , and for all ,

Proof. (i) It is easy to see that

. Furthermore, if

exhibits the DFR property, then it follows that

also possesses the DFR property. Due to Bagai and Kochar [

24], it can be concluded that

which immediately yields

by recalling Theorem 2.3.

(ii) Based on the findings presented in Proposition 3.2 of Navarro and Eryilmaz [

25], it can be inferred that

. Consequently, employing analogous reasoning to that employed in Part (i) leads to the acquisition of similar results.

A notable application of Equation (11) lies in comparing the Tsallis entropy of consecutive r-out-of-n:G systems whose components are independent but follow different lifetime distributions, as formalized in the following result.

Proposition 2.3.

Under the conditions of Theorem 2.6, if for all , and , for

then for all and .

Proof. Given that

for all

, Equation (2) implies

where

. Assuming

, based on Equation (11), we have

The first inequality holds because in and in when . The last inequality follows directly from equation (14). Consequently, we have for , which completes the proof for the case . The proof for the case , follows a similar argument.

The following example is provided to illustrate the application of the preceding proposition.

Example 2.2. Assume coherent systems with lifetimes and , where are i.i.d. component lifetimes with a common cdf , and are i.i.d. component lifetimes with the common cdf . We can easily confirm that and , so . Additionally, since and , we have . Thus Theorem 2.3 implies that

2.3. Some Bounds

In the absence of closed-form expressions for the Tsallis entropy of consecutive systems, particularly when dealing with diverse lifetime distributions or systems comprising a large number of components, bounding techniques become a practical necessity for approximating entropy behavior over the system's lifetime. To address this challenge, the present study examines the role of analytical bounds in characterizing the Tsallis entropy of consecutive r-out-of-n:G systems. Toward this goal, we introduce the following lemma, which provides a lower bound on the Tsallis entropy for such systems. This bound yields valuable insights into entropy dynamics in realistic settings and contributes to a deeper understanding of the underlying system structure.

Lemma 2.1.

Consider a nonnegative continuous random variable with pdf and cdf such that , where , denotes the mode of the pdf . Then, for , we have

Proof. By noting that

, then for

, we have

. Now, the identity

implies that

and hence the result. When , then we have . Now, since , by using the similar arguments, we have the results.

The following theorem establishes a lower bound for the Tsallis entropy of . This bound depends on the Tsallis entropy of a consecutive r-out-of-n:G system under the uniform distribution, as well as the mode of the underlying component lifetime distribution.

Example 2.3.

Assume a linear consecutive -out-of- :G system with lifetime

where

for

. Suppose further that the component lifetimes are i.i.d. and follow a common mixture of two Pareto distributions with parameters

and

with pdf as follows:

Given that the mode of this distribution is

, we can determine the mode value

as

. Consequently, from Lemma 2.1, we get

In the following theorem, we derive bounds for the Tsallis entropy of consecutive r-out-of-n:G systems by leveraging the Tsallis entropy of the individual components.

Theorem 2.4.

When , we have

where where .

Proof. The mode of

is clearly observed as

. As a result, we can establish that

for

. Therefore, for

, we can conclude that:

and hence the theorem.

The following example illustrates the lower bound presented in Theorem 2.4 by applying it to a consecutive r-out-of-n:G system.

Example 2.4.

Let us consider a linear consecutive 10-out-of-18:G system with lifetime , where for . In order to conduct the analysis, we assume that the lifetimes of the individual components in the system are i.i.d. according to a common standard exponential distribution. By performing a simple verification, we find that the optimal value is equal to 0.08, resulting in a corresponding value of as 4.268. Utilizing Theorem 2.4, we can write

In the next result, we provide bounds on the Tsallis entropy of consecutive r-out-of-n:G systems in terms of the hazard rate function of the component lifetimes.

Proposition 2.4.

Let , be the lifetimes of components of a consecutive -out-of- systems with having the common failure rate function . If and . Then

where has the pdf , for .

Proof. It is easy to see that the hazard rate function of

can be expressed as

, where

Since for and , it follows that is a monotonically decreasing function of . Given that and , we have for , which implies that , for . Combining this result with the relationship between Tsallis entropy and the hazard rate (as defined in Eq. (4)) for , completes the proof.

Let us consider an illustrative example that demonstrates the application of the preceding proposition.

Example 2.5. Consider a linear consecutive 2-out-of-3:G system with lifetime , where the component lifetimes are i.i.d. with an exponential distribution with the cdf for . The exponential distribution has a constant hazard rate, , so, it follows that . Applying Proposition 2.4 yields the bounds on the Tsallis entropy of the system as . Based on (11), one can compute the exact value as which falls within the bounds.

The following theorem holds under the assumption that the expected value of the squared hazard rate function of is finite.

Theorem 2.5.

Under the conditions of Theorem 2.11 such that , for and , it holds that

Proof. The pdf of

can be rewritten as

, while its failure rate function is given by

Consequently, by (4) and using Cauchy-Schwarz inequality, we obtain

The last equality follows from the change of variable , completing the proof.

3. Characterization Results

In this section, we investigate characterization results for consecutive systems using Tsallis entropy. To illustrate these results, we focus on a specific system type: a linear consecutive $(n-i)$-out-of-$n$:G system, under the condition that $n \ge 2i$, where $i = 0, 1, \ldots, \lfloor n/2 \rfloor$. To this end, we begin by revisiting a lemma derived from the Müntz–Szász Theorem (as referenced in Kamps [26]), which is commonly used in moment-based characterization theorems.

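Lemma 3.1. For an integrable function $\psi(x)$ on the finite interval $(a, b)$, if $\int_a^b x^{n_j}\psi(x)\,dx = 0$ for all $j \ge 1$, then $\psi(x) = 0$ for almost all $x \in (a, b)$, where $\{n_j,\ j \ge 1\}$ is a strictly increasing sequence of positive integers satisfying $\sum_{j=1}^{\infty} 1/n_j = \infty$.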

It is worth pointing out that Lemma 3.1 is a well-established result in functional analysis, stating that the set $\{x^{n_1}, x^{n_2}, \ldots;\ 1 \le n_1 < n_2 < \cdots\}$ constitutes a complete sequence. Notably, Hwang and Lin [27] expanded the scope of the Müntz–Szász theorem for the functions $\{\phi^{n_j}(x),\ j \ge 1\}$, where $\phi(x)$ is both absolutely continuous and monotonic over the interval $(a, b)$.

Theorem 3.1.

Consider two consecutive ()-out-of-n:G systems with lifetimes and composed of i.i.d. components with cdfs and , and pdfs and , respectively. Then and belong to the same family of distributions, but for a change in location, if and only if for a fixed ,

Proof. For the necessity part, since

and

belong to the same family of distributions, but for a change in location, then

, for all

and

. Then, it is clear that

To establish the sufficiency part, we first observe that for a consecutive (

)-out-of-

:G system, the following equation holds

where

and

ranges from 0 to

. Given the assumption that

, we can write

By applying Lemma 3.1 with the function

and considering the complete sequence

, one can conclude that

Consequently, and are part of the same distribution family, differing only in a location shift.

Since the series system is precisely the consecutive n-out-of-n:G system, the following corollary characterizes its Tsallis entropy.

Corollary 3.1.

Let and be two series systems having the common pdfs and and cdfs and , respectively. Then and belong to the same family of distributions, but for a change in location, if and only if

Another helpful characterization is provided in the following theorem.

Theorem 3.2.

Under the conditions of Theorem 3.1, and belong to the same family of distributions, but for a change in location and scale, if and only if for a fixed ,

Proof. The necessity is straightforward, so we must now establish the sufficiency aspect. Leveraging Eqs. (6) and (18), we can derive

An analogous argument can be made for

. If relation (22) holds for two cdfs

and

, then we can infer from Equation (23) that

Let us set

. Using similar arguments as in the proof of Theorem 3.1, we can write

The proof is then completed by using similar arguments to those in Theorem 3.1.

By applying Theorem 3.2, we derive the following corollary.

Corollary 3.2.

Suppose the assumptions of Corollary 3.1, and belong to the same family of distributions, but for a change in location and scale, if and only if for a fixed ,

The exponential distribution is characterized in the following theorem through the use of Tsallis entropy for consecutive

r-out-of-

n:G systems. This result serves as the theoretical basis for a newly developed goodness-of-fit test for the exponential distribution, applicable to a wide range of datasets. To establish this result, we begin by introducing the lower incomplete beta function, defined as:

$$B(x; a, b) = \int_0^x y^{a-1}(1-y)^{b-1}\,dy, \quad 0 \le x \le 1,$$

where $a$ and $b$ are positive real numbers. When $x = 1$, this expression reduces to the complete beta function. We now proceed to present the main result.
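For readers who want to evaluate the lower incomplete beta function without a special-function library, a minimal sketch follows. It assumes the standard definition $\int_0^x y^{a-1}(1-y)^{b-1}\,dy$ for $0 \le x \le 1$ and positive $a$, $b$; the function names and the midpoint-rule quadrature are illustrative choices.

```python
import math


def lower_incomplete_beta(x, a, b, n_grid=100_000):
    """Midpoint-rule approximation of int_0^x y^(a-1) (1-y)^(b-1) dy."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive")
    if x == 0.0:
        return 0.0
    h = x / n_grid
    total = 0.0
    for k in range(n_grid):
        y = (k + 0.5) * h  # midpoints stay strictly inside (0, x)
        total += y ** (a - 1.0) * (1.0 - y) ** (b - 1.0)
    return h * total


def complete_beta(a, b):
    # Complete beta function through the gamma function identity.
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)


if __name__ == "__main__":
    print(lower_incomplete_beta(1.0, 2.0, 3.0), complete_beta(2.0, 3.0))
    print(lower_incomplete_beta(0.5, 2.0, 1.0))
```

Setting `x = 1.0` recovers the complete beta function up to quadrature error, which offers a simple consistency check before the function is used inside a larger expression.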

Theorem 3.3.

Let us assume that is the lifetime of the consecutive -out-of-n:G system having i.i.d. component lifetimes with the pdf and . Then has an exponential distribution with the parameter if and only if for a fixed ,

for all , and .

Proof. Given an exponentially distributed random variable

, its Tsallis entropy, directly calculated using

, is

. Furthermore, since

, application of Eq. (11) yields:

for

. To derive the second term, let us set

and

, upon recalling (10), it holds that

where the necessity is derived. To establish sufficiency, we assume that Equation (25) holds for a fixed value of

and assume that

. Following the proof of Theorem 3.1 and utilizing the result in Equation (26), we obtain the following relation

where

is defined in (18). Thus, it holds that

where

is defined in (21). Applying Lemma 3.1 to the function

and utilizing the complete sequence

, we can deduce that

By solving this equation, it yields , where is an arbitrary constant. Utilizing the boundary condition , it follows that , we determine that . Consequently, leading to for . This implies the cdf , confirming that follows an exponential distribution with scale parameter . This establishes the theorem.

4. Entropy-Based Exponentiality Testing

In this section, we introduce a nonparametric method for estimating the Tsallis entropy of consecutive

r-out-of-

n:G systems. The exponential distribution’s broad applicability has led to the development of numerous test statistics aimed at assessing exponentiality. These methods often rely on foundational principles in statistical theory. The central objective here is to determine whether the distribution of a random variable

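T follows an exponential distribution. Let $F_0$ denote the cdf of the exponential distribution, defined as $F_0(t) = 1 - e^{-\lambda t}$, for $t > 0$. The hypothesis under investigation is stated as follows:

$$H_0: F(t) = F_0(t) \quad \text{versus} \quad H_1: F(t) \neq F_0(t).$$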

Extropy has recently gained considerable attention in the research community as a useful metric for goodness-of-fit testing. Qiu and Jia [

28] developed two consistent estimators for extropy based on the concept of spacings and subsequently constructed a goodness-of-fit test for the uniform distribution using the more efficient of the two. In a related contribution, Xiong et al. [

29] exploited the properties of classical record values to derive a characterization result for the exponential distribution, leading to the development of a novel exponentiality test. Their work carefully outlined the test statistic and emphasized its strong performance, particularly with small sample sizes. Building on this foundation, Jose and Sathar [

30] proposed a new exponentiality test based on a characterization involving the Tsallis entropy of lower

r-record values.

The present section aims to extend these developments by exploring the Tsallis entropy of consecutive

r-out-of-

n:G systems. As established in Theorem 3.3, the exponential distribution can be uniquely characterized through the Tsallis entropy associated with such systems. Leveraging Equation (25) and after simplification, we propose a new test statistic for the exponentiality, denoted by

, and for

, defined as follows:

where

then Theorem 3.3 directly implies that

if and only if

is exponentially distributed. This fundamental property establishes

as a viable measure of exponentiality and a suitable candidate for a test statistic. Given a random sample

, an estimator

of

can be used as a test statistic. Significant deviations of

from its expected value under the null hypothesis (i.e., the assumption of an exponential distribution) would indicate non-exponentiality, prompting the rejection of the null hypothesis. Consider a random sample of size

, denoted by

drawn from an absolutely continuous distribution

and

represent the corresponding order statistics. To estimate the test statistic, we adopt an estimator proposed by Vasicek [

31] for

as follows:

and $m$ is a positive integer smaller than $N/2$, which is known as the window size, and $T_{(j-m)} = T_{(1)}$ for $j \le m$ and $T_{(j+m)} = T_{(N)}$ for

. So, a reasonable estimator for

can be derived using Equation (30) as follows:

Establishing the consistency of an estimator is essential, particularly when evaluating estimators for parametric functions. The following theorem confirms the result stated in Equation (25). The proof adopts an approach similar to that of Theorem 1 in Vasicek [

31]. Notably, Park [

32] and Xiong et al. [

29] also utilized the consistency proof technique introduced by Vasicek [

31] to validate the reliability of their respective test statistics.
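The spacing idea behind a Vasicek-type estimator can be sketched for the plain Tsallis entropy of a sample. The code below is an illustration under assumptions rather than the system-level statistic of this section: it estimates $f(F^{-1}(j/N))$ by $(2m/N)/(T_{(j+m)} - T_{(j-m)})$, clamps the order-statistic indices at the sample boundaries, and plugs the result into $\frac{1}{\alpha-1}\big[1 - \frac{1}{N}\sum_j \hat{f}_j^{\,\alpha-1}\big]$. The function name, sample size, and window size are hypothetical.

```python
import random


def tsallis_spacing_estimate(sample, alpha, m):
    """Spacing-based estimate of the Tsallis entropy of order alpha.

    m is the window size, a positive integer smaller than len(sample) / 2.
    Indices outside the sample are clamped to the smallest or largest
    order statistic, as in Vasicek-type estimators.
    """
    n = len(sample)
    if not 1 <= m < n / 2:
        raise ValueError("window size m must satisfy 1 <= m < n / 2")
    if alpha <= 0 or alpha == 1:
        raise ValueError("alpha must be positive and different from 1")
    ordered = sorted(sample)
    total = 0.0
    for j in range(n):
        lower = ordered[max(j - m, 0)]
        upper = ordered[min(j + m, n - 1)]
        density = (2.0 * m / n) / (upper - lower)  # estimate of f at the j-th quantile
        total += density ** (alpha - 1.0)
    return (1.0 - total / n) / (alpha - 1.0)


if __name__ == "__main__":
    rng = random.Random(1)
    data = [rng.expovariate(1.0) for _ in range(5000)]
    # For the standard exponential and alpha = 2 the true value is 0.5.
    print(tsallis_spacing_estimate(data, alpha=2.0, m=35))
```

Because the estimate depends on the data only through differences of order statistics, adding a constant to every observation leaves it unchanged, whereas rescaling the data does not.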

Theorem 4.1.

Assume that is a random sample of size taken from a population with pdf and cdf . Also, let the variance of the random variable be finite. Then as and , where stands for the convergence in probability for all .

Proof. To establish the consistency of the estimator

, we employ the approach given in Noughabi and Arghami [

33]. As both

and

tend to infinity, with the ratio

approaching 0, we can approximate the density as follows:

where

represents the empirical distribution function. Furthermore, given that

, we can express

where the second approximation relies on the almost sure convergence of the empirical distribution function i.e.

as

. Now, applying the Strong Law of Large Numbers, we have

This convergence demonstrates that is a consistent estimator of and hence completes the proof of consistency for all .

The following theorem shows that the root mean square error (RMSE) of

remains invariant under location shifts in the random variable

T. However, this invariance does not hold under scale transformations. The proof of these results can be obtained by adapting the arguments presented by Ebrahimi et al. [

34].

Theorem 4.2. Assume that is a random sample of size taken from a population with pdf and and . Denote the estimators for on the basis of and with and , respectively. Then, the following properties apply:

- 3.

,

- 4.

,

- 5.

, for all .

Proof. It is not hard to see from (25) that

The proof is then completed by leveraging the properties of the mean, variance, and RMSE of .

Various combinations of n and i can be used to construct a range of test statistics. For computational purposes, we choose and which simplifies the expression in Equation (30) for evaluation. Under the null hypothesis, , the value of asymptotically converges to zero as the sample size approaches infinity. Conversely, under an alternative distribution with an absolutely continuous cdf , the value of converges to a positive value as . Based on these asymptotic properties, we reject the null hypothesis at a given significance level , for a finite sample size if the observed value of the test statistic exceeds the critical value . The asymptotic distribution of is intricate and analytically intractable due to its dependence on both the sample size and the window parameter .

To overcome this, we employed a Monte Carlo simulation approach. Specifically, we generated 10,000 samples of sizes

from the standard exponential distribution under the null hypothesis. For each sample size, we determined the

-th quantile of the simulated

values to establish the critical value for significance levels

, while varying the window size

from 2 to 30.

Table 1 and

Table 2 present the resulting critical values for the respective sample sizes and significance levels.

Power Comparisons

The power of the test was assessed through a Monte Carlo simulation involving nine alternative probability distributions. For each sample size N, 10,000 replicates of size N were drawn from each alternative distribution, and the statistic was computed for each. The power at a given significance level was then estimated as the empirical rejection rate, that is, the proportion of the 10,000 samples yielding a statistic that exceeds the critical value.
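The Monte Carlo procedure for critical values and power can be sketched generically. The statistic used below is a stand-in chosen only for illustration (the absolute deviation of the sample coefficient of variation from 1, which is small for exponential data); it is not the test statistic proposed in this paper. The function names, the uniform alternative, and the reduced replicate count are likewise assumptions made to keep the example fast.

```python
import math
import random


def cv_statistic(sample):
    """Stand-in statistic (assumption): |sample CV - 1|, near zero for exponential data."""
    n = len(sample)
    mean = sum(sample) / n
    variance = sum((x - mean) ** 2 for x in sample) / (n - 1)
    return abs(math.sqrt(variance) / mean - 1.0)


def critical_value(statistic, n, level, reps, rng):
    """Empirical (1 - level) quantile of the statistic under the standard exponential null."""
    null_values = sorted(
        statistic([rng.expovariate(1.0) for _ in range(n)]) for _ in range(reps)
    )
    index = max(0, min(reps - 1, math.ceil((1.0 - level) * reps) - 1))
    return null_values[index]


def estimate_power(statistic, sampler, n, crit, reps, rng):
    """Proportion of replicates whose statistic exceeds the critical value."""
    rejections = sum(
        statistic([sampler(rng) for _ in range(n)]) > crit for _ in range(reps)
    )
    return rejections / reps


if __name__ == "__main__":
    rng = random.Random(0)
    crit = critical_value(cv_statistic, n=100, level=0.05, reps=1000, rng=rng)
    power = estimate_power(cv_statistic, lambda r: r.random(), 100, crit, 1000, rng)
    size = estimate_power(cv_statistic, lambda r: r.expovariate(1.0), 100, crit, 1000, rng)
    print(f"critical value={crit:.3f}, power vs uniform={power:.3f}, empirical size={size:.3f}")
```

Any statistic that rejects for large values can be passed in place of `cv_statistic`; increasing `reps` toward the 10,000 replicates used in the study reduces the Monte Carlo error of both the critical value and the power estimate.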

The alternative distributions considered are specified in Table 3. The simulation setup, including the selection of alternative distributions and their parameters, closely follows the methodology proposed by Jose and Sathar [30]. To assess the efficiency of the newly proposed test, its performance is compared with several established tests for exponentiality reported in the literature, as summarized in Table 4.

The performance of the statistic is contingent upon the window size m, necessitating the prior determination of an appropriate value to ensure adequate adjusted statistical power. Simulations across varying sample sizes resulted in the heuristic formula , where is the floor function. This formula provides a practical guideline for selecting and aims to ensure robust power performance across different distributions.

To comprehensively assess the performance of the proposed test, we selected ten established tests for exponentiality and evaluated their power against a diverse set of alternative distributions. Notably, Xiong et al. [

29] introduced a test statistic based on the Tsallis entropy of classical record values, while Jose and Sathar [

30] characterized the exponential distribution using Tsallis entropy derived from lower r-records and developed a corresponding test statistic.

These two tests, designated as

and

in

Table 4, are included in our comparative analysis due to their foundation in information-theoretic principles. The original authors provided detailed discussions supporting their relevance and applicability for testing exponentiality. To estimate the power of each test, we simulated 10,000 independent samples for each sample size

from each alternative distribution detailed in

Table 3. The power values for the proposed test were then computed at a 5 percent significance level. Subsequently, the power values for the competing tests were calculated for the same sample sizes and alternative distributions. These comparative results are summarized in

Table 5.

In general, the test statistic demonstrates strong performance in detecting deviations from exponentiality towards the gamma distribution. However, its performance against other alternative distributions, including Weibull, uniform, half-normal, and log-normal, is moderate, exhibiting neither exceptional strength nor significant weakness.