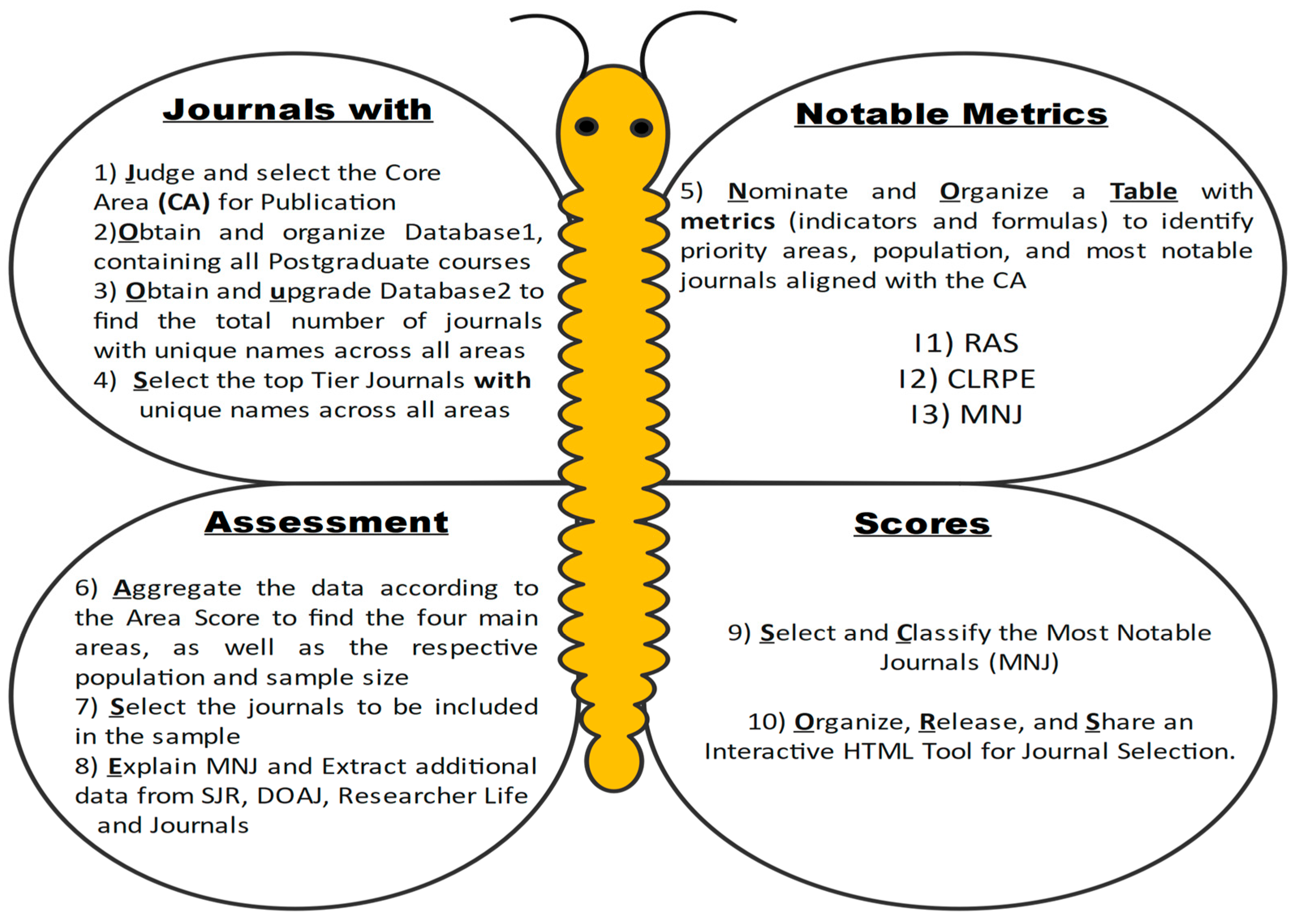

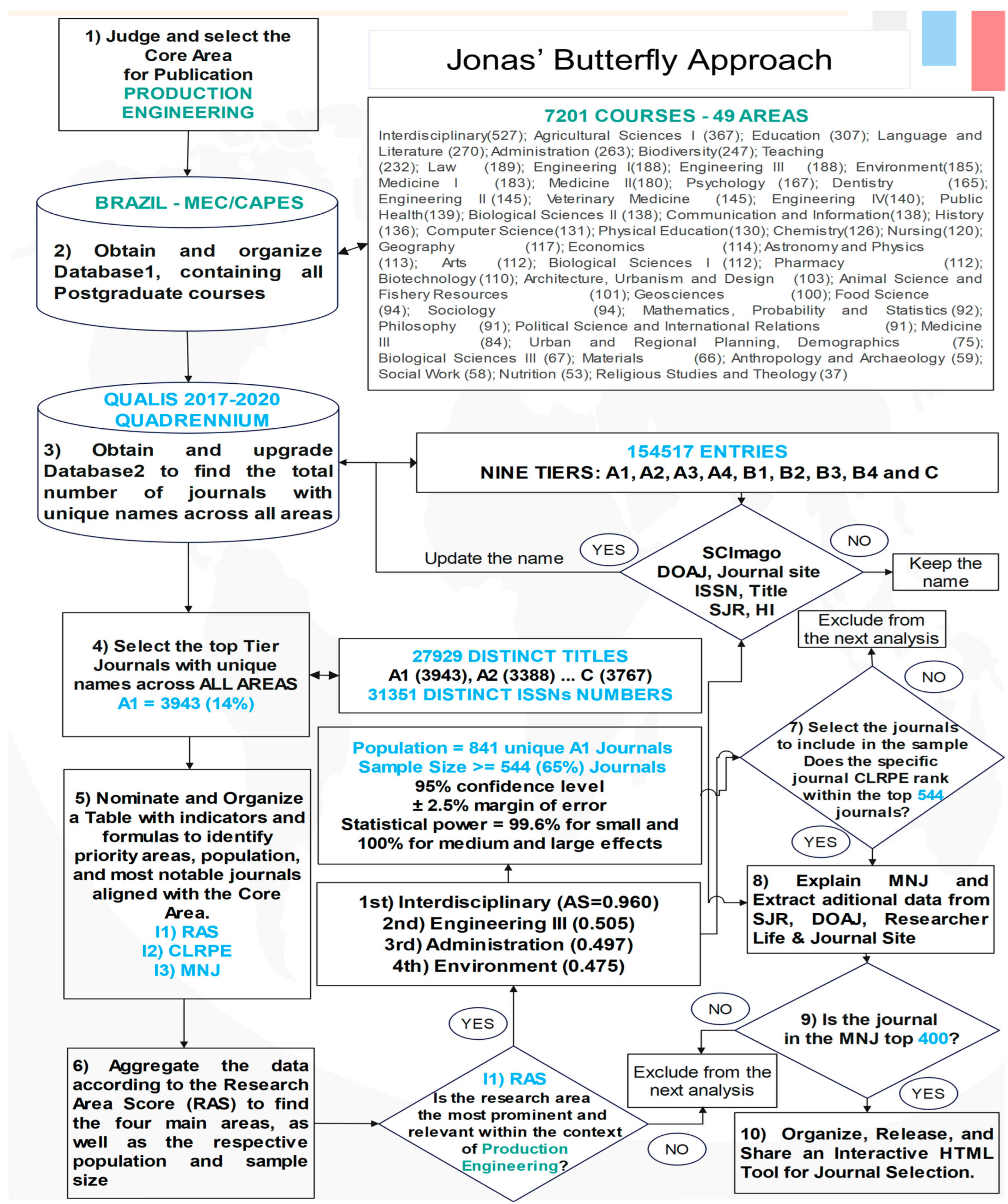

This explanatory and applied study uses a mixed-methods design that integrates open science principles with bibliometric and documentary analyses, complemented by expert validation. Scientometric techniques underpin the quantitative component, which combines computational methods for data extraction and cleaning (using Julius AI and Levenshtein Distance fuzzy matching), integration of multiple bibliometric databases, normalization procedures (min–max scaling and percentile-rank transformation), and ranking through original formulas (RAS, CLRPE, and MNJ). These steps were systematically implemented to ensure methodological rigor, consistency, and comparability across datasets.

At the last step, the computational workflow was implemented using a Python script and digital platforms (Edraw Max, GitHub, Wix Studio, and Harvard Dataverse), enabling automated data processing, transparent analysis, and the generation of an interactive HTML tool for journal selection. These tools saved time and made the research process more transparent and accessible for others who want to follow or build on this work.

Despite the inherent risks of generative AI, using it for data collection, creation, and visualization improves the discoverability and quality of datasets shared, thereby speeding research and helping users find and understand needed information (Hosseimi et al., 2024; Resnik & Hosseini, 2024; Solatorio & Dupriez, 2024).

Data were gathered and examined over one year (May 2024–April 2025), with an additional update in May 2025. The research follows a systematic, multi-stage process to identify and assess the most influential academic journals for Brazilian scholars working in an Under-Resourced environment, focusing specifically on the area of Production Engineering as its core domain (Figure 6 shown in Appendix T; ABEPRO, 2024).

2.1. Phase 1: Journal with Data Preparation

Step 1) Judge and select the Core Area (CA) for publication:

This refers to the researcher’s main field. For this study, Production Engineering (PE) was chosen as the core area (Figure 6; Appendix T), aligned with the author’s area of expertise.

In Brazil, the Associação Brasileira de Engenharia de Produção (ABEPRO) is the main body representing PE professionals. The field covers ten areas, ranging from Operations, Supply Chain, and Quality to Sustainability and Education, divided into 58 subareas focused on designing, operating, and improving integrated production systems (ABEPRO, 2024).

Step 2) Obtain and organize Database1, containing all Postgraduate courses

The Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES), under Brazil’s Ministry of Education, oversees postgraduate education and contributes to national academic output. Data were collected on January 11, 2025, from the CAPES Graduate Programs Database (https://sucupira-v2.capes.gov.br/), the country’s main system for cataloging and evaluating stricto sensu programs.

As of January 2025, the CAPES database listed 7,201 postgraduate programs across 49 academic areas in Brazil (Figure 5; Appendix A), reflecting the country’s diverse research and education landscape. The four largest areas were Interdisciplinary Studies (527 programs), Agricultural Sciences I (367), Education (307), and Language and Literature (270). PE, the core focus of this study, falls under Engineering III, alongside Mechanical, Aerospace, and Naval Engineering.

This distribution illustrates the academic ecosystem driving Brazil’s scientific output and underpins the Research Area Score (RAS) calculation in the Jonas Butterfly Model.

Step 3) Obtain and update Database2, to find total number of journals with unique names

The second database was sourced from the Brazilian Qualis System (https://tinyurl.com/3f2ucmky), a framework developed by CAPES to evaluate and classify academic journals into nine strata (tiers), ranging from A1 (highest quality) to B4 and C (lower quality). Although the Qualis System is being phased out and will be replaced by a new article-level evaluation model starting with the 2025–2028 cycle, it remains widely used in Brazil. Due to its historical significance and continued relevance, particularly for the 2017–2020 evaluation period, the Qualis database is used in this study, as it remains the only publicly available and comprehensive national source for journal classification currently guiding funding decisions and publication strategies during this transition.

The database was downloaded on January 12, 2025. To ensure data accuracy and avoid duplicate journal entries, the Qualis database (2017–2020 cycle, 154,517 records) underwent standardization and deduplication using Julius AI and the Levenshtein distance fuzzy-matching algorithm, a method long central to sequence alignment and to similarity search in biological databases (Berger et al., 2021). This allowed minor typographical differences in the titles of journals with identical ISSNs (e.g., variations in punctuation or accents) to be identified and corrected.
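The title-matching idea can be illustrated with a minimal sketch. It is not the Julius AI workflow used in the study: the 0.9 similarity threshold, the function names, the sample records, and the placeholder ISSNs are all assumptions made for illustration.

```python
import unicodedata
from collections import defaultdict


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def normalize(title: str) -> str:
    """Lowercase, strip accents and punctuation, collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", title)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    kept = "".join(c if c.isalnum() or c.isspace() else " " for c in no_accents)
    return " ".join(kept.lower().split())


def similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] computed on the normalized titles."""
    a, b = normalize(a), normalize(b)
    longest = max(len(a), len(b)) or 1
    return 1 - levenshtein(a, b) / longest


def canonical_titles(records, threshold=0.9):
    """Keep one title per ISSN when all variants are near-identical.

    ISSNs whose titles differ too much are returned for manual review.
    The threshold is an assumed value, not one reported in the study.
    """
    by_issn = defaultdict(list)
    for issn, title in records:
        by_issn[issn].append(title)
    canonical, needs_review = {}, []
    for issn, titles in by_issn.items():
        first = titles[0]
        if all(similarity(first, t) >= threshold for t in titles[1:]):
            canonical[issn] = first
        else:
            needs_review.append(issn)
    return canonical, needs_review


# Hypothetical records; the ISSNs are placeholders, not real identifiers.
SAMPLE = [
    ("0000-0001", "Gestão & Produção"),
    ("0000-0001", "Gestao & Producao."),
    ("0000-0002", "Journal of Examples"),
    ("0000-0002", "Unrelated Title"),
]
canonical, needs_review = canonical_titles(SAMPLE)
```

Normalizing accents and punctuation before measuring distance means that the variants named in the text (punctuation or accent differences) collapse to the same string, while genuinely different titles under one ISSN are flagged rather than merged silently.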

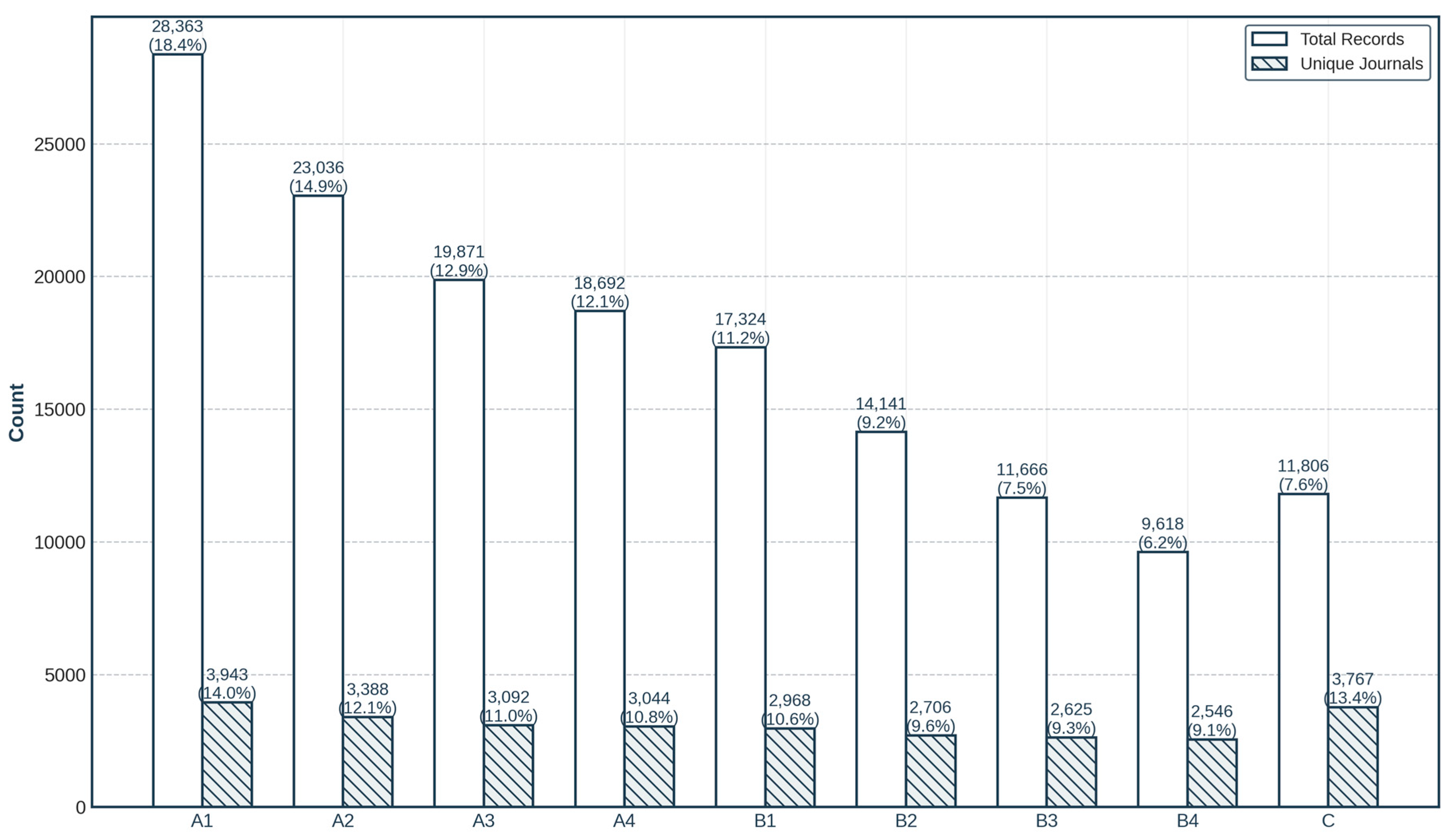

The cleaning process (Appendix B) involved four iterative rounds, validated against external databases like Scimago, DOAJ, and official journal sites. Analysis of the 2017–2020 Qualis Database (Figure 7) showed an uneven distribution, with 28,363 A1 records (18.4% of 154,517 entries; Appendix C). This sharp decline across strata suggests title overlaps and a bias toward publishing in higher-ranked, more prestigious journals.

Focusing solely on the A1 journals (28,363 records, as detailed in Appendix D), nearly 50% are concentrated in a few areas, including Interdisciplinary (7.02%), Medicine I and II (8.64% combined), Biological Sciences I–III (10.39%), Public Health (3.36%), Biotechnology (3.30%), Environmental Sciences (3.28%), and Engineering III (3.12%). This concentration highlights a strong emphasis on publishing in high-impact journals within select fields.

Figure 7.

Number of records and unique journals of the Brazilian Qualis System (2017-2020).

Analysis of the 2017–2020 Qualis Database shows that higher strata (A1–A4) account for 58% of records, reflecting a preference for top-tier journals, while B1–B4 and C represent 34% and 7.7%, respectively. However, when focusing on 27,929 unique titles, the distribution is more balanced, with A1 (14%) and C (13.4%) showing comparable shares.

Additionally, 148 journals appear in multiple strata, highlighting the system’s interdisciplinary reach and inclusiveness.

Another key finding is that the 27,929 unique journal titles correspond to 31,351 ISSNs, reflecting the broad scope of Qualis evaluations. This analysis prioritizes journal unique titles over ISSNs, as titles better capture publication diversity, avoiding duplication from format changes (print or electronic), regional editions, or title history.

Step 4) Select the Top Tier Journals with unique names across all areas

Among the 27,929 distinct titles, 3,943 journals (14%) fall in the A1 top-tier stratum, which is the main target of this research.

2.3. Phase 3: Assessment

Step 6) Aggregate the data according to the RAS to find the four main areas, population and sample

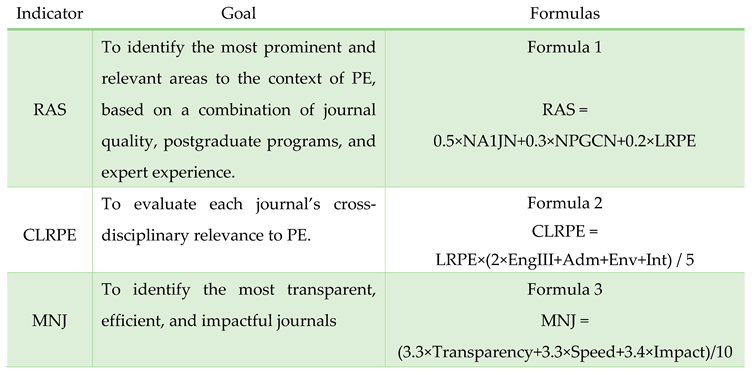

The Research Area Score (RAS) indicator provides a comprehensive and normalized measure that ranks research areas based on their academic strength and relevance to the Core Area selected.

The data collection process involved two primary sources from CAPES. The Qualis System provided the Number of A1 Journals (NA1J), which represents the quantity of highest-tier journals in each academic area. CAPES’ evaluation of postgraduate programs supplied the Number of Post-Graduation Courses (NPGC), encompassing both master’s and doctoral programs. Additionally, the Level of Relation with Production Engineering (LRPE) was determined through the author’s expert analysis of ABEPRO’s defined areas and subareas.

For each of the 49 areas, the author assigned a value between 0 and 1, where 0.0 = no relation to the Core Area (Production Engineering); 0.2 = weak relation; 0.4 = moderate relation; 0.6 = substantial relation; 0.8 = strong relation; and 1.0 = very strong relation.

The statistical approach involved normalizing the variables NA1J and NPGC to ensure fair comparison across different scales. The variables used in the analysis are defined as follows:

The weights in the formula were assigned based on specific criteria:

0.5 for NA1JN: highest weight assigned to research output quality, as A1 journals represent the pinnacle of academic publication

0.3 for NPGCN: weight given to educational infrastructure, reflecting postgraduate training role

0.2 for LRPE: weight assigned to domain relevance with the Core Area, balancing the need for field-specific alignment while acknowledging its subjective nature

The normalization scaled each area from 0 to 1, with 1 representing the area with the most A1 journals and 0 the least, ensuring fair comparison across fields. As shown in Appendix E, the top four areas most relevant to PE were: Interdisciplinary (RAS = 0.960; 1,992 A1 journals; 527 programs; LRPE = 0.8), Engineering III (0.505; 886 journals; 188 programs; LRPE = 1.0), Administration (0.497; 832 journals; 263 programs; LRPE = 0.8), and Environmental Sciences (0.475; 929 journals; 185 programs; LRPE = 0.8).
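The weighting described above can be sketched as follows. This is a hedged illustration rather than the author's script: the function names are hypothetical, and the minimum values across the 49 areas are not given in this section, so only the Interdisciplinary area is worked through. It is assumed to hold the maximum on both counts (1,992 A1 journals and 527 programs), which is consistent with its reported RAS of 0.960.

```python
def min_max(value: float, lowest: float, highest: float) -> float:
    """Min-max scaling to the 0-1 range."""
    return (value - lowest) / (highest - lowest)


def ras(na1j_n: float, npgc_n: float, lrpe: float) -> float:
    """Research Area Score from the three weights stated in the text."""
    return 0.5 * na1j_n + 0.3 * npgc_n + 0.2 * lrpe


# Placeholder minima (assumption): the true minima are not reported here.
# They do not affect an area that sits at the maximum of both variables.
NA1J_MIN, NA1J_MAX = 0, 1992
NPGC_MIN, NPGC_MAX = 0, 527

interdisciplinary = ras(
    min_max(1992, NA1J_MIN, NA1J_MAX),  # normalized A1 journal count
    min_max(527, NPGC_MIN, NPGC_MAX),   # normalized program count
    0.8,                                # LRPE assigned by the author
)
print(round(interdisciplinary, 3))
```

For an area at the maximum of both normalized variables, the score reduces to 0.5 + 0.3 + 0.2 × LRPE, so an LRPE of 0.8 yields 0.96.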

After defining the four main areas, 2,275 unique A1 journals were identified (Appendix F) and assigned LRPE scores. Of these, 63% (1,434) had no link to PE (LRPE = 0.0) and were excluded. The final sample comprised 841 journals (37%) with non-zero LRPE: 43% weak to moderate, 23% substantial, and 34% strong to very strong relevance, ensuring alignment with the study’s disciplinary focus. For resource-limited researchers, targeting these 841 journals offers greater visibility, citation potential, and cross-disciplinary reach, especially through Administration and Environmental Sciences journals. This supports broader dissemination and funding chances without large budgets.

Using Survey Monkey (2025) with N=841, 95% confidence, and a 2.5% margin of error, the minimum sample size was set at 544 journals (64.7%), ensuring strong statistical reliability for analyzing journal relevance in PE.
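The reported minimum of 544 can be reproduced with a standard sample-size formula. The sketch below uses Cochran's formula with a finite population correction, z = 1.96 for 95% confidence, and the conservative proportion p = 0.5; that the online calculator applies exactly this formula is an assumption.

```python
import math


def sample_size(population: int, z: float = 1.96,
                margin: float = 0.025, p: float = 0.5) -> int:
    """Minimum sample size with finite population correction."""
    # Sample size for an effectively infinite population.
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    # Shrink it for a finite population of the given size.
    corrected = n0 / (1 + (n0 - 1) / population)
    return math.ceil(corrected)


required = sample_size(841)
share = required / 841
```

With N = 841 the uncorrected size is about 1,537, and the correction brings it down to 544, or roughly 64.7% of the population.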

Step 7) Select the journals to be included in the sample

To select the titles, the Cross-Level Relationship with Production Engineering (CLRPE) indicator was designed to evaluate each journal’s cross-disciplinary relevance to PE. As mentioned in Table 1, the CLRPE is calculated using Formula 2: CLRPE = LRPE × (2 × EngIII + Adm + Env + Int) / 5, where:

LRPE was already explained in Step 6.

Adm, EngIII, Env, and Int are binary variables (0 or 1) indicating whether the journal is classified under each of the four main areas selected with the RAS formula (Step 6).

In this formula, Engineering III (EngIII) is weighted double for its key role in PE, while the Administration (Adm), Environment (Env), and Interdisciplinary (Int) areas are equally weighted. The sum is divided by 5 to normalize the CLRPE score between 0 and 1, enabling easy comparison and effective, balanced journal ranking.
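Formula 2 translates directly into a small function. The sketch below is illustrative; the function and argument names are hypothetical, and the example journals are invented combinations rather than entries from Appendix G.

```python
def clrpe(lrpe: float, eng3: int, adm: int, env: int, inter: int) -> float:
    """CLRPE = LRPE x (2 x EngIII + Adm + Env + Int) / 5."""
    for flag in (eng3, adm, env, inter):
        if flag not in (0, 1):
            raise ValueError("area indicators must be 0 or 1")
    if not 0.0 <= lrpe <= 1.0:
        raise ValueError("LRPE must lie between 0 and 1")
    return lrpe * (2 * eng3 + adm + env + inter) / 5


# Invented examples:
all_areas = clrpe(1.0, 1, 1, 1, 1)   # listed in all four areas, LRPE 1.0
eng3_only = clrpe(0.8, 1, 0, 0, 0)   # Engineering III only, LRPE 0.8
adm_only = clrpe(0.8, 0, 1, 0, 0)    # Administration only, LRPE 0.8
```

A journal listed only in Administration with LRPE = 0.8 scores 0.16, while the same LRPE in Engineering III alone scores 0.32, which shows the effect of the double weight.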

Appendix G lists CLRPE values (0–1) for 841 journals, calculated based on their coverage of four PE-related areas and sorted in descending order. Based on the distribution of CLRPE, an initial group of 544 journals with the highest CLRPE values fell within the interval CLRPE ≥ 0.16, meeting sample size requirements for 95% confidence and 2.5% margin of error. However, a closer inspection of the full population of 841 journals revealed that several additional titles share exactly the 0.16 CLRPE value. By including them, the sample increased to 588 (69.9%) journals, preventing the exclusion of borderline cases and preserving statistical rigor.
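The tie-inclusive cutoff described above can be sketched in a few lines. The scores below are invented for illustration, and rounding to two decimals before comparison is an assumption intended to avoid floating-point mismatches between equal CLRPE values.

```python
def select_with_ties(scores, minimum_size):
    """Return every score at or above the value of the last required item.

    Items tied with the cutoff value are kept, so the result can be
    larger than minimum_size.
    """
    ranked = sorted((round(s, 2) for s in scores), reverse=True)
    if minimum_size >= len(ranked):
        return ranked
    cutoff = ranked[minimum_size - 1]
    return [s for s in ranked if s >= cutoff]


# Invented scores: three items are required, but two more share the cutoff.
toy_scores = [0.80, 0.32, 0.16, 0.16, 0.16, 0.08]
selected = select_with_ties(toy_scores, minimum_size=3)
```

In the toy data the third-ranked score is 0.16, so all three journals at 0.16 are retained and the selection grows from three to five, mirroring the move from 544 to 588 journals.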

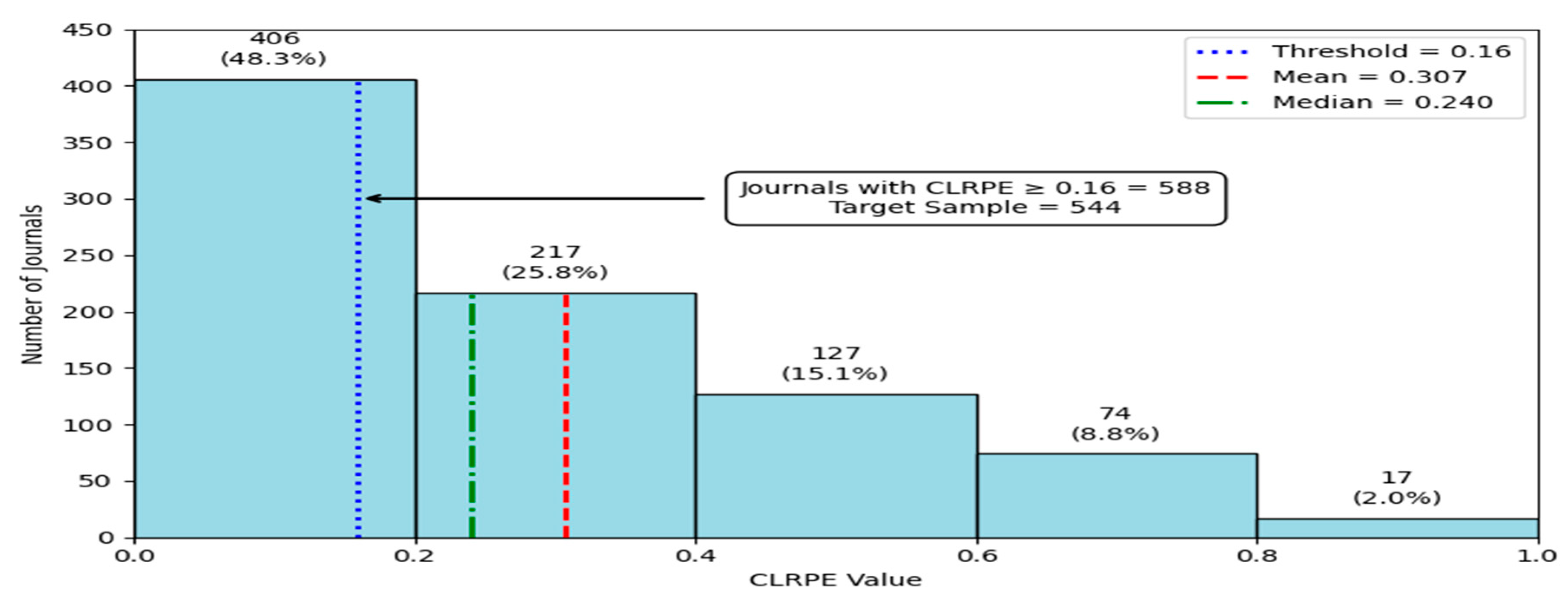

Figure 8 shows a right-skewed distribution of CLRPE scores for the 841 journals, with 48.3% scoring ≤ 0.20. Frequencies decrease across intervals, with only 2% scoring above 0.80. The median (0.24) and mean (0.31) fall in the 0.20–0.40 range, indicating that most journals score below 0.30. A CLRPE cutoff of 0.16 includes 588 journals (69.9%), exceeding the required sample size of 544.

Figure 8.

Distribution of CLRPE values across 841 A1 journals.

When the analysis (Appendix G) focuses on the four coverage areas at CLRPE ≥ 0.16 compared with the population, the results reveal both strong performers and areas for targeted improvement. Engineering III leads with 89.9% (455/506) of journals meeting the threshold. Environment follows at 83.3% (245/294), with some just below cutoff. Administration has 74.8% (306/409), and Interdisciplinary 72.7% (397/546), indicating solid but improvable coverage across areas.

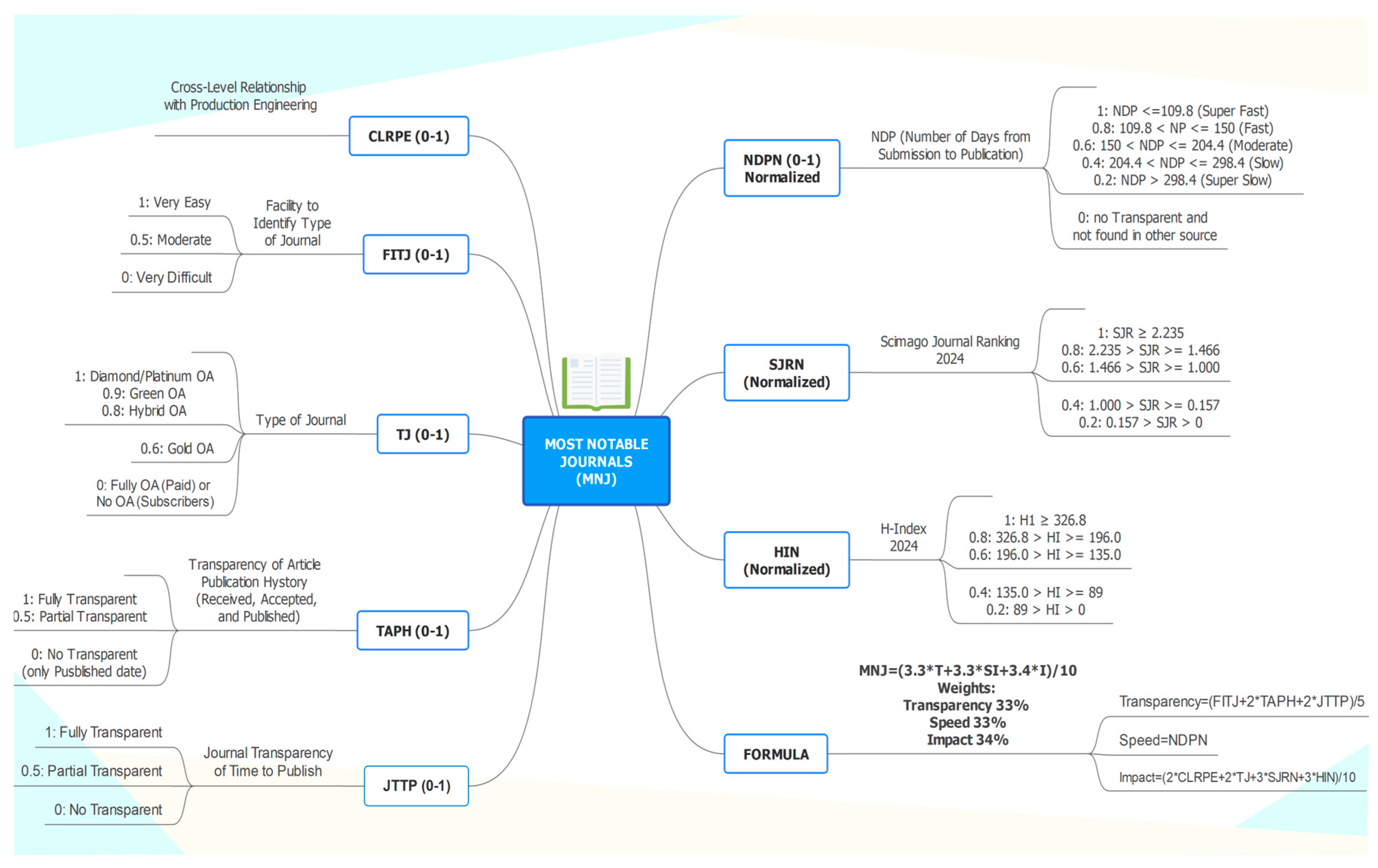

Step 8) Explain MNJ and Extract additional data from SJR, DOAJ, Researcher Life, and Journals

To determine which data needed to be extracted and the appropriate sources, Formula 3 (Table 1 and Figure 9) was developed to identify the most transparent, efficient, and impactful journals, referred to hereafter as the Most Notable Journals (MNJ).

The MNJ score is computed as a weighted average of three key components, Transparency, Speed, and Impact, according to the following Formula 3:

MNJ = (3.3 × Transparency + 3.3 × Speed + 3.4 × Impact) / 10
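As a minimal sketch, Formula 3 can be written as a function of the three normalized components. How Transparency, Speed, and Impact are themselves built is defined in Appendix H1 and is not reproduced here; the function name and the input values are illustrative assumptions.

```python
def mnj(transparency: float, speed: float, impact: float) -> float:
    """MNJ = (3.3 x Transparency + 3.3 x Speed + 3.4 x Impact) / 10."""
    for component in (transparency, speed, impact):
        if not 0.0 <= component <= 1.0:
            raise ValueError("components must be normalized to 0-1")
    return (3.3 * transparency + 3.3 * speed + 3.4 * impact) / 10


best_case = mnj(1.0, 1.0, 1.0)     # all components at their maximum
impact_only = mnj(0.0, 0.0, 1.0)   # only Impact contributes
```

Because the weights sum to 10, the score stays between 0 and 1, and Impact carries a slightly larger share (0.34) than Transparency or Speed (0.33 each).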

Indicator data for these components were collected between May and December 2024, then updated from April to May 2025, using 2024 as the reference year. The data were sourced from multiple reputable platforms, including the journal’s official website, Scimago (https://www.scimagojr.com/), DOAJ (https://doaj.org/), and Researcher Life (https://researcher.life/). Due to space limitations, all MNJ component descriptions and normalized values (0 to 1) are provided in Appendix H1 and Appendix H2, respectively.

In this phase, it is important to highlight two key points. First, among the 588 journals identified in Step 7, 586 were successfully validated. Only the journal REDES (ISSNs 1414-7106 and 1982-6745) was excluded due to its absence from the Scimago database, which rendered it without available values for the SJR and H-Index metrics. Second, the quantile-based normalization and classification applied to the SJRN and HIR variables ensure standardized scaling that effectively captures meaningful differences in journal performance. This methodological approach, using well-defined thresholds, enhances interpretability, facilitates direct comparisons across journals, and supports robust, replicable analysis in scholarly assessments.
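A percentile-rank transformation of the kind mentioned for skewed indicators such as SJR and H-index can be sketched as below. The exact thresholds and class definitions used in the study are in Appendix H1; this generic version, its function name, and the sample values are assumptions for illustration only.

```python
from bisect import bisect_right


def percentile_ranks(values):
    """Share of observations less than or equal to each value (0-1)."""
    ordered = sorted(values)
    n = len(values)
    # bisect_right gives the count of observations <= v in the sorted list.
    return [bisect_right(ordered, v) / n for v in values]


# Invented indicator values with a long right tail.
raw = [0.2, 1.0, 3.0, 5.0]
ranks = percentile_ranks(raw)
```

Unlike min-max scaling, the ranks depend only on order, so one very large value does not compress the remaining journals toward zero.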

2.4. Phase 4: Scores

Step 9) Select and Classify the 400 Most Notable Journals (MNJ)

9.1 Select 400 MNJs

The MNJ formula was applied to a dataset of 587 journals (Appendix H2) to prioritize and select the top 400 Most Notable Journals (MNJs). The selection process involved excluding 50 journals (8.5%) that were either fully open access with mandatory APCs or subscription-only, both financially restrictive for researchers with limited funding. Additionally, 133 journals (22.6%) were excluded due to a lack of transparency in the SPEED Index, and 3 journals were removed for having the lowest MNJ scores.

The new list, presented in Appendix I, ranks the 400 MNJs in descending order of MNJ score (median: 0.6956; range: 0.3078–0.9619). For each journal, key publication attributes are provided, including Title, ISSN, MNJ score, coverage across the four main subject areas, Qualis classification, APC amount (ranging from US$0 to US$12,690; mean: US$3,763), publisher, journal type, 2024 NDP (in days), and the component metrics contributing to the MNJ score. The five leading MNJs are all Elsevier hybrid journals: Applied Catalysis B: Environmental, Bioresource Technology, Energy Conversion and Management, Science of The Total Environment, and Journal of Hazardous Materials.

These journals support open access through two options for authors with accepted articles: pay the APC (median: US$4,830) for immediate open access, or opt for the traditional subscription model, where only the abstract is publicly accessible and full content is restricted to subscribers. They also demonstrate a rapid publication timeline, with a median NDP of 89.5 days.

Figure 9.

Detailed Formula of the Most Notable Journals (MNJ).

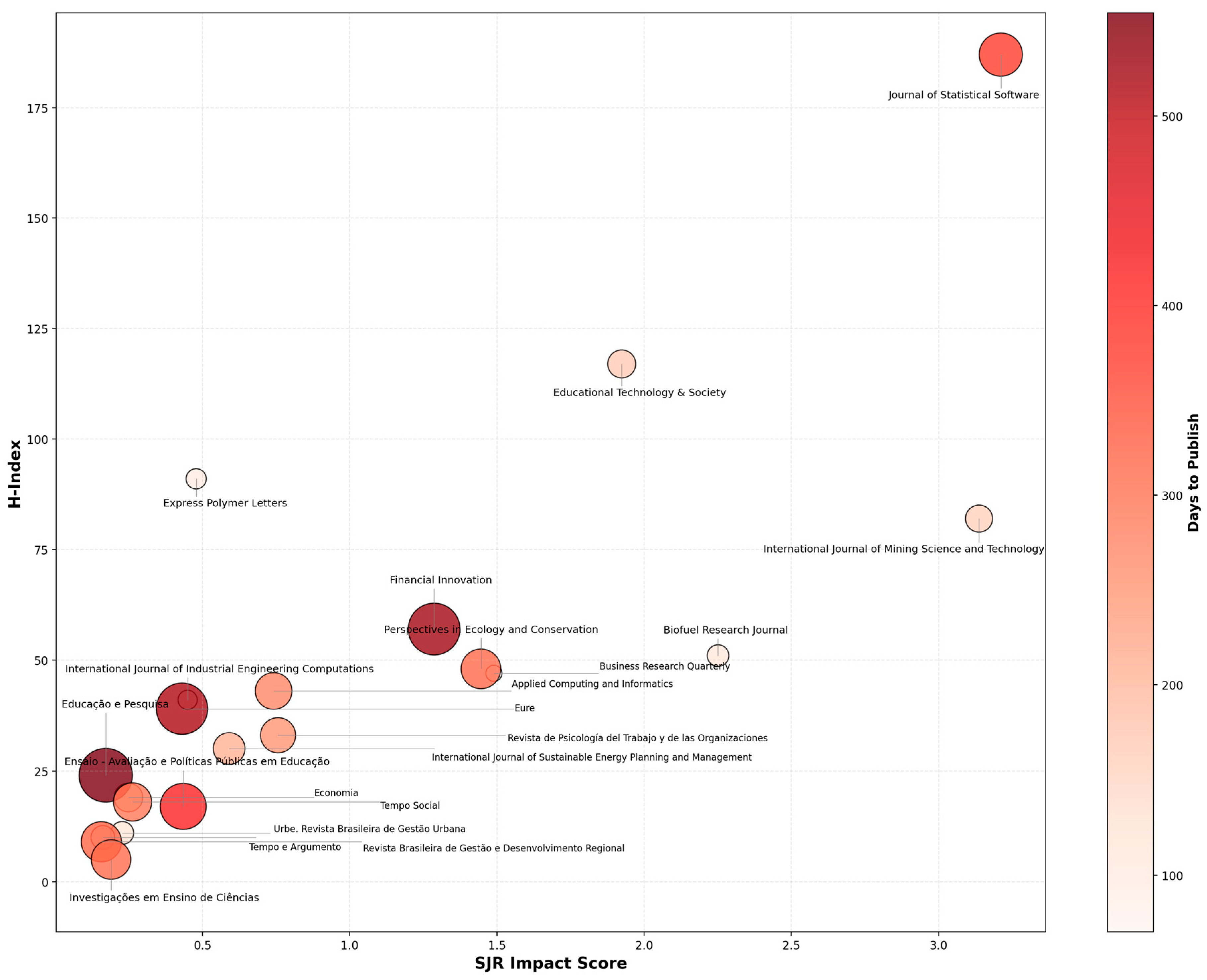

9.2. Performance by type of Journal and Publishers

Analysis of the 400 journals by type reveals a more favorable publication landscape for Under-Resourced researchers than initially anticipated. Among them, 21 Diamond Open Access (OA) journals (5.2%) stand out for offering no-fee publishing and full public access to articles, making them highly accessible for scholars facing financial constraints. These journals report a mean publication time of 261 days (ranging from 70 to 554.5 days).

Figure 10 presents a combined impact-speed metric (SJR × H-Index × NDP) for these Diamond journals. On average, they show an SJR of 0.955 (0.157–3.211) and an H-index of 46.6 (5–187). The Most Notable Journals include the International Journal of Industrial Engineering Computations (MNJ=0.8164; NDP=90 days; SJR=0.450; HI=41), Biofuel Research Journal (MNJ=0.7953; 109 days; 2.251; 51), International Journal of Mining Science and Technology (MNJ=0.7456; 158.5 days; 3.137; 82), and Express Polymer Letters (MNJ=0.6334; 98 days; 0.479; 91).

For researchers prioritizing impact and visibility over publication speed, recommended journals include the Journal of Statistical Software (NDP = 371 days), International Journal of Mining Science and Technology (158.5 days), and Educational Technology & Society (168 days). Conversely, for those seeking faster publication timelines, leading options are Business Research Quarterly (70 days), International Journal of Industrial Engineering Computations (90 days), Express Polymer Letters (98 days), and Biofuel Research Journal (109 days). Targeting these Diamond journals allows scholars to publish in top-tier A1 outlets without incurring APCs, effectively balancing impact, visibility, and speed according to individual research goals.

Figure 10.

Performance Metrics of 21 Most Notable Diamond Open Access Journals.

Hybrid journals dominate with 361 titles (90.2%), including the top five MNJs mentioned in the previous section. All 361 offer free publication with abstract-only visibility and post the highest average metrics (SJR 2.048, H-index 170.8) along with faster publication times (median 180 days). While their APCs average US$4,034 for authors choosing open access, the no-APC (traditional subscription) option makes them accessible to all researchers.

Gold OA Journals constitute only 18 journals (4.5%), requiring APCs averaging $2,722 (range $1,300-$4,640) with moderate impact (SJR 1.184) and median of 214 days from submission to publication. Strategic options include journals related to University of Surrey (Jasss; US$ 1,300, 98 days), Vilnius Gediminas Technical University (Technological and Economic Development of Economy; $1,378, 105 days), and Springer Nature (Humanities and Social Sciences Communications; $1,890, 133 days) for researchers with modest funding.

In terms of Publishers, the Elsevier Group leads with 206 journals (210 Hybrid, 1 Diamond), averaging 189 days to publication and SJR 1.933, while Springer Group’s 38 journals (33 Hybrid, 4 Gold OA, 1 Diamond) average 244 days, and Taylor & Francis’s 27 journals (26 Hybrid, 1 Gold OA) take 261 days on average.

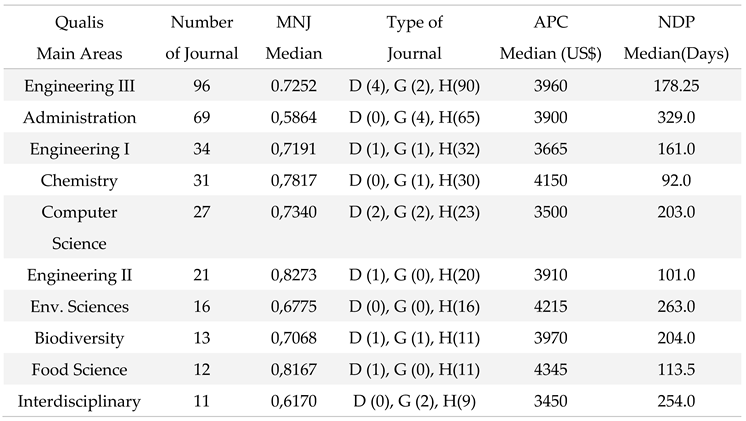

9.3. Performance by Qualis Main Area

When each journal is classified according to its QUALIS main area, Appendix I shows high diversity, covering 30 distinct fields. In terms of representation, the top ten areas (Table 2; Appendix J) include Engineering III (96 journals), Administration (69), and Chemistry (31), while eight areas, such as Geography, Education, and History, are represented by only one journal each.

Table 2.

The ten most representative Qualis main Areas among the 400 MNJ.

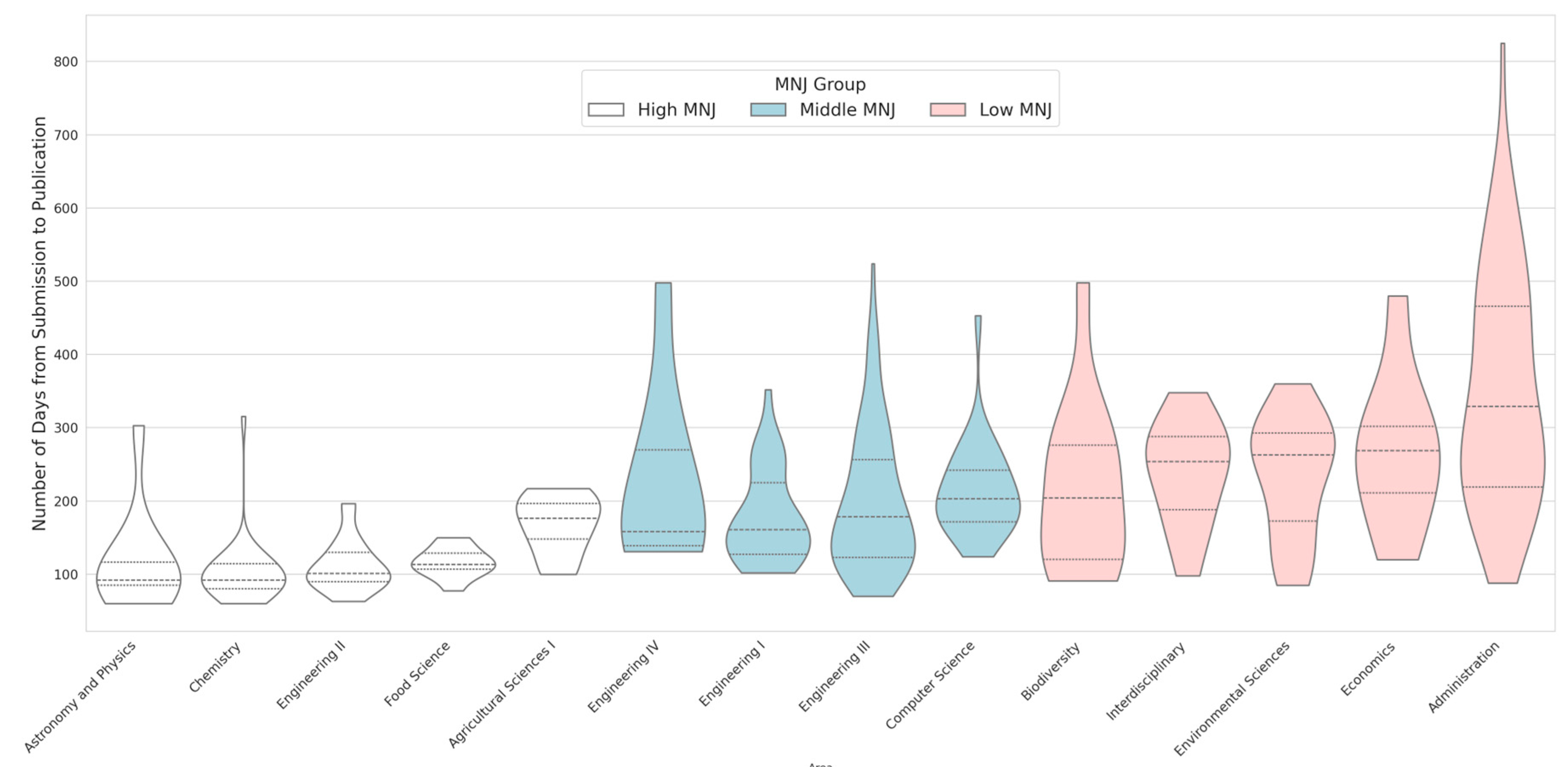

Analysis of median MNJ scores, journal types, APCs, and NDP across Qualis Main Areas with over five journals (Appendix J; Figure 11) reveals distinct patterns within High, Middle, and Low MNJ Groups, offering key insights for resource-limited researchers.

The High MNJ Group includes five areas with 84 journals and a median MNJ of 0.8167: Engineering II, Food Science, Astronomy & Physics, Chemistry, and Agricultural Sciences I. These fields feature a fast median publication time (101 days) and a median APC of US$3,950. Most journals are hybrid (81 titles), with only two Diamond and one Gold OA.

For Under-Resourced authors, hybrid journals in High MNJ Group offer free publication with abstract access but restrict full-text availability. Despite the few Diamond journals, the abundance of hybrid outlets provides 83 no-APC options for rapid dissemination, albeit with limited visibility behind paywalls.

The Middle MNJ Group includes four areas with 166 journals and a median MNJ of 0.7232: Engineering III, Computer Science, Engineering IV, and Engineering I. This group has a slower median NDP (170 days) but a lower median APC of US$3,682.50. Hybrid journals dominate (154 titles), with seven Diamond and five Gold OA journals offering free or low-fee full-text publication. This provides underfunded researchers with a balance of impact and affordability.

Figure 11.

Submission-to-Publication Distribution by MNJ Group (Areas > 5 Journals).

The Low MNJ Group includes Biodiversity, Environmental Sciences, Interdisciplinarity, Administration, and Economics, totaling 117 journals with a median MNJ of 0.6170. Despite lower impact and longer publication times (median 263 days), this group charges the highest median APC (US$3,955) and consists mainly of hybrids (108), with only seven Gold OA and two Diamond journals, offering a poor cost–benefit ratio.

To navigate these constraints, Under-Resourced scholars should publish via hybrid no-APC routes and deposit preprints in open repositories, or concentrate on the few available Diamond journals, to ensure both fee relief and full-text accessibility.

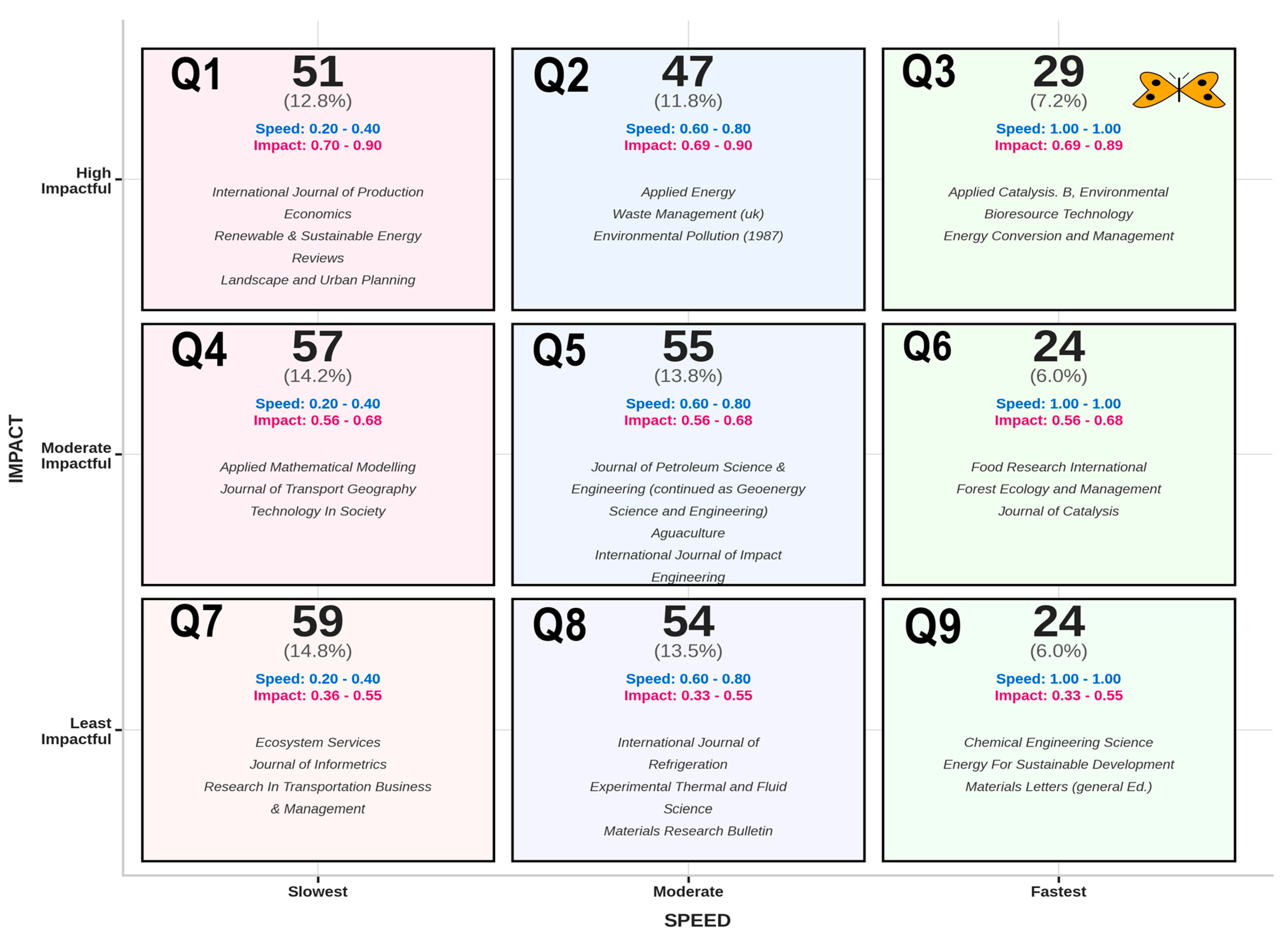

9.4. Classify the 400 MNJs

Julius AI and Appendix I were used to develop Appendices L and M, which classify the journals into nine quadrants, as shown in Figure 12. To avoid arbitrary thresholds, the 400 journals were again classified using the percentile-based approach, dividing Speed and Impact into tertiles at the 33.33rd and 66.67th percentiles: Speed (Slow ≤ 0.4, Moderate 0.4–0.8, Fast > 0.8) and Impact (Low ≤ 0.552, Moderate 0.552–0.68, High > 0.68).

Thus, Appendix L and Figure 12 present the distribution of journals across the nine groups (3×3 matrix), revealing notable patterns within the academic publishing landscape. These patterns and recommendations are described in the next sections.
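The 3×3 assignment can be sketched with the tertile breaks stated above. The quadrant numbering follows the section headings of this paper (Q1 to Q3 high impact, Q4 to Q6 moderate, Q7 to Q9 low, each ordered from slowest to fastest); the function names and the example index values are hypothetical.

```python
SPEED_BREAKS = (0.4, 0.8)      # Slow <= 0.4 < Moderate <= 0.8 < Fast
IMPACT_BREAKS = (0.552, 0.68)  # Low <= 0.552 < Moderate <= 0.68 < High


def level(value: float, breaks) -> int:
    """Return 0, 1, or 2 for the lower, middle, or upper tertile."""
    lower, upper = breaks
    if value <= lower:
        return 0
    if value <= upper:
        return 1
    return 2


def quadrant(speed_index: float, impact_index: float) -> int:
    """Map a (Speed, Impact) pair to quadrants Q1..Q9."""
    speed = level(speed_index, SPEED_BREAKS)
    impact = level(impact_index, IMPACT_BREAKS)
    # High impact fills row 0 (Q1-Q3), low impact fills row 2 (Q7-Q9).
    return (2 - impact) * 3 + speed + 1


# Hypothetical index pairs:
fast_high = quadrant(1.00, 0.712)   # fastest and high impact
slow_low = quadrant(0.20, 0.36)     # slowest and low impact
fast_moderate = quadrant(1.00, 0.60)
```

In practice the breaks would be recomputed from the data, for example with `statistics.quantiles(values, n=3)`, rather than hard-coded as they are here.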

9.4.1 Quadrant 1 (Q1): Slowest & High Impactful

This group includes 51 journals (median MNJ: 0.6544) with long publication times (median NDP: 281 days) but high impact. Dominated by hybrids (96.1%), it offers flexibility for Under-Resourced researchers. Key titles include the International Journal of Production Economics and Renewable & Sustainable Energy Reviews. With one Diamond (Journal of Statistical Software) and hybrid APCs (median: $4,030), authors can publish without upfront costs, retain abstract visibility, and seek funding for OA after acceptance.

9.4.2 Quadrant 2 (Q2): Moderate Speed & High Impactful

This quadrant includes 47 journals (median MNJ: 0.7905) with moderate publication times (median NDP: 154 days) and high impact. All are hybrids, offering submission without upfront APCs. Top titles include Applied Energy (MNJ = 0.8864; NDP = 131.5 days), Waste Management (MNJ = 0.8796; NDP = 147.5 days), Environmental Pollution (MNJ = 0.8755; NDP = 111 days), Acta Materialia (MNJ = 0.8687; NDP = 132 days), and Journal of Environmental Management (MNJ = 0.8660; NDP = 111.5 days).

Despite a high median APC ($4,260), Under-Resourced researchers can benefit from rapid timelines, defer OA decisions until after acceptance, and explore green OA options where available.

Figure 12.

3x3 Matrix showing the distribution of the 400 Most Notable Journals.

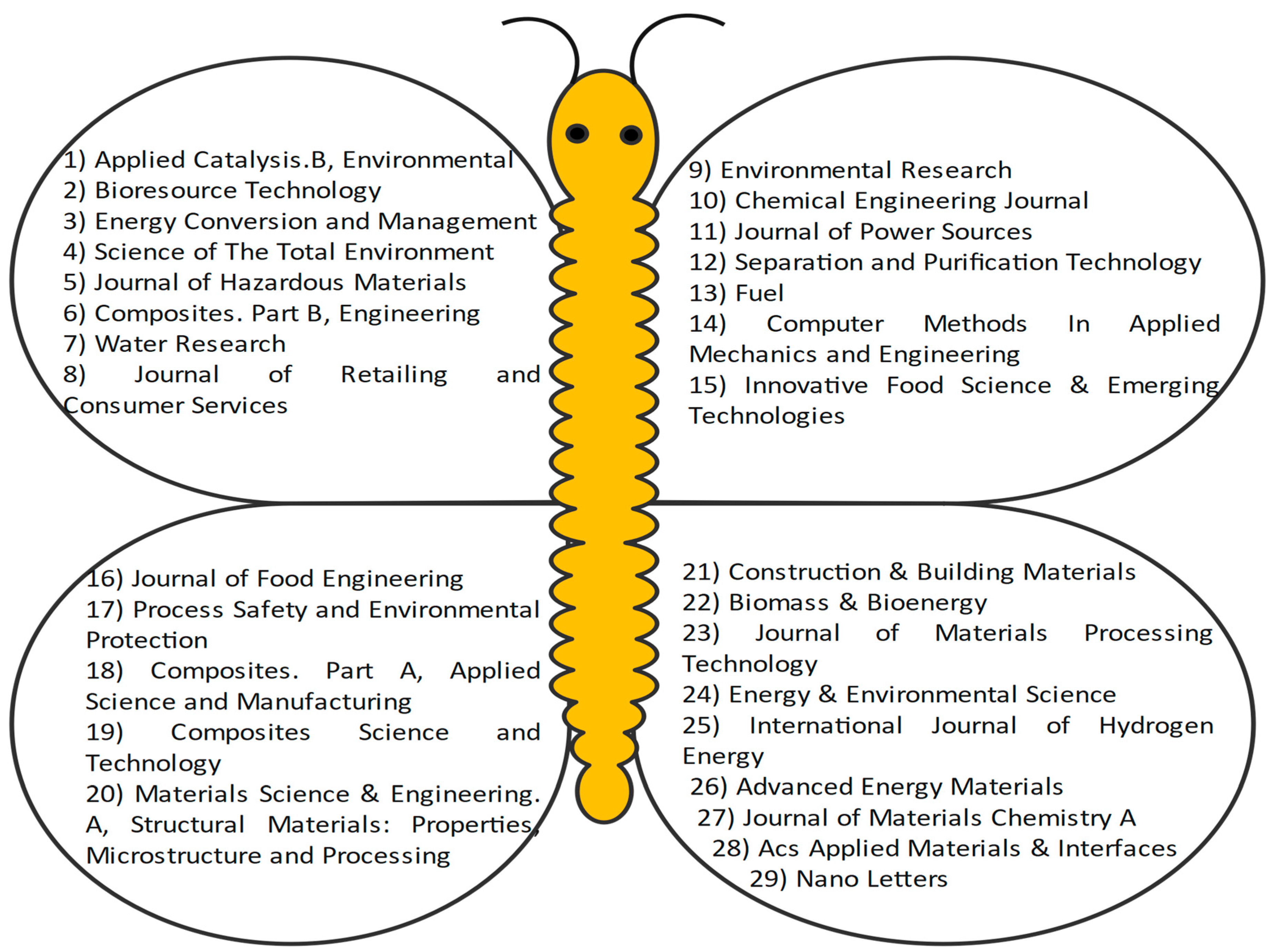

9.4.3 Quadrant 3 (Q3): Fastest & High Impactful Journals

This is the elite category (Figure 13), characterized by the highest median MNJ (0.9116), the greatest impact (0.712), and the fastest publication time among all quadrants, with a median NDP of 91 days. All 29 journals operate as hybrids, and the five best performers are: Applied Catalysis B: Environmental (MNJ = 0.9619; NDP = 76.5 days; CLRPE = 0.64; SJR = 5.18; HI = 353), Bioresource Technology (MNJ = 0.9592; NDP = 80.5 days; CLRPE = 0.60; SJR = 2.395; HI = 383), Energy Conversion and Management (MNJ = 0.9524; NDP = 91.5 days; CLRPE = 0.80; SJR = 2.659; HI = 274), Science of The Total Environment (MNJ = 0.9524; NDP = 91 days; CLRPE = 0.80; SJR = 2.137; HI = 399), and Journal of Hazardous Materials (MNJ = 0.9510; NDP = 89.5 days; CLRPE = 0.48; SJR = 3.078; HI = 375).

These fast, high-impact hybrid journals offer accessible submission with optional post-acceptance APCs (median US$4,480). They are highly recommended for Under-Resourced researchers or for breakthrough studies requiring rapid dissemination. Their quick peer-review process and strong editorial standards ensure high visibility and impact. Even under paywalled access, publishing in these journals helps establish research priority and accommodates future open access conversion if funding becomes available.

9.4.4 Quadrant 4 (Q4): Slowest & Moderate Impactful

This group of 57 journals (median MNJ: 0.5850) is mostly hybrid (94.7%), with one Diamond (Financial Innovation) and two Gold OA titles. Top performers include Applied Mathematical Modelling, Journal of Transport Geography, Land Use Policy, and Technology in Society.

These journals have slower processing times (median NDP: 295 days) and moderate impact, making them suitable for non-urgent submissions like reviews or methodological studies. With a lower median APC (US$3,890), and flexible hybrid models, they offer accessible options for researchers focusing on specialized or emerging fields without strict time constraints.

Figure 13.

The 29 Most Notable Journals (Fastest & High Impactful).

9.4.5 Quadrant 5 (Q5): Moderate Speed & Moderate Impactful

This third-largest group features journals like Journal of Petroleum Science & Engineering (now Geoenergy Science and Engineering), Aquaculture, and International Journal of Impact Engineering. These 55 journals show moderate impact (median MNJ: 0.8155), average publication speed (median NDP: 157 days), and affordable median APCs (US$3,810).

With 92.7% hybrid coverage, they offer accessible, mid-range venues ideal for researchers seeking a balance between speed, cost, and visibility to build a steady publication record.

9.4.6 Quadrant 6 (Q6): Fastest & Moderate Impactful

This second-fastest group (24 journals) includes Food Research International, Forest Ecology and Management, Journal of Catalysis, Energy Storage Materials, and International Journal of Engineering Science, all combining rapid publication (median NDP: 91.5 days) with moderate impact (MNJ ≈ 0.89).

With a uniform speed index (1.00) and strong hybrid presence (91.7%), plus two Diamond titles, these journals suit time-sensitive research. Despite a higher median APC (US$4,145), Under-Resourced researchers can publish without APCs, making this category ideal for fast-tracked, applied, or emerging-topic publications.

9.4.7 Quadrant 7 (Q7): Slowest & Least Impactful

This largest quadrant (59 journals) includes Eure, Science and Public Policy, and Investigações em Ensino de Ciências, characterized by the lowest median MNJ (0.4823) and longest publication times (median NDP: 300 days).

Despite slower processing and modest impact (Speed Index: 0.20–0.40; Impact Index: 0.36–0.55), this group offers the highest proportion of free publishing options: 17% Diamond, 10% Gold OA, and 72.9% hybrid journals. Many titles are newer or in reformulation, often adopting Diamond models to attract submissions.

This quadrant seems to be ideal for early-career or resource-limited researchers seeking A1 publications without APCs. Priority should go to Diamond and Gold OA outlets, with hybrid journals used where no-fee traditional routes exist. Preprints and institutional repositories can help mitigate visibility delays.

9.4.8 Quadrant 8 (Q8): Moderate Speed & Least Impactful

With a median MNJ of 0.6599 and NDP of 150 days, this group offers consistent, mid-speed publication schedules with lower impact. Representative journals include International Journal of Refrigeration, Experimental Thermal and Fluid Science, and Materials Research Bulletin.

Comprising 54 journals (47 hybrid, 4 Gold OA, 3 Diamond), this quadrant balances affordability (median APC: $3,585) with publication speed. It is best suited for Under-Resourced researchers seeking timely dissemination in niche fields without high APC burdens. Hybrid and OA options provide flexibility, making it a practical choice for maintaining a steady publication record between higher-impact projects.

9.4.9 Quadrant 9 (Q9): Fastest & Least Impactful

This group of 24 journals offers the third-fastest publication speed (median NDP: 93.5 days) with solid performance (median MNJ: 0.8218). It includes 19 hybrid journals (79.2%), 2 Diamond (e.g., International Journal of Industrial Engineering Computations, Express Polymer Letters), and 3 Gold OA journals. Journals like Chemical Engineering Science and Energy for Sustainable Development combine rapid processing with flexible access models. The median APC ($3,590) applies only if authors opt for open access after acceptance.

This quadrant is ideal for time-sensitive research, preliminary results, or early-career publications. Free submission is possible through hybrid and Diamond models, enabling authors to build publication records and establish priority while managing budget constraints.

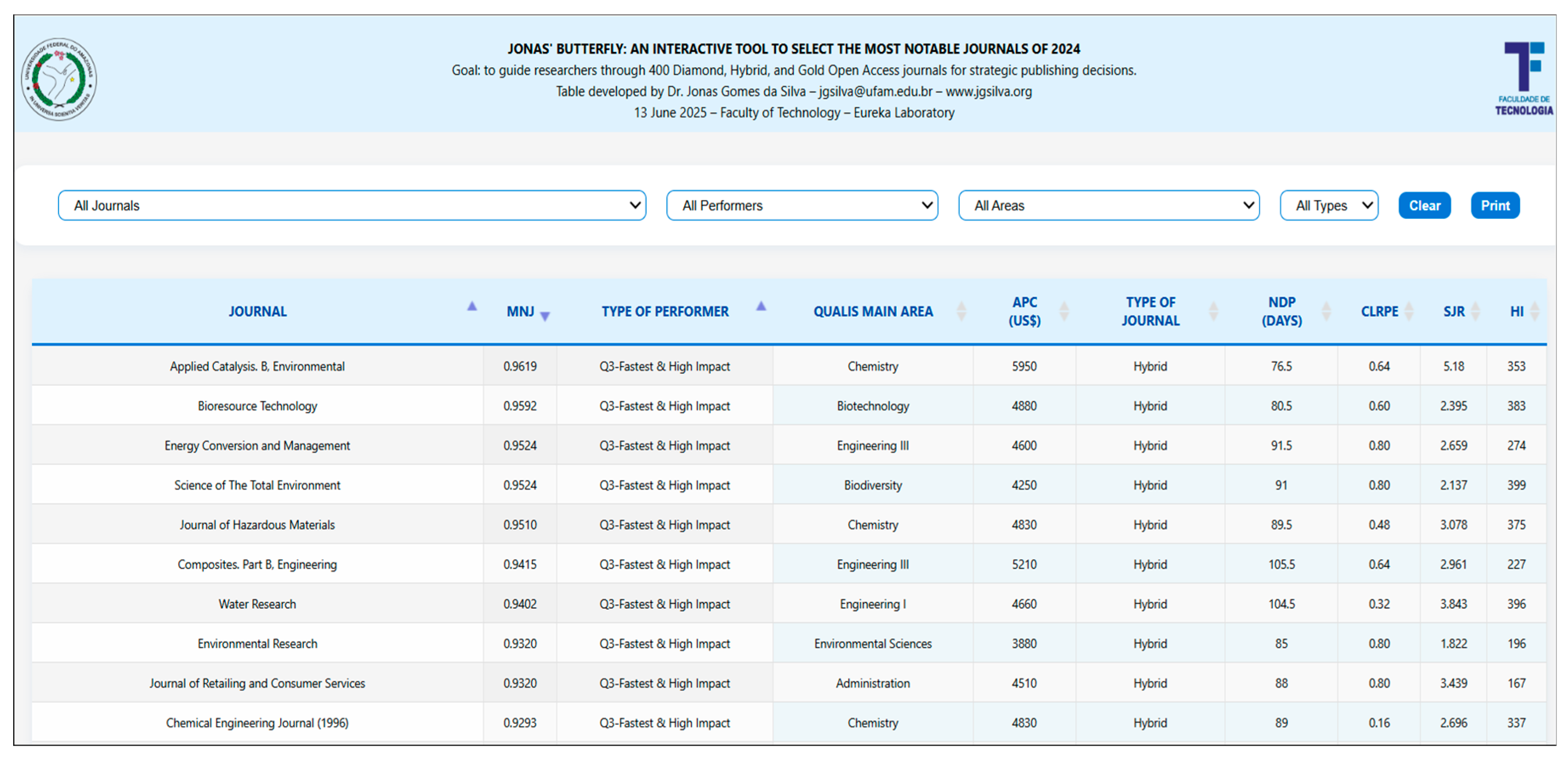

Step 10) Organize, Release, and Share an Interactive HTML Tool for Journal Selection.

Between June 1 and 13, 2025, Julius AI, the dataset (Appendix L), and two logos were used to test prompts, resulting in the final prompt, Python script, and HTML file (Appendices N–P). This process produced an interactive HTML tool (Figure 14; Appendix Q) to help researchers explore 400 Diamond, Hybrid, and Gold OA journals with notable scores.

Figure 14.

The main section of the HTML file designed to assist researchers.

The next step was creating a public GitHub repository, “400mostnotablejournals2024,” using the author’s account and the HTML file (Appendix Q). A shareable link (https://tinyurl.com/35uhcuaf) was added to the author’s website (https://www.jgsilva.org/, ‘Pub’ section) via Wix Studio, ensuring free access for academics and the public. This use of GitHub promotes transparency, reproducibility, and collaboration through open sharing, version control, and detailed documentation (Chen et al., 2025; Ram, 2013).

The interactive HTML tool supports researchers’ academic publishing decisions. It enables rapid exploration and comparison of the 400 notable journals through four intuitive filters (journal name, type of performer, main research area, and journal type) and two buttons, one to clear the filters and one to print a PDF report.

The responsive table allows easy sorting and pagination, while the integrated PDF export function lets users generate customized reports of filtered results, complete with institutional branding for printing.
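The general idea of generating a filterable HTML table from a dataset can be sketched as follows. This is not the script in Appendix O: the template, the function name, and the single name filter are simplifying assumptions, and sorting, pagination, and PDF export are omitted. The three sample rows reuse values reported earlier in this section.

```python
from html import escape

# Static page with a placeholder where the table rows are inserted.
PAGE_TEMPLATE = """<!DOCTYPE html>
<html lang="en">
<head><meta charset="utf-8"><title>Most Notable Journals (sketch)</title></head>
<body>
<input id="q" placeholder="Filter by journal name" oninput="filterRows()">
<table id="journals">
<thead><tr><th>Journal</th><th>Type</th><th>MNJ</th><th>NDP (days)</th></tr></thead>
<tbody>
{{ROWS}}
</tbody>
</table>
<script>
function filterRows() {
  const q = document.getElementById('q').value.toLowerCase();
  document.querySelectorAll('#journals tbody tr').forEach(function (row) {
    const name = row.cells[0].textContent.toLowerCase();
    row.style.display = name.includes(q) ? '' : 'none';
  });
}
</script>
</body>
</html>
"""


def build_page(journals) -> str:
    """Render (name, type, MNJ, NDP) tuples as an HTML page."""
    rows = "\n".join(
        "<tr><td>{}</td><td>{}</td><td>{:.4f}</td><td>{}</td></tr>".format(
            escape(name), escape(kind), score, days)
        for name, kind, score, days in journals
    )
    return PAGE_TEMPLATE.replace("{{ROWS}}", rows)


SAMPLE = [
    ("Applied Catalysis B: Environmental", "Hybrid", 0.9619, 76.5),
    ("International Journal of Industrial Engineering Computations",
     "Diamond", 0.8164, 90),
    ("Biofuel Research Journal", "Diamond", 0.7953, 109),
]
page = build_page(SAMPLE)
```

The resulting string can be saved with `pathlib.Path("journals.html").write_text(page, encoding="utf-8")` and opened in any browser; escaping the journal names keeps characters such as `&` from breaking the markup.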

Additionally, all appendices, figures, files, and datasets were deposited in the author’s Harvard Dataverse account (Gomes da Silva, 2025b). Harvard Dataverse is a widely recognized multidisciplinary repository that adheres to the FAIR principles and is commonly used by journals and researchers to ensure robust data management, long-term preservation, and proper citation (Wilkinson et al., 2016; Boyd, 2021).