1. Introduction

The advent of algorithmic management has significantly transformed the hospitality industry, reshaping operational processes, employee experiences, and ethical considerations (Yang, 2025; Jianu et al., 2025). As digital technologies have profoundly affected all economies and become increasingly integrated into service delivery (Mirčetić & Mihić, 2022), algorithmic systems promise enhanced efficiency, precision, and responsiveness (Luo & Yi, 2025). One of the primary advantages of algorithmic management in hospitality is its ability to enhance operational efficiency (Zhang, H. et al., 2025). Through sophisticated algorithms, managers can optimize resource allocation, such as staffing levels and inventory management, ensuring that resources are deployed where they are needed most, thereby reducing waste and increasing productivity (Diwan, 2025). According to Wu et al. (2024), dynamic scheduling systems powered by algorithms can adjust shift patterns in real time based on fluctuating customer demand, which is especially critical during peak hours or special events. This not only improves service delivery but also reduces labor costs by preventing overstaffing (Schwartz et al., 2025). Automation also minimizes human error in decision-making processes (Webster & Ivanov, 2020; Mandić et al., 2024). For example, algorithms that handle reservation systems can prevent overbooking and double-booking issues, which traditionally relied on manual oversight prone to mistakes (Mojoodi et al., 2025). Algorithmic management further enhances responsiveness to customer preferences and behaviors (Rojas & Jatowt, 2025). By analyzing data from online reviews, booking patterns, and social media interactions, these systems can tailor offerings, personalize marketing, and optimize service delivery, thereby elevating customer satisfaction (Jia et al., 2025). The integration of such data-driven approaches leads to a more agile and competitive hospitality operation, capable of adapting swiftly to changing market conditions (Xu et al., 2024; Contessi et al., 2024; Huang et al., 2025).

Given the rapid digital transformation of the hospitality industry, particularly in the realm of human resource management (Ivanov et al., 2020; Vujko et al., 2025a), there is growing interest in how algorithmic or AI-driven systems shape employee experiences. While such technologies promise efficiency, transparency, and performance optimization, their impact on frontline employees remains insufficiently explored. This study contributes to the expanding scholarly discourse on algorithmic management and artificial intelligence (AI) integration in the hospitality industry by empirically examining the effects of AI-driven human resource (HR) systems on hotel employees’ perceptions of performance, autonomy, fairness, and work-life balance. While existing research has primarily emphasized the operational efficiencies afforded by AI in hospitality contexts (Buhalis & Leung, 2018; Zhu & Chen, 2025; Alam et al., 2025), there remains a notable gap concerning how these systems impact employee well-being and organizational trust. Addressing this gap, the present study adopts a post-positivist epistemological approach and applies structural equation modeling (SEM) to a large, demographically diverse sample of 437 employees from Accor Group hotels in Paris, Berlin, and Amsterdam.

This study investigates how hotel employees perceive the implementation of AI-driven HR systems—especially in terms of job attitudes, workplace satisfaction, and work-life balance. The subject of the research centers on employees working in Accor Group hotels across Paris, Berlin, and Amsterdam, offering insight into how algorithmic management is experienced across different operational and cultural contexts. The research is grounded in the central hypothesis (H): Employees’ perceptions of algorithmic (AI-driven) management systems significantly influence their overall attitudes and work-life balance. Accordingly, the aim is to analyze whether AI is perceived as a supportive and developmental tool or as a restrictive force that reduces autonomy and emotional engagement. The research addresses the question: How do hotel employees perceive the impact of AI-driven HR systems on their work experience, job attitudes, and work-life balance, and what factors influence these perceptions? In doing so, the study contributes to a more nuanced understanding of digital transformation in hospitality work environments, with implications for ethical management, employee retention, and sustainable human resource strategies. This study advances theoretical integration by combining concepts from the algorithmic management literature (Kellogg et al., 2020), organizational justice theory (Leventhal, 1980; Colquitt, 2001), and technology acceptance models (Davis, 1989; Venkatesh et al., 2003). Additionally, the analysis examines how demographic factors (e.g., age, gender, education and experience) moderate these relationships, providing nuanced insights into the human consequences of digital transformation in hospitality. As such, this research fills a critical empirical and conceptual gap, offering actionable knowledge for the ethical and sustainable integration of AI in HR practices.

Two latent constructs—AI Perceptions and Balanced Management—were identified through exploratory factor analysis and validated through SEM, capturing both the supportive and disruptive dimensions of algorithmic management. It can be concluded that the integration of AI-driven management systems in the hospitality sector brings both promising advancements and critical challenges. Employees increasingly encounter algorithmic tools in areas such as scheduling, performance monitoring, and communication, and their perceptions of these systems significantly shape workplace outcomes. While AI offers potential gains in efficiency, recognition, and career development, it can also generate concerns related to reduced flexibility, monotony, and the replacement of human judgment. These opposing experiences suggest that the effects of AI in the workplace are not merely technical but deeply relational and contextual. It can also be concluded that employee acceptance of algorithmic management depends largely on how fairly, transparently, and supportively these systems are introduced and maintained.

2. Literature Review

The integration of algorithmic management systems and AI-powered tools, such as service robots, is rapidly transforming operations in the hospitality industry (Cheng & Hwang, 2025). These technologies are not merely technical instruments; rather, they are experienced and interpreted through the lens of employees' individual beliefs, emotional responses, and personal attributions (Tan & Li, 2025). As such, employee perceptions significantly influence how algorithmic systems are received, and, in turn, how they shape workplace engagement, satisfaction, and adaptability (Bai et al., 2025; Vujko et al., 2025b). A growing body of research suggests that employee attitudes toward algorithmic management are profoundly shaped by how they make sense of its purpose and impact (Kellogg et al., 2020). When AI systems are viewed as enhancing fairness, objectivity, and performance clarity, employees may interpret them positively (Ahn & Chen, 2022; Huo et al., 2025). However, when perceived as rigid or dehumanizing, such systems can lead to reduced morale, autonomy concerns, and even active resistance (Xu et al., 2025).

One influential theoretical lens for understanding this variance is Regulatory Focus Theory (RFT), which distinguishes between two motivational orientations: promotion focus and prevention focus (Higgins, 1997; Li et al., 2025). Promotion-focused employees, who are driven by advancement and aspirations, tend to view algorithmic systems as opportunities for growth and achievement (Maiti et al., 2025). Their generally optimistic outlook supports greater adaptability to technological changes, including the adoption of AI-based HR tools or robotic service interfaces (Madanchian & Taherdoost, 2025). These employees are more likely to engage proactively with algorithmic management, interpreting it as a means of career development and workplace efficiency (Madanchian et al., 2023; Bennett & Martin, 2025).

In contrast, prevention-focused individuals prioritize security, stability, and the avoidance of errors (Shin et al., 2025). They tend to respond to algorithmic systems with skepticism, interpreting them as constraints that threaten their autonomy or job security (Sun et al., 2025). Such employees often express discomfort with automation, fearing that it may erode the relational and discretionary aspects of their roles (Cauchi et al., 2017). This group is more likely to report negative emotions, experience stress, and resist AI adoption, particularly when implementation lacks transparency or human oversight (Huang & Gursoy, 2024; Zhang, Y. et al., 2025). These divergent perceptions—anchored in motivational orientations—can have substantial effects on team dynamics and organizational culture (Zhou et al., 2024). While promotion-focused individuals may foster innovation and openness, prevention-focused attitudes can contribute to resistance, disengagement, and the deterioration of trust in management (Kirshner & Lawson, 2025). As such, the emotional and cognitive responses of employees to algorithmic tools must be actively managed to avoid polarization within teams and to support cohesive implementation efforts (Yadav & Dhar, 2021).

Effective integration of algorithmic management requires context-sensitive leadership (Jianu et al., 2025). Managers must acknowledge these psychological differences and avoid one-size-fits-all solutions. Instead, communication strategies should emphasize transparency, fairness, and opportunities for employee voice (Jerez-Jerez, 2025). Training programs tailored to various motivational profiles can enhance system acceptance and reduce uncertainty (Bai & Zhang, 2025). Ultimately, algorithmic tools should be framed not as replacements for human judgment but as enhancements to employee potential and well-being (Li et al., 2025). The literature clearly shows that the success of algorithmic management in the hospitality sector depends not only on technological capability, but on its social and psychological integration. Recognizing the role of regulatory focus and individual interpretation helps managers and researchers alike understand the complex interplay between automation and human behavior, paving the way for more inclusive and effective digital transformation strategies.

3. Materials and Methods

This study focuses on hotel employees working within the Accor Group’s properties across three major European cities: Paris, Berlin, and Amsterdam. Accor maintains a substantial presence in these destinations, with approximately 147 hotels in Paris, 35 in Berlin, and 25 in Amsterdam. These properties span a broad portfolio of brands—from luxury to economy—catering to diverse market segments and offering a rich context for analyzing employee experiences in varied organizational environments. To ensure statistical robustness and representativeness, a total of 437 hotel employees were surveyed. This sample size exceeds the minimum threshold recommended by Hair et al. (2010) for structural equation modeling (SEM), thereby supporting the reliability and validity of the analytical results. The sample was proportionally distributed across the three cities to capture geographic and organizational diversity in employee experiences with AI-driven human resource systems.

Demographic characteristics of the sample indicate a balanced representation across key variables. The gender distribution was nearly equal, with 50.3% male and 49.7% female respondents. The age structure reveals a mature workforce: 3.2% were aged 18–24, 3.4% were 25–34, 24.0% were 35–44, 30.2% were 45–54, 25.4% were 55–64, and 13.7% were over 65 years old. This composition suggests a workforce with considerable professional experience and potentially well-formed attitudes toward HR policies and technology. Educational background varied, with 51.7% of participants holding a high school diploma, 41.9% possessing a college or faculty degree, and 4.6% having obtained a master's or doctoral degree. Only 1.8% of respondents reported completing only elementary education. The relatively high level of formal education among employees reflects the growing professionalization of the hospitality sector and supports the assumption that participants were capable of meaningfully engaging with questions regarding technological systems in HR. In terms of work experience, 4.3% of employees had been employed for less than one year, 53.3% had between one and five years of experience, 31.4% had between six and fifteen years, and 11.0% had over sixteen years of service. These findings indicate that 42.4% of the sample had six or more years of tenure in the hotel industry, which is particularly relevant for exploring attitudes toward long-term career development and organizational loyalty in the context of digital transformation.

This study adopts a post-positivist epistemological stance, recognizing that employees’ perceptions of algorithmic management are shaped by subjective experiences yet can be systematically measured and analyzed. The use of structured quantitative methods, such as structural equation modeling, reflects a commitment to identifying underlying patterns and testing theory-informed hypotheses, while acknowledging the contextual and interpretive nature of human attitudes and organizational behavior. To examine the core premise of this study, two sub-hypotheses were formulated based on the underlying factor structure identified in preliminary analyses.

- Sub-Hypothesis 1 (H1a): Employees who perceive artificial intelligence (AI) systems as beneficial, specifically in terms of enhancing job performance, reducing workload, and improving communication, will exhibit higher levels of career optimism and stronger intentions to remain with their organization.
- Sub-Hypothesis 2 (H1b): Employees who view algorithmic management as striking a balance between operational efficiency and personal consideration will report lower perceptions of flexibility loss and greater satisfaction regarding skill development opportunities.

To explore hotel employees’ attitudes, satisfaction, and perceptions of work-life balance under AI-driven human resource (HR) systems, a structured questionnaire was developed, consisting of 40 evaluative statements. Each item was measured on a five-point Likert scale (1 = Strongly Disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly Agree), enabling respondents to express their level of agreement with statements reflecting key constructs such as performance, autonomy, recognition, fairness, and digital support. The questionnaire design was grounded in an extensive literature review and drew upon several established theoretical models and validated measurement instruments: Constructs related to algorithmic control, flexibility, decision-making, and fairness were informed by research on algorithmic management, especially Kellogg et al. (2020) and Deldadehasl et al. (2025). Items measuring job satisfaction and work engagement were adapted from the Job Satisfaction Survey (Spector, 1997) and the Job Diagnostic Survey (Hackman & Oldham, 1975). Measures of work-life balance and scheduling stress were based on Netemeyer et al. (1996). Employee reactions to digital systems—such as perceived usefulness, feedback mechanisms, and communication improvements—were derived from the Technology Acceptance Model (Davis, 1989) and the Unified Theory of Acceptance and Use of Technology (UTAUT) by Venkatesh et al. (2003). To evaluate perceived fairness, inclusivity, and trust in automated decisions, items were guided by the Organizational Justice framework developed by Colquitt (2001) and Leventhal (1980). Finally, digital HR practices within hospitality were contextualized using insights from Buhalis and Leung (2018) and Ivanov and Webster (2017), particularly concerning service automation and smart HR environments.

The research was conducted between June 2024 and June 2025, during which the authors visited multiple hospitality workplaces across Europe. Data were collected using a mixed-mode approach: while the majority of responses were gathered face-to-face through on-site engagement, additional participants were reached via email invitations with secure survey links. This method enabled access to both operational and support staff across different levels of the organization. A significant part of the fieldwork was carried out in Accor Group hotels in Paris, Berlin, and Amsterdam. These hotels were purposefully selected due to their integration of AI-powered HR systems, including platforms for recruitment, shift scheduling, and chatbot-based employee support. During site visits, the researchers interacted with employees to collect responses in person, while others completed the survey digitally after being contacted through the hotels’ communication channels. These sites provided real-world insight into how algorithmic management functions in active hospitality operations and how it is perceived by those affected by it.

To uncover the latent structure underlying the 40 questionnaire items and identify coherent dimensions of employee perception, an exploratory factor analysis (EFA) was conducted. EFA reduces data complexity by grouping correlated variables into broader conceptual categories known as factors. This statistical approach is especially useful in identifying core constructs that shape how employees experience and interpret algorithmic systems in the workplace.

The factor model underlying the EFA can be written as

$$X_i = \lambda_{i1} F_1 + \lambda_{i2} F_2 + \varepsilon_i$$

where $X_i$ represents the observed variable (e.g., Job Monotony, Performance Impact), $\lambda_{i1}$ and $\lambda_{i2}$ are factor loadings, $F_1$ and $F_2$ are the latent constructs (AI Perceptions and Balanced Management), and $\varepsilon_i$ is the error term, representing variance not explained by the factors.

To examine the relationships between employees’ perceptions of algorithmic management and their evaluations of balanced and fair HR practices, the study employed Structural Equation Modeling (SEM) using AMOS. The model is based on two latent constructs: Factor 1 (F1) – AI Perceptions, and Factor 2 (F2) – Balanced Management. The measurement model specifies how observed indicators reflect their underlying latent constructs through confirmatory factor analysis (CFA). The two-factor model was developed to reflect both the supportive and developmental aspects of AI (captured by F1) and employees’ perceptions of balance, fairness, and personal consideration under algorithmic management (captured by F2).
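To make the roles of the factor loadings and the error term concrete, the following minimal Python sketch computes, for a single standardized item, the share of variance explained by two factors (the communality) and the share left to the error term (the uniqueness). It assumes orthogonal factors, as in a Varimax-rotated solution. The loading values and the function name `communality` are hypothetical and chosen only for illustration; they are not estimates from this study.

```python
def communality(loadings):
    """Return the communality of a standardized item: the sum of its
    squared loadings on orthogonal (uncorrelated) factors."""
    return sum(loading ** 2 for loading in loadings)


# Hypothetical loadings of one item on two orthogonal factors (illustrative only).
item_loadings = [0.80, 0.30]

h2 = communality(item_loadings)  # variance explained by the two factors
uniqueness = 1.0 - h2            # variance attributed to the error term

print(f"communality = {h2:.2f}, uniqueness = {uniqueness:.2f}")
```

An item with a high communality is well represented by the extracted factors, whereas a high uniqueness indicates that most of its variance is not shared with the other items.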

Factor 1 AI Perceptions encompasses employees’ overall perceptions and attitudes toward algorithmic management within the hospitality context. This factor captures a comprehensive range of experiences related to how AI-driven HR systems influence job performance, employee retention, workload, recognition, and communication, as well as the perceived balance between efficiency and employee satisfaction. The factor reflects a spectrum of employee experiences, highlighting both the advantages and challenges brought by algorithmic management. The construct “Performance Impact” indicates that employees believe AI enhances their job performance, while “Retention Intention” measures their likelihood to remain employed due to the presence of AI management. Conversely, constructs such as “Flexibility Loss” and “Turnover Risk” reveal concerns related to reduced work flexibility and the potential for increased employee turnover associated with AI systems. Organizational support is also addressed through items like “Support Availability” and “Training Adequacy,” assessing employees’ perceptions of assistance and preparation in adapting to AI tools. The constructs “Recognition Timeliness” and “Communication Improvement” evaluate the role of AI in providing prompt feedback and fostering better communication between staff and supervisors. Concerns about job engagement and autonomy appear in “Job Monotony” and “Decision Replacement,” which reflect feelings of reduced job variety and the displacement of human decision-making by AI. The construct “Satisfaction Tradeoff” emphasizes the tension between increased efficiency and potential decreases in employee satisfaction. The factor further incorporates elements related to inclusivity and future outlooks, such as “Diversity Respect,” which measures the consideration of diverse employee needs, and “Career Optimism,” which reflects confidence that AI-driven HR practices will enhance long-term career prospects. 
In sum, Factor 1 offers a rich, multidimensional understanding of hotel employees’ perceptions of algorithmic management, capturing the varied ways AI integration affects attitudes, job satisfaction, and loyalty within the hospitality industry.

Factor 2 Balanced Management captures employees’ perceptions of how algorithmic management influences the balance between organizational efficiency and individual well-being within the hospitality workplace. This factor reflects the extent to which AI-driven HR systems are perceived to consider personal circumstances, support productivity, and foster fair treatment. The construct “Balance Disruption” highlights concerns that algorithmic scheduling may negatively impact employees’ work-life balance, indicating a challenge in managing the demands of AI-driven shift assignments. In contrast, “Personal Consideration” reflects the degree to which employees feel their individual needs and circumstances are acknowledged by AI management systems when assigning shifts. Employees’ perceived productivity is captured by “Productivity Boost,” which measures whether working under algorithmic management enhances their efficiency and output. The construct “Fair Efficiency” emphasizes the hotel’s ability to maintain fairness and equity alongside the pursuit of AI-driven operational efficiency. Factor 2 also includes “Skill Development,” reflecting employees’ views that AI management supports their learning and growth by helping them acquire new skills. Finally, “Future Growth” captures employees’ belief in the continuing expansion and importance of AI-based management within the hospitality industry. Overall, Factor 2 represents a nuanced perspective on the interplay between AI-driven efficiency and employee-centric considerations, highlighting both the benefits and potential challenges of algorithmic management in fostering a balanced and supportive work environment.

These latent constructs were then utilized in a Structural Equation Model (SEM) to test the proposed hypotheses and examine how demographic variables (e.g., age, gender, experience) may moderate these relationships. To evaluate the adequacy of the structural equation model, several goodness-of-fit indices were examined. The Comparative Fit Index (CFI) was 0.913, and the Tucker-Lewis Index (TLI) was 0.904. Both indices exceed the commonly accepted threshold of 0.90, indicating an acceptable level of model fit. The Goodness-of-Fit Index (GFI) also yielded a value of 0.913, further supporting the model’s fit to the observed data. The Root Mean Square Error of Approximation (RMSEA) was 0.062, which falls within the acceptable range of 0.05 to 0.08, suggesting a reasonable approximation of the model in the population. Collectively, these fit indices indicate that the proposed structural model demonstrates an acceptable overall fit, supporting its use for testing the relationships among employee attitudes, job satisfaction, and work-life balance under AI-driven HR systems in the hospitality sector.
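The comparison of the reported indices with their cut-offs can be sketched in a few lines of Python. The function name `assess_fit` is hypothetical. Applying the 0.90 cut-off to GFI is an assumption, since the text states that threshold only for CFI and TLI. RMSEA is checked against the upper bound of 0.08 only, on the assumption that values below 0.05 would indicate an even closer fit.

```python
def assess_fit(cfi, tli, gfi, rmsea):
    """Check each index against the cut-offs used in the text:
    CFI, TLI, and (by assumption) GFI above 0.90; RMSEA at most 0.08."""
    return {
        "CFI": cfi > 0.90,
        "TLI": tli > 0.90,
        "GFI": gfi > 0.90,
        "RMSEA": rmsea <= 0.08,
    }


# Fit indices as reported for the structural model.
reported = {"cfi": 0.913, "tli": 0.904, "gfi": 0.913, "rmsea": 0.062}

checks = assess_fit(**reported)
print(checks)
print("all criteria met:", all(checks.values()))
```

Keeping the checks in a dictionary makes it easy to see which individual index fails when a model does not meet all criteria.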

4. Results

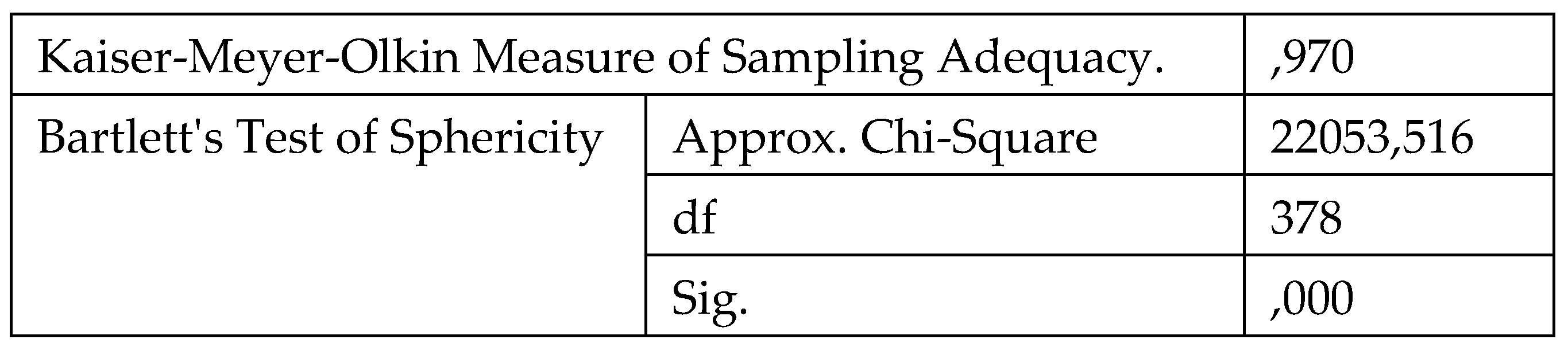

To assess the suitability of the dataset for factor analysis, the Kaiser-Meyer-Olkin (KMO) Measure of Sampling Adequacy and Bartlett’s Test of Sphericity were conducted. The KMO value was 0.970, which is well above the recommended threshold of 0.60 (Kaiser, 1974), indicating excellent sampling adequacy. This result confirms that the patterns of correlations among the variables are compact enough to yield distinct and reliable factors. Bartlett’s Test of Sphericity was highly significant, with a chi-square value of 22,053.516, degrees of freedom (df) = 378, and p < .001. This test evaluates whether the correlation matrix significantly differs from an identity matrix (i.e., a matrix in which all off-diagonal correlations are zero). The significant result indicates that the observed correlations are sufficient for conducting exploratory or confirmatory factor analysis. Taken together, these results provide strong statistical evidence that the dataset is appropriate for factor analysis and further structural equation modeling, supporting the validity of using this approach to explore underlying constructs such as employee attitudes, job satisfaction, and work-life balance in the context of AI-driven HR systems.
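The degrees of freedom of Bartlett's test depend only on the number of variables p in the correlation matrix, through df = p(p − 1)/2. The short sketch below, with hypothetical function names, applies this standard formula and inverts it to show how many variables a reported df value implies.

```python
import math


def bartlett_df(p):
    """Degrees of freedom of Bartlett's test of sphericity for p variables."""
    return p * (p - 1) // 2


def variables_from_df(df):
    """Invert df = p(p - 1)/2 for p; return None if no integer p matches."""
    p = (1 + math.isqrt(1 + 8 * df)) // 2
    return p if bartlett_df(p) == df else None


# Number of variables implied by the reported df of 378.
print(variables_from_df(378))  # -> 28
```

Under this formula, df = 378 corresponds to 28 variables entering the correlation matrix.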

Table 1. KMO and Bartlett's Test.

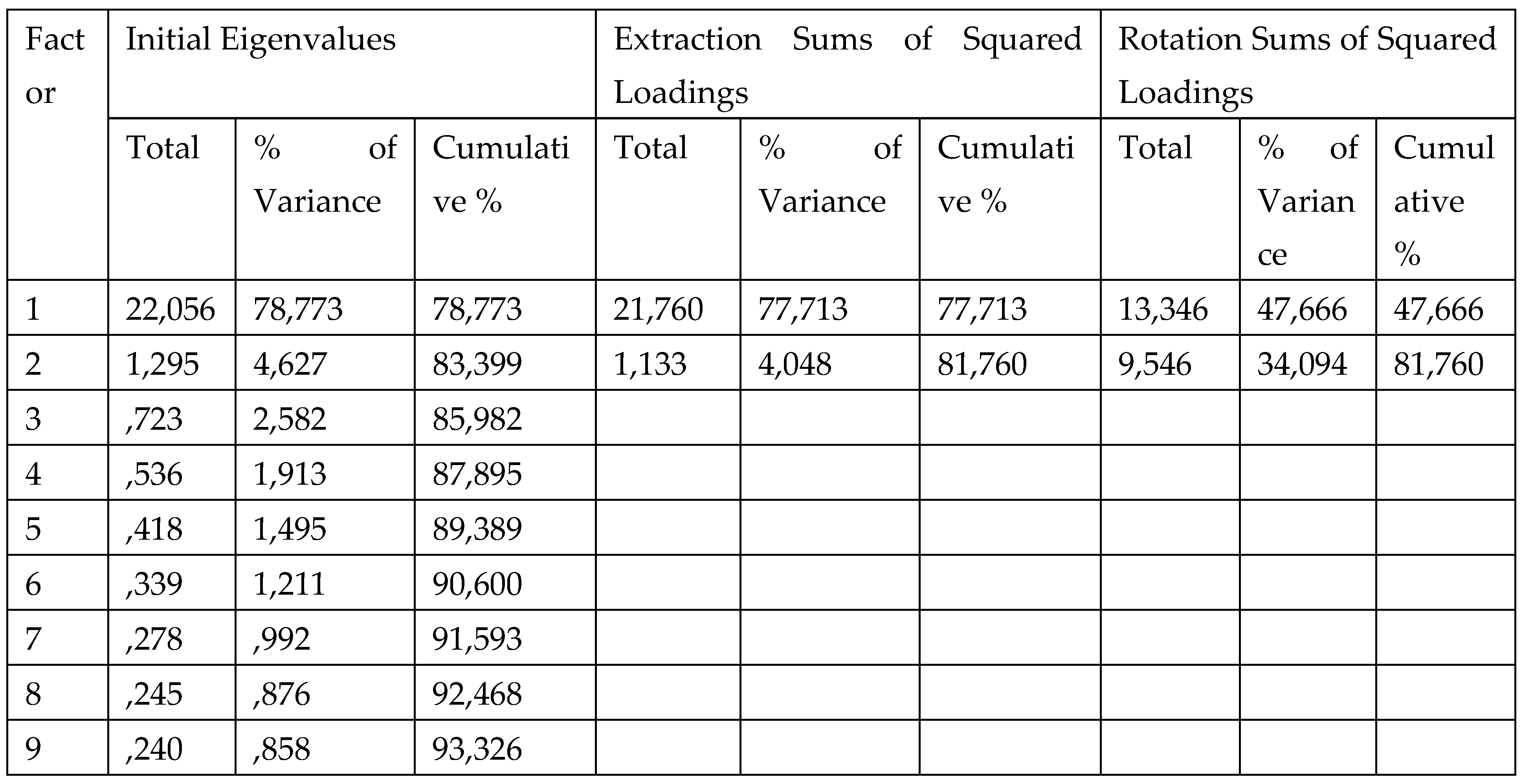

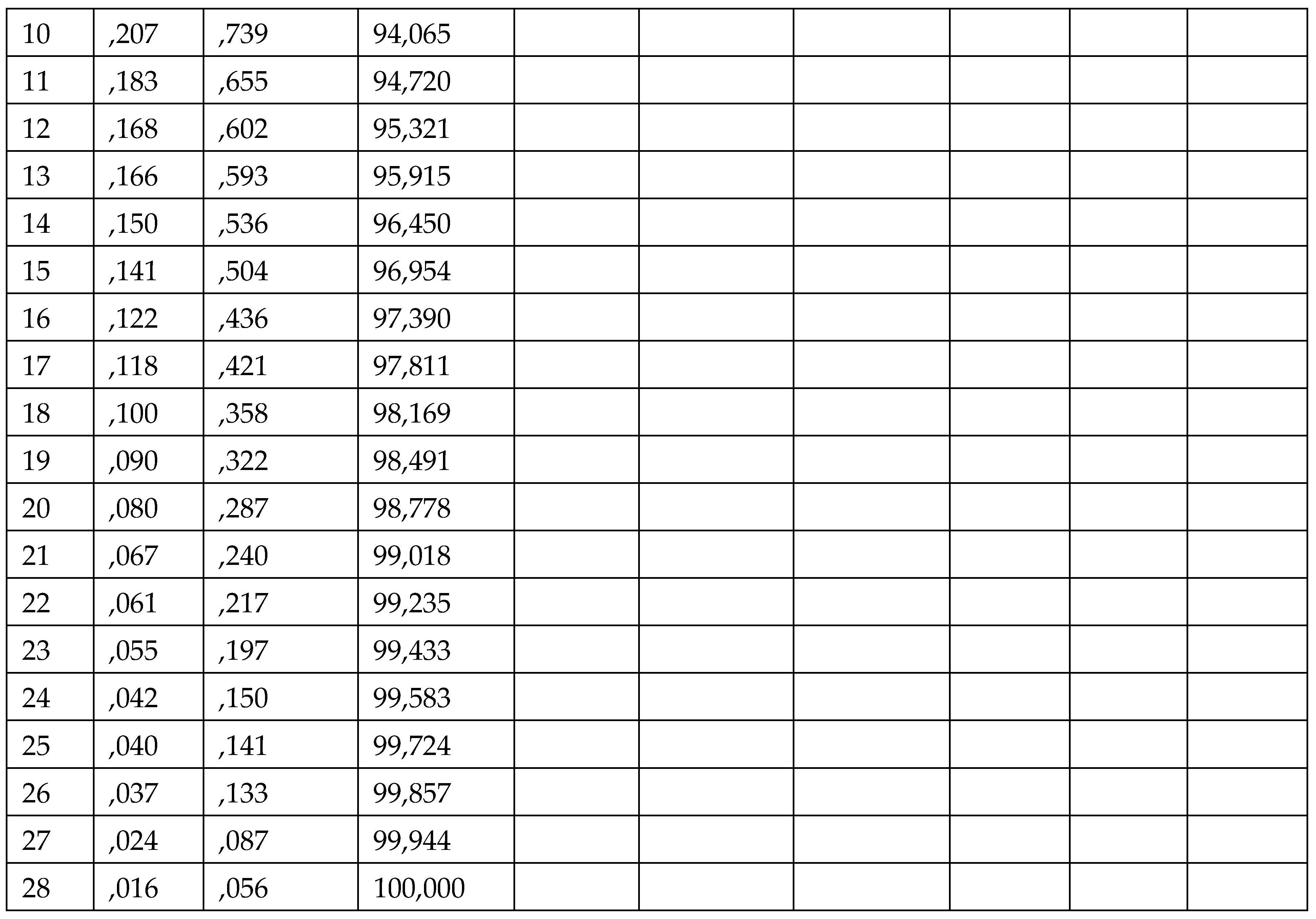

To identify the underlying factor structure of hotel employees’ perceptions of AI-driven HR systems, an exploratory factor analysis (EFA) was performed using Principal Component Analysis (PCA) with Varimax rotation. The analysis revealed two significant factors based on eigenvalues greater than 1. The first factor had an initial eigenvalue of 22.056, explaining 78.77% of the total variance, while the second factor had an eigenvalue of 1.295, accounting for an additional 4.63%. Together, these two factors explained 83.40% of the variance before rotation. After extraction, the first factor explained 77.71% and the second 4.05%, summing to 81.76% of the total variance, indicating a strong and well-defined factor structure. Varimax rotation further clarified the results, redistributing variance so that the first rotated factor explained 47.67% and the second 34.09%, while maintaining the total explained variance at 81.76%. This rotation enhanced the interpretability of the factors without sacrificing explanatory power.
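The percentages above follow from the eigenvalues, because the total variance of a correlation matrix equals the number of variables. The sketch below reproduces the arithmetic under one loudly stated assumption: the number of analyzed variables is taken to be 28, a value inferred from the reported Bartlett df of 378 (= 28 × 27 / 2) and not stated explicitly in the text. The function name `percent_variance` is hypothetical.

```python
def percent_variance(eigenvalue, n_variables):
    """Share of total variance (in %) of one component of a correlation
    matrix, whose total variance equals the number of variables."""
    return 100.0 * eigenvalue / n_variables


# Reported initial eigenvalues. n_variables = 28 is an assumption inferred
# from the reported df of 378 (= 28 * 27 / 2), not a figure from the text.
eigenvalues = [22.056, 1.295]
n_variables = 28

# Kaiser criterion: retain components with eigenvalues greater than 1.
retained = [ev for ev in eigenvalues if ev > 1.0]
shares = [percent_variance(ev, n_variables) for ev in retained]

for ev, share in zip(retained, shares):
    print(f"eigenvalue {ev:.3f} explains {share:.2f}% of the variance")

# An orthogonal rotation redistributes variance between the factors
# but leaves the total explained variance unchanged.
extracted_total = 77.71 + 4.05
rotated_total = 47.67 + 34.09
print(f"extracted: {extracted_total:.2f}%, rotated: {rotated_total:.2f}%")
```

Both the extracted and the rotated percentages sum to 81.76%, which illustrates that Varimax rotation changes how variance is distributed between the two factors without changing the total.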

Table 2. Total Variance Explained.

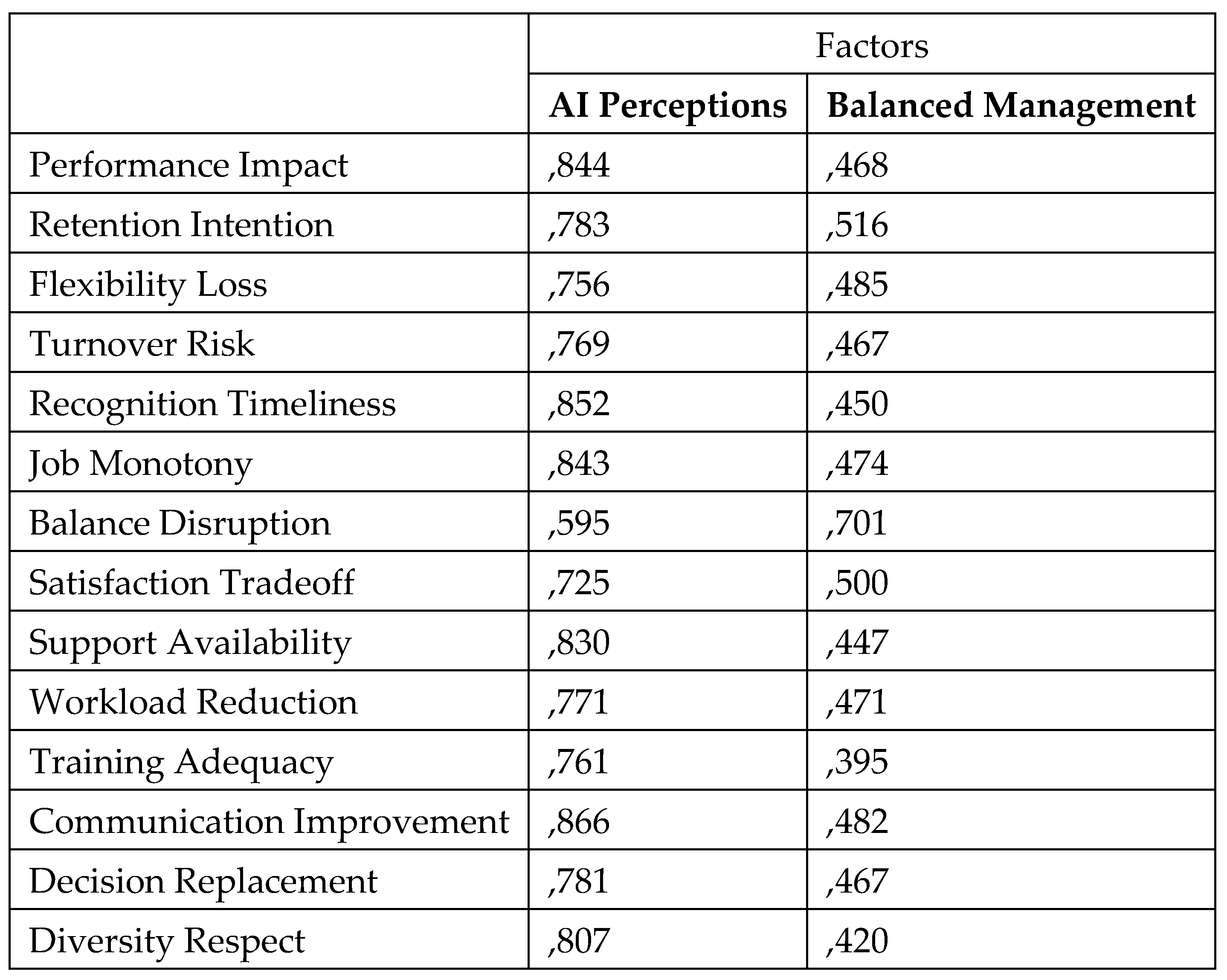

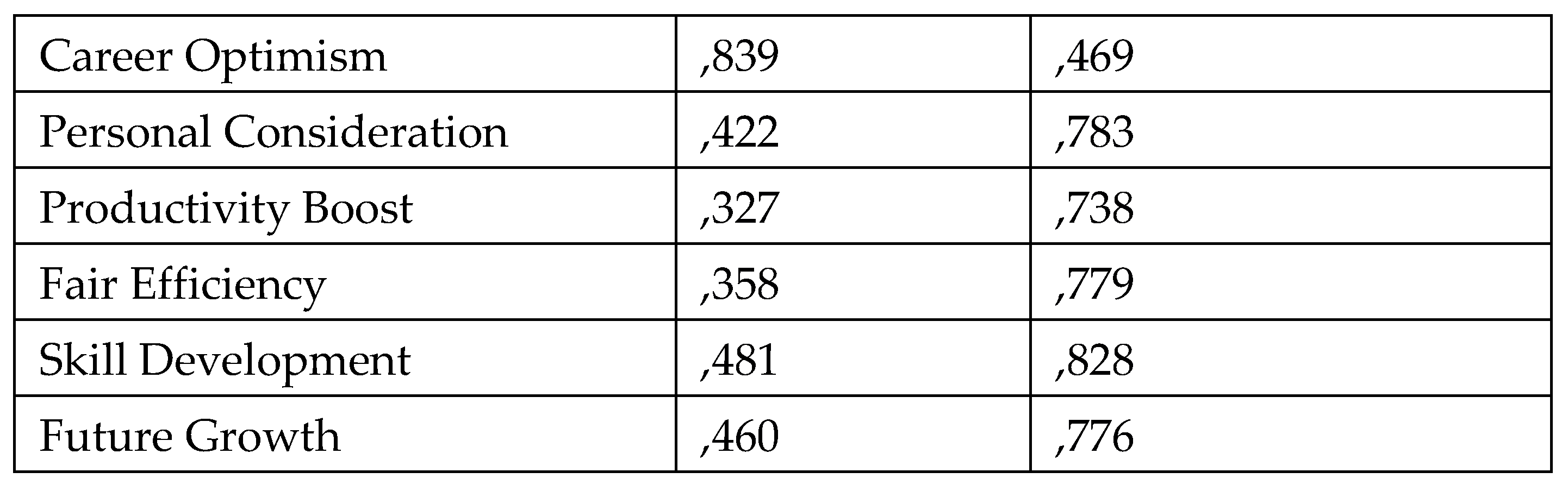

The two resulting factors (Table 3) correspond to key dimensions in employee perceptions: Factor 1 represents AI Perceptions, encompassing employees’ overall attitudes toward algorithmic management and its impact on job performance and workplace communication; Factor 2 represents Balanced Management, reflecting employees’ perceptions of fairness, personal consideration, work-life balance, and skill development under AI-driven HR systems. These findings confirm a clear and interpretable two-factor structure underlying hotel employees’ views on AI management.

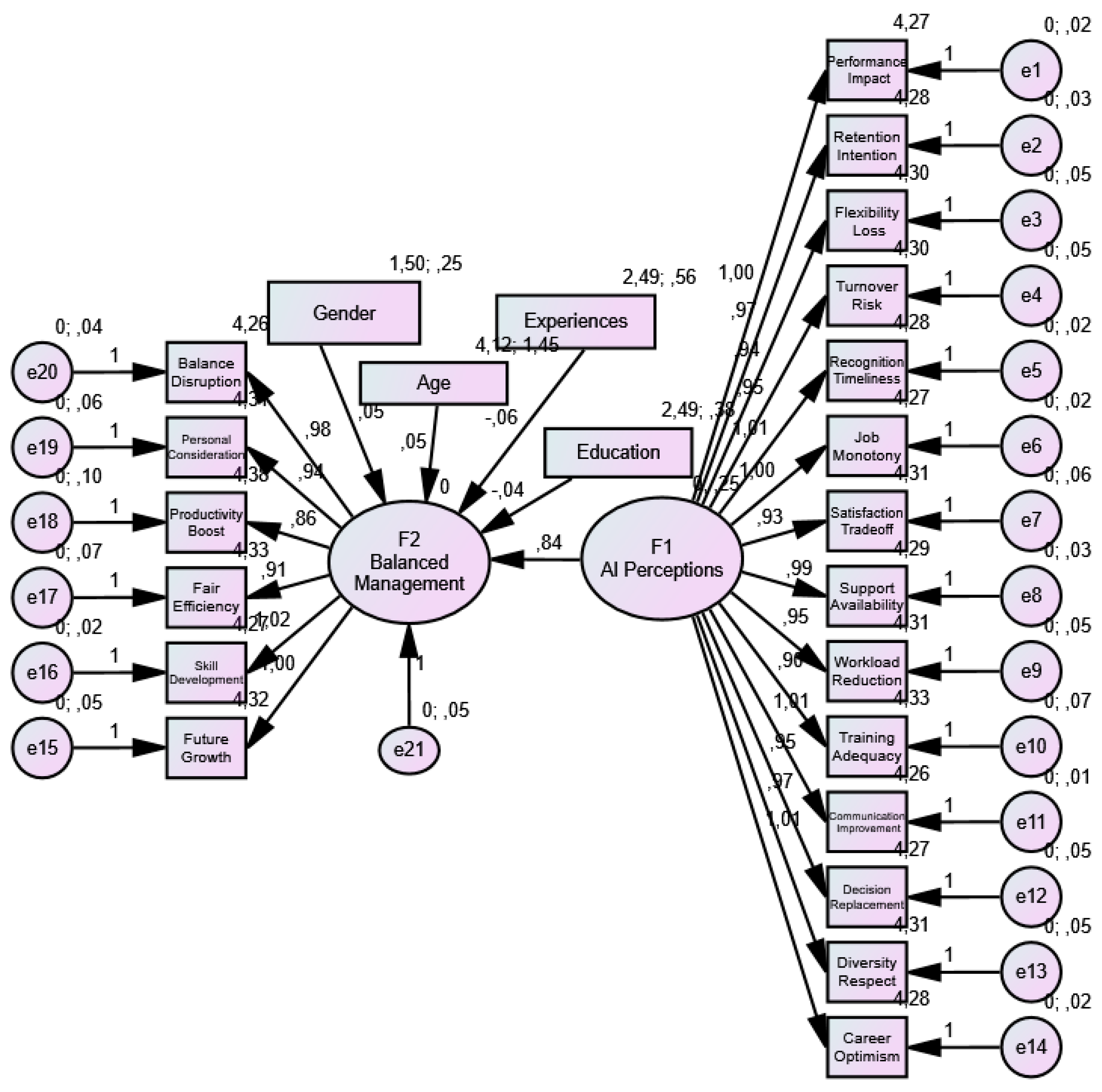

Figure 1. Structural Equation Modeling (SEM). Source: Prepared by the authors (2025).

The structural equation modeling (SEM) results (Figure 1) reveal a well-fitting model comprising two factors: F1 – AI Perceptions and F2 – Balanced Management, along with the influence of demographic variables (education, age, gender, and work experience). These findings provide insight into how hotel employees assess the integration of AI in workplace management, balancing efficiency gains with human-centered values.

The first latent construct, AI Perceptions (F1), is composed of 14 observed variables reflecting employee attitudes toward the effects of AI-driven or algorithmic management systems. The factor includes variables representing both positive and negative outcomes, indicating the duality of experiences employees report when interacting with automated managerial tools. Positive perception indicators include:

- Performance Impact: Employees associate AI systems with improved overall performance.
- Retention Intention: Some employees are more inclined to remain in their jobs due to perceived improvements linked to AI.
- Recognition Timeliness and Support Availability: These reflect timely acknowledgment and assistance in AI-managed environments.
- Workload Reduction and Training Adequacy: Employees recognize practical support in easing job demands and adapting to new systems.
- Communication Improvement and Career Optimism: These reflect positive long-term views of AI's role in internal communication and professional growth.

Negative indicators reflect nuanced concerns:

- Flexibility Loss and Turnover Risk: Employees express that algorithmic scheduling reduces their control over work hours and may elevate turnover.
- Job Monotony and Satisfaction Tradeoff: These signal a perceived decline in job engagement and emotional satisfaction.
- Decision Replacement and Diversity Respect: Skepticism remains about AI replacing nuanced human decisions and the system’s ability to accommodate diverse employee needs.

The factor loadings for F1 are remarkably high, ranging from .93 to 1.00, signifying strong internal consistency and robust measurement reliability. This confirms that employees clearly distinguish and evaluate the multifaceted impacts of AI on their professional experience.

The second latent construct, Balanced Management (F2), consists of six observed variables that capture how employees perceive the integration of fairness, personal development, and future orientation in AI-managed systems:

- Balance Disruption and Personal Consideration: These address whether AI systems negatively affect work-life balance or show consideration for individual circumstances.
- Productivity Boost and Fair Efficiency: These reflect perceptions of performance enhancement paired with fair treatment.
- Skill Development and Future Growth: These reflect employees’ views of AI’s potential to support learning and its long-term relevance in the sector.

These items also show high loadings (from .86 to 1.02), supporting the construct’s validity and confirming that perceptions of balance and fairness are well defined and clearly articulated by respondents.

The structural model reveals a strong and statistically significant path from F2 (Balanced Management) to F1 (AI Perceptions), with a standardized coefficient of .84. This indicates that employees who perceive AI systems as fair, personally considerate, and developmentally supportive are much more likely to have overall positive perceptions of AI management. This relationship highlights a critical moderating role: although AI systems introduce operational changes, their acceptance and perceived value are heavily dependent on whether they align with employees’ social and ethical expectations. Fairness, respect for individuality, and perceived growth opportunities emerge as central to shaping trust and engagement in algorithmic management contexts. Among the demographic predictors included in the model: Education exerts a strong positive influence on AI Perceptions (unstandardized coefficient = 2.49, standardized = .38). This suggests that higher levels of education are associated with more favorable views of AI-based management. It is likely that more educated employees are more technologically literate, adaptable, and better equipped to navigate the demands and opportunities of digital systems. Gender, Age, and Work Experience show comparatively weak or non-significant effects on F2 (Balanced Management), with standardized coefficients near or below .05. This indicates that perceptions of fairness, balance, and developmental value are widely shared across demographic groups, and are not strongly conditioned by individual background characteristics.
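The gap between the unstandardized (2.49) and standardized (.38) Education coefficients reflects only the scales of the two variables: a standardized coefficient equals the unstandardized one multiplied by the ratio of the predictor's standard deviation to the outcome's. The sketch below illustrates this general relationship. The function names and the standard deviation of 4.0 are hypothetical, and the sketch is not a reconstruction of the AMOS output.

```python
def standardized_coefficient(b, sd_predictor, sd_outcome):
    """Convert an unstandardized path coefficient b into a standardized one."""
    return b * sd_predictor / sd_outcome


def implied_sd_ratio(b, beta):
    """SD(predictor) / SD(outcome) implied by a reported (b, beta) pair."""
    return beta / b


# Reported Education -> AI Perceptions coefficients.
ratio = implied_sd_ratio(b=2.49, beta=0.38)
print(f"implied SD ratio = {ratio:.3f}")

# Round trip with hypothetical SDs that have this ratio (illustrative only).
sd_outcome = 4.0
beta = standardized_coefficient(2.49, sd_predictor=ratio * sd_outcome, sd_outcome=sd_outcome)
print(f"recovered standardized coefficient = {beta:.2f}")
```

Because standardized coefficients are expressed in standard deviation units, they are the appropriate basis for comparing the relative strength of predictors measured on different scales.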

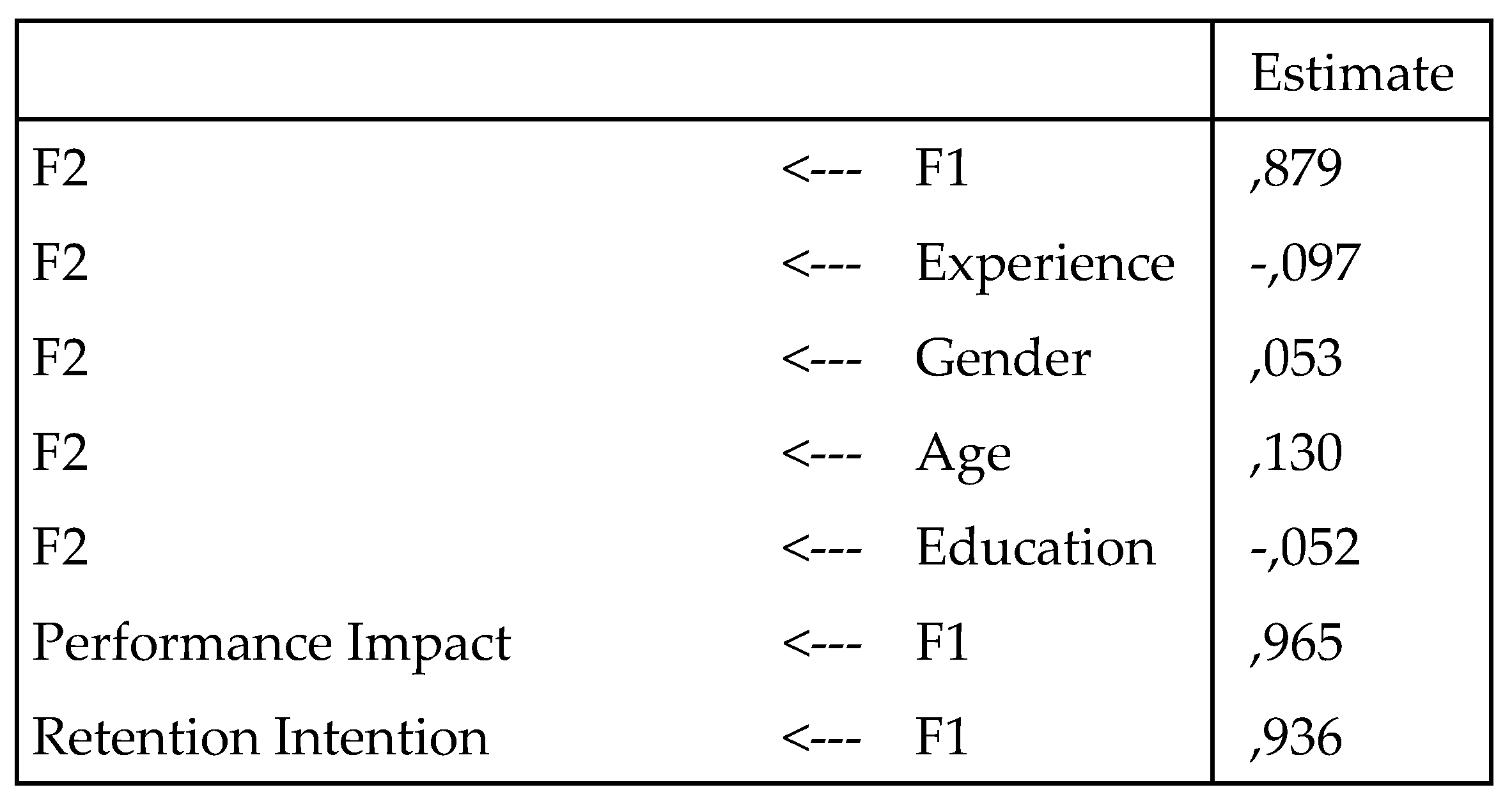

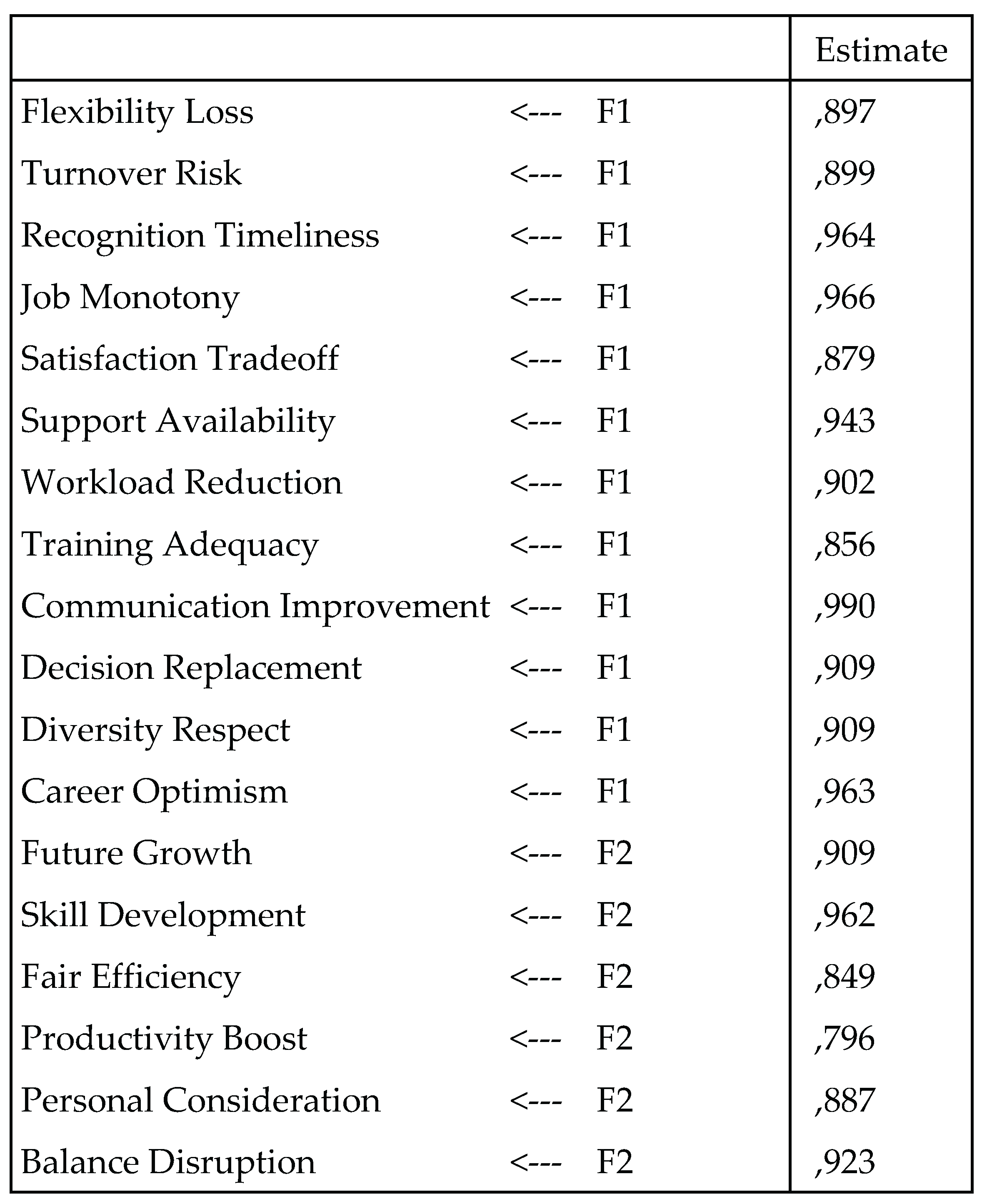

Table 4. Standardized Regression Weights (Group number 1 - Default model).

The standardized regression weights in Table 4 provide strong empirical evidence supporting the internal validity of the two latent constructs in the model: F1 – AI Perceptions and F2 – Balanced Management. The data also offer insights into the influence of key demographic variables on perceptions of AI-driven management systems. The structural path from F1 (AI Perceptions) to F2 (Balanced Management) shows a very high and statistically significant standardized regression weight (β = 0.879). This suggests a robust and positive relationship between the two constructs. In practical terms, employees who have favorable perceptions of AI, seeing it as supportive, fair, and useful, are also more likely to perceive AI-based management systems as balanced, inclusive, and development-oriented. This central pathway reinforces the idea that the way AI is perceived on an emotional and operational level directly influences employees’ trust in its fairness and its ability to integrate human values. In other words, the more employees experience AI as enhancing their job performance, recognition, communication, and development, the more they believe AI is being applied in a fair and personally considerate manner.

Demographic variables show weak to negligible effects on the Balanced Management construct (F2): Age shows a minor positive effect (β = 0.130), suggesting that older employees may slightly favor AI when it is perceived as balancing efficiency with fairness; Gender (β = 0.053) and Education (β = -0.052) show minimal influence, indicating perceptions of balanced AI management are largely consistent across these groups; Experience has a small negative relationship (β = -0.097), possibly reflecting a subtle resistance or skepticism among more experienced workers toward AI's ability to fairly manage human-centric tasks. Although these effects are weak, they point to the possibility that younger or less experienced employees may be more open to AI-based systems, especially if they perceive them as aligned with contemporary organizational practices.

All observed variables related to the AI Perceptions construct demonstrate very strong loadings, confirming that they are reliable indicators of the underlying latent factor. Notably: Communication Improvement (β = 0.990) and Job Monotony (β = 0.966) are among the highest-loading items, highlighting the dual nature of employee experiences—improved communication on one hand, but a potential loss of engagement and creativity on the other. Performance Impact (β = 0.965), Recognition Timeliness (β = 0.964), and Career Optimism (β = 0.963) reflect the perception that AI enhances both short-term task performance and long-term professional development. Items reflecting potential downsides, such as Flexibility Loss (β = 0.897), Turnover Risk (β = 0.899), and Decision Replacement (β = 0.909), also load highly on the same construct, confirming that employees interpret AI as a multidimensional force—capable of supporting performance but potentially undermining autonomy and decision-making. This coexistence of positive and negative associations within the same factor highlights the complexity of how employees engage with algorithmic systems, reinforcing the need for nuanced management strategies.

The indicators for the Balanced Management construct also show high reliability and conceptual coherence: Skill Development (β = 0.962) and Balance Disruption (β = 0.923) load particularly strongly, suggesting that employees judge the quality of AI management based on its ability to foster learning while respecting work-life balance. Future Growth (β = 0.909) and Personal Consideration (β = 0.887) indicate the importance of both strategic foresight and individualized attention in shaping trust toward AI systems. Fair Efficiency (β = 0.849) and Productivity Boost (β = 0.796) reflect employees’ attention to both equity and output—a balance they seek in AI-driven environments. These results confirm that F2 captures a value-oriented dimension of AI management, centered on fairness, adaptability, and growth potential. Together, the standardized regression weights from this model provide compelling evidence that AI management systems are perceived through a lens that balances operational efficiency with human values. The strength of the F1→F2 path suggests that positive perceptions of AI are a necessary foundation for trust in its balanced application. High loadings across both constructs affirm that employees engage deeply with AI on multiple fronts—technological, emotional, developmental, and ethical. These insights underscore the importance for hospitality managers and system designers to go beyond efficiency and automation, focusing instead on transparent, personalized, and developmental uses of AI, particularly in employee-facing roles.
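The six F2 loadings reported above can be condensed into two common convergent-validity summaries. The following is a minimal sketch, not the authors' procedure: it assumes the reported values are standardized loadings with uncorrelated measurement errors, and applies the usual Fornell-Larcker formulas for average variance extracted (AVE) and composite reliability (CR). The function and variable names are hypothetical.

```python
from typing import Dict

# Standardized loadings for F2 (Balanced Management) as reported in the text.
F2_LOADINGS: Dict[str, float] = {
    "Skill Development": 0.962,
    "Balance Disruption": 0.923,
    "Future Growth": 0.909,
    "Personal Consideration": 0.887,
    "Fair Efficiency": 0.849,
    "Productivity Boost": 0.796,
}


def average_variance_extracted(loadings: Dict[str, float]) -> float:
    """AVE: mean of the squared standardized loadings."""
    values = list(loadings.values())
    return sum(l ** 2 for l in values) / len(values)


def composite_reliability(loadings: Dict[str, float]) -> float:
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Each item's error variance is taken as 1 - loading^2, which holds
    only for standardized loadings with uncorrelated errors (assumption).
    """
    values = list(loadings.values())
    squared_sum = sum(values) ** 2
    error_variance = sum(1 - l ** 2 for l in values)
    return squared_sum / (squared_sum + error_variance)


ave = average_variance_extracted(F2_LOADINGS)
cr = composite_reliability(F2_LOADINGS)
print(f"AVE = {ave:.3f}, CR = {cr:.3f}")
```

With these six loadings the sketch gives an AVE of roughly 0.79 and a CR of roughly 0.96, both above the commonly cited rules of thumb of 0.50 and 0.70. The figures are illustrative only, since they depend on the stated assumptions.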

Sub-Hypothesis 1 (H1a) is reflected in Factor 1: AI Perceptions, which captures employees’ recognition of the positive impacts of AI-driven management systems—specifically improvements in job performance, workload reduction, and enhanced communication. The findings support this hypothesis, demonstrating that employees who perceive these benefits tend to exhibit greater career optimism and stronger intentions to remain with their organization. Sub-Hypothesis 2 (H1b) corresponds to Factor 2: Balanced Management, encompassing employees’ perceptions of how algorithmic management balances operational efficiency with personal consideration. The results confirm that when employees feel their individual circumstances are acknowledged and supported—particularly regarding work-life balance and skill development—they report lower perceptions of flexibility loss and higher satisfaction with growth opportunities. Together, these sub-hypotheses emphasize the dual nature of employee experiences with algorithmic management, underscoring the importance of both technological effectiveness and a human-centered approach to AI implementation. The strong structural link between Balanced Management and AI Perceptions further reinforces that trust in AI depends on how well it aligns with human-centered values. The findings reaffirm that algorithmic management is not a neutral intervention. Its adoption reshapes power dynamics, decision-making processes, and the lived experience of work. The legitimacy and long-term sustainability of such systems hinge on how well they balance operational efficiency with principles of fairness, dignity, inclusiveness, and personal development. In the hospitality sector — where service quality, emotional labor, and human connection are central — the integration of AI must be approached with particular sensitivity. 
Only when digital innovations are embedded within a broader social contract that values employees as partners in technological change can AI fulfill its promise to improve both business outcomes and worker well-being.

5. Discussion

The results of this study reveal a complex but ultimately optimistic picture of how employees perceive the role of AI in hospitality management. The findings demonstrate that employee attitudes toward algorithmic management are neither uniformly positive nor negative, but rather shaped by nuanced interpretations of how these technologies are introduced, experienced, and embedded within broader workplace practices. On one hand, when AI tools are well-integrated, transparent, and support employee development, they are associated with numerous benefits — including improved performance, timely recognition, reduced workloads, enhanced communication, and increased optimism about career progression. These positive associations reflect a growing recognition among staff that digital systems, when implemented thoughtfully, can improve clarity, predictability, and operational efficiency in a demanding service environment. However, this potential is counterbalanced by significant concerns. When algorithmic systems are perceived as dehumanizing, inflexible, or indifferent to personal needs, negative reactions become pronounced. Employees report reduced flexibility, heightened monotony, and feelings of alienation, particularly when AI tools override human judgment or fail to account for individual circumstances and diversity. This perception of impersonal automation not only undermines morale but may also increase turnover intention, as employees struggle to find meaning and agency in their roles. These contrasting perceptions underscore a critical insight: the success of AI in hospitality management is not solely a technical matter but is fundamentally relational and ethical. Employees do not respond to the technology itself in isolation; they respond to the management philosophy and practices surrounding its use. Whether AI systems are experienced as empowering or constraining depends largely on how they are framed, communicated, and supported within the organization.

This has direct implications for human resource (HR) and operational management. To maximize the benefits and minimize the risks of algorithmic management, hospitality organizations must adopt a hybrid approach that combines technological efficiency with human-centered values. Specifically, three key strategies are essential:

1. Transparent Communication: Employees need clear and honest explanations about how AI systems make decisions, especially regarding scheduling, performance evaluation, and shift allocation. This transparency helps build trust and ensures that staff do not feel manipulated or unfairly treated by opaque algorithms.
2. Human Oversight and Context Sensitivity: AI tools should complement, not replace, managerial discretion. Supervisors must be empowered to override algorithmic decisions when contextual, emotional, or ethical considerations demand it. This ensures that individual needs, life circumstances, and interpersonal dynamics are respected.
3. Continuous Training and Development: Staff must be equipped with the skills to use, interpret, and adapt to AI systems. Ongoing training not only reduces resistance but also positions AI as a career-enhancing tool rather than a threat. When employees feel competent and supported, their engagement with digital tools becomes more constructive.

5.1. Positive Perception of AI as Supportive and Developmental

The analysis of employee perceptions reveals a strong positive dimension to the integration of AI-based management systems within hospitality settings. Several indicators highlight how algorithmic tools are not only accepted but often valued by employees when implemented in ways that enhance job performance, professional development, and workplace communication. One of the most prominent benefits reported is the improved efficiency and accuracy of job performance. Employees perceive that AI-supported systems contribute meaningfully to streamlining daily operations. By automating routine administrative tasks, such as shift allocation, performance tracking, and data entry, AI allows staff to dedicate more time to core service activities that require human interaction and emotional intelligence. This shift is seen as a way to enhance both productivity and the perceived value of employees’ contributions. The indicator related to Performance Impact confirms that algorithmic tools are recognized not as replacements for human labor, but as enhancers of job effectiveness. Linked to this is the perception that AI contributes to greater job security and organizational commitment. The Retention Intention item illustrates that when AI systems are perceived as fair and consistent, employees feel more inclined to remain in their positions. This can be attributed to reduced ambiguity in job expectations, improved clarity of performance metrics, and the presence of structured, transparent systems that support equitable decision-making. AI-driven environments, when properly managed, can foster a sense of organizational stability and trust in leadership processes.

The model highlights that AI systems are perceived to improve timeliness and consistency in employee recognition, as reflected in the indicators Recognition Timeliness and Support Availability. Unlike human managers who may vary in responsiveness, AI tools can immediately register, log, and communicate positive employee behaviors or outcomes. For example, performance dashboards, automated feedback notifications, or digital acknowledgments can ensure that employees feel seen and appreciated in real-time. In addition, AI systems can help supervisors identify performance dips or behavioral cues early, allowing them to provide support or guidance before problems escalate. This proactive dimension of AI management contributes to a workplace culture where recognition and assistance are timely and data-informed. Another important finding concerns the perception of workload reduction. Many respondents report that AI systems help to reduce the mental and physical strain associated with operational complexity. Workload Reduction is not merely a matter of removing tasks but optimizing how and when those tasks are assigned. This is especially relevant in hospitality contexts, where irregular hours and high service demands can result in burnout. Algorithmic tools that dynamically adjust workloads, flag staffing gaps, or assist in resource planning are seen as relieving pressure, thus contributing to better job satisfaction. Crucially, this effect is amplified when paired with adequate training. The Training Adequacy indicator underscores the importance of equipping staff with the digital skills needed to interact confidently with AI systems. Without proper training, AI may be perceived as intrusive or disempowering. However, when training is sufficient, AI is more likely to be viewed as a supportive ally in the workplace rather than a controlling force.

The integration of AI is viewed as enhancing communication and professional growth. Respondents highlight that digital tools improve the clarity and flow of communication between staff and supervisors. Features such as automated scheduling updates, real-time messaging, or centralized information portals reduce misunderstandings and foster a sense of organizational cohesion. The indicator Communication Improvement reflects this perceived enhancement in transparency and interaction. At the same time, employees express Career Optimism in relation to AI deployment, seeing it as a sign that their workplace is evolving and investing in future-forward practices. The presence of algorithmic systems is interpreted not as a threat, but as a signal of modernization that could unlock new pathways for learning, advancement, and alignment with broader industry trends. Together, these findings suggest that when AI management is implemented with attention to usability, fairness, training, and communication, it can have a profoundly supportive and developmental impact on employees. Rather than eroding human value, well-designed AI systems can affirm it — by reducing operational burdens, increasing recognition, enabling clearer communication, and fostering a work environment conducive to growth. These insights provide strong justification for hospitality organizations to view AI not only as a tool for cost reduction or process optimization but also as a mechanism for enhancing employee experience and engagement.

5.2. Negative Perception of AI as Restrictive and Impersonal

While the integration of AI into hospitality management brings several perceived benefits, the findings also highlight a substantial set of concerns that frame algorithmic systems as restrictive, impersonal, and occasionally counterproductive from the employee’s perspective. These negative perception indicators underscore the risks associated with poorly contextualized or rigid AI applications, particularly when they compromise human autonomy, emotional satisfaction, or equitable treatment. One of the most frequently cited drawbacks relates to reduced flexibility and increased turnover risk. The indicators Flexibility Loss and Turnover Risk reflect the view that AI-driven scheduling systems often prioritize operational optimization over individual preferences or personal life circumstances. Employees report diminished autonomy in negotiating their schedules, difficulty making shift adjustments, and a general lack of agency in navigating their work-life balance. This rigidity not only undermines morale but also appears to increase dissatisfaction and burnout, contributing to a higher intention to leave the organization. These outcomes are particularly problematic in service industries like hospitality, where unpredictable demand patterns already make scheduling a stress point. When employees perceive that technology replaces dialogue and negotiation with impersonal allocation, their emotional connection to the job weakens.

In addition to reduced autonomy, many respondents express concern about the monotonization of work. The indicators Job Monotony and Satisfaction Tradeoff suggest that algorithmic management, by focusing heavily on efficiency and output metrics, may lead to oversimplified and repetitive job roles. Employees report that their work becomes increasingly task-oriented and stripped of relational or creative elements. This loss of variety and meaningful interaction erodes the intrinsic rewards of hospitality work, such as providing personalized service or exercising interpersonal skills. Although operational gains may be realized, they come at the cost of emotional engagement, a core ingredient of service quality and long-term employee retention. The perceived tradeoff between efficiency and fulfillment points to a fundamental tension in the deployment of AI in emotionally demanding roles — one where technological precision must be weighed against human motivation and purpose. Perhaps more critically, employees raise ethical and procedural concerns about the replacement of human decision-making and the lack of inclusive sensitivity in algorithmic logic. The indicators Decision Replacement and Diversity Respect bring attention to the limitations of AI in handling individual differences, complex life contexts, and cultural nuances. Employees feel that when decisions related to scheduling, evaluations, or conflict resolution are delegated entirely to automated systems, they risk becoming detached from the lived realities of the workforce. AI systems, especially those designed using generic or historically biased data, may fail to accommodate diverse needs — whether based on gender, age, family obligations, health conditions, or cultural practices. This perceived lack of adaptability contributes to a sense of injustice and marginalization, particularly for those who fall outside the norm assumed by the algorithm’s training data.

These concerns are not simply about fairness in outcome but also about procedural justice. Employees want to be seen, heard, and treated as individuals — not merely as data points within a system. When AI is perceived as an opaque authority that cannot explain its logic or flex its rules, it can undermine trust in organizational decision-making and damage the psychological contract between employer and employee. Taken together, these negative perceptions suggest that algorithmic systems must be implemented with caution, transparency, and human oversight. The absence of emotional intelligence, nuance, and contextual judgment in AI tools can lead to perceptions of coldness, alienation, and systemic unfairness — even if the tools are technically effective. Importantly, these issues are not inherent to AI itself, but rather reflect design choices and managerial practices that fail to anticipate the social dynamics of digital transformation. To mitigate these risks, organizations should prioritize ethical algorithm design, employee involvement in digital transitions, and mechanisms for appeal or override when employees feel that a system has failed to account for their circumstances. AI must be positioned not as a replacement for empathy or discretion, but as a tool to support fair and flexible human-centered management.

These perception indicators collectively reflect a dual reality: while many employees see AI as a facilitator of performance, communication, and growth, others fear a loss of autonomy, human oversight, and emotional connection to their work. The results suggest that AI systems must be carefully calibrated — not just for operational efficiency but also for personalization, empathy, and adaptability. If AI tools are perceived as neutral assistants that empower employees, they are welcomed. But when experienced as rigid systems that marginalize human context, resistance and dissatisfaction rise. Importantly, these findings underscore the need for hybrid management models, where technology enhances — but does not replace — human judgment and care.

6. Conclusions

This study set out to examine hotel employees’ perceptions of AI-driven human resource management systems within the context of the Accor Group’s operations in three major European cities: Paris, Berlin, and Amsterdam. It sought to understand how these perceptions shape employee attitudes, job satisfaction, and work-life balance, in a period of accelerating technological transformation across the hospitality industry. Through robust quantitative analysis—including factor analysis and structural equation modeling—two core latent constructs emerged as central to employee experiences: AI Perceptions and Balanced Management. The findings offer a multidimensional understanding of how AI technologies are received by frontline hospitality workers. On the one hand, employees recognize a broad range of potential benefits when AI systems are implemented with care and transparency. AI was often associated with increased efficiency, improved scheduling, consistent performance tracking, faster internal communication, and greater access to development opportunities. In these cases, algorithmic management is not viewed as a threat, but as a facilitator of smoother workflows and more equitable career progression. Employees who perceived these systems as fair, transparent, and supportive of their growth tended to report higher levels of satisfaction, trust in management, and overall engagement in their roles.

On the other hand, the research also uncovered significant concerns. Many employees expressed anxiety over the depersonalization of management, the monotony introduced by systematized tasks, and the erosion of human discretion in decision-making. Some viewed AI systems as rigid and inflexible, unable to capture the nuances of individual performance or the complexities of day-to-day hotel operations. Others noted that algorithmic management tools—when poorly communicated or insufficiently contextualized—could foster feelings of alienation and a lack of autonomy. In these contexts, AI was not perceived as a neutral tool, but as a force that could undermine morale, strain interpersonal dynamics, and contribute to burnout or attrition.

These findings confirm the study’s central hypothesis (H): Employees’ perceptions of algorithmic (AI-driven) management systems significantly influence their overall attitudes and work-life balance. It is not the presence of AI itself, but the way it is introduced, governed, and experienced by employees that determines its impact. Where organizations invest in ethical design, employee involvement, and mechanisms for feedback and accountability, AI can serve as a positive force. Conversely, when AI is deployed in a top-down, opaque, or overly mechanistic manner, it risks alienating the very workforce it is intended to support.

The study contributes to a growing body of literature on the intersection of technology and human resource management in service industries. It advances our understanding by foregrounding the voices of employees—those most directly affected by digital transformation—within the specific, high-pressure context of hospitality. In an industry where emotional labor, adaptability, and human interaction remain central, the challenge is not simply to automate, but to integrate. The most successful AI systems will be those that amplify human capabilities rather than attempt to replace them, embedding discretion, empathy, and context-awareness into automated decision-making processes.

From a managerial perspective, the implications are clear. First, AI should be implemented alongside comprehensive training programs that ensure employees understand not only how systems function, but why they are being used. Second, there must be channels for ongoing dialogue and feedback, so that employees feel empowered to voice concerns or propose adjustments. Third, organizations must maintain a strong emphasis on fairness, inclusion, and psychological safety in digital environments, reinforcing that technology is a tool for support—not surveillance or control. Moreover, ethical considerations should be central to every stage of AI system design and deployment. This includes transparency in algorithmic decision-making, the ability to audit system outputs, and the retention of human judgment in high-stakes decisions such as promotions, disciplinary actions, or workload distribution. AI should support human dignity, not diminish it.

Looking forward, future research should explore these dynamics in a broader range of organizational and cultural contexts. Comparative studies across different hotel chains, job roles, or national settings could provide deeper insight into how cultural attitudes toward technology shape acceptance and resistance. Longitudinal studies may also help track how perceptions evolve over time, especially as AI systems become more embedded in organizational life. Additionally, qualitative research could offer richer, more textured accounts of employee experiences that complement and extend the quantitative findings presented here. The integration of AI into human resource management is neither inherently beneficial nor detrimental. Its success depends on the choices organizations make—about design, governance, communication, and culture. In hospitality, where human connection remains at the heart of service, AI must be aligned with values of empathy, fairness, and respect. Only by maintaining this balance can organizations ensure that technological innovation enhances, rather than erodes, the human experience of work.

6.1. Practical Implications

For hotels implementing AI-driven management systems, it is crucial to focus not only on technological efficiency but also on maintaining a human-centered approach. Transparent communication about how AI algorithms function—especially in areas such as scheduling, performance evaluations, and shift allocations—can help build trust and reduce employee anxiety about automated decision-making. Human oversight must be preserved by empowering managers to intervene and adjust AI-generated decisions when unique personal circumstances or ethical issues arise, ensuring fairness and empathy in workplace management. Continuous and comprehensive training programs are essential to help employees develop the necessary digital literacy and skills to confidently interact with AI tools. This fosters a sense of empowerment and reduces resistance, transforming AI from a perceived threat into a supportive resource that enhances job performance.

AI systems should be designed and applied with sensitivity to diversity and individual needs, such as accommodating different work-life balance requirements and personal circumstances. This respect for personal consideration can improve employee satisfaction and reduce turnover risk. Additionally, hotels can leverage AI to provide timely, consistent feedback and improve communication channels between staff and supervisors, thereby increasing transparency and engagement. Ultimately, by balancing AI-driven operational efficiency with fairness, personal development opportunities, and ethical management practices, hotels can create a more supportive work environment. This balanced approach enhances employee motivation, loyalty, and overall well-being—factors that are critical to sustaining high service quality and competitive advantage in the hospitality industry.

Author Contributions

Conceptualization, Aleksandra Vujko and Milena Turčinović; methodology, Aleksandra Vujko and Vuk Mirčetić; software, Milena Turčinović; validation, Aleksandra Vujko and Milena Turčinović; formal analysis, Aleksandra Vujko; investigation, Milena Turčinović; resources, Aleksandra Vujko; data curation, Vuk Mirčetić; writing—original draft preparation, Aleksandra Vujko; writing—review and editing, Vuk Mirčetić; visualization, Milena Turčinović; supervision, Aleksandra Vujko. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of Singidunum University (protocol code 169, 01 May 2024) for studies involving humans.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The aggregated data analyzed in this study are available from the corresponding author(s) upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Adeyinka-Ojo, S. (2018). A strategic framework for analysing employability skills deficits in rural hospitality and tourism destinations. Tourism Management Perspectives, 27, 47–54.

- Ahn, M. J., & Chen, Y.-C. (2022). Digital transformation toward AI-augmented public administration: The perception of government employees and the willingness to use AI in government. Government Information Quarterly, 39(2), 101664.

- Alam, S. S., Kokash, H. A., Ahsan, M. N., & Ahmed, S. (2025). Relationship between technology readiness, AI adoption and value creation in hospitality industry: Moderating role of technological turbulence. International Journal of Hospitality Management, 127, 104133.

- Bai, J. Y., Wong, I. A., Huan, T. C. T., Okumus, F., & Leong, A. M. W. (2025). Ethical perceptions of generative AI use and employee work outcomes: Role of moral rumination and AI-supported autonomy. Tourism Management, 111, 105242.

- Bai, S., & Zhang, X. (2025). My coworker is a robot: The impact of collaboration with AI on employees' impression management concerns and organizational citizenship behavior. International Journal of Hospitality Management, 128, 104179.

- Bennett, N., & Martin, C. L. (2025). AI as a talent management tool: An organizational justice perspective. Business Horizons.

- Buhalis, D., & Leung, R. (2018). Smart hospitality—Interconnectivity and interoperability towards an ecosystem. International Journal of Hospitality Management, 71, 41–50.

- Cauchi, N., Macek, K., & Abate, A. (2017). Model-based predictive maintenance in building automation systems with user discomfort. Energy, 138, 306–315.

- Cheng, W., & Hwang, J. (2025). Robots' anthropomorphic designs: Psychological mechanism of consumer acceptance in restaurant contexts of service success, failure, and recovery. International Journal of Hospitality Management, 131, 104322.

- Colquitt, J. A. (2001). On the dimensionality of organizational justice: A construct validation of a measure. Journal of Applied Psychology, 86(3), 386–400.

- Contessi, D., Viverit, L., Pereira, L. N., & Heo, C. Y. (2024). Decoding the future: Proposing an interpretable machine learning model for hotel occupancy forecasting using principal component analysis. International Journal of Hospitality Management, 121, 103802.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

- Deldadehasl, M., Karahroodi, H. H., & Haddadian Nekah, P. (2025). Customer Clustering and Marketing Optimization in Hospitality: A Hybrid Data Mining and Decision-Making Approach from an Emerging Economy. Tourism and Hospitality, 6(2), 80.

- Diwan, S. A. (2025). Optimizing guest experience in smart hospitality: Integrated fuzzy-AHP and machine learning for centralized hotel operations with IoT. Alexandria Engineering Journal, 116, 535–547.

- Hackman, J. R., & Oldham, G. R. (1975). Development of the Job Diagnostic Survey. Journal of Applied Psychology, 60(2), 159–170.

- Higgins, E. T. (1997). Beyond pleasure and pain. American Psychologist, 52(12), 1280–1300.

- Huang, G. I., Wong, I. A., Zhang, C. J., & Liang, Q. (2025). Generative AI inspiration and hotel recommendation acceptance: Does anxiety over lack of transparency matter? International Journal of Hospitality Management, 126, 104112.

- Huang, Y., & Gursoy, D. (2024). How does AI technology integration affect employees’ proactive service behaviors? A transactional theory of stress perspective. Journal of Retailing and Consumer Services, 77, 103700.

- Huo, W., Xie, J., Yan, J., Long, T., & Liang, B. (2025). Approach or avoidance? Relationship between perceived AI explainability and employee job crafting. Acta Psychologica, 257, 105097.

- Ivanov, S. H., & Webster, C. (2017). Adoption of robots, artificial intelligence and service automation by travel, tourism and hospitality companies – A cost-benefit analysis. Paper presented at the International Scientific Conference "Contemporary Tourism – Traditions and Innovations", Sofia University, October 19–21, 2017. https://ssrn.com/abstract=3007577.

- Ivanov, S. H., Seyitoğlu, F., & Markova, M. (2020). Hotel managers’ perceptions towards the use of robots: A mixed-methods approach. Information Technology & Tourism, 22(4), 505–535. https://ssrn.com/abstract=3824271.

- Jerez-Jerez, M. J. (2025). A study of employee attitudes towards AI, its effect on sustainable development goals and non-financial performance in independent hotels. International Journal of Hospitality Management, 124, 103987. [CrossRef]

- Jia, S. (J.), Chi, O. H., & Chi, C. G. (2025). Unpacking the impact of AI vs. human-generated review summary on hotel booking intentions. International Journal of Hospitality Management, 126, 104030. [CrossRef]

- Jianu, B., Ashton, M., & Lugosi, P. (2025). Integrating algorithmic management in hotels: Emerging challenges and opportunities for frontline managers. International Journal of Hospitality Management, 129, 104168. [CrossRef]

- Kellogg, K. C., Valentine, M. A., & Christin, A. (2020). Algorithms at work: The new contested terrain of control. Academy of Management Annals, 14(1), 366–410. [CrossRef]

- Kirshner, S. N., & Lawson, J. (2025). Preventing promotion-focused goals: The impact of regulatory focus on responsible AI. Computers in Human Behavior: Artificial Humans, 3, 100112. [CrossRef]

- Leventhal, G. S. (1980). What should be done with equity theory? New approaches to the study of fairness in social relationships. In K. J. Gergen, M. S. Greenberg, & R. H. Willis (Eds.), Social exchange: Advances in theory and research (pp. 27–55). Plenum Press.

- Li, C., Fang, Y., & Derakhshan, A. (2025). Unlocking the interplay among Chinese EFL learners’ L2 motivation, regulatory focus, and language learning achievement: From a regulatory focus theory perspective. Learning and Motivation, 91, 102141. [CrossRef]

- Li, H., Xi, J., Hsu, C. H. C., Yu, B. X. B., & Zheng, X. (K.). (2025). Generative artificial intelligence in tourism management: An integrative review and roadmap for future research. Tourism Management, 110, 105179. [CrossRef]

- Luo, X., & Yi, Z. (2025). Efficiency management of engineering projects based on particle swarm multi-objective optimization algorithm. Systems and Soft Computing, 7, 200320. [CrossRef]

- Madanchian, M., & Taherdoost, H. (2025). Criteria for AI adoption in HR: Efficiency vs. ethics. Procedia Computer Science, 258, 233–241. [CrossRef]

- Madanchian, M., Taherdoost, H., & Mohamed, N. (2023). AI-based human resource management tools and techniques: A systematic literature review. Procedia Computer Science, 229, 367–377. [CrossRef]

- Maiti, M., Kayal, P., & Vujko, A. (2025). A study on ethical implications of artificial intelligence adoption in business: Challenges and best practices. Future Business Journal, 11, 34. [CrossRef]

- Mandić, D., Knežević, M., Borovčanin, D., & Vujko, A. (2024). Robotisation and service automation in the tourism and hospitality sector: A meta-study (1993–2024). Geojournal of Tourism and Geosites, 1271–1280. [CrossRef]

- Mirčetić, V., & Mihić, M. (2022). Smart Tourism as a Strategic Response to Challenges of Tourism in the Post-COVID. In Sustainable Business Management and Digital Transformation: Challenges and Opportunities in the Post-COVID Era (pp. 445–463). Springer. [CrossRef]

- Mojoodi, A., Kumail, T., Ahmadzadeh, S. M., & Jalalian, S. (2025). Perceptual mapping and key factors influencing hotel choices: A web mining approach to Booking.com reviews. International Journal of Hospitality Management, 131, 104308. [CrossRef]

- Netemeyer, R. G., Boles, J. S., & McMurrian, R. (1996). Development and Validation of Work-Family Conflict and Family-Work Conflict Scales. Journal of Applied Psychology, 81, 400–410. [CrossRef]

- Rojas, C., & Jatowt, A. (2025). Transformer-based probabilistic forecasting of daily hotel demand using web search behavior. Knowledge-Based Systems, 310, 112966. [CrossRef]

- Schwartz, Z., Webb, T. D., Altin, M., & Riasi, A. (2025). Overbooking and performance in hotel revenue management. International Journal of Hospitality Management, 129, 104192. [CrossRef]

- Shin, H. H., Choi, S., & Kim, H. (2025). Artificial intelligence (AI) in human resource management (HRM): A driver of organizational dehumanization and negative employee reactions. International Journal of Hospitality Management, 131, 104230. [CrossRef]

- Spector, P. E. (1997). Job satisfaction: Application, assessment, causes, and consequences. Sage Publications, Inc.

- Sun, H., Kim, M., Kim, S., & Choi, L. (2025). A methodological exploration of generative artificial intelligence (AI) for efficient qualitative analysis on hotel guests’ delightful experiences. International Journal of Hospitality Management, 124, 103974. [CrossRef]

- Tan, L., & Li, J. (2025). Working with robots makes service employees counterproductive? The role of moral disengagement and task interdependence. Tourism Management, 111, 105233. [CrossRef]

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478. [CrossRef]

- Vujko, A., Cvijanović, D., El Bilali, H., & Berjan, S. (2025a). The Appeal of Rural Hospitality in Serbia and Italy: Understanding Tourist Motivations and Key Indicators of Success in Sustainable Rural Tourism. Tourism and Hospitality, 6(2), 107. [CrossRef]

- Vujko, A., Knežević, M., & Arsić, M. (2025). The Future Is in Sustainable Urban Tourism: Technological Innovations, Emerging Mobility Systems and Their Role in Shaping Smart Cities. Urban Science, 9(5), 169. [CrossRef]

- Webster, C., & Ivanov, S. H. (2020). Robots in travel, tourism and hospitality: Key findings from a global study. Varna: Zangador. https://ssrn.com/abstract=3542208.

- Wu, L., Wang, Z., Liao, Z., Xiao, D., Han, P., Li, W., & Chen, Q. (2024). Multi-day tourism recommendations for urban tourists considering hotel selection: A heuristic optimization approach. Omega, 126, 103048. [CrossRef]

- Xu, J., Zhang, W., Li, H., Zheng, X. (K.), & Zhang, J. (2024). User-generated photos in hotel demand forecasting. Annals of Tourism Research, 108, 103820. [CrossRef]

- Xu, T., Zheng, Y.-H., Zhang, J., & Wang, Z. (2025). “They” threaten my work: How AI service robots negatively impact frontline hotel employees through organizational dehumanization. International Journal of Hospitality Management, 128, 104162. [CrossRef]

- Yadav, A., & Dhar, R. L. (2021). Linking frontline hotel employees’ job crafting to service recovery performance: The roles of harmonious passion, promotion focus, hotel work experience, and gender. Journal of Hospitality and Tourism Management, 47, 485–495. [CrossRef]

- Yang, Z. (2025). Application of evolutionary deep learning algorithm in construction engineering management system. Systems and Soft Computing, 7, 200317. [CrossRef]

- Zhang, H., Xiang, Z., & Zach, F. J. (2025). Generative AI vs. humans in online hotel review management: A Task-Technology Fit perspective. Tourism Management, 110, 105187. [CrossRef]

- Zhang, Y., Wang, J., Zhang, J., & Wang, Y. (2025). To be right on the button: How and when hotel frontline service employees’ AI awareness influences deviant behavior. International Journal of Hospitality Management, 126, 104090. [CrossRef]

- Zhou, S., Yi, N., Rasiah, R., Zhao, H., & Mo, Z. (2024). An empirical study on the dark side of service employees’ AI awareness: Behavioral responses, emotional mechanisms, and mitigating factors. Journal of Retailing and Consumer Services, 79, 103869. [CrossRef]

- Zhu, D. (J.), & Chen, M.-H. (2025). Modeling AI’s impact on hospitality firm profit: Demand and productivity effect. International Journal of Hospitality Management, 131, 104256. [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).