1. Introduction

In educational environments, analyzing and predicting interactions between students and questions is essential for providing personalized learning experiences. With the proliferation of digital educational platforms and the accumulation of large-scale educational data, extracting meaningful insights from such data has become increasingly critical. In this context, Knowledge Tracing (KT) has established itself as a core methodology for modeling student-question interactions to predict learning performance. It serves as the foundation for intelligent tutoring systems to infer conceptual understanding and skill acquisition levels, enabling customized curricula delivery [

2].

The student responses observed in educational interactions represent more than superficial phenomena—they result from interactions between the ’latent traits’ of both students and questions. These latent traits refer to internal state values that, while not directly observable, significantly influence entities’ observable behaviors. For students, latent traits can include knowledge state, conceptual understanding, problem-solving ability, learning style, and cognitive characteristics. For questions, latent traits can include difficulty, complexity, cognitive demand level, and required prerequisite knowledge.

However, existing KT approaches have limitations in comprehensively addressing the latent traits of both students and questions. To overcome these limitations, this study applies the Cyclic Dual Latent Discovery (CDLD) methodology [

7] to educational data. CDLD is a methodology that progressively discovers the comprehensive latent traits of users and items through cyclic learning between dual deep learning models. This is based on the premise that all interactions are manifestations of the underlying latent traits of the respective entities.

This study aimed to validate CDLD’s applicability to the educational domain. To achieve this, we applied the CDLD methodology to the educational dataset EdNet[

10] to discover the latent traits of students and questions, and use the discovered latent trait values to predict the interaction results between students and questions.

2. Related Work

Traditional KT approaches are limited by their simplistic modeling techniques and their inability to effectively discover complex latent traits. Bayesian Knowledge Tracing (BKT) [

8] represents students’ knowledge states only as binary variables (’mastered’ or ’not mastered’),failing to capture comprehensive student latent traits beyond binary mastery states. Performance Factor Analysis (PFA) [

9], while improving predictive accuracy, primarily models student-question interactions through linear combinations of predefined parameters, thereby providing limited capacity to capture complex nonlinear relationships between comprehensive latent traits.

Deep learning-based approaches have demonstrated systematic progress in modeling educational interaction patterns, as evidenced by recent comprehensive analyses [

1]. Early architectures like Deep Knowledge Tracing (DKT) [

3] established the viability of recurrent neural networks for temporal sequence modeling. Subsequent developments introduced specialized components—Dynamic Key-Value Memory Networks (DKVMN) [

4] incorporated memory mechanisms for concept relationship tracking, while Self-Attentive Knowledge Tracing (SAKT) [

5] utilized transformer architectures to capture long-range dependencies. The SAINT [

6] and SAINT+ [

11] frameworks extended these foundations through encoder-decoder configurations. Contemporary extensions address specific modeling challenges: DKVMN&MRI [

12] integrates exercise-knowledge relationships with forgetting curve dynamics, DyGKT [

13] employs continuous-time dynamic graphs to handle infinitely growing learning sequences, and AAKT [

14] reformulates knowledge tracing as a generative process using question-response alternate sequences. Despite architectural diversity, existing approaches model only partial aspects of student or question latent traits—such as knowledge states for students or difficulty levels for questions—rather than discovering comprehensive latent representations. They remain constrained to predefined latent traits instead of uncovering the comprehensive latent traits for each entity.

The Cyclic Dual Latent Discovery (CDLD) approach addresses this limitation by discovering comprehensive latent trait vectors for both students and questions through cyclic optimization. Unlike methods that predefine which traits to model, CDLD enables latent traits to emerge from interaction data, capturing the diverse factors that influence educational outcomes and providing a foundation for applications beyond performance prediction.

3. Proposed Method: CDLD Application for Latent Trait Discovery

This section details the CDLD methodology applied for predicting interactions in educational data. The user and item specified in the CDLD methodology correspond to student and question respectively in this study. Accordingly, unless otherwise noted, user refers to student and item refers to question.

3.1. Entity-Processor Perspective and Latent Trait Modeling

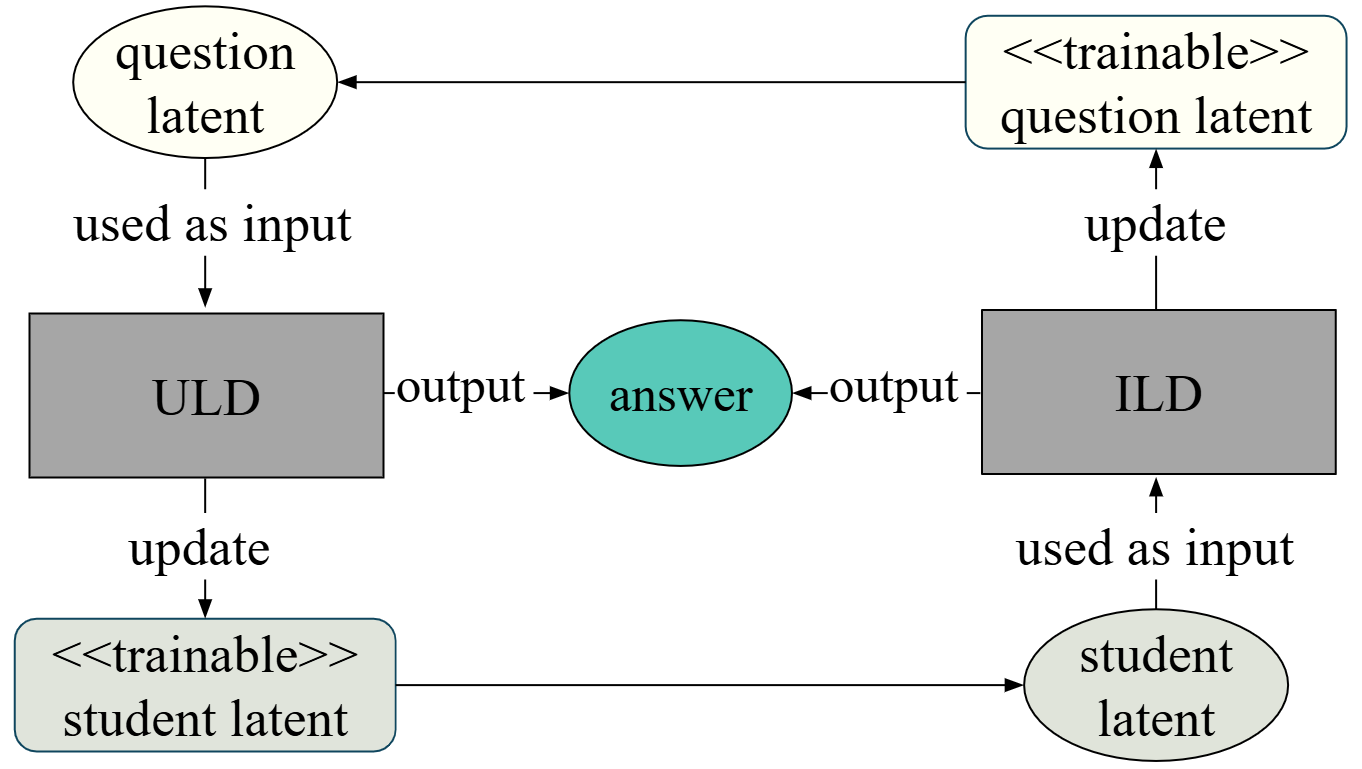

In educational interactions, students and questions interact as mutual processors rather than independent entities. A student’s latent academic ability and a problem’s latent difficulty influence each other, and the interaction between the two determines the resulting answer. From this perspective, a question can be regarded as a processor that receives a student entity as input and produces an answer that reflects the student’s academic ability. Similarly, a student can be viewed as a processor that receives a question entity and generates a answer that reflects the perceived difficulty of the question. This perspective, as illustrated in

Figure 1, suggests that the answer is the outcome of one entity processing the latent traits of the other.

Mathematically, the interaction result

between student

S and question

Q can be expressed as:

where

g denotes the interaction function, and

represents the noise term arising from inevitable variability and uncertainty in real-world educational settings. More specifically, this can be expressed as:

Existing educational data analysis approaches typically rely only on observable student features and question features to predict interaction results and either ignore or oversimplify student latent traits and question latent traits . In contrast, CDLD aims to directly discover the latent traits and from the interaction results .

Although not directly observable, the latent traits of users are underlying factors that significantly determine the outcomes of interactions. It’s very important for KT task. In CDLD, these latent traits are represented as a d-dimensional vector and , which is discovered through User Latent Discoverer (ULD) and Item Latent Discoverer (ILD) of CDLD.

3.2. Cyclic Latent Trait Discovery Framework

ULD and ILD are deep neural networks (DNNs) that takes

,

,

, and

as input and output answer. ULD and ILD can be represented by Equations (

3) and (

4), respectively.

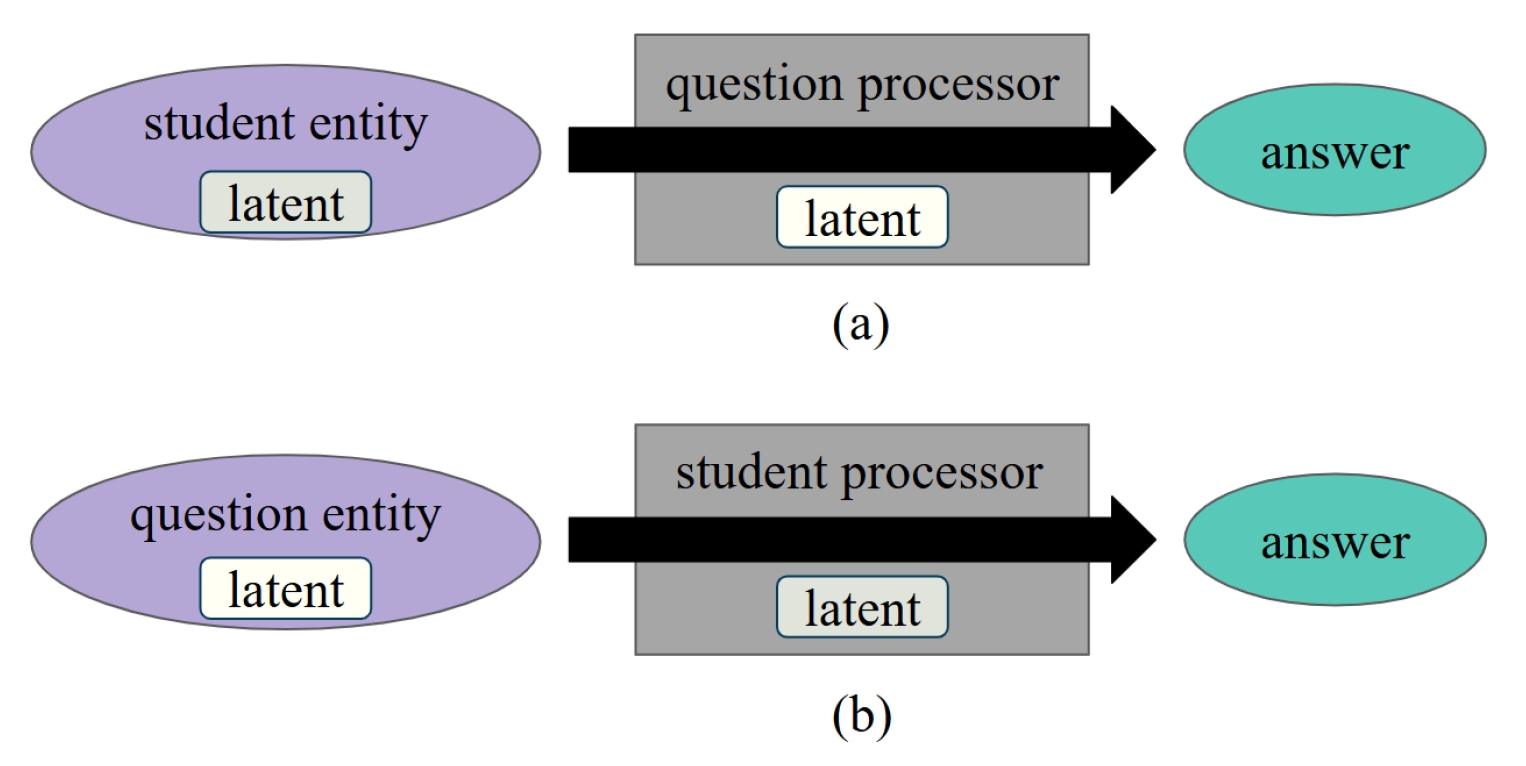

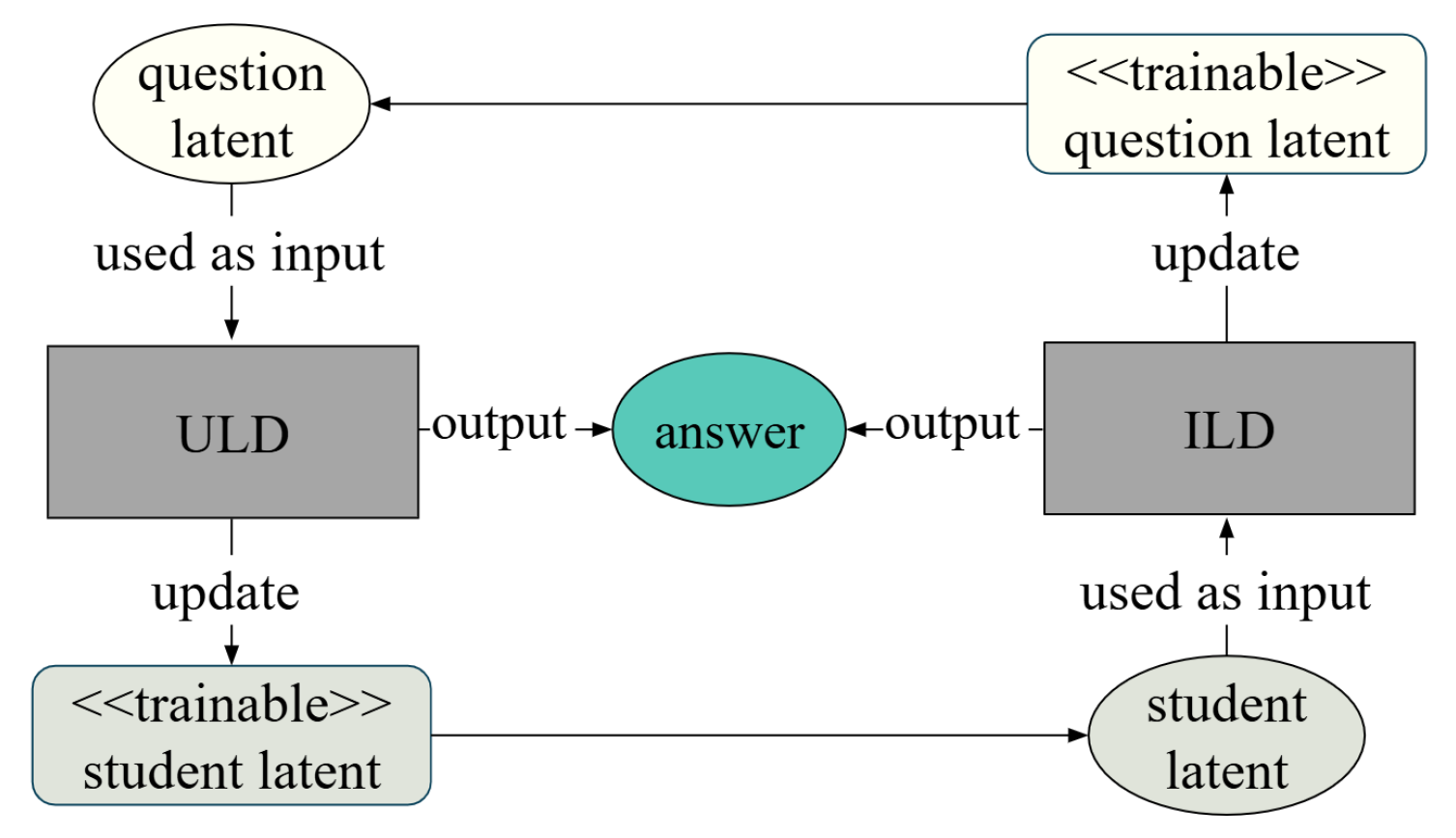

In contrast to conventional DNNs, where only the network parameters are updated during training, (

3) illustrates that CDLD updates both the parameters of the ULD network and the user’s latent traits. Similarly, in (

4), the item’s latent traits are updated along with the ILD network. In both equations, the asterisk (*) denotes components—whether parameters or latent inputs—that are updated during training. First, ULD is trained while updating

, which is then used as a fixed input for training ILD. After ILD training is completed and

is updated, it is used as a fixed input for training ULD. By cyclic training ULD and ILD in this manner, the student latent traits and question latent traits are discovered. The training procedure described above is illustrated in

Figure 2.

4. Experiments

This section presents the experimental evaluation of the CDLD methodology on the EdNet dataset, aiming to discover and assess the latent traits of students and questions through answer prediction.

4.1. Data Preparation

EdNet is a student-system interaction dataset collected from the online educational platform Santa. We used EdNet-KT1, containing approximately 96.25 million response records from about 780,000 students and 13,000 questions. Each question includes features such as part (type), tags, and correct answers. Since this dataset does not contain student features, we modified the original CDLD model to operate without them.

For our preprocessing pipeline, we first selected 7,843 students who had the most responses to ensure computational efficiency. We then focused on the 94 questions most frequently solved by these students. When students answered the same question multiple times, we retained only their latest response to capture their most recent knowledge state. To ensure sufficient data for meaningful latent trait discovery, we filtered the dataset to include only students and questions with at least three interactions each. This preprocessing resulted in 60,069 interaction records.

The categorical features were processed using one-hot encoding for the part attribute and multi-hot encoding for the tags attribute. The target variable, indicating the correctness of student responses, was binarized, resulting in a label distribution where 63.4% of responses were correct. No class imbalance correction techniques were applied. To ensure randomized data distribution, the dataset was thoroughly shuffled and subsequently partitioned into training, validation, and test sets using an 8:1:1 ratio.

4.2. Model Architecture

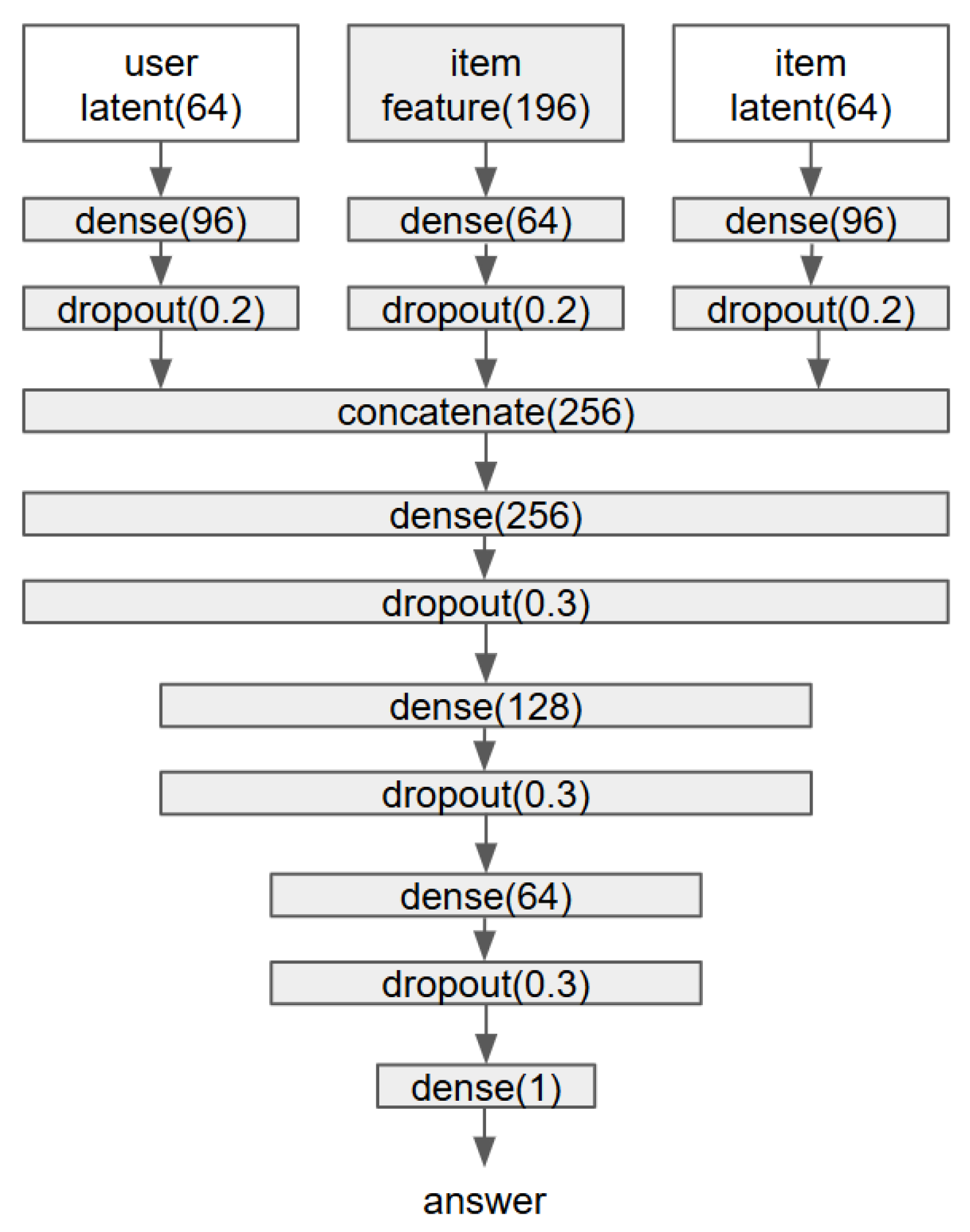

Figure 3 illustrates the architectures of the ILD and ULD modules. Each dense layer employs the swish activation function, with the final layer using sigmoid. Notably, the item latent traits in ILD and the user latent traits in ULD are implemented as model layers rather than input variables, allowing them to be updated during training. The implementation follows the same methodology as the original CDLD framework. The predictor model, which shares the same architecture, uses fixed latent trait inputs without updates, consistent with standard deep neural network conventions.

4.3. Training Details

The dimensions of both user and item latent vectors were set to 64, and the batch size was set to 2,048. The training process followed a cyclic scheme in which the ILD was trained for 10 epochs, followed by 10 epochs of ULD training; this alternating cycle was repeated 10 times. The final predictor model was trained for 100 epochs. The Adam optimizer was used with an initial learning rate of , and learning rate scheduling was applied using ReduceLROnPlateau, which reduced the learning rate by 50% if validation performance did not improve for two consecutive epochs.

The model was trained using a composite loss function consisting of categorical cross-entropy combined with L2 regularization. We applied a weight decay coefficient of to all dense layer weights to prevent overfitting. To ensure optimal model selection, we implemented early stopping that terminated training if the validation loss showed no improvement for 10 consecutive epochs. The same 100-epoch training regime was applied to the predictor model. We evaluated model performance using accuracy and Area Under the Receiver Operating Characteristic Curve (AUC) as our primary metrics.

All experiments were conducted on a standard desktop equipped with Intel i7-11700 CPU and 32 GB of RAM.

4.4. Experimental Results

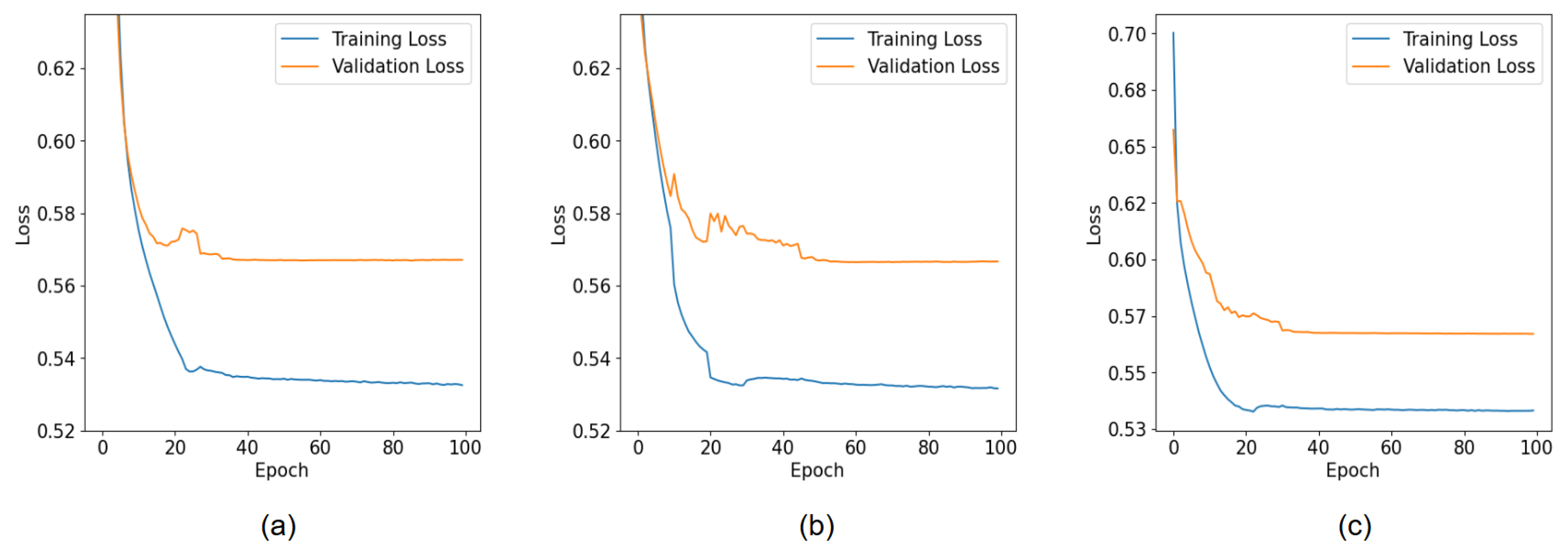

Figure 4 shows the loss graphs of ULD, ILD, and predictor training. All three models converged before 50 epochs.

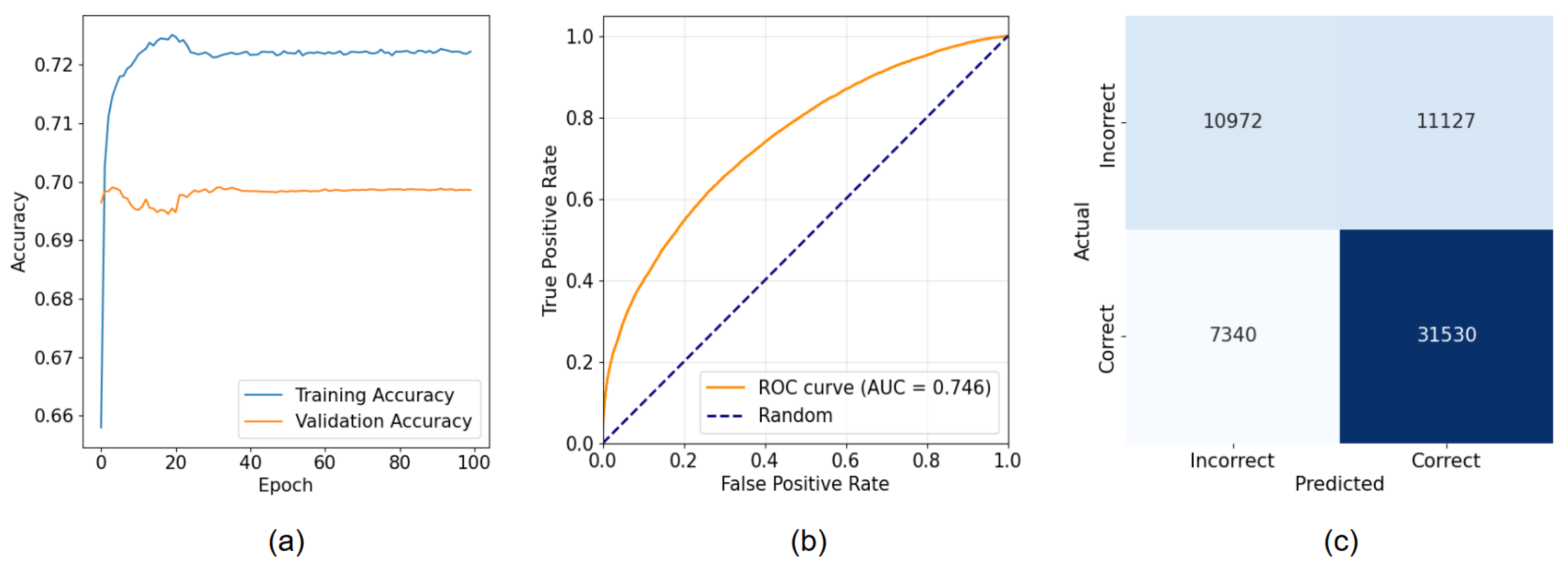

Figure 5 shows the accuracy graph during training, ROC curve, and confusion matrix. The model achieved 69.7% accuracy for correctness prediction. Precision was 0.739 and recall was 0.811. The AUC was 0.746.

5. Discussion

5.1. Confirming Feasibility for Educational Data Application

CDLD is a methodology for discovering the latent traits of two interacting entities based on the outcomes of their interactions. While the original study applied CDLD to model the relationship between users and movies, this study investigates its applicability to student-question interactions in the educational domain. We found that CDLD can be applied to educational data with minimal modifications.

Table 1 presents the performance of existing methods on the same dataset. We did not reproduce previous studies, and our data preprocessing pipeline differs from theirs. Therefore, direct comparison is not appropriate. Nevertheless, as shown in

Table 1, our model achieved an AUC of 0.746. This result shows a maximum gap of approximately 5.7% from prior methods, indicating that the predictive performance of our approach is meaningful and competitive.

Notably, our experiments required no EdNet-specific or educational domain knowledge, suggesting CDLD’s potential across diverse domains.

5.2. Assessing of Latent Trait Informativeness

To assess the informativeness of the discovered latent traits, we conducted ablation experiments using only observable features, without latent traits. We used macro F1-score as our primary evaluation metric, which averages class-wise F1-scores and offers a balanced assessment under class imbalance. When using only observable features, the model achieved a macro F1-score only slightly above the random-guessing baseline, indicating limited predictive power.

Table 2 shows the result. In contrast, when latent traits were incorporated, the model’s performance improved substantially. This suggests that the discovered latent traits encode meaningful information that is essential for accurate prediction. Furthermore, when using only latent traits and excluding the features, the accuracy degradation was only 0.5%. This finding indicates that the latent traits discovered through entity interactions carry more predictive information than the observable features themselves.

5.3. Educational Applications

The latent traits discovered through CDLD may offer potential benefits for various educational applications. One such possibility is student grouping, in which clustering algorithms could be applied to latent trait representations to identify students with similar learning profiles. This information may assist educators in developing customized learning paths tailored to the characteristics of each group. For example, students exhibiting lower values in certain latent dimensions might benefit from targeted reinforcement materials addressing those specific areas.

Another potential application lies in question analysis and curriculum design. The latent traits associated with questions may offer insights into their inherent difficulty and structural characteristics. When certain latent trait patterns are consistently associated with low accuracy rates, these patterns could serve as indicators of question difficulty. Additionally, by applying distance metrics such as Euclidean or cosine similarity in the latent space, it may be possible to identify questions with similar attributes, thereby supporting the construction of balanced assessments and targeted practice sets.

A particularly promising direction is the development of recommendation systems that utilize both student and question latent traits derived from CDLD. Such systems could recommend questions that align with a student’s current proficiency—those with high predicted success rates may help maintain engagement, while questions within the learner’s zone of proximal development could enhance learning effectiveness. This personalized selection mechanism offers a meaningful alternative to traditional one-size-fits-all educational approaches. Collectively, these potential applications suggest that CDLD can function not only as a predictive model but also as a general framework for data-driven decision-making in educational settings.

5.4. Limitations

In this study, we did not use the entire EdNet dataset. Instead, users and questions were filtered based on interaction frequency to construct a higher-quality subset for experimentation. The primary objective was to apply the CDLD framework to educational data, validate its capability for latent trait discovery, and evaluate its effectiveness in predicting student responses. While the results confirm the feasibility of applying CDLD to educational data, the evaluation was conducted using only a single dataset. Therefore, it remains difficult to claim general applicability across diverse educational domains.

A notable challenge of the proposed approach lies in the interpretability of the discovered latent traits. Although these traits are encoded as 64-dimensional vectors, the dimensions are entangled and lack explicit correspondence to interpretable educational concepts. This entanglement hinders the ability to provide educators with intuitive explanations regarding the meaning of each latent dimension or its relationship to observable student attributes and question features. The abstract nature of these representations may constrain their practical applicability in educational contexts where clear and actionable insights are required by stakeholders.

Furthermore, the current CDLD model treats each student–question interaction as independent, thereby failing to capture the temporal dynamics inherent in learning processes. Although students’ knowledge states evolve over time, the model does not consider sequential patterns or learning trajectories. This static method may overlook important information regarding the progression of student ability and the influence of prior interactions on subsequent performance.

5.5. Future Work

Building on our findings and addressing the identified limitations, several promising directions for future research emerge. To enhance the interpretability of latent traits, future work should develop methods for analyzing correlations between specific latent dimensions and observable features such as student achievement levels or question difficulty ratings. Dimensionality reduction techniques and feature selection methods could help identify more interpretable latent representations.

Incorporating temporal modeling represents another crucial research direction. Integrating CDLD with sequence modeling techniques such as recurrent neural networks (RNNs) or transformer architectures could capture the evolving nature of student knowledge. Such temporal extensions would enable the model to consider learning trajectories, identify knowledge retention patterns, and predict long-term learning outcomes more accurately.

Domain generalization and practical deployment warrant further investigation. While the results on English language learning data are encouraging, validation across diverse educational domains—such as mathematics, science, and the humanities—is necessary. Each domain may present unique interaction patterns and require domain-specific adaptations of the CDLD framework. In addition, user studies involving educators and students could offer valuable insights into the effective use of latent trait information in classroom settings. Such studies should evaluate the practical benefits of CDLD-based recommendations, the usability of latent trait visualizations, and their overall impact on learning outcomes.

6. Conclusions

We successfully applied CDLD to the EdNet educational dataset to discover the latent traits of students and questions and to predict question responses. CDLD achieved an AUC of 0.746, indicating meaningful predictive performance. An ablation study was conducted to assess the informativeness of the discovered traits. These results suggest the potential utility of CDLD for various educational applications.

Acknowledgments

The authors would like to thank the creators of the EdNet dataset for making their educational data publicly available for research purposes. The authors used OpenAI’s ChatGPT during manuscript preparation to improve readability and grammar. After using this tool, the authors verified and edited the content to ensure accuracy and take full responsibility for the final text.

References

- S. Shen et al., "A Survey of Knowledge Tracing: Models, Variants, and Applications," IEEE Trans. Learn. Technol., vol. 17, pp. 1858–1879, 2024.

- X. Ding and E. C. Larson, "On the interpretability of deep learning based models for knowledge tracing," 2021, arXiv:2101.11335.

- C. Piech et al., "Deep knowledge tracing," in Advances in Neural Inf. Process. Syst., Montreal, Canada, Dec. 2015, pp. 505–513.

- J. Zhang, X. Shi, I. King, and D.-Y. Yeung, "Dynamic key-value memory networks for knowledge tracing," in Proc. 26th Int. Conf. World Wide Web, Perth, Australia, Apr. 2017, pp. 765–774.

- S. Pandey and G. Karypis, "A self-attentive model for knowledge tracing," in Proc. 12th Int. Conf. Educational Data Mining, Montreal, Canada, Jul. 2019, pp. 384–389.

- Y. Choi et al., "Towards an appropriate query, key, and value computation for knowledge tracing," in Proc. 7th ACM Conf. Learning@Scale, Virtual Conference, Aug. 2020, pp. 341–344.

- D. Rim, S. Nuriev, and Y. Hong, "Cyclic Training of Dual Deep Neural Networks for Discovering User and Item Latent Traits in Recommendation Systems," IEEE Access, vol. 13, pp. 10663–10677, 2025.

- A. T. Corbett and J. R. Anderson, "Knowledge tracing: Modeling the acquisition of procedural knowledge," User Model. User-Adapt. Interact., vol. 4, no. 4, pp. 253–278, 1994.

- P. I. Pavlik, H. Cen, and K. R. Koedinger, "Performance factors analysis–a new alternative to knowledge tracing," in Proc. Artif. Intell. in Education, 2009, pp. 531–538.

- Y. Choi et al., "EdNet," Riiid. [Online]. Available: https://github.

- D. Shin, Y. Shim, H. Yu, S. Lee, B. Kim, and Y. Choi, "Saint+: Integrating temporal features for ednet correctness prediction," in Proc. 11th Int. Learn. Analytics and Knowl. Conf., Virtual Conference, Apr. 2021, pp. 490–496.

- F. Xu et al., "DKVMN&MRI: A new deep knowledge tracing model based on DKVMN incorporating multi-relational information," PLoS One, vol. 19, no. 10, p. e0312022, Oct. 2024.

- K. Cheng, L. Peng, P. Wang, J. Ye, L. Sun, and B. Du, "DyGKT: Dynamic Graph Learning for Knowledge Tracing," in Proc. 30th ACM SIGKDD Conf. Knowl. Discovery Data Mining, Barcelona, Spain, Aug. 2024, pp. 409–420.

- H. Zhou, W. Rong, J. Zhang, Q. Sun, Y. Ouyang, and Z. Xiong, "AAKT: Enhancing Knowledge Tracing With Alternate Autoregressive Modeling," IEEE Trans. Learn. Technol., vol. 18, pp. 25–38, 2025.

Short Biography of Authors

|

Geonhee Yang received a bachelor’s degree in Smart ICT Convergence from

Konkuk University in Seoul, South Korea. He has been working as an AI

Engineer at Rowan since 2024. |

|

Dohyoung Rim received the B.S. degree in electronic engineering and the

M.S. degree in cognitive science, with a focus on AI from Yonsei University,

Seoul, South Korea, in 1995 and 1997, respectively. He is currently pursuing the

Ph.D. degree, with a focus on AI for digital therapeutics development. He

completed his doctoral coursework with Yonsei University. After his academic

training, he gained practical experience as a Software Engineer and has been

working in the field of deep learning, since 2016. He is currently the Chief

Technology Officer with Rowan, a digital therapeutics company. |

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).