1. Introduction

According to the World Health Organization (WHO), more than 70 million people are currently hearing impaired, and this number is increasing. It is estimated that by 2050 the number of people with hearing impairment or permanent hearing loss (deafness) could reach 2.5 billion [1], and that more than 700 million people worldwide will need hearing rehabilitation [1]. Sign language (SL) is a form of communication that people with hearing loss use to convey their thoughts, feelings, and knowledge through gestures instead of speech. The use of SL currently faces several obstacles: few hearing people learn it, government agencies have almost no employees who understand it, and there are very few sign language interpreters. To address these problems, researchers are developing various types of human-machine interfaces (HMI). Recent studies show that electromyography offers significant advantages for gesture recognition [2,3,4].

Electromyography (EMG) is a method of measuring the electrical activity of muscles. EMG signals can be recorded in two ways, invasively and non-invasively [5]. In the invasive method, the signal is obtained by inserting an electrode into the muscle. In the non-invasive method, the signal is obtained by placing surface electromyography (sEMG) electrodes on the skin. The main advantage of the non-invasive method is the ease of placing the electrodes.

Many datasets (DSs) have been designed for EMG-based sign language recognition (SLR); they are summarized in Table 1.

Table 1 reviews the literature from 2015 to 2024. The studies are compared by number of participants, number of sessions, and the device used to detect sign language from sEMG signals. Early studies (e.g., [6] and [12]) widely used the 8-channel Myo Armband sEMG device and involved relatively many subjects (10) and sessions (10), with classification accuracy varying between 80% and 100%. By 2020, research had expanded to other devices (e.g., Delsys Trigno [7] and SS2LB [8]), which reached high accuracies (81% and 95.48%) with classifiers such as RF and Linear Discriminant. Recent studies (e.g., [13] and our DS) rely on newer devices (Terylene and Biosignalsplux) and modern classification algorithms (CNN-CBAM and RF); although they use relatively few channels (4 and 6 channels), they achieve high accuracy (92.32% and 97%). The classifier in [13], at 92.32%, scored lower than the model we present. We attribute this to the large number of participants and the small number of channels in our study. This suggests that advances in muscle-mounted sensors and optimization of the number of channels give good results.

In this study, 6 sign words (the fruit names apple, pear, apricot, nut, cherry, and raspberry) were recorded from 46 participants with a 4-channel device over 3 weeks, with each gesture repeated 10 times.

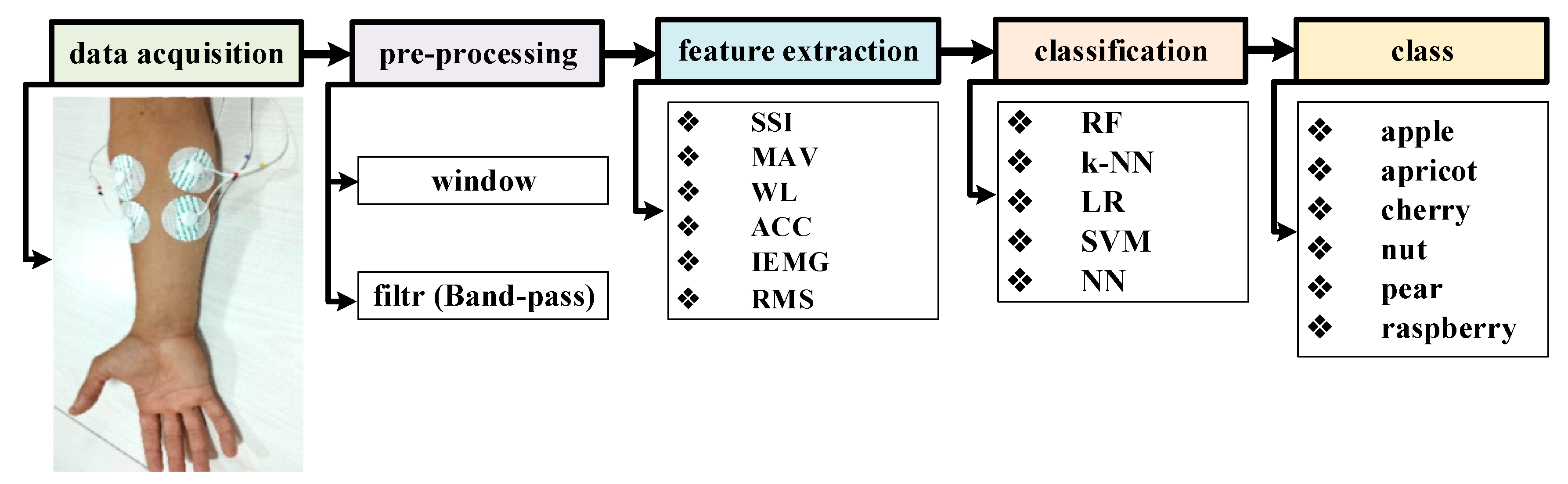

The general scheme for organizing the DS and performing classification is presented in Figure 1. The process is organized step by step. First, a dataset is formed. Next, the raw signals are pre-processed and filtered to remove noise. After that, features are extracted. In the final stage, classification algorithms assign each sign gesture to the appropriate class.

2. Data Collection Organization

2.1. Device

Preliminary tests were conducted to organize the DS. During these experiments, the recording setup was adjusted to account for the hand-movement characteristics of people with hearing impairments.

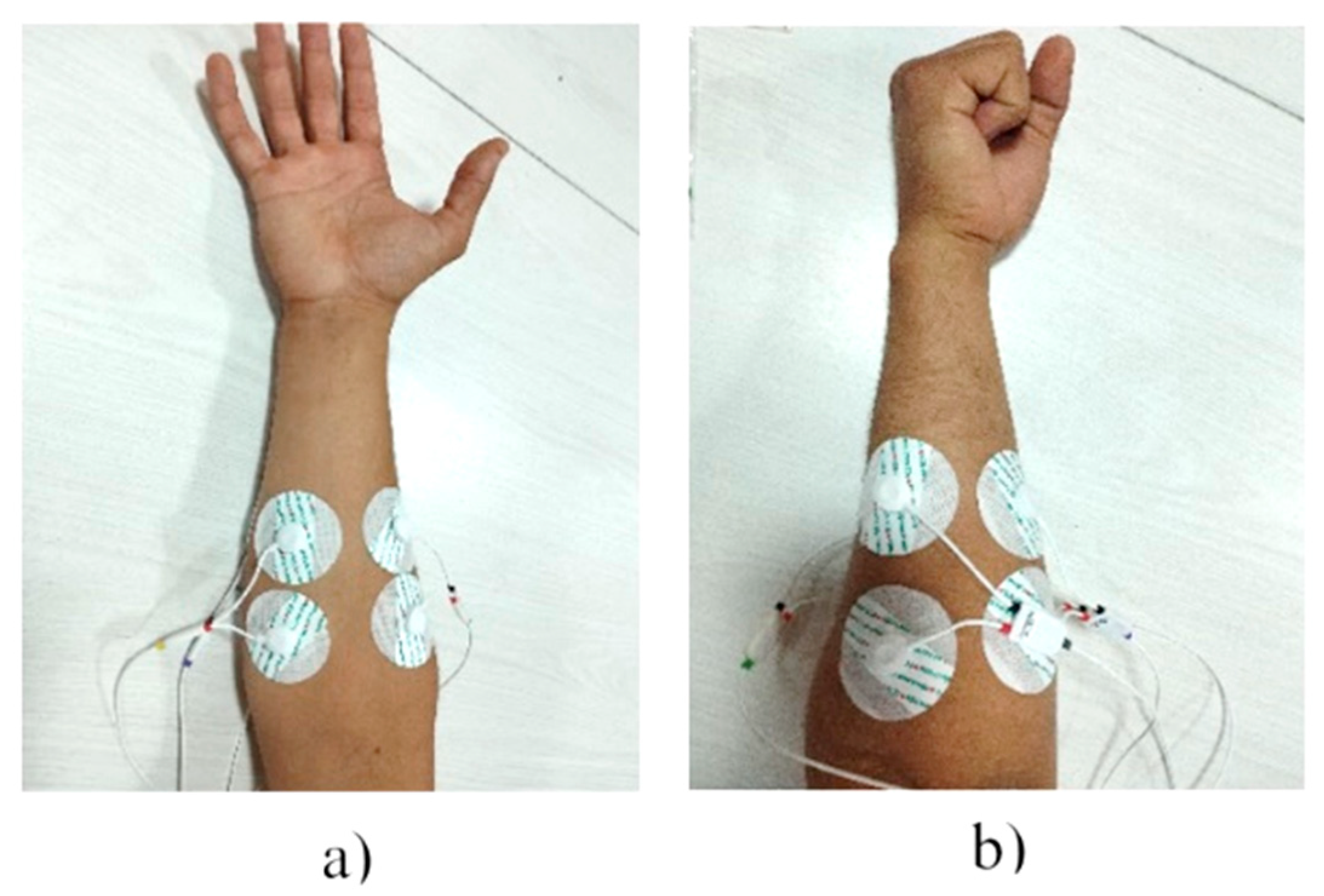

A 4-channel biosignalsplux EMG device developed by PLUX Wireless Biosignals S.A. (Portugal) was used to record the EMG signal (Figure 2). The signal was sampled at 1000 Hz. The data were recorded non-invasively, with electrodes placed on the skin.

The incoming signals were segmented into sequential 200 ms windows. A band-pass filter was applied to remove noise and artifacts from the EMG signals.
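The windowing step can be illustrated with a minimal sketch. It assumes that "sequential" means back-to-back, non-overlapping windows and that a trailing partial window is discarded; neither detail is stated in the text. The function name `sequential_windows` and the placeholder samples are hypothetical, and the band-pass filter is not shown because its cut-off frequencies are not given.

```python
def sequential_windows(signal, fs_hz=1000, window_ms=200):
    """Split a 1-D sample sequence into back-to-back, non-overlapping windows.

    Assumption: a trailing partial window is dropped.
    """
    size = fs_hz * window_ms // 1000  # 200 samples at 1000 Hz
    n_full = len(signal) // size
    return [signal[i * size:(i + 1) * size] for i in range(n_full)]


# One second of placeholder samples (not real EMG data).
samples = list(range(1000))
windows = sequential_windows(samples)
print(len(windows), len(windows[0]))
```

At the 1000 Hz sampling rate reported above, each 200 ms window holds 200 samples per channel, so one second of signal yields five windows.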

The recording electrodes were placed over the innervation zones of the muscles (Figure 3).

The electrodes of the 4-channel biosignalsplux EMG device were placed on the brachioradialis, flexor carpi radialis, extensor carpi ulnaris, and flexor carpi ulnaris muscles of the right forearm, which are the most active during gesture movements (Figure 3a,b).

2.2. DS Structure

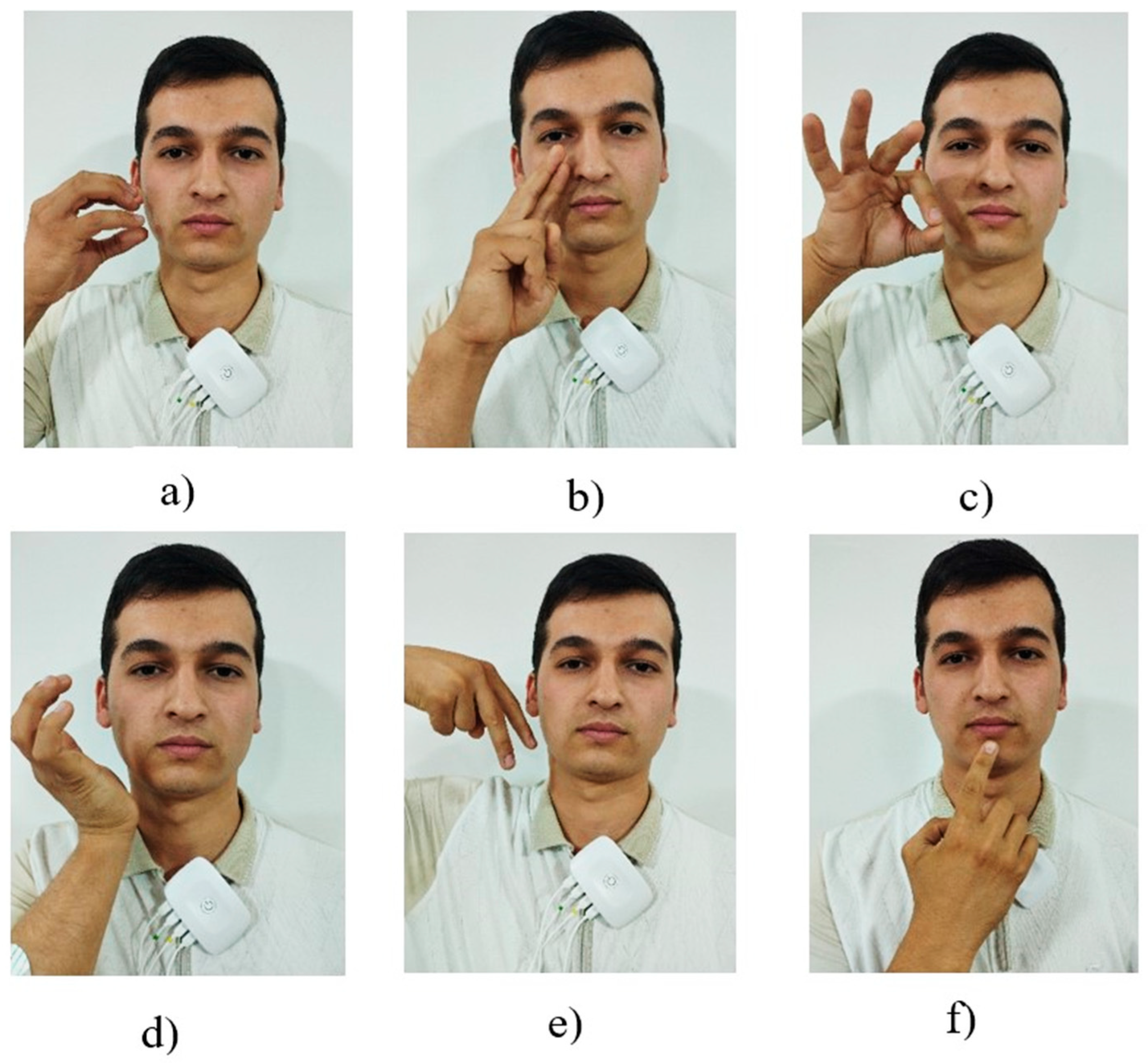

The study involved the recognition of gestures for the names of 6 fruits: apple, pear, apricot, nut, cherry, and raspberry (Figure 4).

Each subject repeated each gesture 10 times per session. One session was held per week for 3 weeks, giving 30 repetitions of each gesture per subject. The size of the DS is therefore:

30 (repetitions) × 6 (classes) × 46 (subjects) = 8280
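The dataset size can be checked with a few lines of arithmetic. The sketch assumes that the 30 repetitions come from 10 repetitions in each of 3 weekly sessions, which is our reading of the protocol rather than something the text states explicitly; the variable names are illustrative.

```python
repetitions_per_session = 10
sessions = 3   # assumed: one session per week for 3 weeks
classes = 6    # apple, pear, apricot, nut, cherry, raspberry
subjects = 46

repetitions = repetitions_per_session * sessions  # 30 per gesture per subject
total_recordings = repetitions * classes * subjects
print(total_recordings)  # 8280
```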

The participants for the experiment were senior students of the “Karshi city specialized boarding school No. 17 for disabled children with special educational needs” (Uzbekistan). EMG signals were obtained with the consent of the subjects participating in the study. The experiment was conducted on 46 students, including 18 girls and 28 boys.

3. Feature Extraction and Classification

This section describes in detail the steps involved in generating a feature vector for detecting and classifying various sign language gestures using EMG signals. Experiments were also conducted using several modern and efficient classification algorithms that allow for automatic gesture discrimination based on these feature vectors, and the results were analyzed.

3.1. Signal Amplitude

EMG signals are highly variable. Several factors affect their stability: the electrical resistance of the skin surface, the technical quality of the electrodes, and the anatomical location of the muscle tendons and their innervation zones [20].

To reduce this variability, current research applies pre-processing steps such as signal filtering, segmentation, and amplitude normalization.

In this study, the resting-state amplitude of the EMG signal was used as a baseline, and the gesture signals were evaluated against it using the signal-to-noise ratio (SNR). This allows direct comparison of the signal before and during movement, which is important for accurately distinguishing and classifying different sign language gestures.

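The SNR is expressed in decibels as follows:

$$\mathrm{SNR} = 10 \log_{10}\left(\frac{P_{\mathrm{gesture}}}{P_{\mathrm{idle}}}\right)$$

Here, $P_{\mathrm{gesture}}$ is the average signal power during active movement, and $P_{\mathrm{idle}}$ is the average signal power in the idle state.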
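As a rough illustration of the SNR calculation, the sketch below assumes the usual decibel form, 10·log10 of the ratio of the two average powers, and takes the average power of a segment to be its mean squared amplitude. Both choices are assumptions, and the helper names `mean_power` and `snr_db` and the sample values are hypothetical.

```python
import math


def mean_power(samples):
    """Average power of a segment, taken here as the mean squared amplitude."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(gesture, idle):
    """SNR in decibels: 10 * log10(P_gesture / P_idle)."""
    return 10.0 * math.log10(mean_power(gesture) / mean_power(idle))


# Placeholder segments (not real EMG data): the gesture amplitude is
# ten times the idle amplitude, so the power ratio is 100.
idle = [0.1, -0.1, 0.1, -0.1]
gesture = [1.0, -1.0, 1.0, -1.0]
print(round(snr_db(gesture, idle), 1))  # 20.0
```

A tenfold amplitude increase over the resting state corresponds to 20 dB, which gives a sense of scale for the 5 to 15 dB values reported for the six gestures.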

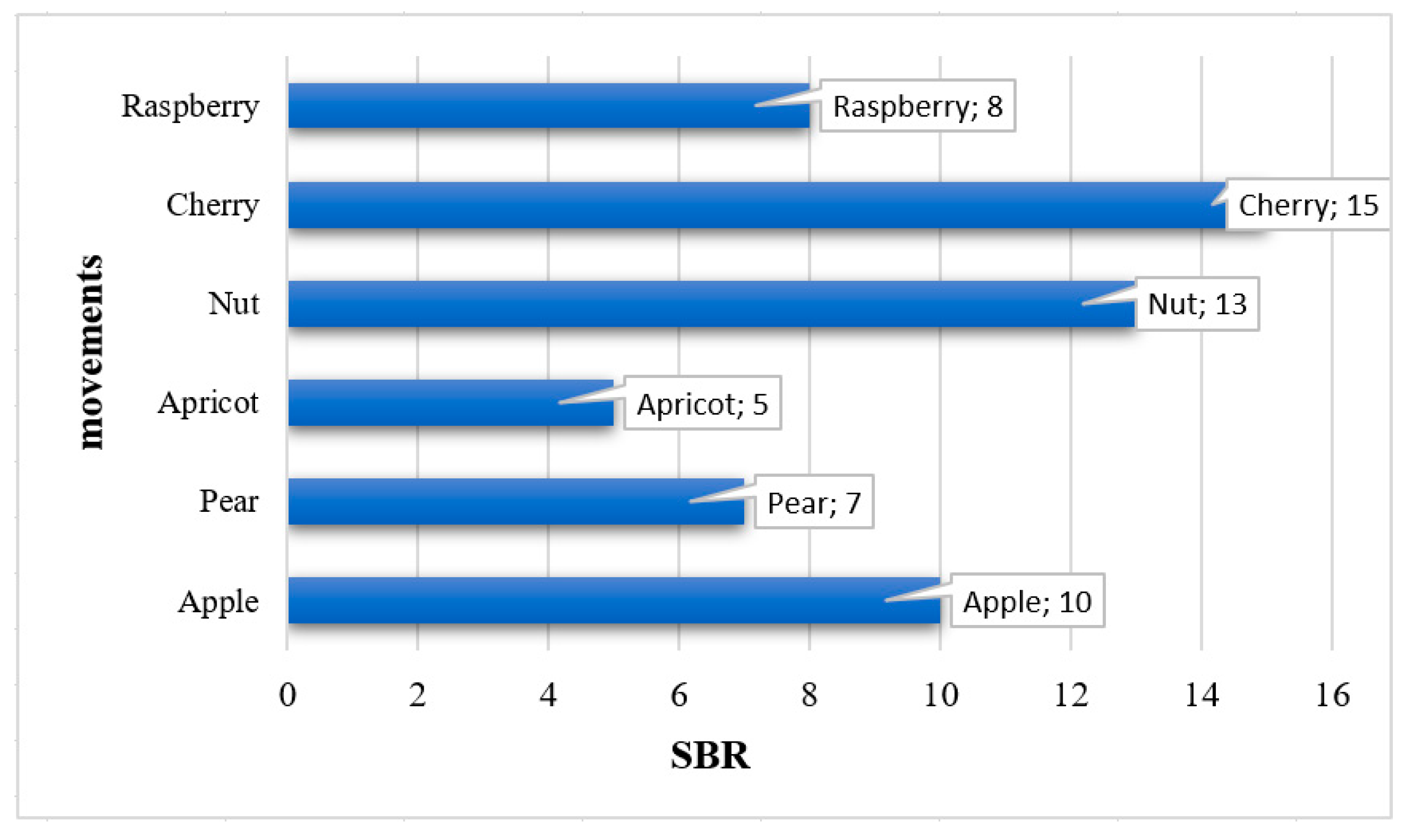

The SNR values, calculated from the average signal amplitudes of the 4 selected EMG channels for each gesture, are shown in Figure 5. Some gestures showed considerably stronger electromyographic activity than others. The Cherry class had the highest SNR, averaging 15 dB, which indicates strong muscle activity during the gesture. The Nut class came second, with an average SNR of 13 dB.

The Apple and Raspberry classes showed values of 10 dB and 8 dB, respectively, indicating moderate muscle activity.

The SNR was lower for the remaining gestures: the Pear class averaged 7 dB, and the Apricot class recorded the lowest value, 5 dB. This indicates that the signal-to-noise ratio of the EMG signals during these gestures was low and that muscle activity was relatively weak.

3.2. Feature Extraction

The proposed DS differs from existing EMG collections in several important respects. In particular, the large number of subjects, the repeated performance of each gesture, and the fact that the EMG signals were obtained from hearing-impaired people who communicate in SL increase the reliability and analytical accuracy of the data. This DS therefore serves as an important source for extracting continuous electromyographic features characteristic of gestures and for classifying them effectively [].

Many studies have addressed the detection and classification of movements or gestures using electromyographic signals. In this work, we use the following features, which proved effective in previous experiments [14]: SSI (Simple Square Integral), ACC (Average Conditional Complexity), IEMG (Integrated EMG), WL (Waveform Length), RMS (Root Mean Square), and MAV (Mean Absolute Value).
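For illustration, five of these features can be computed from one signal window using their common textbook time-domain definitions. This is a sketch, not the authors' implementation: the function name `emg_features` and the sample window are hypothetical, and ACC is left out because the text does not define it.

```python
import math


def emg_features(window):
    """Common time-domain EMG features for one window of one channel."""
    n = len(window)
    iemg = sum(abs(x) for x in window)   # Integrated EMG: sum of |x_i|
    ssi = sum(x * x for x in window)     # Simple Square Integral: sum of x_i^2
    # Waveform Length: cumulative absolute difference of neighbouring samples.
    wl = sum(abs(b - a) for a, b in zip(window, window[1:]))
    return {
        "IEMG": iemg,
        "MAV": iemg / n,                 # Mean Absolute Value
        "SSI": ssi,
        "RMS": math.sqrt(ssi / n),       # Root Mean Square
        "WL": wl,
    }


# Placeholder window (not real EMG data).
window = [1.0, -2.0, 3.0, -4.0]
feats = emg_features(window)
print(feats)
```

Applying such a function to every window of every channel and concatenating the results would give one feature vector per window, which is the kind of input the classifiers in Section 3.3 expect.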

In addition to expressing the statistical behavior of the signal over time, these features reflect its amplitude, frequency, and complexity characteristics. In previous studies, classification based on these parameters reached an accuracy of up to 99% [14]. However, these high results are mainly due to the small number of movements (only 3 gestures) and the limited number of subjects (20).

3.3. Classification

In this study, five classification algorithms were used to classify SL gestures: Random Forest (RF), k-Nearest Neighbors (k-NN), Logistic Regression (LR), Support Vector Machine (SVM), and Neural Networks (NN). These methods are known for their ability to handle features with different structures effectively and to provide robust classification results [15,16,17,18,19].
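To make the classification step concrete, the following is a minimal k-NN sketch written with the Python standard library only. It is an illustrative re-implementation under simple assumptions (Euclidean distance, majority vote, k = 3), not the code or settings used in the study, and the two-dimensional feature vectors are invented placeholders.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Label a query vector by majority vote among its k nearest neighbours."""
    # Pair every training vector's Euclidean distance with its label.
    dists = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Invented 2-D feature vectors (e.g. normalised MAV and RMS), not real data.
train_x = [(0.10, 0.20), (0.20, 0.10), (0.15, 0.15),
           (0.90, 0.80), (0.80, 0.90), (0.85, 0.85)]
train_y = ["apricot", "apricot", "apricot", "cherry", "cherry", "cherry"]

print(knn_predict(train_x, train_y, (0.8, 0.8)))
print(knn_predict(train_x, train_y, (0.2, 0.2)))
```

In practice a library implementation with cross-validated hyperparameters would normally be preferred; the sketch only shows the decision rule.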

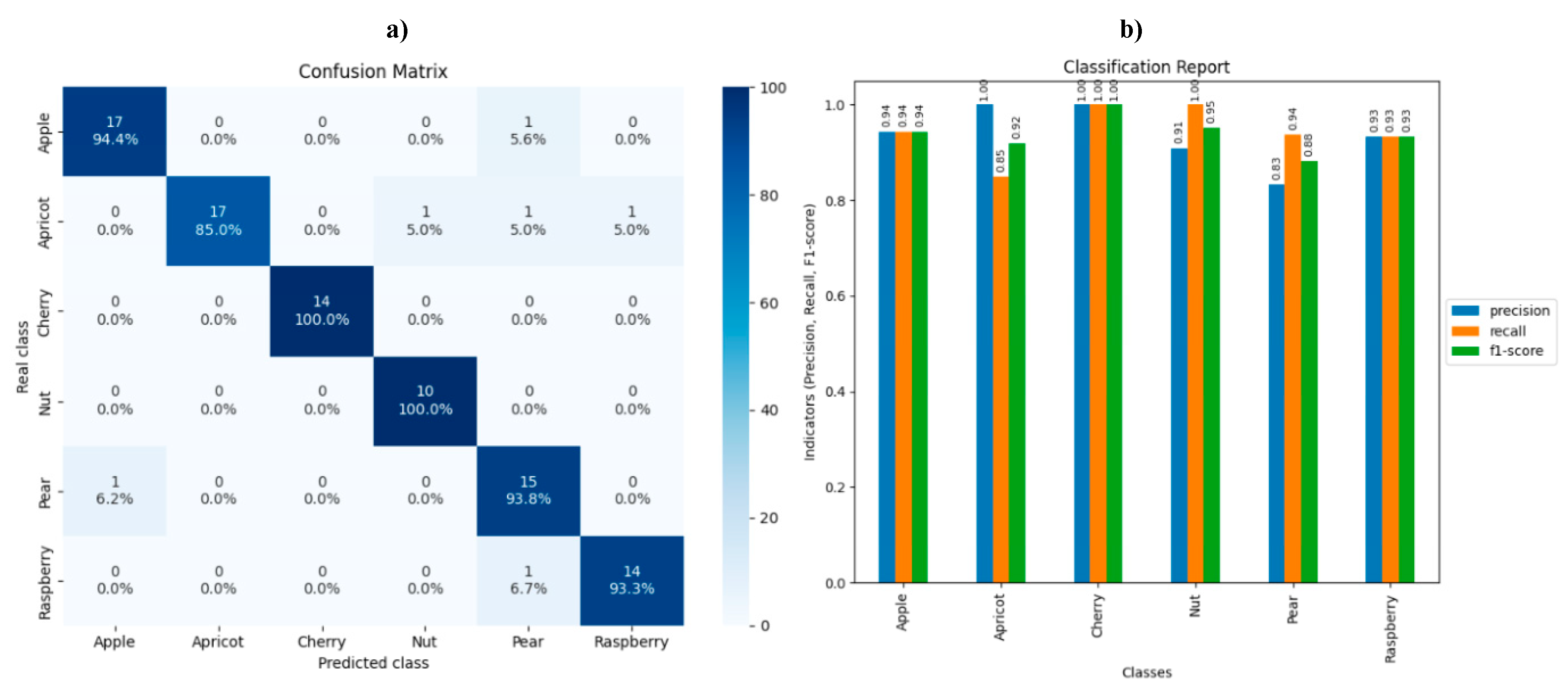

The confusion matrix and the classification report are tools for evaluating classification models [21]. They provide a visual representation of a classifier's performance. The confusion matrix and classification report of the kNN model used in the study are shown in Figure 6.

Classification accuracy was calculated separately for each gesture class, and an overall assessment was then made by combining the per-class results according to their statistical weights.
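One way to read this procedure is sketched below: per-class accuracy is taken as the fraction of each class's samples that were labelled correctly (per-class recall), and the "statistical weight" of a class is taken as its number of samples. Both interpretations are assumptions, and the 3-class confusion matrix is an invented example, not data from the study.

```python
def per_class_recall(cm):
    """Fraction of each true class that was predicted correctly.

    cm[i][j] counts samples of true class i predicted as class j.
    """
    return [row[i] / sum(row) for i, row in enumerate(cm)]


def weighted_accuracy(cm):
    """Average of per-class recall, weighted by the number of samples per class."""
    supports = [sum(row) for row in cm]
    recalls = per_class_recall(cm)
    return sum(r * s for r, s in zip(recalls, supports)) / sum(supports)


# Invented 3-class confusion matrix (rows: true class, columns: predicted).
cm = [
    [8, 1, 1],
    [2, 18, 0],
    [0, 0, 10],
]
print(per_class_recall(cm))
print(weighted_accuracy(cm))
```

With sample counts as weights, the weighted average equals the overall fraction of correctly classified samples (36 of 40 in this example).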

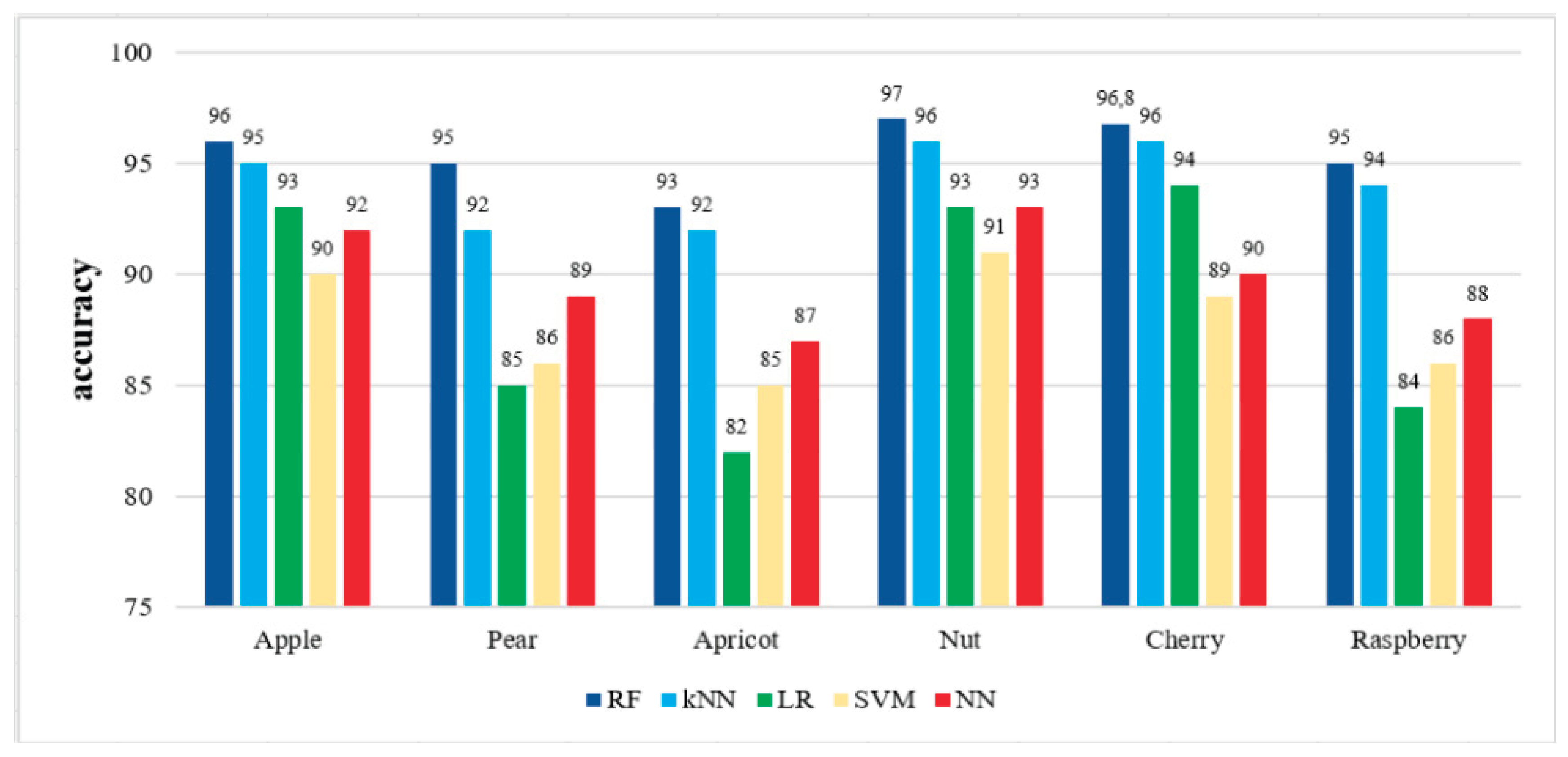

The experiments showed that, with the selected feature set, all classifiers (RF, LR, SVM, NN, and kNN) performed well in classifying gestures. However, accuracy differed from gesture to gesture, and some differences were observed between the algorithms (Figure 7).

4. Discussion

The number of device channels is important when recording EMG signals. Most studies used an 8-channel Myo Armband. However, the large number of channels makes processing the incoming signals computationally demanding, which increases processing time in real-time operation.

In this study, the DS was built using a 4-channel biosignalsplux device, and high results were achieved.

As the results show, the highest accuracy was observed for the Cherry class, which reached 97% with the RF algorithm and 93% and 96% with the NN and kNN algorithms, respectively.

The Nut class also had high accuracy: the RF and kNN algorithms classified it with 96.8% and 96% accuracy, respectively. In contrast, the lowest classification accuracy was observed for the Apricot class. The LR algorithm achieved 82% accuracy for this gesture, and the other models also achieved relatively low results: 85% (SVM), 87% (NN), 92% (kNN), and 93% (RF). The confusion matrix in Figure 6 suggests the reason: the EMG signal values recorded for the Apricot class are similar to those recorded for the Nut, Pear, and Raspberry classes.

Overall, these results indicate that the level of complexity and the differences in electromyographic responses between gestures have a significant impact on the results of the classifiers.

5. Conclusions

This article presents the recognition of 6 fruit names in SL using EMG signals. A total of 46 hearing-impaired participants took part in the DS collection process. An analysis of previous work in the field of gesture recognition revealed that the location of the electrodes, the number of participants and sessions during DS collection, and the number of gestures all affect classification accuracy. Unlike other studies, this study achieved a high recognition rate using a small number of channels (4 channels).

Reducing the number of channels shortens signal processing time, lowers the computational load, and allows stable operation in real-time classification systems.

A signal-to-noise ratio analysis of the gestures was performed. The highest values were shown by the Cherry and Nut classes, 15 dB and 13 dB, respectively. The lowest value, 5 dB, was recorded for the Apricot gesture because of the low activity of the selected muscles during its execution. The EMG signals were also filtered to remove noise and artifacts. In the next stage, the SSI, ACC, IEMG, WL, RMS, and MAV features of the EMG signal were selected, and the DS was created using these features. The RF, LR, SVM, NN, and kNN classification algorithms were used to recognize the 6 gestures, with accuracy ranging from 84% to 97%. Of these, the RF algorithm showed the highest result, with an accuracy of 97%. Although these results are lower than those reported in some other studies, the DS can be used in other studies to detect gesture movements or in HMI systems.

Author Contributions

Methodology, K.Z., S.B., M.T., F.R., G.B.; software, S.B., M.T., F.R.; validation, K.Z., S.B., and K.E.; formal analysis, K.Z., G.P., K.M., and S.B.; resources, M.T., F.R., M.S., and G.P.; data curation, K.Z., S.B.; writing—original draft, K.Z., S.B., F.R., and M.T.; writing—review and editing, K.Z., S.B., and M.S.; supervision, K.Z., S.B., and M.T.; project administration, K.Z., and S.B. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in this study is openly available online.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. World Health Organization. Deafness and hearing loss. Available online: https://www.who.int/news-room/fact-sheets/detail/deafness-and-hearing-loss

2. A. Duivenvoorde; K. Lee; M. Raison; S. Achiche. Sensor fusion in upper limb area networks: A survey. 2017 Global Information Infrastructure and Networking Symposium (GIIS), Saint Pierre, France, 2017, pp. 56-63.

3. C. Dignan; E. Perez; I. Ahmad; M. Huber; A. Clark. Improving Sign Language Recognition by Combining Hardware and Software Techniques. 2020 3rd International Conference on Data Intelligence and Security (ICDIS), South Padre Island, TX, USA, 2020, pp. 87-92.

4. J. Shin; A. S. M. Miah; M. H. Kabir; M. A. Rahim; A. Al Shiam. A Methodological and Structural Review of Hand Gesture Recognition Across Diverse Data Modalities. IEEE Access, Volume 12, pp. 142606-142639, 2024.

5. A. Turgunov; K. Zohirov; B. Muhtorov. A new dataset for the detection of hand movements based on the SEMG signal. 2020 IEEE 14th International Conference on Application of Information and Communication Technologies (AICT), Tashkent, Uzbekistan, 2020, pp. 1-4.

6. C. Savur; F. Sahin. American Sign Language Recognition system by using surface EMG signal. 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 2016, pp. 002872-002877.

7. S. Yuan et al. Chinese Sign Language Alphabet Recognition Based on Random Forest Algorithm. 2020 IEEE International Workshop on Metrology for Industry 4.0 & IoT, Roma, Italy, 2020, pp. 340-344.

8. M. U. Khan; F. Amjad; S. Aziz; S. Z. H. Naqvi; M. Shakeel; M. A. Imtiaz. Surface Electromyography based Pakistani Sign Language Interpreter. 2020 International Conference on Electrical, Communication, and Computer Engineering (ICECCE), Istanbul, Turkey, 2020, pp. 1-5.

9. J. Wu; L. Sun; R. Jafari. A Wearable System for Recognizing American Sign Language in Real-Time Using IMU and Surface EMG Sensors. IEEE Journal of Biomedical and Health Informatics, Volume 20, no. 5, pp. 1281-1290, Sept. 2016.

10. Kithmini Herath. A Simple Guide for Sign Language Classification Using Support Vector Machines. the-ai.team, Medium.

11. M. Seddiqi; H. Kivrak; H. Kose. Recognition of Turkish Sign Language (TID) Using sEMG Sensor. 2020 Innovations in Intelligent Systems and Applications Conference (ASYU), Istanbul, Turkey, 2020, pp. 1-6.

12. Bernabé Rodríguez-Tapia; Alberto Ochoa-Zezzatti; Angel Israel Soto Marrufo; Norma Candolfi Arballo; Patricia Avitia Carlos. Sign Language Recognition Based on EMG Signals through a Hibrid Intelligent System. acc. 2018-10-07. Research in Computing Science 148(6), 2019, ISSN 1870-4069, pp. 253–262.

13. J. Gong; C. Li; C. Tang; X. Chen; S. Gao. An EMG Based Wearable System for Chinese Sign Language Recognition. 2024 IEEE BioSensors Conference (BioSensors), Cambridge, United Kingdom, 2024, pp. 1-4.

14. A. Turgunov; K. Zohirov; A. Ganiyev; B. Sharopova. Defining the Features of EMG Signals on the Forearm of the Hand Using SVM, RF, k-NN Classification Algorithms. 2020 Information Communication Technologies Conference (ICTC), Nanjing, China, 2020, pp. 260-264.

15. M. Karuna; S. R. Guntur. Classification of Hand Movements via EMG using Machine Learning Methods for Prosthesis. 2022 2nd International Conference on Artificial Intelligence and Signal Processing (AISP), Vijayawada, India, 2022, pp. 1-4.

16. N. Sanwlot; K. V. Raj; G. Udupa; R. Anand. Cost-Effective EMG Signal Acquisition for Rehabilitation Robotics using Single-Channel Sensors and Machine Learning. 2024 2nd International Conference on Self Sustainable Artificial Intelligence Systems (ICSSAS), Erode, India, 2024, pp. 1603-1609.

17. K. Zohirov. A New Approach to Determining the Active Potential Limit of an Electromyography Signal. 2023 3rd International Conference on Technological Advancements in Computational Sciences (ICTACS), Tashkent, Uzbekistan, 2023, pp. 291-294.

18. N. Pires; M. P. Macedo. A Bimodal EMG/FMG System Using Machine Learning Techniques for Gesture Recognition Optimization. Signals 2025, 6, 8.

19. K. Zohirov. Classification Of Some Sensitive Motion Of Fingers To Create Modern Biointerface. 2022 International Conference on Information Science and Communications Technologies (ICISCT), Tashkent, Uzbekistan, 2022, pp. 1-4.

20. J. K. Muguro; P. W. Laksono; W. Rahmaniar; W. Njeri; Y. Sasatake; M. S. A. b. Suhaimi; K. Matsushita; M. Sasaki; M. Sulowicz; W. Caesarendra. Development of Surface EMG Game Control Interface for Persons with Upper Limb Functional Impairments. Signals 2021, 2, 834–851.

21. J. Erbani; P.-É. Portier; E. Egyed-Zsigmond; D. Nurbakova. Confusion Matrices: A Unified Theory. IEEE Access, Volume 12, pp. 181372-181419, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).