Introduction

Since the formalization of the Turing Machine in 1936, computational models have largely relied on assumptions of structural stability and symbolic precision.

Classical computation, defined through discrete states and algorithmic transitions, excels in environments where rules are fixed and inputs well-formed. Later developments, including self-referential systems such as the Gödel Machine and probabilistic frameworks such as the Quantum Computer, broadened the range of computational models, enabling recursion, superposition, and amplitude evolution as new means of navigating complexity. Yet all of these models depend on one underlying presupposition: the symbolic substrate must remain coherent.

In real-world cognitive environments, however, symbolic breakdown is not the exception — it is a persistent condition. Axioms deform, meaning drifts, and interpretation becomes unstable under the weight of contradiction, noise, or context loss. From failed legal translations to collapsing scientific paradigms, the limits of formalism are repeatedly exposed when systems attempt to process meaning rather than syntax.

This paper introduces the Heuristic Machine not as a replacement for classical models, but as a necessary addition to the computational ecosystem — one specifically designed to operate when structure fails. Unlike the Turing model, which requires decidable rules, or the quantum model, which evolves within Hilbert-space formalism, the Heuristic Machine assumes neither consistency nor completeness. It does not compute toward truth but compresses toward interpretability. Its central ambition is epistemic: to preserve the possibility of meaning in environments where precision disintegrates.

The motivation for this work arises from the need to rethink computation as an act of symbolic survival, rather than formal resolution. We propose an architecture grounded in Heuristic Physics — a theoretical framework where cognition is not defined by correctness but by resilience under collapse.

The Heuristic Machine thus reframes the role of computation: from executing instructions to absorbing breakdowns, from resolving functions to reconstituting coherence.

Theoretical Foundations

The Heuristic Machine (HM) emerges at the intersection of symbolic cognition, computational epistemology, and entropy-aware system design. It does not arise in opposition to established models such as the Turing Machine or the Quantum Computer, but rather from a recognition of their limits when faced with symbolic instability.

Classical computation, as formalized by Turing [9], presupposes that input symbols and transition rules retain structural coherence. Even Gödel’s self-referential formalism [10], while destabilizing completeness, still assumes that the system itself can be coherently described from within. The Quantum Computer extends expressivity through probabilistic amplitude evolution [11], yet it, too, operates on ontologically legible substrates: wavefunctions, operators, and defined observables.

What the Heuristic Machine introduces is not an ontological alternative, but an epistemic shift: from rule-following to meaning-preserving behavior in collapse-prone fields. It builds upon the theoretical substrate of Heuristic Physics [1], which proposes that the foundational structures of knowledge, including physical laws, can be reinterpreted not as truths, but as survivable compressions: symbolic heuristics that persist across scale, noise, or contradiction.

In this framework, Newtonian dynamics, for instance, are not declarations of reality but adaptive programs with high symbolic economy under macroscopic constraints.

The HM imports this principle into computation. Rather than solving problems through deterministic or probabilistic execution, it compresses fragments of degraded symbolic material to sustain coherence. It does not compute “the answer,” but recomposes interpretability. Its cognition is not logical, but heuristic; not axiom-based, but structure-emergent.

A precursor to this approach appears in the symbolic recombination architectures proposed for affective AGI systems [5], where emotional and logical dynamics are stabilized through recursive feedback. That model, while operating at a higher layer, shares the epistemic DNA of the HM: survival through synchronization, not convergence. The conceptual lineage of the HM also aligns with Hofstadter’s notion of “strange loops” [14]: systems that survive paradox by internalizing it, using feedback not for control, but for self-sustained redefinition. Yet the HM is not metaphorical. It is propositional and architectural: a computational schema whose core premise is that intelligence resides in the capacity to recompress collapse into provisional form.

Methodology

The Heuristic Machine (HM) is conceived not as a deterministic executor, but as an epistemic architecture designed to sustain symbolic coherence across semantic collapse. Following the principles of Heuristic Physics [1], the HM is defined by four operational functions (Compression, Collapse, Cognition, and Creation), known as the 4Cs model. Each function represents not a module, but a behavioral regime under epistemic constraint.

Epistemic Architecture: The Heuristic Machine

Classification: Collapse-Tolerant Symbolic Computation Substrate

Operational Goal: Preserve interpretability in environments where formal axioms degrade or become contradictory

Abstract Description: A runtime heuristic engine that compresses symbolic material under entropy to maintain structural legibility

Macro Functioning: Operates within collapse fields; selects interpretive anchors via compression; restructures fragments into provisional grammars

Expected Inputs: Partial symbols, degraded axioms, contradictory grammars, semantic noise

Observable Outputs: Adaptive symbolic scaffolds, restructured hypotheses, compressive alignments

Internal Metrics: Drift Tolerance Index, Coherence Gain, Symbolic Stability Score

Declared Limitations: Low utility in fully deterministic domains; may yield multiple provisional forms rather than convergent results

Context of Application: Open-ended AI, legal ambiguity modeling, symbolic communication, epistemic repair in unstable systems

The 4Cs Functional Model

Compression

Symbolic inputs are recursively scanned for local redundancy and interpretive anchoring points. Unlike classical parsing, compression here does not assume a schema; it constructs one. It minimizes entropy by collapsing symbolic variance into provisional alignment clusters [3, 15].
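As an illustration only, the compression regime can be sketched in Python under simplifying assumptions that are not part of the HM proposal: symbols are plain string tokens, an interpretive anchor is a hypothetical case- and punctuation-insensitive key (`anchor_key`), and entropy is the Shannon entropy of the token distribution. The name `compress` is borrowed from the conceptual pseudocode; its body here is our own assumption. Merging variants into a shared cluster can never increase that entropy, which is the sense in which the sketch "minimizes entropy by collapsing symbolic variance".

```python
import math
import re
from collections import Counter, defaultdict


def shannon_entropy(labels):
    """Shannon entropy, in bits, of the empirical distribution of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def anchor_key(token):
    """Hypothetical anchoring rule: keep only lowercase letters and digits."""
    return re.sub(r"[^a-z0-9]", "", token.lower())


def compress(tokens):
    """Group surface variants into provisional alignment clusters keyed by anchor."""
    clusters = defaultdict(list)
    for tok in tokens:
        key = anchor_key(tok)
        if key:  # fragments with no recoverable characters are dropped
            clusters[key].append(tok)
    return dict(clusters)


tokens = ["Contract", "contract.", "CONTRACT", "void", "Void!", "binding"]
clusters = compress(tokens)
before = shannon_entropy(tokens)
after = shannon_entropy(anchor_key(t) for t in tokens)
```

Here six distinct surface forms collapse into three anchors (`contract`, `void`, `binding`), and the entropy drops from log2(6) ≈ 2.58 bits to about 1.46 bits.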

Collapse

Rather than resisting collapse, the system treats it as a field condition. The HM detects incoherence and initiates a structural fold, not to resolve the incoherence but to reframe it. Contradictions become compression opportunities. Noise becomes a carrier of relational context [2, 13].
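The collapse regime can likewise be sketched, purely as an assumption-laden illustration. Fragments are strings, a leading `not ` marks negation (a hypothetical convention), and a "structural fold" is modeled as a record that keeps both sides of a contradiction plus the neighboring propositions as relational context, rather than discarding either side. The names `polarity` and `fold_contradictions` and the `window` parameter are ours, not the paper's.

```python
def polarity(fragment):
    """Hypothetical convention: a leading 'not ' negates the rest of the fragment."""
    text = fragment.strip().lower()
    if text.startswith("not "):
        return text[4:].strip(), True
    return text, False


def fold_contradictions(fragments, window=1):
    """Detect contradictions and reframe each one as a fold instead of resolving it.

    A fold keeps the positions that affirm and deny a proposition, plus the other
    propositions found within `window` positions of any occurrence (its context).
    """
    parsed = [polarity(f) for f in fragments]
    folds = {}
    for i, (prop, negated) in enumerate(parsed):
        fold = folds.setdefault(prop, {"for": [], "against": [], "context": set()})
        fold["against" if negated else "for"].append(i)
        lo, hi = max(0, i - window), min(len(parsed), i + window + 1)
        fold["context"].update(p for p, _ in parsed[lo:hi] if p != prop)
    # Only propositions attested with both polarities signal collapse.
    return {p: f for p, f in folds.items() if f["for"] and f["against"]}


folds = fold_contradictions(["rain", "not rain", "wet road", "sun"])
collapsed = bool(folds)
```

In this example `rain` is both affirmed and denied, so the field counts as collapsed, and the fold carries `wet road` along as context instead of forcing a verdict.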

Cognition

Cognition occurs not through rule execution, but through iterative recompression. The machine posits symbolic scaffolds that are repeatedly subjected to drift pressure. If coherence persists, the scaffold is retained. This “survival of structure” principle aligns with the feedback-based resonance models in [6].
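A minimal sketch of this "survival of structure" test, under assumptions that are ours rather than the paper's: a scaffold is a set of anchors, evidence is a list of attested anchors, drift pressure is simulated by randomly dropping evidence items, and coherence is the share of scaffold anchors still attested. The names `coherence`, `apply_drift`, and `survives`, and all default parameter values, are hypothetical.

```python
import random


def coherence(scaffold, evidence):
    """Hypothetical score: fraction of scaffold anchors still attested by the evidence."""
    if not scaffold:
        return 0.0
    present = set(evidence)
    return sum(1 for anchor in scaffold if anchor in present) / len(scaffold)


def apply_drift(evidence, drop_rate, rng):
    """Simulate drift pressure by independently losing each evidence item."""
    return [item for item in evidence if rng.random() >= drop_rate]


def survives(scaffold, evidence, rounds=20, drop_rate=0.3, threshold=0.8, seed=0):
    """Retain a scaffold only if its mean coherence under repeated drift meets the threshold."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    scores = [coherence(scaffold, apply_drift(evidence, drop_rate, rng)) for _ in range(rounds)]
    return sum(scores) / len(scores) >= threshold


evidence = ["contract"] * 5 + ["void"] * 5 + ["binding"]
well_attested = survives({"contract", "void"}, evidence)
overreaching = survives({"contract", "void", "fraud"}, evidence)
```

A scaffold that claims an anchor the evidence never supports (`fraud`) cannot reach the threshold, while one built on repeatedly attested anchors almost always survives the simulated drift.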

Creation

When no alignment survives collapse, the HM initiates the creation function. Fragments are reassembled heuristically into novel symbolic forms. These forms are not random: they are drawn from latent correlation matrices encoded across prior collapse fields. The process is inspired by dynamic reconstruction models and by internally self-aware recombination as theorized in [14].
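The creation regime can be approximated, again only as a hedged sketch. The "latent correlation matrices" are replaced here by simple pair co-occurrence counts over prior fields, and reassembly is a greedy chain that repeatedly appends the fragment most correlated with the previous one. Both choices are our assumptions; the helper `cooccurrence` is hypothetical, and `create_new_form` borrows only its name from the conceptual pseudocode.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(prior_fields):
    """Count unordered anchor pairs that appeared together in earlier fields.

    Hypothetical stand-in for the latent correlation matrices accumulated
    across prior collapse fields.
    """
    pairs = Counter()
    for field in prior_fields:
        for a, b in combinations(sorted(set(field)), 2):
            pairs[(a, b)] += 1
    return pairs


def create_new_form(fragments, pairs):
    """Greedily chain fragments, appending at each step the one most correlated with the last."""
    remaining = sorted(set(fragments))
    if not remaining:
        return []
    form = [remaining.pop(0)]
    while remaining:
        last = form[-1]
        # max() returns the first maximum, so ties go to the alphabetically earliest fragment.
        nxt = max(remaining, key=lambda f: pairs[tuple(sorted((last, f)))])
        form.append(nxt)
        remaining.remove(nxt)
    return form


prior = [["breach", "contract", "void"], ["contract", "void"], ["breach", "remedy"]]
form = create_new_form(["void", "remedy", "contract", "breach"], cooccurrence(prior))
```

Starting from `breach`, the chain reaches `contract`, then `void` (the pair seen together most often), and finally `remedy`, producing a combination of all four fragments that no single prior field contained.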

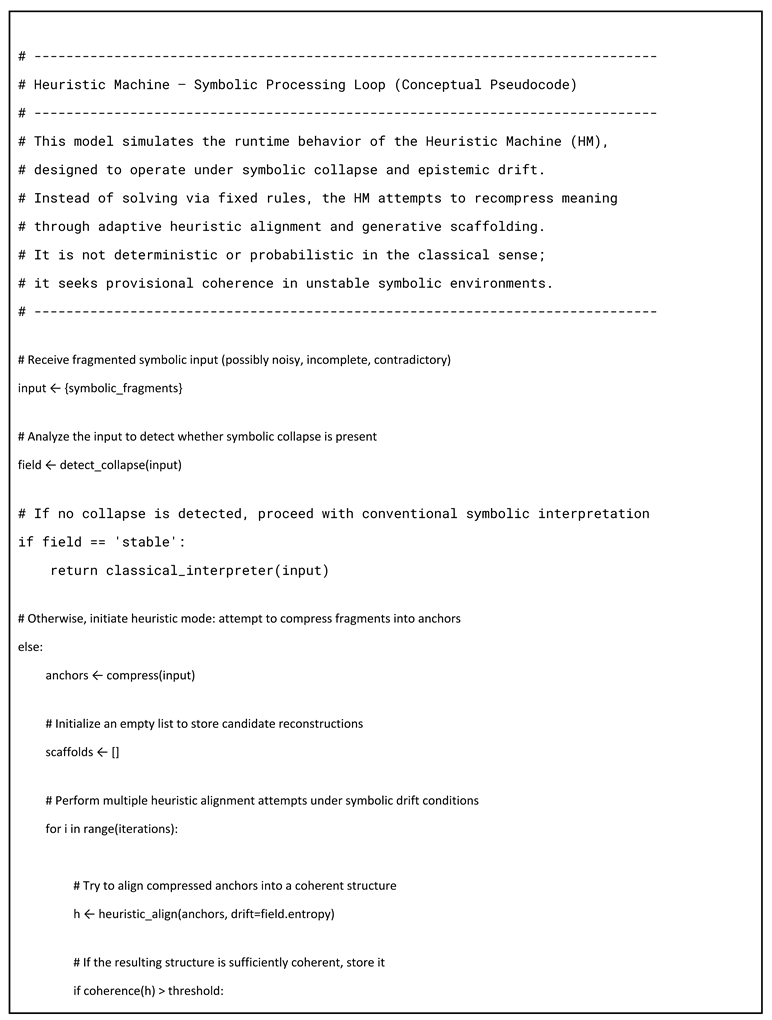

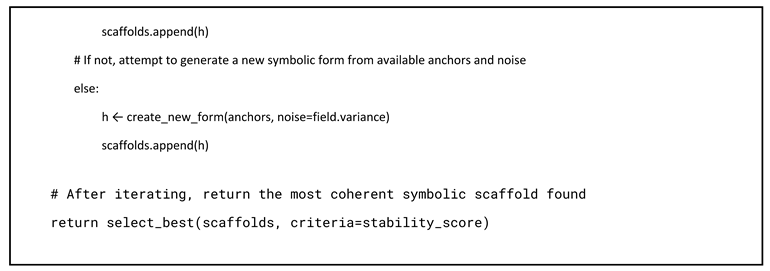

Symbolic Pseudocode (Conceptual Layer)

To illustrate the core operational logic of the Heuristic Machine, we introduce a symbolic pseudocode written in high-level conceptual notation. This code is not intended for implementation in a conventional language, but rather as an epistemic model that abstracts the system's functional flow. It simulates how the machine processes input symbols under varying conditions of structural collapse.

The syntax borrows from standard pseudocode conventions but integrates semantic primitives such as compress, heuristic_align, and create_new_form, which reflect the machine's ability to navigate symbolic entropy.

The logic depicts a decision flow: when input fields are stable, classical interpretation is sufficient; under collapse, the system performs adaptive compression, iterative heuristic alignment, and generative reassembly — selecting outputs not by correctness, but by coherence under pressure.

This model is not intended for software deployment; it serves as an epistemic scaffold, a machine that simulates cognition through the recompression of collapse. Where Turing Machines process rules and Quantum Machines evolve amplitudes, the Heuristic Machine compresses fragments iteratively, adaptively, and symbolically.
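As a loose, runnable illustration of the decision flow described above (not a transcription of the conceptual pseudocode), the branch between classical interpretation and the collapse pathway can be rendered in Python. Everything concrete here is an assumption: fragments are strings, a leading `not ` marks negation, collapse means some proposition is asserted with both polarities, coherence is the share of unconflicted anchors, and the threshold values are arbitrary. Only the names `compress`, `heuristic_align`, and `create_new_form` come from the text; their bodies, and the helpers `polarity`, `find_conflicts`, `coherence`, and `run`, are hypothetical.

```python
from collections import Counter


def polarity(fragment):
    """Hypothetical convention: a leading 'not ' negates the rest. Returns (proposition, negated)."""
    text = fragment.strip().lower()
    return (text[4:].strip(), True) if text.startswith("not ") else (text, False)


def find_conflicts(fragments):
    """Propositions asserted with both polarities; an empty set means the field is stable."""
    signs = {}
    for fragment in fragments:
        prop, negated = polarity(fragment)
        signs.setdefault(prop, set()).add(negated)
    return {p for p, s in signs.items() if len(s) == 2}


def coherence(scaffold, conflicts):
    """Hypothetical score: share of scaffold anchors not involved in a conflict."""
    if not scaffold:
        return 0.0
    return sum(anchor not in conflicts for anchor in scaffold) / len(scaffold)


def compress(fragments):
    """Reduce fragments to polarity-free anchors, weighted by how often each occurs."""
    return Counter(polarity(f)[0] for f in fragments)


def heuristic_align(anchors, conflicts, max_iter):
    """Iteratively prune the least-supported conflicted anchor, recording every scaffold."""
    scaffold = dict(anchors)
    candidates = [dict(scaffold)]
    for _ in range(max_iter):
        weak = [a for a in scaffold if a in conflicts]
        if not weak:
            break
        del scaffold[min(weak, key=lambda a: (scaffold[a], a))]
        candidates.append(dict(scaffold))
    return candidates


def create_new_form(anchors, conflicts):
    """Fallback: reassemble only the unconflicted anchors, most-supported first."""
    return {a: n for a, n in anchors.most_common() if a not in conflicts}


def run(fragments, threshold=0.75, max_iter=3):
    conflicts = find_conflicts(fragments)
    if not conflicts:
        # Stable pathway: classical interpretation of the affirmed propositions.
        return "classical", sorted(p for p, negated in map(polarity, fragments) if not negated)
    # Collapse pathway: compress, align iteratively, fall back to creation if needed.
    anchors = compress(fragments)
    viable = [s for s in heuristic_align(anchors, conflicts, max_iter)
              if coherence(s, conflicts) >= threshold]
    # Selection by coherence, not correctness: among viable scaffolds keep the one
    # that preserves the most material; with none viable, create a new form.
    chosen = max(viable, key=len) if viable else create_new_form(anchors, conflicts)
    return "heuristic", sorted(chosen)


print(run(["clause a applies", "party b consents"]))  # ('classical', ['clause a applies', 'party b consents'])
print(run(["A", "not A", "B", "C", "D"]))  # ('heuristic', ['a', 'b', 'c', 'd'])
```

In the second call, `a` is contradicted, yet the scaffold that retains it still clears the 0.75 threshold, so the contradiction is absorbed rather than eliminated. Preferring the largest viable scaffold is only one possible reading of "most coherent form"; selecting the highest coherence score outright would be an equally defensible choice.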

Architecture and Results

The Heuristic Machine (HM) is defined not by fixed structural components such as tapes, heads, or amplitudes, but by its behavioral pattern under symbolic stress. Its architecture is emergent — instantiated at runtime through compressive adaptation to epistemic breakdown. What follows is not a physical blueprint, but a functional topology anchored in the 4Cs model: Compression, Collapse, Cognition, and Creation.

Each axis represents a regime of symbolic operation. Together, they constitute a computational behavior that can absorb contradiction, reinterpret drift, and generate new symbolic forms from damaged input. These capacities are contextualized below in comparison with the three canonical architectures in computation: the Turing Machine (TM), the Gödel Machine (GM), and the Quantum Machine (QM).

Comparative Functional Matrix

| Function | Heuristic Machine (HM) | Turing Machine (TM) | Quantum Machine (QM) | Gödel Machine (GM) |
|---|---|---|---|---|
| Compression | Contextual, semantic anchoring [1, 3] | Token parsing [9] | State vector preparation [11] | Rule rewriting [10] |
| Collapse | Operates within collapse [2, 13] | Halts on contradiction | Decoheres under measurement [11] | Pauses for self-modification |
| Cognition | Drift-aligned hypothesis scaffolding [6, 8] | Deterministic execution | Superposed evolution | Meta-theoretic proof generation |
| Creation | Generates symbolic structures from fragments [2, 14] | Not supported | Not supported | Limited to recursive rewriting |
| Failure Mode | Coherence degradation | Halt or infinite loop | Decoherence or non-collapse | Proof loop or self-invalidation |
| Survival Mode | Interpretive recompression [1, 15] | Restart from initial state | Repeat measurement | Rewrite internal theorem space |

This model does not claim general superiority. It addresses a different class of problem: symbolic collapse — where meaning itself degrades due to contradiction, fragmentation, or ontological drift. In such conditions, TM and QM models either halt or lose semantic resolution. The HM, in contrast, performs adaptive symbolic repair, realigning structure without requiring initial axiomatic fidelity.

Schematic Model of Execution Flow

This flow encodes a shift in computational intent. The machine does not seek to solve, complete, or verify. It seeks to remain legible: to return something structurally interpretable from a field of collapse. In this sense, the “result” of the machine is not a value, but a form that can be re-read under symbolic tension [15, 16].

Step 1 – Input Reception: Symbolic input is received, potentially consisting of degraded fragments, inconsistent grammars, or contradictory elements.

Step 2 – Collapse Detection: The symbolic field is evaluated to determine whether it remains structurally coherent or exhibits collapse (i.e., semantic incoherence or axiom failure).

Step 3 – Stable Pathway: If the field is deemed stable, classical interpretation is applied — similar to conventional parsing or rule-based computation.

Step 4 – Collapse Pathway: If collapse is detected, the system transitions into heuristic runtime mode.

Step 5 – Compression Phase: Symbolic fragments are analyzed and compressed into semantic anchors — minimal units of provisional coherence.

Step 6 – Cognitive Alignment Loop: Anchors are iteratively aligned into symbolic scaffolds using heuristic inference under semantic drift. This loop is governed by coherence thresholds and entropy resistance.

Step 7 – Fallback Creation Mode: If alignment fails to reach interpretive viability, the system initiates generative recomposition, assembling new symbolic forms from anchors and residual noise.

Step 8 – Selection and Output: Candidate scaffolds are evaluated based on symbolic stability. The most coherent form is returned — not as a resolved answer, but as a compressed, interpretable structure capable of reentry into semantic context.

This symbolic loop mirrors behaviors observed in simulations of stateful cognition and non-observational architectures [6, 8], where maintaining internal continuity is both structurally viable and cognitively generative. The Heuristic Machine operationalizes this logic by treating collapse not as a failure state, but as a computational domain in itself.

Discussion

The Heuristic Machine is not presented as a universal model or superior paradigm. Its purpose is narrower, though no less critical: to provide a computational substrate for environments in which formal coherence has broken down. In such contexts, traditional models (Turing, Gödel, Quantum) either halt, recurse without resolution, or decohere into non-symbolic probabilities. The HM, by contrast, remains inside the field of collapse and responds not with precision, but with epistemic persistence [1, 2].

Its architecture suggests a reframing of what it means to compute. Rather than delivering an exact answer within known constraints, the HM returns symbolic scaffolds that can be interpreted even when foundational assumptions have eroded.

This is not a failure of logic, but an expansion of cognition: a form of “computational continuity” in environments where the notion of correctness itself has fragmented [14, 15].

The implications extend beyond theory. In areas such as AGI design, post-symbolic communication, and systems operating under adversarial or degenerate conditions, the ability to maintain meaning without formal grounding becomes essential. As discussed in recent proposals on non-observational intelligence [6, 17] and cognitive scope architectures [8], systems that infer or recompress structure under partial conditions may prove more generalizable than those that depend on complete data or axiomatic form.

Furthermore, the HM introduces a methodological inversion: it treats collapse not as a limit to be avoided, but as a computational terrain to be navigated. This aligns with broader shifts in epistemology, from discovery to construction and from prediction to survivability [3, 13, 16]. Rather than eliminating contradiction, the HM absorbs it, framing ambiguity as a condition of symbolic richness rather than dysfunction.

In practical terms, the HM may be unsuitable in fully deterministic domains where precision and closure are required. It is aimed instead at tasks where formal boundaries mutate midstream: distributed cognition, shifting ontologies, interpretive modeling, and domains with adversarial symbolic drift, such as multilingual legal interpretation, cultural translation, or general-purpose autonomous reasoning [5].

As computation confronts increasingly open systems, partial knowledge, and contextual entropy, the Heuristic Machine offers a proposition: that intelligence might not always be about solving, but about surviving interpretably.

Its contribution is thus not only architectural, but epistemic. It asks not “what is computable,” but “what can remain meaningful when computation itself destabilizes.”

Use Cases

The Heuristic Machine is best applied in conditions where symbolic environments are degraded, contradictory, or incomplete — scenarios that resist classical execution or probabilistic resolution. Rather than serving as a universal engine, the HM acts as a semantic stabilizer in domains marked by epistemic turbulence. Below are illustrative cases where its architectural logic is particularly suited.

In human–machine communication, semantic drift often emerges not from signal failure, but from gradual misalignment in symbolic interpretation. Distributed systems operating across asynchronous contexts lose shared grounding. The HM enables message interpretation under fragmentation, using adaptive compression to reconstruct intended meaning from partial signals. It treats communication not as decoding, but as survival of coherence across drift.

In legal interpretation, contradictions arise when statutes, jurisprudence, and cultural semantics coexist with non-resolvable overlap. Traditional rule engines fail due to strict reliance on logical consistency. The HM models interpretive compression: absorbing inconsistency and reassembling legal meaning into scaffolds that remain intelligible without resolving all conflicts. Its architecture is compatible with proposals in symbolic legal modeling and protocol-agnostic semantics.

In artificial general intelligence operating in unstructured environments, predefined categories often dissolve. Tasks are not clearly defined, ontologies evolve, and context is unstable. The HM offers cognitive survivability in such conditions: it does not require complete models to function. Instead, it generates interpretive scaffolds that guide behavior in ambiguous terrain. This aligns with models of non-observational cognition and symbolic scope detection in open-world AI.

In scientific epistemology, paradigm shifts are forms of symbolic collapse: explanatory structures that once stabilized meaning become insufficient. The HM can be used to simulate heuristic reformation, producing symbolic reconstructions that permit transitional continuity without requiring axiomatic replacement. This resonates with the interpretation of physical law as emergent compression, as theorized in Heuristic Physics.

Moreover, in adversarial or stealth domains, relevant phenomena may not emit detectable signals. Rather than relying on observables, the HM architecture enables inference based on deviation, interpreting distortion as evidence of unseen structure. This approach redefines observability itself: detection through the absence of expected coherence.

In cultural and linguistic systems, especially in high-context translation scenarios, formal equivalence fails. The HM’s strategy of interpretive recompression allows for translations that preserve semantic intent despite idiomatic or structural rupture. This reframes translation as symbolic survival: a reassembly of coherence, not a mirror of syntax.

In all these contexts, the output of the Heuristic Machine is not a binary result or analytic certainty. It is a symbolic form that remains meaningful under tension. This quality — the capacity to recompress meaning through collapse — situates the HM as a pragmatic epistemic tool in increasingly unstable symbolic environments.

Limitations and Future Work

The Heuristic Machine, as presented here, is a symbolic and epistemic construct. It does not describe an implementable device, nor does it assume empirical validation through hardware, benchmarks, or statistical models. Its value lies in framing a cognitive architecture for symbolic survivability, not in competing with computational performance metrics.

Its primary limitation is scope: the HM is not designed for deterministic systems, optimized pipelines, or high-speed decision engines. In contexts where formalism is intact and logical closure is possible, the HM offers no efficiency advantage; it may, in fact, introduce unnecessary indeterminacy. Its strength appears only where symbolic terrain collapses, and its operational logic is most coherent under ambiguity, contradiction, or entropy.

Another limitation lies in interpretation. The HM produces provisional scaffolds, not definitive outputs. This places the burden of semantic evaluation on the receiver, whether human or machine. As such, its integration into autonomous systems must be mediated by layers that can assess or stabilize the interpretability of its results.

A third boundary involves its current abstraction level. As a theoretical construct, the HM lacks a formal implementation standard or executable framework. While it aligns with concepts in adaptive heuristics [2], introspective self-repair [4], and symbolic scope modeling [8], the transition from model to mechanism remains an open challenge.

Nonetheless, the HM offers fertile ground for future exploration. One direction is hybridization: integrating HM logic into existing AGI substrates as a fallback mode or semantic stabilizer. In contexts where classical inference fails, an embedded HM module could reframe inputs, preserving system continuity without requiring total formal integrity.

Another direction is simulation. Synthetic environments characterized by rule collapse or context ambiguity — such as legal paradoxes, interlinguistic drift, or cognitive anomaly detection — could serve as testbeds for iterative HM behavior. These would not test for accuracy, but for resilience under degradation — a metric rarely considered in current machine intelligence.

Further work may also refine the theoretical infrastructure of the HM using category theory, entropy-driven logic, or epistemic game dynamics. The notion of computation as survival, rather than resolution, may offer links to systems theory, post-structural epistemology, or symbolic complexity science [13, 15, 16].

The Heuristic Machine is not offered as a final model, but as a structural provocation: a way to think differently about computation when stability is gone. It reframes the question not as “what can we compute,” but as “what can we continue to mean when rules no longer apply.”

Author Contributions

Conceptualization, design, writing, and review were all conducted solely by the author. No co-authors or external contributors were involved.

License and Ethical Disclosures

This work is published under the Creative Commons Attribution 4.0 International (CC BY 4.0) license. You are free to:

Share: copy and redistribute the material in any medium or format.

Adapt: remix, transform, and build upon the material for any purpose, even commercially.

Under the following terms:

Attribution: You must give appropriate credit to the original author (“Rogério Figurelli”), provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

The full license text is available at:

https://creativecommons.org/licenses/by/4.0/legalcode

Ethical and Epistemic Disclaimer

This document constitutes a symbolic architectural proposition. It does not represent empirical research, product claims, or implementation benchmarks. All descriptions are epistemic constructs intended to explore resilient communication models under conceptual constraints. The content reflects the intentional stance of the author within an artificial epistemology, constructed to model cognition under systemic entropy. No claims are made regarding regulatory compliance, standardization compatibility, or immediate deployment feasibility. Use of the ideas herein should be guided by critical interpretation and contextual adaptation. All references included were cited with epistemic intent. Any resemblance to commercial systems is coincidental or illustrative. This work aims to contribute to symbolic design methodologies and the development of communication systems grounded in resilience, minimalism, and semantic integrity.

Use of AI and Large Language Models

AI tools were employed solely as methodological instruments. No system or model contributed as an author. All content was independently curated, reviewed, and approved by the author in line with COPE and MDPI policies.

Ethics Statement

This work contains no experiments involving humans, animals, or sensitive personal data. No ethical approval was required.

Data Availability Statement

No external datasets were used or generated. The content is entirely conceptual and architectural.

Conflicts of Interest

The author declares no conflicts of interest. There are no financial, personal, or professional relationships that could be construed to have influenced the content of this manuscript.

References

1. R. Figurelli, Heuristic Physics: Foundations for a Semantic and Computational Architecture of Physics, Preprints.org, 2025.

2. R. Figurelli, A Heuristic Physics-Based Proposal for the P = NP Problem, Preprints.org, 2025.

3. R. Figurelli, XCP: A Symbolic Architecture for Distributed Communication Across Protocols, Preprints.org, 2025.

4. R. Figurelli, Self-HealAI: Architecting Autonomous Cognitive Self-Repair, Preprints.org, 2025.

5. R. Figurelli, The Science of Resonance: Architecting Synchronized Emotional-Logical Loops for Deep Adaptive AGI, Preprints.org, 2025.

6. R. Figurelli, The End of Observability: Emergence of Self-Aware Systems, Preprints.org, 2025.

7. R. Figurelli, The Cognitive Scope: Non-Observational Intelligence Through Anchored Deviation, Preprints.org, 2025.

8. R. Figurelli, The Birth of Machines With Internal States, Preprints.org, 2025.

9. A. M. Turing, On Computable Numbers, with an Application to the Entscheidungsproblem, Proc. London Math. Soc., 1936.

10. K. Gödel, Über formal unentscheidbare Sätze, Monatshefte für Mathematik und Physik, 1931.

11. D. Deutsch, Quantum theory, the Church–Turing principle and the universal quantum computer, Proc. Royal Society A, 1985.

12. S. Aaronson, Quantum Computing Since Democritus, Cambridge University Press, 2013.

13. M. Mitchell, Complexity: A Guided Tour, Oxford University Press, 2009.

14. D. Hofstadter, Gödel, Escher, Bach: An Eternal Golden Braid, Basic Books, 1979.

15. E. Morin, On Complexity, Hampton Press, 2008.

16. J. Engelhardt, Symbolic Broadcasting as Epistemic Infrastructure, Journal of Theoretical Media Systems, 2024.

17. N. Chomsky, Three Models for the Description of Language, IRE Trans. Inf. Theory, 1956.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).