1. Introduction

In robotics, various sensors are employed to enable the execution of complex tasks [1]. These sensors include 2D and 3D cameras, force sensors, tactile sensors, laser scanners, and similar devices. Among these, cameras have proven to be particularly effective and cost-efficient for applications such as bin picking [2], automated robot assembly [3], and quality control [4]. However, challenges arise when objects are not visible due to overlap, poor lighting conditions, or suboptimal camera positioning [5,6]. Accurate hand–eye camera calibration is a critical yet frequently underestimated challenge in robotics, which can present problems for operations requiring high precision, such as when assembling objects with low tolerance [7].

In such cases, reliance must shift to alternative sensors, such as force and touch sensors, which do not provide information as rich or comprehensive as that from cameras [8,9]. This paper addresses this issue and proposes a tactile localization method that does not rely on cameras.

A typical assembly operation, such as inserting a peg into a hole, can be divided into two primary phases: positioning the peg near the opening and the actual insertion. This paper does not address the insertion phase, which requires force sensors and has already been well studied in robotics [10]. Instead, we focus on the approach phase, i.e., the localization of the opening, using only force sensors or touch detection.

Several heuristic and statistical search methods are commonly employed for this purpose, including random search [11], spiral search [12], genetic-algorithm-based search [13], ergodic search [14], and others. Among them, only random and spiral searches do not require prior knowledge of the environment. In contrast, ergodic search utilizes a probability distribution indicating where the assembly object is likely to be located in space, making it more effective than the other two methods.

Rather than relying primarily on heuristics or statistical priors, our approach assumes the availability of an accurate geometric model of the environment, although its position and orientation relative to the robot are initially unknown. The goal is to localize this model through systematic exploration.

2. State of the Art

The problem of object localization in assembly operations has been widely studied in prior research, with diverse approaches proposed depending on available sensing modalities and application contexts. Early methods, such as those by [15,16], utilized pre-acquired contact maps combined with particle filters to enable precise localization using sparse tactile data. Similarly, [17] introduced a computationally efficient iterative Bayesian Monte Carlo technique for six degree-of-freedom (6-DOF) pose estimation, demonstrating robustness in tactile localization tasks. Other approaches, such as the Gaussian mixture model-based contact state detection method proposed by [18], leverage wrench signals to facilitate peg-in-hole assembly localization.

Building on these foundations, tactile sensing for object localization has been further advanced by [19], who introduced the Next Best Touch (NBT) strategy to identify the most informative subsequent contact for efficient pose estimation. Extensions of this concept to 2D visual maps were explored by [20] using recursive Bayesian filtering to estimate belief distributions over possible locations, with [21] refining this framework to address both localization and shape uncertainty in active tactile sensing. Recent works have incorporated deep learning techniques to process tactile data more effectively; for example, [22,23] demonstrated the use of deep neural networks (DNNs) for tactile object pose estimation from high-resolution sensor arrays, achieving significant accuracy improvements. Other studies, such as [8] and [24], have successfully applied tactile contact sensing for object recognition and classification, highlighting the growing capabilities of tactile perception.

In parallel, related research in robotic grasping and manipulation has emphasized the integration of multimodal sensory inputs, combining vision, force, and tactile data to enhance pose estimation accuracy and robustness under uncertainty [25,26].

Despite these significant advancements, the majority of existing work, apart from [15,16], does not explicitly target the challenge of assembly pose search using sparse binary touch sensors, which provide extremely limited and discrete information. This sparse sensing modality imposes unique challenges in developing algorithms capable of robust, efficient localization under minimal sensory input. Consequently, this remains a critical open problem in automated assembly, motivating further research into probabilistic and adaptive methods tailored for sparse tactile feedback.

Binary touch sensing, despite its simplicity, offers several key advantages in constrained environments. Unlike visual-tactile sensing, which requires cameras with clear line-of-sight, adequate lighting, and often precise calibration between visual and robot coordinate frames, binary contact sensors can operate in complete darkness, through occlusions, and without complex setup. This makes them particularly well-suited for tasks where cameras cannot be reliably deployed, such as operations in enclosed fixtures, poorly illuminated areas, or behind physical obstructions. Furthermore, visual-tactile systems generally require high-fidelity calibration and often depend on higher-bandwidth communication and processing pipelines, whereas binary touch sensing enables lightweight, reactive implementations that are easier to deploy and maintain in industrial environments. These trade-offs motivate the development of efficient localization algorithms that rely solely on binary tactile feedback.

3. Materials and Methods

In this section, we present our original algorithms for detecting the 3D position of objects using touch sensing. We begin by introducing a basic search algorithm for 2D position detection and subsequently extend it to handle 3D position estimation. We then enhance these algorithms with a probabilistic search framework designed to robustly manage sensor noise, inaccuracies in the object map, and variations due to object rotation.

3.1. Map Registration

In this section, we present a deterministic 2D search method that serves as a foundation for the 3D search introduced in Section 3.2 and for its further enhancement into a probabilistic framework described in Section 3.3. Our approach shares similarities with Next Best Touch (NBT) methods [19], as it systematically refines the search region through geometric region elimination in consecutive steps.

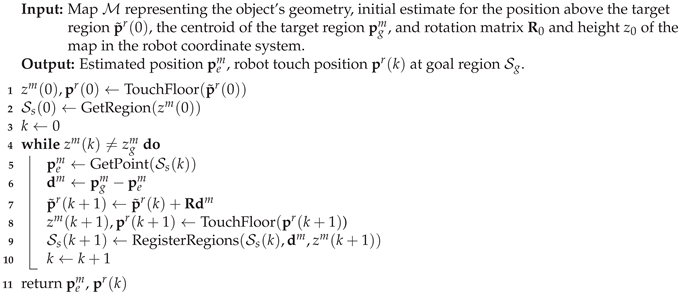

We assume the availability of a 3D map of the object on which the assembly operation takes place. Furthermore, we consider that the 3D object consists of a finite number of horizontal faces (quasi-iso-height regions). These surfaces are represented as a 2D model map in the x-y plane that is divided into N partitions. Each partition is the area of the object that has the same height when the object is placed on a horizontal surface, and it is stored as a finite set of discrete points. In practice, we obtain these points by discretization directly from a CAD model or, alternatively, using a scanner device. An example of such a region-based map is depicted in Figure 1.

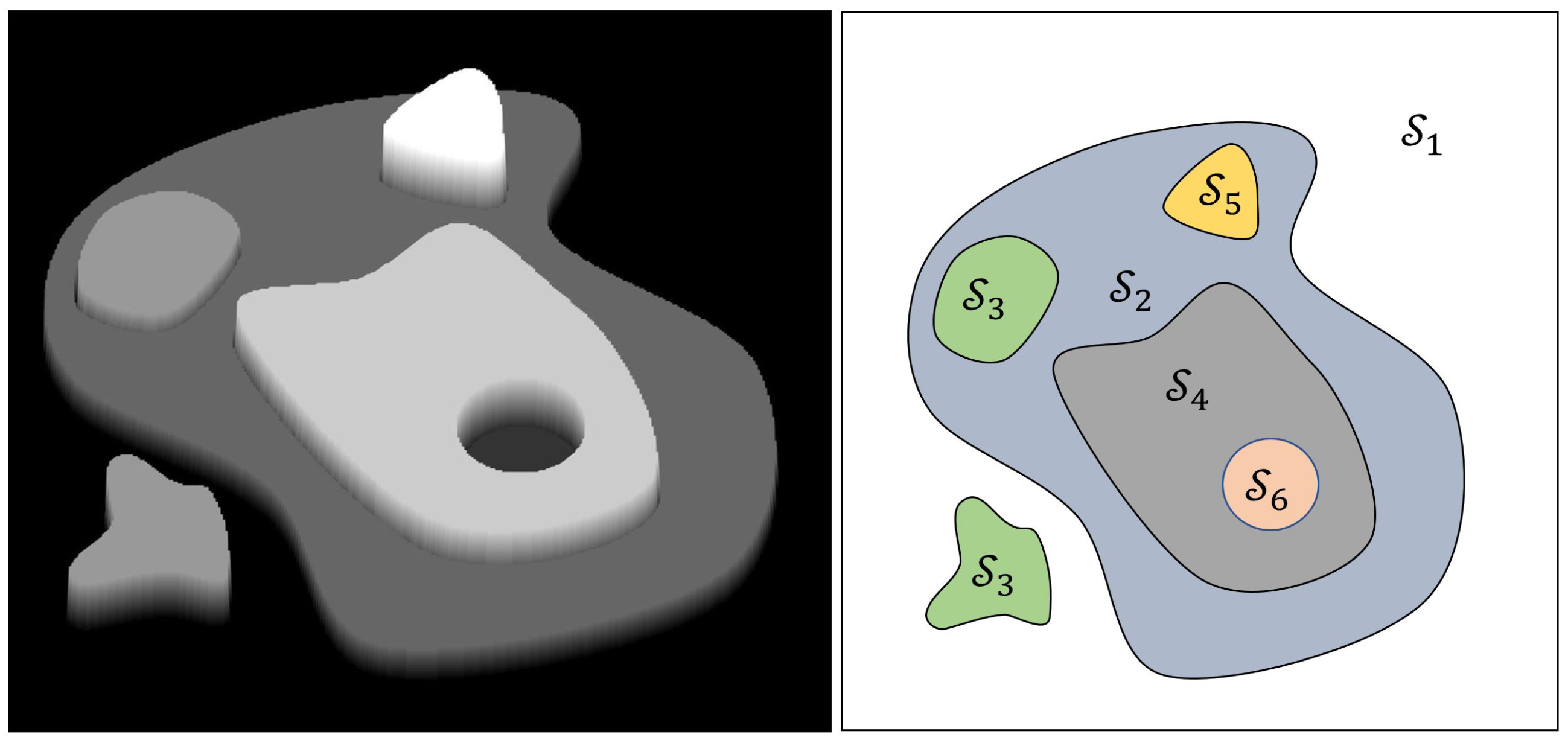

While the 2D map representing the object’s geometry and the object’s orientation are known, the position of the object in the robot’s coordinate system is unknown. Many practical cases in industry satisfy these requirements, for example all objects that are rotationally invariant. Another common case is one in which the exact orientation of the object can be provided, but not its position. We consider a scenario where the robot must find any point on the target region, whose centroid is given in the map coordinate system. Initially, we are given an estimate, expressed in the robot’s coordinate system, of a point above the target region. However, due to uncertainty in this initial position, the robot might initially contact a different region. Note that we are not specifically looking for the centroid of the target region but for any point within it.

Next, the robot moves along the z direction of the map coordinate system until it touches the surface of the object. By calculating the z coordinate of this initial contact point in the map coordinate system (see Eq. (4)), the robot can identify which of the regions has been touched. We refer to the touched region by its index i, which is determined by the measured height. The initial search region is defined as the touched region, so each point of the search region corresponds to a point in the touched region.

In the following, each vector carries a superscript that indicates whether it is expressed in the robot’s coordinate system or in the map’s coordinate system.

Initially, we estimate the touched position in the map coordinate system as the point of the touched region that is closest to the region's centroid, which ensures that the estimate is contained in that region. The algorithm then computes the displacement vector:

Next the robot moves to the next estimate of the position above the target region:

where the rotation matrix accounts for the rotation between the robot and the map coordinate systems. The robot then moves again along the z axis of the map coordinate system until it touches the surface of the object. The z coordinate of the new contact point in the map coordinate system determines the next touched region.

To refine the estimate, we update the search region by selecting all of its points that satisfy:

The updated search region contains only the points that fulfill the above condition. The next position estimate is computed as the centroid of the updated search region. As before, if the centroid is not contained within the search region, we take a random point from the search region as the estimate. This operation is repeated until the robot hits a point on the target region. We denote the iteration index by k.

In the appendix, we show that the last touched position is guaranteed to lie within the target region.

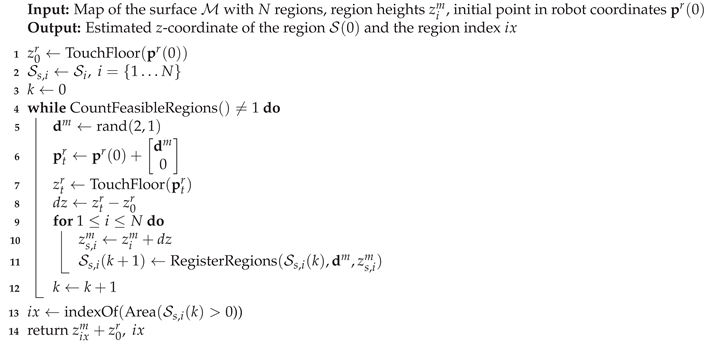

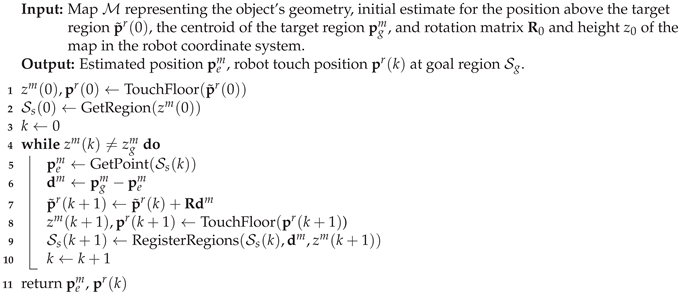

The above procedure defines an iterative algorithm outlined in Algorithm 1.

Algorithm 1: Map Registration Algorithm Using Touch Sensing

In Algorithm 1, we apply the following functions:

TouchFloor moves the robot from the initial position along the z axis of the map coordinate system until it touches the surface of the object. It also computes the z coordinate of the touch point in the map coordinate system. This calculation involves the transformation given in Eq. (4), which uses a constant that defines the z-component of the map coordinate system origin expressed in robot coordinates. Note that the x and y coordinates of the map coordinate system origin are unknown.

GetRegion returns the region index based on the measured z coordinate at the contact point.

GetPoint returns the point of the given region that is closest to the centroid of that region.

RegisterRegions returns the region composed of all points that satisfy the condition in Eq. (3).
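The iteration built from these functions can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the map is a small integer grid of region labels, the robot and map frames are aligned (identity rotation), touches outside the map are assumed to land on the base plane (label 0), and `touch` is a hypothetical callback standing in for the TouchFloor and GetRegion steps. The names `register`, `label_at`, `closest_to_centroid`, and `demo_map` are illustrative.

```python
def label_at(region_map, p):
    """Region label at cell p = (row, col); assumed 0 (base plane) outside the map."""
    r, c = p
    if 0 <= r < len(region_map) and 0 <= c < len(region_map[0]):
        return region_map[r][c]
    return 0


def closest_to_centroid(points):
    """Point of the set closest to the set's centroid (so it lies inside the set)."""
    n = len(points)
    cr = sum(p[0] for p in points) / n
    cc = sum(p[1] for p in points) / n
    # sorted() only makes tie-breaking deterministic
    return min(sorted(points), key=lambda p: (p[0] - cr) ** 2 + (p[1] - cc) ** 2)


def register(region_map, target_label, touch, max_touches=1000):
    """Search for the target region.

    touch(d) is a hypothetical callback: it moves the probe by displacement d
    (in map cells) from the first contact point and returns the touched label.
    Returns (displacement that reaches the target, number of touches).
    """
    cells = [(r, c) for r in range(len(region_map))
             for c in range(len(region_map[0]))]
    goal = closest_to_centroid(
        [p for p in cells if label_at(region_map, p) == target_label])
    first = touch((0, 0))
    if first == target_label:
        return (0, 0), 1
    # Hypotheses for where the first contact happened, in map coordinates
    search = {p for p in cells if label_at(region_map, p) == first}
    for n_touches in range(2, max_touches + 1):
        est = closest_to_centroid(search)
        d = (goal[0] - est[0], goal[1] - est[1])
        touched = touch(d)
        if touched == target_label:
            return d, n_touches
        # Keep only hypotheses consistent with the region that was touched
        search = {p for p in search
                  if label_at(region_map, (p[0] + d[0], p[1] + d[1])) == touched}
    raise RuntimeError("target region not reached")


def demo_map(size=20):
    """Toy map: base plane 0, square housing 1, small target opening 2."""
    m = [[0] * size for _ in range(size)]
    for r in range(5, 15):
        for c in range(5, 15):
            m[r][c] = 1
    for r in range(9, 11):
        for c in range(9, 11):
            m[r][c] = 2
    return m
```

In this sketch, every unsuccessful touch removes at least the current estimate from the search set, because the estimate would have landed on the target, so the loop terminates on a finite grid while the true first-contact point is never discarded.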

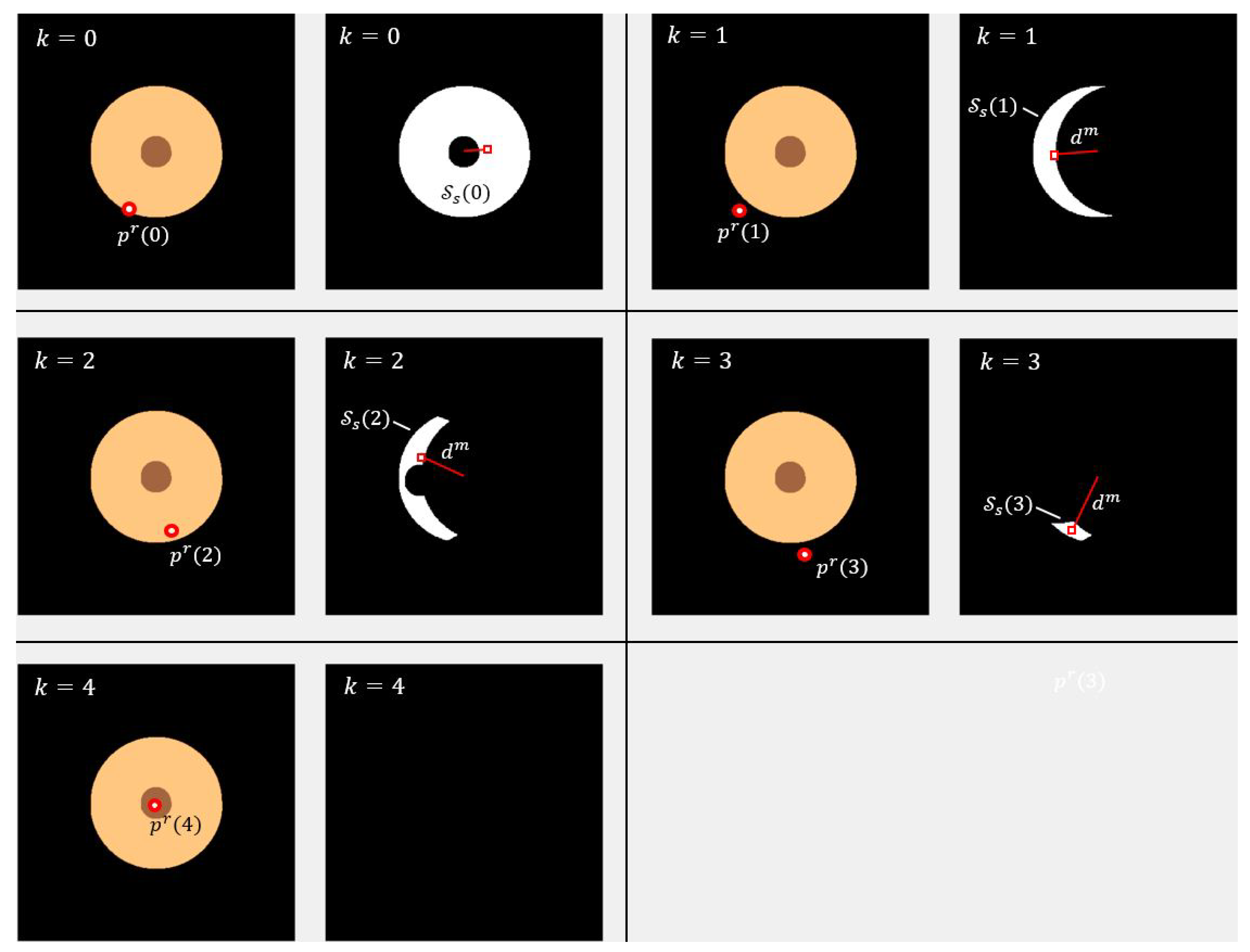

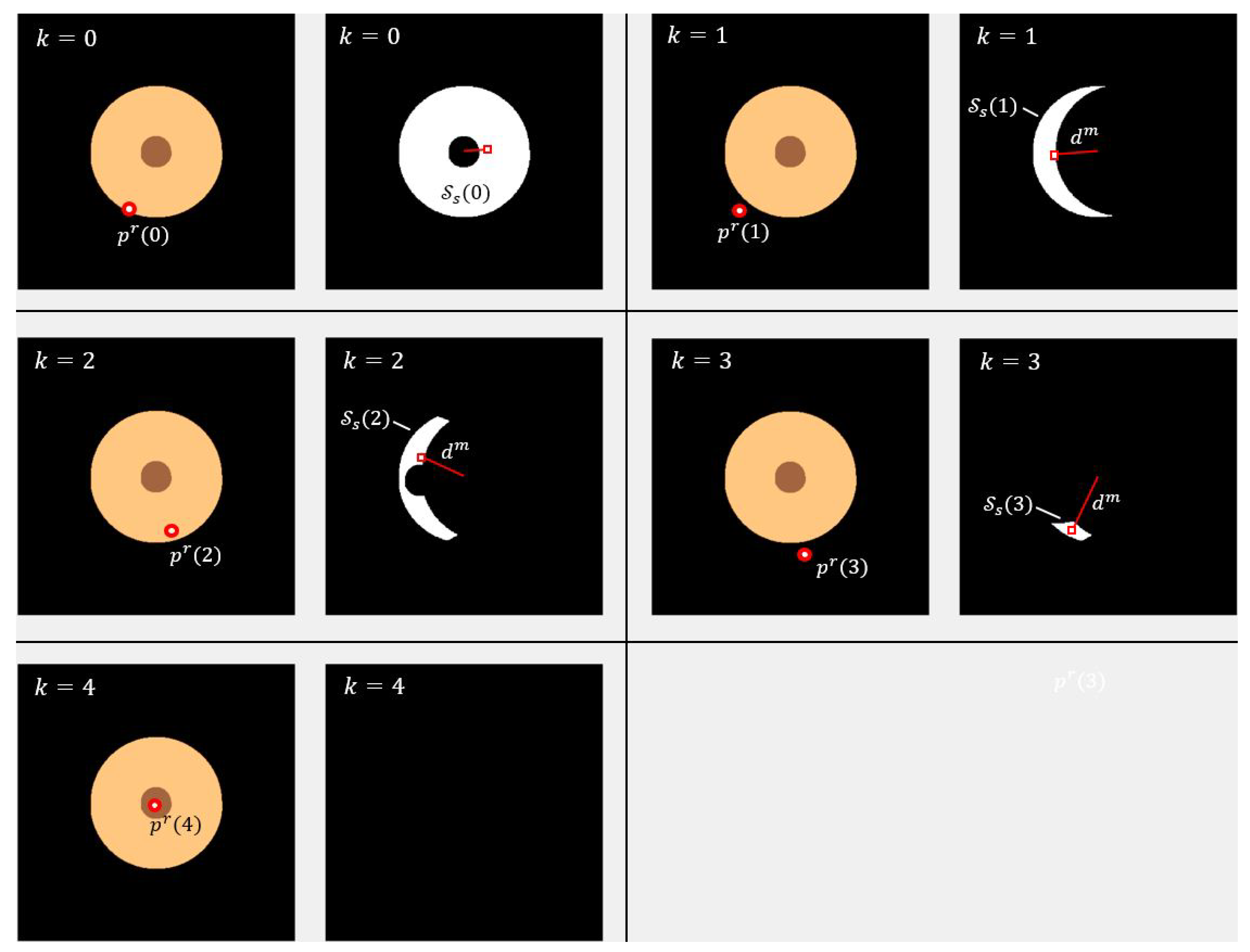

To illustrate the proposed method, we apply it to the search for the socket into which a robot should insert an audio jack plug, as shown in Figure 2. Figure 3 shows the iterative refinement process. The search area (marked in white) is gradually reduced until the estimated location is within the target region, ensuring successful insertion of the plug.

3.2. Map Registration with Unknown Object Base Plane Height

The algorithm presented in the previous section assumes that the z-coordinate of the object’s surface can be directly determined from the touch sensor’s reading. In other words, it requires prior knowledge of the height of the object’s base plane in the robot coordinate system so that each touch immediately reveals which region was contacted. However, if the exact height is unknown, the robot cannot directly ascertain which region it has touched. In such scenarios, estimating the object’s base z-coordinate (height) becomes a necessary step before proceeding with precise localization. The algorithm presented in this section overcomes this limitation by eliminating the need for prior height information, thus ensuring that the robot can still identify the contacted region.

We propose an iterative algorithm to estimate an object’s base height using a 3D map and successive touch operations. As before, we assume that the object consists of a finite number of uniform-height regions, each identified by a region index and located at a distinct height. From the 3D map, the algorithm first identifies the number of these regions, N, and their corresponding heights in the robot coordinates.

The algorithm begins by selecting an arbitrary position above the object, establishing the contact point using the TouchFloor procedure, and recording the z coordinate of the contact as the initial height measurement. At this stage, it is unclear which of the map’s regions the robot has touched. Therefore, the algorithm initializes one candidate region for each region index i, effectively treating all N regions as potential matches. In subsequent steps, the algorithm narrows down the feasible candidate regions by eliminating regions that are inconsistent with additional measurements.

The robot then touches the object at another arbitrary point, and a displacement vector in the map frame is computed as:

The new contact point yields a new height measurement. We calculate the height difference:

For each candidate region, the expected height is updated using this height difference and the height (z coordinate) of the corresponding i-th region. Additionally, each candidate region is updated by retaining only those points that satisfy the condition:

In this condition, the displaced point is tested against the set of map regions at the updated height.

This process is repeated until all but one of the candidate regions have been eliminated (i.e., their areas are reduced to zero). The remaining candidate region is then identified as the correct match for the initial contact point, and the height associated with it can be used to estimate the base z-coordinate of the object.

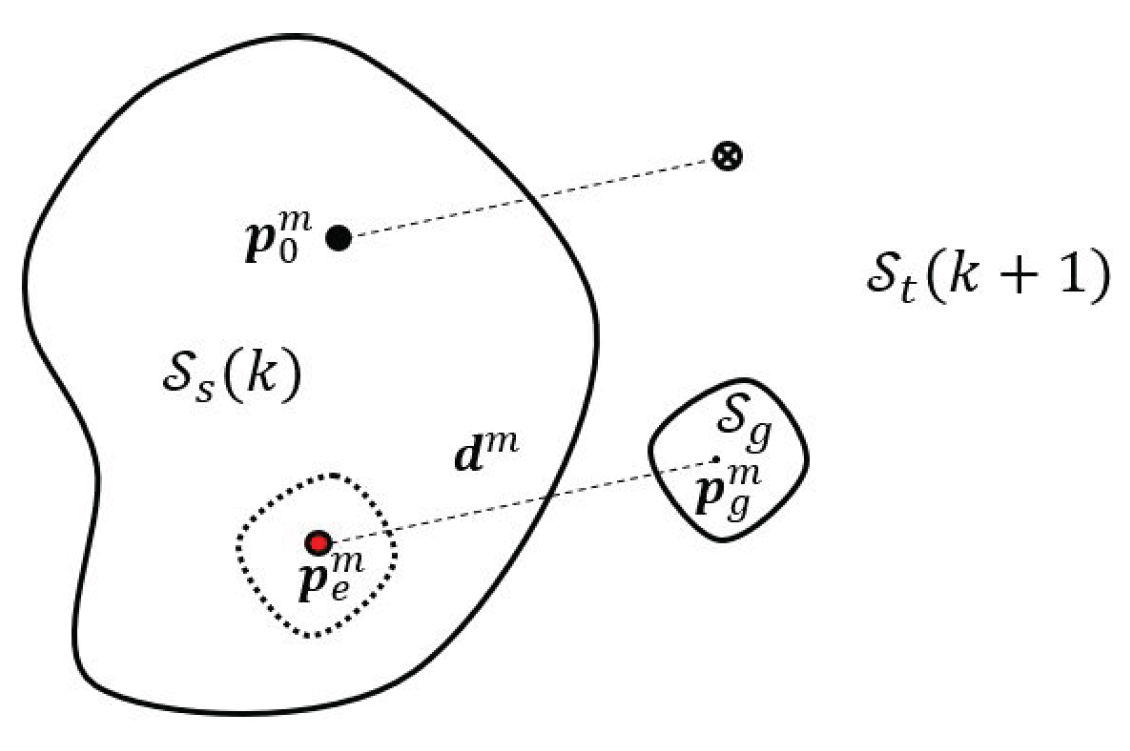

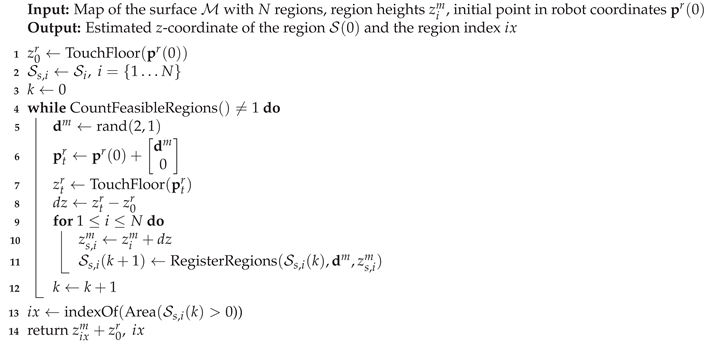

The algorithm is outlined in Algorithm 2. In addition to the functions already used in Algorithm 1, the following new functions are defined:

Area returns the area of the given region.

rand(m,n) returns an m-by-n matrix of random numbers.

CountFeasibleRegions returns the number of feasible candidate regions, i.e., regions with area greater than 0.
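The candidate-elimination idea can be sketched as follows. This is a hedged illustration rather than the authors' Algorithm 2: it assumes a grid of map heights relative to the base plane, aligned robot and map frames, a base plane at height 0 outside the map, and a hypothetical `touch` callback that returns the measured contact height in the robot frame. The function names and the displacement range are illustrative choices.

```python
import random


def height_at(height_map, p):
    """Map height at cell p; cells outside the map are assumed to be base plane."""
    r, c = p
    if 0 <= r < len(height_map) and 0 <= c < len(height_map[0]):
        return height_map[r][c]
    return 0.0


def estimate_base_height(height_map, touch, rng, max_touches=500, tol=1e-6):
    """Estimate the base-plane height in the robot frame.

    touch(d) is a hypothetical callback returning the contact height measured
    in the robot frame after moving by d (map cells) from the first contact.
    Returns (estimated base height, number of touches).
    """
    rows, cols = len(height_map), len(height_map[0])
    h0 = touch((0, 0))
    # One candidate set per distinct map height: "the first touch was here"
    candidates = {}
    for r in range(rows):
        for c in range(cols):
            candidates.setdefault(height_map[r][c], set()).add((r, c))
    n_touches = 1
    while True:
        feasible = [z for z, pts in candidates.items() if pts]
        if len(feasible) == 1:
            return h0 - feasible[0], n_touches
        if n_touches >= max_touches:
            raise RuntimeError("base height could not be resolved")
        # Arbitrary displacement, playing the role of rand(m,n) in the text
        d = (rng.randint(-(rows // 2), rows // 2),
             rng.randint(-(cols // 2), cols // 2))
        dh = touch(d) - h0
        n_touches += 1
        for z in feasible:
            # Keep points whose displaced position has the expected map height
            candidates[z] = {
                p for p in candidates[z]
                if abs(height_at(height_map, (p[0] + d[0], p[1] + d[1])) - (z + dh)) < tol
            }
```

A seeded generator such as `random.Random(0)` can be passed as `rng` to make a run reproducible. The true hypothesis is never eliminated, because its predicted height always matches the measurement, so whenever the loop returns the estimate is consistent with all touches made so far.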

Algorithm 2: Map registration with unknown object base plane height using touch sensing

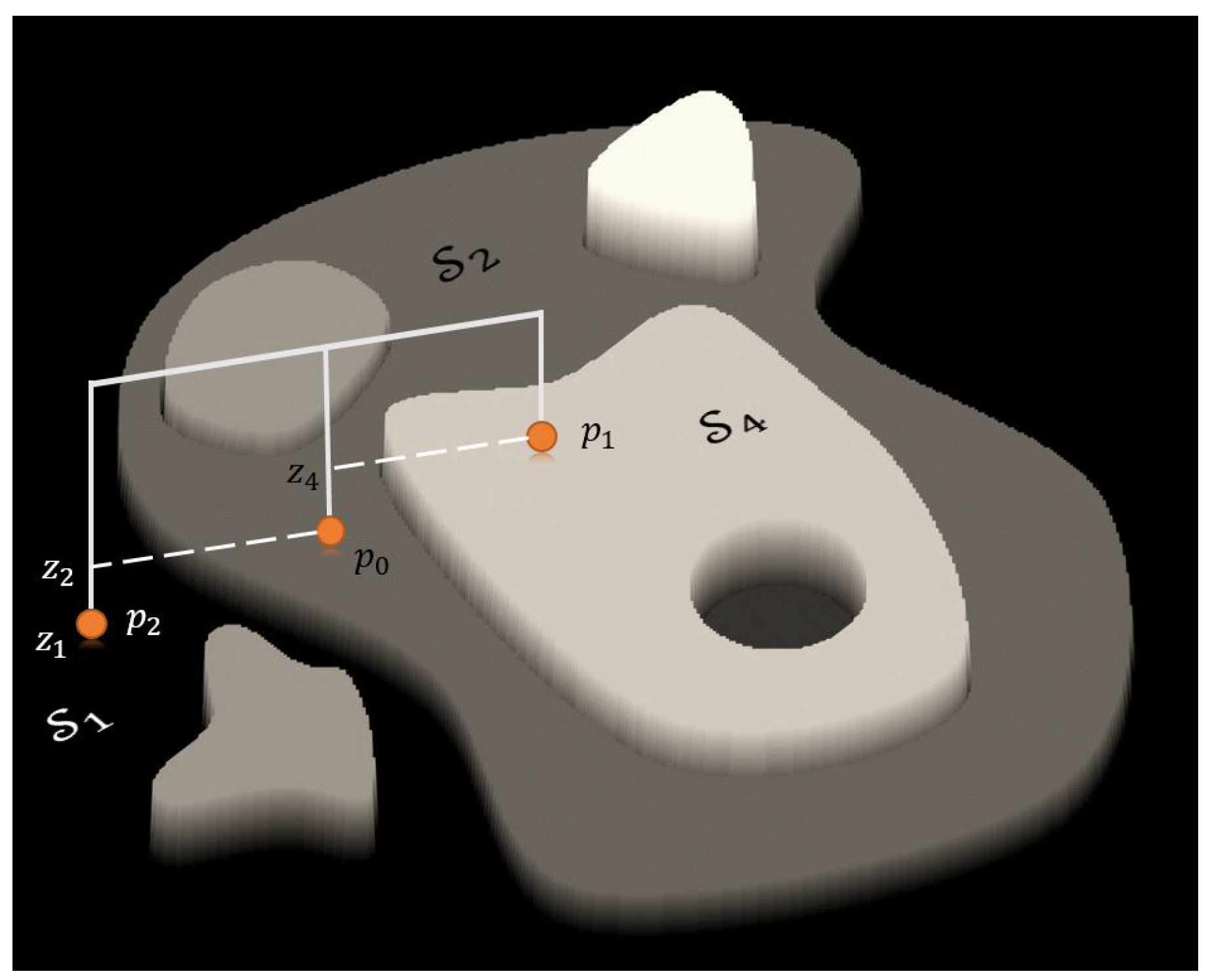

The underlying intuition behind this approach is that once the robot touches all planes constituting the object, we can uniquely determine the identity of each plane. In practice, the identity of a certain plane can often be determined by touching only some of the planes. By tracking the sequence of detected planes and their relative displacements, the algorithm ensures reliable plane identification. This process is illustrated in Figure 4.

By estimating the z-coordinate before searching for the x- and y-coordinates (as described in Section 3.1), the algorithm significantly reduces the initial search space, minimizing computational complexity. Experimental results in Section 4 show that this additional step of determining the z coordinate of the object’s base plane only marginally increases the total number of search iterations.

3.3. Probabilistic Map Registration

In an idealized, noise-free environment, deterministic map registration can achieve arbitrarily high accuracy. However, real-world robotic assembly is affected by measurement noise, actuation errors, and map inaccuracies. These factors can lead to inconsistencies in which the search area is prematurely reduced to zero before reaching the goal. To address these challenges, we propose a probabilistic extension of the deterministic approach.

Unlike the deterministic approach, where the map is partitioned into regions, we now model the likelihood that a point belongs to the search region. Let P denote the probability that a point belongs to the search region at the k-th iteration. Rather than assuming that the position estimate lies at the center of the search region, the algorithm selects the point with the highest probability P.

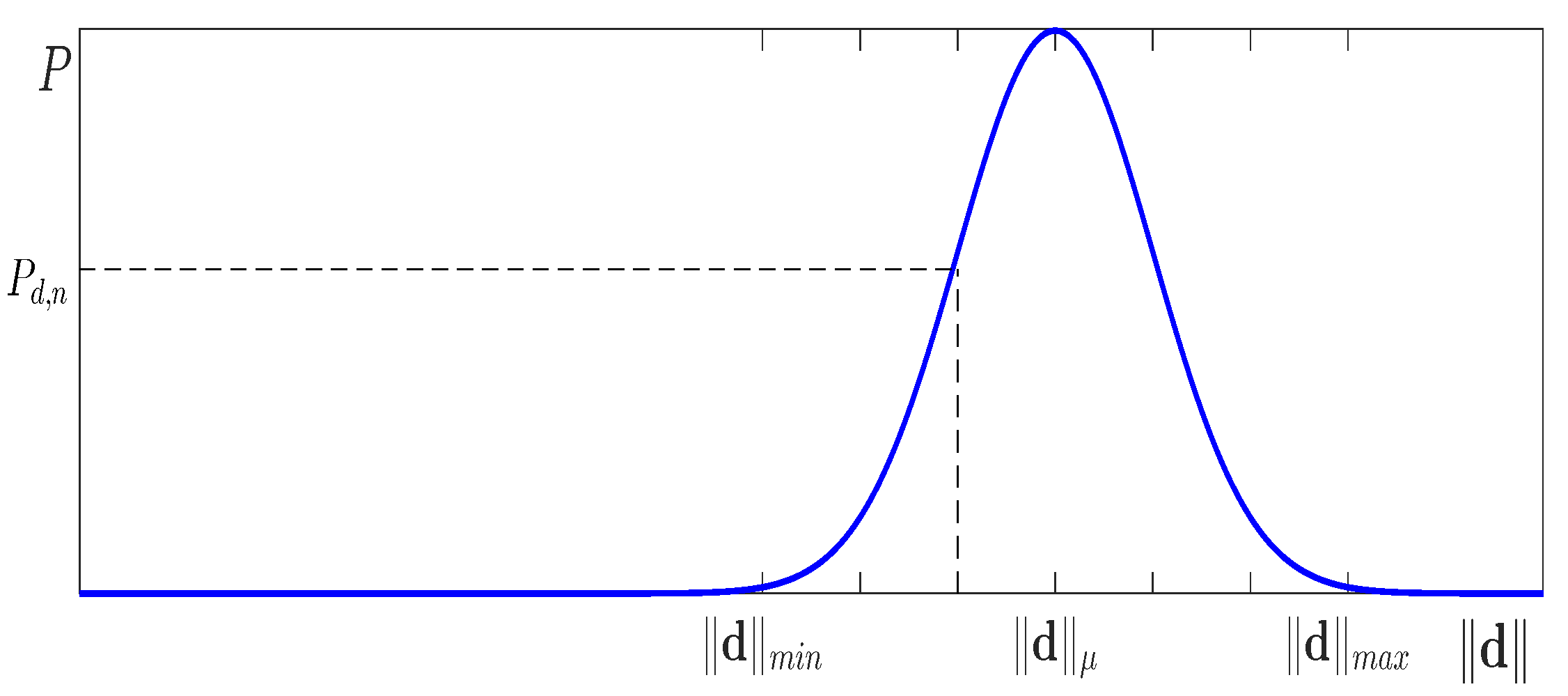

In the deterministic map registration algorithm, the displacement vector is computed according to Eq. (1). In the probabilistic framework, we instead model the displacement length as a random variable with a continuous probability distribution. It is sampled from a bounded range assuming a normal distribution centered on the displacement length calculated with Eq. (1) (see Figure 5). The spread parameter of this distribution models the uncertainty in the robot’s position and map inaccuracies by controlling the spread of the Gaussian distribution used to sample the displacement length. Intuitively, it defines the width of this distribution, determining how broadly the search region is updated around the expected displacement. Typically, it is chosen such that the Gaussian covers approximately 20–30% of the nominal displacement vector length. At this scale, the Gaussian falls to about 5% of its peak height at the distribution’s edges, ensuring that the probabilistic update accounts for realistic positional errors without overly broadening the search space. This setting balances robustness against robot and map uncertainties with the efficiency of the search, and while the exact choice can be tuned experimentally, the described range provides a principled guideline.

The search region is updated accordingly. It is obtained by marginalizing over all possible displacement lengths. That is, instead of using a single displacement vector, we integrate the effect of sampled displacements weighted by their probability.

For computational reasons, the sampling range of the displacement length is divided into a finite number of intervals, each with an associated probability, subject to the condition:

In each k-th search step, for each of the discretized lengths, we obtain a region using Eq. (3), following the same procedure as in the deterministic case. This way, we obtain one region per discretized length and compute:

The probabilities are updated recursively as:

Similar as before in the deterministic approach, the algorithm narrows the search region

until the robot hits the goal region

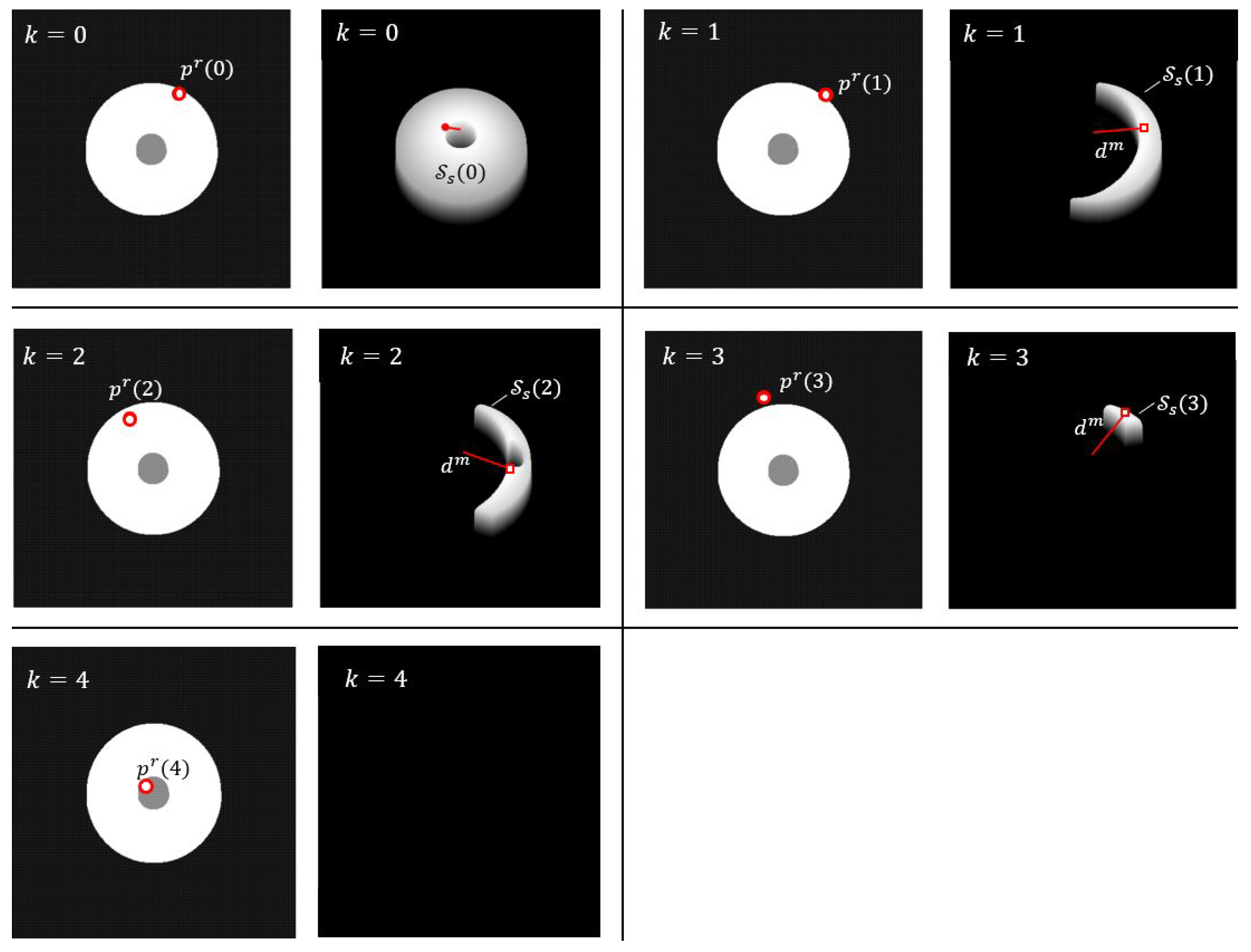

.
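To make the discretization step more concrete, the following Python sketch builds Gaussian weights over a range of displacement lengths and applies one recursive update. It rests on several assumptions that are not taken from the paper: the "coverage" is interpreted as the total width of the sampling range relative to the nominal length, the spread is derived from the requirement that the Gaussian drops to 5% of its peak at the range edges, the weights are normalized to sum to one, and the update multiplies each point's prior probability by the total weight of the regions that contain it. All function and parameter names are illustrative.

```python
import math


def displacement_weights(d_nominal, n_bins=20, coverage=0.25, edge_ratio=0.05):
    """Discretize a Gaussian over displacement lengths around d_nominal.

    coverage: assumed total width of the sampling range as a fraction of d_nominal.
    edge_ratio: assumed ratio of the Gaussian at the range edges to its peak.
    Returns (bin-centre lengths, normalized weights, sigma).
    """
    half_width = 0.5 * coverage * d_nominal
    # exp(-x^2 / (2 sigma^2)) = edge_ratio at x = half_width
    sigma = half_width / math.sqrt(-2.0 * math.log(edge_ratio))
    step = 2.0 * half_width / n_bins
    lengths = [d_nominal - half_width + (i + 0.5) * step for i in range(n_bins)]
    raw = [math.exp(-0.5 * ((length - d_nominal) / sigma) ** 2) for length in lengths]
    total = sum(raw)
    return lengths, [w / total for w in raw], sigma


def update_probabilities(prior, regions, weights):
    """One assumed recursive update of per-point probabilities.

    prior: dict mapping point -> probability.
    regions: one set of consistent points per discretized length.
    weights: probability of each discretized length.
    """
    posterior = {}
    for point, p in prior.items():
        support = sum(w for w, region in zip(weights, regions) if point in region)
        posterior[point] = p * support
    total = sum(posterior.values())
    if total == 0.0:
        # Assumption: keep the prior rather than collapse the search region
        return dict(prior)
    return {point: p / total for point, p in posterior.items()}
```

Under these assumptions, a point that is inconsistent with the nominal displacement but consistent with a slightly longer or shorter one keeps a reduced, non-zero probability, which is the behaviour that prevents the search region from vanishing prematurely.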

Figure 6 illustrates the probabilistic map registration process in an audio pin insertion task, similar to the deterministic approach in Section 3.1.

4. Experimental Results

In this section, we experimentally validate the performance of the proposed algorithm and compare it with a random search strategy. For all experiments, we used a 7-DOF Franka Research 3 robot controlled by an enhanced Cartesian impedance control law. The applied control law is detailed in [27] and [28]. Enhancements to the original control law include bidirectional friction compensation, which improved positional accuracy for small displacements with low stiffness. The touch motion was implemented by setting the velocity command in the direction of the surface normal of the object and monitoring the force in the same direction. Motion was halted whenever the force exceeded a predefined threshold, and impact forces were mitigated by setting low stiffness in the impedance control law in the direction of the surface normal. A touching probe with a known geometry was attached to the tip of the robot, which allowed us to determine the height of the touched point in the robot base coordinate system.
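The guarded touch motion can be illustrated with a small simulation. This is a sketch under assumptions, not the controller from [27] or [28]: the contact is modelled as a linear spring, the probe descends in fixed steps, and `read_force` is a hypothetical sensor callback. The speed, time step, threshold, and stiffness values are placeholders chosen only for the example.

```python
def touch_floor(read_force, z_start, z_min, speed=0.01, dt=0.001, force_threshold=2.0):
    """Descend from z_start until the measured force exceeds the threshold.

    read_force(z) is a hypothetical callback returning the normal force [N]
    at probe height z [m]. Returns the height at which motion is halted,
    or None if z_min is reached without contact.
    """
    z = z_start
    step = speed * dt  # distance travelled per control cycle
    while z > z_min:
        if read_force(z) > force_threshold:
            return z
        z -= step
    return None


def make_spring_contact(surface_z, stiffness=2000.0):
    """Assumed contact model: zero force above the surface, linear spring below."""
    def read_force(z):
        return stiffness * max(0.0, surface_z - z)
    return read_force
```

In this simplified spring model, the halting height lies below the true surface by roughly the force threshold divided by the contact stiffness, so a lower threshold or a stiffer contact reduces the height bias at the cost of higher sensitivity to force noise.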

4.1. Inserting the Pin into the Socket

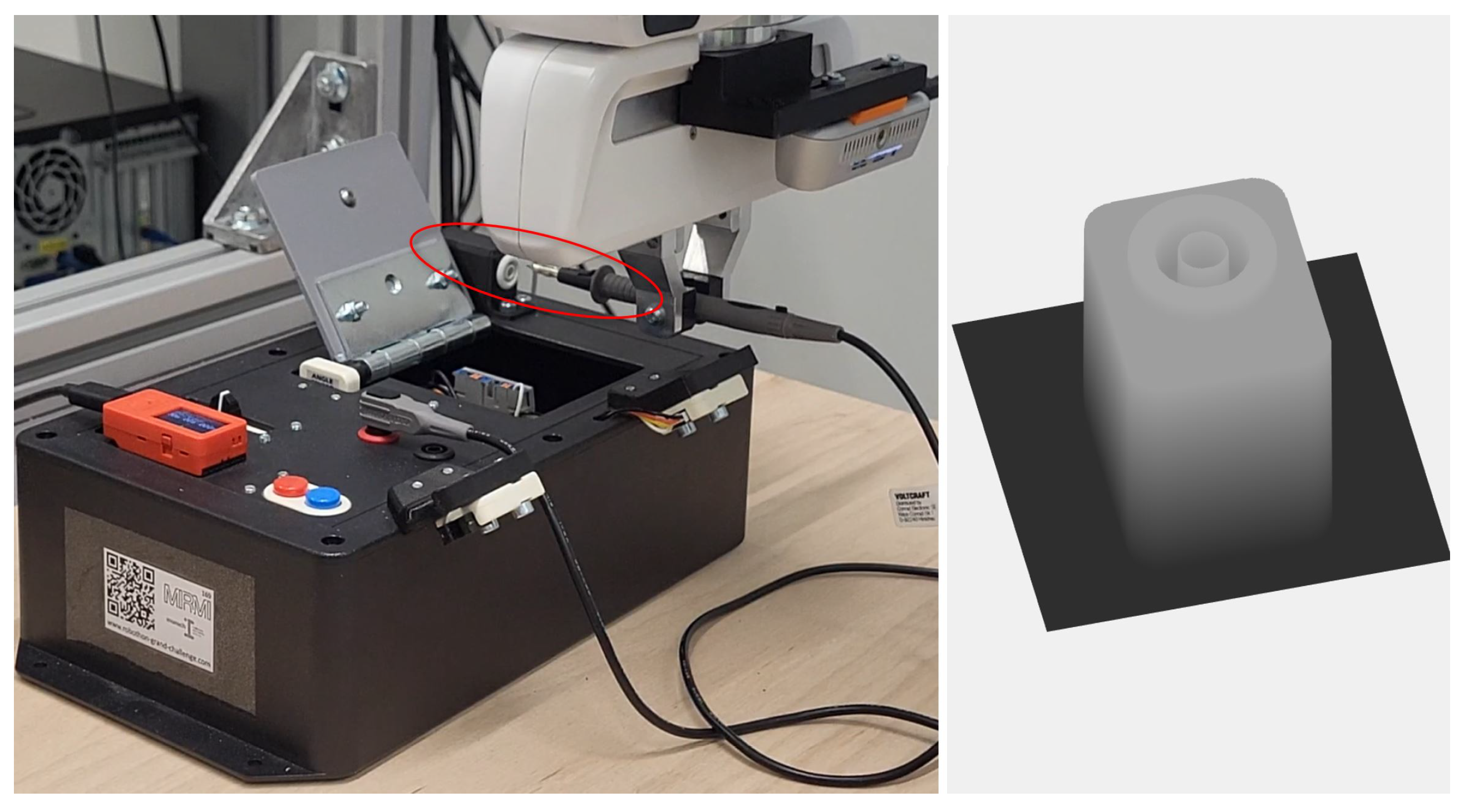

To validate the efficiency and robustness of our algorithm, we first replicated the experiment of inserting an audio pin into a socket, as described in Section 3.1. The experimental setup is depicted in Figure 7, where the socket was positioned on a table with its normal aligned along the z-axis. The socket was installed within a housing of 2 cm in diameter, with a socket hole measuring

mm.

The search area was confined to a cm square, and the map was encoded as a matrix. Therefore, each point in the map corresponds to 0.1 mm. Since the coordinate frames of the map and the robot were aligned, the rotation matrix was set to the identity matrix. The robot’s initial search position in robot coordinates was randomly selected within the defined search area.

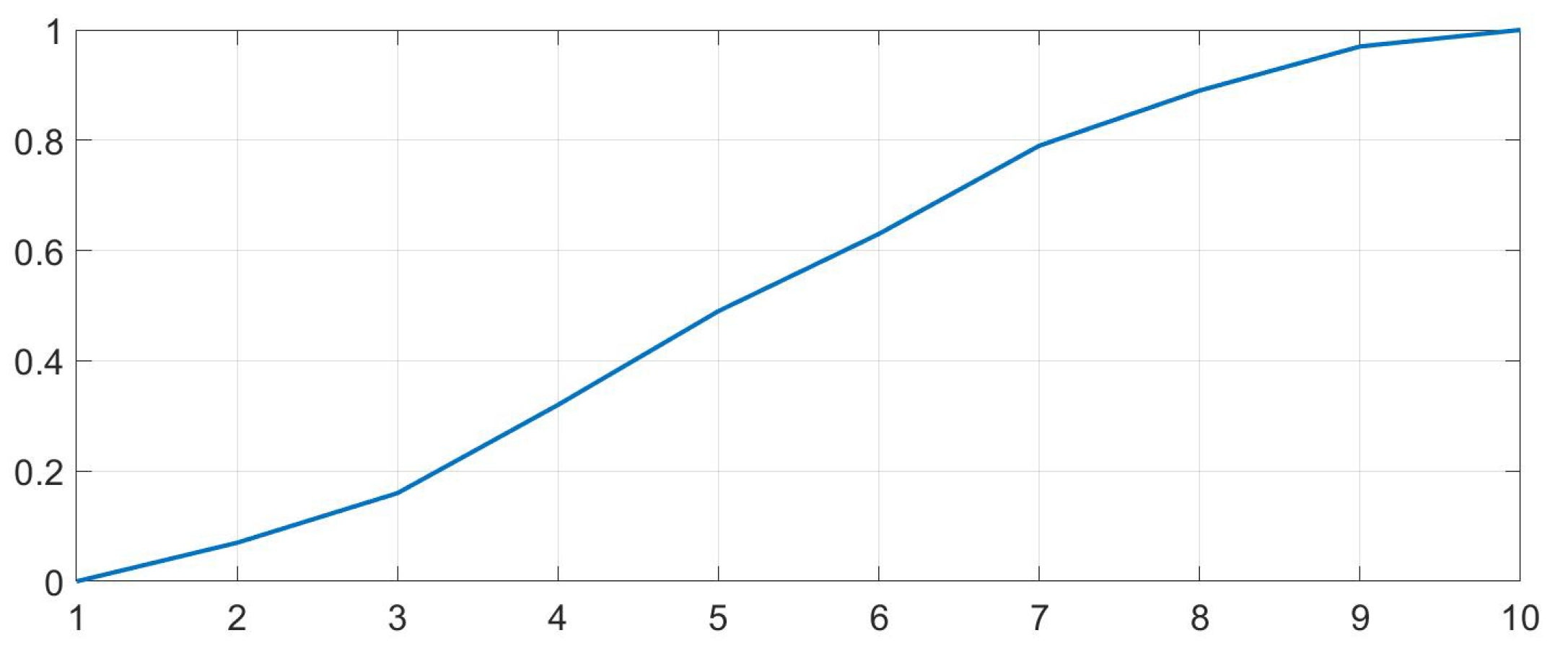

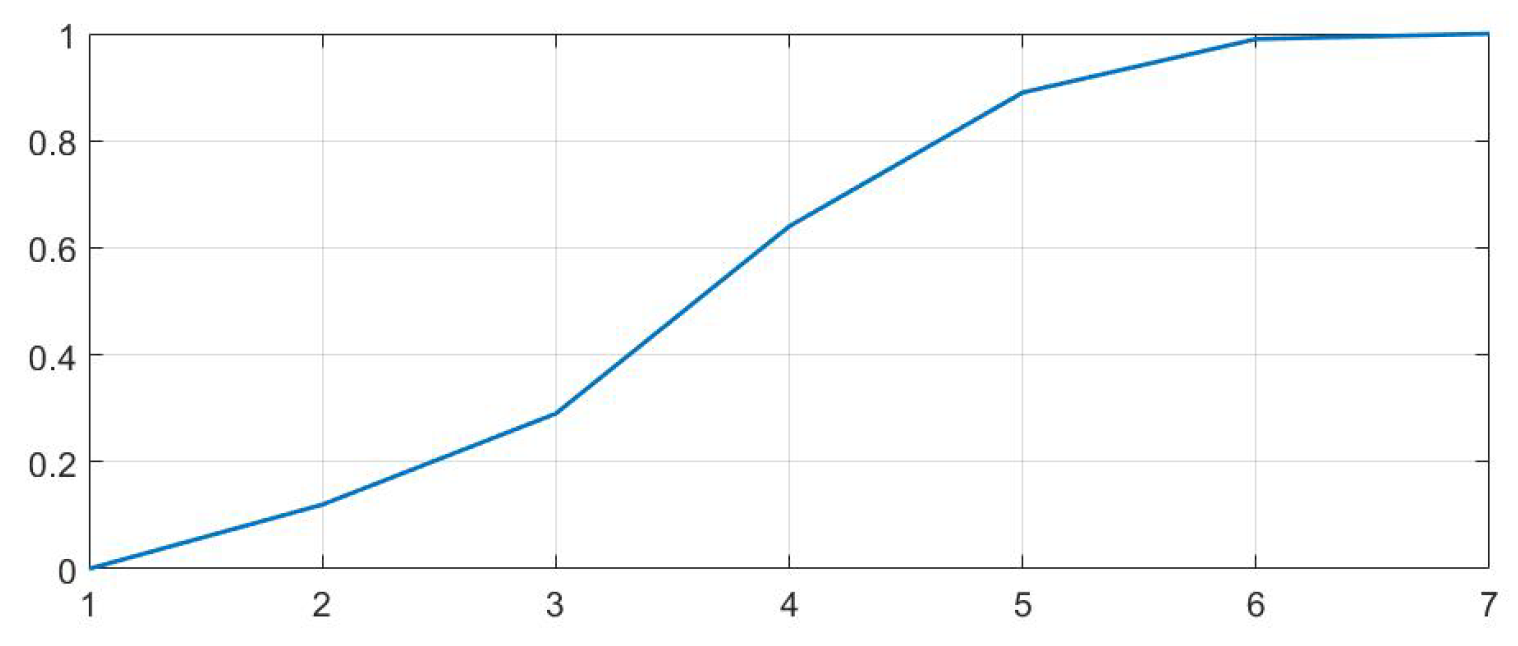

In a set of 100 experimental trials, the algorithm successfully located the socket opening within one to ten attempts. Figure 8 illustrates the convergence behavior and standard deviation of the search process. In this experiment, the results are virtually identical when using deterministic or probabilistic search.

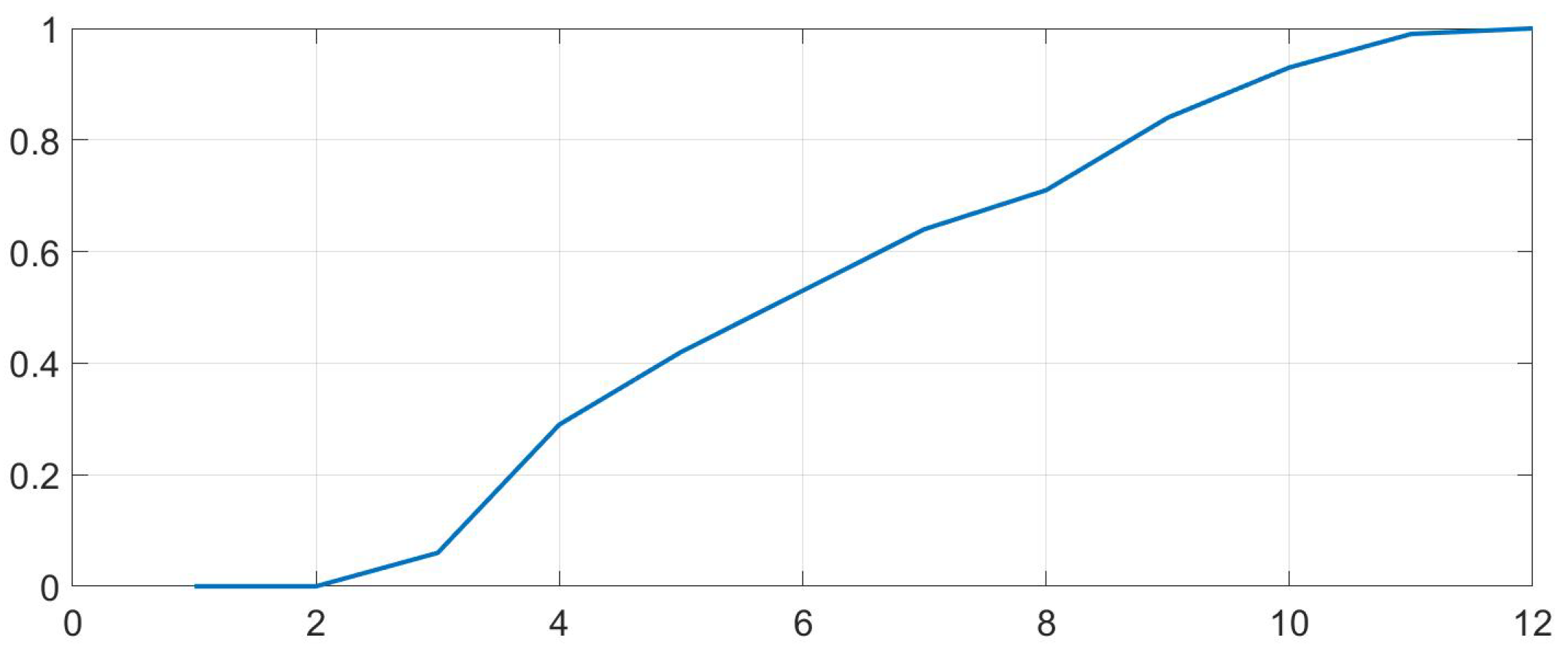

To further evaluate the algorithm’s performance under more challenging conditions, we considered a scenario where the object’s height relative to the robot is unknown. In this case, the height measurement alone is not sufficient to identify which of the map partitions has been touched. Therefore, the algorithm first estimates the correct z-position before proceeding with the x- and y-coordinate search, following the procedures outlined in Section 3.2 and Section 3.1, respectively. The convergence characteristics and standard deviation of this extended search process are illustrated in Figure 9.

A comparison of Figure 8 and Figure 9 reveals that incorporating the additional z-coordinate search increases the maximum number of attempts by only two, while the average number of attempts increases marginally. This demonstrates that the added dimensional complexity does not significantly degrade the efficiency of the search algorithm.

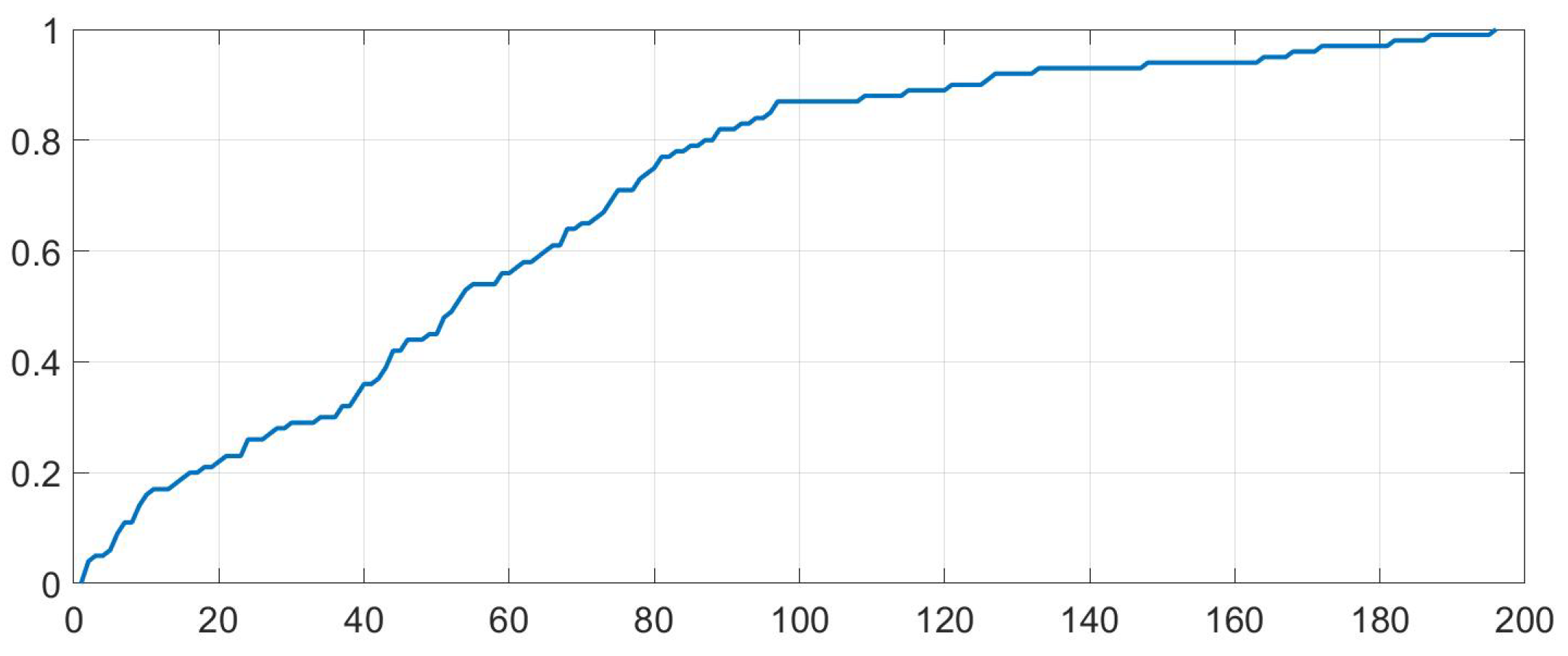

As a benchmark, we conducted an additional 100 trials using a purely random search strategy within a

cm grid with a

mm resolution. To ensure fairness, no points were tested more than once.

Figure 10 presents the convergence and standard deviation of the random search.
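For reference, a random search without repeated points can be sketched as below. The grid size and the set of goal cells in any call are hypothetical, since this is only an illustration of the benchmark strategy and not the experimental code. The closed-form expectation `(n_cells + 1) / (n_goal + 1)` is the standard result for the position of the first success when drawing without replacement.

```python
import random


def random_search(n_cells, goal_cells, rng):
    """Probe grid cells in random order without repetition.

    Returns the number of attempts until a goal cell is hit,
    or None if there is no goal cell in the grid.
    """
    order = list(range(n_cells))
    rng.shuffle(order)
    for attempts, cell in enumerate(order, start=1):
        if cell in goal_cells:
            return attempts
    return None


def expected_attempts(n_cells, n_goal):
    """Expected attempts until the first hit when sampling without replacement."""
    return (n_cells + 1) / (n_goal + 1)
```

The expectation grows linearly with the number of grid cells for a fixed goal size, which gives a simple baseline against which the number of touches of a map-based search can be judged.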

The results clearly highlight the superiority of our proposed search algorithm compared to random search. The algorithm demonstrates an average convergence speed more than six times faster than random search and exhibits significantly lower variance. In worst-case scenarios, our approach achieves over twenty times faster convergence, further validating its efficiency and reliability for real-world robotic assembly tasks.

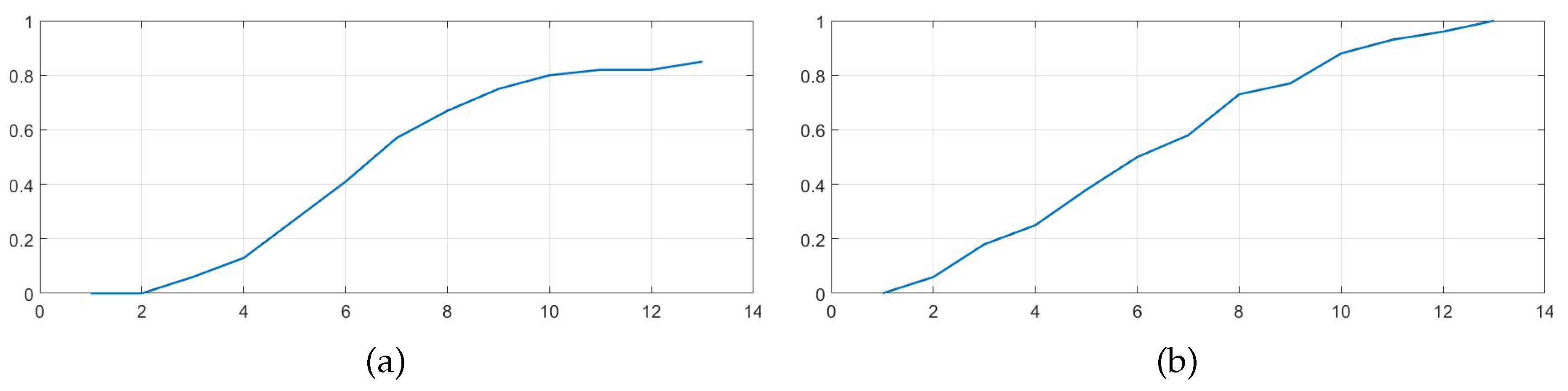

In our final experiment, we evaluated the performance advantages of the probabilistic search algorithm under conditions of imprecise object mapping and positional inaccuracies of the robot. To simulate these uncertainties, we increased the distance parameter in Eq. (2) by a factor of 1.2, while retaining its original value in the registration process described by Eq. (3). We then conducted 100 experimental trials of inserting an audio pin into a socket, comparing the success rates and convergence behavior of the deterministic and probabilistic search algorithms. The deterministic algorithm successfully inserted the pin into the socket in 85 out of 100 attempts, whereas the probabilistic algorithm achieved a success rate of 100 out of 100. Parameters

and

were set to 20. These results, presented in Figure 11, clearly demonstrate the superiority of the probabilistic search algorithm in noisy environments, highlighting its robustness in handling uncertainties.

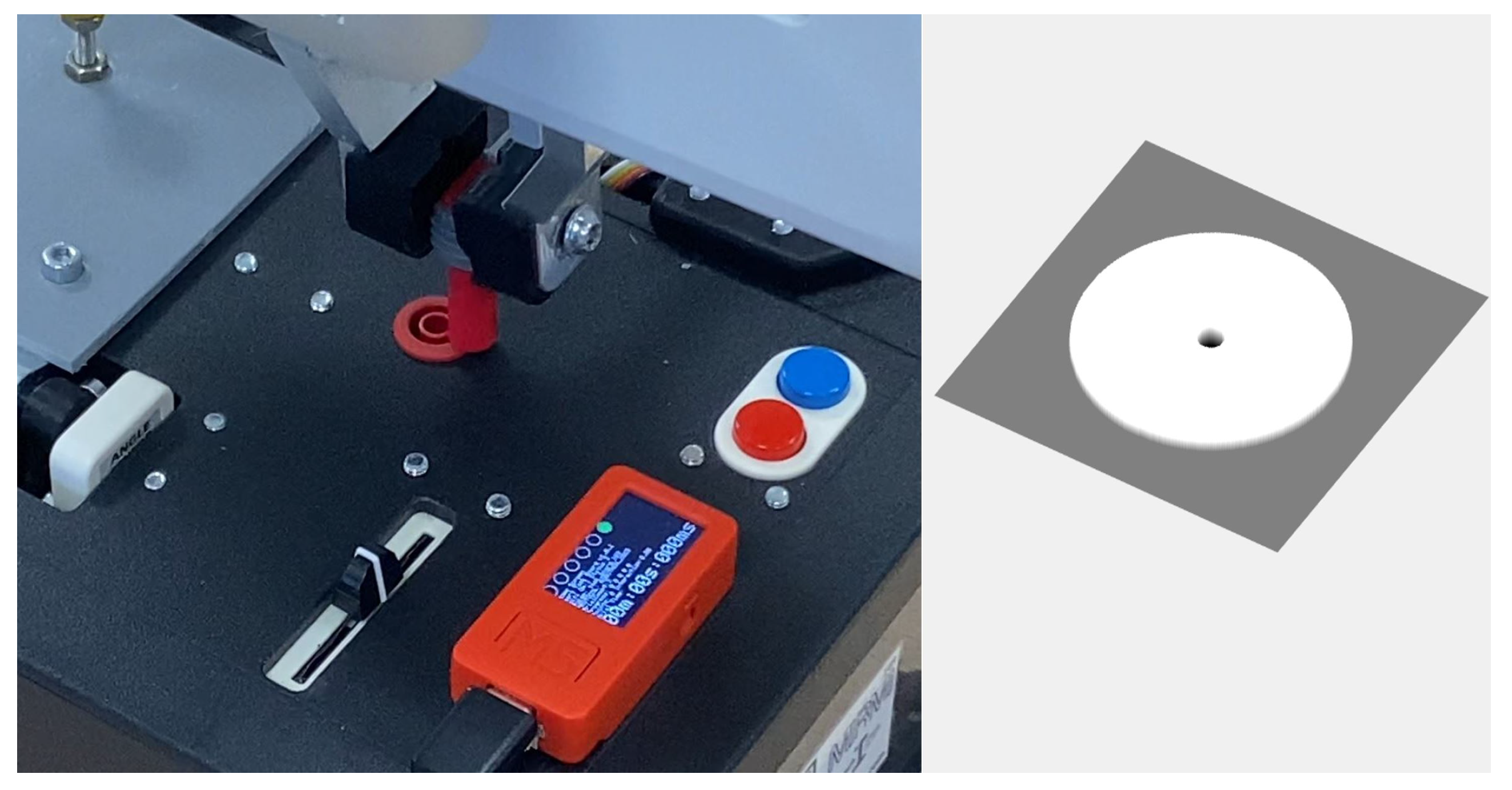

4.2. Inserting the Task Board Probe into the Socket

The subsequent experiment pertains to the Task Board, an internet-connected device designed to assess real-world robot manipulation skills [29]. Following the trial protocol, one of the operations involves extracting a probe from its socket, measuring the probe’s voltage level, wrapping the cable, and then stowing the probe. The last operation often fails due to factors such as incomplete grasp of the probe, significant movements during manipulation, environmental contact with the probe, and the effects of pulling the probe cable. As part of the euRobin project, numerous Task Board manipulation solutions employing both in-hand and overhead cameras were introduced. However, these camera placements are inadequate for monitoring the probe-stowing operation. Consequently, an alternative solution utilizing touch detection was implemented for this purpose.

Initially, a 400 × 400 map with depth information of the socket housing was provided, as depicted in Figure 12. In this case, the insertion is along the robot’s x-axis, and the rotation matrix was chosen accordingly. Each unit represented 0.1 mm in robot coordinates, with the socket hole having a diameter of 4 mm. Following the protocol of previous experiments, 100 trials of inserting the probe into the socket were performed, introducing random displacements of the starting point within the search area. In all trials, the robot successfully inserted the probe into the socket within two to six attempts. The convergence and standard deviation of the search algorithm for this scenario are shown in Figure 13.

As demonstrated, the algorithm identified the target more quickly than in the previous example. This increased efficiency is attributed to the more complex environment, which provides additional information about the location during exploration.

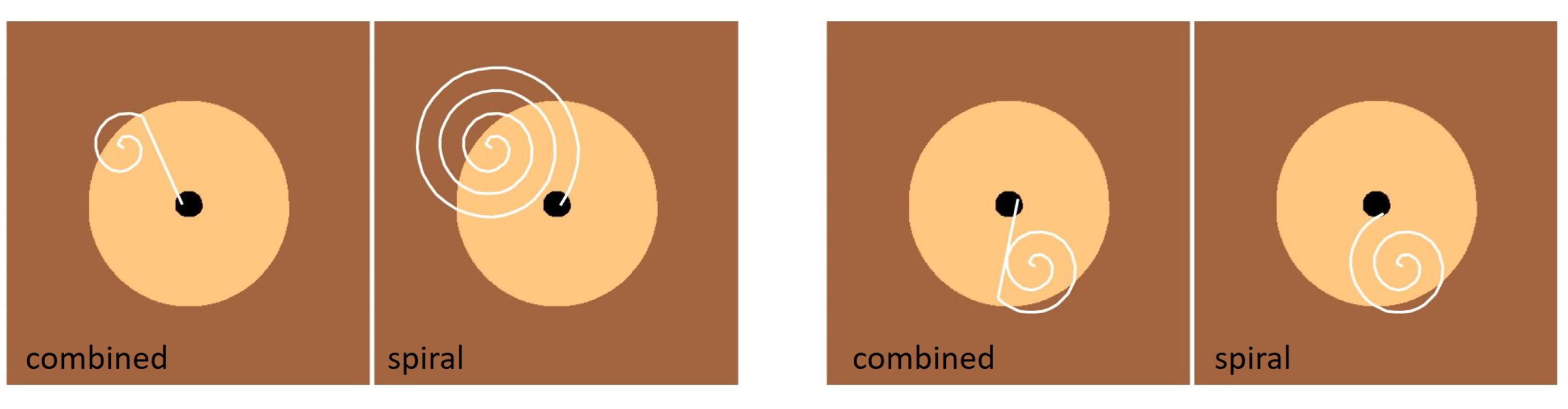

4.3. Inserting the Task Board Connector into the Socket with Continuous Search

In the previous examples, we evaluated the proposed search algorithm on objects with top surfaces that were not sufficiently smooth to allow for continuous trajectory-based search. However, the proposed procedure is also applicable and efficient in cases where a continuous trajectory can be employed to systematically sweep a designated search area. To demonstrate this capability, we again utilize the Task Board, this time focusing on the insertion of the termination connector of the test probe, as shown in Figure 14.

The search procedure is initiated using a spiral search strategy, where the trajectory is continuously updated at each sampling interval according to the following equation:

The parameters of this trajectory are the initial search position, the radial increment per step, and the angular frequency governing the spiral motion. The radial increment and the angular frequency must be carefully tuned to ensure that the generated trajectory sufficiently covers the search area and reliably intersects the goal region from any starting position.
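A spiral of this kind can be sketched as follows. The exact trajectory equation is not reproduced here, so the code assumes an Archimedean spiral in which the radius grows by a fixed increment per sampling step and the angle advances with a constant angular frequency. The function and parameter names are illustrative.

```python
import math


def spiral_point(p0, k, dt, radial_increment, omega):
    """Commanded (x, y) position at sampling step k of an assumed Archimedean spiral.

    p0: initial search position (x, y).
    dt: sampling interval [s].
    radial_increment: radius growth per step.
    omega: angular frequency [rad/s].
    """
    radius = radial_increment * k
    angle = omega * k * dt
    return (p0[0] + radius * math.cos(angle), p0[1] + radius * math.sin(angle))


def radial_pitch(dt, radial_increment, omega):
    """Radial distance between two consecutive turns of the spiral."""
    steps_per_turn = 2.0 * math.pi / (omega * dt)
    return radial_increment * steps_per_turn
```

Under this assumed form, the tuning requirement can be read off the pitch: if the distance between consecutive turns exceeds the width of the goal region, the trajectory may pass around the goal without intersecting it.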

During the spiral search, the robot applies a controlled force in the z-direction while maintaining compliance along this axis. This allows it to smoothly traverse the surface and conform to any variations in height. When the probe encounters the socket opening, it slides into place, marking the successful termination of the search. Further details on controlling the robot’s stiffness and force at the tool center point can be found in [28].

To further improve the search efficiency, we integrate the spiral search with the map registration algorithm introduced in Section 3.1. First, we construct an appropriate model of the socket. Given that the plug is a cylinder with a radius of 4 mm, we account for its insertion by increasing the socket’s radius accordingly. Additionally, the total radius of the socket hole is adjusted to accommodate the insertion tolerance. For simplification, we model the plug as a point mass while ensuring that its physical constraints within the socket are maintained (see Figure 14, right). The map registration algorithm runs concurrently with the spiral search, refining the position estimate dynamically. Specifically, an update is triggered whenever the distance between two consecutive points exceeds a predefined threshold:

The algorithm continuously tracks the area of the current search region, which contains the initial search point. If the area of the search region shrinks below the area of the goal region (i.e., the required region for successful insertion), the next command position is determined using Eq. (2). In this experiment, we applied the probabilistic map registration algorithm. This adaptive refinement significantly enhances search efficiency.

The advantages of the combined search approach are illustrated in Figure 15, which compares the performance of the combined algorithm with the standard spiral search for two different initial positions. In both cases, the combined algorithm exhibited faster convergence to the goal region. However, the efficiency of the combined approach depends on the amount of information gained about different regions during the search. If the robot does not encounter new regions while searching, the combined algorithm performs similarly to the standard spiral search. Consequently, when the initial position is close to the goal region, there is no performance difference between the two methods. On the other hand, the spiral search requires precise tuning of its free parameters to complete the search successfully. In contrast, the proposed combined search algorithm is successful even with poorly set spiral search parameters.

4.4. Summary of Experimental Results

To provide a concise overview of the method’s performance across different use cases, we summarize the key experimental results in Table 1. The table reports the number of trials, success rate, average number of attempts, standard deviation, average search time, and qualitative notes on search characteristics. This summary highlights the efficiency and robustness of the proposed method across a variety of practical scenarios, including both discrete and continuous search strategies, and under both ideal and noisy conditions.

We observe that across all tested scenarios, the proposed algorithm performs consistently and robustly, with probabilistic extensions offering added resilience under uncertainty. The combined continuous and probabilistic method further improves convergence efficiency in smooth surface conditions.

All experimental use cases are additionally described in the attached videos, where the exploration of the algorithm and the process of evaluating the starting point can be observed. The MATLAB source code of the registration algorithms in the simulated environment and the videos are available via repo.ijs.si/nemec/3d-object-pose-detection-using-active-touch-sensing.

5. Conclusion

In this study, we introduced a novel algorithm for locating openings in peg-in-hole assembly tasks using sparse tactile feedback. Building upon principles from NBT techniques, particle filters, iterative tactile probing, and active hypothesis testing, the method leverages prior geometric knowledge of the target object to enable efficient search in environments with limited sensory data. Our experimental results demonstrate two key insights: (1) the algorithm achieves rapid convergence, particularly in complex environments, and (2) environmental complexity paradoxically enhances search efficiency by providing richer tactile cues that accelerate hypothesis elimination. This phenomenon arises because intricate geometries introduce distinct contact signatures, enabling the algorithm to discard incorrect hypotheses faster than in simpler, less informative settings.

The core algorithm, designed for 2D localization, was extended to 3D through innovative hypothesis confirmation and rejection protocols. By decoupling positional and orientational search dimensions, our 3D implementation avoids the curse of dimensionality, achieving comparable computational complexity to the 2D case while improving robustness. Furthermore, we developed a probabilistic framework to address real-world challenges such as sensor noise and imperfect prior maps to maintain reliability under practical conditions. The probabilistic algorithm has demonstrated much greater resilience to noisy data and environmental uncertainty than the deterministic approach. Apart from its higher computational demands, it exhibits no drawbacks relative to the deterministic algorithm. The method’s compatibility with established time-continuous search techniques was also demonstrated, enabling hybrid strategies that combine the precision of tactile search with the efficiency of motion-planning approaches.

The proposed algorithm is specifically designed for objects that contain multiple parallel surfaces at distinct heights. This design choice reflects common constraints in many real-world tasks, particularly in industrial and domestic assembly settings where such geometries are prevalent (e.g., sockets, ports, jigs, or fixture-based systems). This case is therefore the most relevant one in practice.

While the current implementation focuses on positional localization, the architecture naturally extends to full 6-DOF pose estimation through systematic expansion of the hypothesis space. Future work will address orientational search by developing adaptive sampling strategies that exploit mechanical constraints in peg-hole interactions, potentially using hierarchical search spaces to manage computational complexity. Additional directions include integration with force-torque sensing for contact-rich environments and validation in industrial assembly tasks with variable friction and material properties.

The algorithm’s ability to transform environmental complexity into a computational advantage suggests broad applicability beyond peg-in-hole scenarios. Potential applications range from micro-surgical robotics, where tactile feedback is critical, to space-constrained maintenance tasks in aerospace systems. By providing a framework that effectively bridges geometric priors, probabilistic reasoning, and tactile exploration, this work advances the frontier of contact-based robotic perception, offering a principled approach to manipulation in information-constrained environments.

Funding

The research leading to these results has received funding from the European Union’s Horizon Europe Framework Programme under grant agreement No 101070596 (euROBIN).

Appendix

If the estimated map point converges towards the initial touch point , both given in the map coordinate system, then the final touch point is guaranteed to lie within the target region . The convergence of towards can be proven by showing that the search area is monotonically decreasing in each iteration step k and that the estimated point . In the following we consider three regions: the selection region at iteration step k, the region touched by the robot , and the target region . The proof relies on the following theorem:

Theorem 1 (Monotonic Convergence). Let be a map consisting of disjoint regions , each corresponding to a unique horizontal face in 3D space. Suppose:

The initial touch point , which defines the initial region .

At each iteration step , the algorithm computes the displacement , , and the robot touches the new region .

The candidate region is updated as:

Then, for all :

- (1)

(strict subset property),

- (2)

(the initial touch point given in the map coordinate system is contained in the selection region).

Figure 16.

Registration process in the k-th step. The region with dashed lines denotes the part that will be removed from in the next iteration.

Proof. We prove the theorem by induction on k, demonstrating that the selection region is strictly decreasing while always containing the initial touch point .

Base Case ()

By definition, the algorithm determines

Thus, the theorem holds for .

Inductive Step

We now show these properties hold for .

Step 1: Shrinking of Selection Region

The algorithm computes the displacement vector:

where is the estimated point at iteration k. The newly detected region is denoted by and the candidate selection region is updated as:

Since by definition and and since , at least the point is excluded from , . Thus at least one element is guaranteed to be excluded from the set , which ensures

Thus, the strict subset property holds.

Step 2: Containment of the Initial Touch Point

By induction assumption, the initial touch point satisfies . If the newly touched region is equal to , the algorithm finishes as the correct point has been identified. If this is not the case, and since , the initial touch point remains in .

This concludes the proof of the theorem.

Conclusion

By induction, we conclude that for all :

- (1)

, ensuring monotonic shrinkage.

- (2)

, ensuring the true initial point is never eliminated.

The algorithm continues until the robot touches the goal region . Therefore, the algorithm converges to a sufficiently small search region containing , completing the proof. □
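
The two properties stated in Theorem 1 can also be checked numerically on a toy map. The following Python sketch is not the Matlab implementation referenced in the paper; it assumes a small integer grid of region labels (`GRID`), a single goal cell (`GOAL`), a centroid-based choice of the estimated point, and a candidate update that keeps only those hypotheses that are consistent with the newly touched region. The names `localize`, `label_at`, and `MISS` are hypothetical and introduced only for illustration.

```python
import numpy as np

MISS = -1  # assumed label for a probe that lands outside the map


def label_at(grid, cell):
    """Return the region label at `cell`, or MISS if the cell is off the map."""
    r, c = cell
    if 0 <= r < grid.shape[0] and 0 <= c < grid.shape[1]:
        return int(grid[r, c])
    return MISS


def localize(grid, goal, true_start):
    """Simulate the search and return the candidate-set size at every step.

    `true_start` is known only to the simulator; the search itself sees
    nothing but the label of each touched region.
    """
    goal_label = label_at(grid, goal)
    first_label = label_at(grid, true_start)
    # Initial candidates: every map cell in the first touched region.
    candidates = {(int(r), int(c)) for r, c in np.argwhere(grid == first_label)}
    sizes = [len(candidates)]
    if first_label == goal_label:
        return sizes
    while True:
        # Estimated point: the candidate closest to the centroid of the set.
        centroid = np.mean(list(candidates), axis=0)
        est = min(sorted(candidates),
                  key=lambda p: (p[0] - centroid[0]) ** 2 + (p[1] - centroid[1]) ** 2)
        # Displacement that would reach the goal if the estimate were correct.
        shift = (goal[0] - est[0], goal[1] - est[1])
        touched = label_at(grid, (true_start[0] + shift[0], true_start[1] + shift[1]))
        if touched == goal_label:
            return sizes
        # Keep only hypotheses that predict the label actually touched.
        candidates = {p for p in candidates
                      if label_at(grid, (p[0] + shift[0], p[1] + shift[1])) == touched}
        assert true_start in candidates  # property (2): true point is never removed
        sizes.append(len(candidates))


GRID = np.array([
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 1, 2, 2, 1, 0],
    [0, 1, 2, 3, 1, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0],
])
GOAL = (3, 3)  # the only cell with label 3

sizes = localize(GRID, GOAL, true_start=(1, 4))
print(sizes)
```

In this sketch the current estimate always predicts the goal label at the probed location, so it is rejected whenever the robot touches any other region. The recorded sizes therefore decrease strictly, while the true starting cell always survives the update, mirroring properties (1) and (2).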

References

1. Navarro, S.E.; Mühlbacher-Karrer, S.; Alagi, H.; Zangl, H.; Koyama, K.; Hein, B.; Duriez, C.; Smith, J.R. Proximity Perception in Human-Centered Robotics: A Survey on Sensing Systems and Applications. IEEE Transactions on Robotics 2022, 38, 1599–1620.

2. Zhuang, C.; Li, S.; Ding, H. Instance segmentation based 6D pose estimation of industrial objects using point clouds for robotic bin-picking. Robotics and Computer-Integrated Manufacturing 2023, 82.

3. Nottensteiner, K.; Sachtler, A.; Albu-Schäffer, A. Towards autonomous robotic assembly: Using combined visual and tactile sensing for adaptive task execution. Journal of Intelligent & Robotic Systems 2021, 101, 49.

4. Lončarević, Z.; Gams, A.; Reberšek, S.; Nemec, B.; Škrabar, J.; Skvarč, J.; Ude, A. Robotics and Computer-Integrated Manufacturing 2023, 82.

5. Saleh, K.; Szénási, S.; Vámossy, Z. Occlusion Handling in Generic Object Detection: A Review. In Proceedings of the IEEE 19th World Symposium on Applied Machine Intelligence and Informatics (SAMI); 2021; pp. 477–484.

6. Yi, A.; Anantrasirichai, N. A Comprehensive Study of Object Tracking in Low-Light Environments. Sensors 2024, 24, 4359.

7. Enebuse, I.; Ibrahim, B.K.S.M.K.; Foo, M.; Matharu, R.S.; Ahmed, H. Accuracy evaluation of hand-eye calibration techniques for vision-guided robots. PLOS One 2022, 17, e0273261.

8. Liu, H.; Wu, Y.; Sun, F.; Guo, D. Recent progress on tactile object recognition. International Journal of Advanced Robotic Systems 2017, 14, 1–12.

9. Galaiya, V.R.; Asfour, M.; Alves de Oliveira, T.E.; Jiang, X.; Prado da Fonseca, V. Exploring Tactile Temporal Features for Object Pose Estimation during Robotic Manipulation. Sensors 2023, 23.

10. Abu-Dakka, F.; Nemec, B.; Jørgensen, J.A.; Savarimuthu, T.R.; Krüger, N.; Ude, A. Adaptation of manipulation skills in physical contact with the environment to reference force profiles. Autonomous Robots 2015, 39, 199–217.

11. Abu-Dakka, F.; Nemec, B.; Kramberger, A.; Buch, A.; Krüger, N.; Ude, A. Solving peg-in-hole tasks by human demonstration and exception strategies. Industrial Robot 2014, 41, 575–584.

12. Chen, F.; Cannella, F.; Sasaki, H.; Canali, C.; Fukuda, T. Error recovery strategies for electronic connectors mating in robotic fault-tolerant assembly system. In Proceedings of the IEEE/ASME 10th International Conference on Mechatronic and Embedded Systems and Applications (MESA); 2014; pp. 1–6.

13. Marvel, J.A.; Newman, W.S. Assessing internal models for faster learning of robotic assembly. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA); 2010; pp. 2143–2148.

14. Shetty, S.; Silvério, J.; Calinon, S. Ergodic Exploration Using Tensor Train: Applications in Insertion Tasks. IEEE Transactions on Robotics 2022, 38, 906–921.

15. Chhatpar, S.; Branicky, M. Localization for robotic assemblies with position uncertainty. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); 2003; pp. 2534–2540.

16. Chhatpar, S.; Branicky, M. Particle filtering for localization in robotic assemblies with position uncertainty. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); 2005; pp. 3610–3617.

17. Petrovskaya, A.; Khatib, O. Global Localization of Objects via Touch. IEEE Transactions on Robotics 2011, 27, 569–585.

18. Jasim, I.F.; Plapper, P.W.; Voos, H. Position Identification in Force-Guided Robotic Peg-in-Hole Assembly Tasks. Procedia CIRP 2014, 23, 217–222.

19. Hebert, P.; Howard, T.; Hudson, N.; Ma, J.; Burdick, J.W. The next best touch for model-based localization. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA); 2013; pp. 99–106.

20. Luo, S.; Mou, W.; Althoefer, K.; Liu, H. Localizing the object contact through matching tactile features with visual map. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA); 2015; pp. 3903–3908.

21. Hauser, K. Bayesian Tactile Exploration for Compliant Docking With Uncertain Shapes. IEEE Transactions on Robotics 2019, 35, 1084–1096.

22. Bauza, M.; Valls, E.; Lim, B.; Sechopoulos, T.; Rodriguez, A. Tactile Object Pose Estimation from the First Touch with Geometric Contact Rendering. arXiv 2020, arXiv:2012.05205.

23. Bauza, M.; Bronars, A.; Rodriguez, A. Tac2Pose: Tactile object pose estimation from the first touch. The International Journal of Robotics Research 2023, 42, 1185–1209.

24. Xu, J.; Lin, H.; Song, S.; Ciocarlie, M. TANDEM3D: Active Tactile Exploration for 3D Object Recognition. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA); 2023; pp. 10401–10407.

25. Calandra, R.; Owens, A.; Upadhyaya, M.; Yuan, W.; Lin, J.; Adelson, E.H.; Levine, S. The Feeling of Success: Does Touch Sensing Help Predict Grasp Outcomes? arXiv 2017, arXiv:1710.05512.

26. Yuan, W.; Dong, S.; Adelson, E.H. GelSight: High-Resolution Robot Tactile Sensors for Estimating Geometry and Force. Sensors 2017, 17.

27. Nemec, B.; Hrovat, M.M.; Simonič, M.; Shetty, S.; Calinon, S.; Ude, A. Robust Execution of Assembly Policies Using a Pose Invariant Task Representation. In Proceedings of the 20th International Conference on Ubiquitous Robots (UR); 2023; pp. 779–786.

28. Simonič, M.; Ude, A.; Nemec, B. Hierarchical Learning of Robotic Contact Policies. Robotics and Computer-Integrated Manufacturing 2024, 86.

29. So, P.; Sarabakha, A.; Wu, F.; Culha, U.; Abu-Dakka, F.J.; Haddadin, S. Digital Robot Judge: Building a Task-centric Performance Database of Real-World Manipulation With Electronic Task Boards. IEEE Robotics & Automation Magazine 2024, 31, 32–44.

Footnotes

1. This assumes that a mapping between the map’s z coordinate and the robot’s z coordinate is available. However, this assumption is necessary only to explain the basic algorithm. In later sections, we extend the algorithm to cases where this mapping is initially unknown.

2. In function TouchFloor, an unknown value appears. However, since the results of this function are subtracted in Algorithm 2, the value of does not affect the result and can be set to 0.

Figure 1.

Left: 3D representation of the object’s surface. Right: A 2D map with color-coded regions based on their height. Note that some regions may be disjoint (e.g., ).

Figure 2.

Audio plug and socket used in the example. The shaded square determines the search area for insertion of the pin into the socket and corresponds to the black area in Figure 3.

Figure 3.

Example of the search process for the audio plug socket with progressive refinement of the search region over five steps (). Each step includes two views: the left shows the object map with the robot’s contact point (red circle), which is unknown to the algorithm and displayed only for illustration; the right shows the current search region in white, the estimated point as a red square, and the direction vector . The map includes three regions: the dark brown socket hole (target), the light brown enclosure, and a black area where the robot misses the socket. At , the robot touches the object, and the algorithm identifies the touched region . It selects near the centroid of and computes toward the goal point , located at the center. This guides the next move to . The touched region is updated using Eq. (3), shrinking the search area to . The process repeats, with the algorithm refining and at each step, until the robot reaches the goal region at , where the search area converges to zero.

Figure 4.

A set of contact points uniquely determines the identity of each plane. In this example, was the first touch, the second, and the third. This sequence, along with the detected height differences that identify the planes, is consistent only if belongs to region .

Figure 5.

A distance is modeled to be normally distributed. We sample the probability for each discrete distance from the interval from to .

Figure 6.

Example of probabilistic map registration for inserting an audio pin into a socket. The registration process is illustrated across sub-figures for . In the left sub-images, the gray region represents the socket center, the white region denotes the socket body, and the black region indicates the exterior. Red dots mark contact points, which are unknown to the algorithm. In the right sub-images, the search region is shown as a shaded 3D area tilted by around the x-axis, where shading intensity represents the probability estimates . The red vector represents , while the red square indicates . In this probabilistic case, the search region is represented with varying probabilities of robot position, accounting for sensor noise and map inaccuracies. The transition between steps (k to k+1) shows how the search space is adjusted dynamically, with increasing confidence in .

Figure 7.

Experimental setup for testing the insertion of the audio pin into the socket.

Figure 8.

Convergence analysis of the proposed search algorithm. The x-axis represents the number of attempts, while the y-axis shows the probability of locating the target. The mean number of attempts is 5.83 and the standard deviation is 2.04.

Figure 9.

Convergence behavior of the combined search algorithm, which first determines the z-coordinate before localizing the x- and y-coordinates. The x-axis denotes the number of attempts and the left y-axis represents the probability of hitting the target. The mean and standard deviation are 6.37 and 2.53, respectively.

Figure 10.

Convergence behavior of the enhanced random search. The figure shows the probability of hitting the target as a function of the number of attempts. The mean and standard deviation are 60 and 45.5, respectively.

Figure 11.

Convergence analysis of the deterministic search algorithm (a) and the probabilistic search algorithm (b) in a noisy environment. In this case, the deterministic and probabilistic search algorithms had success rates of 85% and 100%, respectively.

Figure 12.

Left: Experimental setup for testing the stowing of the probe in the Task Board. The red oval highlights the socket and the probe. Right: A 3D map of the socket used for registration in the corresponding experiment. Note that the left and right images are intentionally shown from different viewpoints to emphasize the rotation between the robot’s coordinate system and the map’s coordinate system.

Figure 13.

The convergence of the proposed search algorithm, showing the probability of hitting the target vs. the number of attempts. The mean and the standard deviation are 4.07 and 1.18, respectively.

Figure 14.

Left: Robot inserting the termination connector with the combined spiral search and map registration algorithm. Right: Model of the socket, as used by the search algorithm.

Figure 15.

Comparison between the combined search algorithm and the spiral search algorithm for inserting the TaskBoard connector into the socket, evaluated for two different starting points. The white line represents the trajectory of the connector’s center during the search. The dark brown area indicates regions where the connector fails to engage with the socket, while the light brown area represents regions where the connector glides over the socket. The black region marks the goal.

Table 1.

Summary of experimental results across different use cases.

| Experiment | Trials | Success Rate | Avg. Attempts | Std. Dev. | Avg. Time | Notes |
|---|---|---|---|---|---|---|
| Audio Pin Random Search (Baseline) | 100 | 100% | 37.37 | 36.55 | 71.0 s | No prior knowledge used |
| Audio Pin Insertion (Deterministic) | 100 | 100% | 5.83 | 2.04 | 11.1 s | Basic algorithm with known object height |
| Audio Pin + Height Estimation | 100 | 100% | 6.37 | 2.53 | 12.8 s | Includes z-height search step |
| Audio Pin (Noisy, Deterministic) | 100 | 85% | 6.78 | 3.0 | 12.1 s | Sensitive to uncertainty, occasional failure |
| Audio Pin (Noisy, Probabilistic) | 100 | 100% | 6.76 | 2.32 | 12.8 s | Robust under position and map uncertainty |
| Task Board Probe | 100 | 100% | 4.07 | 1.18 | 8.7 s | Rich geometry improves convergence |
| Task Board Connector (Combined) | 20 | 100% | n/a | n/a | 7.8 s | Spiral + map registration, robust to parameter settings |
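
The audio pin rows of Table 1 can be put on a common footing with a few lines of arithmetic. The Python sketch below copies the average attempts and average times from the table and computes, for each variant, the reduction in attempts relative to the random-search baseline and the mean time per attempt. The shortened row names and the helper `relative_to_baseline` are hypothetical and used only for illustration.

```python
# (average attempts, average time in seconds) for the audio pin rows of Table 1
AUDIO_PIN_RESULTS = {
    "Random Search (Baseline)": (37.37, 71.0),
    "Deterministic": (5.83, 11.1),
    "Height Estimation": (6.37, 12.8),
    "Noisy, Deterministic": (6.78, 12.1),
    "Noisy, Probabilistic": (6.76, 12.8),
}


def relative_to_baseline(results, baseline="Random Search (Baseline)"):
    """Map each row to (attempt reduction vs. baseline, seconds per attempt)."""
    base_attempts, _ = results[baseline]
    summary = {}
    for name, (attempts, seconds) in results.items():
        summary[name] = (base_attempts / attempts, seconds / attempts)
    return summary


for name, (reduction, per_attempt) in relative_to_baseline(AUDIO_PIN_RESULTS).items():
    print(f"{name}: {reduction:.1f}x fewer attempts, {per_attempt:.2f} s per attempt")
```

For the deterministic case this gives 37.37 / 5.83 ≈ 6.4 times fewer attempts than the random baseline, at roughly 1.9 s per attempt for both methods, so the shorter total time comes from the smaller number of touches rather than from faster individual touches.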

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).