I. Introduction

In modern computing architectures, backend systems are responsible for key tasks such as data processing, storage, and service response. As user scale increases and business logic becomes more complex, the demand for high performance and high availability in backend systems grows stronger. Caching is a crucial technique for backend performance optimization: it reduces direct access to databases and main memory, speeds up data retrieval, and relieves load on the system. However, as data volumes and request types grow rapidly, traditional caching strategies such as LRU (Least Recently Used) and LFU (Least Frequently Used) reveal their limitations in complex and dynamic scenarios. These strategies rely mostly on static rules or fixed heuristics and cannot adapt to changes in system state or access patterns, so they struggle to maintain good performance under high concurrency and highly variable workloads [1, 2].

In practical applications, the quality of a cache management strategy directly affects system response time and resource utilization. When cache hit rates are low, the backend faces a large number of redundant data requests and frequent disk accesses, which degrades service performance and may lead to congestion or even failure. With the growing adoption of microservice architectures and distributed systems, these challenges become more severe: services are highly interdependent, request paths change dynamically, and data temperature (how "hot" or "cold" an item is) shifts frequently. Fixed rules cannot handle the combinations of these multi-dimensional states. There is therefore a need for a mechanism with intelligent sensing and decision-making capabilities [3], one that makes efficient, dynamic caching decisions based on real-time system conditions and access behavior.

In recent years, reinforcement learning, and deep reinforcement learning in particular, has opened new approaches to intelligent backend optimization. Reinforcement learning learns policies through interaction with an environment; model-free methods do not require an explicit model of the environment and can discover effective behavior autonomously. Among these methods, Deep Q-Networks (DQN) have shown strong learning ability in high-dimensional state spaces [4] and are increasingly used in areas such as resource scheduling and path optimization. Applying DQN to backend cache management removes the dependence on static rules: the strategy is adjusted dynamically based on feedback from the environment, with the goal of maximizing the cache hit rate or overall system performance. The key advantage of this approach is that the policy is learned automatically through interaction, which gives it strong generalization and adaptability [5].

Applying deep reinforcement learning to cache eviction strategy selection has both theoretical and practical significance. On the theoretical side, this research moves cache design from traditional rule-based paradigms toward more intelligent and autonomous frameworks, broadens the application of reinforcement learning to real system optimization, and introduces self-learning and self-adaptation into backend systems. On the engineering side, reinforcement learning-based cache strategies can be embedded in real systems to enable smarter resource management, improving user experience, system stability, and resource efficiency. In scenarios with high-frequency data access and intense resource competition, a strategy that learns in real time can outperform traditional approaches [6]. In short, a backend cache strategy selection and eviction mechanism based on deep reinforcement learning has significant theoretical and practical value: it addresses the limitations of traditional algorithms and lays a foundation for the evolution of intelligent backends [7].

II. Method

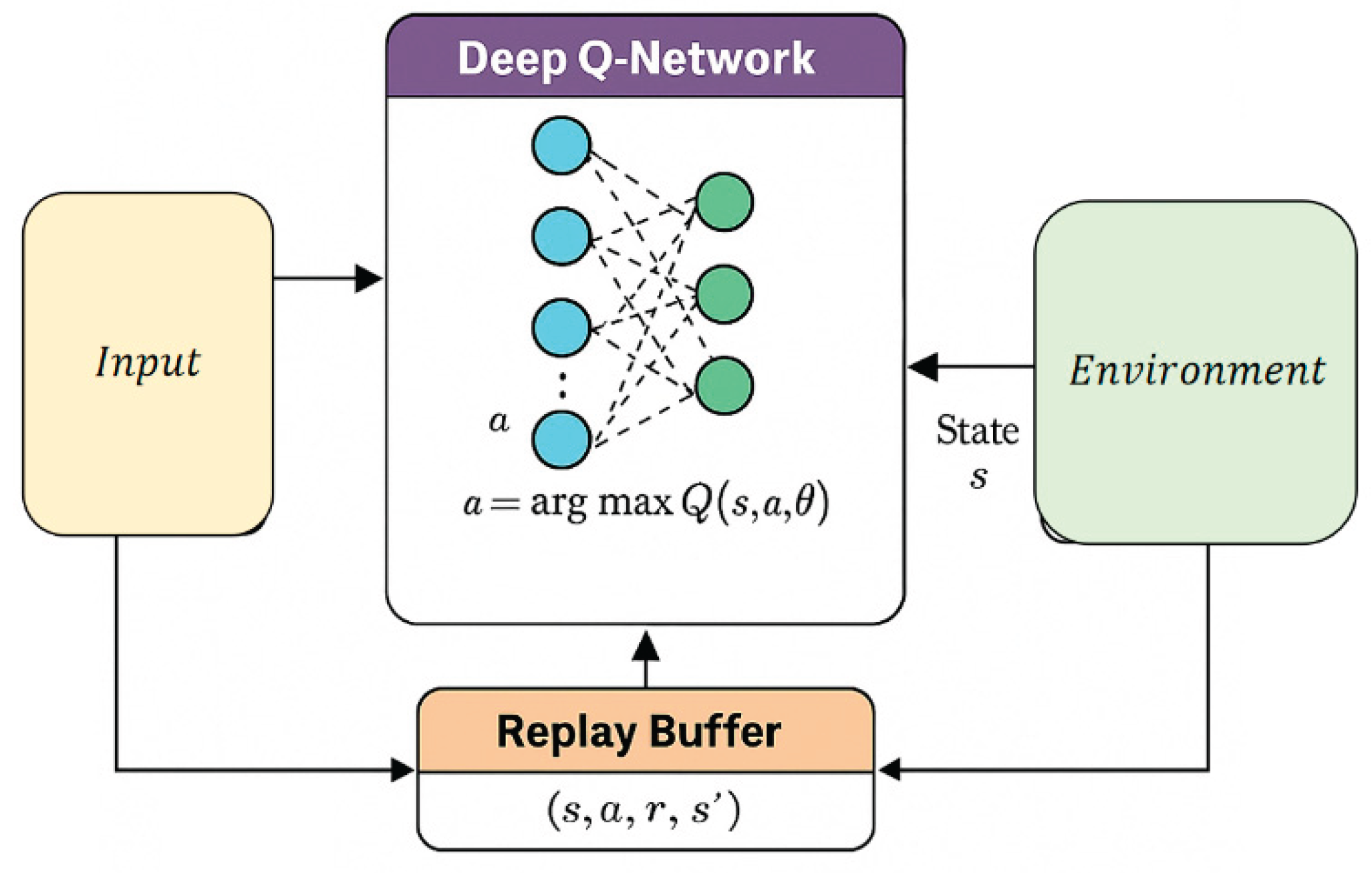

This study constructs a backend cache strategy selection and eviction mechanism based on Deep Q-Networks (DQN), modeling cache management as a Markov Decision Process (MDP) to enable adaptive decision-making in complex access environments. This formulation lets the system represent and evaluate sequences of actions across changing cache states, supporting real-time optimization. The model design draws on principles from topology-aware distributed decision-making [8], which emphasize state-driven local optimization under distributed constraints. The state representation also incorporates spatiotemporal features such as access intervals and frequency, following insights from deep learning-based memory forecasting [9]; these features help the system capture both transient and long-term behavior patterns in cache operations. To ensure stable training and convergence, the DQN framework uses experience replay and a target network, in line with reinforcement learning-based scheduling strategies for high-dimensional environments [10]. These mechanisms damp oscillations in policy updates and improve generalization under changing workload profiles. The overall architecture, shown in Figure 1, supports dynamic strategy refinement and efficient cache eviction in real-world scenarios.

In this framework, the system state $S$ combines multi-dimensional features such as the distribution of data items in the current cache, data access frequency, and cache hits. The action space $A$ consists of the cache operations that can currently be executed, such as retaining or evicting a particular data item. The environment returns a reward $R$ as feedback on each action, guiding the agent to optimize performance indicators such as the cache hit rate. The goal of reinforcement learning is to find a policy $\pi(a \mid s)$ that maximizes the long-term cumulative reward, that is, the expected return

$$
\mathbb{E}_{\pi}\left[G_t\right] = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k}\right],
$$

with discount factor $\gamma \in [0, 1)$.
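To make the MDP formulation concrete, the following minimal Python sketch frames a fixed-capacity cache as an environment. Everything in it is an illustrative assumption rather than the environment used in this study: the class name `ToyCacheEnv`, a state made of per-slot (steps since last access, access count) pairs plus the key of the next request, an action that names the slot to evict on a miss, and a reward of 1 per hit. An LRU baseline policy is run at the end to show how a policy interacts with the environment.

```python
from typing import Dict, List, Optional, Tuple

# State = (per-slot features, key of the next request or None when the trace ends).
Features = List[Tuple[int, int]]
State = Tuple[Features, Optional[int]]


class ToyCacheEnv:
    """Hypothetical cache MDP: one step per request in a fixed request trace.

    State:  per-slot (steps since last access, access count), plus the next key.
    Action: index of the slot to evict; only used on a miss when the cache is full.
    Reward: 1.0 for a hit, 0.0 for a miss.
    """

    def __init__(self, capacity: int, requests: List[int]) -> None:
        self.capacity = capacity
        self.requests = requests
        self.reset()

    def reset(self) -> State:
        self.t = 0
        self.slots: List[int] = []  # cached keys, in slot order
        self.last_access: Dict[int, int] = {}
        self.count: Dict[int, int] = {}
        return self.state()

    def done(self) -> bool:
        return self.t >= len(self.requests)

    def state(self) -> State:
        features = [(self.t - self.last_access[k], self.count[k]) for k in self.slots]
        next_key = None if self.done() else self.requests[self.t]
        return features, next_key

    def step(self, evict_slot: int) -> Tuple[State, float, bool]:
        key = self.requests[self.t]
        hit = key in self.slots
        if not hit:
            if len(self.slots) >= self.capacity:
                victim = self.slots.pop(evict_slot)  # the agent's eviction decision
                del self.last_access[victim]
                del self.count[victim]
            self.slots.append(key)
            self.count[key] = 0
        self.count[key] += 1
        self.last_access[key] = self.t
        self.t += 1
        return self.state(), (1.0 if hit else 0.0), self.done()


def lru_action(features: Features) -> int:
    """Baseline policy: evict the slot that has gone longest without an access."""
    if not features:
        return 0
    return max(range(len(features)), key=lambda i: features[i][0])


env = ToyCacheEnv(capacity=2, requests=[1, 2, 1, 3, 1, 2])
(features, _next_key), done = env.reset(), env.done()
total_reward = 0.0
while not done:
    (features, _next_key), reward, done = env.step(lru_action(features))
    total_reward += reward
print(total_reward)  # → 2.0
```

Replacing `lru_action` with an ε-greedy choice over learned Q values is where a DQN agent would plug into such an environment.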

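The Q-network $Q(s, a; \theta)$ is trained toward a target Q value $y_t$, given by the following Bellman equation:

$$
y_t = r_t + \gamma \max_{a'} Q\left(s_{t+1}, a'; \theta^{-}\right)
$$

where $\gamma \in [0, 1)$ is the discount factor, which measures the importance of future rewards, and $\theta^{-}$ denotes the parameters of the target network, which are periodically copied from the parameters $\theta$ of the main network to enhance training stability.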
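The Bellman target and the periodic target-network copy can be sketched in plain Python as below. Q-networks are abstracted as hypothetical callables that map a state to a list of Q values, one per action. The `done` flag (no bootstrapping at the end of a finite trace) and the mean-squared TD loss follow the standard DQN recipe and are assumptions here, since this section does not spell out the loss.

```python
import copy
from typing import Callable, Dict, List, Sequence, Tuple

# (s_t, a_t, r_t, s_{t+1}, done); `done` marks the end of a finite trace (an assumption).
Transition = Tuple[Sequence[float], int, float, Sequence[float], bool]
# Hypothetical Q-network interface: state -> one Q value per action.
QFunction = Callable[[Sequence[float]], List[float]]


def td_targets(batch: Sequence[Transition], target_q: QFunction, gamma: float) -> List[float]:
    """y_t = r_t + gamma * max_a' Q(s_{t+1}, a'; theta^-), without bootstrapping when done."""
    targets = []
    for _s, _a, reward, next_state, done in batch:
        bootstrap = 0.0 if done else max(target_q(next_state))
        targets.append(reward + gamma * bootstrap)
    return targets


def td_loss(batch: Sequence[Transition], online_q: QFunction,
            target_q: QFunction, gamma: float) -> float:
    """Mean squared error between Q(s_t, a_t; theta) and the fixed targets y_t."""
    targets = td_targets(batch, target_q, gamma)
    errors = [(y - online_q(s)[a]) ** 2 for (s, a, *_rest), y in zip(batch, targets)]
    return sum(errors) / len(errors)


def maybe_sync_target(step: int, period: int, online_params: Dict[str, List[float]],
                      target_params: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Every `period` steps, copy the online parameters theta into theta^-."""
    if step % period == 0:
        return copy.deepcopy(online_params)
    return target_params
```

Keeping $\theta^{-}$ fixed between copies means the targets do not shift with every gradient step, which is the stabilizing effect the target network is meant to provide.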

During learning, an experience replay mechanism reduces the correlation between samples: training draws random mini-batches of experience tuples $(s_t, a_t, r_t, s_{t+1})$ from the replay buffer. Random sampling increases sample diversity and breaks the dependency between consecutive data points, which makes training more stable and efficient. The use of experience replay is informed by trust-constrained learning mechanisms in distributed scheduling [11], which emphasize decoupling sample sequences to keep policies robust. Randomized batch sampling also draws on A3C-based reinforcement learning for microservice scheduling [12], which demonstrated improved convergence under asynchronous and dynamic conditions, and insights from TD3-based load balancing [13] underline the value of a structured replay buffer for maintaining stability in continuous control. Together, these mechanisms yield a reliable and effective training pipeline.

To improve exploration efficiency, an $\varepsilon$-greedy strategy controls action selection: the agent selects a random action with probability $\varepsilon$ and the action with the largest current Q value with probability $1 - \varepsilon$:

$$
a_t =
\begin{cases}
\text{a random action drawn from } A, & \text{with probability } \varepsilon, \\
\arg\max_{a \in A} Q(s_t, a; \theta), & \text{with probability } 1 - \varepsilon.
\end{cases}
$$

As $\varepsilon$ is reduced over the course of training, this strategy encourages exploration of new actions in the early stages and increasingly exploits what has been learned in the later stages.
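A minimal sketch of the replay buffer and the ε-greedy rule is shown below. The buffer capacity, the seeded `random.Random` generator, and the linear decay schedule for ε (from 1.0 to 0.05 over 10,000 steps) are illustrative assumptions; this section does not specify these values.

```python
import random
from collections import deque
from typing import Deque, List, Sequence, Tuple

# (s_t, a_t, r_t, s_{t+1}) experience tuple.
Transition = Tuple[Tuple[float, ...], int, float, Tuple[float, ...]]


class ReplayBuffer:
    """Fixed-capacity experience store; the oldest tuples are discarded first."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.buffer: Deque[Transition] = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)  # drops the oldest entry once full

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform random mini-batch (without replacement), which breaks the
        # correlation between consecutive steps of the request stream.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def epsilon_greedy(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Random action with probability epsilon, otherwise the greedy (argmax-Q) action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def linear_epsilon(step: int, start: float = 1.0, end: float = 0.05,
                   decay_steps: int = 10_000) -> float:
    """Anneal epsilon linearly from `start` to `end`, then hold it at `end`."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)
```

In a training loop, `linear_epsilon(step)` would be passed to `epsilon_greedy` at each decision, and mini-batches would only be drawn from the buffer once it holds at least one full batch.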

This study designs a custom state representation and reward function to guide reinforcement learning in the cache environment. The state captures critical attributes such as access frequency, recency, and cache occupancy, while the reward reflects objectives such as maximizing the hit rate and minimizing latency. The design is informed by techniques from adaptive reinforcement learning for resource scheduling in complex systems [14], which emphasize environment-specific feature encoding for efficient policy learning. Representational accuracy also benefits from feature alignment strategies used in multimodal detection models [15], which guide how input characteristics are abstracted into meaningful state features. Considerations of system heterogeneity and generalization are further supported by insights from federated learning models [16], which emphasize compact and decentralized learning structures. Together, these influences yield a reinforcement learning framework calibrated to the demands of backend cache optimization. The state vector $s_t$ encodes information such as cache hits, access time series, and the frequency distribution of stored data. The reward $r_t$ is defined as the difference between the hit reward and the eviction cost:

$$
r_t = R_{\text{hit}}(s_t, a_t) - \lambda \, C_{\text{evict}}(s_t, a_t)
$$

where $\lambda$ is an adjustment coefficient, $R_{\text{hit}}$ represents the cache hit effect produced by the action, and $C_{\text{evict}}$ measures the performance cost of evicting data. This design encourages the model to avoid unnecessary replacements while improving the hit rate.
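One possible way to turn cache statistics into a fixed-length state vector, and to compute the hit-reward-minus-eviction-cost reward, is sketched below. All of it is hypothetical: the `SlotStats` record, the feature choices (per-slot recency and frequency squashed into the unit interval, cache occupancy, and the recent hit rate), treating the eviction cost as a caller-supplied number, and the default adjustment coefficient `lam = 0.5`.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class SlotStats:
    """Hypothetical statistics tracked for one cached item."""
    steps_since_access: int
    access_count: int


def encode_state(slots: Sequence[SlotStats], capacity: int,
                 recent_hits: Sequence[bool]) -> List[float]:
    """Build a state vector of length 2 * capacity + 2 from cache statistics."""
    if len(slots) > capacity:
        raise ValueError("more occupied slots than capacity")
    features: List[float] = []
    for i in range(capacity):
        if i < len(slots):
            s = slots[i]
            # Squash unbounded counters into the unit interval:
            # recency -> 1 / (1 + age), frequency -> n / (1 + n).
            features += [1.0 / (1.0 + s.steps_since_access),
                         s.access_count / (1.0 + s.access_count)]
        else:
            features += [0.0, 0.0]  # padding for empty slots keeps the length fixed
    occupancy = len(slots) / capacity
    hit_rate = sum(recent_hits) / len(recent_hits) if recent_hits else 0.0
    return features + [occupancy, hit_rate]


def reward(hit: bool, eviction_cost: float, lam: float = 0.5) -> float:
    """Hit reward minus a weighted eviction cost (lam is the adjustment coefficient)."""
    hit_reward = 1.0 if hit else 0.0
    return hit_reward - lam * eviction_cost
```

As one assumed choice, the eviction cost could be the evicted item's frequency feature, so that discarding a frequently used item is penalized more than evicting a cold one; a step with no eviction would pass a cost of 0.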

The overall approach uses a deep neural network to approximate the optimal Q function, and hence the optimal policy, in a high-dimensional state space [17], and combines it with a reinforcement learning mechanism that continuously adjusts the cache strategy, enabling intelligent cache management in dynamic environments [18]. The DQN model updates its parameters iteratively during training, gradually learning to select the eviction action that maximizes long-term cache performance. The method is not limited to static data scenarios: it can adapt its strategy in complex and changing backend environments, which gives it broad versatility and scalability.

IV. Conclusion

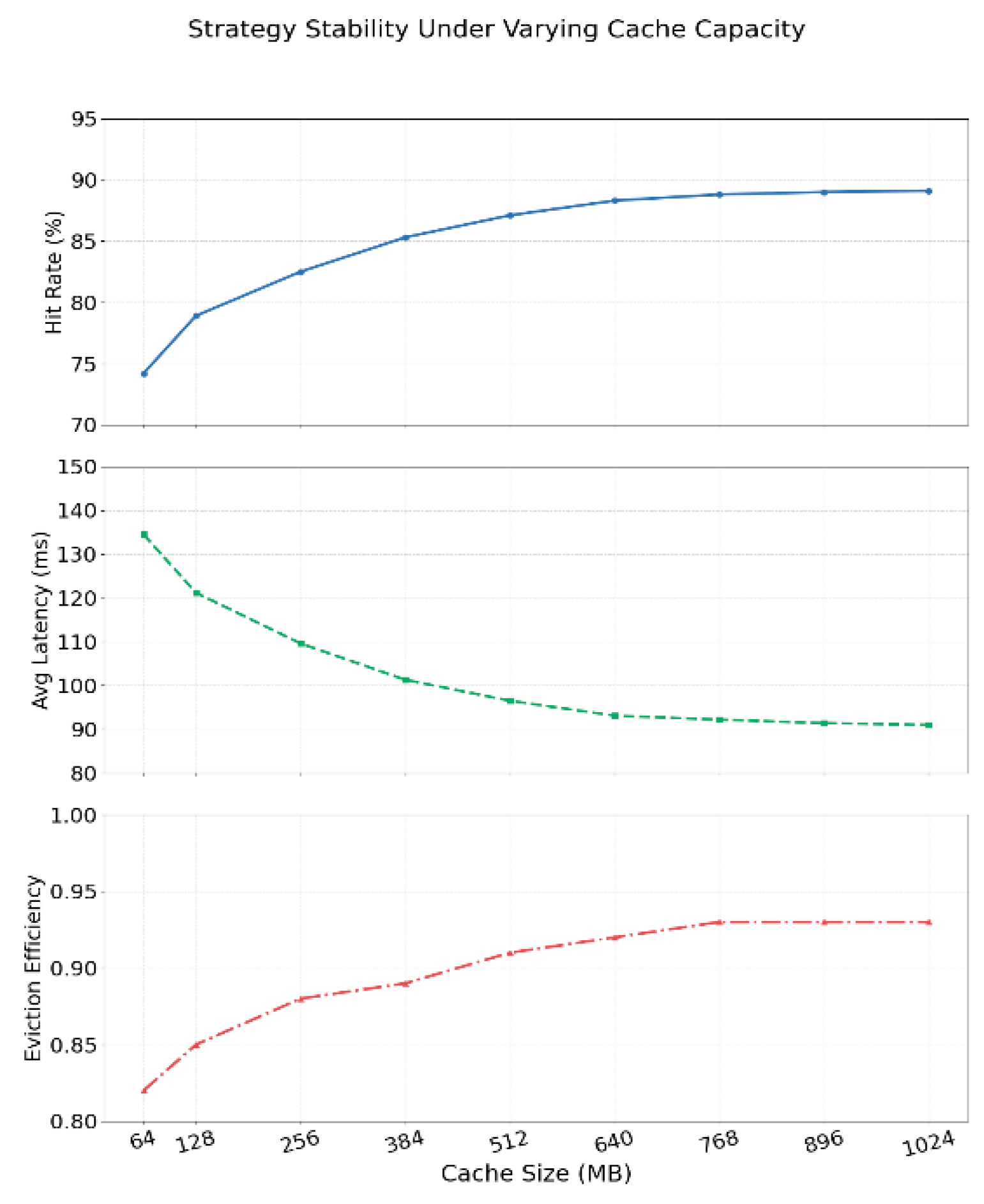

This study addresses backend cache strategy optimization and proposes an adaptive eviction mechanism based on deep reinforcement learning, with a cache decision model built around Deep Q-Networks (DQN). Using key performance metrics such as cache hit rate, response time, and eviction efficiency as feedback signals, the model continuously learns policies in dynamic access environments, overcoming the reliance of traditional cache algorithms on static rules and limited state awareness. Experiments along multiple dimensions confirm the stability and adaptability of the proposed method in complex scenarios such as dynamic data patterns and changing cache capacities, where it shows significant performance advantages. The core value of the method lies in bringing reinforcement learning into backend cache optimization, establishing a closed loop of sensing, decision-making, and feedback that enables data-driven, self-adaptive evolution of cache policies. The method responds quickly and adjusts its policy in real-world scenarios such as hot/cold data switching, sudden traffic spikes, and resource constraints, which makes it particularly suitable for cloud service platforms, high-concurrency web systems, and microservice architectures that require dynamic cache scheduling. This study therefore not only enriches the technical approaches to cache management but also offers new ideas for resource optimization in large-scale distributed systems.

The model also shows good scalability and transferability for practical deployment. It can be integrated into different platforms or service architectures to meet diverse business needs, and it brings engineering benefits by improving overall service quality, reducing latency, and lowering resource consumption, with the potential to move traditional systems toward more intelligent, efficient, and sustainable operation. Future research may extend this work in several directions. Attention mechanisms could be introduced to improve the model's ability to identify key states. A multi-agent framework could address more complex cooperative caching decisions. Long-term deployment with real system data would further validate the model's feasibility and stability in real-world applications. Finally, the approach could be extended to other resource management problems such as memory allocation, bandwidth scheduling, and data prefetching, broadening its impact in intelligent system management.