Submitted:

26 April 2025

Posted:

28 April 2025


Abstract

Keywords:

1. Introduction

2. Related work:

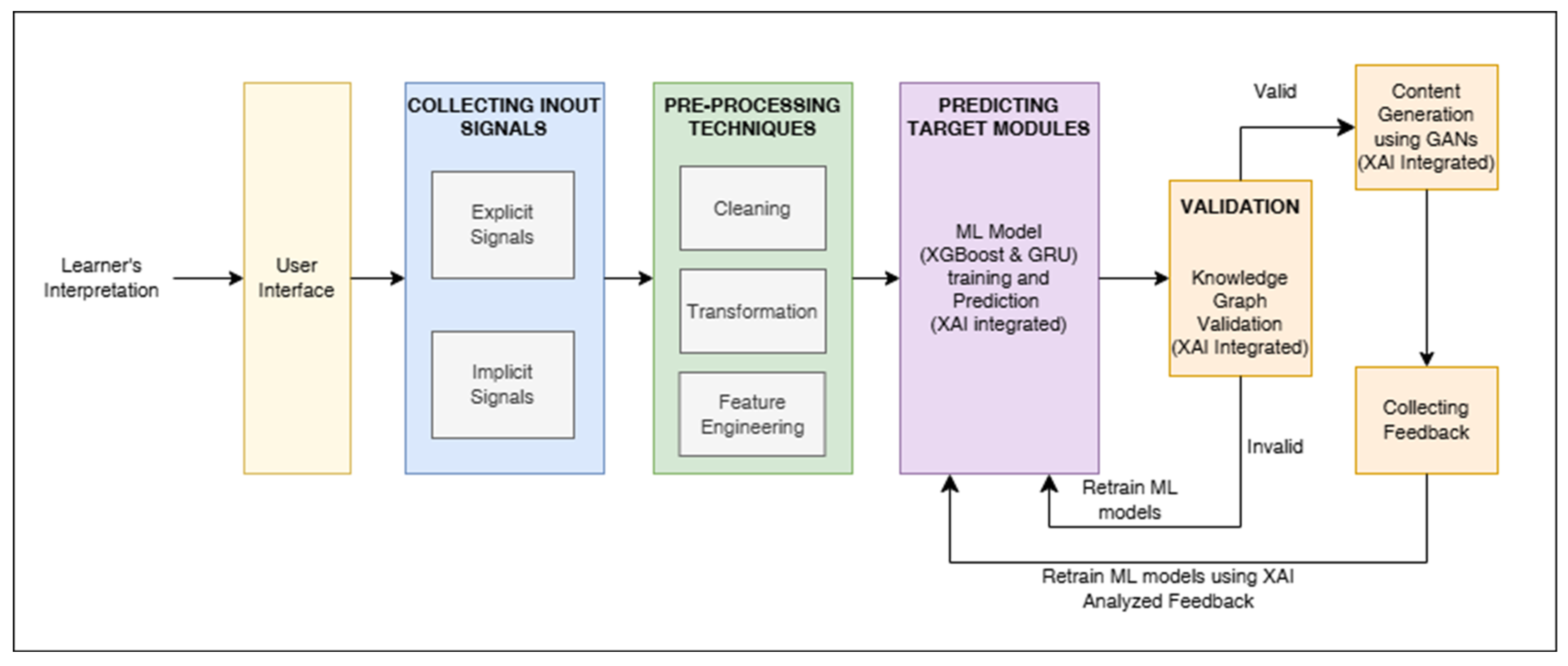

3. Materials And Methodology:

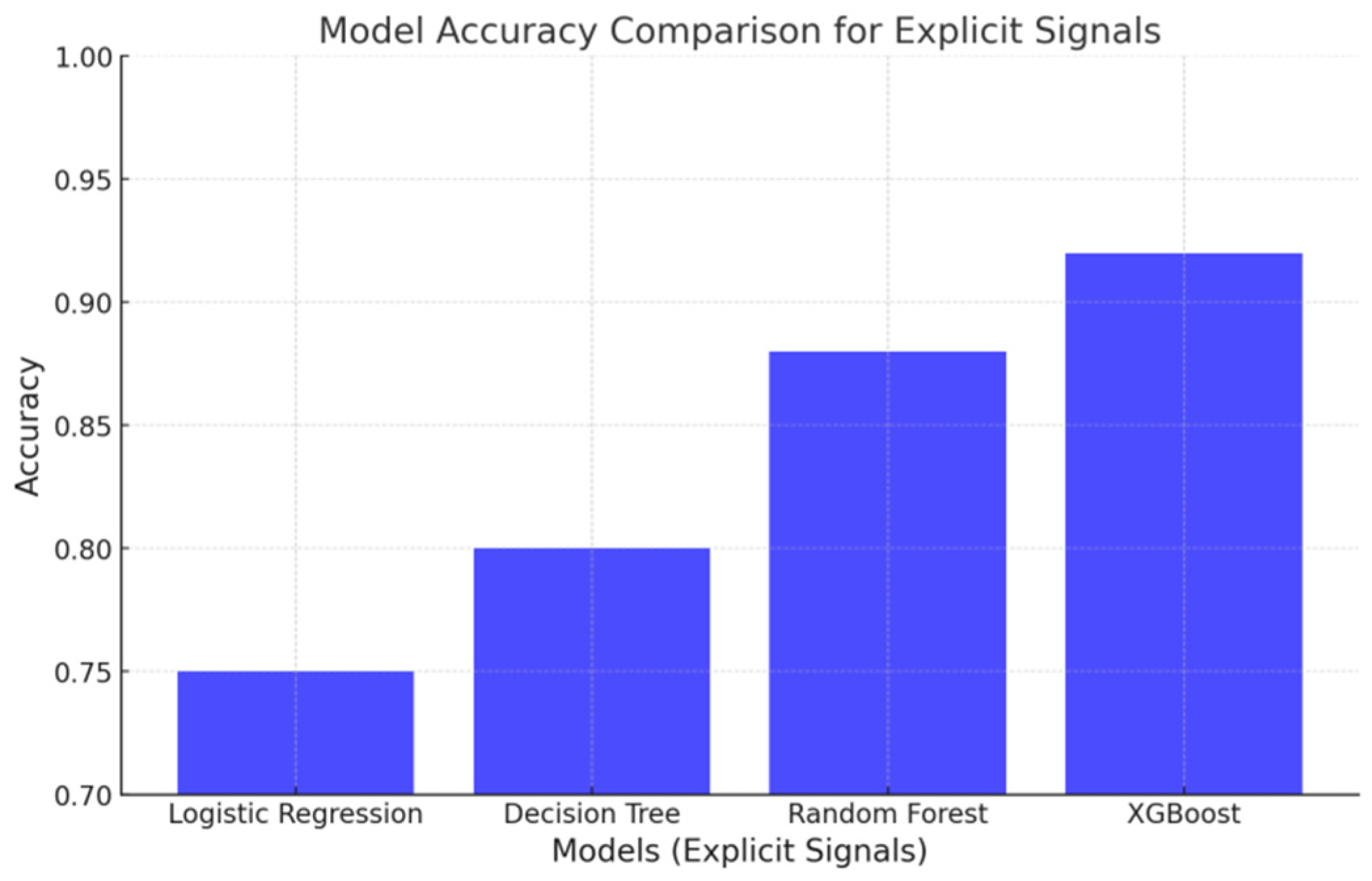

3.1. Comparative Study for Choosing Model Pipelines:

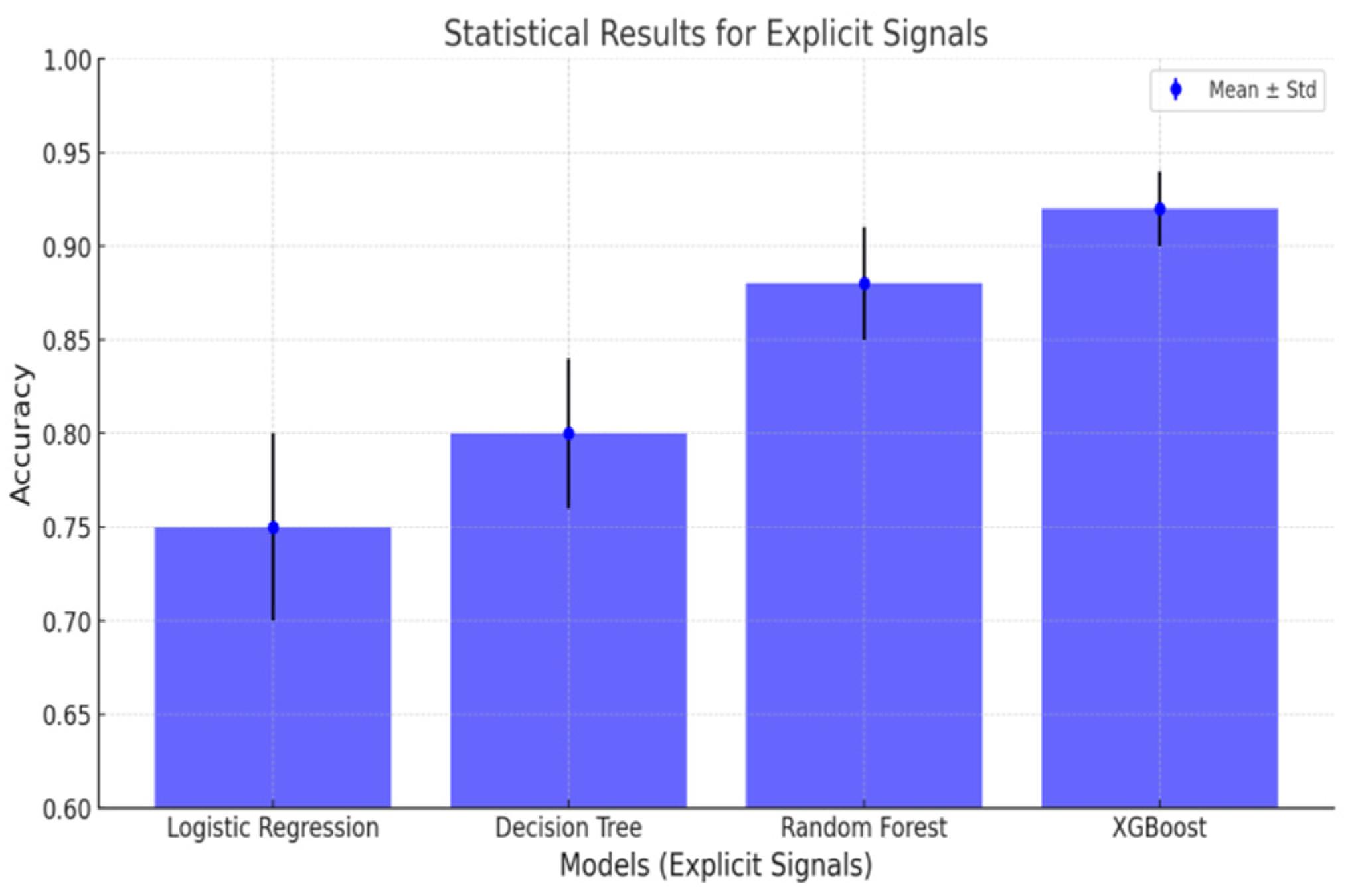

3.1.1. Suitability for Explicit Signals

3.1.2. Reason Behind Selecting XGBoost:

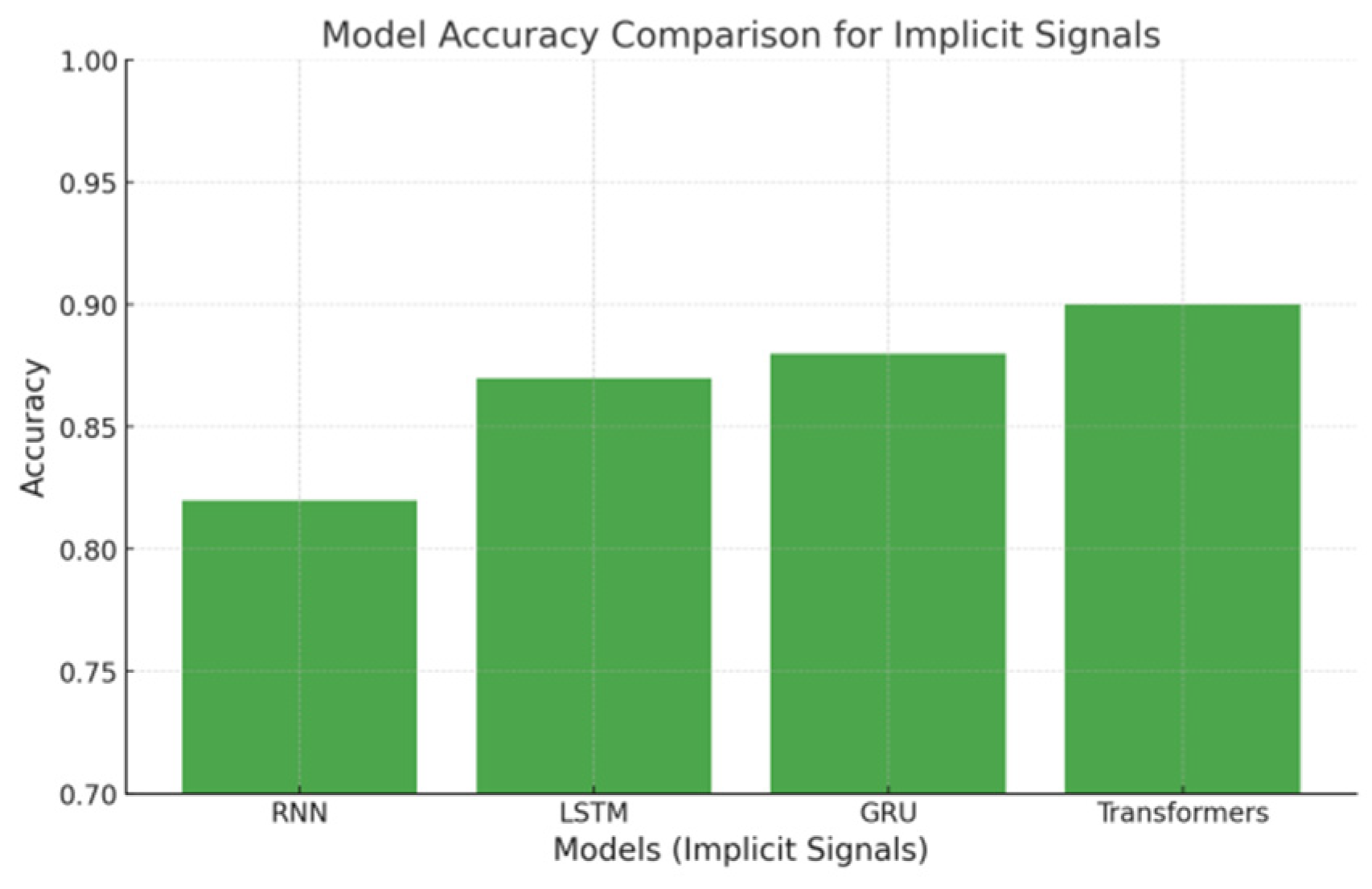

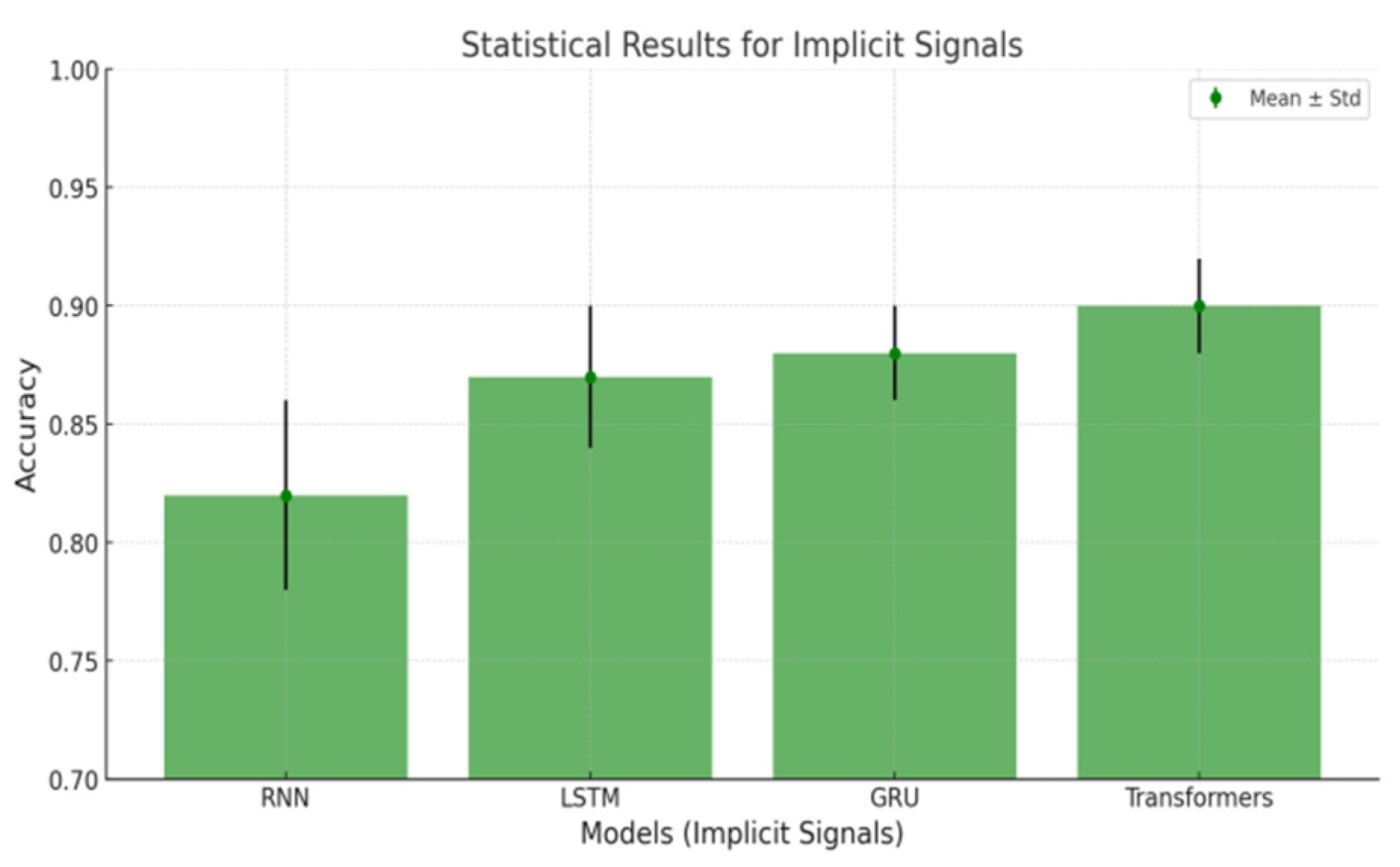

3.1.3. Suitability for Implicit Signals:

3.1.4. Reason Behind Selecting GRU:

3.1.5. Hybrid Approach: Combining XGBoost and GRU

| Model | Purpose | Strengths |

|---|---|---|

| XGBoost | Process explicit signals | High accuracy; robust to overfitting; interpretable. |

| GRU | Process implicit signals | Captures sequential patterns efficiently. |

| Hybrid (Ensemble) | Combine XGBoost and GRU predictions | Achieves higher accuracy and more robust predictions. |

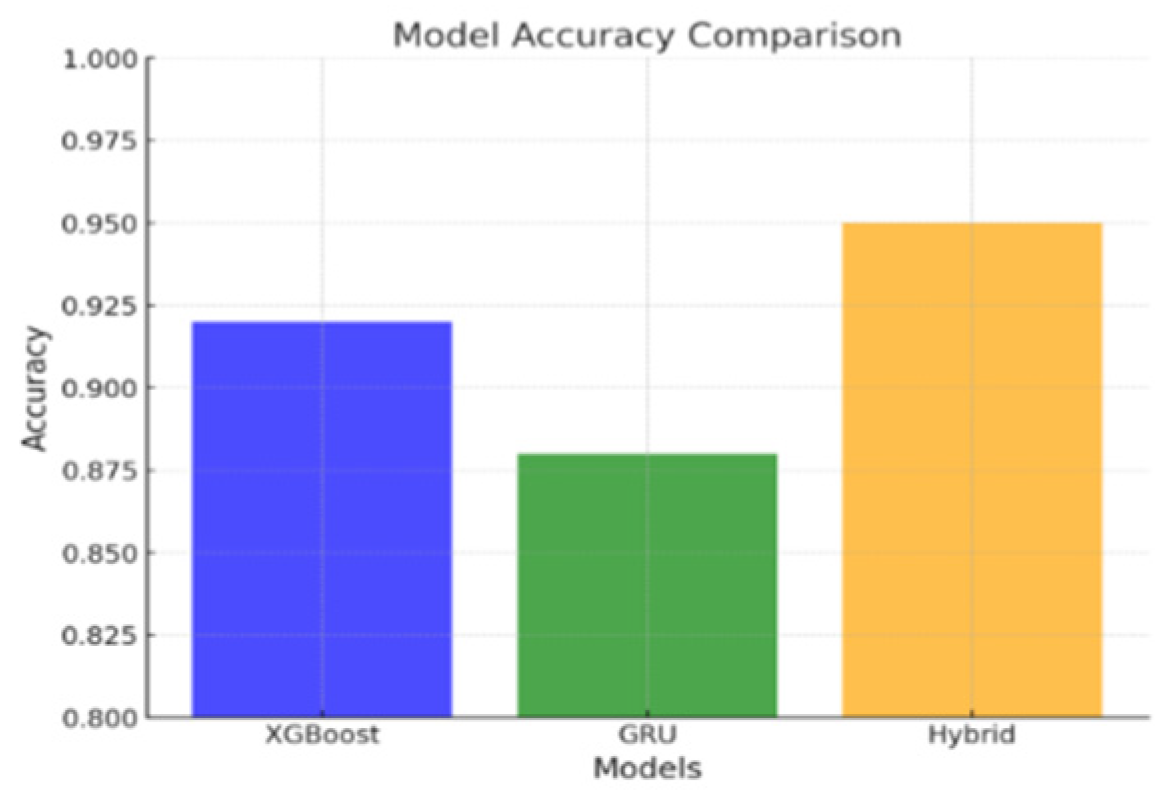

3.1.6. Measuring Performance Metrics:

| Metrics | XGBoost | GRU | Hybrid |

|---|---|---|---|

| Accuracy | 92.0% | 88.0% | 95.0% |

| Precision | 90.0% | 87.0% | 94.0% |

| Recall | 91.0% | 86.0% | 93.0% |

| F1-Score | 90.5% | 86.5% | 93.5% |
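
The F1-scores in the table above can be cross-checked against the reported precision and recall, since F1 is their harmonic mean. The following is a minimal sketch of that check; the function name `f1_score` and the `reported` dictionary are illustrative and not part of the original system.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the table above.
reported = {
    "XGBoost": (90.0, 91.0, 90.5),
    "GRU": (87.0, 86.0, 86.5),
    "Hybrid": (94.0, 93.0, 93.5),
}

# Recompute F1 from precision and recall, rounded to one decimal like the table.
recomputed = {name: round(f1_score(p, r), 1) for name, (p, r, _) in reported.items()}

for name, (_, _, f1_reported) in reported.items():
    print(f"{name}: reported {f1_reported}, recomputed {recomputed[name]}")
```

For all three models the recomputed value agrees with the reported F1-score to one decimal place.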

3.2. Signal Categorization in Personalized Learning Systems

3.3. Preprocessing the Categorized Signals

3.3.1. Preprocessing Explicit Signals

3.3.2. Steps in Preprocessing Explicit Signals

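- Normalization: Each numerical feature is rescaled with min-max normalization:

$$val' = \frac{val - val_{min}}{val_{max} - val_{min}}$$

where:

- $val$: The original value of the feature.

- $val_{min}$: The minimum value of the feature in the dataset.

- $val_{max}$: The maximum value of the feature in the dataset.

- $val'$: The normalized value.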
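
A minimal sketch of this normalization step, assuming the min-max form implied by the definitions above (the normalized value is the original value minus the feature minimum, divided by the feature range). The function name is hypothetical, the handling of a constant feature is an assumption, and the sample values are the pre-test scores of the ten learners reported in the paper.

```python
def min_max_normalize(values):
    """Scale values to [0, 1] via (val - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant feature has no spread, so map it to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Pre-test scores (%) of learners 1-10.
pre_test_scores = [70, 60, 55, 72, 65, 50, 60, 55, 70, 62]
normalized = min_max_normalize(pre_test_scores)

for learner_id, value in enumerate(normalized, start=1):
    print(f"Learner {learner_id}: {value:.3f}")
```

The lowest score (50) maps to 0 and the highest (72) maps to 1, so features measured on different scales become comparable before training.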

3.3.3. Features Captured from Explicit Signals:

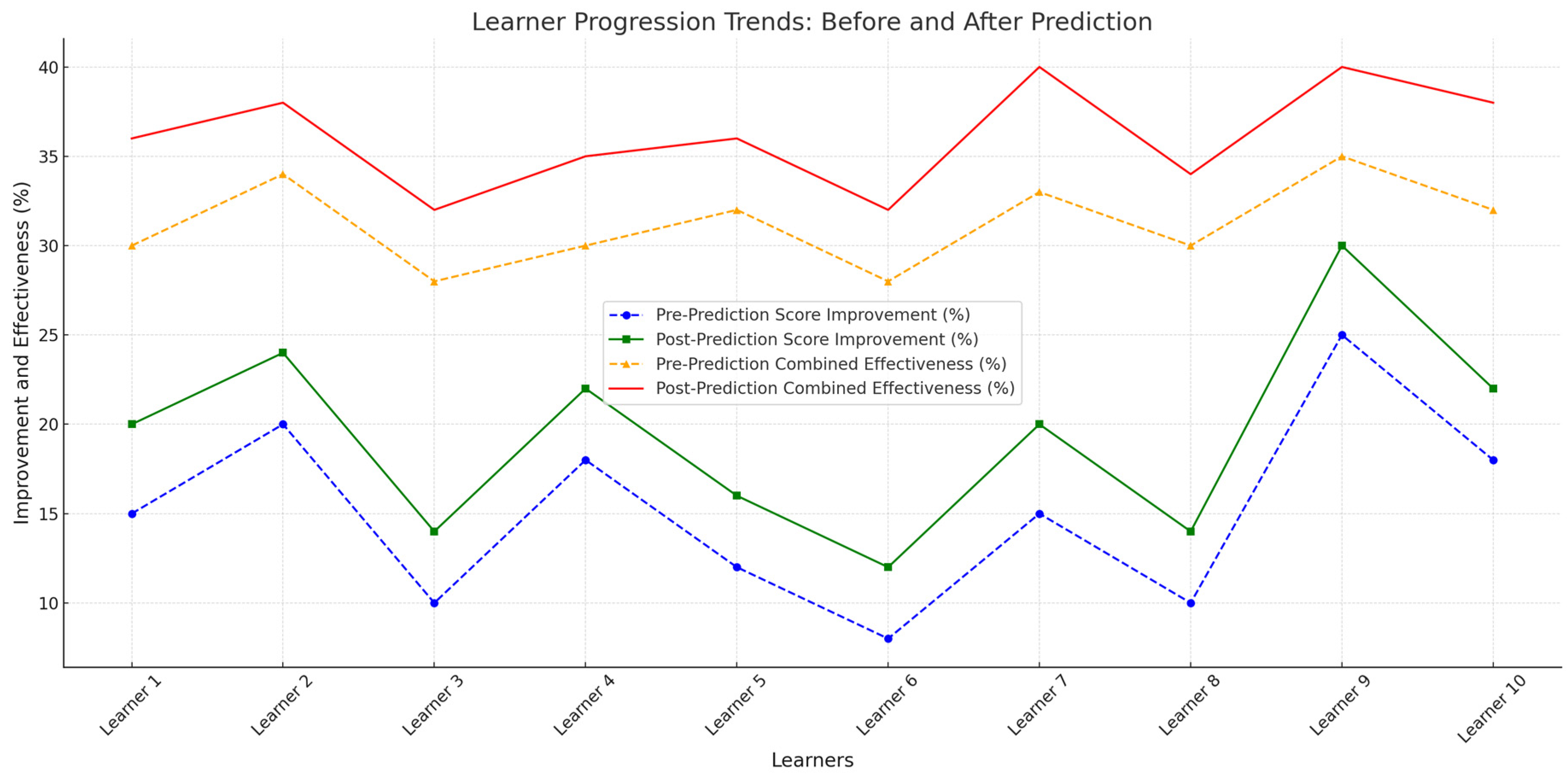

- Score Improvement: The difference between the post-test score and the pre-test score.

- Learners 1, 7, 9, and 10 showed consistent or exceptional improvement with high satisfaction ratings, benefiting from tailored content and valid predictions.

- Learners 2, 5, and 8 demonstrated steady improvement, though they could benefit from advanced challenges or personalized support.

- Learners 3, 4, and 6 had lower improvements or incomplete modules. These learners require additional support through foundational reinforcements, intermediate modules, or engaging content.

3.3.4. Preprocessing Implicit Signals

3.3.5. Steps in Preprocessing Implicit Signals

3.3.6. Features Captured from Implicit Signals:

- Time Spent: Amount of time spent per task

- Retries: Number of times the test has been attempted

- Engagement Rate: The interaction level and their attentiveness can be calculated using

- Click Stream Data: Number of clicks made within a specific module

- Learners 3, 7, and 10 demonstrated strong engagement trends with significant time spent, high click counts, and frequent revisits. These learners are ready for advanced topics and challenges.

- Learners 1, 5, and 8 showed consistent engagement. Providing tailored resources can help them improve their readiness for more complex modules.

- Learners 2, 4, 6, and 9 had minimal interactions, lower revisit counts, and limited time spent. These learners need targeted strategies to boost engagement and improve outcomes.

3.3.8. Combined Preprocessing for Hybrid Model

- Learners 1, 7, 9, and 10 achieved the highest combined effectiveness, driven by strong engagement and explicit improvements. These learners benefit from advanced and exploratory learning paths.

- Learners 2, 5, and 8 demonstrated steady combined effectiveness despite some engagement gaps. Personalized resources can further boost their performance.

- Learners 3, 4, and 6 showed lower combined effectiveness due to limited explicit improvement or low engagement. These learners need targeted interventions:

  - Learner 3: Needs foundational reinforcement despite high engagement.

  - Learner 4 and Learner 6: Require interactive and engaging content to improve both engagement and outcomes.

3.4. Finding the Predicted Target Module

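- Step 1: Process Explicit Signals (XGBoost)

- Input: Explicit signals such as pre-test scores, post-test scores, satisfaction ratings, and module preferences.

- Processing: In the standard XGBoost formulation, trees are added by minimizing a regularized objective, and each split is chosen by its gain:

$$Obj = \sum_{i} L(y_i, \hat{y}_i) + \sum_{k} \Omega(f_k), \qquad \Omega(f) = \gamma T + \frac{1}{2} \lambda \lVert w \rVert^2$$

$$Gain = \frac{1}{2} \left[ \frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda} \right] - \gamma$$

where $\gamma$ and $\lambda$ are regularization coefficients and:

- $L$: Loss function.

- $\Omega$: Regularization term.

- $T$: Number of leaves in the tree.

- $w$: Leaf weights.

- $G_L, G_R$: Gradients for left and right nodes.

- $H_L, H_R$: Hessians for left and right nodes.

- Output: Probability or score indicating the relevance of each module.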
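
To make the roles of the gradients, Hessians, leaf weights, and regularization term concrete, the following sketch evaluates the standard XGBoost split gain and optimal leaf weight for a toy split. It is an illustration only: the function names, the toy labels, and the values of `lam` and `gamma` are assumptions, and a real pipeline would rely on the XGBoost library rather than this hand-written arithmetic.

```python
def leaf_weight(G: float, H: float, lam: float = 1.0) -> float:
    """Optimal leaf weight w* = -G / (H + lambda) for summed gradients G and Hessians H."""
    return -G / (H + lam)


def split_gain(G_L, H_L, G_R, H_R, lam=1.0, gamma=0.0):
    """Half the improvement in structure score from splitting, minus the penalty gamma."""
    def score(G, H):
        return G * G / (H + lam)
    parent = score(G_L + G_R, H_L + H_R)
    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - parent) - gamma


def logistic_grad_hess(label: int, prob: float):
    """First and second derivatives of the log loss with respect to the raw score."""
    return prob - label, prob * (1.0 - prob)


# Hypothetical toy split: three positive examples go left, two negative go right,
# all starting from an initial predicted probability of 0.5.
left = [logistic_grad_hess(1, 0.5) for _ in range(3)]
right = [logistic_grad_hess(0, 0.5) for _ in range(2)]
G_L, H_L = sum(g for g, _ in left), sum(h for _, h in left)
G_R, H_R = sum(g for g, _ in right), sum(h for _, h in right)

gain = split_gain(G_L, H_L, G_R, H_R, lam=1.0, gamma=0.0)
print(f"gain = {gain:.4f}")
print(f"left leaf weight = {leaf_weight(G_L, H_L):.4f}")
print(f"right leaf weight = {leaf_weight(G_R, H_R):.4f}")
```

A split that cleanly separates the two classes yields a positive gain, and raising `gamma` makes the same split less attractive, which is how the regularization term discourages overly deep trees.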

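- Step 2: Process Implicit Signals (GRU)

- Input: Sequential data like time spent, retries, engagement trends, and click stream.

- Processing: GRU processes the temporal dependencies in the data, predicting a score ($\hat{y}_{GRU}$) for each module based on behavioral patterns as follows.

- Update Gate ($z_t$): Determines how much of the previous hidden state to retain:

$$z_t = \sigma(W_z \cdot [h_{t-1}, x_t] + b_z)$$

where:

- $z_t$: Update gate at time $t$.

- $x_t$: Input at time $t$.

- $h_{t-1}$: Previous hidden state.

- $W_z, b_z$: Weights and bias.

- $\sigma$: Sigmoid activation function.

- Reset Gate ($r_t$): Controls how much of the past information to forget:

$$r_t = \sigma(W_r \cdot [h_{t-1}, x_t] + b_r)$$

- Candidate Hidden State ($\tilde{h}_t$): Computes the new information to be added:

$$\tilde{h}_t = \tanh(W_h \cdot [r_t \odot h_{t-1}, x_t] + b_h)$$

- Final Hidden State ($h_t$): Combines the previous hidden state and the candidate hidden state using the update gate:

$$h_t = z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t$$

- Output Prediction ($\hat{y}_{GRU}$): The output is computed from the final hidden state through an output layer with weights $W_o$ and bias $b_o$:

$$\hat{y}_{GRU} = \sigma(W_o \cdot h_t + b_o)$$

- Output: Predicted relevance score for each module.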
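
The gate computations listed above can be sketched as a single GRU step in plain Python. This is an assumption-laden illustration, not the trained model: it uses the common convention in which the update gate weights the previous hidden state and its complement weights the candidate state, randomly initialized weights, and a hypothetical three-session input of two normalized features per session. All names are invented for the example.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, b, v):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def init_params(input_size: int, hidden_size: int, seed: int = 0):
    """Random (W, b) pairs for the update gate z, reset gate r, and candidate state h."""
    rng = random.Random(seed)
    cols = hidden_size + input_size

    def layer():
        W = [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(hidden_size)]
        return W, [0.0] * hidden_size

    return {"z": layer(), "r": layer(), "h": layer()}


def gru_step(x_t, h_prev, params):
    """One GRU time step over Python lists."""
    concat = h_prev + x_t                                    # [h_{t-1}, x_t]
    z = [sigmoid(a) for a in affine(*params["z"], concat)]   # update gate
    r = [sigmoid(a) for a in affine(*params["r"], concat)]   # reset gate
    gated = [ri * hi for ri, hi in zip(r, h_prev)] + x_t     # [r_t * h_{t-1}, x_t]
    h_cand = [math.tanh(a) for a in affine(*params["h"], gated)]
    # Keep a share z of the old state and fill the rest with the candidate.
    return [zi * hp + (1.0 - zi) * hc for zi, hp, hc in zip(z, h_prev, h_cand)]


# Hypothetical per-session features: [normalized time spent, normalized clicks].
sessions = [[0.8, 0.6], [0.5, 0.4], [0.9, 0.7]]
params = init_params(input_size=2, hidden_size=3)

h = [0.0, 0.0, 0.0]
for x_t in sessions:
    h = gru_step(x_t, h, params)
print("final hidden state:", h)
```

Because each step is a convex combination of the previous state and a `tanh` output, the hidden state stays within (-1, 1); an output layer would then map the final state to one relevance score per module.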

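- Step 3: Combine Predictions (Weighted Ensemble)

- The predictions from XGBoost and GRU are combined using a weighted ensemble approach:

$$\hat{y}_{final} = \alpha \cdot \hat{y}_{XGB} + (1 - \alpha) \cdot \hat{y}_{GRU}$$

where:

- $\hat{y}_{XGB}, \hat{y}_{GRU}$: Module relevance scores predicted by XGBoost and GRU.

- $\alpha$: Weight assigned to explicit signals (e.g., 0.6).

- Final Output ($\hat{y}_{final}$): The module with the highest score is selected as the predicted target module.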
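
A minimal sketch of the weighted ensemble step, assuming the implicit-signal weight is the complement of the explicit-signal weight (so a weight of 0.6 for XGBoost leaves 0.4 for GRU). The per-module scores below are hypothetical, as are the function names.

```python
def combine_scores(xgb_scores, gru_scores, alpha=0.6):
    """Blend per-module scores: alpha * XGBoost + (1 - alpha) * GRU."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    modules = xgb_scores.keys() & gru_scores.keys()
    return {m: alpha * xgb_scores[m] + (1.0 - alpha) * gru_scores[m] for m in modules}


def predict_target_module(xgb_scores, gru_scores, alpha=0.6):
    """Return the module with the highest combined score."""
    combined = combine_scores(xgb_scores, gru_scores, alpha)
    return max(combined, key=combined.get)


# Hypothetical relevance scores for a learner who finished "Data Structures".
xgb_scores = {"Algorithms": 0.70, "Trees": 0.55, "Graph Algorithms": 0.40}
gru_scores = {"Algorithms": 0.50, "Trees": 0.80, "Graph Algorithms": 0.30}

target = predict_target_module(xgb_scores, gru_scores, alpha=0.6)
print("predicted target module:", target)
```

With these numbers the behavioral scores tip the decision toward "Trees"; raising the explicit weight to 0.8 would select "Algorithms" instead, which shows how the weight controls the balance between the two signal types.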

3.5. Validating the Predicted Target Module with the Knowledge Graph

3.6. Feedback Loop for Refinement

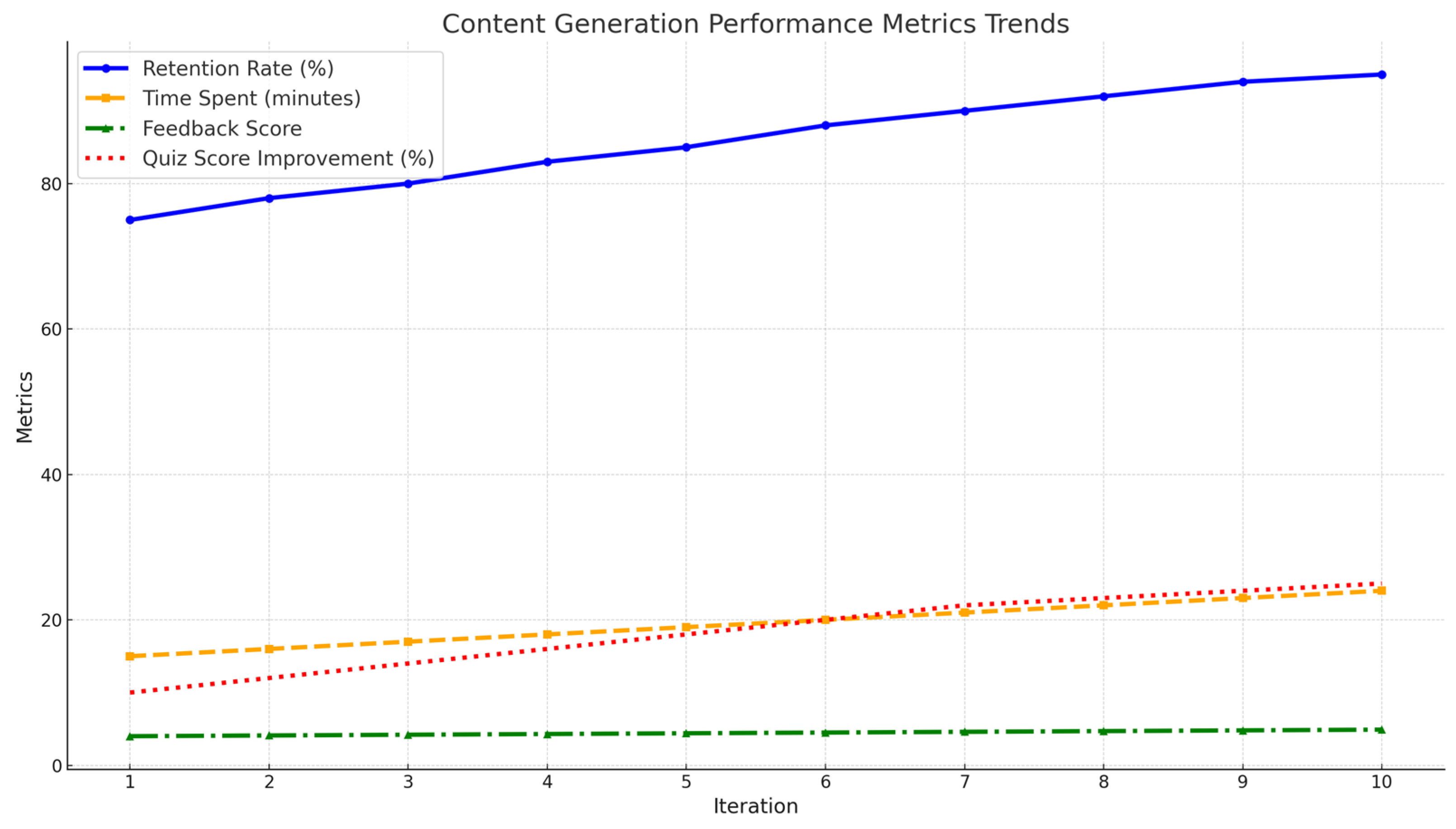

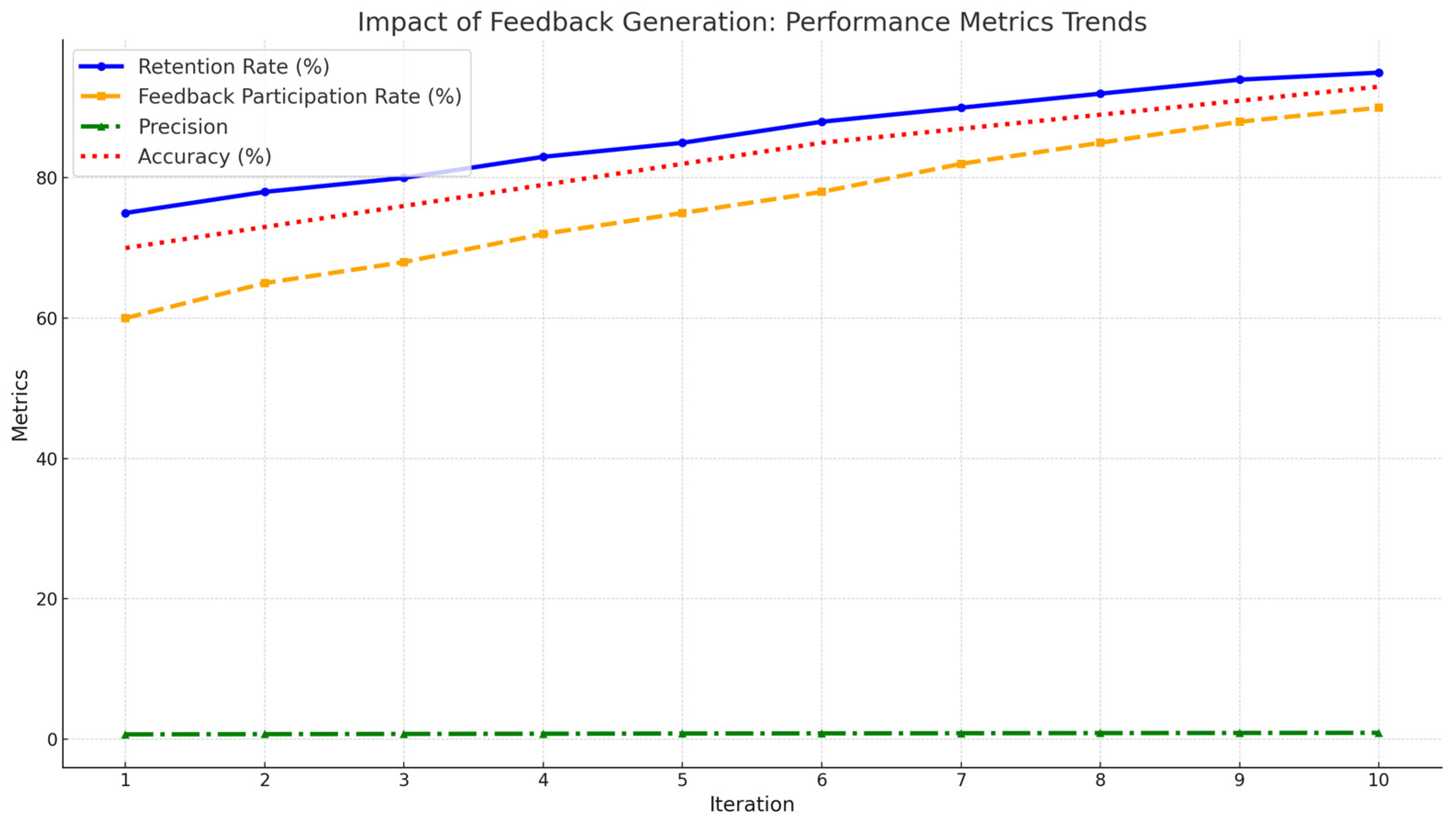

| Iteration | Feedback Collected | Action Taken | Result |

|---|---|---|---|

| 1 | "Module too difficult" - yes (Explicit) | Adjusted the content difficulty by providing foundational concepts before the learner progresses further. | Increased learner satisfaction by 10%. |

| 2 | Low time spent, high revisit counts (Implicit) | Personalized module content (quizzes & hints). | Engagement trends improved by 12%. |

| 3 | "Recommendations are unrelated" - yes (Explicit) | Expanded knowledge graph edges by using a GAN to create personalized intermediate modules. | Reduced invalid predictions by 20%. |

| 4 | High drop-off rate in advanced modules (Implicit) | Introduced intermediate modules dynamically by using a GAN. | Learner retention increased by 15%. |

| 5 | Satisfaction ratings (1-5) inconsistent across modules (Explicit) | Retrained model with updated feature weights. | Prediction accuracy improved by 8%. |

| 6 | Learners skipping certain modules (Implicit) | Ensured learners complete foundational prerequisites before progressing. | Coverage of learning paths improved by 10%. |

| 7 | "Lack of examples in content" - yes (Explicit) | Added GAN-generated examples dynamically. | Learner engagement increased by 14%. |

| 8 | High engagement but low quiz scores (Implicit) | Suggested revision modules before advancing. | Knowledge retention improved by 18%. |

| 9 | Positive feedback on personalized paths - yes (Explicit) | Reinforced current recommendation logic. | System stability and reliability increased. |

| 10 | Learner satisfaction consistently high (Explicit + Implicit) | Scaled system for new users. | Model readiness for deployment confirmed. |

3.7. Incorporation of GANs in DKPS

3.8. Role of GANs in Dynamic Content Generation

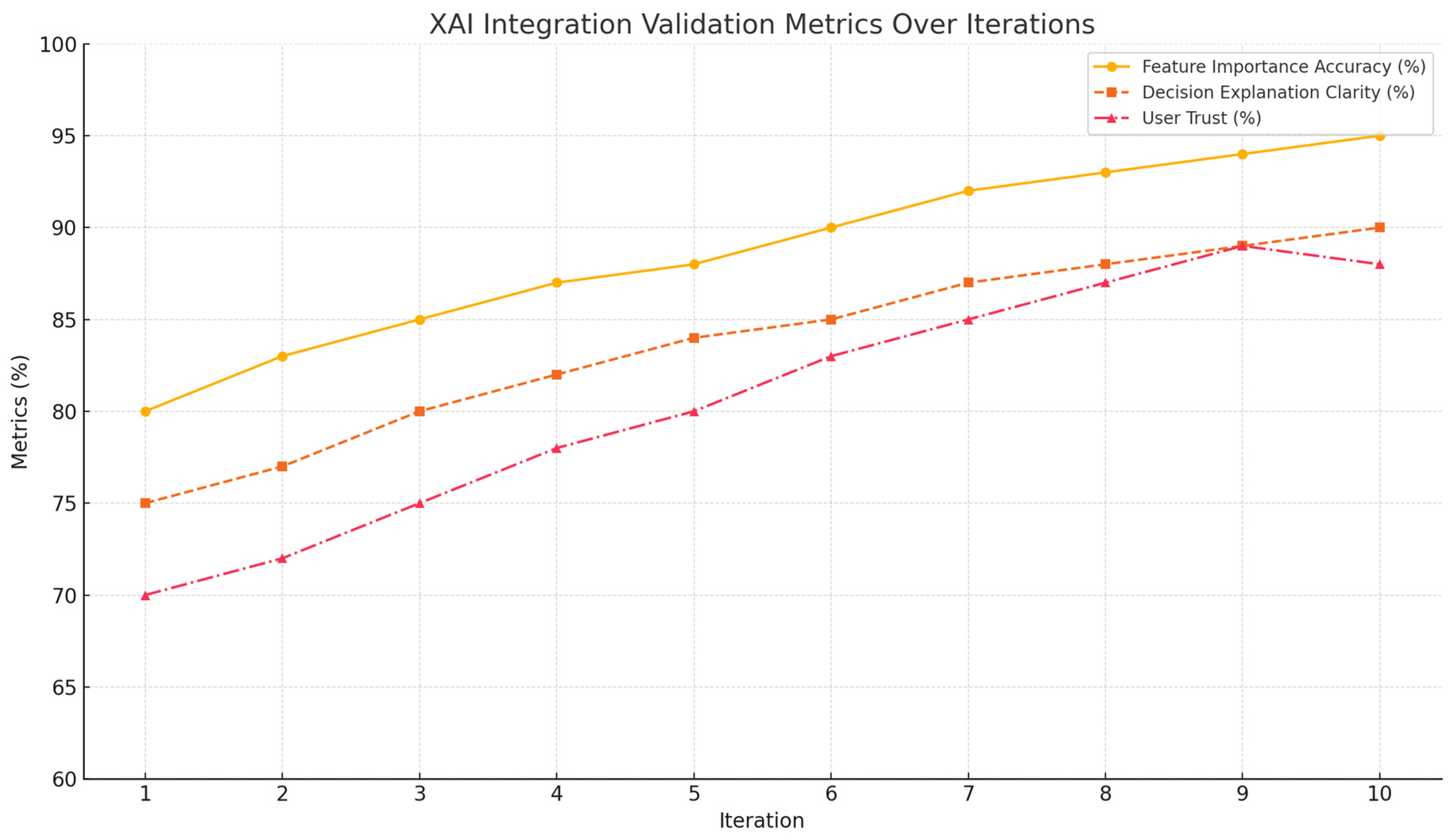

3.9. Explainable AI (XAI) Integration Across All Modules in the Proposed System

4. Dataset

5. Results and Discussion

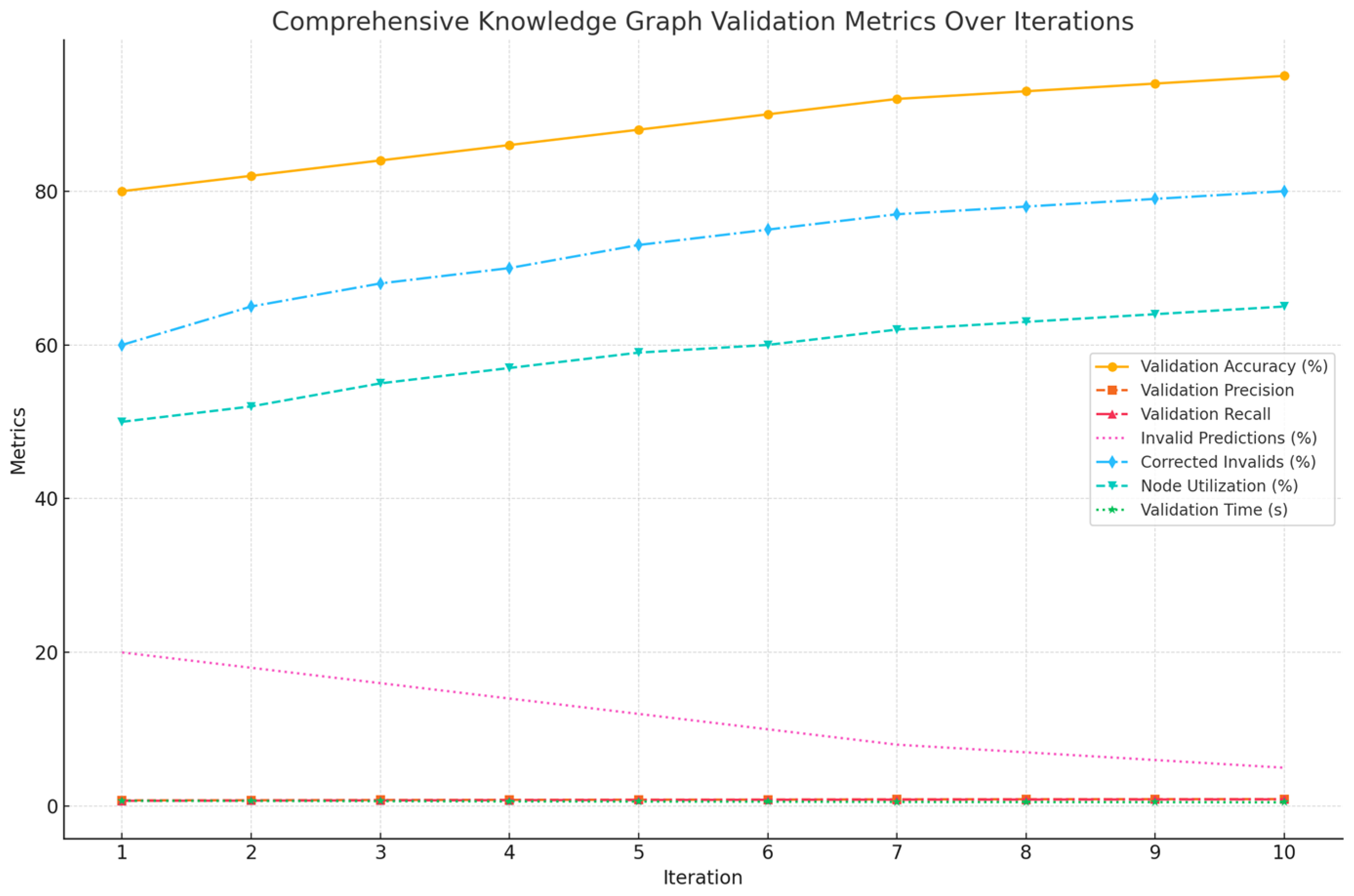

5.1. Analysis of Hybrid models

| Iteration | Accuracy (%) | Precision | Recall | F1-Score |

|---|---|---|---|---|

| 1 | 70 | 0.68 | 0.75 | 0.71 |

| 2 | 73 | 0.71 | 0.77 | 0.74 |

| 3 | 76 | 0.74 | 0.79 | 0.76 |

| 4 | 79 | 0.77 | 0.81 | 0.79 |

| 5 | 82 | 0.80 | 0.83 | 0.81 |

| 6 | 85 | 0.82 | 0.85 | 0.84 |

| 7 | 87 | 0.84 | 0.86 | 0.85 |

| 8 | 89 | 0.86 | 0.87 | 0.87 |

| 9 | 91 | 0.88 | 0.88 | 0.88 |

| 10 | 93 | 0.90 | 0.89 | 0.89 |

5.2. Knowledge Graph Validation

5.3. Content Generation

5.4. Feedback Collection

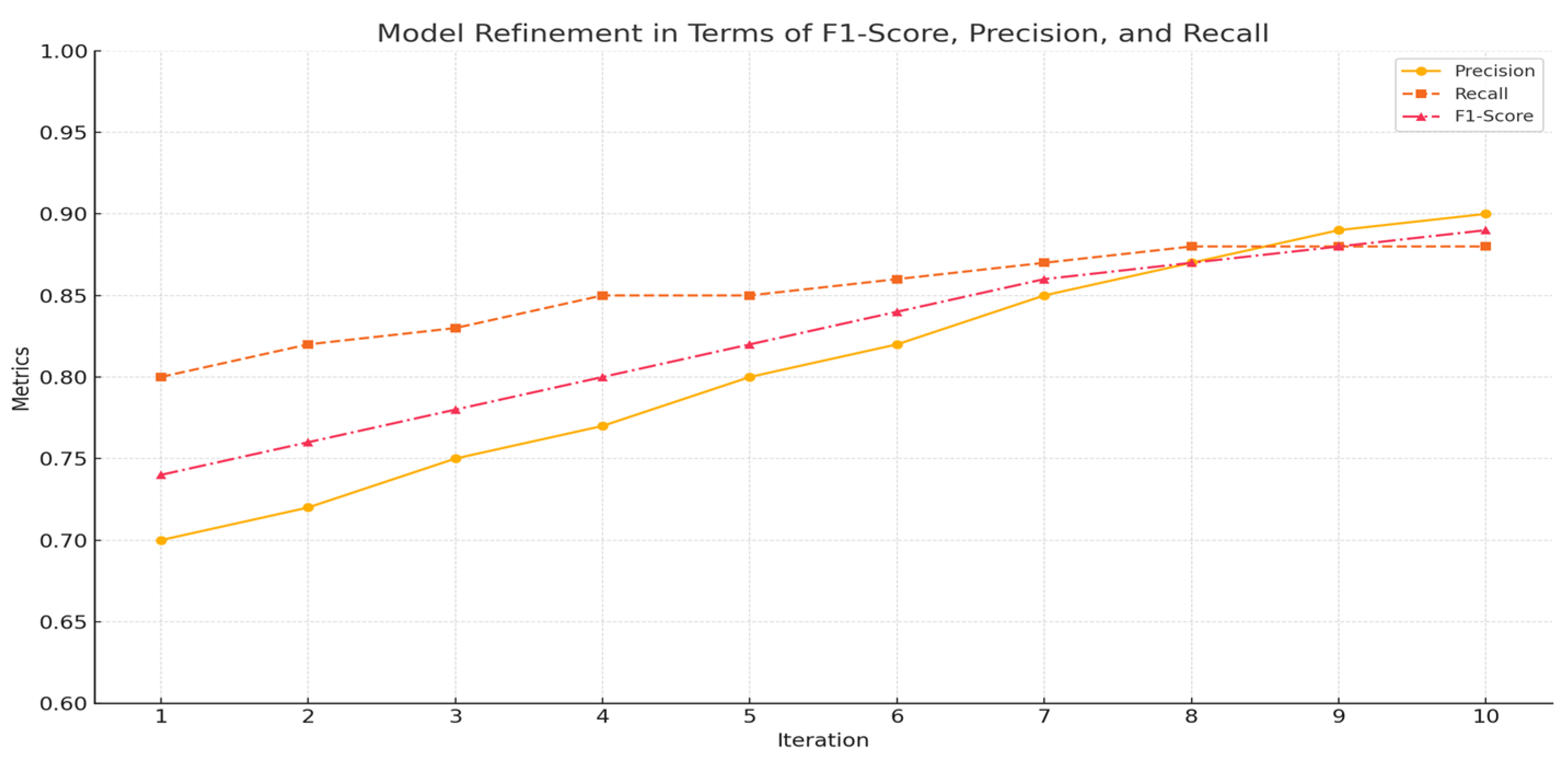

5.5. Refinement of ML Models

5.6. Explainable AI (XAI) Integration:

5.7. Learner’s Progress with DKPS:

6. Conclusion

Abbreviations

| DKPS | Dynamic Knowledge Component Prediction System |

| ML | Machine learning |

| AI | Artificial Intelligence |

| XAI | Explainable Artificial Intelligence |

| GAN | Generative Adversarial Networks |

| XGBoost | eXtreme Gradient Boosting |

| GRU | Gated Recurrent Unit |

| LSTM | Long Short-Term Memory |

| RNN | Recurrent Neural Network |

References

- Imamah, U.L.; Djunaidy, A.; Purnomo, M.H. Development of dynamic personalized learning paths based on knowledge preferences & the ant colony algorithm. IEEE Access 2024, 12, 144193–144207.

- Choi, H.; Lee, H.; Lee, M. Optimal knowledge component extracting model for knowledge concept graph completion in education. IEEE Access 2023, 11, 15002–15013.

- Sharif, M.; Uckelmann, D. Multi-Modal LA in Personalized Education Using Deep Reinforcement Learning. IEEE Access 2024, 12, 54049–54065.

- Wang, Y.; Lai, Y.; Huang, X. Innovations in Online Learning Analytics: A Review of Recent Research and Emerging Trends. IEEE Access 2024, 12, 166761–166775.

- Essa, S.G.; Celik, T.; Human-Hendricks, N.E. Personalized Adaptive Learning Technologies Based on Machine Learning Techniques to Identify Learning Styles: A Systematic Literature Review. IEEE Access 2023, 11, 48392–48409.

- Chen, L.; Chen, P.; Lin, Z. Artificial Intelligence in Education: A Review. IEEE Access 2020, 8, 75264–75278.

- Wang, H.; Tlili, A.; Lehman, J.D.; Lu, H.; Huang, R. Investigating Feedback Implemented by Instructors to Support Online Competency-Based Learning (CBL): A Multiple Case Study. Int. J. Educ. Technol. High. Educ. 2021, 18, 5.

- Smith, J.A.; Johnson, L.M.; Williams, R.T. Student Voice on Generative AI: Perceptions, Benefits, and Challenges in Higher Education. Educ. Technol. Soc. 2023, 26, 45–58.

- Lu, Y.; Ma, N.; Yan, W.Y. Social Comparison Feedback in Online Teacher Training and Its Impact on Asynchronous Collaboration. J. Educ. Comput. Res. 2022, 59, 789–812.

- Lee, S.H.; Kim, J.W.; Park, Y.S. The Influence of E-Learning on Exam Performance and the Role of Achievement Goals in Shaping Learning Patterns. Internet High. Educ. 2021, 50, 100–110.

- Zhang, Y.; Li, X.; Wang, Q. Dynamic Personalized Learning Path Based on Triple Criteria Using Deep Learning and Rule-Based Method. IEEE Trans. Learn. Technol. 2020, 13, 283–294.

- Chen, L.; Zhao, X.; Liu, H. Research on Dynamic Learning Path Recommendation Based on Social Network. Comput. Educ. 2019, 136, 1–10.

- Nagisetty, V.; Graves, L.; Scott, J.; Ganesh, V. XAI-GAN: Enhancing Generative Adversarial Networks via Explainable AI Systems. arXiv preprint arXiv:2002.10438, 2020.

- Zhao, Y.; Sun, X.; Li, H.; Jin, Y. PerFed-GAN: Personalized Federated Learning via GAN. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3405–3415.

- Balasubramanian, J.; Koppaka, S.; Rane, C.; Raul, N. Parameter based survey of recommendation systems. In Proceedings of the Int. Conf. Innov. Comput. Commun. (ICICC); 2020; vol. 1. Available online: https://ssrn.com/abstract=3569579.

- Shibani, A.S.M.; Mohd, M.; Ghani, A.T.A.; Zakaria, M.S.; Al-Ghuribi, S.M. Identification of critical parameters affecting an Elearning recommendation model using Delphi method based on expert validation. Information 2023, 14, 207.

- Vanitha, V.; Krishnan, P.; Elakkiya, R. Collaborative optimization algorithm for learning path construction in Elearning. Comput. Electr. Eng. 2019, 77, 325–338.

- Nabizadeh, A.H.; Gonçalves, D.; Gama, S.; Jorge, J.; Rafsanjani, H.N. Adaptive learning path recommender approach using auxiliary learning objects. Comput. Educ. 2020, 147, 103777.

- Sarkar, S.; Huber, M. Personalized learning path generation in Elearning systems using reinforcement learning and generative adversarial networks. In Proceedings of the IEEE Int. Conf. Syst., Man, Cybern. (SMC), Oct. 2021; pp. 92–99.

- Wang, D.; Zhang, T.; Liu, Y. FOKE: A Personalized and Explainable Education Framework Integrating Foundation Models, Knowledge Graphs, and Prompt Engineering. IEEE Access 2024, 12, 123456–123470.

- Ogata, H.; Flanagan, B.; Takami, K.; Dai, Y.; Nakamoto, R.; Takii, K. EXAIT: Educational XAI Tools for Personalized Learning. Res. Pract. Technol. Enhanc. Learn. 2024, 19, 1–15.

- Zhang, L.; Lin, J.; Borchers, C.; Cao, M.; Hu, X. 3DG: A Framework for Using Generative AI for Handling Sparse Learner Performance Data from Intelligent Tutoring Systems. arXiv preprint arXiv:2402.01746, 2024.

- Maity, S.; Deroy, A. Bringing GAI to Adaptive Learning in Education. arXiv preprint arXiv:2410.10650, 2024.

- Abbes, F.; Bennani, S.; Maalel, A. Generative AI in Education: Advancing Adaptive and Personalized Learning. SN Comput. Sci. 2024, 5, 1154.

- Hariyanto, D.; Kohler, T. An Adaptive User Interface for an E-learning System by Accommodating Learning Style and Initial Knowledge. In Proceedings of the International Conference on Technology and Vocational Teachers (ICTVT 2017); Atlantis Press, 2017; p. 16-2.

- Jeevamol, S.; Renumol, V.G.; Jayaprakash, S. An Ontology-Based Hybrid E-Learning Content Recommender System for Alleviating the Cold-Start Problem. Educ. Inf. Technol. 2021, 26, 7259–7283.

- Elshani, L.; PirevaNuçi, K. Constructing a Personalized Learning Path Using Genetic Algorithms Approach. arXiv preprint 2021, arXiv:2104.11276.

- Sorva, J.; Sirkiä, A. Dynamic Programming - Structure, Difficulties and Teaching. In Proceedings of the 2013 IEEE Front. Educ. Conf. (FIE) 2013, 1685–1691.

- Sharma, V.; Kumar, A. Smart Education with Artificial Intelligence Based Determination of Learning Styles. Procedia Comput. Sci. 2018, 132, 834–842.

- Allioui, Y.; Chergui, M.; Bensebaa, T.; Belouadha, F.Z. Combining Supervised and Unsupervised Machine Learning Algorithms to Predict the Learners’ Learning Styles. Procedia Comput. Sci. 2019, 148, 87–96.

- Hmedna, B.; El Mezouary, A.; Baz, O.; Mammass, D. Identifying and Tracking Learning Styles in MOOCs: A Neural Networks Approach. Int. J. Innov. Appl. Stud. 2017, 19, 267–275.

- Alghazzawi, D.; Said, N.; Alhaythami, R.; Alotaibi, M. A Survey of Artificial Intelligence Techniques Employed for Adaptive Educational Systems within E-Learning Platforms. J. Artif. Intell. Soft Comput. Res. 2017, 7, 47–64.

- Nagisetty, V.; Graves, L.; Scott, J.; Ganesh, V. xAI-GAN: Enhancing Generative Adversarial Networks via Explainable AI Systems. arXiv preprint arXiv:2002.10438, 2020.

- Arslan, R.C.; Zapata-Rivera, D.; Lin, L. Opportunities and Challenges of Using Generative AI to Personalize Educational Assessments. Front. Artif. Intell. 2024, 7, 1460651.

- Schneider, J. Explainable Generative AI (GenXAI): A Survey, Conceptualization, and Research Agenda. Artif. Intell. Rev. 2024, 57, 289.

- Zhang, Y.-W.; Xiao, Q.; Song, Y.-L.; Chen, M.-M. Learning Path Optimization Based on Multi-Attribute Matching and Variable Length Continuous Representation. Symmetry 2022, 14, 2360.

- Yu, H.; Guo, Y. Generative Artificial Intelligence Empowers Educational Reform: Current Status, Issues, and Prospects. Front. Educ. 2023, 8, 1183162.

- Carreon, A.; Goldman, S. Artificial Intelligence to Help Special Education Teacher Workload. Center for Innovation, Design, and Digital Learning, 2024.

- Sajja, R.; Sermet, Y.; Cikmaz, M.; Cwiertny, D.; Demir, I. Artificial Intelligence-Enabled Intelligent Assistant for Personalized and Adaptive Learning in Higher Education. Information 2024, 15, 596.

- Jaboob, M.; Hazaimeh, M.; Al-Ansi, A.M. Integration of Generative AI Techniques and Applications in Student Behavior and Cognitive Achievement in Arab Higher Education. Int. J. Educ. Technol. High. Educ. 2024, 21, 45.

- Mao, J.; Chen, B.; Liu, J.C. Generative Artificial Intelligence in Education and Its Implications for Assessment. TechTrends 2024, 68, 58–66.

- Lai, J.W. Adapting Self-Regulated Learning in an Age of Generative Artificial Intelligence Chatbots. Future Internet 2024, 16, 218.

- Pesovski, I.; Santos, R.M.; Henriques, R.; Trajkovik, V. Generative AI for Customizable Learning Experiences. Sustainability 2024, 16, 3034.

- Liu, H.; Zhu, Y.; Wu, Z. Knowledge Graph-Based Behavior Denoising and Preference Learning for Sequential Recommendation. IEEE Trans. Knowl. Data Eng. 2024, 36, 2490–2503.

- Choi, H.; Lee, H.; Lee, M. Optimal Knowledge Component Extracting Model for Knowledge Concept Graph Completion in Education. IEEE Access 2023, 11, 15002–15013.

- Liang, X. Learning Personalized Modular Network Guided by Structured Knowledge. Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 2019, 8944–8952.

- Sarkar, S.; Huber, M. Personalized Learning Path Generation in E-Learning Systems Using Reinforcement Learning and Generative Adversarial Networks. Proc. IEEE Int. Conf. Syst. Man Cybern. 2021, 1425–1432.

| Signal Type | Description | Examples |

|---|---|---|

| Explicit Signals | Direct inputs provided by the learner or system. | |

| Implicit Signals | Learner’s behavioural data representing how they learn. | |

| Research | Total Number of Parameters | Learner’s characteristics | Dynamic Personalised learning path | Feedback-driven & Interpretable |

|---|---|---|---|---|

| Kamsa (Kamsa et al., 2018) | 2 Parameters | Level of knowledge and learners’ history | Static | No |

| Vanitha (Vanitha et al., 2019) | 3 parameters | Learner emotion, cognitive ability and difficulty level of learning objective | Static | No |

| Kardan (Kardan et al., 2014) | 2 parameters | Pre-test value, grouping the learners’ category | Static | No |

| Alma (Rodriguez-Medina et al., 2022) | 2 parameters | Preference to knowledge level of the student and learning status | Static | No |

| Saadia Gutta Essa 2023 | 2 Parameters | Relevant data of learner through browser such as Browsing history & Collaborate data | Static | No |

| Hiroaki Ogata 2024 | 3 Parameters through Learner’s analytics tool | log data, survey data and assessment data | Static | No |

| Imamah Aug 2024 | 5 Parameters through created dashboard | Knowledge level, self-estimation, initial module, target module and difficulty level of the learning object | Dynamic | No |

| Imamah Dec 2024 | Through Item Response Theory (IRT) framework | Parameter utilized by different models focuses on difficulty level, discrimination, guessing, and carelessness. | Dynamic | No |

| Proposed Approach | Explicit & implicit parameters with GAN & XAI implemented | Hybrid ML models | Dynamic | Incorporated |

| Model | Performance |

|---|---|

| Logistic Regression | Low accuracy for complex datasets. |

| Decision Tree | Moderate accuracy, high variance. |

| Random Forest | High accuracy, moderate efficiency. |

| Support Vector Machine (SVM) | Moderate accuracy, slow performance. |

| XGBoost | Best accuracy and efficiency. |

| Model | Performance |

|---|---|

| Recurrent Neural Network (RNN) | Moderate accuracy, high variance. |

| Long Short-Term Memory (LSTM) | High accuracy, slower training. |

| Gated Recurrent Unit (GRU) | High accuracy, fast training. |

| Transformers | Best accuracy, very slow. |

| Signal Type | Signal Name | Description | Example Source |

|---|---|---|---|

| Explicit | Pre-test Score | Measures prior knowledge before starting a module. | Pre-test assessment |

| Explicit | Post-test Score | Assesses knowledge improvement after completing a module. | Post-test assessment |

| Explicit | Satisfaction Rating | Indicates learner feedback on the module’s quality or difficulty. | Learner feedback form |

| Explicit | Module Completion Status | Tracks whether the learner has successfully completed the module. | System logs |

| Explicit | Initial Module | Content that the learner has chosen. | Content information |

| Implicit | Time Spent on Module | Measures total time the learner spends engaging with a module. | System usage logs |

| Implicit | Click Count | Tracks the number of clicks or interactions made within the learning system. | System interaction logs |

| Implicit | Engagement Trends | Frequency of interaction with quizzes, videos, or simulations. | Interaction trackers |

| Implicit | Revisit Count | Number of times the learner revisits specific content. | System logs |

| Step | Category | Description |

|---|---|---|

| Data Cleaning | -- | Handle missing values (e.g., imputation with mean/median); remove duplicate records. |

| Transformation | Feature Scaling | Normalize or standardize numerical features to bring them to the same scale. |

| Transformation | Conversion | Convert categorical variables into numerical representations. |

| Transformation | Outlier Detection | Identify and remove extreme values that may skew the model’s performance. |

| Feature Engineering | -- | Extract features such as normalized values and score improvement to enhance model performance. |

| Learner ID | Pre-Test Score (%) | Post-Test Score (%) | Satisfaction Rating (1–5) | Module Completion Status (1=Completed, 0=Not Completed) | Score Improvement (%) | Initial Module |

|---|---|---|---|---|---|---|

| 1 | 70 | 85 | 5 | 1 | 15 | Data Structures |

| 2 | 60 | 80 | 4 | 1 | 20 | Data Structures |

| 3 | 55 | 65 | 5 | 1 | 10 | Algorithms |

| 4 | 72 | 90 | 3 | 0 | 18 | Binary Trees |

| 5 | 65 | 77 | 4 | 1 | 12 | Graph Algorithms |

| 6 | 50 | 58 | 3 | 0 | 8 | SQL Basics |

| 7 | 60 | 75 | 5 | 1 | 15 | Testing |

| 8 | 55 | 65 | 4 | 1 | 10 | Networking |

| 9 | 70 | 95 | 5 | 1 | 25 | Machine Learning |

| 10 | 62 | 80 | 4 | 1 | 18 | Data Analytics |
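
The Score Improvement column in the table above is consistent with a simple difference between post-test and pre-test scores. The sketch below recomputes it from the tabulated values; the variable names are illustrative.

```python
# (learner ID, pre-test %, post-test %) copied from the table above.
learners = [
    (1, 70, 85), (2, 60, 80), (3, 55, 65), (4, 72, 90), (5, 65, 77),
    (6, 50, 58), (7, 60, 75), (8, 55, 65), (9, 70, 95), (10, 62, 80),
]

# Score improvement in percentage points: post-test minus pre-test.
improvement = {learner_id: post - pre for learner_id, pre, post in learners}

for learner_id, delta in improvement.items():
    print(f"Learner {learner_id}: +{delta}")
```

Every recomputed value matches the tabulated Score Improvement, for example 15 for Learner 1 and 25 for Learner 9.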

| Step | Category | Description |

|---|---|---|

| Data Cleaning | -- | Remove incomplete sequences; handle missing time steps using interpolation or padding. |

| Transformation | Normalization | Scale sequential data to a fixed range to improve convergence during model training. |

| Transformation | Sequence Padding | Ensure all sequences are of the same length by padding shorter sequences or truncating longer ones. |

| Transformation | Categorical Conversion | Encode sequential categorical data into numerical format. |

| Feature Engineering | -- | Extract features such as time spent, retries, engagement rate, and click rate to improve the representation of the learner’s knowledge domain. |

| Learner ID | Initial Module | Time Spent (minutes) | Click Count | Engagement Trend (Low=1, Med=2, High=3) | Revisit Count | Padded Sequence |

|---|---|---|---|---|---|---|

| 1 | Data Structures | 50 | 30 | 2 | 3 | [50, 30, 2, 3, 0, 0] |

| 2 | Data Structures | 25 | 20 | 1 | 1 | [25, 20, 1, 1, 0, 0] |

| 3 | Algorithms | 60 | 50 | 3 | 5 | [60, 50, 3, 5, 0, 0] |

| 4 | Binary Trees | 15 | 10 | 1 | 1 | [15, 10, 1, 1, 0, 0] |

| 5 | Graph Algorithms | 45 | 28 | 2 | 2 | [45, 28, 2, 2, 0, 0] |

| 6 | SQL Basics | 20 | 15 | 1 | 1 | [20, 15, 1, 1, 0, 0] |

| 7 | Testing | 55 | 40 | 3 | 3 | [55, 40, 3, 3, 0, 0] |

| 8 | Networking | 35 | 25 | 2 | 2 | [35, 25, 2, 2, 0, 0] |

| 9 | Machine Learning | 10 | 8 | 1 | 1 | [10, 8, 1, 1, 0, 0] |

| 10 | Data Analytics | 65 | 45 | 3 | 5 | [65, 45, 3, 5, 0, 0] |
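
The Padded Sequence column above can be reproduced with a small helper that pads short sequences with zeros and truncates long ones. This is a sketch under the assumption that padding is appended at the end to a fixed length of 6, as the tabulated sequences suggest; the function name is hypothetical.

```python
def pad_sequence(seq, length, pad_value=0):
    """Return seq padded at the end with pad_value, or truncated, to exactly `length` items."""
    if len(seq) >= length:
        return list(seq[:length])
    return list(seq) + [pad_value] * (length - len(seq))


# Learner 1: [time spent, click count, engagement trend, revisit count].
learner_1 = [50, 30, 2, 3]
padded = pad_sequence(learner_1, length=6)
print(padded)
```

Padding to a common length lets sequences of different sizes be stacked into one batch for the GRU.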

| Learner ID | Initial Module | Pre-Test Score (%) | Post-Test Score (%) | Improvement (%) | Satisfaction Rating (1-5) | Completion Status (1=Completed) | Time Spent (minutes) | Click Count | Engagement Trend (Low=1, Med=2, High=3) | Revisit Count | Knowledge Graph Validation Outcome |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Data Structures | 70 | 85 | 15 | 5 | 1 | 50 | 30 | 2 | 3 | Valid |

| 2 | Data Structures | 60 | 80 | 20 | 4 | 1 | 25 | 20 | 1 | 1 | Invalid |

| 3 | Algorithms | 55 | 65 | 10 | 5 | 1 | 60 | 50 | 3 | 5 | Valid |

| 4 | Binary Trees | 72 | 90 | 18 | 3 | 0 | 15 | 10 | 1 | 1 | Invalid |

| 5 | Graph Algorithms | 65 | 77 | 12 | 4 | 1 | 45 | 28 | 2 | 2 | Valid |

| 6 | SQL Basics | 50 | 58 | 8 | 3 | 0 | 20 | 15 | 1 | 1 | Invalid |

| 7 | Testing | 60 | 75 | 15 | 5 | 1 | 55 | 40 | 3 | 3 | Valid |

| 8 | Networking | 55 | 65 | 10 | 4 | 1 | 35 | 25 | 2 | 2 | Valid |

| 9 | Machine Learning | 70 | 95 | 25 | 5 | 1 | 10 | 8 | 1 | 1 | Valid |

| 10 | Data Analytics | 62 | 80 | 18 | 4 | 1 | 65 | 45 | 3 | 5 | Invalid |

| Initial Module | Target Module 1 | Target Module 2 | Target Module 3 |

|---|---|---|---|

| Data Structures | Algorithms | Trees | Graph Algorithms |

| Algorithms | Dynamic Programming | Graph Algorithms | Machine Learning |

| Binary Trees | Graph Algorithms | Advanced Trees | Segment Trees |

| Graph Algorithms | Shortest Path Algorithms | Network Flow | Advanced Graph Theory |

| SQL Basics | Database Optimization | Advanced SQL | Data Warehousing |

| Testing | Integration Testing | System Testing | Performance Testing |

| Networking | Operating Systems | Network Security | Cloud Networking |

| Machine Learning | Deep Learning | Natural Language Processing | Reinforcement Learning |

| Data Analytics | Big Data Analytics | Business Intelligence | Visualization Techniques |

| Operating Systems | Memory Management | Process Scheduling | Virtualization |
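
One simple way to use the module table above for validation is to store it as an adjacency map and check whether a predicted module is a listed successor of the learner's current module. The sketch below makes exactly that assumption; it is a plain successor lookup with hypothetical names, and it does not model prerequisite fallbacks or learner readiness, which the validation outcomes reported in the paper also appear to take into account.

```python
# Successor modules per initial module, copied from the table above.
KNOWLEDGE_GRAPH = {
    "Data Structures": {"Algorithms", "Trees", "Graph Algorithms"},
    "Algorithms": {"Dynamic Programming", "Graph Algorithms", "Machine Learning"},
    "Binary Trees": {"Graph Algorithms", "Advanced Trees", "Segment Trees"},
    "Graph Algorithms": {"Shortest Path Algorithms", "Network Flow", "Advanced Graph Theory"},
    "SQL Basics": {"Database Optimization", "Advanced SQL", "Data Warehousing"},
    "Testing": {"Integration Testing", "System Testing", "Performance Testing"},
    "Networking": {"Operating Systems", "Network Security", "Cloud Networking"},
    "Machine Learning": {"Deep Learning", "Natural Language Processing", "Reinforcement Learning"},
    "Data Analytics": {"Big Data Analytics", "Business Intelligence", "Visualization Techniques"},
    "Operating Systems": {"Memory Management", "Process Scheduling", "Virtualization"},
}


def is_valid_successor(current: str, predicted: str, graph=KNOWLEDGE_GRAPH) -> bool:
    """True if `predicted` is a direct successor of `current` in the graph."""
    return predicted in graph.get(current, set())


print(is_valid_successor("Data Structures", "Algorithms"))        # direct successor
print(is_valid_successor("Data Structures", "Machine Learning"))  # skips Algorithms
```

A prediction that fails this check can be redirected to a listed successor or trigger the generation of an intermediate module.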

| Learner ID | Current Module | Explicit Signals | Implicit Signals | Combined Signals | Predicted Module | Knowledge Graph Validation Outcome |

|---|---|---|---|---|---|---|

| Learner 1 | Data Structures | Score Improvement: 15%, Satisfaction: 4, Completion: Yes | Time Spent: 50 mins, Clicks: 30, Engagement: Medium | High readiness; Consistent engagement | Algorithms | Valid (Algorithms is a direct successor of Data Structures) |

| Learner 2 | Data Structures | Score Improvement: 20%, Satisfaction: 5, Completion: Yes | Time Spent: 40 mins, Clicks: 25, Engagement: High | Strong readiness; Highly engaged | Machine Learning | Invalid (Machine Learning requires prior knowledge of Algorithms) |

| Learner 3 | Algorithms | Score Improvement: 10%, Satisfaction: 3, Completion: No | Time Spent: 25 mins, Clicks: 15, Engagement: Low | Needs review; Weak engagement | Data Structures | Valid (Data Structures is a prerequisite for Algorithms) |

| Learner 4 | Binary Trees | Score Improvement: 18%, Satisfaction: 5, Completion: Yes | Time Spent: 60 mins, Clicks: 35, Engagement: High | Advanced readiness; Highly engaged | Graph Algorithms | Invalid (Graph Algorithms does not directly depend on Binary Trees) |

| Learner 5 | Graph Algorithms | Score Improvement: 12%, Satisfaction: 4, Completion: Yes | Time Spent: 50 mins, Clicks: 30, Engagement: Medium | Consistent readiness; Good engagement | Shortest Path Algorithms | Valid (Shortest Path Algorithms is an advanced topic after Graph Algorithms) |

| Learner 6 | SQL Basics | Score Improvement: 8%, Satisfaction: 3, Completion: No | Time Spent: 30 mins, Clicks: 20, Engagement: Low | Weak readiness; Low engagement | Database Optimization | Invalid (Database Optimization requires foundational database knowledge); more content should be generated to reinforce SQL Basics |

| Learner 7 | Testing | Score Improvement: 15%, Satisfaction: 4, Completion: Yes | Time Spent: 45 mins, Clicks: 25, Engagement: Medium | Consistent readiness; Good engagement | Integration Testing | Valid (Integration Testing builds on Testing) |

| Learner 8 | Networking | Score Improvement: 10%, Satisfaction: 3, Completion: No | Time Spent: 20 mins, Clicks: 15, Engagement: Low | Needs review; Weak engagement | Operating Systems | Valid (Operating Systems builds on Networking concepts) |

| Learner 9 | Machine Learning | Score Improvement: 25%, Satisfaction: 5, Completion: Yes | Time Spent: 65 mins, Clicks: 45, Engagement: High | Strong readiness; Excellent engagement | Deep Learning | Valid (Deep Learning is the next step after Machine Learning) |

| Learner 10 | Data Analytics | Score Improvement: 18%, Satisfaction: 4, Completion: Yes | Time Spent: 50 mins, Clicks: 30, Engagement: Medium | High readiness; Good engagement | Artificial Intelligence | Invalid (Artificial Intelligence is unrelated to Data Analytics in the KG) |

| Iteration | Precision | Recall | F1-Score |

|---|---|---|---|

| 1 | 0.70 | 0.80 | 0.74 |

| 2 | 0.72 | 0.82 | 0.76 |

| 3 | 0.75 | 0.83 | 0.78 |

| 4 | 0.77 | 0.85 | 0.80 |

| 5 | 0.80 | 0.85 | 0.82 |

| 6 | 0.82 | 0.86 | 0.84 |

| 7 | 0.84 | 0.87 | 0.85 |

| 8 | 0.86 | 0.88 | 0.87 |

| 9 | 0.88 | 0.88 | 0.88 |

| 10 | 0.90 | 0.89 | 0.90 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).