1. Introduction

Autism Spectrum Disorder (ASD) is a neurodevelopmental condition characterized by persistent difficulties in social communication and interaction, alongside restricted and repetitive patterns of behavior, interests, or activities according to the American Psychiatric Association [1]. Children with ASD may experience challenges in establishing and maintaining social relationships with parents, peers, teachers, therapists, and healthcare professionals. These difficulties can manifest in reduced eye contact, limited use of gestures or facial expressions, difficulties in turn-taking during conversation, or a lack of interest in shared activities [2].

Given the importance of developing interventions that support the social development of children with ASD, researchers and practitioners have increasingly turned to the use of robotic systems. Robot-assisted interventions (ROMI) [3] have shown potential benefits for children with ASD, as these children often display a heightened interest in technology-based activities. Robots, in particular, can serve as simplified social agents that help facilitate and support interactions in a more accessible and engaging way for them [4,5,6]. Their programmable nature allows them to model emotions, initiate interactions, and provide feedback in a way that can be tailored to each child’s needs.

In this context, this article presents the design of JARI, a robot capable of expressing emotions through facial expressions, movement, and the use of light and color via LED displays on its body. JARI’s design includes a non-recognizable zoomorphic form to avoid rejection—common in children with sensory sensitivities—by not resembling any specific animal or a humanoid figure, which could trigger discomfort related to the Uncanny Valley Effect (Mori, 1970). Its external appearance includes soft and textured materials that encourage tactile interaction. The robot also includes customizable clothing made from different fabrics and colors, offering additional sensory stimulation and personalization options during therapy or educational sessions.

The paper is structured as follows. First, Section 2 presents a summary of robots designed for children with Autism Spectrum Disorder (ASD), identifying relevant characteristics such as form, body and limb mobility, emotional expression capabilities, and types of sensory interaction. Section 3 describes the JARI robot in detail, including its mechanical structure, aesthetic design, hardware architecture, software architecture, and human–machine interface. In Section 4, a pilot study involving children diagnosed with ASD is introduced to validate the robot’s functional features and to explore how children respond to social interactions. Section 5 presents findings from two evaluation scenarios: one involving individual interaction with children diagnosed with moderate ASD and limited or no verbal communication, and another involving group interaction with children who require low levels of support and demonstrate high verbal communication abilities. Finally, the article concludes with a discussion in Section 6 and closing reflections in Section 7.

2. Background

The development of robots designed for interaction with children with ASD has advanced significantly in recent years, with approaches varying depending on their application and design. Within this field, robots can serve different roles, ranging from therapeutic devices to educational or assistive tools.

Humanoid robots such as Kaspar [7], NAO [8], QT-Robot [9] and Zeno [10] have been used in therapeutic settings to facilitate communication and social interaction in children with ASD. These robots are designed with a social interface that allows them to express basic emotions through facial movements and gestures. However, recent studies have questioned the effectiveness of these systems in emotion recognition. Ref. [11] argues that while these robots can act as mediators in communication between children with ASD and neurotypical adults, their ability to enhance emotional recognition is limited, as human emotional interaction depends not only on fixed facial expressions but also on situational context and multimodal cues.

On the other hand, the uncanny valley is a phenomenon widely discussed in the design of social robots. According to Mori’s (1970) hypothesis, as a robot acquires more human-like characteristics, its acceptance increases until it reaches a point where excessive similarity generates a sense of rejection or discomfort. Ref. [11] points out that this effect is particularly relevant in robots like Kaspar and Zeno, whose humanoid appearance, combined with limited facial movements, may generate a sense of eeriness in users. In contrast, robots not designed for social interaction, such as Spot, developed by Boston Dynamics, have a clearly mechanical appearance and do not produce the same level of cognitive dissonance in users.

Recent studies have explored how the Uncanny Valley Effect (UVE) manifests in children, as their perception of robot appearance may differ from that of adults. Research suggests that while younger children may not experience this effect as strongly, it tends to emerge in older children, particularly in girls around the age of ten, who show a drop in perceived likability and acceptance when exposed to highly humanlike robots [12].

Additional contributions to the field emphasize the importance of robot morphology and its alignment with the therapy phase. Tamaral et al. [13] propose a distinction between humanoid and non-humanoid robots, suggesting that abstract or non-biomimetic designs may be more appropriate in the initial stages of therapy due to their simplicity and lower cognitive load. As therapy progresses, more complex humanoid forms can be introduced gradually. This staged approach aims to reduce overstimulation and increase user comfort, especially in children sensitive to visual and sensory input. Their work supports the idea that robot topology must be adaptable, and that simplicity in design plays a key role in early engagement. In line with this, the authors present TEA-2, a low-cost, non-humanoid robot specifically designed for therapeutic use with children with ASD, featuring a modular structure, minimalistic aesthetic, and basic multimodal interactions, making it well suited for early-phase therapeutic contexts.

Beyond physical design, robots for children with ASD can also be oriented toward sensory experiences or therapeutic interventions. For example, robotic music therapy systems have been shown to be effective in modeling social behaviors and improving motor coordination in children with ASD [14]. Similarly, collaborative robots, such as the (A)MICO device, have been designed to facilitate interaction in structured environments, using multimodal feedback to enhance communication [15].

2.1. Robot Typologies and Design Approaches

Robots used in interventions with children with ASD vary widely in morphology, level of autonomy, and interaction modalities, reflecting diverse design philosophies. Humanoid robots such as Kaspar, NAO, QT-Robot, and Zeno are designed with anthropomorphic features that enable imitation of human social behaviors, including gestures, speech, and facial expressions. These robots have been clinically validated in tasks such as emotional recognition, motor imitation, and social skills training, showing positive outcomes in both educational and therapeutic contexts [16,17,18].

In contrast, some studies advocate for the use of non-humanoid or ambiguously shaped robots, arguing that lower visual complexity can promote sustained attention and reduce sensory overstimulation in children with ASD. Examples include Keepon, shaped like a snowman, Moxie, a cartoon robot, and Kiwi, a tabletop robot with an abstract form and simplified expressiveness [19,20]. The non-recognizable zoomorphic design aims to provide a middle ground: a friendly, organic shape that does not evoke any specific animal, increasing approachability without triggering preexisting associations.

The literature also emphasizes the importance of adjusting the level of realism in a robot according to therapeutic goals: while more realistic robots may help children generalize learned behaviors to human contexts, less realistic designs create a more controlled and less intimidating environment [13,19]. The choice between humanoid and non-humanoid designs should therefore be made based on individual needs, usage context, and the intended pedagogical or therapeutic objectives.

To provide a clearer understanding of the physical and expressive diversity among social robots used in ASD-related interventions, Table 1 presents a comparative overview based on key morphological and functional characteristics. These include the robot’s form factor, mobility, articulation of head and neck, visual expression through eyes or screens, emotional expressiveness, and available sensory interaction modalities. This comparison highlights the wide spectrum of design choices, ranging from highly anthropomorphic platforms to abstract or character-like embodiments, each with distinct implications for therapeutic use and user engagement.

Based on this overview, it becomes evident that technical features such as degrees of freedom (DoF) in movement, screen-based facial animation, and multisensory input channels are carefully chosen to support interaction with children with ASD. Many robots integrate expressive mobility—such as head tilts, arm gestures, or bouncing motions—as a way to maintain engagement and communicate emotions nonverbally. Visual expressiveness also varies: while some robots use mechanical or LED-based cues, others rely on high-resolution animated faces to portray nuanced emotional states, which may help children recognize and respond to affective signals.

Furthermore, sensory interaction plays a critical role in these designs. Robots often include cameras, microphones, and touch sensors to perceive user input, supporting dynamic two-way interaction. In some cases, speech recognition and reactive motion allow the robot to respond in real time, adapting its behavior based on the child’s actions or vocalizations. These features are particularly beneficial for children with ASD, as they create predictable and simplified social scenarios that reduce cognitive load while promoting social, emotional, and communicative development. Thus, the combination of mobility, sensory feedback, and expressive capabilities is essential to shape how effectively a robot can support intervention strategies for children on the autism spectrum.

3. Design of the JARI Robotic Platform

The JARI robotic platform was designed to support social interaction and emotional learning in children with ASD aged 6 to 8 years. The design integrates mechanical, electronic, and software components to ensure that the robot can effectively engage children and foster the development of socio-emotional skills. This section outlines the technical foundations of the platform, including its design requirements, mechanical structure, electronic architecture, and the teleoperation system used for real-time control.

3.1. System Requirements

The design focuses on facilitating social interaction, enabling emotional expression, and ensuring modularity for future enhancements. The main system objectives are as follows:

Engagement: The robot should attract and maintain the attention of children with ASD through expressive movements and dynamic lighting.

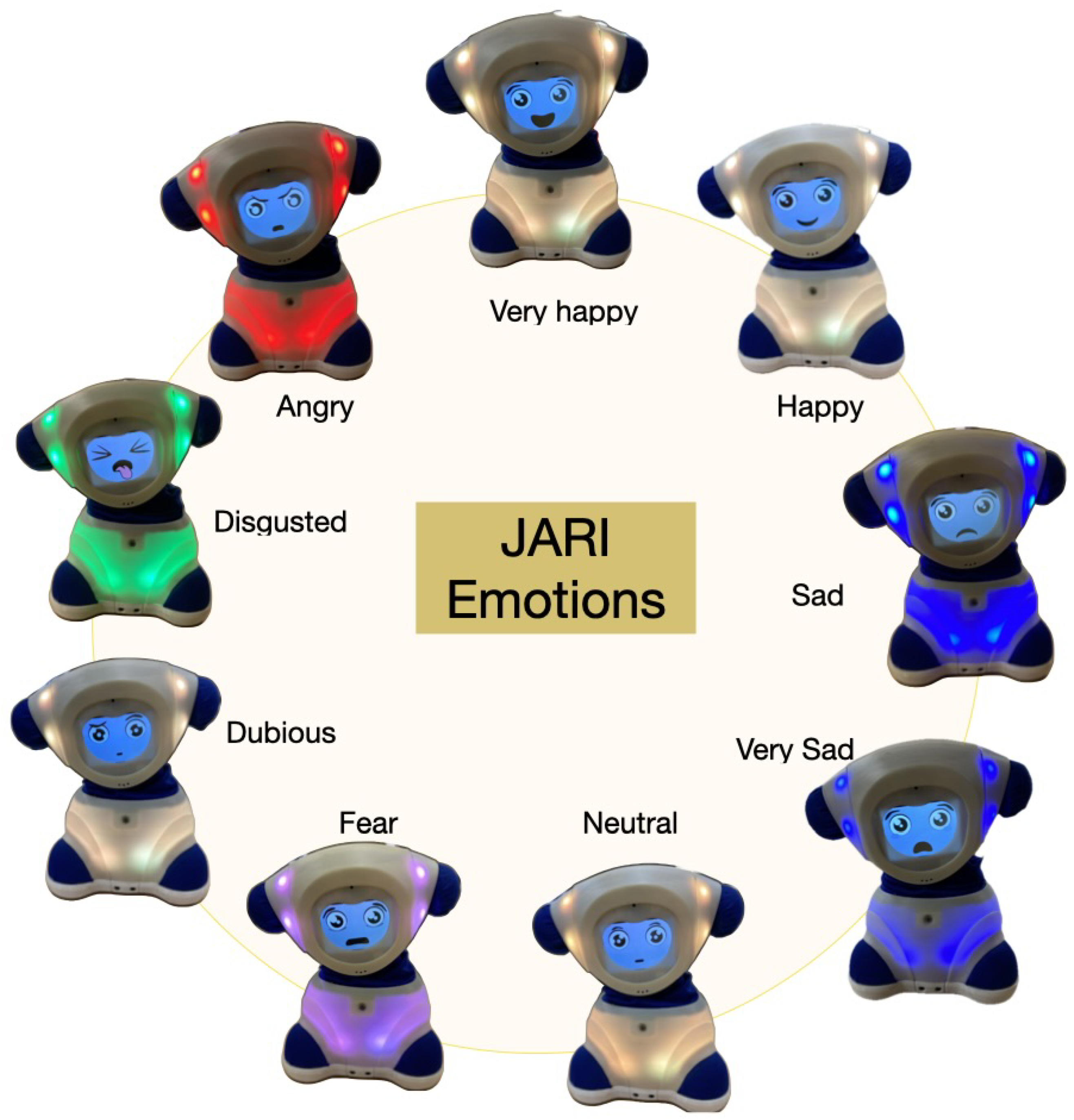

Emotion Display: JARI must be capable of expressing a range of predefined emotions to promote emotional recognition and interaction. The current set includes nine emotions: Happy, Very Happy, Sad, Very Sad, Disgusted, Angry, Fear, Dubious, and Neutral.

Robustness: The physical design must withstand frequent handling and occasional rough interactions typical in child-robot engagement.

Teleoperation: The system must allow real-time control through a web-based interface employing the Wizard of Oz (WoZ) paradigm [24].

Adaptability: The platform should support easy updates and modifications to expand or customize its capabilities.

Movement: The robot must be capable of performing neck articulations: nodding (affirmation), shaking (negation), and lateral flexion, as well as whole-body movements including forward, backward, and rotational motions.

Lighting System: Emotions should also be expressed via color changes. Five colors are defined: yellow (happiness), blue (sadness), red (anger), green (disgust), and soft pink (anxiety).
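The emotion set and color assignments listed above could be captured in a small lookup table. The following is only a minimal sketch, not the authors' implementation: the RGB values, the grouping of intensity variants (for example, Very Happy with Happy), and the association of Fear with the anxiety color are assumptions made for illustration. The requirements define no color for Dubious or Neutral, so the sketch returns `None` for those.

```python
from typing import Optional, Tuple

# The nine predefined emotions listed in the requirements.
EMOTIONS = (
    "Happy", "Very Happy", "Sad", "Very Sad",
    "Disgusted", "Angry", "Fear", "Dubious", "Neutral",
)

# The five colors named in the requirements, keyed by the feeling they signal.
# The RGB triples are illustrative assumptions, not values from the paper.
COLOR_BY_FEELING = {
    "happiness": ("yellow", (255, 255, 0)),
    "sadness": ("blue", (0, 0, 255)),
    "anger": ("red", (255, 0, 0)),
    "disgust": ("green", (0, 255, 0)),
    "anxiety": ("soft pink", (255, 182, 193)),
}

# Assumed (hypothetical) grouping of emotions into the five feelings.
FEELING_BY_EMOTION = {
    "Happy": "happiness", "Very Happy": "happiness",
    "Sad": "sadness", "Very Sad": "sadness",
    "Angry": "anger",
    "Disgusted": "disgust",
    "Fear": "anxiety",
}


def led_color(emotion: str) -> Optional[Tuple[str, Tuple[int, int, int]]]:
    """Return (color name, RGB) for an emotion, or None if no color is defined."""
    if emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion!r}")
    feeling = FEELING_BY_EMOTION.get(emotion)
    return COLOR_BY_FEELING[feeling] if feeling is not None else None
```

Keeping the mapping in data rather than in control flow would make it straightforward to adjust colors per child or per session, in line with the adaptability requirement.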

3.2. Mechanical Design

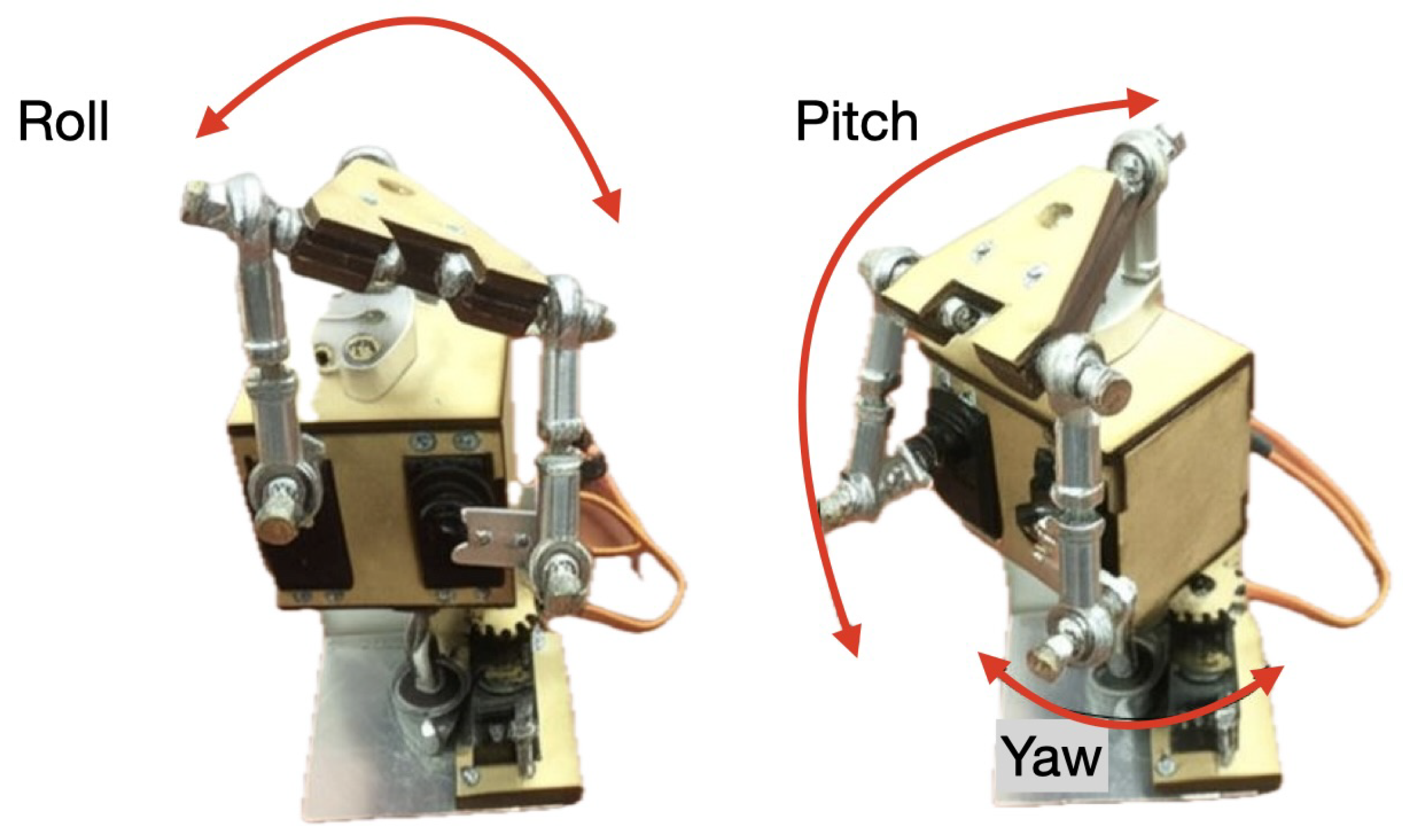

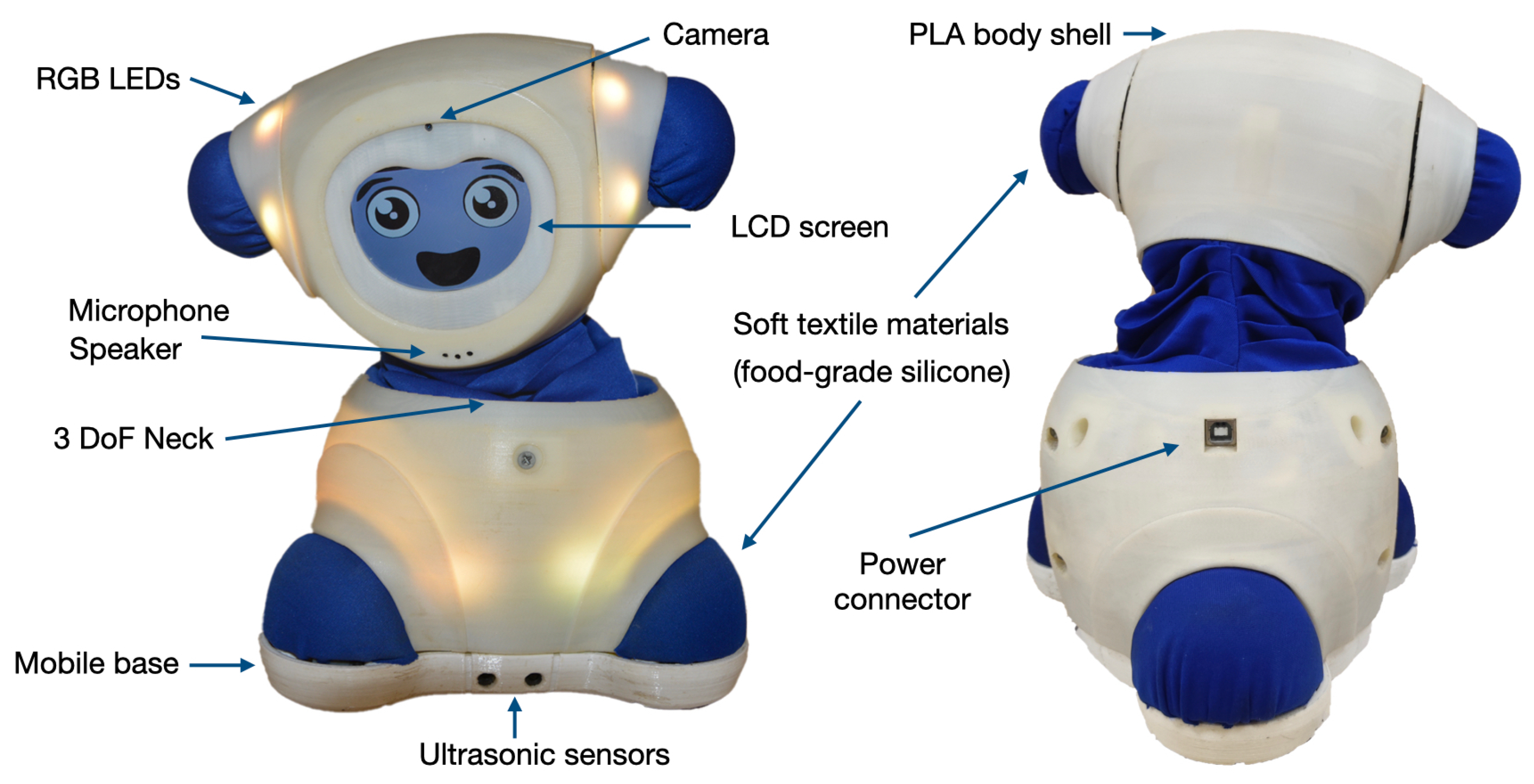

Articulated Head Movement Mechanism: The articulated head mechanism was designed for robustness and durability, so that it can withstand abrupt movements caused by users, particularly children, during direct interaction with the robot. This mechanism is based on a three-degree-of-freedom (3 DoF) motion platform, consisting of two active RRS (Revolute-Revolute-Spherical) legs and one passive RS (Revolute-Spherical) leg. This configuration allows roll movements (approximately 50° range) and pitch movements (approximately 70° range). The platform is built from high-strength materials such as aluminum, steel bars, and high-density polyurethane, selected to ensure stability and resistance to external forces so that interactions do not compromise the system’s performance. The platform is actuated by two MG946R servomotors, each with a torque of 13 kg·cm, responsible for the roll and pitch movements illustrated in Figure 1. A third MG946R servomotor, with the same torque of 13 kg·cm, rotates the platform about its vertical axis, producing a yaw motion (approximate range of 180°). All servomotors are driven by Pulse Width Modulation (PWM) signals generated by an Arduino Nano microcontroller, ensuring precision and real-time response.
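To make the motion ranges concrete, the sketch below clamps a commanded head pose to the approximate ranges stated above and converts a yaw angle to a servo pulse width. It is a hedged illustration only: it assumes each range is symmetric about a neutral position, and the 1000 to 2000 µs pulse limits are hypothetical placeholders that would need calibration against the actual servos. Roll and pitch are produced jointly by the two active legs, so no direct angle-to-pulse mapping is attempted for them here.

```python
from dataclasses import dataclass

# Approximate full motion ranges from the text, in degrees.
ROLL_RANGE_DEG = 50.0
PITCH_RANGE_DEG = 70.0
YAW_RANGE_DEG = 180.0


@dataclass(frozen=True)
class HeadPose:
    roll: float   # degrees, 0 = neutral (assumed)
    pitch: float  # degrees, 0 = neutral (assumed)
    yaw: float    # degrees, 0 = facing forward (assumed)


def _clamp(value: float, full_range: float) -> float:
    """Limit value to [-full_range / 2, +full_range / 2]."""
    half = full_range / 2.0
    return max(-half, min(half, value))


def clamp_pose(pose: HeadPose) -> HeadPose:
    """Return a pose whose angles lie within the stated approximate ranges."""
    return HeadPose(
        roll=_clamp(pose.roll, ROLL_RANGE_DEG),
        pitch=_clamp(pose.pitch, PITCH_RANGE_DEG),
        yaw=_clamp(pose.yaw, YAW_RANGE_DEG),
    )


def yaw_to_pulse_us(yaw_deg: float, min_us: float = 1000.0,
                    max_us: float = 2000.0) -> float:
    """Map a yaw angle linearly onto a pulse width in microseconds.

    min_us and max_us are hypothetical limits, not MG946R datasheet values.
    """
    yaw = _clamp(yaw_deg, YAW_RANGE_DEG)
    fraction = (yaw + YAW_RANGE_DEG / 2.0) / YAW_RANGE_DEG
    return min_us + fraction * (max_us - min_us)
```

Clamping on the command side is one simple way to keep operator requests inside the mechanical limits regardless of what the interface sends.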

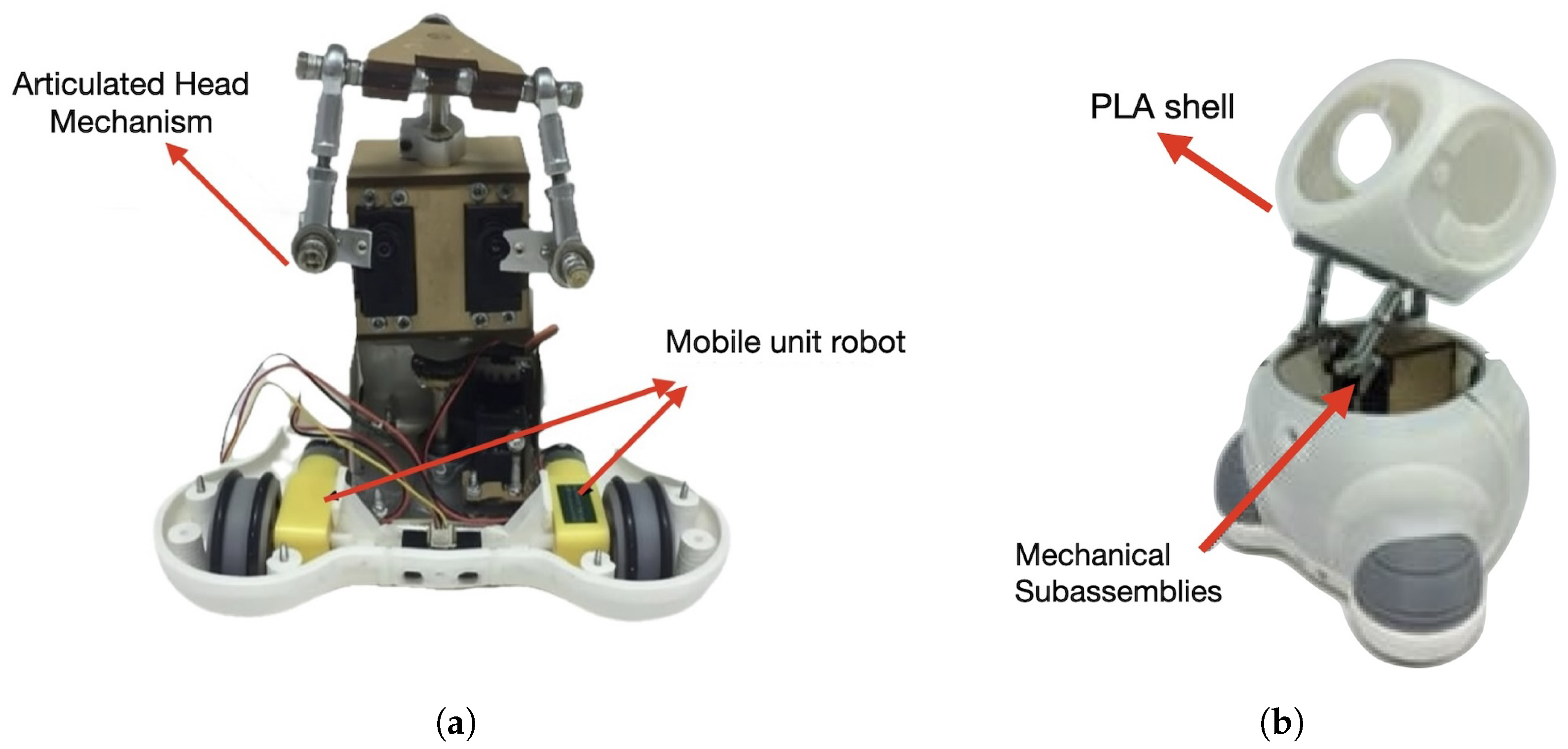

Mobile unit: The mobile base, shown in Figure 2(a), uses a differential drive system powered by two DC motors with integrated gear reduction. This configuration enables forward/backward and rotational movements. Each motor delivers a peak speed of 175 RPM under load and a torque of 1.1 kg·cm. The chassis has a low center of gravity to improve balance during motion, while rubber wheels ensure adequate traction across various surfaces for smooth navigation.
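The forward/backward and rotational motions of a differential drive follow from the standard kinematic relations between the two wheel speeds. The sketch below illustrates them under stated assumptions: the wheel radius and track width are not given in the text, so they appear as hypothetical parameters, and only the 175 RPM figure comes from the description above.

```python
import math
from typing import Tuple

MAX_WHEEL_RPM = 175.0  # peak motor speed under load, from the text


def wheel_speeds(v: float, omega: float, track_width: float) -> Tuple[float, float]:
    """Return (left, right) wheel rim speeds in m/s.

    v is the forward body speed in m/s, omega the yaw rate in rad/s
    (positive = counter-clockwise), track_width the wheel separation in m.
    """
    left = v - omega * track_width / 2.0
    right = v + omega * track_width / 2.0
    return left, right


def body_velocity(left: float, right: float, track_width: float) -> Tuple[float, float]:
    """Inverse of wheel_speeds: return (v, omega) from wheel rim speeds."""
    v = (left + right) / 2.0
    omega = (right - left) / track_width
    return v, omega


def max_linear_speed(wheel_radius: float) -> float:
    """Upper bound on forward speed in m/s for a given wheel radius in m."""
    return MAX_WHEEL_RPM * 2.0 * math.pi / 60.0 * wheel_radius
```

For example, with a hypothetical track width of 0.2 m, `wheel_speeds(0.0, 1.0, 0.2)` gives equal and opposite wheel speeds, which corresponds to rotation in place.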

External body shell: The robot’s body shell is 3D printed in polylactic acid (PLA), while the limbs, neck, and ears are coated with food-grade silicone to provide a safe and comfortable tactile experience, as presented in Figure 2(b). The design includes strategic internal mounting points to house electronic components and mechanical subassemblies, optimizing space and facilitating assembly. Surface finishing techniques, such as sanding and chemical smoothing, were applied to remove sharp edges and achieve a uniform finish, improving both safety and appearance.

3.3. Electronics Architecture

This subsection describes the key components of the robot’s electronic system:

Processing Unit: A Raspberry Pi 3 B+ is used for high-level processing. It includes built-in Wi-Fi capabilities, allowing it to establish a local wireless network for communication with the teleoperation interface, as will be described later.

Control System: An Arduino Nano handles low-power tasks and sensor integration.

Input Sensors:

- Ultrasonic distance sensors for obstacle detection and proximity awareness.

- Camera Module V2 (8 MP, Sony IMX219) for visual input, including facial recognition and scene analysis.

Output Devices:

- RGB LED lights for emotional signaling and visual feedback.

- LCD Touchscreen: A 3.5-inch USB-connected display with a resolution of 480×320 pixels and physical dimensions of approximately 92 mm × 57 mm. It is used to display pictograms and visual cues.

- Integrated speaker for playback of sound effects and onomatopoeic cues.
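As an illustration of how ultrasonic sensors support proximity awareness, the sketch below converts an echo round-trip time into a distance using the usual time-of-flight relation (distance = time × speed of sound / 2). The sensor model and any thresholds are not specified in the text; the 20 cm threshold is a hypothetical value chosen only for the example.

```python
SPEED_OF_SOUND_M_S = 343.0  # in dry air at about 20 °C


def echo_to_distance_cm(echo_time_s: float) -> float:
    """Convert a round-trip echo time in seconds to a one-way distance in cm."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, hence the division by 2.
    return echo_time_s * SPEED_OF_SOUND_M_S / 2.0 * 100.0


def is_obstacle_near(distance_cm: float, threshold_cm: float = 20.0) -> bool:
    """Return True if an obstacle is within threshold_cm (hypothetical default)."""
    return distance_cm <= threshold_cm
```

A 1 ms echo, for instance, corresponds to roughly 17 cm, which the hypothetical threshold would flag as near.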

Figure 3 presents an annotated diagram of the JARI robot’s physical components. The design features a 3-degree-of-freedom neck, a camera and microphone for perception, a speaker and LCD touchscreen for expressive interaction, and RGB LEDs for emotional signaling. The body is constructed using 3D-printed PLA and incorporates soft textile materials to ensure safe physical contact. The robot includes a mobile base with wheels and ultrasonic sensors for distance sensing. The power connector is positioned at the rear of the chassis.

3.4. Aesthetic Design

JARI presents a non-recognizable zoomorphic appearance, deliberately avoiding resemblance to specific animals or humanoid forms. This design decision was made to reduce the risk of fear or rejection in children, and to prevent triggering the discomfort commonly associated with Mori’s Uncanny Valley effect. Unlike clearly defined animal shapes, such as dogs or cats, which may elicit aversions in some children, the abstract design promotes broader acceptance. Similarly, humanoid features were excluded to avoid cognitive dissonance during interaction.

To enhance engagement and encourage physical interaction, the robot incorporates materials selected for their tactile qualities. Soft and textured surfaces were integrated into the body, including silicone-covered ears and additional textile components, which not only increase sensory appeal but also provide opportunities for visual contrast and aesthetic customization. JARI also includes interchangeable garments made from a variety of fabrics and colors (see Figure 4), which are adapted to the context of the interaction. Some garments offer soft textures, while others are intentionally rough, providing diverse sensory input during interaction. These design features support personalization and contribute to making JARI a more engaging and adaptable companion.

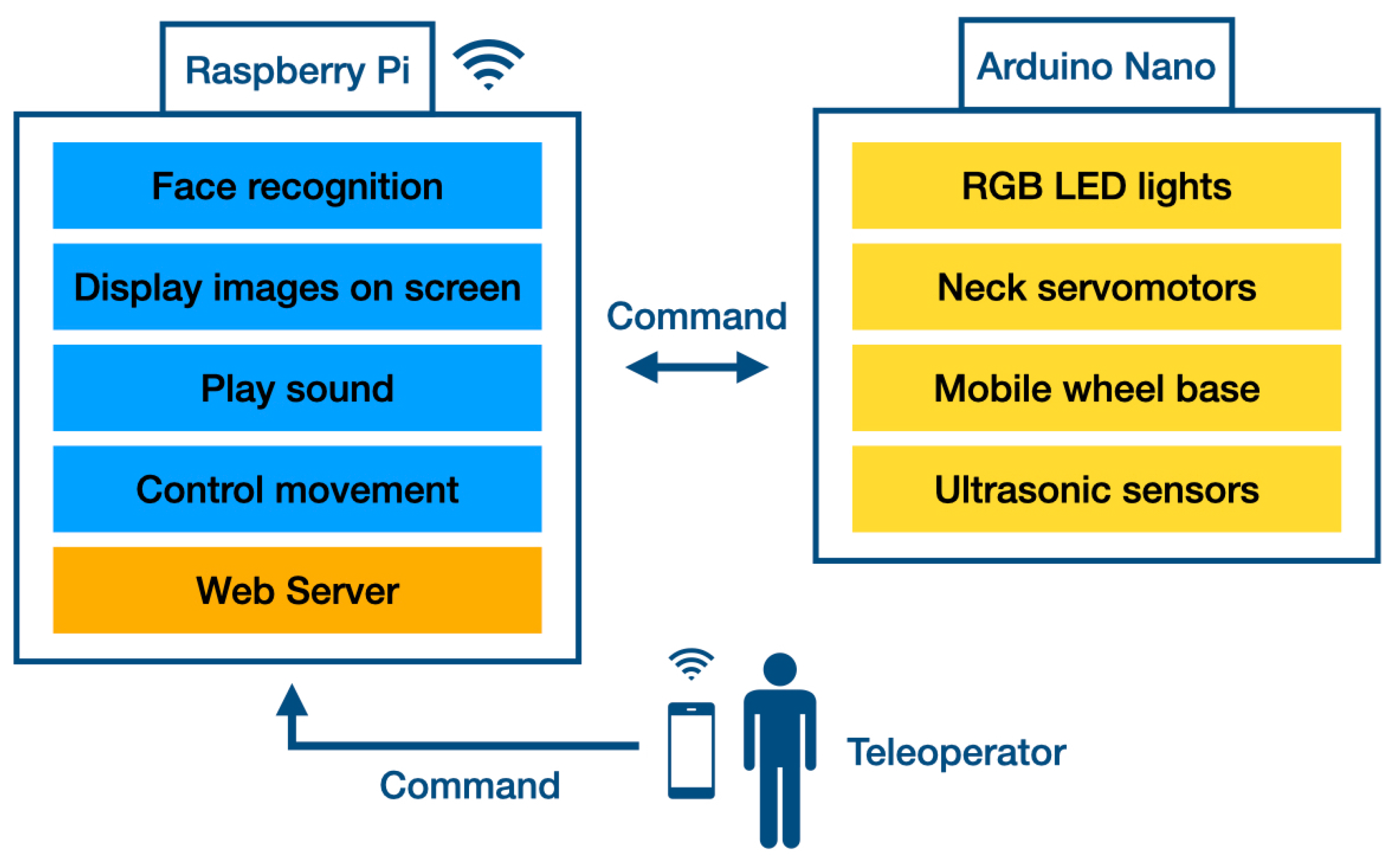

3.5. Software Architecture

The software architecture of the JARI robot is modular and designed to support multiple functionalities while maintaining smooth teleoperation. It is structured into the following layers:

Control Layer: Responsible for motor actuation, sensor data acquisition, and communication with low-level hardware components.

Behavior Layer: Manages predefined movement sequences and emotional expression routines.

Communication Layer: Facilitates data exchange and synchronization between the Raspberry Pi, Arduino, and the web-based interface.

Web Server: Hosts the graphical user interface, interprets remote commands, and ensures real-time communication between the human operator and the robot.

Interaction Layer: Enables operator control through a user-friendly web interface.

This layered architecture enables real-time control and facilitates the future integration of autonomous behavior modules.

Figure 5 illustrates the overall communication architecture between the mobile application, the control command list, and the hardware components (Raspberry Pi and Arduino Nano). As shown in the figure, the teleoperator sends commands to the robot through a web browser. These commands are transmitted via Wi-Fi, using a local network established between the teleoperator’s device and the Raspberry Pi. The Raspberry Pi processes high-level tasks such as changing the robot’s emotion or moving it. It communicates with the Arduino Nano, which handles low-level components like RGB LED lights, neck servomotors, the mobile wheel base, and ultrasonic sensors.
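The hand-off from the Raspberry Pi to the Arduino Nano can be pictured as a stream of short, self-describing messages. The text does not specify the link or the message format, so the sketch below is purely hypothetical: it assumes a line-oriented ASCII format of the form `device,action,arg1,...`, and the device names are invented labels for the low-level components listed above.

```python
from typing import Tuple

# Hypothetical labels for the low-level components handled by the Arduino.
DEVICES = {"led", "neck", "base", "ultrasonic"}


def encode_command(device: str, action: str, *args: int) -> bytes:
    """Build one newline-terminated ASCII message, e.g. b"led,set,255,255,0\\n"."""
    if device not in DEVICES:
        raise ValueError(f"unknown device: {device!r}")
    fields = [device, action, *(str(a) for a in args)]
    return (",".join(fields) + "\n").encode("ascii")


def decode_command(line: bytes) -> Tuple[str, str, Tuple[int, ...]]:
    """Parse a message produced by encode_command back into its parts."""
    device, action, *raw_args = line.decode("ascii").strip().split(",")
    if device not in DEVICES:
        raise ValueError(f"unknown device: {device!r}")
    return device, action, tuple(int(a) for a in raw_args)
```

A text format like this is easy to inspect during teleoperated sessions; a more compact binary framing would be an equally plausible choice.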

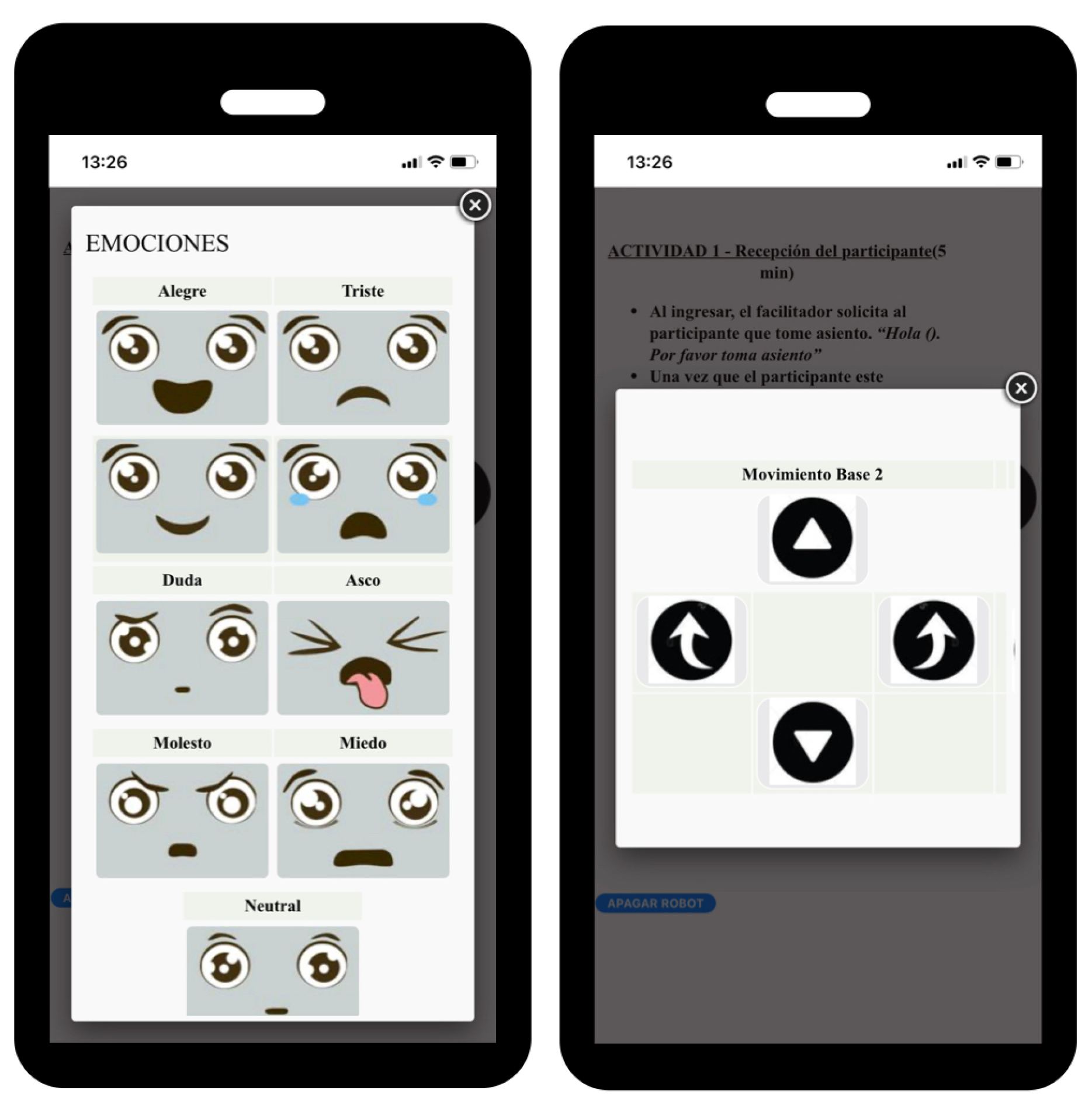

3.6. Human-Robot Interface

The robot is operated via a web application hosted on a virtual server running on the onboard Raspberry Pi. The human operator connects remotely through a standard web browser using either a desktop computer or a mobile device. The interface, as shown in Figure 6, includes predefined movement commands for the robot’s base and a set of emotional expression patterns, allowing the operator to control the robot’s behavior and adapt interactions in real time.

Figure 7 shows the nine different emotions that the robot can express through a combination of facial expressions, colored LED lights, and movements of its neck and wheels.
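One way a web server could interpret operator requests for the predefined movements and emotions is to validate each request path against a fixed command list. The sketch below is an assumption-laden illustration rather than the actual interface: the URL scheme (`/emotion/<name>`, `/move/<name>`), the emotion slugs, and the movement names are all hypothetical.

```python
from typing import Tuple
from urllib.parse import urlparse

# Hypothetical URL slugs for the nine emotions described in the paper.
EMOTION_SLUGS = {
    "happy", "very-happy", "sad", "very-sad",
    "disgusted", "angry", "fear", "dubious", "neutral",
}

# Hypothetical names for the predefined base movements.
BASE_MOVES = {"forward", "backward", "rotate-left", "rotate-right", "stop"}


def parse_command(path: str) -> Tuple[str, str]:
    """Validate a request path and return (kind, name).

    Raises ValueError for anything outside the predefined command list,
    so the robot only ever receives known commands.
    """
    parts = [p for p in urlparse(path).path.split("/") if p]
    if len(parts) != 2:
        raise ValueError(f"expected /<kind>/<name>, got {path!r}")
    kind, name = parts
    allowed = {"emotion": EMOTION_SLUGS, "move": BASE_MOVES}.get(kind)
    if allowed is None or name not in allowed:
        raise ValueError(f"unknown command: {path!r}")
    return kind, name
```

Restricting requests to a closed set of commands keeps the operator in control of what the robot can do at any moment.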

This system is based on the Wizard of Oz (WoZ) paradigm, in which the robot is teleoperated to simulate autonomous behaviors. This approach offers several advantages:

Flexible Interaction: Enables real-time adaptation to children’s responses, allowing for a more personalized and engaging experience.

Testing Before Automation: Facilitates the evaluation of different interaction strategies before implementing fully autonomous behavior.

Ethical and Safety Considerations: Ensures that a human teleoperator remains in control and can intervene immediately in case of unexpected or undesired behavior.

4. Pilot Evaluation

To evaluate the JARI robot in interaction with the target population, a pilot study was conducted involving children diagnosed with ASD. The evaluation aimed to validate key design characteristics of the robot, particularly its emotional expressions, use of color, and children’s behavioral responses during interaction. The study was designed to address the following research questions (RQs):

RQ1: Does the design of the JARI robot capture and sustain the attention of children with Autism Spectrum Disorder (ASD) during interaction?

RQ2: Are children with ASD able to recognize and interpret the emotional expressions displayed by the JARI robot?

The pilot was implemented in two countries and in two types of educational contexts: 1) a special education school in Peru; and 2) an inclusive mainstream school in Spain. In Peru, the study was conducted at the Centro Ann Sullivan del Perú (CASP) in Lima, which serves children with ASD, Down syndrome, and related neurodevelopmental conditions. In Spain, the pilot took place at the Centro Cultural Palomeras in Madrid, a public school that integrates children with ASD into regular classrooms.

The following two scenarios were defined to address different interaction settings and participant profiles.

4.1. Scenario 1: Children with Limited or Nonverbal Communication

This scenario was implemented in Lima, Peru, with a group of school-aged children (6 to 8 years old) diagnosed with ASD. Most of the participants exhibited minimal or no verbal communication and used pictographic systems to express basic needs. All children attended a specialized educational center and were characterized by limited sustained attention and, in some cases, oppositional behaviors or distress in response to transitions or unexpected instructions.

A total of 11 children participated in this scenario (10 boys and 1 girl), of whom eight used nonverbal communication. The sessions were conducted individually in a dedicated room at the CASP. Following the center’s recommendations, each child was accompanied by a parent during the session to ensure comfort and reduce anxiety.

Interaction time: Approximately 20 minutes per child, including a brief “ice-breaking” period of about 2 minutes, followed by the main interaction with the robot and the associated questionnaire.

Key aspects to evaluate: Identification of the robot’s emotional expressions, children’s behavioral responses to those expressions, how much children liked the robot and the color changes linked to its expressions, and overall engagement during the session.

4.1.1. Procedure

Prior to implementation, the researchers coordinated with specialists from the CASP to adapt the protocol and questionnaire to the communication profiles and comfort needs of the children involved.

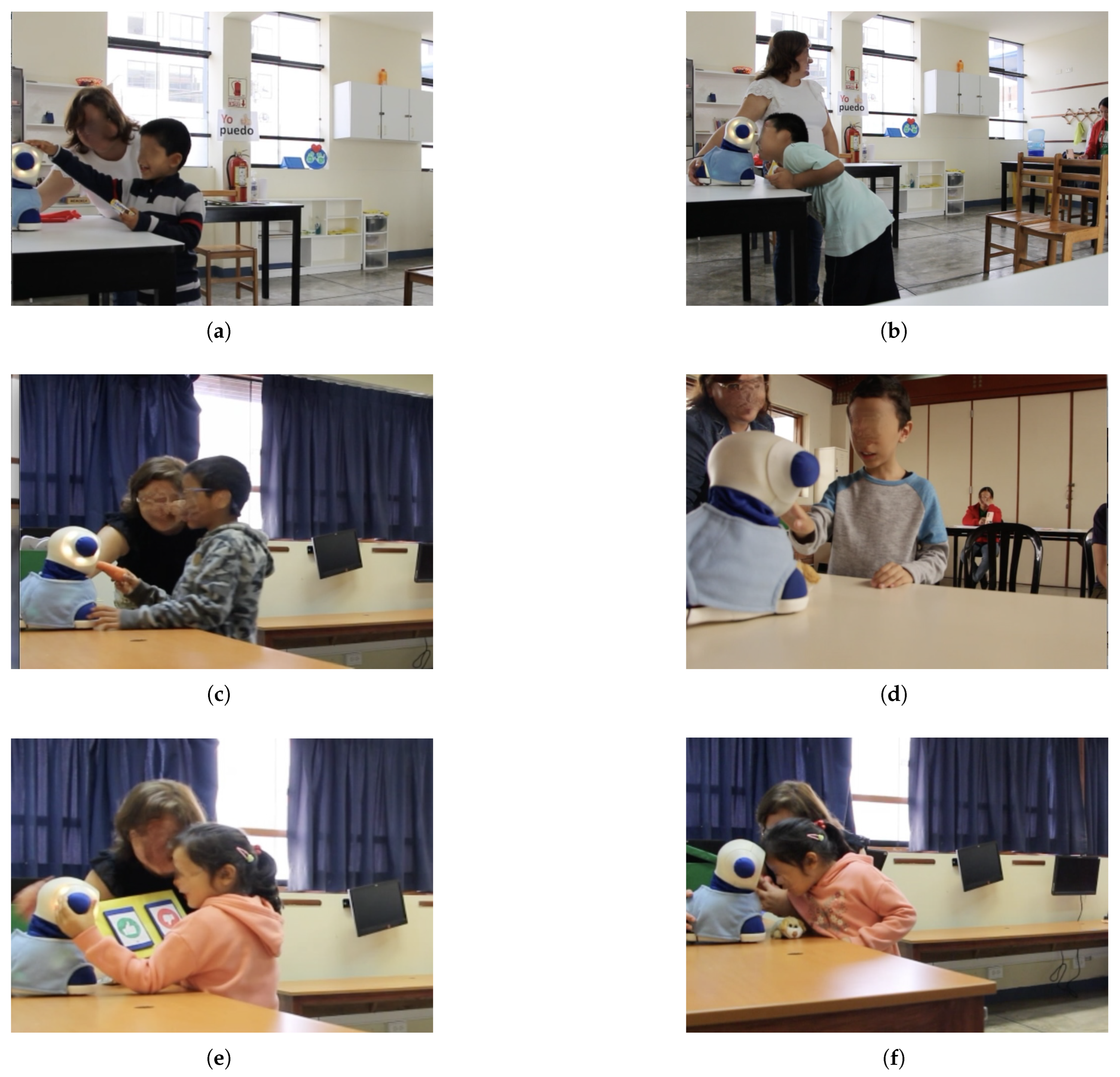

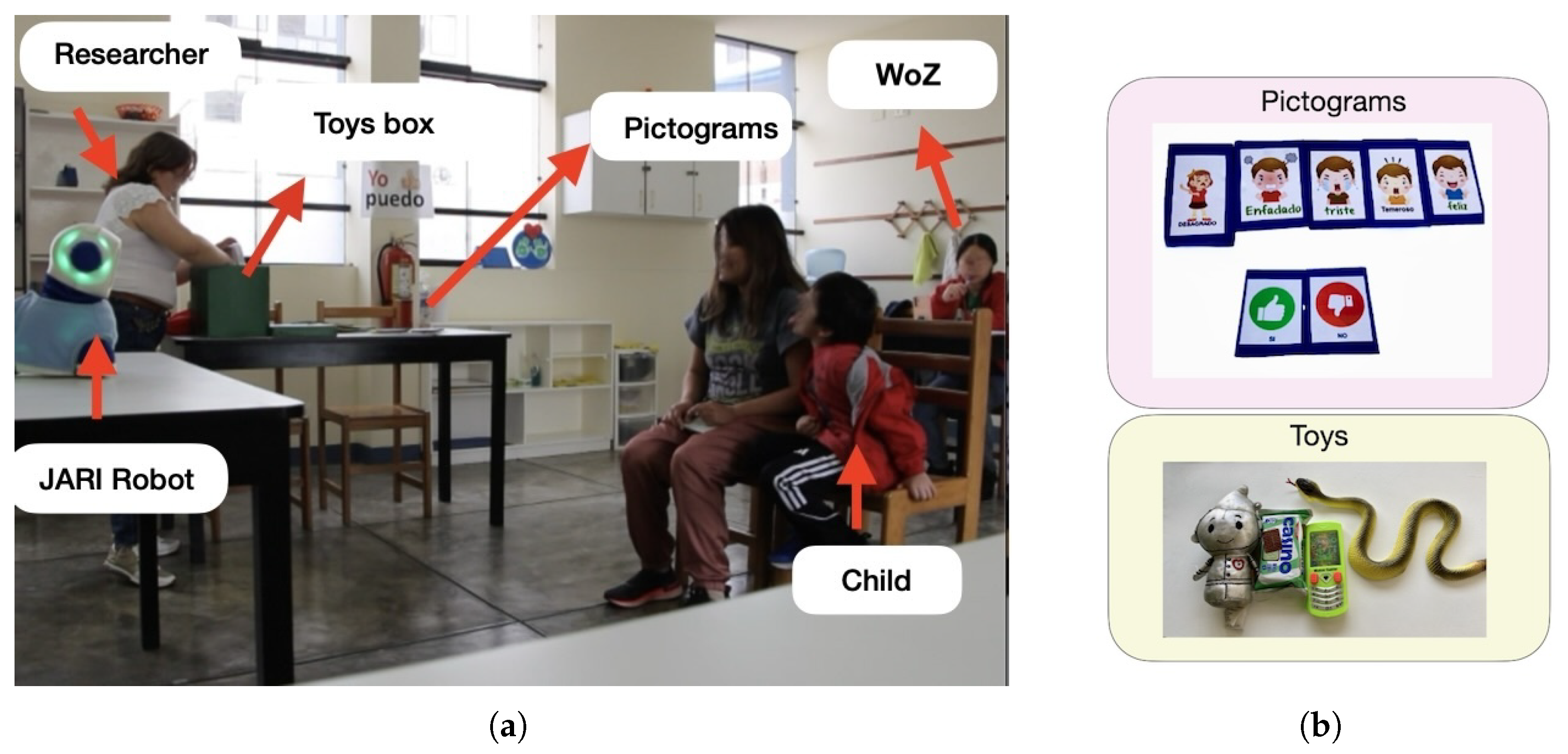

Robot Setup: The JARI robot was placed in the center of a large desk, as shown in Figure 8(a). One researcher, positioned at the back of the room, operated the robot remotely using the Wizard of Oz paradigm, while another remained near the participant to monitor and guide the session without interfering. Each child entered the room accompanied by a parent or tutor to ensure emotional support. During the interaction, the researcher asked questions about the robot’s facial expressions using pictograms, as illustrated in Figure 8(b).

In this case, the procedure was as follows:

Introduction: The session began with a short dialogue between the researcher and the child to establish a sense of trust and connection. Oral consent was requested, and parents confirmed consent prior to the activity. The robot was operated remotely using the WoZ paradigm.

Interaction and iterative task: Children were encouraged to present objects (e.g., plush toys, cars, carrots, cookies) to the robot. In response, the teleoperator changed the robot’s emotional expression.

Post-interaction questionnaire: After each reaction, children were asked to identify the displayed emotion and comment on whether they liked the robot and its color (see Table 2). Children with verbal communication responded orally; for nonverbal participants, researchers used pictograms to facilitate selection. This interaction was repeated with six different toys. Once the activity was completed, the child said goodbye to the robot and left the room.

4.2. Scenario 2: Children with Verbal Communication and Higher Functional Skills

This scenario was conducted at the Centro Cultural Palomeras School in Madrid, Spain, and involved a group of 7 children aged between 5 and 12 years (5 boys and 2 girls), all diagnosed with ASD. All participants demonstrated verbal communication skills, although their fluency and expressive abilities varied. Some children relied on echolalia or had difficulty initiating speech, while others communicated more fluidly. All were capable of following simple instructions. Attention levels varied across participants, with some easily distracted and one child requiring continuous support from a familiar teacher to remain engaged.

Interaction time: Approximately 25 minutes per group, including a brief introduction and explanation of the activity.

Key aspects to evaluate: The clarity and recognition of the robot’s emotional expressions, and the children’s perception of the robot’s behavior and characteristics through a post-interaction questionnaire.

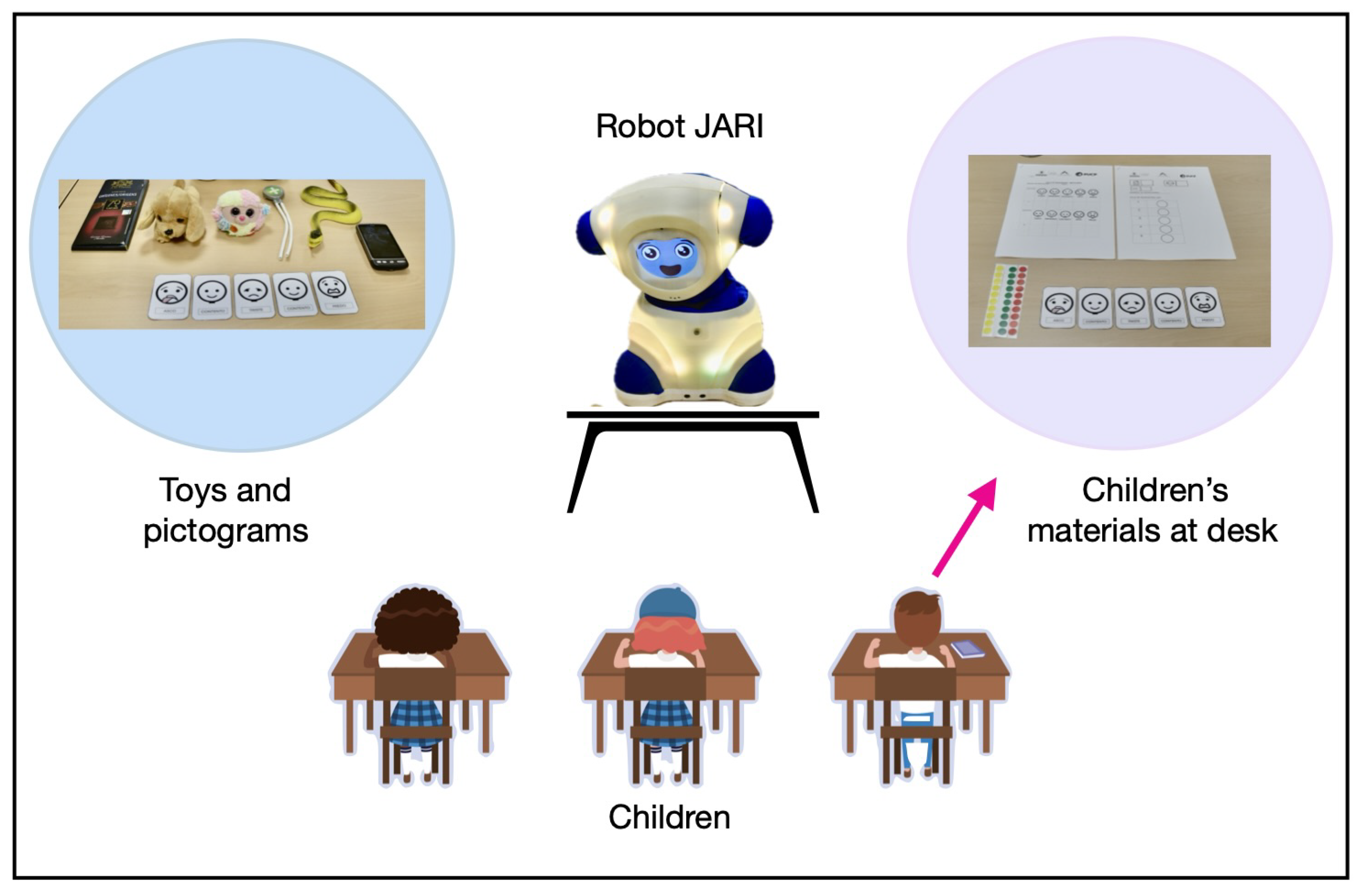

4.2.1. Procedure

Before conducting the sessions, the research team met with school staff to explain the protocol. Based on their feedback, the wording of the questionnaire was adjusted to better align with the children’s language level and comprehension. All activities and materials were administered in Spanish.

Robot Setup: The interaction took place in a familiar classroom environment, with the JARI robot positioned on a desk accessible to the children. Toys and ARASAAC¹ pictograms were prepared to facilitate the identification of emotions and support communication during the activity, as shown in Figure 9.

In this case, the procedure was as follows:

Introduction: The session began with a brief explanation of the robot and the activity, presented as a game. Children were encouraged to interact playfully and naturally with the robot.

Interaction task: Children participated in small groups. Each child took turns showing an object to the robot (e.g., plush toy, cookie, broken cup, broken cellphone, fake snake). After each object was presented, the robot changed its emotional expression, controlled by the teleoperator. Children were then asked to identify the robot’s emotion by selecting an ARASAAC pictogram and showing it to the researcher. The emotions included happy, sad, angry, disgusted, and fear.

Post-interaction questionnaire: At the end of the session, each child completed a short questionnaire based on 11 statements (Table 3). Each child received a response sheet along with three colored stickers: green (agree), yellow (neutral), and red (disagree). The researcher read each statement aloud three to four times, allowing the children enough time to place a sticker next to each one according to their interpretation or preference.

This questionnaire was adapted from a protocol previously developed by the authors in [25], which was originally validated with neurotypical children in mainstream educational settings. In the present pilot, the objective was to explore its applicability to children with ASD and to assess its potential for use in future large-scale studies involving this population.
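The sticker-based responses lend themselves to a simple per-statement tally. The sketch below shows one possible way to aggregate them, under the assumption that each child's answer to a statement is recorded as a color name or `None` when no sticker was placed. The sample list in the usage note is made-up placeholder input, not data from the study.

```python
from collections import Counter
from typing import Dict, Optional, Sequence

# Sticker colors and their meanings, as described in the procedure.
STICKER_MEANING = {"green": "agree", "yellow": "neutral", "red": "disagree"}


def tally_statement(stickers: Sequence[Optional[str]]) -> Dict[str, int]:
    """Count agree/neutral/disagree answers for one statement.

    None entries (no sticker placed) are reported under "missing".
    """
    counts = Counter()
    for color in stickers:
        if color is None:
            counts["missing"] += 1
        elif color in STICKER_MEANING:
            counts[STICKER_MEANING[color]] += 1
        else:
            raise ValueError(f"unexpected sticker color: {color!r}")
    return {key: counts[key] for key in ("agree", "neutral", "disagree", "missing")}
```

For a placeholder input such as `["green", "green", "yellow", None, "red", "green", "green"]`, the function reports four agreements, one neutral answer, one disagreement, and one missing response.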

5. Results

This section presents the findings from both scenarios, including children’s ability to identify the robot’s emotional expressions, their preferences for specific colors associated with each emotion, behavioral responses during the interaction, and post-interaction questionnaire data.

5.1. Scenario 1

5.1.1. Quantitative findings of emotion identification and preferences

In Scenario 1, results were collected from individual sessions with children, focusing on three aspects: whether they correctly identified the robot’s emotional expressions, whether they liked each emotion, and their preferences regarding the colors displayed by the robot during emotional expression.

Additionally, children’s behavior during the interaction was recorded and analyzed through video footage, providing further insights into the types of spontaneous actions observed.

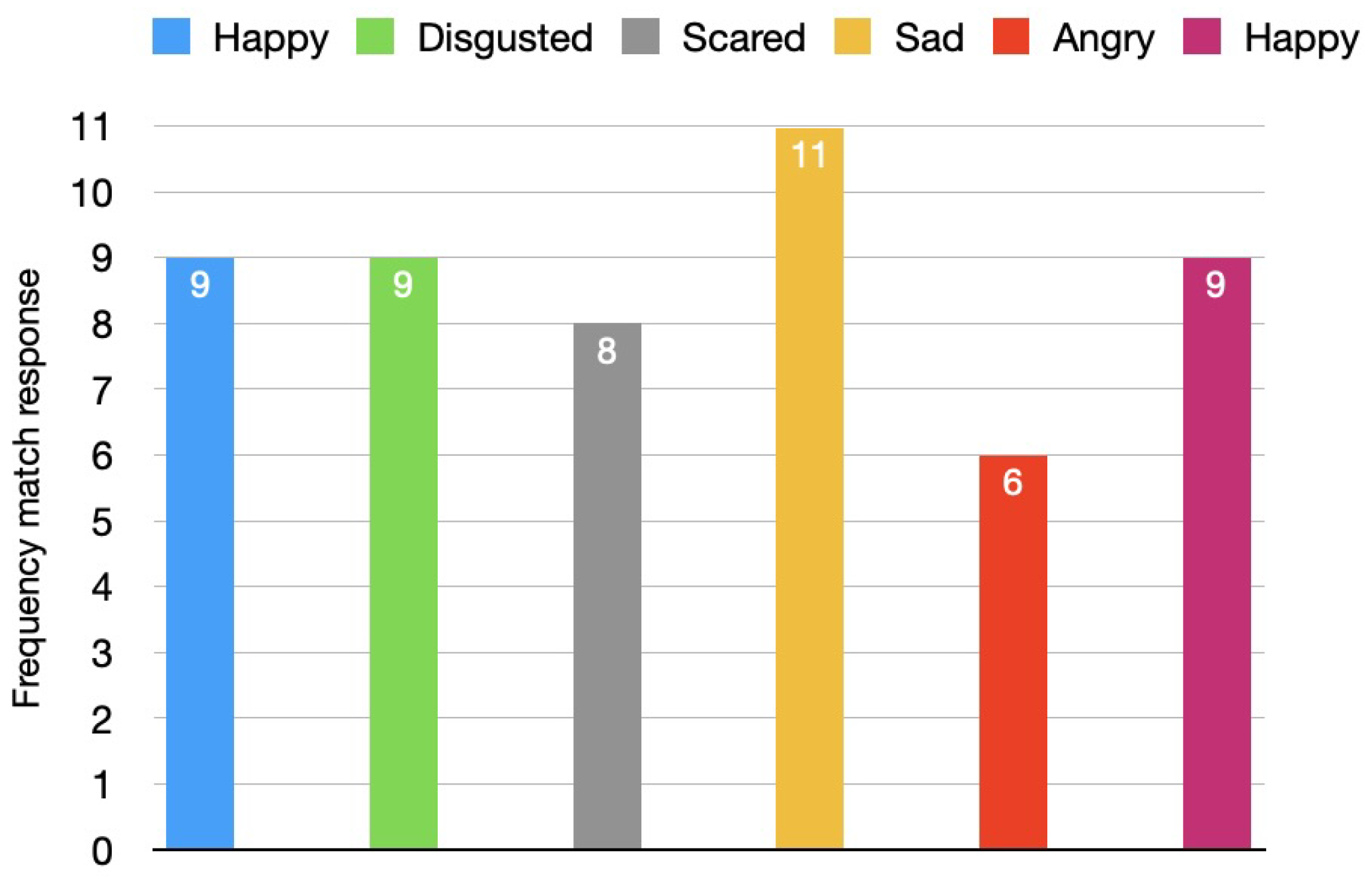

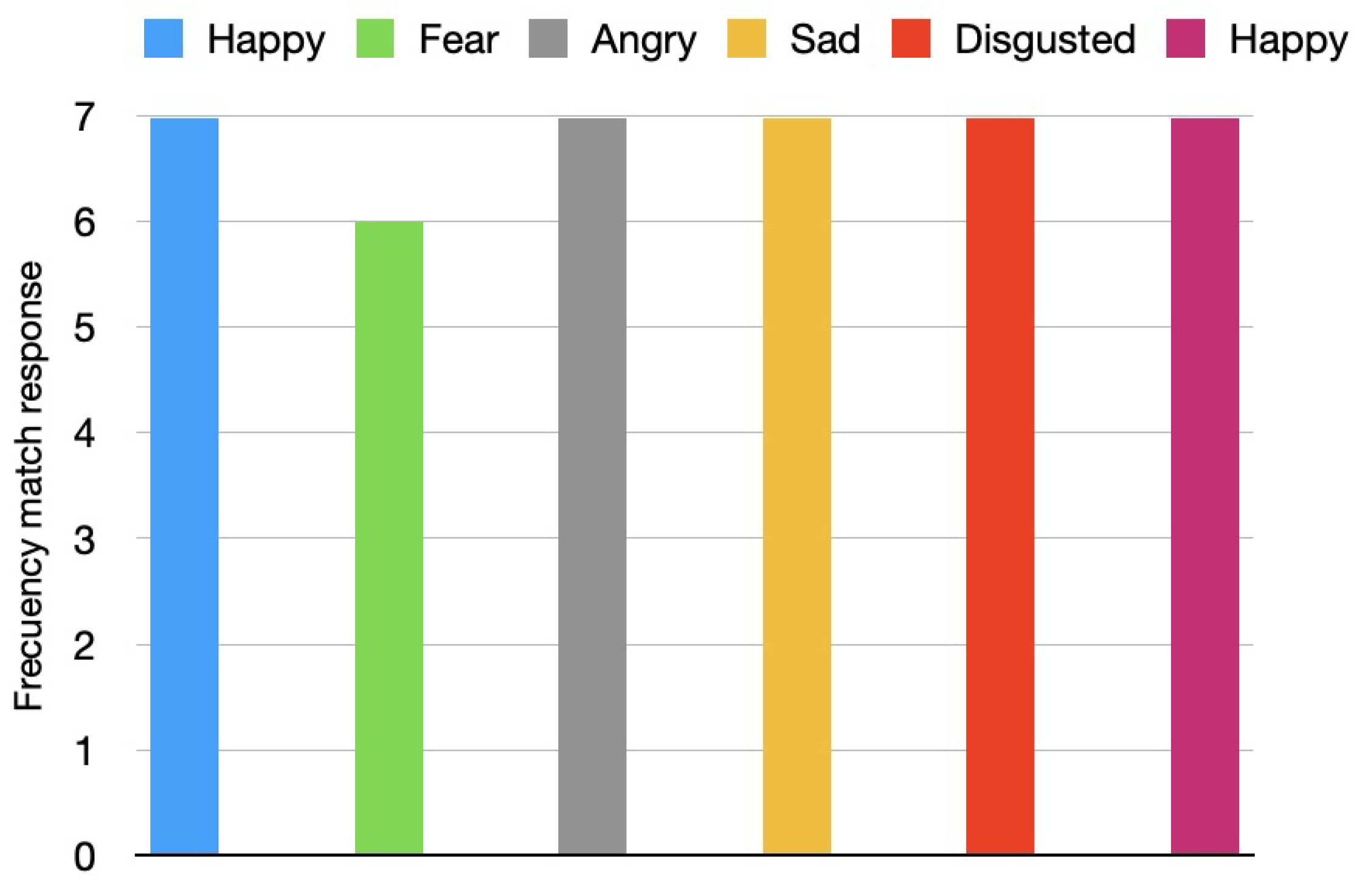

Emotion Matching: As shown in Figure 10, most children correctly identified the emotional expression displayed by the robot. Only one response was a mismatch; the remaining unmatched cases occurred because the children did not provide an answer. A possible explanation is that using pictograms as part of a communication system requires cognitive effort, which can lead to fatigue if overused or if the child is overwhelmed by the demands of the interaction [26]. Notably, a few children chose not to continue responding after the initial rounds of interaction.

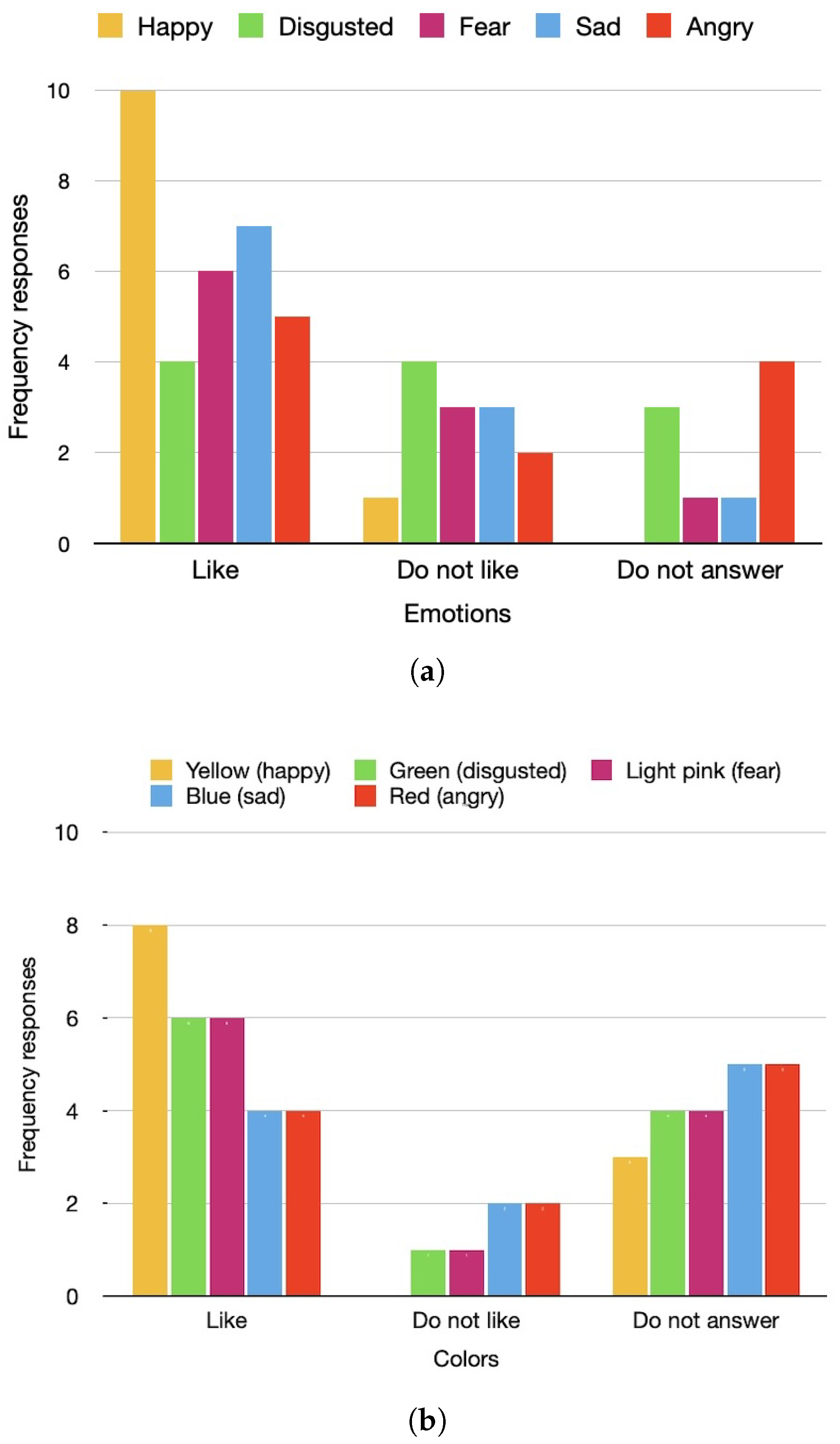

Emotion and Color Preferences: Figure 11 presents the children’s preferences regarding the robot’s emotions and associated colors. In Figure 11(a), the "happy" emotion was the most positively received, followed by "sad" and "fear." Figure 11(b) shows that the color yellow (used during the happy emotion) was the most preferred, followed by green and light pink. However, not all children answered these questions.

Figure 10. Children’s response frequency when identifying the robot’s emotional expressions in Scenario 1.


Figure 11. Children’s preferences in Scenario 1. (a) Preferred emotional expressions displayed by the robot. (b) Preferred colors associated with the robot’s emotional expressions.


5.1.2. Qualitative Analysis of Children Behavior

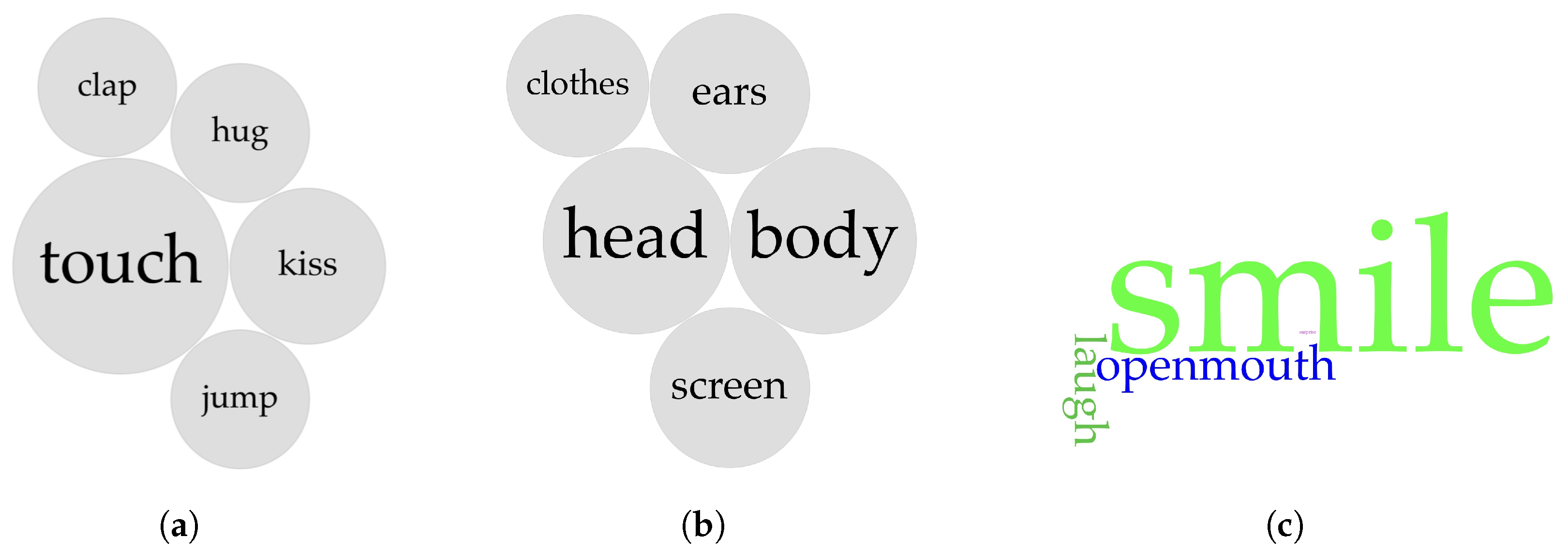

Based on the video analysis, four main types of interaction were identified:

Physical gestures, such as touching, hugging, clapping, jumping, and kissing the robot.

Facial expressions, including smiling, laughing, and opening the mouth.

Visual attention, such as sustained eye gaze toward the robot.

Proxemics, including how children approached the robot physically.

During the object-based interaction task, all children displayed sustained visual attention toward the robot. They maintained their gaze until the researcher interrupted after each toy interaction, following the dynamics established for the experiment. After responding, they resumed looking at the robot, often orienting their entire body in its direction. For this reason, visual attention was not counted as discrete events: children kept their gaze on the robot until the researcher asked a question, at which point they looked toward the researcher, other areas of the classroom, or the toy box.

In terms of proxemics, since the game required children to approach the robot to show it objects, they got close enough to touch, hug, or even kiss it. They also willingly moved closer after the game ended, suggesting a natural tendency to engage with the robot.

Regarding physical gestures, as shown in Figure 12(a), the most common actions involved touching parts of the robot. The children primarily touched the body, head, and ears of the robot, as marked in Figure 12(b). Interestingly, two children kissed the robot, one clapped, and another jumped while laughing in response to the robot’s angry expression.

Facial expressions are shown in Figure 12(c). Smiling was the most frequently observed expression. Some children also opened their mouths in reaction to the robot’s fearful or disgusted expressions, emotions in which the robot’s own mouth appeared open. This may suggest an emerging tendency to imitate the robot’s facial features, a behavior that warrants further investigation in future studies.

Figure 12.

Types of behavioral interactions: (a) Physical gestures. (b) Areas of the robot touched by children. (c) Facial expressions.

Table 4 summarizes the frequency of each type of observed interaction. Smiling was the most frequent facial expression and was recorded separately from laughing due to its lower intensity and clearer consistency. Touching was the most common physical gesture. Interestingly, some children also clapped, jumped, and kissed the robot. Although these actions occurred less frequently, they were unexpected. Because they may indicate a strong level of engagement, analyzing these behaviors in a larger sample could provide further insights.
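A frequency summary of this kind can be produced from coded video annotations. The following is a minimal sketch, assuming each annotated event is stored as a (child, behavior) pair; the `CATEGORIES` mapping, the function name, and the sample events are hypothetical placeholders for illustration and are not the study's data or coding tool.

```python
from collections import Counter

# Hypothetical coding scheme: behavior label -> interaction type.
# Visual attention is omitted because it was not counted as discrete events.
CATEGORIES = {
    "touch": "physical_gesture",
    "hug": "physical_gesture",
    "clap": "physical_gesture",
    "jump": "physical_gesture",
    "kiss": "physical_gesture",
    "smile": "facial_expression",
    "laugh": "facial_expression",
    "open_mouth": "facial_expression",
}


def tally_events(events):
    """Count annotated events per behavior and per interaction type.

    events: iterable of (child_id, behavior) tuples.
    """
    by_behavior = Counter()
    by_category = Counter()
    for _child_id, behavior in events:
        if behavior not in CATEGORIES:
            raise ValueError(f"Unknown behavior label: {behavior!r}")
        by_behavior[behavior] += 1
        by_category[CATEGORIES[behavior]] += 1
    return by_behavior, by_category


# Made-up placeholder annotations, NOT the observations reported in Table 4.
sample_events = [
    ("c1", "touch"), ("c1", "smile"),
    ("c2", "touch"), ("c2", "kiss"),
    ("c3", "smile"), ("c3", "laugh"),
]

by_behavior, by_category = tally_events(sample_events)
print(by_behavior.most_common())
print(dict(by_category))
```

Keeping smiling and laughing as separate labels mirrors the choice described above to record them separately.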

5.2. Scenario 2

5.2.1. Quantitative Findings

In the case of Scenario 2, the results are related to the recognition of emotions by the children and their responses to the questionnaire.

Response match to robot’s emotional expression: As shown in Figure 14, most children correctly recognized the robot’s emotional expressions. Only one child mismatched the emotion “fear”.

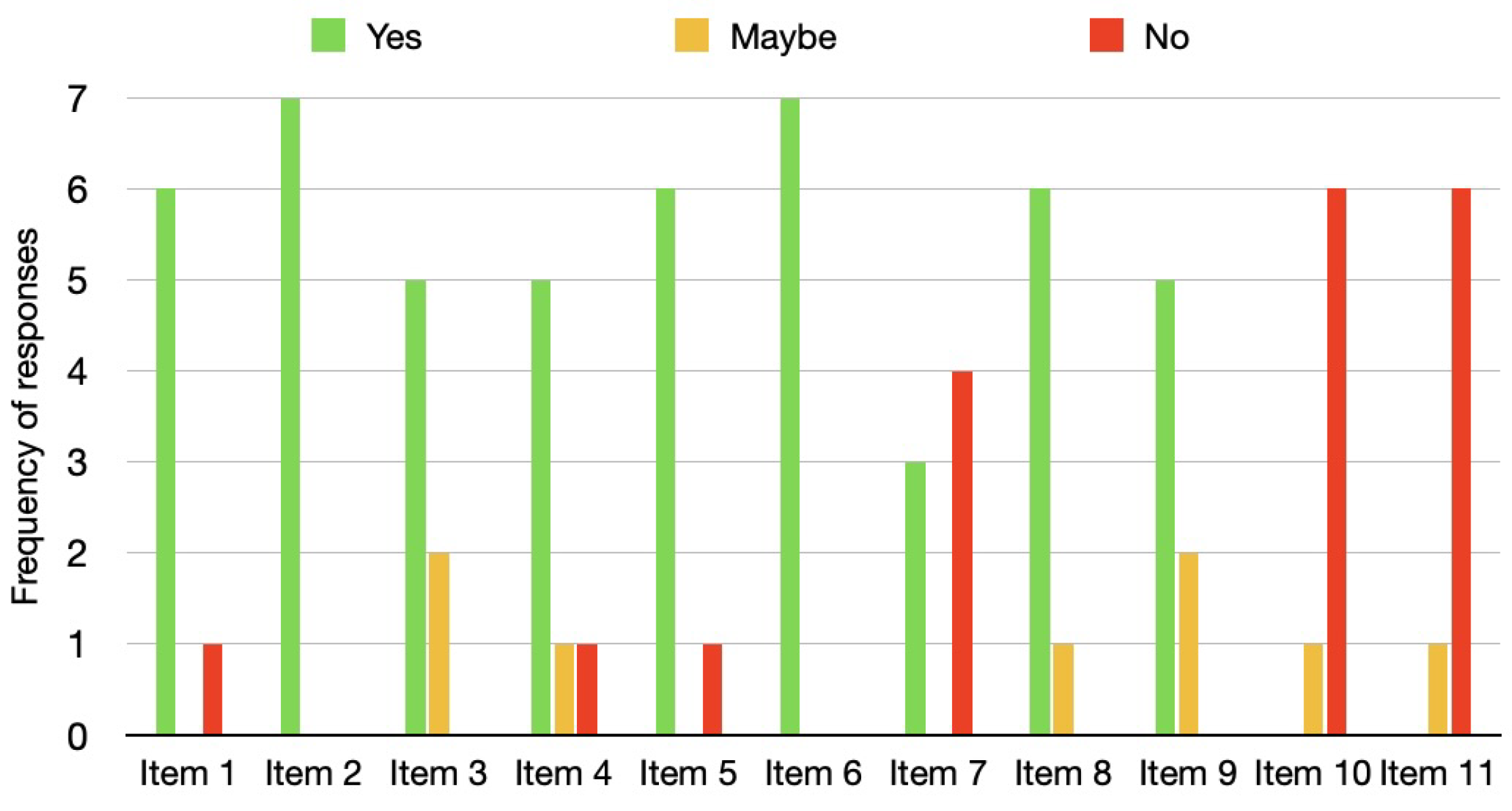

Response to the questionnaire: Figure 15 shows the frequency of responses for each item. Item 1 and Item 2 received a high number of "Yes" responses, indicating that the children would like to see or play with the robot again. Item 6, "JARI is funny," also received a high number of "Yes" responses, with no children selecting "Maybe" or "No."

For Item 7, "JARI is like a person like me," four children responded "No," while three children considered the robot to be like a person. In the case of Item 8, most children viewed the robot as a pet and imagined that it could be alive.

Finally, Items 10 and 11 revealed that most children were not afraid of the robot and believed that using it would not result in breaking it.
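The per-item frequencies plotted in Figure 15 correspond to a simple count of each answer option. A minimal sketch of such a tally is given below, assuming three answer options ("Yes", "Maybe", "No") and that unanswered items are stored as `None`; the function name and the sample responses are hypothetical and do not reproduce the study's data.

```python
from collections import Counter

OPTIONS = ("Yes", "Maybe", "No")


def response_frequencies(responses):
    """Return {item: {option: count}} for a dict of item -> list of answers.

    Missing answers (None) are skipped, since not every child
    necessarily answered every item.
    """
    table = {}
    for item, answers in responses.items():
        counts = Counter(a for a in answers if a is not None)
        unknown = set(counts) - set(OPTIONS)
        if unknown:
            raise ValueError(f"Unexpected answers for {item}: {sorted(unknown)}")
        table[item] = {option: counts.get(option, 0) for option in OPTIONS}
    return table


# Made-up placeholder responses, NOT the answers reported in Figure 15.
sample_responses = {
    "Item 1": ["Yes", "Yes", "Maybe", None],
    "Item 6": ["Yes", "Yes", "Yes", "Yes"],
}

frequency_table = response_frequencies(sample_responses)
for item, counts in frequency_table.items():
    print(item, counts)
```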

5.2.2. Qualitative Analysis - Teacher and Therapist Observations

As part of the evaluation process, two educational professionals from the Centro Cultural Palomeras completed a post-interaction questionnaire regarding the children’s engagement with the JARI robot. Both observers noted that the children appeared generally motivated during the session and interacted spontaneously with the robot. They emphasized that the children were attentive to the robot’s expressions and responded appropriately in most cases, demonstrating a clear effort to interpret its emotional cues.

Additionally, the professionals observed that the use of pictograms facilitated communication and that the group setting promoted collaborative responses. One of the respondents highlighted that the robot’s design and emotional clarity were effective in maintaining the children’s attention, particularly when expressions were exaggerated and supported by color. Both professionals agreed that the robot has strong potential as a complementary tool in therapeutic and educational contexts for children with ASD.

Figure 13.

Children’s actions during interaction with the JARI robot: (a) Child touching the robot’s head. (b) Child approaching and looking closely at the robot’s screen. (c) Child putting a carrot on the robot’s screen. (d) Child touching the robot’s ears. (e) Child looking at the robot’s screen from very close and touching the robot’s head with her own. (f) Child smiling while interacting with the robot after presenting an object.

Figure 14.

Frequency of children’s responses matching the robot’s emotion in Scenario 2.

Figure 15.

Children’s answers to the questionnaire.

6. Discussion

The paper introduces a new design of a social robot, created through a multidisciplinary approach to address the needs of children with Autism Spectrum Disorder (ASD). The robot was designed to act as a social mediator among children, parents, therapists, and doctors. The JARI robot has a zoomorphic but undefined shape (according to the children’s responses and behavioral observations), which encourages children to approach it, touch it, hug it, and even kiss it. Jumping and clapping occurred with one child who became excited when the robot changed its emotion to "disgusted." These actions reflect engagement [27, 28] and address our research question, suggesting that the robot’s shape and emotional characteristics attract children. Some researchers suggest that robot-like or animal-like appearances often attract more attention from children with ASD than strictly humanoid designs [6, 29].

The emotion-matching results were particularly interesting. The “Sad” emotion received the highest rating, followed by “Happy” and “Disgusted”. These emotions were also attractive to children in the first scenario, with most of them enjoying the robot’s happy and sad expressions. On the other hand, “Disgusted” was the emotion that some children did not like. It is worth noting that the teachers at CASP indicated that the children had only just started learning about disgust. Moreover, the “Angry” emotion did not receive many responses, possibly because it was one of the last emotions presented and the children were already tired from answering many questions. Some children took longer to respond, sometimes needing two or three attempts, and some got distracted and did not answer at all. In future evaluations, it would be better to ask just one global question to avoid exhausting the children and causing them to lose focus.

The color questions could be considered somewhat redundant, as yellow and the “Happy” emotion received the highest ratings. However, an interesting observation was the green color. Even though some children did not like the "Disgusted" emotion, they seemed to enjoy the green color. Similarly, they liked the light pink color associated with "Fear".

In Scenario 2, children were mostly able to identify the emotions, which may suggest that emotions are understood and recognized socially. This could be related to how the school teaches them. Based on this pilot evaluation, the design seems to be heading in the right direction, although emotions like "Fear" may require further revision in terms of expression and movement.

The questionnaire, in turn, was tested to assess whether children with ASD could understand it and to analyze how they responded. This analysis will help determine whether the questionnaire can be useful in future studies involving larger samples. In this small group, Item 2 ("I want to play with JARI") received the highest number of "Yes" responses, suggesting that the robot engaged the children in a way that they found interesting or enjoyable. This was further supported by the high number of "Yes" responses to Item 6 ("JARI is funny").

Regarding Item 7 ("JARI is like a pet"), children predominantly viewed the robot as a pet. This could be explained by the robot’s shape and size, which may have influenced their perception. Additionally, the responses to Item 8 ("I imagine JARI is alive") and Item 9 suggest that children may be influenced by the theory of animism proposed by Piaget [30], where children often associate movement with being alive, a cognitive framework that is prominent among children under the age of 8. However, it would be interesting to explore why some participants, even those above 8 years old, responded with "Maybe", as this could indicate a more complex understanding of animacy or expectations about the robot.

In this initial exploration, the robot’s ability to express emotions through a combination of facial expressions displayed on a screen, dynamic LED lighting, and coordinated movements of its head and body was sufficient to attract the attention of children with ASD. These multimodal features engaged both non-verbal children requiring communication support through pictograms and verbal children on the Asperger spectrum. While the results of this study provide valuable insights, further research is needed to refine and expand the findings. Future directions include: (1) applying Scenario 1 and Scenario 2 to a larger sample to validate the results, (2) incorporating additional features to enable automatic responses to events such as touching or hugging, (3) personalizing certain aspects of the robot, such as integrating onomatopoeic sounds, and (4) enabling gesture and speech recognition functionalities to enhance the robot’s interactive capabilities. These steps will help optimize the robot’s design and functionality, offering greater potential for supporting children with ASD in social and therapeutic settings.

7. Conclusions

JARI, a robot with an undefined zoomorphic shape that displays emotional expressions using colored lighting and movement, effectively encouraged children with Autism Spectrum Disorder (ASD) to interact, as they approached, touched, hugged, and even kissed it. These behaviors suggest that the robot’s design and emotional expressions facilitated engagement. The "Sad" emotion received the highest rating, followed by "Happy" and "Disgusted", though "Angry" had fewer responses, probably due to fatigue in the children.

Children perceived the robot mainly as a "pet," which can be attributed to its design and size. Many also imagined it as "alive," reflecting a cognitive framework typical for children under eight. Responses to the questionnaire suggest that the children understood it and responded positively, particularly to items like "I want to play with JARI" and "JARI is funny". However, they primarily viewed the robot as non-human, mainly as a pet.

Future research should involve larger samples and explore additional features, such as automatic responses to touch or hugging, onomatopoeic sounds, and gesture or speech recognition. These modifications could enhance the robot’s interactivity, further supporting social and therapeutic interactions for children with ASD.

Author Contributions

Conceptualization, E.M. and H.O.; methodology, E.M. and H.O.; validation, E.M., R.C. and C.G.; investigation, E.M., R.C. and C.G.; data curation, E.M.; writing (original draft preparation), E.M., H.O. and R.C.; writing (review and editing), E.M. and R.C.; funding acquisition, E.M. and C.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been funded by the Social Responsibility Direction of the Pontificia Universidad Católica del Perú and by RoboCity 2030-DIH-CM Madrid Robotics Digital Innovation Hub (Applied Robotics for Improving Citizens’ Quality of Life, Phase IV; S2018/NMT-4331), funds from the Community of Madrid, co-financed with EU Structural Funds.

Informed Consent Statement

Informed consent was obtained from all involved in the study. Written informed consent has been obtained from the parents or tutors of the participants to publish this paper.

Acknowledgments

The authors express gratitude for the collaboration of Centro Ann Sullivan del Perú, Lima, and Colegio Centro Cultural Palomeras, Madrid, which allowed the authors to apply the protocol of this study. They also thank Danna Arias, Marlene Bustamante, Marie Patilongo and Sheyla Blumen for their contribution to the design of this version of the JARI robot.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| ARASAAC | Aragonese Center of Augmentative and Alternative Communication |
| ASD | Autism Spectrum Disorder |
| CASP | Centro Ann Sullivan del Perú |
| DoF | Degrees Of Freedom |
| JARI | Joint Attention through Robotic Interaction |
| PLA | Polylactic Acid |
| RQ | Research Question |
| UVE | Uncanny Valley Effect |
| WoZ | Wizard of Oz |

References

1. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders: DSM-5; American Psychiatric Association, 2013.

2. Klin, A.; Jones, W.; Schultz, R.; Volkmar, F.; Cohen, D. Defining and Quantifying the Social Phenotype in Autism. The American Journal of Psychiatry, 159, 895–908, [12042174].

3. Zygopoulou, M. A Systematic Review of the Effect of Robot Mediated Interventions in Challenging Behaviors of Children with Autism Spectrum Disorder. SHS Web of Conferences, 139, 05002.

4. Scassellati, B.; Admoni, H.; Matarić, M. Robots for Use in Autism Research. Annual Review of Biomedical Engineering, 14, 275–294.

5. Cabibihan, J.J.; Javed, H.; Ang, M.; Aljunied, S.M. Why Robots? A Survey on the Roles and Benefits of Social Robots in the Therapy of Children with Autism. International Journal of Social Robotics, 5, 593–618.

6. Watson, S.W.; Watson, S.W. Socially Assisted Robotics as an Intervention for Children With Autism Spectrum Disorder.

7. Coşkun, B.; Uluer, P.; Toprak, E.; Barkana, D.E.; Kose, H.; Zorcec, T.; Robins, B.; Landowska, A. Stress Detection of Children with Autism Using Physiological Signals in Kaspar Robot-Based Intervention Studies. In Proceedings of the 2022 9th IEEE RAS/EMBS International Conference for Biomedical Robotics and Biomechatronics (BioRob), 2022-08, pp. 1–7.

8. Gouaillier, D.; Hugel, V.; Blazevic, P.; Kilner, C.; Monceaux, J.; Lafourcade, P.; Marnier, B.; Serre, J.; Maisonnier, B. Mechatronic Design of NAO Humanoid. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, 2009-05, pp. 769–774.

9. Hussain, J.; Mangiacotti, A.; Franco, F.; Chinellato, E. Robotic Music Therapy Assistant: A Cognitive Game Playing Robot. In Proceedings of the Social Robotics; Ali, A.A.; Cabibihan, J.J.; Meskin, N.; Rossi, S.; Jiang, W.; He, H.; Ge, S.S., Eds. Springer Nature, 2024, pp. 81–94.

10. Kolibab, C.; Popa, D.; Das, S.; Alqatamin, M. Motion Assessment Using Segment-based Online Dynamic Time Warping (SODTW) during Social Human Robot Interaction (HRI) with Zeno Robot. Posters-at-the-Capitol.

11. Fussi, A. Affective Responses to Embodied Intelligence. The Test-Cases of Spot, Kaspar, and Zeno. Passion: Journal of the European Philosophical Society for the Study of Emotions, 1, 85–102.

12. Chien, S.E.; Yu-Shan, C.; Yi-Chuan, C.; Yeh, S.L. Exploring the Developmental Aspects of the Uncanny Valley Effect on Children’s Preferences for Robot Appearance. International Journal of Human–Computer Interaction, 0, 1–11.

13. Tamaral, C.; Hernandez, L.; Baltasar, C.; Martin, J.S. Design Techniques for the Optimal Creation of a Robot for Interaction with Children with Autism Spectrum Disorder. Machines, 13, 67.

14. Fengr, H.; Mahoor, M.H.; Dino, F. A Music-Therapy Robotic Platform for Children with Autism: A Pilot Study, [2205.04251].

15. Dei, C.; Falerni, M.M.; Nicora, M.L.; Chiappini, M.; Malosio, M.; Storm, F.A. Design of a Multimodal Device to Improve Well-Being of Autistic Workers Interacting with Collaborative Robots, 2023-04-27, [2304.14191].

16. Puglisi, A.; Caprì, T.; Pignolo, L.; Gismondo, S.; Chilà, P.; Minutoli, R.; Marino, F.; Failla, C.; Arnao, A.A.; Tartarisco, G.; et al. Social Humanoid Robots for Children with Autism Spectrum Disorders: A Review of Modalities, Indications, and Pitfalls. Children, 9, 953.

17. Marino, F.; Chilà, P.; Sfrazzetto, S.T.; Carrozza, C.; Crimi, I.; Failla, C.; Busà, M.; Bernava, G.; Tartarisco, G.; Vagni, D.; et al. Outcomes of a Robot-Assisted Social-Emotional Understanding Intervention for Young Children with Autism Spectrum Disorders. Journal of Autism and Developmental Disorders, 50, 1973–1987.

18. Arsić, B.; Gajić, A.; Vidojković, S.; Maćešić-Petrović, D.; Bašić, A.; Parezanović, R.Z. The Use of NAO Robots in Teaching Children with Autism. European Journal of Alternative Education Studies 2022-04-08, 7.

19. Gudlin, M.; Ivanković, I.; Dadić, K. Robots Used In Therapy For Children With Autism Spectrum Disorder. American Journal of Multidisciplinary Research and Development (AJMRD) 2022, 4.

20. Pakkar, R.; Clabaugh, C.; Lee, R.; Deng, E.; Mataric, M.J. Designing a Socially Assistive Robot for Long-Term In-Home Use for Children with Autism Spectrum Disorders, [2001.09981].

21. Ali, S.; Abodayeh, A.; Dhuliawala, Z.; Breazeal, C.; Park, H.W. Towards Inclusive Co-creative Child-robot Interaction: Can Social Robots Support Neurodivergent Children’s Creativity? In Proceedings of the 2025 ACM/IEEE International Conference on Human-Robot Interaction. IEEE Press, 2025-03-04, HRI ’25, pp. 321–330.

22. Costescu, C.; Vanderborght, B.; David, D. Robot-Enhanced CBT for Dysfunctional Emotions in Social Situations for Children with ASD. Journal of Evidence-Based Psychotherapies, 17, 119–132.

23. Hurst, N.; Clabaugh, C.; Baynes, R.; Cohn, J.; Mitroff, D.; Scherer, S. Social and Emotional Skills Training with Embodied Moxie, [2004.12962].

24. Riek, L.D. Wizard of Oz Studies in HRI: A Systematic Review and New Reporting Guidelines. Journal of Human Robot Interaction, 1, 119–136.

25. Madrid Ruiz, E.P.; León, R.C.; García Cena, C.E. Quantifying Intentional Social Acceptance for Effective Child-Robot Interaction. In Proceedings of the 2024 7th Iberian Robotics Conference (ROBOT), 2024-11, pp. 1–6.

26. Thiemann-Bourque, K.; Brady, N.; McGuff, S.; Stump, K.; Naylor, A. A Peer-Mediated Augmentative and Alternative Communication Intervention for Minimally Verbal Preschoolers With Autism. Journal of Speech, Language, and Hearing Research: JSLHR 2016, 59, 1133–1145, [27679841].

27. Dubois-Sage, M.; Jacquet, B.; Jamet, F.; Baratgin, J. People with Autism Spectrum Disorder Could Interact More Easily with a Robot than with a Human: Reasons and Limits. Behavioral Sciences 2024-02-12, 14, 131, [38392485].

28. Schadenberg, B.R.; Reidsma, D.; Heylen, D.K.J.; Evers, V. Differences in Spontaneous Interactions of Autistic Children in an Interaction With an Adult and Humanoid Robot. Frontiers in Robotics and AI, 7.

29. Pinto-Bernal, M.J.; Sierra M., S.D.; Munera, M.; Casas, D.; Villa-Moreno, A.; Frizera-Neto, A.; Stoelen, M.F.; Belpaeme, T.; Cifuentes, C.A. Do Different Robot Appearances Change Emotion Recognition in Children with ASD? Frontiers in Neurorobotics, 17, 1044491, [36937553].

30. Rabindran.; Madanagopal, D. Piaget’s Theory and Stages of Cognitive Development- An Overview. Scholars Journal of Applied Medical Sciences 2020-09-25, 8, 2152–2157.

1 ARASAAC website: A Pictographic Reference System in Augmentative and Alternative Communication.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).