2. Methods

-

1.

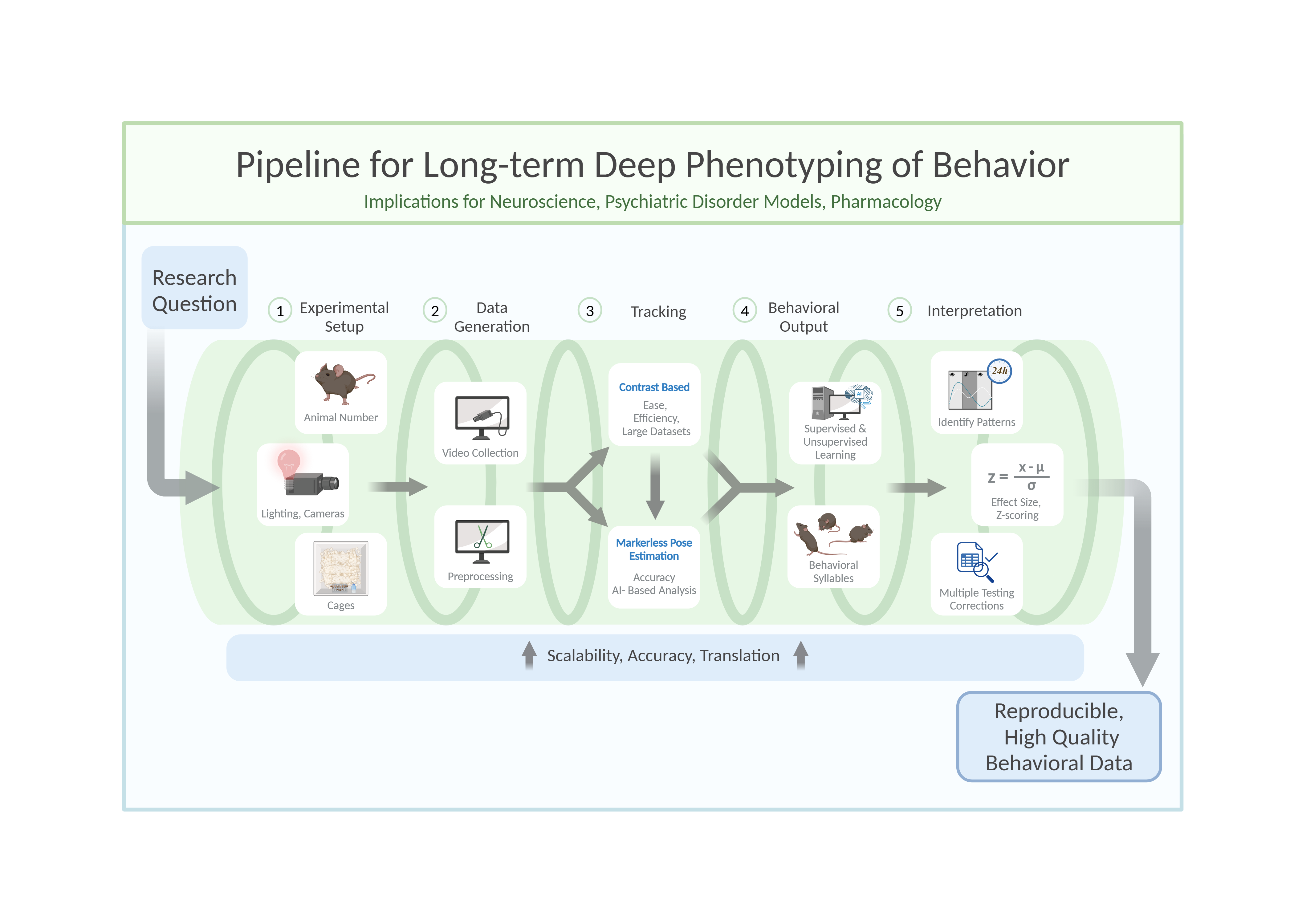

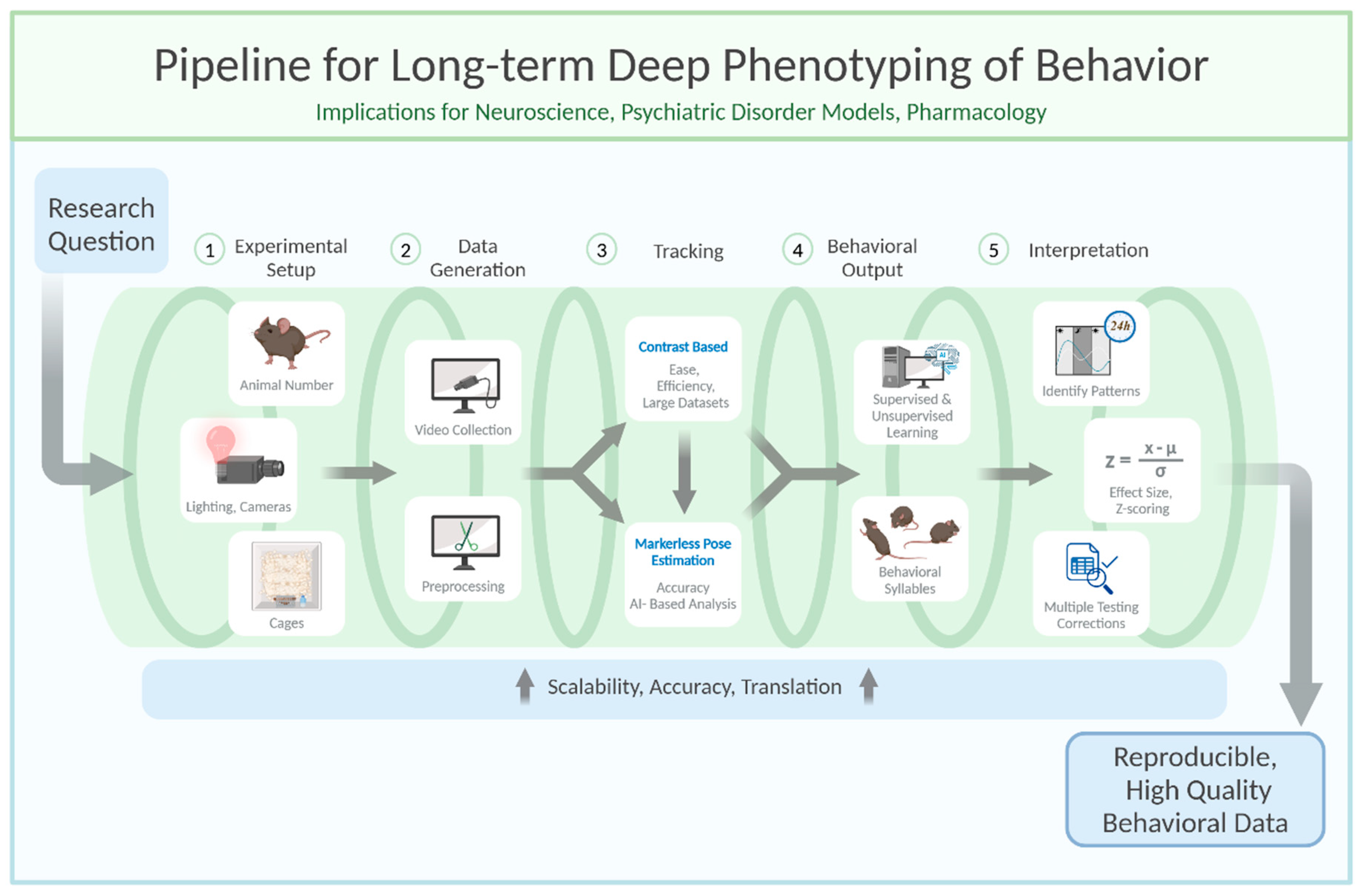

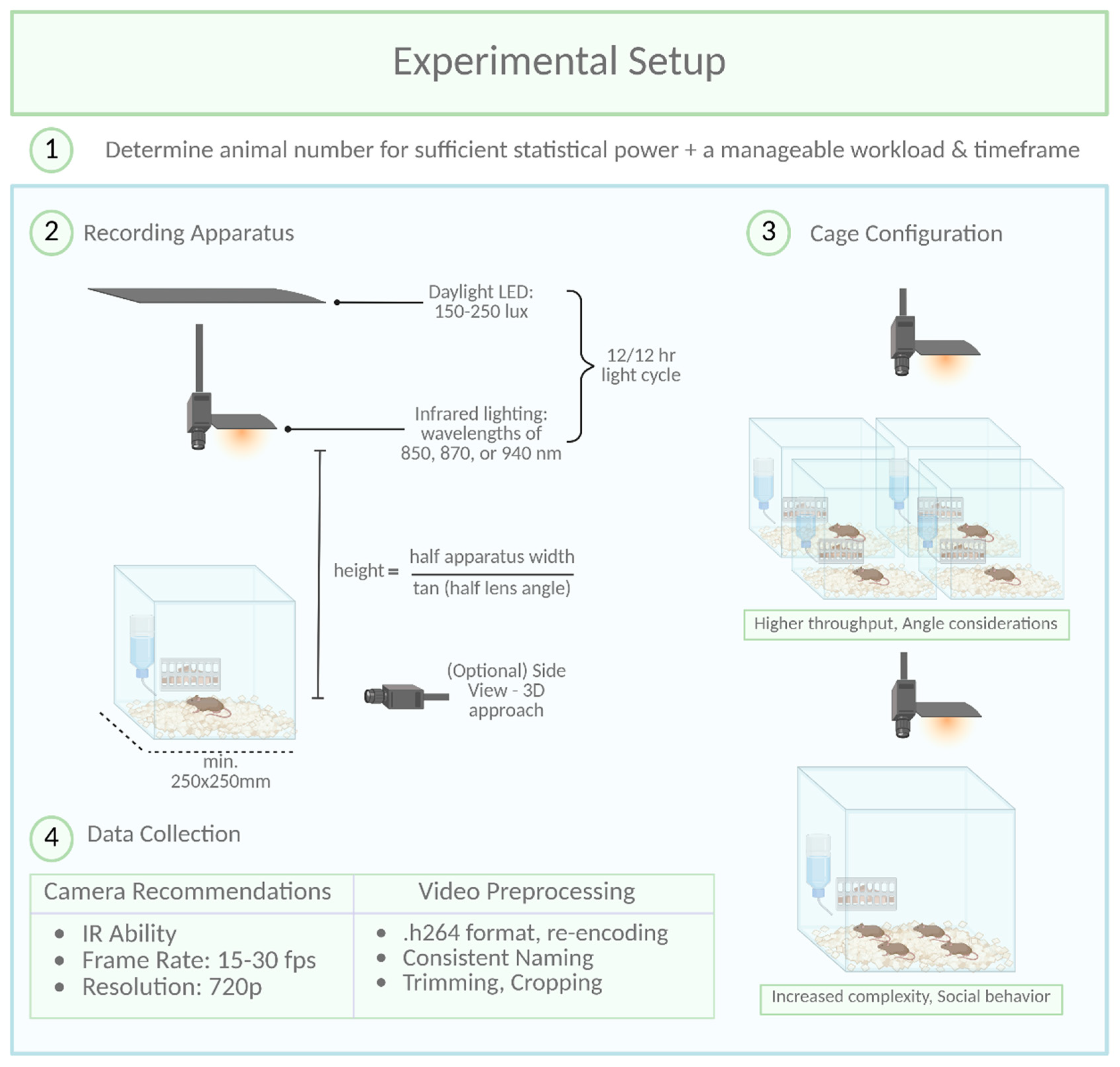

Setting Up an Experiment

Rodents are commonly used as model organisms due to their genetic similarity to humans and the wealth of behavioral paradigms available (Campbell et al., 2024; Koert et al., 2021; Petković & Chaudhury, 2022; von Mücke-Heim et al., 2023). However, capturing their behavior in a meaningful and reproducible way requires careful planning and execution. The process begins with setting up the experimental conditions, including determining the number of animals, cage configuration, and camera setup. Achieving high-quality video recordings demands attention to camera settings, such as resolution, frame rate, and daylight/infrared illumination, to ensure compatibility with subsequent processing and analysis stages. Furthermore, the choice of file type and compression settings can significantly affect data quality and storage efficiency.

When determining the number of animals per experimental cohort, two key factors must be balanced: (1) ensuring sufficient statistical power (i.e., the minimum number of animals needed to reject the null hypothesis) while adhering to the 3Rs principles (reduce, refine, replace) (Hubrecht & Carter, 2019), and (2) maintaining a manageable workload for experimenters within a reasonable timeframe. In longitudinal studies, precise timing is essential, as experimental procedures near light/dark transitions can disrupt circadian rhythms (Savva et al., 2024).

To meet statistical power requirements, behavioral research typically includes 6 to 18 animals per experimental condition in exploratory setups. However, the number of animals tested per cohort depends on factors such as room size, experimenter availability, and recording setup constraints. To reach the target sample size predefined by power analysis, experiments are conducted in multiple cohorts as needed. This approach not only accommodates logistical limitations but also minimizes cohort-specific variability, thereby enhancing reproducibility. It is only valid, however, if the sample size (n) is defined before the experiment begins. Adding cohorts after analyzing the first cohort in order to reach a specific p-value is not a valid method.
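As a rough illustration of how a target sample size can be fixed in advance, the sketch below uses the textbook normal approximation for a two-sided comparison of two groups. The effect sizes, the function names, and the cohort capacity are illustrative assumptions, not values from this protocol; a dedicated power-analysis tool will usually return slightly larger numbers because it uses the t-distribution.

```python
import math
from statistics import NormalDist


def animals_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Normal-approximation sample size per group for a two-sided,
    two-group comparison with standardized effect size d (Cohen's d)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    n = 2 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2
    return math.ceil(n)


def cohorts_needed(n_per_group, animals_per_group_and_cohort):
    """Number of cohorts required when each cohort can only hold a
    limited number of animals per group (hypothetical capacity)."""
    return math.ceil(n_per_group / animals_per_group_and_cohort)


if __name__ == "__main__":
    for d in (1.0, 1.5):
        print(f"d = {d}: {animals_per_group(d)} animals per group")
    print("cohorts for n = 16 at 6 per cohort:", cohorts_needed(16, 6))
```

For large assumed effects (d between 1.0 and 1.5) this approximation yields 7 to 16 animals per group, which lies within the 6 to 18 range mentioned above. The key point is that the calculation is done once, before the first cohort is recorded.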

Experimental cages must provide ample space for free and uninterrupted movement to ensure accurate interpretation of behaviors, particularly those involving avoidance or social interaction. To facilitate unobstructed camera observation, cages with high walls (400-500 mm) and an open top are recommended. This design prevents escapes by jumping and allows for a clear top-down view. A minimum floor size of 250 x 250 mm is advised for a single adult C57BL/6 mouse. Importantly, the cage should be large enough to allow movement without forcing the mouse to cross the center, as this can confound behavioral analysis, such as anxiety-related avoidance of the center zone. For studies involving social behavior or multiple mice, larger cages are necessary to avoid crowding and promote natural interactions.

Tracking contrast can be enhanced by using different bedding types, although this offers only slight improvements when camera image quality is already sufficient. Attention should also be given to the contrast between the mice and the cage walls. For example, tracking errors may occur if a black mouse climbs a black cage wall. Additionally, patterned or reflective cage walls should be avoided, as they may be mistaken for mouse body parts by tracking algorithms, leading to inaccuracies in the analysis. Including a solid, pre-built nest, such as a transparent plastic house, is beneficial as it provides a natural retreat for mice to hide and sleep. To prevent mice from moving the nest, it should be fixed to the cage wall. However, tracking issues may arise when mice are inside these structures, even if they are transparent. This can be addressed by excluding the structure as a tracking zone, filtering out frames where the mouse is undetectable. Plastic houses offer an additional advantage: mice often climb on top, using them as platforms for exploratory behavior.

To ensure accurate tracking, all movable solid objects should be securely fixed to the cage wall or floor, as shifting objects can significantly disrupt tracking accuracy over time.

The setup configuration for behavioral recordings depends on several factors, including cage dimensions, camera and lens specifications, IR lighting, and regular lighting for non-active phase observations (Figure 2). While trial and error can help establish a setup, online calculators simplify component selection by determining optimal parameters like camera height or field of view, based on the general formula:

Experimenters often balance cost, space, and technical requirements, with compact machine vision cameras (e.g. Teledyne Firefly, Basler, and others) offering excellent performance. These cameras, paired with wide-aperture lenses (e.g., f/1.8), capture sufficient light and reduce reliance on high ISO settings that introduce graininess. A 6 mm lens mounted at 100 cm can effectively cover a 50 cm x 50 cm cage area, though zoom lenses may provide more flexibility at the cost of low-light performance.
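The relationship between lens, mounting height, and covered area can be sketched with the pinhole (thin-lens) approximation, which holds when the working distance is much larger than the focal length. The sensor dimensions below (4.8 mm x 3.6 mm, a nominal 1/3-inch format) are an assumption for illustration only; the actual sensor size must be taken from the camera's datasheet.

```python
def field_of_view_mm(sensor_mm, focal_length_mm, working_distance_mm):
    """Approximate field of view along one sensor axis
    (pinhole model: FOV = sensor size * distance / focal length)."""
    return sensor_mm * working_distance_mm / focal_length_mm


def required_height_mm(target_fov_mm, sensor_mm, focal_length_mm):
    """Mounting height needed to cover a target width along one axis."""
    return target_fov_mm * focal_length_mm / sensor_mm


if __name__ == "__main__":
    # Assumed sensor: 4.8 mm x 3.6 mm; lens and height as in the text above.
    width = field_of_view_mm(4.8, 6.0, 1000.0)
    height = field_of_view_mm(3.6, 6.0, 1000.0)
    print(f"Covered area at 100 cm: {width:.0f} mm x {height:.0f} mm")
    # Minimum height so that the shorter sensor axis still spans 500 mm.
    print(f"Minimum height for 500 mm: {required_height_mm(500.0, 3.6, 6.0):.0f} mm")
```

Under these assumptions a 6 mm lens at 100 cm covers roughly 80 cm x 60 cm, which comfortably includes a 50 cm x 50 cm cage. A smaller sensor or a longer focal length would require a greater mounting height.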

For 24-hour recordings, it is crucial to consider the active (dark) and non-active (light) phases of mice, as their nocturnal nature makes the dark phase particularly relevant. However, behaviors during the light phase, such as sleep patterns or resting disruptions, can also provide valuable insights. When implementing a light-dark cycle, typically 12 hours of light followed by 12 hours of darkness, it may be beneficial to consider reversing this cycle. This adjustment could be particularly important for animal facilities where daily staff duties occur near animal cages. Such a change might help mitigate issues related to sleep and rest disruption caused by human activities. Experiments during the dark phase should use infrared (IR) lighting, as visible red light disrupts rodent circadian rhythms (Dauchy et al., 2015). Specialized IR LEDs (wavelengths 850, 870, or 940 nm) are ideal, offering minimal heat and optimal illumination. Positioning IR LEDs directly above the cage avoids shadows on the floor, ensuring clear footage. For the light phase, LED bulbs with adjustable outputs are preferred due to low heat production and flexible power settings. Diffusion materials can further distribute light evenly across the cage. Importantly, light intensity should remain stable between 150–250 lux.

Wide-aperture lenses used at low f-numbers (e.g., f/1.8) may pose challenges when switching between IR and regular lighting, as the shallow depth of field can cause blurriness. Stopping the lens down to f/8.0 increases the depth of field, accommodating both lighting conditions, though less light reaches the sensor and the higher ISO settings needed to compensate may introduce noise. Experimenters must balance these factors based on the specific requirements of their study.

If multiple cages are recorded with one camera, the camera should be positioned at an equal distance from all cages to ensure uniform coverage without obstructing the view of the mouse. Before choosing a multi-cage-per-camera setup, consider whether the angle and cage wall visibility must be identical for each cage in future behavioral analyses.

A top-down camera is the standard and effective choice for monitoring animal behavior in homecages, especially for tracking movement on the horizontal plane. However, if z-plane information—such as rearing or jumping—is required, options like side-view cameras, multiple synchronized cameras, motion capture systems with markers, or RGB-D depth cameras can be used. These solutions improve vertical movement detection but come with trade-offs: higher computational demands, added complexity, spatial constraints, and increased costs. For example, synchronizing multiple camera streams and managing large video datasets can be technically challenging and resource-intensive. Depth cameras, while offering direct z-axis measurements, often require careful calibration and are sensitive to lighting conditions. Motion capture systems eliminate the need for pose estimation software but require physical markers, which may interfere with natural behavior. Moreover, transforming multi-camera 2D data into usable 3D representations demands specialized expertise. Therefore, researchers must weigh the benefits of z-plane resolution against the logistical and computational demands of these more advanced setups.

-

2.

Camera settings

Several commercially available camera systems meet the requirements for behavioral recordings, with a key feature being the ability to record in infrared (IR) light. Standard cameras typically include an IR filter between the lens and detector, which blocks IR light. In contrast, IR cameras lack this filter, allowing constant IR illumination, even during daylight, when paired with appropriate IR LEDs.

Contrary to common belief, high frame rates are unnecessary and can even be counterproductive. For instance, labeling frames in DeepLabCut becomes burdensome when dealing with 24-hour recordings at 120 frames per second (fps). While DeepLabCut can automatically extract frames that reflect the diversity of behaviors present, manual frame selection is often necessary in longer videos to capture the full behavioral range. In addition, unnecessarily high frame rates add analysis time without improving pose estimation. We recommend recording at 15-30 fps, which captures sufficient detail for fast body movements while keeping the number of frames manageable for labeling and analysis. Importantly, it is preferable to choose a frame rate optimized for downstream processing rather than for file size.
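The effect of frame rate on the amount of data to be processed is simple arithmetic, sketched below for a 24-hour recording. The function name is illustrative.

```python
def total_frames(fps, hours=24):
    """Number of frames produced by a continuous recording."""
    return int(fps * hours * 3600)


if __name__ == "__main__":
    for fps in (15, 30, 120):
        print(f"{fps:>3} fps over 24 h: {total_frames(fps):,} frames")
```

Recording at 30 fps instead of 120 fps reduces the number of frames per day from about 10.4 million to about 2.6 million, a fourfold reduction in inference time at a comparable per-frame processing speed.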

Full High Definition (Full HD, 1920 x 1080) or even 4K (3840 x 2160) resolution may be standard in most cameras but is not essential. A resolution of 720p is adequate for setups where the camera is positioned no more than 1 meter from the mouse.

-

3.

Video processing

Video data collected in home-cage environments can be extensive, particularly when recorded over 24-hour periods. Pre-processing of these videos, including cropping and encoding, is a critical step in preparing data for analysis.

Although it is important to preserve data in formats that support sharing and long-term storage, large video datasets pose challenges for file management and efficient storage.

While formats like .mov or .avi offer superior quality, they generate large, unmanageable file sizes for 24-hour recordings. Instead, we recommend recording in .h264 format with a bitrate of 3,000–8,000 kilobits per second (kbps). Adjusting the bitrate allows a balance between file size and image quality: higher kbps for better quality and lower kbps for reduced file size. Under these settings, a 24-hour recording typically produces a file of approximately 100 GB, which takes around 20 minutes to transfer to an external hard drive at a mean speed of 130 MB/s or to stream to a server.
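File size and transfer time follow directly from the bitrate. The sketch below assumes that kbps denotes kilobits per second, that the bitrate is constant, and that the transfer speed is given in megabytes per second; container overhead and audio are ignored, and the function names are illustrative.

```python
def file_size_gb(bitrate_kbps, hours=24.0):
    """Approximate file size in gigabytes for a constant video bitrate."""
    bits = bitrate_kbps * 1000 * hours * 3600
    return bits / 8 / 1e9  # bits -> bytes -> gigabytes


def transfer_minutes(size_gb, speed_mb_per_s):
    """Approximate transfer time in minutes at a sustained speed in MB/s."""
    return size_gb * 1000 / speed_mb_per_s / 60


if __name__ == "__main__":
    for kbps in (3000, 8000):
        print(f"{kbps} kbps over 24 h: {file_size_gb(kbps):.1f} GB")
    print(f"100 GB at 130 MB/s: {transfer_minutes(100, 130):.1f} min")
```

With these assumptions, 3,000 to 8,000 kbps corresponds to roughly 32 to 86 GB per day, the same order of magnitude as the file size quoted above, and a 100 GB file needs about 13 minutes of pure transfer time at 130 MB/s before any overhead.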

For further file size reduction, .h264 files can be re-encoded using freely available or commercial tools (e.g. HandBrake or Adobe Media Encoder). Depending on the chosen settings, this additional step can compress a 24-hour recording to as little as 4 GB. This approach is recommended for addressing storage constraints and does not result in a perceivable loss of quality for downstream analysis using tools such as DeepLabCut.
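Re-encoding can also be scripted. The following sketch only builds an FFmpeg command line as a Python list; it assumes FFmpeg with the libx264 encoder is installed, that the audio track is not needed (`-an`), and the CRF value and preset are placeholder choices that should be tuned and checked against tracking quality on a short test clip. File names are hypothetical.

```python
import shlex


def reencode_command(src, dst, crf=28, preset="slow"):
    """Build an FFmpeg command that re-encodes a video with libx264.

    A higher CRF gives smaller files at lower quality; the values used
    here are illustrative assumptions, not recommended settings.
    """
    return [
        "ffmpeg",
        "-i", src,            # input file
        "-c:v", "libx264",    # H.264 software encoder
        "-crf", str(crf),     # constant-quality factor
        "-preset", preset,    # slower presets compress more efficiently
        "-an",                # drop audio (assumed not needed)
        dst,
    ]


if __name__ == "__main__":
    cmd = reencode_command("cohort01_raw.h264", "cohort01_small.mp4")
    print(shlex.join(cmd))
    # To execute: subprocess.run(cmd, check=True)
```

Building the command as a list keeps file names with unusual characters safe when the command is later passed to `subprocess.run`.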

Following a consistent naming schema helps prevent data loss and facilitates efficient sorting and batch processing. When possible, names should describe the experiment, cohort, and experimenter, and include a standardized date in YYYYMMDD format. These names should use underscores or hyphens rather than spaces and avoid special characters, as required by many Python-based analysis packages. Version control through version numbers is recommended for preprocessed videos and altered scripts. Especially for larger datasets, it is helpful to use a batch processing script or tool to organize files and correctly rename versions throughout each step. This decreases the chance of file corruption and of overwriting previous versions.
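A naming schema of this kind can be enforced with a small helper. The field order, the `cohortNN` and `vNN` patterns, and the default extension in this sketch are illustrative assumptions; any consistent convention works as long as it is applied to every file.

```python
import re
from datetime import date

# Only letters, digits, underscores, and hyphens are allowed in the stem.
SAFE_STEM = re.compile(r"^[A-Za-z0-9_-]+$")


def build_name(experiment, cohort, experimenter, day, version, ext="mp4"):
    """Assemble a file name such as homecage_cohort02_AB_20240307_v01.mp4."""
    stem = "_".join([
        experiment,
        f"cohort{cohort:02d}",
        experimenter,
        day.strftime("%Y%m%d"),   # standardized date, YYYYMMDD
        f"v{version:02d}",        # version number of the processing step
    ])
    if not SAFE_STEM.match(stem):
        raise ValueError(f"unsafe characters in file name: {stem!r}")
    return f"{stem}.{ext}"


if __name__ == "__main__":
    print(build_name("homecage", 2, "AB", date(2024, 3, 7), 1))
```

Rejecting names with spaces or special characters at creation time is cheaper than debugging a failed batch job later.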

To ensure accurate and efficient behavioral tracking and analysis, and to simplify file management, videos should be trimmed so that they include only the timepoint(s) of interest. For example, they can be trimmed to the exact starting point of the experimental testing, or split to separate conditions within a single experiment. Additionally, longer videos can be segmented into smaller sections to ease file transfer, to analyze specific time windows, or to enable parallel processing.

Cropping the videos to one cage per video is recommended to prevent identity assignment errors and to maintain the consistency required by body part labeling schemes for pose estimation. Depending on the video length, trimming and cropping videos with tools such as DaVinci Resolve, SimBA, or FFmpeg is feasible, with various options for automation available. Once the data has been prepared, it can be reliably used for accurate tracking and downstream behavioral analysis.
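Trimming, cropping, and segmenting can be automated with FFmpeg. The sketch below only constructs the command lines; the crop rectangle, time stamps, segment length, and file names are hypothetical and must be adapted to the actual recording. Note that segmenting with stream copy (`-c copy`) avoids re-encoding but can only cut at keyframes, so segment boundaries are approximate.

```python
def trim_and_crop_command(src, dst, start, duration, crop):
    """FFmpeg command that trims a video and crops it to one cage.

    crop is (width, height, x, y) in pixels, with x and y giving the
    top-left corner of the region to keep.
    """
    w, h, x, y = crop
    return [
        "ffmpeg",
        "-ss", start,                     # start time, e.g. "00:05:00"
        "-i", src,
        "-t", duration,                   # length to keep, e.g. "12:00:00"
        "-vf", f"crop={w}:{h}:{x}:{y}",   # keep only the selected cage
        "-c:v", "libx264",
        "-an",                            # drop audio (assumed not needed)
        dst,
    ]


def segment_command(src, pattern, seconds=3600):
    """FFmpeg command that splits a video into fixed-length chunks."""
    return [
        "ffmpeg",
        "-i", src,
        "-c", "copy",                     # no re-encoding
        "-f", "segment",
        "-segment_time", str(seconds),
        "-reset_timestamps", "1",         # each chunk starts at time zero
        pattern,                          # e.g. "cage1_%03d.mp4"
    ]


if __name__ == "__main__":
    print(" ".join(trim_and_crop_command(
        "raw.mp4", "cage1.mp4", "00:05:00", "12:00:00", (640, 640, 0, 40))))
    print(" ".join(segment_command("cage1.mp4", "cage1_%03d.mp4")))
```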

-

4.

Mouse tracking

Once the raw video data is processed, the next step is to track the animals and to interpret their behavior. This can be achieved using various methods, including contrast-based tracking algorithms, Radio Frequency Identification (RFID) tagging systems, marker-based pose estimation, and most recently markerless pose estimation. The extracted animal poses can then be combined with Python-based analytical packages (see Table 1 for examples), which, depending on the software package used, leverage supervised and unsupervised learning methods to extract meaningful behavioral patterns. These tools enable researchers to identify stereotypical behaviors, assess social interactions, and even perform long-term phenotyping over days or weeks. Traditional contrast-based tracking software (see Table 1) provides an efficient means to gain relevant information, particularly for single-animal tracking and basic behavioral metrics such as distance travelled, speed, and zone entries (Lim et al., 2023).

Table 1 lists software packages with contrast-based tracking algorithms, useful for gaining an initial understanding of the activity and behavioral parameters exhibited by experimental animals in the tested setup. These contrast-based methods are particularly valuable for collecting accessible data that provide a general overview of animal behaviors. They are especially beneficial for long-term phenotyping, where deep-learning tools may generate excessive, fine-grained data, making them less suitable for extended tracking over hours or days.

Contrast-based video tracking software is widely used for behavioral research in rodents and other animals. Software packages such as ANY-maze, EthoVision, VideoTrack, Smart, HomeCageScan, or Cineplex rely on motion detection in video recordings captured by overhead or side-mounted cameras, tracking animal movement and position based on contrast differences between the animal and the background. Usually, the center of mass of each animal is tracked, and advanced algorithms are used to analyze shape, structure, and movement across frames, enabling the tracking of positions within defined zones. Some software packages, like EthoVision, HomeCageScan, or VideoTrack, can track multiple subjects simultaneously and offer advanced features like body part recognition, movement pattern analysis, and customizable zones. All systems are designed to monitor behavioral assays in a variety of environments and can calculate parameters such as distance traveled, speed, and time spent in specific areas. While they are versatile and user-friendly, these systems rely on visual contrast, which can limit their accuracy in environments with low lighting or when animals have complex color patterns. Additionally, they face challenges in tracking fine motor movements or distinguishing specific body parts compared to machine learning-based tools like DeepLabCut, SLEAP, SimBA, MARS, and others (see Table 1).
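The principle behind contrast-based center-of-mass tracking can be illustrated in a few lines. This is a deliberately simplified sketch, not the algorithm of any of the packages named above: it assumes a grayscale frame stored as a list of rows, a dark animal on a bright background, and a fixed, hand-chosen threshold.

```python
def centroid_of_dark_pixels(frame, threshold):
    """Return the (x, y) center of mass of all pixels darker than the
    threshold, or None if no pixel qualifies."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value < threshold:   # pixel is assumed to belong to the animal
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None                 # no contrast: the animal cannot be found
    return (sum_x / count, sum_y / count)


if __name__ == "__main__":
    bright, dark = 200, 20
    frame = [
        [bright, bright, bright, bright],
        [bright, dark,   dark,   bright],
        [bright, dark,   dark,   bright],
        [bright, bright, bright, bright],
    ]
    print(centroid_of_dark_pixels(frame, threshold=100))
```

The `None` case makes the main limitation concrete: when the animal and the background have similar intensity, no pixels pass the threshold and the position is lost.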

Contrast-based software packages, like ANY-maze or EthoVision, offer significant advantages in behavioral research, including ease of use, minimal training requirements, and the ability to analyze large-scale, standardized experiments. They are compatible with various experimental paradigms, such as open-field tests, elevated plus mazes, and water mazes. However, their reliance on clear contrast between the animal and its environment can cause challenges in poorly lit settings or with animals that have similar coloration to their background. Those programs also suffer from the potential distortion caused by variable camera angles, which can affect data accuracy despite manual correction tools. In comparison to machine learning tools, contrast-based tracking lacks the precision for fine movements of specific body parts with pixel-level accuracy. Deep-learning tools excel at pose estimation and unsupervised behavior classification, allowing for detailed and unbiased analysis of complex behaviors. However, these tools require more computational resources, expertise, and time to set up and train, making them more suitable for specialized, high-resolution behavioral studies rather than large-scale, general tracking. For researchers seeking a balance between ease of use and detailed analysis, contrast-based software remains a strong commercial option, while machine learning tools provide deeper analytical capabilities for more intricate studies.

Markerless pose estimation is a crucial tool for noninvasive and accurate tracking of animal movements for downstream behavioral interpretation, enabling detailed behavioral analysis even in dynamic environments with body part occlusions or fine anatomical features (Mathis et al., 2018). Open-source software packages (see Table 1) provide researchers with accessible deep-learning-based solutions for predicting body part coordinates in experimental footage, even for researchers with minimal programming experience. These tools support both single- and multi-animal tracking and require users to manually annotate keypoints on video frames, a labor-intensive but necessary step for training accurate models.

DeepLabCut (DLC) employs predefined neural network architectures (e.g., ResNet-based models) and, for multi-animal projects, follows a bottom-up approach, where keypoints are detected first and then assigned to individuals. In contrast, SLEAP allows for customizable neural network architectures and supports both top-down approaches (individuals are detected first, then their keypoints) and bottom-up approaches, offering greater flexibility (Pereira et al., 2022). While both tools feature graphical user interfaces (GUIs), DLC typically requires more manual parameter adjustments to optimize accuracy and robustness, whereas SLEAP prioritizes user-friendliness, flexible model selection, and efficient training workflows.

Both tools are well-supported by extensive documentation, tutorials, and community-driven forums, aiding researchers in troubleshooting and model refinement. The choice between DLC, SLEAP, or other packages, depends on factors such as the complexity of the tracking task, the number of animals, and the user's programming proficiency. Regardless of the tool chosen, the development of a well-trained model is essential, as errors in pose estimation can propagate and negatively impact subsequent behavioral analyses.

SLEAP.ai was developed as an improvement over LEAP to handle multiple animals in a video. Its user-friendly graphical interface makes it accessible even for those with no prior experience in pose estimation or coding.

Similar to DeepLabCut, SLEAP.ai uses a machine learning model to identify and predict the coordinates of specific body parts (keypoints) in experimental videos. This involves manually annotating video frames with selected key points and animal identities—a labor-intensive but critical step. A well-trained model is essential for accurate behavior analysis, as errors during model development can negatively impact subsequent results.

SLEAP.ai offers tutorials on its official website and GitHub for troubleshooting. In addition, we include a few critical points that may help when setting up a tracking project.

- 1) Model stability is highly influenced by the number of annotated frames. We tested models trained with 50, 150, and up to 600 frames and found no improvement in performance beyond 500 annotated frames. Based on our experience, annotating frames from multiple videos, ideally all experimental videos, helps prevent overfitting, where a model excels on annotated videos but performs poorly on others.

- 2) Using SLEAP’s default UNet backbone, promising results were achieved with as few as 50 frames. We used the multi-animal-top-down training/inference pipeline, which worked well for tracking multiple animals. However, multi-animal-top-down-id, designed to better distinguish animal identities, struggled with visually similar animals (e.g., C57BL/6J mice), and tail markings alone were insufficient to resolve this.

- 3) Proofreading, i.e. correcting errors in animal identity assignments, is essential, as algorithms often fail in challenging scenarios, such as animals moving under or over each other. SLEAP includes tools for manual correction, which are recommended for shorter videos but can be time-consuming for long ones. Integrating RFID technology could address identity mismatches, especially for multi-day recordings.

- 4) If sufficient local GPU support (e.g. NVIDIA Tesla A100 40GB or similar) is not available, SLEAP can be run on externally hosted GPUs, for example via Google Colab.

For researchers using SLEAP.ai for mouse experiments, the configurations of the training/inference workflow, detailed in Table 2, Table 3 and Table 4, can serve as a solid starting point.

Well-established method protocols on how to use the DLC toolbox as well as GitHub tutorials exist to guide users through the command line and GUI pipeline (Nath et al., 2019). There is no one-size-fits-all set of parameters that is generalizable for all users, but we include below a non-exhaustive list of possible errors that may arise during model training and evaluation.

- 1) Installing DLC and correctly enabling the GPU with CUDA, cuDNN, and TensorFlow can be difficult. DLC can also run on CPUs, though more slowly, and Google Colab can serve as an alternative.

- 2) The training dataset is different for each user, but as a general guideline a more varied dataset covering multiple backgrounds, individuals, postures, and separate videos is preferable, and unclear frames should be skipped rather than labeled. While automatic extraction is efficient for shorter videos, manual extraction ensures that all behaviors are captured in longer videos.

- 3) Reflections and body part occlusions can interfere with tracking accuracy. Removing reflective surfaces and adjusting lighting when possible is recommended. When body part occlusions are unavoidable, increasing the number of training frames with more diverse postures and occlusion scenarios is key. DLC filtering and interpolation can aid in managing missing data.

- 4) Missing data or jumping labels at the visualization step of model evaluation are to be expected. Further processing to filter the data and interpolate detections considered unreliable can improve the accuracy of the resulting tracks.

- 5) With exceptionally large datasets and longer videos, the significant increase in computational demands can be met with parallel processing via CPUs/GPUs in a computing cluster. To work around the lack of GUI access on a cluster, it is possible to create identical parallel projects, label locally, and upload the labeled frames to the twin project for model training and analysis.

- 6) Some videos may fail to analyze at certain points or not start at all, due to metadata corruption when managing files. Re-encoding the videos using FFmpeg can resolve issues with analysis stopping just before completion. This is especially important for longer videos, which should be segmented beforehand.

By following these steps, researchers can improve the robustness of their DeepLabCut models and ensure accurate pose estimation for downstream behavioral interpretations.
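The idea of filtering and interpolating unreliable detections, mentioned in the list above, can be sketched as follows. This is an illustrative stand-in rather than DLC's built-in filtering: it treats every frame whose likelihood falls below a cutoff as missing and fills it by linear interpolation between the nearest confident frames. The cutoff of 0.6 and the function name are assumptions.

```python
from bisect import bisect_left


def interpolate_low_confidence(coords, likelihoods, p_cutoff=0.6):
    """Replace coordinates with likelihood < p_cutoff by linear
    interpolation between the neighboring confident frames.

    coords and likelihoods are per-frame sequences for one axis of
    one body part. Gaps at the start or end take the nearest
    confident value.
    """
    good = [i for i, p in enumerate(likelihoods) if p >= p_cutoff]
    if not good:
        raise ValueError("no confident detections to interpolate from")
    cleaned = []
    for i, value in enumerate(coords):
        pos = bisect_left(good, i)
        if pos < len(good) and good[pos] == i:
            cleaned.append(float(value))               # confident frame
        elif pos == 0:
            cleaned.append(float(coords[good[0]]))     # before first good frame
        elif pos == len(good):
            cleaned.append(float(coords[good[-1]]))    # after last good frame
        else:
            left, right = good[pos - 1], good[pos]
            frac = (i - left) / (right - left)
            cleaned.append(coords[left] + frac * (coords[right] - coords[left]))
    return cleaned


if __name__ == "__main__":
    x = [0, 100, 2, 3]            # frame 1 is a jumping label
    p = [0.9, 0.1, 0.9, 0.9]
    print(interpolate_low_confidence(x, p))
```

Long runs of interpolated frames should be treated with caution in downstream analysis, because interpolation hides rather than recovers the missing information.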

Table 5.

Suggested specific settings for single- and multi-animal DeepLabCut downstream analyses with DeepOF of short (minutes) and long (multiple hours) videos.


| Setting | Recommendation |
| --- | --- |
| DLC config file (config.yaml) | Labeling scheme should list the 11 DeepOF keypoints |
| Training dataset recommendations, short videos (e.g. open field) | 10 frames of each unique behavior, 100-200 training frames labeled |
| Training dataset recommendations, longer videos (e.g. home-cage behavior over multiple hours) | More frames are recommended for longer videos with varied behavior; the number depends on the recording setup and requires optimization (e.g. 500+ for 24-hour recordings) |
| DLC model training pose configuration file (pose_cfg.yaml) | Edits to the pose_cfg file that still result in well-performing models for longer videos: decay_steps: 30000; display_iters: 1000; multi_step: [0.0001, 750], [5.0e-05, 1200], [1.0e-05, 20000] |
| DLC model training pose configuration file (pose_cfg.yaml), 2-animal setup | Edits to the pose_cfg file for well-performing 2-animal 12 h videos: decay_steps: 10000; display_iters: 500; multi_step: [0.0001, 1500-3750], [5.0e-05, 2400/6000], [1.0e-05, 40000-10000]; save_iters: 10000 |
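To make the `multi_step` entries in Table 5 easier to read, the sketch below interprets each pair as a learning rate and the last training iteration at which it applies, with training ending at the final boundary. This reading is our assumption about how the schedule is consumed and should be checked against the DLC documentation for the installed version; the values are those listed for single-animal long videos.

```python
# (learning rate, last iteration at which it applies), from Table 5
MULTI_STEP_LONG_VIDEOS = [
    (1.0e-4, 750),
    (5.0e-5, 1200),
    (1.0e-5, 20000),
]


def learning_rate_at(iteration, multi_step):
    """Return the learning rate used at a given training iteration,
    or None once the last boundary has been passed."""
    for learning_rate, last_iteration in multi_step:
        if iteration <= last_iteration:
            return learning_rate
    return None  # training is assumed to stop after the final step


if __name__ == "__main__":
    for it in (500, 1000, 5000, 20001):
        print(it, learning_rate_at(it, MULTI_STEP_LONG_VIDEOS))
```

Under this interpretation the schedule lowers the learning rate in two steps and fixes the total training length at 20,000 iterations.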

Multi-animal tracking with DLC, SLEAP, and other packages has revolutionized social behavior research by enabling the simultaneous tracking of multiple animals. This capability addresses a significant challenge in computer vision: the frequent interactions and occlusions that occur during recordings. The tracking of two animals with varying degrees of similarity has been extensively studied, offering solutions for stable tracking over extended periods. However, ensuring network stability is critical, as tracking labels are prone to intermingling between animals. This complexity is compounded by the management of multiple identities over the course of time.

Identity management is the defining challenge of multi-animal tracking. When animals interact, tracking points can intermingle, leading to identity switches, especially during prolonged recordings when the animals are similar in appearance. For example, studies involving two animals with moderate visual similarity have achieved stable tracking over extended periods (e.g. 12h), but only with careful adjustments to ensure network stability and robust identity discrimination.

-

1.

Salient Physical Markings

To facilitate identity differentiation, animals should appear distinct in selected frames. Tail markings have proven particularly effective, providing a reliable distinguishing feature without interfering with natural behavior. For instance, three stripes with non-toxic black marker at the base of a mouse’s tail offer consistent visibility across diverse movements and occlusions during nocturnal activity.

-

2.

Supervised identity tracking

Enabling the “identity:true” option in the config.yaml file allows DLC to train an identity head, leveraging supervised learning to enhance identity preservation (Lauer et al., 2022). This approach is particularly useful when animals are visually similar or frequently occlude one another.

-

3.

Refining Tracking Data

Post-training refinement is essential for correcting mislabeled identities. Using the GUI to extract and review outlier frames helps address unusual assemblies or skeletons missed during initial labelling. This step ensures stable tracking across experimental conditions within the same setup.

-

4.

Segmenting videos

Dividing long videos into smaller chunks reduces computational strain and minimizes errors during analysis. This approach facilitates smoother processing and improves accuracy over the course of the experiment.

Compared to single-animal setups, multi-animal tracking requires more sophisticated methods for data management and analysis. Tracklet-based approaches propagate short-term identities using lightweight predictors (e.g. ellipse trackers) to link detections across adjacent frames (Lauer et al., 2022). Global optimization refines these tracklets through graph-based network flow techniques, incorporating metrics such as shape similarity, spatial proximity, motion affinity, and dynamic similarity.

Identity preservation further relies on unsupervised re-identification using learned appearance features and motion history matching. Additionally, data-driven assembly predicts conditional random fields for keypoint locations, limb connectivity probabilities, and animal identity scores, while automatically selecting optimal skeletons based on co-occurrence statistics, spatial relationships, and motion correlations.

Despite these advancements, multi-animal tracking systems remain prone to errors in tracklet stitching or individual identity mismatches due to the inherent complexity of managing multiple subjects.

The differences between single- and multi-animal tracking are most evident in performance metrics:

-

1.

Processing Speed

Single-animal tracking achieves >1000 FPS on consumer GPUs due to streamlined computations for one subject. In contrast, two-animal multi-tracking operates at approximately 800 FPS due to added overhead from identity association algorithms, occlusion handling routines, and parallel skeleton estimation.

-

2.

Keypoint accuracy

While high overall, multi-animal setups show slightly reduced precision compared to single-animal tracking:

| Metric | Single-Animal | Two-Animal Multi |
| --- | --- | --- |
| Head region precision | 98.2% | 97.5% |
| Distal limb accuracy | 95.1% | 92.8% |
| Inter-animal error | N/A | <3.2 px |

To address identity mismatches, one should (1) use salient physical markers (e.g. tail marks) for clear differentiation, (2) enable supervised identity tracking (“identity:true”) during training, (3) refine mislabeled data and extract outlier frames for correction, and (4) segment videos into smaller chunks to reduce computational strain.

Tracking aggressive behaviors presents unique challenges, due to fast-paced movements that often result in inconsistent labels, which are unsuited for further analyses with the proposed tools. In addition, even when animals are visually distinct – such as different species – identity assignments must be diligently managed, as DLC may mislabel individuals during early training stages. Overall, the process adds an additional layer of due diligence to the guidelines that are followed for single-animal tracking during the training procedure.

-

5.

Post-tracking behavioral analysis

A multitude of post-tracking behavioral interpretation packages (such as DeepOF, SimBA, BORIS, B-SOID, and others) are available to analyze tracking data, each offering unique tools for behavior classification, visualization, or statistical analysis. These tools can be readily applied to tracking coordinate tables generated by pose estimation software like DeepLabCut, SLEAP, or similar platforms, as summarized in Table 1. Here, we focus on DeepOF, a package developed by our lab that combines a multitude of key features, including both supervised and unsupervised analysis capabilities.

DeepOF is an open-source Python library for behavioral analysis and visualization (Bordes et al., 2023). It is intended as a secondary step after tracking the individual body parts of each mouse; accordingly, DeepOF accepts the output of, e.g., DeepLabCut or SLEAP as input. Using tabular tracking data and videos, DeepOF offers a supervised and an unsupervised pipeline for in-depth behavioral analysis and for visualization of behavioral trends, changes, and statistics.

DeepOF offers a total of 15 predefined behaviors that can be detected automatically. Nine of these apply to single mice: “climb_arena”, “sniff_arena”, “immobility”, “stationary_lookaround”, “stationary_passive”, “stationary_active”, “moving”, “sniffing” and “missing”. Six describe mouse-mouse interactions and can therefore only be detected if more than one mouse is present: “nose2nose”, “nose2tail”, “nose2body”, “sidebyside”, “sidereside” and “following”. All of these predefined behaviors are detected by non-learning-based algorithms, except for “immobility”, which is determined by a gradient boosting classifier. Furthermore, “stationary_active”, “stationary_passive” and “moving” are mutually exclusive, meaning that a mouse is in only one of these three states at any given time. While the behavior names are mostly self-explanatory, their exact definitions and detection criteria can be found in the DeepOF documentation (Miranda et al., 2023). After supervised classification of behaviors, DeepOF offers a wide range of functions to plot and compare behavioral trends between mice and groups, including basic statistics.

Besides detecting predefined behaviors, DeepOF also offers a pipeline for unsupervised clustering and evaluation of the tracked input data. Three clustering methods are implemented: a Vector Quantized Variational Autoencoder (VQ-VAE) model (van den Oord et al., 2017), a Variational Deep Embedding (VaDE) model (Jiang et al., 2017), and a model based on self-supervised contrastive embedding. Although these methods are based on the cited sources, they can be customized substantially by adjusting various inputs. Because the models group similar behaviors autonomously in unsupervised analysis, it is often not immediately clear how similarity is defined. Consequently, further processing steps are required to interpret the clustering results. The entire pipeline, from input data via clustering to evaluation and interpretation, is described in the DeepOF user guide, which includes tutorials (Miranda et al., 2023).

Since DeepOF is a Python package, all general difficulties that may arise when working with Python (such as installing Python, installing a code editor, and setting up a virtual environment) also apply to DeepOF and are beyond the scope of this Review. Presuming that the Python environment has been set up correctly, the following should be considered during data analysis using DeepOF:

After importing the necessary packages, the first step when working with DeepOF is to define a project. It is crucial to provide the correct video scale in mm with the “scale” input argument. Depending on your arena type, this is either the diameter of the arena (when circular) or the length of the first line you are going to draw when marking a polygonal arena during project creation. An incorrect scale will affect downstream behavior analysis because, e.g., distances between mice will be over- or underestimated.
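The effect of an incorrect scale can be illustrated with a minimal sketch. The helpers `mm_per_pixel` and `distance_mm`, as well as all numbers, are hypothetical and are not part of DeepOF; the sketch only assumes that a reference length (here, an arena diameter) is known both in mm and in pixels.

```python
import math


def mm_per_pixel(reference_mm: float, reference_px: float) -> float:
    """Conversion factor from a reference length known in mm and in pixels."""
    if reference_px <= 0:
        raise ValueError("reference_px must be positive")
    return reference_mm / reference_px


def distance_mm(p1, p2, factor: float) -> float:
    """Euclidean distance between two (x, y) pixel coordinates, in mm."""
    return math.dist(p1, p2) * factor


# Hypothetical example: the arena diameter spans 760 px in the video.
nose_a, nose_b = (100.0, 100.0), (130.0, 140.0)  # 50 px apart

correct = distance_mm(nose_a, nose_b, mm_per_pixel(380.0, 760.0))
wrong = distance_mm(nose_a, nose_b, mm_per_pixel(760.0, 760.0))  # scale entered twice too large

print(f"correct scale: {correct:.1f} mm")
print(f"wrong scale:   {wrong:.1f} mm")
```

In this toy case, doubling the scale doubles every distance, so any fixed distance threshold applied downstream would be met half as often.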

After project definition, run the “create” function on this project, which will calculate angles, distances, and more for the tracked data. If this function fails, this is often due to various table formatting problems, such as the names of your mouse body parts deviating from the standard names given in the DeepOF graphs. By default, only small gaps of NaNs in your data will be closed with linear interpolation. It is possible to fully impute the data with a more complex algorithm, but often not advisable, as the quality of the imputation drops with the size of the gaps.
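To illustrate why only small gaps are closed by default, the following sketch fills short runs of missing values by linear interpolation and leaves longer runs untouched. It is a simplified stand-in, not DeepOF's implementation; the function name `interpolate_short_gaps` and the `max_gap` parameter are assumptions made for this example.

```python
import math


def interpolate_short_gaps(values, max_gap=2):
    """Linearly fill NaN runs of length <= max_gap that have valid neighbors on both sides."""
    filled = list(values)
    n = len(filled)
    i = 0
    while i < n:
        if not math.isnan(filled[i]):
            i += 1
            continue
        start = i
        while i < n and math.isnan(filled[i]):
            i += 1
        end = i  # index of the first valid value after the gap (or n)
        gap_len = end - start
        if start == 0 or end == n or gap_len > max_gap:
            continue  # leave gaps at the edges and long gaps untouched
        left, right = filled[start - 1], filled[end]
        step = (right - left) / (gap_len + 1)
        for k in range(gap_len):
            filled[start + k] = left + step * (k + 1)
    return filled


nan = float("nan")
x_coords = [10.0, nan, 14.0, nan, nan, nan, 22.0]
result = interpolate_short_gaps(x_coords, max_gap=2)
print(result)
```

The single missing frame is replaced by the midpoint of its neighbors, whereas the three-frame gap stays missing, because a straight line becomes an increasingly poor guess for the animal's true path as the gap grows.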

Supervised behavior analysis is done by running the “supervised_annotation” function. For body part graphs containing fewer than the recommended 11 body parts, not all supervised behaviors will be detected. Different supervised parameters can be adjusted to modify detections. For example, “close_contact_tol” determines how close mouse body parts need to be to trigger different interactions.
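The role of a contact tolerance can be sketched with a simple distance threshold. This is a conceptual illustration only and does not reproduce DeepOF's detection rules; the function `contact_frames`, the `tol_mm` parameter, and the coordinates are hypothetical.

```python
import math


def contact_frames(track_a, track_b, tol_mm):
    """Flag the frames in which two body parts are closer than tol_mm."""
    return [math.dist(a, b) < tol_mm for a, b in zip(track_a, track_b)]


# Hypothetical nose positions (in mm) of two mice over four frames.
nose_mouse_1 = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (30.0, 0.0)]
nose_mouse_2 = [(60.0, 0.0), (40.0, 0.0), (32.0, 0.0), (35.0, 0.0)]

strict = contact_frames(nose_mouse_1, nose_mouse_2, tol_mm=10.0)
lenient = contact_frames(nose_mouse_1, nose_mouse_2, tol_mm=25.0)

print("strict tolerance: ", strict)
print("lenient tolerance:", lenient)
```

A larger tolerance labels more frames as contact, so any change to such a parameter should be reported and applied identically to all experimental groups.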

To prepare for unsupervised behavior analysis, a combined dataset for model training first needs to be constructed, which can be done with the “get_coords” or “get_graph_dataset” functions. These functions create one large, combined dataset from all the tracking data of the project. As the unsupervised deep learning models are currently not optimized for RAM usage, it is recommended for large projects to use only samples of the data by setting the “bin_index” and “bin_size” input options during dataset construction.

An unsupervised model is trained with the “deep_unsupervised_embedding” function, which is correspondingly time-intensive. In most cases, the “pretrained” input option should be set to “false”, as DeepOF only offers a pretrained model for the specific case of two mice with DeepOF_11 labeling. If the model achieves a separation between experimental groups but the clusters themselves are unsatisfying, it is often advisable to use the “recluster” function instead of training a new model.

As behaviors are detected automatically, it is always advisable to verify the accuracy of the detections. Rather than only using the Gantt plot feature and manually comparing behavior time stamps with your videos, a better approach is to use the “export_annotated_video” function. This function allows you either to create a video with a specific behavior annotated (i.e., marked in every frame in which it occurs) or to export a supercut of all frames, across all of your videos, in which a behavior occurs.

These best practices for DeepOF only provide a brief overview. For detailed guidance, refer to the comprehensive DeepOF user guide and tutorials (Miranda et al., 2023).

-

6.

Data interpretation and statistical recommendations

When designing statistical procedures for data analysis, two key factors must be considered: (1) the risk of false positives increases with the number of tests performed, and (2) the detectable effect size depends on sample size.

To address multiple testing, correction methods are grouped into four categories: post-hoc tests, family-wise error rate (FWER) corrections, false discovery rate (FDR) corrections, and resampling procedures (Streiner, 2015). The choice of correction depends on the number of tests and the study’s complexity. In simple setups with few planned comparisons, corrections may be unnecessary. For more complex designs involving multiple groups or behaviors, corrections become essential.

Post-hoc tests or FWER controls, such as Bonferroni or Šidák-Bonferroni corrections, are suitable for moderate test counts where minimizing false positives is critical (Armstrong, 2014). However, these conservative methods increase the risk of Type II errors as the number of tests grows. For large datasets, such as continuous recordings spanning hours or days, FDR corrections like Benjamini-Hochberg or Benjamini-Yekutieli offer a balance, accepting a small share of false positives to maintain statistical power. These are particularly effective in exploratory analyses with numerous tests.
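The difference between FWER and FDR control can be made concrete with a short sketch that computes adjusted p-values for the Bonferroni, Šidák, and Benjamini-Hochberg procedures. The p-values are invented for illustration, and established libraries should be preferred in practice.

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: p * m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def sidak(p_values):
    """Sidak-adjusted p-values: 1 - (1 - p)^m."""
    m = len(p_values)
    return [1.0 - (1.0 - p) ** m for p in p_values]


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted p-values (step-up FDR procedure)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest to the smallest p-value to enforce monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical raw p-values from five behavioral comparisons.
p_vals = [0.001, 0.008, 0.02, 0.03, 0.27]
alpha = 0.05

for name, method in [("Bonferroni", bonferroni),
                     ("Sidak", sidak),
                     ("Benjamini-Hochberg", benjamini_hochberg)]:
    adj = method(p_vals)
    n_sig = sum(p < alpha for p in adj)
    print(f"{name}: {n_sig} of {len(p_vals)} tests significant")
```

With these example values, the two FWER procedures retain two significant tests, whereas Benjamini-Hochberg retains four, reflecting the trade-off between strict false-positive control and statistical power described above.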

Combining approaches can also be effective. For example, repeated measures ANOVA accounts for dependencies across time points, identifying significant main effects or interactions (e.g., group × time). Post-hoc tests with FDR corrections can then refine analyses at individual time points, ensuring robust error control while leveraging ANOVA’s strengths.

Sample size is another critical consideration. Small samples detect only large effects, while large samples may identify statistically significant but practically negligible differences. Reporting effect sizes (e.g., Cohen’s ds or f) alongside p-values provides context, highlighting the practical relevance of findings.
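As a brief illustration of effect size reporting, the sketch below computes Cohen's d for two independent groups using the pooled standard deviation. The group values are hypothetical, and other variants of d (e.g., for paired designs) would require a different denominator.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd


# Hypothetical time spent in a behavior (s) for two groups of five animals.
control = [10.0, 12.0, 14.0, 16.0, 18.0]
treated = [13.0, 15.0, 17.0, 19.0, 21.0]

d = cohens_d(treated, control)
print(f"Cohen's d = {d:.2f}")
```

Reporting d alongside the p-value makes clear how large the group difference is relative to the variability within groups, independent of sample size.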

Finally, Bayesian statistics can complement frequentist methods by incorporating prior knowledge and estimating the probability of hypotheses. This approach enhances interpretative depth, offering a more comprehensive and robust assessment of results.

Although we do not endorse any specific software, we present a non-exhaustive list of popular packages and indicate their current capabilities for calculating z-scores, p-values, FDR, and effect sizes, as recommended above.

Table 6.

Feature comparison of statistical software packages (current versions as of 2025).

| Program | Z-Score Calculation | Benjamini-Hochberg/Yekutieli FDR | P-Value Calculation | Cohen’s d Effect Size |
| --- | --- | --- | --- | --- |
| SPSS (IBM SPSS Statistics) | Yes | Yes | Yes | Partially (custom syntax/extensions) |
| R (with Packages) | Yes | Yes | Yes | Yes (e.g., effsize, lsr) |
| Python (with Libraries) | Yes | Yes | Yes | Yes (e.g., Pingouin, statsmodels) |
| JASP | Yes | No | Yes | Yes |
| JMP | Yes | Yes | Yes | Yes |
| Jamovi | Yes | No | Yes | Yes |
| G*Power | No | No | No | Yes |
| GraphPad Prism | Yes | Yes | Yes | No |
| Excel (with Add-Ons) | Yes | Yes (with add-ons) | Yes | Yes (with add-ons) |
| Stata | Yes | Yes | Yes | Yes |
| MATLAB | Yes | Yes | Yes | Yes (via scripts or toolboxes) |
| PSPP | Yes | No | Yes | No |
| MiniTab | Yes | No | Yes | No |
| EZAnalyze (Excel Add-In) | Yes | No | Yes | Yes |

This overview highlights the versatility of each tool for different statistical needs. Some of the programs listed here offer comprehensive solutions, while others may require manual steps or additional extensions for certain analyses. Together, these tools cater to varying levels of expertise and needs, from user-friendly graphical interfaces to advanced coding platforms.

For users without access to specialized software, z-scores can also be manually calculated using straightforward formulas.

Z-scores, also known as standard scores, measure how far a data point deviates from the mean in terms of standard deviations. They are calculated using the formula

$$z = \frac{x - \mu}{\sigma}$$

where x is the individual data point, μ is the mean of the dataset, and σ is the standard deviation (Guilloux et al., 2011). By standardizing raw data into a common scale, z-scores allow researchers to compare measurements across different variables or experimental conditions, regardless of their original units or scales. This is particularly valuable in behavioral research, where diverse data types—such as activity levels, response times, or physiological measures—must often be analyzed together. Z-scores also help identify outliers, as values typically fall within a range of -3 to 3 in a normal distribution. By using z-scores, behavioral researchers can improve the precision of cross-comparisons, enhance the interpretability of results, and gain deeper insights into patterns of variability within and across study groups.

Integrated Z-scoring of supervised behavioral data is a method by which the researcher can create a composite score for a particular behavioral domain. To calculate an integrated Z-score for a behavioral domain, the Z-scores of each relevant behavior are averaged, entering each either as its original value or as its negative, depending on the direction of the experimental effect on that behavior (Kraeuter, 2023). This creates a composite score that retains the original scale and meaning of the data.
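The calculation can be sketched as follows. This is a minimal illustration under stated assumptions: the behavior names, values, and direction signs are hypothetical, and the mean and standard deviation are taken from a reference group (here, the control animals), which is one common choice rather than the only one.

```python
import statistics


def z_scores(values, reference):
    """z = (x - mu) / sigma, with mu and sigma taken from the reference sample."""
    mu = statistics.mean(reference)
    sigma = statistics.stdev(reference)
    return [(x - mu) / sigma for x in values]


def integrated_z(behaviors, directions, reference_idx):
    """Average the signed z-scores across behaviors for each animal.

    behaviors:     dict mapping behavior name -> list of values (one per animal)
    directions:    dict mapping behavior name -> +1 or -1
    reference_idx: indices of the animals that form the reference group
    """
    n_animals = len(next(iter(behaviors.values())))
    totals = [0.0] * n_animals
    for name, values in behaviors.items():
        reference = [values[i] for i in reference_idx]
        for i, z in enumerate(z_scores(values, reference)):
            totals[i] += directions[name] * z
    return [total / len(behaviors) for total in totals]


# Hypothetical data: animals 0-2 are controls, animals 3-5 are stressed.
behaviors = {
    "time_in_center_s": [30.0, 40.0, 50.0, 10.0, 20.0, 30.0],
    "immobility_s": [5.0, 10.0, 15.0, 20.0, 25.0, 30.0],
}
# Less time in the center and more immobility both count towards the domain score.
directions = {"time_in_center_s": -1, "immobility_s": +1}

scores = integrated_z(behaviors, directions, reference_idx=[0, 1, 2])
print([round(s, 2) for s in scores])
```

The direction signs ensure that all behaviors push the composite score the same way, so that a higher value consistently indicates a stronger expression of the behavioral domain.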

While this method is a highly standardizable and interpretable method of dimensionality reduction, it assumes independence and equal weighting of all included behaviors, which may not always reflect the underlying structure of the data. Furthermore, manual selection of behaviors to include in the integrated Z-score can be biased towards prior findings, rather than patterns emerging from the data itself.

Integrated Z-scores are still useful for categorizing predefined behaviors, but exploratory approaches may be better suited for identifying unexpected relationships among behaviors. This is especially relevant for novel behavioral features extracted through unsupervised analysis, where predefined assumptions may not capture the full structure of the dataset. Such analyses are more mathematically rigorous but can easily be conducted using many of the aforementioned programs such as R, GraphPad, and Python.

Principal component analysis (PCA) is a common technique that transforms a group of related variables into a smaller set of uncorrelated variables called principal components (PCs). PCs are ranked by the amount of variance in the dataset they capture; the first few PCs usually summarize the most important patterns in the data. The three main steps of PCA are to (1) calculate the covariance of the input behaviors, (2) identify principal components that best encompass the patterns of covariance, and (3) rank the PCs by how much variance they explain, allowing visualization of broad patterns. Each data point is assigned a score on each of these new components (loosely referred to here as “eigenvalues”), which can be analyzed for group comparisons in the same manner as individual behaviors would be, with common methods such as t-tests and ANOVAs. Users can identify behaviors that significantly contribute to each component and decide whether to prioritize readouts that strongly influence components explaining the highest variance (Budaev, 2010).
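The three steps can be sketched with NumPy as follows. The data are simulated, the function name `pca` is chosen for this example, and standardizing the behaviors before computing the covariance is an assumption that suits variables measured in different units. In the strict sense, the eigenvalues of the covariance matrix give the variance captured by each PC, while the per-animal values are the scores.

```python
import numpy as np


def pca(data):
    """PCA via eigen-decomposition of the covariance matrix of standardized data.

    data: array of shape (n_animals, n_behaviors).
    Returns per-animal scores, loadings (one column per PC),
    and the fraction of variance explained by each PC.
    """
    X = np.asarray(data, dtype=float)
    X = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)  # standardize each behavior
    cov = np.cov(X, rowvar=False)                      # step 1: covariance
    eigvals, eigvecs = np.linalg.eigh(cov)             # step 2: principal axes
    order = np.argsort(eigvals)[::-1]                  # step 3: rank by variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    scores = X @ eigvecs
    return scores, eigvecs, eigvals / eigvals.sum()


# Simulated data: two behaviors share a latent driver, the third is independent.
rng = np.random.default_rng(0)
latent = rng.normal(size=20)
data = np.column_stack([
    latent + 0.1 * rng.normal(size=20),
    latent + 0.1 * rng.normal(size=20),
    rng.normal(size=20),
])

scores, loadings, explained = pca(data)
print("variance explained per PC:", np.round(explained, 2))
```

The first column of `scores` can then be compared between groups like any single behavioral readout, and the corresponding column of `loadings` shows which behaviors contribute most to that component.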

PCA is therefore helpful for simplifying complex datasets in a data-driven way and for identifying behavioral patterns. When analyzing PCA results, researchers typically focus on the PCs that capture the most variance, as these are the most informative for distinguishing patterns in behavior between experimental groups. A limitation, however, is that PCA does not directly identify causation or meaning; it simply finds the directions in the dataset along which variance is highest. This means that the per-animal values on each PC are specific to the dataset: they do not generalize across datasets and cannot be interpreted directly as biological differences.

Another exploratory method more focused on interpretation is factor analysis, in which a statistical model assumes that observed behaviors are being driven by underlying interpretable factors. In the context of behavioral research, factors could include constructs like social behavior, anxiety-like behavior, or exploration. Factor analysis (FA) analyzes covariance in the observed variables to determine unobserved factors and characterizes the extent to which each variable is related to each factor (Budaev, 2010).

FA can therefore be valuable for uncovering behavioral patterns that are more biologically meaningful than those identified by PCA, which only identifies directions of maximal variance. It also filters out noise by removing variables that do not contribute to the meaningful patterns. It is, however, limited in that it assumes the behaviors are fully driven by the underlying factors, which is not always true and could lead to biased outcomes, especially when the number of samples per group is lower than the number of input variables. Another source of bias is the subjective choice of the number of factors to investigate.

Table 7.

Summary of each dimensionality reduction method.

| | Integrated Z-Scores | PCA | Factor Analysis (FA) |
| --- | --- | --- | --- |
| Outcome: | Creates an interpretable behavioral score | Explores the structure of behavioral variation | Identifies factors underlying behavioral variation |
| How interpretable? | High, as scores match original scale | Medium, as PCs are abstract and dataset-specific | High, as meaningful underlying factors are identified |
| Handles Correlations? | No | Yes | Yes |
| Sample size required: | Small | Moderate | Large |
| Use when: | Comparing predefined behavioral measures | Exploring data-driven structure | Understanding underlying constructs (e.g., stress, cognition) |
| Limitations: | Does not account for patterns between behaviors | Outcome values are not generalizable or directly biologically meaningful | Assumes and searches for causal relationship which may not be present |

As a final consideration, we suggest a hybrid of methods to maximize meaningful data exploration and interpretation. Exploratory analyses such as PCA and factor analysis could be used as a first step to reveal behaviors that are highly correlated or that contribute strongly to variance in a dataset, and users could use these outcomes to inform which behaviors to combine into integrated Z-scores. This approach would maintain interpretability by keeping the original values and scale from behavioral analyses, while incorporating relationships uncovered between behaviors and limiting researcher bias.