1. Introduction

Spatial navigation is one of the most fundamental yet intricate challenges faced by biological organisms. This cognitive feat draws on an interwoven set of mental processes, including perception, attention, categorization, memory, problem solving, and even language (Waller & Nadel, 2013). Understanding spatial navigation is important not only for deciphering its mechanisms, but also because it might provide the basis for other cognitive processes (Grieves et al., 2020). It has been the subject of modern scientific inquiry since the early 20th century, starting with the investigation of homing behavior in ants (Turner, 1907) and rat behavior in mazes (Carr & Watson, 1908). In fact, some of the most important discoveries regarding the neural underpinnings of spatial navigation, such as place cells and grid cells, were made in rodents (O’Keefe & Dostrovsky, 1971; Hafting et al., 2005). Although rats appear very different from humans at first glance, they share several fundamental neurological structures with humans (Xu et al., 2022), including the hippocampal formation, a part of the mammalian brain that is essential for navigation, which enables the cross-species interpretation of results (O’Keefe & Nadel, 1978; Squire, 1992; Shrager et al., 2007). Furthermore, rodent brains are very well mapped (Paxinos & Watson, 2006; Feo & Giove, 2019) and are simpler than human brains (Herculano-Houzel, 2009), making it easier to study specific brain functions and changes.

2. Classical Spatial Navigation Tasks

One of the most widely used testing environments for spatial navigation research is the maze. Mazes are versatile and easy to adapt for different species, including rodents and fish (Tolman et al., 1946; Salas et al., 2008). The best-known designs include the sunburst maze, used for the study of cognitive maps (Tolman et al., 1946; Tolman, 1948); the T-Maze (Yerkes, 1912; Deacon & Rawlins, 2006), suited to the study of spatial working memory (d’Isa et al., 2021); the Radial Arm Maze, for memory and problem solving (Olton & Samuelson, 1976); and the Barnes Maze (Barnes, 1979) and Morris Water Maze (Morris, 1984), for allocentric navigation. Mazes offer great environmental control, but they scarcely reflect the natural environments in which animals would normally navigate, and they require extensive maintenance to eliminate hidden variables such as olfactory cues in the case of rodents (Buresova & Bures, 1981).

3. Naturalistic Tasks

In contrast to the well-defined and constrained environment of mazes, naturalistic tasks aim to assess spatial navigation in real-life situations. To this end, several designs have been developed, including homing tasks for animals and more complex wayfinding tasks for human participants (Mandal, 2018; Malinowski & Gillespie, 2001). Homing behavior has been studied across several species, including rodents, bats, birds, turtles, fish, and insects (Bingman et al., 2006; Cagle, 1944; Dittman & Quinn, 1996; Mandal, 2018; Tsoar et al., 2011). These tasks assess the animal’s ability to return to a familiar location after being displaced, providing insights into orientation mechanisms, path integration, and allocentric navigation. Various tracking methods can be used, including GPS, radio telemetry, and video recording (Bingman et al., 2006; Mandal, 2018). Human wayfinding tasks incorporate many of the variables seen in homing tasks; however, they can also include the extensive use of tools such as maps, compasses, and GPS devices (Malinowski & Gillespie, 2001). While studying human spatial navigation is certainly possible both in mazes and in natural environments, it is not necessarily economical, nor does it guarantee a high level of control and precision. Combining navigation in natural environments with intracranial electrophysiology is rarely practical (but see Topalovic et al., 2023).

4. Virtual Environments

The rapid spread of powerful computing devices has opened a new dimension of spatial navigation research in virtual environments. The potential of virtual reality (VR) is evidenced by its widespread applications in education, military training, and the study and rehabilitation of cognitive processes as early as the 1990s (Rizzo & Buckwalter, 1997). Virtual reality tasks have been widely used in both healthy and clinical populations (Cogné et al., 2017). An especially diverse set of virtual environments has been developed for research purposes, ranging from classical mazes (Konishi & Bohbot, 2013) to entire city districts with detailed environments (Taillade et al., 2013). A literature review by Cogné et al. (2017) revealed that a considerable number of the studies using virtual reality were conducted to assess deficits in spatial navigation performance or to attempt to rehabilitate them. Virtual environments are also highly controllable, giving researchers the ability to manipulate environmental factors such as lights, obstacles, sounds, and landmarks with remarkable precision (Thurley, 2022). Another advantage of virtual environments is ease of reproducibility. While recreating the specifics of an experimental task in a physical environment requires extensive effort, these difficulties largely vanish with pre-packaged software, reducing both the errors that can occur when reproducing experiments and the cost of replication. Recording data is also more streamlined, since researchers no longer require separate instruments for videotaping, location tracking, and logging. VR can also be more engaging, sustaining the attention and motivation of participants (Dickey, 2005), and it can be deployed for the assessment of participants who may have difficulty moving in physical space.

VR also scales up controlled data acquisition through deployment over the internet or on mobile devices (Coutrot et al., 2018). Using VR games enables us to selectively engage and study various cognitive functions (Allen et al., 2024).

5. Real vs Virtual

Knowledge transfer between the real and virtual domains is essential for the validation of virtual navigation tasks. Several studies have addressed this concern and reported supportive results. Koenig et al. (2011) demonstrated that humans can successfully apply knowledge acquired in the real world in virtual environments. Coutrot et al. (2019) likewise showed a correlation between spatial navigation performance in virtual environments and in real-world tasks. Their study involved a mobile app called “Sea Hero Quest”, designed to assess wayfinding and path integration performance. After completing the virtual tasks, the participants were asked to perform the same tasks in the real world, albeit in a different environment. The wayfinding performance of the participants in the virtual environment was significantly correlated with their performance in the real-world tasks. In a systematic review of egocentric and allocentric spatial memory performance in individuals with mild cognitive impairment, Tuena et al. (2021) also found that real-world and virtual reality tests show good overlap in the assessment of spatial memory. Testing egocentric navigation with virtual tools has one caveat, however: the lack of proper idiothetic cues. While allocentric navigation is based mostly on visual cues, egocentric navigation relies heavily on vestibular, proprioceptive, somatosensory, and motor efferent signals (Ekstrom & Hill, 2023), which are lacking in virtual tasks unless omnidirectional treadmills are integrated into the procedure (Hejtmanek et al., 2020). Hejtmanek et al. (2020) found that the higher the level of immersion, the greater the rate of learning and transfer of spatial information. An important limitation of immersive VR, however, is the potential for cybersickness and dizziness, which might affect the rate at which participants finish tasks. In this regard, traditional desktop-based navigation tasks can be considered superior (Hejtmanek et al., 2020).

The virtual environment adds another factor to memory. Spatial locations are often used as a mnemonic device for memorizing more objects than short-term memory can hold, i.e., the “method of locations” (Luria, 1987). A popular version of this is the “memory palace”: a house with rooms that are highly familiar to the person, in which each new item to be remembered is associated with a permanent object by mentally placing the item next to it. Walking through the rooms in imagination and visualizing the permanent objects can trigger the memory and increase the likelihood of recalling the item associated with each object, relative to recall without such an aid. Note that walking through a virtual 3D space also increases the dimensionality of the framework: objects can be arranged in 3D, as opposed to linearly as a sequence in 1D or as a table in 2D, arrangements that are also often used in memory card games.

The third component of VR implementation of psychological tasks is to model the real-life navigation challenge that involves a mental transformation between the egocentrically presented environment and allocentric cognitive maps. VR enables us to emulate how we collect and associate information with locations in egocentric coordinate systems and convert them into allocentric cognitive maps.

Last but not least, VR enables us to change the point of view, and hence the reference frame, by switching camera views. Since camera views and cognitive reference frames (egocentric/allocentric) are not independent (Török et al., 2014), this enables the manipulation and testing of the spatial transformations underpinning spatial navigation and spatial cognition.

6. A Novel Tool for Assessing Spatial Navigation and Memory

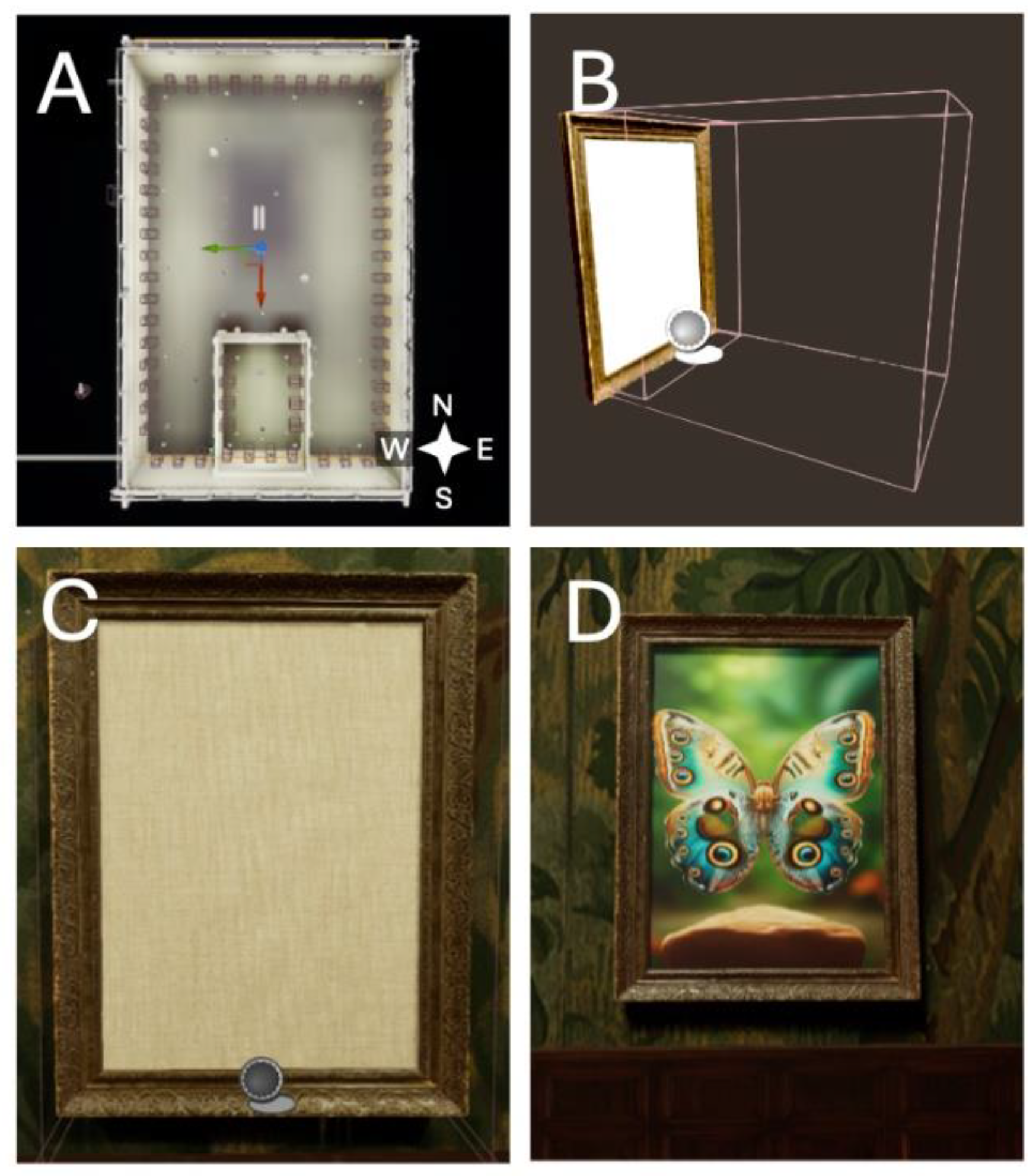

Building on the trends in spatial navigation research and leveraging advancements in VR technologies, we have developed a tool that integrates a spatial navigation task with recognition memory. While recognition memory tasks have previously been virtualized for memory improvement (Dirgantara & Septanto, 2021), to our knowledge none, at the time of writing, provides the immersion and spatial extent of our virtual Gallery. The Gallery consists of two distinct yet embedded spaces: a smaller enclosure that also serves as the starting area, and a large hall around it (Figure 1A). The enclosure is connected to the hall by a door (Figure 2B), and each space is populated with its own set of paintings. The first set, displayed in the enclosure, contains 6 paintings and their identical duplicates (6 pairs, N = 12), which are placed in random order but spaced evenly on the walls. The second set, containing 24 different paintings and their duplicates (24 pairs, N = 48), is displayed in the hall, also in randomized order. The task of the participant is to locate each pair of paintings in the enclosure, then move through the door and continue matching the remaining pairs of paintings in the hall. The paintings are hidden from a distance and become visible only when the participant enters their field of view, represented by a collision box (32 cm) (Figure 1B-D). The participant’s score increases by 1 after visiting a painting and its matching pair consecutively (i.e., by entering the tracking zones of the two paintings without entering the tracking zone of another painting in between). When the participant tracks a painting that does not match the previously tracked painting, the newly tracked painting becomes the one whose pair is sought. Once the participant’s score reaches 30, i.e., all pairs in both rooms have been matched, the task is complete.
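The scoring rule described above can be illustrated with a minimal Python sketch. This is not the Blueprint implementation used in the Gallery; the class name `PairTracker`, its fields, and the integer IDs are hypothetical. Two behaviors are assumptions on our part rather than statements from the text: re-entering the tracking zone of the same painting does not count as a match, and visiting a painting from an already matched pair leaves the currently sought pair unchanged.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple


@dataclass
class PairTracker:
    """Hypothetical stand-in for the pair-matching rule of the Gallery task."""
    total_pairs: int = 30
    # (painting_id, pair_id) of the most recently tracked, still unmatched painting
    current: Optional[Tuple[int, int]] = None
    discovered: Set[int] = field(default_factory=set)

    @property
    def score(self) -> int:
        return len(self.discovered)

    @property
    def complete(self) -> bool:
        return self.score == self.total_pairs

    def visit(self, painting_id: int, pair_id: int) -> bool:
        """Register entry into a tracking zone; return True if a pair was scored."""
        if pair_id in self.discovered:
            return False  # assumption: matched pairs are ignored from then on
        if self.current is not None:
            prev_painting, prev_pair = self.current
            # A point is scored only for two different paintings sharing a pair ID.
            if prev_pair == pair_id and prev_painting != painting_id:
                self.discovered.add(pair_id)
                self.current = None
                return True
        # No match: the newly tracked painting becomes the one whose pair is sought.
        self.current = (painting_id, pair_id)
        return False


# Demo with two pairs: paintings 1 and 4 share pair ID 10, paintings 2 and 3 share 20.
tracker = PairTracker(total_pairs=2)
for painting_id, pair_id in [(1, 10), (2, 20), (3, 20), (1, 10), (4, 10)]:
    tracker.visit(painting_id, pair_id)
print(tracker.score, tracker.complete)
```

In the demo, the visit to painting 2 after painting 1 is a mismatch, so painting 2 replaces painting 1 as the sought pair, which mirrors the rule stated in the text.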

7. Method

Owing to its high-fidelity graphics and vast array of photorealistic assets, our game engine of choice was Unreal Engine version 5.3.2. For designing the interface, interactions, and game logic, we used the Blueprints visual programming language.

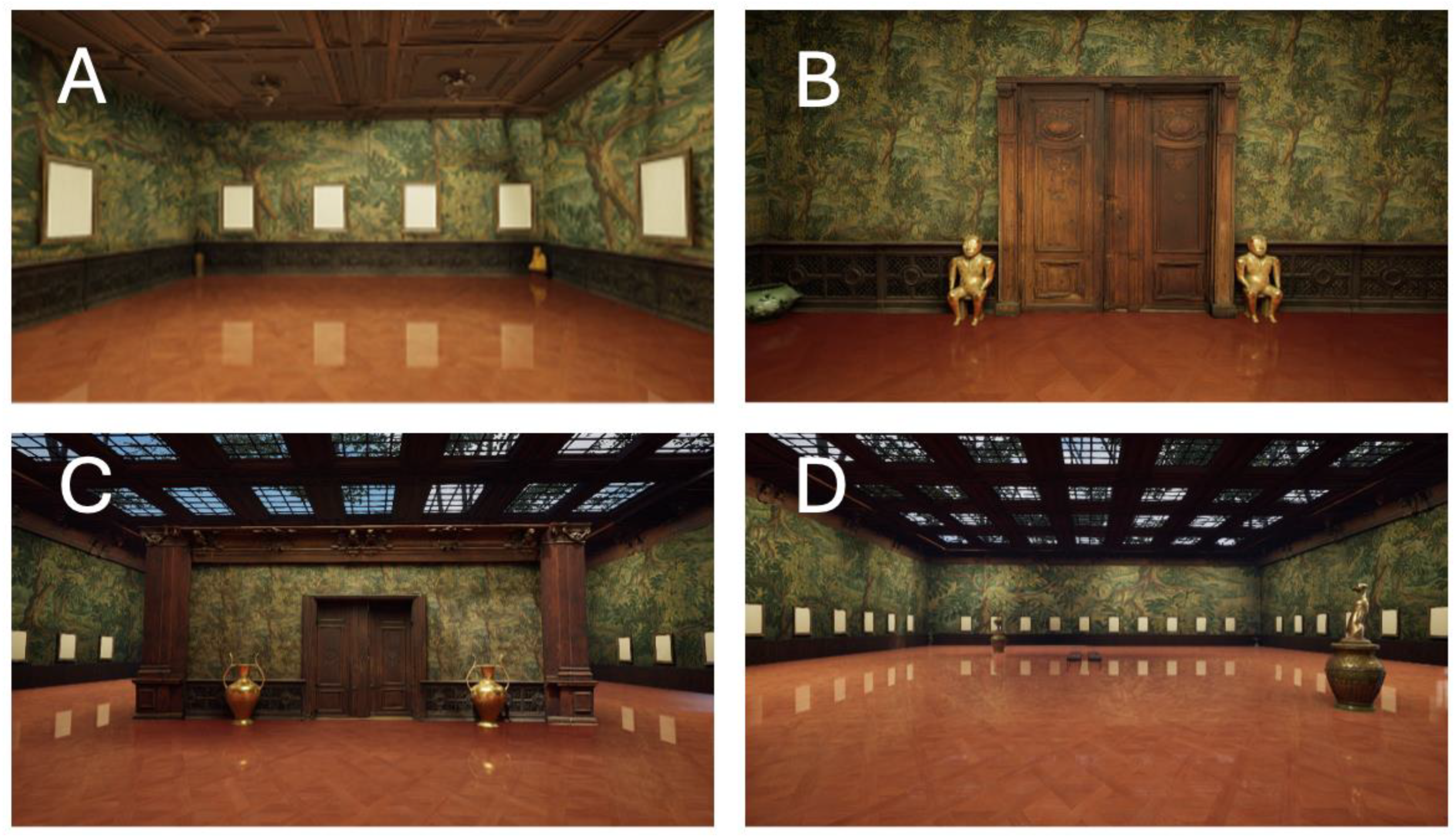

The main structural components of the Gallery are the enclosure, the hall, the walls, and the ceiling (

Figure 2). All these components have been designed from simple cube actors, molding them into the desired form using the scaling tool found in the Level editor. Once the desired setup was reached, we applied individually scaled textures to these surfaces. Following the application of textures, high-quality 3D meshes have been imported from Quixel Bridge, which is an official repository maintained by the developer of Unreal Engine. These meshes include everything from railings, and modular wooden ceiling elements, to decorative beams, vases, and statues (

Figure 2). Light is provided by a Directional Light actor that represents the Sun, which is centered in the sky to reduce shadows and celestial cues that might influence navigation (Mouristen et al., 2016). Additionally, Rectangular Light actors and Reflection Capture Spheres have been placed across the Gallery, to ensure good visibility. The intensity of the Directional Light was set to 10 lux, the color of the light being a neutral white (B=255, G=255, R=255, A=255). Rectangular Lights had an intensity of 4 EV, and a slight yellow hue (B=200, G=255, R=255, A=255). An Exponential Height Fog actor was added to provide a more natural look to the Sky actor and the environment. A post-processing volume with infinite extent was added to control the visual fidelity of the level while maintaining a high framerate. The participant controls the character from a first-person perspective. The character is surrounded by a Collision Component to ensure appropriate interaction with the environment and Paintings. The Character Movement Component handles the movement logic for the associated character. In contrast to the rest of the logic, this element has been designed in C++. The starting position is X=0, Y=0, Z=110, facing south, Z coding for the height of the mid-central point of the character. The Painting actors consist of several parts and are one of the central elements of our software. A default canvas that displays the picture is covered by an empty canvas, the visibility of which changes when the player enters the first collision box (the field of view), which extends in front of the Painting (

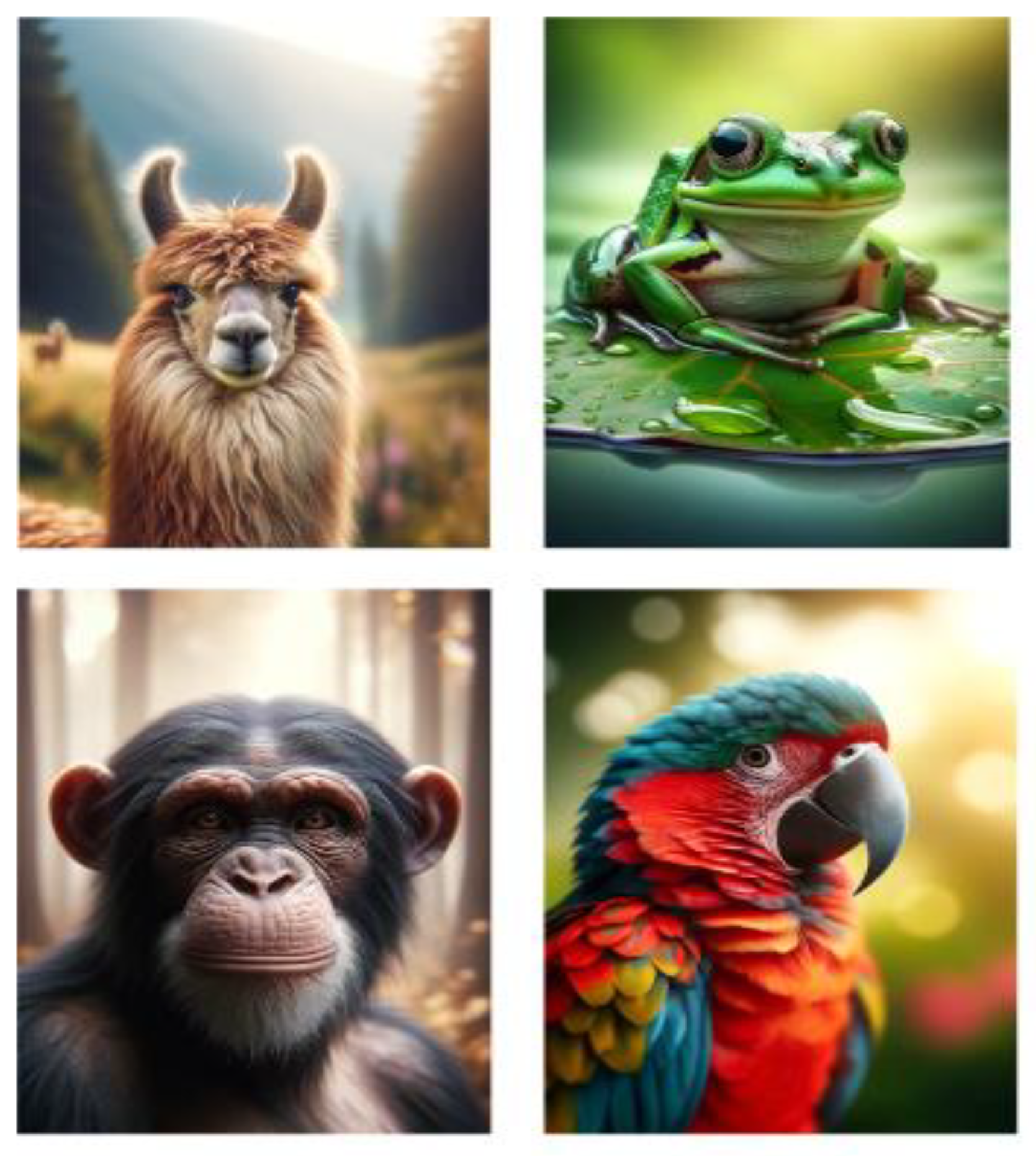

Figure 1B). When the player enters the second collision box (tracking zone), which is closely tied to the body of the Painting, the unique Pair ID of the painting is cast to the Game Manager, and the tracking begins. The stimuli consist of 30 animal pictures that were generated by AI (

Figure 1D and

Figure 3). Care has been taken to ensure that the animals do not look feral. Once the game Manager has been notified of the interaction, it stores the Pair ID of the Painting that has been interacted with. Once a second Painting is interacted with, the two IDs are compared. If they match, the IsDiscovered variable which stores a Boolean is set to True, the Score variable is incremented by 1, and the Game Manager checks for game completion. This state is reached once the number of discovered pairs is equal to the total number of pairs, which is 30 (60 Paintings in total). Once a pair has been discovered they can no longer be tracked anew. In case the Pair IDs do not match, the Current Pair ID variable is set to the latest painting interacted with. The location tracking logic is handled in the Level Blueprint. It performs this task by obtaining a reference to the Player Character at the beginning of the trial. Once the Player reference has been obtained it returns the location coordinates along an X, Y, Z axis and stores them in an array. The orientation of the Player Character is recorded in radians and stored in the same array as the location coordinates. Velocity and time are also stored in the array in question. The key presses are likewise individually recorded. The sampling frequency is tied to the framerate of the game, which is ~60Hz on a machine equipped with an RTX 3050 (4GB GDDR6) graphical processing unit. The sampling rate can be increased to the frequency of choice by changing the target framerate in the post-processing volume. For the sake of stability in initial testing, we have set the target framerate to 60Hz. While small variations occur when the trial is started due to texture streaming, these minor variations are far outweighed by the high sampling rate. The recorded data is written live into a CSV file during gameplay, thus avoiding data loss in case a crash occurs. Data analysis is conducted in MATLAB Version R2024a.
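The live logging strategy described above, in which one record is written per rendered frame and immediately pushed to the file, can be sketched in Python as follows. This illustrates the idea only and is not the Level Blueprint code; the class `TrajectoryLogger` and the column names in `FIELDS` are hypothetical, since the actual CSV layout is not specified here.

```python
import csv
import os
import tempfile

# Hypothetical column layout; the real file may order or name its fields differently.
FIELDS = ["time_s", "x", "y", "z", "yaw_rad", "speed", "keys"]


class TrajectoryLogger:
    """Append one row per frame and flush it, so a crash loses at most one row."""

    def __init__(self, path):
        self._fh = open(path, "w", newline="")
        self._writer = csv.writer(self._fh)
        self._writer.writerow(FIELDS)
        self._fh.flush()

    def log(self, time_s, x, y, z, yaw_rad, speed, keys=""):
        self._writer.writerow([time_s, x, y, z, yaw_rad, speed, keys])
        # flush() hands the row to the operating system, which protects against an
        # application crash; surviving a power loss would also need os.fsync().
        self._fh.flush()

    def close(self):
        self._fh.close()


# Demo: log three simulated frames at a nominal 60 Hz, then read them back
# while the log file is still open to confirm the rows have already been written.
path = os.path.join(tempfile.mkdtemp(), "trial.csv")
logger = TrajectoryLogger(path)
for frame in range(3):
    logger.log(frame / 60.0, 0.0, 0.0, 110.0, 0.0, 0.0, "W")
with open(path, newline="") as fh:
    rows = list(csv.DictReader(fh))
logger.close()
print(len(rows), rows[0])
```

Flushing on every frame trades a small amount of I/O overhead for robustness; at 60 rows per second this overhead is usually negligible.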

8. Results

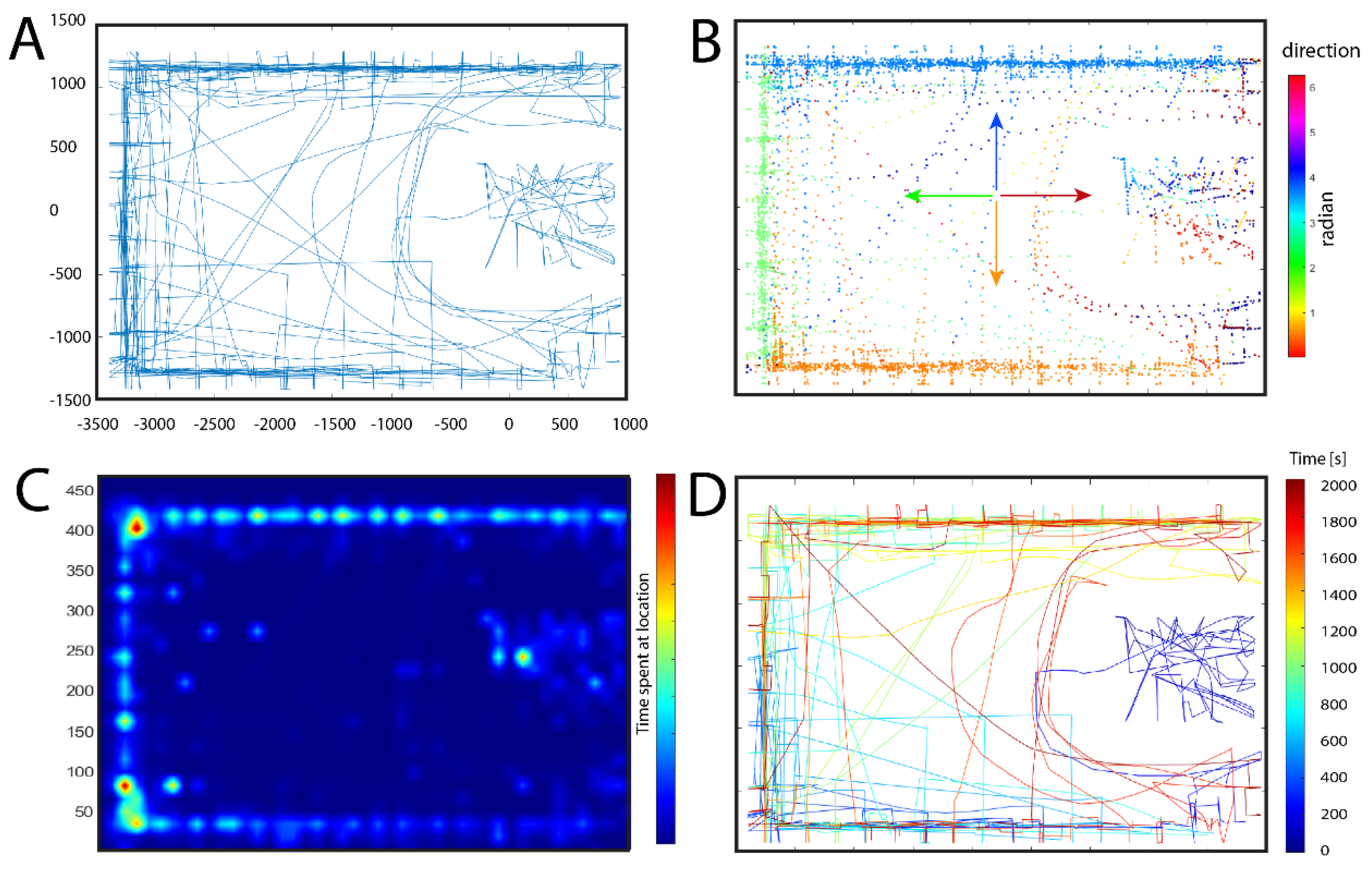

To demonstrate the viability of our software, in this section we present data from one participant. Since the participant had no prior experience playing first-person games, a five-minute warm-up period in a different environment was provided before the task. Once we had ensured that the participant was confident in controlling the Player Character, we started the trial. The following instructions were given prior to starting: “In this gallery, you will find many images on the wall. You can only see them when standing close to them. Each image has an identical pair somewhere on the wall. Your task is to find all the identical pairs of pictures. When you look at a picture and you remember where else you have seen the same image, walk directly to where you think you saw that image. If you are correct and the other image you found is the matching pair, you will get 1 point. Then you continue looking at other pictures until you find all matching pairs. Try to go close to the paintings as rarely as possible and complete the task with as few close looks as possible. Focus on accuracy rather than time. When you finish one room, go to the door, and continue to the other room.” The trial took a total of 1972.92 seconds, and the participant managed to discover all the pairs. No signs of cybersickness were reported, and both motivation and attention were maintained throughout the task. The collected data are illustrated in Figure 4.

Several trends can be observed in the exploratory behavior of the participant. As seen in Figure 4A,B, the participant covered the central area of the enclosure more than that of the hall. This may be due to the smaller size of the enclosure, which allows pictures to be viewed from a more central position than in the hall, leading to a more uniform coverage of the central area. Plot D (Figure 4) shows that the participant continued the exploratory behavior by approaching all four walls of the hall one after the other, starting with the portion of the southern wall outside the enclosure, moving on to the western, northern, and eastern walls, then turning back. Plot D also shows a tendency to move through the central open area of the hall that grows over time, whereas in the first portion of the trial the participant was more likely to move alongside the walls. This can be attributed to the use of shortcuts once the participant had better memorized the positions of individual Paintings. Plot C shows how frequently each location was visited by the participant. The northwestern and northeastern corners seem to provide an important spatial cue, hence their frequent visitation. Another important allocentric cue is the door connecting the enclosure with the hall (Figure 2B,C). The southern wall of the hall was scarcely visited, which can be attributed to the smaller number of paintings located there, since a large portion of the southern wall is obstructed by the enclosure. Plots C and D also show a greater probability of the participant moving near the eastern wall as the experiment neared its end. This behavior can be attributed to the greater difficulty of finding the last few paintings, which prompted the participant to explore larger swaths of space and indicates a shift in exploratory behavior in the late stages of the trial. In general, we see a thigmotactic tendency coupled with chaining behavior at the beginning of the trial, a strategy that is gradually abandoned as the participant grows more familiar with the environment.
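Two of the measures discussed above, visit frequency per location and the tendency to stay near walls, could be quantified along the following lines. The Python sketch below is illustrative only and is not the MATLAB analysis used to produce Figure 4; the function names, the bin size, the wall margin, and the rectangular 100 x 100 room are hypothetical, and treating the hall as a plain rectangle ignores the embedded enclosure.

```python
from collections import Counter


def occupancy(xs, ys, bin_size):
    """Count position samples per square bin; keys are (column, row) bin indices."""
    return Counter((int(x // bin_size), int(y // bin_size)) for x, y in zip(xs, ys))


def thigmotaxis_index(xs, ys, x_min, x_max, y_min, y_max, margin):
    """Fraction of samples within `margin` of any wall of a rectangular room."""
    near_wall = 0
    for x, y in zip(xs, ys):
        distance_to_wall = min(x - x_min, x_max - x, y - y_min, y_max - y)
        if distance_to_wall <= margin:
            near_wall += 1
    return near_wall / len(xs)


# Synthetic five-sample trajectory in a hypothetical 100 x 100 room.
xs = [5.0, 15.0, 15.0, 50.0, 95.0]
ys = [5.0, 15.0, 18.0, 50.0, 50.0]
counts = occupancy(xs, ys, bin_size=10.0)
wall_fraction = thigmotaxis_index(xs, ys, 0.0, 100.0, 0.0, 100.0, margin=10.0)
print(counts.most_common(1), wall_fraction)
```

Computing the wall fraction separately for early and late portions of a trial would give one way to express the gradual abandonment of wall-following as a number, under the simplifying assumptions stated above.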

9. Discussion

Observing the scarcity of virtual tools that assess recognition and spatial memory in a virtual 3D environment with high-fidelity graphics, we set out to design a tool that encompasses all these attributes. Assessing recognition memory is a particularly stimulating topic in spatial navigation research, since it involves two distinct components, recollection and familiarity, processes that are thought to depend on the medial temporal lobe (Eichenbaum et al., 2007). Recollection requires remembering the context and details of previous experiences, while familiarity involves recognizing something as having been experienced before, but without recalling the specifics of the encounter (Zheng et al., 2024). Thus, our tool is capable of testing both declarative and non-declarative memory performance, coupled with spatial memory and navigational aptitude. Special attention was paid to using high-fidelity assets to maximize immersion, a characteristic of great importance, since a lack of immersion disrupts spatial learning and memory performance (Hejtmanek et al., 2020).

The high reliability and sampling rate of the navigational data also provide a significant advantage over other spatial navigation tasks in either real or virtual environments, since (1) there is no risk of malfunctioning GPS devices, and (2) the sampling rate in the current implementation of the Gallery is substantially higher than those of some other virtual environments (e.g., Coutrot et al., 2019) and can in principle be raised further, limited only by the performance of the computer used. This high sampling rate allows for detailed collection of orientation and gaze data, from which it is possible to reconstruct the entire course of the trials undertaken by individuals and replay them in real time, much as a game of chess can be replayed from its record. Although the sampling rate depends on the framerate, which may fluctuate due to texture streaming and light bounce computations, the graphical fidelity of the environment can be adjusted to support smooth gameplay and sampling while still retaining considerably higher fidelity than most virtual environments in spatial navigation research. Our further plans include adapting the Gallery to head-mounted displays to increase immersion even further, potentially integrating an omnidirectional treadmill, which would supply the idiothetic cues (Hejtmanek et al., 2020) that are lacking in the traditional desktop environment.
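Because sampling is tied to the framerate, the interval between samples is not guaranteed to be constant. A simple check of the effective sampling rate, followed by linear resampling onto a uniform time grid, might look like the Python sketch below. It is an illustration under stated assumptions rather than part of our analysis pipeline: the function names are hypothetical, timestamps are assumed to be strictly increasing, and the interpolation is suitable for positions but not for orientation in radians, which would first need to be unwrapped.

```python
import statistics


def sampling_stats(timestamps):
    """Effective rate and jitter of a frame-locked recording (needs >= 2 samples)."""
    dts = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return {
        "rate_hz": 1.0 / statistics.fmean(dts),
        "dt_sd_s": statistics.pstdev(dts),
        "max_gap_s": max(dts),
    }


def resample_uniform(timestamps, values, rate_hz):
    """Linearly interpolate `values` onto a uniform grid starting at timestamps[0]."""
    step = 1.0 / rate_hz
    n = int((timestamps[-1] - timestamps[0]) / step + 1e-9) + 1
    out, j = [], 0
    for i in range(n):
        t = timestamps[0] + i * step
        # Advance to the segment [timestamps[j], timestamps[j + 1]] that contains t.
        while j + 2 < len(timestamps) and timestamps[j + 1] < t:
            j += 1
        t0, t1 = timestamps[j], timestamps[j + 1]
        weight = (t - t0) / (t1 - t0)
        out.append(values[j] + weight * (values[j + 1] - values[j]))
    return out


# Synthetic example: one sample is missing, which leaves a gap of 0.2 s.
ts = [0.0, 0.1, 0.3, 0.4]
xs = [0.0, 1.0, 3.0, 4.0]
stats = sampling_stats(ts)
uniform_xs = resample_uniform(ts, xs, rate_hz=10.0)
print(stats, uniform_xs)
```

Reporting the maximum gap alongside the mean rate makes occasional frame drops visible, and resampling allows trajectories recorded at slightly different framerates to be compared on a common time base.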

10. Conclusions

In summary, we have created a virtual reality tool that provides multifaceted cognitive engagement, combining elements of spatial navigation, memory, and executive functions. Our software provides a controlled environment, which would be more challenging to achieve in a real-world task due to external variables. The difficulty of the task can be easily adjusted, and the stimuli can be changed at any time should the need arise, providing flexibility and customizability. Because the lighting can be controlled, our environment can be used with clinical populations with elevated photosensitivity. It also provides a nonhazardous environment for the assessment of cognitive impairments and can be used to monitor changes over time. Since the use of a head-mounted display is not mandatory, it is possible to conduct electrophysiological recordings during experiments even where HMDs that integrate EEG are not available.

References

- Allen, K., Brändle, F., Botvinick, M., et al. (2024). Using games to understand the mind. Nature Human Behaviour, 8, 1035–1043.

- Barnes, C. A. (1979). Memory deficits associated with senescence: a neurophysiological and behavioral study in the rat. Journal of comparative and physiological psychology, 93(1), 74.

- Bingman, V., Jechura, T., & Kahn, M. C. (2006). Behavioral and neural mechanisms of homing and migration in birds. Animal Spatial Cognition: Comparative, Neural, and Computational Approaches [On-line]. Available: www.pigeon.psy.tufts.edu/asc/bingman.

- Buresova, O., & Bures, J. (1981). Role of olfactory cues in the radial maze performance of rats. Behavioural Brain Research, 3(3), 405-409.

- Cagle, F. R. (1944). Home range, homing behavior, and migration in turtles.

- Carr, H., & Watson, J. B. (1908). Orientation in the white rat. Journal of Comparative Neurology and Psychology, 18(1), 27-44.

- Cogné, M., Taillade, M., N’Kaoua, B., Tarruella, A., Klinger, E., Larrue, F., ... & Sorita, E. (2017). The contribution of virtual reality to the diagnosis of spatial navigation disorders and to the study of the role of navigational aids: A systematic literature review. Annals of physical and rehabilitation medicine, 60(3), 164-176.

- Coutrot, A., Silva, R., Manley, E., de Cothi, W., Sami, S., Bohbot, V. D., Wiener, J. M., Hölscher, C., Dalton, R. C., Hornberger, M., & Spiers, H. J. (2018). Global determinants of navigation ability. Current Biology, 28(17), 2861-2866.e4.

- Coutrot, A., Schmidt, S., Coutrot, L., Pittman, J., Hong, L., Wiener, J. M., ... & Spiers, H. J. (2019). Virtual navigation tested on a mobile app is predictive of real-world wayfinding navigation performance. PloS one, 14(3), e0213272.

- d’Isa, R., Comi, G., & Leocani, L. (2021). Apparatus design and behavioural testing protocol for the evaluation of spatial working memory in mice through the spontaneous alternation T-maze. Scientific Reports, 11(1), 21177.

- Deacon, R. M., & Rawlins, J. N. P. (2006). T-maze alternation in the rodent. Nature protocols, 1(1), 7-12.

- Dickey, M. D. (2005). Engaging by design: How engagement strategies in popular computer and video games can inform instructional design. Educational technology research and development, 53(2), 67-83.

- Dirgantara, H. B., & Septanto, H. (2021). A Prototype of Web-based Picture Cards Matching Video Game for Memory Improvement Training. IJNMT (International Journal of New Media Technology), 8(1), 1-9.

- Dittman, A. H., & Quinn, T. P. (1996). Homing in Pacific salmon: mechanisms and ecological basis. Journal of Experimental Biology, 199(1), 83-91.

- Eichenbaum, H., Yonelinas, A. P., & Ranganath, C. (2007). The medial temporal lobe and recognition memory. Annual review of neuroscience, 30, 123–152.

- Ekstrom, A. D., & Hill, P. F. (2023). Spatial navigation and memory: A review of the similarities and differences relevant to brain models and age. Neuron, 111(7), 1037-1049.

- Feo, R., & Giove, F. (2019). Towards an efficient segmentation of small rodents brain: a short critical review. Journal of neuroscience methods, 323, 82-89.

- Grieves, R. M., Jedidi-Ayoub, S., Mishchanchuk, K., Liu, A., Renaudineau, S., & Jeffery, K. J. (2020). The place-cell representation of volumetric space in rats. Nature communications, 11(1), 789.

- Hafting, T., Fyhn, M., Molden, S., Moser, M. B., & Moser, E. I. (2005). Microstructure of a spatial map in the entorhinal cortex. Nature, 436(7052), 801-806.

- Hejtmanek, L., Starrett, M., Ferrer, E., & Ekstrom, A. D. (2020). How much of what we learn in virtual reality transfers to real-world navigation?. Multisensory Research, 33(4-5), 479-503.

- Herculano-Houzel, S. (2009). The human brain in numbers: a linearly scaled-up primate brain. Frontiers in human neuroscience, 3, 857.

- Koenig, S., Crucian, G., Dalrymple-Alford, J., & Dünser, A. (2011). Assessing navigation in real and virtual environments: A validation study.

- Konishi, K., & Bohbot, V. D. (2013). Spatial navigational strategies correlate with gray matter in the hippocampus of healthy older adults tested in a virtual maze. Frontiers in aging neuroscience, 5, 28885.

- Luria, A. R. (1987). The Mind of a Mnemonist: A Little Book about a Vast Memory, With a New Foreword by Jerome S. Bruner. United Kingdom: Harvard University Press.

- Malinowski, J. C., & Gillespie, W. T. (2001). Individual differences in performance on a large-scale, real-world wayfinding task. Journal of Environmental Psychology, 21(1), 73-82.

- Mandal, S. (2018). How do animals find their way back home? A brief overview of homing behavior with special reference to social Hymenoptera. Insectes sociaux, 65(4), 521-536.

- Morris, R. (1984). Developments of a water-maze procedure for studying spatial learning in the rat. Journal of neuroscience methods, 11(1), 47-60.

- Mouritsen, H., Heyers, D., & Güntürkün, O. (2016). The neural basis of long-distance navigation in birds. Annual review of physiology, 78, 133-154.

- O'Keefe, J., & Dostrovsky, J. (1971). The hippocampus as a spatial map: preliminary evidence from unit activity in the freely-moving rat. Brain research.

- O'Keefe, J., & Nadel, L. (1978). The hippocampus as a cognitive map. Oxford University Press.

- Olton, D. S., & Samuelson, R. J. (1976). Remembrance of places passed: spatial memory in rats. Journal of experimental psychology: Animal behavior processes, 2(2), 97.

- Paxinos, G., & Watson, C. (2006). The rat brain in stereotaxic coordinates: hard cover edition. Elsevier.

- Rizzo, A. A., & Buckwalter, J. G. (1997). Virtual reality and cognitive assessment and rehabilitation: the state of the art. Virtual reality in neuro-psycho-physiology, 123-145.

- Salas, C., Broglio, C., Durán, E., Gómez, A., & Rodríguez, F. (2008). Spatial Learning in Fish.

- Shrager, Y., Bayley, P. J., Bontempi, B., Hopkins, R. O., & Squire, L. R. (2007). Spatial memory and the human hippocampus. Proceedings of the National Academy of Sciences, 104(8), 2961-2966.

- Squire, L. R. (1992). Memory and the hippocampus: a synthesis from findings with rats, monkeys, and humans. Psychological review, 99(2), 195.

- Taillade, M., Sauzéon, H., Dejos, M., Arvind Pala, P., Larrue, F., Wallet, G., ... & N'Kaoua, B. (2013). Executive and memory correlates of age-related differences in wayfinding performances using a virtual reality application. Aging, Neuropsychology, and Cognition, 20(3), 298-319.

- Thurley, K. (2022). Naturalistic neuroscience and virtual reality. Frontiers in Systems Neuroscience, 16, 896251.

- Tolman, E. C., Ritchie, B. F., & Kalish, D. (1946). Studies in spatial learning. I. Orientation and the short-cut. Journal of experimental psychology, 36(1), 13.

- Topalovic, U., Barclay, S., Ling, C., et al. (2023). A wearable platform for closed-loop stimulation and recording of single-neuron and local field potential activity in freely moving humans. Nature Neuroscience, 26, 517–527.

- Török, Á., Nguyen, T. P., Kolozsvári, O., Buchanan, R. J., & Nadasdy, Z. (2014). Reference frames in virtual spatial navigation are viewpoint dependent. Frontiers in Human Neuroscience, 8, 88072.

- Tsoar, A., Nathan, R., Bartan, Y., Vyssotski, A., Dell'Omo, G., & Ulanovsky, N. (2011). Large-scale navigational map in a mammal. Proceedings of the National Academy of Sciences, 108(37), E718-E724.

- Tuena, C., Mancuso, V., Stramba-Badiale, C., Pedroli, E., Stramba-Badiale, M., Riva, G., & Repetto, C. (2021). Egocentric and allocentric spatial memory in mild cognitive impairment with real-world and virtual navigation tasks: A systematic review. Journal of Alzheimer's Disease, 79(1), 95-116.

- Turner, C. H. (1907). Do ants form practical judgments?. The Biological Bulletin, 13(6), 333-343.

- Waller, D. E., & Nadel, L. E. (2013). Handbook of spatial cognition (pp. x-309). American Psychological Association.

- Xu, N., LaGrow, T. J., Anumba, N., Lee, A., Zhang, X., Yousefi, B., ... & Keilholz, S. (2022). Functional connectivity of the brain across rodents and humans. Frontiers in Neuroscience, 16, 816331.

- Yerkes, R. M. (1912). The intelligence of earthworms. Journal of Animal Behavior, 2(5), 332.

- Zheng, J., Li, B., Zhao, W., Su, N., Fan, T., Yin, Y., ... & Luo, L. (2024). Soliciting judgments of learning reactively facilitates both recollection- and familiarity-based recognition memory. Metacognition and Learning, 1-25.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).