Submitted: 17 March 2025

Posted: 18 March 2025


Abstract

Keywords:

Introduction

Methods

Framework Development Process

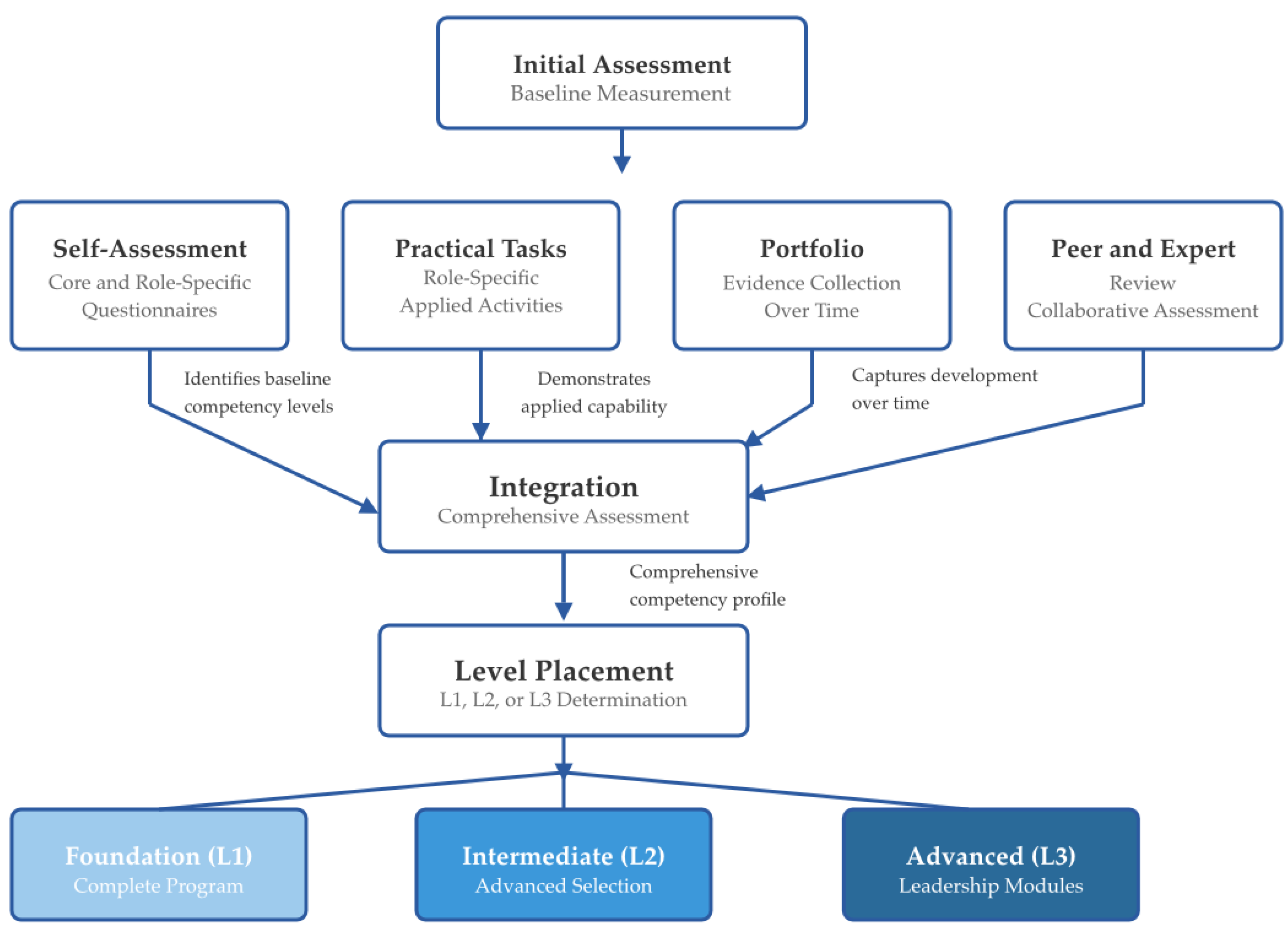

Conceptual Framework for Assessment

Results

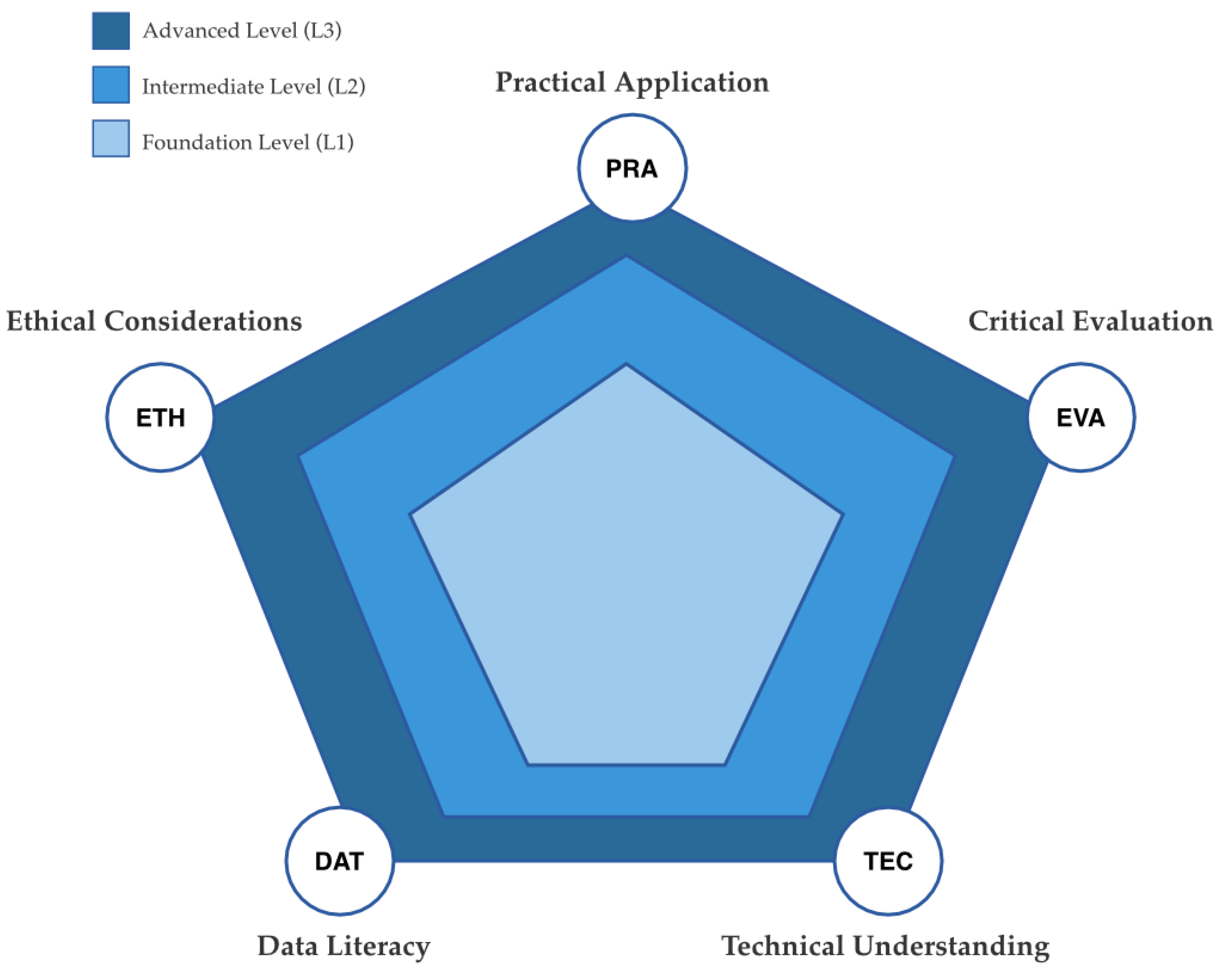

Framework Structure

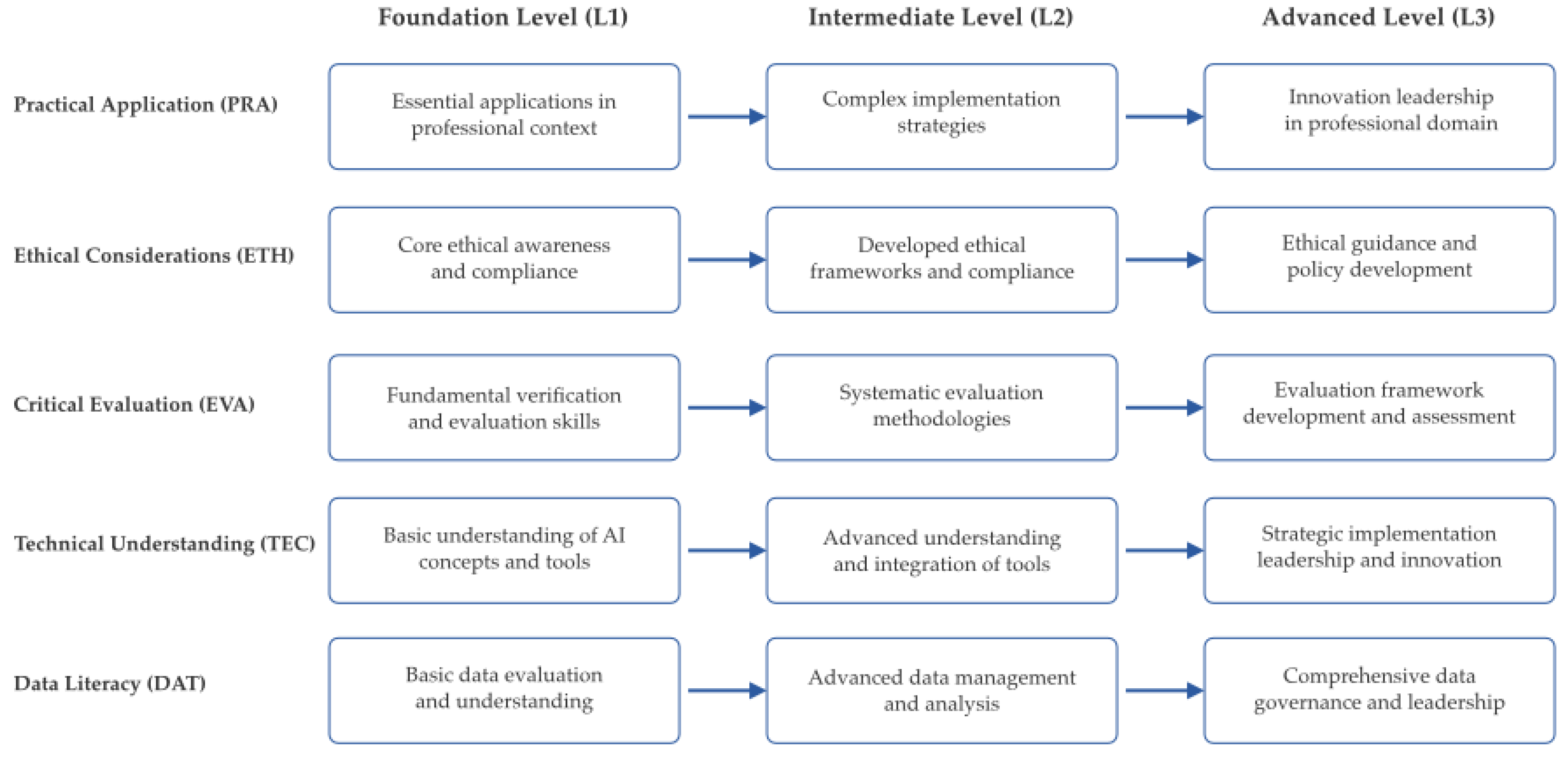

Progression Levels

- The Foundation Level acts as the entry point, emphasizing essential competencies such as a basic understanding of AI concepts and tools, fundamental verification and evaluation skills, essential practical applications within a professional context, and core ethical awareness and compliance.

- The Intermediate Level builds on the foundation with improved capabilities, including a more advanced understanding and integration of tools, systematic evaluation methods, complex implementation strategies, and developed ethical frameworks and compliance systems.

- The Advanced Level fosters leadership and innovation capabilities, including the strategic implementation of AI systems, the development of evaluation and assessment frameworks, innovation leadership in the professional realm, and the creation of ethical guidance and policy.

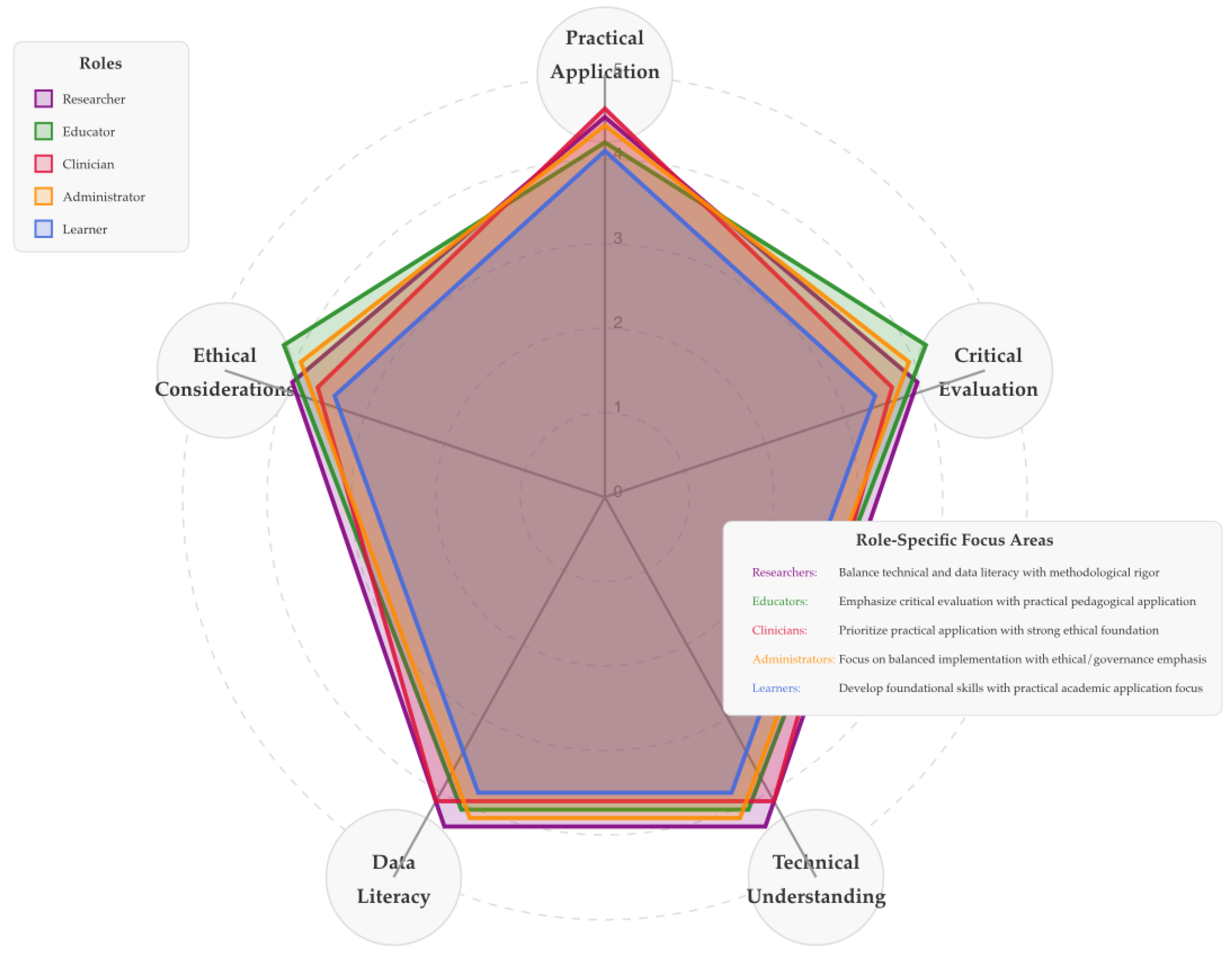

Role-Specific Frameworks

Discussion

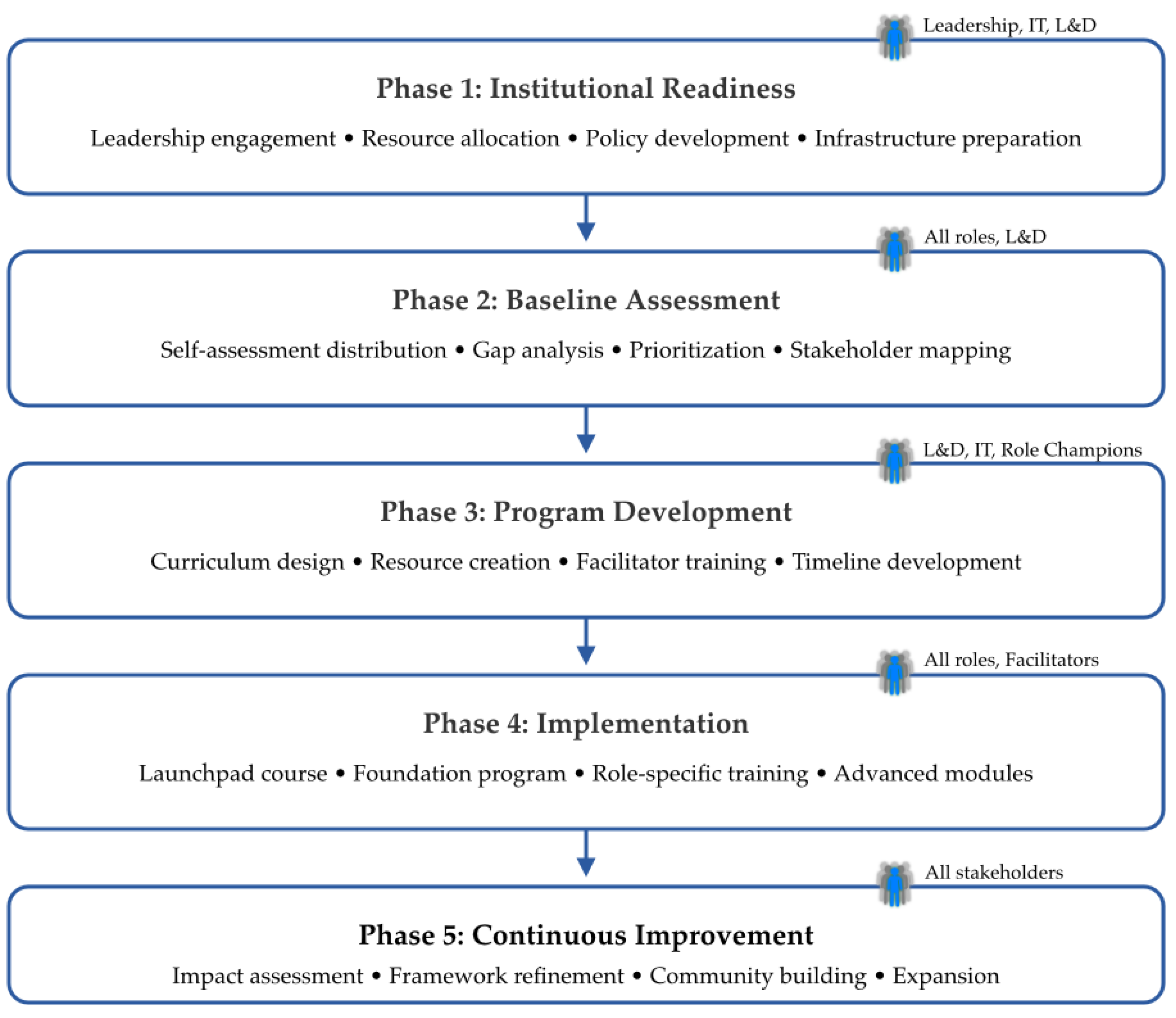

Implementation Considerations

Comparison with Existing Frameworks

Anticipated Challenges

Future Directions

Conclusions


| Component | Definition | Key Elements | Example Competencies |
|---|---|---|---|
| Data Literacy (DAT) | Understanding data as the foundation for AI systems | | |
| Technical Understanding (TEC) | Knowledge and skills required to understand AI systems and their capabilities | | |
| Critical Evaluation (EVA) | Systematic assessment of AI outputs, processes, and impacts | | |
| Ethical Considerations (ETH) | Responsible and principled use of AI in professional contexts | | |
| Practical Application (PRA) | Effective implementation of AI tools and processes in real-world contexts | | |

| Level | Data Literacy | Technical Understanding | Critical Evaluation | Ethical Considerations | Practical Application |
|---|---|---|---|---|---|
| Foundation (L1) | Basic evaluation of data types and structures; organization of data using standard methods; performance of simple analyses; creation of basic visualizations | Basic understanding of AI concepts and tools; identification of common applications; simple prompt creation; awareness of limitations | Fundamental verification of AI-generated content; use of basic quality checks; identification of common errors; tracking of AI impact on tasks | Core ethical awareness and guidelines; proper attribution of AI contributions; adherence to policies; identification of basic risks | Essential applications of AI in a professional context; implementation of simple workflows; documentation of AI use; resolution of basic challenges |
| Intermediate (L2) | Advanced data management techniques; implementation of data quality improvement measures; application of appropriate analytical methods; design of effective visualizations for complex data | Advanced understanding of AI capabilities; use of multiple tools for complex tasks; customization of settings; development of prompt templates | Systematic evaluation methodologies; implementation of quality control procedures; development of error detection systems; analysis of efficiency gains | Developed ethical frameworks; creation of documentation systems; implementation of risk assessment protocols; assistance to peers on compliance | Complex implementation strategies; optimization of workflows; process mapping; solution development for multifaceted challenges |
| Advanced (L3) | Comprehensive data governance strategies; development of methodologies for data stewardship; creation of advanced analytical frameworks; leadership in data communication initiatives | Strategic implementation leadership; development of innovative applications; creation of training materials; design of scalable frameworks | Framework development for evaluation; establishment of quality standards; design of comprehensive impact studies; leadership in assessment methodology | Ethical guidance and policy development; leadership in compliance initiatives; design of risk management frameworks; development of system-wide safeguards | Innovation leadership in the professional domain; development of transformative workflows; design of novel solutions; leadership in implementation projects |
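The framework is a two-dimensional grid: five components (DAT, TEC, EVA, ETH, PRA) crossed with three progression levels (L1 to L3). As a minimal sketch, assuming an institution wanted to encode this grid in software (for example, to tag self-assessment items or portfolio evidence), it could be represented as below. The combined identifier scheme (e.g. `DAT-L1`) and the helper names `competency_id` and `describe` are hypothetical illustrations, not part of the published framework, and only the first descriptor of each cell is kept for brevity.

```python
from enum import Enum


class Component(Enum):
    """The five framework components, keyed by their abbreviations."""
    DAT = "Data Literacy"
    TEC = "Technical Understanding"
    EVA = "Critical Evaluation"
    ETH = "Ethical Considerations"
    PRA = "Practical Application"


class Level(Enum):
    """Progression levels, from entry point to leadership."""
    L1 = "Foundation"
    L2 = "Intermediate"
    L3 = "Advanced"


# Abridged grid: the first descriptor of each (component, level) cell in the table above.
DESCRIPTORS = {
    (Component.DAT, Level.L1): "Basic evaluation of data types and structures",
    (Component.TEC, Level.L1): "Basic understanding of AI concepts and tools",
    (Component.EVA, Level.L1): "Fundamental verification of AI-generated content",
    (Component.ETH, Level.L1): "Core ethical awareness and guidelines",
    (Component.PRA, Level.L1): "Essential applications of AI in a professional context",
    (Component.DAT, Level.L2): "Advanced data management techniques",
    (Component.TEC, Level.L2): "Advanced understanding of AI capabilities",
    (Component.EVA, Level.L2): "Systematic evaluation methodologies",
    (Component.ETH, Level.L2): "Developed ethical frameworks",
    (Component.PRA, Level.L2): "Complex implementation strategies",
    (Component.DAT, Level.L3): "Comprehensive data governance strategies",
    (Component.TEC, Level.L3): "Strategic implementation leadership",
    (Component.EVA, Level.L3): "Framework development for evaluation",
    (Component.ETH, Level.L3): "Ethical guidance and policy development",
    (Component.PRA, Level.L3): "Innovation leadership in the professional domain",
}


def competency_id(component: Component, level: Level) -> str:
    """Hypothetical identifier joining the component and level codes, e.g. 'DAT-L1'."""
    return f"{component.name}-{level.name}"


def describe(component: Component, level: Level) -> str:
    """Return a one-line label for a single cell of the grid."""
    label = f"{level.value} {component.value}"
    return f"{competency_id(component, level)} ({label}): {DESCRIPTORS[(component, level)]}"


if __name__ == "__main__":
    # Walk the grid level by level, in the order the enums are defined.
    for level in Level:
        for component in Component:
            print(describe(component, level))
```

Keying the dictionary on `(Component, Level)` pairs keeps the two dimensions explicit, so a role-specific profile (for instance, the heavier ethical emphasis suggested for clinicians) could be expressed as a subset of cells rather than a separate structure.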

| Stakeholder Role | Primary Focus Areas | Key Challenges | Implementation Priorities |
|---|---|---|---|
| Learners | Academic tool use; critical evaluation of AI-generated content; ethical compliance with institutional policies; basic data literacy for academic contexts | Academic integrity concerns; distinguishing between AI assistance and plagiarism; developing confidence in verifying AI outputs; balancing tool use with skill development | Foundation-level technical and ethical competencies; hands-on practice with academic AI tools; verification skills development; integration of AI into study methods |
| Educators | Curriculum integration; assessment adaptation; student guidance on appropriate AI use; instructional design with AI tools | Maintaining academic standards while embracing technology; designing assessments in AI-rich environments; differentiating instruction using AI; modeling appropriate AI use for students | Technical-ethical balance with pedagogical applications; AI-enhanced teaching methodologies; development of AI-aware assessment strategies; creation of AI policies for educational contexts |
| Researchers | Research methodology enhancement; literature analysis; data processing; validation of AI-generated content in scholarly contexts | Maintaining research integrity; ensuring transparency in AI use; addressing methodological questions; managing complex data with AI assistance | Advanced technical understanding with strong ethical foundation; rigorous verification protocols; methodological innovation with AI tools; transparent documentation practices |
| Clinicians | Patient care enhancement; clinical decision support; documentation efficiency; healthcare data analysis | Balancing technology with human care; ensuring patient privacy and consent; maintaining clinical judgment; integrating AI into existing workflows | Practical applications with strong ethical focus; patient-centered AI use; clinical workflow integration; data privacy and security emphasis |
| Administrators | Operational efficiency; process automation; decision support; organizational governance of AI | Developing appropriate policies; managing change resistance; ensuring fair and consistent AI implementation; addressing job displacement concerns | Balanced competencies across all framework components; focus on governance structures; risk assessment protocols; staff development support |

| Assessment Method | Primary Purpose | Advantages | Limitations | Appropriate Uses |
|---|---|---|---|---|
| Self-Assessment Questionnaire | Baseline measurement of AI literacy across framework components; identification of strengths and gaps; tracking of progress over time | Scalable and efficient; provides immediate feedback; covers all framework components; adaptable to different roles | Self-reporting bias; limited verification of actual capabilities; potential for misinterpretation of competency levels | Initial assessment; progress tracking; large-scale implementation; self-directed development planning |
| Practical Assessment Tasks | Demonstration of applied skills; verification of capability to implement AI tools in authentic contexts; observation of problem-solving approaches | Authentic evidence of capability; demonstrates application in context; reveals practical limitations and strengths; assesses integration of multiple competencies | Resource-intensive; difficult to standardize; may not assess all framework components equally; requires expert assessment | Competency verification; certification processes; summative assessment; performance evaluation |
| Portfolio Assessment | Documentation of AI literacy development over time; collection of evidence demonstrating capability across contexts; reflection on learning process | Captures development trajectory; provides authentic artifacts; encourages reflection; supports personalized evidence collection | Time-intensive to develop and assess; variable quality and comprehensiveness; requires clear assessment criteria | Longitudinal development tracking; comprehensive capability assessment; professional development planning; evidence-based certification |
| Peer & Expert Review | Collaborative assessment of AI literacy; verification of capabilities by colleagues or subject matter experts; feedback on performance in authentic contexts | Incorporates multiple perspectives; provides targeted feedback; leverages distributed expertise; addresses blind spots in self-assessment | Potential for inconsistency across reviewers; logistical challenges in coordination; may be influenced by interpersonal factors | Advanced-level assessment; community-building around AI literacy; formative feedback; validation of complex capabilities |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).