Submitted: 10 March 2025

Posted: 12 March 2025


Abstract

Keywords:

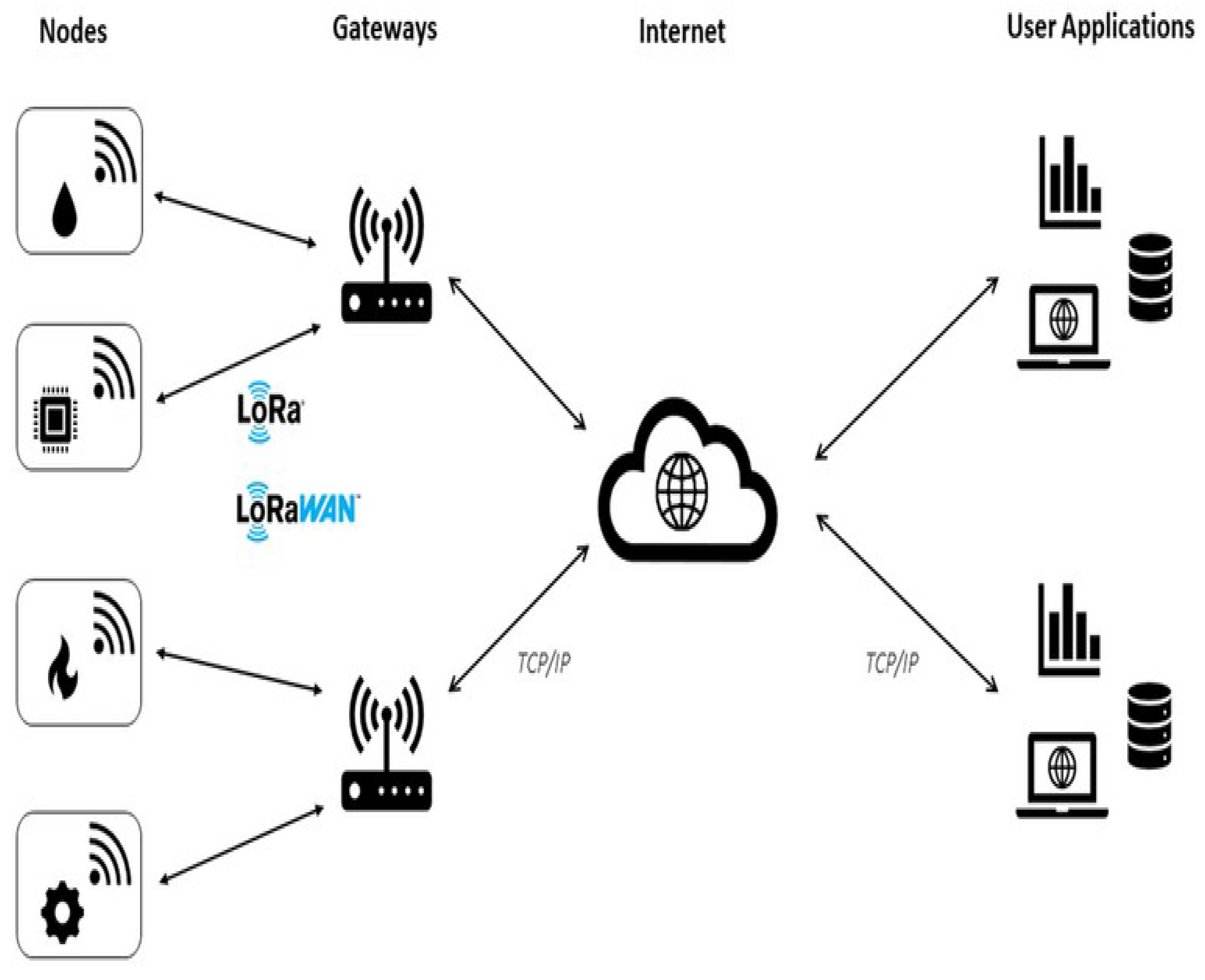

Introduction

- Contributions of the Article

- Development of an RL-Based Scheduling Algorithm: The article presents a novel reinforcement learning-based task scheduling algorithm specifically designed for LoRaWAN networks, enhancing resource allocation and optimizing Quality of Service (QoS) metrics.

- Performance Evaluation: It provides a comprehensive performance analysis of the proposed algorithm through simulations, demonstrating its effectiveness in improving throughput, reducing delay, and increasing packet delivery ratios compared to existing scheduling methods.

- Insights for Future Research: The findings and methodologies outlined in the article offer valuable insights and a foundation for future research in the field of IoT and LoRaWAN, encouraging further exploration of adaptive and intelligent scheduling techniques to address the evolving challenges in network management.

Related Work

Research Methodology

Proposed Method

Research Design

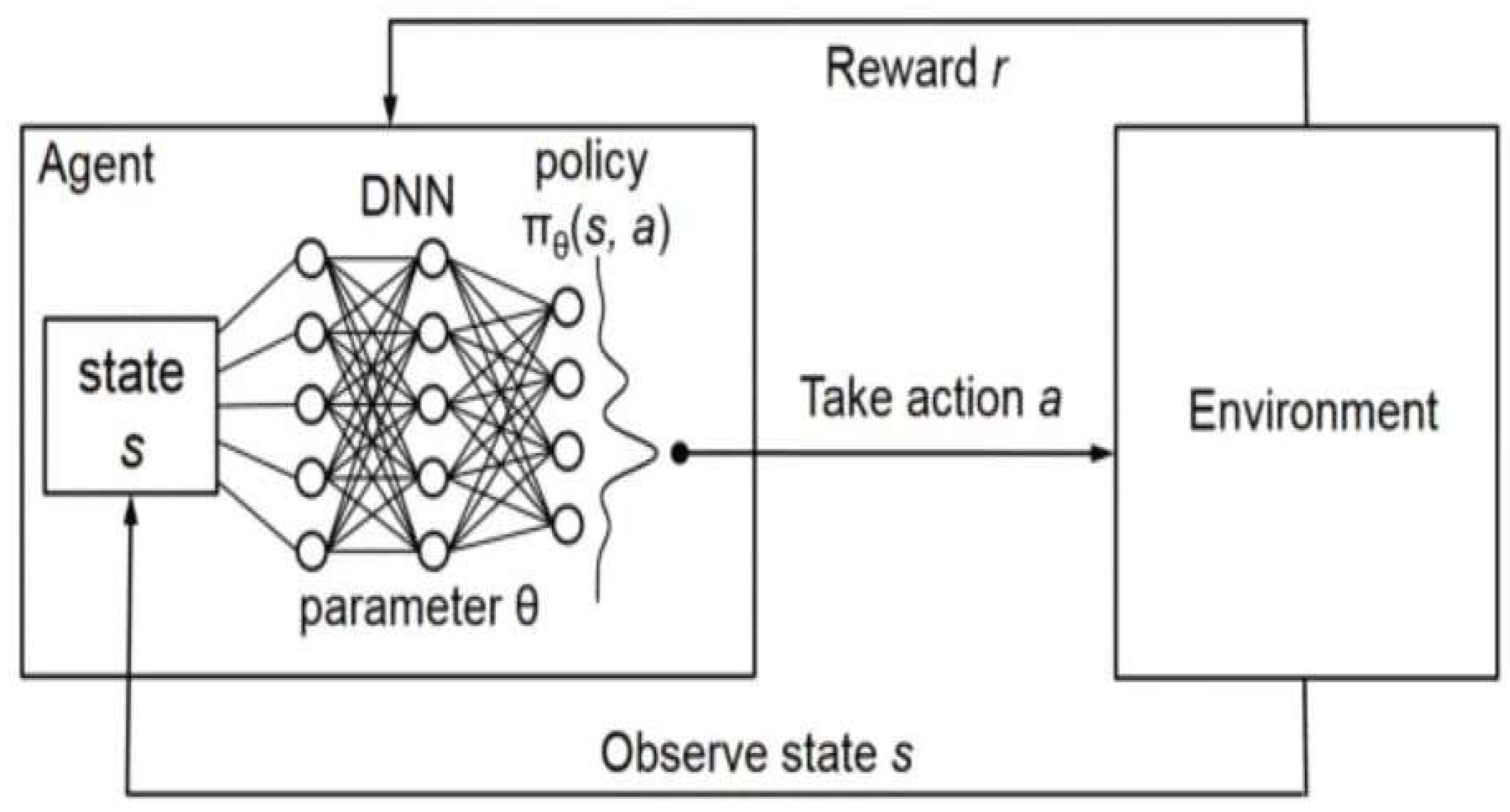

Algorithm Design and Implementation

Algorithm Design

- Agent: This is the decision-making entity. It receives the current state of the environment (s) and uses its policy (π) to select an action (a). The policy is typically implemented as a deep neural network (DNN) with parameters θ.

- Environment: This is the external world the agent interacts with. It receives the agent’s action (a) and provides the agent with a new state (s’) and a reward (r).

- State (s): The current situation or observation of the environment.

- Action (a): The decision or move made by the agent.

- Reward (r): A scalar value indicating the outcome of the agent’s action. Positive rewards encourage behaviours, while negative rewards discourage them.

- Policy (π): A function that maps states to actions. In DRL, it’s often represented as a neural network.
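The interaction between these components can be illustrated with a minimal sketch. The `ToyEnvironment` class, its reward rule, and the fixed-rule `policy` function are hypothetical and exist only to show how s, a, r, and s’ flow between agent and environment; they are not the LoRaWAN environment or the DNN policy used in the article.

```python
class ToyEnvironment:
    """Hypothetical two-state environment, used only to illustrate the loop."""

    def __init__(self, horizon=5):
        self.horizon = horizon
        self.steps = 0
        self.state = 0

    def reset(self):
        self.steps = 0
        self.state = 0
        return self.state

    def step(self, action):
        # Reward r is +1 when the action matches the current state, else -1.
        reward = 1.0 if action == self.state else -1.0
        self.steps += 1
        self.state = self.steps % 2           # next state s'
        done = self.steps >= self.horizon
        return self.state, reward, done


def policy(state):
    """Policy pi: maps a state to an action (a fixed rule here, not a DNN)."""
    return state


def run_episode(env, pi):
    """Run one episode and return the accumulated reward."""
    state = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = pi(state)                      # agent selects a from s
        state, reward, done = env.step(action)  # environment returns s', r
        total_reward += reward
    return total_reward
```

In this toy setting the fixed rule always matches the state, so every step earns a positive reward; a learned policy would have to discover that mapping from the reward signal alone.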

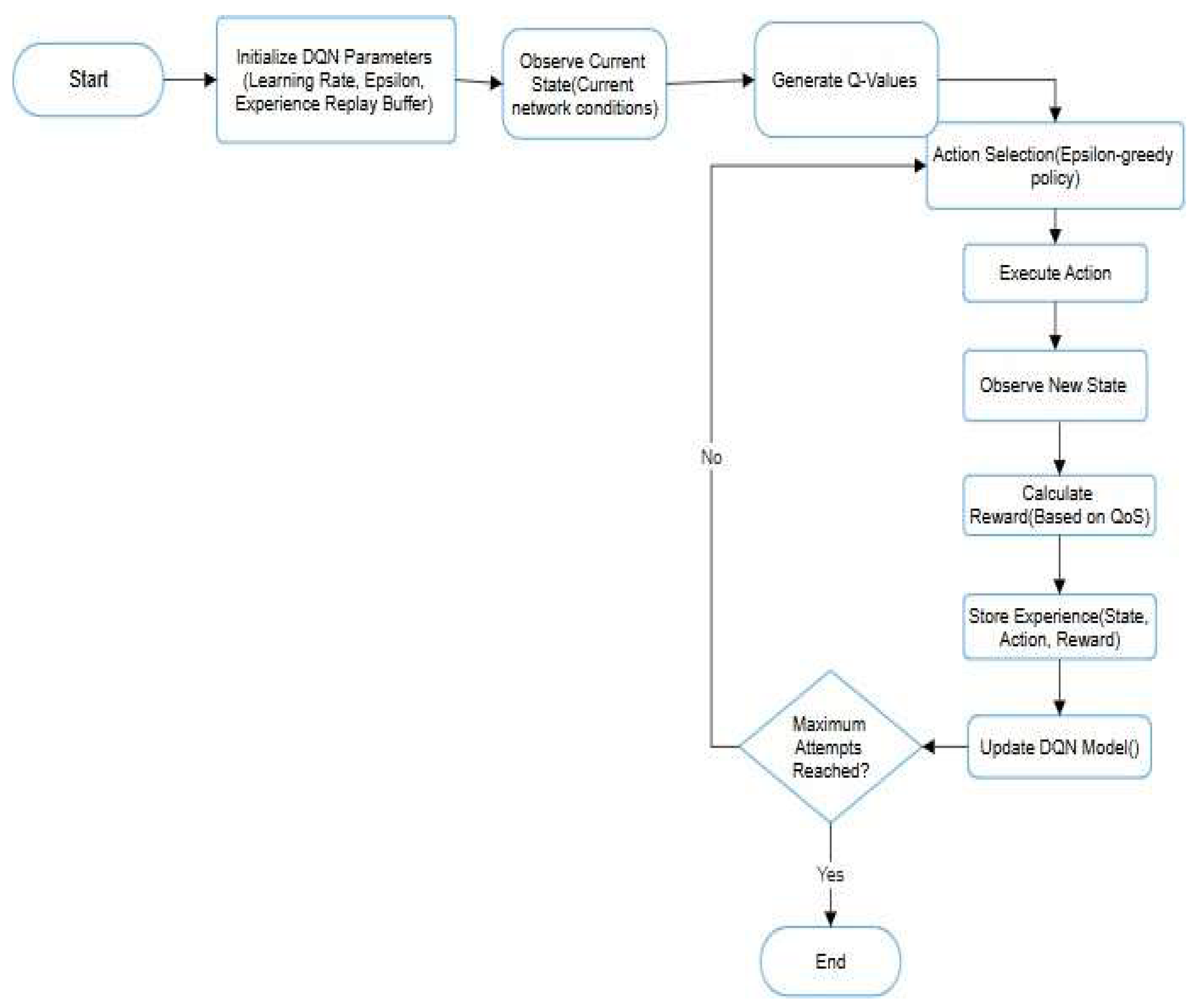

Training Phase of the Proposed Scheduling Algorithm

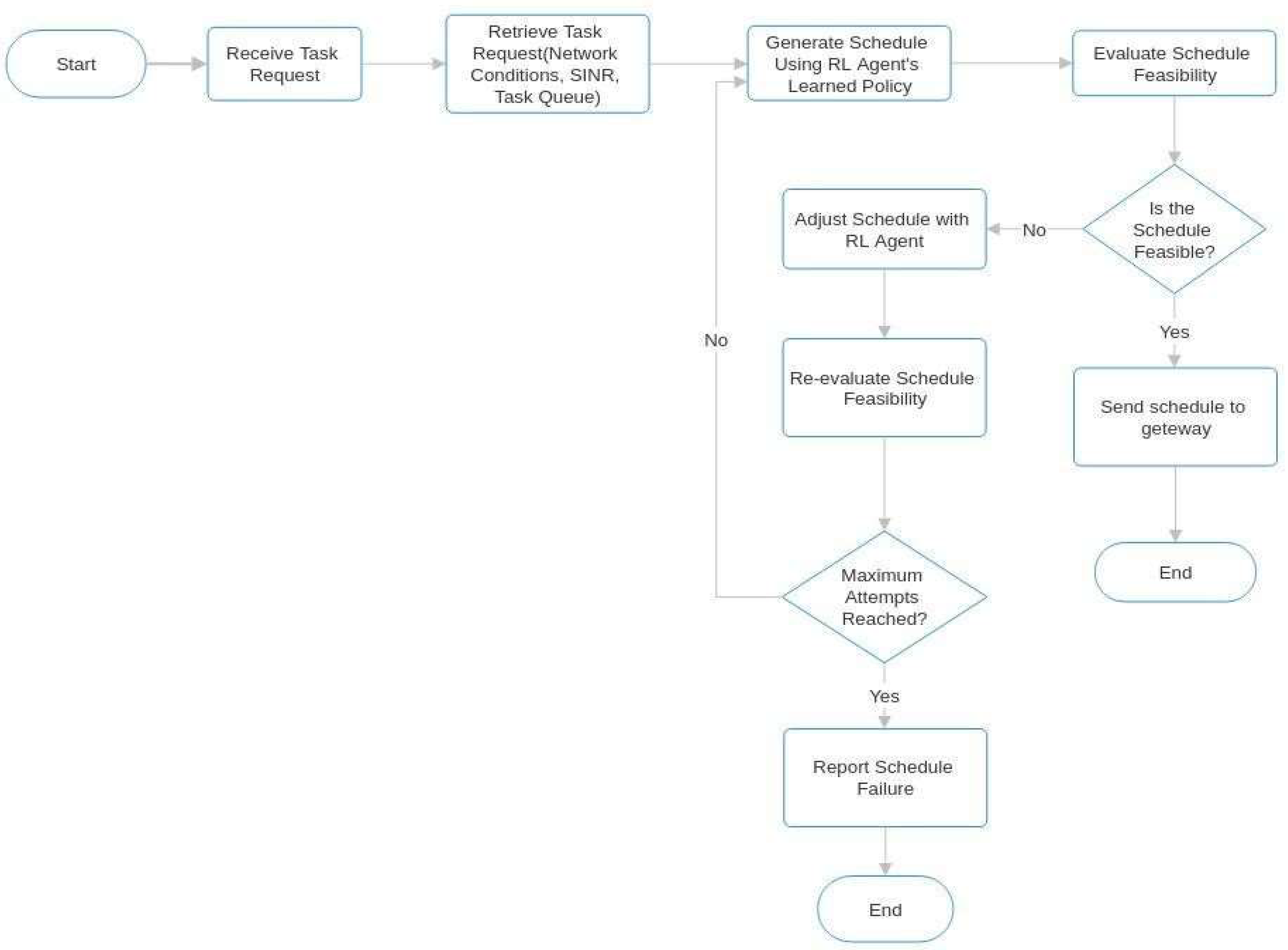

The Trained Proposed Scheduling Algorithm Diagram

Algorithm Implementation

Pseudocode for Task Scheduling Algorithm

- Initialization

- State Observation

- Action Selection

- Environment Interaction (OpenAI Gym Integration)

- Reward Calculation

- Q-Value Update (Learning)

- Training Loop

- Policy Improvement and Execution

- Algorithm 1 Initialization

- 1) Initialize Q-network with random weights

- 2) Initialize target Q-network with the same weights as Q-network

- 3) Initialize Replay Memory D with capacity N

- 4) Set ϵ for ϵ-greedy policy

- 5) Set learning rate α, discount factor γ, and batch size

- 6) Define action space A = {channel selection, task prioritization, gateway allocation}

- 7) Define state space S = {channel status, signal strength, gateway congestion, task deadlines}

- 8) Define reward function R(s, a) based on QoS metrics

- 9) Periodically synchronize target Q-network with Q-network weights every K episodes
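The initialization steps might be sketched in Python as follows. The hyperparameter defaults are taken from the article's parameter table; the linear weight matrix is only a hypothetical stand-in for the Q-network, and the names `Hyperparameters`, `init_linear_q`, and `initialize` are assumptions made for illustration.

```python
import copy
import random
from collections import deque
from dataclasses import dataclass

ACTIONS = ("channel selection", "task prioritization", "gateway allocation")
STATE_FEATURES = ("channel status", "signal strength",
                  "gateway congestion", "task deadlines")


@dataclass
class Hyperparameters:
    learning_rate: float = 0.001   # alpha
    gamma: float = 0.95            # discount factor
    epsilon: float = 1.0           # initial exploration rate
    batch_size: int = 64
    replay_capacity: int = 30_000  # capacity N of Replay Memory D


def init_linear_q(n_features, n_actions, rng):
    """Stand-in for the Q-network: one random weight row per action."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_features)]
            for _ in range(n_actions)]


def initialize(seed=0):
    rng = random.Random(seed)
    hp = Hyperparameters()
    q_net = init_linear_q(len(STATE_FEATURES), len(ACTIONS), rng)
    target_net = copy.deepcopy(q_net)           # same weights, independent copy
    replay = deque(maxlen=hp.replay_capacity)   # oldest transitions evicted first
    return hp, q_net, target_net, replay
```

Using `deque(maxlen=...)` gives the fixed-capacity behaviour of the replay memory without explicit eviction code, and the deep copy ensures later updates to the Q-network do not silently change the target network.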

- Algorithm 2 State Observation

- 1) Function ObserveState()

- 2) Initialize state as an empty list

- 3) Normalize current channel status, signal strength (SINR), gateway congestion, and task deadlines

- 4) Append normalized values to state

- 5) return state
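A minimal sketch of the state observation step, assuming min-max normalization into [0, 1]. The article does not state which normalization it uses, and the raw value ranges in `RANGES` are hypothetical placeholders chosen only to make the example concrete.

```python
def min_max(value, low, high):
    """Scale value into [0, 1], clipping readings outside [low, high]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


# Hypothetical raw ranges for each observed quantity (assumptions).
RANGES = {
    "channel_status": (0.0, 1.0),        # 0 = idle, 1 = busy
    "sinr_db": (-20.0, 10.0),
    "gateway_congestion": (0.0, 100.0),  # percent load
    "task_deadline_s": (0.0, 600.0),
}


def observe_state(raw):
    """Build the state vector from a dict of raw measurements."""
    state = []
    for name, (low, high) in RANGES.items():
        state.append(min_max(raw[name], low, high))
    return state
```

For example, a reading of -5 dB SINR with 50% gateway load maps to 0.5 on both features, which keeps all inputs on a comparable scale before they reach the Q-network.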

- Algorithm 3 Action Selection using ϵ-Greedy Policy

- 1) Function SelectAction(state, ϵ)

- 2) Generate a random number rand ∈ [0, 1]

- 3) if rand < ϵ then

- 4) Choose a random action from action space A

- 5) else

- 6) Compute Q-values for all actions using Q-network

- 7) Choose action argmax(Q-values) // Select the action with the highest Q-value

- 8) end if

- 9) return action
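The ϵ-greedy rule can be sketched in a few lines of Python. Here the Q-values are passed in directly as a list rather than computed by a network, and the optional `rng` parameter is an assumption added so the behaviour can be made reproducible.

```python
import random


def select_action(q_values, epsilon, rng=random):
    """Return an action index: random with probability epsilon, else greedy."""
    if rng.random() < epsilon:
        # Explore: pick uniformly from the action space.
        return rng.randrange(len(q_values))
    # Exploit: pick the action with the highest Q-value.
    return max(range(len(q_values)), key=q_values.__getitem__)
```

Because `random.random()` draws from [0, 1), setting ϵ = 0 always yields the greedy action and ϵ = 1 always yields a random one, which matches how the evaluation and early-training phases use the same function.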

- Algorithm 4 Environment Interaction

- 1) Function PerformAction(action)

- 2) Initialize the OpenAI Gym environment

- 3) if action == "channel selection" then

- 4) Select the channel with the lowest interference and load

- 5) else if action == "task prioritization" then

- 6) Prioritize tasks based on deadlines

- 7) else if action == "gateway allocation" then

- 8) Assign tasks to gateways with optimal load balancing and signal quality

- 9) end if

- 10) Execute the selected action in the LoRaWAN environment via OpenAI Gym

- 11) Observe the resulting state, reward, and whether the episode is done using GetEnvironmentFeedback() from Gym environment

- 12) return new state, reward, done
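To make the interaction step concrete, the following sketch mimics the classic Gym `reset()`/`step()` convention in plain Python, without importing Gym. The class `ToyLoRaWANEnv`, its load model, and its reward values are all hypothetical; it is not the authors' simulator, only an illustration of how the three action types could be dispatched.

```python
import random

ACTIONS = ("channel selection", "task prioritization", "gateway allocation")


class ToyLoRaWANEnv:
    """Hypothetical stand-in for the LoRaWAN environment."""

    def __init__(self, n_channels=5, n_gateways=3, max_steps=10, seed=0):
        self.rng = random.Random(seed)
        self.n_channels = n_channels
        self.n_gateways = n_gateways
        self.max_steps = max_steps
        self.reset()

    def reset(self):
        self.steps = 0
        self.channel_load = [self.rng.random() for _ in range(self.n_channels)]
        self.gateway_load = [self.rng.random() for _ in range(self.n_gateways)]
        self.deadlines = [self.rng.uniform(1.0, 10.0) for _ in range(4)]
        return self._observe()

    def _observe(self):
        return [min(self.channel_load), min(self.gateway_load),
                min(self.deadlines)]

    @staticmethod
    def _use_least_loaded(loads):
        """Pick the least-loaded resource, reward its spare capacity, add load."""
        chosen = min(range(len(loads)), key=loads.__getitem__)
        reward = 1.0 - loads[chosen]
        loads[chosen] = min(1.0, loads[chosen] + 0.1)
        return reward

    def step(self, action):
        name = ACTIONS[action]
        if name == "channel selection":
            reward = self._use_least_loaded(self.channel_load)
        elif name == "task prioritization":
            self.deadlines.sort()                     # earliest deadline first
            reward = 1.0 / (1.0 + self.deadlines[0])
        else:                                         # "gateway allocation"
            reward = self._use_least_loaded(self.gateway_load)
        self.steps += 1
        done = self.steps >= self.max_steps
        return self._observe(), reward, done, {}
```

A caller would typically run `obs = env.reset()` once per episode and then loop on `obs, reward, done, info = env.step(action)` until `done` is true.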

- Algorithm 5 Reward Calculation

- 1) Function CalculateReward(state, action)

- 2) Initialize reward = 0

- 3) if QoS metrics are improved then

- 4) reward += k // Positive reward for improved QoS metrics

- 5) else

- 6) reward -= k // Negative reward for decreased QoS metrics

- 7) end if

- 8) return reward
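One way to make "QoS metrics are improved" testable is to collapse the metrics into a single score and compare it before and after the action. The scoring rule below (PDR and throughput count positively, delay and PER negatively, all assumed normalized to [0, 1]) is an assumption for illustration; the article does not specify how the metrics are combined.

```python
def qos_score(metrics):
    """Hypothetical aggregate: higher is better. Inputs assumed in [0, 1]."""
    return (metrics["pdr"] + metrics["throughput"]
            - metrics["delay"] - metrics["per"])


def calculate_reward(previous, current, k=1.0):
    """Return +k if the QoS score improved after the action, otherwise -k."""
    reward = 0.0
    if qos_score(current) > qos_score(previous):
        reward += k   # positive reward for improved QoS metrics
    else:
        reward -= k   # negative reward for unchanged or degraded QoS metrics
    return reward
```

As in the pseudocode, the `else` branch also penalizes actions that leave QoS unchanged, which nudges the agent away from actions that have no effect.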

- Algorithm 6 Q-Value Update (Learning)

- 1) Function UpdateQNetwork()

- 2) Sample a random minibatch of transitions (state, action, reward, next state, done) from Replay Memory D

- 3) for each transition in the minibatch do

- 4) target = reward

- 5) if not done then

- 6) target += γ × max(target Q-network.predict(next state))

- 7) end if

- 8) Compute loss as Mean Squared Error (MSE) between target and Q-network.predict(state, action)

- 9) Perform gradient descent step to minimize loss

- 10) end for

- 11) Periodically synchronize target Q-network with Q-network weights
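The update rule can be sketched with a linear Q-function in place of the deep network, so that the gradient step fits in one line. The functions `predict`, `td_target`, and `update_q_network` are illustrative names, and plain SGD is used here instead of the Adam optimizer listed in the article's parameter table.

```python
def predict(weights, state):
    """Q-values of a linear stand-in network: one dot product per action."""
    return [sum(w * x for w, x in zip(row, state)) for row in weights]


def td_target(reward, next_state, done, target_weights, gamma):
    """Bootstrapped target: r, plus gamma * max Q_target(s') if not terminal."""
    target = reward
    if not done:
        target += gamma * max(predict(target_weights, next_state))
    return target


def update_q_network(weights, target_weights, minibatch, gamma=0.95, lr=0.001):
    """One SGD pass over the minibatch; returns the mean squared TD error."""
    total_loss = 0.0
    for state, action, reward, next_state, done in minibatch:
        target = td_target(reward, next_state, done, target_weights, gamma)
        error = predict(weights, state)[action] - target
        total_loss += error ** 2
        # Gradient of error**2 w.r.t. the chosen action's weights: 2*error*state.
        weights[action] = [w - lr * 2.0 * error * x
                           for w, x in zip(weights[action], state)]
    return total_loss / len(minibatch)
```

Only the weights of the action actually taken are updated, and the target is computed from the separate target weights, which is what keeps the regression target stable between synchronizations.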

- Algorithm 7 Training Loop

- 1) for episode in range(total_episodes) do

- 2) state = ObserveState()

- 3) done = False

- 4) while not done do

- 5) action = SelectAction(state, ϵ)

- 6) new_state, reward, done = PerformAction(action)

- 7) Store transition (state, action, reward, new_state, done) in Replay Memory D

- 8) if len(Replay Memory D) > batch size then

- 9) UpdateQNetwork()

- 10) end if

- 11) state = new_state

- 12) end while

- 13) if ϵ > ϵ_min then

- 14) ϵ *= epsilon_decay // Decay exploration rate

- 15) end if

- 16) if episode % evaluation_interval == 0 then

- 17) EvaluatePolicyPerformance()

- 18) end if

- 19) end for
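The exploration schedule in the training loop can be checked numerically. The sketch below uses the values from the article's parameter table (initial ϵ = 1.0, decay rate 0.995, minimum 0.01); the clamp with `max` is an added assumption so that ϵ never drops slightly below the minimum on the final decay step, which the unclamped rule would allow.

```python
def decay_epsilon(epsilon, epsilon_min=0.01, epsilon_decay=0.995):
    """Multiplicative decay, clamped so epsilon never falls below epsilon_min."""
    if epsilon > epsilon_min:
        epsilon = max(epsilon_min, epsilon * epsilon_decay)
    return epsilon


def episodes_until_floor(epsilon=1.0, epsilon_min=0.01, epsilon_decay=0.995):
    """Count how many per-episode decays it takes to reach epsilon_min."""
    episodes = 0
    while epsilon > epsilon_min:
        epsilon = decay_epsilon(epsilon, epsilon_min, epsilon_decay)
        episodes += 1
    return episodes
```

With these settings the agent needs a little over 900 episodes before it becomes almost fully greedy, since ln(0.01) / ln(0.995) ≈ 918.7. A training run much shorter than that would end while the agent is still exploring heavily.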

- Algorithm 8 Policy Improvement and Execution

- 1) Function EvaluatePolicyPerformance()

- 2) Initialize performance metrics

- 3) for test_episode in range(test_episodes) do

- 4) state = ObserveState()

- 5) done = False

- 6) while not done do

- 7) action = SelectAction(state, ϵ = 0) // Greedy action selection during evaluation

- 8) new_state, reward, done = PerformAction(action)

- 9) Update performance metrics based on reward and QoS metrics

- 10) state = new_state

- 11) end while

- 12) end for

- 13) return performance metrics
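The "update performance metrics" step could be implemented with a small accumulator like the one below. The class name `QoSMetrics`, the per-packet `record` interface, and the definition of PER as 1 - PDR are assumptions for illustration; the article may define its metrics differently. The 10-byte default payload matches the simulation parameter table.

```python
from dataclasses import dataclass


@dataclass
class QoSMetrics:
    sent: int = 0
    delivered: int = 0
    total_delay_s: float = 0.0
    delivered_bits: int = 0

    def record(self, delivered, delay_s=0.0, payload_bytes=10):
        """Register one packet; delay and payload count only if delivered."""
        self.sent += 1
        if delivered:
            self.delivered += 1
            self.total_delay_s += delay_s
            self.delivered_bits += 8 * payload_bytes

    def summary(self, duration_s):
        """Return PDR, PER, mean delay (s) and throughput (bit/s)."""
        pdr = self.delivered / self.sent if self.sent else 0.0
        mean_delay = (self.total_delay_s / self.delivered
                      if self.delivered else 0.0)
        return {
            "pdr": pdr,
            "per": 1.0 - pdr,   # assumption: every undelivered packet is an error
            "mean_delay_s": mean_delay,
            "throughput_bps": self.delivered_bits / duration_s,
        }
```

An evaluation loop would call `record` once per transmitted packet and `summary` once at the end of the test episodes, passing the simulated duration in seconds.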

Algorithm Complexity Analysis

Result and Analysis

Simulation Setup and Scenarios

Parameters and Tuning Strategies

Performance Metrics Analysis

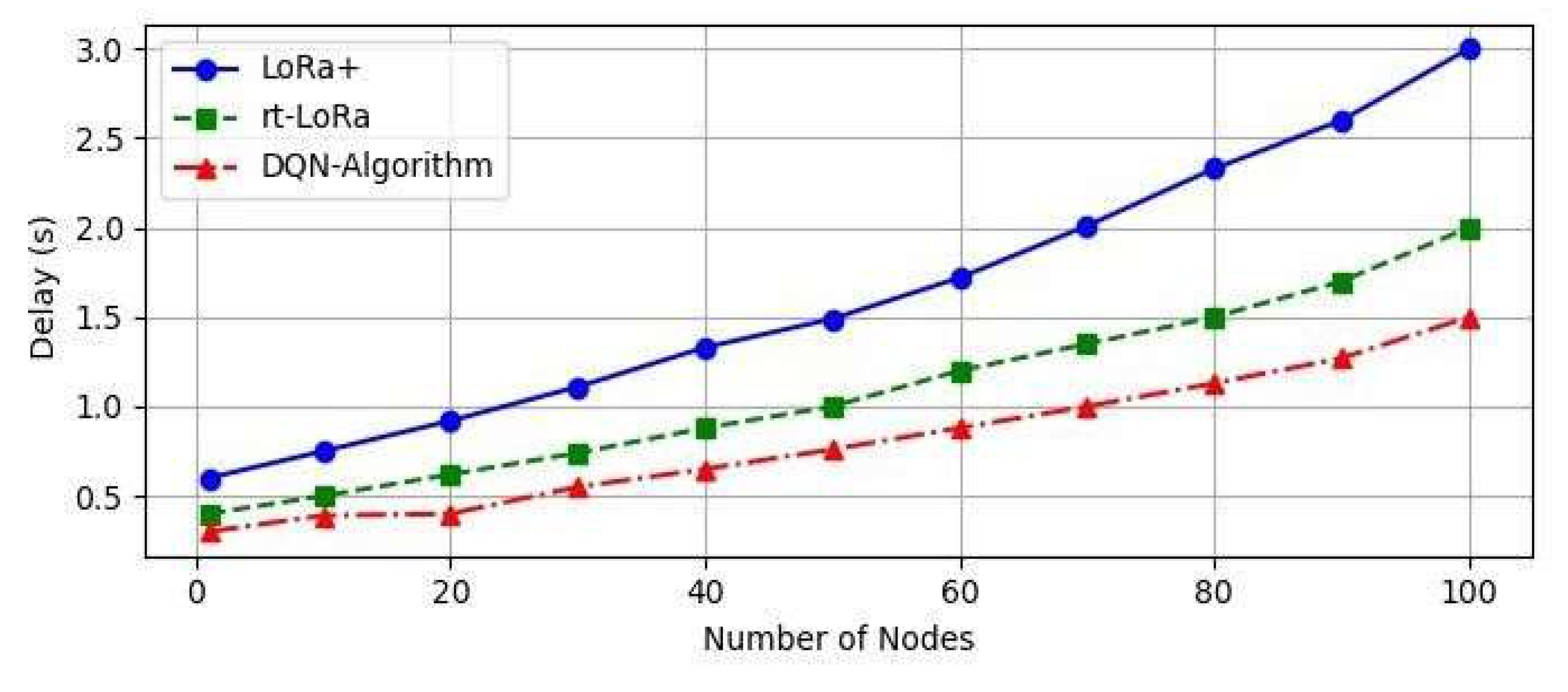

Network Delay

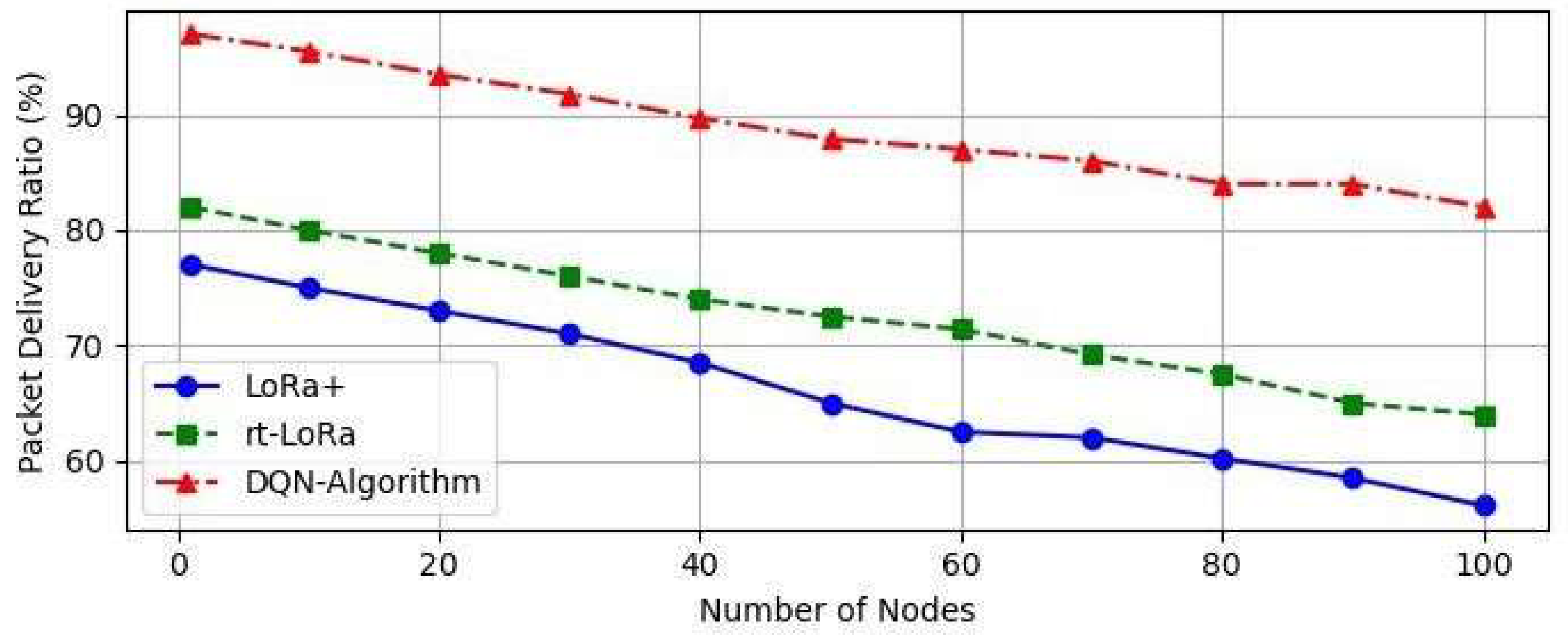

Packet Delivery Ratio (PDR)

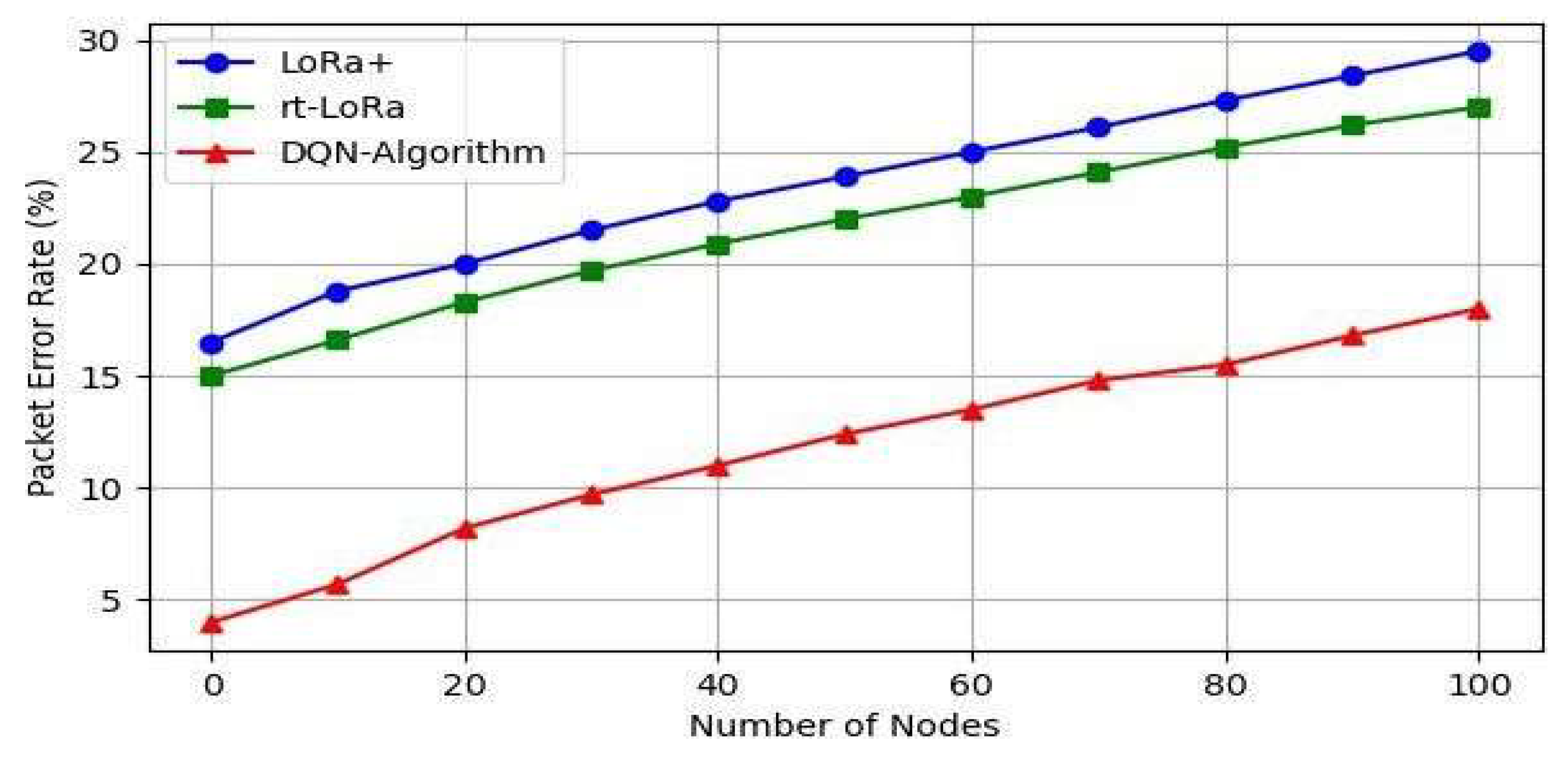

Packet Error Rate (PER)

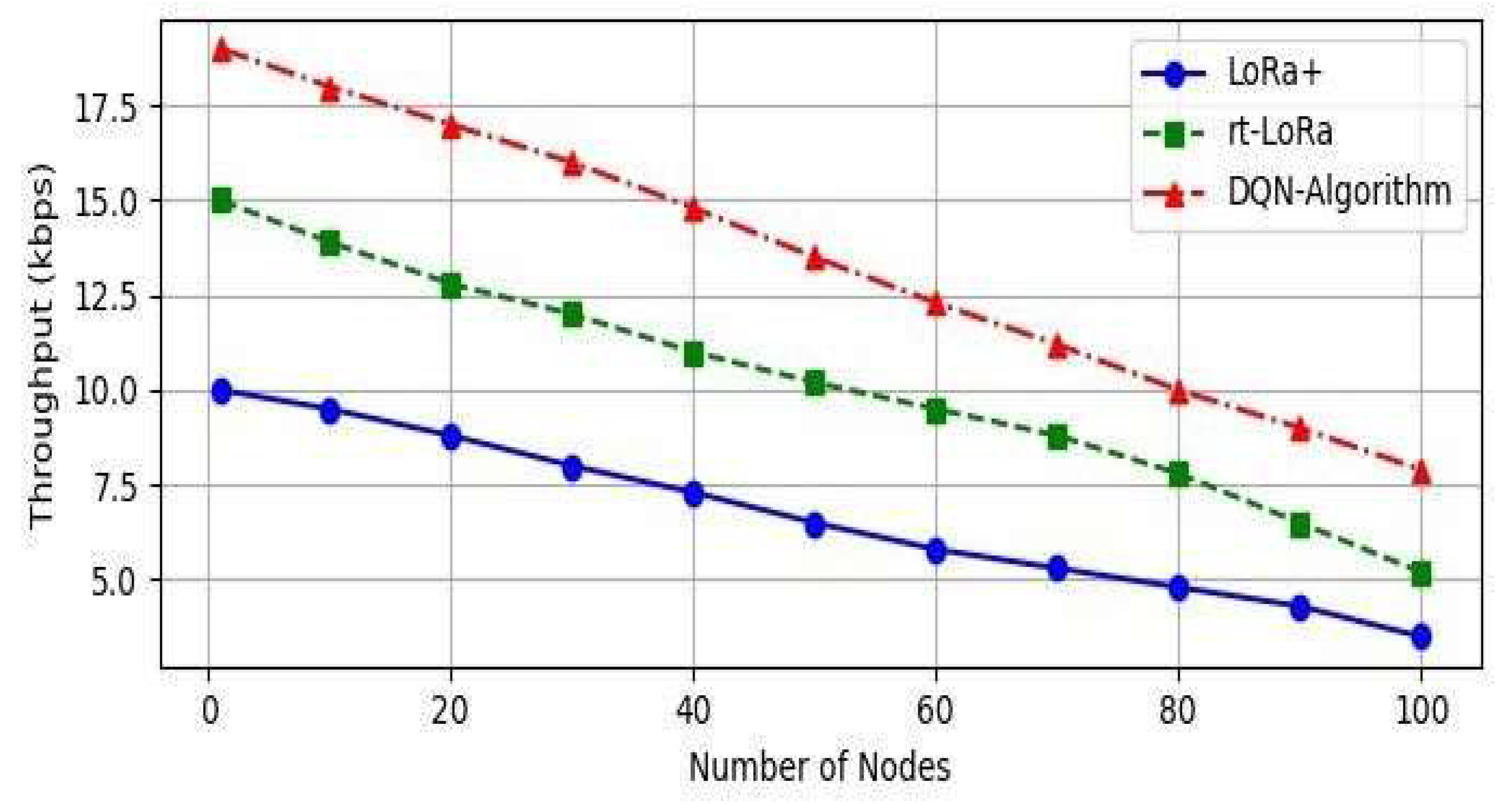

Throughput

Conclusion and Recommendations

Conclusion

Prospects for Further Research

- Further enhancements in scheduling performance may result from evaluating additional reinforcement learning algorithms, such as deep reinforcement learning architectures, policy gradient methods, or other cutting-edge techniques.

- Deploying dependable and secure IoT applications requires addressing security and privacy issues in LoRaWAN scheduling. Future studies can investigate how to incorporate security measures into the RL-based algorithm.

- Real-world deployment of the proposed scheduler requires extensive testing in realistic settings to validate its performance under network dynamics, communication latency, and device heterogeneity.


| Parameter | Value |
|---|---|
| Number of Gateways | 3 |
| Number of IoT Devices | 100 |
| Network Server | 1 |
| Environment Size | 200 m × 200 m |
| Maximum Distance to Gateway | 200 m |
| Propagation Model | LoRa Log Normal Shadowing Model |
| Number of Retransmissions | 5 (max) |
| Frequency Band | 868 MHz |
| Spreading Factor | SF7, SF8, SF9, SF10, SF11, SF12 |
| Number of Rounds | 1000 |
| Voltage | 3.3 V |
| Bandwidth | 125 kHz |
| Payload Length | 10 bytes |
| Timeslot Technique | CSMA10 |
| Data Rate (Max) | 250 kbps |
| Number of Channels | 5 |
| Simulation Time | 600 s |

| Parameter | Value |
|---|---|
| Number of Hidden Layers | 2 |
| Number of Neurons per Layer | 128 |
| Learning Rate | 0.001 |
| Discount Factor (Gamma) | 0.95 |
| Exploration Rate (Epsilon) | 1.0 |
| Exploration Decay Rate | 0.995 |
| Minimum Exploration Rate | 0.01 |
| Replay Buffer Size | 30,000 |
| Batch Size | 64 |
| Target Network Update Frequency | Every 500 steps |
| Activation Function | ReLU |
| Optimizer | Adam |
| Loss Function | Mean Squared Error |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).