Submitted: 22 February 2025

Posted: 24 February 2025


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Data

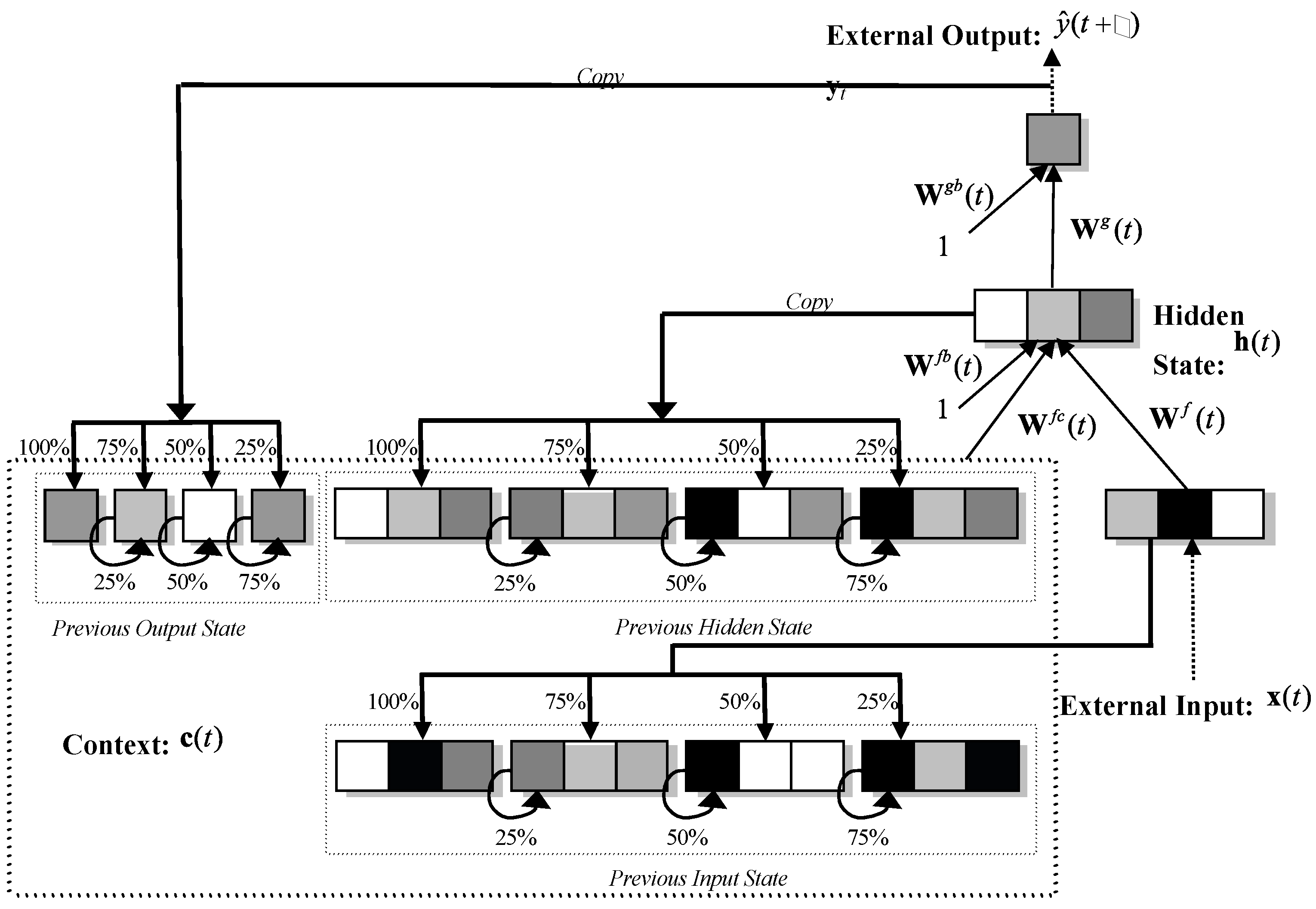

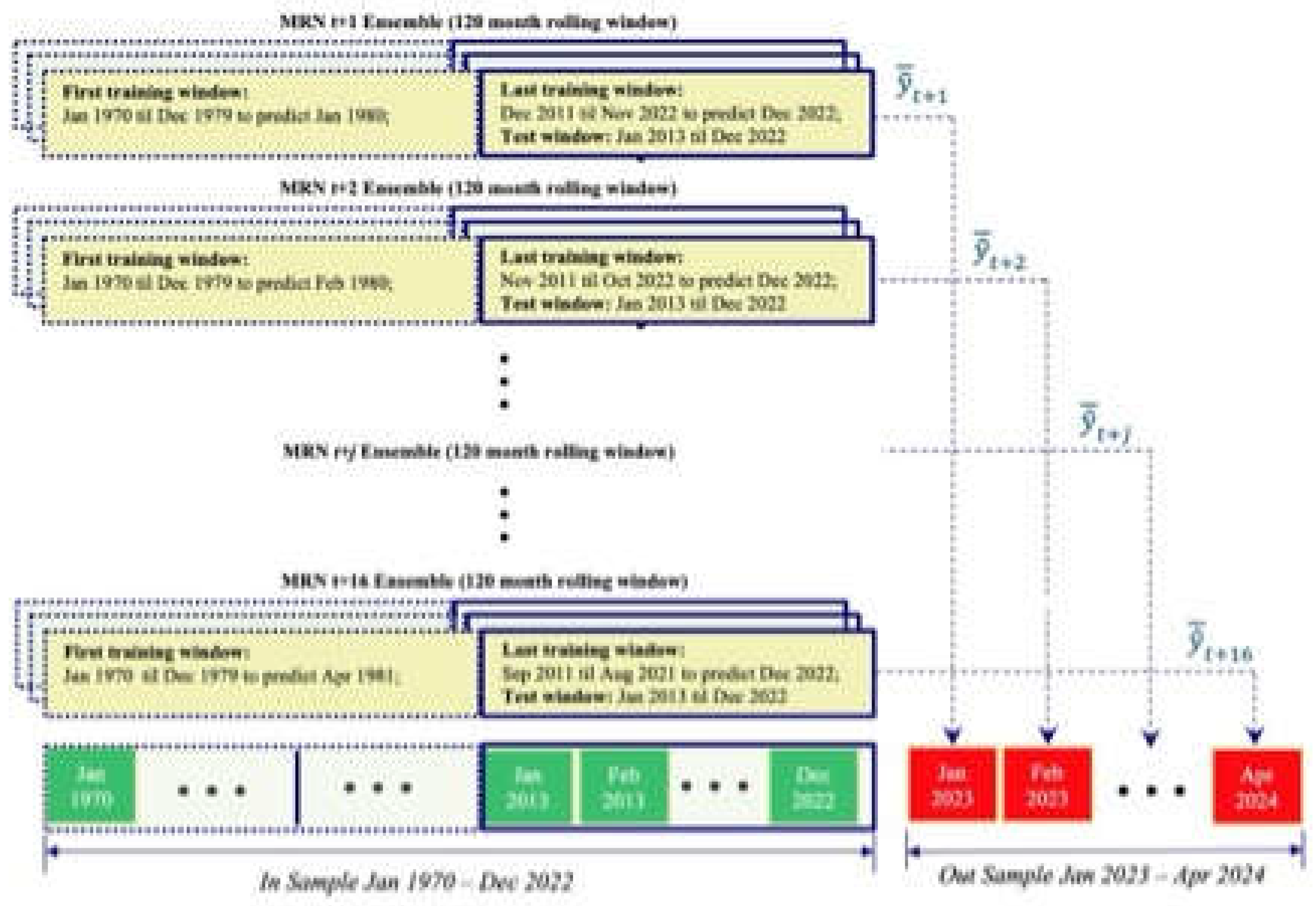

2.2. The Multi-Recurrent Network Methodology

- a)

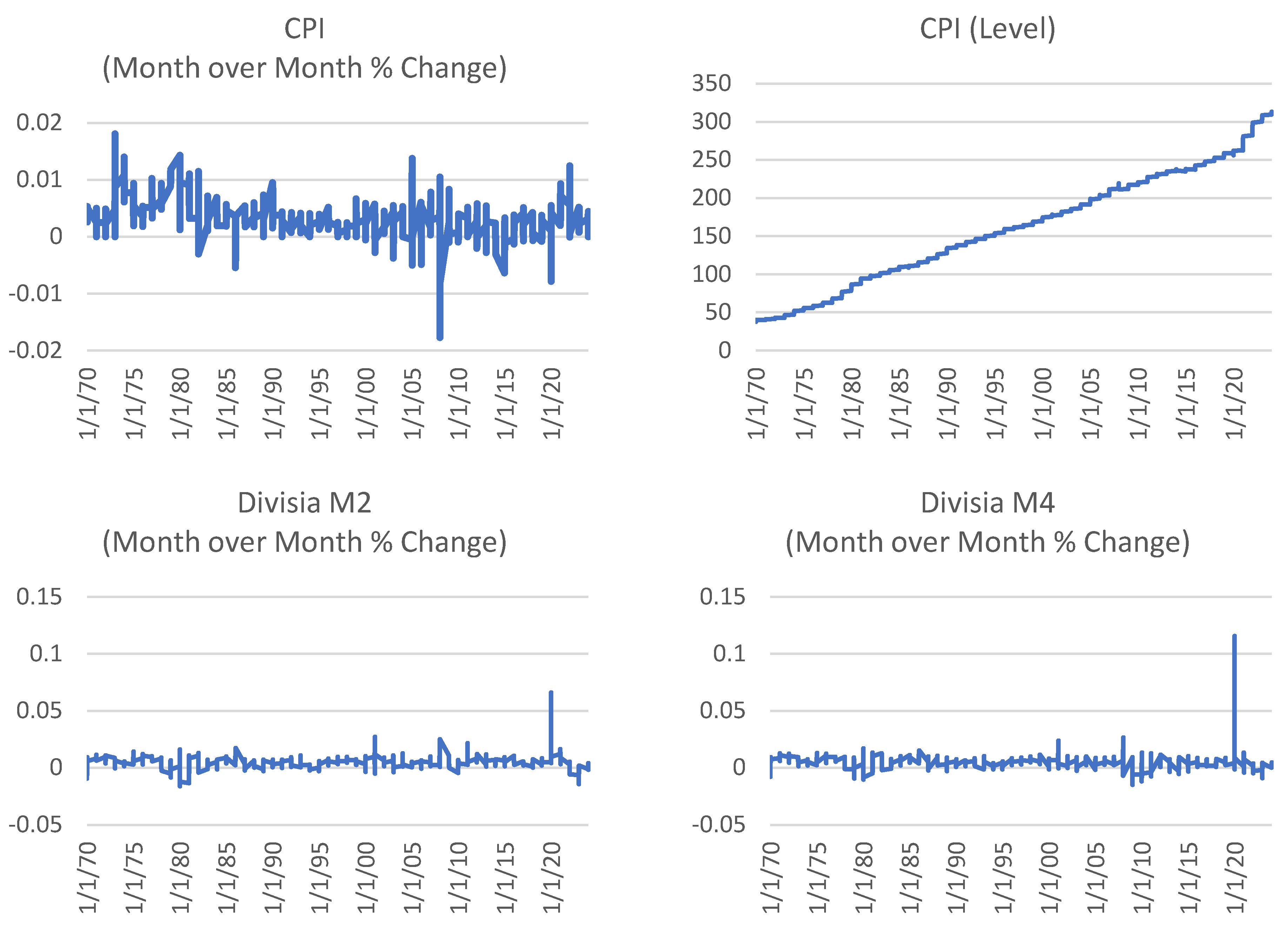

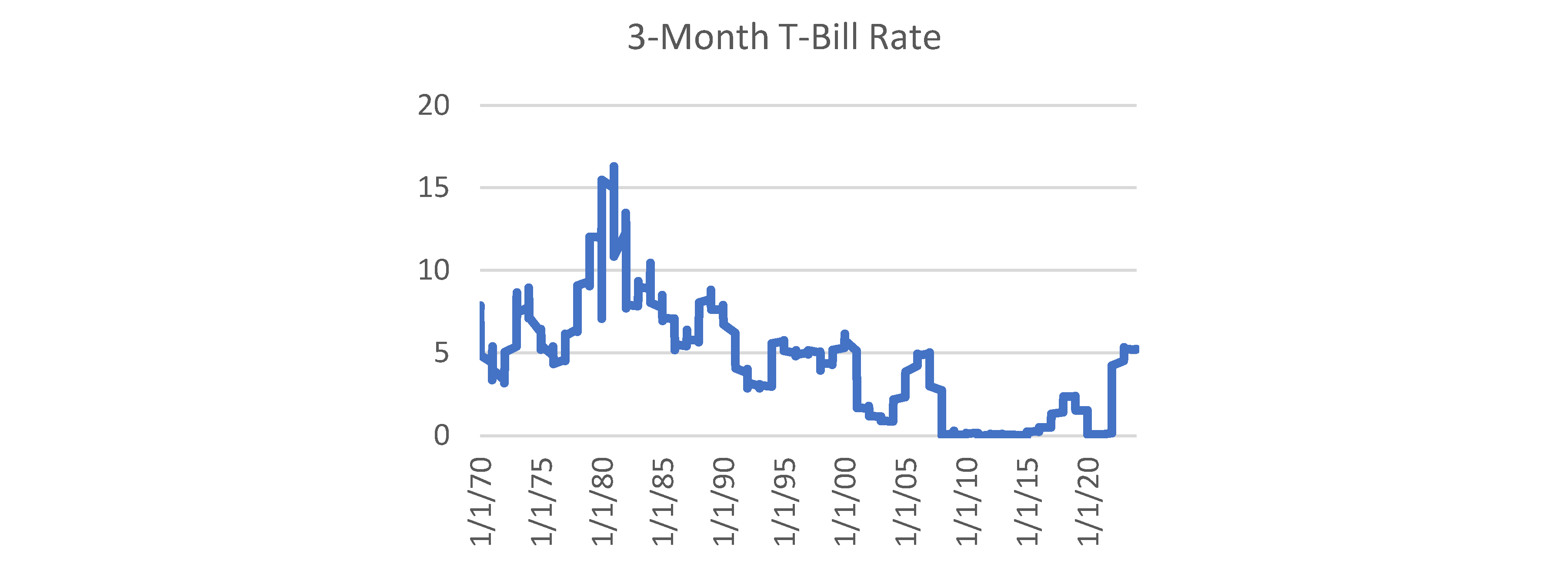

- simple MRN consisting of three input variables: the month-on-month percentage change in inflation (cpi_percmom, the auto-regressive term), the natural log of the price level (ln_cpi_level) and 3-Month Treasury Bill Secondary Market Rate (dtb3).

- b)

- intermediate MRN which includes a) plus the month-on-month growth rate (%) for the Divisia monetary measure DM4 (dm4_percmom).

- c)

- complex MRN which includes b) plus the month-on-month growth rate (%) for the Divisia monetary measure DM2 (dm2_percmom).
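The nesting of the three input sets can be illustrated with a minimal sketch. The variable names come from the list above; everything else is an assumption made for illustration: that cpi_percmom is computed from the CPI level as 100 × (P_t / P_{t-1} − 1), that the Divisia growth rates are computed the same way from their levels, and the hypothetical helper names `perc_mom` and `build_inputs`. The numbers are made up and are not data from the study.

```python
import math

# Nested input sets; the variable names are taken from the model list above.
SIMPLE = ["cpi_percmom", "ln_cpi_level", "dtb3"]
INTERMEDIATE = SIMPLE + ["dm4_percmom"]
COMPLEX = INTERMEDIATE + ["dm2_percmom"]


def perc_mom(levels):
    """Month-on-month percentage change of a level series (assumed definition).

    The result is one element shorter than the input because the first
    month has no predecessor.
    """
    return [100.0 * (curr / prev - 1.0) for prev, curr in zip(levels, levels[1:])]


def build_inputs(cpi, dtb3, dm4, dm2, names):
    """Assemble one input row per month, starting from the second month."""
    columns = {
        "cpi_percmom": perc_mom(cpi),
        "ln_cpi_level": [math.log(x) for x in cpi[1:]],
        "dtb3": list(dtb3[1:]),
        "dm4_percmom": perc_mom(dm4),
        "dm2_percmom": perc_mom(dm2),
    }
    return [[columns[name][t] for name in names] for t in range(len(cpi) - 1)]


# Made-up illustrative levels, not data from the study.
cpi = [100.0, 101.0, 102.01]
dtb3 = [4.0, 4.1, 4.2]
dm4 = [200.0, 202.0, 206.04]
dm2 = [150.0, 153.0, 154.53]

rows_simple = build_inputs(cpi, dtb3, dm4, dm2, SIMPLE)
rows_complex = build_inputs(cpi, dtb3, dm4, dm2, COMPLEX)
```

Each model differs from the previous one only by a single added column, so any difference in forecast accuracy between a), b) and c) can be attributed to the added Divisia growth rate rather than to a change in the other inputs.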

2.3. Survey of Professional Forecasters (SPF)

2.4. Forecast Evaluation Procedure

- Root Mean Squared Error (RMSE), which measures the typical size of forecast errors and, because errors are squared, penalizes large deviations more heavily.

- Symmetric Mean Absolute Percentage Error (sMAPE), which expresses errors as a percentage, simplifying comparisons across different scales.

- Theil’s U Statistic, which compares model performance to a naïve forecast, with lower values indicating better accuracy.

- Improvement Over Random Walk, which quantifies how much better a forecasting model performs compared to a random walk benchmark, expressed as a proportion.
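The four measures can be sketched in a few lines of Python. The exact formulas are not stated here, so the definitions below are assumptions: sMAPE uses the common form with the mean of |actual| and |forecast| in the denominator, Theil's U is taken as the ratio of the model's RMSE to the RMSE of the naïve forecast, and the improvement over the random walk is taken as 1 − U. The last assumption is at least consistent with the reported results, where the Theil U and improvement values sum to one for every method (for example 0.124 and 0.876 for simpleMRN). All function names and sample numbers are illustrative.

```python
import math


def rmse(actual, forecast):
    """Root mean squared error."""
    n = len(actual)
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / n)


def smape(actual, forecast):
    """Symmetric MAPE in percent (assumed form).

    A term whose denominator is zero is counted as zero error.
    """
    total = 0.0
    for a, f in zip(actual, forecast):
        denom = (abs(a) + abs(f)) / 2.0
        total += abs(f - a) / denom if denom else 0.0
    return 100.0 * total / len(actual)


def theil_u(actual, forecast, naive):
    """Model RMSE relative to the RMSE of a naive forecast (assumed form)."""
    return rmse(actual, forecast) / rmse(actual, naive)


def improvement_over_random_walk(actual, forecast, naive):
    """Proportional gain over the benchmark, assumed to equal 1 - U."""
    return 1.0 - theil_u(actual, forecast, naive)


# Made-up numbers for illustration only.
actual = [2.0, 3.0, 4.0]
forecast = [2.5, 3.0, 3.5]
# Random walk: each forecast is the previous actual value
# (1.0 is assumed to be the observation just before the sample).
random_walk = [1.0, 2.0, 3.0]

u = theil_u(actual, forecast, random_walk)
gain = improvement_over_random_walk(actual, forecast, random_walk)
```

Under these definitions a value of U below 1 means the model beats the naïve benchmark, and the improvement measure is simply that gap restated as a proportion.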

3. Results

3.1. Forecast Evaluation

3.2. Comparison of CPI and Forecasts Over Time

4. Discussion

5. Conclusions


| Forecast Method | RMSE | sMAPE | Theil U Statistic | Improvement over Random Walk |
| --- | --- | --- | --- | --- |
| simpleMRN | 0.472 | 10.91% | 0.124 | 0.876 |
| intermediateMRN | 0.637 | 16.47% | 0.167 | 0.833 |
| complexMRN | 0.627 | 15.29% | 0.164 | 0.836 |
| averageMRN | 0.395 | 9.66% | 0.104 | 0.896 |
| SPF | 0.580 | 11.33% | 0.152 | 0.848 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).